Abstract

We present a method for enhancing 3D Gaussian Splatting primitives with brushstroke-aware stylization. Previous approaches to 3D style transfer are typically limited to color or texture modifications, lacking an understanding of artistic shape deformation. In contrast, we focus on individual 3D Gaussian primitives, exploring their potential to enable style transfer that incorporates both color- and brushstroke-inspired local geometric stylization. Specifically, we introduce additional texture features for each Gaussian primitive and apply a texture mapping technique to achieve brushstroke-like geometric effects in a rendered scene. Furthermore, we propose an unsupervised clustering algorithm to efficiently prune redundant Gaussians, ensuring that our method seamlessly integrates with existing 3D Gaussian Splatting pipelines. Extensive evaluations demonstrate that our approach outperforms existing baselines by producing brushstroke-aware artistic renderings with richer geometric expressiveness and enhanced visual appeal.

1. Introduction

Style transfer aims to extract the artistic style of a reference and apply it to a target object. This technique is valuable in fields such as computer-aided design (CAD), where creating artistic content has traditionally required significant time and expertise in both artistic skills and specialized software. For instance, mastering 3D modeling often requires at least six months of training in both artistic fundamentals (such as shape, color, and texture) and complex 3D software tools. With the advancement of style transfer techniques, however, individuals with minimal expertise can now generate artistic 3D content, significantly lowering the barrier to digital art creation. As a result, style transfer has become increasingly influential in various visual domains, including games, AR/VR, animation, and digital content creation.

Style transfer can be categorized into two main branches: 2D and 3D style transfer. The 2D image-based approach typically takes a content image (providing semantic structure) and a style image (providing artistic features) as inputs and synthesizes a stylized 2D image. However, unlike pixel-based 2D images, 3D representations, such as meshes, point clouds, and volumetric data, vary significantly depending on the chosen primitive format. Extending 2D style transfer to 3D therefore introduces new constraints and challenges. A key challenge is choosing a 3D representation that ensures both accurate geometry and view consistency during style transfer. Previous research has explored various 3D representations, including polygon meshes [1,2,3,4,5], point clouds [6,7,8,9,10,11], and neural radiance fields (NeRFs) [12,13,14,15,16,17]. However, each of these comes with limitations: meshes require well-defined polygonal connectivity, without which the output may contain undesirable artifacts; point clouds may suffer from limited geometric accuracy or high memory consumption; and NeRF-based approaches often render slowly, as they require intensive sampling through deep neural networks.

More importantly, traditional 3D style transfer methods focus primarily on global features, such as texture and color, while neglecting local geometric attributes such as brushstroke shapes. This runs counter to artistic intuition: renowned painters such as Van Gogh are distinguished by their unique brushwork, which imparts a personal and expressive touch to their art. Capturing and transferring such geometric style elements remains an open challenge in 3D style transfer.

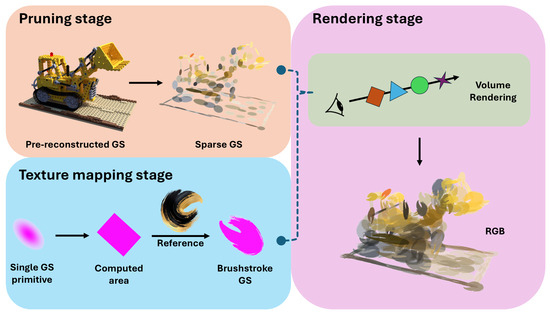

To address these limitations, we propose enhancing 3D Gaussian primitives with texture features for efficient and expressive brushstroke-aware 3D style transfer. Our method transforms a photorealistic 3D Gaussian scene, reconstructed from multi-view images, into a stylized sparse 3D Gaussian scene that supports high-quality and view-consistent renderings from novel viewpoints (see Figure 1). Our approach (Figure 2) consists of three key components: (1) Gaussian Pruning for Efficiency: we first apply an unsupervised clustering algorithm to prune dense 3D Gaussians, making each remaining primitive large enough to be visible and improving rendering efficiency. (2) Projected Area Computation: we compute the 2D projected area of each 3D Gaussian primitive in image space, providing a basis for texture mapping. (3) Texture Mapping for Stylization: we then project a style texture onto the computed Gaussian regions so that the brushstroke effects are accurately mapped onto the 3D scene. Compared to previous 3D stylization techniques, which often suffer from inaccurate geometry or a lack of fine-grained style details, our method provides structurally meaningful and visually appealing brushstroke-aware stylization.

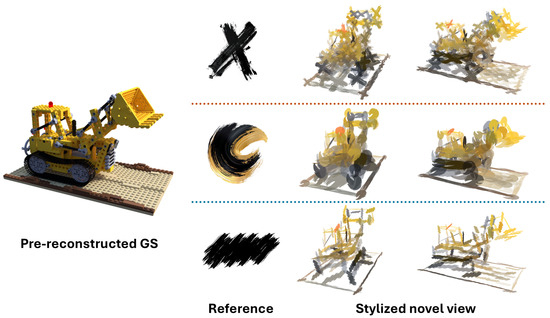

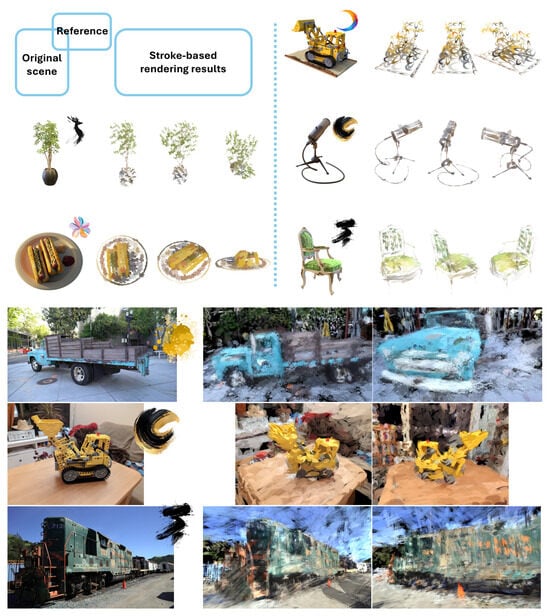

Figure 1.

We propose BrushGS, a novel approach for stroke-based rendering. Our method begins with a pre-reconstructed Gaussian Splatting representation of a scene (left), which is then transformed into an artistic Gaussian Splatting representation by applying texture mapping with a 2D brushstroke reference (middle). This process yields high-quality, stylized novel view synthesis (right), demonstrating the effectiveness of our approach in producing artistic creations.

Figure 2.

Overview of our method. We first prune pre-reconstructed 3D Gaussian Splatting (3DGS) into a sparse 3DGS and then apply texture mapping to project brushstroke references onto each Gaussian primitive. Once the stylization is complete, we achieve consistent, free-viewpoint stylized renderings.

In summary, our main contributions are as follows:

1. An efficient Gaussian pruning algorithm that reduces the density of 3D Gaussian scenes while maintaining structural consistency.

2. A texture mapping technique that enables the projection of arbitrary style textures onto 3D Gaussian primitives, preserving local geometric style elements such as brushstroke effects.

2. Related Works

In this section, we first introduce 2D image style transfer, which serves as the foundation for 3D style transfer. We then extend the discussion to 3D space, covering both 3D representations and their applications in 3D style transfer.

2.1. Two-Dimensional Image Style Transfer

Image style transfer, also known as image stylization, aims to transform an input content image into a stylized version by extracting and recombining the artistic features of a reference style. The key challenge lies in balancing the preservation of semantic content with artistic expressiveness. A failed attempt may result in an image that is too photorealistic with minimal stylization or, conversely, one where the original content is completely lost.

In the early stages, researchers designed hand-engineered filters to generate artistic effects, utilizing low-level operations such as blurring and sharpening [18,19,20,21,22]. These approaches provided fine-grained control over stylization and offered valuable insights into edge enhancement and texture manipulation. However, crafting effective filters was time-consuming and required expert knowledge [23], making it impractical for broader applications. More importantly, these methods struggled to capture the intricate and unique characteristics of an artist’s style. To overcome these limitations, researchers began exploring example-based approaches, which automatically extract and apply artistic style features to arbitrary images.

A significant breakthrough came with Gatys et al. [24], who first introduced a neural style transfer (NST) algorithm using a pre-trained convolutional neural network (VGG19) [25]. They discovered that a pre-trained VGG network, originally designed for object recognition, could effectively separate image content from style, enabling its application to image stylization. This pioneering work marked the beginning of a new era in example-based style transfer, leading to a surge of follow-up research aimed at improving speed [26,27,28,29,30], enhancing visual quality [31,32,33,34], ensuring stability for video stylization [35,36], and introducing finer control over brushstrokes [37,38,39]. In general, these methods formulated style transfer as a feature matching problem, requiring a pre-trained neural network to extract and manipulate style features.

An alternative perspective emerged, viewing image stylization as a domain adaptation problem, where the goal was to transform images from one distribution (content) to another (style). This shift in viewpoint led to the adoption of Generative Adversarial Networks (GANs) [40] for style transfer. Isola et al. [41] introduced pix2pix, a conditional GAN that generates stylized outputs based on paired training images. However, the requirement for well-aligned image pairs made training impractical for many real-world tasks. To address this, Zhu et al. [42] proposed CycleGAN, which introduced cycle consistency loss, enabling style transfer on unpaired datasets and significantly expanding the applicability of GAN-based stylization. Subsequent research extended GANs to multi-modal synthesis [43,44], architectural improvements [45,46,47,48,49,50], and style-conditioned generation [51,52], further demonstrating their potential for artistic content creation. These advancements sparked widespread adoption, with numerous artists experimenting with GAN-based stylization, showcasing its power in image synthesis.

Despite its success, GAN-based style transfer suffered from training instability and mode collapse, leading the research community to seek more robust alternatives. This paved the way for diffusion models [53,54], which soon outperformed GANs in both stability and image quality. Rombach et al. [55] introduced latent diffusion models, which significantly reduce computational costs while maintaining high-fidelity image synthesis. Building upon this work, style transfer researchers widely adopted diffusion models, leveraging their capabilities for conditional image generation [56,57], text-to-image synthesis [58,59,60,61], and interactive image editing [62,63,64]. Diffusion models further democratized digital art creation, lowering the barrier for non-professional users to generate high-quality stylized content.

While diffusion-based methods produce high-quality stylized images, 2D style transfer remains inherently limited to image space, lacking awareness of 3D structure, depth, and view consistency. Consequently, relying solely on 2D style transfer techniques is insufficient for 3D stylization. To bridge this gap, it is essential to incorporate 3D representations, enabling spatially consistent and geometrically aware stylization.

2.2. Three-Dimensional Representation and Style Transfer

Three-dimensional style transfer originates from 2D image style transfer and aims to stylize objects or scenes in 3D space. Compared to 2D style transfer, 3D style transfer is a relatively new and challenging task. In addition to balancing content and style, it must ensure view-consistent stylization across different perspectives. This additional requirement makes 3D style transfer inherently more complex and less explored than its 2D counterpart. To address these challenges, researchers have explored various 3D representations, including polygon meshes, point clouds, neural radiance fields (NeRFs), and 3D Gaussian Splatting, to enable view-consistent 3D style transfer.

A polygon mesh is a structured representation of a 3D object composed of vertices, edges, and faces that define its geometric shape. Mesh-based representations naturally separate shape (polygon structure) and color (UV map or texture), making them an intuitive choice for 3D style transfer. Early research primarily focused on texture stylization, leveraging UV mapping to transfer style-related color information onto 3D objects. For example, Chen et al. [65] incorporated physical reflectance effects to achieve realistic style transfer, while Höllein et al. [3] applied style transfer to mesh-based indoor scene reconstructions. Additionally, Richardson et al. [66] introduced a text-guided approach for texture generation, editing, and transfer on meshes. However, researchers sought to alter not only texture but also geometric shape. To this end, Liu et al. [67] introduced a differentiable rasterizer, allowing users to edit 3D surfaces using off-the-shelf image processing filters. Similarly, Gao et al. [68] proposed a method to automatically deform triangle meshes based on text descriptions. Despite these advancements, mesh-based methods suffer from a fundamental limitation: they rely on strictly connected polygon structures. Large deformations can introduce topological inconsistencies, leading to holes or distortions in the mesh. This constraint prevents mesh-based approaches from achieving sophisticated stroke-based rendering, limiting their flexibility in artistic style transfer.

A point cloud is a discrete set of data points in 3D space, representing object geometry without explicit connectivity. Similarly to meshes, point clouds contain both spatial (position) and color attributes, making them a promising 3D style transfer representation. Cao et al. [9] introduced a neural style transfer method for colored point clouds, enabling both geometry and color stylization. Huang et al. [6] further refined this approach by modulating style features via linear transformation matrices, capturing finer stylistic details. Mu et al. [7] extended point cloud stylization to single-image inputs, using depth estimation to infer 3D-aware content features and generate stylized novel views. Unlike meshes, point clouds do not require connectivity, making them less prone to topological issues. Consequently, point cloud-based methods often achieve better visual quality than mesh-based approaches. However, they remain sensitive to geometric reconstruction errors, particularly in complex real-world scenes, and suffer from high memory consumption and slow processing speeds. These limitations hinder their application in detailed and high-resolution stylization.

A neural radiance field (NeRF) [69] is an implicit 3D representation that encodes volumetric radiance and density in a deep neural network, allowing for photo-realistic rendering from novel viewpoints. Unlike mesh-based approaches, which rely on UV maps, NeRF stores learned volumetric features, which are then mapped to RGB values during rendering. Several works have demonstrated the potential of NeRF-based style transfer [12,13,14,15,16,17,70]. Zhang et al. [13] introduced a nearest-neighbor feature matching approach, surpassing previous methods in both visual quality and style preservation. Duan et al. [70] further extended NeRF-based techniques by incorporating stroke-based rendering, simulating the progressive painting process using vector strokes. Although NeRF-based representations generally surpass mesh- and point-cloud-based approaches in rendering quality, they are computationally expensive, requiring extensive sampling via deep neural networks. The resulting slow rendering speed is a major bottleneck for real-time applications.

To overcome NeRF’s computational bottlenecks, researchers have turned to 3D Gaussian Splatting [71], which provides both fast and high-quality rendering. Unlike NeRFs, which require volumetric sampling, 3D Gaussian Splatting represents a scene as a collection of 3D Gaussians that are rasterized directly, allowing for efficient real-time rendering. As a result, many recent 3D style transfer approaches have adopted 3D Gaussian primitives as their underlying representation [72,73,74,75]. For example, Wang et al. introduced GaussianEditor [75], a method for editing 3D scenes via 3D Gaussians with text-based instructions, enabling intuitive content creation and stylization.

Our work is most closely related to that of Duan et al. [70] and Chao et al. [76]. While Duan et al. introduced stroke-based rendering into NeRFs with a limited set of predefined stroke shapes requiring manual design, our approach enables arbitrary stroke shapes without additional intervention.

Chao et al. aim to improve photorealistic rendering quality by enriching each Gaussian with learnable texture maps (e.g., RGB or alpha), focusing on the faithful reconstruction of real-world appearance. In contrast, our work targets non-photorealistic rendering (NPR), with a strong emphasis on artistic stylization. We introduce a brushstroke-aware texture mapping method that enables not only color transfer but also shape-aware deformation, producing visually expressive, painterly renderings. Moreover, we incorporate unsupervised clustering and Gaussian pruning to enable stylization at different levels of abstraction, which is outside the scope of Chao et al. Our pipeline is explicitly designed for artistic control rather than photorealistic fidelity. Thus, while both approaches enhance 3D Gaussian primitives with texture-based representations, their motivations and technical focuses are fundamentally different.

3. Methods

In this section, we present our stroke-based rendering technique in detail. Given a photo-realistic 3D Gaussian Splatting (3DGS) model reconstructed from multi-view images of a complex scene, our approach transforms it into an artistic 3DGS by mapping a 2D brushstroke reference onto the 3D scene. To achieve this, we treat each 3D Gaussian primitive as an individual brushstroke unit. First, we compute the 2D projection of each 3D Gaussian primitive (Section 3.1), which naturally forms an elliptical shape in image space. Next, we convert this elliptical region into a rectangular one (Section 3.3) to match the shape of the given brushstroke reference. Finally, we apply texture mapping (Section 3.4), allowing each Gaussian to inherit the brushstroke shape of the provided reference image. However, applying this technique directly to a pre-trained 3DGS produces an imperceptible effect, because individual Gaussian primitives are too small to be visually distinguishable. To address this, we introduce a pruning and clustering algorithm (Section 3.2) that reduces the overall number of Gaussian primitives by grouping neighboring ones together and enlarges each resulting primitive, making the stylization effect visually noticeable.

3.1. Three-Dimensional Gaussian Splatting

In 3DGS, each Gaussian primitive is explicitly defined by its 3D covariance matrix $\Sigma$, which determines its shape and orientation, and its center $\mu$, which defines its position in 3D space. To render a 2D Gaussian ellipse from a 3D Gaussian primitive, the center of the projected 2D Gaussian ellipse is given as follows:

$$\mu' = P\mu, \tag{1}$$

where $P$ is the model-view-projection (MVP) matrix, which projects 3D points onto a 2D image plane.

To transform a 3D Gaussian distribution from world coordinates to camera coordinates, we follow the approach introduced by Zwicker et al. [77], which applies a viewing transformation $W$, followed by projection onto a 2D image plane via the Jacobian $J$ of a local affine approximation of the projective transformation. The transformed 3D covariance matrix is computed as follows:

$$\Sigma' = J W \Sigma W^{\top} J^{\top}. \tag{2}$$

Following Zwicker et al. [77], we obtain an approximate 2D covariance matrix $\Sigma_{2D}$ by removing the third row and third column of $\Sigma'$. This symmetric matrix consists of three distinct elements, $a$, $b$, and $c$,

$$\Sigma_{2D} = \begin{pmatrix} a & b \\ b & c \end{pmatrix},$$

where $a$ and $c$ are the variances and $b$ is the covariance of the projected Gaussian distribution in the image plane. These elements define the shape and orientation of the projected 2D Gaussian ellipse.
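The covariance projection described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a pinhole camera with focal lengths `fx` and `fy` (in pixels) and a world-to-camera transform split into a rotation `W` and a translation `t`; the function name `project_covariance` and its argument layout are hypothetical and not taken from the paper or the 3DGS code base. `J` is the Jacobian of the perspective map $(x, y, z) \mapsto (f_x x / z,\ f_y y / z)$ evaluated at the Gaussian center, padded with a zero third row.

```python
import numpy as np


def project_covariance(mu_world, cov_world, W, t, fx, fy):
    """Project a 3D Gaussian covariance onto the image plane (EWA-style sketch).

    mu_world : (3,) Gaussian center in world coordinates
    cov_world: (3, 3) 3D covariance matrix Sigma
    W, t     : (3, 3) world-to-camera rotation and (3,) translation
    fx, fy   : focal lengths in pixels (hypothetical pinhole camera)
    Returns the camera-space center and the 2x2 image-space covariance.
    """
    mu_cam = W @ mu_world + t  # center in camera coordinates
    x, y, z = mu_cam
    # Jacobian of (x, y, z) -> (fx * x / z, fy * y / z) at the center:
    # the local affine approximation J of the projective transformation.
    J = np.array([
        [fx / z, 0.0, -fx * x / z**2],
        [0.0, fy / z, -fy * y / z**2],
        [0.0, 0.0, 0.0],
    ])
    cov_cam = J @ W @ cov_world @ W.T @ J.T  # Sigma' = J W Sigma W^T J^T
    cov_2d = cov_cam[:2, :2]                 # drop the third row and column
    return mu_cam, cov_2d


# A unit-covariance Gaussian 10 units in front of a camera with f = 100 px
mu_cam, cov_2d = project_covariance(
    np.array([0.0, 0.0, 10.0]), np.eye(3), np.eye(3), np.zeros(3), 100.0, 100.0
)
# cov_2d == [[100., 0.], [0., 100.]]: a 1-unit std. dev. spans 10 px at depth 10
```

Only the covariance needs the Jacobian; the center itself is projected exactly by the camera matrix.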

For color rendering, 3DGS adopts an alpha-blending approach similar to NeRF-style volumetric rendering. The final pixel color $C$ is computed by accumulating the colors of the $N$ depth-ordered Gaussian primitives that overlap the pixel, blending them from front to back according to the conventional volume rendering equation:

$$C = \sum_{i=1}^{N} c_i \alpha_i \prod_{j=1}^{i-1} \left(1 - \alpha_j\right),$$

where $c_i$ is the color of each Gaussian, and $\alpha_i$ is the opacity computed using the 2D covariance matrix $\Sigma_{2D,i}$ and a learned per-point opacity parameter $o_i$.

Specifically, $\alpha_i$ is given by the following:

$$\alpha_i = o_i \exp\left(-\frac{1}{2}\, d_i^{\top} \Sigma_{2D,i}^{-1} d_i\right),$$

where $d_i = \mathbf{p} - \mu'_i$ represents the offset vector between the current pixel position $\mathbf{p}$ and the center $\mu'_i$ of the 2D Gaussian ellipse, measuring the pixel’s spatial distance from the Gaussian center in image space.
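The opacity falloff and front-to-back blending described above translate directly into a per-pixel loop. The sketch below is illustrative only: it assumes the Gaussians overlapping the pixel are already sorted front to back and are given as hypothetical `(mu_2d, cov_2d, opacity, rgb)` tuples. The reference CUDA rasterizer of 3DGS processes screen tiles in parallel and adds safeguards such as clamping α and skipping negligible contributions, which are omitted here.

```python
import numpy as np


def gaussian_alpha(pixel, mu_2d, cov_2d, opacity):
    """Opacity of one projected Gaussian at a pixel: o * exp(-1/2 d^T S^-1 d)."""
    d = pixel - mu_2d  # offset from the ellipse center
    power = -0.5 * d @ np.linalg.solve(cov_2d, d)  # solve avoids an explicit inverse
    return opacity * np.exp(power)


def composite_pixel(pixel, gaussians):
    """Front-to-back alpha blending over depth-sorted Gaussians."""
    color = np.zeros(3)
    transmittance = 1.0  # running product of (1 - alpha_j) over closer Gaussians
    for mu_2d, cov_2d, opacity, rgb in gaussians:
        alpha = gaussian_alpha(pixel, mu_2d, cov_2d, opacity)
        color += transmittance * alpha * np.asarray(rgb, dtype=float)
        transmittance *= 1.0 - alpha
    return color


p = np.array([5.0, 5.0])
front = (np.array([5.0, 5.0]), np.eye(2), 0.5, (1.0, 0.0, 0.0))  # red, centered
back = (np.array([5.0, 5.0]), np.eye(2), 1.0, (0.0, 1.0, 0.0))   # green, centered
print(composite_pixel(p, [front, back]))  # red and green each weighted 0.5
```

The half-transparent red Gaussian lets half of the light through, so the fully opaque green one behind it contributes the remaining 0.5.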

3.2. Gaussian Pruning and Expansion

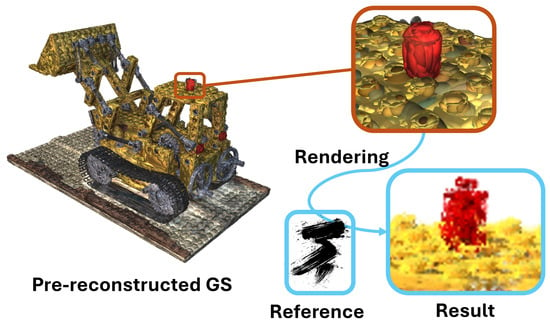

In general, 3DGS excels at representing highly detailed and complex scenes. However, it is not directly suitable for our task, as we treat each Gaussian primitive as a brushstroke unit. In most cases, a single Gaussian primitive in a pre-trained 3DGS covers only a few pixels, making it imperceptible in the final rendering, as illustrated in Figure 3. Moreover, given the growing popularity of 3DGS, numerous pre-trained models have been shared within the community. To leverage existing pre-trained 3DGS and enhance accessibility, we build our method on pre-trained 3DGS models rather than training from scratch.

Figure 3.

Texture mapping without pruning. The brushstroke reference is shown in the bottom left, and it is directly mapped onto each Gaussian primitive in the original pre-reconstructed 3DGS.

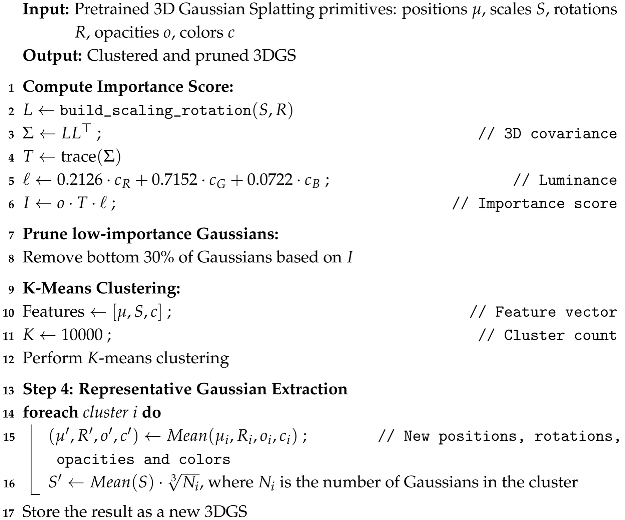

We propose an unsupervised pruning and clustering algorithm (see Algorithm A1) that transforms a dense pre-trained 3DGS into a sparser representation in which each Gaussian primitive is larger and more visually distinguishable. This is achieved through a two-step process: (1) clustering the Gaussians to reduce their total number and determine their new positions and (2) adjusting their scales so that each new Gaussian remains perceptually significant.

3.2.1. Clustering for 3DGS Reduction

Each Gaussian primitive in 3DGS is defined by its position $\mu$, scale $S$, rotation $R$, opacity $o$, and spherical harmonics (SH) coefficients. To improve the quality of clustering, we first compute the intensity $I$ of each Gaussian primitive and filter out those with low contributions. The intensity is defined as follows:

where $o$ denotes the opacity, $\Sigma$ is the 3D covariance matrix, and $l$ is the luminance of the Gaussian primitive’s color. The luminance $l$ is calculated from the RGB color converted from the spherical harmonics coefficients as follows:

where $R$, $G$, and $B$ are the respective color channels after clamping to the range $[0, 1]$.

Gaussians whose intensity $I$ falls below a threshold are pruned to remove floating noise and redundant primitives.
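The filtering step can be sketched as follows. Every numeric choice in it is an assumption made for illustration rather than the authors' exact formula: colors are taken from the degree-0 (DC) spherical harmonics term using the constant $Y_0^0 = 1/(2\sqrt{\pi})$ and the $+0.5$ offset of the original 3DGS code, luminance uses the Rec. 601 weights (0.299, 0.587, 0.114), and the hypothetical intensity $I = o \cdot l \cdot \sqrt{\det \Sigma}$ simply grows with opacity, brightness, and spatial extent. The threshold is likewise a placeholder.

```python
import numpy as np

SH_C0 = 0.28209479177387814  # Y_0^0 = 1 / (2 * sqrt(pi))


def dc_to_rgb(f_dc):
    """Degree-0 SH coefficients (N, 3) -> RGB clamped to [0, 1] (3DGS convention)."""
    return np.clip(SH_C0 * f_dc + 0.5, 0.0, 1.0)


def luminance(rgb):
    """Assumed Rec. 601 luma weights; the paper's exact weights may differ."""
    return rgb @ np.array([0.299, 0.587, 0.114])


def intensity(opacity, cov3d, rgb):
    """Hypothetical intensity I = o * l * sqrt(det(Sigma)), for illustration only."""
    extent = np.sqrt(np.clip(np.linalg.det(cov3d), 0.0, None))
    return opacity * luminance(rgb) * extent


def filter_gaussians(opacity, cov3d, f_dc, threshold):
    """Boolean mask of Gaussians whose intensity reaches the threshold."""
    return intensity(opacity, cov3d, dc_to_rgb(f_dc)) >= threshold


opacity = np.array([0.9, 0.01])
cov3d = np.stack([np.eye(3), np.eye(3)])  # (N, 3, 3) covariance matrices
f_dc = np.zeros((2, 3))                   # zero DC term -> mid-gray color
keep = filter_gaussians(opacity, cov3d, f_dc, threshold=0.05)
# keep -> [True, False]: the nearly transparent Gaussian is pruned
```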

After the filtering step, we perform clustering over the remaining Gaussians to aggregate them into n clusters, aiming to obtain a compact and efficient representation.

Considering the large number of primitives typically involved, we adopt MiniBatchKMeans instead of standard KMeans to reduce memory consumption and accelerate the clustering process, without significantly compromising the clustering quality. Each Gaussian is encoded as a feature vector that concatenates its 3D position, scaling parameters, and RGB color. Clustering is performed based on these feature vectors.

For each resulting cluster, we compute the mean values of the positions, opacities, scaling factors, rotations, and spherical harmonics coefficients of its assigned Gaussians. This aggregation effectively preserves both the geometric structure and appearance information of the original 3D Gaussian distribution while substantially reducing the number of primitives.
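A compact sketch of the clustering and aggregation steps follows. To stay self-contained, it implements mini-batch k-means (Sculley's update, in which each center is the running mean of the samples assigned to it) directly in NumPy; in practice one would presumably call scikit-learn's `MiniBatchKMeans`, the class the text names. The dictionary layout (keys such as `"xyz"`, `"scale"`, `"rgb"`, `"rot"`), the batch size, the iteration count, and the quaternion renormalization after averaging are assumptions made for this sketch, not details taken from the paper.

```python
import numpy as np


def minibatch_kmeans(X, k, batch_size=256, n_iter=100, seed=0):
    """Mini-batch k-means: each center is the running mean of its assigned samples."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    counts = np.zeros(k)
    for _ in range(n_iter):
        batch = X[rng.choice(len(X), size=min(batch_size, len(X)), replace=False)]
        # Assign each sample in the batch to its nearest center.
        dist = ((batch[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        labels = dist.argmin(1)
        for c in np.unique(labels):
            members = batch[labels == c]
            counts[c] += len(members)
            # Equivalent to per-sample updates with learning rate 1 / count.
            centers[c] += (members.sum(0) - len(members) * centers[c]) / counts[c]
    dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
    return dist.argmin(1)  # final assignment of every Gaussian


def cluster_gaussians(g, k, **kw):
    """Merge Gaussians (dict of per-Gaussian arrays) into at most k clusters.

    Features are [position, scale, RGB]; every attribute is averaged per cluster.
    """
    feats = np.concatenate([g["xyz"], g["scale"], g["rgb"]], axis=1)
    labels = minibatch_kmeans(feats, k, **kw)
    merged = {key: [] for key in g}
    merged["count"] = []
    for c in np.unique(labels):  # clusters that received no Gaussians are dropped
        idx = labels == c
        for key, val in g.items():
            merged[key].append(val[idx].mean(0))  # per-cluster mean attribute
        merged["count"].append(idx.sum())
    out = {key: np.array(v) for key, v in merged.items()}
    if "rot" in out:
        # Averaged quaternions are no longer unit length; renormalize them.
        out["rot"] /= np.linalg.norm(out["rot"], axis=1, keepdims=True)
    return out
```

Averaging quaternions component-wise is only a rough approximation (it ignores the sign ambiguity between $q$ and $-q$), but it matches the simple per-cluster averaging the text describes. The returned `count` array gives the cluster sizes $N$ needed for the scaling step.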

3.2.2. Adaptive Scaling of Gaussian Primitives

A straightforward approach would compute the new scale as the mean of all Gaussians in the cluster. However, this often results in small and barely visible Gaussians, which are not suitable for stroke-based rendering.

To address this issue, we adaptively scale each Gaussian primitive by introducing a scaling factor that depends on $N$, the number of Gaussians within the cluster. The new scale is computed as follows:

where S is the average scale of the original Gaussians in the cluster. This approach ensures that larger clusters produce proportionally larger Gaussians, enhancing perceptibility while maintaining geometric consistency within the sparse 3DGS.
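Once a factor is chosen, adaptive scaling reduces to a single multiplication. The text only states that the factor depends on the cluster size $N$ and grows with it; the cube-root default below is a hypothetical choice that keeps the merged Gaussian's volume roughly equal to the combined volume of $N$ similar-sized originals (since $(N^{1/3})^3 = N$). `adaptive_scale` and `factor_fn` are illustrative names. The sketch also assumes linear scales; 3DGS checkpoints typically store log-scales, which would need to be exponentiated first.

```python
import numpy as np


def adaptive_scale(mean_scale, counts, factor_fn=np.cbrt):
    """Enlarge merged Gaussians: S_new = factor(N) * S_mean.

    mean_scale: (K, 3) average linear (not log) scale per cluster
    counts    : (K,) number of original Gaussians N in each cluster
    factor_fn : hypothetical factor; the cube root preserves total volume
    """
    factor = factor_fn(np.asarray(counts, dtype=float))
    return mean_scale * factor[:, None]


mean_scale = np.array([[0.01, 0.01, 0.02], [0.01, 0.01, 0.01]])
new_scale = adaptive_scale(mean_scale, [8, 1])
# new_scale -> [[0.02, 0.02, 0.04], [0.01, 0.01, 0.01]]
```

A cluster of eight Gaussians doubles in size along each axis, while a singleton cluster keeps its original scale.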

3.3. Computing the 2D Projection Area

After pruning (i.e., removing redundant or insignificant Gaussian primitives), each remaining Gaussian primitive can be treated as a brushstroke unit ready for the style transfer process. In this section, we describe how to compute the bounding box of the projected 2D Gaussian primitive in the image plane. This step is crucial for mapping the texture accurately onto the 2D Gaussian, as the bounding box defines the spatial region in which the Gaussian contributes to the rendered texture.

Equations (1) and (2) show how to compute the mean and covariance of the 2D Gaussian after projection. The mean represents the center of the Gaussian distribution, and the covariance matrix characterizes its spread and orientation in 2D space.

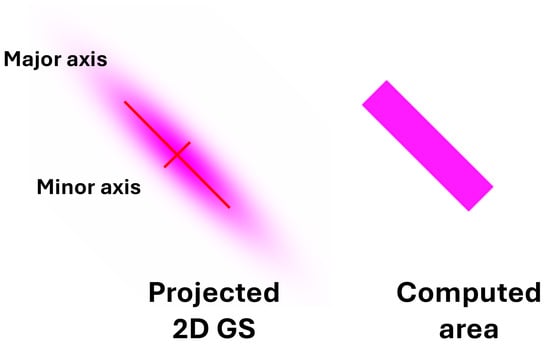

The next step is to convert the 2D Gaussian’s elliptical shape into a rectangular bounding box for the convenience of texture mapping (Figure 4), as rectangular boxes are easier to handle and align within the 2D image space when placing textures. To achieve this, we first compute the major axis (the longest axis) and minor axis (the shortest axis) of the 2D Gaussian ellipse, which together define its size in 2D space. The variances along the two axes are the eigenvalues of $\Sigma_{2D}$, computed using the following formulas:

$$\lambda_1 = m + \sqrt{m^2 - \det(\Sigma_{2D})}, \qquad \lambda_2 = m - \sqrt{m^2 - \det(\Sigma_{2D})},$$

where $m$ and $\det(\Sigma_{2D})$ are defined as follows:

$$m = \frac{a + c}{2}, \qquad \det(\Sigma_{2D}) = ac - b^2.$$

Figure 4.

Computing the area. By calculating the major and minor axes, we convert the Gaussian distribution from an elliptical to a rectangular area, making it easier for texture mapping.

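Here, $m$ is the average of the diagonal elements of the 2D covariance matrix (half of its trace), which corresponds to the mean variance of the ellipse. The determinant equals the product $\lambda_1 \lambda_2$ of the two eigenvalues (the variances along the major and minor axes) and is directly related to the area of the ellipse: the one-standard-deviation ellipse has semi-axes $\sqrt{\lambda_1}$ and $\sqrt{\lambda_2}$ and area $\pi\sqrt{\lambda_1 \lambda_2}$. A larger determinant therefore indicates a larger spread, while a smaller determinant indicates a more concentrated Gaussian distribution. The elongation, or “flattening”, of the ellipse is governed by the difference $\lambda_1 - \lambda_2 = 2\sqrt{m^2 - \lambda_1 \lambda_2}$, which vanishes for a circular Gaussian.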

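The computed rectangle must then be aligned with the original ellipse by applying a rotation. This rotation ensures that the major and minor axes of the rectangle match the orientation of the ellipse in image space.

The rotation can be computed as follows:

$$R(\theta) = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix},$$

where the angle $\theta$ between the major axis and the image $x$-axis can be computed as follows:

$$\theta = \frac{1}{2}\operatorname{atan2}\left(2b,\ a - c\right).$$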
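The axis and rotation computations above can be combined into one routine that returns the four corners of the oriented rectangle. This sketch assumes the rectangle spans ±3 standard deviations along each axis, the same 3σ extent the original 3DGS rasterizer uses for its screen-space radius; `oriented_box` and the `k_sigma` parameter are hypothetical names chosen for illustration.

```python
import numpy as np


def oriented_box(mu_2d, cov_2d, k_sigma=3.0):
    """Oriented rectangle enclosing a projected 2D Gaussian ellipse.

    Returns (corners (4, 2), half_extents (2,), theta).
    """
    a, b, c = cov_2d[0, 0], cov_2d[0, 1], cov_2d[1, 1]
    m = 0.5 * (a + c)                # mean of the diagonal elements
    det = a * c - b * b              # determinant of the 2D covariance
    root = np.sqrt(max(m * m - det, 0.0))
    lam1, lam2 = m + root, max(m - root, 0.0)  # variances along major/minor axes
    half = k_sigma * np.sqrt([lam1, lam2])     # half side lengths of the box
    theta = 0.5 * np.arctan2(2.0 * b, a - c)   # major-axis angle w.r.t. x-axis
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta), np.cos(theta)]])
    # Corners in the box frame (major axis along x), then rotated and shifted.
    local = np.array([[-1, -1], [1, -1], [1, 1], [-1, 1]]) * half
    corners = local @ R.T + mu_2d
    return corners, half, theta


corners, half, theta = oriented_box(np.zeros(2), np.array([[4.0, 0.0], [0.0, 1.0]]))
# half -> [6., 3.], theta -> 0.0, corners -> [[-6,-3], [6,-3], [6,3], [-6,3]]
```

Using `atan2` rather than `atan` keeps the angle on the major axis even when $a < c$ (for example, a vertically elongated ellipse yields $\theta = \pi/2$).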

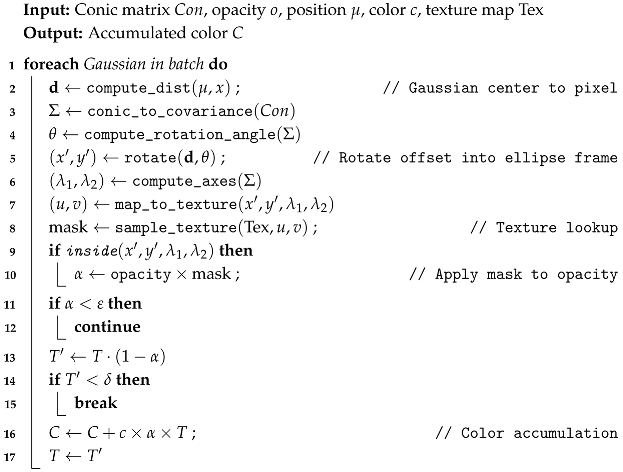

3.4. Texture Mapping

After computing the projected 2D bounding box and aligning it with the original elliptical Gaussian region, the next step is to apply texture mapping (see Algorithm A2). This allows each Gaussian primitive to inherit the shape and detail of a brushstroke texture, enhancing the artistic expressiveness of the rendering.

To ensure smooth texture mapping, we use bilinear interpolation. We assign coordinates to the four corners of the bounding box, which determine how the texture image is mapped onto the Gaussian primitive. Given a texture image of width $W$ and height $H$, the normalized coordinates $(u, v) \in [0, 1]^2$ are mapped to continuous pixel coordinates $(x, y)$ in texture space as follows:

$$x = u\,(W - 1), \qquad y = v\,(H - 1),$$

where u and v are computed using the spatial relationships between the projected Gaussian region and the texture’s coordinate system:

However, since $x$ and $y$ generally do not coincide with integer pixel positions, directly sampling the nearest pixel could produce blocky or jagged edges in the rendered texture. To prevent this, we apply bilinear interpolation, which blends the values of the four neighboring pixels to achieve a smooth transition between them:

$$T(x, y) = (1 - \delta_x)(1 - \delta_y)\,T_{00} + \delta_x (1 - \delta_y)\,T_{10} + (1 - \delta_x)\,\delta_y\,T_{01} + \delta_x \delta_y\,T_{11},$$

where $T_{ij} = T(\lfloor x \rfloor + i,\ \lfloor y \rfloor + j)$ represents the texture values at the four neighboring pixels, and $\delta_x = x - \lfloor x \rfloor$ and $\delta_y = y - \lfloor y \rfloor$ are the interpolation weights based on the distance between the target sampling point and the pixels’ positions.
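Bilinear sampling as described above can be written as follows. This sketch assumes a texture stored as an `(H, W, C)` float array and normalized coordinates mapped with $x = u(W-1)$ and $y = v(H-1)$; that mapping convention, the clamping at the texture border, and the name `sample_bilinear` are assumptions rather than details confirmed by the text.

```python
import numpy as np


def sample_bilinear(tex, u, v):
    """Bilinearly sample tex (H, W, C) at normalized coordinates u, v in [0, 1]."""
    H, W = tex.shape[:2]
    x = np.clip(u, 0.0, 1.0) * (W - 1)  # continuous column coordinate
    y = np.clip(v, 0.0, 1.0) * (H - 1)  # continuous row coordinate
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, W - 1), min(y0 + 1, H - 1)  # clamp at the border
    dx, dy = x - x0, y - y0             # interpolation weights
    top = (1 - dx) * tex[y0, x0] + dx * tex[y0, x1]
    bottom = (1 - dx) * tex[y1, x0] + dx * tex[y1, x1]
    return (1 - dy) * top + dy * bottom


tex = np.array([[[0.0], [1.0]],
                [[2.0], [3.0]]])  # 2x2 single-channel texture
print(sample_bilinear(tex, 0.5, 0.5))  # [1.5], the average of all four texels
```

Interpolating first along $x$ (rows `top` and `bottom`) and then along $y$ is algebraically identical to the four-term weighted sum.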

Texture mapping combined with bilinear interpolation allows brushstroke textures to be applied seamlessly to Gaussian primitives. This approach reduces artifacts such as jagged edges, enhancing rendering quality and transforming simple Gaussian primitives into expressive artistic elements that reflect the characteristics of the brushstroke style.
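To connect the oriented bounding box with the texture lookup, each pixel inside the box needs normalized coordinates $(u, v)$. One natural choice, sketched here as an assumption since the exact mapping is not spelled out, is to express the pixel's offset from the ellipse center in the box frame by undoing the rotation $R(\theta)$ and then dividing by the box side lengths. `pixel_to_uv` is a hypothetical helper; it also reports whether the pixel lies inside the box so that a caller can skip pixels outside the stroke.

```python
import numpy as np


def pixel_to_uv(pixel, mu_2d, half, theta):
    """Map an image pixel to normalized texture coordinates of an oriented box.

    half : (2,) half side lengths along the major and minor axes
    theta: major-axis angle w.r.t. the image x-axis
    Returns (u, v, inside), with u, v in [0, 1] for pixels inside the box.
    """
    d = np.asarray(pixel, dtype=float) - mu_2d
    c, s = np.cos(theta), np.sin(theta)
    # Apply R(theta)^T to express the offset in the box frame.
    local = np.array([c * d[0] + s * d[1], -s * d[0] + c * d[1]])
    uv = 0.5 * (local / half + 1.0)  # [-half, half] -> [0, 1]
    inside = bool(np.all((uv >= 0.0) & (uv <= 1.0)))
    return uv[0], uv[1], inside


# Box centered at the origin, 12 x 6 px, major axis pointing along image y
u, v, inside = pixel_to_uv((0.0, 3.0), np.zeros(2), np.array([6.0, 3.0]), np.pi / 2)
# (u, v) -> (0.75, 0.5): a quarter of the way from the center toward the box end
```

The resulting $(u, v)$ can then be passed to a bilinear sampler such as the one sketched earlier.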

4. Experiments

In this section, we present stylized results using various types of brushstrokes and compare the performance of our method against other state-of-the-art 3D style transfer techniques. We conduct experiments on the NeRF Synthetic [69] and Tanks and Temples (TnT) [71] datasets, both of which provide dozens to hundreds of multi-view images with known camera poses. Finally, we present ablation studies to evaluate the contribution of each component in our method.

4.1. Stroke-Based Rendering

Figure 5 presents stylized 3D synthetic scenes rendered using our method, with a variety of brushstroke shapes. In this example, we apply several brushstroke types, including moon, swirl, and flower shapes, among others. These results demonstrate the versatility and effectiveness of our method across different brushstroke shapes.

Figure 5.

Novel view synthesis results of various 3D brushstrokes. Our method produces artistic brushstroke representations while preserving strong semantic accuracy and geometric consistency.

A key hyperparameter in our method is the number of clusters produced by the clustering step. In our experiments, we fixed the number of clusters to 150 for the NeRF Synthetic dataset, as its scenes are relatively simple, typically containing a single object without a complex background. This value was chosen empirically: through extensive experiments, we found that 150 clusters achieve a good balance between preserving semantic meaning and providing an abstract stylization effect.

For more complex scenes such as those in the Tanks and Temples (TnT) dataset, which contain both foreground and background elements, we set the number of clusters to 10% of the total number of pretrained 3D Gaussian primitives. This setting was also determined empirically and offers a reasonable trade-off between preserving detailed structures and achieving abstract stylization. Furthermore, we allow users to adjust this hyperparameter: a smaller number of clusters leads to a more abstract rendering, while a larger number results in a more realistic and detailed appearance, giving users flexible control over the stylization level.

Figure 6 illustrates the transition from abstract to detailed painting results by varying the number of 3D Gaussian primitives used to reconstruct the scene. We observe that a lower number of strokes captures a strong shape-related geometric style, while increasing the number of strokes results in reconstructions with higher fidelity and finer details.

Figure 6.

Results with varying numbers of brushstrokes. By increasing the number of strokes, our stroke-based rendering captures more details and enhances the overall artistic effect.

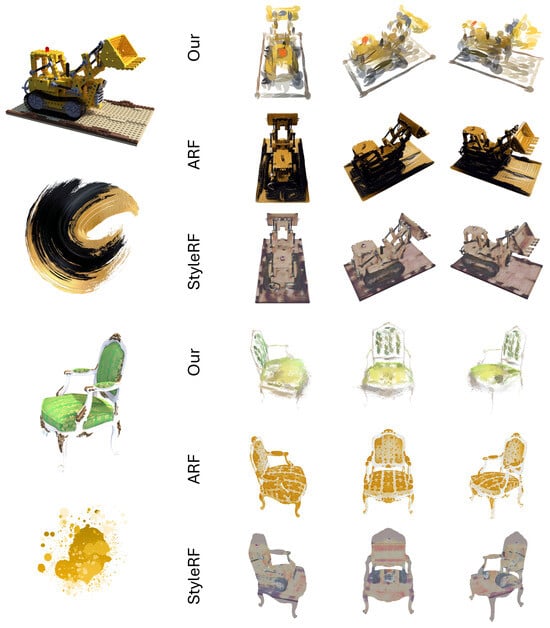

4.2. Comparison with Other Methods

We provide a visual comparison with state-of-the-art 3D style transfer methods [13,14]. Specifically, Zhang et al. [13] replace the traditional Gram-matrix-based VGG loss with a nearest-neighbor-based feature matching loss, which is highly effective in capturing style details while maintaining multi-view consistency. Liu et al. [14] propose StyleRF, which restores high-fidelity 3D geometry and enables zero-shot style transfer using an explicit grid of high-level scene features. It introduces two innovations: sampling-invariant content transformation for multi-view consistency and deferred style transformation to reduce memory usage. For both methods, we use the released code and train the models from scratch. We compare against image-reference-based 3D stylization methods rather than text-based methods [16,75], as they offer a more direct and fair comparison for our brushstroke-based approach.

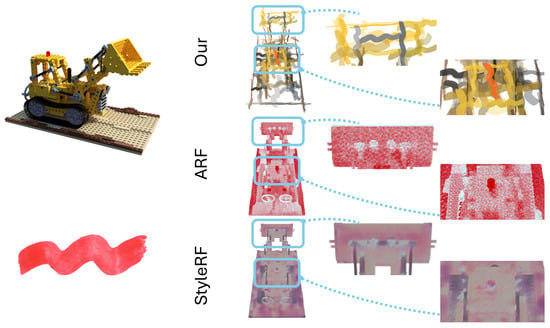

Visually, our results reproduce the brushstroke shapes of the provided image reference more accurately than the baselines (Figure 7). For example, in the chair scene shown in Figure 8, we use a brushstroke reference that features a “splatting” style. Our method not only preserves this splatter-like appearance, producing a literal “Gaussian Splatting”, but also faithfully stylizes the object’s shape, demonstrating both geometric fidelity and artistic expressiveness. In contrast, the baselines ARF [13] and StyleRF [14] leave the pre-reconstructed density field unchanged and stylize only surface color and texture. As a result, their outputs appear less vivid.

Figure 7.

Local-detail comparison with ARF [13] and StyleRF [14]. Our method faithfully captures the characteristic shape and texture of the reference brushstroke, yielding stylizations that are more consistent with artistic intuition and visually pleasing than the baseline approaches.

Figure 8.

Comparison with baseline methods ARF [13] and StyleRF [14] on the NeRF Synthetic dataset. Our stylized novel views faithfully capture the brushstroke features from the provided reference, yielding vivid stroke-based details. In contrast, the baseline methods focus primarily on color and texture, resulting in less vibrant and representative outputs.

To show that our method transfers brushstroke style without sacrificing scene semantics, we conduct a quantitative study with CLIP [78]. For every stylized rendering, we compute the cosine similarity between its CLIP image embedding and the CLIP text embedding of the prompt “This is an image of #.”, where # is replaced by the NeRF Synthetic scene label (chair, ficus, hotdog, lego, materials, or mic). Table 1 lists the CLIP similarity scores of our approach and the baselines under several brushstroke styles; higher values indicate better preservation of the underlying semantic content. As shown in Table 1, our method attains the highest average CLIP similarity among all compared methods, indicating that it maintains the original scene’s semantics during 3D style transfer.
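The scoring protocol reduces to a cosine similarity between two embedding vectors. In the minimal sketch below, the CLIP image and text embeddings are assumed to be computed elsewhere, for example with the `encode_image` and `encode_text` methods of OpenAI's `clip` package. `make_prompt`, `cosine_similarity`, and `mean_clip_similarity` are hypothetical helper names, and short toy vectors stand in for real CLIP embeddings.

```python
import math
from typing import List, Sequence, Tuple

# Scene labels of the NeRF Synthetic scenes used in the study.
SCENE_LABELS = ["chair", "ficus", "hotdog", "lego", "materials", "mic"]


def make_prompt(label: str) -> str:
    """Fill the scene label into the prompt template 'This is an image of #.'"""
    return f"This is an image of {label}."


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two (non-zero) embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def mean_clip_similarity(pairs: List[Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Average similarity over (image_embedding, text_embedding) pairs,
    e.g. all stylized renderings produced by one method for one style."""
    return sum(cosine_similarity(img, txt) for img, txt in pairs) / len(pairs)
```

Computing `mean_clip_similarity` separately for each method and brushstroke style yields one value per cell of a comparison such as Table 1.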

Table 1.

Preservation of scene semantics: comparison of CLIP similarity (↑, higher is better).

4.3. Limitations

Although our method yields promising results, it still has several limitations.

First, although our Gaussian Splatting pruning approach can be applied to complex datasets such as Tanks and Temples (TnT), it requires the number of clusters to be set manually. This setting can significantly affect the rendered artistic style: fewer (and therefore larger) clusters tend to produce coarser, more abstract results, whereas more (and smaller) clusters preserve finer details. An automatic method for determining an appropriate number of clusters would further improve usability and consistency.

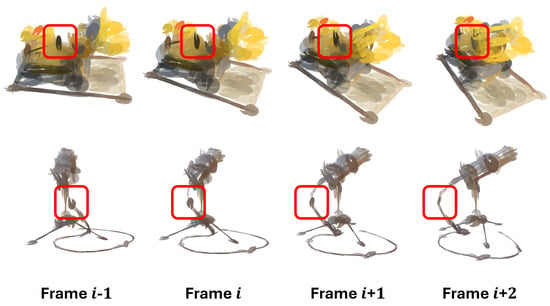

Second, because Gaussian Splatting primitives are inherently symmetric with respect to the left–right and up–down directions, our texture mapping may produce inverted or inconsistent details in some rendered frames, leading to minor artifacts in video sequences. Figure 9 shows such an inconsistency in four consecutive frames that include frame i. The red rectangle highlights an individual GS primitive that flips upside down, while most of the surrounding primitives remain stable. This issue occurs only when the affected primitive is aligned perfectly horizontally or vertically, which makes its orientation sensitive to directional ambiguity during projection. A potential solution is to track the orientation of each 3DGS primitive across consecutive frames during rendering and use the orientation from the previous frame as a temporal constraint to correct inconsistencies in subsequent frames. This can mitigate abrupt flipping and other directional artifacts by keeping the projected texture and stroke direction consistent over time.
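The proposed temporal constraint can be sketched for a single primitive in 2D. The sketch rests on our assumptions, not on the paper's implementation. We take the stroke direction to be the major axis of the projected 2D covariance [[a, b], [b, c]], computed from the angle 0.5 · atan2(2b, a − c). That convention confines the axis to a half-plane. For a primitive aligned almost exactly vertically, a sign change in the tiny off-diagonal term b therefore flips the axis from up to down, which is one plausible mechanism for flips like the one in Figure 9. Negating the new axis whenever it opposes the previous frame's axis removes the flip. `major_axis`, `stabilize`, and `track` are hypothetical names.

```python
import math
from typing import Iterable, List, Optional, Tuple

Vec2 = Tuple[float, float]


def major_axis(a: float, b: float, c: float) -> Vec2:
    """Unit major axis of the projected 2D covariance [[a, b], [b, c]].

    The angle 0.5 * atan2(2b, a - c) lies in [-pi/2, pi/2], so the x component
    is never negative. Near vertical alignment, a tiny sign change in b moves
    the angle from about +pi/2 to about -pi/2 and flips the axis upside down.
    """
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    return (math.cos(theta), math.sin(theta))


def stabilize(axis: Vec2, prev: Optional[Vec2]) -> Vec2:
    """Temporal constraint: negate the axis if it opposes the previous frame's axis."""
    if prev is not None and axis[0] * prev[0] + axis[1] * prev[1] < 0.0:
        return (-axis[0], -axis[1])
    return axis


def track(axes: Iterable[Vec2]) -> List[Vec2]:
    """Apply the constraint frame by frame for one primitive."""
    prev: Optional[Vec2] = None
    out: List[Vec2] = []
    for axis in axes:
        prev = stabilize(axis, prev)
        out.append(prev)
    return out
```

The stabilized axis could then be converted to a texture rotation, for example with `math.atan2(axis[1], axis[0])`. Nearly isotropic primitives (a ≈ c and b ≈ 0) have no well-defined major axis, so a full implementation would likely need a separate rule for them.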

Figure 9.

Inconsistent frame demonstration. The Gaussian Splatting primitive highlighted by the red box flips upside down in frame i, while remaining stable in adjacent frames.

Finally, our method does not yet support text-based editing, which would enable more flexible and creative artistic workflows.

5. Conclusions

We presented a method for reconstructing artistic 3D Gaussian Splatting (3DGS) from photorealistic 3DGS, guided by user-specified brushstroke references. By bridging the gap between color-based and stroke-based stylization, our approach emphasizes the geometry of brushstrokes—capturing the distinctive local structures that define an artist’s personal style, much like the expressive swirls of Van Gogh. In contrast to traditional methods that focus mainly on global texture and color, our method aligns more closely with artistic intuition, recognizing brushwork as a central component of artistic expression.

The key to our method’s success is the combination of a coarse-to-fine pruning algorithm, which reduces the number of 3DGS primitives, with a texture mapping strategy, rather than reliance on the perceptual losses used in prior work. The experimental results show that our stroke-based rendering not only preserves the semantic structure of the scene but also faithfully replicates expressive, localized brushstroke characteristics, outperforming the ARF and StyleRF baselines in 3D stroke-based stylization.

Author Contributions

Conceptualization, Z.-Z.X. and C.X.; methodology, Z.-Z.X.; software, Z.-Z.X.; validation, Z.-Z.X., C.X. and I.K.; formal analysis, C.X.; investigation, Z.-Z.X.; resources, Z.-Z.X.; data curation, Z.-Z.X.; writing—original draft preparation, Z.-Z.X.; writing—review and editing, C.X.; visualization, Z.-Z.X.; supervision, I.K.; project administration, I.K.; funding acquisition, I.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JST SPRING, Japan Grant Number JPMJSP2124. This research also received partial funding from the JSPS Grant-in-Aid for Scientific Research (Grant Number 25K03146).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available in a publicly accessible repository: https://github.com/Xzzit/BrushGS, accessed on 15 June 2025.

Acknowledgments

The authors would like to express sincere gratitude to Tong Yan for her invaluable support and insightful suggestions throughout the preparation of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Symbol | Description |
|---|---|
|  | The mean value of a 3D Gaussian Splatting (3DGS) primitive |
|  | The covariance matrix of a 3DGS primitive |
|  | Variance of a 3DGS primitive projected onto the image plane |
|  | Covariance of a 3DGS primitive projected onto the image plane |
| o | The opacity of a 3DGS primitive |
| c | The color of a 3DGS primitive |

Appendix A

Algorithm A1: Densification and Clustering

Algorithm A2: Texture Mapping Algorithm for 3DGS

References

- Kato, H.; Ushiku, Y.; Harada, T. Neural 3d mesh renderer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3907–3916.

- Gomes Haetinger, G.; Tang, J.; Ortiz, R.; Kanyuk, P.; Azevedo, V. Controllable Neural Style Transfer for Dynamic Meshes. In Proceedings of the ACM SIGGRAPH 2024 Conference Papers, Denver, CO, USA, 27 July–1 August 2024; pp. 1–11.

- Höllein, L.; Johnson, J.; Nießner, M. Stylemesh: Style transfer for indoor 3d scene reconstructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6198–6208.

- Ma, Y.; Zhang, X.; Sun, X.; Ji, J.; Wang, H.; Jiang, G.; Zhuang, W.; Ji, R. X-mesh: Towards fast and accurate text-driven 3d stylization via dynamic textual guidance. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 2749–2760.

- Michel, O.; Bar-On, R.; Liu, R.; Benaim, S.; Hanocka, R. Text2mesh: Text-driven neural stylization for meshes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13492–13502.

- Huang, H.P.; Tseng, H.Y.; Saini, S.; Singh, M.; Yang, M.H. Learning to stylize novel views. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13869–13878.

- Mu, F.; Wang, J.; Wu, Y.; Li, Y. 3d photo stylization: Learning to generate stylized novel views from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16273–16282.

- Zhang, Y.; Wang, H.; Lin, G.; Nicholas, V.C.H.; Shen, Z.; Miao, C. StarNet: Style-Aware 3D Point Cloud Generation. arXiv 2023, arXiv:2303.15805.

- Cao, X.; Wang, W.; Nagao, K.; Nakamura, R. Psnet: A style transfer network for point cloud stylization on geometry and color. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 1–5 March 2020; pp. 3337–3345.

- Bae, E.; Kim, J.; Lee, S. Point Cloud-Based Free Viewpoint Artistic Style Transfer. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo Workshops (ICMEW). IEEE, Brisbane, Australia, 10–14 July 2023; pp. 302–307.

- Zhou, Y.; Xu, C.; Lin, Z.; He, X.; Huang, H. Point-StyleGAN: Multi-scale point cloud synthesis with style modulation. Comput. Aided Geom. Des. 2024, 111, 102309.

- Chiang, P.Z.; Tsai, M.S.; Tseng, H.Y.; Lai, W.S.; Chiu, W.C. Stylizing 3d scene via implicit representation and hypernetwork. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, New Orleans, LA, USA, 18–24 June 2022; pp. 1475–1484.

- Zhang, K.; Kolkin, N.; Bi, S.; Luan, F.; Xu, Z.; Shechtman, E.; Snavely, N. Arf: Artistic radiance fields. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 717–733.

- Liu, K.; Zhan, F.; Chen, Y.; Zhang, J.; Yu, Y.; El Saddik, A.; Lu, S.; Xing, E.P. Stylerf: Zero-shot 3d style transfer of neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 8338–8348.

- Zhang, Y.; He, Z.; Xing, J.; Yao, X.; Jia, J. Ref-npr: Reference-based non-photorealistic radiance fields for controllable scene stylization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 4242–4251.

- Haque, A.; Tancik, M.; Efros, A.A.; Holynski, A.; Kanazawa, A. Instruct-nerf2nerf: Editing 3d scenes with instructions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 19740–19750.

- Men, Y.; Liu, H.; Yao, Y.; Cui, M.; Xie, X.; Lian, Z. 3DToonify: Creating Your High-Fidelity 3D Stylized Avatar Easily from 2D Portrait Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 10127–10137.

- Haeberli, P. Paint by numbers: Abstract image representations. In Proceedings of the 17th Annual Conference on Computer Graphics and Interactive Techniques, Dallas, TX, USA, 6–10 August 1990; pp. 207–214.

- Hertzmann, A. Painterly rendering with curved brush strokes of multiple sizes. In Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques, Orlando, FL, USA, 19–24 July 1998; pp. 453–460.

- Hertzmann, A.; Jacobs, C.E.; Oliver, N.; Curless, B.; Salesin, D.H. Image analogies. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, Association for Computing Machinery, 2001, SIGGRAPH ’01. Los Angeles, CA, USA, 12–17 August 2001; pp. 327–340.

- Winnemöller, H.; Olsen, S.C.; Gooch, B. Real-time video abstraction. ACM Trans. Graph. 2006, 25, 1221–1226.

- Rosin, P.; Collomosse, J. Image and Video-Based Artistic Stylisation; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 42.

- Hertzmann, A. A survey of stroke-based rendering. IEEE Comput. Graph. Appl. 2003, 23, 70–81.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2414–2423.

- Simonyan, K. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Computer Vision—ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14; Springer: Berlin/Heidelberg, Germany, 2016; pp. 694–711.

- Ulyanov, D. Instance normalization: The missing ingredient for fast stylization. arXiv 2016, arXiv:1607.08022.

- Ulyanov, D.; Lebedev, V.; Vedaldi, A.; Lempitsky, V. Texture networks: Feed-forward synthesis of textures and stylized images. arXiv 2016, arXiv:1603.03417.

- Chen, D.; Yuan, L.; Liao, J.; Yu, N.; Hua, G. Stylebank: An explicit representation for neural image style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1897–1906.

- Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; Yang, M.H. Universal style transfer via feature transforms. Adv. Neural Inf. Process. Syst. 2017, 30, 385–395.

- Huang, X.; Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1501–1510.

- Risser, E.; Wilmot, P.; Barnes, C. Stable and controllable neural texture synthesis and style transfer using histogram losses. arXiv 2017, arXiv:1701.08893.

- Wang, X.; Oxholm, G.; Zhang, D.; Wang, Y.F. Multimodal transfer: A hierarchical deep convolutional neural network for fast artistic style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5239–5247.

- Kolkin, N.; Kucera, M.; Paris, S.; Sykora, D.; Shechtman, E.; Shakhnarovich, G. Neural neighbor style transfer. arXiv 2022, arXiv:2203.13215.

- Gupta, A.; Johnson, J.; Alahi, A.; Fei-Fei, L. Characterizing and improving stability in neural style transfer. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4067–4076.

- Wang, T.C.; Liu, M.Y.; Zhu, J.Y.; Liu, G.; Tao, A.; Kautz, J.; Catanzaro, B. Video-to-video synthesis. arXiv 2018, arXiv:1808.06601.

- Kotovenko, D.; Wright, M.; Heimbrecht, A.; Ommer, B. Rethinking style transfer: From pixels to parameterized brushstrokes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12196–12205.

- Zou, Z.; Shi, T.; Qiu, S.; Yuan, Y.; Shi, Z. Stylized neural painting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15689–15698.

- Liu, S.; Lin, T.; He, D.; Li, F.; Deng, R.; Li, X.; Ding, E.; Wang, H. Paint transformer: Feed forward neural painting with stroke prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6598–6607.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Patashnik, O.; Wu, Z.; Shechtman, E.; Cohen-Or, D.; Lischinski, D. Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2085–2094.

- Crowson, K.; Biderman, S.; Kornis, D.; Stander, D.; Hallahan, E.; Castricato, L.; Raff, E. Vqgan-clip: Open domain image generation and editing with natural language guidance. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 88–105.

- Karras, T. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv 2017, arXiv:1710.10196.

- Karras, T. A Style-Based Generator Architecture for Generative Adversarial Networks. arXiv 2019, arXiv:1812.04948.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

- Karras, T.; Aittala, M.; Hellsten, J.; Laine, S.; Lehtinen, J.; Aila, T. Training generative adversarial networks with limited data. Adv. Neural Inf. Process. Syst. 2020, 33, 12104–12114.

- Karras, T.; Aittala, M.; Laine, S.; Härkönen, E.; Hellsten, J.; Lehtinen, J.; Aila, T. Alias-free generative adversarial networks. Adv. Neural Inf. Process. Syst. 2021, 34, 852–863.

- Chen, X.; Xu, C.; Yang, X.; Song, L.; Tao, D. Gated-gan: Adversarial gated networks for multi-collection style transfer. IEEE Trans. Image Process. 2018, 28, 546–560.

- Kazemi, H.; Iranmanesh, S.M.; Nasrabadi, N. Style and content disentanglement in generative adversarial networks. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV). IEEE, Waikoloa Village, HI, USA, 7–11 January 2019; pp. 848–856.

- Park, T.; Zhu, J.Y.; Wang, O.; Lu, J.; Shechtman, E.; Efros, A.; Zhang, R. Swapping Autoencoder for Deep Image Manipulation. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 7198–7211.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Dhariwal, P.; Nichol, A. Diffusion models beat gans on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Zhang, L.; Rao, A.; Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 3836–3847.

- Zhang, Y.; Huang, N.; Tang, F.; Huang, H.; Ma, C.; Dong, W.; Xu, C. Inversion-based style transfer with diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10146–10156.

- Brooks, T.; Holynski, A.; Efros, A.A. Instructpix2pix: Learning to follow image editing instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18392–18402.

- Ruiz, N.; Li, Y.; Jampani, V.; Pritch, Y.; Rubinstein, M.; Aberman, K. Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22500–22510.

- Kawar, B.; Zada, S.; Lang, O.; Tov, O.; Chang, H.; Dekel, T.; Mosseri, I.; Irani, M. Imagic: Text-based real image editing with diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6007–6017.

- Shi, M.; Seo, J.; Cha, S.H.; Xiao, B.; Chi, H.L. Generative AI-powered architectural exterior conceptual design based on the design intent. J. Comput. Des. Eng. 2024, 11, 125–142.

- Meng, C.; He, Y.; Song, Y.; Song, J.; Wu, J.; Zhu, J.Y.; Ermon, S. Sdedit: Guided image synthesis and editing with stochastic differential equations. arXiv 2021, arXiv:2108.01073.

- Zhang, Z.; Han, L.; Ghosh, A.; Metaxas, D.N.; Ren, J. Sine: Single image editing with text-to-image diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6027–6037.

- Avrahami, O.; Fried, O.; Lischinski, D. Blended latent diffusion. ACM Trans. Graph. 2023, 42, 1–11.

- Chen, Y.; Chen, R.; Lei, J.; Zhang, Y.; Jia, K. Tango: Text-driven photorealistic and robust 3d stylization via lighting decomposition. Adv. Neural Inf. Process. Syst. 2022, 35, 30923–30936.

- Richardson, E.; Metzer, G.; Alaluf, Y.; Giryes, R.; Cohen-Or, D. Texture: Text-guided texturing of 3d shapes. In Proceedings of the ACM SIGGRAPH 2023 Conference Proceedings, Los Angeles, CA, USA, 6–10 August 2023; pp. 1–11.

- Liu, H.T.D.; Tao, M.; Jacobson, A. Paparazzi: Surface editing by way of multi-view image processing. ACM Trans. Graph. 2018, 37, 221.

- Gao, W.; Aigerman, N.; Groueix, T.; Kim, V.; Hanocka, R. Textdeformer: Geometry manipulation using text guidance. In Proceedings of the ACM SIGGRAPH 2023 Conference Proceedings, Los Angeles, CA, USA, 6–10 August 2023; pp. 1–11.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

- Duan, H.B.; Wang, M.; Li, Y.X.; Yang, Y.L. Neural 3D Strokes: Creating Stylized 3D Scenes with Vectorized 3D Strokes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5240–5249.

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3d gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 2023, 42, 139.

- Kovács, Á.S.; Hermosilla, P.; Raidou, R.G. G-Style: Stylized Gaussian Splatting. Comput. Graph. Forum 2024, 43, e15259.

- Zhang, D.; Yuan, Y.J.; Chen, Z.; Zhang, F.L.; He, Z.; Shan, S.; Gao, L. Stylizedgs: Controllable stylization for 3d gaussian splatting. arXiv 2024, arXiv:2404.05220.

- Liu, K.; Zhan, F.; Xu, M.; Theobalt, C.; Shao, L.; Lu, S. Stylegaussian: Instant 3d style transfer with gaussian splatting. In Proceedings of the SIGGRAPH Asia 2024 Technical Communications, Tokyo, Japan, 3–6 December 2024; pp. 1–4.

- Wang, J.; Fang, J.; Zhang, X.; Xie, L.; Tian, Q. Gaussianeditor: Editing 3d gaussians delicately with text instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 20902–20911.

- Chao, B.; Tseng, H.Y.; Porzi, L.; Gao, C.; Li, T.; Li, Q.; Saraf, A.; Huang, J.B.; Kopf, J.; Wetzstein, G.; et al. Textured Gaussians for Enhanced 3D Scene Appearance Modeling. arXiv 2024, arXiv:2411.18625.

- Zwicker, M.; Pfister, H.; Van Baar, J.; Gross, M. EWA splatting. IEEE Trans. Vis. Comput. Graph. 2002, 8, 223–238.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the 38th International Conference on Machine Learning. PmLR, Virtual, 18–24 July 2021; pp. 8748–8763.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).