Abstract

Background: Automatic classification of short texts on online healthcare platforms is crucial for increasing service efficiency and improving the user experience. To address feature sparsity and semantic ambiguity in short text classification, we construct a method that combines keyword expansion with a deep learning model. Methods: First, we use web crawlers to obtain patient data from the online medical platform “Good Doctor”. Next, we use TF-IWF to weight keyword importance and Word2vec to calculate keyword similarity in order to expand the short text features. Finally, we integrate prompt learning with deep learning models to construct the self-adaptive-attention-Prompt-BERT-RCNN model, which addresses sparse features and unclear semantics and realizes effective classification of medical short texts. Results: Empirical studies show that the classification effect after keyword expansion is significantly higher than that before expansion, the accuracy of the model in classifying medical short texts after expansion is as high as 97.84%, and the model performs well in different categories of medical short texts. Conclusions: The short text expansion methods of TF-IWF and Word2vec make up for the shortcomings of not taking into account the keyword rarity and the contextual information of the subwords, and the model can achieve effective classification of medical short texts by combining them. The model further improves the classification accuracy of short text by integrating Prompt’s guidance, self-adaptive attention’s keyword weighting, BERT’s deep semantic understanding, and RCNN’s region awareness and feature extraction; however, the model’s accuracy in individual topics still needs to be improved.
The results show that the recommender system can effectively improve the efficiency of patient consultation and support the development of online healthcare.

1. Introduction

In recent years, with the rapid development of information technology and the comprehensive promotion of healthcare informatization, online medical platforms have become an important part of modern medical services. According to the “14th Five-Year Plan for Healthcare Informatization of the Whole Population”, health informatization is a key factor in promoting the innovation of medical and health services and improving public health [1]. Online medical platforms provide patients with convenient avenues for medical services through internet technology [2], enabling patients to obtain professional medical advice through online consultation, which greatly improves the accessibility and convenience of medical services. This model not only reduces the time cost for patients to travel to hospitals but also alleviates the problem of uneven distribution of medical resources, which is especially significant in remote areas and regions with scarce medical resources. One of the core functions of online medical platforms is to provide users with a more convenient channel for consultation [3,4]. Users can describe their symptoms or health problems through the platform, and the platform recommends suitable departments or doctors based on the information provided by users. However, consultation messages are usually short text, characterized by sparse features, unclear semantics and high requirements for professionalism. The limited extent of vocabulary in short text makes it difficult to capture enough semantic information, and the expression is often not specific enough, which may lead to ambiguity in categorization. In addition, short text in the medical field involves a large number of specialized terms, which puts higher requirements on the model’s semantic understanding and classification ability.

Some existing short text categorization methods (e.g., TF-IDF, etc.) [5,6,7] have achieved certain results, but they are still deficient in dealing with rare words and contextual information. For example, TF-IDF cannot capture the rarity of words. Deep learning models (e.g., BERT and RCNN) have demonstrated strong performance in text categorization tasks, but their performance largely depends on the feature richness and semantic clarity of the input data. Therefore, how to enhance the semantic information of short texts by keyword expansion techniques and combine them with deep learning models to realize efficient department recommendation has become an urgent problem for online medical platforms.

Users of online medical platforms usually need to select a doctor in a certain department on their own, but because they lack comprehensive understanding and medical expertise, they often register with the wrong department and consult the wrong doctor. The triage staff guidance used in offline hospitals is not applicable to online consultation platforms: it would increase the platform’s operating cost and the time cost of patients’ online consultation, and it would reduce the convenience and timeliness of the platform. Therefore, the automatic categorization of consultation information through intelligent models and the recommendation of appropriate departments for patients have become an urgent problem for online medical platforms. Accurate department recommendation can not only improve the efficiency of users’ medical treatment but also optimize the distribution of medical resources and improve the overall service quality of the platform.

To this end, this study proposes a short text categorization method combining keyword expansion and deep learning models, aiming to solve the feature sparsity and semantic ambiguity problems of short text categorization in online medical platforms, so as to achieve accurate department recommendation. Enhancing the semantic information of short text by the keyword expansion technique and realizing efficient department recommendation by combining deep learning models can significantly improve the service efficiency and user experience of online medical platforms.

2. Related Work

2.1. Short Text Categorization Study

Text categorization is a core task in the field of natural language processing (NLP), which is defined as the classification of textual data into one or more predefined categories according to specific rules or criteria. These categories can be classified based on a variety of dimensions such as topic, sentiment, intent, etc. Text categorization has an extremely wide range of applications, covering a variety of fields such as information retrieval, text mining, intelligent recommender systems, and so on. By categorizing documents, it can help users find the information they need faster and improve retrieval efficiency.

With the rapid development of information technology, various industries have generated massive amounts of text data in their daily operations, with short text data being particularly abundant. Given this, the problem of short text classification has gradually attracted the attention of many researchers, making text classification tasks increasingly prominent in the field of natural language processing research. Traditional machine learning methods have been widely applied in the field of text classification, with common algorithms including Naive Bayes, Support Vector Machines (SVMs), decision trees, and k-Nearest Neighbors (k-NN), among others. He et al. [8] proposed a novel recommendation algorithm, which cleverly combines the advantages of labeled data and Naive Bayes classification. Zhang et al. [9] designed and implemented a Chinese text classification system based on Support Vector Machines. Chen et al. [10] proposed a news text classification method based on KNN. However, these traditional machine learning text classification methods generally rely on manually extracted features, and they have obvious limitations when dealing with large-scale and complex text data.

Research on short text classification based on keyword expansion primarily encompasses two approaches: feature expansion based on external knowledge bases and feature expansion based on internal semantics. Among the studies that use external knowledge bases, Wensen et al. [11] introduced a new approach for expanding short text features using Wikipedia and Word2vec and demonstrated empirically that expanding short text features can effectively improve classification accuracy. Wang Dong et al. [12] proposed a feature expansion method based on a synonym dictionary, which utilizes synonym dictionaries and collocation resources to identify and screen candidate expansion features. These features are then added to the feature vector through semantic relevance assessment, significantly improving short text classification performance. Short text classification based on internal semantic expansion has also attracted significant attention. Sun and Chen [13] combined LDA with Word2vec to perform feature expansion on test texts, effectively improving the classification accuracy of the SVM algorithm. Zhang Mengyun et al. [14] enhanced the semantic understanding of medical short texts by combining TF-IDF with Word2vec, finding that after keyword expansion, the classification accuracy of SVM, MNB, and RF increased by 10%, 9%, and 10%, respectively. It is evident that machine learning algorithms perform well in text classification tasks after keyword feature expansion. Moreover, the continuous advancement of deep learning technology provides reliable technical support for further improving the accuracy of short text classification.

In the field of text categorization, deep learning models are gradually exerting their unique advantages, and their application scope and influence are expanding. Deep learning, on the basis of inheriting the basic concepts of machine learning [15], has been further expanded and deepened. It is able to effectively capture complex patterns and features in data by constructing a neural network architecture containing multiple layers, thus achieving better results in classification tasks. In the process of exploring the optimization of short text classification, researchers began to focus on feature expansion techniques and tried to combine them with deep learning models. For example, Shao Yunfei et al. [16] used TF-IDF and LDA to classify news datasets with the help of TextCNN after feature expansion, and the results show that this method is far better than the traditional KNN algorithm in terms of classification effectiveness. In the field of medical text processing, the Transformer architecture has significantly improved processing performance by combining prompt learning and attention mechanisms. Mansoor [17] systematically described prompt-based and attention-based medical text processing pipelines, providing an important reference for subsequent research. Liu et al. [18] innovatively proposed a multi-attention model integrating Temporal Convolutional Networks (TCNs) and CNNs, and the model can achieve better results in the classification task after introducing external knowledge expansion. The external knowledge extension model has a significant advantage over other well-known short text classification methods in terms of classification accuracy. In addition, Shen et al. [19] used the word cluster embedding technique to extend the features of short medical case texts for the medical field, and they combined CNN and LSTM for classification, and the experimental results show that both classifiers outperform other benchmark models in terms of classification effect.

2.2. Prompt Learning Categorization Study

Prompt learning, as an emerging technique, transforms tasks into a form that can be processed by a pre-trained language model through a specific template in order to mine the prior knowledge in the model. It mainly consists of prompt engineering and answer engineering. Prompt engineering aims to develop prompt templates suitable for downstream tasks and is divided into two categories: discrete and continuous. Discrete prompt templates consist of characters with fixed word vectors, such as the PET approach proposed by Schick et al. [20] for specific tasks and languages, while continuous prompt templates consist of optimizable vector tokens, such as the soft prompt approach proposed by Lester et al. [21] for greater adaptability. Answer engineering, on the other hand, transforms model-generated answers into final outputs. Early work relied on experts to design labeling terms, which was time-consuming and subjective, so Liu X et al. [22] proposed a gradient descent-based search method, but it limits labeling term diversity. To address this issue, Liu P et al. [23] conducted a systematic review of prompt learning methods and summarized how to improve model performance by optimizing the mapping between prompts and answers, further refining the application of prompt learning in answer engineering. Additionally, Ju Zhengyu [24] introduced a dynamic labeling term generation strategy that improves labeling diversity and accuracy for classification tasks. Prompt learning provides new ideas for short text classification by optimizing the processing of input information, reconstructing the task to fit the pre-trained model’s capability and fully exploiting its prior knowledge.

In the field of short text categorization, although the combination of traditional methods with other feature expansion methods can improve the results, they are insufficient in dealing with rare word features and contextual associations. Deep learning models address the limitations of traditional machine learning in feature capture through complex structures, but short text categorization still faces challenges. The combination of prompt learning and deep learning models provides a new way to improve the model recognition ability. In this paper, we take the user question text of “Good Doctor” as the research object, combine TF-IWF and Word2vec to enhance the capture of keyword semantic information and contextual relevance, and propose the self-adaptive-attention-Prompt-BERT-RCNN model. By fine-tuning the pre-trained language model and deep learning network, the model performs well in the medical short text classification task and significantly improves the classification effect. This study not only provides a solution to improve the accuracy of medical short text classification but also provides a valuable reference for the feature expansion improvement of short text and the performance optimization of deep learning models.

3. General Framework and Methodology

3.1. General Research Framework

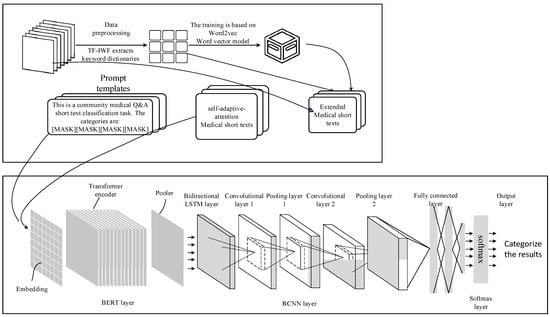

The overall research framework of this paper consists of two parts: keyword expansion, and training of the self-adaptive-attention-BERT-RCNN model incorporating prompt templates. The specific research framework is shown in Figure 1.

Figure 1. Research framework of the thesis.

In the keyword expansion phase, keywords are first extracted from the text using TF-IWF. TF-IWF identifies words that appear frequently in documents but are not common in the overall document collection. Based on the list of extracted keywords, words with high relevance to symptoms and diseases are selected as keywords, and these keywords are used for subsequent semantic expansion. The keywords are mapped onto a high-dimensional vector space through Word2Vec word vector modeling to capture the semantic relationships between words. The similarity calculation function of the word vector model is utilized to generate relevant synonyms and near-synonyms for each keyword.

In the training phase of the self-adaptive-attention-BERT model incorporating prompt templates, the symptom description text is first passed through the adaptive attention mechanism to weight the keywords, and the result is then fed into the pre-trained BERT model to extract semantic features from the text. An RCNN layer is added on top of the output of the BERT model to extract local and global features of the text. A fully connected layer is added as the last layer of the model to map the extracted features to department categories for classification prediction.

3.2. Keyword Expansion Technique

3.2.1. TF-IWF

TF-IWF (Term Frequency–Inverse Word Frequency) is an algorithm for assessing the importance of words in a specific corpus. It improves on TF-IDF (Term Frequency–Inverse Document Frequency) by focusing more on the rarity of words in a particular corpus. In TF-IWF, TF indicates the frequency of occurrence of a word in a text, while IWF reflects how rare the texts containing the word are: a word that occurs in only a few texts distinguishes better between different text categories.

TF (Term Frequency):

$$TF(t, d) = \frac{n_{t,d}}{\sum_{k} n_{k,d}}$$

where $TF(t, d)$ denotes the word frequency of vocabulary word t in document d, $n_{t,d}$ denotes the number of occurrences of vocabulary word t in document d, and $\sum_{k} n_{k,d}$ denotes the sum of occurrences of all vocabulary words in document d.

IWF (Inverse Word Frequency):

$$IWF(t) = \log \frac{N}{df(t)}$$

where $IWF(t)$ denotes the inverse document frequency of vocabulary word t, N denotes the total number of documents in the document collection, and df(t) denotes the number of documents that contain vocabulary word t.

TF-IWF is obtained by multiplying TF and IWF, calculated as follows:

$$TF\text{-}IWF(t, d) = TF(t, d) \times IWF(t)$$

Important keywords are identified by calculating the TF-IWF values of each word in the document. Then, semantic extensions can be performed based on these keywords, such as using synonym dictionaries or word vector models to find synonyms, near-synonyms, etc., related to the keywords in order to enrich the keyword list and improve the accuracy of information retrieval and text analysis.
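The scoring described above can be illustrated with a minimal sketch. It assumes the symbol definitions given in this subsection (N documents, df(t) documents containing t) and a natural logarithm; the function name `tf_iwf` and the toy documents are hypothetical and are not taken from the experiments.

```python
import math
from collections import Counter

def tf_iwf(docs):
    """Score each word of each tokenized document with TF * IWF.

    TF is the relative frequency of the word inside the document.
    IWF is log(N / df(t)), with N documents in total and df(t) documents
    containing t (the log base is an assumption of this sketch).
    """
    n_docs = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))          # count each word once per document
    scores = []
    for tokens in docs:
        counts = Counter(tokens)
        total = sum(counts.values())
        scores.append({t: (c / total) * math.log(n_docs / df[t])
                       for t, c in counts.items()})
    return scores

# Hypothetical, already segmented consultation texts.
docs = [
    ["urinary", "protein", "high", "patient"],
    ["renal", "failure", "patient"],
    ["cough", "fever", "patient"],
]
scores = tf_iwf(docs)
# Rank the words of the first document by descending score.
ranked = sorted(scores[0], key=scores[0].get, reverse=True)
```

In this toy collection the word "patient" occurs in every document, so its score is zero, while a word that occurs in a single document, such as "urinary", receives a positive score.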

3.2.2. Word2Vec

Word2Vec is a shallow neural network model for converting words into vectors. It captures the semantic and syntactic relationships between words by analyzing the context of the words. Word2Vec has two main architectures: CBOW (Continuous Bag of Words) and skip-gram. CBOW predicts the target word based on the contextual vocabulary, while skip-gram predicts the contextual vocabulary based on the target word. During training, the model adjusts the weights to minimize the prediction error. Eventually, words are represented as vectors such that semantically similar words lie close to each other in the vector space. Word2Vec performs well in handling large-scale corpora and capturing lexical–semantic relations, and it is widely used in natural language processing tasks.

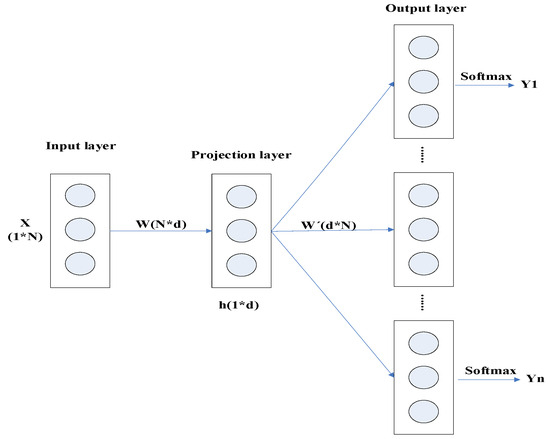

The Continuous Bag of Words (CBOW) model is a word bag model that predicts the word vector of the current word using the word vectors of the surrounding context. The model diagram is shown in Figure 2, which includes an input layer, a projection layer, and an output layer. The training process of the CBOW model is as follows:

Figure 2. CBOW model.

Assume that there are N distinct words in the corpus, and select the n words before and after the target word as its context for training.

1. The input layer takes the one-hot vectors X1 to Xn of the n words surrounding the current predicted word as input, each of which is 1 × N-dimensional.

2. Initialize the weight matrix W of size N × d. Multiplying each input layer vector by W yields n vectors of length d, where d is the predefined word vector dimension. Averaging these vectors yields the projection layer vector h.

3. Initialize the weight matrix W′ of size d × N. Multiply the vector h by W′ to obtain the vector u. Apply the Softmax function to calculate the probability of each candidate word. Set the position of the word with the highest probability to 1 and all others to 0, so that the final output is a one-hot vector for a single word.

4. Compare the calculated vector with the one-hot vector of the target word, and continuously optimize the weight matrices to maximize the predicted probability of the target word until convergence. At this point, the weight matrix W is the desired word vector matrix, in which each row is the word vector of one word. Multiplying the one-hot vector of a specific word by W yields the corresponding word vector.
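The forward pass of the steps above can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the vocabulary size, the embedding dimension, the random weights, and the names `one_hot` and `cbow_forward` are hypothetical, W is stored as an N × d array so that a one-hot row vector times W selects one row, and the training update of step 4 is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
N, d = 6, 4                        # toy vocabulary size and word vector dimension
W = rng.normal(size=(N, d))        # input weight matrix (N x d)
W_out = rng.normal(size=(d, N))    # output weight matrix W' (d x N)

def one_hot(index, size):
    """Return a 1 x size one-hot vector with a 1 at the given index."""
    v = np.zeros(size)
    v[index] = 1.0
    return v

def cbow_forward(context_ids):
    """Steps 1-3: one-hot inputs, averaged projection, softmax over the vocabulary."""
    X = np.stack([one_hot(i, N) for i in context_ids])   # (n, N) context one-hots
    h = (X @ W).mean(axis=0)                             # (d,) projection layer vector
    u = h @ W_out                                        # (N,) scores for every word
    exp_u = np.exp(u - u.max())                          # numerically stable softmax
    return exp_u / exp_u.sum()

probs = cbow_forward([0, 2, 3, 5])      # indices of the n context words
predicted = int(np.argmax(probs))       # position that would be set to 1 in step 3
```

After training, looking up a word vector amounts to `one_hot(i, N) @ W`, which is simply `W[i]`.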

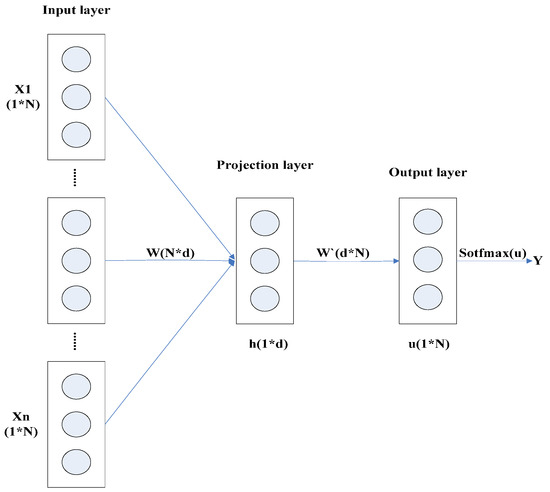

The skip-gram model predicts the word vectors of the context based on the word vector of the current word. Its model diagram is shown in Figure 3. The training process is similar to that of CBOW. The input is the one-hot vector of the current word, and the output is the one-hot vectors of the n words before and after it. After training is complete, the final weight matrix W is obtained, which is the word vector matrix.

Figure 3. Skip-gram model.

Using the trained Word2Vec model, the vector similarity between the keyword and other words is calculated. Based on the similarity, we filter out the words that are semantically similar to the keywords and use them as extended keywords. For example, given the keyword “cold”, we find words with high similarity to the vector of “cold”, such as “influenza”, “fever”, and “cough”, to realize the semantic expansion of the keywords, which helps to cover the related concepts more comprehensively and improve the effect of text analysis and information retrieval.
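A minimal sketch of this similarity-based expansion is given below. It assumes cosine similarity as the vector similarity measure; the three-dimensional vectors are hypothetical toy values rather than trained Word2Vec embeddings, and the helper names `cosine` and `expand` are illustrative.

```python
import numpy as np

# Hypothetical toy embeddings; real vectors would come from the trained model.
vectors = {
    "cold":      np.array([0.9, 0.8, 0.1]),
    "influenza": np.array([0.8, 0.9, 0.2]),
    "fever":     np.array([0.7, 0.6, 0.3]),
    "fracture":  np.array([0.1, 0.0, 0.9]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def expand(keyword, top_k=2):
    """Return the top_k words most similar to the keyword, most similar first."""
    sims = [(w, cosine(vectors[keyword], v))
            for w, v in vectors.items() if w != keyword]
    sims.sort(key=lambda pair: pair[1], reverse=True)
    return [w for w, _ in sims[:top_k]]

expanded = expand("cold")   # ['influenza', 'fever'] for these toy vectors
```

Capping the result with `top_k` keeps the expanded text short, so that unrelated words such as "fracture" are not appended.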

3.3. The Self-Adaptive-Attention-Prompt-BERT-RCNN Model

3.3.1. Self-Adaptive Attention

The self-adaptive attention mechanism automatically assigns a weight value to each keyword by calculating the correlation between the feature vectors of the input keywords. The weight value reflects the importance of the keyword in the current text, and the higher the weight value, the greater the impact of the keyword on the text representation.

Suppose the feature matrix of the input keywords is $X \in \mathbb{R}^{n \times d}$, where n is the number of keywords and d is the feature dimension.

1. Compute the hidden representation of the features by a linear transformation and an activation function (e.g., tanh):

$$H = \tanh(X W^{\top} + b)$$

where $W \in \mathbb{R}^{h \times d}$ and $b \in \mathbb{R}^{h}$ are the learnable weight matrix and bias terms, and h is the dimension of the hidden layer.

2. Calculate the attention score for each keyword:

$$\alpha_i = \frac{\exp(v^{\top} H_i)}{\sum_{j=1}^{n} \exp(v^{\top} H_j)}$$

where $v \in \mathbb{R}^{h}$ is the learnable attention vector and $H_i$ is the hidden representation of the i-th keyword.

3. Weigh the keyword features based on attention scores:

$$\tilde{X}_i = \alpha_i X_i$$

where $\alpha_i$ is the attention weight of the i-th keyword and $X_i$ is the feature vector of the i-th keyword.
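The three steps can be sketched in NumPy as follows. This is an illustration under assumptions rather than the model's actual implementation: the scores are normalized with a softmax, each keyword vector is rescaled by its own weight, and random parameters stand in for the learned W, b, and v. The function name `self_adaptive_attention` is hypothetical.

```python
import numpy as np

def self_adaptive_attention(X, W, b, v):
    """Weight n keyword vectors (rows of X) by learned attention.

    X: (n, d) keyword features, W: (h, d), b: (h,), v: (h,).
    Returns the attention weights and the reweighted features.
    """
    H = np.tanh(X @ W.T + b)                 # step 1: hidden representation, (n, h)
    scores = H @ v                           # step 2: one raw score per keyword, (n,)
    exp_s = np.exp(scores - scores.max())    # softmax normalization (assumed)
    alpha = exp_s / exp_s.sum()
    X_weighted = alpha[:, None] * X          # step 3: scale each keyword vector
    return alpha, X_weighted

rng = np.random.default_rng(0)
n, d, h = 3, 4, 5                            # toy sizes
X = rng.normal(size=(n, d))
W = rng.normal(size=(h, d))                  # random stand-ins for learned parameters
b = np.zeros(h)
v = rng.normal(size=h)
alpha, X_weighted = self_adaptive_attention(X, W, b, v)
```

Because the weights sum to one, a keyword with a larger weight contributes proportionally more to the text representation passed to later layers.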

3.3.2. Prompt Learning (Prompt)

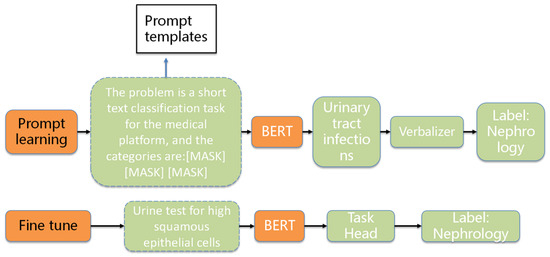

Prompt learning is an approach to learning with pre-trained language models (e.g., BERT). Its core idea is to use specific prompt templates to transform downstream tasks such as text categorization into mask prediction problems, which are similar to the tasks that language models are good at. In text categorization tasks based on BERT models, traditional fine-tuning methods directly adapt the model to the specific task, while prompt learning adds prompt templates to the input text so that the model focuses on information relevant to the categorization task while processing the original text.

Taking medical text categorization as an example, if the original input text is “What’s the matter with high squamous epithelial cells in urine test?”, in a standard categorization task, the fine-tuned BERT model may directly output a symptom category label. However, in the prompt learning strategy, we append a prompt template specific to medical categorization to the input text to form a new input: “What’s the matter with high squamous epithelial cells in urine test, this is a community medical Q&A short text categorization task. The category is: [MASK][MASK][MASK][MASK][MASK]”. At this point, the BERT model not only analyzes the original text content but also predicts the medical category corresponding to the mask positions under the guidance of the prompt template. Transforming the categorization task into mask prediction allows the model’s output to shift from simple labels to specific medical category names, such as “Urinary Tract Infection” or “Kidney Disease”.

In this way, prompt learning makes the model focus more on the demands of the current task, improving the accuracy and relevance of the classification. At the same time, prompt learning also alleviates the model’s reliance on comprehensive fine-tuning, allowing the model to adapt more quickly to new classification tasks (Figure 4).
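The construction of the prompted input in the example above can be sketched as a simple string operation. The helper name `build_prompt` and the default of five mask tokens are illustrative assumptions based on the example; how the masked positions are mapped back to department names is not shown.

```python
MASK = "[MASK]"
# Template wording taken from the example in the text (English rendering).
TEMPLATE = ("this is a community medical Q&A short text categorization task. "
            "The category is: ")

def build_prompt(text, n_mask=5):
    """Append the prompt template and n_mask mask tokens to a consultation text."""
    question = text.rstrip("?？ ")          # drop the trailing question mark
    return f"{question}, {TEMPLATE}{MASK * n_mask}"

prompt = build_prompt(
    "What's the matter with high squamous epithelial cells in urine test?"
)
```

The resulting string would then be tokenized and passed to the masked language model, which fills the mask positions with the predicted category name.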

Figure 4. Schematic comparing fine-tuning and prompt learning based on the BERT model.

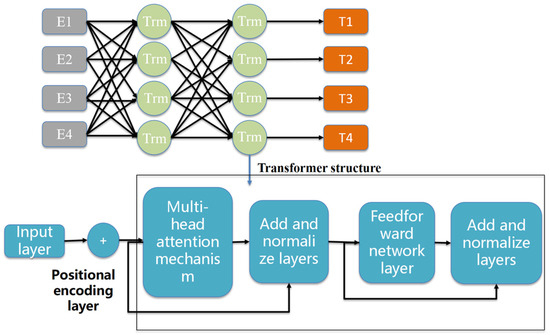

3.3.3. The BERT-RCNN Classification Model

1. BERT Layer

In the field of medical text processing, BERT, with its deep bi-directional Transformer architecture, has demonstrated a strong ability to capture complex semantic relationships between words in medical texts. In the task of classifying short medical question and answer texts, the BERT model first transforms the input text sequence into word embeddings, which are then deeply processed by multiple Transformer encoder layers. The clever combination of self-attention mechanisms and feed-forward neural networks in these encoder layers enables the model to finely analyze the word embeddings and generate context-sensitive vectors that fully capture the information of the entire text sequence. These vectors retain the rich semantic information of the original text and significantly increase the sensitivity to the specific language patterns in the medical domain, thus laying a solid foundation for the medical text classification task (Figure 5).

Figure 5. BERT structure diagram.

- 2.

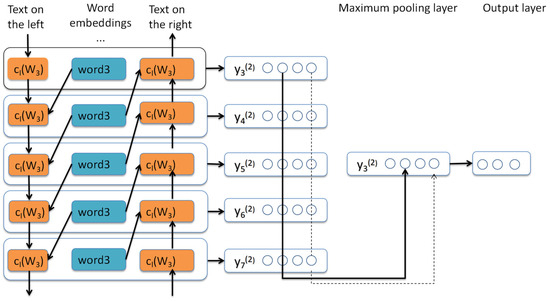

- RCNN layer

RCNNs (Recurrent Convolutional Neural Networks) have a unique advantage in text classification tasks: they effectively solve the problem of insufficient text window size and give better classification results than traditional window-based neural networks. When processing medical Q&A text, the original text is segmented into words, which are subjected to a left–right contextual convolution operation to extract the corresponding contextual information. The extracted contextual information is then passed to the recurrent convolutional layer to generate new feature representations. Next, the key feature information is extracted through the max pooling layer, and finally the classification results are generated through the fully connected layer to realize the accurate classification of medical Q&A text (Figure 6).

Figure 6. Schematic diagram of the recurrent convolutional layer structure.

4. Empirical Studies

Good Doctor Online is China’s leading internet healthcare platform, established in 2006. It uses internet technology to optimize the allocation of medical resources, providing services such as online consultations, remote expert clinics, appointment referrals, post-treatment disease management, and online follow-up visits, helping patients access medical services more conveniently. The platform has over 280,000 registered doctors, 73% of whom are from top-tier hospitals, and is committed to building a trustworthy medical platform that makes practicing medicine simple and seeking medical care easy.

4.1. Experimental Data, Environment, and Categorical Measures

1. Experimental data

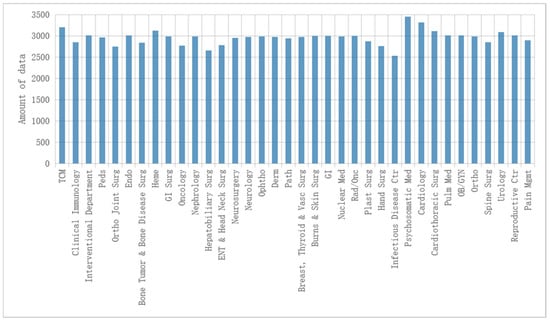

The data used in the experiments of this paper come from the Good Doctor Online medical platform (https://www.haodf.com/, accessed on 10 March 2025), with Xijing Hospital of Air Force Military Medical University as the setting. A web crawler written in Python obtained the patient consultation data and the corresponding department information from the website. First, the link of each department is obtained; the program then enters each department page, crawls its information, and labels it with the corresponding department. In total, 106,692 samples of patient consultation data from 36 departments were crawled. The data distribution is shown in Figure 7. The amount of data in each department category is balanced, at around 3000 samples, which helps the later experiments to be carried out smoothly.

Figure 7. Patient consultation data distribution map of each department.

2. Experimental environment

The experiments were run on the Windows 10 64-bit operating system with 16 GB of video memory. The algorithms were written in Python 3.10.14, and the deep learning framework was PyTorch 2.2.2.

3. Classification evaluation metrics

In classification problems, each prediction falls into one of four cases: TP (True Positive: the sample belongs to this category and is predicted as this category), FP (False Positive: the sample belongs to another category but is predicted as this category), FN (False Negative: the sample belongs to this category but is predicted as another category), and TN (True Negative: the sample belongs to another category and is predicted as another category). Table 1 shows these cases for a binary classification problem, and the evaluation metrics presented next are calculated from these four types of data.

Table 1. Evaluation table of binary classification model.

- (1)

- Accuracy is the number of correct predictions in the sample as a proportion of the total sample, as shown in the following equation:

- (2)

- The precision rate, also known as the rate of checking accuracy, is the proportion of samples predicted to be such samples that are actually such samples, which is defined as shown in the following formula:

- (3)

- The recall rate, also known as the check rate, is the proportion of correctly predicted samples among all samples that are actually of this type, which is defined as shown in the following equation:

- (4)

- The F1 score is the reconciled average of precision and recall, which is commonly used to assess model stability, and it is defined as shown in the following equation:

- (5)

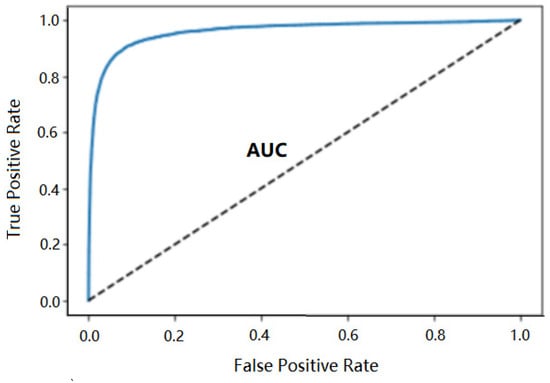

- The ROC curve is obtained using the FPR as the horizontal coordinate and the TPR as the vertical coordinate. The FPR true rate refers to the proportion of such samples that will be predicted correctly and is defined as shown in the equation below:

The TPR refers to the proportion of the number of samples predicted to be in this category to the number of samples in the alias, defined as shown in the following equation:

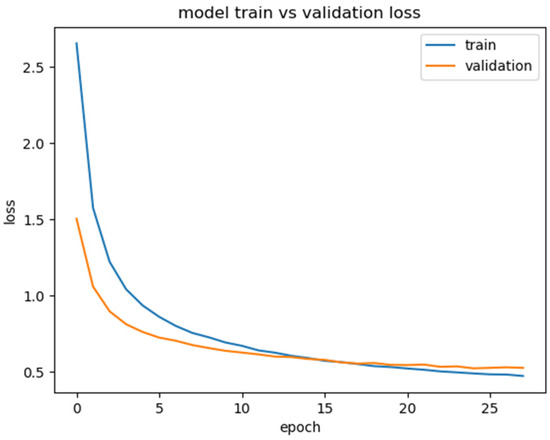

The ROC curve is drawn from these two indicators, as shown in Figure 8; the closer the curve is to the upper left corner, the better the performance of the classification model. The area under the ROC curve, that is, the AUC value, quantitatively measures model performance. Because a multiclass model requires many curves, which makes the plot cluttered and hard to interpret, the ROC curve is generally used to evaluate binary classification models.

Figure 8. ROC curve.
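The metrics defined in this subsection can all be computed from the four counts TP, FP, FN, and TN. The sketch below uses the standard definitions; the function name `binary_metrics` and the example counts are hypothetical and are not results from this study.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute the evaluation metrics of a binary classifier from its confusion counts.

    Assumes every denominator is non-zero (no zero-division handling in this sketch).
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # identical to the true positive rate (TPR)
    f1 = 2 * precision * recall / (precision + recall)
    fpr = fp / (fp + tn)               # false positive rate
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "tpr": recall,
        "fpr": fpr,
    }

# Hypothetical counts for one department treated as the positive class.
m = binary_metrics(tp=40, fp=10, fn=5, tn=45)
```

For a multiclass task such as department recommendation, these counts would be obtained per category by treating that category as positive and all others as negative.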

4.2. Keyword Feature Expansion

1. Data pre-processing

This experiment uses the cut method of posseg in the Jieba library, which is equivalent to segmenting words in precise mode while also annotating the part of speech of each word, such as noun “n”, verb “v”, and adjective “a”. By default, this method uses a Hidden Markov Model to segment words that are not in the dictionary, which works somewhat better than the alternatives. The stop word list used in the experiment combines four lists: the Baidu stop word list, the HIT stop word list, the Sichuan University Machine Intelligence Laboratory stop word list, and the Chinese stop word list. For example, the word “easy” in the final segmentation result in the table only describes degree and is not useful for describing the patient’s condition, so it is eliminated during stop word removal.

- 2. Keyword extraction

Text pre-processing is the first step when processing medical texts. It transforms each medical text into independent units of analysis and integrates them into a unified text collection. The text collection is then processed by the TF-IWF algorithm to identify and extract keywords. The TF-IWF algorithm has an advantage over the traditional TF-IDF algorithm in distinguishing professional text categories because it pays more attention to the rarity of words in specific categories of text. Taking nephrology text analysis as an example, TF-IWF can accurately identify professional terms with high relevance and diagnostic value for a specific category of text, such as “urinary protein” and “renal failure”. In contrast, the TF-IDF algorithm is more inclined to focus on general terms that appear frequently in all documents, such as “patient” and “symptoms”. Given the performance of the TF-IWF algorithm in keyword extraction, this paper adopts it to extract keywords and ranks them in descending order of their TF-IWF values. Finally, the words with the highest TF-IWF values are selected from the candidate keywords to construct a reserved dictionary containing 800 entries.
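To make the weighting concrete, the sketch below scores words with one common formulation of TF-IWF: the term frequency within a text multiplied by the logarithm of the total number of word occurrences in the corpus divided by the occurrences of that word. This formulation is an assumption made for illustration and may differ in detail from the definition used earlier in this paper; the function names and the toy corpus are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_iwf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Score each word of each document as TF * IWF.

    Assumed formulation: IWF(w) = log(total word occurrences in the corpus
    / occurrences of w in the corpus).
    """
    corpus_counts = Counter(tok for doc in docs for tok in doc)
    total = sum(corpus_counts.values())
    scores = []
    for doc in docs:
        counts = Counter(doc)
        doc_scores = {}
        for word, count in counts.items():
            tf = count / len(doc)
            iwf = math.log(total / corpus_counts[word])
            doc_scores[word] = tf * iwf
        scores.append(doc_scores)
    return scores


def top_keywords(doc_scores: Dict[str, float], n: int) -> List[str]:
    """Return the n words with the highest TF-IWF values, in descending order."""
    return sorted(doc_scores, key=lambda w: doc_scores[w], reverse=True)[:n]


# Toy corpus (hypothetical): each inner list is one segmented short text.
docs = [
    ["patient", "urinary_protein", "patient"],
    ["patient", "cough"],
    ["patient", "renal_failure", "symptoms"],
]
print(top_keywords(tf_iwf(docs)[0], 1))
```

In this toy corpus the rare term “urinary_protein” outranks the frequent term “patient” in the first text even though “patient” occurs twice there, which mirrors the behaviour described above.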

- 3. Keyword expansion results

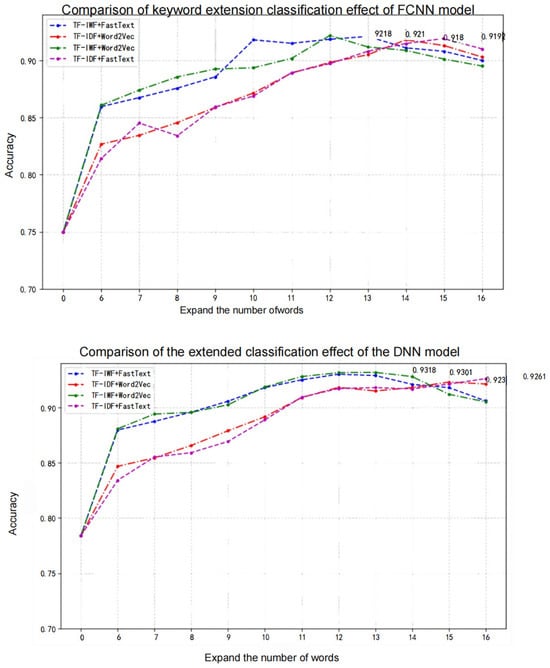

After the keyword dictionary was constructed, this study used the Word2Vec model to match the dictionary against the segmentation results of the medical short texts. The model training parameters were as follows: sg = 0, vector_size = 100, epochs = 5, window = 5, min_count = 1, and workers = 4. By selecting the dictionary words with the highest similarity to the keywords of each text, the keywords of the medical text were expanded, enriching its semantic depth and breadth. To keep the expanded content concise and avoid redundant information, the number of expanded keywords was strictly limited. Taking “hypertension and dizziness” as an example, only [hypertension, dizziness] is extracted as the input feature, which ignores the sequential relationship between the words and thus limits the model’s ability to grasp the deeper meaning of the text. However, from other sentences in the dataset, e.g., “high blood pressure, chest tightness, cause” and “shortness of breath because of high blood pressure”, the model identifies “chest tightness” and “shortness of breath” as words associated with “high blood pressure”. This information is then integrated into the original text, expanding “hypertension dizziness” to “hypertension dizziness chest tightness shortness of breath”. After completing these steps, this study used the DNN (Deep Neural Network) and FCNN (Fully Connected Neural Network) models to examine the classification effect of four combined expansion models: TF-IDF+FastText, TF-IWF+FastText, TF-IDF+Word2Vec, and TF-IWF+Word2Vec. The results are shown in Figure 9.
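The expansion step can be sketched as follows, assuming word vectors are already available (for instance from a Word2Vec model trained with the parameters listed above). Each dictionary word is scored by its highest cosine similarity to any word of the short text, and the top-k words are appended. The three-dimensional toy vectors, the function names, and the selection rule are hypothetical simplifications for illustration.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def expand(
    tokens: List[str],
    vectors: Dict[str, Sequence[float]],
    dictionary: List[str],
    top_k: int,
) -> List[str]:
    """Append the top_k dictionary words most similar to any token of the text."""
    best: Dict[str, float] = {}
    for cand in dictionary:
        if cand in tokens or cand not in vectors:
            continue
        sims = [cosine(vectors[t], vectors[cand]) for t in tokens if t in vectors]
        if sims:
            best[cand] = max(sims)
    ranked = sorted(best, key=lambda w: best[w], reverse=True)
    return list(tokens) + ranked[:top_k]


# Toy 3-dimensional vectors (hypothetical, not trained embeddings).
vectors = {
    "hypertension": [0.9, 0.1, 0.0],
    "dizziness": [0.1, 0.9, 0.0],
    "chest_tightness": [0.8, 0.2, 0.1],
    "shortness_of_breath": [0.7, 0.3, 0.1],
    "fracture": [0.0, 0.1, 0.9],
}
dictionary = ["chest_tightness", "shortness_of_breath", "fracture"]
print(expand(["hypertension", "dizziness"], vectors, dictionary, top_k=2))
# ['hypertension', 'dizziness', 'chest_tightness', 'shortness_of_breath']
```

The `top_k` argument plays the role of the limit on the number of expanded keywords.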

Figure 9.

Comparison of keyword expansion classification effect between FCNN and DNN models.

As can be seen in Figure 9, the classification accuracies of the FCNN and DNN models without keyword expansion are only 0.7498 and 0.7841, respectively, which falls short of practical high-precision classification needs, while the accuracies of both models improve significantly after keyword expansion. Overall, the expansion effect of TF-IWF+Word2vec is the most pronounced: FCNN and DNN reach their highest classification accuracies of 0.921 and 0.9318, respectively, which are relative improvements of 22.83% and 18.83% over the results without expansion. Moreover, TF-IWF+Word2vec achieves its highest classification accuracy when the number of expanded keywords is 12, while the other combined expansion models need more expanded keywords to reach their highest level. In addition, when the number of expanded keywords is greater than 12, the classification accuracy of the deep learning models expanded by TF-IWF+Word2vec remains significantly higher than that of the other expansion models; even though the other combined expansion models reach their optimal classification results with further expansion, their maximum accuracy is still lower than that of TF-IWF+Word2vec.

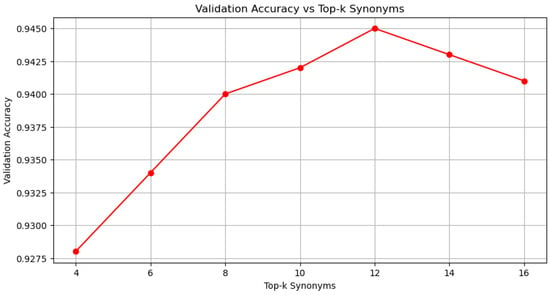

We conducted a grid search on the expanded dictionary size and top-k synonym count to find the optimal combination. The search range was as follows: expanded dictionary size: {500, 600, 700, 800, 900, 1000}; top-k synonym count: {4, 6, 8, 10, 12, 14, 16}.

We used the validation set to evaluate the model’s performance under different parameter combinations to determine the optimal dictionary size and number of top-k synonyms (Figure 10).

Figure 10.

Validation curve for dictionary size and validation curve for top-k synonym count.
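The search procedure can be sketched as an exhaustive loop over the two parameter ranges given above. In the sketch, `toy_validation_accuracy` is a hypothetical placeholder for training the model with a given setting and measuring accuracy on the validation set; its values are invented and are not the paper's measurements.

```python
from itertools import product
from typing import Callable, Dict, Sequence, Tuple

DICT_SIZES = (500, 600, 700, 800, 900, 1000)
TOP_KS = (4, 6, 8, 10, 12, 14, 16)


def grid_search(
    evaluate: Callable[[int, int], float],
    dict_sizes: Sequence[int] = DICT_SIZES,
    top_ks: Sequence[int] = TOP_KS,
) -> Tuple[Tuple[int, int], Dict[Tuple[int, int], float]]:
    """Evaluate every (dictionary size, top-k) pair and return the best one."""
    results = {(d, k): evaluate(d, k) for d, k in product(dict_sizes, top_ks)}
    best = max(results, key=results.get)
    return best, results


def toy_validation_accuracy(dict_size: int, top_k: int) -> float:
    """Hypothetical stand-in for validation accuracy; NOT measured values."""
    return 0.94 - abs(dict_size - 800) / 10000 - abs(top_k - 12) / 200


best, results = grid_search(toy_validation_accuracy)
print(best, len(results))
```

With six dictionary sizes and seven top-k values, the full grid contains 42 settings, each of which requires one training and validation run.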

As can be seen from Table 2, when the dictionary size is 800, the validation set accuracy is highest, reaching 0.942.

Table 2.

Validation curve for dictionary size (k = 12).

As can be seen from Table 3, when the number of top-k synonyms is 12, the validation set accuracy is highest, reaching 0.945.

Table 3.

Validation curve for the top-k synonym count (dictionary size = 800).

To demonstrate the robustness of the model, we evaluated its performance for k = 8 and k = 16 while keeping the dictionary size at 800 (Table 4).

Table 4.

Robustness results.

Through grid search and validation curves, we validated the empirical adjustments to the expanded dictionary size and top-k synonym count. The optimal settings were a dictionary size of 800 and k = 12 synonyms. The model exhibited robust performance when k ∈ {8,16}, with only slight variations in accuracy. This comprehensive analysis supports the selected parameter settings and indicates that the model is stable and reliable under different conditions.

In summary, whether an FCNN or a DNN is used for classification, TF-IWF+Word2vec achieves the best classification effect with fewer expanded words, i.e., it is significantly better than the other combinations in terms of both accuracy and efficiency.

4.3. Prompt-BERT Model Classification Effect

- 1. Parameter selection

The pre-trained BERT model used in this study is Google’s bert-base-chinese, which performs well in Chinese natural language processing tasks. To make full use of BERT’s feature-capturing ability, the parameters are set as follows. The batch size is set to 128, which keeps training stable while improving efficiency. The pad size is set to 64, which covers the length of most short medical texts. The learning rate is set to 5 × 10⁻⁵ to ensure that training converges. The hidden size is kept at 768, consistent with the original BERT model, to make full use of its semantic expression capability. The convolutional kernel sizes are set to (3, 4, 5) to capture local features of different lengths, and 256 convolutional kernels (num_filters) are used to enhance feature extraction. To prevent overfitting, the dropout is set to 0.1. For the recurrent part, rnn_hidden is set to 256, and a two-layer RNN structure is used to strengthen the capture of text sequence information. An early stopping condition is set for training: if model performance does not improve within 300 batches, training stops early to avoid wasting resources and to reduce the risk of overfitting. In addition, a self-adaptive attention mechanism is introduced into the model, which assigns a weight to each position in the input sequence based on its representation vector in order to identify key information. Specifically, the input sequence is passed through linear layers to generate query, key, and value vectors, which are used to calculate the attention scores; the sequence is then weighted according to these scores to generate context-aware vectors, so that the model focuses on key information.
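The attention computation described above can be sketched in plain Python. The sketch uses the standard scaled dot-product form, in which scores are divided by the square root of the key dimension before the softmax; the scaling, the projection matrices `w_q`, `w_k`, `w_v`, and the toy input are assumptions made for illustration and are not the trained parameters of the model.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
) -> Tuple[Matrix, Matrix]:
    """Return (context vectors, attention weights) for one input sequence."""
    # Linear projections of the input into queries, keys, and values.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]
    # One score per (query position, key position) pair.
    scores = matmul(q, k_t)
    weights = [softmax([s / scale for s in row]) for row in scores]
    # Each output position is a weighted sum of the value vectors.
    context = matmul(weights, v)
    return context, weights


# Toy sequence of three positions with two features each (hypothetical).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
context, weights = self_attention(x, identity, identity, identity)
print([round(sum(row), 6) for row in weights])
```

Each row of `weights` sums to 1, so every output vector is a convex combination of the value vectors, with larger weights on the positions the model treats as key information.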

- 2. Comparison of classifier classification effects

After the parameter configuration is determined, the Prompt-BERT-RCNN model is applied to classify the expanded short medical texts, and it is compared with other classifiers in ablation experiments. The input data of each classifier are converted into embedding data by the bag-of-words model or the BERT model. The specific data input steps are as follows:

FCNN: The embedding data are fed into the dropout layer and shallow fully connected layer for classification output.

DNN: The embedding data are fed into the dropout layer and deep fully connected layer for classification output.

TextCNN (Text Convolutional Neural Network): The embedding data are passed into the convolutional layer, which uses 128 convolutional kernels in each of five different sizes. The output of the convolutional layer is passed to the maximum pooling layer, and the classification output is produced by the dropout layer and the shallow fully connected layer.

BERT: The embedding data produced by the pre-trained BERT model are fed into the dropout layer and shallow fully connected layer for classification output.

BERT-RNN: The embedding data produced by the pre-trained BERT model are first fed into a bidirectional LSTM network to capture sequence information. The classification output is then produced by the dropout layer and the shallow fully connected layer.

BERT-RCNN: The embedding data produced by the pre-trained BERT model are first fed into a bidirectional LSTM network to capture sequence information. Feature extraction is then performed by the convolutional layer, whose output is passed into the maximum pooling layer, and the classification output is finally produced by the dropout layer and the shallow fully connected layer.

A comparison of the classification effects of different classifiers is shown in Table 5.

Table 5.

Comparison of classification effects of classifiers after short text feature expansion.

As can be seen from Table 5, after short text expansion the classification accuracy of the FCNN, DNN, and TextCNN models is 0.9210, 0.9318, and 0.9548, respectively, while the accuracy of the BERT model is 0.9620. This is because BERT uses a bidirectional Transformer architecture that considers the context on both sides of a word at the same time, whereas CNNs and similar neural networks can only capture local features within a fixed window. This indicates that the BERT model has higher accuracy and stronger feature extraction ability in the short text classification task. The BERT-RNN model adds an RNN layer on top of BERT, and its classification accuracy is 0.9632. This shows that fusing BERT with an RNN retains BERT’s understanding of contextual information in the expanded keywords while adding the RNN’s ability to capture temporal dependencies in sequence data, so that the deep semantic information of the text can be extracted more effectively and classification accuracy improves. Further, the Prompt-BERT-RCNN model, which introduces the prompt learning template, achieves the best performance in terms of accuracy and weighted average F1 score, reaching 0.9784 and 0.9782, which are 1.19% and 1.22% higher than those of the BERT-RCNN model, respectively. This result indicates that the prompt provides explicit task guidance and contextual information for the BERT-RCNN model and better activates the potential of the pre-trained model, which effectively improves classification performance. It can be seen that the Prompt-BERT-RCNN model proposed in this paper performs the best among the compared models and achieves effective classification of short medical texts.

4.4. Classification of Short Medical Texts in Different Sections

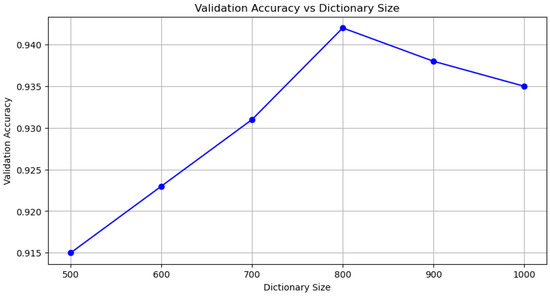

After training is completed, Python code is used to plot the trend of the training set and validation set losses during model training, as shown in Figure 11. The figure shows that from around the 10th round of training the loss decreases very slowly; at the 26th round the validation loss starts to increase slightly, and training is stopped.

Figure 11.

Trend of model training loss rate.
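The stopping behaviour visible in the loss curve can be illustrated with a small patience-based early stopping helper. This is a generic sketch rather than the training code used in this study: the class and function names and the toy loss values are hypothetical, and the patience is counted in evaluations here, whereas the experiments use a limit of 300 batches without improvement.

```python
from typing import Iterable, Optional


class EarlyStopper:
    """Signal a stop after `patience` evaluations without a new best loss."""

    def __init__(self, patience: int) -> None:
        self.patience = patience
        self.best = float("inf")
        self.since_best = 0

    def step(self, val_loss: float) -> bool:
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.since_best = 0
        else:
            self.since_best += 1
        return self.since_best >= self.patience


def stopping_step(losses: Iterable[float], patience: int) -> Optional[int]:
    """Return the 1-based evaluation index at which training stops, if any."""
    stopper = EarlyStopper(patience)
    for index, loss in enumerate(losses, start=1):
        if stopper.step(loss):
            return index
    return None


# Toy validation losses (hypothetical): the loss bottoms out, then creeps up.
toy_losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.73]
print(stopping_step(toy_losses, patience=3))  # 6
```

In practice the model weights from the evaluation with the best validation loss would be kept, since later evaluations are by construction no better.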

To verify the classification effect of the expanded Prompt-BERT-RCNN model on each topic category, this paper calculates the weighted average F1 values of the short medical texts for each subject category; the results are presented in Table 6.

Table 6.

Values of each evaluation indicator of the model.

The data in Table 6 show that the weighted average F1 scores for the different medical topic categories are generally high, indicating that the classification method we used achieves satisfactory results in the medical Q&A domain as a whole.
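For reference, the sketch below shows one way to compute per-category F1 scores and their support-weighted average from true and predicted labels. The function names and the toy labels are hypothetical, and the weighting by the number of true samples per category is the usual convention, assumed here rather than taken from the paper.

```python
from collections import Counter
from typing import Dict, List


def per_class_f1(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """F1 = 2TP / (2TP + FP + FN), computed separately for each category."""
    tp: Counter = Counter()
    fp: Counter = Counter()
    fn: Counter = Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1
            fn[truth] += 1
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


def weighted_f1(y_true: List[str], y_pred: List[str]) -> float:
    """Average the per-category F1 scores, weighted by true sample counts."""
    support = Counter(y_true)
    scores = per_class_f1(y_true, y_pred)
    return sum(scores[label] * n for label, n in support.items()) / len(y_true)


# Toy labels (hypothetical department names, not the experimental data).
y_true = ["cardiology"] * 3 + ["nephrology"] * 2 + ["respiratory"]
y_pred = ["cardiology", "cardiology", "nephrology",
          "nephrology", "nephrology", "respiratory"]
print(per_class_f1(y_true, y_pred))
print(round(weighted_f1(y_true, y_pred), 4))
```

Weighting by support means that categories with many samples dominate the average, so a weak score on a small category can be masked in the overall figure and is better read from the per-category values.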

Overall, the Prompt-BERT-RCNN model combined with keyword expansion proposed in this paper performs well in categorizing departments. In particular, the model achieves a very high weighted average F1 score in the cardiology and respiratory medicine categories. However, there is still room for improvement in the other internal medicine category and in cardiac macrovascular surgery. The cardiac macrovascular surgery category involves a greater diversity of physiological mechanisms and pathological states, whereas the other internal medicine category covers a wider range of question content and has less distinctive features than the other specialties, so the model faces more challenges in feature capturing and information processing for these categories.

5. Conclusions

With the rapid development of big data and artificial intelligence technology, automatic classification of short medical texts has become an important research field. To address the feature sparsity and semantic ambiguity of medical short texts, this paper applies TF-IWF+Word2vec keyword expansion to enrich the text representation and clarify its semantics, and on this basis it constructs the Prompt-BERT-RCNN model to classify medical short texts accurately. The results show that the proposed method outperforms the other models, with a classification accuracy of 0.9784; it performs especially well in cardiology, with a weighted average F1 score of 0.9944.

Despite these good results, the classification accuracy on some medical topics still needs to be improved, which indicates that there is room to optimize the model. Future research will explore specific diseases under different medical topics in depth, subdividing the prompt learning module or introducing a more efficient model to improve classification accuracy and the fine-grained recognition of specific topics.

Author Contributions

Conceptualization, T.X. and G.D.; Methodology, Y.H.; Software, Y.H.; Validation, T.X., Y.H. and S.Z.; Formal analysis, Y.H.; Investigation, S.Y.; Resources, Y.S.; Data curation, G.D.; Writing—original draft, Y.H.; Writing—review & editing, T.X.; Visualization, S.Y.; Supervision, G.D.; Project administration, Y.H.; Funding acquisition, Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the School-Enterprise Collaborative Innovation Fund for graduate students of Xi’an University of Technology, project “Department and doctor recommendations based on data from online medical platforms” (project number: 105-252062402).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).