Abstract

The development of 3D data acquisition devices and the growing scale of acquired point clouds make high-performance coding solutions for storing and transmitting 3D point clouds urgently needed. Video-based point cloud compression (V-PCC) is the most advanced international standard for compressing dynamic point clouds, but it still suffers from high encoding time and a large occupancy map. To address these issues, we build on V-PCC and propose Voxel Selection-based Refining Segmentation (VS-RS), which accelerates the refining segmentation of the point cloud, and data-adaptive patch packing (DAPP), which reduces the size of the occupancy map. To quantify the effect of these improvements, we also design new evaluation indicators. Experimental results show that the proposed method achieves a D2 Bjøntegaard Delta rate (BD-rate) gain of −1.58% over the V-PCC benchmark, reduces encoding time by 31.86% on average, and reduces the size of the occupancy map by up to 20.14%.

1. Introduction

Multimedia is one of the most influential technological fields today, and as multimedia technology advances, users pay increasing attention to the visual experience. Advanced sensors have paved the way for the widespread adoption of 3D visual representation models, and digitizing 3D space allows content to be explored from any angle [1,2,3,4,5]. Among the visual representation models that have emerged, the video point cloud is particularly prominent. A video point cloud consists of frames of point cloud data, each of which is a collection of points in space. Each point carries coordinate and attribute information (e.g., texture, reflectance, color, and normal). Because video point clouds are more detailed than 2D representations, they contain much more data, and raw point cloud data require a large amount of memory and bandwidth [6]. For example, an uncompressed dynamic point cloud (DPC) with one million points per frame at 30 fps requires a bitrate of up to 180 MB/s, which is demanding even for fifth-generation mobile networks (5G) [7]. This poses significant challenges for transmission and storage, which makes point cloud compression a crucial task. Compression reduces the amount of data and the transmission cost while preserving key information, making point cloud technology more practical. In virtual reality, augmented reality, autonomous driving, 3D reconstruction, and object detection, point cloud compression enables more efficient transmission and storage and thereby a more realistic and immersive experience. Consequently, there is strong demand for effective point cloud compression technology across industries [8,9,10,11,12,13], and it is of great significance for both technological development and practical application.

MPEG has established standards including Geometry-based Point Cloud Compression (G-PCC) [14,15] and Video-based Point Cloud Compression (V-PCC) [16,17]. G-PCC encodes the content directly in 3D space. V-PCC, whose Final Draft International Standard MPEG published in 2020 [18], instead projects the point cloud onto a 2D grid so that conventional video compression algorithms can be used: the 3D data are converted into 2D data for compression and subsequently recovered. MPEG has also developed the corresponding reference software, the test model for category 2 (TMC2), which is still being updated. With the assistance of a 2D video codec, V-PCC achieves a high compression ratio and processes video point cloud data efficiently [19]. This paper therefore focuses on V-PCC.

Although V-PCC compresses point clouds effectively, it has limitations. Apart from 2D video coding, generating patches through the orthogonal projection of 3D points is the most computationally complex part of the V-PCC encoder. We observe that refining segmentation treats every voxel equally, which makes it very time-consuming. In addition, the occupancy map generated during compression is large, which degrades compression performance. To address these issues, we improve the refining segmentation and patch packing stages. The main contributions of this paper are as follows:

- To address the large share of patch generation time consumed by the refining segmentation module, we propose Voxel Selection-based Refining Segmentation (VS-RS). It introduces a voxel selection strategy that identifies the voxels requiring refinement, so that voxels that do not require refinement skip the subsequent operations. VS-RS reduces encoding time by up to 43.50% while maintaining rate–distortion (RD) performance.

- The original patch packing method leaves large unoccupied areas, and VS-RS generates additional small patches. To solve both problems, we propose data-adaptive patch packing (DAPP), which introduces finer sorting and positioning metrics as well as new packing strategies. DAPP reduces the occupancy map size by up to 20.14%. Both methods improve compression performance while remaining fully compatible with V-PCC.

- Combining the two methods yields a significant improvement in overall performance. Extensive experiments show that the combined method saves encoding time and achieves a D2 BD-rate gain of −1.58% over the V-PCC benchmark.

- In addition to testing the proposed method under the common test conditions, we design new evaluation metrics that measure the accuracy of voxel selection and the reduction in occupancy map size.

The remainder of this paper is organized as follows. Section 2 briefly describes the V-PCC and its related work. Section 3 first introduces refining segmentation and patch packing and their respective deficiencies, then focuses on the proposed method. Section 4 shows a series of experimental results obtained from the proposed method. Section 5 serves as the conclusion of the entire paper.

2. Related Work

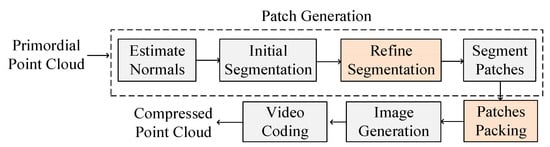

Figure 1 illustrates the encoding process of TMC2, which is developed based on V-PCC and mainly includes four steps: patch generation, patch packing, image generation, and video encoding.

Figure 1.

The process of V-PCC.

Patch generation aims to project 3D data onto specified planes using an optimal segmentation strategy, decomposing each point cloud frame and converting it into 2D data. In TMC2, patch generation divides the point cloud into patches with smooth boundaries while keeping the reconstruction error small. Its steps are principal component analysis (PCA) to estimate normal vectors, initial segmentation of the point cloud based on the normal vectors, refining segmentation based on smoothing scores, and extraction of patches from connected components [6]. The area within the dashed line in Figure 1 represents the patch generation process. Patch packing then generates geometry and texture images suited to video compression. During this process, auxiliary information such as the occupancy map is also generated to indicate valid positions. The occupancy map should be as small as possible, with the patches inside it arranged tightly. Image generation includes operations such as geometry pixel filling, attribute pixel filling, and global allocation. Finally, the generated images are combined into videos and encoded using HEVC [19] or other video encoders. This paper focuses on the refining segmentation and patch packing stages, which are detailed in Section 3. A comprehensive description of the remaining components of V-PCC can be found in [11].
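The first two patch generation steps, PCA normal estimation and normal-based initial segmentation, can be sketched as follows. This is a minimal illustration assuming NumPy; the names `pca_normal`, `initial_plane`, and `PLANE_NORMALS`, as well as the ordering of the six axis directions, are hypothetical and not taken from TMC2, and both normal orientation and the neighbor search that produces each neighborhood are omitted.

```python
import numpy as np

# Hypothetical ordering of the six axis-aligned projection directions:
# indices 0-2 are +X, +Y, +Z and indices 3-5 are -X, -Y, -Z.
PLANE_NORMALS = np.array([
    [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
    [-1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0],
])


def pca_normal(neighbors: np.ndarray) -> np.ndarray:
    """Estimate a unit normal for a (k, 3) neighborhood with PCA.

    The normal is the eigenvector of the covariance matrix with the smallest
    eigenvalue. Its sign is ambiguous; this sketch does not orient it.
    """
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / len(neighbors)
    _, eigvecs = np.linalg.eigh(cov)  # eigenvalues are returned in ascending order
    return eigvecs[:, 0]


def initial_plane(normal: np.ndarray) -> int:
    """Initial segmentation: pick the direction with the largest dot product."""
    return int(np.argmax(PLANE_NORMALS @ normal))


# Usage: points sampled from the plane z = 0 have a normal along the Z axis.
pts = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0], [2, 1, 0], [0.5, 2, 0]], dtype=float)
print(abs(pca_normal(pts)[2]), initial_plane(np.array([0.0, 0.0, 1.0])))
```

Refining segmentation then revisits these per-point plane indices using neighborhood smoothness, which is the stage VS-RS accelerates.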

Although MPEG has finalized the standard, it still has limitations, and researchers have tried to improve coding efficiency in various ways, including the work of Faramarzi et al. [20,21]. A grid-based refining segmentation (G-RS) method was proposed to reduce the complexity of point-based refining segmentation, and its performance was further evaluated in a dedicated core experiment [22]. Despite these efforts, G-RS still accounts for a large share of V-PCC encoding time [23]. Later, Higa et al. [24] proposed an optimization that avoids unnecessary hash map accesses. Kim et al. [25,26] used a voxel classification algorithm to improve refining segmentation and, on this basis, proposed a radius search method for the FGRS algorithm. However, this approach introduces other problems, such as degraded point cloud reconstruction quality.

In addition to improving coding efficiency, researchers have also improved patch handling. Sheikhipour et al. [27] estimate the most important patches of the second layer and incorporate them into the patch generation of the first layer. Compared with the single-layer scheme, their method reduces the bit rate but also degrades the subjective quality of the reconstructed point cloud. Zhu et al. [28] optimized the user's visual experience by allocating more patches to predefined views, at the cost of increased codec time. Li et al. [29] proposed a method that reduces unoccupied pixels between patches, because coding unused space during video compression is inefficient. Rhyl et al. [30] proposed a contextual homogeneity-based patch decomposition to improve compression efficiency; however, it does not handle additional attributes such as reflectance and material. There are also other similar methods [31,32]. Improving the occupancy map through patch packing is therefore also worth studying.

3. Proposed Method

3.1. V-PCC Method and Its Limitations Explained

The latest refining segmentation method provided by V-PCC is grid-based refining segmentation [33], which simplifies the redundant computations of point-based refining segmentation by imposing grid constraints. Starting from the results of the initial segmentation, refining segmentation iteratively updates the projection plane index. We measured the share of time consumed by each procedure in patch generation; as Table 1 shows, refining segmentation accounts for up to 59.48%. This is because the process operates indiscriminately on all voxels, ignoring the differences between them. We therefore propose VS-RS, which exploits the characteristics of different voxels.

Table 1.

Time consumption ratio of each procedure in the patch generation process.

The packing method in V-PCC uses an exhaustive search. It first determines the order in which patches are packed, then raster-scans the 2D grid for a position where the patch does not overlap any previously placed patch. Grid blocks covered by a patch are marked as occupied. The resulting occupancy map guides the generation of the geometry and attribute videos, so the packing result affects the final RD performance. Patch packing should therefore minimize the size of the map.
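A simplified sketch of this exhaustive raster-scan search is shown below, assuming patch sizes are already expressed in occupancy blocks and that patches are ordered by height (the original ordering metric described in Section 3.3). The function names are hypothetical, patch rotation is left out, and doubling the grid height when no position is found is an assumed fallback rather than a transcription of TMC2.

```python
from typing import Dict, List, Optional, Tuple


def first_free(grid: List[List[bool]], w: int, h: int) -> Optional[Tuple[int, int]]:
    """Raster-scan (row by row, left to right) for the first non-overlapping slot."""
    rows, cols = len(grid), len(grid[0])
    for v in range(rows - h + 1):
        for u in range(cols - w + 1):
            if all(not grid[y][x] for y in range(v, v + h) for x in range(u, u + w)):
                return u, v
    return None


def pack_first_fit(sizes: List[Tuple[int, int]], width: int, init_height: int):
    """Sketch of exhaustive raster-scan packing on a block grid.

    sizes holds (w, h) per patch in blocks. Patches are sorted by height in
    descending order (stable sort), and each is placed at the first free
    position. If none exists, the grid height is doubled and the scan repeats.
    """
    if init_height < 1:
        raise ValueError("init_height must be positive")
    grid = [[False] * width for _ in range(init_height)]
    positions: Dict[int, Tuple[int, int]] = {}
    for i in sorted(range(len(sizes)), key=lambda k: -sizes[k][1]):
        w, h = sizes[i]
        if w > width:
            raise ValueError("patch is wider than the occupancy map")
        while (pos := first_free(grid, w, h)) is None:
            rows = len(grid)
            grid.extend([False] * width for _ in range(rows))  # double the height
        u, v = pos
        for y in range(v, v + h):
            for x in range(u, u + w):
                grid[y][x] = True  # mark the covered blocks as occupied
        positions[i] = (u, v)
    return grid, positions


grid, pos = pack_first_fit([(2, 2), (1, 1), (2, 1)], width=3, init_height=2)
print(pos, len(grid))
```

With a 3-block-wide map of initial height 2, the last patch only fits after the height is doubled to 4, which illustrates how first-fit placement can leave holes and grow the map early.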

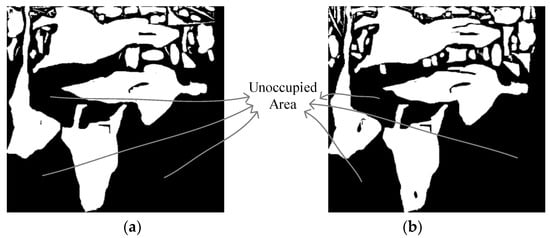

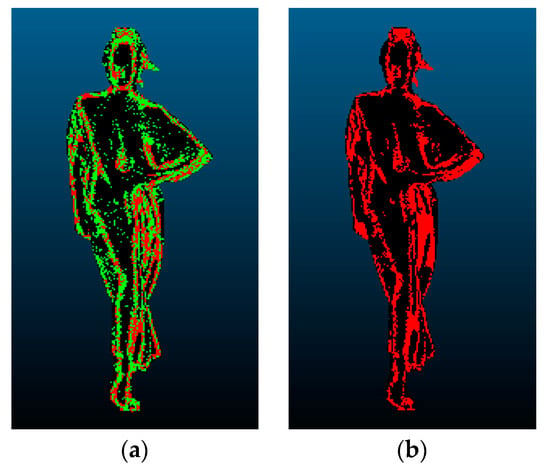

The original packing algorithm does not place patches compactly, so the occupancy map contains many unoccupied blocks, shown as the black area in Figure 2a. These blocks do not help reconstruct the point cloud, yet they still have to be compressed. We investigated the proportion of unoccupied area in the occupancy map; as Table 2 shows, up to 64.65% of the map is unoccupied. Our primary objective is therefore to improve compression efficiency by increasing the occupied proportion of the map. In addition, when VS-RS is added, it generates the occupancy map shown in Figure 2b. VS-RS produces more small patches, which can burden the original packing algorithm, so our second goal is to place these small patches appropriately. We therefore propose DAPP.
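The unoccupied proportion reported in Table 2 is one minus the occupied fraction of the map. A minimal sketch, assuming the occupancy map is available as a binary array (the helper name is hypothetical):

```python
import numpy as np


def unoccupied_ratio(occupancy) -> float:
    """Share of occupancy-map cells (pixels or blocks) that are unoccupied (0)."""
    occ = np.asarray(occupancy, dtype=bool)
    return float(1.0 - occ.sum() / occ.size)


print(unoccupied_ratio([[1, 1, 0, 0], [1, 0, 0, 0]]))  # 0.625
```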

Figure 2.

Occupancy map: (a) generated by V-PCC; (b) generated by adding VS-RS.

Table 2.

Unoccupied areas ratio of the occupancy map.

3.2. Voxel Selection-Based Refining Segmentation (VS-RS)

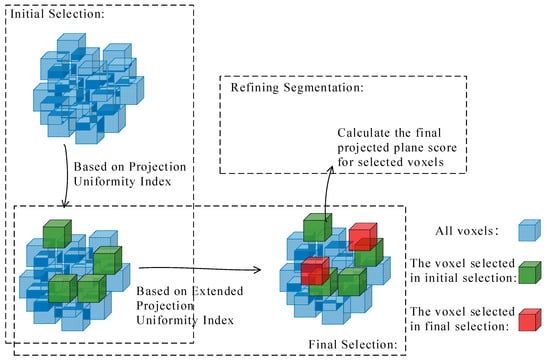

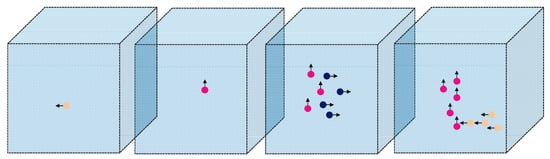

Refining segmentation targets the voxels that make patch boundaries non-smooth; in these voxels, the projection planes assigned to the points are inconsistent. The original method, however, ignores the differences between voxels and refines every voxel. We therefore propose VS-RS, which applies refining segmentation only to voxels with inconsistent projections, thereby reducing its computation time. Figure 3 shows the overall method of VS-RS. First, the Projection Uniformity Index is used to find, among all voxels, those whose internal projection planes are non-uniform; these form the initial selection. Then, the Expanded Projection Uniformity Index is used to find, among the remaining voxels, those that are non-uniform with respect to their neighbors; these form the final selection. Refining segmentation operates only on the selected voxels, and this targeted selection makes it more efficient.

Figure 3.

The proposed VS-RS.

3.2.1. Initial Selection of Voxels

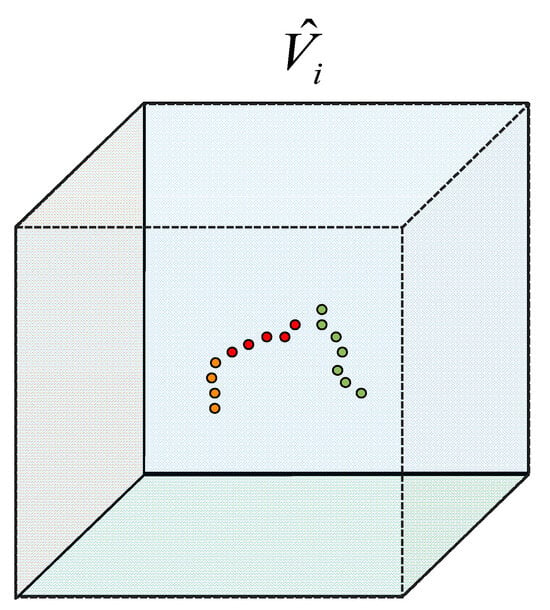

All voxels containing at least one point are called filled voxels; we denote the $i$-th filled voxel by $v_i$. The number of points in $v_i$ projected onto each projection plane $p$ is counted separately and denoted by $n_i^p$. Figure 4 shows an example of voxel interior point projection. This voxel contains sixteen points: five are projected onto the posterior plane (red plane), four onto the left plane (yellow plane), and the largest number, seven, onto the lower plane (green plane). Therefore, the final projection plane of the voxel is the lower plane, i.e., $p_i^* = \arg\max_{p \in K} n_i^p$, where $K$ represents the set of six projection planes.

Figure 4.

An example of the voxel interior point projection.

To avoid the redundant refinement caused by ignoring the differences between voxels, we propose the Projection Uniformity Index, which indicates whether all points in a voxel share the same projection plane. For a filled voxel $v_i$, it is defined as

$$U_i = \frac{\max_{p \in K} n_i^p}{T_i},$$

where $T_i$ represents the total number of 3D points in the voxel. Each voxel is selected based on $U_i$: a voxel is selected only when $U_i \neq 1$, that is, when the projection planes of its points differ. For the voxel in Figure 4, $U_i = 7/16 \neq 1$, so it is selected. The Projection Uniformity Index thus measures the level of disorder among the points inside a voxel. By default, voxels containing only one point are also selected. Figure 5 shows several examples of initially selected voxels. Because the uniformity of a voxel does not depend on its neighbors, we compute the Projection Uniformity Index for different filled voxels in parallel, which reduces the time consumed.

Figure 5.

Several examples of voxels in the initial selection voxels.
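The initial selection can be sketched as below. This is a minimal illustration assuming each filled voxel is given as the list of per-point plane indices from the initial segmentation; the helper names and the plane numbering used in the usage lines are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, List, Set


def projection_uniformity(plane_ids: List[int]) -> float:
    """U_i: share of the voxel's points that fall on its most common plane."""
    counts = Counter(plane_ids)
    return max(counts.values()) / len(plane_ids)


def initial_selection(voxels: Dict[Hashable, List[int]]) -> Set[Hashable]:
    """Select filled voxels whose points do not all share one projection plane.

    voxels maps a voxel key to the plane index of every point inside it.
    Single-point voxels are selected by default. Each voxel is evaluated
    independently, so this loop could be parallelized.
    """
    selected = set()
    for key, plane_ids in voxels.items():
        if len(plane_ids) == 1 or projection_uniformity(plane_ids) < 1.0:
            selected.add(key)
    return selected


fig4 = [0] * 5 + [1] * 4 + [2] * 7  # hypothetical: 0 = posterior, 1 = left, 2 = lower
print(projection_uniformity(fig4))  # 0.4375, i.e. 7/16
print(sorted(initial_selection({"a": fig4, "b": [3, 3, 3], "c": [4]})))  # ['a', 'c']
```

Voxel "b" is skipped because all of its points share one plane, while the single-point voxel "c" is kept by the default rule.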

3.2.2. Final Selection of Voxels

After the initial selection, the selected voxels are distributed as shown by the green voxels in Figure 6a, while the voxels whose projection plane changes during V-PCC refining segmentation are shown by the red voxels in Figure 6b. The comparison shows that some voxels whose plane changes have not been selected, and that these voxels cluster around the initially selected voxels. This observation motivates the final selection of voxels.

Figure 6.

Distribution of the voxels: (a) the initially selected; (b) projection plane changed in refining segmentation by V-PCC.

At this stage, the reference range of the Projection Uniformity Index is expanded from the interior of a voxel to its nearest neighbor voxels. Only neighbors of the initially selected voxels that have not been selected before are considered, and they are labeled as candidate voxels. Figure 7 shows this positional relationship: the two red voxels are initially selected voxels, and the four blue voxels are candidates for the final selection. When evaluating the neighborhood of the middle red voxel, the lower red voxel is excluded because it has already been selected, so the calculation involves only the four blue voxels. We use a KD-tree to find the nearest filled neighbor voxels.

Figure 7.

An example of voxels for the final selection.

We propose the Expanded Projection Uniformity Index, which indicates whether the projection planes remain uniform across the nearest neighbor voxels. Voxels selected by this index are in a state of relative disorder and therefore require refining segmentation. For an initially selected voxel $v_i$, it is defined as

$$\hat{U}_i = \frac{\max_{p \in K} \hat{n}_i^p}{\hat{T}_i},$$

where $\hat{T}_i$ represents the number of 3D points contained in all nearest neighbor voxels of the initially selected voxel, and $\hat{n}_i^p$ represents the number of points in these neighbor voxels projected onto projection plane $p$:

$$\hat{n}_i^p = \sum_{j=1}^{N} n_j^p, \qquad \hat{T}_i = \sum_{p \in K} \hat{n}_i^p,$$

where $n_j^p$ is the number of points in the $j$-th neighbor voxel projected onto plane $p$, and $N$ represents the number of nearest neighbor voxels of the initially selected voxel, ranging from 0 to 26. Candidate voxels are selected based on $\hat{U}_i$: only when $\hat{U}_i \neq 1$ are voxels selected, in which case all candidate voxels within a step size of 2 are selected. When $\hat{U}_i = 1$, no candidate voxels are selected. In short, the final selection consists of voxels that lack uniformity relative to their nearest neighbor voxels.
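The following sketch illustrates the final selection under simplifying assumptions: voxels are integer grid coordinates, neighbors are looked up directly in the 26-neighborhood with a dictionary instead of the KD-tree used in the paper, and the candidates of an initially selected voxel are taken to be its unselected filled 26-neighbors (the "step size 2" range is not modeled). All names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

Voxel = Tuple[int, int, int]
OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]  # 26-neighborhood


def expanded_uniformity(neighbor_counts: List[List[int]]) -> float:
    """Pooled index: max_p sum_j n_j^p divided by the total number of points."""
    totals = [sum(c[p] for c in neighbor_counts) for p in range(6)]
    return max(totals) / sum(totals)


def final_selection(counts: Dict[Voxel, List[int]], initial: Set[Voxel]) -> Set[Voxel]:
    """counts maps every filled voxel to its six per-plane point counts."""
    final: Set[Voxel] = set()
    for x, y, z in initial:
        # Candidates: filled 26-neighbors that were not selected initially.
        cands = [(x + dx, y + dy, z + dz) for dx, dy, dz in OFFSETS]
        cands = [v for v in cands if v in counts and v not in initial]
        if cands and expanded_uniformity([counts[v] for v in cands]) < 1.0:
            final.update(cands)  # non-uniform neighborhood: refine all candidates
    return final


counts = {
    (0, 0, 0): [1, 1, 0, 0, 0, 0], (1, 0, 0): [2, 0, 0, 0, 0, 0], (0, 1, 0): [0, 3, 0, 0, 0, 0],
    (5, 5, 5): [1, 0, 0, 0, 0, 0], (6, 5, 5): [1, 0, 0, 0, 0, 0],
}
print(final_selection(counts, {(0, 0, 0), (5, 5, 5)}))
```

Here the two neighbors of (0, 0, 0) disagree (planes 0 and 1), so both are added, while the lone neighbor of (5, 5, 5) is uniform and is left out.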

3.2.3. Refining Segmentation of Voxels

After voxel selection is completed, each selected voxel undergoes refining segmentation. This requires computing a final refinement score for each projection plane $p$:

$$F_p = S_n(p) + \frac{\lambda}{M} S_s(p),$$

where $M$ is the total number of neighboring voxels (no additional restrictions are imposed on the neighboring voxels) and $\lambda$ defaults to 3.0. $S_n(p)$ is the normal vector score of projection plane $p$ in the voxel:

$$S_n(p) = \sum_{m=1}^{T_i} \mathbf{n}_m \cdot \mathbf{u}_p,$$

where $\mathbf{n}_m$ is the normal vector of the $m$-th point in the selected voxel $v_i$, $T_i$ is the number of points in $v_i$, and $\mathbf{u}_p$ represents the constant unit vector of projection plane $p$. $S_s(p)$ represents the influence of the projections of points in neighboring voxels on $F_p$:

$$S_s(p) = \sum_{j=1}^{M} w_j\, n_j^p,$$

where $n_j^p$ is the number of points in the $j$-th neighboring voxel projected onto plane $p$, and $w_j$ is a distance weight: because voxels at different distances should have different influence, neighboring voxels closer to the voxel being refined receive larger weights. The weight $w_j$ is computed from the position coordinates of the current voxel and of the $j$-th neighboring voxel, together with R, the maximum distance between voxels; its two parameters default to 1.0 and 1.5, respectively.
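A sketch of the per-voxel refinement score follows, assuming the additive form $F_p = S_n(p) + (\lambda/M)\,S_s(p)$ with a summed normal score and precomputed distance weights $w_j$. The exact weighting function is not reproduced here, and the function name and plane ordering are hypothetical.

```python
import numpy as np

# Hypothetical ordering of the plane unit vectors u_p: +X, +Y, +Z, -X, -Y, -Z.
PLANE_NORMALS = np.array([
    [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
    [-1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0],
])


def refine_scores(normals, neighbor_counts, weights, lam: float = 3.0) -> np.ndarray:
    """Return F_p = S_n(p) + (lam / M) * S_s(p) for the six planes of one voxel.

    normals:         (T, 3) unit normals of the points in the selected voxel.
    neighbor_counts: (M, 6) per-plane point counts n_j^p of its M neighbors.
    weights:         (M,) distance weights w_j, larger for closer neighbors.
    """
    normals = np.asarray(normals, dtype=float)
    neighbor_counts = np.asarray(neighbor_counts, dtype=float)
    weights = np.asarray(weights, dtype=float)
    s_normal = (normals @ PLANE_NORMALS.T).sum(axis=0)  # sum_m n_m . u_p
    m = len(neighbor_counts)
    if m == 0:
        return s_normal
    s_smooth = weights @ neighbor_counts  # sum_j w_j * n_j^p
    return s_normal + lam / m * s_smooth


normals = [[0, 0, 1], [0, 0, 1]]
print(int(np.argmax(refine_scores(normals, [[0, 0, 0, 0, 0, 4]], [1.0]))))  # 5
print(int(np.argmax(refine_scores(normals, [[0, 0, 0, 0, 0, 4]], [0.1]))))  # 2
```

In this toy case, lowering the neighbor weight from 1.0 to 0.1 flips the chosen plane from the neighbors' plane (5) back to the one favored by the voxel's own normals (2), showing how the distance weights control the smoothing influence.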

The projection plane with the largest F value is chosen as the final projection plane, and the calculation is repeated until the maximum number of iterations is reached. To avoid unnecessary computation, each selected voxel can terminate its plane changes adaptively during the iterations: the changes of its projection plane are recorded, and once the plane is found to be repeating a previous change, the voxel is skipped in later iterations.
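The adaptive termination rule can be sketched as follows. This assumes a Jacobi-style update (all proposals in one iteration use the previous assignment) and interprets a "repeating change" as a voxel returning to a plane it already held; the function name and the `best_plane` callback, which stands in for the argmax of $F_p$, are hypothetical.

```python
from typing import Callable, Dict, Hashable, Iterable


def iterate_refinement(
    selected: Iterable[Hashable],
    planes: Dict[Hashable, int],
    best_plane: Callable[[Hashable, Dict[Hashable, int]], int],
    max_iters: int = 10,
) -> Dict[Hashable, int]:
    """Iteratively re-assign the planes of selected voxels with early termination.

    best_plane(v, planes) returns the plane with the largest score given the
    current assignment. A voxel whose new plane repeats one it already held is
    frozen at its current plane and skipped in later iterations.
    """
    history = {v: [planes[v]] for v in selected}
    active = set(history)
    for _ in range(max_iters):
        proposals = {v: best_plane(v, planes) for v in active}  # previous-iteration planes
        changed = False
        for v, p in proposals.items():
            if p == planes[v]:
                continue
            if p in history[v]:
                active.discard(v)  # oscillation detected: stop refining this voxel
                continue
            planes[v] = p
            history[v].append(p)
            changed = True
        if not changed:
            break
    return planes


def toy_best_plane(v, planes):
    # Voxel "a" would flip between planes 0 and 1 forever; "b" converges to 3.
    return 1 - planes[v] if v == "a" else 3


print(iterate_refinement(["a", "b"], {"a": 0, "b": 0}, toy_best_plane))  # {'a': 1, 'b': 3}
```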

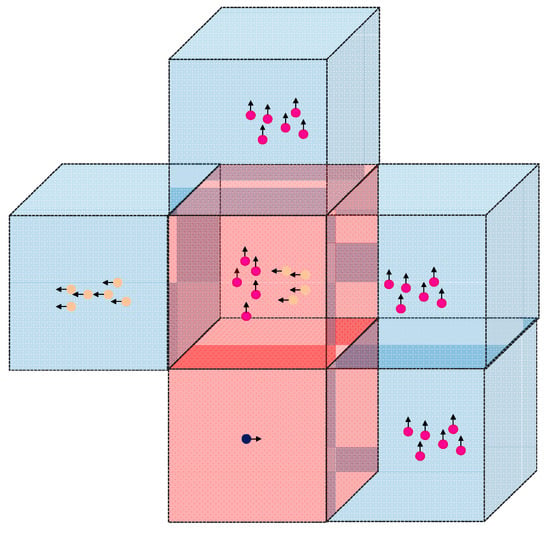

3.3. Data-Adaptive Patch Packing (DAPP)

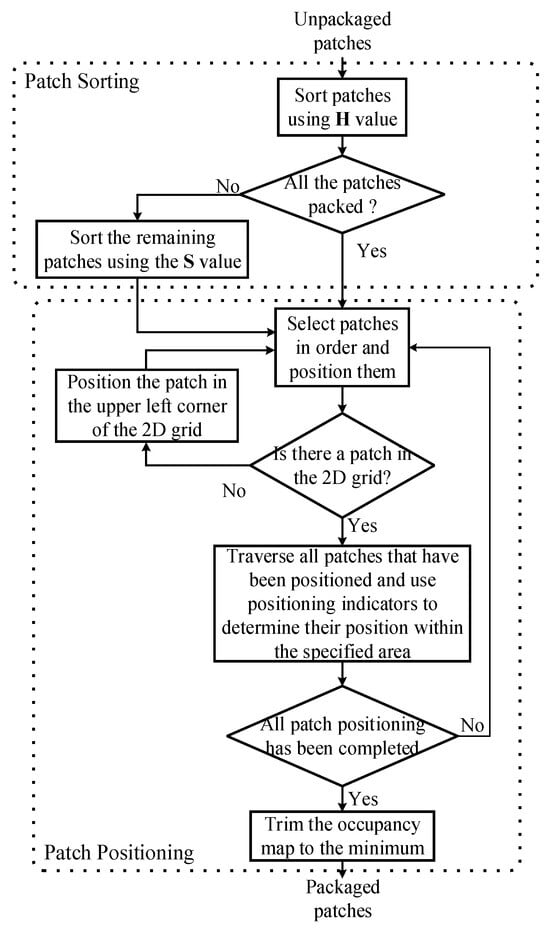

Because the occupancy map area is too large and the additional small patches burden the original packing algorithm, we propose Data-Adaptive Patch Packing (DAPP). Its goal is to find the optimal position for each patch adaptively, based on the patch's characteristics, so as to reduce the size of the occupancy map. DAPP mainly consists of setting up metrics, patch sorting, and patch positioning.

Patch sorting orders the newly generated patches according to a specific metric, and the patches are then positioned in this order. The original patch packing method sorts the patches by height and then positions them on a 2D grid. However, our experiments show that many patches have the same height, as shown in Table 3, in which case additional information is needed to make the sorting effective. Because the occupancy map generated by patch packing contains no attribute information, the sorting metrics should be based primarily on the geometric features of the patch.

Table 3.

Number of patches with the same height in a point cloud video frame.

The size of the occupancy map affects the final compression performance. Following [34], the sorting metrics used in this paper are height ($H$), Patch Bounding Box Size ($S_{\mathrm{PBB}}$), and Patch Valid Size ($S_{\mathrm{PV}}$), where $S_{\mathrm{PV}}$ represents the number of occupied pixels in the 2D patch bounding box. Height is the original sorting metric of V-PCC, so DAPP first sorts patches by height; for patches with the same height, $S_{\mathrm{PBB}}$ and $S_{\mathrm{PV}}$ are also considered through the combined score

$$S = \eta\, S_{\mathrm{PBB}} + \mu\, S_{\mathrm{PV}},$$

where $\eta = 0.4$ and $\mu = 0.6$. Patches with a larger $S$ value are positioned first.
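A sketch of the DAPP sorting rule, assuming the patch bounding box size is width × height in pixels and that $S$ is the weighted sum $\eta S_{\mathrm{PBB}} + \mu S_{\mathrm{PV}}$; the `Patch` class and `sort_patches` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Patch:
    width: int   # bounding-box width in pixels
    height: int  # bounding-box height H in pixels
    valid: int   # occupied pixels inside the bounding box (patch valid size)

    @property
    def bbox_size(self) -> int:
        # Assumption: patch bounding box size = width * height.
        return self.width * self.height


def sort_patches(patches: List[Patch], eta: float = 0.4, mu: float = 0.6) -> List[Patch]:
    """Height first (descending); ties broken by S = eta*bbox + mu*valid (descending)."""
    return sorted(patches, key=lambda p: (-p.height, -(eta * p.bbox_size + mu * p.valid)))


order = sort_patches([Patch(4, 5, 10), Patch(2, 5, 9), Patch(3, 6, 3), Patch(5, 5, 20)])
print([p.width for p in order])  # [3, 5, 4, 2]
```

The tallest patch comes first regardless of its size; among the three height-5 patches, the scores are 22, 14, and 9.4, which fixes their order.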

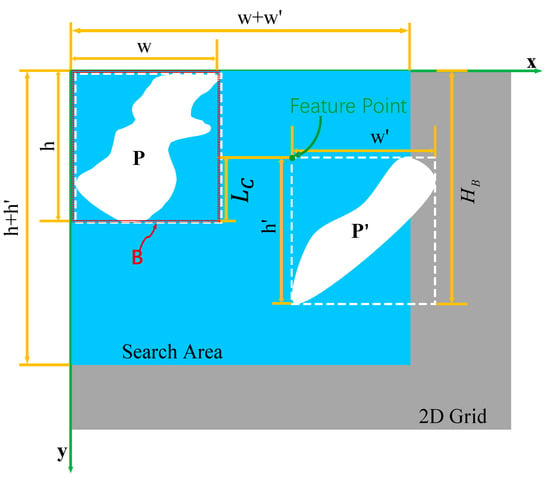

After sorting, positioning metrics determine where each patch is placed. These metrics are computed for every patch to select its final position, and they take into account both the patches already packed and the patch to be packed. Specifically, they are the Common Area Length, denoted $L_{\mathrm{CA}}$, and the height of the resulting bounding box, denoted $H_B$, as shown in Figure 8. $L_{\mathrm{CA}}$ measures the alignment between two patches and equals the length of the common portion of the two patches projected onto the Y-axis. These metrics are computed during packing: the patch to be positioned is compared with each previously positioned patch to select the best position. Once packing is completed, an occupancy map is created to store the patches.

Figure 8.

Patch placement area query range (blue area).

Suppose N patches have already been placed, and the placed patch selected for reference is P, with width $w_P$ and height $h_P$. The patch to be placed is the (N + 1)th patch, P′, with width $w_{P'}$ and height $h_{P'}$. The feature point is the upper-left corner of the bounding box of P′. Starting from the upper-left corner of the bounding box of P, a search area is defined (the blue area in Figure 8), and the feature point of P′ is moved across every position in this area to find the most suitable position. Let B denote the bounding box containing all patches packed so far. For each position of the feature point, if P′ falls within B, the Common Area Length $L_{\mathrm{CA}}$ of P and P′ is calculated, and the position with the largest $L_{\mathrm{CA}}$ is selected as the optimal position. If P′ does not fall within B, the position that yields the minimum bounding-box height $H_B$ is taken as the best position. If no suitable position is found around any of the packed patches, the height of the occupancy map is doubled. DAPP thus brings the patches closer together and prevents premature expansion of the 2D grid. Its architecture is presented in Figure 9.
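The positioning step can be sketched on a block grid as below. Several details are assumptions rather than the paper's specification: the search area extends from P's upper-left corner by $(w_P + w_{P'}) \times (h_P + h_{P'})$, positions inside B are preferred over positions outside it, ties keep the first position found, and a fallback position directly below B guarantees progress after the height is doubled. All names are hypothetical.

```python
from typing import List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (u, v, w, h) in blocks; (u, v) is the upper-left corner


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two axis-aligned rectangles share at least one block."""
    return (a[0] < b[0] + b[2] and b[0] < a[0] + a[2]
            and a[1] < b[1] + b[3] and b[1] < a[1] + a[3])


def common_area_length(a: Rect, b: Rect) -> int:
    """L_CA: length of the overlap of the two patches projected onto the Y-axis."""
    return max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))


def place_patch(placed: List[Rect], w: int, h: int, map_w: int, map_h: int) -> Optional[Rect]:
    """Return the chosen rectangle for a w x h patch, or None if nothing fits."""
    if not placed:
        return (0, 0, w, h) if w <= map_w and h <= map_h else None
    bw = max(r[0] + r[2] for r in placed)  # B spans [0, bw) x [0, bh)
    bh = max(r[1] + r[3] for r in placed)
    # Hypothetical search area: from P's upper-left corner, (w_P + w) wide, (h_P + h) high.
    searches = [(p, u, v) for p in placed
                for v in range(p[1], p[1] + p[3] + h)
                for u in range(p[0], p[0] + p[2] + w)]
    searches.append((None, 0, bh))  # fallback directly below B; never overlaps a patch
    best_in: Optional[Tuple[int, Rect]] = None   # (L_CA, rect), maximized
    best_out: Optional[Tuple[int, Rect]] = None  # (H_B, rect), minimized
    for p, u, v in searches:
        cand = (u, v, w, h)
        if u + w > map_w or v + h > map_h or any(overlaps(cand, q) for q in placed):
            continue
        if u + w <= bw and v + h <= bh:  # P' inside B: maximize alignment with P
            score = common_area_length(cand, p)
            if best_in is None or score > best_in[0]:
                best_in = (score, cand)
        else:  # P' outside B: minimize the height of the enlarged bounding box
            score = max(bh, v + h)
            if best_out is None or score < best_out[0]:
                best_out = (score, cand)
    best = best_in if best_in is not None else best_out
    return None if best is None else best[1]


def pack(sizes: List[Tuple[int, int]], map_w: int, map_h: int):
    """Place patches in the given (already sorted) order; double map_h when stuck."""
    if map_h < 1:
        raise ValueError("map_h must be positive")
    placed: List[Rect] = []
    for w, h in sizes:
        if w > map_w:
            raise ValueError("patch is wider than the occupancy map")
        while (rect := place_patch(placed, w, h, map_w, map_h)) is None:
            map_h *= 2  # no suitable position: double the occupancy-map height
        placed.append(rect)
    return placed, map_h


print(pack([(1, 3), (2, 1), (1, 1)], map_w=3, map_h=4))
```

In the usage line, the last 1 × 1 patch lands inside B next to the tall first patch, at the position whose Y-range overlaps it most, instead of extending the map downward.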

Figure 9.

DAPP architecture.

4. Experimental Results

This section evaluates whether the proposed algorithm reduces time consumption while improving RD performance, comparing it with TMC2-v18.0 and with the methods in [25,26,35]. Because the bit rates produced by different methods generally do not match exactly, we use the Bjøntegaard-Delta rate (BD-rate) [36] for a fair comparison, since it compares two coding solutions across multiple RD points. We report D1 (point-to-point) PSNR and D2 (point-to-plane) PSNR as geometry distortions [37], and Luma, Cb, and Cr PSNR as attribute distortions. PSNR is calculated from the mean squared error (MSE) [38]. To evaluate performance more thoroughly, we also define additional evaluation metrics for the refining segmentation and patch packing stages.
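As a rough illustration of how a BD-rate figure is obtained, the classic Bjøntegaard calculation can be sketched as follows, assuming NumPy. This is the cubic-polynomial variant; some evaluation tools use piecewise cubic interpolation instead, so this sketch is not necessarily the exact tool behind the reported numbers, and the function name and sample values are illustrative only.

```python
import numpy as np


def bd_rate(rate_ref, psnr_ref, rate_test, psnr_test) -> float:
    """Classic Bjøntegaard-Delta rate in percent (negative = bit-rate saving).

    Fits log(rate) as a cubic polynomial of PSNR for each codec, integrates
    both fits over the overlapping PSNR range, and converts the average
    log-rate difference back into a percentage.
    """
    p_ref = np.polyfit(psnr_ref, np.log(rate_ref), 3)
    p_test = np.polyfit(psnr_test, np.log(rate_test), 3)
    lo = max(np.min(psnr_ref), np.min(psnr_test))
    hi = min(np.max(psnr_ref), np.max(psnr_test))
    int_ref, int_test = np.polyint(p_ref), np.polyint(p_test)
    area_ref = np.polyval(int_ref, hi) - np.polyval(int_ref, lo)
    area_test = np.polyval(int_test, hi) - np.polyval(int_test, lo)
    avg_log_diff = (area_test - area_ref) / (hi - lo)
    return float((np.exp(avg_log_diff) - 1.0) * 100.0)


# Illustrative values only: five RD points, and a test codec that needs 10% less rate.
psnr = [30.0, 33.0, 36.0, 39.0, 42.0]
rates = [100.0, 200.0, 400.0, 800.0, 1600.0]
print(bd_rate(rates, psnr, [0.9 * r for r in rates], psnr))
```

A test codec that needs 10% less rate at every quality level yields a BD-rate of about −10%, which is why the negative values reported in this section denote gains.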

4.1. Experimental Conditions

The test platform runs Ubuntu 22.04.3 on an Intel Xeon(R) CPU E5-2650 processor with 256 GB of memory. All coding experiments follow the V-PCC Common Test Conditions (CTC) [37], using the five quantization parameter (QP) settings shown in Table 4, which range from low bit rate (R01) to high bit rate (R05) [33]. The test sequences are Loot, Redandblack, Soldier, Longdress, Basketball_player, and Dancer, as recommended in the CTC; Basketball_player and Dancer are 11-bit sequences [39,40], and the remaining sequences are 10-bit. The encoding configuration is all intra. The time-saving ratio is calculated as

$$\Delta T = \frac{T_{\mathrm{ori}} - T_{\mathrm{pro}}}{T_{\mathrm{ori}}} \times 100\%,$$

where $T_{\mathrm{ori}}$ and $T_{\mathrm{pro}}$ are the coding times of the original version and our method, respectively. For a fair comparison, all test point clouds are encoded with the same parameters to obtain comparable RD points.

Table 4.

Quantization parameters of the common test conditions.

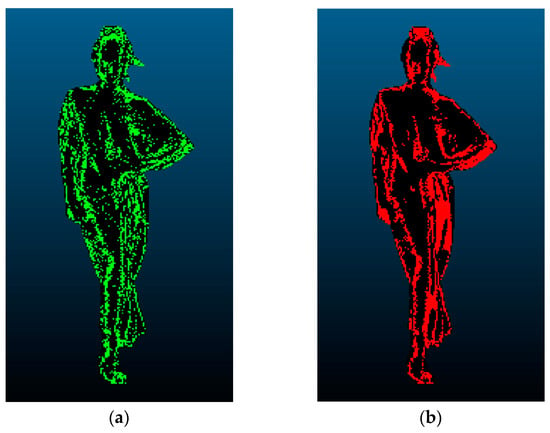

4.2. Correctness Analysis of Selected Voxels

To measure the accuracy of VS-RS, we define the accuracy rate (AR) and the determination rate (DR) as follows:

$$\mathrm{AR} = \frac{N(A \cap P)}{N(P)} \times 100\%, \qquad \mathrm{DR} = \frac{N(A \cap P)}{N(A)} \times 100\%,$$

where A is the set of voxels whose projection plane changes after refining segmentation in TMC2, P is the set of voxels selected by VS-RS, and N(·) denotes the number of voxels. AR is thus the proportion of P that also belongs to A, and DR is the proportion of A that is captured by P. As shown in Table 5, DR ranges from 85.32% to 95.45%, with an average of 93.22%, indicating that VS-RS captures most of the voxels whose projection plane index changes during refining segmentation in TMC2. The average AR is 85.19%, indicating that most of the voxels selected by VS-RS do require refining segmentation. The distributions of A and P can also be compared visually: the colored region in Figure 10a shows the distribution of P, with green voxels from the initial selection and red voxels from the final selection, while the red region in Figure 10b shows the distribution of A. The two distributions are essentially identical, which confirms that VS-RS selects voxels accurately.
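AR and DR reduce to simple set operations. A minimal sketch with a hypothetical helper name, taking voxel identifiers as Python sets and returning fractions (multiply by 100 for percentages):

```python
def selection_rates(changed: set, selected: set):
    """Return (AR, DR): AR = N(A & P) / N(P), DR = N(A & P) / N(A)."""
    hit = len(changed & selected)
    return hit / len(selected), hit / len(changed)


print(selection_rates({1, 2, 3, 4}, {2, 3, 4, 5, 6}))  # (0.6, 0.75)
```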

Table 5.

Tables of AR and DR.

Figure 10.

Distribution of the voxels: (a) selected by the VS-RS; (b) the projection plane changed in refining segmentation by TMC2.

Table 6 reports the BD-rate and running-time changes after adding VS-RS, compared with TMC2. VS-RS reduces encoding time by up to 43.50% and achieves average BD-rate gains of −0.22% for D1 and −0.28% for D2. For attributes, it obtains average BD-rate gains of −0.15% for Luma and −0.65% for Cr. Whereas most methods can only trade coding performance for efficiency, the proposed method improves coding performance while saving encoding time.

Table 6.

The change of BD-rate performance and running time of adding VS-RS compared with TMC2.

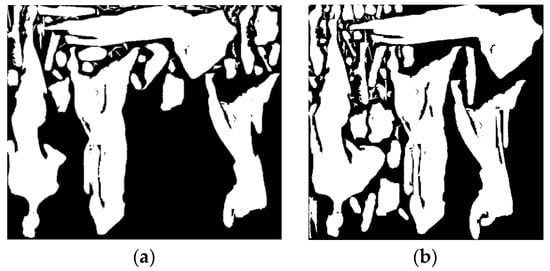

4.3. Packing Performance Evaluation

In addition to BD-rate, we measure packing performance by the reduction in occupancy map size. Figure 11a shows the occupancy map generated by the original method, and Figure 11b shows the one generated by our method; DAPP produces a smaller occupancy map. As Table 7 shows, DAPP reduces the occupancy map area by up to 20.14% and by 6.92% on average. This reduction also improves compression performance: as shown in Table 8, the average BD-rate gains are −0.91% for D1, −1.16% for D2, −1.03% for Luma, −0.45% for Cb, and −0.81% for Cr.

Figure 11.

Occupancy map: (a) generated by TMC2; (b) generated by ours.

Table 7.

The change of the occupancy area of the occupancy map.

Table 8.

Changes in performance after adding the DAPP compared to TMC2.

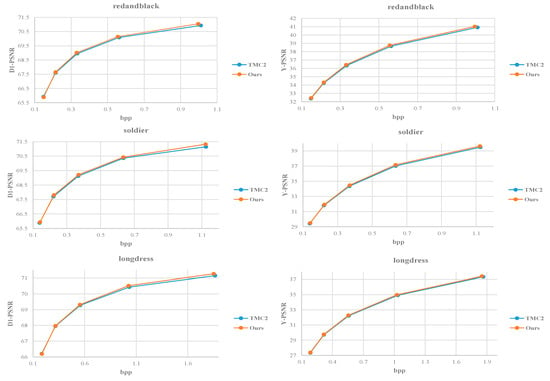

4.4. Overall Coding Performance

When the two proposed methods are combined, Table 9 compares the results with TMC2-v18.0. The combined algorithm reduces coding time by up to 43.34% and by 31.86% on average. The average D1 BD-rate gain is −1.39%, with the best result on Soldier (−2.86%); the average D2 BD-rate gain is −1.58%, with the best result on Longdress (−3.07%); and the average Luma BD-rate gain is −1.35%, with the best result on Redandblack (−2.62%). The proposed algorithm therefore improves point cloud compression performance while reducing its complexity.

Table 9.

Changes in BD-rate performance and running time of the proposed algorithm compared to TMC2.

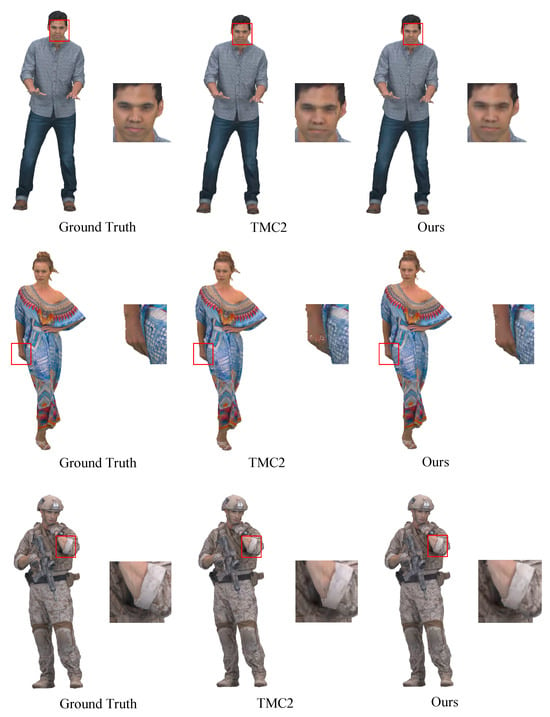

We also plot RD curves for the proposed method and TMC2, with the bit rate measured in bits per input point (bpp) and D1 and Luma as distortion metrics. Figure 12 shows the RD curves of three test sequences; the proposed method improves performance, particularly at high bit rates. The proposed method also improves subjective quality over TMC2. Figure 13 shows a subjective quality comparison between TMC2 and our method: overall, our method better preserves the details of the point cloud, and the reconstructed local regions are also of higher subjective quality than those of TMC2.

Figure 12.

RD performance comparison of TMC2 and ours.

Figure 13.

Example of subjective quality comparison between TMC2 and ours.

4.5. Comparison with Related Works

Although numerous studies have reduced the time consumption of point cloud coding, few methods improve compression performance and speed at the same time; our method addresses both requirements. We compare the proposed method with [25,26,35] in Table 10 and Table 11, using the data reported in their respective papers. Refs. [25,35] compared their overall performance with TMC2-12.0, and ref. [26] with TMC2-17.0. The methods in [25,26] improve refining segmentation, whereas the fast CU decision method in [35] accelerates the video compression process. Ref. [26] reports a 62.41% time reduction in attribute sequence compression, ref. [25] improves FGRS time by 47.05%, and ref. [35] improves G-RS by 26.9%. The proposed method reduces the overall point cloud encoding time by 31.86% on average.

Table 10.

Comparison of Geom.BD-rate coding performance with related works.

Table 11.

Comparison of Attr.BD-rate coding performance with related works.

Refs. [25,35] incur losses of varying degree in the D1 and D2 BD-rates. Ref. [26] achieves D1 and D2 BD-rates of −0.03% and −0.02%, respectively, whereas the proposed method achieves −1.39% and −1.58% (negative values indicate bitrate savings). Refs. [25,35] likewise incur losses in the Luma BD-rate, and [26] achieves only −0.09%, compared with −1.35% for the proposed method. The proposed method also improves the Cb and Cr BD-rates.
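Since this comparison is expressed entirely in BD-rates, a minimal sketch of the classic Bjøntegaard calculation [36] may help. For each RD curve, log10(rate) is fitted as a cubic polynomial of PSNR; both fits are integrated over the overlapping PSNR range, and the average log-rate difference is converted to a percentage. This is the polynomial-fit formulation; the MPEG PCC evaluation tools may use a different interpolation variant, and the function names below are our own.

```python
import math
from typing import List, Sequence


def _fit_cubic(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Least-squares cubic fit y ~ c0 + c1*x + c2*x^2 + c3*x^3 (normal equations + Gaussian elimination)."""
    n = 4
    a = [[sum(xi ** (i + j) for xi in x) for j in range(n)] for i in range(n)]
    b = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(n)]
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n):
                a[r][k] -= f * a[col][k]
            b[r] -= f * b[col]
    c = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        c[i] = (b[i] - sum(a[i][k] * c[k] for k in range(i + 1, n))) / a[i][i]
    return c


def _integrate(c: Sequence[float], lo: float, hi: float) -> float:
    """Definite integral of sum(c[k] * u**k) over [lo, hi]."""
    def antiderivative(u: float) -> float:
        return sum(ck * u ** (k + 1) / (k + 1) for k, ck in enumerate(c))
    return antiderivative(hi) - antiderivative(lo)


def bd_rate(rate_ref, psnr_ref, rate_test, psnr_test) -> float:
    """Bjontegaard delta rate (%) of the test curve against the reference; negative = bit savings."""
    lo = max(min(psnr_ref), min(psnr_test))  # overlapping PSNR interval
    hi = min(max(psnr_ref), max(psnr_test))
    shift = (lo + hi) / 2.0  # centre PSNR values so the cubic fit stays well conditioned
    c_ref = _fit_cubic([p - shift for p in psnr_ref], [math.log10(r) for r in rate_ref])
    c_test = _fit_cubic([p - shift for p in psnr_test], [math.log10(r) for r in rate_test])
    area_ref = _integrate(c_ref, lo - shift, hi - shift)
    area_test = _integrate(c_test, lo - shift, hi - shift)
    avg_log_diff = (area_test - area_ref) / (hi - lo)
    return (10.0 ** avg_log_diff - 1.0) * 100.0
```

For example, if `r` and `p` hold five reference rates (bpp) and PSNRs, `bd_rate(r, p, [0.9 * x for x in r], p)` returns approximately −10, i.e. a 10% bitrate saving at equal quality. Centring the PSNR axis does not change the fitted curve (it spans the same cubic space) but avoids the ill-conditioned powers of values around 60 to 75 dB.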

5. Conclusions

This article addresses two limitations of V-PCC: high time consumption and large occupancy maps. To reduce time consumption, we analyzed and accelerated the refining segmentation process, which accounts for a large share of the overall encoding time. The proposed VS-RS algorithm bypasses voxels that do not require refining segmentation; only voxels with a non-uniform distribution of projection planes are refined.

The original patch packing algorithm produces large occupancy maps, and VS-RS may generate additional small patches. To address both issues, this paper also proposes DAPP, which packs patches more compactly. DAPP comprises a novel patch sorting method, a placement area selection method, and a patch positioning method. Both solutions still generate compliant V-PCC bitstreams that can be decoded correctly by any V-PCC decoder.

Experimental results show that our method achieves average BD-rate gains of −1.39% and −1.58% over the V-PCC benchmark under the D1 and D2 distortion metrics, respectively. At the same time, it reduces the encoding time by 31.86% and the size of the occupancy map by 20.14%. The method also improves the quality of the reconstructed point clouds and preserves more detail. Compared with the results reported in recently published papers, our method also achieves better geometry and attribute BD-rates. Several topics remain for future exploration, including (1) enhancing image-filling algorithms, (2) making fuller use of occupancy data in video compression, and (3) integrating deep learning techniques into V-PCC.
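The selection rule of VS-RS is stated above only informally. The Python sketch below is one hypothetical reading of it, not the paper's exact criterion or TMC2 code. Points are bucketed into a regular voxel grid, and a voxel is treated as uniform, and therefore skipped, when all of its points share one initial projection-plane index (e.g. 0 to 5 for the six default axis-aligned planes). The function names, the grid bucketing, and the "more than one distinct index" test are all assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Point = Tuple[int, int, int]
Voxel = Tuple[int, int, int]


def voxel_of(p: Point, voxel_size: int) -> Voxel:
    """Integer grid cell containing point p (floor division also handles negative coordinates)."""
    return (p[0] // voxel_size, p[1] // voxel_size, p[2] // voxel_size)


def select_voxels(
    points: Iterable[Point], plane_idx: Iterable[int], voxel_size: int
) -> Tuple[Set[Voxel], Set[Voxel]]:
    """Return (refine, skip) voxel sets under a hypothetical uniformity test.

    A voxel is skipped when every point in it was assigned the same initial
    projection plane; voxels whose points map to more than one plane are passed
    on to refining segmentation.
    """
    planes: Dict[Voxel, Set[int]] = defaultdict(set)
    for p, idx in zip(points, plane_idx):
        planes[voxel_of(p, voxel_size)].add(idx)
    refine = {v for v, s in planes.items() if len(s) > 1}
    skip = set(planes) - refine
    return refine, skip


if __name__ == "__main__":
    pts = [(0, 0, 0), (1, 1, 1), (4, 0, 0), (5, 1, 0)]
    idx = [0, 0, 1, 3]
    refine, skip = select_voxels(pts, idx, voxel_size=2)
    print(refine, skip)  # {(2, 0, 0)} {(0, 0, 0)}
```

Only the voxels in `refine` would then enter the iterative smoothing of refining segmentation. Collecting a set of plane indices per voxel keeps the selection a single linear pass over the points.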

Author Contributions

Conceptualization, H.L. and Y.C.; data curation, Y.C. and S.L.; formal analysis, H.L.; funding acquisition, S.L. and C.H.; investigation, H.L.; methodology, H.L. and Y.C.; project administration, Y.D. and S.L.; resources, C.H. and Y.D.; writing—original draft, H.L.; writing—review and editing, H.L. and Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been partially supported by the National Key R&D Program of China under grant No. 2020YFB1709200.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper, 8iVFB and Owlii, are publicly available and can be downloaded from https://mpeg-pcc.org/index.php/pcc-content-database/ (accessed on 2 November 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Xu, Z.; Chang, W.; Zhu, Y.; Dong, L.; Zhou, H.; Zhang, Q. Building high-fidelity human body models from user-generated data. IEEE Trans. Multimed. 2020, 23, 1542–1556.

2. Wang, T.; Li, F.; Cosman, P.C. Learning-based rate control for video-based point cloud compression. IEEE Trans. Image Process. 2022, 31, 2175–2189.

3. Li, L.; Li, Z.; Liu, S.; Li, H. Efficient projected frame padding for video-based point cloud compression. IEEE Trans. Multimed. 2020, 23, 2806–2819.

4. Li, L.; Li, Z.; Zakharchenko, V.; Chen, J.; Li, H. Advanced 3D motion prediction for video-based dynamic point cloud compression. IEEE Trans. Image Process. 2019, 29, 289–302.

5. Ahmmed, A.; Paul, M.; Pickering, M. Dynamic point cloud texture video compression using the edge position difference oriented motion model. In Proceedings of the 2021 Data Compression Conference (DCC), Snowbird, UT, USA, 23–26 March 2021; p. 335.

6. Cao, C.; Preda, M.; Zakharchenko, V.; Jang, E.S.; Zaharia, T. Compression of sparse and dense dynamic point clouds—Methods and standards. Proc. IEEE 2021, 109, 1537–1558.

7. Jang, E.S.; Preda, M.; Mammou, K.; Tourapis, A.M.; Kim, J.; Graziosi, D.B.; Rhyu, S.; Budagavi, M. Video-based point-cloud-compression standard in MPEG: From evidence collection to committee draft [standards in a nutshell]. IEEE Signal Process. Mag. 2019, 36, 118–123.

8. Su, H.; Liu, Q.; Liu, Y.; Yuan, H.; Yang, H.; Pan, Z.; Wang, Z. Bitstream-Based Perceptual Quality Assessment of Compressed 3D Point Clouds. IEEE Trans. Image Process. 2023, 32, 1815–1828.

9. Akhtar, A.; Li, Z.; Van der Auwera, G. Inter-frame compression for dynamic point cloud geometry coding. arXiv 2022, arXiv:2207.12554.

10. Liu, Y.; Yang, Q.; Xu, Y. Reduced Reference Quality Assessment for Point Cloud Compression. In Proceedings of the 2022 IEEE International Conference on Visual Communications and Image Processing (VCIP), Suzhou, China, 13–16 December 2022; pp. 1–5.

11. Akhtar, A.; Gao, W.; Li, L.; Li, Z.; Jia, W.; Liu, S. Video-based point cloud compression artifact removal. IEEE Trans. Multimed. 2021, 24, 2866–2876.

12. Liu, Q.; Yuan, H.; Hamzaoui, R.; Su, H.; Hou, J.; Yang, H. Reduced reference perceptual quality model with application to rate control for video-based point cloud compression. IEEE Trans. Image Process. 2021, 30, 6623–6636.

13. Liu, Q.; Yuan, H.; Hou, J.; Hamzaoui, R.; Su, H. Model-based joint bit allocation between geometry and color for video-based 3D point cloud compression. IEEE Trans. Multimed. 2020, 23, 3278–3291.

14. Liu, H.; Yuan, H.; Liu, Q.; Hou, J.; Zeng, H.; Kwong, S. A hybrid compression framework for color attributes of static 3D point clouds. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1564–1577.

15. MPEG. G-PCC Codec Description v12; ISO/IEC JTC 1/SC 29/WG 7; MPEG: Torino, Italy, 2021.

16. Graziosi, D.; Nakagami, O.; Kuma, S.; Zaghetto, A.; Suzuki, T.; Tabatabai, A. An overview of ongoing point cloud compression standardization activities: Video-based (V-PCC) and geometry-based (G-PCC). APSIPA Trans. Signal Inf. Process. 2020, 9, e13.

17. MPEG. V-PCC Codec Description v10; ISO/IEC JTC 1/SC 29/WG 7; MPEG: Torino, Italy, 2020.

18. Schwarz, S.; Preda, M.; Baroncini, V.; Budagavi, M.; Cesar, P.; Chou, P.A.; Cohen, R.A.; Krivokuća, M.; Lasserre, S.; Li, Z. Emerging MPEG standards for point cloud compression. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 9, 133–148.

19. Sullivan, G.J.; Ohm, J.-R.; Han, W.-J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

20. Faramarzi, E.; Budagavi, M. On Complexity Reduction of TMC2 Encoder; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2019.

21. Faramarzi, E.; Budagavi, M.; Joshi, R. Grid-Based Partitioning; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2019.

22. Faramarzi, E.; Joshi, R.; Budagavi, M.; Rhyu, S.; Song, J. CE2.27 Report on Encoder’s Speedup; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2019.

23. Becerra, H.; Higa, R.; Garcia, P.; Testoni, V. V-PCC Encoder Performance Analysis; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2019.

24. Higa, R.; Garcia, P.; Testoni, V. V-PCC Encoder Performance Optimization and Speed Up; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2020.

25. Kim, J.; Kim, Y.-H. Fast grid-based refining segmentation method in video-based point cloud compression. IEEE Access 2021, 9, 80088–80099.

26. Kim, Y.; Kim, Y.-H. Low Complexity Fast Grid-Based Refining Segmentation in the V-PCC encoder. In Proceedings of the 2022 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Yeosu, Republic of Korea, 26–28 October 2022; pp. 1–4.

27. Sheikhipour, N.; Pesonen, M.; Schwarz, S.; Vadakital, V.K.M. Improved single-layer coding of volumetric data. In Proceedings of the 2019 8th European Workshop on Visual Information Processing (EUVIP), Rome, Italy, 28–31 October 2019; pp. 152–157.

28. Zhu, W.; Ma, Z.; Xu, Y.; Li, L.; Li, Z. View-dependent dynamic point cloud compression. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 765–781.

29. Li, L.; Li, Z.; Liu, S.; Li, H. Occupancy-map-based rate distortion optimization and partition for video-based point cloud compression. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 326–338.

30. Rhyu, S.; Kim, J.; Im, J.; Kim, K. Contextual homogeneity-based patch decomposition method for higher point cloud compression. IEEE Access 2020, 8, 207805–207812.

31. Wang, D.; Zhu, W.; Xu, Y.; Xu, Y. Visual quality optimization for view-dependent point cloud compression. In Proceedings of the 2021 IEEE International Symposium on Circuits and Systems (ISCAS), Daegu, Republic of Korea, 22–28 May 2021; pp. 1–5.

32. Lu, C.-L.; Chou, H.-S.; Huang, Y.-Y.; Chan, M.-L.; Lin, S.-Y.; Chen, S.-L. High Compression Rate Architecture For Texture Padding Based on V-PCC. In Proceedings of the 2023 International Conference on Consumer Electronics-Taiwan (ICCE-Taiwan), PingTung, Taiwan, 17–19 July 2023; pp. 413–414.

33. MPEG. V-PCC Codec Description v7; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2019.

34. Costa, A.; Dricot, A.; Brites, C.; Ascenso, J.; Pereira, F. Improved patch packing for the MPEG V-PCC standard. In Proceedings of the 2019 IEEE 21st International Workshop on Multimedia Signal Processing (MMSP), Kuala Lumpur, Malaysia, 27–29 September 2019; pp. 1–6.

35. Yuan, H.; Gao, W.; Li, G.; Li, Z. Rate-Distortion-Guided Learning Approach with Cross-Projection Information for V-PCC Fast CU Decision. In Proceedings of the 30th ACM International Conference on Multimedia, New York, NY, USA, 10–14 October 2022; pp. 3085–3093.

36. Bjontegaard, G. Calculation of average PSNR differences between RD-curves. ITU SG16 Doc. VCEG-M33 2001, 4, 401–410.

37. MPEG. Common Test Conditions for PCC; ISO/IEC JTC 1/SC 29/WG 11; MPEG: Torino, Italy, 2020.

38. Rasheed, M.; Ali, A.H.; Alabdali, O.; Shihab, S.; Rashid, A.; Rashid, T.; Hamad, S.H.A. The effectiveness of the finite differences method on physical and medical images based on a heat diffusion equation. Proc. J. Phys. Conf. Ser. 2021, 1999, 012080.

39. Krivokuća, M.; Chou, P.A.; Savill, P. 8i Voxelized Surface Light Field (8iVSLF) Dataset. 2018. Available online: https://mpeg-pcc.org/index.php/pcc-content-database/8i-voxelized-surface-light-field-8ivslf-dataset/ (accessed on 2 November 2023).

40. Xu, Y.; Lu, Y.; Wen, Z. Owlii Dynamic Human Mesh Sequence Dataset. 2017. Available online: https://mpeg-pcc.org/index.php/pcc-content-database/owlii-dynamic-human-textured-mesh-sequence-dataset/ (accessed on 2 November 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).