Abstract

This paper presents a novel approach to material classification using short-wave infrared (SWIR) imaging, aimed at applications where differentiating visually similar objects based on material properties is essential, such as in autonomous driving. Traditional vision systems, relying on visible spectrum imaging, struggle to distinguish between objects with similar appearances but different material compositions. Our method leverages SWIR’s distinct reflectance characteristics, particularly for materials containing moisture, and demonstrates a significant improvement in accuracy. Specifically, SWIR data achieved near-perfect classification results with an accuracy of 99% for distinguishing real from artificial objects, compared to 77% with visible spectrum data. In object detection tasks, our SWIR-based model achieved a mean average precision (mAP) of 0.98 for human detection and up to 1.00 for other objects, demonstrating its robustness in reducing false detections. This study underscores SWIR’s potential to enhance object recognition and reduce ambiguity in complex environments, offering a valuable contribution to material-based object recognition in autonomous driving, manufacturing, and beyond.

1. Introduction

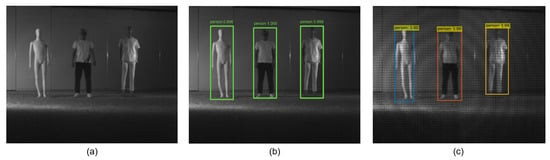

Material classification is a critical technology across numerous fields, including agriculture, medicine, manufacturing, robotics, and autonomous driving [1]. Traditional computer vision methods, predominantly relying on visible spectrum cameras, analyze features like color and texture to distinguish objects [2]. However, these approaches often fail when confronted with objects that appear visually similar but are composed of different materials. This limitation is particularly problematic in safety-critical applications, such as autonomous driving, where misidentification can lead to severe consequences [3]. For instance, as depicted in Figure 1, mannequins and humans may appear identical at a distance in the visible spectrum, posing challenges for accurate detection.

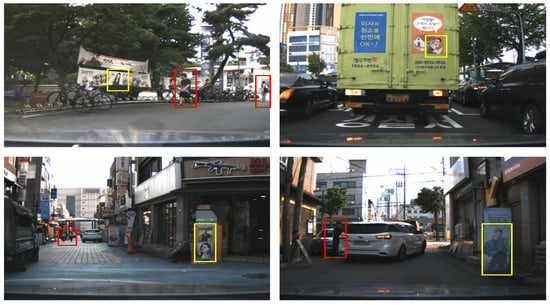

Figure 1.

Human and mannequin in traffic environment.

This limitation is particularly pronounced in autonomous driving systems, where vision systems heavily depend on visible spectrum cameras [4]. Current object detection technology, which is fundamental to autonomous driving, frequently struggles to distinguish between visually similar objects, such as humans vs. human-like objects (mannequins, banners with human images), even at relatively short distances of 20 m, as shown in Figure 1. Such misidentifications can not only impede smooth operation but potentially lead to dangerous situations [3]. Real-world scenarios, such as the misinterpretation of traffic light reflections or human figures in advertisements as actual objects (Figure 2), further highlight the critical need for more sophisticated vision systems.

Figure 2.

Other objects with similar appearances: the red box indicates a real person, and the yellow box indicates a human-shaped object.

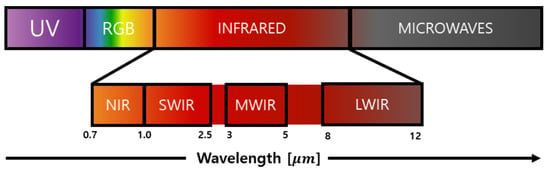

To achieve more robust material classification, researchers have recognized the value of leveraging information from multiple wavelengths [5]. The electromagnetic spectrum encompasses various regions defined by wavelength, as depicted in Figure 3. Among these, short-wave infrared (SWIR) imaging, operating in the 1000 nm to 2500 nm range, offers distinct advantages for material analysis. SWIR’s high transmissivity and capability to penetrate obscurants like fog, smoke, and dust, combined with materials’ unique reflection and absorption characteristics in this range, enable differentiation even when objects appear identical in the visible spectrum.

Figure 3.

Illustration of the electromagnetic spectrum with an emphasis on the infrared range.

In this paper, we propose a method to address this challenge by leveraging differences in light reflection and absorption characteristics across various wavelengths. The proposed approach utilizes deep learning, training an object detection model with multi-wavelength data to enable the recognition of objects that are visually similar but materially distinct. Furthermore, we investigate which wavelengths beyond the visible range can be effective in differentiating similar-looking objects based on their material properties. After confirming the feasibility of material-based differentiation through an algorithm-based method to select the correct wavelengths, we use these wavelengths to train an object detection model. The proposed approach aims to mitigate object misidentification during autonomous driving, addressing scenarios like the misinterpretation of traffic light reflections or human figures in advertisements as real objects, as shown in Figure 2. Such misinterpretations, common in autonomous driving, underscore the need for vision systems that incorporate information from diverse wavelength ranges.

The contributions of this paper are as follows:

1. We assessed multiple SWIR-range wavelengths to determine which ones have advantages in distinguishing materials that contain moisture.

2. We propose a method to distinguish materials by utilizing the radiation characteristics in the SWIR range.

3. To detect objects required for autonomous driving, we propose a deep learning method that uses the best-performing wavelengths with a multi-modal fusion approach to differentiate and identify objects with similar appearances.

2. Related Work

2.1. Multi-Spectral Imaging

Multi-spectral imaging, with its ability to capture chemical and physical properties through distinct wavelength bands, has emerged as a transformative technology across diverse domains. Its versatility has been demonstrated in areas ranging from agriculture and heritage conservation to satellite imaging and marine ecosystem monitoring.

In agricultural research, this technology has proven invaluable for addressing challenges in resource management and crop production. For instance, Prananto et al. [6] utilized near-infrared (NIR) spectroscopy to analyze nutrient levels in plant leaf tissues, presenting a rapid and cost-efficient alternative to conventional nutrient assessment methods. Expanding on this, Hively et al. [7] investigated the potential of SWIR bands to detect crop residues and evaluate soil health, enabling more precise soil management strategies. Additionally, He et al. [8] developed a system to identify haploid seeds using spectral cameras operating at 980 nm and 1050 nm, leveraging the absorption properties of these wavelengths to boost corn yield.

The scope of multi-spectral imaging extends beyond land-based applications to environmental monitoring. In marine ecosystems, Román et al. [9] applied a 10-band multi-spectral camera mounted on a UAV to monitor ecological dynamics. By employing advanced classification algorithms—such as Maximum Likelihood Classifier (MLC), Minimum Distance Classifier (MDC), and Spectral Angle Classifier (SAC)—their approach facilitated detailed ecosystem mapping and monitoring.

Heritage conservation has also greatly benefited from the non-invasive capabilities of multi-spectral imaging. Delaney et al. [10] utilized a combination of visible and infrared spectroscopy, including NIR and SWIR, to examine the composition of artworks and refine reflectography methods for enhanced imaging detail. Luo et al. [11] expanded on these techniques by characterizing the composition and preservation state of Xuan paper through NIR spectroscopy. Similarly, Liang [12] showcased the effectiveness of NIR and SWIR wavelengths in uncovering hidden attributes of archaeological and artistic artifacts, driving significant progress in artifact conservation methodologies.

Satellite imaging has also seen significant improvements through multi-spectral techniques. Wang et al. [13] utilized NIR and SWIR bands for the vicarious calibration of satellite ocean color sensors, enhancing data accuracy. Park and Choi [14] leveraged sharpened VNIR (visible and NIR) and SWIR bands from WorldView-3 imagery for mineral detection, demonstrating superior spatial resolution. Yu et al. [15] used SWIR imaging to improve object detection in challenging conditions such as fog, outperforming traditional RGB-based methods.

As the focus shifts to autonomous driving, multi-spectral imaging becomes increasingly important for addressing perception challenges under adverse environmental conditions. Pinchon et al. [16] highlighted the extensive use of SWIR, NIR, and long-wave infrared (LWIR) to enhance vehicle perception across various weather scenarios to ensure reliable operation in daytime and nighttime environments. Bhadoriya et al. [17] used LWIR imaging for vehicle detection and tracking in conditions of low visibility, such as fog and rain. Similarly, St-Laurent et al. [18] demonstrated the effective use of NIR, SWIR, and LWIR for autonomous navigation during severe winter conditions, showcasing their resilience in harsh environments. Building on this, Sheeny et al. [19] explored the integration of polarized LWIR sensors with convolutional neural networks to enhance situational awareness in varying weather conditions. Judd et al. [20] conducted a comprehensive evaluation of LWIR, SWIR, visible wavelengths, and LiDAR performance under foggy conditions. Their findings highlighted the strengths and limitations of these spectral bands in maintaining perception and safety in autonomous systems.

2.2. Material Classification

Generally, material classification relies on characteristics like color or texture to differentiate various materials. However, distinguishing materials with conventional visible-light cameras can be challenging due to lighting variations or the presence of materials with different colors. Consequently, material classification often supplements the visible spectrum with radiation at other wavelengths and with different types of sensors.

Jiang et al. [21] employed the wavelengths of the visible spectrum (R, G, B) and the NIR region to leverage the correlation between them for material classification. They extracted individual features from RGB and NIR domains using two separate CNN networks and then fused them in a fully connected layer for classification. Saponaro et al. [22] used the LWIR wavelength to perform material classification. They created a dataset incorporating LWIR-range imaging and proposed a classification method utilizing these data, which showed improved performance compared to methods using only visible light data. Xue et al. [23] suggest a material classification method based solely on visible spectrum data. Their imaging framework enhances appearance and recognition performance through slight angular variations when capturing images of materials. Based on this method, a public dataset of 40 types of outdoor ground surfaces was acquired.

Beyond these visible and infrared-based methods, alternative techniques for material classification have been explored. Pandey and Pandey [24] investigated ultrasonic techniques to classify materials based on structural and microstructural properties. Azimi et al. [25] used deep learning methods for microstructural classification in low-carbon steel, providing insights into material properties through advanced imaging and computational techniques. Similarly, Ratnayake et al. [26] utilized acoustic signals and subspace-based classifiers for material classification. Weiß and Santra [27] proposed a Siamese network architecture for material classification using millimeter-wave radar systems. In another study, Ponti and Mascarenhas [28] applied classifier combination methods to noisy multispectral images for soil science applications to evaluate the efficacy of multispectral approaches in natural material analysis. Jurado et al. [29] combined spatial and multispectral features to classify natural materials in 3D point clouds. Han et al. [30] focused on NIR imaging to effectively classify surface materials of objects, while Silva et al. [31] integrated neural networks and principal component analysis (PCA) to distinguish materials based on their reflectance properties. These advancements underscore the significance of multispectral and computational techniques in material classification to enable performance improvement across diverse applications.

3. Approach

3.1. Hardware Setup and Data Acquisition

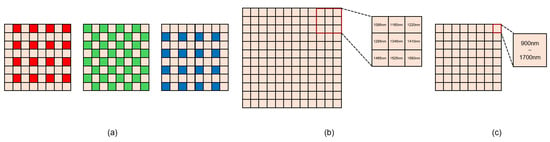

In this study, we used three cameras: one camera capable of capturing visible-range data and two cameras for capturing SWIR-range images (Figure 4). The visible light camera depicted in Figure 4a uses a Bayer filter to capture red, green, and blue wavelengths on the sensor (Figure 5a). This type of filter selectively permits the passage of visible light wavelengths corresponding to the red, green, and blue spectra, which are subsequently captured by the sensor. Figure 4b presents the ANT SWIR multispectral snapshot camera (Somerville, MA, USA), which can acquire images across nine distinct wavelength bands concurrently, again using a Bayer-like filter to store each wavelength’s information on the image (Figure 5b). Figure 4c shows the Crevis HG-A130SW camera (Yongin, Republic of Korea), which can capture light from a range of 400 nm to 1700 nm within a single image. As depicted in Figure 5c, each pixel receives all light within a specific wavelength range. Since our focus is on the SWIR range, we utilized the Crevis HG-A130SW camera with a long-pass filter (900 nm < λ < 1700 nm) to capture the desired wavelengths selectively.

Figure 4.

The cameras used in this study (a) FLIR RGB camera, (b) ANT SWIR multispectral snapshot camera, and (c) Crevis HG-A130SW camera.

Figure 5.

Principles of image acquisition for each camera: (a) RGB camera, (b) snapshot SWIR camera, and (c) standard SWIR camera.

Each camera offers distinct advantages for data acquisition. The Crevis HG-A130SW camera (Figure 4c) captures a wide range of wavelengths in a single image, allowing flexibility in acquiring data at specific wavelengths through targeted filters (see Figure 6a). On the other hand, the ANT SWIR multispectral snapshot camera (Figure 4b) is effective in constructing a paired dataset for each wavelength because it simultaneously acquires data in each wavelength region for the given nine bands. This has the disadvantage of lowering the resolution. Figure 6b illustrates the data acquired with this camera.

Figure 6.

Examples of SWIR-range data: (a) SWIR-range data captured using a standard SWIR camera and (b) SWIR-range data acquired using a snapshot SWIR camera.
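The resolution trade-off of the snapshot camera can be illustrated with a minimal sketch. It assumes, purely for illustration, that the nine bands are laid out in a repeating 3 × 3 filter tile (the text only states that the filter is Bayer-like); the function name `split_mosaic` and the toy frame are hypothetical. Each band is recovered by strided sampling, so every band image has one third of the sensor resolution along each axis.

```python
def split_mosaic(raw, tile=3):
    """Split a mosaic frame into tile*tile band images by strided sampling.

    raw: 2D list of pixel values whose height and width are multiples of `tile`.
    Returns the band images in row-major order of the (assumed) filter tile.
    """
    height, width = len(raw), len(raw[0])
    if height % tile or width % tile:
        raise ValueError("frame size must be a multiple of the tile size")
    bands = []
    for dy in range(tile):
        for dx in range(tile):
            # Every tile-th pixel starting at offset (dy, dx) belongs to one band.
            bands.append([row[dx::tile] for row in raw[dy::tile]])
    return bands


# Toy 6x6 frame in which each pixel value encodes its position inside the tile.
raw = [[(y % 3) * 3 + (x % 3) for x in range(6)] for y in range(6)]
bands = split_mosaic(raw)
```

In this toy case the 6 × 6 frame yields nine 2 × 2 band images, which mirrors why simultaneous acquisition of all bands comes at the cost of spatial resolution.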

For the fruit and vegetable dataset, we captured RGB and SWIR images synchronously. In contrast, for the human vs. others dataset, we utilized eight visible-range and SWIR-band images captured using an ANT SWIR camera. Since all objects in the scenes were stationary, no calibration or pixel-wise alignment techniques were required.

Additionally, the data were collected under two distinct lighting conditions. Fruit and vegetable images were acquired under natural sunlight, whereas human and mannequin datasets were captured under lighting designed to simulate solar radiation. Although the absolute intensity of the reflected light varied due to factors such as angle, distance from the light source, and acquisition time, the relative intensity differences remained consistent across the scenes.

3.2. Material Analysis and Spectral Band Selection

In this study, we proposed an approach to address the challenge of differentiating objects with similar appearances by using SWIR wavelengths to analyze material-specific characteristics. RGB cameras, which rely on visible spectrum wavelengths, often struggle with this task. However, by leveraging SWIR wavelengths absorbed by moisture, we enabled robust differentiation between materials, allowing us to distinguish a mannequin from a human, even when their appearances are similar.

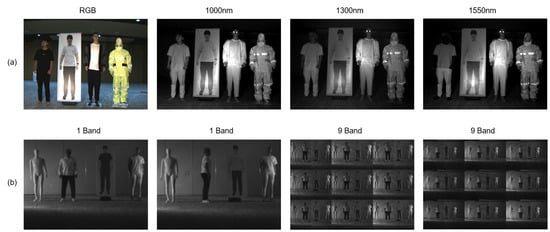

As shown in Figure 7a, a mannequin and a human have highly similar appearances. Using conventional object detection models, such as YOLO [32] and DETR [33], they are both detected as humans. Although fine-tuning could enable the models to distinguish them based on subtle differences in appearance, this would only cover the specific situations represented in the training dataset and would not generalize well. In addition, if the distance from the object increases or if the objects are designed to look very similar, it becomes impossible to distinguish them using only information from the visible spectrum. Therefore, additional information is needed to differentiate objects with similar appearances. To obtain it, we used data acquired from the SWIR camera: the reflection intensity values for each wavelength were extracted, and the differences were analyzed according to the characteristics of the objects. Because reflectivity varies with the presence of moisture, we gathered intensity values for areas containing moisture and analyzed the relative differences between wavelengths.

Figure 7.

Zero-shot object detection on the Human vs. Others Dataset: (a) dataset image, (b) results with YOLO [32], and (c) results with DETR [33]. Both models detect each object as a “person” with high confidence scores, indicating that mannequins share significant visual similarities with humans.

Spectral Band Selection: Inspired by the paper of Manakkakudy et al. [1] on classifying waste materials using SWIR reflectance measurements, we used the properties of water to distinguish between real and fake objects. We looked for wavelengths that had a direct effect on water and humidity. The 1095 nm wavelength is part of the NIR spectrum and shows weak interaction with water, allowing light to pass through moisture with minimal absorption. In contrast, the 1550 nm and 1580 nm wavelengths exhibit stronger absorption characteristics due to water, creating a clear distinction between human and mannequin skin. The 1345 nm wavelength can be considered to have an absorption characteristic that is intermediate between 1095 nm and 1580 nm, showing some water absorption properties in moisture analysis without the strong absorption characteristics of 1580 nm.

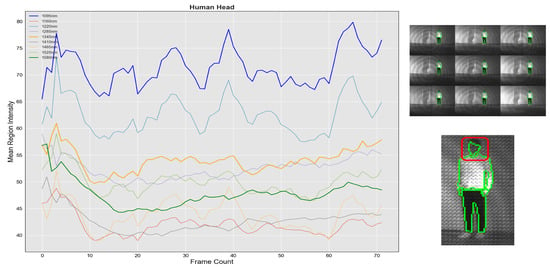

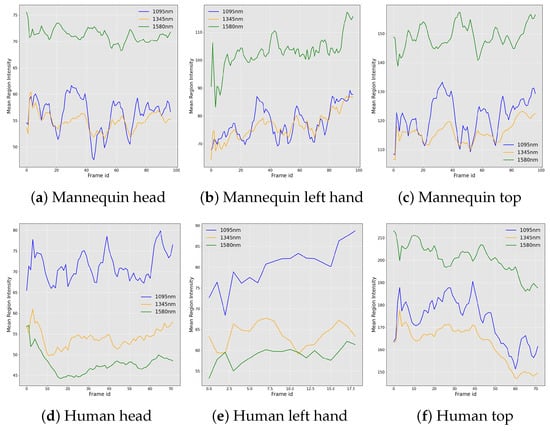

To validate these findings, regions of each image were annotated with polygons, covering areas such as the head, clothing, arms, and legs. For the head areas of both the mannequin and the person, pixel values were extracted, and the average intensity of the region was calculated for every frame of the data and plotted per frame (Figure 8). Although the absolute intensity values differed depending on the lighting, the trend in relative intensity across the analyzed wavelengths remained similar. Our analysis revealed that the key difference lies in their interaction with moisture: human skin contains water, while mannequin skin does not. At 1095 nm (shown by the blue line), light passes through with minimal absorption, while, at 1580 nm (shown by the green line), light is strongly absorbed by moisture. This creates a distinct pattern: mannequin skin shows high intensity at 1580 nm due to the absence of moisture, while human skin shows lower intensity at this wavelength due to water absorption. We conducted a detailed comparison of the 1095 nm and 1580 nm wavelengths, including the 1345 nm wavelength as an intermediate reference point to help validate our observations about moisture-based discrimination.

Figure 8.

Region-based intensity analysis of the human head in the short-wave infrared (SWIR) range. Key wavelengths at 1095 nm (blue) and 1580 nm (green), serving as discriminators, and an intermediate wavelength at 1345 nm (yellow) are highlighted with bold colored lines.
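The region-based averaging described above can be sketched as follows. This is a dependency-free illustration rather than the exact procedure used in this study: the ray-casting polygon test, the function names, and the toy band and polygon are all assumptions made for the example.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if the point (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def region_mean(band, polygon):
    """Mean intensity of the pixels whose centers fall inside the polygon."""
    values = [
        band[row][col]
        for row in range(len(band))
        for col in range(len(band[0]))
        if point_in_polygon(col + 0.5, row + 0.5, polygon)
    ]
    if not values:
        raise ValueError("polygon covers no pixel centers")
    return sum(values) / len(values)


# Toy 4x4 single-wavelength band with a distinct 2x2 patch in the middle.
band = [
    [10, 10, 10, 10],
    [10, 50, 70, 10],
    [10, 90, 30, 10],
    [10, 10, 10, 10],
]
head_polygon = [(1, 1), (3, 1), (3, 3), (1, 3)]  # hypothetical (x, y) vertices
mean_head = region_mean(band, head_polygon)
```

Repeating this computation per wavelength and per frame would give one curve per band, which is the kind of per-frame average intensity plot that Figure 8 presents.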

Figure 9 compares three wavelengths for the head, arms, and clothing of a human and a mannequin. It can be seen that the relative intensity difference between the 1095 nm wavelength and the 1580 nm wavelength is reversed due to the moisture difference. Similarly, as shown in Figure 9b,e, the arm areas of the human and mannequin display a phenomenon similar to that observed in the head, due to the difference between skin and plastic. In contrast, Figure 9c,f show the clothing areas, where both the human and mannequin are wearing similar materials, indicating no relative difference between wavelengths.

Figure 9.

Region-based intensity analysis for mannequin and human subjects: (a–c) show the intensity analysis for different regions of the mannequin (head, left hand, and body), while (d–f) present the corresponding analysis for a human subject.
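One simple way to summarize the reversal between the 1095 nm and 1580 nm intensities is a normalized difference of the two region means. The index below is an illustrative assumption and is not a quantity defined in this study; the function name and the example intensities are hypothetical and are not measurements from our data. Normalizing by the sum makes the value insensitive to a common scaling of both bands, which matches the observation that absolute intensity varies with lighting while the relative trend is stable.

```python
def water_absorption_index(i_1095, i_1580):
    """Normalized difference between a weakly and a strongly water-absorbed band.

    Positive values mean the 1580 nm band is darker than the 1095 nm band,
    which is consistent with moisture; negative values mean the reverse.
    """
    total = i_1095 + i_1580
    if total == 0:
        return 0.0
    return (i_1095 - i_1580) / total


# Illustrative region means only (hypothetical, not measured values).
skin_like = water_absorption_index(120.0, 60.0)
plastic_like = water_absorption_index(90.0, 110.0)

# Scaling both bands by the same factor leaves the index unchanged.
skin_like_dimmer = water_absorption_index(60.0, 30.0)
```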

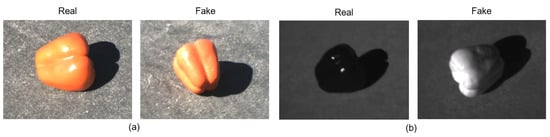

Additionally, we conducted experiments on various objects to differentiate between real and artificial fruits and vegetables. Using a Crevis HG-A130SW camera with a 1550 nm bandpass filter, we captured images that leveraged insights from our previous experiments. At this wavelength, moisture absorption causes real fruits/vegetables to appear darker due to minimal reflection, whereas artificial fruits/vegetables appear brighter due to higher reflectance. Figure 10 provides example images of both real and artificial fruits/vegetables; while conventional visible-spectrum images make it challenging to distinguish between them, SWIR images reveal a clear distinction between real and artificial objects.

Figure 10.

Visible- (a) and SWIR-range (b) imaging examples of real and artificial fruits/vegetables data.

4. Experiments

4.1. Datasets

To test our material-related observations, we utilized two datasets, one for classification and another for object detection.

- Fruit and Vegetable Dataset (Classification): This dataset consists of paired visible-spectrum RGB and SWIR images of fruits and vegetables, captured using a Crevis HG-A130SW camera, as we detailed in Section 3.2. The dataset encompasses a variety of real and artificial fruits and vegetables, including bell peppers, bananas, carrots, and oranges. Three real and three artificial samples were acquired for each fruit type, resulting in a total of 24 objects. For each object, RGB–SWIR paired images were captured, with data from two objects used for training and the remaining object’s image used for inference. Figure 11 showcases examples from this dataset (the RGB–SWIR Fruit and Vegetable Dataset is publicly available for research purposes at the following link: https://huggingface.co/datasets/STL-Yonsei/SWIR-Fruit_and_Vegetable_Dataset (accessed on 25 November 2024)).

Figure 11. Visible- (a) and SWIR (b)-range fruits/vegetables dataset examples.

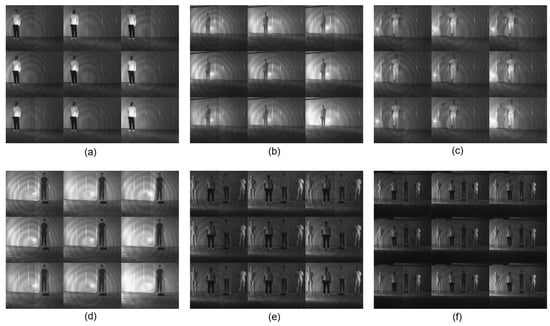

- Human vs. Others Dataset (Object Detection): This dataset comprises paired data across different wavelengths in both RGB and SWIR ranges, captured using an ANT SWIR multispectral snapshot camera. The objects included in this dataset are a person, a fabric mannequin, a plastic mannequin, and a banner with a human image on it. Data were captured for each object individually and in scenarios where all objects were present within a single image. As depicted in Figure 12, the individually captured data were used for training, while images with all objects were used as inference data.

Figure 12. Eight visible and SWIR-range band image examples of the Human vs. Others Dataset. The figures shown in (a–d) correspond to examples of a human, a cotton mannequin, a plastic mannequin, and a banner object. Figures (e,f) depict test images featuring all objects together in a single scene.
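The object-level split of the Fruit and Vegetable Dataset can be sketched as below. The sketch assumes that the held-out object is chosen by a fixed sample index; the text only states that two objects per group are used for training and the remaining one for inference, so the index, the field names, and the function name are hypothetical. Splitting by physical object rather than by image keeps every test object unseen during training.

```python
from itertools import product

FRUITS = ["bell pepper", "banana", "carrot", "orange"]
KINDS = ["real", "artificial"]


def split_objects(objects, held_out_sample=2):
    """Hold out one physical object per (fruit, kind) group; train on the rest."""
    train = [o for o in objects if o["sample"] != held_out_sample]
    test = [o for o in objects if o["sample"] == held_out_sample]
    return train, test


# 4 fruit types x 2 kinds x 3 samples = 24 objects, as described in the text.
objects = [
    {"fruit": fruit, "kind": kind, "sample": sample}
    for fruit, kind, sample in product(FRUITS, KINDS, range(3))
]
train, test = split_objects(objects)
```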


4.2. Material Classification

We demonstrated that materials can be distinguished by analyzing their reflection properties in the SWIR range through image processing techniques. Beyond this approach, using deep learning to learn material-specific reflection differences could enable material classification that standard RGB cameras, which capture only visible spectrum data, cannot perform. To validate this, we conducted a classification experiment using our fruits and vegetables dataset with ResNet-18 as our model. While more advanced models are now available, we opted for this earlier CNN-based model to show that even a previous-generation model can effectively learn these features. ResNet’s residual learning framework mitigates performance degradation as network depth increases, achieving a lightweight yet effective structure with 18 layers. We fine-tuned a pre-trained ResNet-18 using our collected data.

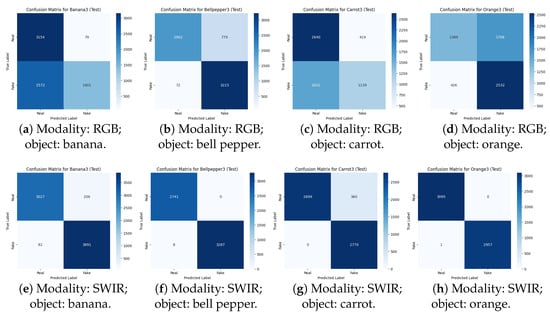

Figure 13a–d presents confusion matrices for each class using RGB data. There were several misclassifications: for instance, bananas and carrots were often classified as real when they were fake, while oranges and sweet peppers were sometimes incorrectly classified as fake when they were real. Though distinguishing real from fake is challenging for humans with visible-spectrum images, deep learning showed some ability to discern subtle appearance differences, achieving partial success in controlled experimental conditions. However, introducing objects lacking clear visual distinctions or testing under various environmental conditions would likely decrease accuracy.

Figure 13.

Confusion matrices of the RGB and SWIR image per-object classification.

In contrast, Figure 13e–h display confusion matrices based on SWIR data, showing near-perfect accuracy. Unlike the RGB results, the SWIR data produced minimal errors, with fake objects rarely misclassified as real, except in the case of bananas. The banana errors likely arose because the artificial banana in the test data was a different model type from those in the training data. Further training with a wider range of real and fake objects is expected to improve accuracy.

Table 1 and Table 2 present the per-object classification results for RGB and SWIR, respectively. In the case of SWIR, the results are close to one for most objects except for the banana, clearly demonstrating that SWIR is significantly better at distinguishing materials than RGB.

Table 1.

Per-object classification result using RGB camera.

Table 2.

Per-object classification result using SWIR camera.

Table 3 compares the accuracy of RGB and SWIR on the exact same objects. SWIR achieves nearly perfect accuracy, correctly identifying almost all cases. In contrast, the model trained on RGB data achieved an accuracy of only around 77%; it struggled to distinguish objects like bananas, where there is little visible difference between real and fake.

Table 3.

Comparison of RGB and SWIR classification accuracy.
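For reference, the relation between a confusion matrix and the accuracy values compared above can be written as a short sketch. The counts below are hypothetical and chosen only to make the arithmetic easy to follow; they are not results from this study, and the function names are assumptions.

```python
def accuracy(confusion):
    """Overall accuracy; confusion[i][j] counts true class i predicted as class j."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def per_class_recall(confusion):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


# Hypothetical counts. Rows: true (real, fake); columns: predicted (real, fake).
confusion = [
    [8, 2],
    [1, 9],
]
overall = accuracy(confusion)         # (8 + 9) / 20
recalls = per_class_recall(confusion)  # [8 / 10, 9 / 10]
```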

4.3. Object Detection

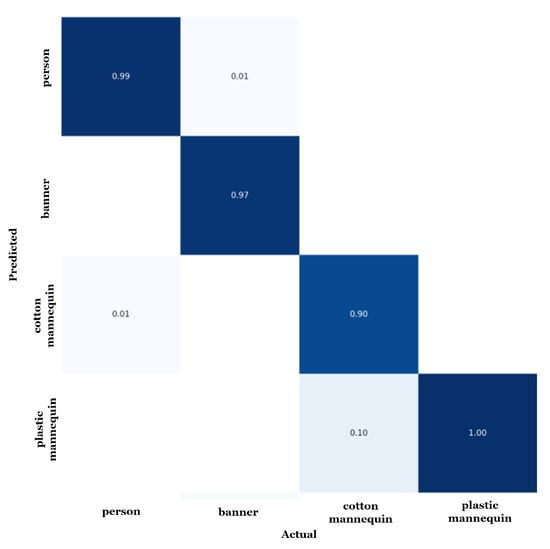

To demonstrate that SWIR image data can be used for object detection as well as classification, we trained the YOLO v7 model [34]. Because YOLO is normally used with RGB data, we adapted it to SWIR images by representing the three selected wavelengths as a three-channel image, placing the 1095 nm, 1345 nm, and 1580 nm bands in the R, G, and B channels, respectively. After training and running inference with the YOLO v7 model on the acquired data, we obtained a confusion matrix, as shown in Figure 14. We used precision, recall, and mAP (mean Average Precision) metrics to evaluate our model’s results (Table 4).
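The channel mapping can be sketched as follows. The linear rescaling to 8 bits and the `max_value` parameter (here a hypothetical 10-bit sensor range) are assumptions added for the example; the text specifies only which wavelength goes into which channel.

```python
def stack_bands(b1095, b1345, b1580, max_value):
    """Build an H x W image of (R, G, B) tuples from three single-band images.

    The 1095 nm, 1345 nm, and 1580 nm bands fill the R, G, and B slots.
    Values are rescaled linearly to 0..255 (an assumption of this sketch).
    """
    def to_u8(value):
        return max(0, min(255, round(255 * value / max_value)))

    return [
        [(to_u8(r), to_u8(g), to_u8(b)) for r, g, b in zip(row_r, row_g, row_b)]
        for row_r, row_g, row_b in zip(b1095, b1345, b1580)
    ]


# Toy 1x2 bands with a hypothetical 10-bit range (0..1023).
pseudo_rgb = stack_bands(
    b1095=[[1023, 0]],
    b1345=[[511, 0]],
    b1580=[[0, 1023]],
    max_value=1023,
)
```

With this layout, a region that is bright at 1095 nm and dark at 1580 nm appears reddish in the pseudo-color image, while the reverse appears bluish, so a detector built for RGB input can pick up the moisture-related contrast as a color cue.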

Figure 14.

Confusion matrix of human vs. others object detection evaluation.

Table 4.

Object detection results using SWIR wavelengths.
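As a reminder of how these detection metrics are formed, the sketch below computes average precision for a single class from ranked detections. It uses all-point interpolation of the precision-recall curve, which is one common definition and is an assumption here, since the exact variant computed by the evaluation code is not stated in the text. The detection list is hypothetical, and the matching of detections to ground truth (for example by an IoU threshold) is assumed to have been done beforehand.

```python
def average_precision(scored_hits, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class.

    scored_hits: list of (confidence, is_true_positive) pairs.
    num_ground_truth: number of annotated objects of this class.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for _, hit in ranked:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles under the curve wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical detections for one class with three annotated objects.
detections = [(0.9, True), (0.8, True), (0.7, False), (0.6, True)]
ap = average_precision(detections, num_ground_truth=3)
perfect = average_precision([(0.9, True), (0.5, True)], num_ground_truth=2)
```

The mAP is then the mean of such per-class values.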

These results indicate that SWIR-range imaging, combined with deep learning methods, is effective for detecting and distinguishing objects in difficult environments. The dataset used in our experiments included objects with some visual differences and was acquired in a controlled environment, meaning that these objects could potentially be distinguished using conventional RGB images. However, when appearances are highly similar or objects are farther away, as in our classification dataset, distinguishing subtle differences becomes challenging with RGB images alone. Additionally, as the number of object types and environmental variability in the dataset increases, RGB imaging may struggle to differentiate materials effectively, unlike SWIR. Thus, we demonstrate that, while objects with similar appearances are indistinguishable in RGB, SWIR imaging enables their differentiation by capturing physical characteristics. Furthermore, these characteristics can be learned by object detection models, extending the utility of SWIR in complex detection tasks.

5. Discussion

Most of the studies discussed in this work focus on distinguishing the materials themselves, such as classifying steel and wood. In contrast, our approach emphasizes leveraging material differences to distinguish objects with similar appearances without focusing on their exact material. This is especially critical in the field of autonomous driving, where accurately identifying humans is a vital safety issue. By utilizing a SWIR camera, we demonstrated the ability to distinguish between objects that are indistinguishable in the visible spectrum. This is achieved by exploiting differences in material reflectivity within the SWIR range, showcasing SWIR imaging’s transformative potential for safety-critical applications.

An essential contribution of this study is the assessment of the SWIR range wavelengths for developing an object detection model that harnesses material reflectivity rather than visual features. Using a diverse dataset, including mannequins, humans, and banners, our model effectively recognized material-based distinctions, even in scenarios where appearance cues were insufficient. To further validate this capability, we created and publicly released a novel fruit and vegetable dataset comprising visually similar objects. The classification task on this dataset confirmed that SWIR imaging enables material-based differentiation in a manner not achievable with visible-spectrum data.

To our knowledge, this work presents the first publicly available SWIR dataset, addressing a notable gap in the research community. Due to the lack of existing resources, we independently acquired data for both model training and inference. Additionally, while ideal dataset construction would involve objects with closely matching material properties and appearances, such as wax mannequins alongside human subjects, practical constraints made this infeasible at scale. Instead, we used standard mannequins, banners, and people, acknowledging that this introduces some appearance-based differences. However, our additional fruit and vegetable dataset experiment, where objects share identical visual appearances, strongly supports our claim that the model primarily learns distinctions based on material properties.

6. Conclusions

In conclusion, this study demonstrates the effectiveness of SWIR (short-wave infrared) imaging for material classification, particularly in distinguishing objects that appear similar in the visible spectrum but have different physical properties. By examining objects like mannequins, humans, and banners, we highlighted how SWIR imaging can enhance object recognition in applications such as autonomous driving, where accurate differentiation is critical for safety. Our results affirm that the unique reflectance characteristics of materials in the SWIR range allow for a level of differentiation that visible-light imaging alone cannot achieve. We further validated our approach through object classification experiments involving real and artificial fruits and vegetables, which confirmed the reliability of SWIR data for material-based recognition. These findings suggest that the integration of SWIR imaging into vision systems could significantly reduce false detections in complex environments. By training a deep learning model on both classification and object detection tasks using SWIR data, we demonstrated that this technology can effectively learn and apply material-specific features, advancing material recognition capabilities in dynamic settings.

As we continue to refine and expand our datasets, future work could focus on optimizing SWIR-based models to handle diverse environments and object types. The scalability of our approach, along with its potential for cross-domain applications in areas like agriculture, manufacturing, and robotics, underscores the versatility of SWIR imaging as a robust tool for material differentiation across varied fields. Furthermore, we plan to conduct experiments to evaluate our method under challenging environmental conditions, including scenarios with fog and fire smoke.

Author Contributions

Conceptualization, Y.N. and S.K.; Methodology, H.S., S.Y., Y.J. and Y.N.; Software, H.S. and I.P.; Validation, H.S.; Formal analysis, H.S.; Data curation, H.S., S.Y., Y.J., I.P. and H.J.; Writing—original draft, H.S. and S.Y.; Writing—review & editing, Y.N.; Visualization, S.Y. and Y.N.; Supervision, Y.N. and S.K.; Project administration, S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korea Evaluation Institute of Industrial Technology (KEIT) grant, funded by the Korea government (MOTIE) (RS-2022-00154651, 3D semantic camera module development capable of material and property recognition).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in the RGB–SWIR Fruit and Vegetable Dataset at https://huggingface.co/datasets/STL-Yonsei/SWIR-Fruit_and_Vegetable_Dataset, accessed on 25 November 2024.

Acknowledgments

This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (RS-2023-00236245, Development of Perception/Planning AI SW for Seamless Autonomous Driving in Adverse Weather/Unstructured Environment). Additionally, this research was supported by the BK21 FOUR (Fostering Outstanding Universities for Research) program funded by the Ministry of Education (MOE) of Korea and the National Research Foundation (NRF) of Korea.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Manakkakudy, A.; De Iacovo, A.; Maiorana, E.; Mitri, F.; Colace, L. Waste Material Classification: A Short-Wave Infrared Discrete-Light-Source Approach Based on Light-Emitting Diodes. Sensors 2024, 24, 809.

2. Sumon, B.U.; Muselet, D.; Xu, S.; Trémeau, A. Multi-View Learning for Material Classification. J. Imaging 2022, 8, 186.

3. Dong, X.; Cappuccio, M.L. Applications of Computer Vision in Autonomous Vehicles: Methods, Challenges and Future Directions. arXiv 2024, arXiv:2311.09093.

4. Guo, Y.; Jiang, H.; Qi, X.; Xie, J.; Xu, C.Z.; Kong, H. Unsupervised visible-light images guided cross-spectrum depth estimation from dual-modality cameras. arXiv 2022, arXiv:2205.00257.

5. Adamopoulos, E. Learning-based classification of multispectral images for deterioration mapping of historic structures. J. Build. Pathol. Rehabil. 2022, 6, 41.

6. Prananto, J.A.; Minasny, B.; Weaver, T. Near infrared (NIR) spectroscopy as a rapid and cost-effective method for nutrient analysis of plant leaf tissues. Adv. Agron. 2020, 164, 1–49.

7. Hively, W.D.; Lamb, B.T.; Daughtry, C.S.; Serbin, G.; Dennison, P.; Kokaly, R.F.; Wu, Z.; Masek, J.G. Evaluation of SWIR crop residue bands for the Landsat Next mission. Remote Sens. 2021, 13, 3718.

8. He, X.; Zhu, J.; Li, P.; Zhang, D.; Yang, L.; Cui, T.; Zhang, K.; Lin, X. Research on a Multi-Lens Multispectral Camera for Identifying Haploid Maize Seeds. Agriculture 2024, 14, 800.

9. Román, A.; Tovar-Sánchez, A.; Olivé, I.; Navarro, G. Using a UAV-Mounted multispectral camera for the monitoring of marine macrophytes. Front. Mar. Sci. 2021, 8, 722698.

10. Delaney, J.K.; Thoury, M.; Zeibel, J.G.; Ricciardi, P.; Morales, K.M.; Dooley, K.A. Visible and infrared imaging spectroscopy of paintings and improved reflectography. Herit. Sci. 2016, 4, 1–10.

11. Luo, Y.; Liu, Y.; Wei, Q.; Strlič, M. NIR spectroscopy in conjunction with multivariate analysis for non-destructive characterization of Xuan paper. Herit. Sci. 2024, 12, 175.

12. Liang, H. Advances in multispectral and hyperspectral imaging for archaeology and art conservation. Appl. Phys. A 2012, 106, 309–323.

13. Wang, M.; Shi, W.; Jiang, L.; Voss, K. NIR-and SWIR-based on-orbit vicarious calibrations for satellite ocean color sensors. Opt. Express 2016, 24, 20437–20453.

14. Park, H.; Choi, J. Mineral Detection Using Sharpened VNIR and SWIR Bands of Worldview-3 Satellite Imagery. Sustainability 2021, 13, 5518.

15. Yu, B.; Chen, Y.; Cao, S.Y.; Shen, H.L.; Li, J. Three-channel infrared imaging for object detection in haze. IEEE Trans. Instrum. Meas. 2022, 71, 1–13.

16. Pinchon, N.; Cassignol, O.; Nicolas, A.; Bernardin, F.; Leduc, P.; Tarel, J.P.; Brémond, R.; Bercier, E.; Brunet, J. All-weather vision for automotive safety: Which spectral band? In Advanced Microsystems for Automotive Applications 2018: Smart Systems for Clean, Safe and Shared Road Vehicles, 22nd ed.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 3–15.

17. Bhadoriya, A.S.; Vegamoor, V.; Rathinam, S. Vehicle detection and tracking using thermal cameras in adverse visibility conditions. Sensors 2022, 22, 4567.

18. St-Laurent, L.; Mikhnevich, M.; Bubel, A.; Prévost, D. Passive calibration board for alignment of VIS-NIR, SWIR and LWIR images. Quant. InfraRed Thermogr. J. 2017, 14, 193–205.

19. Sheeny, M.; Wallace, A.; Emambakhsh, M.; Wang, S.; Connor, B. POL-LWIR vehicle detection: Convolutional neural networks meet polarised infrared sensors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1247–1253.

20. Judd, K.M.; Thornton, M.P.; Richards, A.A. Automotive sensing: Assessing the impact of fog on LWIR, MWIR, SWIR, visible, and lidar performance. In Proceedings of the Infrared Technology and Applications XLV. SPIE, Baltimore, MD, USA, 14–18 April 2019; Volume 11002, pp. 322–334.

21. Jiang, J.; Liu, F.; Xu, Y.; Huang, H. Multi-spectral RGB-NIR image classification using double-channel CNN. IEEE Access 2019, 7, 20607–20613.

22. Saponaro, P.; Sorensen, S.; Kolagunda, A.; Kambhamettu, C. Material classification with thermal imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4649–4656.

23. Xue, J.; Zhang, H.; Dana, K.; Nishino, K. Differential angular imaging for material recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 764–773.

24. Pandey, D.K.; Pandey, S. Ultrasonics: A Technique of Material Characterization. In Acoustic Waves; InTech: Rijeka, Croatia, 2010; pp. 397–430.

25. Azimi, S.M.; Britz, D.; Engstler, M.; Fritz, M.; Mücklich, F. Advanced steel microstructural classification by deep learning methods. Sci. Rep. 2018, 8, 2128.

26. Ratnayake, T.; Pollwaththage, N.; Nettasinghe, D.; Godaliyadda, G.; Wijayakulasooriya, J.; Ekanayake, M. Material based acoustic signal classification-A subspace-based approach. In Proceedings of the 2013 IEEE International Conference of IEEE Region 10 (TENCON 2013), Xi’an, China, 22–25 October 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–4.

27. Weiß, J.; Santra, A. One-shot learning for robust material classification using millimeter-wave radar system. IEEE Sensors Lett. 2018, 2, 1–4.

28. Ponti, M.; Mascarenhas, N. Material analysis on noisy multispectral images using classifier combination. In Proceedings of the 6th IEEE Southwest Symposium on Image Analysis and Interpretation, Lake Tahoe, NV, USA, 28–30 March 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 1–5.

29. Jurado, J.M.; Cárdenas, J.L.; Ogayar, C.J.; Ortega, L.; Feito, F.R. Semantic segmentation of natural materials on a point cloud using spatial and multispectral features. Sensors 2020, 20, 2244.

30. Han, D.K.; Ha, J.W.; Kim, J.O. Material classification based on multi-spectral nir band image. In Proceedings of the 2022 37th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), Phuket, Thailand, 5–8 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 940–942.

31. Da Silva, W.; Habermann, M.; Shiguemori, E.H.; do Livramento Andrade, L.; de Castro, R.M. Multispectral image classification using multilayer perceptron and principal components analysis. In Proceedings of the 2013 BRICS Congress on Computational Intelligence and 11th Brazilian Congress on Computational Intelligence, Ipojuca, Brazil, 8–11 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 557–562.

32. Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

33. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

34. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).