Abstract

Mobile robot navigation is a critical aspect of robotics, with applications spanning from service robots to industrial automation. However, navigating in complex and dynamic environments poses many challenges, such as avoiding obstacles, making decisions in real time, and adapting to new situations. Reinforcement learning (RL) has emerged as a promising approach that enables robots to learn navigation policies from their interactions with the environment. However, the application of RL methods to real-world tasks such as mobile robot navigation, and the evaluation of their performance under various training–testing settings, have not been sufficiently researched. In this paper, we design an evaluation framework that investigates an RL algorithm’s generalization capability on unseen scenarios, in terms of learning convergence and success rates, by transferring policies learned in simulation to physical environments. To achieve this, we designed a simulated environment in Gazebo for training the robot over a large number of episodes. The training environment closely mimics the typical indoor scenarios that a mobile robot can encounter, replicating real-world challenges. For evaluation, we designed physical environments with and without unforeseen indoor scenarios. The evaluation framework outputs statistical metrics, which we then use to conduct an extensive study of a deep RL method, namely proximal policy optimization (PPO). The results provide valuable insights into the strengths and limitations of the method for mobile robot navigation. Our experiments demonstrate that the model trained in simulation can be deployed to the previously unseen physical world with a success rate of over 70%. The insights gained from our study can assist practitioners and researchers in selecting suitable RL approaches and training–testing settings for their specific robotic navigation tasks.

1. Introduction

Mobile robot navigation is a fundamental research topic in the realm of robotics, with applications across a wide range of domains [1,2]. The goal of enabling robots to autonomously navigate through intricate and dynamic environments has spurred the exploration of various methodologies, among which reinforcement learning (RL) has emerged as a promising paradigm. The utilization of RL techniques for mobile robot navigation builds upon a rich body of research. Prior studies have demonstrated the potential of RL in addressing navigation challenges.

Among various RL methods, proximal policy optimization (PPO) has gained popularity due to its efficiency and robustness [3,4,5,6]. PPO is a policy gradient method that updates the policy by taking a step that stays close to the previous policy, ensuring a bounded policy change. This helps to avoid large policy updates that can harm the algorithm’s performance or cause instability. Several previous works have explored the use of PPO for mobile robot navigation, with different aspects and objectives. For instance, the authors of [7] presented a qualitative comparison of the most recent autonomous mobile robot navigation techniques based on deep reinforcement learning, including PPO. However, according to [8,9], PPO struggles in continuous action spaces and has a slow convergence rate.

According to the literature, deep reinforcement learning (DRL) has the potential to enable robots to learn complex behaviors from high-dimensional sensory data without explicit programming, and deep Q-network (DQN) [10,11,12,13] and deep deterministic policy gradient (DDPG) [14,15,16,17] are two promising DRL algorithms for mobile robot navigation. For instance, the authors of [18] devised the dynamic epsilon adjustment method integrated with DRL to reduce the frequency of non-ideal agent behaviors and therefore improved the control performance (i.e., goal rate). The authors of [19] proposed an improved deep deterministic policy gradient (DDPG) path planning algorithm incorporating sequential linear path planning (SLP) to address the challenge of navigating mobile robots through large-scale dynamic environments. The proposed algorithm utilized the strengths of SLP to generate a series of sub-goals for the robot to follow, while DDPG was used to refine the path and avoid obstacles in real time. This approach also had a better success rate than the traditional DDPG algorithm and the A+DDPG algorithm [20]. The authors of [21] proposed a new DRL algorithm called a dueling Munchausen deep Q network (DM-DQN) for robot path-planning. DM-DQN combined the advantages of dueling networks and Munchausen deep Q-learning to improve exploration and convergence speed. In static environments, DM-DQN achieved an average path length that was shorter than both DQN and Dueling DQN. In dynamic environments, DM-DQN achieved a success rate that was higher than DQN and Dueling DQN. The authors of [22] proposed a global path planning algorithm for mobile robots based on the prior knowledge of PPoAMR, a heuristic method that used prior knowledge particle swarm optimization (PKPSO) [23,24,25]. They used PPoAMR to find the best fitness value for the path planning problem and then used PPO to optimize the path. 
Moreover, they showed that their algorithm can generate optimal and smooth paths for mobile robots in complex environments. However, as [26] pointed out, implementing DQN or any extended DQN algorithms on physical robots is more challenging. They are also often more resource-intensive. Moreover, DQN algorithms require extensive training data, are sensitive to environmental changes, and necessitate careful hyperparameter tuning. On the other hand, DDPG requires a large number of samples to converge to the optimal policy [27].

The authors of [28] proposed a generalized computation graph that integrates both model-free and model-based methods. Experiments with a simulated car and real-world RC car demonstrated the effectiveness of the approach. In recent years, there has been a growing interest in developing platforms that enable the evaluation of multi-agent reinforcement learning and general AI approaches. One such platform is SMART, introduced by [29], an open-source system designed for multi-robot reinforcement learning (MRRL). SMART integrated a simulation environment and a real-world multi-robot system to evaluate AI approaches. In a similar vein, the Arcade Learning Environment (ALE) was proposed by [30] as a platform for evaluating domain-independent AI. The ALE provided access to hundreds of Atari 2600 game environments, offering a rigorous testbed for comparing various AI techniques. The platform also includes publicly available benchmark agents and software for further research and development. However, these approaches require specialized hardware or resources, limiting accessibility for those with standard PC setups to conduct research from home.

Sampling efficiency and safety issues limit robot control in the real world. One solution is to train the robot control policy in a simulation environment and transfer it to the real world. However, policies trained in simulations often perform poorly in the real world due to imperfect modeling of reality in simulators [31].

The authors of [32] developed a training procedure, a set of actions available to the robot, a suitable state representation, and a reward function. The setup was evaluated using a simulated real-time environment. The authors compared a reference setup, different goal-oriented exploration strategies, and two different robot kinematics (holonomic and differential). With dynamic obstacles, the robot was able to reach the desired goal in 93% of the episodes. The task scenario was inspired by an indoor logistics setting, where one robot is situated in an environment with multiple obstacles and should execute a transport order. The robot’s goal was to travel to designated positions in the least amount of time, without colliding with obstacles or with the walls of the environment. However, this was all done in the simulated setting. The authors of [33] designed an automated evaluation framework for Rapidly-Exploring Random Tree (RRT) frontier detector for indoor space navigation, which addressed the performance verification aspect. However, the method being verified was not of the RL category. The authors of [34] also designed a simulation framework for training the robot agents. However, the framework was only designed for simulation purposes. These important works have set the stage for the examination of PPO in our study since the algorithm has a decent performance in robotic tasks and is easier to implement in mobile robots.

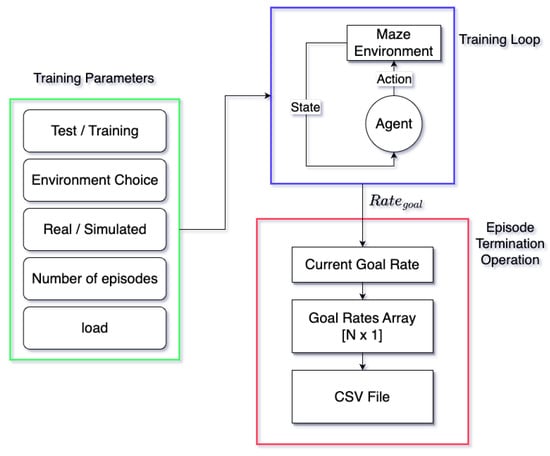

The domain of mobile robot navigation presents a multitude of complexities, including navigating through cluttered spaces, avoiding obstacles, and making decisions in real time. To address these challenges, this study employs an automated evaluation framework tailored for Turtlebot robots, designed to rigorously assess the performance of RL techniques in a controlled environment. The investigation is anchored in an extensive analysis, focusing on the PPO algorithm. This technique is scrutinized in a discrete action space. The contributions of this paper are as follows:

- We redesigned and implemented a personalized automated learning and evaluation framework based on an existing repository [34], allowing users to specify custom training and testing parameters for both simulated and physical robots. This makes the platform adaptable and accessible to a wider range of users with limited resources.

- To establish a robust benchmark for robotic research study, we design a simulated environment in Gazebo to train the agent. The training environment closely mimics typical indoor scenarios encountered by robots with common obstacles such as walls and barriers, replicating real-world challenges.

- Our implementation provides statistical metrics, such as goal rate, for detailed comparison and analysis, and can be extended to output additional data such as trajectory paths and robot LiDAR readings. The insights gleaned from this study can help practitioners and researchers with their specific robotic navigation tasks.

- We conducted extensive physical experiments to evaluate the real-world navigation performance under varied environment configurations. Our results show that the agent trained in simulation can achieve a success rate of over 70% in our physical environments. Simulated training is not restricted by physical constraints such as the robot’s battery power, and collects training data more efficiently. This finding demonstrates the value of simulated training with RL for real-world mobile navigation.

The organization of the paper is provided as follows. The algorithm background of PPO is presented in Section 2. The automated framework development and the integration of it with the PPO algorithm for robot navigation is presented in Section 3. The experimental set-up along with the set parameters for each environment is presented in Section 4. The results with relevant figures are provided in Section 5. Finally, Section 6 concludes the work.

2. PPO Algorithm Background

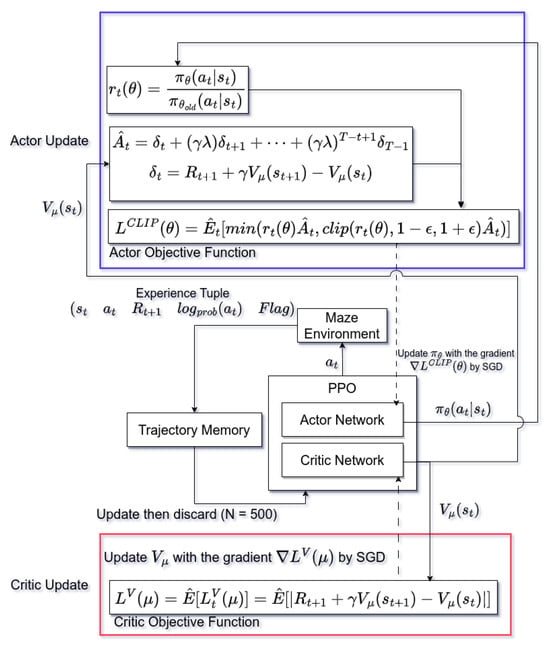

Schulman et al. [3] proposed the Proximal Policy Optimization (PPO) algorithm, which presents a novel approach to reinforcement learning that focuses on improving the stability and sample efficiency of policy optimization. PPO belongs to the family of policy gradient methods and aims to address some of the limitations of earlier algorithms like TRPO [35].

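PPO introduces the concept of a clipped surrogate objective function, which plays a central role in its stability. This function limits the extent to which the policy can change during each update, effectively preventing overly aggressive updates that can lead to policy divergence:

$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right]$$

where $\hat{\mathbb{E}}_t$ represents the empirical average across time $t$. $\epsilon$ is a hyperparameter. $\operatorname{clip}(\cdot)$ is the clipping function that restricts $r_t(\theta)$ into the interval $[1-\epsilon,\, 1+\epsilon]$. $r_t(\theta)$ denotes the probability ratio, which is the ratio between the current policy, $\pi_{\theta}$, and the policy from the previous update step, $\pi_{\theta_{\text{old}}}$:

$$r_t(\theta) = \frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}$$

$\hat{A}_t$ denotes the advantage estimator, which is defined as follows:

$$\hat{A}_t = \sum_{l=0}^{T-t-1} (\gamma\lambda)^{l}\, \delta_{t+l}, \qquad \delta_t = R_{t+1} + \gamma V_{\phi}(s_{t+1}) - V_{\phi}(s_t)$$

where $\gamma$ is the discount factor. $\lambda$ is the weighting parameter of the estimator, as in generalized advantage estimation [4]. $t$ is the current time step. $T$ is the total number of time steps in the collected trajectory. $R_{t+1}$ is the reward received in the next time step. $V_{\phi}(s_{t+1})$ is the value function of the next time step, and $V_{\phi}(s_t)$ is the current value function. Both functions are output by the critic network with parameter set $\phi$. By constraining policy changes, PPO ensures smooth learning and reliable convergence.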
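The two quantities described above can be illustrated with a minimal Python sketch. It is not the framework's implementation: it assumes a GAE-style advantage estimator as in [4], and the function names and the parameters `gamma`, `lam`, and `epsilon` are chosen here for illustration. `rewards[t]` is taken to be the reward received after acting at step t, and `values` holds one extra entry for the state reached after the last step.

```python
from typing import List, Sequence


def gae_advantages(rewards: Sequence[float], values: Sequence[float],
                   gamma: float, lam: float) -> List[float]:
    """Advantage estimates computed backwards over one trajectory.

    Assumption: len(values) == len(rewards) + 1, so values[t + 1] is the
    critic's estimate for the state reached after step t.
    """
    steps = len(rewards)
    advantages = [0.0] * steps
    running = 0.0
    for t in reversed(range(steps)):
        # One-step temporal-difference error.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Discounted, weighted sum of the current and later TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


def clipped_surrogate(ratios: Sequence[float], advantages: Sequence[float],
                      epsilon: float) -> float:
    """Empirical average of the clipped surrogate objective."""
    total = 0.0
    for ratio, advantage in zip(ratios, advantages):
        clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * advantage, clipped_ratio * advantage)
    return total / len(ratios)
```

Note that a ratio outside the clipping interval only stops contributing when moving further would increase the objective; when the unclipped term is the smaller one, it is kept, which is what makes the objective a pessimistic bound.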

Another crucial aspect of PPO is its use of importance sampling. This technique enables a balance between exploration and exploitation by adjusting the policy updates based on the ratio of probabilities between the new and old policies. This allows the agent to explore new actions while still staying close to its current policy, preventing excessive deviations. The PPO algorithm also boasts high sample efficiency, making it particularly suitable for applications where data collection is resource-intensive. It efficiently learns from a relatively small amount of data, which is vital for real-world scenarios where collecting extensive data can be costly or time-consuming.

4. Experimental Set-Up

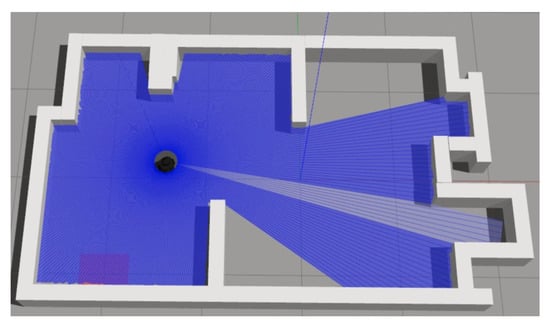

4.1. Source Simulated Maze

The simulated training environment used for this framework was created using the Gazebo simulator [37], which provides realistic robotic movements, a physics engine, and the generation of sensor data combined with noise. The source simulated maze, shown in Figure 8, is a customized map with a free-space area of approximately 82.75 m². This simulated maze is an approximate replica of one of the target mazes. For additional information regarding the parameters chosen for the training phase in this particular simulation environment, see Appendix B.

Figure 8.

The custom simulated environment. Dimension = 12.50 m × 8.00 m. Environment size ≈ 82.75 m².

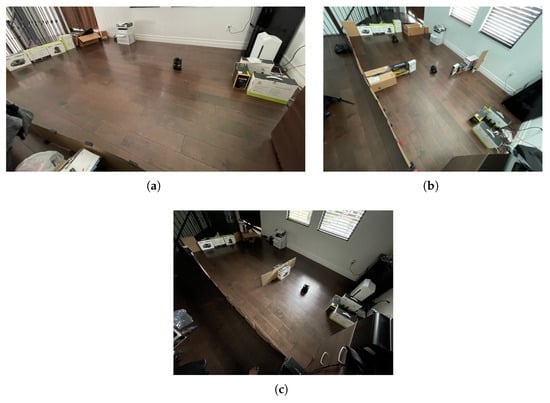

4.2. Testing Physical Environments

The first physical maze, shown in Figure 9a, is a customized map free of obstacles. The second physical maze, shown in Figure 9b, is another customized map that is similar to the simulated environment. Note this maze has walls. The third physical maze, shown in Figure 9c, is another customized map that has a slab of wall in the middle that allows the robot to pass through on both sides. These mazes will be the physical testing environments for the agent. For additional information regarding the parameters chosen for the training and testing phases in this particular physical environment, see Appendix C.

Figure 9.

(a) Physical Maze 1. Dimension = 12.50 m × 8.00 m. Environment size ≈ 82.75 m². (b) Physical Maze 2. Dimension = 12.50 m × 8.00 m. Environment size ≈ 82.75 m². (c) Physical Maze 3. Dimension = 12.50 m × 8.00 m.

5. Results Analysis

5.1. Simulation Training

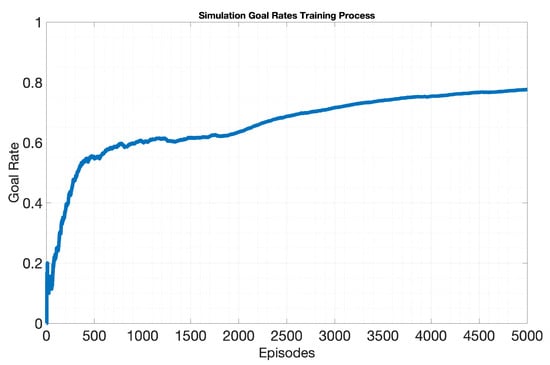

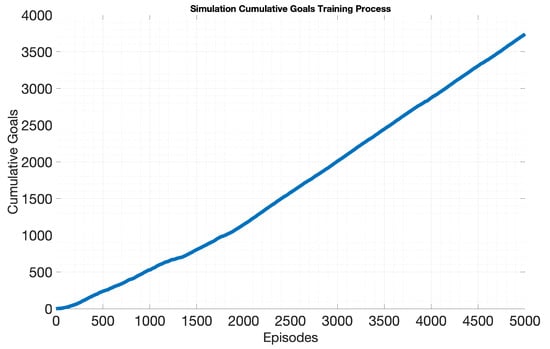

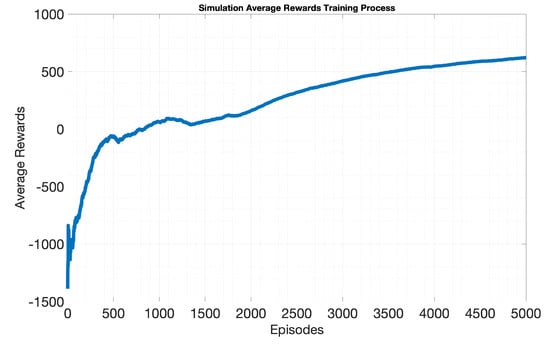

The agent was first trained in a simulated environment as shown in Figure 8 for 5000 episodes. This number of episodes was chosen because a reasonable performance was reached (a goal rate of 0.78). Figure 10, Figure 11 and Figure 12 show the success rate, the total number of goals reached, and the average rewards obtained over all training episodes. The x-axis in each of the figures represents the total number of episodes run, and the y-axis represents the respective metric being evaluated.

Figure 10.

The goal rate for all training episodes in the simulated environment with obstacles. The simulated environment, shown in Figure 8, was used. One of the advantages of simulated training is abundant training episodes without a time-consuming setup. After about 5000 episodes, the agent’s goal rate converged to about 79%.

Figure 11.

The total number of goals reached for all training episodes in the simulated environment with obstacles. The simulated environment, shown in Figure 8, was used. As the agent improves at reaching the target, the curve should become more linear, which is what is seen near the end of the 5000 episodes.

Figure 12.

Average rewards for all training episodes in the simulated environment with obstacles. As the agent improves at the task at hand, the average rewards should become more positive and converge to a value, which is shown here.

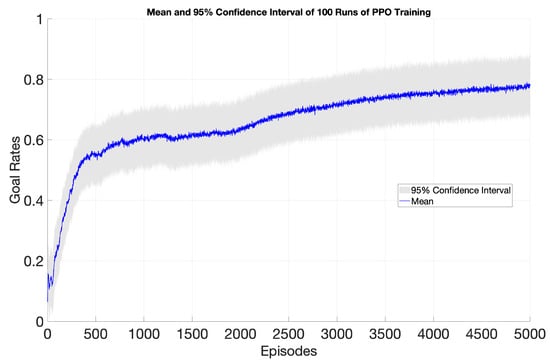

Together, the figures indicate sufficient performance: the agent reached a goal rate of approximately 79% at the end of the 5000-episode training, as demonstrated in Figure 10. In Figure 11, the line representing the total number of goals reached is almost at 45 degrees relative to the x-axis near the end of the training, which means that the agent reaches the goal in most episodes. In addition, the average rewards obtained became more positive and stabilized (the curve flattens in the positive region), as shown in Figure 12. The network weights at the end of this training were transferred to the environments shown in Figure 9 for physical testing. To assess the reliability of this training, we repeated the same training process for 100 runs, where each run lasted 5000 episodes. The results are shown in Figure 13. The blue line shows the agent’s mean goal rate over 5000 episodes across all 100 runs, whereas the shaded region indicates the 95% confidence interval across all runs. This interval provides insight into the variability of performance, which converges as the agent stabilizes its learning. We also trained a basic DQN agent under the same settings, but its goal rate converged at only around 10%.

Figure 13.

Confidence interval plot of 100 independent PPO training runs across 5000 episodes. The shaded region around the mean represents the 95% confidence interval, quantifying run variability and training process robustness.
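The text does not state how the confidence interval was computed. One common choice, sketched below under that assumption, is a per-episode normal approximation: the mean across runs plus or minus 1.96 standard errors. The function name `mean_and_ci95` and the input layout (one goal-rate curve per run, all of equal length) are illustrative and not taken from the framework.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def mean_and_ci95(curves: Sequence[Sequence[float]]) -> List[Tuple[float, float, float]]:
    """Return (mean, lower, upper) per episode across independent runs.

    Assumption: curves[i][e] is the goal rate of run i at episode e, and the
    interval uses the normal approximation mean +/- 1.96 * s / sqrt(n).
    """
    n_runs = len(curves)
    bands = []
    # zip(*curves) groups the values of all runs episode by episode.
    for values in zip(*curves):
        centre = mean(values)
        if n_runs > 1:
            half_width = 1.96 * stdev(values) / math.sqrt(n_runs)
        else:
            half_width = 0.0  # a single run carries no spread information
        bands.append((centre, centre - half_width, centre + half_width))
    return bands
```

With 100 runs the normal approximation is usually reasonable; with only a handful of runs, a Student t quantile would give a wider and more honest band.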

5.2. Physical Testing

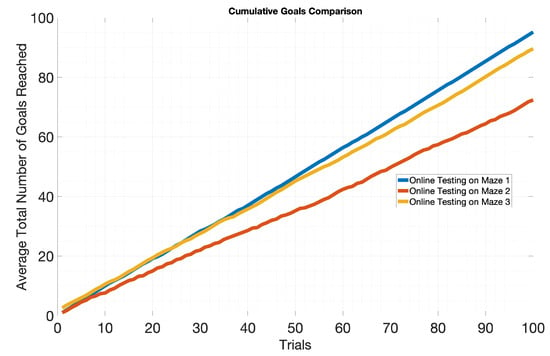

We evaluated the performance of the agent in the physical environments shown in Figure 9 for 5 runs of 100 trials each. A trial is a single attempt by the robot to navigate to the goal position, and a run is a sequence of trials (here, 100). Table 1 shows the goal rates over all 5 runs of 100 trials in these environments. The agent has the highest average goal rate in Maze 1, since Maze 1 is an empty maze. In Maze 2, the agent has a goal rate of 0.724, which is comparable to the converged goal rate in the simulation training. The agent has a goal rate of 0.881 in Maze 3, where the single slab of wall in the middle leaves room for the robot to pass on either side. Although the agent achieved a success rate of approximately 79% in the simulated environment (Simulated Maze 2), its performance dropped slightly to 72.4% in the physical environment (Maze 2). We attribute this difference to environmental noise in the physical setting that is not captured in simulation.

Table 1.

Average Goal Rate. The goal rate value is first calculated out of 100 trials and then averaged over 5 runs.
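The averaging described in this caption can be written out as a short Python sketch. The function name and the input layout (one list of per-trial outcomes per run) are assumptions made for illustration, and the values in the usage note are toy inputs, not experimental results.

```python
from typing import List, Sequence, Tuple


def average_goal_rate(runs: Sequence[Sequence[bool]]) -> Tuple[float, List[float]]:
    """Compute the goal rate of each run, then average over runs.

    Assumption: runs[i][j] is True when trial j of run i reached the goal.
    """
    # Goal rate within each run: goals reached divided by trials attempted.
    per_run = [sum(trials) / len(trials) for trials in runs]
    # Average of the per-run goal rates.
    return sum(per_run) / len(per_run), per_run
```

For example, `average_goal_rate([[True, False, True, True], [False, False, True, True]])` gives per-run rates of 0.75 and 0.5 and an average of 0.625. When every run has the same number of trials, as here, this equals the pooled goal rate over all trials.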

Figure 14 shows that the agent reached its goals the quickest in Maze 1, which is an empty maze, as indicated by the steep slope of the blue line. Maze 2, with a goal rate of 0.724, proved to be the most challenging due to its narrower central open space compared to the other mazes. In Maze 3, the agent reached its goals at a moderate pace, navigating around a central wall by successfully learning to pass on either side. This is reflected by the more gradual slope of the yellow line in the figure.

Figure 14.

Average cumulative goals for all 5 runs of 100 trials in physical environments shown in Figure 9. It can be seen clearly that the agent reaches goals more often in Maze 1 since it is a free maze, whereas the agent reaches the goals least often in Maze 2 since there are more walls.
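A curve of this kind can be obtained by taking a running sum of goals within each run and averaging those sums across runs at each trial index. The sketch below illustrates this; the function name and input layout are hypothetical, and all runs are assumed to have the same number of trials.

```python
from itertools import accumulate
from typing import List, Sequence


def average_cumulative_goals(runs: Sequence[Sequence[int]]) -> List[float]:
    """Average, across runs, of the cumulative goal count at each trial.

    Assumption: runs[i][j] is 1 if trial j of run i reached the goal, else 0.
    """
    # Running total of goals within each run.
    cumulative = [list(accumulate(int(outcome) for outcome in trials)) for trials in runs]
    n_runs = len(cumulative)
    # Average the running totals trial by trial.
    return [sum(column) / n_runs for column in zip(*cumulative)]
```

The slope of the resulting curve over any stretch of trials is the average goal rate over that stretch, which is why a steeper line corresponds to an environment in which goals are reached more often.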

6. Conclusions

In this paper, we redesigned an existing automated evaluation framework so that the user can input more specific training parameters before the actual testing, allowing for easily repeatable experiments with any number of iterations. Our work also allows the framework to evaluate the agent’s testing performance. We show this by first defining a source simulated environment that is similar to one of the target real-world environments. We then set up three physical environments (an empty maze as Maze 1, a maze similar to the simulation maze as Maze 2, and a maze with one slab of wall in the middle as Maze 3) to show the difference in the agent’s performance across physical environments, including familiar and unforeseen ones. Our experiments show that sufficient training in simulation can greatly improve the agent’s performance when transferred to physical environments. With our framework, statistical results such as goal rates can be output as a CSV file for later analysis.

Author Contributions

W.-C.C., Z.N. and X.Z. identified the topic, formulated the problem, and decided the system-level framework. W.-C.C. integrated the method, conducted the simulation and physical experiments, collected the data, and initialized the draft. M.W. and Z.N. provided related literature, suggested the experiment designs, and discussed the comparative results. All authors analyzed the experimental data, such as figures and tables, and proofread the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Science Foundation under Grants 2047064, 2047010, 1947418, and 1947419.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Source code and case study data are available at https://github.com/Ac31415/RL-Auto-Eval-Framework, accessed on 22 November 2024.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Sensor Specifications

In this Appendix, we present the specifications of the LiDAR sensor used. More details can be found in the manufacturer’s datasheet [38].

- Hardware: 360 Laser Distance Sensor LDS-01, Hitachi-LG Data Storage, Inc., Tokyo, Japan

- Quantity: 1

- Dimensions: 69.5 (W) × 95.5 (D) × 39.5 (H) mm

- Distance Range: 120–3500 mm

- Sensor Position: 192 mm from the ground

Appendix B. Simulation Training Parameters

In this appendix, we specify the hyperparameters used for simulation training, alongside various implementation details. These parameters are set so that the algorithm can converge within a reasonable time frame.

- Episode limit: 5000

- Update time step (memory limit): 500

- Policy update epochs: 50

- PPO clip parameter:

- Discount factor ($\gamma$):
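For readers who want to carry these settings into code, the list above could be grouped into a small configuration object, as in the following sketch. The class and field names are hypothetical and not taken from the framework. The clip parameter and discount factor are deliberately left without defaults, since their values are not given in the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PPOTrainingConfig:
    """Hypothetical container for the simulation training settings."""

    clip_epsilon: float               # PPO clip parameter (must be supplied)
    gamma: float                      # discount factor (must be supplied)
    episode_limit: int = 5000         # number of training episodes
    update_timestep: int = 500        # memory limit before each policy update
    policy_update_epochs: int = 50    # optimisation epochs per update

    def __post_init__(self) -> None:
        # Basic sanity checks on the user-supplied values.
        if not 0.0 < self.clip_epsilon < 1.0:
            raise ValueError("clip_epsilon must lie in (0, 1)")
        if not 0.0 < self.gamma <= 1.0:
            raise ValueError("gamma must lie in (0, 1]")
```

A call such as `PPOTrainingConfig(clip_epsilon=0.2, gamma=0.99)` uses placeholder values that are common in PPO implementations; they are not claimed to be the values used in this study.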

Appendix C. Physical Training and Testing Parameters

In this appendix, we specify the hyperparameters used for physical training and testing, alongside various implementation details. Note that during physical testing, update parameters were not used.

- Number of runs: 5

- Number of trials of each run: 100

- Update time step (memory limit): 500

- Policy update epochs: 50

- PPO clip parameter:

- Discount factor ($\gamma$):

References

1. Gonzalez-Aguirre, J.A.; Osorio-Oliveros, R.; Rodriguez-Hernandez, K.L.; Lizárraga-Iturralde, J.; Morales Menendez, R.; Ramirez-Mendoza, R.A.; Ramirez-Moreno, M.A.; Lozoya-Santos, J.d.J. Service robots: Trends and technology. Appl. Sci. 2021, 11, 10702.

2. O’Brien, M.; Williams, J.; Chen, S.; Pitt, A.; Arkin, R.; Kottege, N. Dynamic task allocation approaches for coordinated exploration of Subterranean environments. Auton. Robot. 2023, 47, 1559–1577.

3. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

4. Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-dimensional continuous control using generalized advantage estimation. arXiv 2015, arXiv:1506.02438.

5. Heess, N.; Tb, D.; Sriram, S.; Lemmon, J.; Merel, J.; Wayne, G.; Tassa, Y.; Erez, T.; Wang, Z.; Eslami, S.; et al. Emergence of locomotion behaviours in rich environments. arXiv 2017, arXiv:1707.02286.

6. Wang, Y.; Wang, L.; Zhao, Y. Research on door opening operation of mobile robotic arm based on reinforcement learning. Appl. Sci. 2022, 12, 5204.

7. Plasencia-Salgueiro, A.d.J. Deep Reinforcement Learning for Autonomous Mobile Robot Navigation. In Artificial Intelligence for Robotics and Autonomous Systems Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 195–237.

8. Holubar, M.S.; Wiering, M.A. Continuous-action reinforcement learning for playing racing games: Comparing SPG to PPO. arXiv 2020, arXiv:2001.05270.

9. Del Rio, A.; Jimenez, D.; Serrano, J. Comparative Analysis of A3C and PPO Algorithms in Reinforcement Learning: A Survey on General Environments. IEEE Access 2024, 12, 146795–146806.

10. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

11. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

12. Kim, K. Multi-agent deep Q network to enhance the reinforcement learning for delayed reward system. Appl. Sci. 2022, 12, 3520.

13. Pérez-Gil, Ó.; Barea, R.; López-Guillén, E.; Bergasa, L.M.; Gomez-Huelamo, C.; Gutiérrez, R.; Diaz-Diaz, A. Deep reinforcement learning based control for Autonomous Vehicles in CARLA. Multimed. Tools Appl. 2022, 81, 3553–3576.

14. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

15. Barth-Maron, G.; Hoffman, M.W.; Budden, D.; Dabney, W.; Horgan, D.; Tb, D.; Muldal, A.; Heess, N.; Lillicrap, T. Distributed distributional deterministic policy gradients. arXiv 2018, arXiv:1804.08617.

16. Egbomwan, O.E.; Liu, S.; Chaoui, H. Twin Delayed Deep Deterministic Policy Gradient (TD3) Based Virtual Inertia Control for Inverter-Interfacing DGs in Microgrids. IEEE Syst. J. 2022, 17, 2122–2132.

17. Kargin, T.C.; Kołota, J. A Reinforcement Learning Approach for Continuum Robot Control. J. Intell. Robot. Syst. 2023, 109, 1–14.

18. Cheng, W.C.A.; Ni, Z.; Zhong, X. A new deep Q-learning method with dynamic epsilon adjustment and path planner assisted techniques for Turtlebot mobile robot. In Proceedings of the Synthetic Data for Artificial Intelligence and Machine Learning: Tools, Techniques, and Applications, Orlando, FL, USA, 13 June 2023; Volume 12529, pp. 227–237.

19. Chen, Y.; Liang, L. SLP-Improved DDPG Path-Planning Algorithm for Mobile Robot in Large-Scale Dynamic Environment. Sensors 2023, 23, 3521.

20. He, N.; Yang, S.; Li, F.; Trajanovski, S.; Kuipers, F.A.; Fu, X. A-DDPG: Attention mechanism-based deep reinforcement learning for NFV. In Proceedings of the 2021 IEEE/ACM 29th International Symposium on Quality of Service (IWQOS), Tokyo, Japan, 25–28 June 2021; pp. 1–10.

21. Gu, Y.; Zhu, Z.; Lv, J.; Shi, L.; Hou, Z.; Xu, S. DM-DQN: Dueling Munchausen deep Q network for robot path planning. Complex Intell. Syst. 2023, 9, 4287–4300.

22. Jia, L.; Li, J.; Ni, H.; Zhang, D. Autonomous mobile robot global path planning: A prior information-based particle swarm optimization approach. Control Theory Technol. 2023, 21, 173–189.

23. Hamami, M.G.M.; Ismail, Z.H. A Systematic Review on Particle Swarm Optimization Towards Target Search in The Swarm Robotics Domain. Arch. Comput. Methods Eng. 2022, 1–20.

24. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

25. Wang, H.; Ding, Y.; Xu, H. Particle swarm optimization service composition algorithm based on prior knowledge. J. Intell. Manuf. 2022, 1–19.

26. Escobar-Naranjo, J.; Caiza, G.; Ayala, P.; Jordan, E.; Garcia, C.A.; Garcia, M.V. Autonomous navigation of robots: Optimization with DQN. Appl. Sci. 2023, 13, 7202.

27. Sumiea, E.H.; Abdulkadir, S.J.; Alhussian, H.S.; Al-Selwi, S.M.; Alqushaibi, A.; Ragab, M.G.; Fati, S.M. Deep deterministic policy gradient algorithm: A systematic review. Heliyon 2024, 10, e30697.

28. Kahn, G.; Villaflor, A.; Ding, B.; Abbeel, P.; Levine, S. Self-supervised deep reinforcement learning with generalized computation graphs for robot navigation. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5129–5136.

29. Liang, Z.; Cao, J.; Jiang, S.; Saxena, D.; Chen, J.; Xu, H. From multi-agent to multi-robot: A scalable training and evaluation platform for multi-robot reinforcement learning. arXiv 2022, arXiv:2206.09590.

30. Bellemare, M.G.; Naddaf, Y.; Veness, J.; Bowling, M. The arcade learning environment: An evaluation platform for general agents. J. Artif. Intell. Res. 2013, 47, 253–279.

31. Ju, H.; Juan, R.; Gomez, R.; Nakamura, K.; Li, G. Transferring policy of deep reinforcement learning from simulation to reality for robotics. Nat. Mach. Intell. 2022, 4, 1077–1087.

32. Gromniak, M.; Stenzel, J. Deep reinforcement learning for mobile robot navigation. In Proceedings of the 2019 4th Asia-Pacific Conference on Intelligent Robot Systems (ACIRS), Nagoya, Japan, 13–15 July 2019; pp. 68–73.

33. Andy, W.C.C.; Marty, W.Y.C.; Ni, Z.; Zhong, X. An automated statistical evaluation framework of rapidly-exploring random tree frontier detector for indoor space exploration. In Proceedings of the 2022 4th International Conference on Control and Robotics (ICCR), Guangzhou, China, 2–4 December 2022; pp. 1–7.

34. Frost, M.; Bulog, E.; Williams, H. Autonav RL Gym. 2019. Available online: https://github.com/SfTI-Robotics/Autonav-RL-Gym (accessed on 24 April 2022).

35. Schulman, J.; Levine, S.; Abbeel, P.; Jordan, M.; Moritz, P. Trust region policy optimization. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 1889–1897.

36. ROBOTIS-GIT. turtlebot3_machine_learning. 2018. Available online: https://github.com/ROBOTIS-GIT/turtlebot3_machine_learning (accessed on 24 April 2022).

37. Gazebo. Open Source Robotics Foundation. 2014. Available online: http://gazebosim.org/ (accessed on 24 April 2022).

38. ROBOTIS-GIT. LDS Specifications. Available online: https://emanual.robotis.com/docs/en/platform/turtlebot3/features/#components (accessed on 24 April 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).