Abstract

Object detection is a fundamental task in computer vision. Recently, deep-learning-based object detection has made significant progress. However, due to large variations in target scale, the predominance of small targets, and complex backgrounds in remote sensing imagery, remote sensing object detection still faces challenges, including low detection accuracy, poor real-time performance, high missed detection rates, and high false detection rates in practical applications. To enhance remote sensing target detection performance, this study proposes a new model, the remote sensing detection transformer (RS-DETR). First, we incorporate cascaded group attention (CGA) into the attention-driven feature interaction module. By capturing features at different levels, it enhances the interaction between features through cascading and improves computational efficiency. Additionally, we propose an enhanced bidirectional feature pyramid network (EBiFPN) to facilitate multi-scale feature fusion. By integrating features across multiple scales, it improves object detection accuracy and robustness. Finally, we propose a novel bounding box regression loss function, Focaler-GIoU, which makes the model focus more on difficult samples, improving detection performance for small and overlapping targets. Experimental results on the satellite imagery multi-vehicles dataset (SIMD) and the high-resolution remote sensing object detection (TGRS-HRRSD) dataset show that the improved algorithm achieved mean average precision (mAP) of 78.2% and 91.6% at an intersection over union threshold of 0.5, respectively, which is an improvement of 2.0% and 1.5% over the baseline model. This result demonstrates the effectiveness and robustness of our proposed method for remote sensing image object detection.

1. Introduction

Object detection is crucial in remote sensing image processing, playing an important role in intelligence reconnaissance [1], ocean monitoring [2], and various daily applications [3]. The main task of remote sensing image object detection is to locate and classify objects of interest in optical images [4], such as ports, bridges, aircraft, ships, and vehicles. The inherent characteristics of remote sensing targets, including small size, multi-scale variation, and dense distribution, make multi-scene remote sensing object detection highly challenging. Traditional remote sensing image target detection algorithms can generally be divided into template-matching-based algorithms and algorithms based on manually crafted feature modeling. Specifically, template matching algorithms detect targets by calculating the similarity between a region’s feature vector in the input image and a template feature vector, using measures such as structural similarity [5] and Hausdorff distance matching [6]. Manual feature modeling algorithms establish target feature representations based on manually crafted feature extraction methods, including the scale-invariant feature transform [7] and the histogram of oriented gradients [8]. However, traditional remote sensing target detection algorithms struggle to represent features effectively in complex scenarios, and their detection accuracy and speed fall short of practical needs.

Over the past decade, with the rapid development of deep convolutional neural networks (CNNs) [9], most mainstream object detection methods have been built on CNN architectures and are typically categorized into one-stage [10,11,12,13,14,15,16] and two-stage [17,18,19] detectors. Two-stage (region-based) algorithms divide the task into two stages: the first stage generates a series of candidate regions likely to contain the target, while the second stage classifies these candidate regions as target or background and performs bounding box regression. In contrast, one-stage (regression-based) algorithms skip candidate region generation and directly regress target bounding boxes and categories from multiple locations in the input image, offering faster processing than two-stage methods. Compared to traditional algorithms, CNNs generate strong, high-level feature representations from images. However, due to the inherent limitations of convolutional operations, particularly their locality, both one-stage and two-stage CNN-based algorithms face performance bottlenecks.

In recent years, attention mechanisms have gained prominence for overcoming the short-range modeling limitations of CNNs. By assigning different weights to channels or spatial regions, attention mechanisms enable high-resolution processing of specific areas, focusing on important parts of the image rather than processing the entire image. This targeted weighting extracts key information from images while ignoring irrelevant details. Transformer-based object detection models employ self-attention mechanisms to effectively integrate global contextual information. In 2020, Carion et al. [20] introduced the detection transformer (DETR), the first successful application of the transformer architecture to object detection. DETR treats object detection as a direct set prediction problem, using a global loss function and bipartite graph matching to obtain unique predictions. Subsequently, researchers recognized that models employing attention mechanisms could capture global features, leading to improvements that make DETR applicable to various specific tasks. These enhancements have enabled DETR to achieve detection accuracy surpassing that of traditional CNN-based models. Zhu et al. proposed deformable DETR [21], introducing deformable attention and multi-scale features to better focus on target areas, improving convergence speed. Conditional DETR [22] guides the model to focus on specific areas through conditional queries, thereby enhancing DETR’s performance on small targets and in complex scenes. DINO [23] incorporates denoising techniques to enhance DETR’s robustness under real-world noise conditions. In 2023, Zhao et al. proposed the real-time detection transformer (RT-DETR) [24], further enhancing the speed and accuracy of DETR-based detectors. In this context, we propose a new model, remote sensing DETR (RS-DETR), based on RT-DETR, to enhance object detection performance in remote sensing imagery. The main contributions of our work are as follows:

- To enhance the interaction between features at different levels and improve computational efficiency, we incorporate cascaded group attention into the attention-driven feature interaction module.

- To improve multi-scale feature fusion and object detection accuracy, we propose an enhanced bidirectional feature pyramid network.

- To improve detection performance for small and overlapping targets, we propose a novel bounding box regression loss function, Focaler-GIoU.

2. Related Work

2.1. CNN-Based Object Detection

Following the great success of CNN-based methods in generic object detection, researchers have extensively explored algorithms to enhance object detection in remote sensing images. In 2021, Shivappriya et al. [25] applied the additive activation function (AAF) to Faster R-CNN and proposed the AAF-Faster R-CNN. By using the Fourier series and linear combinations of the activation function to update the loss function, this network improves detection efficiency. In 2022, Ma et al. [26] proposed the feature split-merge-enhancement network (SME-Net), an end-to-end scale-aware network. By employing the feature split-and-merge module and the object saliency enhancement strategy, the network produces more effective feature maps. Additionally, the offset-error rectification module corrects spatial layout inconsistencies among multi-level feature maps during network training. In 2023, Li et al. [27] incorporated two important pieces of prior knowledge about remote sensing images and proposed the large selective kernel network (LSKNet). This method dynamically adjusts the receptive field during the backbone network’s feature extraction process, enabling more effective handling of the differences in background information required by different targets.

To improve the real-time detection performance of remote sensing image target detection, researchers have proposed many one-stage detectors. In 2021, Lu et al. [28] introduced an end-to-end network based on single-shot object detector, incorporating a multi-layer feature fusion structure to enhance the semantic richness of shallow features and a dual-path attention module to refine feature information. This model significantly improves the performance of single-stage object detection methods for remote sensing imagery. Liu et al. [29] proposed a decoupled classification localization network (DCL-Net) to enhance the independence of the classification and localization branches. The network improves the distinguishability of classification features and rotational robustness through a receptive field aggregation module and enhances the entire feature hierarchy by transmitting detailed shallow feature information through a bottom-up path aggregation module, thereby improving localization performance. In 2022, Li et al. [30] introduced a dual align end-to-end single-stage detector (DA-Net), which adjusts neuron receptive fields via the rotation feature selection module to achieve image-level feature alignment. Additionally, the rotation feature align module enables instance-level feature alignment based on the size, shape, and orientation of corresponding anchor points.

2.2. Transformer-Based Object Detection

In recent years, due to the advantages of attention mechanisms, transformer-based object detection methods have gained substantial attention in remote sensing, leading to a swift increase in research findings. In 2021, Xu et al. [31] proposed an improved model based on the Swin Transformer [32], incorporating a local perception Swin Transformer backbone network to enhance local feature perception and improve small-target detection accuracy. Furthermore, a spatial attention-interleaved execution cascade network further enhances detection and segmentation accuracy. In 2022, Li et al. [33] proposed an end-to-end transformer-based remote sensing object detection framework (TRD). This framework utilizes an attention-based transferring backbone network for feature extraction, which enhances detection performance. Additionally, during training, data augmentation methods, including sample expansion and multiple-sample fusion, are employed to mitigate overfitting. Dai et al. [34] introduced an arbitrary-oriented object detection transformer (AO2-DETR), which removes the need for anchors and complex pre- or post-processing. This network utilizes an oriented proposal generation mechanism to generate rotating candidate boxes as object queries, which enhances positional priors for feature pooling. An adaptive oriented proposal refinement module is also introduced to adaptively adjust rotated boxes based on contextual information, ensuring one-to-one matching via a rotation-aware set matching loss. In 2023, Cheng et al. [35] proposed a network with separate feature refinement (SFRNet). The features processed by region of interest (ROI) align operation are input into two branches for separate refinement. A spatial and channel transformer and multi-ROI loss in the classification branch are employed to regularize fine-grained classification. The oriented response convolution with transformer is utilized in the localization branch to encode rotation information. 
This network can thus extract subtle differences between fine-grained categories in the classification branch and obtain rotation-sensitive features in the localization branch.

3. Methodology

3.1. Overview of the Proposed RS-DETR Algorithm

In this study, we propose a novel RS-DETR model to improve the performance of remote sensing image object detection. Based on the RT-DETR model, the proposed method makes three effective improvements, including integrating CGA [36] into the attention-driven feature interaction (AIFI) module to improve interaction between features, proposing an EBiFPN to enhance multi-scale feature fusion, and designing a new bounding box regression loss function, Focaler-GIoU, to improve the detection accuracy of small and overlapping objects. The architecture of RS-DETR is shown in Figure 1.

Figure 1.

The architecture of RS-DETR.

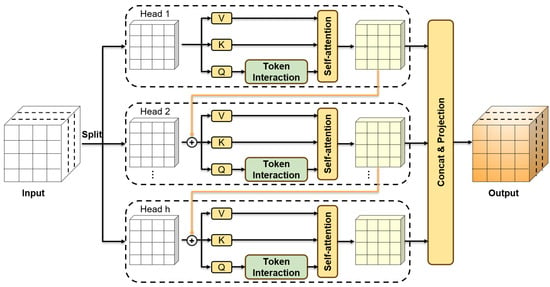

3.2. The Cascaded Group Attention Mechanism

In the AIFI module of RT-DETR, a single-scale transformer encoder is utilized to perform intra-scale interactions on high-level features. However, traditional multi-head self-attention (MHSA) distributes attention equally across the entire input, leading to a significant computational burden, particularly with high-resolution remote sensing images. Additionally, high-level feature maps that undergo multiple downsampling steps may lose information about small targets. This study replaces MHSA with CGA, which splits inputs for each head and concatenates output features across heads. This approach enhances intra-scale feature interactions, improving small-target detection performance while reducing computational redundancy. The architecture of CGA is depicted in Figure 2.

Figure 2.

The architecture of cascaded group attention.

As shown in Figure 2, CGA divides the complete feature map into different parts as input, with each part being processed by an attention head to produce an output. The outputs from all heads are then concatenated and projected back to the input dimensions via a linear layer to form the final output. In this approach, each attention head focuses on a part of the input, and the combined attention from all heads provides a comprehensive feature representation. CGA enhances the model’s computational efficiency without adding parameters. Additionally, through cascading, each head’s output is added to the input of the subsequent head, gradually refining the feature representation.
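The split, cascade, concatenate, and project steps described above can be sketched in plain Python. This is a minimal illustration, not the implementation used in RS-DETR: the function names are hypothetical, the learned projections of [36] are replaced by placeholder matrices passed in by the caller, and each head's value projection is assumed to keep the chunk width so that its output can be added to the next head's input.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(x, wq, wk, wv):
    """Scaled dot-product self-attention over the tokens (rows) of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
    return matmul([softmax(r) for r in scores], v)

def cascaded_group_attention(x, head_weights, w_proj):
    """x: tokens x channels. head_weights: one (wq, wk, wv) triple per head."""
    num_heads = len(head_weights)
    chunk = len(x[0]) // num_heads
    outputs, prev = [], None
    for h, (wq, wk, wv) in enumerate(head_weights):
        # Each head only sees its own channel slice of the input.
        part = [row[h * chunk:(h + 1) * chunk] for row in x]
        if prev is not None:
            # Cascade: add the previous head's output to this head's input.
            part = [[a + b for a, b in zip(r, p)] for r, p in zip(part, prev)]
        prev = attention(part, wq, wk, wv)
        outputs.append(prev)
    # Concatenate the head outputs along the channel axis, then project back.
    concat = [sum((o[i] for o in outputs), []) for i in range(len(x))]
    return matmul(concat, w_proj)

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

# Three tokens with four channels, split across two heads.
x = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.1, 0.3], [0.5, 0.5, 0.0, 1.0]]
heads = [(identity(2), identity(2), identity(2)) for _ in range(2)]
y = cascaded_group_attention(x, heads, identity(4))
```

Because each head attends over only C/h channels instead of all C, the per-head cost of the query-key product shrinks accordingly, while the cascade lets later heads build on the features refined by earlier ones.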

3.3. The EBiFPN Module

In complex scenarios such as occlusion and overlap, RT-DETR’s CNN-based cross-scale feature fusion (CCFF) has difficulty capturing intricate feature interactions, potentially leading to false positives and missed detections. To address this issue, we propose an EBiFPN to strengthen feature fusion across scales, thereby improving detection performance in complex remote sensing images. Furthermore, we incorporate low-level features that contain richer small-target information into the feature fusion process to improve small-target detection accuracy. The bidirectional feature pyramid network (BiFPN) [37] is a feature pyramid structure enabling bidirectional information flow between resolution levels, which better integrates low- and high-level features. It also employs an adaptive feature adjustment mechanism that learns weights to adjust features across different levels, meeting the requirements of various tasks. The EBiFPN adopts an architecture similar to BiFPN, with several key improvements. First, we introduce DySample [38] for upsampling that is designed from the perspective of point sampling. Compared to kernel-based dynamic upsamplers, DySample reduces computational load and increases feature map resolution without adding extra burden. Additionally, we have designed an adaptive fusion method to enhance the overall fusion effect. The architecture of EBiFPN is shown in Figure 3.

Figure 3.

The architecture of EBiFPN.

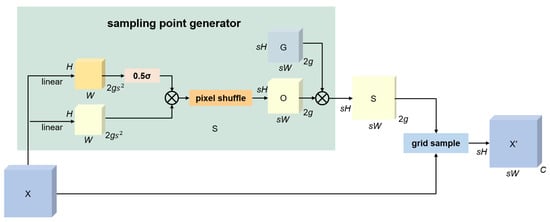

RT-DETR employs nearest neighbor interpolation for up-sampling, a simple method that can degrade quality, making the up-sampled result appear unrealistic or blurred. Additionally, this method does not account for the semantic information in the image. To address these limitations, we introduce the DySample up-sampling operator. The structure of DySample is shown in Figure 4. The input feature map X of size C × H × W is first processed by a sampling point generator to generate a sampling set S of size 2 × sH × sW, where s is the up-sampling factor. DySample then utilizes a grid sampling function to resample X to X′ of size C × sH × sW through the sampling set. The sampling point generator, a key component of DySample, generates offsets O through a combination of linear layers, dynamic scope factors, and pixel shuffle sub-pixel convolution. These offsets are added to the original grid positions G to obtain the final sampling set S. The dynamic scope factors enhance offset flexibility and reduce overlapping issues. Following DySample [38], the process is defined as follows:

$$X' = \mathrm{grid\_sample}(X, S)$$

$$O = 0.5\,\sigma(\mathrm{linear}_1(X)) \cdot \mathrm{linear}_2(X)$$

$$S = G + O$$

where σ denotes the sigmoid function.

Figure 4.

The architecture of DySample.

DySample achieves high-quality up-sampling by combining dynamic point sampling with scope factor adjustment and group up-sampling strategies, effectively restoring low-resolution feature maps to high resolution and preserving spatial detail. This enables the model to more accurately localize small targets. Additionally, the upsampling process samples points directly in the feature map instead of using a convolutional kernel. By avoiding time-consuming dynamic convolutions and eliminating additional subnetworks for dynamic kernels, DySample significantly reduces the computational burden, thereby improving the model’s running speed.
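To make the point-sampling idea concrete, the following is a minimal sketch of up-sampling a single-channel map by sampling it at grid positions plus offsets. It is an illustration under stated assumptions rather than the DySample implementation: the offsets are passed in directly instead of being predicted by linear layers and pixel shuffle, the function names `bilinear` and `point_upsample` are hypothetical, and the pixel-centre coordinate convention with border clamping is our choice.

```python
import math

def bilinear(feat, y, x):
    """Bilinearly interpolate a 2D map (list of rows) at a fractional (y, x),
    clamping the position to the border of the map."""
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feat[y0][x0] * (1 - dx) + feat[y0][x1] * dx
    bottom = feat[y1][x0] * (1 - dx) + feat[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def point_upsample(feat, offsets, scale=2):
    """Up-sample feat by sampling it at S = G + O.

    offsets[i][j] is a (dy, dx) pair, in input-pixel units, for output pixel (i, j).
    """
    h, w = len(feat), len(feat[0])
    out = []
    for i in range(h * scale):
        row = []
        for j in range(w * scale):
            # G: centre of output pixel (i, j) expressed in input coordinates.
            gy = (i + 0.5) / scale - 0.5
            gx = (j + 0.5) / scale - 0.5
            dy, dx = offsets[i][j]
            row.append(bilinear(feat, gy + dy, gx + dx))
        out.append(row)
    return out

feat = [[0.0, 2.0], [4.0, 6.0]]
zero = [[(0.0, 0.0)] * 4 for _ in range(4)]
plain = point_upsample(feat, zero)      # zero offsets: ordinary bilinear up-sampling

shifted_offsets = [list(r) for r in zero]
shifted_offsets[1][1] = (0.25, 0.25)    # move one sampling point to the map centre
shifted = point_upsample(feat, shifted_offsets)
```

With all offsets set to zero the routine reduces to bilinear interpolation; content-dependent offsets are what allow each output pixel to pull its value from a more informative location.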

The adaptive fusion is shown in Figure 5. Assume the input feature map size is (bs, C, H, W). First, a convolution operation is applied to the input feature map, reducing the number of channels from C to 3, corresponding to the three different inputs that will be weighted. After concatenation and Softmax operations, a spatial adaptive weight map of size (bs, 3, H, W) is obtained. The spatially adaptive weights are subsequently employed to integrate the three input feature maps. For each spatial position (h, w) and batch index b, the output is calculated as follows:

$$Y_{b,c,h,w} = \sum_{i=1}^{3} W_{b,i,h,w}\, X^{(i)}_{b,c,h,w}$$

where X^{(i)} denotes the i-th input feature map, W denotes the spatial adaptive weight map, c is the channel index, and Y is the fused output.

Figure 5.

The architecture of adaptive fusion.

The sum is taken across the three channels to produce the final output at each spatial location. This method effectively combines information from various scales, allowing the model to integrate fine details and broader context.
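The weighting step can be illustrated with a short sketch. It assumes single-channel maps for brevity and takes the three per-position weight logits as given, whereas in the model they are produced by the convolution described above; the function name `adaptive_fuse` is hypothetical.

```python
import math

def adaptive_fuse(inputs, logits):
    """Fuse three H x W maps with per-position softmax weights.

    inputs: three H x W feature maps (nested lists).
    logits: three H x W maps of unnormalised weights, one per input.
    """
    h, w = len(inputs[0]), len(inputs[0][0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            scores = [l[y][x] for l in logits]
            m = max(scores)                      # subtract the max for numerical stability
            exps = [math.exp(s - m) for s in scores]
            total = sum(exps)
            # Weighted sum over the three inputs at this spatial position.
            out[y][x] = sum(e / total * f[y][x] for e, f in zip(exps, inputs))
    return out

a, b, c = [[3.0]], [[6.0]], [[9.0]]
uniform = adaptive_fuse([a, b, c], [[[0.0]], [[0.0]], [[0.0]]])    # equal weights
peaked = adaptive_fuse([a, b, c], [[[0.0]], [[0.0]], [[50.0]]])    # third input dominates
```

Equal logits give a plain average of the three inputs, while a dominant logit lets a single scale determine the output at that position.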

3.4. The Focaler-GIoU

In remote sensing image object detection tasks, sample imbalance often poses a challenge. For instance, a large number of small target samples may be difficult to detect and accurately localize. Thus, the bounding box regression loss function is crucial in remote sensing image object detection. RT-DETR employs generalized intersection over union (GIoU) [39] as the bounding box regression loss function, but this approach performs poorly in complex remote sensing scenes. To address this issue, we propose a novel bounding box regression loss function, Focaler-GIoU, which combines Focaler-IoU and GIoU. Focaler-IoU [40] emphasizes difficult samples during training by adjusting their contribution to the loss function based on sample difficulty. By employing Focaler-GIoU, our proposed model focuses on small or dense objects during training, enhancing detection performance in complex environments. The definitions of Focaler-GIoU are

$$IoU^{focaler} = \begin{cases} 0, & IoU < d \\ \dfrac{IoU - d}{u - d}, & d \le IoU \le u \\ 1, & IoU > u \end{cases}$$

$$GIoU = IoU - \frac{A_c - U}{A_c}$$

$$L_{GIoU} = 1 - GIoU$$

$$L_{Focaler\text{-}GIoU} = L_{GIoU} + IoU - IoU^{focaler}$$

where IoU denotes the intersection over union (IoU) value, A_c denotes the area of the smallest enclosing convex object, U represents the union area of the predicted and ground truth bounding boxes, IoU^{focaler} represents the reconstructed Focaler-IoU through linear interval mapping, and [d, u] ∈ [0, 1]. By adjusting the parameters d and u, our proposed method can focus on various regression samples, enhancing detection performance in complex remote sensing scenes.
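As a worked illustration, the loss can be sketched for a single pair of boxes in plain Python. This is a sketch under assumptions: boxes are axis-aligned `(x1, y1, x2, y2)` tuples, GIoU follows [39], the linear interval mapping follows Focaler-IoU [40], and the two are combined as L_GIoU + IoU − IoU^focaler. The function name and the default values of `d` and `u` are hypothetical and are not the settings used in our experiments.

```python
def focaler_giou_loss(box_a, box_b, d=0.0, u=0.95):
    """Focaler-GIoU loss for two boxes (x1, y1, x2, y2); requires d < u."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection and union areas.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union

    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    giou = iou - (enclose - union) / enclose

    # Linear interval mapping of IoU onto [0, 1] between the thresholds d and u.
    iou_focaler = min(1.0, max(0.0, (iou - d) / (u - d)))

    return (1.0 - giou) + iou - iou_focaler

same = focaler_giou_loss((0, 0, 1, 1), (0, 0, 1, 1))
disjoint = focaler_giou_loss((0, 0, 1, 1), (2, 0, 3, 1))
partial = focaler_giou_loss((0, 0, 2, 2), (1, 0, 3, 2), d=0.0, u=0.5)
```

Narrowing the interval [d, u] changes which IoU range receives a non-constant mapped value, which is how the loss shifts its emphasis between easier and harder regression samples.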

4. Results and Discussion

4.1. Datasets

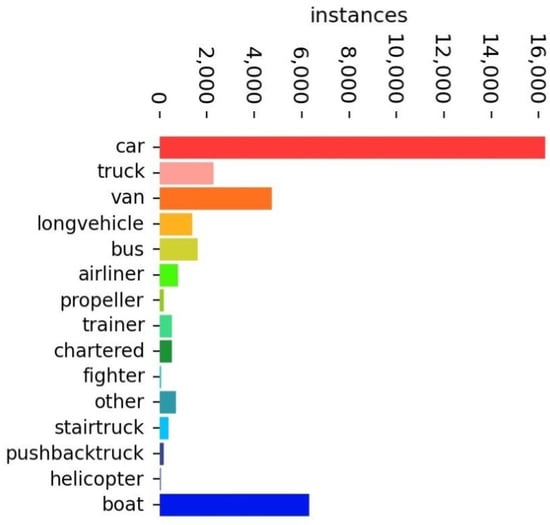

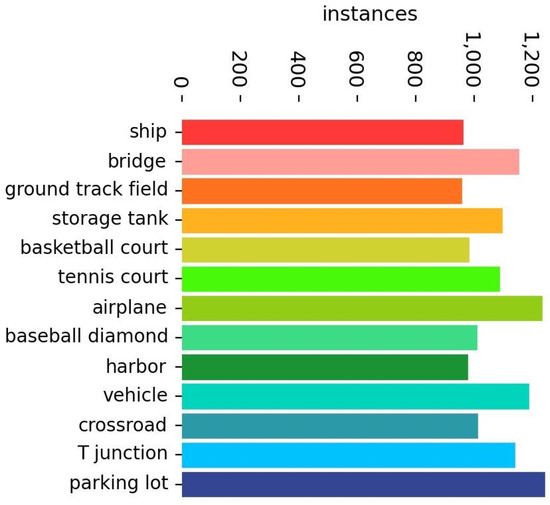

In recent years, numerous datasets for remote sensing target detection have emerged. In this study, we evaluate our proposed model on two publicly available remote sensing image datasets: SIMD and TGRS-HRRSD. The TGRS-HRRSD [41] dataset was released by the University of Chinese Academy of Sciences in 2019 and contains 21,761 images obtained from Google Earth and Baidu Maps with spatial resolutions ranging from 0.15 m to 1.2 m. The dataset includes 55,740 target instances across 13 categories. The sample sizes are relatively balanced between categories, with approximately 4000 instances per category. The SIMD [42] dataset contains 5000 satellite remote sensing images of size 1024 × 768, totaling 45,096 instances across 15 target classes. The distribution of each category in these two datasets is shown in Figure 6 and Figure 7. As illustrated in Figure 6, the distribution of object categories in the SIMD dataset varies significantly. Notably, the examples in the categories of car, van, and boat account for over 90% of the total instances, while other categories, particularly helicopter and fighter, have very few instances. This characteristic of class imbalance is common in real-world remote sensing object detection scenarios. Therefore, it is crucial to verify whether our method can achieve favorable results on this dataset to ensure practical applicability. The category distribution in the TGRS-HRRSD dataset is shown in Figure 7, where the number of instances for each category is relatively balanced. In our experiments, we split the two datasets into training, validation, and testing sets according to their original proportions.

Figure 6.

The number of instances for every category in the SIMD dataset.

Figure 7.

The number of instances for every category in the TGRS-HRRSD dataset.

4.2. Evaluation Metrics

Commonly used metrics for evaluating remote sensing image object detection algorithms include precision (P), recall (R), mean average precision (mAP), number of parameters (Params), and floating point operations (FLOPs). Precision denotes the proportion of true positive samples in the detection results, while recall represents the proportion of positive samples correctly detected among all positive samples; precision and recall thus reflect the false detection rate and the missed detection rate, respectively. The precision–recall curve is obtained by plotting precision on the vertical axis and recall on the horizontal axis, where the area under the curve denotes the average precision (AP) for a specific category. The mean average precision (mAP) across multiple categories represents the algorithm’s overall performance on the dataset. mAP can be calculated at various IoU thresholds. In this paper, we primarily select mAP at an IoU threshold of 0.5 and the average of mAP values from an IoU threshold of 0.50 to 0.95 with a step size of 0.05. These two mAP values are represented as mAP50 and mAP, respectively. Additionally, the Params metric measures the total number of parameters to be trained in the network model, while FLOPs denotes the number of floating-point operations required by the model, measuring the computational complexity of the network. The definitions of these metrics are

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\, dR$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where TP refers to true positives, FP refers to false positives, and FN refers to false negatives. N represents the total count of categories.
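The metrics above can be computed with a few lines of Python. The sketch below is illustrative only: the function names are hypothetical, detections are assumed to be already matched to ground truth at a fixed IoU threshold, and AP is approximated by a plain sum over the ranked detections without the precision interpolation that standard evaluation toolkits typically apply.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true positive, false positive, and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def average_precision(scored_hits, num_gt):
    """Area under the precision-recall curve for one class.

    scored_hits: (confidence, is_true_positive) for each detection.
    num_gt: number of ground truth instances of the class.
    """
    ranked = sorted(scored_hits, key=lambda det: det[0], reverse=True)
    tp = fp = 0
    ap = prev_recall = 0.0
    for _, hit in ranked:
        tp, fp = tp + hit, fp + (not hit)
        precision, recall = tp / (tp + fp), tp / num_gt
        # Accumulate precision weighted by the recall gained at this detection.
        ap += precision * (recall - prev_recall)
        prev_recall = recall
    return ap

def mean_average_precision(per_class_ap):
    """Average the per-class AP values over the N categories."""
    return sum(per_class_ap) / len(per_class_ap)

p, r = precision_recall(tp=8, fp=2, fn=2)
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
m = mean_average_precision([0.5, 1.0])
```

Repeating the AP computation at IoU thresholds from 0.50 to 0.95 in steps of 0.05 and averaging the results gives the stricter mAP figure, while the single threshold of 0.5 gives mAP50.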

4.3. Implementation Details

In this study, our proposed method is implemented in the PyTorch framework with Python. The software environment includes Ubuntu 22.04, Python 3.8, PyTorch 1.13.1, and CUDA 11.7. The hardware setup comprises an Intel Xeon E5-2696 v4 processor and an NVIDIA RTX 3090 graphics card with 24 GB of VRAM. During training, the input image size is 640 × 640 pixels, the number of training epochs is set to 100, the batch size is 8, the initial learning rate is 0.0001, the weight decay is 0.0001, and the optimizer is AdamW. All other training parameters align with those used in RT-DETR.

4.4. Experiments on the SIMD Dataset

4.4.1. Experimental Results

The experimental results of RS-DETR on the SIMD dataset are presented in Table 1 and Figure 8. As shown in Table 1, RS-DETR achieved an mAP50 of 78.2% and an mAP of 63.1%, indicating that our model achieves good overall results on the SIMD dataset. Notably, RS-DETR exceeded 90% on mAP50 for dense small targets such as cars and boats, demonstrating that our model enhances detection performance for small targets. Additionally, our model achieved a recall of 100% for the fighter category, indicating that RS-DETR correctly classified and located all fighter samples and demonstrating its effectiveness on medium-sized targets. However, RS-DETR underperformed on categories such as other, stair truck, pushback truck, and helicopter. This may be attributed to their smaller sizes and limited sample quantities, which resulted in lower detection accuracy for these categories.

Table 1.

The detection results of RS-DETR for all categories on the SIMD dataset.

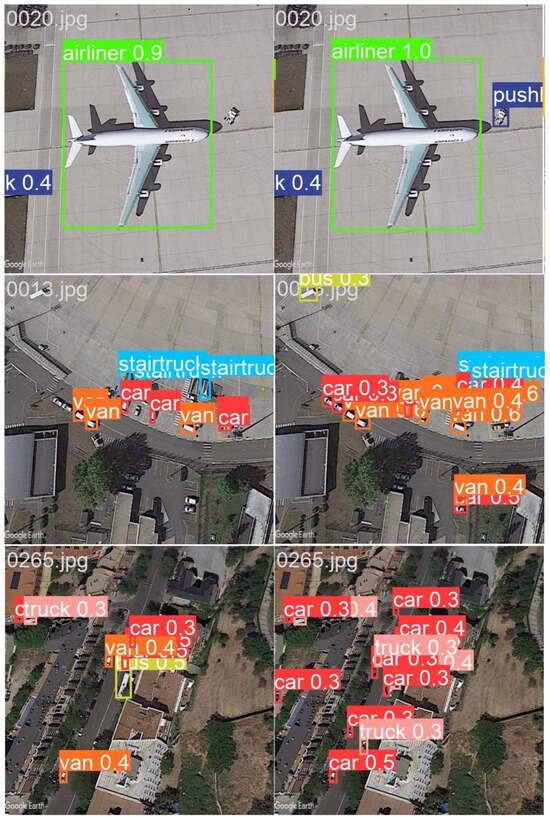

Figure 8.

The visual results of RS-DETR on the SIMD dataset.

Figure 8 shows the visual results of the RS-DETR algorithm on the SIMD dataset, demonstrating its robust object detection performance. Our proposed method effectively identifies and locates objects of various scales, especially small objects such as cars and boats.

4.4.2. Comparison Experiments with Other Models

To verify the superiority of the RS-DETR algorithm in remote sensing image object detection, we compared the algorithm with transformer-based [43,44] object detection algorithms and the classic two-stage Faster R-CNN algorithm. All experiments were conducted on the SIMD remote sensing dataset, with results shown in Table 2. As detailed in Table 2, our proposed method significantly outperforms the DETRs and Faster R-CNN algorithms in terms of Params, FLOPs, and the mAP50 and mAP metrics. Additionally, compared to the baseline model, our proposed RS-DETR achieves improvements of 2.0% in mAP50 and 1.1% in mAP. Moreover, RS-DETR requires fewer parameters and FLOPs than other models, indicating that it achieves lower model complexity and parameter count while maintaining superior performance.

Table 2.

The comparative results of different models on the SIMD dataset.

Figure 9 shows the detection results of the RT-DETR and RS-DETR algorithms on the SIMD dataset. In the top comparison, our proposed method detected a pushback truck instance that the baseline model missed, reducing missed detections. In the middle comparison, our model performs better in densely distributed scenes, successfully detecting all overlapping targets. In the bottom comparison, our model correctly classified a car that the baseline model misclassified as a van, reducing false detections. Overall, the improved algorithm not only enhances detection accuracy for dense targets but also addresses missed detections and false detections in remote sensing scenes.

Figure 9.

The visual results of RT-DETR (left) and RS-DETR (right) on the SIMD dataset.

4.5. Experiments on the TGRS-HRRSD Dataset

4.5.1. Experimental Results

To verify the generalization of RS-DETR in remote sensing image object detection, we conducted experiments on the TGRS-HRRSD dataset, with results shown in Table 3 and Figure 10. Table 3 presents the detection results of RS-DETR for all categories in the TGRS-HRRSD dataset. Our algorithm achieved an average mAP50 of 91.6% and an average mAP of 65.4% across all categories. As shown in Table 3, RS-DETR achieves high detection accuracy for most categories, with mAP50 exceeding 90%, demonstrating the model’s effectiveness. However, detection performance is relatively poor for categories such as basketball court, parking lot, and T-junction. This may be because the instance features of these categories are not distinct and resemble instances of other categories. For example, basketball courts and playgrounds, as well as tennis courts, appear quite similar at low resolutions, making it difficult for the model to distinguish between them, leading to lower detection accuracy.

Table 3.

The detection results of RS-DETR for all categories on the TGRS-HRRSD dataset.

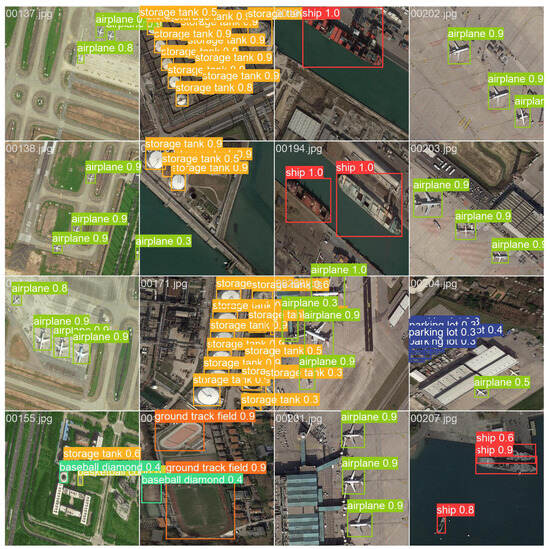

Figure 10.

The visual results of RS-DETR on the TGRS-HRRSD dataset.

To fully assess the detection performance of our proposed improved algorithm, we conducted a visual analysis of detection results on the TGRS-HRRSD dataset. As shown in Figure 10, RS-DETR accurately locates and identifies targets of various categories in remote sensing images, achieving high confidence scores with almost no omissions or false detections. Additionally, dense small targets, such as storage tanks, are accurately detected without omissions. For ships with significant scale variations, the bounding box can accurately regress to correctly locate the target’s position in the original image. These results demonstrate the robustness of our model.

4.5.2. Comparison Experiments with Other Models

Table 4 presents the comparison results of RS-DETR with other mainstream models on the TGRS-HRRSD dataset. Compared to the baseline model, our proposed algorithm improves mAP50 by 1.5% and mAP by 0.8%. For the mAP50 evaluation metric, DINO achieved the highest result. However, our proposed method surpassed DINO on the more stringent mAP metric, indicating superior detection performance across various IoU thresholds. Additionally, our model requires fewer parameters and has a lower computational load than other models, indicating that RS-DETR achieves an excellent balance between accuracy and model size.

Table 4.

The comparative results of different models on the TGRS-HRRSD dataset.

As shown in Figure 11, the RS-DETR model outperforms the baseline model in detection performance on the TGRS-HRRSD dataset. In the top comparison, RT-DETR incorrectly classified buildings as ships, while our model effectively reduced this misclassification. In the middle comparison, RS-DETR successfully detected the basketball court and corrected the ground track field instance that the baseline model incorrectly detected. In the bottom comparison, the baseline model incorrectly detected and classified the airport runway as a bridge, while our model avoided this misclassification, thereby enhancing detection accuracy. Overall, our proposed model significantly improves remote sensing image object detection performance.

Figure 11.

The visual results of RT-DETR (left) and RS-DETR (right) on the TGRS-HRRSD dataset.

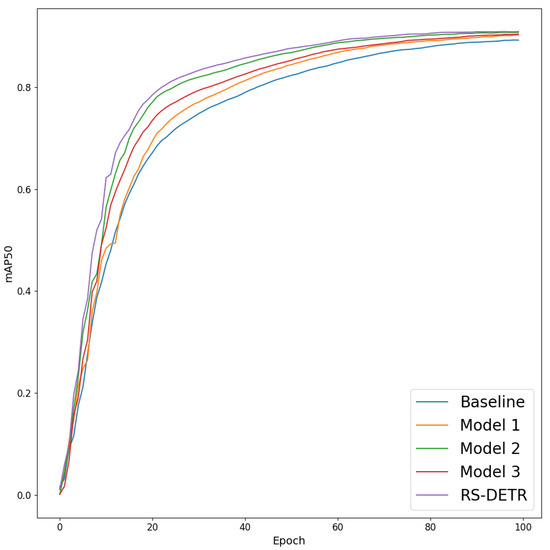

4.6. Ablation Study

To verify the contribution of each module in RS-DETR for remote sensing image object detection, we conducted ablation experiments on the TGRS-HRRSD dataset using the same experimental environment and training parameters. The experimental results are shown in Table 5 and Figure 12. The precision, recall, mAP50, and mAP of the baseline model RT-DETR are 89.2%, 85.6%, 90.1%, and 64.6%, respectively. Model 1 integrates CGA into the AIFI module. It achieved a precision of 90.1%, improving by 0.9% over the baseline model, and reached 90.6% on the mAP50 metric, an increase of 0.5% over the baseline. The results indicate that replacing the traditional multi-head self-attention mechanism with CGA enhances intra-scale feature interaction and improves object detection performance. Model 2 incorporates the proposed EBiFPN into the baseline model to improve cross-scale feature fusion. The results show that after adding EBiFPN, the model’s recall improved significantly by 1.7%, reducing missed detections in remote sensing image object detection while also showing improvements in precision and mAP. The detection results of Model 3 show that when both CGA and EBiFPN are added to the baseline model, it outperforms RT-DETR in all detection metrics, demonstrating that each module enhances detection performance. Finally, combining the various modules allowed RS-DETR to achieve peak detection performance, with mAP50 reaching 91.6%, an improvement of 1.5% over the baseline model, demonstrating significant enhancement in remote sensing object detection performance.

Table 5.

The results of ablation experiments.

Figure 12.

Comparison results of mAP50 metric.

5. Conclusions

With rapid advancements in remote sensing technology, both the quality and quantity of remote sensing images have greatly improved. However, while benefiting from high-quality images, remote sensing image object detection also faces challenges such as significant scale variations, dense target distribution, and the prevalence of small targets. These challenges may lead to inaccurate localization, missed detections, and false detections in practical remote sensing applications. To address these issues, this study proposes the RS-DETR model for remote sensing object detection by improving the RT-DETR algorithm. First, we introduce CGA into the AIFI module to enhance intra-scale feature interaction and increase computational efficiency. To address complex backgrounds and numerous small targets in remote sensing images, we propose EBiFPN to enhance cross-scale feature fusion. By incorporating low-level features rich in small target information, we improve small target detection accuracy and reduce false positives and missed detections. Additionally, applying the Focaler-GIoU loss function as the localization regression loss reduces sensitivity to scale variations, enhances small object detection accuracy, and improves overall localization accuracy. Finally, experimental results on the SIMD and TGRS-HRRSD datasets indicate that our proposed algorithm achieves significant progress in remote sensing target detection, with mAP50 improving by 2.0% and 1.5% over RT-DETR, respectively. Furthermore, these results demonstrate advantages over other mainstream models, highlighting the advancement and effectiveness of the improved algorithm.

Although the improved algorithm outperforms the baseline model in detection performance, some shortcomings remain. In future research, we will address other challenges in remote sensing target detection, such as target ambiguity, to enhance the algorithm’s robustness. We also aim to refine the algorithm further to reduce missed and false detections.

Author Contributions

Conceptualization, H.Z.; methodology, H.Z.; software, H.Z.; validation, H.Z., X.L. and Z.M.; formal analysis, H.Z.; investigation, H.Z.; resources, H.Z.; data curation, H.Z.; writing—original draft preparation, H.Z.; writing—review and editing, H.Z.; visualization, Z.M.; supervision, Z.M.; project administration, X.L.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

The authors are grateful to all individuals who have contributed to this study.

Conflicts of Interest

The authors declare no conflicts of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).