Abstract

The You Only Look Once (YOLO) series of object detection models is widely recognized for its efficiency and real-time performance. However, underwater environments, characterized by insufficient lighting and visual disturbances, pose particular challenges for detection. This study modifies the YOLOv9s model to improve the accuracy and real-time capability of underwater object detection, resulting in the YOLOv9s-UI detection model. The proposed model incorporates the Dual Dynamic Token Mixer (D-Mixer) module from TransXNet to improve feature extraction. Additionally, it integrates a feature fusion network design from the LocalMamba network, employing channel and spatial attention mechanisms. These attention modules guide the feature fusion process, significantly enhancing detection accuracy while keeping the model compact at only 9.3 M. Experimental evaluation on the URPC2019 underwater object dataset shows that the YOLOv9s-UI model achieves higher precision and recall than the original YOLOv9s model while maintaining excellent real-time performance. By introducing advanced feature extraction and attention mechanisms, the model substantially improves underwater target detection, meets portability requirements, and provides a more efficient solution for underwater detection.

1. Introduction

Object detection technology plays a crucial role in various contemporary technological domains, including autonomous driving, smart surveillance, and medical image analysis, where both high real-time performance and accuracy are indispensable. The You Only Look Once (YOLO) series of models [1,2], well known for their efficient processing, are widely used in object detection and applied in diverse practical scenarios. However, object detection encounters numerous challenges in underwater environments. Factors such as inadequate lighting, image quality deterioration due to water scattering and refraction, low contrast, and visual disturbances significantly undermine the efficacy of conventional object detection technologies [3,4].

Traditional methods for underwater object detection primarily depend on feature extraction and image processing techniques to identify and locate targets [5,6]. These approaches involve utilizing edge detection, shape matching, and optical flow techniques for both dynamic and static elements in images and videos [7,8]. However, these methods are often sensitive to environmental conditions, especially in complex underwater environments characterized by fluctuating conditions and murky water quality, leading to a decrease in detection accuracy. Furthermore, these conventional techniques are computationally intensive and exhibit poor real-time performance, rendering them unsuitable for applications that necessitate quick responses [9,10]. Consequently, these constraints significantly diminish the practical utility and dependability of traditional underwater object detection methods in operational scenarios.

In complex underwater environments, enlarging the receptive field helps the network capture the contextual information and environmental characteristics of the target, thereby improving prediction accuracy. Deep convolutional neural networks (CNNs) can effectively expand the receptive field by adjusting network depth and convolution kernel size [11]. In addition, nonlinear activation functions, attention mechanisms, and data augmentation techniques [12] can significantly improve the network's ability to process input data. Techniques such as the feature pyramid network [13], multi-scale fusion modules [11], and Atrous Spatial Pyramid Pooling (ASPP) [14] effectively integrate feature information at different scales and enhance the recognition ability of the system. Advanced architectures such as the standard Transformer and its variants [2,7] and DenseNet [15] further improve model performance and adaptability by handling complex data structures.
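The receptive-field argument above can be made concrete with the standard recurrence for stacked convolutions: each layer adds (k - 1) · d · jump pixels, and every stride multiplies the jump between neighboring output pixels. The following is a minimal pure-Python sketch; the layer configurations are illustrative assumptions and are not taken from YOLOv9s.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a stack of convolution layers.

    Each layer is given as (kernel_size, stride, dilation). A dilated kernel
    spans (k - 1) * d + 1 pixels, and each stride multiplies the spacing
    ("jump") between neighboring output pixels seen by later layers.
    """
    rf, jump = 1, 1
    for k, s, d in layers:
        rf += (k - 1) * d * jump
        jump *= s
    return rf


# Deeper stacks see more context: three stride-1 3x3 convs cover 7x7 pixels.
print(receptive_field([(3, 1, 1)] * 3))  # 7
# A single ASPP-style atrous 3x3 branch with dilation 6 already covers 13x13.
print(receptive_field([(3, 1, 6)]))  # 13
# Downsampling (stride 2) enlarges the footprint of every later layer.
print(receptive_field([(3, 2, 1), (3, 2, 1), (3, 1, 1)]))  # 15
```

Dilation enlarges the receptive field without adding parameters, which is why ASPP-style branches are a cheap way to gather context.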

In the domain of underwater object detection, deep learning technologies have garnered significant attention [10,16], and researchers have begun to apply deep neural network methods in this field [17,18]. Although YOLO algorithms have shown remarkable speed and accuracy in numerous applications, their performance still needs improvement when confronted with the distinctive challenges of underwater settings [19,20], particularly in the detection of small or densely clustered underwater objects. Therefore, specific optimizations are essential to improve the effectiveness and robustness of the YOLO algorithm under such extreme conditions [21,22]. Yang et al. [23] proposed a cascaded model based on the User-Generated Content (UGC)-YOLO network structure, which improves underwater target detection by integrating global semantic information between two residual stages of the feature extraction network; however, experimental results revealed only modest improvements over the baseline network. Zhang et al. [24] combined MobileNetv2 and YOLOv4 to balance detection efficiency and accuracy, significantly enhancing the recognition of small targets through a lightweight structure and multi-scale attention feature integration; however, the complex design of this model may restrict its applicability in environments with limited computational resources. Jia et al. [25] introduced the Enhanced Detection and Recognition (EDR) model, which, by incorporating channel shuffling and improved feature extraction, significantly improved recognition efficiency and feature integration in complex aquatic environments; however, this model may produce more false positives and missed detections in complex scenarios such as dense and overlapping objects, and its higher computational requirements may hinder real-time detection on resource-limited devices. Yuan et al. [26] improved the YOLOv5 network by integrating the C3GD and Res-CM modules, thereby improving the detection accuracy of small, complex targets, and enhanced image clarity using the Contrast Limited Adaptive Histogram Equalization (CLAHE) algorithm. Although the model's design was streamlined for resource-constrained environments, its complex structure still requires fine-tuning and may increase operational costs. Furthermore, although the model performs well on specific datasets, its generalizability across diverse underwater environments requires further validation.

To address the challenges mentioned above, this study improves the most recent YOLOv9s model [27] and introduces the YOLOv9s-UI detection model. The improvement involves integrating the Dual Dynamic Token Mixer (D-Mixer) module from TransXNet [28], which improves the model’s feature extraction capabilities. Furthermore, inspired by the feature fusion network from LocalMamba [29], a channel and spatial attention network is devised. This network incorporates both channel and spatial attention modules to steer feature fusion, thereby boosting the accuracy and real-time performance of underwater object detection. The refinement process of the model takes into account the different characteristics of underwater environments. By incorporating the D-Mixer module, the model gains the ability to dynamically adjust token distribution during feature extraction, thereby improving its capacity to identify targets in complex environments. The introduction of channel and spatial attention mechanisms effectively reinforces feature fusion, leading to improved precision and reliability in object detection. Despite the integration of multiple improvement modules, the model remains compact with a total weight of only 9.3 M, ensuring efficiency and portability.

This paper introduces the YOLOv9s-UI model, which offers several key innovations as follows:

- Integration of the D-Mixer module from TransXNet: This improvement dynamically adjusts token distribution during feature extraction, significantly improving target recognition capabilities in complex underwater environments.

- Design of a network featuring channel and spatial attention: The channel attention module and the spatial attention module respectively emphasize the importance of different feature channels and spatial positions within the feature maps. This attention-guided feature fusion significantly improves detection accuracy.

- Model lightweighting: Despite the incorporation of multiple improvement modules, the model maintains a compact total weight of only 9.3 M, ensuring both efficiency and portability.

The structure of this paper is organized as follows: Section 2 reviews the related work and key modules pertinent to this study. Section 3 offers a comprehensive description of the design philosophy and improvements of the YOLOv9s-UI model, including the integration of the D-Mixer module and the implementation of channel and spatial attention mechanisms. Section 4 presents a comparative analysis of the YOLOv9s-UI algorithm against other detection methods. Finally, Section 5 concludes the paper and discusses potential directions for future research.

2. Related Works

2.1. Underwater Object Detection Methods

Underwater object detection is crucial for marine exploration, ecological protection, and underwater engineering, but implementing these technologies faces several challenges, such as poor visibility, complex lighting conditions, the impact of water currents, and acoustic disturbances. A typical pipeline first performs image preprocessing, including color correction and image enhancement, to tackle issues such as color distortion and blurring that are characteristic of underwater environments. Traditional detection methods such as background subtraction and thresholding are then applied; these are suited to simple scenarios [30]. Among advanced detection technologies, YOLO (You Only Look Once) [31] offers an efficient balance between speed and accuracy, making it suitable for detecting fast-moving objects; SSD (Single Shot MultiBox Detector) [32] performs better than YOLO in detecting small objects; Faster R-CNN [33] provides high-precision recognition in complex environments; and Mask R-CNN [7] focuses on high-precision object segmentation, which is particularly useful for environmental and biodiversity research. Together, these technologies advance underwater detection capabilities and help address the unique challenges of underwater environments.
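The preprocessing step above mentions color correction without naming a method. As one classic illustration (a swapped-in technique, not necessarily what any of the cited detectors use), the gray-world assumption rescales each color channel so that all channel means become equal, which counteracts the blue-green cast typical of underwater images. The pixel values below are hypothetical.

```python
def gray_world(pixels):
    """Gray-world color correction for a list of (R, G, B) pixels in [0, 255].

    Each channel is scaled so that its mean equals the mean over all three
    channels; corrected values are clipped to 255.
    """
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m > 0 else 1.0 for m in means]  # per-channel gain
    return [tuple(min(255.0, p[c] * gains[c]) for c in range(3)) for p in pixels]


# Two hypothetical blue-green pixels with a weak red channel.
corrected = gray_world([(20, 120, 140), (40, 160, 180)])
print([round(v, 2) for v in corrected[0]])  # [73.33, 94.29, 96.25]
```

The weak red channel is amplified while green and blue are attenuated, so the corrected channel means are all equal (110 in this example).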

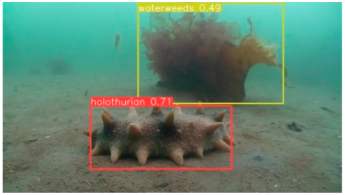

The URPC 2019 dataset was designed specifically for underwater robot professional competitions to evaluate the performance of underwater object detection algorithms. Beyond competitions, it is widely used in technology development for marine resource surveys and underwater facility maintenance. Paper [34] compared multiple advanced underwater object detection models on this dataset, including Faster R-CNN (VGG16), SSD (VGG16) [34], FCOS (ResNet50) [35], Faster R-CNN (ResNet50), Free-Anchor (ResNet50) [36], EfficientDet [37], CenterNet (ResNet50) [38], YOLOv8n, DDA + YOLOv7-ACmix [39], and YOLOv8-MU, with the results listed in Table 1. To allow a direct comparison between our model and the others, the main metric used is mAP@0.5. The refined URPC 2019 dataset contains 4757 images, while the URPC 2019 dataset excluding aquatic plants contains 4707 images. Despite this difference in the total number of images, the YOLO models still achieve superior detection accuracy with fewer images, further highlighting their advantage in detection precision.

Table 1.

Comparison of mAP@0.5 for four object classes on the URPC2019 dataset.

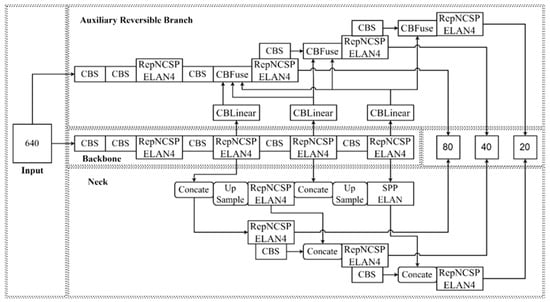

2.2. YOLOv9 Object Detection Network

YOLOv9, released on 21 February 2024, is the most recent version of the YOLO detection network [27]. It introduces the concept of programmable gradient information (PGI) together with a novel Generalized Efficient Layer Aggregation Network (GELAN) structure for feature extraction, as shown in Figure 1. This architecture aims to mitigate information degradation as data propagate through deep networks. With fewer parameters and less computation, it attains detection accuracy on par with or better than that of earlier YOLO models.

Figure 1.

YOLOv9 network structure.

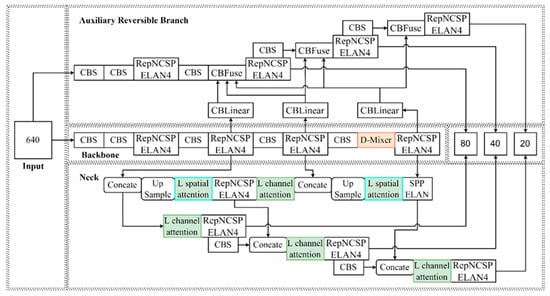

As in YOLOv7, the input end (Input) uses Mosaic data augmentation, adaptive anchor box computation, and adaptive image scaling to preprocess input images and improve the quality of the data provided to the model. The Auxiliary Reversible Branch, which builds on the CBNet composite backbone network, is connected to the main branch to facilitate the aggregation and flow of gradient information. This integration preserves deep features crucial for object detection and helps reduce information loss during the feedforward process of the network. The main modification to the Backbone is the combination of CSPNet and ELAN into a Generalized ELAN (GELAN) that serves as the feature extraction unit. The RepConv module serves as the basic convolutional module, using reparameterization techniques to further strengthen feature extraction. The Neck continues to use the Feature Pyramid Network (FPN) + Path Aggregation Network (PAN) structure but replaces the E-ELAN module with a GELAN layer. At the output end (Prediction), the model continues to employ three prediction branches at different scales, which encode the classification, location, and confidence of targets, and non-maximum suppression is finally applied to eliminate redundant boxes. In addition, the information fusion process has been improved in conjunction with the information flow of the Auxiliary Reversible Branch.
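Non-maximum suppression, the final step of the prediction stage described above, can be sketched as follows. This is a minimal, class-agnostic greedy version in pure Python, assuming boxes in (x1, y1, x2, y2) format and an IoU threshold of 0.5; YOLOv9's actual post-processing (per-class handling, confidence filtering, batched tensor operations) is not reproduced here.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes by descending score, keep those not overlapping a kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with an IoU of about 0.82
```

A lower threshold suppresses more aggressively, which removes duplicates but can also discard genuinely distinct, densely packed objects.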

The YOLOv9 algorithm was originally developed for general-purpose object detection in various scenarios. To make the network easier to train and deploy, this study introduces specific improvements that reduce its complexity and improve its bounding-box detection ability.

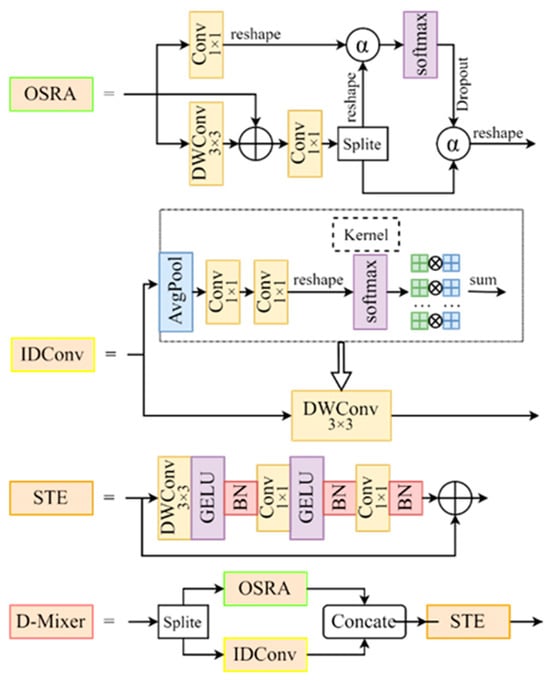

2.3. D-Mixer

Transformer structures built on Multi-Head Self-Attention (MHSA) can model long-range dependencies and provide global receptive fields. However, they lack the inherent inductive bias of Convolutional Neural Networks (CNNs), which leads to comparatively weaker generalization. Efforts to combine the two reveal a further limitation: convolution kernels are static, that is, independent of the input, so CNNs cannot adapt to diverse inputs. As a result, there is a gap in representational capacity between self-attention mechanisms and convolutional operations.

The TransXNet network introduces D-Mixer, a lightweight token mixer that dynamically leverages both global and local information. This design combines a large receptive field with a strong inductive bias while maintaining input dependency [28]. The structure is depicted in Figure 2.

Figure 2.

D-Mixer network structure.

D-Mixer splits the input features into two parts along the channel dimension, each processed differently. One part passes through a global self-attention module, Overlapping Spatial Reduction Attention (OSRA), while the other is processed by an input-dependent depthwise convolution module, IDConv. The outputs of the two modules are concatenated and fed into the Squeezed Token Enhancer (STE) module, which efficiently fuses local tokens. This simple structure enables the network to capture global and local information concurrently, thereby strengthening its inductive bias.
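The split-and-mix data flow of D-Mixer can be illustrated with a toy sketch. Here the global branch is plain single-head self-attention over positions and the local branch is a fixed 3-tap mean filter; both are hypothetical stand-ins for OSRA and IDConv, chosen only to show how the two channel halves are processed separately and then concatenated. Features are 1D lists per channel rather than 2D maps, and the STE stage is omitted.

```python
import math


def global_branch(chans):
    """Single-head self-attention over positions with identity Q/K/V projections.

    Hypothetical stand-in for OSRA: no learned projections, no spatial reduction.
    chans: d channels, each a list of L values; token i is the vector of chans[c][i].
    """
    d, L = len(chans), len(chans[0])
    tokens = [[chans[c][i] for c in range(d)] for i in range(L)]
    mixed = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]  # numerically stable softmax
        z = sum(w)
        mixed.append([sum(w[j] * tokens[j][c] for j in range(L)) / z for c in range(d)])
    return [[mixed[i][c] for i in range(L)] for c in range(d)]


def local_branch(chans):
    """Per-channel 3-tap mean filter over in-bounds neighbors (hypothetical stand-in for IDConv)."""
    out = []
    for xc in chans:
        windows = [xc[max(0, i - 1):i + 2] for i in range(len(xc))]
        out.append([sum(w) / len(w) for w in windows])
    return out


def d_mixer(x):
    """Split channels in half, mix one half globally and the other locally, then concatenate."""
    half = len(x) // 2
    return global_branch(x[:half]) + local_branch(x[half:])


y = d_mixer([[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0], [0.0, 3.0, 0.0, 3.0], [2.0] * 4])
print(len(y), len(y[0]))  # 4 4
```

Every output position in the first half depends on all positions (global context), while each output in the second half depends only on its immediate neighbors (local context).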

The IDConv module introduces inductive bias in a dynamic, input-dependent manner and performs local feature aggregation. It aggregates spatial context with adaptive average pooling, generates attention maps through two convolutional layers, and uses them to produce input-dependent depthwise convolution kernels. This gives the module stronger dynamic local feature encoding while remaining computationally efficient.
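A simplified sketch of the IDConv idea follows: adaptive average pooling squeezes each channel to K context values, a softmax over G groups turns them into attention weights, and the weights mix G learnable prototype kernels into one input-dependent depthwise kernel per channel. To stay short, it works on 1D signals and replaces the two convolutional layers with one hypothetical scalar weight per group (`attn_weight`); these are assumptions, not the TransXNet implementation.

```python
import math


def adaptive_avg_pool1d(x, k):
    """Average a 1D list into k bins using floor/ceil bin edges (as in PyTorch's adaptive pooling)."""
    n = len(x)
    out = []
    for i in range(k):
        start = (i * n) // k
        end = -((-(i + 1) * n) // k)  # ceil((i + 1) * n / k)
        out.append(sum(x[start:end]) / (end - start))
    return out


def softmax(v):
    m = max(v)
    e = [math.exp(t - m) for t in v]
    s = sum(e)
    return [t / s for t in e]


def id_depthwise_conv1d(x, proto, attn_weight):
    """Input-dependent depthwise 1D convolution (simplified IDConv-style sketch).

    x:           C channels, each a list of length L
    proto:       proto[g][c] is a learnable kernel of odd size K for group g and channel c
    attn_weight: attn_weight[g] is a hypothetical scalar replacing the two convolutions
                 that map the pooled context to attention logits
    """
    G, K = len(proto), len(proto[0][0])
    out = []
    for c, xc in enumerate(x):
        pooled = adaptive_avg_pool1d(xc, K)  # spatial context squeezed to K values
        kernel = []
        for k in range(K):
            a = softmax([attn_weight[g] * pooled[k] for g in range(G)])  # attention over groups
            kernel.append(sum(a[g] * proto[g][c][k] for g in range(G)))
        pad = K // 2
        padded = [0.0] * pad + list(xc) + [0.0] * pad  # zero padding keeps length L
        out.append([sum(kernel[k] * padded[i + k] for k in range(K)) for i in range(len(xc))])
    return out


x = [[1.0, 2.0, 3.0, 4.0, 5.0]]
proto = [[[0.0, 1.0, 0.0]], [[1 / 3, 1 / 3, 1 / 3]]]  # G = 2 prototypes for one channel
print(id_depthwise_conv1d(x, proto, [1.0, -1.0]))
```

Because the attention depends on the pooled input, scaling the input changes the mixture of prototype kernels, which is the "input-dependent" property that a static depthwise convolution lacks.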

3. Methods

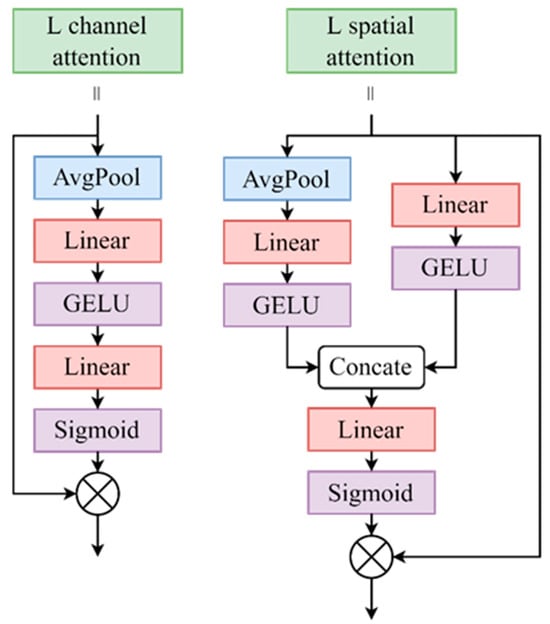

3.1. Local Channel Attention and Local Spatial Attention

The LocalMamba network incorporates an attention module to better integrate the features produced by different scanning strategies and to filter out irrelevant information in these features. It adaptively weights each branch according to its characteristics and then merges the branches into a single output by addition [29]. Furthermore, the study introduces Local Channel Attention and Local Spatial Attention mechanisms to dynamically regulate the weights assigned to the combined information. This improves the network's ability to focus on pertinent information while suppressing extraneous details. The objective is to help the detector allocate attention appropriately when detecting different targets, thereby improving its ability to discern valuable information (Figure 3).

Figure 3.

Flowchart of local channel attention and local spatial attention.

The Local Channel Attention module, similar to SENet, leverages inter-channel relationships of features to generate a channel attention map. Unlike SENet, it replaces the Rectified Linear Unit (ReLU) activation function with the Gaussian Error Linear Unit (GELU) for improved performance. Concurrently, the Local Spatial Attention module exploits spatial relationships between features, achieving spatial attention by extracting and integrating both local and global features through a weighted fusion activation process. The computational processes for generating the channel attention map and the spatial attention map are expressed as follows:
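A minimal sketch of SE-style channel attention with GELU in place of ReLU, as described above, is given below. It assumes flattened per-channel activations, plain weight matrices `w1` and `w2` with a reduction ratio r, and no biases; these details are illustrative and are not taken from LocalMamba's code.

```python
import math


def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat, w1, w2):
    """SE-style channel attention with GELU instead of ReLU.

    feat: C channels, each a flat list of H * W activations
    w1:   (C // r) x C weights of the squeeze projection
    w2:   C x (C // r) weights of the excitation projection
    Returns the reweighted features and the per-channel scales.
    """
    squeezed = [sum(ch) / len(ch) for ch in feat]  # global average pooling per channel
    hidden = [gelu(sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    scale = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    return [[v * scale[c] for v in ch] for c, ch in enumerate(feat)], scale


feat = [[1.0, 3.0], [0.0, 2.0]]  # C = 2 channels with H * W = 2 (hypothetical values)
w1 = [[0.5, 0.5]]                # C // r = 1 hidden unit (r = 2)
w2 = [[1.0], [-1.0]]
out, scale = channel_attention(feat, w1, w2)
print(scale)  # first channel is emphasized, second is suppressed
```

The sigmoid keeps each channel weight in (0, 1), so the module can only rescale channels, never flip their sign.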

3.2. YOLOv9s-UI Network Model

In the official version of the YOLOv9 model, the weights are substantial, totaling 122.4 M, which hinders both model portability and real-time object detection. Based on a comparative analysis of the scaling ratios used across the YOLO series, the network depth was set to 0.33 and the width to 0.25 to obtain the YOLOv9s model. This version has significantly smaller weights, amounting to only 9.1 M, which improves detection speed.
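The effect of the depth (0.33) and width (0.25) multipliers can be checked with a little arithmetic. The rounding rules below follow the convention of YOLOv5/YOLOv8-style model configs (block repeats rounded and kept at least 1; channels rounded up to a multiple of 8); whether YOLOv9s uses exactly these rules is an assumption. Because a convolution's weight count is proportional to its input times output channels, a width multiplier of 0.25 shrinks such layers roughly 16-fold, which helps explain the large drop in weight size.

```python
import math


def scale_depth(n, depth_multiple):
    """Repeats of a block after depth scaling; single blocks are never removed."""
    return max(round(n * depth_multiple), 1) if n > 1 else n


def scale_width(channels, width_multiple, divisor=8):
    """Output channels after width scaling, rounded up to a multiple of `divisor`."""
    return math.ceil(channels * width_multiple / divisor) * divisor


def conv_params(c_in, c_out, k=3):
    """Weight count of a k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k


print(scale_depth(3, 0.33), scale_depth(9, 0.33))       # 1 3
print(scale_width(256, 0.25), scale_width(1024, 0.25))  # 64 256
# Scaling both input and output channels by 0.25 shrinks a conv by 0.25 ** 2.
print(conv_params(64, 64) / conv_params(256, 256))      # 0.0625
```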

This research focuses on attention-guided feature extraction and fusion. In the attention computation, spatial attention weights each location within a feature layer, whereas channel attention weights each feature layer as a whole. In object detection, the main goal is to identify important objects within an image, and different regions have different levels of relevance; spatial attention can therefore help the network extract features from crucial areas. The feature layers obtained from the backbone network carry different semantic information at different levels, which affects their respective roles in detecting different targets. Channel attention mechanisms can therefore be used to facilitate the integration of these features.

In the YOLOv9s network, the incorporation of the D-Mixer, Local Channel Attention, and Local Spatial Attention modules has led to the development of the YOLOv9s-UI network, as proposed in this paper. The structure of this improved network is depicted in Figure 4.

Figure 4.

Structure of a neural network model featuring the auxiliary reversible branch and attention mechanism.

4. Experimental Results

4.1. Experimental Setup and Data

The training and validation data for the object detection network were obtained from the publicly available URPC 2019 underwater object dataset. This dataset covers several categories of underwater objects, namely sea cucumbers, sea urchins, scallops, starfish, and aquatic plants, and comprises 3765 training samples and 942 validation samples. All samples were then merged and randomly split into training, validation, and test sets in an 8:1:1 ratio, which were used to train the network and evaluate its performance.
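The 8:1:1 random split can be reproduced with a short helper. This sketch assumes the 3765 + 942 = 4707 samples are identified by hypothetical file names, floors the training and validation sizes, and assigns the remainder to the test set; the seed is arbitrary.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Randomly split items into train/val/test subsets according to integer ratios.

    The train and val sizes are floored; the test subset takes the remainder.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed for a reproducible split
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


# 3765 + 942 = 4707 samples, identified here by hypothetical file names.
samples = [f"img_{i:05d}.jpg" for i in range(3765 + 942)]
train, val, test = split_dataset(samples)
print(len(train), len(val), len(test))  # 3765 470 472
```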

4.2. Evaluation Metrics

In object detection, Average Precision (AP) and Mean Average Precision (mAP) are widely used metrics for evaluating detection models. AP is the area under the Precision-Recall curve, providing a comprehensive measure of precision across recall levels. The calculation equations are as follows:

$$
Pr = \frac{TP}{TP + FP}, \qquad Re = \frac{TP}{TP + FN}
$$

$$
AP = \int_{0}^{1} Pr(Re)\,\mathrm{d}Re, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i
$$

where Pr represents the precision of detection; Re represents the recall of the model; TP represents the number of true positives; FP represents the number of false positives; FN represents the number of false negatives; and N represents the number of categories detected.
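These definitions can be turned into a per-class AP computation. The sketch below uses all-point interpolation: detections are sorted by confidence, Pr and Re are accumulated, precision is replaced by its running maximum from the right, and the area under the resulting step curve is summed. The interpolation scheme used by the training framework (for example, 101-point interpolation) may differ, so treat this as an illustrative assumption; `is_tp` marks detections already matched to ground truth at the chosen IoU threshold.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection
    is_tp:  True if the detection is a true positive at the chosen IoU threshold
    num_gt: number of ground-truth objects of the class, i.e. TP + FN
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))  # Pr = TP / (TP + FP)
        recalls.append(tp / num_gt)        # Re = TP / (TP + FN)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas under the step curve wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(aps):
    """mAP = (1 / N) * sum of the per-class APs."""
    return sum(aps) / len(aps)


ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4)
print(ap)                                   # 0.625
print(mean_average_precision([ap, 0.875]))  # 0.75
```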

4.3. Implementation Details

The experiments were run on Ubuntu 20.04 with an Intel i9-10920X CPU, 32 GB of RAM, and an NVIDIA GeForce RTX 3070 graphics card. Python 3.8 was used as the programming language and PyTorch 1.8.0 as the deep learning framework.

During training, the hyperparameters were configured as follows: an initial learning rate of 0.0031, a decay factor of 0.12, a momentum of 0.826, a batch size of 16, and 300 training epochs.

4.4. Model Comparison

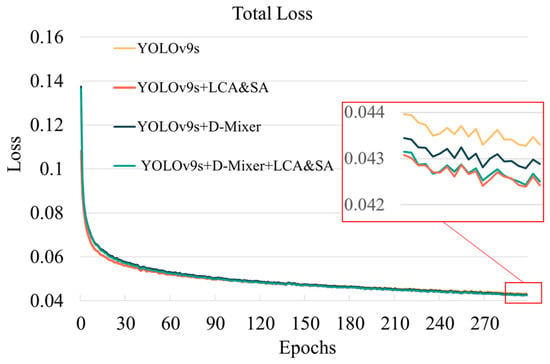

During training, the loss curves of YOLOv9s and its modified variants were recorded, as shown in Figure 5. All four models showed a rapid decrease in loss at the beginning of training, with limited overall variability. By the 20th epoch, the average loss of each model had fallen to approximately 0.06, and the final losses settled at around 0.043, indicating that all models converged satisfactorily.

Figure 5.

Training loss.

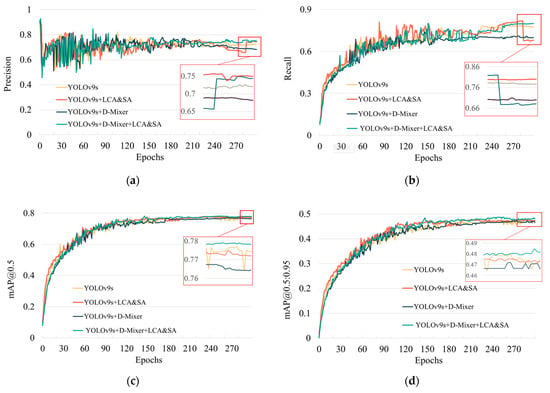

According to the training results, the YOLOv9s-UI model achieved a maximum precision of 0.7568 (taken as the peak value within the last 10% of the recorded training values, because precision fluctuates during training). The highest recall was 0.8191. The model's maximum mAP at an IoU threshold of 0.5 (mAP@0.5) was 0.7812, while its highest mAP averaged over IoU thresholds from 0.5 to 0.95 (mAP@0.5:0.95) was 0.4874. As shown in Table 2, AL-YOLOv9s demonstrates a clear superiority in accuracy and outperforms models of similar scale on various metrics.
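The two reporting conventions used in this paragraph can be written down explicitly: the peak of a fluctuating metric over the final 10% of recorded values, and mAP@0.5:0.95 as the mean of the mAP values computed at the ten IoU thresholds 0.50, 0.55, ..., 0.95 (the COCO convention). The sketch assumes per-epoch metric lists and per-threshold mAP values are already available; the example numbers are hypothetical.

```python
def last_decile_peak(values):
    """Peak of a fluctuating metric over the final 10% of recorded values."""
    n = max(1, len(values) // 10)
    return max(values[-n:])


# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid float drift).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(map_at_iou):
    """mAP@0.5:0.95 as the mean of the mAP values at the ten IoU thresholds."""
    return sum(map_at_iou[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


# Hypothetical per-epoch precision over 300 epochs with late fluctuations.
precision_per_epoch = [0.1] * 270 + [0.70, 0.75, 0.72] + [0.70] * 27
print(last_decile_peak(precision_per_epoch))  # 0.75
```

Because mAP@0.5:0.95 also rewards tight localization at strict IoU thresholds, it is always at most the mAP@0.5 value and is typically much lower, as in the 0.4874 versus 0.7812 reported above.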

Table 2.

Comparison of evaluation metrics of the YOLOv9 and YOLOv9s models and their improved variants.

As shown in Figure 6 and Table 3, the improved YOLOv9s-UI model achieves higher detection precision and recall, which enhances its practicality for marine research and underwater surveillance. With a precision of 0.7568 and a maximum recall of 0.8191, the model can identify and classify marine life in complex underwater environments more accurately, which is crucial for monitoring and protecting biodiversity. Additionally, its robust performance across different confidence thresholds suggests that it can adapt to varied monitoring needs, which may benefit applications such as ship detection or subsea pipeline monitoring. With the integration of the D-Mixer module and the local channel and spatial attention mechanisms, YOLOv9s-UI outperforms similar models in precision, recall, and mean Average Precision (mAP), indicating its potential for complex marine tasks such as tracking moving targets or visual analysis under harsh weather conditions. These improvements enhance both the model's performance and its applicability and reliability in practical settings such as marine research and underwater surveillance.

Figure 6.

Comparison of training curves.

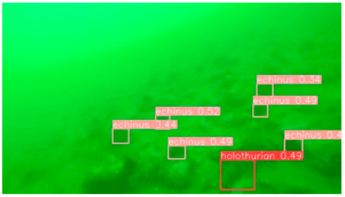

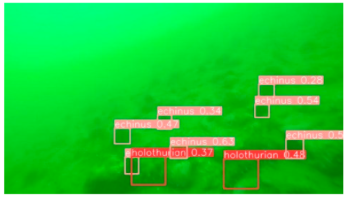

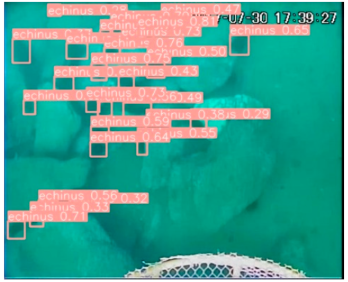

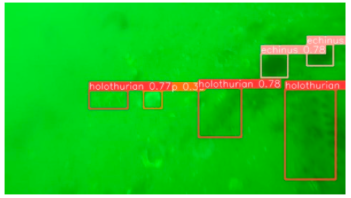

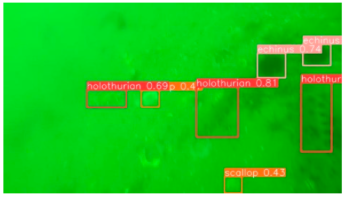

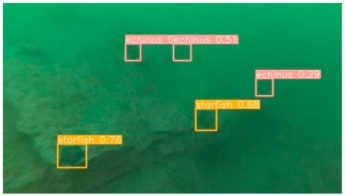

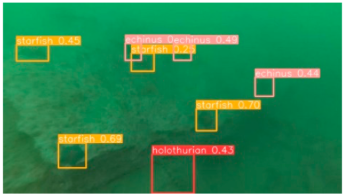

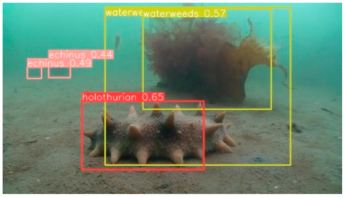

Table 3.

Example of detection results.

As shown in Table 4, the YOLOv9s-UI model performs better than the original YOLOv9s model across the various categories. For sea cucumbers and scallops, which the original network struggled to detect, YOLOv9s-UI detects these species effectively, indicating improved feature extraction in the improved network structure. For sea urchins, detection accuracy improves significantly, reflecting substantial progress in identifying sea urchin characteristics. The model also detects starfish more accurately, with improved precision in both recognition and localization. Furthermore, YOLOv9s-UI avoids generating redundant detections for aquatic plants, indicating better handling of overlapping or densely packed objects and consequently fewer false positives or repeated detections of the same object.

Table 4.

Comparison of model evaluation metrics.

Table 5 compares the complexity of the networks. Compared with YOLOv9s, the YOLOv9s-UI model has more layers, a slightly larger weight size, and a somewhat higher parameter count, but it achieves clearly higher accuracy. Moreover, after model reparameterization, the weights amount to only 9.3 M, making the model easier to migrate to other platforms. The model can meet the requirements of real-time detection, even for high-definition images.
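Reparameterization merges structures that are only needed during training into a single convolution for inference. The sketch below illustrates the RepConv-style case mentioned in Section 2.2 on 1D kernels: a parallel 1-tap branch is folded into the center tap of a 3-tap kernel, and an inference-mode BatchNorm is folded into the kernel and bias. It is a simplified illustration under these assumptions; the steps used to obtain the 9.3 M weights may also include removing the auxiliary reversible branch, so it should not be read as the authors' exact procedure.

```python
import math


def conv1d_same(x, kernel):
    """1D cross-correlation with zero padding; an odd-length kernel keeps the length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) for i in range(len(x))]


def merge_branches(k3, b3, k1, b1):
    """Fold a parallel 1-tap branch into the center tap of a 3-tap branch."""
    return [k3[0], k3[1] + k1[0], k3[2]], b3 + b1


def fold_bn(kernel, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-mode BatchNorm, gamma * (y - mean) / sqrt(var + eps) + beta, into a conv."""
    s = gamma / math.sqrt(var + eps)
    return [w * s for w in kernel], (bias - mean) * s + beta


x = [1.0, 2.0, 3.0, 4.0]
k3, b3, k1, b1 = [0.5, -1.0, 2.0], 0.25, [3.0], -0.5

# Training-time form: two parallel branches whose outputs are summed.
two_branch = [a + b3 + c + b1 for a, c in zip(conv1d_same(x, k3), conv1d_same(x, k1))]
# Inference-time form: one merged kernel and bias give the same output.
k, b = merge_branches(k3, b3, k1, b1)
merged = [v + b for v in conv1d_same(x, k)]
print(all(abs(p - q) < 1e-9 for p, q in zip(two_branch, merged)))  # True
```

The merged form stores one kernel and one bias instead of two of each, and it runs a single convolution per layer at inference time.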

Table 5.

Comparison of model complexity.

5. Conclusions and Future Works

In the field of underwater object detection, this study extends the YOLOv9s model by introducing the YOLOv9s-UI detection model. To strengthen feature extraction, the D-Mixer module from TransXNet has been integrated; it dynamically adjusts token distribution during feature extraction, enhancing the model's ability to identify targets in complex underwater settings. Furthermore, inspired by the feature fusion structure of the LocalMamba network, a network incorporating channel and spatial attention mechanisms has been designed. The channel attention module and the spatial attention module emphasize the importance of different feature channels and spatial locations within the feature maps, respectively, facilitating feature fusion. These improvements increase the accuracy of underwater object detection while keeping the model size modest at 9.3 M, ensuring effectiveness and ease of deployment. Experimental results show that the YOLOv9s-UI model performs well in underwater object detection tasks, meeting the requirements for high detection precision and convenient portability. Nevertheless, the model has limitations. Its computational demands restrict its use in resource-constrained environments, it has so far been validated only on the URPC2019 dataset, and the complexity of its advanced modules makes deployment in various applications challenging; further optimization is therefore needed to achieve broader usability.

Future research will focus on refining the YOLOv9s-UI model by exploring more effective feature extraction and fusion techniques, including advanced attention mechanisms and modular designs, to improve its adaptability, recognition accuracy, and performance in complex underwater environments. We also propose a new research direction for underwater object detection: jointly training on multiple tasks and using the losses of other tasks to improve detection performance. In addition, we plan to test the model on a wider range of datasets to assess its generalization ability and to optimize its performance in various real-world scenarios, so that it can meet the diverse needs of underwater detection in practical applications.

Author Contributions

W.P. handled the algorithm design and implementation, theoretical proof, and paper writing. J.C. and B.L. contributed to the algorithm design and implementation. L.P. supervised the project and ensured the correctness of the theoretical proof as well as the writing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no potential conflicts of interest.

References

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Fu, C.; Liu, R.; Fan, X.; Chen, P.; Fu, H.; Yuan, W.; Zhu, M.; Luo, Z. Rethinking general underwater object detection: Datasets, challenges, and solutions. Neurocomputing 2023, 517, 243–256. [Google Scholar] [CrossRef]

- Fayaz, S.; Parah, S.A.; Qureshi, G.J. Underwater object detection: Architectures and algorithms–a comprehensive review. Multimed. Tools Appl. 2022, 81, 20871–20916. [Google Scholar] [CrossRef]

- Awalludin, E.A.; Arsad, T.N.T.; Yussof, W.N.J.H.W.; Bachok, Z.; Hitam, M.S. A comparative study of various edge detection techniques for underwater images. J. Telecommun. Inf. Technol. 2022. [Google Scholar] [CrossRef]

- Song, S.; Zhu, J.; Li, X.; Huang, Q. Integrate MSRCR and mask R-CNN to recognize underwater creatures on small sample datasets. IEEE Access 2020, 8, 172848–172858. [Google Scholar] [CrossRef]

- Zeng, L.; Sun, B.; Zhu, D. Underwater target detection based on Faster R-CNN and adversarial occlusion network. Eng. Appl. Artif. Intell. 2021, 100, 104190.

- Saini, A.; Biswas, M. Object detection in underwater image by detecting edges using adaptive thresholding. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; IEEE: Piscataway, NJ, USA, 2019.

- Yuan, X.; Guo, L.; Luo, C.; Zhou, X.; Yu, C. A survey of target detection and recognition methods in underwater turbid areas. Appl. Sci. 2022, 12, 4898.

- Fatan, M.; Daliri, M.R.; Shahri, A.M. Underwater cable detection in the images using edge classification based on texture information. Measurement 2016, 91, 309–317.

- Lin, Y.-H.; Chen, S.-Y.; Tsou, C.-H. Development of an image processing module for autonomous underwater vehicles through integration of visual recognition with stereoscopic image reconstruction. J. Mar. Sci. Eng. 2019, 7, 107.

- Mandal, R.; Connolly, R.M.; Schlacher, T.A.; Stantic, B. Assessing fish abundance from underwater video using deep neural networks. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Sung, M.; Yu, S.-C.; Girdhar, Y. Vision based real-time fish detection using convolutional neural network. In Proceedings of the OCEANS 2017-Aberdeen, Aberdeen, UK, 19–22 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Hu, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yang, X.; Sun, C.; Chen, S.; Li, B.; Zhou, C. Real-time detection of uneaten feed pellets in underwater images for aquaculture using an improved YOLO-V4 network. Comput. Electron. Agric. 2021, 185, 106135.

- Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish detection and species recognition from low-quality underwater videos. IEEE Trans. Image Process. 2021, 30, 4719–4734.

- Er, M.J.; Chen, J.; Zhang, Y.; Gao, W. Research challenges, recent advances, and popular datasets in deep learning-based underwater marine object detection: A review. Sensors 2023, 23, 1990.

- Xu, S.; Zhang, M.; Song, W.; Mei, H.; He, Q.; Liotta, A. A systematic review and analysis of deep learning-based underwater object detection. Neurocomputing 2023, 527, 204–232.

- Yeh, C.H.; Lin, C.H.; Kang, L.W.; Huang, C.H.; Lin, M.H.; Chang, C.Y.; Wang, C.C. Lightweight deep neural network for joint learning of underwater object detection and color conversion. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6129–6143.

- Han, F.; Yao, J.; Zhu, H.; Wang, C. Underwater image processing and object detection based on deep CNN method. J. Sens. 2020, 2020, 6707328.

- Zhang, J.; Peng, X.; Zhang, G. Using Improved YOLOX for Underwater Object Recognition. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 19–21 August 2022; IEEE: Piscataway, NJ, USA, 2022.

- Hou, J.; Zhang, C. Shallow mud detection algorithm for submarine channels based on improved YOLOv5s. Heliyon 2024, 10, e31029.

- Zhang, C.; Jiao, P. YOLO series target detection algorithms for underwater environments. arXiv 2023, arXiv:2309.03539.

- Yang, Y.; Chen, L.; Zhang, J.; Long, L.; Wang, Z. UGC-YOLO: Underwater environment object detection based on YOLO with a global context block. J. Ocean. Univ. China 2023, 22, 665–674.

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight underwater object detection based on YOLO v4 and multi-scale attentional feature fusion. Remote Sens. 2021, 13, 4706.

- Jia, J.; Fu, M.; Liu, X.; Zheng, B. Underwater object detection based on improved EfficientDet. Remote Sens. 2022, 14, 4487.

- Yuan, S.; Luo, X.; Xu, R. Underwater Robot Target Detection Based On Improved YOLOv5 Network. In Proceedings of the 2024 12th International Conference on Intelligent Control and Information Processing (ICICIP), Nanjing, China, 8–10 March 2024; IEEE: Piscataway, NJ, USA, 2024.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Lou, M.; Zhou, H.Y.; Yang, S.; Yu, Y. TransXNet: Learning both global and local dynamics with a dual dynamic token mixer for visual recognition. arXiv 2023, arXiv:2310.19380.

- Xu, R.; Yang, S.; Wang, Y.; Du, B.; Chen, H. A survey on vision mamba: Models, applications and challenges. arXiv 2024, arXiv:2404.18861.

- Zhu, J.; Yu, S.; Han, Z.; Tang, Y.; Wu, C. Underwater object recognition using transformable template matching based on prior knowledge. Math. Probl. Eng. 2019, 2019, 2892975.

- Chen, R.; Zhan, S.; Chen, Y. Underwater target detection algorithm based on YOLO and Swin transformer for sonar images. In Proceedings of the OCEANS 2022, Hampton Roads, VA, USA, 17–20 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–7.

- Qiang, W.; He, Y.; Guo, Y.; Li, B.; He, L. Exploring underwater target detection algorithm based on improved SSD. Xibei Gongye Daxue Xuebao/J. Northwestern Polytech. Univ. 2020, 38, 747–754.

- Jiang, X.; Zhuang, X.; Chen, J.; Zhang, J.; Zhang, Y. YOLOv8-MU: An Improved YOLOv8 Underwater Detector Based on a Large Kernel Block and a Multi-Branch Reparameterization Module. Sensors 2024, 24, 2905.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37. ISBN 978-3-319-46448-0.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Zhang, X.; Wan, F.; Liu, C.; Ji, X.; Ye, Q. Learning to Match Anchors for Visual Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3096–3109.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

- Ahmed, I.; Ahmad, M.; Rodrigues, J.J.P.C.; Jeon, G. Edge Computing-Based Person Detection System for Top View Surveillance: Using CenterNet with Transfer Learning. Appl. Soft Comput. 2021, 107, 107489.

- Zhang, J.; Yan, X.; Zhou, K.; Zhao, B.; Zhang, Y.; Jiang, H.; Chen, H.; Zhang, J. Marine Organism Detection Based on Double Domains Augmentation and an Improved YOLOv7. IEEE Access 2023, 11, 68836–68852.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).