Abstract

Monitoring the learning process during task solving through different channels will facilitate a better understanding of the learning process. This understanding, in turn, will provide teachers with information that will help them to offer individualised education. In the present study, monitoring was carried out during the execution of a task applied in a self-regulated virtual environment. The data were also analysed using data fusion techniques. The objectives were as follows: (1) to examine whether there were significant differences between students in cognitive load (biomarkers: fixations, saccades, pupil diameter and galvanic skin response [GSR]), learning outcomes and perceived student satisfaction with respect to the type of degree (health sciences vs. engineering); and (2) to determine whether there were significant differences in cognitive load metrics, learning outcomes and perceived student satisfaction with respect to task presentation (visual and auditory vs. visual). We worked with a sample of 31 university students (21 health sciences and 10 biomedical engineering). No significant differences were found in the biomarkers (fixations, saccades, pupil diameter and GSR) or in the learning outcomes with respect to the type of degree. Differences were only detected in perceived anxiety regarding the use of virtual laboratories, which was higher in biomedical engineering students. With respect to the form of visualisation of the laboratory (visual and auditory vs. visual), significant differences were detected in the biomarkers related to the duration of use of the virtual laboratory and in some learning outcomes related to the execution and presentation of projects. Also, in general, the use of tasks presented in self-regulated virtual spaces increased learning outcomes and perceived student satisfaction. Further studies will delve into the detection of different forms of information processing depending on the form of presentation of learning tasks.

1. Introduction

Over the last ten years, the analysis of learning processes has changed substantially with respect to how information is recorded and analysed [1]. In terms of recording, using integrated multimodal technology during the learning process helps monitor and visualise that process and allows a variety of indicators to be recorded. Eye tracking technology provides several specific parameters (static: fixations, saccades and pupil diameter; dynamic: scan path or gaze point) [1]. In recent years, eye tracking has also been accompanied by other measurement indicators recorded during task execution, such as psychogalvanic skin response (GSR) and electroencephalographic (EEG) recordings [2]. The combined use of these resources is referred to as integrated multimodal technology [3]. Coupling this functionality with other subject-specific variables such as age, level of prior knowledge, type of degree and type of instruction will help researchers specify hypotheses related to the human learning process [4]. However, the volume of data this technology can produce is enormous, and processing it requires the application of various statistical and/or machine learning algorithms [3]. Along these lines, current studies address the difficulty of centralising data, which stems from data privacy, transmission costs and the analysis of large volumes of data. In transfer fault diagnosis with decentralised data, federated learning is reshaping diagnosis methods: an intermediate distribution can serve as a means to evaluate the discrepancy between domains indirectly, instead of centralising the raw data. A recent study [5] proposes a federated, semi-supervised transfer fault diagnosis method called transfer learning directed through the distribution barycentre medium (TTL-DBM). The results show that TTL-DBM could obtain similar features across domains by adapting through the distribution medium and achieve higher diagnostic accuracy than other federated adaptation methods in the presence of data decentralisation. This aspect is outside the scope of this study but is important to review for researchers focusing on the design of the software needed to tackle data fusion work.

Below, we examine each of these important milestones in the study of learning processes in 21st-century society in depth.

1.1. Simulation-Based Virtual Learning Environments

During and after the COVID-19 pandemic, teaching and learning underwent drastic change all over the world, especially in higher education, because of the shift from in-person teaching to teaching in hybrid or virtual settings [3]. More specifically, the Teaching Based on Training Simulation (TBTS) methodology [6,7,8,9] is being used in teaching environments in health science and engineering degrees. The instructional structure of TBTS is based on a carefully designed learning scenario in which conceptual and procedural content is presented in stages through characters (avatars) that provide a metacognitive, interactive, self-regulated dialogue [10]. Furthermore, this type of instruction improves learning outcomes for students: the reported effect sizes range from d = 0.45 to d = 0.72 [11]. (Here d is Cohen’s d, which indicates the size of the effect of one variable on another. Values of 0.20 or lower indicate no effect, values between 0.21 and 0.49 indicate a small effect, values between 0.50 and 0.70 indicate a medium effect, and values greater than 0.70 indicate a high effect.) The methodology was also shown to be particularly effective for learning in health science [12] and engineering degrees [13]. These environments also increase motivation during learning [14] and student academic engagement [15].
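To make the interpretation of d concrete, the following minimal Python sketch computes Cohen’s d with a pooled standard deviation and labels the result using the cut-off values stated above. The function names and the example scores are hypothetical illustrations and are not taken from the cited studies; the pooled-variance formula is the standard one for two independent groups and is assumed here.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def interpret_d(d):
    """Label an effect size with the cut-offs given in the text above."""
    size = abs(d)
    if size <= 0.20:
        return "no effect"
    if size < 0.50:
        return "small"
    if size <= 0.70:
        return "medium"
    return "high"


if __name__ == "__main__":
    # Hypothetical marks (0 to 10) for two groups; not data from any study.
    simulation_group = [7.5, 8.0, 6.5, 9.0, 7.0]
    comparison_group = [7.0, 7.5, 6.0, 8.5, 6.5]
    d = cohens_d(simulation_group, comparison_group)
    print(f"d = {d:.2f} ({interpret_d(d)})")
```

Under these cut-offs, the lower bound reported in [11] (d = 0.45) would be read as a small effect and the upper bound (d = 0.72) as a high effect.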

1.2. Using Cognitive Biomarkers in the Learning Process

Technological resources that help monitor the learning process are a major advancement in understanding the learning process [16]. In this context, eye tracking technology is proving to be an important resource [17]. In addition, in recent years, eye tracking recording has been complemented with GSR and EEG sensors [2]. Specifically, pupillometry analysis has become a relevant indicator [18,19]. For example, a lower pupil magnification is related to a lower cognitive load for the learner. Similarly, higher amplitude and saccade velocity, a higher number of fixations and a shorter average fixation duration are related to information seeking intention [20]. On the other hand, gaze position (x, y or x, y, z axes) in a given task is an indicator of success or failure in solving the task [18]. Table 1 summarises the most representative psychophysiological metrics that can be extracted with sensors, their significance and their relationship to the development of information processing during task or problem solving.

Table 1.

Indicators of multimodal recording in information processing.

Regarding the workload according to the type of task, for example, using simulation videos with self-regulated design seems to increase information in working memory. Related cognitive biomarker indicators are increased pupil diameter [21], increased electrodermal activity [22], changes in facial expressions [23] and variations in positive and negative valences recorded via EEG [24]. However, interpreting these cognitive biomarkers is complicated, as it depends on the type of learning and the phase of learning [22]. Problem solving depends on several variables, such as the learner’s metacognitive strategies, the degree of prior knowledge, how the problem is presented (structured vs. unstructured) and whether presentation includes self-regulated instruction [25]. Moreover, the use of this methodology in the study of learning processes is just beginning. Therefore, further studies are needed in order to obtain data that can be used to develop personalised learning [17].

1.3. How to Analyse Cognitive Biomarkers in the Learning Process

Multichannel logs in learning environments that include simulation resources allow for a granular analysis of the learning process. Along these lines, studying multichannel logs is expected to enhance the development of intelligent learning environments [3,17,26]. Specifically, the Multimodal Educational Data (MED) design provides a large volume of data which must be analysed using data fusion techniques [27]. These techniques include various types of algorithms: aggregation, ensemble, statistical, similarity, prediction, probability, etc. The most fundamental data fusion technique consists of combining data in the most basic sense of aggregation or concatenation [3]. Multi-fusion data analysis involves the collection of information from different sources, such as [28] classification, and will enable the creation of explanatory models of human behaviour in the learning process. Furthermore, all of these data can be analysed in combination using statistical techniques, supervised learning techniques of prediction and classification [24,29] and data fusion techniques [3]. The techniques that can be applied are diverse (statistics, machine learning, etc.). The general idea is that different data are collected for the analysis of a specific problem or situation through different procedures [30]. These can guide teachers in designing personalised learning spaces that are expected to improve students’ academic performance [31]. However, monitoring all records in natural environments is complex. The use of multimodal models in the study and analysis of the learning process has great potential compared to traditional analysis models [32,33]. The final objective will be to improve the learning proposals [34,35]. This study will follow the analysis of users’ information processing during task solving from the cognitive load theory which is the one applied in the baseline studies used in this study. 
This is a theory of instruction based on the assumption that information is processed in working memory for a limited time before being stored for further processing in long-term memory. Once stored, information can be transferred back to working memory to govern action relevant to the existing environment. Working memory has no known limits of capacity or duration when it comes to information transferred from long-term memory. The main consequence for instruction is the accumulation of information in long-term memory. Each person processes information according to his or her prior knowledge of the object of learning and the cognitive and metacognitive strategies he or she applies. Cognitive load theory is a theory of instruction based on the knowledge of developmental psychology leading to human cognitive architecture. This architecture specifies individual differences due to biological or environmental factors. Information stored in long-term memory is the main source of environmentally mediated individual differences [36].

Based on previous studies, the following research questions were posed in this paper:

RQ1: Will there be significant differences in the cognitive biomarkers (fixations, saccades, GSR) depending on what degree the students are pursuing (health sciences vs. biomedical engineering)?

RQ2: Will there be significant differences in the learning outcomes depending on what degree the students are pursuing (health sciences vs. biomedical engineering)?

RQ3: Will there be significant differences in the perceived satisfaction depending on what degree the students are pursuing (health sciences vs. biomedical engineering)?

RQ4: Will there be significant differences in the cognitive biomarkers (fixations, saccades, GSR) depending on whether the self-regulated virtual lab uses visual and auditory self-regulation vs. only visual self-regulation?

RQ5: Will there be significant differences in the learning outcomes depending on whether the self-regulated virtual lab uses visual and auditory self-regulation vs. only visual self-regulation?

RQ6: Will there be significant differences in the perceived satisfaction depending on whether the self-regulated virtual lab uses visual and auditory self-regulation vs. only visual self-regulation?

2. Materials and Methods

2.1. Participants

We worked with a sample of 31 final-year undergraduates studying for health science degrees, 21 specifically pursuing an Occupational Therapy degree (20 women and 1 man) and 10 pursuing a biomedical engineering degree (8 women and 2 men). In all cases, the age range was between 21 and 22 years. Convenience sampling was applied based on students’ availability to participate in this study. The students did not receive any compensation for their participation other than their own learning in the subject. All students were informed of the objectives of this study and signed the consent form. The higher percentage of women than men is a reality in health-related degrees, as reflected in the CRUE report [37].

2.2. Instruments

- (a) Tobii pro lab version 1.194 at 64 Hz. Table 2 presents the measures used in this study, what they mean and their relationship to cognitive and metacognitive processes.

Table 2. Indicators in integrated multimodal eye tracking and their significance in information processing.

- (b) Shimmer3 GSR+ unit for single-channel galvanic skin response (GSR) data acquisition (Electrodermal Resistance Measurement, EDR; electrodermal activity, EDA). The GSR+ unit is suitable for measuring the electrical characteristics or conductance of the skin. This device is compatible with Tobii pro lab version 1.194 and allows for the integration of records.

- (c)

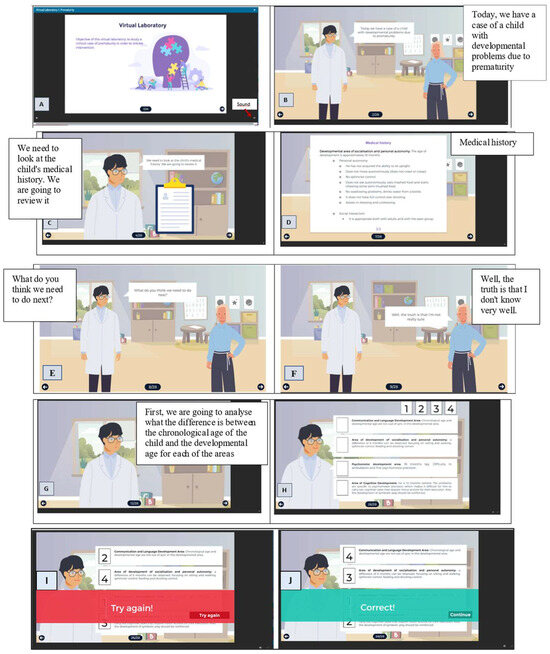

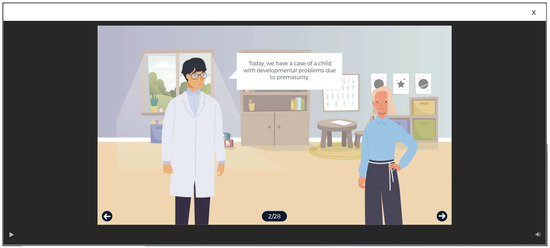

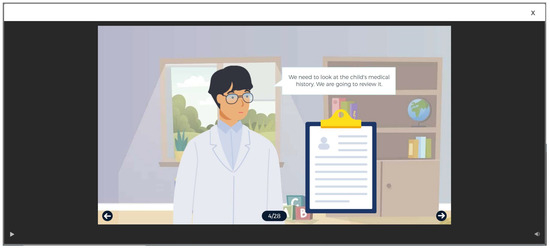

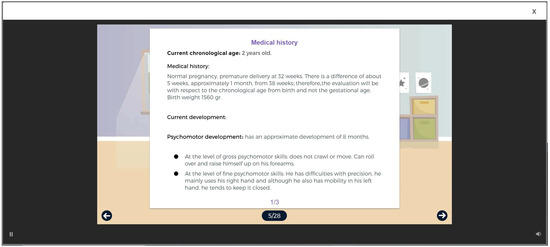

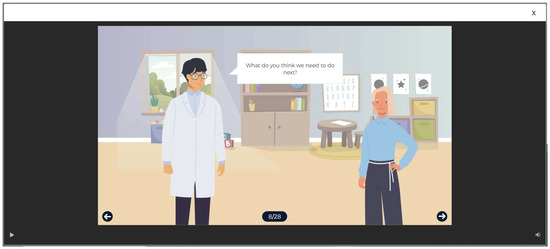

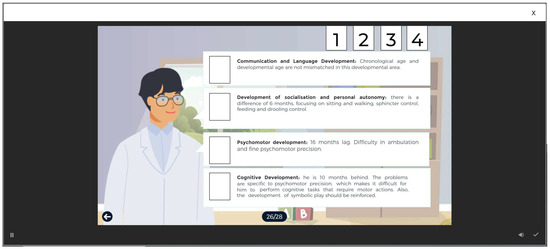

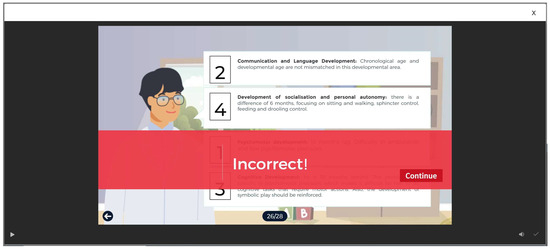

- Virtual lab for the resolution of clinical cases. This lab was developed ad hoc within the European project “Specialized and updated training on supporting advance technologies for early childhood education and care professionals and graduates” No. 2021-1-ES01-KA220-SCH-000032661. In this study, the virtual laboratory consists of the visualisation of a dialogue between the two avatars, a student and a teacher. The teacher asks questions that guide the student’s reflection in the resolution of a task of elaborating a therapeutic intervention programme in a case of prematurity. The student can follow the interaction at the pace he/she needs, as he/she is the one who moves on to the next image. Therefore, it is a laboratory and not a video. The lab was controlled by the voice of an avatar. However, this functionality could be removed by the student by clicking on the sound icon (see image A in Figure 1). The lab included two avatars simulating the role of a teacher–therapist and a student (see image B in Figure 1). The student watching the lab could choose self-regulation via two routes: auditory via the voice of the avatars and visual (through reading the dialogues) or visual only (through reading the dialogues). The teacher–therapist avatar first presents the case and gives information about the clinical history (see scene C and scene D in Figure 1) before regulating the process of solving the clinical case (see scene E in Figure 1). The student avatar also asks the teacher questions (see scene F in Figure 1). The teacher’s answers follow a metacognitive dialogue that uses guiding, planning and evaluation questions (see scene G in Figure 1). Finally, the teacher avatar gives the student watching the video a comprehension task about resolving the clinical history they have been watching. The student has to arrange the answer options (see scene H in Figure 1). 
The answers given by the learner may be wrong, in which case a message appears telling the learner to try again (see scene I in Figure 1). The student then tries again, and if the solution is correct, they obtain feedback on the correct answer (see scene J in Figure 1). In Appendix A, each of the figures is shown separately to enlarge the display (A = Figure A1; B = Figure A2; C = Figure A3; D = Figure A4; E = Figure A5; F = Figure A6; G = Figure A7; H = Figure A8; I = Figure A9; J = Figure A10). Also, this laboratory is freely accessible after logging into the project’s Virtual Classroom https://www2.ubu.es/eearlycare_t/en/project (accessed on 7 August 2024).

Figure 1. Description of self-regulated virtual lab (red dotted line below “sound” is necessary for the reader to see where the sound was located).

- (d) Learning outcomes. A project-based learning methodology was used with both groups of students. The students were voluntarily split into small groups of 3 to 5 students, and throughout the semester, they had to solve a practical case (project) related to the therapeutic intervention in a clinical case. During the final week of the semester, the students had to present their proposed solution to the class group. Students were also required to take a test to check the conceptual content of the subject, which they had to complete individually. The test consisted of 30 multiple-choice questions with four answer options, only one of which was correct. The marks for the three tests were given on a scale of 0 to 10, with 0 being the minimum and 10 the maximum. Both groups were taught by the same teacher to avoid the effects of the teacher type variable.

- (e) Questionnaire for students’ perceived satisfaction during the learning process. This questionnaire was developed ad hoc and contained three closed questions measured on a Likert-type scale from 1 to 5: Question 1 = “Rate how satisfied you are with how the subject was delivered on a scale of 1 to 5 where 1 is not at all satisfied and 5 is totally satisfied”; Question 2 = “Rate how satisfied you are with the virtual lab on a scale of 1 to 5 where 1 is not at all satisfied and 5 is totally satisfied”; and Question 3 = “Rate whether you felt anxious using the virtual lab on a scale of 1 to 5 where 1 is never and 5 is always”. Reliability indicators are not reported for this instrument because, with only three questions, any reliability indicator would not be meaningful (see Table A1).

2.3. Procedure

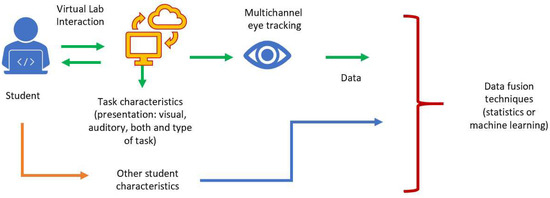

Before this study was carried out, approval was obtained from the Bioethics Commission of the University of Burgos (Spain), No. IO 03/2022. Subsequently, at the beginning of the semester, the students in the two groups (Group 1: health science students pursuing an Occupational Therapy degree; Group 2: biomedical engineering students) were asked to take part in this study. Participation was voluntary and carried no compensation beyond learning through the virtual lab. All participants signed a written informed consent form. The two groups worked in similar ways. The content of the task applied in the virtual lab was novel for both groups. At the end of the fourth week of the semester, the students engaged with the virtual lab in a room with controlled light and temperature conditions. Each student worked with the virtual lab individually under the supervision of an expert in the use of eye tracking technology. She gave students instructions about their posture at the table and in the chair and placed the GSR electrodes. Students’ positions varied according to their height and weight, with the distance from the chair to the table ranging from 45 to 50 centimetres. The time needed to complete the virtual lab varied between students, as they could move through the slides according to their understanding of the content. In addition, students could choose whether to follow the virtual lab with the regulating audio from the avatars or only by looking at the images, which contained the self-regulating text. At the end of the semester, the learning outcomes were recorded in 3 tests (an individual knowledge test, a group test on the execution of a project resolving a practical case and a group test on presenting the project). Information was also collected on perceived satisfaction with using the virtual lab. Figure 2 shows the process of collecting and analysing information about the learner.

Figure 2.

Collect and analyse learner information.

In this study, we analysed the data in two registers: indicators of internal psychological response, collected through eye tracking and integrated GSR, and subjective indicators, namely students’ perceived satisfaction with using the virtual lab. Finally, the learning outcomes were collected. The measurement parameters are given in Table 3.

Table 3.

Multimodal Educational Data categories.
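As an illustration of the most basic form of data fusion (concatenating records from different channels), the following minimal Python sketch merges per-student records from the registers described above into a single row per student. The function name `fuse_by_student`, the field names and all values are hypothetical; they are not data or variable names from this study.

```python
def fuse_by_student(*sources):
    """Concatenate feature dictionaries from several channels, keyed by student id.

    Each source maps a student id to a dictionary of features. A student who is
    missing from one channel simply lacks that channel's features in the result.
    """
    fused = {}
    for source in sources:
        for student_id, features in source.items():
            fused.setdefault(student_id, {}).update(features)
    return fused


if __name__ == "__main__":
    # Hypothetical records for two students; values are invented for illustration.
    biomarkers = {
        "s01": {"fixation_count": 120, "gsr_mean": 0.8},
        "s02": {"fixation_count": 95, "gsr_mean": 1.1},
    }
    satisfaction = {
        "s01": {"satisfaction_lab": 5, "anxiety_lab": 2},
        "s02": {"satisfaction_lab": 4, "anxiety_lab": 1},
    }
    outcomes = {
        "s01": {"knowledge_test": 8.5},
        "s02": {"knowledge_test": 9.0},
    }
    for student_id, row in fuse_by_student(biomarkers, satisfaction, outcomes).items():
        print(student_id, row)
```

Keying every channel by the same student identifier is the design choice that makes simple concatenation possible; more elaborate fusion techniques would operate on the resulting combined rows.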

2.4. Design

Given the sampling characteristics and the sample size, non-parametric statistics and a quasi-experimental design without a control group [38] were applied, in which the independent variables were the ‘type of degree’ (RQ1, RQ2, RQ3) and ‘type of virtual lab display’ (RQ4, RQ5, RQ6). Likewise, the dependent variables were the cognitive biomarkers (fixations, saccades and GSR) (RQ1, RQ4), ‘learning outcomes’ (RQ2, RQ5) and ‘perceived satisfaction’ (RQ3, RQ6).

2.5. Data Analysis

Given the characteristics of the sample (convenience sampling and a sample size of n = 31), non-parametric statistics were applied to test the research questions. Specifically, the Mann–Whitney U test for two independent samples and the effect size r [39] were used. The analysis was performed with the SPSS v.28 statistical package [40]. We also used the machine learning techniques of Linear Projection and Principal Component Analysis to study each student’s position, for which we used Orange Data Mining v.3.35.0 [41]. Likewise, the student’s pathway and heat map were analysed with Tobii pro lab version 1.194.
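The effect size r that accompanies the Mann–Whitney U test is commonly obtained as r = Z/√N, where Z is the standardised U statistic and N is the total number of observations. The sketch below assumes this definition, together with a normal approximation that corrects for ties and applies no continuity correction. It is a plain-Python illustration rather than a reproduction of the SPSS procedure: the function name and the sample scores are hypothetical, and statistical packages may differ in which U value they report and in the sign of Z.

```python
from collections import Counter
from math import sqrt


def mann_whitney_r(x, y):
    """Return (U for sample x, Z, r = Z / sqrt(N)) for two independent samples.

    Assumes the samples are not all one identical value, since the variance of U
    would then be zero.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = sorted(list(x) + list(y))

    # Assign each distinct value the mean of the ranks it occupies (tie handling).
    ranks = {}
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    rank_sum_x = sum(ranks[value] for value in x)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2

    # Normal approximation with tie correction.
    mean_u = n1 * n2 / 2
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u_x - mean_u) / sqrt(var_u)
    return u_x, z, z / sqrt(n)


if __name__ == "__main__":
    # Hypothetical scores for two small groups; not data from this study.
    group_1 = [9.5, 10.0, 9.0, 10.0]
    group_2 = [9.0, 8.5, 9.5, 8.0]
    u, z, r = mann_whitney_r(group_1, group_2)
    print(f"U = {u:.1f}, Z = {z:.3f}, r = {r:.3f}")
```

With samples as small as those in this study, an exact test is often preferred over the normal approximation for the p-value; the approximation is used here only because Z is needed to compute r.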

3. Results

3.1. Influence of Degree Type on Cognitive Biomarker Recording, Learning Outcomes and Perceived Task Satisfaction

Looking at RQ3, we only found significant differences in the students’ perceived anxiety (Anxiety lab use) during the use of the virtual lab. The mean was higher in Group 2 (biomedical engineering students) (M = 2.18 out of 5) than in Group 1 (Occupational Therapy students) (M = 2.02 out of 5). However, in both cases, the perceived anxiety about using the virtual lab was low, with values below 2.19 out of 5 and a medium effect, r = −0.46. Likewise, no significant differences by the type of degree were found in the eye tracking and GSR parameters (RQ1). Similarly, no significant differences in learning outcomes were found either (RQ2) (see Table 4).

Table 4.

Mann–Whitney U test for differences in degree type.

3.2. Influence of Task Presentation on Cognitive Biomarker Recording, Learning Outcomes and Perceived Task Satisfaction

Looking at RQ4, we found significant differences between Group 1 (visual and auditory lab use) and Group 2 (visual only) in the time spent viewing the virtual lab: in “Average duration” [M = 157.98 ms vs. M = 50 ms, respectively], with a medium effect size (r = −0.40), and in “Total Time Interest Duration” [M = 307.43 ms vs. M = 268.21 ms, respectively], with a medium effect size (r = −0.40). With regard to RQ5, we found significant differences in project presentation scores [M = 9.62 out of 10 vs. M = 9.20 out of 10, respectively], with a medium effect size (r = −0.40). Finally, with regard to RQ6, significant differences were found in perceived satisfaction with learning [M = 5.00 out of 5 vs. M = 4.21 out of 5, respectively], with a medium effect size (r = −0.40) (see Table 5).

Table 5.

Mann–Whitney U-test for type of virtual lab display.

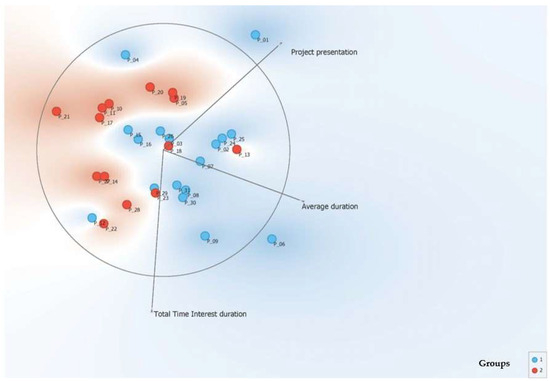

Next, a Linear Projection analysis was carried out for the positioning of each learner according to the variables in which significant differences were found between the group that had visual and auditory self-regulated virtual lab viewing (Group 1) vs. visual self-regulated virtual lab viewing (Group 2); see Figure 3 (the blue colour is Group 1, and the red colour is Group 2).

Figure 3.

Linear Projection.

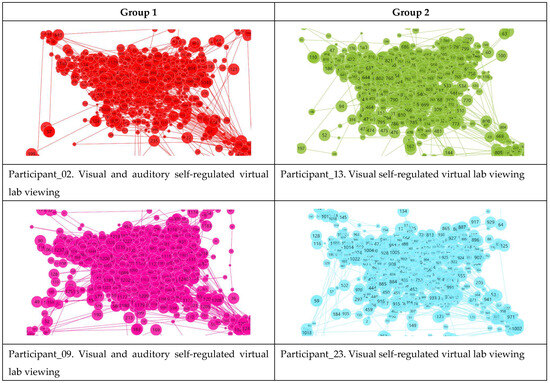

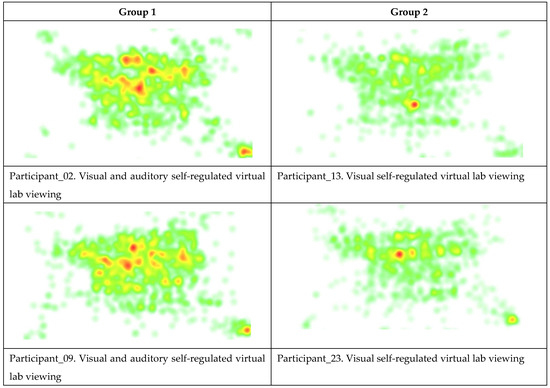

Also, as an example, Figure 4 shows a gaze point of a trainee from Group 1 (visual and auditory self-regulated virtual lab viewing) and Group 2 (visual self-regulated virtual lab viewing). As can be seen in Figure 4, there is a greater concentration of visualisation in the centre of the image (where the dialogues between the avatars take place) in Group 1. In Group 2, there is a greater dispersion of the gaze towards the edges. Figure 5 shows the data in heat map mode.

Figure 4.

Example of gaze point for members of Group 1 vs. Group 2.

Figure 5.

Example of heat map for members of Group 1 vs. Group 2.

In Group 1 (health science students), 10 students chose to visualise the virtual laboratory with voice (50%) and 10 without voice (50%). In Group 2 (biomedical engineering students), six students visualised the virtual laboratory with voice (60%) and four without voice (40%).

4. Discussion

The interpretation of cognitive biomarkers is complicated by learner characteristics [25] and task characteristics [22]. However, the results of this study cannot be generalised given the characteristics of the sample. From a descriptive point of view, it can be noted that the use of simulation-based virtual laboratories seems to facilitate understanding in health science and engineering students [6,7,8,9,12,13]. Likewise, the perceived satisfaction of students from these degrees did not differ [13] except for perceived anxiety.

In sum, no significant differences by type of degree were found in student learning outcomes after the use of the self-regulated virtual laboratory. This is consistent with what has already been found in other studies regarding the effectiveness of this type of laboratory, based on self-regulated simulation, with respect to learning outcomes in students of health science or engineering degrees [6,7,8,9,12,13]. Regarding perceived satisfaction, students of both degrees were satisfied with the use of the laboratories. These results support the findings of other studies on the usefulness of self-regulated virtual laboratories for student motivation and engagement [14,15]. However, the engineering students perceived a higher degree of anxiety, and future studies will explore this further.

Regarding the workload experienced by the students depending on the modality of the visualisation of the laboratory (visualisation with audio vs. visualisation without audio), significant differences were found with respect to the biomarkers of Average duration (the average duration of the visualisation, which can be related to the form of information processing) and Total Time Interest duration (the interest maintained by the learner in the stimuli). Students who chose to view the lab with audio spent more time viewing the stimuli. These results are related to information processing, possibly in working memory, depending on the way the task is presented [21]. Furthermore, it should be considered that in this study, the form of information display was voluntary. Therefore, each student chose the form that was the most appropriate for him or her. Significant differences were also found in the learning outcomes of the students. Students who chose the audio visualisation of the lab had better learning results in the project execution and project presentation tests. They also reported higher satisfaction with the learning process. These data point to a possible relationship between the type of information processing of the learner, learning outcomes and perceived satisfaction. Such a connection is probably related to the learner’s degree of commitment to learning, and further studies will address this hypothesis. In sum, all results found in this study should be interpreted with caution, and their generalisability is limited because of the type of sampling (convenience sampling) and the number of participants. However, as a strength of this work, we can highlight the proposed analysis of learning processes in self-regulated virtual environments monitored through integrated multichannel eye tracking technology.

5. Conclusions

The use of data from different areas (cognitive biomarkers, perceived satisfaction, learning outcomes, etc.) is both a challenge and an opportunity. The challenge lies in collecting a lot of information in real time, and the opportunity lies in the personalised learning proposal. Along these lines, the more information we have about a learning process, the more data we will collect, which will require the use of more complex data analysis techniques. However, the collection of all this information implies highly individualised processes which, although they provide a great deal of information on each learner, limit the generalisation of the results. Nevertheless, this way of working could facilitate the advancement of educational instruction towards precision, in a similar way to what precision medicine does in order to propose the best treatment for different afflictions. Future research will delve deeper into the variables of task presentation and the study of different learning patterns (information processing type) as well as the redesign of tasks according to these patterns.

In summary, it is important to stress how difficult it is for this type of study to use large samples, as working with integrated multichannel eye tracking technology requires individual work with each student and highly sophisticated equipment. In addition, data extraction and data processing are arduous. This means that the generalisability of the results is limited. However, the advantages centre on a better understanding of human information processing, in this case applied to learning, and on the possibility of offering teachers and cognitive and computational researchers procedures to continue their complex research into the workings of human cognitive processing.

Author Contributions

Conceptualisation, all authors; methodology, M.C.S.-M., R.M.-S. and L.J.M.-A.; software, M.C.S.-M. and R.M.-S.; validation, M.C.S.-M., R.M.-S. and L.J.M.-A.; formal analysis, M.C.S.-M.; investigation, all authors; resources, M.C.S.-M.; data curation, M.C.S.-M.; writing—original draft preparation, M.C.S.-M., R.M.-S. and L.J.M.-A.; writing—review and editing, all authors; visualisation, M.C.S.-M.; supervision, all authors; project administration, M.C.S.-M.; funding acquisition, M.C.S.-M., R.M.-S. and M.C.E.-L. All authors have read and agreed to the published version of the manuscript.

Funding

Project “Voice assistants and artificial intelligence in Moodle: a path towards a smart university” (SmartLearnUni), Call 2020 R&D&I Projects – RTI Type B, MINISTRY OF SCIENCE AND INNOVATION AND UNIVERSITIES, STATE RESEARCH AGENCY, Government of Spain, grant number PID2020-117111RB-I00, specifically in the part concerning the application of multichannel eye tracking technology with university students; and Project “Specialized and updated training on supporting advance technologies for early childhood education and care professionals and graduates” (eEarlyCare-T), grant number 2021-1-ES01-KA220-SCH-000032661, funded by the EUROPEAN COMMISSION. In particular, the funding has enabled the development of the e-learning classroom and educational materials.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Ethics Committee of University of Burgos (protocol code IO 03/2022 and date of approval 7 April 2022).

Informed Consent Statement

Written informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data are available upon request, subject to a justification of the research purpose for which they will be used and of their use in compliance with international ethical processes and protocols.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1. A questionnaire for students’ perceived satisfaction during the learning process.

| Questions | Rating Scale | | | | |
|---|---|---|---|---|---|
| 1. Rate how satisfied you are with the subject. | 1 | 2 | 3 | 4 | 5 |
| 2. Rate how satisfied you are with the virtual lab on a scale. | 1 | 2 | 3 | 4 | 5 |
| 3. Rate whether you felt anxious using the virtual lab. | 1 | 2 | 3 | 4 | 5 |

Figure A1. Scene A. A presentation of the virtual laboratory.

Figure A2. Scene B. A presentation of the virtual laboratory through avatars.

Figure A3. Scene C. The avatar with the role of therapist–teacher refers to the patient’s medical history.

Figure A4. Scene D. A presentation of the medical history.

Figure A5. Scene E. The avatar with the role of therapist–teacher regulates the next step to be performed by the student role avatar.

Figure A6. Scene F. The student role avatar asks for guidance from the therapist–teacher.

Figure A7. Scene G. The avatar with the role of therapist–teacher informs about the next step.

Figure A8. Scene H. The avatar with the role of the therapist–teacher introduces a self-assessment test of knowledge.

Figure A9. Scene I. An example of feedback on an incorrect answer.

Figure A10. Scene J. An example of feedback on a correct answer.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).