Abstract

Traditional craftsmanship and culture are being transformed by modern science and technology, and the cultural industry is gradually entering the digital era, in which digital technology can support the sustainable development of intangible cultural heritage. To generate innovative wickerwork pattern design schemes that meet users’ preferences, this study proposes a design method for wickerwork patterns based on a style transfer algorithm. First, an image recognition experiment using a residual network (ResNet), a type of convolutional neural network, is applied to Funan wickerwork patterns to establish an image recognition model. The experimental results show that ResNet50 achieves an optimal recognition rate of 93.37% on the entire dataset of pattern design images: 89.47% for modern patterns, 97.14% for traditional patterns, 95.95% for wickerwork patterns, and 90.91% for personality. Second, design scheme generation models for Funan wickerwork patterns are built on Cycle-Consistent Adversarial Networks (CycleGAN), which can automatically generate innovative pattern design schemes with specified style characteristics. Finally, the designer uses the generated images as a source of inspiration and adjusts their details to design wickerwork patterns with various stylistic features. The proposed method explores the application of AI technology in wickerwork pattern development and provides richer and more comprehensive material for creating wickerwork patterns, thus contributing to the sustainable development and innovation of traditional Funan wickerwork culture. More broadly, this digital technology can support the inheritance and development of other intangible cultural heritage.

1. Introduction

Culture is a prominent benchmark for countries and nations [1], so the protection of cultural heritage is a topic of common concern all over the world. Its essence is to protect the material and spiritual wealth of human society through various means, so as to pass on human history, art, and science. While facing the world and accepting multi-ethnic cultures, maintaining Chinese national characteristics and style in traditional intangible culture is a key problem. As an intangible cultural heritage, arts and crafts should be inherited in an active and innovative way. On the one hand, the existing representative intangible art images should be rescued; on the other, the reform of intangible art images should be actively guided [1], maintaining national tradition and cultural characteristics as far as possible while integrating modern elements to adapt them to social needs and people’s aesthetic preferences.

Against the background of the national plan to revitalize traditional craftsmanship, it is imperative to explore innovative ways of protecting the intangible heritage of traditional craftsmanship, so as to meet the needs of modern society and daily life. With its rapid development, digital technology has become one of the main ways to protect and spread intangible cultural heritage. Digital technology can empower the inheritance of arts and crafts: intelligent technology enriches the methods of artistic creation and gives more individuals the opportunity to participate in it. Digital artists use intelligent technologies to produce contemporary derivatives of traditional craft that are both widely accessible and personalized.

The continuous development of AI provides more choices for the inheritance and development of intangible cultural heritage, because inheritance methods can be translated into data asset libraries, algorithms, and interactions. AI also translates the elements of traditional arts and crafts into machine-identifiable data, which meets the cyclical and dynamic needs of sustainable design. The dynamic collection and analysis of meaningful user information and of the pattern information of traditional techniques could provide a promising route to the sustainable development of traditional handicraft.

Most scholars acquire user information during the development and design of artworks, products, and packaging, and focus on emotional factors to meet the needs of consumers [2,3]. Kansei Engineering (KE) is generally regarded as the most reliable and useful method for addressing users’ needs [4], and has been applied by many scholars to industrial products such as automobiles [5], clothing, furniture [6], mobile phones [7], computers [8], and electric bicycles [9]. One study [10] used the KE method to examine the relationship between the physical attributes of products and emotions, and Gentner et al. [11] studied user experience and interaction through the physical and digital classification of automotive products. With the rapid development of e-commerce in recent years, many studies have combined KE with computer science to identify customer needs, for example by extracting online customer reviews [12,13]. Current research on product Kansei design therefore concentrates on constructing theories and methods that use intelligent tools to model the emotional reactions of individuals to products. The design process generally has three steps [14]: (1) obtain the user’s emotional image information and quantify it; (2) explore the complex relationship between the user’s emotional image and the product [15], and establish a mapping model, such as an artificial neural network [16], quality function deployment [17], support vector machine [18], or genetic algorithm [19] model, to fit the nonlinear relationship between consumer emotional response and product design variables; (3) convert the model into an objective function [9], and use an intelligent algorithm to optimize it so as to help designers quickly generate design solutions and select product design schemes that meet the user’s emotional needs. However, these strategies still have some limitations. On the one hand, the correlation model between morphological parameters and user emotions is usually constructed through mathematical models, and these prediction models are established manually. In addition, because the user’s perceptual image is subjective [20], there is a certain degree of error. On the other hand, the quality of a product design scheme can depend entirely on the model accuracy [21], which greatly weakens the diversity of product design schemes.

With the continuous development of deep learning within AI, its application has achieved extraordinary results in image detection, image recognition, image re-coloring, image art style transfer, and so on. For example, Deep Convolutional Neural Networks (DCNN) [22] have been widely used for creative tasks, including image generation, image composition, and text restoration. Lixiong et al. [23] proposed a framework for product concept generation based on deep learning and Kansei engineering (PCGA-DLKE). Ding et al. [24] described a product color emotion design method based on DCNN and search neural network. Image art style transfer technologies centered on deep learning are also widely used in art and design innovation. Among them, CycleGAN is an emerging network. Zhu et al. [25] showed that CycleGAN can convert images between two style domains, i.e., convert images from one style to another while retaining their key attributes, which plays a positive role in promoting the emergence and development of design creativity. Yu et al. [26] combined the content of one animated garment with the style of another to transform the artistic style of animated clothing.

Through the extraction of image features and the transfer of image art style, it is possible to combine forms of craft expression with modern aesthetics for innovation. However, intangible cultural heritage wickerwork is a traditional skill created by craftsmen, and the intervention of digital technology can ignore the unique history and culture of wickerwork and the life and experience of the craftsmen, which leads to the homogeneity and fragmentation of wickerwork. The real disadvantage of AI is that it can only passively rely on data and rules when creating or solving problems [27], and it is unable to solve design problems independently. Therefore, designers need to participate in the design process and creatively express the craftsmanship experience, the craftsman spirit, and the emotional warmth contained in the tacit knowledge of handicrafts, so as to interpret the connotation and characteristic elements of intangible cultural heritage. Hence, this research proposes a mechanism for establishing a complementary cooperative relationship between designers and AI, which uses the powerful learning and computing capabilities of AI to assist designers in creative design activities and thereby promotes the living inheritance and sustainable development of wickerwork.

Therefore, this study carries out image recognition and creative design research on wickerwork patterns based on CycleGAN. First, because ResNet (Residual Network), one of the DCNNs, has strong performance and high accuracy, the ResNet50 network is used to establish the emotional image recognition model of product patterns. Second, the wickerwork pattern design model is built on a large dataset and CycleGAN to generate images with transferred artistic style. Finally, designers participate in the design process to complete the innovative design and development of the pattern. In summary, the proposed method can generate innovative weaving design schemes that conform to users’ emotional images, and provides a new way of optimizing the design of intangible cultural heritage wickerwork patterns and textures.

2. Review

2.1. Digital Sustainability of the Wickerwork Craftsmanship

With the booming development of information technology, developed countries have built their own advantages in digital cultural industries. In 2020, the Centre for American Security released the Designing America Digital Development Strategy, which proposes that the US government upgrade its digital development strategy to create a free, open, and harmonious environment for the emerging digital ecosystem. France is a major European country with a strong focus on state support, and it guarantees the development of its cultural industries. In addition, the Italian government has made the development of cultural industries a fundamental state policy. Italy’s artifacts, monuments, and museums occupy an important position in the world, and the country emphasizes the role of digital media and tools as a vehicle for traditional cultural heritage.

As the new productive force of the information age, digitization has become a basic condition for the inheritance and development of traditional culture, and it can support innovative design and promote design creativity. Ester Alba Pagán et al. [28] presented the SILKNOW project and its application in cultural heritage, creative industries, and design innovation, demonstrating the use of image recognition tools in cultural heritage. Wu et al. [29] introduced new digital technologies such as virtual reality (VR) and 3D digital programming into the conservation and transmission of bamboo crafts. Evropi Stefanidi et al. [30] presented a novel approach to craft modeling and visualization and provided a platform that supports the creation and visualization of craft processes within virtual environments. In the UK, Aljaberi [31] surveyed visitors and bearers of traditional craft by questionnaire and found that visitors were nearly 30% more satisfied with integrated digital and physical carrier forms than with non-integrated forms.

Weaving is one of the oldest handicrafts in human history [32]. The time-honored Funan wickerwork techniques are the artistic accumulation of production and life by the people of Funan. It contains rich regional characteristics of folk culture. In 2010, Funan wickerwork products were approved by China’s Ministry of Culture and Tourism as “National Intangible Cultural Heritage”. At present, Funan wickerwork can be found mainly in Huanggang Town, where more than 70 wickerwork enterprises exist, producing nearly 10,000 categories of products, and having a reputation both at home and abroad.

The rational use of digital technology is conducive to the innovative and sustainable development of traditional wickerwork crafts, for example through 3D simulation systems of wickerwork craft and parameterized methods for the digital design of wickerwork textures. Digital technology can not only revive an endangered art form and preserve the “originality” of its spiritual culture, but also inject the vitality of the new era into a rigid art form and renew the forms of expression of traditional intangible cultural heritage, shaping its diverse characteristics. Some scholars have studied and discussed the application of 3D scanning, 3D modeling, motion capture, and other technologies in the reconstruction and digital protection of virtual intangible cultural heritage activities [33]. Chang [34] constructed 3D models with knitting characteristics by means of parametric design methods. Yu et al. [35] developed a 3D simulation system that enables designers to design various styles of wickerwork crafts quickly and conveniently, which should promote the innovative design of wickerwork products. However, the massive mathematical calculations in the system may be unfriendly to designers, and the system cannot be as free and flexible as manual drawing.

Therefore, this study uses AI-based style transfer to integrate “new” and “old” aesthetic styles naturally with traditional wickerwork techniques. This could bridge the cultural gap between traditional and modern art, so that traditional wickerwork culture gains vitality in the new cultural context and yields more beautiful, harmonious, and innovative design schemes for wickerwork products. Moreover, this study proposes a new implementation path for the innovative development of wickerwork pattern design, so as to achieve the sustainable development goals for this traditional craft.

2.2. Deep Learning

Deep learning discovers distributed feature representations of data by combining low-level features to form more abstract high-level representations (attribute classes or features) [36]. A deep learning model is essentially a multilayer perceptron (MLP) with multiple hidden layers, that is, a deeper artificial neural network that aims to give computers human-like intelligence by simulating the biological nervous system. Deep learning models [37] show a powerful ability to learn sample features: by learning a deep nonlinear network structure, they approximate complex functions and characterize distributed representations of the input data.

In the deep learning field, convolutional neural networks (CNN) are the most commonly used deep neural networks for computer vision tasks. By sharing convolutional kernels, CNNs can process high-dimensional data and, once the training parameters are set, extract image features such as texture, shape, boundary, grayscale, and topological features and relational structures through layered convolution. Krizhevsky et al. [38] proposed AlexNet, a convolutional neural network based on LeNet-5. Simonyan et al. [39] proposed VGGNet, a deep convolutional neural network with 16 to 19 layers, and found that increasing the depth could improve the accuracy of object recognition. The Google team then proposed an improved convolutional neural network model, GoogLeNet, in 2014 [40], whose core idea is to optimize the performance of convolutional neural networks by increasing the depth and width of the network model [41]. He et al. [42] presented a residual learning framework with a depth of 152 layers to improve the accuracy of image recognition. In the field of product design, Ding et al. [24] described a product color sentiment design method based on DCNN and search neural network. Wu and Zhang [43] used deep learning for the innovative design of umbrella products.

The continuous integration of convolutional neural networks with traditional algorithms and the introduction of transfer learning have rapidly expanded the application area of convolutional neural networks, which have achieved substantial accuracy improvements on small-sample image recognition databases through transfer learning [44]. Experts and scholars have studied image art style transfer from different angles. Sun et al. [45] proposed a two-path CNN architecture that extracts the object features and the texture of images separately to achieve image style recognition and image classification. With the continuous extension and development of image processing methods and techniques, creative style transfer approaches have been developed. Dong et al. [46] introduced artificial intelligence (AI) technology into animation design, taking the film “Van Gogh Eye” as an example, and used a style transfer algorithm to migrate the painting elements of Van Gogh’s starry sky to the film, showing the unique role that style transfer plays in art creation. In summary, style transfer based on deep learning has become a trend in the field of art creation. However, in the field of pattern and innovative design of traditional heritage crafts, there are few studies on image recognition and style transfer based on deep neural networks. Given that DCNNs have strong self-learning feature extraction and nonlinear classification capabilities, deep learning models can be applied to interpret and learn the color and graphic features of wickerwork and to transform wickerwork pattern skills into digital weaving, thus promoting the digital inheritance and sustainable development of the traditional wickerwork pattern craft.

2.3. CycleGAN

The CycleGAN used in this experiment derives from the generative adversarial network (GAN). A GAN is a deep neural network model, essentially a generative model that learns the data distribution through adversarial training. Goodfellow et al. [47] proposed the generative adversarial network, which consists of two jointly trained networks: a generator and a discriminator. The generator aims to fool the discriminator by generating images that are indistinguishable from real images, while the discriminator tries to classify images as “real” or “fake”. The two are trained jointly by solving a min-max problem. The generative model G continuously improves its ability to generate samples close to real ones by using the feedback of the discriminator D, and D continuously improves its ability to judge the authenticity of generated samples by learning from real samples. The two networks improve through this mutual game until neither can improve further, at which point the generative model has become close to ideal [48]. The emergence of the GAN provides a powerful algorithmic framework for building unsupervised learning models and departs from traditional artificial intelligence algorithms. It can learn the underlying regularities of real-world data and has stronger feature learning and representation capabilities than traditional algorithms.
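The min-max problem mentioned above is not reproduced in this text. For reference, the standard form of the objective in Goodfellow et al. [47] can be written as below; the noise variable z and its prior p_z are symbols from that formulation and are not defined elsewhere in this section.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator D maximizes V by assigning high probability to real samples and low probability to generated ones, while the generator G minimizes V by making D(G(z)) large.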

Subsequently, with the continuous extension and development of image processing methods and technologies, various adversarial models based on the GAN have been proposed. Emerging style transfer algorithms have been widely used in the field of art in recent years, and scholars have proposed a variety of related algorithms to achieve image style conversion. The cycle-consistent GAN (CycleGAN) proposed in [25] differs from the GAN in that it no longer has a single generator G and discriminator D, but two generators and two discriminators, which together learn the mapping between two image domains. Using two generators and two discriminators prevents all images in one style domain from being mapped to the same image. To ensure the quality of the generated images, the network introduces a cycle loss function that keeps the cycle consistent during training. Most importantly, this model removes the limitation of the pix2pix model that the dataset must consist of paired images. The cycle-consistent GAN constrains itself through a two-step transformation of the source-domain image: the image is first mapped to the target domain and then mapped back to the source domain to obtain a secondary generated image, which eliminates the need for paired images in the target domain. The generator maps the image to the target domain, and training it against the matching discriminator improves the quality of the generated image. The secondary generated image is then compared with the original image; when the two are consistent, the generated target-domain image can also be judged reasonable.
Zhang [49] proposed a style transfer method for animated clothing that combines a fully convolutional network (FCN) with CycleGAN and achieves instance style transfer between animated clothing with specific goals. Zhang [1] constructed an automatic generation network model for art image sketches based on CycleGAN, which is used to extract the edge and contour features of art images; the experimental results show that this network model can effectively generate art image sketches automatically. Fu [37] used an image art style transfer algorithm based on generative adversarial networks to implement image art style transfer quickly.

CycleGAN is thus widely used in the design field, and the advantages of using it for style transfer in design fall into two main areas. First, it can generate diverse design images in a short time and map in both directions between two image domains, producing more varied results while saving development time and reducing costs in the design process. Second, it can create innovative designs that meet the needs of customers. However, style transfer has not yet been applied to the creative design of Funan wickerwork patterns, and its advantages have not yet been fully exploited there. Therefore, this study proposes a design method based on CycleGAN training and applies it to the innovative and sustainable design process of Funan wickerwork patterns. CycleGAN can generate creative image information and learn favorable features from a large number of unlabeled images through deep learning, so as to generate new pattern design solutions.

3. Methods

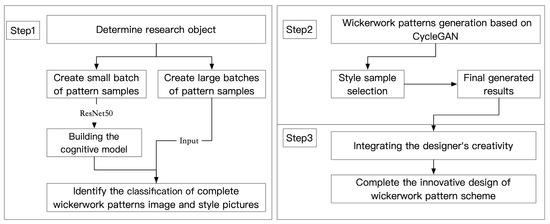

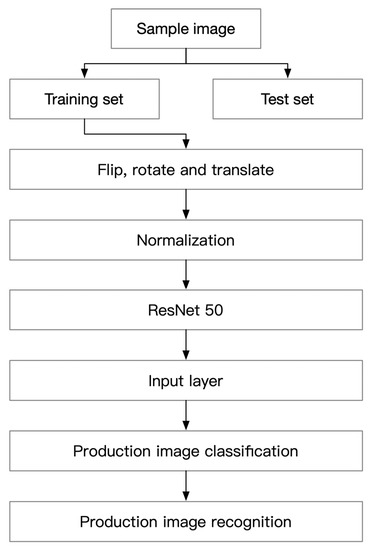

This study constructs a digital design method based on deep learning for the sustainable development of patterns; the specific process is shown in Figure 1. First, a database of Funan wickerwork patterns is established, an imagery cognitive model of Funan wickerwork patterns is built on ResNet-50, and the model is used to automatically filter traditional Funan wickerwork patterns, modern design styles, and traditional design styles from a large database of pattern images, so as to obtain a large image dataset of Funan wickerwork patterns and various style features. Second, the database of traditional Funan wickerwork patterns and style features is used as input for style transfer training of the CycleGAN network. By learning the mapping between the sample probability distributions of traditional wickerwork patterns and the target styles, CycleGAN generates wickerwork patterns matching specific styles and eventually provides rich inspiration for designers. Finally, designers use the generated wickerwork patterns as new design inspiration, express their creativity on the computer-generated work, and integrate their own ideas and experience to complete the creative design of the wickerwork patterns. Accordingly, the proposed method generates new wickerwork pattern styles and provides digital design services for wickerwork patterns, which could help to reduce the risk and time cost of developing new wickerwork patterns.

Figure 1. Sustainable design process for Funan wickerwork patterns.

3.1. Establishment of ResNet Image Recognition Model

The image recognition model for wickerwork patterns is built on the deep convolutional neural network ResNet, and the technical route is shown in Figure 2.

Figure 2. Product image recognition based on the ResNet50.

3.1.1. Constructing a Data Set of Wickerwork Patterns

In this study, a total of 12,450 pictures of traditional pattern samples and modern design patterns of Funan wickerwork were collected through online data collection, literature review, and field survey. The size of each sample in the pattern dataset is different, but roughly square. The samples must be processed to ensure that the size and resolution of each sample picture are identical, so as to build a database of Funan wickerwork patterns. Some samples are selected from the dataset for display, as shown in Figure 3.

Figure 3. Some experimental sample cases.

In general, convolutional neural networks require input samples of the same height and width. To make the images suitable for training the convolutional neural network, the sample images are scaled to 224 × 224 by bilinear interpolation. In addition, to reduce the risk of overfitting during training, data augmentation with traditional operations such as flipping and rotation is used to make full use of the dataset and extend its diversity, thus improving the generalization ability of the convolutional neural network.
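The preprocessing described above can be sketched as follows. This is a minimal NumPy illustration, not the pipeline used in the study: the function names `resize_bilinear` and `augment`, the pixel-centre sampling convention, and the particular set of flips and 90-degree rotations are all assumptions made for the example.

```python
import numpy as np

def resize_bilinear(img, out_h=224, out_w=224):
    """Resize an H x W x C image to out_h x out_w with bilinear interpolation."""
    in_h, in_w = img.shape[:2]
    img = img.astype(np.float64)
    # Output pixel centres expressed in input coordinates, clamped to the image.
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None, None]   # vertical interpolation weights
    wx = (xs - x0)[None, :, None]   # horizontal interpolation weights
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def augment(img):
    """Return the image together with flipped and rotated variants."""
    return [
        img,
        np.fliplr(img),      # horizontal flip
        np.flipud(img),      # vertical flip
        np.rot90(img, 1),    # 90-degree rotation
        np.rot90(img, 2),    # 180-degree rotation
        np.rot90(img, 3),    # 270-degree rotation
    ]

# Hypothetical, roughly square sample standing in for a pattern image.
sample = np.random.default_rng(0).random((300, 280, 3))
resized = resize_bilinear(sample)
variants = augment(resized)
```

Resizing before augmenting keeps every variant at 224 × 224, because 90-degree rotations of a square image preserve its shape.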

3.1.2. Product Image Recognition Establishment

The core problem that ResNet (residual network) addresses is the side effect of increasing depth, known as the degradation problem [42]. When the depth of a convolutional neural network is increased continuously in an attempt to improve its performance, the network eventually performs worse, and vanishing or exploding gradients can also occur. To address vanishing gradients in deeper networks, He et al. [42] proposed the residual convolutional neural network (ResNet), which allows the network to be deepened further while improving performance on image classification tasks.

ResNet consists of stacked residual blocks. In addition to its weight layers, each block connects the input x directly to the output through a cross-layer (skip) connection, so that the block output is H(x) = F(x) + x, where F(x) is the residual mapping and H(x) is the original mapping. The stacked weight layers therefore fit the residual mapping F(x) = H(x) − x instead of the original mapping H(x), and learning the residual mapping is simpler than learning the original mapping. In addition, the cross-layer connection allows the features of different layers to be passed to each other, which alleviates the vanishing gradient problem to a certain extent.
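As a rough illustration of the residual mapping, the sketch below builds one residual block in NumPy. It is a simplification under stated assumptions: dense layers stand in for the convolutions of a real ResNet block, batch normalization is omitted, and the names `residual_block`, `w1`, and `w2` are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def residual_block(x, w1, w2):
    """Compute H(x) = ReLU(F(x) + x), with F made of two weight layers."""
    f = relu(x @ w1) @ w2    # residual mapping F(x)
    return relu(f + x)       # skip connection adds the input back

rng = np.random.default_rng(0)
x = rng.random(4)                        # non-negative input features
w_zero = np.zeros((4, 4))
identity_out = residual_block(x, w_zero, w_zero)

w1 = rng.normal(scale=0.1, size=(4, 4))
w2 = rng.normal(scale=0.1, size=(4, 4))
learned_out = residual_block(x, w1, w2)
```

With all weights at zero, F(x) = 0 and the block passes x through unchanged, which is why an added residual block can fall back to the identity mapping rather than degrading the network.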

ResNet has achieved great success in image classification tasks by stacking residual blocks to bring the network depth to 152 layers. In this study, ResNet50 is fine-tuned for wickerwork pattern recognition, and the network structure is shown in Table 1 [42].

Table 1. Overall structure of ResNet50 network model [42].

ResNet50 contains 49 convolutional layers and one fully connected layer. In Table 1, a notation such as ID block (identity block) × 3 in stages 2 to 5 denotes three residual blocks that keep the feature size unchanged. Stages 2 to 5 contain 3, 4, 6, and 3 residual blocks, respectively, and each residual block contains three convolutional layers, so together with the single convolution in stage 1 there are 1 + 3 × (3 + 4 + 6 + 3) = 49 convolutional layers.
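The layer count can be checked with a few lines of arithmetic. The variable names are illustrative only; the block counts per stage are those used in the formula above.

```python
# Residual blocks in stages 2 to 5, as in the formula 1 + 3 x (3 + 4 + 6 + 3).
blocks_per_stage = [3, 4, 6, 3]
convs_per_block = 3      # each residual block holds three convolutional layers
stem_convs = 1           # the single convolution in stage 1

conv_layers = stem_convs + convs_per_block * sum(blocks_per_stage)
total_layers = conv_layers + 1   # plus the fully connected classification layer

print(conv_layers, total_layers)
```

The total of 50 weighted layers is what gives ResNet50 its name.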

ResNet50 consists of five stages and a fully connected classification layer, and the network input is a preprocessed image of a wickerwork pattern sample. The convolutional layers extract the features of the sample by applying convolution kernels to the input image; convolution is the main feature extraction operation. Stage 1 is an ordinary convolution block (CONV + BN + ReLU + MAXPOOL), and stages 2 to 5 are residual convolution blocks, which are what allow ResNet50 to reach this depth. The output of the last fully connected layer (FC) is a two-dimensional vector representing traditional wickerwork patterns and modern patterns. Each convolutional layer is followed by the ReLU activation function, which increases the nonlinearity of the network and, compared with the traditional sigmoid and tanh activation functions, offers high computational efficiency, no gradient saturation for positive inputs, and fast convergence. It can be expressed as:

$$f(x) = \max(0, x)$$

The output formula of the neuron is as follows:

$$a_i = f\left(\sum_{k} W_{i-1,k}\, a_{i-1,k} + b_{i-1}\right)$$

where $W_{i-1,k}$ represents the k-th weight in layer i − 1, $a_{i-1,k}$ is the corresponding input from layer i − 1, and $b_{i-1}$ represents the bias of layer i − 1. Next, the cross-entropy loss function of a single sample is calculated:

$$L = -\sum_{c} y_c \log \hat{y}_c$$

where $y_c$ is 1 for the true class of the sample and 0 otherwise, and $\hat{y}_c$ is the predicted probability of class c.

Training the entire network amounts to finding the parameters that minimize the loss function. The loss L is computed by forward propagation; backpropagation then computes the derivative of the loss with respect to the weights of each layer, and the stochastic gradient descent algorithm uses these derivatives to update the weights.
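The training loop described here (forward propagation, cross-entropy loss, gradient computation, weight update) can be sketched on a toy scale. The example below is an assumption-laden illustration rather than the study's training code: it trains only a single two-class output layer on one hypothetical feature vector, uses a softmax output, and picks an arbitrary learning rate of 0.1.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    e = np.exp(z - z.max())          # subtract the max for numerical stability
    return e / e.sum()

def cross_entropy(probs, label):
    """Cross-entropy loss of a single sample with integer class label."""
    return -np.log(probs[label])

rng = np.random.default_rng(0)
x = relu(rng.normal(size=8))                 # hypothetical extracted features
W = rng.normal(scale=0.1, size=(2, 8))       # two output classes
b = np.zeros(2)
label = 0                                    # index of the true class
lr = 0.1                                     # assumed learning rate

losses = []
for _ in range(20):
    probs = softmax(W @ x + b)               # forward propagation
    losses.append(cross_entropy(probs, label))
    grad_logits = probs.copy()
    grad_logits[label] -= 1.0                # dL/dz for softmax + cross-entropy
    W -= lr * np.outer(grad_logits, x)       # gradient descent update
    b -= lr * grad_logits
```

In a full network the same gradient is propagated backwards through every layer by the chain rule; only the last layer is shown here to keep the sketch short.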

3.2. Generating Wickerwork Pattern Design Image Based on CycleGAN

The CycleGAN model differs from the GAN model in that, instead of a single generator G and discriminator D, the mapping relationships are constructed by two generators and two discriminators. The model can transfer an artistic style to a wickerwork pattern design and also transfer a wickerwork pattern design to a specific style, so it generates more diverse results than a general style transfer model. This enriches the experimental results and increases the variety of generated designs, saving costs for enterprises and speeding up the iteration of traditional wickerwork patterns.

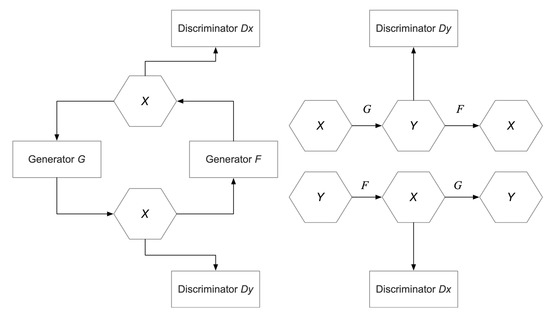

Algorithm Structure

The goal of CycleGAN is to train the mapping functions between two domains X and Y. The model includes two generators, G: X→Y and F: Y→X, and two adversarial discriminators, DX and DY. DX is used to distinguish the image set {x} from the transformed image set {F(y)}, and similarly, DY is used to distinguish the image set {y} from the transformed image set {G(x)}. Using two generators and two discriminators prevents all images in one style domain from being mapped to a single image and helps ensure the quality of the generated images. The network also introduces a cycle loss function, which keeps the cycle consistent during training. The specific implementation steps are shown in Figure 4.

Figure 4.

Overall structure of ResNet50 network model [42].

In the cycle-consistent adversarial network, the generator G represents the mapping from the image domain X to the image domain Y, while the generator F represents the mapping from Y to X. Through G, an image from X is transformed into an image that resembles the Y style, and in turn, through F, an image from Y is transformed into an image that resembles the X style. The discriminator DX determines the authenticity of images from the X style domain, and the discriminator DY determines the authenticity of images from the Y style domain.

Adversarial Loss

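CycleGAN applies adversarial losses to both mapping functions. For the mapping function G: X→Y and its discriminator DY, the objective function is expressed as [25]:

$$\mathcal{L}_{GAN}(G, D_Y, X, Y) = \mathbb{E}_{y \sim P_{data}(y)}[\log D_Y(y)] + \mathbb{E}_{x \sim P_{data}(x)}[\log(1 - D_Y(G(x)))]$$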

DY aims to discriminate the transformed sample G(x) from the real sample y, while G tries to generate images G(x) that look similar to images in the domain Y. G aims to minimize the objective function, while DY aims to maximize it, i.e., $\min_G \max_{D_Y} \mathcal{L}_{GAN}(G, D_Y, X, Y)$. Similarly, the same adversarial loss is used for the mapping function F: Y→X and its discriminator DX, i.e., $\min_F \max_{D_X} \mathcal{L}_{GAN}(F, D_X, Y, X)$.

Cycle Consistency Loss

The adversarial loss can train mappings G and F that produce outputs with the same distributions as the target domains Y and X, respectively. However, a network with sufficient capacity can map the same set of input images to any random permutation of images in the target domain, so that any of the learned mappings yields an output distribution that matches the target distribution. The output image then fits the target domain well but does not necessarily correspond to the input image. The adversarial loss alone therefore cannot guarantee that the learned function maps a single input xi to a desired output yi. For this reason, CycleGAN requires the learned mapping functions to be cycle-consistent: for each image x in the X domain, the cyclic transformation should bring x back to the original image, i.e., x → G(x) → F(G(x)) ≈ x. This is called forward cycle consistency. Similarly, for each image y in the Y domain, G and F should satisfy backward cycle consistency: y → F(y) → G(F(y)) ≈ y. To achieve this, the cycle consistency loss in CycleGAN is defined as [25]:

$$\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim P_{data}(x)}[\lVert F(G(x)) - x \rVert_1] + \mathbb{E}_{y \sim P_{data}(y)}[\lVert G(F(y)) - y \rVert_1]$$
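The forward and backward cycles can be illustrated with a toy sketch in which "images" are short lists of numbers and the generators are simple functions. The mappings `G`, `F_exact`, and `F_biased` are hypothetical stand-ins for trained networks, and the L1 distance is averaged per element, as is common in implementations, rather than summed.

```python
def l1_distance(a, b):
    # Mean absolute difference between two equally sized "images".
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def cycle_loss(G, F, xs, ys):
    # Forward cycle: x -> G(x) -> F(G(x)) should return to x.
    forward = sum(l1_distance(F(G(x)), x) for x in xs) / len(xs)
    # Backward cycle: y -> F(y) -> G(F(y)) should return to y.
    backward = sum(l1_distance(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward

# Hypothetical toy mappings between two domains.
G = lambda x: [2.0 * v for v in x]           # X -> Y
F_exact = lambda y: [v / 2.0 for v in y]     # Y -> X, exact inverse of G
F_biased = lambda y: [v / 2.0 + 0.1 for v in y]  # Y -> X, slightly off

xs = [[0.0, 0.5, 1.0]]
ys = [[0.0, 1.0, 2.0]]
loss_exact = cycle_loss(G, F_exact, xs, ys)
loss_biased = cycle_loss(G, F_biased, xs, ys)
```

When the two mappings invert each other exactly, the loss vanishes; any mismatch between them is penalized in both directions.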

Full Objective

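The full objective is:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda \mathcal{L}_{cyc}(G, F) \tag{6}$$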

where λ controls the relative importance of the two objectives. Zhu et al. [25] compared the complete loss function with variants that use the adversarial loss alone or the cycle consistency loss alone, and the results showed that both objective functions play a key role in obtaining high-quality results.
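How the terms are weighted can be sketched numerically. The example below evaluates the log-form adversarial term from discriminator outputs and combines two adversarial terms with a cycle term using λ. The probability values and the function names are hypothetical, chosen only to show the arithmetic.

```python
import math

def gan_loss(d_real, d_fake):
    # E[log D(y)] + E[log(1 - D(G(x)))], estimated as sample means.
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def full_objective(gan_xy, gan_yx, cycle, lam=10.0):
    # Sum of both adversarial terms plus the weighted cycle term.
    return gan_xy + gan_yx + lam * cycle

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
undecided = gan_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
# A confident discriminator scores real images high and fakes low.
confident = gan_loss(d_real=[0.9], d_fake=[0.1])

total = full_objective(gan_xy=-1.0, gan_yx=-1.0, cycle=0.2, lam=10.0)
```

The discriminator pushes the adversarial term upward (`confident` is larger than `undecided`), while the generators push it downward and, through λ, are simultaneously held to the cycle constraint.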

3.3. The Network Architecture of CycleGAN

The generator network consists of three convolutional layers, several residual blocks, two fractionally strided convolutional layers with a stride of 1/2, and a convolutional layer that maps features to RGB. It uses six residual blocks for images of size 128 × 128 and nine residual blocks [50] for images of size 256 × 256, and it applies instance normalization. The discriminator network uses a 70 × 70 PatchGAN [51], which classifies 70 × 70 overlapping image patches as real or fake. Such a patch-level discriminator has fewer parameters than a full-image discriminator and can be applied to images of any size in a fully convolutional manner [51].
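The 70 × 70 patch size can be checked with a short receptive-field calculation. The layer list below is an assumption: it follows the commonly used PatchGAN configuration of five 4 × 4 convolutions, three with stride 2 followed by two with stride 1, which the text itself does not spell out.

```python
def receptive_field(layers):
    """Receptive field of one output unit.

    layers: list of (kernel_size, stride) tuples ordered from input to output.
    """
    r = 1
    # Walk backwards from the output: each layer widens the field.
    for kernel, stride in reversed(layers):
        r = (r - 1) * stride + kernel
    return r

# Assumed PatchGAN discriminator layout (not stated in the text).
PATCHGAN_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
patch_size = receptive_field(PATCHGAN_LAYERS)  # 70
```

Each output value of such a discriminator therefore judges one 70 × 70 region of the input, and neighboring outputs cover overlapping regions.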

3.4. Training Parameter Setting

CycleGAN uses two techniques to make model training more stable. The first is to use a least squares loss function [52] instead of the negative log-likelihood for the adversarial loss. The discriminator of the traditional GAN uses the cross-entropy loss function, which is prone to vanishing gradients during training. In addition, generated samples that the discriminator D already judges to be real are no longer optimized. The least squares loss makes training more stable and also produces higher quality results. For the adversarial loss function $\mathcal{L}_{GAN}(G, D, X, Y)$, the generator G is trained to minimize $\mathbb{E}_{x \sim P_{data}(x)}[(D(G(x)) - 1)^2]$ and the discriminator D is trained to minimize $\mathbb{E}_{y \sim P_{data}(y)}[(D(y) - 1)^2] + \mathbb{E}_{x \sim P_{data}(x)}[D(G(x))^2]$.
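The two least squares objectives can be written as small helper functions. This is a sketch that operates on plain lists of discriminator outputs; the function names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)

def lsgan_generator_loss(d_fake):
    # G wants D(G(x)) to be close to 1 ("real").
    return mean([(d - 1.0) ** 2 for d in d_fake])

def lsgan_discriminator_loss(d_real, d_fake):
    # D wants D(y) close to 1 and D(G(x)) close to 0.
    return mean([(d - 1.0) ** 2 for d in d_real]) + mean([d ** 2 for d in d_fake])

fooled = lsgan_generator_loss([1.0, 1.0])            # generator fully succeeds
caught = lsgan_generator_loss([0.0])                 # generator fully fails
perfect_d = lsgan_discriminator_loss([1.0], [0.0])   # discriminator fully succeeds
```

Unlike the log-based loss, the squared error keeps growing with the distance from the target value, so samples far from the decision boundary still contribute a gradient.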

The second technique reduces model oscillation [53]: the discriminators are updated using a history of previously generated images rather than only the most recently generated ones [54]. An image buffer that stores 50 previously generated images is used during training. In each training round, part of the buffer is randomly replaced, and the discriminator loss is calculated by combining newly generated images with images from the buffer.
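A buffer of this kind can be sketched as follows. The class name and the 50% swap probability are assumptions made for illustration (the text only states that part of the buffer is updated randomly), and integers stand in for images.

```python
import random

class ImageBuffer:
    """History of generated images shown to the discriminator."""

    def __init__(self, capacity=50, rng=None):
        self.capacity = capacity
        self.images = []
        self.rng = rng or random.Random()

    def query(self, image):
        # While the buffer is filling, store and return the new image.
        if len(self.images) < self.capacity:
            self.images.append(image)
            return image
        # Assumed policy: with probability 0.5, hand back a stored image
        # and put the new one in its place.
        if self.rng.random() < 0.5:
            idx = self.rng.randrange(self.capacity)
            old = self.images[idx]
            self.images[idx] = image
            return old
        # Otherwise pass the new image through unchanged.
        return image

buf = ImageBuffer(capacity=50, rng=random.Random(0))
outputs = [buf.query(i) for i in range(200)]  # integers stand in for images
```

The discriminator thus sees a mixture of fresh and older generator outputs, which dampens abrupt changes between successive updates.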

The Adam optimizer [55] with a batch size of 1 was used for training, with λ = 10. All networks were trained with an initial learning rate of 0.0002. A total of 200 rounds were trained: the learning rate was kept at 0.0002 for the first 100 rounds and then decreased linearly to 0 over the last 100 rounds.
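The schedule can be expressed as a small function of the epoch index. This sketch assumes that the decay spans the final 100 of the 200 rounds and that epochs are counted from 0; the function name and the exact boundary convention are illustrative choices.

```python
def learning_rate(epoch, base_lr=0.0002, constant_epochs=100, decay_epochs=100):
    # Constant phase.
    if epoch < constant_epochs:
        return base_lr
    # Linear decay phase: reaches 0 after constant_epochs + decay_epochs.
    remaining = constant_epochs + decay_epochs - epoch
    return base_lr * max(remaining, 0) / decay_epochs

schedule = [learning_rate(e) for e in range(201)]
```

Halfway through the decay phase (epoch 150) the learning rate has dropped to half of its initial value, and at epoch 200 it is zero.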

4. Experimental Results

4.1. The Performance Results of Image Recognition Model

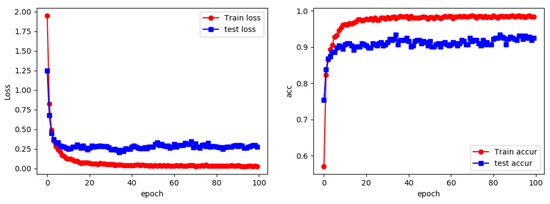

A total of 2350 wickerwork and style patterns were randomly selected from a large database and used to fine-tune ResNet50 on the traditional wickerwork pattern product images, yielding a product image recognition model that classifies the pattern images. The evaluation model was written in Python, the ResNet structure was implemented in PyTorch, and the model was trained on the small-volume wickerwork pattern imagery dataset. The number of iterations was set to 100, and the base learning rate α was 0.001. As shown in Figure 5, the network gradually converges as training proceeds: the loss values of the training and test sets approach 0, and convergence is essentially complete by the 40th epoch. Due to the inconsistency between the training and test datasets, the loss and accuracy on the test set lag behind those on the training set. The best recognition rate of ResNet50 on the whole dataset of pattern design images is 93.37%. The recognition rate is 89.47% for modern patterns, 97.14% for traditional patterns, 95.95% for wickerwork patterns, and 90.91% for personalized (novelty) patterns. The experimental results are shown in Table 2 and indicate that the ResNet50 model is effective and capable of recognizing classic wickerwork patterns, modern patterns, traditional patterns, and personalized patterns.

Figure 5.

The accuracy and loss curve.

Table 2.

The recognition accuracy.

As shown in Figure 5, the accuracy on the training set climbs steadily and reaches a relatively high level, while the accuracy on the validation set remains stable at around 90%. Hence, no obvious overfitting is observed.
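Per-class and overall recognition rates of the kind reported above are obtained by comparing predicted and true labels. The sketch below uses a small hypothetical label list, not the study's data, to show the calculation.

```python
from collections import Counter

def recognition_rates(true_labels, predicted_labels):
    # Count samples per class and correctly classified samples per class.
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    per_class = {c: correct[c] / n for c, n in totals.items()}
    overall = sum(correct.values()) / len(true_labels)
    return per_class, overall

# Hypothetical toy labels for illustration only.
true_labels = ["modern"] * 4 + ["traditional"] * 4
predicted = ["modern", "modern", "modern", "traditional"] + ["traditional"] * 4
per_class, overall = recognition_rates(true_labels, predicted)
```

Because classes differ in size and difficulty, the overall rate is a sample-weighted mixture of the per-class rates rather than their simple average.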

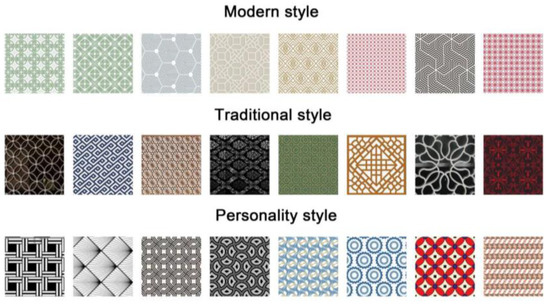

4.2. Identification and Analysis of High-Volume Samples

The ResNet-based recognition model was applied to classify the remaining 10,100 samples of wickerwork and style pattern images in the sample library. The selection of style transfer samples covers two parts: style samples and content samples. This study focuses on the modern, traditional, and personalized styles of wickerwork pattern design. Using the emotion recognition model established by ResNet50, representative patterns of these typical styles (modern, traditional, and novel) are automatically extracted from the large number of pattern samples and serve as samples for the subsequent style transfer. The small-volume wickerwork pattern dataset established by manual classification is not sufficient to meet the requirements of the experiments, and the results generated from it are unsatisfactory. Therefore, the pattern evaluation model built with ResNet50 was applied to classify the remaining pattern samples in the sample database, which quickly yielded 2855 real Funan wickerwork patterns, 1880 modern style patterns, 1300 novelty style patterns, and 4065 traditional style patterns from the large-volume pattern imagery dataset. Some of the experimental results are shown in Figure 6.

Figure 6.

Style samples.

4.3. Integration of Wickerwork Patterns Dataset

Once the style images have been determined, the content samples need to be specified. The content samples are derived from the wickerwork patterns identified in the large-volume sample set. The 2855 wickerwork pattern samples from the large-volume imagery dataset and the 917 samples of the small-volume wickerwork pattern imagery dataset are therefore merged to obtain the final imagery dataset of 3772 wickerwork patterns, which is used for model training and for the style transfer of subsequent pattern design schemes.
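The sample counts reported in Sections 4.2 and 4.3 can be cross-checked with a few lines of arithmetic. The dictionary keys are illustrative labels; the numbers are those given in the text.

```python
# Class counts obtained by classifying the large-volume sample set.
classified = {
    "wickerwork": 2855,
    "modern": 1880,
    "novelty": 1300,
    "traditional": 4065,
}
total_classified = sum(classified.values())  # 10100

# Content samples: classified wickerwork patterns plus the small-volume set.
small_volume_set = 917
content_samples = classified["wickerwork"] + small_volume_set  # 3772
```

The four classes add up to the 10,100 remaining samples, and the merged content set matches the stated 3772 wickerwork patterns.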

4.4. Transfer Results of Wickerwork Patterns Based on CycleGAN

The test platform ran on Windows with an Nvidia RTX 3070 graphics card, using Python 3.10 and PyTorch 1.12 together with common third-party Python libraries. After the feature category was adjusted, the program was run with the number of training rounds set to 200 and batch_size set to 4. The networks were trained from scratch with a learning rate of 0.0002, which was held constant for the first 100 epochs and decayed linearly to zero over the next 100 epochs, and λ in Equation (6) was set to 10. The training times for the modern, personalized, and traditional styles were 39, 43, and 56 hours, respectively. Finally, the style transfer output of the wickerwork patterns was completed.

The pattern generation model outputs multiple final pattern design schemes, as shown in Table 3, Table 4 and Table 5. The model outputs complete images of the wickerwork pattern. Overall, the generated results are relatively clear and bright, the details are rich in variation, the colors are saturated, and the color contrast is moderate. Compared with traditional methods, which only output numerical design parameters, the images allow designers to perceive the overall pattern appearance of the product more intuitively and quickly, thus improving their efficiency.

Table 3.

The style transfer results (Traditional).

Table 4.

The style transfer results (Modern).

Table 5.

The style transfer results (Personality).

After 200 rounds of iterations, the CycleGAN model finally converges. It generates preliminary classical wickerwork patterns with specific styles, and the generated patterns effectively combine the morphology of the original images with the target style features in terms of color distribution and graphics. The outlines and patterns of the elements are generally clear and conform to the characteristics of traditional Funan wickerwork cultural elements. The newly generated image after style transfer thus includes both the content features of the content image and the basic information, such as detail texture and color style, of the style image. In this experiment, the transfer-generated picture A, compared with the original picture, retains the content of B integrated with the style of A, and the transfer-generated picture B retains the content of A integrated with the style of B. The color transitions are natural, and the texture of the pattern is rendered vividly and smoothly. Furthermore, the results not only integrate innovative elements of traditional wickerwork culture but also reflect the style characteristics of modernity, tradition, and individuality. However, the clarity of the generated images is relatively low compared with real traditional wickerwork pattern designs, and the image quality needs further improvement. This may be because the background and color of traditional wickerwork patterns are complex and their textures vary richly, while the training instability of CycleGAN tends to produce blurred images.

The experiment finally combined the wickerwork pattern content samples with the three design styles (modern, traditional, and personalized) to generate 5572 new wickerwork pattern images with specific style characteristics, including 1536 personalized, 2146 modern, and 1890 traditional style images. To verify the validity of the CycleGAN model, the many low-quality pattern images that did not meet the requirements were first eliminated. Then, 12 experts with more than three years of design experience selected 16 creative wickerwork design solutions from each of the modern, traditional, and personalized sample sets for verification, based on the texture clarity, integrity, and shape of the images, as shown in Figure 7.

Figure 7.

The exported wickerwork pattern design scheme.

The final style transfer effect was measured in terms of user satisfaction using the semantic differential method [56]. A total of 325 people participated in the evaluation, including 140 male and 185 female users. Basic personal information, including age, education, occupation, and spending power, was collected to ensure the breadth and objectivity of the sample. Participants aged 18 to 25 accounted for 44.32% and those aged 25–35 for 24.18%; in terms of education, 43.21% held a bachelor's degree and 29.27% a graduate degree or above. In addition, the participants included 210 design students, 61 designers and wickerwork craftspeople, and 54 people from the industry. Subjects were asked to rate their satisfaction with the generated style transfer samples, considering form, color, texture, and composition, on an integer scale from 1 to 5, where 1 indicates dissatisfaction with the generated result, 3 indicates an ordinary result, and 5 indicates a very satisfactory result. The ratings were then averaged to obtain the satisfaction preferences of the 325 subjects for the generated wickerwork pattern schemes.

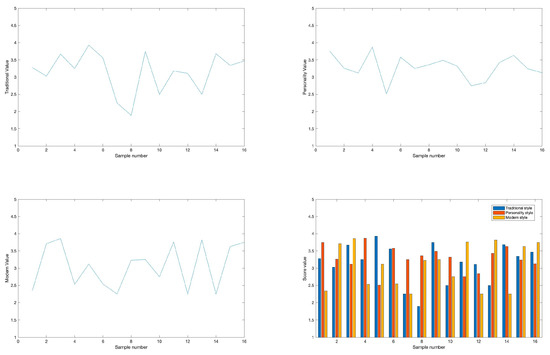

Cronbach's alpha was computed in SPSS to measure the internal consistency and reliability of the questionnaire. The reliability value was 0.845, which indicates satisfactory reliability [57]. The results are shown in Table 6, Table 7 and Table 8: the average score of the traditional wickerwork patterns is 3.15 with a highest score of 3.93, the average score of the personalized wickerwork patterns is 3.28 with a highest score of 3.87, and the average score of the modern wickerwork patterns is 3.07 with a highest score of 3.86. The specific experimental results of the three styles are shown in Figure 8.
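The reliability coefficient can also be computed outside SPSS with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The sketch below uses a small hypothetical rating matrix, not the survey data, with one row per respondent and one column per questionnaire item.

```python
from statistics import variance

def cronbach_alpha(ratings):
    """ratings: one row per respondent, one column per questionnaire item."""
    k = len(ratings[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in ratings]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical 1-5 ratings from five respondents on four items.
toy_ratings = [
    [4, 4, 3, 4],
    [3, 3, 3, 2],
    [5, 4, 5, 5],
    [2, 3, 2, 2],
    [4, 5, 4, 4],
]
alpha = cronbach_alpha(toy_ratings)
```

Values close to 1 indicate that the items vary together across respondents, which is the sense in which the reported 0.845 is read as reliable.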

Table 6.

Assessment values of the generated design solutions (Traditional style).

Table 7.

Assessment values of the generated design solutions (Personalized style).

Table 8.

Assessment values of the generated design solutions (Modern style).

Figure 8.

The assessment results of the style transfer generation solution.

With the user satisfaction threshold set to 3, the number of generated samples scoring higher than 3 was 12, 13, and 9 for the traditional, personalized, and modern styles, respectively. This gives 34 of the 48 evaluated samples, or 70.83%, which shows that the style transfer experiment is effective when applied to Funan wickerwork patterns. The CycleGAN-based pattern generation model can thus innovatively generate pattern design solutions, and the generated solutions can address the emotional needs of users while ensuring diversity, contributing to the innovative and sustainable development of wickerwork patterns.
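The reported totals and the 70.83% share follow directly from the counts given in this section. The snippet below reproduces the arithmetic; the variable names are illustrative, and the figure of 48 evaluated samples is derived from 16 selected solutions for each of the three styles.

```python
# Generated images per style, as reported for the style transfer experiment.
generated = {"personalized": 1536, "modern": 2146, "traditional": 1890}
total_generated = sum(generated.values())  # 5572

# Expert selection: 16 solutions per style were evaluated by users.
SELECTED_PER_STYLE = 16
above_threshold = {"traditional": 12, "personalized": 13, "modern": 9}

evaluated = SELECTED_PER_STYLE * len(above_threshold)  # 48
satisfied = sum(above_threshold.values())              # 34
share_percent = round(100 * satisfied / evaluated, 2)  # 70.83
```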

4.5. The Wickerwork Patterns Design Innovation Based on Designers

To design innovative Funan wickerwork patterns with CycleGAN-based style transfer, a real wickerwork pattern image is entered and the traditional, personalized, or modern style is selected; the model then outputs the image converted between wickerwork and style, which efficiently yields diversified creative pattern design solutions. This method is therefore efficient, easy to modify, and low in cost. However, there are two limitations. First, the target style and the shape information of the content cannot be changed at will, and style transfer can only be carried out based on this information, so the method lacks the flexibility of hand drawing. Second, because of the different noise distribution in some specific areas, the images generated by CycleGAN have a clear form but blurred pixels and cannot be used directly as the final, mature creative design scheme for wickerwork patterns. In addition, design is a creative activity with strong subjectivity and uncertainty [58]. It often lacks clear rules, which requires designers to continuously diverge and converge in the design process and to integrate social consciousness and aesthetic integrity into design solutions.

To achieve this goal, we invited eight professional designers and six graduate students to refine the creative images and carry out the innovative design. The creative stage started from the generated pattern results and focused on the innovative design of the Funan wickerwork pattern. With the intervention of designers, the computer-generated designs of traditional wickerwork cultural elements can be improved, completing the innovative development and design of the pattern.

4.5.1. Detailed Design

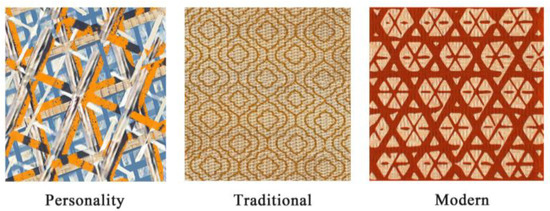

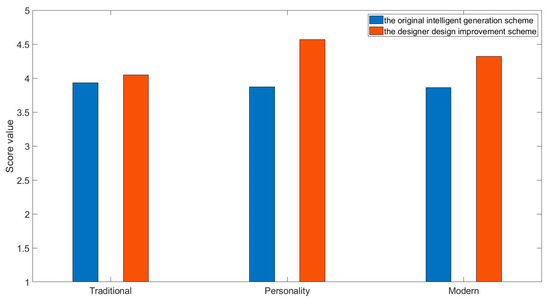

When the designers participated in the wickerwork design, the highest rated option of each of the modern, personalized, and traditional styles was selected as a prototype and then developed under the following guidelines: the main features of the selected image should be retained, and interesting form designs, pattern designs, or pleasing color designs should be used as design examples. The designers then used design software to work on the creative design. Finally, three wickerwork patterns with a clear appearance were created (Figure 9).

Figure 9.

Three modified wickerwork design solutions.

In this detailed design process, the designers combined their subjective judgment with aesthetic and emotion-based guidance, making it a human-computer interaction design process [59]. The CycleGAN model generates creative wickerwork pattern prototypes, and the designer is responsible for visualizing the detailed design to obtain a final design solution that is attractive to customers. The generated images of diverse styles allow designers to extend their innovations through in-depth creative thinking. The proposed design approach, which integrates computer intelligence and human intelligence, thus reduces the risk of uncertainty and increases the efficiency of emotional design.

4.5.2. Aesthetic Judgment of Design Solutions

In this section, we tested customer perceptions of the aesthetics of, and preferences for, the three new pattern designs. A total of 212 participants (89 males and 123 females) answered the questionnaire online and offline. Cronbach's alpha was 0.83, indicating high reliability of the survey.

In the aesthetic evaluation, the personalized wickerwork pattern scored 4.57, the traditional wickerwork pattern 4.05, and the modern wickerwork pattern 4.32. We compared the three new wickerwork patterns with the three highest scoring CycleGAN-generated patterns of the corresponding styles; the results are shown in Figure 10. The designer-refined wickerwork patterns score higher than the CycleGAN-generated patterns. Hence, the proposed design method, which combines CycleGAN training with professional designers, can create aesthetically pleasing pattern design solutions and complete the innovative and creative design of the pattern scheme.

Figure 10.

Comparison of three modified wickerwork design solutions and original solutions.

5. Discussion

5.1. The Strength of DCNN in the Creative Design

The DCNN is one of the best performing deep learning models for various image processing tasks and supports both supervised and unsupervised learning. CNN models are currently flourishing because they can automatically extract features at multiple levels of abstraction from a large number of images [6]. Parameter sharing of the convolutional kernels within the hidden layers and the sparsity of inter-layer connections allow a DCNN to produce stable results on grid-like topological features with a small computational effort. In this study, we use ResNet50 to construct the association between patterns and users' emotional cognition and achieve automatic, systematic recognition of wickerwork patterns that imitates human cognitive features. Compared with the traditional DCNN, ResNet has better image classification ability. In addition, traditional methods classify pattern appearance through a series of questionnaires consisting of images and perceptual attributes [14,60,61,62], which suffer from small data size and one-off use and consume a lot of time and money [2,20].

To verify the advantages of ResNet in wickerwork image recognition, the same dataset was used for experiments with VGGNet. VGGNet16 contains 16 weight layers, including 13 convolutional layers and three fully connected layers. The model was trained on the wickerwork image dataset using Caffe. The initial learning rate of FC3 was set to 0.001, and the learning rate was divided by 10 every 100 iterations. Table 9 shows the final recognition accuracy of VGGNet16 and ResNet.
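The step schedule described for VGGNet16 can be written as a one-line rule. The function name and default arguments below are illustrative; the values 0.001, 10, and 100 are those stated in the text.

```python
def step_learning_rate(iteration, base_lr=0.001, step=100, factor=10):
    # Divide the learning rate by `factor` once per completed `step` iterations.
    return base_lr / factor ** (iteration // step)

rates = [step_learning_rate(i) for i in (0, 99, 100, 250)]
```

The rate stays at 0.001 for iterations 0 to 99, drops to one tenth of that at iteration 100, and to one hundredth at iteration 200.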

Table 9.

The recognition accuracy results of wickerwork pattern based on VGGNet.

According to Table 9, ResNet has a clear advantage over VGGNet in the recognition rate of wickerwork pattern images. The ResNet model achieves an accuracy of 93.37% on the whole set of pattern design schemes, whereas VGGNet achieves only 84.31%. Specifically, ResNet achieves 89.47%, 97.14%, and 90.91% for the modern, traditional, and personalized styles, respectively. These results support the usability and robustness of the ResNet model.

5.2. The Advantages of CycleGAN in the Sustainable Development of Traditional Crafts

In today's information age, there is unprecedented space for the creativity and expression of traditional intangible heritage arts and crafts, and a large number of new art forms have been created. In the wave of art and technology integration, traditional crafts are also actively embracing change in various forms and with different core ideas. The CycleGAN model can be used to learn the texture of traditional craft patterns, interpret the color and graphic features of wickerwork techniques, and generate rendering results from input conditions with one click, achieving good results even without supervised learning. Using style transfer algorithms to generate modernized traditional cultural works and crafts has two advantages. On the one hand, it provides more possibilities for the innovative design of Funan wickerwork patterns and makes their textures more diversified; on the other hand, it offers a more complete solution for the digital preservation and inheritance of the wickerwork craft, helping to present the culture of wickerwork pattern techniques digitally.

The style transfer algorithm based on artificial intelligence can quickly and effectively generate diversified picture styles, which can greatly improve efficiency and save manpower and material resources while creating unique and novel craft expressions. This model can therefore provide more convenience for the creative design of traditional crafts and strong technical support for the innovative creation of crafts, thus expanding the dissemination path of the intangible cultural heritage of wickerwork.

Finally, in the detailed design stage, the professional designer retains the characteristics of the CycleGAN-generated images but adds the designer's subjective thinking about emotional design. As a result, the final design retains both the CycleGAN-generated image elements and the designer's personal creative perspective, incorporating the designer's understanding of traditional cultural and stylistic features. Hence, the proposed method grasps customer needs more purposefully, improves design efficiency, and potentially increases user satisfaction.

6. Conclusions

In the information age, AI technologies have provided unprecedented space for artistic creativity and expression and have triggered a large number of new art forms. To better assist designers in creative and innovative design, this study first proposes a Funan wickerwork image recognition model based on DCNN, which realizes the automatic classification of large-scale design attributes and avoids repetitive, time-consuming manual operations. Experimental results show that modern, traditional, and personalized patterns all achieve high emotion recognition rates. Second, this study uses a style transfer algorithm to combine the Funan wickerwork patterns with pattern samples containing modern, traditional, and personalized styles. According to the final results of the style transfer experiment, the transfer of the three styles to the wickerwork patterns is relatively satisfactory: it effectively extracts the style features of the patterns, including color, composition, and texture, and reconstructs the content of the wickerwork patterns, thereby supporting the innovative and sustainable development of wickerwork patterns. Then, the designers selected the highest rated scheme among the generated solutions and completed the design improvement to satisfy customer needs. The results show that the proposed method can help designers develop more popular and competitive Funan wickerwork patterns. The main contribution of this approach is the application of CycleGAN to support the design process, which can facilitate the automated design of innovative images, shorten the design process, and reduce design costs for subsequent design work. The main conclusions of this study are as follows:

(1) This study establishes a recognition model of wickerwork patterns based on ResNet, which can quickly complete the automatic recognition of different patterns and styles of wickerwork products.

(2) This study builds a Funan wickerwork pattern generation model based on an AI style transfer algorithm, which innovatively generates diversified design styles for traditional wickerwork patterns and effectively improves the diversity and novelty of the design schemes.

(3) The designer uses the creative wickerwork pattern samples as a source of inspiration to develop the creative design of the wickerwork patterns. By combining the designer's creation with the AI algorithm, the two complement each other's strengths and weaknesses, adding human subjective emotion to the computer-generated Funan wickerwork craft works from an artistic perspective.

However, this study has some limitations. First, the small-scale wickerwork pattern database used in this study may limit the quality of the results generated by CycleGAN. Second, CycleGAN suffers from long training times and unstable results, and more advanced techniques need to be used in the future to improve the quality of the transfer-generated pattern schemes. Finally, the style samples in this research are limited to three pattern design styles (modern, personalized, and traditional), which can be expanded to explore the transfer of other design styles in this scenario.

Author Contributions

Conceptualization, T.W. and Z.M.; methodology, T.W.; software, T.W.; validation, T.W., F.Z. and L.Y.; writing—review and editing, T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Anhui quality engineering project, grant number 2021jyxm0082 and Anhui University talent introduction research start-up funding project (No. S020318019).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, W.; Tsai, S.-B. An empirical study on the artificial intelligence-aided quantitative design of art images. Wirel. Commun. Mob. Comput. 2021, 2021, 8036323. [Google Scholar] [CrossRef]

- Wang, W.M.; Li, Z.; Tian, Z.G.; Wang, J.W.; Cheng, M.N. Extracting and summarizing affective features and responses from online product descriptions and reviews: A Kansei text mining approach. Eng. Appl. Artif. Intell. 2018, 73, 149–162. [Google Scholar] [CrossRef]

- Vilares, D.; Alonso, M.A.; Gómez-Rodríguez, C. Supervised sentiment analysis in multilingual environments. Inf. Process. Manag. 2017, 53, 595–607. [Google Scholar] [CrossRef]

- Lin, Y.-C.; Lai, H.-H.; Yeh, C.-H. Consumer-oriented product form design based on fuzzy logic: A case study of mobile phones. Int. J. Ind. Ergon. 2007, 37, 531–543. [Google Scholar] [CrossRef]

- Li, Y.; Zhu, L. Extracting knowledge for product form design by using multiobjective optimisation and rough sets. J. Adv. Mech. Des. Syst. Manuf. 2020, 14, JAMDSM0009. [Google Scholar] [CrossRef]

- Akgül, E.; Özmen, M.; Sinanoğlu, C.; Kizilkaya Aydoğan, E. Rough Kansei Mining Model for Market-Oriented Product Design. Math. Probl. Eng. 2020, 2020, 6267031. [Google Scholar] [CrossRef]

- Wang, C.-H. Incorporating the concept of systematic innovation into quality function deployment for developing multi-functional smart phones. Comput. Ind. Eng. 2017, 107, 367–375. [Google Scholar] [CrossRef]

- Wang, C.-H. Incorporating customer satisfaction into the decision-making process of product configuration: A fuzzy Kano perspective. Int. J. Prod. Res. 2013, 51, 6651–6662. [Google Scholar] [CrossRef]

- Wang, T.; Zhou, M. A method for product form design of integrating interactive genetic algorithm with the interval hesitation time and user satisfaction. Int. J. Ind. Ergon. 2020, 76, 102901. [Google Scholar] [CrossRef]

- Kwong, C.K.; Jiang, H.; Luo, X.G. AI-based methodology of integrating affective design, engineering, and marketing for defining design specifications of new products. Eng. Appl. Artif. Intell. 2016, 47, 49–60. [Google Scholar] [CrossRef]

- Gentner, A.; Bouchard, C.; Favart, C. Representation of intended user experiences of a vehicle in early design stages. Int. J. Veh. Des. 2018, 78, 161–184. [Google Scholar] [CrossRef]

- Jiao, Y.; Qu, Q.-X. A proposal for Kansei knowledge extraction method based on natural language processing technology and online product reviews. Comput. Ind. 2019, 108, 1–11. [Google Scholar] [CrossRef]

- Yadollahi, A.; Shahraki, A.G.; Zaiane, O.R. Current State of Text Sentiment Analysis from Opinion to Emotion Mining. ACM Comput. Surv. 2017, 50, 1–33.

- Wang, T.; Zhou, M. Integrating rough set theory with customer satisfaction to construct a novel approach for mining product design rules. J. Intell. Fuzzy Syst. 2021, 41, 331–353.

- Wu, Y.; Cheng, J. Continuous Fuzzy Kano Model and Fuzzy AHP Model for Aesthetic Product Design: Case Study of an Electric Scooter. Math. Probl. Eng. 2018, 2018, 4162539.

- Yeh, Y.-E. Prediction of Optimized Color Design for Sports Shoes Using an Artificial Neural Network and Genetic Algorithm. Appl. Sci. 2020, 10, 1560.

- Lin, L.-Z.; Yeh, H.-R.; Wang, M.-C. Integration of Kano’s model into FQFD for Taiwanese Ban-Doh banquet culture. Tour. Manag. 2015, 46, 245–262.

- Yang, C.-C. Constructing a hybrid Kansei engineering system based on multiple affective responses: Application to product form design. Comput. Ind. Eng. 2011, 60, 760–768.

- Mok, P.Y.; Xu, J.; Wang, X.X.; Fan, J.T.; Kwok, Y.L.; Xin, J.H. An IGA-based design support system for realistic and practical fashion designs. Comput.-Aided Des. 2013, 45, 1442–1458.

- Guo, F.; Qu, Q.-X.; Nagamachi, M.; Duffy, V.G. A proposal of the event-related potential method to effectively identify Kansei words for assessing product design features in Kansei engineering research. Int. J. Ind. Ergon. 2020, 76, 102940.

- Yang, C.-C.; Shieh, M.-D. A support vector regression based prediction model of affective responses for product form design. Comput. Ind. Eng. 2010, 59, 682–689.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Li, X.; Su, J.; Zhang, Z.; Bai, R. Product innovation concept generation based on deep learning and Kansei engineering. J. Eng. Des. 2021, 32, 559–589.

- Ding, M.; Cheng, Y.; Zhang, J.; Du, G. Product color emotional design based on a convolutional neural network and search neural network. Color Res. Appl. 2021, 46, 1332–1346.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Yu, C.; Wang, W.; Yan, J. Self-supervised animation synthesis through adversarial training. IEEE Access 2020, 8, 128140–128151.

- Haenlein, M.; Kaplan, A. A brief history of artificial intelligence: On the past, present, and future of artificial intelligence. Calif. Manag. Rev. 2019, 61, 5–14.

- Pagán, E.A.; Salvatella, M.d.M.G.; Pitarch, M.D.; Muñoz, A.L.; Toledo, M.d.M.M.; Ruiz, J.M.; Vitella, M.; Lo Cicero, G.; Rottensteiner, F.; Clermont, D.; et al. From Silk to Digital Technologies: A Gateway to New Opportunities for Creative Industries, Traditional Crafts and Designers. The SILKNOW Case. Sustainability 2020, 12, 8279.

- Wu, J.; Guo, L.; Jiang, J.; Sun, Y. The digital protection and practice of intangible cultural heritage crafts in the context of new technology. In E3S Web of Conferences; EDP Sciences: Les Ulis, France, 2021; p. 05024.

- Stefanidi, E.; Partarakis, N.; Zabulis, X.; Adami, I.; Ntoa, S.; Papagiannakis, G. Transferring Traditional Crafts from the Physical to the Virtual World: An Authoring and Visualization Method and Platform. J. Comput. Cult. Herit. 2022, 15, 1–24.

- Saeed, M.A.; Al-Ogaili, A.S. Integration of Cultural Digital Form and Material Carrier Form of Traditional Handicraft Intangible Cultural Heritage. Fusion Pract. Appl. 2021, 5, 21–30.

- Abdullah, Z.; Fadzlina, N.; Amran, M.; Anuar, S.; Shahir, M.; Fadzli, K. Design and Development of Weaving Aid Tool for Rattan Handicraft. In Applied Mechanics and Materials; Trans Tech Publ: Zurich, Switzerland, 2015; pp. 277–281.

- Yang, C.; Peng, D.; Sun, S. Creating a virtual activity for the intangible culture heritage. In Proceedings of the 16th International Conference on Artificial Reality and Telexistence–Workshops (ICAT’06), Hangzhou, China, 29 November–1 December 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 636–641.

- Chang, H.-C. Parametric Design Used in the Creation of 3D Models with Weaving Characteristics. J. Comput. Commun. 2021, 9, 112–127.

- Yu, R.; Zhang, H.; Liu, C. Study on Three Dimensional Geometry Model of Willow Artwork. Comput. Appl. Softw. 2008, 25, 283–285.

- Bengio, Y.; Delalleau, O. On the expressive power of deep architectures. In International Conference on Algorithmic Learning Theory; Springer: Berlin, Germany, 2011; pp. 18–36.

- Fu, X. Digital Image Art Style Transfer Algorithm Based on CycleGAN. Comput. Intell. Neurosci. 2022, 2022, 6075398.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Zhuo, L.; Jiang, L.; Zhu, Z.; Li, J.; Zhang, J.; Long, H. Vehicle classification for large-scale traffic surveillance videos using convolutional neural networks. Mach. Vis. Appl. 2017, 28, 793–802.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wu, Y.; Zhang, H. Image Style Recognition and Intelligent Design of Oiled Paper Bamboo Umbrella Based on Deep Learning. Comput.-Aided Des. Appl. 2021, 19, 76–90.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In European Conference on Computer Vision; Springer: Berlin, Germany, 2014; pp. 818–833.

- Sun, T.; Wang, Y.; Yang, J.; Hu, X. Convolution neural networks with two pathways for image style recognition. IEEE Trans. Image Process. 2017, 26, 4102–4113.

- Dong, S.; Ding, Y.; Qian, Y. Application of AI-Based Style Transfer Algorithm in Animation Special Effects Design. ZHUANGSHI 2018, 1, 104–107.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. arXiv 2014, arXiv:1406.2661.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zhang, A.; Lee, J. Animation Costume Style Migration Based on CycleGAN. Wirel. Commun. Mob. Comput. 2022, 2022, 3902107.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision; Springer: Berlin, Germany, 2016; pp. 694–711.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Paul Smolley, S. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802.

- Liu, M.Y.; Huang, X.; Mallya, A.; Karras, T.; Aila, T.; Lehtinen, J.; Kautz, J. Few-shot unsupervised image-to-image translation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10551–10560.

- Shrivastava, A.; Pfister, T.; Tuzel, O.; Susskind, J.; Wang, W.; Webb, R. Learning from simulated and unsupervised images through adversarial training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2107–2116.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Wang, T. A Novel Approach of Integrating Natural Language Processing Techniques with Fuzzy TOPSIS for Product Evaluation. Symmetry 2022, 14, 120.

- Dou, R.; Zhang, Y.; Nan, G. Application of combined Kano model and interactive genetic algorithm for product customization. J. Intell. Manuf. 2016, 30, 2587–2602.

- Ball, L.J.; Christensen, B.T. Advancing an understanding of design cognition and design metacognition: Progress and prospects. Des. Stud. 2019, 65, 35–59.

- Gan, Y.; Ji, Y.; Jiang, S.; Liu, X.; Feng, Z.; Li, Y.; Liu, Y. Integrating aesthetic and emotional preferences in social robot design: An affective design approach with Kansei Engineering and Deep Convolutional Generative Adversarial Network. Int. J. Ind. Ergon. 2021, 83, 103128.

- Hsiao, S.-W.; Ko, Y.-C. A study on bicycle appearance preference by using FCE and FAHP. Int. J. Ind. Ergon. 2013, 43, 264–273.

- Wang, C.-H.; Wang, J. Combining fuzzy AHP and fuzzy Kano to optimize product varieties for smart cameras: A zero-one integer programming perspective. Appl. Soft Comput. 2014, 22, 410–416.

- Cai, M.; Wu, M.; Luo, X.; Wang, Q.; Zhang, Z.; Ji, Z. Integrated Framework of Kansei Engineering and Kano Model Applied to Service Design. Int. J. Hum.–Comput. Interact. 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).