Abstract

Speech translation systems have become indispensable in facilitating seamless communication across language barriers. This paper presents a cascade speech translation system tailored specifically for translating speech from the Kazakh language to Russian. The system aims to enable effective cross-lingual communication between Kazakh and Russian speakers, addressing the unique challenges posed by these languages. To develop the cascade speech translation system, we first created a dedicated speech translation dataset ST-kk-ru based on the ISSAI Corpus. The ST-kk-ru dataset comprises a large collection of Kazakh speech recordings along with their corresponding Russian translations. The automatic speech recognition (ASR) module of the system utilizes deep learning techniques to convert spoken Kazakh input into text. The machine translation (MT) module employs state-of-the-art neural machine translation methods, leveraging the parallel Kazakh-Russian translations available in the dataset to generate accurate translations. By conducting extensive experiments and evaluations, we have thoroughly assessed the performance of the cascade speech translation system on the ST-kk-ru dataset. The outcomes of our evaluation highlight the effectiveness of incorporating additional datasets for both the ASR and MT modules. This augmentation leads to a significant improvement in the performance of the cascade speech translation system, increasing the BLEU score by approximately 2 points when translating from Kazakh to Russian. These findings underscore the importance of leveraging supplementary data to enhance the capabilities of speech translation systems.

1. Introduction

Speech translation is a rapidly growing field that aims to bridge the language gap by automatically converting spoken utterances in one language into corresponding translations in another language. The application of speech translation systems has gained significant attention in recent years due to advancements in deep learning approaches. Deep neural networks (DNNs) have brought about a revolution in various speech-related research domains, including automatic speech recognition (ASR) and machine translation (MT). Notable breakthroughs in pure ASR have been achieved using DNNs, as evidenced by studies such as [1,2,3]. DNNs have also played a crucial role in advancing machine translation, as demonstrated by works such as [4,5,6,7]. This progress in MT has consequently propelled the development of speech translation (ST) systems. ST is gaining momentum due to its vast potential for various industry applications, ranging from facilitating person-to-person communication to enabling the subtitling of audiovisual content. The widespread adoption of DNNs has significantly contributed to the advancement of ST technology and its increasing relevance in real-world applications.

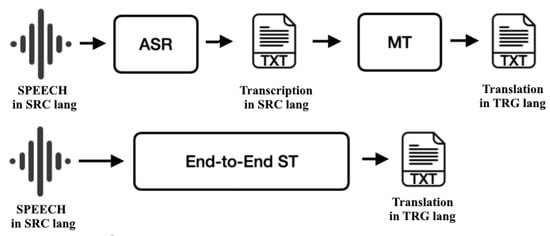

In the field of speech translation (ST), there are two main approaches: end-to-end and cascade (Figure 1). End-to-end models directly convert speech in a source language into text in a target language, without relying on an intermediate discrete representation. These models train their parameters jointly and offer a promising way to avoid error propagation. However, training data that include aligned speech, text and translation are scarce, which poses a challenge, especially for languages with limited resources or for languages for which speech translation has not been studied extensively, such as Kazakh.

Figure 1. Cascade (above) and end-to-end (below) approaches to speech translation.

The cascade approach involves a two-step process where an ASR system transcribes the speech in the source language, and the resulting transcription is then fed into an MT system to produce the target language text. This approach has its own advantages and disadvantages compared to the end-to-end approach. One advantage of the cascade approach is that it allows for the training of strong independent ASR and MT systems. This is because high-resource languages often have abundant manually transcribed audio and parallel data available, which can be used to train accurate ASR and MT models. In contrast, end-to-end models require manually transcribed audio data in the source language aligned with text data in the target language, which is more expensive to produce and may not be available in the same quantity. However, the cascade approach also has its limitations. It relies on the accuracy of the ASR system, and any errors made during the transcription stage can propagate and affect the quality of the translation. In contrast, end-to-end models aim to directly map speech to text without relying on an intermediate transcription, potentially reducing the impact of error propagation. Moreover, the cascade approach faces challenges due to the distinct training of the ASR and MT systems using different parallel corpora. This results in a mismatch between the ASR system’s output and the expected input of the MT system. The ASR output lacks punctuation and case information and may contain errors such as omissions, repetitions and homophones. In contrast, MT systems are typically trained on cleaner written text. Another significant observation is that while there are abundant large-scale parallel corpora available for ASR and MT individually, there is a difficulty in linking them to form the necessary speech-to-translation pairs for end-to-end models or the speech-to-transcription-to-translation triplets for robust pipeline speech translation systems. 
Hence, despite the potential advantages of the end-to-end approach, the cascade approach remains a practical choice in many cases.
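The two-step structure described above can be sketched as plain function composition. This is an illustrative sketch only: `recognize` and `translate` are hypothetical stand-ins for trained ASR and MT models, and the toy functions below exist only to make the example runnable.

```python
from typing import Callable

# Hypothetical interfaces: an ASR model maps audio to source-language text,
# and an MT model maps source-language text to target-language text.
Recognizer = Callable[[bytes], str]
Translator = Callable[[str], str]


def cascade_translate(audio: bytes, recognize: Recognizer, translate: Translator) -> str:
    """Run ASR first, then feed its 1-best transcript to MT."""
    transcript = recognize(audio)
    return translate(transcript)


# Toy stand-ins (assumptions for illustration, not real models).
def toy_recognize(audio: bytes) -> str:
    # ASR output is typically lowercase and unpunctuated.
    return "сәлем әлем"


def toy_translate(text: str) -> str:
    lexicon = {"сәлем": "привет", "әлем": "мир"}
    # Unknown words pass through unchanged, so ASR errors propagate.
    return " ".join(lexicon.get(word, word) for word in text.split())


result = cascade_translate(b"\x00\x01", toy_recognize, toy_translate)
```

Because the MT stage only ever sees the transcript, a single misrecognized word (for example "алем" instead of "әлем") reaches the output untranslated, which is the error propagation discussed above.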

Our research makes several significant contributions in the field of speech translation (ST):

- Firstly, we created the ST-kk-ru dataset. This dataset includes original transcriptions in Kazakh, which we carefully translated into Russian, providing a valuable language resource for the Kazakh-Russian language pair.

- Additionally, we conducted extensive experiments to optimize the ASR component specifically for the challenging Kazakh language. We explored various advanced ASR techniques to achieve the most accurate word error rate (WER) results, ensuring reliable speech-to-text transcription.

- Furthermore, we focused on enhancing the MT process for the Kazakh-Russian language pair. Through rigorous experimentation, we employed different MT methods and strategies, aiming to maximize the BLEU scores, thus ensuring high-quality translations.

- Finally, to assess the overall performance and effectiveness of our speech translation pipeline, we rigorously evaluated its performance using a comprehensive test set, encompassing diverse speech and translation samples. This evaluation provides valuable insights into the system’s capabilities and highlights its potential for practical applications in real-world scenarios.

This paper is organized as follows: Section 2 provides an overview of the existing research on cascade speech translation methods, highlighting the relevant work in the field. Section 3 details the Kazakh-to-Russian speech translation dataset, explaining the data collection process and presenting corpus statistics. Section 4 describes the methods used to construct the ASR and MT modules of the cascade approach. Section 5 describes the experimental setup, including the key parameters, and presents the obtained results. Section 6 focuses on the analysis and interpretation of these results. Finally, Section 7 draws the main conclusions of this research, summarizing the findings and implications and discussing potential directions for future work.

2. Related Work

This section presents an overview of related work in the field of cascade speech translation, as no prior research specifically focused on speech translation from Kazakh to any other language. We traced the development of cascade speech translation approaches from their early stages to the current state, providing valuable context for our research. However, it is important to note that this section does not cover the extensive research conducted on ASR and MT for the Kazakh language. Instead, we focused on the broader landscape of cascade speech translation. Additionally, this section discusses speech translation datasets used in research and examines various speech translation evaluation campaigns that have played a crucial role in assessing and advancing the performance of cascade systems.

In the early stages of speech translation, particularly in the Janus project [8,9], a simple cascading approach was utilized. This involved developing separate systems for speech recognition and translation, where the best hypothesis from the speech recognizer was passed as input to the translation system. Another initial approach involved template matching for the translation stage [10]. Researchers also explored the concept of speech-to-speech translation, which aimed to generate spoken output instead of written text [9]. However, the decision of whether to produce text or speech output was typically treated as a user interface concern that required separate evaluation, since speech output could be obtained by applying speech synthesis to the translated text. These early efforts primarily focused on specific domains, such as scheduling dialog scenarios, where promising outcomes were observed. However, a major challenge arose with error propagation from the speech recognizer: the prevalent interlingua-based machine translation methods relied on parsers that expected well-structured inputs, so recognition errors posed considerable difficulties [9,11]. As the field of machine translation progressed, there was a notable shift towards data-driven approaches, in line with the broader trends in general machine translation research [12]. Researchers then turned their attention to improving the integration of the speech recognition and translation stages. A notable work [13] presented a probabilistic framework for fully integrating data-driven approaches and demonstrated that a tight integration of the ASR and MT components benefits the speech translation task. One way of achieving better integration between recognition and translation was the transition from 1-best to n-best translation, in which multiple hypotheses were considered [14,15].

Another area of focus in making MT robust to ASR errors is through training the MT system on parallel data that include real or emulated ASR errors. By simulating or using real-world ASR errors in the training data, researchers aim to create MT models that are better equipped to manage the challenges posed by imperfect speech recognition outputs. A method for reducing the mismatch between the ASR output and the MT input was presented in [16]. Their approach involved training the translation system using automatically transcribed speech. In their experiments, they observed an improvement of up to 0.9 BLEU on the English-to-French speech translation task at the International Speech Translation Workshop 2012 (IWSLT 2012). The goal of their approach was to leverage the advantages of ASR output in improving the performance of the translation system. In [17], the authors proposed a technique to simulate errors generated by the ASR system. They utilized the ASR system’s pronunciation dictionary and language model to convert lexical entries into phoneme sequences. These sequences were then used to create a phoneme-to-word MT system that introduced errors in clean text to mimic ASR outputs. By training a speech translation system on synthetic ASR data generated through this technique, the authors observed consistent improvements in translation quality for English-French lectures. Another work [18] introduced a training architecture that focuses on enhancing the robustness of NMT models against speech recognition errors. Their approach addressed both the encoder and decoder components through adversarial learning and data augmentation techniques, respectively. By simultaneously addressing these components, their method aimed to bridge the gap between the output of ASR and the input of MT. 
Experimental results on the IWSLT2018 speech translation task demonstrated that their approach outperformed the baseline by up to 2.83 BLEU points when dealing with noisy ASR output while maintaining comparable performance on clean text. Methods to adapt a robust NMT system that can effectively manage common ASR errors were investigated [19]. In their study, they focused on the application scenarios where transcripts can be post-edited by human experts. To address this, they proposed adaptation strategies to train a single system capable of translating both clean and noisy input without supervision on the input type. This approach aimed to enhance the versatility and adaptability of the NMT system, enabling it to manage different types of input effectively.
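The idea of training MT on text that resembles ASR output can be illustrated with a small noise-injection sketch. It is a deliberate simplification and not the method of [16,17,18]: it only lowercases, strips ASCII punctuation and randomly omits or repeats words, and the function names and probabilities are assumptions chosen for illustration.

```python
import random
import string


def asr_like(text: str) -> str:
    """Lowercase and strip ASCII punctuation to mimic raw ASR output."""
    table = str.maketrans("", "", string.punctuation)
    return " ".join(text.lower().translate(table).split())


def inject_errors(text: str, rng: random.Random,
                  p_drop: float = 0.05, p_repeat: float = 0.05) -> str:
    """Randomly omit or repeat words in an ASR-like transcript."""
    noisy = []
    for word in asr_like(text).split():
        roll = rng.random()
        if roll < p_drop:
            continue  # omission error
        noisy.append(word)
        if roll < p_drop + p_repeat:
            noisy.append(word)  # repetition error
    return " ".join(noisy)


# Pair the noisy source with the clean target to build synthetic training data.
rng = random.Random(0)
noisy_source = inject_errors("Привет, мир!", rng)
```

Homophone substitutions, which the phoneme-based simulation in [17] targets, are not modeled here; they would require a pronunciation dictionary.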

A pipeline system specifically designed for the spoken language translation (SLT) task of the IWSLT19 evaluation campaign was developed in [20]. They performed speech recognition experiments using two distinct end-to-end architectures. By combining these two architecture models into an ensemble, they were able to achieve the best results on the development sets of SLT sub-tasks. In terms of machine translation, they employed a transformer-based multilingual model, which allowed them to generate translations for all sub-tasks using just a single model. The integration of these components enabled Pham et al. to create an effective and efficient system for speech translation in the IWSLT19 evaluation campaign. A cascaded approach that combined high-quality hybrid ASR with transformer-based NMT was proposed in [21]. This cascaded approach aimed to integrate accurate speech recognition provided by the ASR system with the powerful translation capabilities of the NMT system. By combining these two components, researchers aimed to enhance the performance of simultaneous speech translation systems in the IWSLT 2020 evaluation. In their study, the authors of [22] conducted a systematic comparison between state-of-the-art systems representing two paradigms in speech translation: cascade and direct. The study focused on three language directions: English to German, Italian and Spanish. To evaluate the performance of these systems, both automatic and manual evaluations were conducted. The evaluations utilized high-quality professional post-edits and annotations, ensuring accurate and reliable assessments. By systematically comparing the performance of cascade and direct systems across multiple language directions, the study provided valuable insights into the strengths and weaknesses of each approach, contributing to a deeper understanding of speech translation techniques. 
A comparison was made between state-of-the-art cascade and direct approaches for speech translation in [23]. The focus of the study was the under-resourced language pair of Basque-Spanish, which presents challenging linguistic phenomena, including significant differences in morphology and word order. This case study was significant as it provided insights into speech translation beyond the commonly studied English language. By examining the performance of both cascade and direct approaches in this unique language pair, the study contributed to a more comprehensive understanding of speech translation methodologies and their effectiveness in handling diverse linguistic characteristics.

Several prominent speech translation campaigns have made significant contributions to the field. The International Workshop on Spoken Language Translation (IWSLT) is an annual event that serves as a platform for researchers to present their work, participate in shared tasks and engage in evaluations specifically related to spoken language translation [24]. The Workshop on Machine Translation (WMT), although primarily focused on machine translation, also includes a speech translation track where researchers can demonstrate their speech translation systems and take part in evaluations and benchmarking [25].

Speech translation datasets play a crucial role in the development and evaluation of speech translation systems. These datasets consist of parallel audio and text data, enabling the training and evaluation of models that can convert spoken language input into translated text output. The size of speech data in these datasets varies depending on the specific dataset. For instance, MuST-C [26] is a remarkable multilingual speech translation corpus specifically designed to support the training of end-to-end systems for speech-to-text translation (SLT) from English into eight different languages. This dataset stands out due to its substantial size and high quality, making it an invaluable resource for advancing research in the field. MuST-C consists of a minimum of 385 h of audio recordings sourced from the English TED talks. The audio recordings are meticulously aligned at the sentence level with their corresponding manual transcriptions and translations for each target language. The Fisher and Callhome Spanish-English speech translation corpora [27] contribute to the advancement of speech translation research by providing researchers with diverse and accurately aligned speech and text data. The Fisher Spanish dataset is a valuable resource for speech translation research, consisting of 819 transcribed conversations primarily between strangers. It offers approximately 160 h of speech data, meticulously aligned at the utterance level with about 1.5 million tokens. This dataset provides a rich collection of linguistic information for analysis and modeling. The Callhome Spanish corpus, on the other hand, comprises 120 transcripts of spontaneous conversations primarily between friends and family members. It offers approximately 20 h of speech data, aligned at the utterance level, with just over 200,000 words (tokens) of transcribed text. Multilingual TEDx Corpus provides a vast collection of multilingual speeches, contributing to a significant amount of speech data [28]. 
Similarly, the Europarl-ST Corpus offers extensive multilingual recordings of parliamentary speeches [29].

The direct or end-to-end method is another approach in speech translation that differs from the cascade method discussed in this work. Since the focus of this paper is specifically on the cascade method, the direct method falls outside the scope of this study.

3. Speech Translation Dataset (ST-kk-ru)

In our research, we revisit the concept of constructing a speech translation corpus by utilizing the existing speech recognition dataset for the Kazakh language. Rather than starting from scratch to create a new speech translation dataset, which is a time-consuming and expensive task, we opted for a more efficient approach. We decided to extend the existing ISSAI KSC 1 [30] to include speech translation capabilities. The ISSAI KSC 1 dataset already consisted of a substantial amount of transcribed audio data in the Kazakh language, making it a valuable resource for our purposes. This dataset comprises 332 h of transcribed audio data, containing over 153,000 utterances from individuals representing various regions, age groups and genders. The dataset consists of CSV files with the sentences and speaker IDs, as well as an audio file for each sentence. By default, the audio files have randomized IDs, and each sentence has a corresponding audio file.

The extension process involved translating the Kazakh transcriptions into the desired target language, in this case, Russian. This allowed us to repurpose the available data for direct speech translation without the need for additional data collection or annotation. By translating the existing transcriptions, we were able to obtain a bilingual dataset suitable for speech translation experiments. This approach significantly reduced the time and resources required to create a new dataset from the ground up. It also enabled us to utilize the diverse range of speech data available in the original Kazakh transcription corpus, which encompassed various speakers from different regions, age groups and genders.

We followed a semi-automated approach using the Google Translate API and Yandex Translate API to translate transcriptions from Kazakh into Russian. The primary aim of utilizing these translation services was to facilitate the translation process for human translators and assist them effectively, as manually translating 153 thousand sentences would be extremely time-consuming. Furthermore, a manual translation process was conducted by four different translators, all of whom are qualified linguists, to ensure the accuracy and quality of the translations. After performing the described operations, we obtained an intermediate table that contained the following fields:

- id—text (and audio) file identifier,

- original—original text in Kazakh,

- google—sentence obtained as a result of translation using Google Translate API,

- yandex—sentence obtained as a result of translation using Yandex Translate API,

- comments—section to write comments for untranslatable sentences (“өлең” for lyrics, “аударылмайды”—untranslatable sentence) and to write correct translation if any of the above services provided wrong translation.

The translations obtained from Google Translate API and Yandex Translate API exhibited numerous errors, for several reasons. The lexical meaning of the Kazakh sentences was often rendered unclearly or incorrectly. In addition, the links between words and between sentences were not preserved consistently, so the lexical-semantic meaning was lost because words and phrases were not correctly connected with each other. Most of the translations were literal rather than semantic. These problems arise because the original dataset was created mainly for the speech recognition task; thus, the punctuation marks were removed, and the text was flattened. Therefore, to reduce the number of errors, additional checking and translation were performed by the translators. A round of cross-checking was implemented to minimize errors: each translator was assigned the task of reviewing and verifying the translations performed by another translator. This process helped to identify and correct potential errors and inconsistencies, improving the overall quality and reliability of the translated dataset.

Despite the rigorous checking process, the resulting dataset still contained human errors, such as empty cells, wrong translations and the selection of the wrong translation variant (google or yandex). Therefore, data cleaning was needed to obtain a clean dataset formatted according to the MuST-C [26] format.

After obtaining the dataset from annotators (translators), the post-processing involved several steps to prepare the data for further analysis. Firstly, the translated sentences from each translator were merged into a single table. To ensure data quality, null values were dropped from the table, followed by the removal of any duplicate values. The next steps involved filtering out specific rows based on the content of the comments field.

The Kazakh transcriptions within the ISSAI KSC 1 dataset were sourced from various domains, including electronic books, laws and websites such as Wikipedia, news portals and blogs. However, transcriptions derived from electronic books presented several challenges when translating them into Russian. These challenges arose due to factors such as poetic elements embedded in the text or the absence of verbs. As a result, these problematic transcriptions, namely rows containing the word “өлең” or “аударылмайды” in the comments field, were removed to ensure accurate translations. The remaining rows, where the comments were not null, were extracted into a new data frame called “alternative translation”. Further filtering was performed based on the “status” column. Rows with a status equal to “google” were selected, followed by rows with a status equal to “yandex”. The remaining rows that did not fall into either of these categories were also selected. The resulting table was then concatenated with the previously obtained tables for “google”, “yandex” and “comments”. To standardize the field names, they were renamed as “kk” for Kazakh and “ru” for Russian, replacing the previous labels of “google”, “yandex” and “comments”. All of these tables were then concatenated into a single table that included both the “kk” and “ru” fields. Next, the dataset was divided into development, testing and training splits in a manner similar to the MuST-C dataset. The audio (.wav) files associated with each triplet were moved into the corresponding directories for the dev, test and train sets based on the newly created dataset. To facilitate further processing, dev, test and train yaml files were created. These files contained the “kk” and “ru” texts from the dataset, with each row representing a separate entry.
In summary, the post-processing of the data involved merging, dropping null and duplicate values, filtering based on comments and status, renaming fields, concatenating tables, dividing into splits, moving audio files and creating yaml files.
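The filtering logic above can be sketched with plain Python dictionaries. This is a minimal sketch under stated assumptions, not the scripts actually used: the row schema (`id`, `original`, `google`, `yandex`, `comments`, `status`) follows the fields named in the text, a non-empty comment is assumed to hold the corrected translation, and rows with neither status are skipped here because the text does not say which translation they carry.

```python
# Markers written by translators in the comments field:
# "өлең" = lyrics, "аударылмайды" = untranslatable sentence.
SKIP_MARKERS = ("өлең", "аударылмайды")


def build_pairs(rows):
    """Turn annotated rows into deduplicated {"id", "kk", "ru"} records.

    Assumed row schema: id, original, google, yandex, comments, status.
    """
    pairs, seen = [], set()
    for row in rows:
        comment = (row.get("comments") or "").strip()
        if any(marker in comment for marker in SKIP_MARKERS):
            continue  # flagged by translators as not translatable
        if comment:
            ru = comment  # assumption: the comment is the alternative translation
        elif row.get("status") in ("google", "yandex"):
            ru = (row.get(row["status"]) or "").strip()
        else:
            continue  # assumption: no usable translation selected, so skip
        kk = (row.get("original") or "").strip()
        if not kk or not ru or (kk, ru) in seen:
            continue  # drop empty cells and duplicate pairs
        seen.add((kk, ru))
        pairs.append({"id": row["id"], "kk": kk, "ru": ru})
    return pairs
```

The resulting records already use the standardized “kk” and “ru” field names, so they can be split into dev, test and train sets and written out in whatever serialization the downstream toolkit expects.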

Overall, our approach to translating the Kazakh transcriptions into Russian proved to be a cost-effective and efficient solution for obtaining a speech translation dataset, enabling us to focus our efforts on developing and evaluating speech translation models rather than investing extensive resources in data collection. Table 1 shows the statistics of the received speech translation dataset.

Table 1. Statistics of ST-kk-ru dataset.

4. Materials and Methods

4.1. Speech Recognition

This subsection focuses on additional datasets specifically designed for speech recognition in the Kazakh language, along with the approaches employed to develop accurate speech recognition modules. The availability of high-quality datasets plays a crucial role in training robust speech recognition systems. Furthermore, various approaches and techniques have been explored to improve the accuracy and performance of the speech recognition modules.

4.1.1. Additional Dataset

ISSAI KSC. The ISSAI KSC is the largest publicly accessible database created to support Kazakh speech and language processing applications [30]. It consists of over 332 h of data collected through a web-based speech recording platform, which invited volunteers to read sentences from various sources, including books, laws, Wikipedia, news portals and blogs.

M2ASRKazakh-78. The speech corpus [31] created by Xinjiang University and made available through the M2ASR Free Data Program consists of 78 h of recordings obtained from 96 students using a variety of recording equipment. The recordings were made in quiet environments, and the speakers read the sentences in a scripted, reading style. The transcriptions are written using Latin characters and follow the rules established by the authors.

Kazcorpus. The kazcorpus acoustic corpus [32] consists of two independent subcorpora: kazspeechdb and kazmedia. The kazspeechdb corpus was used as a starting point in creating the corpus for broadcast news. The size of this subcorpus is 22 h of speech. The kazmedia subcorpus consists of audio and text data collected from the official websites of the television news agencies, namely “Khabar”, “Astana TV” and “Channel 31”. The size of this subcorpus is 21 h of speech.

KazLibriSpeech. We collected audio recordings with the corresponding texts from open sources. The amount of data collected is 992 h. Each audio file corresponds to one common file with the text of the audiobook, i.e., the audio and texts are not aligned either by sentences or by words. Therefore, we employed a segmentation approach based on the connectionist temporal classification (CTC) algorithm, which extracts accurate audio-text alignments even when the audio recording includes unknown speech sections at the beginning or end. In our case, this model identifies speech segments in the audio files at the sentence level. The speech recognition model required for segmentation was trained on the ISSAI KSC dataset using the ESPnet toolkit [33].

More details of the available corpora for the speech recognition in the Kazakh language are shown in Table 2.

Table 2. The structure of the additional speech recognition corpora for the Kazakh language.

4.1.2. Methods

DNN-HMM. Chain models are a type of DNN-HMM model implemented using nnet3 in Kaldi [34], and they can be considered a separate design point in the space of acoustic models. The neural network’s output uses a frame rate that is three times lower, which substantially reduces the amount of computation required during testing and makes real-time decoding considerably simpler. The models are trained from the start with a sequence-level objective function, namely the log probability of the correct sequence. It is essentially MMI implemented without lattices on the GPU, by performing a full forward-backward computation on a decoding graph derived from a phone n-gram language model.

E2E transformer. An encoder-decoder architecture based on the Transformer was trained using the ESPnet framework [33], jointly with the connectionist temporal classification (CTC) objective function. The input speech was represented using 80-dimensional filter bank features with pitch, computed every 10 ms over a 25 ms window. To process the acoustic features for the E2E architecture, a few initial blocks of the VGG network were used. The E2E transformer ASR system comprised 12 encoder and 6 decoder blocks, with 4 heads in the self-attention layer, 256-dimensional hidden states and a feed-forward network dimension of 2048. The model was trained for 100 epochs using the Adam optimizer with an initial learning rate of 10 and 30,000 warm-up steps; the dropout rate and label smoothing were both set to 0.1. To assist decoding, a character-level LM, a two-layer RNN with 650 long short-term memory (LSTM) units per layer, was built from the transcripts of the training set and used during the decoding stage.
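A learning rate of 10 looks unusually large for Adam. The text does not state which scheduler was used; assuming the Noam schedule commonly used for Transformer training in ESPnet recipes, the value 10 acts as a scale factor rather than as the step size itself. The sketch below, with a hypothetical helper `noam_lr`, shows the effective learning rate under that assumption.

```python
def noam_lr(step: int, scale: float = 10.0, d_model: int = 256,
            warmup: int = 30_000) -> float:
    """Noam schedule: linear warm-up, then inverse-square-root decay.

    Assumption: `scale` is the reported learning rate of 10, `d_model` the
    256-dimensional hidden state and `warmup` the 30,000 warm-up steps.
    """
    if step < 1:
        raise ValueError("step must be >= 1")
    return scale * d_model ** -0.5 * min(step ** -0.5, step * warmup ** -1.5)


# The schedule peaks when step == warmup.
peak = noam_lr(30_000)
```

Under these assumptions the effective learning rate rises linearly for 30,000 steps to a peak of roughly 3.6 × 10⁻³ and then decays with the inverse square root of the step count.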

Wav2Vec 2.0 and XLSR-53. The experiments were conducted using the Fairseq platform [35]. The Wav2Vec 2.0 base model [36] was pre-trained with unlabeled speech data using various configurations, such as encoder layerdrop set to 0.05, dropout_input, dropout_features and feature_grad_mult set to 0.1 and encoder_embed_dim set to 768. The training hyperparameters included a learning rate of 5 × 10⁻⁴, with warm-up over the first 10% of the training time. The number of updates was set to 800,000, and the maximum number of tokens was set to 1,200,000. Additionally, the Adam optimizer was used, as in the original work. Standard fine-tuning procedures were used with the following configuration: the number of updates was set to 160,000, and the maximum number of tokens was set to 2,800,000. The Adam optimizer was used, and other parameters included a learning rate of 3 × 10⁻⁵ and a gradient accumulation of 12 steps. The batch size during training was defined automatically by the framework, depending on the predefined maximum number of tokens. During training, the best model was selected based on the lowest WER obtained on the validation set.
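Since model selection relies on WER, it may help to recall how the metric is computed: the word-level edit distance between reference and hypothesis, divided by the number of reference words. The following is a minimal sketch with a hypothetical `wer` helper; evaluation toolkits additionally apply text normalization, which is omitted here.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


# One substitution and one deletion against a four-word reference.
score = wer("a b c d", "a x c")
```

Note that WER can exceed 1.0 when the hypothesis contains many insertions.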

The XLSR-53 model was pre-trained using the same configuration as the Wav2Vec 2.0 large model: the encoder consisted of 24 layers with a dimension of 1024 and 16 attention heads, with no dropout. Fine-tuning parameters were defined using the same configurations used in the original Wav2Vec 2.0 experiment with XLSR.

After fine-tuning the model, decoding was performed using a 3-gram language model, which was trained using KenLM on ISSAI KSC1, Kazcorpus and KazLibriSpeech. During decoding, a beam search decoder was used, with the beam size set to 1500.

4.2. Machine Translation

In this subsection, we delve into additional datasets that have been specifically designed for machine translation tasks involving the Kazakh-Russian language pair. We also examine the different approaches and techniques employed to develop accurate machine translation modules. The focus is on exploring methods to enhance the accuracy and performance of these modules, thereby enabling more effective and reliable translations between the Kazakh and Russian languages.

4.2.1. Additional Dataset

A parallel corpus for the Kazakh-Russian language pair was assembled from two distinct sources (Table 3). The first source was built in our laboratory [36]. It contains over 890 thousand parallel sentences extracted from online news articles across 15 websites. The chosen websites all belong to state bodies, national companies and other quasi-governmental establishments, which adhere to the same language policy of making bilingual releases in the Kazakh and Russian languages.

Table 3.

The size of parallel corpora for the Kazakh language.

Moreover, such websites almost always provide page-level alignment, e.g., a Russian version of a page contains a direct link to the Kazakh version of the same page and vice versa. Thus, document alignment was solved at the crawling stage. After the initial alignment, a cleaning procedure was performed, which boils down to the removal of (i) duplicate sentences, (ii) sentence pairs where both sentences are identical, (iii) sentence pairs where at least one of the sentences does not contain a single alphabetic character and (iv) sentences longer than fifty or shorter than three alphanumeric tokens. Such a cleaning procedure removes only blatant misalignments; however, many aligned pairs pass the cleaning test without being valid translations of each other. To filter out such cases, a random forest classifier was trained on a manually labeled dataset of 1600 sentence pairs. The classifier uses a combination of 36 features and, for a given pair of sentences, estimates the probability of the pair being parallel. The aligned and cleaned sentence pairs were fed to the classifier, producing a list of pairs ranked by the classifier’s estimates. The pairs for which the estimate was 0 were then removed, yielding the final dataset of 893,234 parallel sentences. As a second source for the Kazakh-Russian parallel corpus, the language resource created by researchers from KazNU [38] was chosen. It consists of over 86 thousand parallel sentences and can be used under Creative Commons licenses.
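The four cleaning rules listed above can be sketched as a simple filter. This is a minimal illustration under stated assumptions, not the code used to build the corpus: duplicates are interpreted as repeated sentence pairs, tokens are split on whitespace, and the names `clean_pairs`, `num_alnum_tokens` and `has_alpha` are hypothetical.

```python
from typing import Iterable, List, Tuple

Pair = Tuple[str, str]  # (Kazakh sentence, Russian sentence)


def num_alnum_tokens(sentence: str) -> int:
    """Count whitespace-separated tokens containing at least one letter or digit."""
    return sum(1 for tok in sentence.split() if any(ch.isalnum() for ch in tok))


def has_alpha(sentence: str) -> bool:
    """True if the sentence contains at least one alphabetic character."""
    return any(ch.isalpha() for ch in sentence)


def clean_pairs(pairs: Iterable[Pair], min_len: int = 3, max_len: int = 50) -> List[Pair]:
    seen = set()
    kept: List[Pair] = []
    for kk, ru in pairs:
        kk, ru = kk.strip(), ru.strip()
        if (kk, ru) in seen:                        # (i) duplicate pair
            continue
        if kk == ru:                                # (ii) both sides identical
            continue
        if not (has_alpha(kk) and has_alpha(ru)):   # (iii) a side with no letters
            continue
        if any(not (min_len <= num_alnum_tokens(s) <= max_len)
               for s in (kk, ru)):                  # (iv) too short or too long
            continue
        seen.add((kk, ru))
        kept.append((kk, ru))
    return kept
```

Pairs that survive this filter would then be passed to the classifier, which handles the subtler cases that rule-based cleaning cannot detect.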

4.2.2. Methods

Baseline transformer model. This subsection describes the technical details of training baseline neural machine translation (NMT) models based on the Transformer architecture. They were trained using Joey NMT, a simple NMT toolkit based on PyTorch. Despite its emphasis on simplicity, Joey NMT supports standard architectures (RNNs, transformers), fast beam search, weight tying and more in a compact code base, and it achieves performance on common benchmarks that is on par with more complex toolkits [39]. The performance of transformer-based NMT depends on the amount of data available for training and on the configuration parameters. The encoder of the model for the Kazakh-Russian language pair uses 512-dimensional embeddings and hidden layers; the decoder has the same dimensions. The model was trained using the Adam optimizer with a maximum learning rate of 0.0003 and a minimum of 10⁻⁸. A dropout probability of 0.3 was set for both the encoder and the decoder. The tokenization strategy also mattered: NMT systems often tokenize at the word level, but performance improved substantially when byte pair encoding (BPE) was used. Therefore, BPE with a joint vocabulary of 32 thousand subword units was applied.
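To illustrate how BPE builds subword units, the sketch below learns merge operations from a toy word-frequency table by repeatedly merging the most frequent adjacent symbol pair. It follows the general idea of BPE for NMT rather than the specific tool used in the experiments, which the text does not name; the toy vocabulary and the function names are assumptions for illustration.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple


def get_pair_counts(vocab: Dict[str, int]) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs: Counter = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair: Tuple[str, str], vocab: Dict[str, int]) -> Dict[str, int]:
    """Replace every occurrence of the symbol pair with its concatenation."""
    pattern = re.compile(r"(?<!\S)" + re.escape(" ".join(pair)) + r"(?!\S)")
    merged = "".join(pair)
    return {pattern.sub(lambda _m: merged, word): freq for word, freq in vocab.items()}


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> List[Tuple[str, str]]:
    """Return the list of learned merges, most frequent first."""
    # Each word starts as a sequence of characters plus an end-of-word marker.
    vocab = {" ".join(word) + " </w>": freq for word, freq in word_freqs.items()}
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges
```

In a joint setup such as the one described above, the merges would be learned on the concatenation of the Kazakh and Russian training text, so that both languages share one subword vocabulary.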

Fine-tuned mBART model. BART is a method for pre-training a complete denoising autoencoder that can handle sequence-to-sequence tasks [40]. It was originally trained on a massive corpus of monolingual English data. BART is implemented as a standard Transformer-based sequence-to-sequence model comprising a bidirectional encoder and a left-to-right autoregressive decoder. It can thus be seen as combining two types of architectures: BERT, which has a bidirectional encoder, and GPT, which has a left-to-right decoder. The base BART model has six layers in each of the encoder and decoder, while the large model has twelve.

A multilingual extension of BART, mBART, is pre-trained on monolingual corpora in multiple languages. Like the large BART model, mBART uses 12 layers each in the encoder and decoder, with layer normalization following each of them. The original version covers 25 languages; an expanded version covers 50 languages.

The architecture of mBART, which we utilized in our work, follows the conventional sequence-to-sequence Transformer design, with a 12-layer encoder and a 12-layer decoder, a model dimension of 1024 and 16 attention heads. Training was bidirectional, with 0.3 dropout, 0.2 label smoothing, 2500 warm-up steps and a maximum learning rate of 3 × 10⁻⁵, as outlined in [40]. The model was trained for up to 100 thousand updates, and the final model was selected based on validation likelihood. For evaluation, we used beam search with a beam size of 5 and computed BLEU scores against the tokenized reference translations. To train our model, we employed Fairseq [37].

5. Results

ASR performance is commonly measured using the word error rate (WER), which is computed on lower-cased, tokenized texts without punctuation. WER compares the ASR system’s output with the reference transcript and is calculated as the number of substituted, inserted and deleted words divided by the number of words in the reference, expressed as a percentage. Machine translation (MT) and speech-to-text translation (SLT) results, on the other hand, are typically evaluated using the BLEU (Bilingual Evaluation Understudy) metric. BLEU measures the similarity between the machine-generated translation and one or more reference translations by comparing their n-grams (contiguous sequences of words).
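The WER definition above, and the analogous character error rate (CER) reported later, can be sketched with a standard edit-distance computation. This is a minimal illustration rather than the scoring tool used in the experiments; in particular, removing spaces before computing CER is a choice made for this example, and toolkits differ on that point.

```python
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Minimum number of substitutions, insertions and deletions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,           # deletion
                            curr[j - 1] + 1,       # insertion
                            prev[j - 1] + cost))   # substitution or match
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent, on lower-cased whitespace tokens."""
    ref_words = reference.lower().split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return 100.0 * edit_distance(ref_words, hypothesis.lower().split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate in percent, ignoring spaces (an assumption of this sketch)."""
    ref_chars = list(reference.lower().replace(" ", ""))
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    hyp_chars = list(hypothesis.lower().replace(" ", ""))
    return 100.0 * edit_distance(ref_chars, hyp_chars) / len(ref_chars)
```

For instance, with the reference `a b c d` and the hypothesis `a x c`, there is one substitution and one deletion over four reference words, so `wer` returns 50.0.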

The experiments were conducted on the NVIDIA DGX-1 server, which is equipped with eight V100 GPUs.

5.1. Speech Recognition

The DNN-HMM, E2E transformer and Wav2Vec 2.0 models were assessed using the corpora outlined in Section 4.1.1. Table 4, Table 5 and Table 6 present the word error rate (WER) results of the ASR systems. Each architecture was trained in different scenarios, employing various parameters. However, for validation and testing purposes, only the development and test sets of the ST-kk-ru dataset were used consistently across all cases.

Table 4.

DNN-HMM models’ performance.

Table 5.

E2E transformer models’ performance.

Table 6.

Wav2Vec 2.0 models’ performance.

According to the findings presented in Table 4, integrating the ISSAI KSC1 and Kazcorpus datasets during the training phase resulted in significant improvement in performance. This integration led to decreased WER and CER across both the validation and test sets.

By incorporating the ISSAI KSC1 and Kazcorpus datasets into the training process, substantial enhancements were observed in the performance of the E2E transformer model (Table 5). The best results for the character error rate (CER) and word error rate (WER) achieved by the E2E transformer on the test set were 2.8 and 8.7, respectively. Notably, the utilization of an LSTM language model had a significant positive impact on the E2E transformer model, leading to improved performance. Additionally, the application of data augmentation techniques such as SpeedPerturb and SpecAugment proved to be highly effective in enhancing the Kazakh E2E ASR, resulting in further improvements.

Table 6 presents the word error rate and character error rate scores of the fine-tuned Wav2Vec 2.0 Base and XLSR-53 models. In the pre-training phase, only the KazLibriSpeech and M2ASRKazakh-78 corpora were used, while the ST-kk-ru (train + dev) or ISSAI KSC1 (train + dev) and Kazcorpus data were employed for fine-tuning. The results show that the Wav2Vec 2.0 Base model pre-trained on the KazLibriSpeech and M2ASRKazakh-78 corpora and then fine-tuned on the ISSAI KSC1 (train + dev) and Kazcorpus data performs strongly. It achieves a CER of 2.8 and a WER of 8.7 on the test set, which closely matches the best result achieved by the E2E transformer model. These findings indicate that pre-training significantly enhances the model’s performance, and the size of the dataset used for pre-training plays a crucial role. Moreover, the XLSR-53 model, trained on a large and diverse corpus of speech data from 53 languages, also demonstrates competitive results. It is worth noting that the language model applied to all the models provides significant benefits, since it helps to refine the output of the models and reduce errors, leading to better results in terms of word error rate and character error rate.

5.2. Machine Translation

Two experiments were conducted for the Kazakh-Russian language pair to investigate the effect of the training data on a baseline transformer model. In the first experiment, only the ST-kk-ru corpus was used; in the second, the NU and KazNU corpora were used in addition. Because the training set in the first experiment was small, the performance of the baseline model was relatively low, with a BLEU score of 17.68 on the test set. The second experiment, by contrast, yielded a BLEU score of 25.65 on the test set (Table 7). Furthermore, we conducted a fine-tuning experiment on the facebook/mbart-large-50-many-to-many-mmt model, which supports 50 languages, including Kazakh and Russian. We observed that the most important factor was pre-training itself. The Kazakh-Russian language pair obtained a significant gain in BLEU, which demonstrates that using pre-trained models can considerably improve translation performance. As shown in Table 7, the fine-tuned model improves significantly over the baseline experiments.
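For readers unfamiliar with how BLEU scores such as those above are obtained, the following sketch computes a corpus-level BLEU with one reference per sentence: clipped n-gram precisions for n = 1 to 4 are combined by a geometric mean and multiplied by a brevity penalty. It is a simplified illustration on whitespace tokens, without smoothing, and is not the evaluation script used for the reported results; the function names are hypothetical.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[str], references: List[str], max_n: int = 4) -> float:
    """Corpus-level BLEU (0-100) with a single reference per hypothesis."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if min(matches) == 0:
        return 0.0  # no smoothing: any zero precision gives a zero score
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100.0 * brevity_penalty * math.exp(log_precision)
```

A hypothesis identical to its reference scores 100, while a hypothesis that drops the final word of a five-word reference keeps perfect precision but is penalized for brevity, giving roughly 77.9.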

Table 7.

BLEU score on the test set in the MT module.

5.3. Speech Translation

Our cascade speech translation system consists of separate modules for ASR and MT. To ensure a comprehensive evaluation of the system’s performance, we initially chose the top-performing models trained exclusively on the ST-kk-ru dataset. This initial result is presented as ID 1 in Table 8. Afterwards, we selected models that demonstrated significant improvements when additional datasets were utilized (ID 2). These selected models were then evaluated using the test set from the ST-kk-ru dataset to assess their performance within a broader context, specifically in a pipeline mode. In this pipeline mode, the speech input is initially converted into text using ASR technology. The resulting text is then translated from the source language to the target language using MT techniques. To gauge the quality of the MT module’s output, we employed the ground truth test data from the ST-kk-ru dataset and calculated the BLEU score.

Table 8.

Statistics of ST-kk-ru dataset.

6. Discussion

The cascade approach presents challenges due to the differing training methodologies employed for ASR and MT systems. The results of the conducted experiments on cascade speech translation from Kazakh to Russian gave rise to several crucial questions that warrant further investigation. Among these inquiries, it is vital to explore the contributions of ASR and MT modules to the overall evaluation. Understanding the impact of each module’s performance on the final translation output will provide valuable insights for optimizing the entire cascade system. To increase the performance of ASR and MT modules, a combination of data and model enhancements is essential. Data augmentation techniques, such as speed perturbation and paraphrasing, can expand the training dataset, while domain adaptation enables fine-tuning for specific applications. Multilingual training and transfer learning from pre-trained models enhance generalization capabilities. Ensemble methods and advanced language models with attention mechanisms improve overall accuracy and robustness.

The issue of error propagation between the ASR and MT modules must be thoroughly examined. Taking appropriate measures to mitigate error propagation will prevent inaccuracies in the speech recognition phase from adversely affecting the translation output, ensuring a more reliable and coherent end result. For instance, a mismatch occurs between the ASR system’s output and the expected input of the MT system. The ASR output lacks punctuation and case information and may contain errors such as omissions, repetitions and homophones. Conversely, MT systems are typically trained on cleaner written text. Moreover, it is important to note that although there are ample large-scale parallel corpora available for ASR and MT individually, establishing the necessary connections between them to create speech-to-translation pairs for end-to-end models or speech-to-transcription-to-translation triplets for robust pipeline speech translation systems is challenging. Implementing techniques such as confidence scoring or hypothesis rescoring can help in identifying and correcting ASR errors before they affect the translation.
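One common way to reduce the mismatch described above is to normalize written text so that it resembles ASR output before it is used on the MT side. The sketch below is only an illustration of that idea and is not something the reported system does; the function name `to_asr_style` is hypothetical, and the choice to treat all Unicode punctuation and symbol characters as separators is an assumption.

```python
import unicodedata


def to_asr_style(text: str) -> str:
    """Lower-case text and strip punctuation so it resembles raw ASR output."""
    chars = []
    for ch in text.lower():
        category = unicodedata.category(ch)
        if category.startswith("P") or category.startswith("S"):
            chars.append(" ")  # punctuation and symbols become separators
        else:
            chars.append(ch)
    # Collapse the runs of whitespace left behind by removed characters.
    return " ".join("".join(chars).split())
```

Note that this simple rule also splits hyphenated words into separate tokens, which may or may not be desirable depending on how the ASR system writes such words.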

The quality of the created ST-kk-ru corpus is a critical factor influencing the performance of cascade speech translation. Investigating the impact of data size, diversity and domain relevance will shed light on how the dataset characteristics influence translation accuracy and fluency. As shown in Table 8, the cascade speech translation system that uses only modules trained on ST-kk-ru performs worse than the system whose modules were trained with additional datasets; incorporating the additional datasets leads to an improvement of approximately 2 BLEU points. It is worth mentioning that our system does not include a punctuation model, so the ASR output is fed directly into the MT module as is. These observations emphasize the importance of enhancing the speech translation dataset for improved cascade speech translation. To achieve this, the focus lies on two key aspects: increasing the dataset’s size and diversity while ensuring domain relevance, and implementing data augmentation techniques to enhance dataset diversity and model generalization. Moreover, conducting human evaluations is suggested to ensure that the dataset meets high-quality standards.

By addressing these pertinent questions, researchers can gain a comprehensive understanding of the strengths and limitations of the cascade speech translation approach from Kazakh to Russian. Moreover, this study’s insights will not only be valuable for Kazakh-to-Russian translation but could also have broader implications for other low-resource language pairs. The challenges encountered and the strategies devised during the research process can be adapted and applied to similar language pairs facing similar limitations, thereby amplifying the impact of this work. Furthermore, understanding the intricacies of speech translation for low-resource languages opens up new avenues for exploring innovative solutions. As we delve deeper into the nuances of the Kazakh language and its translation to Russian, we might uncover unique opportunities for leveraging context, syntax and domain-specific knowledge to enhance the overall translation accuracy and fluency.

7. Conclusions

In conclusion, the cascade speech translation approach to translating from Kazakh to Russian faces certain challenges but remains a practical choice for achieving accurate and effective translations. The distinct training of the ASR and MT systems using different parallel corpora leads to a mismatch between the ASR output and the MT system’s expected input. The ASR output lacks important linguistic elements such as punctuation and case information and may contain errors.

Despite these challenges, the cascade approach continues to be a viable solution. By leveraging the strengths of ASR technology to convert speech into text and then utilizing MT techniques for language translation, the cascade approach allows for effective communication between Kazakh and Russian speakers. It enables the delivery of translations that, although not perfect, provide valuable linguistic understanding between the two languages.

As research and development in speech translation advance, efforts should be made to address the limitations of the cascade approach. This includes improving the alignment between ASR and MT systems, mitigating errors introduced during ASR conversion and finding innovative ways to link and leverage existing parallel corpora.

In our future work, we intend to explore alternative ASR and MT architectures in order to enhance performance. Additionally, we aim to incorporate a punctuation model to restore the punctuation in the ASR output. Furthermore, we plan to investigate the end-to-end speech translation approach using the ST-kk-ru dataset, comparing it with the cascade method. These efforts will contribute to a more comprehensive analysis and potential improvements in the field of speech translation. Overall, the cascade speech translation from Kazakh to Russian serves as a stepping stone in breaking down language barriers and facilitating cross-cultural communication, thus contributing to the advancement of multilingual interaction in various domains. In addition to our primary research objective, we intend to delve deeper into the intricacies of the end-to-end approach to Kazakh-Russian translation. Our investigation will encompass various aspects, such as the utilization of neural network architectures, attention mechanisms and sequence-to-sequence models. By examining these elements, we aim to gain a comprehensive understanding of how the end-to-end approach fares in comparison to the traditional cascade method.

Author Contributions

Conceptualization, experiments and software, Z.K. and T.I.; methodology, task management and verification of results, Z.K.; writing—draft preparation and editing, Z.K.; funding acquisition, Z.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science Committee of the Ministry of Education and Science of the Republic of Kazakhstan (Grant No. AP13068635).

Data Availability Statement

Data will be available on request from the authors.

Acknowledgments

We would like to thank Moldir Tolegenova, Aidana Zhussip, Aigul Shukemanova and Dinara Kabdylova for their translation and verification work.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chan, W.; Jaitly, N.; Le, Q.; Vinyals, O. Listen, attend and spell: A neural network for large vocabulary conversational speech recognition. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 4960–4964.

2. Irie, K.; Zeyer, A.; Schlüter, R.; Ney, H. Language Modeling with Deep Transformers. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019; pp. 3905–3909.

3. Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. SpecAugment: A Simple Data Augmentation Method for Automatic Speech Recognition. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019; pp. 2613–2617.

4. Bahdanau, D.; Cho, K.H.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2015, arXiv:1409.0473.

5. Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 1715–1725.

6. Sennrich, R.; Haddow, B.; Birch, A. Improving Neural Machine Translation Models with Monolingual Data. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 86–96.

7. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

8. Waibel, A.; Jain, A.N.; McNair, A.E.; Saito, H.; Hauptmann, A.G.; Tebelskis, J. JANUS: A speech-to-speech translation system using connectionist and symbolic processing strategies. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Toronto, ON, Canada, 14–17 May 1991; pp. 793–796.

9. Lavie, A.; Gates, D.; Gavalda, M.; Tomokiyo, L.M.; Waibel, A.; Levin, L. Multi-lingual translation of spontaneously spoken language in a limited domain. In Proceedings of the 16th International Conference on Computational Linguistics (COLING), Copenhagen, Denmark, 5–9 August 1996; pp. 442–447.

10. Wang, Y.Y.; Waibel, A. A connectionist model for dialog processing. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Toronto, ON, Canada, 14–17 May 1991; pp. 785–788.

11. Liu, F.H.; Gu, L.; Gao, Y.; Picheny, M. Use of statistical N-gram models in natural language generation for machine translation. In Proceedings of the 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Hong Kong, China, 6–10 April 2003; pp. 636–639.

12. Shimizu, T.; Ashikari, Y.; Sumita, E.; Zhang, J.; Nakamura, S. NICT/ATR Chinese-Japanese-English speech-to-speech translation system. Tsinghua Sci. Technol. 2008, 13, 540–544.

13. Ney, H. Speech translation: Coupling of recognition and translation. In Proceedings of the 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Phoenix, AZ, USA, 15–19 March 1999; pp. 517–520.

14. Quan, V.H.; Federico, M.; Cettolo, M. Integrated n-best re-ranking for spoken language translation. In Proceedings of the Interspeech, Lisbon, Portugal, 4–8 September 2005; pp. 3181–3184.

15. Lee, D.; Lee, J.; Lee, G.G. POSSLT: A Korean to English spoken language translation system. In Proceedings of the Human Language Technologies: The Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-HLT), Rochester, NY, USA, 22–27 April 2007; pp. 7–8.

16. Peitz, S.; Wiesler, S.; Nußbaum-Thom, M.; Ney, H. Spoken language translation using automatically transcribed text in training. In Proceedings of the 9th International Workshop on Spoken Language Translation, Hong Kong, China, 6–7 December 2012; pp. 276–283.

17. Ruiz, N.; Gao, Q.; Lewis, W.; Federico, M. Adapting machine translation models toward misrecognized speech with text-to-speech pronunciation rules and acoustic confusability. In Proceedings of the Interspeech, Dresden, Germany, 6–10 September 2015; pp. 2247–2251.

18. Cheng, Q.; Fan, M.; Han, Y.; Huang, J.; Duan, Y. Breaking the Data Barrier: Towards Robust Speech Translation via Adversarial Stability Training. arXiv 2019, arXiv:1909.11430.

19. Di Gangi, M.; Enyedi, R.; Brusadin, A.; Federico, M. Robust Neural Machine Translation for Clean and Noisy Speech Transcripts. arXiv 2019, arXiv:1910.10238.

20. Pham, N.Q.; Nguyen, T.S.; Ha, T.L.; Hussain, J.; Schneider, F.; Niehues, J.; Stüker, S.; Waibel, A. The IWSLT 2019 KIT Speech Translation System. In Proceedings of the 16th International Conference on Spoken Language Translation, Hong Kong, China, 2–3 November 2019.

21. Bahar, P.; Wilken, P.; Alkhouli, T.; Guta, A.; Golik, P.; Matusov, E.; Herold, C. Start-before-end and end-to-end: Neural speech translation by AppTek and RWTH Aachen University. In Proceedings of the 17th International Conference on Spoken Language Translation, Virtual Meeting, 9–10 July 2020; pp. 44–54.

22. Bentivogli, L.; Cettolo, M.; Gaido, M.; Karakanta, A.; Martinelli, A.; Negri, M.; Turchi, M. Cascade versus Direct Speech Translation: Do the Differences Still Make a Difference? In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Virtual Meeting, 1–6 August 2021; pp. 2873–2887.

23. Etchegoyhen, T.; Arzelus, H.; Gete, H.; Alvarez, A.; Torre, I.G.; Martín-Doñas, J.M.; González-Docasal, A.; Fernandez, E.B. Cascade or direct speech translation? A case study. Appl. Sci. 2022, 12, 1097.

24. Bentivogli, L.; Federico, M.; Stüker, S.; Cettolo, M.; Niehues, J. The IWSLT Evaluation Campaign: Challenges, Achievements, Future Directions. In Proceedings of the LREC 2016 Workshop on Translation Evaluation: From Fragmented Tools and Data Sets to an Integrated Ecosystem, Portorož, Slovenia, 23–28 May 2016; pp. 14–19.

25. Bojar, O.; Federmann, C.; Haddow, B.; Koehn, P.; Post, M.; Specia, L. Ten years of WMT evaluation campaigns: Lessons learnt. In Proceedings of the LREC 2016 Workshop on Translation Evaluation: From Fragmented Tools and Data Sets to an Integrated Ecosystem, Portorož, Slovenia, 23–28 May 2016; pp. 27–34.

26. Di Gangi, M.A.; Cattoni, R.; Bentivogli, L.; Negri, M.; Turchi, M. MuST-C: A multilingual speech translation corpus. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 2012–2017.

27. Post, M.; Kumar, G.; Lopez, A.; Karakos, D.; Callison-Burch, C.; Khudanpur, S. Improved speech-to-text translation with the Fisher and Callhome Spanish-English speech translation corpus. In Proceedings of the 10th International Workshop on Spoken Language Translation, Heidelberg, Germany, 5–6 December 2013.

28. Salesky, E.; Wiesner, M.; Bremerman, J.; Cattoni, R.; Negri, M.; Turchi, M.; Oard, D.W.; Post, M. The Multilingual TEDx Corpus for Speech Recognition and Translation. In Proceedings of the Interspeech, Brno, Czech Republic, 30 August–3 September 2021; pp. 3655–3659.

29. Iranzo-Sánchez, J.; Silvestre-Cerda, J.A.; Jorge, J.; Roselló, N.; Giménez, A.; Sanchis, A.; Civera, J.; Juan, A. Europarl-ST: A multilingual corpus for speech translation of parliamentary debates. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 8229–8233.

30. Khassanov, Y.; Mussakhojayeva, S.; Mirzakhmetov, A.; Adiyev, A.; Nurpeiissov, M.; Varol, H.A. A Crowdsourced Open-Source Kazakh Speech Corpus and Initial Speech Recognition Baseline. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics, Virtual Meeting, 19–23 April 2021; pp. 697–706.

31. Shi, Y.; Hamdullah, A.; Tang, Z.; Wang, D.; Zheng, T.F. A free Kazakh speech database and a speech recognition baseline. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 745–748.

32. Makhambetov, O.; Makazhanov, A.; Yessenbayev, Z.; Matkarimov, B.; Sabyrgaliyev, I.; Sharafudinov, A. Assembling the Kazakh language corpus. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1022–1031.

33. Watanabe, S.; Hori, T.; Karita, S.; Hayashi, T.; Nishitoba, J.; Unno, Y.; Enrique Yalta Soplin, N.; Heymann, J.; Wiesner, M.; Chen, N.; et al. ESPnet: End-to-End Speech Processing Toolkit. In Proceedings of the Interspeech, Hyderabad, India, 2–6 September 2018; pp. 2207–2211.

34. Povey, D.; Ghoshal, A.; Boulianne, G. The Kaldi Speech Recognition Toolkit. In Proceedings of the IEEE 2011 Workshop on Automatic Speech Recognition and Understanding, Waikoloa, HI, USA, 11–15 December 2011.

35. Baevski, A.; Zhou, Y.; Mohamed, A.; Auli, M. wav2vec 2.0: A framework for self-supervised learning of speech representations. Adv. Neural Inf. Process. Syst. 2020, 33, 12449–12460.

36. Makazhanov, A.; Myrzakhmetov, B.; Kozhirbayev, Z. On various approaches to machine translation from Russian to Kazakh. In Proceedings of the 5th International Conference on Turkic Languages Processing, Kazan, Russia, 18–21 October 2017; pp. 195–209.

37. Ott, M.; Edunov, S.; Baevski, A.; Fan, A.; Gross, S.; Ng, N.; Grangier, D.; Auli, M. fairseq: A Fast, Extensible Toolkit for Sequence Modeling. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 48–53.

38. Balzhan, A.; Akhmadieva, Z.; Zholdybekova, S.; Tukeyev, U.; Rakhimova, D. Study of the problem of creating structural transfer rules and lexical selection for the Kazakh-Russian machine translation system on Apertium platform. In Proceedings of the International Conference “Turkic Languages Processing”, Kazan, Russia, 17–19 September 2015; pp. 5–9.

39. Kreutzer, J.; Bastings, J.; Riezler, S. Joey NMT: A Minimalist NMT Toolkit for Novices. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 109–114.

40. Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual denoising pre-training for neural machine translation. Trans. Assoc. Comput. Linguist. 2020, 8, 726–742.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).