Abstract

With the rise of DDoS attacks, several machine learning-based attack detection models have been used to mitigate malicious behavioral attacks. Understanding how machine learning models work is not trivial. This is particularly true for complex and nonlinear models, such as deep learning models that have high accuracy. The struggle to explain these models creates a tension between accuracy and explanation. Recently, different methods have been used to explain deep learning models and address ambiguity issues. In this paper, we utilize the LSTM model to classify DDoS attacks. We then investigate the explanation of LSTM using LIME, SHAP, Anchor, and LORE methods. Predictions of 17 DDoS attacks are explained by these methods, where common explanations are obtained for each class. We also use the output of the explanation methods to extract intrinsic features needed to differentiate DDoS attacks. Our results demonstrate 51 intrinsic features to classify attacks. We finally compare the explanation methods and evaluate them using descriptive accuracy (DA) and descriptive sparsity (DS) metrics. The comparison and evaluation show that the explanation methods can explain the classification of DDoS attacks by capturing either the dominant contribution of input features in the prediction of the classifier or a set of features with high relevance.

1. Introduction

Recently, the risk of cyberattacks has increased because of vulnerabilities in some internet-connected devices that often make them easy targets [1]. DDoS attacks are particularly challenging to combat compared to other types of malicious cyberattacks. It has proven difficult to detect these types of attacks because of their ability to mask themselves as legitimate traffic.

Denial of Service (DoS) attacks are among the most popular and threatening attacks on network security [2]. This threat is represented in violating the availability of network services by denying authorized users from accessing the targeted services. Unlike DoS attacks that are launched from a single source, DDoS attacks are launched in a distributed manner from several sources. Attackers perform a DDoS attack in order to overwhelm the target with a relentless flood of traffic, which results in consuming the computational power, as well as the network capacity of network links [3].

The first DDoS attack was performed in August 1999 on the University of Minnesota computer network [4]. The attacker was able to shut down the network computers for about two days. In February 2000, famous websites such as eBay, Yahoo, Buy, and Amazon were hit by a high-profile DDoS attack [4]. After that, DDoS attacks continued growing in frequency, and today they employ IoT devices and adopt increasingly sophisticated methods to spread [2,5,6,7,8]. According to Cloudflare’s DDoS Threat Report Q3 of 2022 [9], DDoS attacks, in general, increased compared to the previous year. Application layer attacks (HTTP DDoS and Ransom DDoS) increased by 111% and 67%, respectively, in 2022 compared to the previous year. Network layer attacks (Layers 3 and 4 DDoS) increased by 97% in 2022 compared to the previous year and by 24% in Q3 compared to the same quarter of the previous year.

Since the advent of DDoS attacks, the research community has tackled this threat through several detection techniques, including the following: trace back scheme, traffic filtering autonomous system, signature-based detection, and anomaly-based detection [10]. The tracing scheme relies on finding the locations of attack sources, while an autonomous traffic filtering system utilizes traffic filtering to isolate traffic that is not originating or destined for the network. The signature-based detection scheme builds its own database from known malicious threats and compares the new traffic to that database to identify malicious activities, whereas the anomaly-based detection scheme monitors the network behavior to distinguish malicious activities from the normal traffic based on a training process. Machine learning (ML) techniques belong to the last-mentioned category.

ML can be used to detect intrusions in network traffic as one of the most effective detection techniques [11]. In particular, deep learning (DL) has exhibited excellent performance in recent years. As real data are nonlinear, complex, and highly dimensional, the construction of DL models has several hidden neurons, and each neuron has a nonlinear function. The complex construction of DL models makes them better at understanding certain complex and nonlinear data in the target domain.

Although DL models are a powerful tool for modeling data and improving performance, they, unfortunately, can complicate the model explanation process. Thus, DL models are considered to be complex black-box models. The tradeoff between performance and explanation is the main issue in black-box models. Though DL models exhibit high performance, they are difficult to explain. To overcome this issue, explanation methods have been expanded to interpret DL models and explain their predictions.

The most prominent explanation methods are local, model-agnostic methods that focus on explaining individual outputs of a given black-box model, including LIME [12], SHAP [13], Anchor [14], and LORE [15] explanation methods. These methods assess the contribution of individual features in a specific prediction by generating perturbation samples of a given data instance and monitoring the effect of these perturbation samples on DL model output.

In this paper, we utilize Recurrent Neural Networks (RNNs) for DDoS attack classification. RNNs are strong and robust neural networks that are among the most effective algorithms as they have internal memory. They utilize their internal memory to recall important characteristics of the input they receive. For this reason, RNNs are the preferred models for dealing with sequential data such as time series [16,17,18,19]. The LSTM network is a modified version of the recurrent neural network. LSTM is well suited for processing, classifying, and predicting time series data with unknown time spans.

We then investigate the explanation of the LSTM predictions using LIME, SHAP, Anchor, and LORE explanation methods. We adopt the above explanation methods to interpret LSTM classification model predictions for two main reasons. First, these explanation methods have local interpretability, meaning they concentrate on interpreting individual predictions for a particular black-box classifier. This property is appropriate to interpret a decision taken and why a certain instance is considered an attack. Second, unlike the other explanation approaches that are designed to interpret specific models, the above four explanation methods are model-agnostic approaches that can be utilized by any classification model.

In this paper, we investigate the use of the long short-term memory (LSTM) model in classifying DDoS attacks while focusing on the explanation of the DL model’s predictions using LIME, SHAP, Anchor, and LORE methods. We can summarize our main contributions as follows:

- Assess the LSTM performance in detecting different classes of DDoS attacks by utilizing commonly used and publicly available CIC datasets;

- Investigate the explanation of LSTM predictions on DDoS attacks in CIC datasets using four different explanation methods;

- Utilize the output of explanation methods to demonstrate intrinsic classification features and improve LSTM classification performance, particularly on the CICDDoS2019 dataset;

- Compare and evaluate the explanation methods in the domain of DDoS attack classification using two evaluation metrics.

The remainder of the paper is organized as follows: in Section 2, we present the background of the four explanation methods that we implement in this work. Section 3 reviews recent work on the classification of DDoS attacks using DL models and on the explanation methods used to interpret these models. Section 4 describes the methodology we adopt to classify the DDoS attacks using the LSTM classifier and the explanation methods used to interpret the LSTM predictions. This section, in particular, includes dataset preprocessing, classification model design, and the explanation methods used. The classification of the DDoS attacks using the LSTM model, the evaluation of the predictions, and their explanation are presented in Section 5. We present our conclusions and future work in Section 6.

2. Explanation Methods

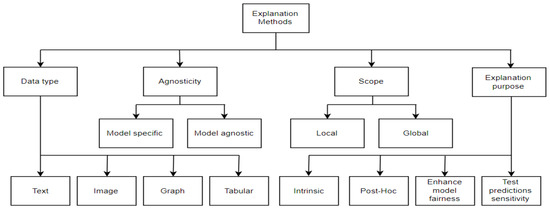

Linardatos et al. [20] summarized the various aspects through which explanation methods can be categorized. Figure 1 visualizes these aspects: data type, agnosticity, scope, and explanation purpose.

Figure 1.

Taxonomy of explanation methods.

Depending on the data that needs to be explained, explanation methods can be graph-based, image-based, text-based, or tabular-based, while agnosticity indicates whether the explanation method can explain any type of ML model or only a specific type. In terms of scope, some explanation methods are local because they explain individual predictions instead of the whole model. Explanation methods can also be classified by purpose into intrinsic, post hoc, model fairness enhancement, and prediction sensitivity testing. Intrinsic methods create interpretable models instead of complex models, while post hoc methods attempt to interpret complex models after they have been trained. Although fairness is a relatively new field of ML explanation [20], the progress made in the last few years is not trivial. Several explanation methods have been produced to improve the fairness of ML models and guarantee the fair allocation of resources.

One of the purposes of the explanation methods is to test the sensitivity of model prediction. Several explanation methods try to evaluate and challenge ML models to support the trustworthiness and reliability of predictions of these models. These methods employ a composition used to analyze the sensitivity, where models are examined in terms of how sensitive their predictions are regarding the fine fluctuations in the corresponding inputs.

The taxonomy in Figure 1 focuses on the purpose for which these methods were created and the approaches they use to accomplish that purpose. In accordance with the taxonomy, four main categories of explanation methods can be distinguished [20]: methods to interpret complex black-box models, methods to generate white-box models, methods to ensure fairness and restrict the existence of discrimination, and, finally, methods to analyze the model prediction sensitivity. According to [20], methods to interpret complex black-box models can be categorized into methods to interpret DL models and methods to interpret any black-box model. Methods to analyze the model prediction sensitivity can also be classified as traditional analysis methods and adversarial example-based analysis methods. The LIME, SHAP, Anchor, and LORE explanation methods, which we employ in our work, belong to the methods that explain any black-box model.

Ribeiro et al. [12] proposed the LIME explanation framework, which can interpret the outputs of any classification model in an explainable and trusted way. This method relies on learning an explainable model locally around the model output. They also proposed an extension called SP-LIME to interpret models globally by providing representative, non-redundant explanations of individual outputs. The proposed frameworks were evaluated with simulated and human subjects by measuring the impact of explanations on trust and associated tasks. The authors also showed how understanding the predictions of a neural network on images helps practitioners know when and why they should not trust a model.

Lundberg and Lee [13] proposed the SHAP method to explain ML models, where the importance of the input features is measured by Shapley values. These values are inspired by the field of cooperative game theory, which aims to quantify each player’s contribution to the game. They arise in situations where a number of players (features of the input data) collaborate to acquire a reward (the prediction of the ML model). The proposed approach was evaluated by applying the Kernel SHAP and Deep SHAP approximations to a CNN model used to classify digit images from the MNIST dataset.

In another work, Ribeiro et al. [14] introduced a model-agnostic system called Anchor. The Anchor method utilizes a perturbation-based strategy to generate local explanations for ML model outputs. However, instead of the linear regression models used by the LIME method, the Anchor explanations are presented as IF–THEN rules that are easy to understand. Anchors adopt the concept of coverage to state exactly which other, possibly unseen, instances they apply to. Anchors are found by formulating the search as an exploration, or multi-armed bandit, problem, which has its roots in the discipline of reinforcement learning. Several ML models, namely Logistic Regression (LR), Gradient-Boosted trees (GB), and Multilayer Perceptron (MLP), were explained in this paper to show the flexibility of the Anchor method.

Guidotti et al. [15] proposed an agnostic method called LORE, which provides interpretable and faithful explanations of ML models. First, a genetic algorithm is used to generate a synthetic neighborhood around the intended instance so that LORE can learn a local explainable predictor. Then, LORE exploits the logic of the local explainable predictor to derive meaningful explanations, including decision rules that explain the causes behind the decision and counterfactual rules. The counterfactual rules present the changes in the input features that lead to a different prediction. SVM, RF, and MLP models were explained in this work using LORE on three datasets (adult, German, and COMPAS).

2.1. LIME

LIME stands for Local Interpretable Model-agnostic Explanations. It was developed by Ribeiro et al. [12]. This method is local and model-agnostic because it can explain local individual prediction for any kind of ML model.

The goal of the explanation is to comprehend why the black-box model made a certain prediction. LIME explains the model prediction locally by investigating what happens to the prediction when the input data instance is varied (perturbed). To this end, LIME generates new samples that consist of perturbed instances, and the black box is used to find the corresponding predictions of these new samples. LIME then trains a simple explainable model on these samples, each weighted by the proximity of the perturbed instance to the instance of interest. The simple explainable model can be chosen from the space of explainable models; for instance, this model can be the Least Absolute Shrinkage and Selection Operator (LASSO) [21]. LASSO uses regularization to avoid the problem of overfitting in ML models and to improve prediction accuracy and interpretability. Mathematically, the LIME explanation can be expressed as

$$\text{explanation}(x) = \arg\min_{g \in G} L(f, g, \pi_x) + \Omega(g) \tag{1}$$

where $g$ is the explainable model for instance $x$ that minimizes the loss $L$. $G$ is the group of available explainable models, for instance, all possible linear regression models. $L$ estimates how close the explanation is to the prediction of the intended black-box model $f$. $\Omega(g)$ is a penalty term that measures the complexity of the explanation result. The vicinity measure, $\pi_x$, determines how large the neighborhood considered for the explanation around instance $x$ is.
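The perturb, weight, and fit procedure described above can be sketched in a few lines. This is a minimal illustration rather than the LIME library itself: the toy black box `toy_model`, the Gaussian perturbation scale, the exponential proximity kernel, and the use of a small ridge penalty in place of LASSO are all assumptions made to keep the example dependency-free.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def lime_explain(black_box, x, n_samples=500, scale=0.5,
                 kernel_width=1.0, ridge=1e-3, seed=0):
    """Fit a proximity-weighted linear surrogate around instance x."""
    rng = random.Random(seed)
    k = len(x) + 1                      # intercept + one slope per feature
    a = [[0.0] * k for _ in range(k)]   # accumulates X^T W X
    b = [0.0] * k                       # accumulates X^T W y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]          # perturbed instance
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        w = math.exp(-dist2 / kernel_width ** 2)              # proximity weight pi_x(z)
        row = [1.0] + z
        y = black_box(z)                                      # query the black box
        for i in range(k):
            b[i] += w * row[i] * y
            for j in range(k):
                a[i][j] += w * row[i] * row[j]
    for i in range(1, k):
        a[i][i] += ridge                # complexity penalty (ridge stands in for LASSO)
    beta = solve_linear_system(a, b)
    return beta[1:]                     # local slopes = feature contributions


def toy_model(z):
    """Hypothetical black box: depends on features 0 and 1, ignores feature 2."""
    return 1.0 / (1.0 + math.exp(-(3.0 * z[0] - 1.0 * z[1])))


coefs = lime_explain(toy_model, [0.2, 0.1, 0.5])
```

The returned slopes play the role of the local explanation: feature 0 receives a positive weight, feature 1 a smaller negative weight, and the ignored feature 2 a weight close to zero.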

2.2. SHAP

The SHapley Additive exPlanations (SHAP) method was presented by Lundberg and Lee [13]. SHAP is based on calculating Shapley values [22]. A Shapley value represents the contribution of each feature of input instance x to the prediction of the black-box model.

The Shapley values are inspired by cooperative game theory, which aims to quantify the contribution of each player (the feature in our case) in a game where a number of players collaborate to acquire a reward (the prediction in our case). The reward is supposed to be paid equitably between the players based on their contributions; such a contribution is the Shapley value. Simply, a Shapley value is defined as the average marginal contribution of a feature in an instance over all possible coalitions. The marginal contribution of a given feature is the difference between the prediction that is generated when the feature is present and the prediction that is generated when that feature is absent. This is carried out for each of the coalitions. The coalitions are all possible subsets of the remaining features to which the feature of interest can be added. The weighted mean of the marginal contributions is the Shapley value. For an instance $x$ with $p$ features, the Shapley value of the $j$-th feature can be calculated as

$$\phi_j = \sum_{S \subseteq \{1, \dots, p\} \setminus \{j\}} \frac{|S|!\,(p - |S| - 1)!}{p!} \left( f_x(S \cup \{j\}) - f_x(S) \right) \tag{2}$$

where $S$ is a coalition of features that does not contain feature $j$, and $f_x(S)$ is the prediction for instance $x$ when only the features in $S$ are present.

As a real dataset usually has a large number of features, computing the Shapley value of each feature of the instance of interest is computationally expensive, because the number of coalitions grows exponentially with the number of features. To avoid this, Lundberg and Lee introduced Kernel SHAP to approximate the SHAP method. Kernel SHAP is a method for calculating Shapley values with a smaller number of coalition instances. It relies on a weighted linear regression with the Shapley values as the solution coefficients.
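To make the coalition-based definition concrete, the following sketch computes exact Shapley values by enumerating every coalition, which is feasible only for a handful of features. The toy model and the choice of representing an absent feature by a baseline value of 0 are assumptions made for illustration; they are not the setup used in this paper.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values by enumerating every coalition (exponential cost)."""
    phi = [0.0] * n_features
    for j in range(n_features):
        others = [i for i in range(n_features) if i != j]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size: |S|! (p - |S| - 1)! / p!
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                # Marginal contribution of feature j when added to coalition S
                phi[j] += weight * (value_fn(s | {j}) - value_fn(s))
    return phi


# Hypothetical instance and model: f(x) = 2*x0 + x1*x2
x = [1.0, 2.0, 3.0]


def value_fn(coalition):
    """Prediction when only the features in `coalition` are present (others set to 0)."""
    z = [x[i] if i in coalition else 0.0 for i in range(len(x))]
    return 2.0 * z[0] + z[1] * z[2]


phi = shapley_values(value_fn, len(x))
print(phi)  # contributions of x0, x1, x2
```

For this toy model, the additive term gives feature 0 a value of 2, and the interaction term x1*x2 = 6 is shared equally between features 1 and 2 (3 each), so the values sum to the difference between the prediction for x (8) and the baseline prediction (0).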

2.3. Anchor

The Anchor method was developed by Ribeiro et al. [14], who compared it to their previous work on LIME. The Anchor method is also called the scoped rules method because it is based on IF–THEN rules, and these rules can be reused since they are scoped. Anchors use the concept of coverage to specify which other, possibly unobserved, instances they apply to. Anchors combine reinforcement learning techniques [23] with a graph search algorithm [24] to minimize the number of model calls while still being able to recover from local optima.

If we suppose that $D$ is the perturbation distribution generated using the LIME method near the instance of interest, the Anchor method constrains the perturbation space with an Anchor $A$ that contains a set of predicates, which yields the conditional distribution $D(\cdot \mid A)$. For the input instance $x$, $A(x)$ returns 1 if all its rules are true. Therefore, $A$ is an Anchor if $A(x) = 1$ and $A$ represents a sufficient condition for the prediction $f(x)$ with high probability. This means that for a perturbed instance $z$ from $D(z \mid A)$, $f(z) = f(x)$. Formally, $A$ is an Anchor if

$$\mathbb{E}_{D(z \mid A)}\left[\mathbb{1}_{f(x) = f(z)}\right] \ge \tau, \quad A(x) = 1 \tag{3}$$

where $\tau$ is the desired level of precision. As the precision of the Anchor refers to the proportion of true predictions by the Anchor rules, we can use (3) to express the precision of $A$ as:

$$\text{prec}(A) = \mathbb{E}_{D(z \mid A)}\left[\mathbb{1}_{f(x) = f(z)}\right] \tag{4}$$

For an arbitrary $D$ and black-box model $f$, directly computing this precision is intractable. Instead, a probabilistic definition is introduced where Anchors satisfy the precision constraint with a high probability:

$$P\left(\text{prec}(A) \ge \tau\right) \ge 1 - \delta \tag{5}$$

The Anchor coverage is defined as the proportion of the input instances that are covered by the Anchor. Formally, the coverage of an Anchor can be expressed as the probability that it applies to instances from $D$, i.e., $\text{cov}(A) = \mathbb{E}_{D(z)}[A(z)]$. Thus, the search for an Anchor is defined as a combinatorial optimization problem:

$$\max_{A \ \text{s.t.}\ P(\text{prec}(A) \ge \tau) \ge 1 - \delta} \text{cov}(A) \tag{6}$$

The Anchor method utilizes a multi-armed bandit formulation algorithm [25]. This algorithm can randomly build the Anchors with the highest coverage and a particular threshold of precision.
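The notions of precision and coverage can be illustrated with a simple Monte Carlo estimate for one fixed candidate rule. This sketch does not implement the bandit-based search; the feature names (`pkt_rate`, `syn_ratio`), the toy classifier, the candidate rule, and the uniform perturbation distribution are all hypothetical.

```python
import random


def black_box(z):
    """Hypothetical classifier: 1 (attack) when packet rate and SYN ratio are both high."""
    return 1 if z["pkt_rate"] > 100 and z["syn_ratio"] > 0.2 else 0


def anchor(z):
    """Candidate rule A: IF pkt_rate > 150 AND syn_ratio > 0.5 (1 when all predicates hold)."""
    return 1 if z["pkt_rate"] > 150 and z["syn_ratio"] > 0.5 else 0


def sample_perturbation(rng):
    """Draw z from an assumed perturbation distribution D (uniform over feature ranges)."""
    return {"pkt_rate": rng.uniform(0, 300), "syn_ratio": rng.uniform(0, 1)}


def estimate_precision_and_coverage(x, n_samples=20000, seed=0):
    rng = random.Random(seed)
    target = black_box(x)              # prediction to be explained
    covered = 0
    agree = 0
    for _ in range(n_samples):
        z = sample_perturbation(rng)
        if anchor(z):                  # keeping only z with A(z) = 1 samples from D(z | A)
            covered += 1
            agree += black_box(z) == target
    precision = agree / covered if covered else 0.0   # share of covered samples with the same prediction
    coverage = covered / n_samples                    # share of samples the rule applies to
    return precision, coverage


x = {"pkt_rate": 220.0, "syn_ratio": 0.8}
precision, coverage = estimate_precision_and_coverage(x)
```

Because the candidate rule selects a region that lies entirely inside the attack region of the toy classifier, its estimated precision is 1, while its coverage is roughly a quarter of the sampled instances. A search procedure would compare many such candidates and keep the one with the highest coverage among those meeting the precision threshold.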

2.4. LORE

The LOcal Rule-based Explanations (LORE) method was introduced by Guidotti et al. [15]. This method is similar to the Anchor method in using rules to explain the decision of ML models. However, it differs from the previous methods by generating the perturbations of x with a genetic algorithm guided by two fitness functions (instead of random perturbation) and by training a decision tree (instead of a linear regression) on these perturbations.

LORE first uses a genetic algorithm to generate perturbations on which a local interpretable predictor is trained. Then, it derives a coherent explanation from the logic of the local explainable predictor, which includes a decision rule and a set of counterfactual rules. The decision rule explains why a decision was taken, whereas the counterfactual rules specify changes in the instance’s features that would result in a contrasting decision. LORE defines an explanation as a joint object:

$$e = \langle r = p \rightarrow y, \ \Phi \rangle \tag{7}$$

The first part, $r = p \rightarrow y$, is a decision rule that describes the cause of the decision result $y$, while the second part, $\Phi$, defines the collection of counterfactual rules, i.e., the smallest number of changes in $x$ that could cause the predictor’s decision to be reversed.

In LORE, the perturbation of instance $x$ is composed of two sets: $Z_{=}$, whose instances receive the same decision as $x$ and support the decision rules, and $Z_{\neq}$, whose instances receive a different decision and support the counterfactual rules. LORE adopts a genetic algorithm to generate $Z_{=}$ and $Z_{\neq}$ by maximizing the fitness functions below:

$$\text{fitness}^{x}_{=}(z) = I_{f(x) = f(z)} + \left(1 - d(x, z)\right) - I_{x = z} \tag{8}$$

$$\text{fitness}^{x}_{\neq}(z) = I_{f(x) \neq f(z)} + \left(1 - d(x, z)\right) - I_{x = z} \tag{9}$$

where $d$ is a distance function with values in $[0, 1]$, and $I_{\text{true}} = 1$, $I_{\text{false}} = 0$. The first function seeks instances $z$ similar to $x$ (term $1 - d(x, z)$), but not identical (term $I_{x = z}$), for which the black-box model $f$ produces the same outcome as for $x$ (term $I_{f(x) = f(z)}$). The second fitness function leads to generating instances $z$ similar to $x$, but not identical, for which $f$ returns a contrasting decision.

The key term in the fitness functions above is the distance $d(x, z)$. To handle mixed feature types, it is computed as a weighted sum of the simple matching coefficient for the categorical features and the normalized Euclidean distance for the continuous features.
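The two fitness functions and the mixed distance can be sketched as follows. This is an illustrative reading of the description above, not the LORE reference implementation: the toy classifier, the feature layout, and the exact normalization of the Euclidean term (root mean square of range-scaled differences) are assumptions.

```python
def mixed_distance(x, z, categorical, ranges):
    """Weighted sum of simple matching (categorical) and normalized Euclidean (continuous)."""
    m = len(x)
    cat = [i for i in range(m) if i in categorical]
    con = [i for i in range(m) if i not in categorical]
    # Simple matching: share of categorical features whose values differ
    d_cat = sum(x[i] != z[i] for i in cat) / len(cat) if cat else 0.0
    # Normalized Euclidean (assumed form): RMS of differences scaled by feature range
    if con:
        sq = sum(((x[i] - z[i]) / ranges[i]) ** 2 for i in con)
        d_con = (sq / len(con)) ** 0.5
    else:
        d_con = 0.0
    # Each part is weighted by the share of features of its type
    return len(cat) / m * d_cat + len(con) / m * d_con


def fitness_same(x, z, black_box, categorical, ranges):
    """High for z close to x, different from x, with the same black-box decision."""
    d = mixed_distance(x, z, categorical, ranges)
    return int(black_box(x) == black_box(z)) + (1.0 - d) - int(x == z)


def fitness_diff(x, z, black_box, categorical, ranges):
    """High for z close to x, different from x, with a different black-box decision."""
    d = mixed_distance(x, z, categorical, ranges)
    return int(black_box(x) != black_box(z)) + (1.0 - d) - int(x == z)


def toy_classifier(v):
    """Hypothetical black box over [protocol, packet rate, duration]."""
    return 1 if v[1] > 100 else 0


categorical = {0}                 # index of the categorical feature (protocol)
ranges = {1: 300.0, 2: 10.0}      # assumed value ranges of the continuous features

x = ["TCP", 150.0, 2.0]
z_same = ["TCP", 140.0, 2.0]      # close to x, same decision
z_diff = ["TCP", 90.0, 2.0]       # close to x, opposite decision

score_same = fitness_same(x, z_same, toy_classifier, categorical, ranges)
score_self = fitness_same(x, x, toy_classifier, categorical, ranges)
score_diff = fitness_diff(x, z_diff, toy_classifier, categorical, ranges)
```

In this toy setting, a close neighbor with the same decision scores higher on the first function than the instance itself, because the identity penalty removes one point, and a close neighbor with the opposite decision scores highest on the second function. A genetic algorithm would evolve two populations toward these two objectives.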

3. Related Work

In recent years, research on DDoS attack detection using ML, especially DL, has increased. The nature of DL networks makes them black-box models, which constrains their implementation in some fields [26]. Due to the huge potential of DL, such as high accuracy, explaining neural networks has recently attracted much research attention. In the following two subsections, we review several recent research concerning the detection of DDoS attacks using DL models and the interpretation of these models using recent explanation methods.

3.1. DDoS Detection Using DL Models

Elsayed et al. [27] proposed a DL framework using an RNN-autoencoder to detect DDoS attacks in Software Defined Networks (SDN). They combined the RNN-autoencoder with a SoftMax regression model at the output layer of their framework to differentiate malicious network traffic from normal traffic. Their framework operates in two phases: an unsupervised pre-training phase and a fine-tuning phase. The first phase is used to obtain the valuable features that represent the inputs: the RNN-autoencoder is trained in an unsupervised mode to obtain a compressed representation of the inputs. The second phase further optimizes the whole network by training the output layer of the framework in a supervised mode on labeled instances. The authors evaluated their approach by performing only binary classification on the CICDDoS2019 dataset, which includes a wide variety of DDoS attacks.

Kim [28] leveraged two machine learning models: a basic neural network (BNN) and a long short-term memory recurrent neural network (LSTM) to analyze DDoS attacks. The author investigated the effect of combining both preprocessing methods and hyperparameters on the model performance in detecting DDoS attacks. In addition, he investigated the suboptimal values of hyperparameters that enable quick and accurate detection. Kim also studied the effect of learning the former on learning sequential traffic and learning one dataset on another dataset in a DDoS attack. In Kim’s work, the well-known methods Box–Cox transformation (BCT) and min-max transformation (MMT) were utilized to preprocess two datasets, CAIDA and DARPA, and then the detection approach was used to perform binary classification.

Hawing et al. [29] proposed an LSTM-based DL framework to conduct packet-level classification in Intrusion Detection Systems (IDSs). Instead of analyzing the entire flow, such as a document, the proposed method considered every packet (as a paragraph). The approach then constructed the key sentence from every considered packet. After that, it applied word embedding to obtain semantics and syntax features from the sentence. The knowledge of the sentence was chosen instead of using the entire paragraph as long as the paragraph content can be obtained from the key sentence. Their work utilized ISCX2012 and USTC-TFC2016 datasets, which include DDoS attacks, to evaluate the binary classification using the proposed approach.

Yuan et al. [30] proposed an approach called DeepDefense that leveraged different neural network models, CNN, RNN, LSTM, and GRU, to distinguish DDoS attacks from normal network traffic. The DeepDefense approach identifies DDoS attacks depending on Recurrent Neural Networks, such as LSTM and GRU, by utilizing a sequence of continuous network packets. Feeding historical information into the RNN model is helpful in figuring out the repeated patterns representing DDoS attacks and setting them in a long-term traffic series. DeepDefense also adopts the CNN model to obtain the local correlations of network traffic fields. In this work, DeepDefense compared four combinations of models (LSTM, CNNLSTM, GRU, and 3LSTM) to detect DDoS attacks. These components were evaluated on the binary classification of the ISCX2012 dataset.

Cui et al. [31] performed a systematic comparison of the DL models based on IDSs to provide fundamental guidance for DL network selection. They compared the performance of basic CNN, inception architecture CNN, LSTM, and GRU models on binary and multiclass classification tasks. The comparison carried out on the ISCX2012 dataset shows that CNNs are suitable for binary classification, while the RNNs provide better performance of some complicated attacks in multiclassification detection tasks.

Zhang et al. [32] presented a deep hierarchical network that combines the LeNet-5 model and the LSTM model. This network consists of two layers: the first layer is built on an enhanced LeNet-5 network to obtain the spatial features from the flow, while the second layer utilizes the LSTM to obtain the temporal features. Both layers are trained concurrently to allow the network to learn the spatial and temporal features. Feature extraction from the flow requires no prior knowledge; hence, there is no need for manual extraction of flow features with specific meanings. The proposed approach was used to detect intrusions, including DDoS attacks, in the CICIDS2017 and CTU datasets.

Azizjon et al. [33] proposed a DL framework to develop an effective and flexible IDS by exploiting a 1D-CNN. They established an ML model based on the 1D-CNN by serializing the TCP/IP packets over a time range to mimic invasion traffic for the IDS. Using the proposed approach, normal and abnormal network traffic were classified. The authors also compared the performance of their framework with two traditional ML models (Random Forest and SVM). The proposed detection model was evaluated by performing a binary classification on the UNSW-NB15 dataset, which includes DDoS attacks.

3.2. Explanation Methods

Batchu and Seetha [34] developed an approach to address several issues that make the detection of DDoS attacks in the CICDDoS2019 dataset using ML models less efficient. These issues include the existence of irrelevant dataset features, class imbalance, and lack of transparency in the detection model. The authors first preprocessed CICDDoS2019 and used the adaptive synthetic oversampling technique to address the imbalance issue. They then selected dataset features by embedding SHAP importance in recursive feature elimination with GB, DT, XGBM, RF, and LGBM models. After that, the LIME and SHAP explanation methods were applied to the dataset with the selected features to ensure model transparency. Finally, binary classification was performed by feeding the selected features to the KNORA-E and KNORA-U dynamic ensemble selection techniques. The classification experiment was performed on balanced and imbalanced datasets. The findings show that performance on the balanced dataset exceeded that on the imbalanced dataset. The obtained accuracy was 99.9878% and 99.9886% when using KNORA-E and KNORA-U, respectively.

Barli et al. [35] proposed two approaches, LLC-VAE and LBD-VAE, to mitigate DoS attacks using the structure of the Variational Autoencoder (VAE). The goal of Latent Layer Classification (LLC-VAE) is to differentiate various types of network traffic using the representations from the latent layer of the VAE, while the Loss Based Detection algorithm (LBD-VAE) attempts to discover the patterns of benign and malicious traffic using the VAE loss function. Their approaches were designed to show that a deep learning algorithm (VAE) is able to detect certain types of DoS attacks from network traffic and to test how well the model generalizes to other types of attacks. The authors used the CICIDS2017 and CSE-CIC-IDS2018 datasets to evaluate their framework. As a further step, they utilized the LIME explanation method to examine how the LLC-VAE can be enhanced and to determine whether it could be used as a basis for constructing a mitigation framework. The results of this work demonstrate that deep learning-based frameworks could be efficient against DoS attacks, but that LBD-VAE does not currently perform well enough to be applied as a mitigation framework. The best results were obtained using the LLC-VAE, which identified benign and malicious traffic at an accuracy of 97% and 93%, respectively. In this work, the LLC-VAE has shown its capability to compete with traditional mitigation frameworks, but it needs more tuning to achieve better performance.

Han et al. [36] developed an approach to explain and enhance anomaly detection based on DL in security fields called DeepAID. DeepAID was developed by utilizing two techniques, Interpreter and Distiller, to provide interpretation for unsupervised DL models that meet demands in security domains and to solve several problems such as decision understanding, model diagnosing and adjusting, and decreasing false positives (FPs). The Interpreter produces interpretations of certain anomalies to help understand why anomalies happen, while a model-based extension Distiller uses the interpretations from the Interpreter to improve security systems. The authors, in this work, divided the detection methods into three types based on the structure of source data: tabular, time-series, and graph data. They then provided prototype applications of DeepAID Interpreter through three representative frameworks (Kitsune, DeepLog, and GLGV) and Distiller over tabular data-based systems (Kitsune). The results showed that DeepAID could introduce explanations for unsupervised DL models well while meeting the specific demands of security fields and can guide the security administrators to realize the model decisions, analyze system failures, pass feedback to the framework, and minimize false positives.

Le et al. [37] proposed an approach based on ensemble tree models, Decision Tree (DT) and Random Forest (RF), to improve the performance of IoT-IDSs, and evaluated it on three IoT-based IDS datasets (IoTID20, NF-BoT-IoT-v2, and NF-ToN-IoT-v2). The authors claim that their proposed approaches achieve 100% accuracy and F1 score, outperforming other methods on the same datasets, while they demonstrate lower AUC compared to previous DFF and RF methods on the NF-ToN-IoT-v2 dataset. The authors also exploited the SHAP method for both global and local explanations. The global explanation was used to interpret the model’s general characteristics by analyzing all its predictions with the heatmap plot technique. On the other hand, the local explanation was used to interpret the prediction result of each input (instance) of the model using the decision plot technique. The objective of this work is to give cyber security experts more trust and better-informed decisions when they deal with vast IoT-IDS datasets.

Keshk et al. [19] proposed the SPIP (S: Shapley Additive exPlanations, P: Permutation Feature Importance, I: Individual Conditional Expectation, P: Partial Dependence Plot) framework to assess explainable DL models for IDS in IoT domains. They implemented a Long Short-Term Memory (LSTM) model to conduct binary and multiclassification on three datasets: NSL-KDD, UNSW-NB15, and ToN_IoT. The predictions of the LSTM model were interpreted locally and globally using the SHAP, PFI, ICE, and PDP explanation methods. The proposed approach extracted a customized set of input features that outperformed the original set of features on the three datasets and enhanced the utilization of AI-based IDS in cyber security systems. The results of this work showed that the explanations of the proposed method depend on the performance of the IDS models. This indicates that the framework is negatively affected by a poorly built IDS, in which case it is unable to detect the vulnerability exploited by the attack.

Neupane et al. [38] proposed a taxonomy to address some issues in the systematic review of recent state-of-the-art studies on explanation methods. These issues include the absence of consensus about the definition of explainability, the lack of a formulation of explainability from the user’s perspective, and the absence of measurement indices to evaluate explanations. This work focused on the importance and applicability of explanation models in the domain of intrusion detection. Two distinct approaches, white box and black box, were presented in detail in this survey, which addresses the concern of explainability in the IDS field. While white-box explanation models can provide more detailed explanations to guide decisions, their prediction performance is, in general, exceeded by that of black-box explanation models. The authors also proposed a three-layer architecture for an explanation-based IDS according to the DARPA-recommended architecture for the design of explanation models. They claim that their architecture is generic enough to support a wide set of scenarios and applications that are not limited to a certain specification or technological solution. This work provided research recommendations stating that the domain of IDS needs a high degree of precision to stop attack threats and avoid false positives, and it recommends using black-box models when developing an explanation-based IDS.

Zhang et al. [39] presented a survey of state-of-the-art research that uses XAI in cyber security domains. They reviewed the basic principles and taxonomies of state-of-the-art explanation models along with fundamental tools, including general frameworks and available datasets. They also investigated the most advanced explanation methods for cyber security systems across various application scenarios. These scenarios included applications of explanation methods to defend against different types of cyberattacks (malware, spam, fraud, DoS, DGAs, phishing, network intrusion, and botnet), explanation methods in distinct industrial applications, and cyber threats that target explanation models together with the corresponding defensive models. The work also presented the implementation of explanation models in several smart industrial areas (such as healthcare, financial systems, agriculture, cities, and transportation) and in Human–Computer Interaction.

Capuano et al. [40] reviewed the published studies in the past five years that proposed methods that aim to support the relationship between humans and machines through explainability. They performed a careful analysis of explanation methods and the fields of cyber security most affected by the use of AI. The main goal of this work was to explore how each proposed method introduces its explanation for various application fields and illuminate the absence of general formalism and the need to move toward a standard. The final conclusion of this work stated that there is a need for considerable effort to guarantee that ad hoc frameworks and models are constructed for safety and not for the application of general models for post hoc explanation.

Warnecke et al. [41] introduced a standard to compare and assess the explanation methods in the computer security domain. They classified the investigated explanation methods into black-box methods and white-box methods. In this work, six explanation methods (LIME, SHAP, LEMNA, Gradients, IG, and LRP) were investigated and evaluated. The evaluation metrics of completeness, stability, efficiency, and robustness were implemented in this work. The authors applied the DL model RNN to four selected security systems (Drebin+, Mimicus+, DAMD, and VulDeePecker) to provide a diverse view of security. They construct general recommendations to select and utilize explanation methods in network security from their observations of significant differences between the methods.

Fan et al. [42] proposed principled instructions to evaluate the quality of the explanation methods. Five explanation approaches (LIME, Anchor, LORE, SHAP, and LEMNA) were investigated. These approaches were applied to detect Android malware and identify its family. The authors designed three quantitative metrics to estimate stability, effectiveness, and robustness. These metrics are principal properties that an explanation approach should fulfill for crucial security tasks. Their results show that the evaluation metrics are able to evaluate different explanation strategies and enable users to learn about malicious behaviors for accurate analysis of malware.

The aforementioned works included recent research that exploited the DL models to detect the DDoS attack in network traffic and explanation methods that are used to interpret the DL model’s decisions in security applications. Unlike these works, we can distinguish our work as follows:

- Investigate and evaluate four explanation methods (LIME, SHAP, Anchor, and LORE) to explain the use of the LSTM model for DDoS attack detection;

- Perform multiclassification as well as binary classification on DDoS attacks extracted from three CIC datasets (CICIDS2017, CSECICIDS2018, and CICDDoS2019);

- Explain 18 network traffic classes (17 DDoS attacks plus the benign class) and find the common features between explanations for each class;

- Find the intrinsic features extracted by the explanation methods to classify DDoS attacks in CIC datasets.

4. Methodology

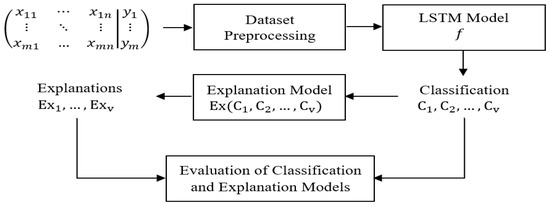

In this section, we present the methodology to classify DDoS attacks using the LSTM model, explain the LSTM predictions using the LIME, SHAP, Anchor, and LORE methods, and evaluate the classification and explanation models. Figure 2 illustrates the architecture of our methodology, which consists of four main parts: dataset preprocessing, LSTM classification model, explanation model, and evaluation of classification and explanation models.

Figure 2.

Architecture of our detection and explanation approach.

4.1. Dataset Preprocessing

In this paper, we exploit three different datasets, CICIDS2017, CSECICIDS2018, and CICDDoS2019, which are provided by the Canadian Institute for Cybersecurity (CIC) [43]. The raw traces of these datasets were analyzed using CICFlowMeter to produce labeled flows in Comma Separated Value (CSV) format. Table 1 lists the 84 network features extracted from the CIC datasets. We assign these features an index from 0 to 83 so that they are easy to use in this paper. Therefore, from now on, we refer only to the feature index instead of its name. Furthermore, for ease of representation, we use the grouping of CIC dataset features illustrated in Table 2 [2]. This table shows seven groups of features: forward packet, backward packet, flow-based, time-based, packet header-based, packet payload-based, and socket features.

Table 1.

Index representation of CIC dataset features.

Table 2.

Grouping of CIC datasets features. # refers to the number of features.

CIC datasets contain benign flows and the most up-to-date common DDoS attacks that resemble real-world data. CICIDS2017 has several DDoS attacks, including GoldenEye, Hulk, Slowhttptest, and Slowloris. In 2018, the Communications Security Establishment (CSE) released an updated version of CICIDS2017. The updated version (CSECICIDS2018) is structurally similar to CICIDS2017, but it has additional DDoS attacks such as HOIC and LOIC_HTTP.

The need to recognize new attacks and identify new taxonomies motivated the CIC group to analyze new attacks that can be conducted using TCP/UDP-based protocols at the application layer and propose a new taxonomy. Consequently, CICDDoS2019 was released and classified into two main categories, Reflection-based DDoS and Exploitation-based DDoS. The attacks in reflection-based DDoS are subcategorized into TCP (MSSQL, SSDP), UDP (NTP, TFTP), or TCP/UDP (DNS, LDAP, NetBIOS, SNMP, WebDDoS). The concept of exploitation-based DDoS is similar to reflection-based DDoS, except that its attacks can be launched in the application layer using transport-layer protocols. The DDoS attacks included in this category are subcategorized into TCP (SYN-Flood) or UDP (UDP Flood, UDP-Lag). Table 3 lists the DDoS attacks that are contained in CIC datasets.

Table 3.

DDoS attacks in CIC datasets.

The input of our methodology is CIC data in CSV format. The array of input instances in Figure 2 denotes these data. This array has one row per instance and one column per feature, plus a label column whose values correspond to the input instances.

As CIC datasets are unbalanced and include missing values that distort the learning process and bias the decision-making of a classification algorithm, we need to prepare the data for training by cleaning and balancing it. We remove the socket features group in Table 2 since these features vary from network to network. These features are 0, 1, 2, and 3, which correspond to flow ID, source IP, destination IP, and timestamp, respectively. We restrict the training of our DL model to the remaining feature groups, since socket information can cause overfitting by biasing the model’s decisions toward it.

During the analysis of CIC datasets, we found that 13 features contain zero value over all instances. These features are redundant as their value is constant for all traffic classes and are not useful to differentiate these classes.

We also found that these datasets include a few missing (NaN) and infinity values. Missing and infinity values affect the ML model performance and predictive capacity [44]. Therefore, we clean the data by removing the instances that include missing or infinity values. The feature values in the CIC datasets span widely different numerical ranges, and training the model without scaling these values can result in classification errors and longer training time. We address this issue by applying MinMaxScaler [45], a scaling technique available in Python. This technique normalizes the data so that the minimum value of each feature becomes zero and the maximum becomes one.
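The cleaning and scaling steps described above can be sketched as follows. This is a minimal illustration written with NumPy rather than the MinMaxScaler class the paper uses; the function name `clean_and_scale` and its return values are assumptions made for this example, and in practice the scaling statistics would normally be computed on the training split only.

```python
import numpy as np

def clean_and_scale(X):
    """Drop rows with NaN/inf, drop all-zero columns, then min-max scale each column."""
    X = np.asarray(X, dtype=float)
    # Keep only rows in which every value is finite (no NaN, no +/-inf).
    finite_rows = np.isfinite(X).all(axis=1)
    X = X[finite_rows]
    # Drop columns whose value is zero for every remaining instance.
    kept_cols = np.flatnonzero((X != 0).any(axis=0))
    X = X[:, kept_cols]
    # Min-max scaling: map each column to [0, 1]; constant columns map to 0.
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0
    return (X - col_min) / col_range, finite_rows, kept_cols
```

The returned row mask can be applied to the label column so that labels stay aligned with the retained instances.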

In Table 4, we present the distribution of attacks in CICDDoS2019 as an example of the unbalanced CIC datasets. As can be seen, there is an extreme imbalance between the different classes. TFTP has the largest number of instances, while WebDDoS has far fewer instances than the other types of attacks. Therefore, we drop WebDDoS before resampling the dataset [46].

Table 4.

Unbalanced instances of benign and DDoS attacks in CICDDoS2019 dataset.

Bolodurina et al. [47] investigated the issue of improving classification performance on the imbalanced CICDDoS2019 dataset. They first carried out their classification experiment on the original imbalanced dataset, which yielded low classification performance. They then attempted to improve the performance by applying resampling techniques to balance the CICDDoS2019 dataset. The final results of this work showed an improvement, though not a significant one, in the classification performance. Several studies, such as [46,48,49], reported difficulty in obtaining high performance when classifying the imbalanced CICDDoS2019 dataset, and they addressed this issue by balancing the data and then using additional strategies to improve the performance. Furthermore, Pedro et al. [46] formalized the concept of imbalance through the Pielou index J as a metric of balance. The values of J lie between 0 and 1, where 0 indicates the largest imbalance and 1 represents perfect balance. The value of J for the imbalanced CICDDoS2019 dataset was 0.765.
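To make the balance metric concrete, the sketch below computes a Pielou index from per-class instance counts. It assumes the standard definition of Pielou's evenness, J = H / ln(S), where H is the Shannon entropy of the class proportions and S is the number of classes; whether [46] uses exactly this form is an assumption, and the function name is illustrative.

```python
import math

def pielou_index(class_counts):
    """Pielou's evenness J = H / ln(S) for a list of per-class instance counts."""
    counts = [c for c in class_counts if c > 0]
    if len(counts) < 2:
        raise ValueError("J is only defined for at least two non-empty classes")
    total = sum(counts)
    # Shannon entropy of the class proportions (natural logarithm).
    entropy = -sum((c / total) * math.log(c / total) for c in counts)
    # Normalize by the maximum entropy, reached when all classes are equal.
    return entropy / math.log(len(counts))
```

Equal class counts give J = 1, while a dataset dominated by a single class gives a value close to 0.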

One approach to address the issue of class imbalance in the used dataset is to randomly resample the training dataset using undersampling or oversampling methods [50]. Undersampling works by removing instances from the majority class, while oversampling works by duplicating instances from the minority class. Due to hardware limitations and to efficiently allocate the data in the available memory, we apply the resampling process by undersampling all attack classes in the CIC datasets. We conduct the undersampling process by employing the ‘RandomUnderSampler’ class from the imbalanced-learn Python library [51], which randomly selects examples from the majority class to delete from the training dataset. After preprocessing, the CIC datasets contain 5499, 10,990, and 55,765 instances per class in CICIDS2017, CSECICIDS2018, and CICDDoS2019, respectively. Each of these instances is a vector with 67 features ready to be classified.
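The effect of random undersampling can be illustrated with a short standard-library sketch. It is not the RandomUnderSampler implementation itself; the function name, the `seed` parameter, and the choice to reduce every class to the size of the smallest class are assumptions that match the equal per-class counts reported above.

```python
import random
from collections import defaultdict

def random_undersample(labels, seed=0):
    """Return sorted indices that keep an equal, randomly chosen number of instances per class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    # Every class is reduced to the size of the smallest class.
    target = min(len(idxs) for idxs in by_class.values())
    rng = random.Random(seed)
    kept = []
    for idxs in by_class.values():
        kept.extend(rng.sample(idxs, target))
    return sorted(kept)
```

The returned indices select the rows of both the feature array and the label column.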

In this paper, we adopt both binary and multiclass classification. To conduct the binary classification, we merge the DDoS classes in each dataset, CICIDS2017/CSECICIDS2018/CICDDoS2019, separately into one class and relabel them as an attack class against the benign class. On the other hand, we perform multiclassification on each dataset, CICIDS2017/CSECICIDS2018/CICDDoS2019, separately. CICIDS2017 includes 4 DDoS attacks plus benign, CSECICIDS2018 includes 6 DDoS attacks plus benign, and CICDDoS2019 includes 11 DDoS attacks plus benign. These DDoS classes are illustrated in Table 3. In total, we classify 17 distinct DDoS attacks extracted from CIC datasets in addition to the benign class.

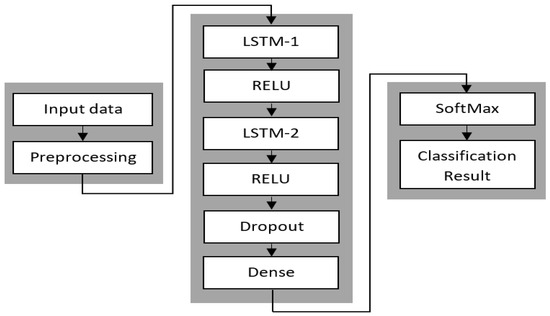

4.2. LSTM Classification Model

In this paper, we use the LSTM network with two layers (LSTM-1 and LSTM-2) of neurons for temporal feature extraction, as shown in Figure 3. Each LSTM layer includes 64 hidden neurons. The activation function in each layer is the ReLU function, which performs the nonlinear operations, while the last layer of the LSTM network is a fully connected (dense) layer. The dense layer is followed by the Softmax layer, which computes the probability of each class during the classification process. The learning rate of the Adam optimizer in the LSTM network is 0.003, and the loss functions are the binary cross-entropy and categorical cross-entropy for binary and multiclassification, respectively.

Figure 3.

Architecture of LSTM classification model.

The classification model receives the features extracted in the preprocessing part as input to perform the classification. Since we seek to classify time-series data, we use an LSTM classifier, which yields strong classification performance for this type of data. The classification model uses the training dataset in the learning phase by fitting each input feature vector to its label. We use the validation dataset to estimate model performance while tuning the model’s hyperparameters. The output of the classification model is either the benign or the attack class in the case of binary classification, or one of several classes that include the DDoS attacks and benign in the case of multiclassification. We then evaluate the predictions of the classification model using the common performance metrics: accuracy (ACC), precision (PR), recall (RE), and F1-score.

4.3. Explanation Models

The explanation methods are used to interpret classification model predictions. In this paper, LIME, SHAP, Anchor, and LORE are used to interpret the predictions of the LSTM model. These methods are model agnostic and work under the assumption that no information is available about the classification model or its parameters. Technically, these methods depend on an approximation of the classification function, which leads them to figure out how the input instances contribute to a classification prediction. The explanation model utilizes the relevance between the input instances and the prediction of the classification model to identify important features and highlight the top features that contribute to the predicted accuracy of the classification model.

To provide the reader with an example of the outputs of explanation methods, in Table 5, we present explanations of the GoldenEye attack for an arbitrary instance selected from the CICIDS2017 dataset. We display only the top 10 important explanations for each explanation model. The numbers beside the features in LIME and SHAP represent the weight of the feature.

Table 5.

Top 10 explanations of a sample of the GoldenEye attack using the explanation methods.

We exploit the explanation methods to interpret the prediction of DDoS attacks and benign classes within CIC datasets. Algorithm 1 shows the procedure we follow to implement the classification and the explanation of each class in the CIC datasets. The input of Algorithm 1 is the set of test instances $X$, and the output is the explanation vector $E$. Each instance $x$ from $X$ is fed to the LSTM model, which is trained on the 18 classes of the training set, to predict $\hat{y}$.

| Algorithm 1: Procedure for explaining each class in CIC datasets. |

| $X$: all instances, $x$: single instance, $y$: true class, $\hat{y}$: predicted class, $e$: explanation, $E_c$: distinct explanation of class $c$. Input: $X$. Output: $E$. |

| For each $x \in X$: $\hat{y} = f(x)$; if $\hat{y} = y$, add $x$ to $X_c$. For each $x \in X_c$: $e = g(x)$, where $g$ is an explanation method; add $e$ to $E$. Finally, $E_c$ is the set of distinct features in $E$. |

We then select only the input instances that are correctly classified (i.e., $\hat{y} = y$) and add them to the vector $X_c$. We then use the LIME, SHAP, Anchor, and LORE explanation methods to explain each instance in $X_c$. The output of an explanation method is added to the vector $E$. We finally extract the distinct features in each explanation by taking the set of unique features in $E$, which gives $E_c$.

The following example illustrates the explanation of the Hulk class using the LIME method:

where here is Hulk.
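The procedure of Algorithm 1 can be sketched in Python as follows. This is an illustrative outline under stated assumptions, not the authors' implementation: `predict` is a hypothetical stand-in for the trained LSTM classifier, and `explain` is a hypothetical stand-in for one explanation method (LIME, SHAP, Anchor, or LORE) that returns the feature indices appearing in its explanation.

```python
def explain_classes(instances, true_labels, predict, explain):
    """Collect, per class, the distinct features found in explanations
    of correctly classified instances.

    predict(x) -> predicted class label (hypothetical classifier stand-in)
    explain(x) -> iterable of feature indices (hypothetical explainer stand-in)
    """
    distinct_features = {}
    for x, y_true in zip(instances, true_labels):
        if predict(x) != y_true:
            continue  # misclassified instances are not explained
        # Merge this explanation into the set of distinct features of the class.
        distinct_features.setdefault(y_true, set()).update(explain(x))
    return distinct_features
```

Running the function once per explanation method yields one set of distinct features per method and class, which is the form needed to compare the methods afterwards.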

4.4. Evaluation of Classification and Explanation Models

We evaluate both binary and multiclassification LSTM models on CIC datasets. The performance metrics differ between the binary and multiclass cases. For a binary LSTM classifier, we define one of the labels as positive and the other as negative. Choosing the class “Benign” as negative and “Attack” as positive produces the following nomenclature: True Positive (TP) is the number of attack instances that are classified as an attack; True Negative (TN) is the number of benign instances that are classified as benign; False Positive (FP) is the number of benign instances that are classified as an attack; and False Negative (FN) is the number of attack instances that are classified as benign. For a multiclass classifier, $TP_i$ is the number of instances correctly classified with the label of class $i$. $FP_i$ is the number of instances classified as label $i$ that belong to another class. $FN_i$ is the number of instances of class $i$ that are mistakenly classified in another class. Table 6 shows the performance metrics ACC, PR, RE, and F1-score in both binary and multiclassification, which also depend on the number of instances in the test set and the number of classes in the dataset.

Table 6.

Classification model metrics.
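As a worked illustration of these definitions, the sketch below derives per-class precision, recall, and F1-score, together with overall accuracy, from a confusion matrix. It uses the standard textbook formulas; how Table 6 aggregates the per-class values across classes is not assumed here, and the function name is illustrative.

```python
def per_class_metrics(confusion):
    """confusion[i][j] = number of instances of true class i predicted as class j."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    metrics = {"accuracy": correct / total, "per_class": []}
    for i in range(n_classes):
        tp = confusion[i][i]
        fp = sum(confusion[r][i] for r in range(n_classes)) - tp  # predicted i, true class differs
        fn = sum(confusion[i]) - tp                               # true i, predicted class differs
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics["per_class"].append({"precision": precision, "recall": recall, "f1": f1})
    return metrics
```

For a binary classifier, the same function applies with a 2 x 2 matrix, where the row and column of the attack class give TP, FP, and FN as defined above.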

As we implement several explanation methods, each with different explanation techniques, the outputs of the implemented methods for certain predictions are expected to differ. Therefore, we need to compare and evaluate the results of the used explanation methods to figure out those that explain the predictions of our classification models effectively. The evaluation of the explanation model introduces two evaluation metrics [41,52]: Descriptive Accuracy (DA) and Descriptive Sparsity (DS).

DA measures the accuracy of the explanation method in capturing the features relevant to the classification model prediction. As shown in Table 7, DA is obtained by removing the $k$ most relevant features from the instance $x$, then finding the new prediction using the classification function $f$ and calculating the score of the predicted class $c$ in the absence of the $k$ features. Removing the relevant features from an instance decreases accuracy, as the classification model has less information to make a correct prediction. A steep decline in accuracy indicates that the removed features have a dominant contribution to the prediction, while a gradual decline indicates a dependency between the removed features in making the prediction.
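A minimal sketch of the DA computation for a single instance is given below. Several points are assumptions made for illustration: removal is modelled by setting a feature to zero, `score_fn` is a hypothetical function returning the classifier's per-class scores, and features are ranked by the relevance values exactly as supplied (a caller could pass absolute values instead).

```python
def descriptive_accuracy(x, relevance, class_index, score_fn, k):
    """Score of the originally predicted class after removing the k most relevant features."""
    # Rank feature indices by descending relevance.
    ranked = sorted(range(len(x)), key=lambda i: relevance[i], reverse=True)
    x_removed = list(x)
    for i in ranked[:k]:
        x_removed[i] = 0.0  # assumption: "removing" a feature means zeroing it
    return score_fn(x_removed)[class_index]
```

Evaluating the function for k = 0, 1, 2, ... and averaging over the test instances of a class gives one point per k on a descriptive accuracy curve.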

Table 7.

Definition of evaluation metrics of explanation methods.

The DS metric checks that a good explanation assigns high relevance only to the features that have an effect on a prediction [41]. To calculate this metric, we scale the relevance values to the range [−1, 1], then compute a normalized histogram of these values, and finally calculate the mass around zero (MAZ), as shown in Table 7. The MAZ works as a growing window that begins at 0 and extends uniformly in both directions (positive and negative). The fraction of relevance values located in each window is assessed. Sparse explanations are identified by a steep rise in the MAZ value near 0 and an almost constant value toward 1, because most of the features are not considered relevant. Dense explanations, on the other hand, are identified by a notably smaller slope near 0, which indicates a significant set of relevant features.
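The MAZ computation can be sketched as below. Instead of building an explicit histogram, this illustration directly computes the fraction of scaled relevance values that fall inside each window [−r, r], which is the quantity the histogram mass approximates. Scaling by the largest absolute relevance is an assumption, and the function name is illustrative.

```python
def maz_curve(relevance, radii):
    """Fraction of scaled relevance values inside [-r, r] for each window radius r."""
    max_abs = max(abs(v) for v in relevance)
    # Scale to [-1, 1]; an all-zero explanation stays at zero.
    scaled = [v / max_abs if max_abs > 0 else 0.0 for v in relevance]
    return [sum(abs(v) <= r for v in scaled) / len(scaled) for r in radii]
```

For a sparse explanation, most values sit at or near zero, so the curve is already high for small radii and changes little afterwards.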

5. Results and Discussion

In this section, we summarize our experimental results in three steps: (1) the classification of DDoS attacks in CIC datasets using the LSTM model and the calculation of the classification performance; (2) the explanation of the LSTM predictions using the LIME, SHAP, Anchor, and LORE methods; and (3) the evaluation of these explanations using the DA and DS metrics.

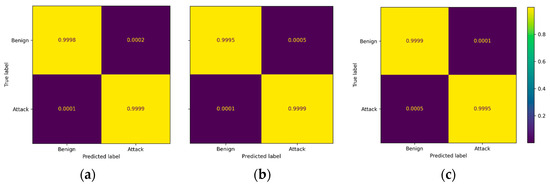

5.1. DDoS Attacks Classification

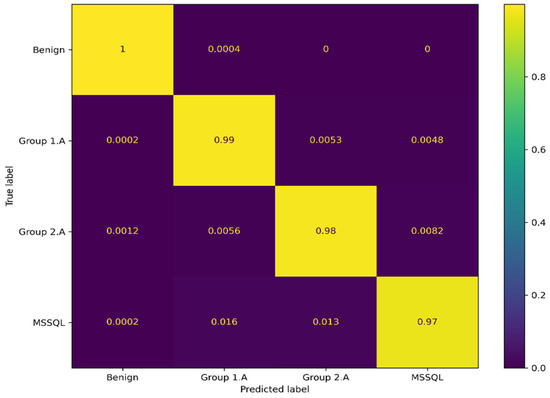

We use the LSTM model designed in Section 4.2 to classify the network flows in the CIC datasets, as listed in Table 3. In this experiment, we conduct binary and multiclass classification. Figure 4a–c show the confusion matrices of the binary classification on the CICIDS2017, CSECICIDS2018, and CICDDoS2019 test sets, respectively. We can note that almost 100% of the true attack and benign instances are predicted correctly.

Figure 4.

Confusion matrices of binary classification: (a) CICIDS2017, (b) CSECICIDS2018, and (c) CICDDoS2019.

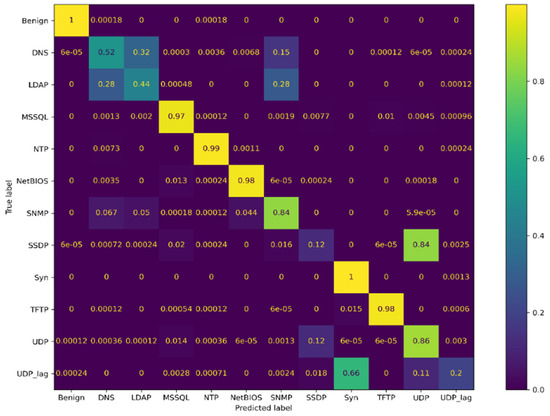

Table 8 presents the ACC, PR, RE, and F1-score of binary classification on the three CIC datasets. All the performance metrics return values above 0.99 for the binary classification model on the three datasets. We also perform multiclassification on the CIC datasets using the LSTM model. We classify 17 classes of DDoS attacks extracted from CIC 2017, 2018, and 2019 in addition to the benign class. Table 9 shows the classification performance of the LSTM model on these classes. We can note from this table that all performance metrics show very high values for all classes from the CICIDS2017 and CSECICIDS2018 datasets and for the MSSQL, NTP, NetBIOS, and TFTP classes from CICDDoS2019, while the DNS, LDAP, SNMP, SSDP, SYN, UDP, and UDP-Lag classes return low values of the performance metrics.

Table 8.

Binary classification performance on all CIC datasets.

Table 9.

Multiclassification performance on all CIC datasets.

Figure 5 presents the confusion matrix obtained from the classification of the CICDDoS2019 dataset. This matrix provides more detailed information on the classes and shows that the LSTM model confuses the DNS, LDAP, and SNMP classes with one another, and likewise the UDP, SSDP, SYN, and UDP-Lag classes.

Figure 5.

Confusion matrix of multiclass classification on the CICDDoS2019 dataset.

5.2. LSTM Model Explanation

In this subsection, we employ the explanation methods (LIME, SHAP, Anchor, and LORE) to explain the predictions of the LSTM model. We follow the procedures in Algorithm 1 to explain the 18 classes of CIC datasets. Although the explanations of the same class using several explanation methods differ due to the differences in the explanation mechanism of these methods, they can intersect in several features.

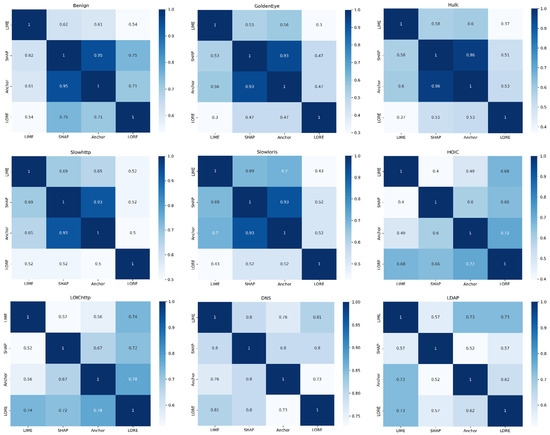

Figure 6 shows the heatmaps of intersections between the explanations of each class. The intersection size is in the range [0, 1]. The value 0 refers to no overlap between the explanations, whereas 1 indicates full overlap. We observe a high overlap (0.93–0.95) between the SHAP and Anchor explanations for CICIDS2017 classes and a low overlap (0.30–0.52) between the LIME and LORE explanations for these classes. LORE and Anchor have a 0.72 overlap in the HOIC class and 0.70 for LOIChttp class, while LIME and SHAP have only a 0.40 and 0.52 overlap for these classes, respectively. LIME and LORE explanations present high overlap (0.73–0.86) in DNS, LDAP, SNMP, and TFTP classes, while SHAP and LORE present high overlap (0.70–0.80) in MSSQL, SSDP, UDP-Lag, and NTP classes.

Figure 6.

Intersections between the explanation methods for each class in CIC datasets.
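The intersection size between two explanations can be illustrated with the following sketch. The normalisation is an assumption: the shared features are divided by the size of the smaller explanation, which reduces to the shared fraction of the top-k features when both explanations contain k features. The function name is illustrative.

```python
def overlap(features_a, features_b):
    """Share of common features in [0, 1], relative to the smaller explanation."""
    a, b = set(features_a), set(features_b)
    if not a or not b:
        return 0.0  # no overlap can be measured against an empty explanation
    return len(a & b) / min(len(a), len(b))
```

A value of 0 then corresponds to disjoint explanations and a value of 1 to one explanation being fully contained in the other.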

We calculate the common features between explanations for each dataset class. Table 10 shows the common features of all dataset classes resulting from the intersection between their explanations. The number beside each class in the first column indicates the number of common features for that class. The last row lists the 51 distinct features obtained from the union of the common features of all classes. These features are the intrinsic features we need to classify the 17 DDoS classes in Table 3.

Table 10.

Common features between LIME, SHAP, Anchor, and LORE explanations for each class. # refers to the number of features.
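The two set operations behind Table 10 can be sketched as follows: the common features of a class are the intersection of the feature sets produced by the explanation methods, and the intrinsic features are the union of the common features over all classes. The function names and the dictionary layout are assumptions made for this illustration.

```python
def common_features(explanations_by_method):
    """Intersection of the feature sets that each method produced for one class."""
    sets = [set(features) for features in explanations_by_method.values()]
    return set.intersection(*sets) if sets else set()

def intrinsic_features(common_by_class):
    """Union of the per-class common features."""
    return set().union(*common_by_class.values())
```

Here `explanations_by_method` maps a method name to the features it reported for one class, and `common_by_class` maps each class name to the result of `common_features`.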

From the confusion matrix in Figure 5, we note that the model exhibits confusion among the DNS, LDAP, and SNMP classes and among the UDP, SSDP, SYN, and UDP-Lag classes. The similarity in the nature of some DDoS attacks can be reflected in the feature values of the captured dataset, thereby confusing the model and decreasing the detection performance for these classes. Chartuni et al. [49] presented three scenarios to improve the classification of CICDDoS2019. One of these scenarios is unifying the DDoS attacks with significant similarities in the feature space. The similarity between the features of these classes was calculated using statistical metrics (ACV, AFR0, and ASAH). ACV indicates features with a constant value, AFR0 summarizes the features with a frequency of zeros greater than 98%, and ASAH refers to the features having a significant bias with homogeneity in their values greater than or equal to 96%.

In our work, we exploit the overlap between the common features in Table 10 of each class to explain the low performance of the LSTM and show the similarity between the confused classes. Table 11 shows the percentage of similarity between the pair of common features of CIC classes in Table 10.

Table 11.

Percentage of common features between pair classes.

For ease of representation, we map the names of the CIC classes to sequence numbers prefixed with the letter “C”, as shown in Table 12. We note from Table 11 that almost all feature similarities greater than or equal to 50%, shown in bold black, are between the CICDDoS2019 classes C8 to C18. This similarity could confuse the model and decrease the detection performance for some of the CICDDoS2019 classes.

Table 12.

Mapping of CIC classes.

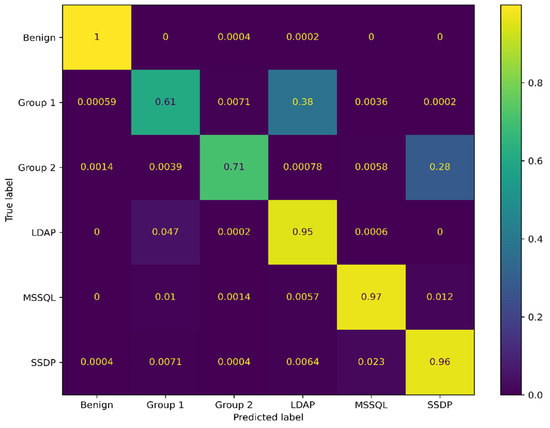

To address the confusion issue, we unify the classes whose feature similarity is greater than 50%. From Table 11, we note that the DNS class shares features with SNMP, NetBIOS, and TFTP with percentages of 65%, 61%, and 56%, respectively. We unify these classes and call them Group 1. We also note that the UDP class shares features with NTP, SYN, and UDP-Lag with percentages of 70%, 58%, and 54%, respectively. These classes are unified in Group 2.

Figure 7 shows the confusion matrix on the test part of the CICDDoS2019 dataset after retraining the LSTM classifier with the new grouping of classes. We note that there is still confusion between Group 1 and the LDAP class and between Group 2 and the SSDP class. As LDAP exhibits 46% similarity with DNS and SSDP exhibits 48% similarity with UDP, we relax the unifying percentage from 50% to 45% to add LDAP to Group 1, which we call Group 1.A, and SSDP to Group 2, which we call Group 2.A.
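The grouping rule can be illustrated with the similarity percentages quoted above. The sketch below is an assumption about how the rule may be applied, with DNS and UDP taken as the reference classes of the two groups; the dictionary `SIMILARITY` and the function `unify` are names introduced only for this example.

```python
# Pairwise similarity percentages quoted in the text (taken from Table 11).
SIMILARITY = {
    "DNS": {"SNMP": 65, "NetBIOS": 61, "TFTP": 56, "LDAP": 46},
    "UDP": {"NTP": 70, "SYN": 58, "UDP-Lag": 54, "SSDP": 48},
}

def unify(reference_class, threshold):
    """Group a reference class with every class whose similarity to it exceeds the threshold."""
    similar = SIMILARITY[reference_class]
    return [reference_class] + [c for c, pct in similar.items() if pct > threshold]
```

With a threshold of 50, the function returns the members of Group 1 and Group 2; lowering the threshold to 45 adds LDAP and SSDP, which gives Group 1.A and Group 2.A.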

Figure 7.

Confusion matrix of CICDDoS2019 classification after unifying some classes in Group 1 and Group 2.

Figure 8 shows the confusion matrix after the new unification. Group 1.A contains DNS, SNMP, NetBIOS, TFTP, and LDAP, while Group 2.A contains NTP, SYN, UDP, UDP-Lag, and SSDP. We note that the performance of the classifier is enhanced, the confusion is eliminated, and all the performance metrics in Table 13 return high values.

Figure 8.

Confusion matrix of CICDDoS2019 classification after unifying some classes in Group 1.A and Group 2.A.

Table 13.

Multiclass classification performance on CICDDoS2019.

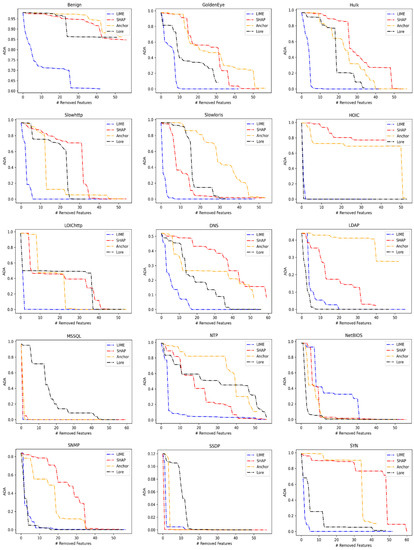

5.3. Evaluation of the Explanation Methods

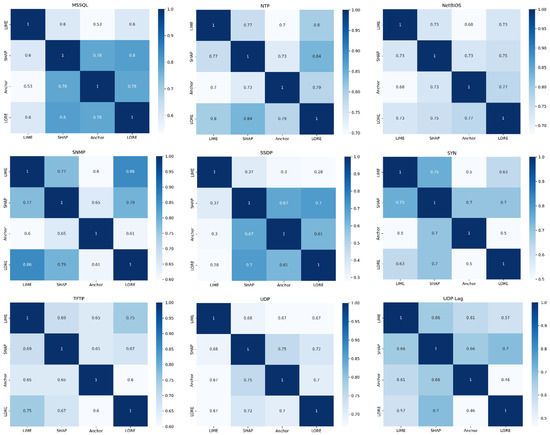

We evaluate the used explanation methods using the DA and DS metrics defined in Table 7 to compare them. We first implement DA by successively removing the most relevant features from the samples of the datasets and then observe the decrease in the classification accuracy. We remove the features in descending order according to their importance scores.

Figure 9 shows the results of this experiment. The horizontal axis represents the number of features we remove, while the vertical axis is the average of LSTM accuracy (ADA) on the test instances of the intended class. We can note that the ADA curves vary significantly among the explanation methods. However, the LIME exhibits steep declines in ADA curves for 10 dataset classes compared to other methods. These classes are Benign, GoldenEye, Hulk, Slowhttp, Slowloris, LOIChttp, DNS, MSSQL, NTP, and SYN classes. The LORE method shows steep declines of the ADA in HOIC, LDAP, NTP, SNMP, and UDP-Lag classes.

Figure 9.

Average descriptive accuracy (ADA) score on each class of CIC datasets. # refers to the number of removed features.

We can also note that the decline of the LIME curves of these classes is close to that of the LORE curves, particularly for the HOIC and SNMP classes. SHAP shows steep declines of the ADA in the SSDP and UDP classes, and we also note the closeness of the SHAP and LIME curves. Anchor shows a steep decline of ADA only in the TFTP class.

The steep decline in the ADA curve indicates that the corresponding explanation method captures the features with a dominant contribution to predictions. On the other hand, the gradual decline in the ADA curve indicates a dependency between the features of the corresponding explanation method. We note from Figure 9 that the most gradual declines of ADA curves are provided by Anchor and SHAP explanation methods. The Anchor shows a slow decline of ADA in eight classes, which are Benign, GoldenEye, Slowloris, LDAP, NTP, SYN, UDP, and UDP-Lag. The SHAP shows a slow decline of ADA in six classes, which are Hulk, HOIC, LOIChttp, DNS, SNMP, and TFTP. While the LORE shows a slow decline on Slowhttp, MSSQL, and SSDP, the LIME exhibits a slow decline only on NetBIOS.

To compare the ADA of the explanations and clearly figure out the explanation method that exhibits a decline in ADA for each dataset class, we calculate the area under the ADA curve and call it AUDA. AUDA columns in Table 14 list the value of the area under the ADA curve of the explanation methods for each dataset class.
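The area under an ADA curve can be computed numerically, for example with the trapezoidal rule as sketched below. The integration rule is an assumption, since the text does not state how the area was obtained, and the function name is illustrative.

```python
def area_under_curve(xs, ys):
    """Trapezoidal-rule area under the points (xs[i], ys[i]); xs must be increasing."""
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2 for i in range(len(xs) - 1))
```

With the number of removed features on the horizontal axis, a curve that drops quickly encloses a smaller area than one that declines gradually, which is why a lower AUDA corresponds to a steeper decline.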

Table 14.

Area under the DA (AUDA) and area under the MAZ (AUMAZ) of all classes of CIC datasets. The colored values are encoded as follows: bold blue, lowest AUDA; bold orange, largest AUDA; bold red, largest AUMAZ.

The explanations that exhibit a steep decline in the ADA curves provide lower values of AUDA. The bold blue values in Table 14 are the lowest AUDA. We can note that LIME exhibits the lowest values of AUDA in 10 classes, and its AUDA values are close to the lowest in the remaining classes. The explanations that exhibit a slow decline in the ADA curves provide larger values of AUDA. The bold orange values in Table 14 are the largest AUDA. We note that the Anchor and SHAP methods show the largest values of AUDA.
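As a small worked example of why a steeper ADA curve yields a lower AUDA, the area can be approximated with the trapezoidal rule. The integration rule, the function name `area_under_curve`, and the two ADA curves below are assumptions made for illustration; they are not values from Table 14.

```python
from typing import Sequence


def area_under_curve(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Trapezoidal approximation of the area under the points (xs, ys)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("xs and ys must have the same length (at least 2)")
    area = 0.0
    for i in range(len(xs) - 1):
        # Area of the trapezoid between two consecutive points.
        area += 0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i])
    return area


removed = [0, 1, 2, 3, 4]                 # number of removed features
steep_ada = [1.0, 0.4, 0.2, 0.1, 0.1]     # hypothetical steep decline
gradual_ada = [1.0, 0.9, 0.8, 0.6, 0.5]   # hypothetical gradual decline

auda_steep = area_under_curve(removed, steep_ada)
auda_gradual = area_under_curve(removed, gradual_ada)
print(auda_steep, auda_gradual)
```

The steep curve gives the smaller area, which is the sense in which the lowest AUDA marks the method that best captures dominant features.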

Table 15 presents the strong dominant contribution features of the CIC data classes. Removing these features from the input instance results in the steep decline of ADA for each class according to the explanation method that is listed in the second column of Table 15. We can extract 42 unique features from this table. We note that the features that have a strong contribution to the prediction in most classes are captured using the LIME explanation method based on the lowest value of AUDA.

Table 15.

Strong contribution features for each class of the CIC datasets.

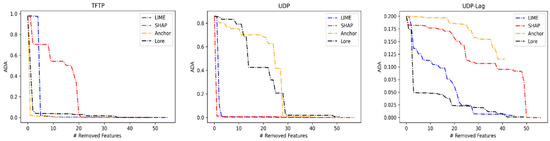

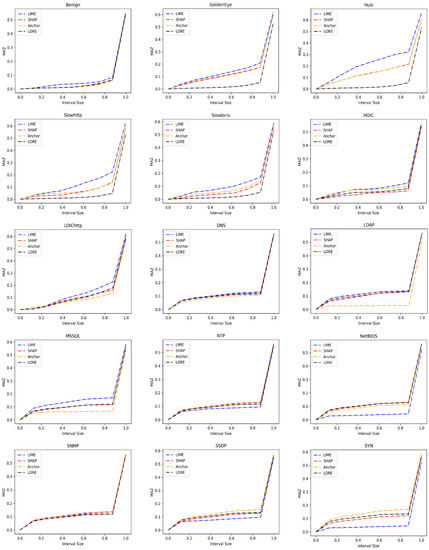

We also note from Table 15 that LIME is good at capturing dominant features for classes that have less confusion with other classes. To obtain a good explanation, we also need to assess how relevance is assigned to the features that impact a prediction. Thus, we use the MAZ score defined in Table 7 to evaluate the explanation methods by investigating the sparsity of the generated explanations. Sparse explanations have a steep slope in MAZ near 0 and are constant around 1, as most of the features are not marked as relevant, while dense explanations have a gentle slope in MAZ near 0.

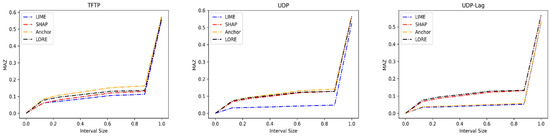

Figure 10 shows the result of the MAZ calculation for all dataset classes and explanation methods. The horizontal axis represents the interval size of the MAZ window around zero, while the vertical axis is the MAZ output value. We observe that LIME shows steep slopes of the MAZ curve for nine dataset classes. These classes are Benign, GoldenEye, Hulk, Slowhttp, Slowloris, HOIC, LOIChttp, DNS, and MSSQL. The Anchor exhibits steep slopes of the MAZ curve for SSDP, SYN, TFTP, and UDP. The MAZ curves of LDAP and NetBIOS classes show steep slopes with the LORE method. The SHAP exhibits steep slopes of the MAZ curve on NTP and SNMP classes.

Figure 10.

Sparsity measured as mass around zero (MAZ) score on each class of CIC datasets.

A steep slope of the MAZ curve around zero indicates that the explanations of the corresponding method are sparse. We note that the explanation methods that captured the dominant contribution features in Figure 9, particularly LIME, show sparsity between these features, as seen from the steep slopes of the MAZ curves in Figure 10. This observation is confirmed by the area under the MAZ curve reported in Table 14.

AUMAZ columns in Table 14 list the value of the area under the MAZ curve of the explanation methods for each dataset class. The MAZ curve that has a steep slope around zero returns a larger AUMAZ value that indicates a sparse explanation. The bold red values in the table are the largest AUMAZ. We also observe that the explanation methods, particularly the Anchor and SHAP, that capture the dense features in Figure 9 do not show the largest values of AUMAZ (bold red values) in Table 14, except for three classes. These classes are SNMP, SSDP, and UDP.
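The relation between sparsity, the MAZ curve, and AUMAZ can be illustrated with a short sketch. It assumes that the MAZ score in Table 7 follows the usual definition: relevance values are normalized to [-1, 1], and MAZ(r) is the fraction of normalized values that fall inside the window [-r, r]. The function names, the trapezoidal integration, and the two relevance vectors are hypothetical.

```python
from typing import List, Sequence


def maz_curve(relevances: Sequence[float], radii: Sequence[float]) -> List[float]:
    """Fraction of normalized relevance values inside [-r, r] for each r."""
    scale = max(abs(v) for v in relevances)
    # Normalize to [-1, 1]; an all-zero explanation stays all zero.
    normalized = [v / scale if scale > 0 else 0.0 for v in relevances]
    n = len(normalized)
    return [sum(1 for v in normalized if abs(v) <= r) / n for r in radii]


def area_under_curve(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Trapezoidal approximation of the area under the points (xs, ys)."""
    return sum(
        0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i]) for i in range(len(xs) - 1)
    )


radii = [0.0, 0.25, 0.5, 0.75, 1.0]  # interval sizes of the window around zero

# Hypothetical explanations: one feature dominates vs. relevance spread widely.
sparse_relevance = [0.0, 0.0, 0.0, 0.01, 1.0, 0.0, -0.02, 0.0]
dense_relevance = [0.5, -0.4, 0.6, 0.3, 1.0, -0.7, 0.2, 0.8]

sparse_maz = maz_curve(sparse_relevance, radii)
dense_maz = maz_curve(dense_relevance, radii)

aumaz_sparse = area_under_curve(radii, sparse_maz)
aumaz_dense = area_under_curve(radii, dense_maz)
print(sparse_maz, aumaz_sparse)
print(dense_maz, aumaz_dense)
```

The sparse explanation already places most of its mass at the smallest windows, so its MAZ curve rises steeply near zero and its AUMAZ is larger than that of the dense explanation.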

6. Conclusions

In this paper, we investigate the explanation of predictions of the DL model on the CIC datasets. In particular, we employ LIME, SHAP, Anchor, and LORE explanation methods to explain the LSTM that is used to classify DDoS attacks in CICIDS2017, CSECICIDS2018, and CICDDoS2019 datasets. We conduct binary and multiclass classification. The result of binary classification on all three datasets demonstrates high values of the performance metrics, while the result of multiclass classification shows good performance only on the CICIDS2017 and CSECICIDS2018 datasets and some classes of CICDDoS2019. The LSTM model has confusion in distinguishing between some attacks in CICDDoS2019, which results in low classification performance.

LIME, SHAP, Anchor, and LORE explanation methods are used to interpret the predictions of the LSTM model. We employ these methods to explain the multiclass classification of 17 DDoS attacks that are extracted from the three CIC datasets. We find the intersection between the four explanations of each class to extract 51 features that represent the intrinsic classification features of DDoS attacks in CIC datasets. We also employ the similarity between the class explanations to unify the DDoS attacks and improve the LSTM classification performance. An explanation of classes in the CICDDoS2019 is used to improve LSTM performance.

We finally compare and evaluate the explanation methods using DA and DS metrics. The DA is used to determine the explanation methods that can capture the features with a dominant contribution to the prediction of a classification model. Removing these features from the input instance can cause a steep drop in the prediction accuracy. Our results show that the LIME method can capture such features, and that it is well suited to capturing dominant features for classes that have less confusion with other classes. The features that are captured using the Anchor and SHAP methods in most DDoS classes show a gradual decline in accuracy (DA) after removing them. These methods can capture the dependent features. Our results on the DS metrics show that the dominant contribution features captured by LIME have less dependency between them, and the features captured by Anchor and SHAP are more dependent. In future work, we will focus on more explanation methods to interpret the recent black-box models that are used to detect the wide spectrum of attacks that threaten network security.

Author Contributions

Conceptualization, A.B., H.B. and B.A.; methodology, A.B.; software, A.B.; validation, A.B.; formal analysis, A.B.; investigation, A.B.; resources, B.A.; data curation, A.B.; writing—original draft preparation, A.B.; writing—review and editing, B.A. and H.B.; visualization, A.B.; supervision, B.A. and H.B.; project administration, B.A. and H.B.; funding acquisition, B.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the International Scientific Partnership Program of King Saud University under Grant ISPP-18-134(2).

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: [https://www.unb.ca/cic/datasets/index.html] (accessed on 17 July 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Almaiah, M.A. A New Scheme for Detecting Malicious Attacks in Wireless Sensor Networks Based on Blockchain Technology. In Artificial Intelligence and Blockchain for Future Cybersecurity Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 217–234.

- Zargar, S.T.; Joshi, J.; Tipper, D. A Survey of Defense Mechanisms Against Distributed Denial of Service (DDoS) Flooding Attacks. IEEE Commun. Surv. Tutor. 2013, 15, 2046–2069.

- Hou, J.; Fu, P.; Cao, Z.; Xu, A. Machine Learning Based DDos Detection Through NetFlow Analysis. In Proceedings of the IEEE Military Communications Conference MILCOM, Los Angeles, CA, USA, 29 October 2018.

- DDoS Attacks History. Radware. Available online: https://www.radware.com/security/ddos-knowledge-center/ddos-chronicles/ddos-attacks-history/ (accessed on 17 July 2023).

- Choi, H.; Lee, H. Identifying Botnets by Capturing Group Activities in DNS Traffic. Comput. Netw. 2012, 56, 20–33.

- Suresh, S.; Ram, N. A Review on Various DPM Traceback Schemes to Detect DDoS Attacks. Indian J. Sci. Technol. 2016, 9, 1–8.

- Argyraki, K.; Cheriton, D. Active Internet Traffic Filtering: Real-Time Response to Denial of Service Attacks. arXiv 2003, arXiv:cs/0309054.

- Anjum, F.; Subhadrabandhu, D.; Sarkar, S. Signature Based Intrusion Detection for Wireless Ad-Hoc Networks: A Comparative Study of Various Routing Protocols. In Proceedings of the IEEE 58th Vehicular Technology Conference, Orlando, FL, USA, 6 October 2003.

- Cloudflare DDoS Threat Report 2022 Q3. Cloudflare. Available online: https://blog.cloudflare.com/cloudflare-ddos-threat-report-2022-q3/ (accessed on 17 July 2023).

- Hoque, N.; Kashyap, H.; Bhattacharyya, D.K. Real-Time DDoS Attack Detection Using FPGA. Comput. Commun. 2017, 110, 48–58.

- Swami, R.; Dave, M.; Ranga, V. Software-Defined Networking-Based DDoS Defense Mechanisms. ACM Comput. Surv. 2019, 52, 36.

- Ribeiro, M.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, San Diego, CA, USA, 12 June 2016.

- Lundberg, S.M.; Lee, S. A Unified Approach to Interpreting Model Predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3 December 2017.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-Precision Model-Agnostic Explanations. In Proceedings of the AAAI conference on artificial intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Pedreschi, D.; Turini, F.; Giannotti, F. Local Rule-Based Explanations of Black Box Decision Systems. arXiv 2018, arXiv:1805.10820.

- Ugwu, C.C.; Obe, O.O.; Popoọla, O.S.; Adetunmbi, A.O. A Distributed Denial of Service Attack Detection System Using Long Short Term Memory with Singular Value Decomposition. In Proceedings of the IEEE 2nd International Conference on Cyberspace (CYBER NIGERIA), Abuja, Nigeria, 23 February 2021.

- Gadze, J.D.; Bamfo-Asante, A.A.; Agyemang, J.O.; Nunoo-Mensah, H.; Opare, K.A.-B. An Investigation into the Application of Deep Learning in the Detection and Mitigation of DDOS Attack on SDN Controllers. Technologies 2021, 9, 14.

- Prakash, K.B.; Kanagachidambaresan, G.R. (Eds.) Programming with TensorFlow: Solution for Edge Computing Applications; Springer International Publishing: Cham, Switzerland, 2021; pp. 53–61.

- Keshk, M.; Koroniotis, N.; Pham, N.; Moustafa, N.; Turnbull, B.; Zomaya, A.Y. An Explainable Deep Learning-Enabled Intrusion Detection Framework in IoT Networks. Inf. Sci. 2023, 639, 119000.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2020, 23, 18.

- Turlach, B. Least Angle Regression. Ann. Stat. 2004, 32, 481–490.

- Shapley, L.S. A Value for N-Person Games, Contributions to the Theory of Games; Princeton University Press: Princeton, NJ, USA, 1953; Volume 2, pp. 307–317.

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement Learning: A Survey. J. Artif. Intell. Res. 1996, 4, 237–285.

- Even, S. Graph Algorithms, 2nd ed.; Guy Even, T.-A.U., Ed.; Cambridge University Press: Cambridge, UK, 2011.

- Kaufmann, E. Information Complexity in Bandit Subset Selection. J. Mach. Learn. Res. 2013, 30, 228–251.

- Fan, F.; Xiong, J.; Li, M.; Wang, G. On Interpretability of Artificial Neural Networks: A Survey. IEEE Trans. Radiat. Plasma Med. Sci. 2021, 5, 741–760.

- Elsayed, M.; LeKhac, N.; Dev, S.; Jurcut, A. DDoSNet: A Deep-Learning Model for Detecting Network Attacks. In Proceedings of the 2020 IEEE 21st International Symposium on “A World of Wireless, Mobile and Multimedia Networks” (WoWMoM), Cork, Ireland, 31 August 2020.

- Kim, M. Supervised Learning-based DDoS Attacks Detection: Tuning Hyperparameters. ETRI J. 2019, 41, 560–573.

- Hwang, R.; Peng, M.; Nguyen, V.; Chang, Y. An LSTM-Based Deep Learning Approach for Classifying Malicious Traffic at the Packet Level. Appl. Sci. 2019, 9, 3414.

- Yuan, X.; Li, C.; Li, X. DeepDefense: Identifying DDoS Attack via Deep Learning. In Proceedings of the 2017 IEEE International Conference on Smart Computing (SMARTCOMP), Hong Kong, China, 29 May 2017.

- Cui, J.; Long, J.; Min, E.; Liu, Q.; Li, Q. Comparative Study of CNN and RNN for Deep Learning Based Intrusion Detection System; Springer: Berlin/Heidelberg, Germany, 2018; pp. 159–170.

- Zhang, Y.; Xu, C.; Jin, L.; Wang, X.; Guo, D. Network Intrusion Detection: Based on Deep Hierarchical Network and Original Flow Data. IEEE Access 2019, 7, 37004–37016.

- Azizjon, M.; Jumabek, A.; Wooseong, K. 1D CNN Based Network Intrusion Detection with Normalization on Imbalanced Data. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19 February 2020.

- Batchu, R.K.; Seetha, H. An Integrated Approach Explaining the Detection of Distributed Denial of Service Attacks. Comput. Netw. 2022, 216, 109269.

- Bårli, E.M.; Yazidi, A.; Viedma, E.H.; Haugerud, H. DoS and DDoS Mitigation Using Variational Autoencoders. Comput. Netw. 2021, 199, 108399.

- Han, D.; Wang, Z.; Chen, W.; Zhong, Y.; Wang, S.; Zhang, H.; Yang, J.; Shi, X.; Yin, X. DeepAID: Interpreting and Improving Deep Learning-Based Anomaly Detection in Security Applications. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, New York, NY, USA, 15 November 2021.

- Le, T.-T.-H.; Kim, H.; Kang, H.; Kim, H. Classification and Explanation for Intrusion Detection System Based on Ensemble Trees and SHAP Method. Sensors 2022, 22, 1154.

- Neupane, S.; Ables, J.; Anderson, W.; Mittal, S.; Rahimi, S.; Banicescu, I.; Seale, M. Explainable Intrusion Detection Systems (x-Ids): A Survey of Current Methods, Challenges, and Opportunities. IEEE Access 2022, 10, 112392–112415.

- Zhang, Z.; Al Hamadi, H.; Damiani, E.; Yeun, C.Y.; Taher, F. Explainable Artificial Intelligence Applications in Cyber Security: State-of-the-Art in Research. IEEE Access 2022, 10, 93104–93139.