Abstract

Automated detection of road damage (ADRD) is a challenging topic in road maintenance. It focuses on automatically detecting road damage and assessing its severity with deep learning. Because the characteristic pixels of damage are sparsely distributed, the task is more challenging than generic object detection. Although some public datasets support the development of ADRD, their data volume and classification standards cannot meet the needs of network training and feature learning. To address this problem, this work publishes a new road damage dataset named CNRDD, which is labeled according to the latest evaluation standard for highway technical conditions in China (JTG5210-2018). The dataset is collected by professional onboard cameras and is manually labeled in eight categories with three different degrees (mild, moderate and severe), which can effectively help promote research on automated detection of road damage. At the same time, a novel baseline with attention fusion and normalization is proposed to evaluate and analyze the published dataset. It explicitly leverages edge detection cues to guide attention toward salient regions and suppresses the weights of non-salient features by attention normalization, which can alleviate the interference of sparse pixel distribution on damage detection. Experimental results demonstrate that the proposed baseline significantly outperforms most existing methods on the existing RDD2020 dataset and the newly released CNRDD dataset. Further, the CNRDD dataset proves to be more robust, as its high damage density and professional classification are more conducive to promoting the development of ADRD.

1. Introduction

Road damage detection (RDD) is an important task in the field of traffic infrastructure and involves locating and classifying road damage [1,2,3,4]. It can identify roads that need maintenance to reduce potential safety hazards. However, current RDD approaches still depend on manual inspection in most countries. This not only consumes a lot of time and labor, but also cannot ensure that damaged road sections are repaired in time. To address this problem, automated detection of road damage (ADRD) based on deep learning has appeared and is gradually becoming an emerging task in computer vision [4,5]. Its research goal is to realize automatic road damage detection and analysis so as to play an important role in intelligent traffic maintenance systems.

Most of the existing methods use convolutional networks to learn classification features and have achieved significant performance improvement on public foreign datasets [3,6]. When these methods are directly applied to actual road images in other countries, however, accuracy declines sharply. This is because the data distribution of the training data differs from that of the actual testing data. After all, different climates, road construction standards and traffic rules in every country lead to differences in damage appearance and susceptible locations. In machine learning, transfer learning [3] or semi-supervised training [5] can effectively alleviate the impact of the distribution gap between training and testing data on generalization. However, the premise is that there is sufficient target domain data for training. At present, many datasets of road damage are private and unpublished [7,8], which greatly limits the exploration of ADRD. Therefore, the most important research motivation of this paper is to release a new road damage dataset with professional classification and uniform image size so as to promote the research of relevant algorithms.

In order to realize this research motivation, this paper collected and annotated the first edition of the China Road Damage Detection Dataset (CNRDD) over the course of three months. It consists of 4319 high-definition road images and provides 21,552 road damage annotations. These annotations contain eight types of damage: crack, longitudinal crack, lateral crack, subsidence, rutting, pothole, looseness and strengthening. Each category of damage also contains three different degree labels, namely mild, moderate and severe. Some damage examples are shown in Figure 1.

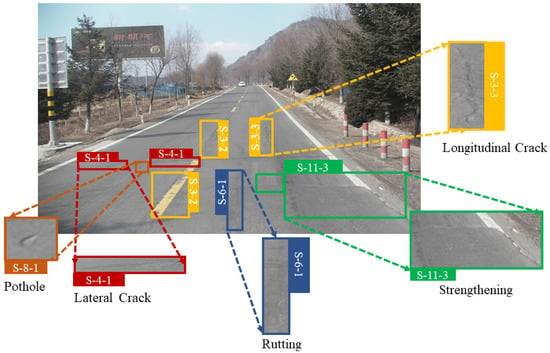

Figure 1.

An example image from CNRDD. Various colored bounding boxes represent different types of road damage.

As shown in Figure 1, multiple types of damage can appear in the same image. Although they do not overlap, the features of each kind of damage are very close to those of healthy road surfaces. In many cases, it is difficult even for the human eye to classify damage correctly based only on images taken by vehicle cameras, without field investigation. These phenomena make ADRD very challenging. The top view, which is similar to the perspective of a remotely sensed image, can alleviate the above difficulties to some extent [6,9]. Such images need to be taken manually or by an unmanned aerial vehicle (UAV), however, which makes it impossible to quickly patrol large-scale, high-traffic road scenes. In order to effectively patrol multiple long-distance roads, it is necessary to use onboard cameras that can feed the collected images back to the terminal in a timely manner. An ADRD algorithm can then automatically warn of damaged sections that need repair and thereby improve maintenance efficiency.

The images taken by the onboard camera are different from remote sensing images. These onboard images have obvious perspective relationships, so it is impossible to use a similar network for accurate detection [10]. Of course, these images are also different from conventional photos with perspective changes. The size of the collected image is large, but the proportion of damage is small in the image. The apparent distribution of the damage is sparse, and the boundary is not obvious. If we directly use any series of object detection algorithms, such as Faster RCNN [11,12] or YOLO [13,14,15,16,17], it is very easy to have false detection and missed detection [18].

To solve this problem, this paper proposes a novel road damage detection method with attention learning based on existing detection algorithms. It consists of an attention fusion module, a salient feature learning module and fine-tuned training. The attention fusion module introduces simple and fast Canny edge detection to provide salient cues and then guides damage detection by attention learning, which can effectively alleviate the sparse distribution and unclear boundaries of road damage. The salient feature learning module explicitly leverages attention normalization to alleviate the interference of approximate appearance on damage detection. Fine-tuning adopts a coarse-to-fine strategy. That is, it first uses coarse labels without severity for training to obtain a pre-training model. Then, we fine-tune the proposed framework with the stricter, fine-grained category labels. This fine-tuning can enhance the network’s perception of different damage and thus reduce the impact of some types of damage with similar appearance on classification. We evaluate the proposed baseline on the popular RDD2020 dataset [3] and the self-collected CNRDD dataset. It was found that the CNRDD dataset is more challenging than the RDD2020 dataset, and cracks are easier to distinguish than other damage. Further, the proposed baseline has a significantly improved F1-Score and mAP. Although its accuracy does not reach the level of physical object detection, this model at least provides a feasible solution for supervised ADRD.

In summary, this paper’s contributions are threefold:

- (1) Release the first China road damage detection dataset, which contains eight types of damage with three degrees of severity. It is named CNRDD and comprises 21,552 annotations from 4319 images, which can effectively promote the development of ADRD.
- (2) Design an attention-learning-based road damage detection framework, which can explicitly utilize attention fusion and attention normalization to enhance the learning of salient damage features.
- (3) On RDD2020 and CNRDD datasets, the proposed baseline achieved state-of-the-art performance against existing methods without embedded learning. Based on the experiments, this work provides meaningful recommendations for future ADRD research.

2. Related Work

Automatic road damage detection first appeared in the 1980s [19]. It mainly relies on traditional edge detection and filtering to complete image processing, and then uses SVM or AdaBoost to realize classification [20,21,22]. However, these early methods are sensitive to data diversity and thus cannot be applied in real scenes. With the rapid development of artificial intelligence in the field of computer vision, road damage detection based on deep learning has become the mainstream of ADRD [23,24]. This has greatly fostered the development of ADRD, but there are still deficiencies in accuracy and practicability. In this section, therefore, we briefly review some representative research and the datasets supporting this research and then analyze the existing problems and potential solutions.

2.1. Existing Road Damage Datasets

For any deep learning algorithm, trainable data is the key. ADRD is no exception. In order to meet the needs of ADRD training, three road damage datasets have been released. The first one is the German Asphalt Pavement Distress (GAPs) dataset [25], which was collected from three different federal highways in Germany. It consists of 2468 gray-valued images with manual annotations. In practical applications, however, color images are more conducive to road damage detection than gray-scale images. The second dataset is the Pavement Image Dataset (PID) [26], which was captured by street cameras from 22 different road sections of the United States. It provides 7237 images and 67,469 labels. These labels are annotated as nine categories, but eight of them identify cracks, and the other is for potholes. This classification is not conducive to network training. The third dataset is the RDD dataset [3]. It was released in 2018 and has been updated to include three versions so far; it is the most widely used dataset in road damage detection tasks. This dataset was captured by a smartphone installed in a vehicle and contains 31,342 road damage annotations from India, Japan and the Czech Republic. However, the overall size of the images is relatively small due to the capture equipment. The damage density of the RDD dataset is low, meaning a large number of samples have only one damage bounding box. Rather than locating road damage, the dataset focuses more on the recognition of road damage.

The datasets mentioned above have contributed to the development of ADRD. It is not surprising to find that most data are collected from developed countries. Due to various climates, road infrastructure standards and traffic rules, there are obvious differences in the data distributions of road damage among countries. In order to further promote the development of ADRD, this paper releases a new road damage dataset named CNRDD. In the process of data collection and labeling, types of damage are classified into eight categories according to the latest evaluation standards for highway technical conditions (JTG5210-2018). Considering the advantages of deep learning in recognition and classification, samples in which a single image contains multiple types of damage are selected to increase the difficulty and practical value of the dataset. In addition, in order to connect more easily to future intelligent road maintenance systems, a professional onboard camera is chosen as the acquisition device. In this way, when road maintenance vehicles or driverless cars patrol daily in the future, they can provide timely feedback by uploading collected images to the cloud or a server through 5G technology. Then, the ADRD algorithm can determine whether there is damage in the uploaded images and give maintenance warnings for serious segments. This mode of ADRD can improve maintenance efficiency, reduce the economic cost caused by untimely maintenance and reduce the number of traffic accidents caused by road damage. This work hopes to attract more researchers to ADRD and to create a new paradigm for the realization of intelligent road maintenance as soon as possible.

2.2. Existing Damage Detection Approaches

Object detection based on deep learning is one of the most successful tasks in the field of computer vision. Most of the existing methods can be divided into one-stage or two-stage according to the network structure [11,12,13,17]. Two-stage methods consist of region proposal generation and proposal classification. This technique began with RCNN and then evolved into Faster RCNN through the introduction of a region proposal network and an ROI pooling layer [11,12]. Faster RCNN is widely used in pedestrian detection, remote sensing image detection and other practical applications. With the support of pre-training models from the ImageNet dataset, this method can generally obtain good accuracy, but its complex structure requires a large amount of time and memory for training and testing. The one-stage method, represented by the you-only-look-once (YOLO) series, directly regards target detection as a regression problem [13,14,15,17]. It can carry out end-to-end object detection in the whole image. The original YOLO v1 gradually evolved into YOLO v5 by introducing batch normalization (BN) and Darknet structures, which improve the accuracy and accelerate convergence. The main advantages of one-stage methods lie in their ability to detect small objects and their high efficiency. Because of these attributes, they are more suitable as the baseline for road damage detection.

Zhang et al. used YOLO v4 to detect road damage on the RDD dataset [6] and introduced a generative adversarial network (GAN) for data augmentation. Additionally considering data augmentation are PG-GAN proposed by Maeda et al. [27] and the improved transfer learning proposed by Arya et al. [3]. They all hope to use GAN to generate more diverse training samples to improve the generalization ability of networks in real road scenes. However, they did not consider semi-supervised training for the generated data, so they failed to give full play to the role of data augmentation. In addition, there are some algorithms of road damage detection assisted by 3D sensors and laser cameras. They utilize the depth information and sensing signals of the road surface to improve the location and classification of road damage [23,28]. Relevant professional equipment is generally expensive, which makes it suitable for quantitative analysis of road damage prior to road maintenance, but it is not convenient for daily road damage detection.

Almost all of the methods mentioned above are evaluated on the RDD dataset [3]. Based on this dataset, the BigData Road Damage Detection Challenge was held three times from 2018 to 2020. In a recent challenge, DiDi and the Shenzhen Urban Transport Planning Center achieved excellent performance by improving Faster RCNN and YOLO v4 [5]. This demonstrates that the road damage detection task has attracted much attention and has potential research value. Although its development is not mature at this stage, most methods rely on modification of object detection networks [7,29,30,31]. With the release of the CNRDD dataset and its subsequent continuous updating, however, there will be more targeted research to promote the development of ADRD. Aiming at the regional dispersion of road damage, this paper proposes a road damage detection baseline with attention learning. It introduces attention fusion and attention normalization to damage detection for the first time and sheds light on ADRD with attention learning.

3. China Road Damage Detection Dataset

Existing datasets can be divided into wide-view and top-view according to their shooting perspective. Damage captured from top-view images has no distortion and is easier to recognize. However, collecting top-view images needs UAVs and other professional equipment. This limits it to single acquisition areas, which makes it impossible for it to access the intelligent road damage detection system on a large scale. It may be more suitable for dangerous or inaccessible sections. Wide-view images can be easily obtained by smartphones or ordinary cameras. The shooting angle of wide-view images is free and changeable, and such images have obvious perspective relationships. Moreover, this kind of image usually contains interference items, such as buildings, vehicles or pedestrians, which makes it more difficult to detect road damage compared to top-view images. These datasets, no matter what perspective, have greatly fostered the development of ADRD.

However, the published parts of these datasets are collected from foreign roads. Further, the data distributions within them vary due to differences in acquisition equipment and shooting perspectives. This makes it difficult to establish a scientific cross-domain bridge by transfer learning. In order to solve these problems, this paper puts forward the CNRDD dataset, which may become a standard for road damage detection. It adopts unified collection and annotation according to the actual situation of roads in China. This can facilitate subsequent expansion and cross-domain transfer learning, which will help models improve their generalization ability for road damage detection.

The CNRDD dataset has the following three advantages over existing datasets: (1) Data collection adopts a professional onboard camera, and the image size is unified, which is conducive to the access of ADRD to intelligent road maintenance system in the future. (2) Classification meets the evaluation standards of China’s highway technical status, and the damage categories are richer, which can provide data support for the application and promotion of ADRD algorithms. (3) The dataset has high damage density in single images and provides damage degree classification, which is more challenging for the damage detection algorithm. The specific introduction of dataset classification statistics and evaluation indicators is as follows.

3.1. Data Collection and Annotation

The G303 road segment in China was selected to collect road damage data for this edition. Compared with other roads, G303 carries a large number of trucks and cars every day. Further, the temperature differences of this road segment are large across the four seasons. These factors lead to higher road damage density in single collected images, so road damage detection on the dataset is more challenging. Ultimately, the dataset included 4319 images with 21,552 annotations. These pictures were uniformly captured using professional onboard cameras. All images are 1600 × 1200 pixels and are taken from a wide-view perspective. According to the evaluation standard for highway technical conditions, the CNRDD provides eight types of damage labels, including crack, longitudinal crack, lateral crack, subsidence, rutting, looseness, pothole and strengthening. Visual examples of these types of damage are shown in Figure 2.

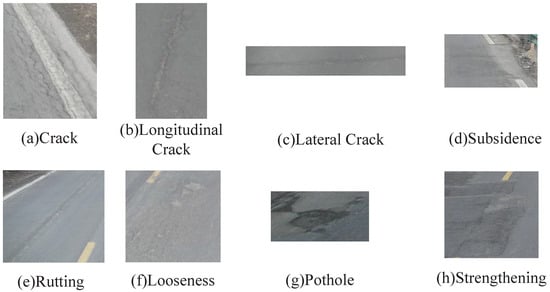

Figure 2.

Schematic diagram of each damage.

As shown in Figure 2, (a) cracks, (b) longitudinal cracks and (c) lateral cracks are the most common types of damage on daily roads. Among these, cracks look like crocodile skin, and their initial shape is multiple parallel longitudinal cracks along the tire track line. With the repeated rolling of vehicles, lateral and oblique cracks appear between the parallel longitudinal cracks and form a network of cracks. (d) Subsidence refers to local depression of the road surface caused by foundation settlement. (e) Rutting is longitudinal depression of the road surface along the wheel track and is usually caused by insufficient compaction and poor composition of the mixed material during construction. (f) Looseness mainly comes from asphalt aging. It has various appearances, such as coarse and fine aggregate loss, pitted surfaces and even surface peeling. (g) Potholes are usually caused by looseness, cracks, subsidence and other types of damage that are not repaired in time. Their classification features are relatively obvious. (h) Strengthening is not real road damage. It represents areas where the damaged pavement has been repaired. Due to the diversity of road damage repair, strengthening has many forms of appearance. Therefore, the degree of strengthening is not classified in the CNRDD dataset.

For the above-mentioned road damage, LabelImg software based on Python is used for strict annotation. The personnel responsible for labeling have received professional training and can scientifically distinguish road damage. While the damage classification and bounding box are labeled, the degree of each damage is also finely classified (excluding strengthening). The annotation information of each image is saved in XML format, and the corresponding relationship between the classification code and the damage name is shown in Figure 3.

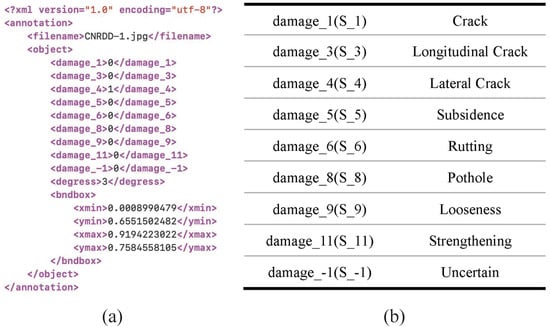

Figure 3.

Description of road damage annotation. (a) Screenshot of annotation file. (b) Correspondence between classification codes and damage name.

As shown in Figure 3, the image name, damage type and bounding box position are provided in XML files. The ‘degress’ field gives the severity of damage: 1, 2 and 3 correspond to mild, moderate and severe, respectively. Moreover, 113 annotations of uncertain damage type are included in the CNRDD dataset, which allows users to exploit the data flexibly. The CNRDD dataset provides 21,552 labels for 4319 images, including 3022 images in the training set and 1273 images in the test set; the remaining 24 images do not contain any road damage. Detailed damage annotation statistics are displayed in Table 1.
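As an illustration of how such annotation files could be read, the following is a minimal sketch. It assumes the Pascal VOC layout that LabelImg writes by default and assumes that the ‘degress’ field sits inside each `object` element; the sample file name, class codes and box coordinates are hypothetical and are not taken from the dataset.

```python
import xml.etree.ElementTree as ET

# Severity codes as described in the text: 1 = mild, 2 = moderate, 3 = severe.
SEVERITY = {1: "mild", 2: "moderate", 3: "severe"}

# Hypothetical annotation in LabelImg's Pascal VOC layout. The file name, the
# class codes and the position of the 'degress' tag are assumptions.
SAMPLE_XML = """
<annotation>
  <filename>example.jpg</filename>
  <size><width>1600</width><height>1200</height><depth>3</depth></size>
  <object>
    <name>1</name>
    <degress>2</degress>
    <bndbox><xmin>100</xmin><ymin>700</ymin><xmax>400</xmax><ymax>900</ymax></bndbox>
  </object>
  <object>
    <name>8</name>
    <bndbox><xmin>500</xmin><ymin>800</ymin><xmax>900</xmax><ymax>1000</ymax></bndbox>
  </object>
</annotation>
"""


def parse_annotation(xml_text):
    """Return one record per annotated damage box."""
    root = ET.fromstring(xml_text.strip())
    image_name = root.findtext("filename")
    records = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        # The severity tag may be absent (e.g. for classes without a degree).
        degree_text = obj.findtext("degress")
        severity = SEVERITY.get(int(degree_text)) if degree_text else None
        records.append({
            "image": image_name,
            "code": obj.findtext("name"),
            "severity": severity,
            "box": tuple(int(box.findtext(k))
                         for k in ("xmin", "ymin", "xmax", "ymax")),
        })
    return records


for record in parse_annotation(SAMPLE_XML):
    print(record)
```

Mapping the class code to a damage name would use the correspondence in Figure 3b, which is not reproduced in this sketch.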

Table 1.

Mathematical statistics of different types of damage in training set and test set.

As shown in Table 1, the training set contains 15,085 annotations and the test set contains 6467 annotations. Both of them cover eight types of damage. However, the total number of four types of damage is less than 1000, which forms an obvious data imbalance with the other types of damage with mass annotations. In the training process, data augmentation may be considered to alleviate the negative impact of data imbalance on model generalization.

3.2. Evaluation Measures

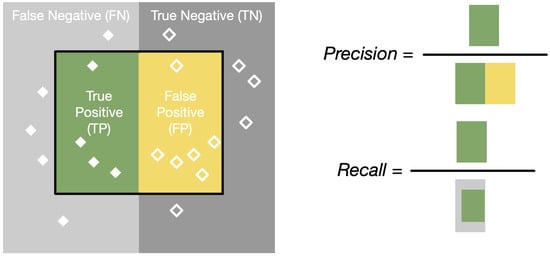

The evaluation system of the CNRDD dataset is the same as other detection datasets. Precision, Recall, mAP@0.5, and F1-Score are used as evaluation indicators. Precision refers to the number of correct prediction results divided by the total number of predictions. It is related to true positives (TP) and false positives (FP) and is defined in Equation (1). Recall indicates the correctly predicted proportion in all annotation samples, which is related to true positives (TP) and false negatives (FN). It can be calculated by Equation (2).

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{2}$$

where TP, FN and FP are determined by the Intersection over Union (IoU). Between the predicted box and the ground-truth (GT) box, IoU is the quotient of the overlap area and the union area. When the IoU between the predicted bounding box and the GT exceeds 0.5, the predicted instance is considered a TP. When a predicted box has less than 0.5 IoU overlap with the GT, it is categorized as an FP. In addition, if the predicted result is not in the GT at all or the predicted damage label is different from the GT label, the result is counted as an FP. FN indicates the number of road damage instances that the model fails to predict or mispredicts. According to these rules, Precision and Recall can be calculated by Equations (1) and (2), respectively. This concept can be better explained by Figure 4.
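The counting rules above can be sketched in a few lines of Python. This is an illustrative sketch, not the evaluation script used for the dataset: the greedy matching in descending confidence order and the one-to-one assignment of GT boxes are common conventions assumed here, and the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two (xmin, ymin, xmax, ymax) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds, gts, thr=0.5):
    """Count (TP, FP, FN) for one image.

    preds: list of (label, score, box); gts: list of (label, box).
    A prediction is a TP if it has the same label as a not-yet-matched GT box
    and their IoU exceeds thr; otherwise it is an FP (assumed greedy matching).
    """
    used = set()
    tp = fp = 0
    for label, _score, box in sorted(preds, key=lambda p: -p[1]):
        best, best_iou = None, thr
        for i, (gt_label, gt_box) in enumerate(gts):
            if i in used or gt_label != label:
                continue
            value = iou(box, gt_box)
            if value > best_iou:
                best, best_iou = i, value
        if best is None:
            fp += 1
        else:
            used.add(best)
            tp += 1
    fn = len(gts) - len(used)  # GT boxes that no prediction matched
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

For example, with one GT crack and one GT pothole, a prediction set containing a well-localized crack, a stray crack and a rutting box placed on the pothole yields one TP, two FPs and one FN, because the mislabeled box counts as an FP and leaves the pothole unmatched.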

Figure 4.

Description of Precision and Recall.

In Figure 4, each solid white diamond represents a positive sample, each hollow white diamond represents a negative sample, and diamonds in the black solid line represent the instances predicted as positive samples by the model. As shown in Figure 4, TP (green area) represents the positive samples predicted as positive samples, FP (yellow area) represents the negative samples predicted as positive samples, and FN (light grey area) represents the positive samples predicted as negative samples.

However, Recall and Precision calculated according to Equations (1) and (2) trade off against each other: when Recall is improved, Precision tends to decrease. In order to better evaluate the model performance on the CNRDD dataset, the F1-Score, defined in Equation (3), and the mAP@0.5 need to be introduced.

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{3}$$

In Equation (3), F1 refers to the F1-Score. It is the harmonic mean of Precision and Recall and can represent the average accuracy of a network in detecting road damage. The goal of training networks is to maximize the F1-Score and obtain the optimal balance between Precision and Recall. The mAP@0.5 represents the mean average precision when the IoU threshold is set to 0.5. In a Precision–Recall Curve (PRC) where Recall is the abscissa and Precision is the ordinate, the area under the PRC is recorded as the AP@0.5. The mean of the AP@0.5 over all categories is the mAP@0.5. It can measure the detection performance of the network for different types of road damage.
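A small sketch of these two measures follows. The F1-Score is the harmonic mean described above. For the AP, the text only specifies the area under the PRC; the monotone precision envelope (all-point interpolation) used below is one common way to compute that area and is an assumption here, as are the function names.

```python
def f1_score(precision, recall):
    """Harmonic mean of Precision and Recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def average_precision(is_tp, num_gt):
    """Area under the Precision-Recall curve for one category.

    is_tp: TP/FP flags of that category's detections, sorted by descending
    confidence; num_gt: number of GT boxes of that category.
    """
    if num_gt == 0:
        return 0.0
    points = []  # (recall, precision) after each detection
    tp = 0
    for k, flag in enumerate(is_tp, start=1):
        tp += bool(flag)
        points.append((tp / num_gt, tp / k))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(points) - 2, -1, -1):
        points[i] = (points[i][0], max(points[i][1], points[i + 1][1]))
    area, prev_recall = 0.0, 0.0
    for recall, precision in points:
        area += (recall - prev_recall) * precision
        prev_recall = recall
    return area


def mean_average_precision(ap_per_class):
    """mAP: mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class) if ap_per_class else 0.0
```

With the IoU threshold fixed at 0.5 when producing the TP/FP flags, `average_precision` gives the AP@0.5 of one category and `mean_average_precision` gives the mAP@0.5.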

4. Our Proposed Baseline with Attention Learning

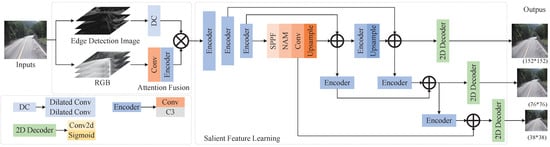

In the labeling process of the CNRDD dataset, it was found that road damage detection is far more difficult than conventional object detection. The main reason is that the damage foreground pixels are sparse, and the proportion of damage pixels in the corresponding bounding box is also relatively low. By contrast, other objects (e.g., animals or buildings) have abundant and dense pixel areas in the bounding box. This leads to strong similarity between different types of damage marked with bounding boxes. If the bounding box were changed to pixel-level annotation and the detection transformed into a segmentation task, this problem could be alleviated. However, this would increase labor and time costs and would not promote the development of road damage classification. Therefore, we still maintain bounding box annotation on the published CNRDD dataset and propose a damage detection method based on attention learning. Its outline is shown in Figure 5.

Figure 5.

Outline of the proposed method.

As shown in Figure 5, the method takes raw images as input and realizes the prediction of road damage through an attention fusion module and a salient feature learning module. The attention fusion module exploits edge detection to achieve the location cues for road damage and then guides the network to pay attention to the salient area through multiplication from a self-attention mechanism. The salient feature learning module introduces attention normalization for spatial pyramid pooling. It not only strengthens the learning of salient features but also suppresses the weight of insignificant features. It is helpful to alleviate the interference of pixel dispersion and approximate appearance on road damage detection. In the following subsections, each component of the proposed method will be introduced in detail.

4.1. Attention Fusion Based on Edge Detection

Many existing algorithms have achieved success in pedestrian detection, remote sensing detection and other tasks. However, when they are directly applied to road damage detection, they all lose their excellent performance. The main reason lies in the sparse distribution of damage in foreground pixels. To solve this problem, along with adjusting the anchor generation strategy, we also need to correctly guide damage positions. Therefore, we rethought early road damage detection algorithms in this paper. We found that edge detection is the most common idea to detect road damage; it obtains the possible location of damage by analyzing the pixel gradient. It is often disturbed by the noise of pavement diversity and thus has a large rate of false detection. However, if it is only used to provide position cues, it can still help the observer quickly locate the salient damage area. Therefore, we designed an attention fusion module based on edge detection.

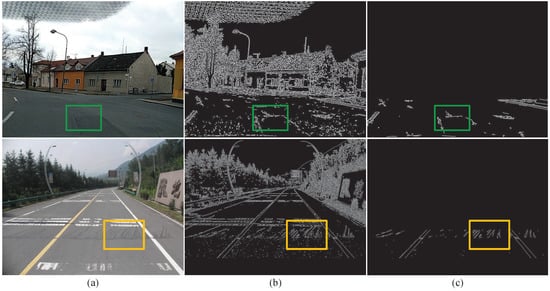

As shown in Figure 5, our method consists of an edge branch and an RGB branch. The inputs of the edge branch come from the classical Canny edge detector, which has the advantages of efficiency and coverage [32]. Considering that wide-view images contain a large number of trees and buildings, which will introduce many edges independent of the damage location, we decided to perform edge detection only on the lower half of each image. An example illustration is shown in Figure 6.

Figure 6.

Comparative illustration of edge detection on the full image and the half image: (a) raw image; (b) edge detection on the whole image; and (c) edge detection on the lower half of the image.
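The restriction of edge detection to the lower half of the image can be sketched as follows. To keep the sketch dependent on NumPy only, a thresholded gradient magnitude stands in for the Canny detector; it is not Canny, and the threshold value and function names are hypothetical.

```python
import numpy as np


def gradient_edges(gray, thr=40.0):
    """Stand-in edge detector (NOT Canny): thresholded gradient magnitude."""
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)  # derivatives along rows and columns
    return (np.hypot(gx, gy) > thr).astype(np.uint8) * 255


def lower_half_edges(gray, edge_fn=gradient_edges):
    """Apply edge detection to the lower half only; the upper half stays zero."""
    height = gray.shape[0]
    edges = np.zeros(gray.shape, dtype=np.uint8)
    edges[height // 2:] = edge_fn(gray[height // 2:])
    return edges


# Toy 8 x 8 image with a vertical intensity step in every row.
image = np.zeros((8, 8), dtype=np.uint8)
image[:, 4:] = 200
edge_map = lower_half_edges(image)
print(edge_map)
```

With OpenCV available, an actual Canny detector could be passed instead, for example `lower_half_edges(gray, lambda img: cv2.Canny(img, 100, 200))`, where the two thresholds are placeholders rather than values from this work.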

From Figure 6b,c, it can be seen that extracted edge information is more related to the damage location without the interference of other objects around the road. However, we also observe that edge detection of half images is a single-channel gray image with sparse effective pixels. In order to make it play a better role in attention guidance for feature learning, it is necessary to expand the range of the receptive field. Therefore, we used dilated convolution to design the DC block in Figure 5, which can learn the attention map of damage location according to edge detection.

This DC block takes three copies of the same edge map as inputs. For any input, the edge feature $F_{e}$ is extracted after two groups of dilated convolutions with a dilation rate of 2. After a softmax calculation, $F_{e}$ becomes the attention map that provides the damage location. The corresponding RGB branch consists of standard convolution and encoder blocks. It takes the split RGB channel images as inputs and produces a feature map $F_{rgb}$ as large as $F_{e}$. In order to enable $F_{e}$ to generate spatial attention guidance for $F_{rgb}$, they are fused through the calculation defined in Equation (4).

$$F_{fuse} = \mathrm{Softmax}(F_{e}) \otimes F_{rgb} \tag{4}$$

where $F_{e}$ indicates the feature map of edge detection, $F_{rgb}$ represents the feature map of the RGB image, and $\otimes$ denotes element-wise multiplication. Softmax() refers to the calculation that activates the attention of the feature map. After the fusion operation defined in Equation (4), the potential location information of damage in edge detection enters the next module with the fused feature $F_{fuse}$ and plays a role in salient feature learning.
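The fusion step can be illustrated with a short NumPy sketch in which a softmax-activated edge feature map re-weights an RGB feature map of the same size by element-wise multiplication. Taking the softmax over the spatial positions of each channel is an assumption of this sketch, as are the function names and the toy shapes; the dilated convolutions and encoder blocks that produce the two feature maps are not modeled.

```python
import numpy as np


def spatial_softmax(features):
    """Softmax over the H x W positions of each channel of a (C, H, W) array."""
    channels, height, width = features.shape
    flat = features.reshape(channels, -1)
    flat = flat - flat.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(flat)
    return (exp / exp.sum(axis=1, keepdims=True)).reshape(channels, height, width)


def attention_fusion(edge_feat, rgb_feat):
    """Re-weight RGB features with the attention map derived from edge features."""
    if edge_feat.shape != rgb_feat.shape:
        raise ValueError("edge and RGB feature maps must have the same shape")
    return spatial_softmax(edge_feat) * rgb_feat


# Toy example: the edge branch responds strongly at position (2, 2).
edge = np.zeros((2, 4, 4))
edge[:, 2, 2] = 5.0
rgb = np.ones((2, 4, 4))
fused = attention_fusion(edge, rgb)
print(np.unravel_index(fused[0].argmax(), fused[0].shape))
```

In this toy case the fused response is largest where the edge feature is largest, which is the intended effect of using edge cues as position guidance.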

4.2. Salient Feature Learning with NAM

According to the observation of road damage, we find that the difficulty in damage detection lies not only in the sparse distribution of key foreground pixels, but also in the fact that the bounding box contains much approximately healthy pavement. The designed attention fusion module based on edge detection may alleviate the first problem. In order to further improve road damage detection by alleviating the second problem, the network has to ignore most of the insignificant approximate features. Therefore, we directly introduced the normalization-based attention module (NAM) [33] into salient feature learning. It can exploit the contributing factors of weights to inhibit the learning of unnecessary feature weights. This can be regarded as a normalization process, and the calculation is defined in Equation (5).

$$W_{i} = \frac{\gamma_{i}}{\sum_{j} \gamma_{j}} \tag{5}$$

where $\gamma$ denotes the scaling factor from BN, and the calculated $W$ is the channel attention weight. It reflects the importance of different channels. If $\gamma$ is replaced by the pixel normalization scaling factor $\lambda$, the $W$ calculated in Equation (5) is the spatial attention weight. It represents the influence of different pixels. These two weights help NAM suppress the weights of unnecessary features. The location where NAM is introduced and the design details of the complete network are summarized in Table 2.
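The channel branch of this normalization-based attention can be sketched in NumPy as below. The sketch follows one reading of the NAM description [33]: each channel weight is its BN scaling factor divided by the sum over all channels, and a sigmoid of the weighted BN output gates the input features. The use of absolute values, batch statistics and the sigmoid gate are assumptions of this sketch, not details confirmed by the text, and the function name is hypothetical.

```python
import numpy as np


def nam_channel_attention(x, gamma, beta, eps=1e-5):
    """Channel attention from BN scaling factors.

    x: features of shape (N, C, H, W); gamma, beta: BN scale and shift, shape (C,).
    Returns the gated features and the per-channel weights.
    """
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    g = gamma.reshape(1, -1, 1, 1)
    b = beta.reshape(1, -1, 1, 1)
    bn = g * (x - mean) / np.sqrt(var + eps) + b  # batch normalization
    # Per-channel weight: normalized magnitude of the BN scaling factor.
    weights = np.abs(gamma) / np.abs(gamma).sum()
    gate = 1.0 / (1.0 + np.exp(-weights.reshape(1, -1, 1, 1) * bn))  # sigmoid
    return gate * x, weights


rng = np.random.default_rng(0)
features = rng.normal(size=(2, 3, 4, 4))
gamma = np.array([0.1, 0.1, 0.8])  # hypothetical learned BN scales
beta = np.zeros(3)
gated, weights = nam_channel_attention(features, gamma, beta)
print(weights)
```

A channel with a small scaling factor receives a small weight, so its gate stays close to 0.5 regardless of the input, while a channel with a large scaling factor can modulate its features more strongly. The spatial branch would apply the same normalization to per-pixel scaling factors.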

Table 2.

Design details of the proposed network.

In Table 2, ‘AF’ is the attention fusion module, and ‘SFL’ stands for the salient feature learning module. ‘C’ denotes the number of channels, ‘K’ represents the convolutional kernel size, ‘S’ means the stride, and ‘D’ refers to the dilation rate. C3 represents the double-branch block. The feature maps from the two branches of C3 are fused by concatenation. SPPF is the fast version of Spatial Pyramid Pooling. The Neck structure is consistent with that in YOLO v5 [15], and the connection with the front network is shown in Figure 5. NAM is added between SPPF and the Neck block. In this key position of forward propagation, NAM can better integrate spatial attention and channel attention. In this way, the proposed network can improve the discrimination of damage features by strengthening the learning of salient features and restraining the weights of non-salient features.

4.3. Two-Stage Training

Attention fusion and salient feature learning constitute the proposed network framework. To make full use of the feature learning ability of this network, we design an empirically motivated two-stage training strategy. First, the training set with coarse classification labels is used to train the network, which helps the sparse features of road damage to aggregate by similarity. Then, we take the obtained network parameters as the pre-trained model and fine-tune the proposed network with fine-grained classification labels. This strengthens the discrimination of features in detail.

For the CNRDD dataset, only the eight kinds of damage labels are utilized in coarse training, and degree classification is not considered in the process. Fine-grained fine-tuning regards the same type of damage with different degrees as different categories so as to increase the distance between classes. The RDD2020 dataset only provides four kinds of damage labels without degree annotations. In the first stage, therefore, binary classification training is conducted on the RDD2020 dataset, which only determines whether there is damage and detects the damage location. In the second stage, the original labels provided by the dataset are used for fine-tuning. This helps to strengthen the classification ability of the proposed network for road damage with similar appearance. The implementation details of training and fine-tuning are displayed in Table 3.
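The label handling in the two stages can be sketched as follows. The label strings, the `type_degree` naming scheme and the function names are hypothetical and chosen for illustration only. The text does not specify how the annotations are encoded.

```python
DEGREES = ("mild", "moderate", "severe")


def coarse_label(damage_type, degree=None):
    """Stage 1 on CNRDD: keep the damage type and ignore the degree."""
    return damage_type


def fine_label(damage_type, degree):
    """Stage 2 on CNRDD: each (type, degree) pair is its own class."""
    if degree not in DEGREES:
        raise ValueError(f"unknown degree: {degree}")
    return f"{damage_type}_{degree}"


def binary_label(damage_type):
    """Stage 1 on RDD2020: collapse every damage type into one class."""
    return "damage"


# Hypothetical annotations given as (damage type, degree) pairs.
annotations = [("crack", "mild"), ("crack", "severe"), ("pothole", "mild")]
stage1 = [coarse_label(t, d) for t, d in annotations]
stage2 = [fine_label(t, d) for t, d in annotations]
```

Here the two crack annotations share one class in the coarse stage and fall into separate classes in the fine stage, which is the increase in inter-class distance described above.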

Table 3.

Implementation details of training and fine-tuning.

As shown in Table 3, the double thresholds of the Canny edge detector are set to 30 and 170, respectively. The batch size for both training and fine-tuning is 25. Coarse training is performed over 420 epochs, and fine-tuning is performed over 100 epochs. Moreover, the parameters of the 2D Encoder in the Neck block are not initialized from the pre-trained model obtained in the first stage of training. In the two-stage training process from coarse to fine, the initial learning rate is reduced from 0.01 to 0.005.
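These settings can be collected in a small configuration sketch. The numeric values come from the paragraph above, with the reading that 0.01 applies to coarse training and 0.005 to fine-tuning. The `StageConfig` class, the `select_pretrained` helper and the parameter names in `stage1_state` are hypothetical, since the text does not give the actual checkpoint layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    epochs: int
    initial_lr: float
    batch_size: int = 25


CANNY_THRESHOLDS = (30, 170)  # low and high thresholds of the edge detector
COARSE = StageConfig(epochs=420, initial_lr=0.01)
FINE = StageConfig(epochs=100, initial_lr=0.005)


def select_pretrained(state, skip_prefixes):
    """Keep stage-1 parameters except those under the skipped prefixes."""
    return {
        name: value
        for name, value in state.items()
        if not any(name.startswith(prefix) for prefix in skip_prefixes)
    }


# Hypothetical parameter names; real checkpoints will use different keys.
stage1_state = {
    "backbone.conv1.weight": 1,
    "neck.encoder2d.weight": 2,
    "neck.other.weight": 3,
}
init_state = select_pretrained(stage1_state, skip_prefixes=("neck.encoder2d.",))
```

Filtering by name prefix is one simple way to leave the 2D Encoder of the Neck block at its fresh initialization while every other layer starts from the coarse-stage parameters.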

5. Experiment and Analysis

In this section, we conduct extensive experiments to evaluate the proposed road damage detection method on the popular RDD2020 dataset and the published CNRDD dataset. The evaluation metrics are the F1-Score, mAP@0.5 and FLOPs. Among them, the F1-Score and mAP@0.5 measure the accuracy of the algorithm, and FLOPs evaluate the complexity of the model. The specific experiments include: (1) Comparative analysis of multiple models on different datasets; (2) Ablation analysis of contribution components; and (3) Difficulty analysis of different types of damage. These experiments are conducted with the PyTorch framework on two P100 GPUs. The parameters are updated by stochastic gradient descent (SGD). For other implementation details, refer to Table 3. The best results in the experiments are highlighted in bold.
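For readers unfamiliar with the accuracy metric, the following minimal sketch computes an F1-Score for detections, assuming that a prediction counts as correct when its class label matches a ground-truth box and their intersection over union (IoU) is at least 0.5. The greedy one-to-one matching and the function names are assumptions of this sketch, and the official evaluation code may differ in detail.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of (label, box) pairs, then F1."""
    unmatched = list(ground_truth)
    true_pos = 0
    for label, box in predictions:
        for gt in unmatched:
            if gt[0] == label and iou(box, gt[1]) >= iou_threshold:
                unmatched.remove(gt)  # each ground-truth box is used once
                true_pos += 1
                break
    false_pos = len(predictions) - true_pos
    false_neg = len(unmatched)
    denom = 2 * true_pos + false_pos + false_neg
    return 2 * true_pos / denom if denom else 0.0


# Toy example: one correct crack, one spurious crack, one missed pothole.
gt_boxes = [("crack", (0, 0, 10, 10)), ("pothole", (20, 20, 30, 30))]
pred_boxes = [("crack", (1, 1, 10, 10)), ("crack", (50, 50, 60, 60))]
score = f1_score(pred_boxes, gt_boxes)
```

In the toy example there is one true positive, one false positive and one false negative, so precision and recall are both 0.5 and the F1-Score is 0.5.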

5.1. Comparative Analysis of Different Datasets

The RDD2020 dataset is the most important public dataset for current damage detection tasks. It provides two test sets without ground truth (GT) and a training set with four types of road damage labels (longitudinal cracks, transverse cracks, complex cracks and potholes). On this dataset and the self-collected CNRDD dataset, we carry out experimental analysis on multiple state-of-the-art algorithms [4,6,9,12,15,18,34,35,36,37,38,39,40,41,42]. First, we compare the F1-Scores of different models on the same dataset to evaluate the proposed method. Second, we investigate the detection difficulty of the CNRDD dataset by comparing it with the RDD2020 dataset. The experimental results are shown in Table 4 and Table 5.

Table 4.

Performance comparison on RDD2020 dataset.

Table 5.

Performance comparison on CNRDD dataset.

In Table 4, Test1 Score and Test2 Score represent the F1-Scores on the two test sets of RDD2020. All the comparative data come from the evaluation system of the Global Road Damage Detection Challenge (GRDDC) based on RDD2020 (https://rdd2020.sekilab.global/submissions/ (accessed on 10 December 2021)). The term ‘Ensemble’ indicates that the algorithm uses ensemble learning, and ‘Single’ indicates that the algorithm is a single network without ensemble learning. Our proposed method is classified as a single network. As shown in Table 4, our Test1 Score and Test2 Score are 0.614 and 0.6083, respectively. The proposed method achieves the best performance among the single methods. The accuracy of ensemble learning methods is generally higher than that of single methods. ISMC [34] explores Faster RCNN and YOLO v5 at the same time. SIS [35] adopts YOLO v4 at different resolutions. DD-VISION [36] integrates 18 models including Fast RCNN, ResNext, HR Net, CBNet, Resnet, etc. These ensemble learning methods improve the accuracy of road damage detection by integrating different features, but they also incur a large cost in training resources.

In Table 5, the experimental results of YOLO v5 [15], Faster R-CNN [12], FCOS [18] and Dongjuns [41] are obtained by retraining with their open-source code on the CNRDD dataset. The reported values are averages over 10 repeated experiments. Although the proposed method takes YOLO v5 as the baseline, it is improved by introducing an attention fusion module and salient feature learning with NAM. Comparing the experimental data in Table 5, it can be seen that the proposed method achieves a significant performance improvement on the CNRDD dataset. Its F1-Score reaches 34.73%. This demonstrates that the introduction of attention fusion and normalization can effectively enhance the learning of salient regional features so as to improve the discrimination of damage features.

After evaluating the effectiveness of the proposed method, we compare the performance of Dongjuns [41] and the proposed method across Table 4 and Table 5. We find that the F1-Score of the same model on the RDD2020 dataset is higher than on the CNRDD dataset. This is mainly because the number of classes and the damage density of the two datasets are different. The classification of the CNRDD dataset is more comprehensive, and damage of the same type is further labeled by degree. The damage density of the CNRDD dataset is higher than that of the RDD2020 dataset, which is closer to the actual situation in which road damage tends to cluster. Compared with the RDD2020 dataset, therefore, the CNRDD dataset is both more challenging and more practical for damage identification.

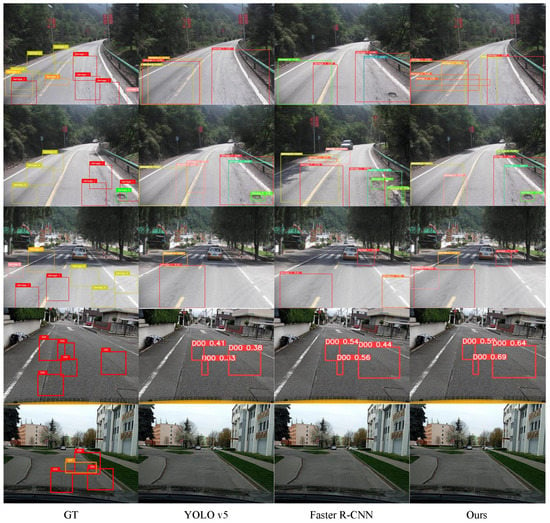

In addition to quantitative analysis, we also perform visualization analysis on different datasets. YOLO v5 [15], Faster R-CNN [12] and our proposed method are selected for comparison; the detection results are shown in Figure 7.

Figure 7.

Visual comparative analysis of RDD2020 and CNRDD datasets. The first two samples are from the RDD2020 dataset, and the last three images are from the CNRDD dataset.

In Figure 7, the first column is the raw image with the GT bounding box, and the second to fourth columns are the detection results of YOLO v5 [15], Faster R-CNN [12] and the proposed method, respectively. The colored boxes represent the detection results for different types of damage. The comparison shows that the proposed method has a better perception of damage location and a relatively lower missed detection rate. However, except for damage with obvious features, the existing methods (including the proposed method) still suffer from serious false detections and missed detections. This paper only provides one idea, namely exploiting attention learning for road damage detection. Further progress in ADRD will require the joint effort of many researchers.

5.2. Ablation Analysis of Contribution Components

In order to analyze the impact of attention fusion, salient feature learning and two-stage training on road damage detection, we also conduct ablation analysis on the CNRDD test set and a simulated RDD2020 test set. The simulated test set consists of 20% of the data randomly selected from the RDD2020 training set. YOLO v5 [15] is the baseline of the proposed method. The improved components are added to the baseline in turn, and the experimental results are shown in Table 6.
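A random 20% hold-out of this kind can be sketched as follows. The function name, the fixed seed and the use of integer image identifiers are assumptions made for illustration. The text does not state how the selection was seeded or whether the remaining 80% was the only data used for training.

```python
import random


def split_simulated_test(image_ids, test_fraction=0.2, seed=0):
    """Randomly hold out a fraction of the training images as a test set."""
    ids = sorted(image_ids)
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatability
    test_ids = set(rng.sample(ids, round(len(ids) * test_fraction)))
    train_ids = [i for i in ids if i not in test_ids]
    return train_ids, sorted(test_ids)


# Hypothetical image identifiers 0..99.
train_ids, test_ids = split_simulated_test(range(100))
```

Sorting the identifiers before sampling makes the split independent of the order in which the images happen to be listed.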

Table 6.

Evaluation of the effectiveness of each component. ‘AF’ refers to the attention fusion module, ‘SFLwN’ represents the salient feature learning module with NAM, ‘SFLoN’ is the salient feature learning module without NAM, and ‘FT’ means the fine-tuning strategy.

Compared with the baseline in Table 6, AF improves the F1-Score by 0.67% and 0.66% on the CNRDD and RDD2020 datasets, respectively. SFLwN increases the F1-Score by 1.72% and 0.54% on the CNRDD and RDD2020 datasets, respectively. This indicates that the two designed modules are able to improve the discrimination of damage features by enhancing the attention to salient areas. Interestingly, introducing SFLwN alone is not as effective as introducing AF alone on the simulated RDD2020 test set. However, combining the two components improves the F1-Score and mAP@0.5 significantly. In addition, the accuracy of the complete method is further improved through two-stage training. This demonstrates that coarse-to-fine fine-tuning helps to aggregate samples of the same damage type and to increase the distance between classes.

5.3. Difficulty Analysis of Different Types of Damage

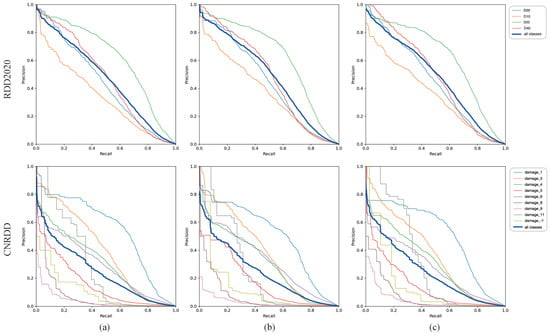

The RDD2020 dataset provides four kinds of damage labels, while our CNRDD dataset provides eight kinds of damage annotations. Some types of damage in these datasets have obvious similarities. For example, longitudinal cracks, transverse cracks and complex cracks all have obvious crack characteristics. This makes the detection of damage more difficult than the detection of ordinary objects. In addition, subsidence, rutting and looseness often look similar to healthy pavement under mild conditions. Even trained models have difficulty identifying them correctly. Against this background, we compare and analyze the detection results for different types of damage, hoping to find a targeted solution to promote the development of ADRD. The comparison results are shown in Figure 8 in the form of precision-recall curves (PRC). Since the RDD2020 test sets do not provide GT, the automatic evaluation system does not provide a separate evaluation for each type of damage. Therefore, the experiment is implemented on the RDD2020 training set and the CNRDD test set.

Figure 8.

Precision–Recall curves from different methods. The data of (a–c) are from YOLO v5 [15], Faster R-CNN [12] and the proposed method, respectively. Different colors represent the detection results of different types of damage, and the corresponding relationship is shown in the legend.

As shown in Figure 8, the experiments in the first row are performed on the RDD2020 training set, and the experiments in the second row are conducted on the CNRDD test set. By comparing the three subgraphs in the same row, we find that the different methods rank the types of damage in the same order. On the RDD2020 training set, regardless of the method, D20 damage (crack) has the best performance, and the area under the curve of D10 damage (longitudinal crack) is the smallest. The area under the PR curve for different types of damage on the CNRDD dataset shows a similar ranking. damage_1 (crack) performs best, and the results of damage_8 (pothole) and damage_9 (looseness) are poor. The main reason is that cracks have obvious features, occur frequently and have rich training samples. In contrast, the number of samples of looseness and potholes collected in the CNRDD dataset is less than 1000, which results in poor detection. This suggests that collecting and labeling diverse training samples is central to improving road damage detection.
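The area under a precision-recall curve, which is used above to rank the damage types, can be computed as in the following minimal sketch. It assumes that each detection has already been marked as a true or false positive and uses step-wise integration with the best precision at or beyond each recall level. The function names and the toy detections are hypothetical, and the plotting tool used for Figure 8 may integrate the curve differently.

```python
def pr_points(scored_hits, num_ground_truth):
    """Recall/precision after each detection, by descending confidence.

    scored_hits: list of (confidence, is_true_positive) pairs.
    """
    points, true_pos = [], 0
    ranked = sorted(scored_hits, key=lambda item: item[0], reverse=True)
    for rank, (_, hit) in enumerate(ranked, start=1):
        true_pos += 1 if hit else 0
        points.append((true_pos / num_ground_truth, true_pos / rank))
    return points  # (recall, precision) pairs


def area_under_pr(points):
    """Step-wise area using the best precision at or beyond each recall."""
    area, prev_recall = 0.0, 0.0
    for index, (recall, _) in enumerate(points):
        best_precision = max(p for _, p in points[index:])
        area += (recall - prev_recall) * best_precision
        prev_recall = recall
    return area


# Toy example: three detections for a class with two ground-truth boxes.
hits = [(0.9, True), (0.8, False), (0.7, True)]
curve = pr_points(hits, num_ground_truth=2)
area = area_under_pr(curve)
```

A class with few training samples typically yields low-confidence or missing true positives, which lowers recall and shrinks this area, consistent with the ranking discussed above.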

6. Conclusions and Discussion

Road damage detection is very important for road maintenance. At this stage, all practical road damage detection solutions depend on expensive equipment with multiple sensors. However, many underdeveloped areas cannot find pavement problems in time because they cannot afford high-priced equipment. This leads to missing the best repair time for road damage. With the success of deep learning in multiple vision tasks, road damage detection based on deep learning brings hope of moving away from the dependence on expensive equipment.

In order to introduce deep learning to ADRD, this paper first presents a road damage detection dataset from China, called CNRDD. It contains 4319 images with 21,552 professional annotations. The image size is 1600 × 1200 pixels. According to the evaluation standard for highway technical conditions (JTG5210-2018), road damage is divided into eight categories. In addition to strengthening, each category also contains three degree labels. Compared with existing datasets, the CNRDD dataset is more challenging and practical. In order to further promote the development of ADRD, this paper also proposes a road damage detection framework based on attention learning. It utilizes edge detection and attention fusion to provide the salient region for feature learning, and then introduces NAM to suppress the weights of non-salient features. Thus, the influence of sparse damage-pixel distribution and of similar appearance between types of damage on detection is alleviated. After two-stage training, the performance of the proposed method is further improved on the RDD2020 and CNRDD datasets. The proposed method sheds light on road damage detection based on attention learning. Nevertheless, according to the experimental analysis described in Section 5, road damage detection based on deep learning remains challenging, and solving it will require more researchers to work together. Therefore, this paper discusses future work from the following three aspects.

- (1) Standardized datasets. The quantity of existing road damage detection data greatly limits the development of ADRD, which is also the main reason for publishing the CNRDD dataset in this paper. However, the CNRDD dataset alone is not enough to change the state of research. Researchers may make further efforts to achieve breakthroughs in the quantity of annotated data. The standardization of road datasets can then be gradually established in terms of annotation specification and classification basis. This not only helps to improve the performance of road damage detection algorithms, but also provides support for unified access to future intelligent road maintenance systems.

- (2) Optimized similarity measurement. The large number of similar appearances is another main reason why road damage is difficult to detect correctly. To solve this problem, researchers should not only improve the discrimination of salient features through attention learning, but also optimize the similarity measurement of feature vectors. Techniques such as feature alignment and fine-grained classification can strengthen the recognition and localization of road damage so as to alleviate false detections and missed detections.

- (3) Conditional transfer learning. Although transfer learning is an effective strategy to improve the generalization ability of algorithms, it relies on the premise that the algorithm already performs well in a single data domain. For current road damage detection algorithms based on deep learning, the learned features cannot yet meet the actual needs in the specified domain. Under this condition, a cross-domain bridge established by transfer learning contributes little to the extraction of road damage features. Therefore, supervised road damage detection needs to be developed first. When the conditions are ripe, conditional transfer learning for different acquisition equipment (smartphone or 3D camera), climates and road segments is encouraged to promote the application of ADRD in practical scenarios.

Researchers may try to promote the development of road damage detection using the above-mentioned three aspects. Of course, we are also willing to continuously update the CNRDD dataset to provide some data support for ADRD.

Author Contributions

Conceptualization, H.Z., Z.W. (Zhaohui Wu) and N.J.; methodology, H.Z. and N.J.; validation, Y.Q.; formal analysis, Y.Q. and X.Z.; investigation, Z.W. (Zichen Wang), X.Z. and Y.Q.; resources, N.J. and Z.L.; writing—original draft preparation, N.J., Y.Q. and P.X.; writing—review and editing, N.J. and P.X.; visualization, Z.W. (Zichen Wang); supervision, Z.L.; project administration, H.Z., N.J. and Z.W. (Zhaohui Wu); funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

CNRDD dataset can be downloaded from https://transport.ckcest.cn/CatsCategory/asphaltRoadDiseases/1, accessed on 24 July 2022.

Acknowledgments

The research was supported by the Open Fund of the Key Laboratory of the Transportation Industry (2019KFJJ-001), Natural Science Foundation of Inner Mongolia Autonomous Region (2020MS05056), the Basal Research Fund of the Central Public Research Institute of China (20212701) and the general project numbered KM202110028009 of the Beijing Municipal Education Commission.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Yu, B.; Tang, M.; Zhu, G.; Wang, J.; Lu, H. Enhanced Bounding Box Estimation with Distribution Calibration for Visual Tracking. Sensors 2021, 21, 8100.

- Fang, K.; Ouyang, J.; Hu, B. Swin-HSTPS: Research on Target Detection Algorithms for Multi-Source High-Resolution Remote Sensing Images. Sensors 2021, 21, 8113.

- Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Mraz, A.; Kashiyama, T.; Sekimoto, Y. Transfer learning-based road damage detection for multiple countries. arXiv 2020, arXiv:2008.13101.

- Naddaf-Sh, S.; Naddaf-Sh, M.M.; Zargarzadeh, H.; Kashanipour, A.R. An Efficient and Scalable Deep Learning Approach for Road Damage Detection. arXiv 2020, arXiv:2011.09577.

- Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Omata, H.; Kashiyama, T.; Sekimoto, Y. Global Road Damage Detection: State-of-the-art Solutions. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5533–5539.

- Zhang, X.; Xia, X.; Li, N.; Lin, M.; Ding, N. Exploring the Tricks for Road Damage Detection with A One-Stage Detector. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5616–5621.

- Zhang, A.; Wang, K.C.P.; Li, B.; Yang, E.; Dai, X.; Peng, Y.; Fei, Y.; Liu, Y.; Li, J.Q.; Chen, C. Automated pixel-level pavement crack detection on 3D asphalt surfaces using a deep-learning network. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 805–819.

- Zhang, K.; Cheng, H.D.; Zhang, B. Unified Approach to Pavement Crack and Sealed Crack Detection Using Preclassification Based on Transfer Learning. J. Comput. Civ. Eng. 2018, 32, 04018001.

- Mandal, V.; Mussah, A.R.; Adu-Gyamfi, Y. Deep Learning Frameworks for Pavement Distress Classification: A Comparative Analysis. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5577–5583.

- Zhang, Y.; Wang, X.; Xie, X.; Li, Y. Salient Object Detection via Recursive Sparse Representation. Remote Sens. 2018, 10, 652.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2015, arXiv:1506.01497.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; CoImagro, A.; Ye, H.; Poznanski, J. yolov5: v4.0. 2021. Available online: https://zenodo.org/record/4418161#.YuILYepByUk (accessed on 5 January 2021).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9627–9636.

- Caroff, G.; Joubert, P.; Prudhomme, F.; Soussain, G. Classification of pavement distresses by image processing (MACADAM SYSTEM). In Proceedings of the 1st International Conference on Applications of Advanced Technologies in Transportation Engineering ASCE, San Diego, CA, USA, February 1989; pp. 46–51.

- Zalama, E.; Gómez-García-Bermejo, J.; Medina, R.; Llamas, J. Road crack detection using visual features extracted by Gabor filters. Comput. Aided Civ. Infrastruct. Eng. 2014, 29, 342–358.

- Ayenu-Prah, A.; Attoh-Okine, N. Evaluating pavement cracks with bidimensional empirical mode decomposition. EURASIP J. Adv. Signal Process. 2008, 2008, 1–7.

- Oliveira, H.; Correia, P.L. Automatic road crack segmentation using entropy and image dynamic thresholding. In Proceedings of the IEEE 17th European Signal Processing Conference, Glasgow, UK, 24–28 August 2009; pp. 622–626.

- Wang, K.C.; Hou, Z.; Gong, W. Automation techniques for digital highway data vehicle (DHDV). In Proceedings of the 7th International Conference on Managing Pavement Assets, Calgary, AB, Canada, 23–28 June 2008.

- Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Road crack detection using deep convolutional neural network. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3708–3712.

- Eisenbach, M.; Stricker, R.; Seichter, D.; Amende, K.; Debes, K.; Sesselmann, M.; Ebersbach, D.; Stoeckert, U.; Gross, H.-M. How to get pavement distress detection ready for deep learning? A systematic approach. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2039–2047.

- Majidifard, H.; Jin, P.; Adu-Gyamfi, Y.; Buttlar, W.G. Pavement image datasets: A new benchmark dataset to classify and densify pavement distresses. Transp. Res. Rec. 2020, 2674, 328–339.

- Maeda, H.; Kashiyama, T.; Sekimoto, Y.; Seto, T.; Omata, H. Generative adversarial network for road damage detection. Comput. Aided Civ. Infrastruct. Eng. 2021, 36, 47–60.

- Mathavan, S.; Kamal, K.; Rahman, M. A review of three dimensional imaging technologies for pavement distress detection and measurements. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2353–2362.

- Wang, Y.J.; Ding, M.; Kan, S.; Zhang, S.; Lu, C. Deep proposal and detection networks for road damage detection and classification. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5224–5227.

- Silva, W.R.L.D.; Lucena, D.S.D. Concrete cracks detection based on deep learning image classification. Proceedings 2018, 2, 489.

- Fei, Y.; Wang, K.C.P.; Zhang, A.; Chen, C.; Li, J.Q.; Liu, Y.; Yang, G.; Li, B. Pixel-level cracking detection on 3D asphalt pavement images through deep-learning-based CrackNet-V. IEEE Trans. Intell. Transp. Syst. 2019, 21, 273–284.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

- Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-based Attention Module. NeurIPS 2021 Workshop on ImageNet: Past, Present, and Future. 2021. Available online: https://openreview.net/forum?id=AaTK_ESdkjg (accessed on 28 September 2021).

- Hegde, V.; Trivedi, D.; Alfarrarjeh, A.; Deepak, A.; Kim, S.H.; Shahabi, C. Yet Another Deep Learning Approach for Road Damage Detection using Ensemble Learning. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5553–5558.

- Doshi, K.; Yilmaz, Y. Road Damage Detection using Deep Ensemble Learning. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5540–5544.

- Pei, Z.; Lin, R.; Zhang, X.; Shen, H.; Tang, J.; Yang, Y. CFM: A Consistency Filtering Mechanism for Road Damage Detection. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5584–5591.

- Hascoet, T.; Zhang, Y.; Persch, A.; Takashima, R.; Takiguchi, T.; Ariki, Y. FasterRCNN Monitoring of Road Damages: Competition and Deployment. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5545–5552.

- Vishwakarma, R.; Vennelakanti, R. CNN Model & Tuning for Global Road Damage Detection. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5609–5615.

- Pham, V.; Pham, C.; Dang, T. Road Damage Detection and Classification with Detectron2 and Faster R-CNN. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5592–5601.

- Kortmann, F.; Talits, K.; Fassmeyer, P.; Warnecke, A.; Meier, N.; Heger, J.; Drews, P.; Funk, B. Detecting Various Road Damage Types in Global Countries Utilizing Faster R-CNN. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5563–5571.

- Jeong, D. Road Damage Detection Using YOLO with Smartphone Images. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5559–5562.

- Liu, Y.; Zhang, X.; Zhang, B.; Chen, Z. Deep Network For Road Damage Detection. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 5572–5576.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).