Abstract

An important development direction for the future of the automotive industry is connected and cooperative vehicles. Some functionalities in traffic need the cars to communicate with each other. In platooning, multiple cars driving in succession reduce the distances between them to drive in the slipstream of each other to reduce drag, energy consumption, emissions, and the probability of traffic jams. The car in front controls the car behind remotely, so all cars in the platoon can accelerate and decelerate simultaneously. In this paper, a system for vehicle-to-vehicle communication is proposed using modulated taillights for transmission and an off-the-shelf camera with CMOS image sensor for reception. An Undersampled Differential Phase Shift On–Off Keying modulation method is used to transmit data. With a frame sampling rate of 30 FPS and two individually modulated taillights, a raw data transmission rate of up to 60 bits per second is possible. Of course, such a slow communication channel is not applicable for time-sensitive data transmission. However, the big benefit of this system is that the identity of the sender of the message can be verified, because it is visible in the captured camera image. Thus, this channel can be used to establish a secure and fast connection in another channel, e.g., via 5G or 802.11p, by sending a verification key or the fingerprint of a public key. The focus of this paper is to optimize the raw data transmission of the proposed system, to make it applicable in traffic and to reduce the bit error rate. An improved modulation mode with smoother phase shifts is used that can reduce the visible flickering when data is transmitted. By additionally adjusting the pulse width ratio of the modulation signal and by analyzing the impact of synchronization offsets between transmitter and receiver, major improvements of the bit error rate (BER) are possible. 
In previously published research, such a system without the mentioned adjustments was able to transmit data with a BER of 3.46%. Experiments showed that with those adjustments a BER of 0.48% can be achieved, which means 86% of the bit errors are prevented.

1. Introduction

The automotive industry is about to approach a new era. Advanced driver assistance systems (ADAS) and semi-autonomous driving are nothing special in the luxury class. However, in the future, cars will not just drive autonomously, but there will also be connected and cooperative cars. Pure autonomous driving means the car can drive on its own; its decisions are purely based on its own sensors. Connected and cooperative vehicles also communicate with each other and the infrastructure, which enables far more precision than merely reacting to sensors and is needed for some functionalities, e.g., platooning. Multiple vehicles on the road build a platoon by reducing the distances between them. Their individual driving behavior is replaced by a cooperative driving behavior of the platoon. Electronic coupling of the participating cars via a wireless communication channel allows simultaneous acceleration and deceleration. By driving in succession with small distances, the smaller amount of drag due to the slipstream leads to less energy consumption and emissions. The usage of the available road capacity is optimized, and traffic jams caused by traffic overload can be prevented.

Every car needs to be controlled remotely by its predecessor [2]. For this communication, a fast wireless communication channel with low latency is needed. This main communication channel could be realized using 5G or 802.11p. However, the communication channel also needs to be secure. It is crucial to know the true identity of the communication partner and that no third party can interfere with or manipulate the transmitted data. An optical communication channel using modulated taillights and a camera as receiver, as proposed in [1], could be used as an out-of-band channel. Data transmitted using this out-of-band channel can be used to verify the identity of the communication partner, because the actual transmitter of the message is visible in the camera image. For example, a public key fingerprint of the car in front is transmitted to the car behind and a matching public key is used for the asymmetric encryption on the main channel. This way the fast, low-latency main channel can be combined with the identity verification of the slower optical channel.
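The fingerprint check described above can be illustrated with a minimal sketch. It assumes SHA-256 as the fingerprint function and a byte string as the encoded public key; neither choice is specified in this paper, and the function names are hypothetical. The transmission time uses the raw rate of 60 bits per second stated in the abstract and ignores any channel coding overhead.

```python
import hashlib
import hmac

RAW_BITS_PER_SECOND = 60  # two taillights at 30 bit/s each (raw rate from the abstract)

def fingerprint(public_key_bytes: bytes) -> bytes:
    """Fingerprint of an encoded public key (SHA-256 is an assumption)."""
    return hashlib.sha256(public_key_bytes).digest()

def key_matches_fingerprint(public_key_bytes: bytes, optical_fingerprint: bytes) -> bool:
    """Compare the key received on the main channel with the optical fingerprint."""
    return hmac.compare_digest(fingerprint(public_key_bytes), optical_fingerprint)

def raw_transmission_seconds(payload: bytes) -> float:
    """Time needed to send the payload once over the optical channel."""
    return len(payload) * 8 / RAW_BITS_PER_SECOND

# Placeholder key material, for illustration only.
example_key = b"placeholder public key bytes"
example_fingerprint = fingerprint(example_key)
```

Under these assumptions, a 256-bit fingerprint needs a little more than four seconds of raw transmission time, which is acceptable for a one-time verification step but not for time-critical commands.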

This paper is based on the proposed visible light vehicle-to-vehicle communication system using modulated taillights by Plattner et al. [1]. The focus lies on the improvement of the raw data transmission of this optical communication channel between two vehicles. The goal is to optimize the settings of the modulation of the taillights for transmission and the settings on the receiving camera to get the best possible bit error rate (BER). As a basis for the optical communication channel, the Undersampled Differential Phase Shift On–Off Keying (UDPSOOK) modulation method by Liu et al. [3] is used. However, some aspects of this modulation method are not applicable for vehicular visible light communication (V2LC) in traffic or can be optimized for a better BER. These drawbacks of the modulation method and solutions to them are thoroughly addressed in this paper.

This paper is organized as follows. Section 2 discusses related literature and projects and how they differ from this work. Section 3 explains the basics of this project, its goals, and potential problems together with their solutions. In Section 4, the experimental setup is documented and the approaches are evaluated. Section 5 concludes this paper and gives an outlook on future research.

2. Related Work

There is plenty of literature available in the context of visible light communication (VLC), optical wireless communication (OWC), and optical camera communication (OCC) in various application areas, also in vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication. The application of VLC for V2V or V2I communication is often called vehicular VLC (V2LC).

Generally, VLC refers to optical wireless communication that uses modulated light in a wavelength spectrum that is perceivable by the human eye, i.e., between 400 nm and 700 nm, and hence is usually used for illumination [4]. The transmitted information is then modulated onto the illuminating light. Usually, a precondition for VLC is that the modulation is not perceived by humans in the form of noticeable flickering or similar effects. Here it is important that the modulation frequency does not fall below the critical flicker frequency threshold (CFF). The CFF is the frequency above which an intermittent light stimulus appears completely steady to a human observer [5]. The concrete value for the CFF depends on various factors, e.g., the age of the human observer. On average, frequencies above 35 Hz to 50 Hz are not perceived as noticeable flickering [6].

V2LC was implemented in multiple variations in related projects. Premachandra et al. [7] and Iwasaki et al. [8] focused on road-to-vehicle communication using high-speed cameras. V2V communication using LEDs and cameras is covered by multiple works, too. Some use custom LED transmitters such as LED matrices or LED screens encoding multiple bits at once, e.g., Boubezari et al. [9]. Others use special receivers such as high-speed cameras with 1000 FPS or novel image sensors developed for OCC, e.g., Takai et al. [10] and Cuailean et al. [11]. Some works even use both mentioned aspects, e.g., Yamazato et al. [12] and Goto et al. [13].

In contrast to the mentioned literature, the proposed system uses single LEDs, i.e., LED taillights of cars, to transmit data and uses a camera with 30 FPS such as smartphone cameras or webcams by exploiting the rolling-shutter effect of CMOS cameras. There is also literature about VLC using CMOS cameras by using the rolling-shutter effect, e.g., Do et al. [14], Liang et al. [15], Danakis et al. [16], and Chow et al. [17]; however, those works rely on the modulated light to cover major parts of the image. Thus, the distance between transmitting LEDs and receiving camera is limited to less than one meter. Of course, for V2V communication much larger distances need to be covered. The proposed system strives for common distances in traffic, i.e., between 30 m and 80 m.

A conceptual idea of this project was published in [1], where the Undersampled Differential Phase Shift On–Off Keying (UDPSOOK) modulation method proposed by Liu et al. [3] was used to encode information onto LEDs representing the taillights of a car model. This paper proposes major improvements to the raw data transmission for this visible light vehicle-to-vehicle communication system using a new modulation mode and advanced adjustments of the modulation settings. Additionally, this paper evaluates the impact of timing offsets to the loose synchronization.

3. Approach

In the context of automated driving, vehicle-to-vehicle (V2V) communication will be necessary for many areas. Platooning is an application for automated driving on highways. Multiple vehicles are driving in succession. By decreasing the distance between them, the cars are driving in the slipstream of the car in front of them. Hereby, the drag can be reduced to 55% on average which leads to less fuel and energy consumption and fewer emissions, according to Zabat et al. [18]. In such a platoon, the car ahead needs to control the car behind remotely [2] to enable the cars to accelerate and decelerate simultaneously. This wireless communication needs to be fast and secure, as there must be as little latency as possible for time-critical commands and the car behind must trust the commands it gets from the car in front. This main communication could be realized using 802.11p or 5G.

This paper sets its focus on establishing a secure connection between two vehicles that are driving in succession on the highway before they decrease the distance between them to build a platoon. It proposes an out-of-band communication channel using the LED taillights of a car to transmit data via VLC and a camera on the succeeding car to receive it. The conceptual idea for this out-of-band channel was published in [1]. To encode the data, a modulation method proposed by Liu et al. [3] called Undersampled Differential Phase Shift On–Off Keying (UDPSOOK) is used. Here the transmitting LED is modulated with a frequency that is a multiple of the frame sampling rate of the receiving camera. The modulation frequency must be higher than the CFF of the human eye to be not perceivable by a human observer; however, a camera with a very short exposure time is still able to capture the modulated light source to decode the transmitted data. This is possible by using the rolling-shutter effect of CMOS cameras.

3.1. Rolling-Shutter Effect

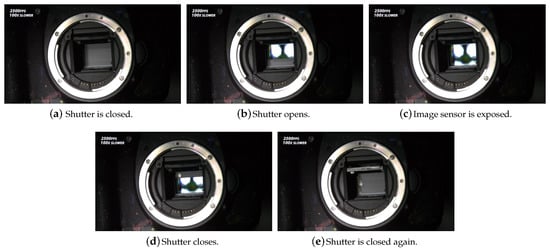

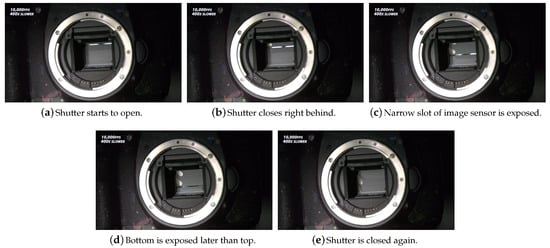

Most cameras, e.g., smartphones and webcams as well as most DSLR (Digital Single-lens Reflex) cameras, are using a rolling shutter. This means the picture is not captured as a whole, but line by line from top to bottom. When taking a photo with a DSLR camera, the shutter opens from top to bottom, then the image sensor is exposed to light, then the shutter closes again from top to bottom. This procedure for a rather slow shutter speed or long exposure time, respectively, of 1/30 of a second is depicted in the images in Figure 1 recorded with a high-speed camera. Figure 2 shows the same recorded operation with the exposure time set to 1/8000 of a second. One can see that immediately after the shutter starts to open, it already starts closing. Only a narrow gap is opened to pass through light to the image sensor and this gap moves from the top of the image to the bottom. This also means that the top of the image is captured earlier than the bottom part of the image.

Figure 1.

Rolling shutter at 1/30 s exposure time [19].

Figure 2.

Rolling shutter at 1/8000 s exposure time [19].

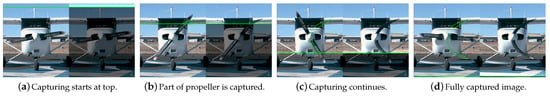

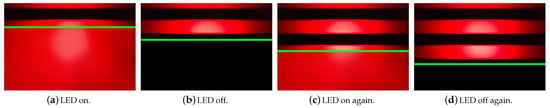

If the scene changes while the image is captured, the rolling-shutter effect becomes visible in the image. As shown in Figure 3, the propeller of the plane moved while the image was captured and appears skewed in the image; however, when taking the image with a camera using a global shutter, no distortion happens. The same effect appears when taking an image of a fast-flickering light source. The light turns on and off while the image is captured, and a stripe pattern is visible in the image. The number and the width of those stripes depend on the frequency of the flickering of the light source, as shown in Figure 4.

Figure 3.

Plane with moving propeller captured by high-speed camera (left) and rolling-shutter camera (right) [20].

Figure 4.

Stripe pattern occurs when capturing a flickering LED with a rolling-shutter camera.

As Plattner et al. [1] showed, the stripe pattern in the resulting image is only visible in those parts of the image that are occupied by the modulated light source. Hence, if the modulated light source only takes up a small part of the image, only a small part of the stripe pattern can be captured by the camera. As shown in Figure 5, if the taillights of a car are modulated and the camera is far away from the car, only one stripe or state, respectively, is visible in the image. However, the captured state of the taillight depends on the position of the taillight within the frame, which is also visible in Figure 5. In the top image sequence, the car’s vertical position is slightly lower and hence, in the rightmost image, captured from a large distance, a bright part of the stripe pattern is shown in the area of the taillights. In the bottom image sequence, the vertical position is slightly higher and hence, a different part of the stripe pattern is visible, which is dark. Thus, from one single image, just the current states of the taillights in the image can be detected; however, the receiver is not able to get any information regarding encoded data from it.

Figure 5.

Car with modulated taillights at various distances from close-up to farther away [1].

3.2. Modulation

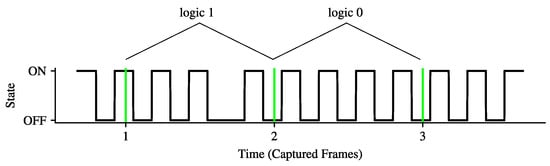

If the phase of the modulation signal is changed by 180° between two consecutive frames of a captured video, i.e., the signal and the resulting stripe pattern are inverted, the receiving camera can detect that the state has changed since the last recorded frame. This state change always appears, independent of the position of the transmitting light source in the frames, given that the modulated light source did not move a significant amount between the two frames. The UDPSOOK modulation method by Liu et al. [3] uses this characteristic to encode information with those phase shifts between two consecutive frames. If there was no phase shift, which means the state of the modulated light source is the same in both frames, a logic 0 was transmitted; if the state changed because of a phase shift between the frames, a logic 1 was transmitted.
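The differential encoding described above can be sketched as follows. This is an illustrative model, not the transmitter implementation of this paper: the function names are hypothetical, and the LED is reduced to one boolean per camera frame (inverted or not inverted). The sketch shows that the sequence of state changes is the same whether the taillight happens to fall on a bright or on a dark stripe.

```python
def encode_inversions(bits, start_inverted=False):
    """Per-frame inversion flag of the modulation signal; a 1 toggles the phase."""
    inverted = start_inverted
    frames = [inverted]  # reference frame captured before the first bit
    for bit in bits:
        if bit == 1:
            inverted = not inverted
        frames.append(inverted)
    return frames

def led_states_at_row(frames, bright_when_not_inverted):
    """LED state (True = ON) seen at a fixed image row in each frame."""
    return [bright_when_not_inverted != inverted for inverted in frames]

def state_changes(states):
    """1 where the state differs from the previous frame, else 0."""
    return [int(cur != prev) for prev, cur in zip(states, states[1:])]

bits = [1, 0, 1, 1, 0]
frames = encode_inversions(bits)
bright_row = led_states_at_row(frames, True)   # taillight on a bright stripe
dark_row = led_states_at_row(frames, False)    # taillight on a dark stripe
```

Both `state_changes(bright_row)` and `state_changes(dark_row)` reproduce `bits`, although the absolute states in the two rows are opposite.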

3.3. Phase Shifts Between Frames

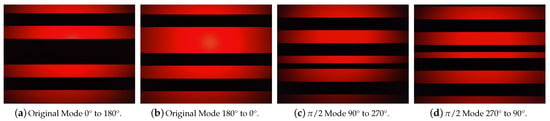

Figure 6 shows what the modulation signal looks like and how the camera samples it. For applying a phase shift to the signal, the proposed modulation signal uses two consecutive ON or two consecutive OFF states, respectively. This signal is inverted by omitting one of its edges. However, with a modulation frequency of 120 Hz, experiments showed that flickering of the modulated light source can be perceived by a human observer. As imperceptible modulation is one of the preconditions for the proposed system, the modulation method needs to be adjusted. Without information encoded into the signal, no flickering could be observed. A closer look showed that the perceived flickering is not caused by the modulation frequency itself, but by the applied phase shifts. The short increases of pulse width at the phase transitions result in a change of the average brightness of the light source, which is perceived as flickering. To counteract this phenomenon, the phase of the base signal is shifted by 90° or π/2, respectively. Instead of switching between 0° and 180° to invert the signal, the system now switches between 90° and 270°. With this simple change of the modulation method, the pulse width at the transition of the phase is now halved instead of doubled. The average brightness is not changed when a phase shift is applied and hence, no flickering can be perceived by a human observer. An example for such a phase shift is shown in Figure 7.

Figure 6.

Original UDPSOOK modulation signal, based on [3].

Figure 7.

UDPSOOK in π/2 modulation mode.
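The difference between the two ways of inverting the signal can be checked with a small simulation. The sketch below samples an ideal 50% duty square wave (8 samples per period, an arbitrary choice), concatenates one bit before and one bit after a phase shift, and reports the shortest and longest pulse around the transition in units of one period. It is a simplified model under these assumptions, not the signal generator used in the experiments.

```python
def square_wave(phase_deg, periods, samples_per_period=8):
    """Sample a 50 % duty square wave; 1 = LED ON, 0 = LED OFF."""
    shift = phase_deg * samples_per_period // 360
    half = samples_per_period // 2
    return [1 if (n + shift) % samples_per_period < half else 0
            for n in range(periods * samples_per_period)]

def run_lengths(samples):
    """Lengths of consecutive runs of identical samples."""
    runs, count = [], 1
    for prev, cur in zip(samples, samples[1:]):
        if cur == prev:
            count += 1
        else:
            runs.append(count)
            count = 1
    runs.append(count)
    return runs

def transition_pulse_widths(phase_before, phase_after,
                            periods_per_bit=4, samples_per_period=8):
    """(shortest, longest) pulse in periods, ignoring the truncated edge runs."""
    signal = (square_wave(phase_before, periods_per_bit, samples_per_period)
              + square_wave(phase_after, periods_per_bit, samples_per_period))
    interior = run_lengths(signal)[1:-1]
    return (min(interior) / samples_per_period,
            max(interior) / samples_per_period)

standard = transition_pulse_widths(0, 180)    # doubled pulse at the transition
quarter = transition_pulse_widths(90, 270)    # halved pulses at the transition
```

A regular pulse lasts half a period. In this model, the 0°/180° inversion produces one pulse of a full period, while the 90°/270° inversion produces pulses of a quarter period and never exceeds the regular pulse width.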

3.3.1. Phase Slipping

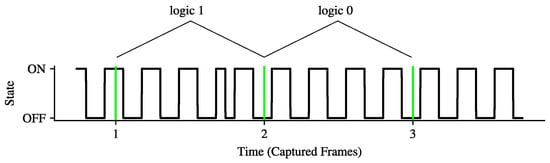

As the modulation of the light source and the sampling rate of the camera are not strictly synchronized, this modulation method is prone to errors caused by small synchronization offsets that result in phase slipping. Phase slipping can be perceived as slow and steady movement of the stripe pattern in the image between frames, even if no phase shifts were applied. This movement of the stripe pattern results in undesired state changes of the modulated LEDs in the captured frames, and hence errors are introduced. Liu et al. [3] target this problem by proposing the usage of a second modulated light source for error detection. One LED sends the data, while the second LED is only modulated with the modulation frequency without any phase shifts. In theory, this method can detect errors caused by phase slipping and correct them, as depicted in Figure 8. They call this expansion UDPSOOK with error detection (UDPSOOKED). This error correction method works best if the receiver knows exactly which pixel of the image it needs to observe to detect the current state of the modulated LED, and the position of this pixel does not change. However, in this paper’s application, first the modulated light source in the image, i.e., the taillight of a car, is detected and then the state of this taillight is recognized. This means a bigger region of interest (ROI) is observed to detect the state. The experiments for this paper’s application showed that this method is not able to improve the bit error rate (BER) significantly, as errors that could be detected this way rarely happen. For this case, it would be more efficient to use the second modulated LED to double the raw throughput of the communication and use an adequate channel coding to add redundancy and correct such errors.

Figure 8.

UDPSOOK with Error Detection, based on [3].
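To give a feeling for the magnitude of phase slipping, the following sketch estimates how many frames pass until drift alone has moved the stripe pattern by half a period, which inverts the state seen at a fixed image row. It assumes constant modulation and frame rates; the function name and the example offset of 0.01 Hz are hypothetical and not measured values from this paper.

```python
def frames_until_state_flip(modulation_hz, frame_rate_hz):
    """Frames until drift alone shifts the stripe pattern by half a period."""
    periods_per_frame = modulation_hz / frame_rate_hz
    # Deviation from the nearest integer number of periods per frame.
    slip_per_frame = abs(periods_per_frame - round(periods_per_frame))
    if slip_per_frame == 0:
        return float("inf")  # perfectly matched rates, no slipping
    return 0.5 / slip_per_frame

# Illustrative offset: modulation runs at 120.01 Hz instead of 120 Hz.
frames = frames_until_state_flip(120.01, 30)
seconds = frames / 30
```

With this example offset, a spurious state change occurs roughly every 1500 frames, i.e., every 50 s at 30 FPS. Larger offsets shorten this interval proportionally.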

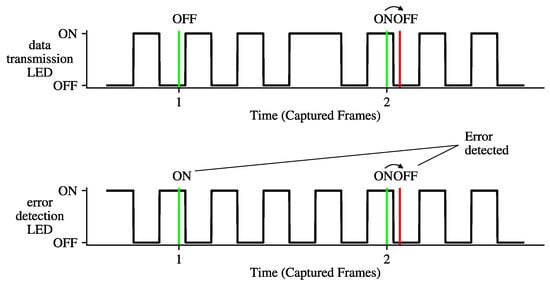

According to Plattner et al. [1], there is also another, more significant error cause in this application area. Due to phase slipping and bigger ROIs, ambiguous LED states, as depicted in Figure 9, lead to errors. Such an ambiguous state appears if the modulated light source is captured in the exact moment when the LED transitions from ON to OFF or vice versa. In this case, the vertical position of the LED matches the position of a transition of the stripe pattern. In such a case, it is very hard to detect the current state of the taillight and to compare it with the previous one. If the state is not recognized correctly, an error occurs. Additionally, such ambiguous taillight states usually do not affect only a single frame. Often such a transition of the stripe pattern is slowly moving through the area covered by the modulated taillight and hence multiple consecutive frames are affected, which leads to an error burst. Such errors cannot be completely avoided with this modulation method, as there is always a chance of getting ambiguous light source states. Plattner et al. [1] suggest minimizing the probability of such a situation by setting the modulation frequency as low as possible to have wide stripes and only a few such transitions that cause ambiguous states of the taillights. However, this paper proposes a much more efficient way of dealing with this issue, as the error is not just caused by the transition itself, and hence by the number of transitions. The main issue is that those transitions are affected by blooming and exposure effects.

Figure 9.

Captured image with ambiguous states of the taillights [1].

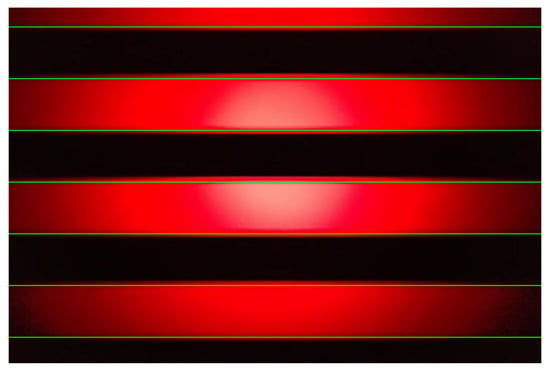

3.3.2. Blooming

Blooming is a phenomenon in which excess charge from a pixel spills over into adjacent pixels, causing blurring and related image artifacts. Blooming may cause the neighboring pixels to look brighter than an accurate representation of the light absorbed by the photo diode in that pixel [21]. In case of UDPSOOK modulation, blooming causes bright stripes of the resulting stripe pattern to be wider than dark stripes, because the charge from a bright stripe spills over to the adjacent pixels of the dark stripe. Figure 10 shows this effect in an example image, where one can see that the blooming effect is strong in the center of the image where the bright center of the LED was captured and is weaker on the left and right side due to the slightly shifted angle of the emitted light. The green lines indicate the edges of the rectangular signal used for the modulation. Due to the blooming effect the bright stripes are wider than the dark stripes when the pulse width of the modulation signal is 50%. The parts of the image affected by blooming cannot represent the encoded data correctly, because in these parts of the image, the modulated LED always appears to be ON, even if the signal is inverted by a phase shift. This paper proposes to adjust the pulse width ratio of the modulation signal to counteract the blooming effect. If the pulse width ratio is intentionally below 50%, the blooming effect is neutralized and the BER should be improved.

Figure 10.

Blooming affecting width of resulting stripes.

A similar effect occurs if the exposure time of the recording camera is set slightly longer, e.g., 1/1000 s instead of 1/8000 s. It makes sense to make the exposure time longer, as it is difficult to detect a car and its modulated taillights in a dark image. However, the exposure time needs to be as short as possible to enable the desired use of the rolling-shutter effect. If the exposure time is slightly longer, the image sensor of the camera is exposed longer to the light of the modulated LED and the transitions from ON to OFF and vice versa are slightly washed out. This means the bright stripes of the stripe pattern are wider than with an extremely short exposure time. This can again be neutralized by having a lower pulse width ratio to optimize the BER.
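The influence of the exposure time can be estimated with an idealized model: each image row integrates light over one exposure window, and a row is washed out if an ON/OFF edge falls inside that window. The sketch below assumes instantaneous LED switching and ignores blooming; the function name is hypothetical.

```python
def washed_out_fraction(exposure_s, modulation_hz):
    """Share of each stripe whose rows integrate both ON and OFF light."""
    half_period_s = 1.0 / (2.0 * modulation_hz)  # duration of one stripe
    return min(1.0, exposure_s / half_period_s)

short_exposure = washed_out_fraction(1 / 8000, 120)  # about 3 % of a stripe
long_exposure = washed_out_fraction(1 / 1000, 120)   # about 24 % of a stripe
```

In this model, going from 1/8000 s to 1/1000 s at 120 Hz increases the washed-out share of each stripe from about 3% to about 24%, which illustrates why a reduced pulse width ratio becomes more important for longer exposure times.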

3.4. Demodulation

The demodulation process of this system is based on the visible light vehicle-to-vehicle communication system using modulated taillights by Plattner et al. [1]. The proposed system is intended to be used for communication between two vehicles driving in succession on the highway. A public key fingerprint is transmitted from the first car by modulating its taillights to the second car, which is receiving the signal using a camera. As the system should be used to establish a secure connection between two vehicles, this communication needs to happen before those two cars decrease the distance between them to build a platoon. Thus, the communication distance is the usual safety distance between two cars on the highway, i.e., between 30 m and 80 m, depending on the current speed.

For receiving the signal, the proposed system uses a camera with a common CMOS image sensor recording 30 frames per second (FPS). The shutter speed, or exposure time, respectively, is set manually to a very short value between 1/1000 of a second and 1/8000 of a second, to enable the use of the rolling-shutter effect. Every single frame captured by the camera is needed to detect the taillights of the car in front and to recognize their current states to demodulate the signal correctly.

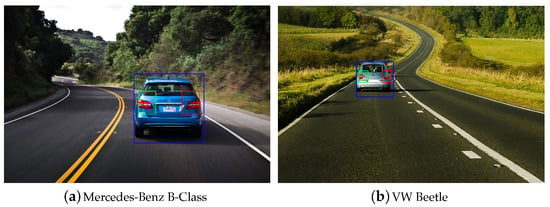

3.4.1. Vehicle and Taillight Detection

The first step of the demodulation process at the receiving vehicle is to detect the modulated light source. This is done by detecting the vehicle itself and inferring the two ROIs where the modulated taillights are expected. For detecting the bounding box of the vehicle in front, the MobileNets framework published by Howard et al. [22] is used. In the current version of this proposed system, the vehicle and its taillights are detected in a single frame. Thus, in this implementation, the Single-Shot Multibox Detector (SSD) version of the MobileNets framework is used. Such an SSD discretizes the output space of bounding boxes into a set of default boxes over different aspect ratios and scales per feature map location [23]. This makes the framework faster and more precise compared to other frameworks such as Faster R-CNN [24] or YOLO [25].

As the taillights used for modulation are usually in the same region of a car’s rear, the ROI for the taillights can be detected with a static calculation using the formulae from Plattner et al. [1], where the positions of the car C and of the left and right taillights are each defined by l, r, t and b representing the left, right, top, and bottom border of the respective bounding box.

Two examples for real-life scenarios with this detection process are shown in Figure 11. The blue rectangle marks the bounding box of the car, the ROIs for the left and right taillight are highlighted green and red, respectively. Of course, this static ROI calculation relies on an accurate detection of the car’s rear and is only applicable if the car is captured by the camera straight from behind. As this is usually the case for the application in platooning when cars are driving in succession on the highway, such a detection of the taillights is sufficient. However, for future research in this area, a more sophisticated approach will be applied, especially if cars with atypical taillight positions are involved in the communication.

Figure 11.

Taillight detection on example images of cars on a road [1].
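A static ROI calculation of this kind can be sketched as below. The proportions are passed in as parameters because the actual formulae of Plattner et al. [1] are not reproduced here; the example values are purely hypothetical, as are the type and function names. Image coordinates are assumed to grow from left to right and from top to bottom.

```python
from typing import NamedTuple

class Box(NamedTuple):
    l: float  # left border
    r: float  # right border
    t: float  # top border
    b: float  # bottom border

def taillight_rois(car: Box, width_frac: float, top_frac: float, bottom_frac: float):
    """Left and right taillight ROIs as fixed fractions of the car bounding box."""
    width = car.r - car.l
    height = car.b - car.t
    top = car.t + top_frac * height
    bottom = car.t + bottom_frac * height
    left_roi = Box(car.l, car.l + width_frac * width, top, bottom)
    right_roi = Box(car.r - width_frac * width, car.r, top, bottom)
    return left_roi, right_roi

# Hypothetical proportions, chosen only to illustrate the calculation.
car_box = Box(l=100, r=300, t=50, b=200)
left_roi, right_roi = taillight_rois(car_box, width_frac=0.25,
                                     top_frac=0.25, bottom_frac=0.5)
```

Because the ROIs are derived only from the bounding box, any error of the vehicle detector propagates directly into the taillight ROIs, which matches the limitation discussed above.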

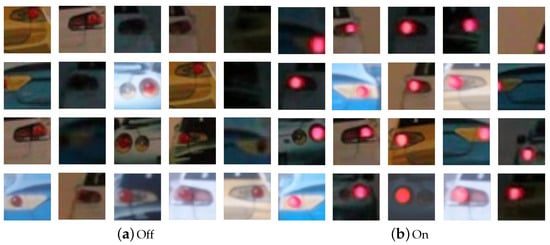

3.4.2. Taillight State Recognition

The ROIs for the left and the right taillights are cropped from every frame and analyzed using a simple convolutional neural network. The first step is to reshape the input image to a size of 28 × 28 pixels with three channels. Additionally, the input pixel values are normalized from the range of 0 to 255 to the range −1 to +1. This helps to train a neural network, as convergence is usually faster if the average of each input variable over the training set is close to zero [26]. The used network consists of three convolutional layers and two dense layers. A summary of the neural network layers is shown in Table 1. The output of the network shows if the taillight in the input image is ON or OFF. For the training of this neural network, a training set with more than 10,000 labeled sample images of the prototype using different lighting settings, car models and cameras was used. An excerpt of those sample images is shown in Figure 12. During the training process, a dropout of 50% was used to prevent overfitting. After 6 epochs of training, the network reached an accuracy of more than 98% in cross-validation. The final network scored an accuracy of 99.4% on the validation test set.

Table 1.

Summary of neural network layers for taillight state recognition.

Figure 12.

Excerpt of images used as training data.
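The input normalization mentioned above maps 8-bit pixel values linearly from the range 0 to 255 to the range −1 to +1. A minimal sketch in plain Python, assuming the image is given as nested lists of rows, pixels, and channels (the function names are hypothetical; a real pipeline would typically use array operations):

```python
def normalize_pixel(value):
    """Map an 8-bit value from [0, 255] to [-1.0, +1.0]."""
    return value / 127.5 - 1.0

def normalize_image(image):
    """Normalize an image given as rows of pixels of channel values."""
    return [[[normalize_pixel(channel) for channel in pixel]
             for pixel in row]
            for row in image]

# A uniformly mid-gray 28 x 28 RGB input ends up close to zero.
gray = [[[128, 128, 128] for _ in range(28)] for _ in range(28)]
normalized = normalize_image(gray)
```

The mapping keeps black at −1 and white at +1, so a training set with balanced bright and dark samples has an input mean close to zero.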

The proposed design of the taillight state recognition network is optimized for the usage with the prototype implementation. In future work, when real cars are used for the communication, this design might be changed, if it is not capable of reliable recognition of the taillight states. However, with this prototype implementation, the performance of the network is sufficient for the use case.

For the demodulation of the signal, the taillight state is recognized in every recorded frame for both modulated taillights using the taillight ROIs. The recognized state is compared with the previously recognized taillight state. If the state is the same as before, the receiver can infer that no phase shift between the two frames happened and hence, a logic 0 is received. Otherwise, if the taillight state changed, due to an applied phase shift, i.e., the modulation signal was inverted, a logic 1 was transmitted. These single transmitted bits are collected into a received bit stream to interpret the transmitted message.
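The comparison of consecutive taillight states can be sketched as follows. The recognized states are modeled as booleans (True = ON), one per frame. How the bits of the two taillights are merged into one stream is not specified in the text above; the alternating order used here (left, then right) is an assumption, and the function names are hypothetical.

```python
def bits_from_states(states):
    """Differential decoding: a changed state is a 1, an unchanged state a 0."""
    return [1 if cur != prev else 0 for prev, cur in zip(states, states[1:])]

def decode_two_taillights(left_states, right_states):
    """Merge the bits of both taillights frame by frame (left first: assumption)."""
    stream = []
    for left_bit, right_bit in zip(bits_from_states(left_states),
                                   bits_from_states(right_states)):
        stream.extend((left_bit, right_bit))
    return stream

left = [True, False, False, True]    # recognized states of the left taillight
right = [False, False, True, True]   # recognized states of the right taillight
received = decode_two_taillights(left, right)
```

Four frames yield three bits per taillight, so `received` contains six bits. A single misrecognized state affects two neighboring bits of that taillight, because each state takes part in two comparisons.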

4. Evaluation

4.1. Experimental Setup

For evaluating the proposed system, a prototype in the scale of 1:24 was built. The transmitting car is represented by a printed cardboard vehicle with LEDs used as taillights. From the rear, this cardboard model needs to look like a real car to be detected by the MobileNets framework [22]. The LEDs representing the taillights of the car model are modulated using an Arduino UNO development board. The LEDs are connected to two of the GPIO (General-Purpose Input Output) ports of the Arduino UNO. The program running on it takes a message, converts it into a bit stream, and sets the states of the GPIO ports according to the modulation method and the defined settings.

The system is designed to transmit a code word with a fixed length in an endless loop. The basic settings for the transmission are:

- Bit rate: The bit rate defines how many bits are transmitted per second and per modulated light source. For the used modulation method, the bit rate must be equal to the frame rate of the camera used for receiving the signal. In the experiment, a camera with 30 FPS was used, therefore 30 bits per second per modulated LED could be transmitted.

- Periods per bit: This value defines how many periods of the modulation signal are used to send one bit. This value must be an integer and, together with the bit rate, defines the modulation frequency. In the experiment, a modulation frequency of 120 Hz was used, which means this value was set to 4 periods per bit to send 30 bits per second and per modulated LED.

- Modulation mode: With this settings flag, it can be selected how phase shifts are applied if a logic 1 needs to be transmitted to encode the message. With the standard mode, the signal is inverted by toggling the phase between 0° and 180°, or 0 and π, respectively. In the π/2 mode, the phase is shifted between 90° and 270°, or π/2 and 3π/2, respectively. This mode allows inversion of the signal with smaller changes of the average brightness of the light source, and hence, the modulation cannot be perceived by a human observer. Figure 13 shows how the application of a phase shift to transmit a logic 1 looks in a close-up image of the modulated light source for both modulation modes.
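The relation between these basic settings can be written down in a few lines. The sketch below only restates the arithmetic from the list above; the function names are hypothetical.

```python
def modulation_frequency_hz(bit_rate, periods_per_bit):
    """Modulation frequency resulting from bit rate and periods per bit."""
    if periods_per_bit < 1 or periods_per_bit != int(periods_per_bit):
        raise ValueError("periods per bit must be a positive integer")
    return bit_rate * periods_per_bit

def raw_throughput_bps(bit_rate, modulated_leds):
    """Raw data rate when every LED carries its own bit stream."""
    return bit_rate * modulated_leds

frequency = modulation_frequency_hz(bit_rate=30, periods_per_bit=4)  # 120 Hz
throughput = raw_throughput_bps(bit_rate=30, modulated_leds=2)       # 60 bit/s
```

With 30 FPS and 4 periods per bit, the modulation frequency of 120 Hz lies above the range of 35 Hz to 50 Hz in which flickering becomes imperceptible on average.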

Figure 13. Phase shift visible in the stripe pattern to transmit a logical 1.


This system is very sensitive to timing offsets because there is no strict synchronization between transmitter and receiver. Even if the timing of the modulation is calculated exactly, inconsistencies during runtime cause variances in the range of microseconds, which might lead to bit errors in the communication. Hence, the implementation must not use static delays for the timing, but also needs to take the time needed for executing code into consideration. For those timing mechanisms in the program, additional settings can be adjusted in the form of microsecond offsets added to or subtracted from the dynamic delays for perfect synchronization. These settings might be adjusted in various cases, e.g., if a different camera is used for receiving. Additionally, effects caused by blooming or a slightly longer exposure time on the receiving side must be counteracted in the modulation method by adjusting the pulse width ratio of the modulation signal. The special adjustments available are the following:

- Delay offset for one bit: The offset in microseconds for the dynamic delay for one bit in the modulation signal.

- Delay offset for one period: The offset in microseconds for the dynamic delay for one period of a bit of the modulation signal.

- Delay offset for a state: The offset in microseconds for the dynamic delay for a single state within a period of the modulation signal.

- Pulse width difference: With this value for a time in microseconds, the pulse width ratio of the modulation signal can be adjusted very precisely. For example, if this value is set to 150 µs, ON states get 150 µs shorter and OFF states are extended by 150 µs.
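
The difference between static and dynamic delays described above can be illustrated with a small simulation. This is only a sketch under stated assumptions and not the Arduino program used in the experiments: the function name `simulate_state_changes` and the code execution time of 12 µs per state are hypothetical, and the simulation uses a plain counter instead of a hardware timer.

```python
def simulate_state_changes(n_states, state_us, exec_us, dynamic):
    """Return simulated timestamps (in microseconds) of each state change.

    exec_us is a hypothetical, constant time spent executing code per state.
    """
    now = 0.0
    timestamps = []
    for k in range(1, n_states + 1):
        now += exec_us  # time consumed by the program itself
        if dynamic:
            # Dynamic delay: wait only for the time remaining until the
            # absolute deadline of state k, so execution time is absorbed.
            deadline = k * state_us
            now += max(0.0, deadline - now)
        else:
            # Static delay: always wait the full state duration, so the
            # execution time accumulates as drift.
            now += state_us
        timestamps.append(now)
    return timestamps


# One bit at 120 Hz consists of 4 periods, i.e., 8 ON/OFF states.
STATE_US = 1e6 / 120 / 2
static = simulate_state_changes(8, STATE_US, exec_us=12.0, dynamic=False)
dynamic = simulate_state_changes(8, STATE_US, exec_us=12.0, dynamic=True)
drift_us = static[-1] - dynamic[-1]
print(f"drift after one bit with static delays: {drift_us:.1f} us")
```

Under these assumptions, the static variant drifts by the accumulated execution time per bit, which is of the same order as the synchronization offsets examined in Section 4.4, whereas the dynamic variant stays on the nominal schedule.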

The Arduino UNO is connected to a PC via USB for power supply and for communication over the serial interface. During runtime, one can modify the settings of the program or select the message that is transmitted by sending predefined commands via the serial interface to the Arduino UNO.

On the receiving side of the experimental setup, a Canon EOS D1100 DSLR camera is used to record videos that are then analyzed using a Python script. However, the stock firmware from Canon does not allow setting the exposure time to a fixed value when recording a video. Thus, the third-party firmware add-on Magic Lantern [27] was installed on the receiving camera, which enables various manual settings for recording videos with a Canon DSLR camera. The exposure time was set to a value between 1/8000 s and 1/1000 s, depending on the experiment. As those exposure settings lead to very dark images unless the environmental lighting is very bright, the aperture was additionally set very low and the sensitivity of the image sensor, i.e., the ISO, was set very high to get brighter images.

For the experiments, a distance of approx. 1.5 m was chosen between the transmitting car model and the receiving camera. At the scale of 1:24, this equals a distance of 36 m, which is a common distance between two cars following each other on the road.

This experimental setup only targets the evaluation of the raw data transmission and bit errors caused by the modulation itself. Other influences such as movement of transmitter and receiver and weather are not simulated. In particular, the impact of rain or fog cannot be simulated realistically at this small scale. These evaluations will be done in future research when implementing the system using full-sized vehicles on the road.

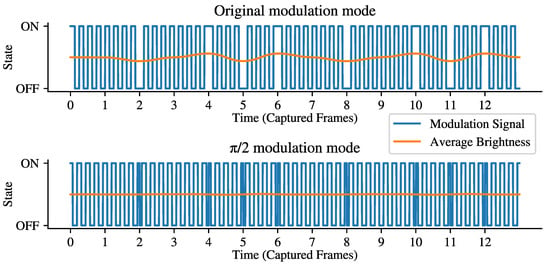

4.2. Modulation Mode

The first simple but effective improvement of the modulation method compared to the UDPSOOK modulation method by Liu et al. [3] is to adapt the application of phase shifts to the signal. The experiments showed that with the original modulation method at a modulation frequency of 120 Hz, an unacceptable amount of flickering can be perceived by human observers if data is transmitted, and hence, this effect would disqualify the system for usage in traffic. With the improved modulation mode, where the signal is inverted by switching the phase between 90° and 270° if needed, a steady average brightness of the modulated LED is achieved, and hence, no flickering can be perceived. This difference in modulation modes is hard to depict in real images; however, Figure 14 shows the average brightness of a modulated LED using a sliding window calculation. One can see that the average brightness clearly changes over time when using the original modulation method, whereas with the improved modulation mode the average brightness remains steady.

Figure 14. Average brightness of modulated light sources.
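
The sliding window calculation mentioned above can be sketched with an idealized square-wave model. This is not the measurement procedure behind Figure 14: the quarter-period sampling, the window length of one bit (16 samples), the test bit sequence, and the names `modulate` and `max_brightness_deviation` are assumptions made for illustration, and the model ignores LED rise times and camera effects.

```python
import numpy as np

PERIODS_PER_BIT = 4  # 120 Hz modulation, 30 bits per second per taillight

# One modulation period sampled at quarter-period resolution (1 = ON, 0 = OFF).
# Key 0 is the non-inverted signal, key 1 the inverted signal.
PATTERNS = {
    "standard": {0: [1, 1, 0, 0], 1: [0, 0, 1, 1]},  # phase 0 and pi
    "improved": {0: [1, 0, 0, 1], 1: [0, 1, 1, 0]},  # phase pi/2 and 3*pi/2
}


def modulate(bits, mode):
    """Differential encoding: a logic 1 inverts the signal, a logic 0 keeps it."""
    inverted = 0
    samples = []
    for bit in bits:
        if bit == 1:
            inverted ^= 1
        samples.extend(PATTERNS[mode][inverted] * PERIODS_PER_BIT)
    return np.array(samples, dtype=float)


def max_brightness_deviation(signal, window):
    """Largest deviation of the sliding-window mean from the nominal 50%."""
    kernel = np.ones(window) / window
    average = np.convolve(signal, kernel, mode="valid")
    return float(np.max(np.abs(average - 0.5)))


bits = [0, 1, 0, 1, 1, 0, 1, 0]
deviations = {
    mode: max_brightness_deviation(modulate(bits, mode), window=16)
    for mode in PATTERNS
}
print(deviations)
```

In this simplified model, the windowed average of the improved mode deviates less from 50% than that of the standard mode, because a phase toggle never places two ON or two OFF half-periods directly next to each other.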

4.3. Pulse Width Adjustments

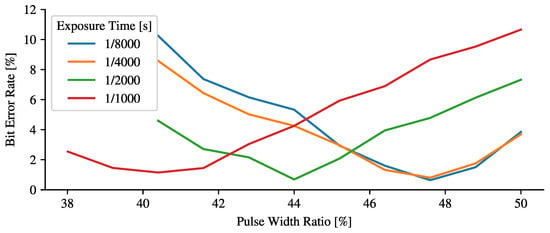

Blooming and exposure time affect the width ratio of the stripes in the resulting stripe pattern in a captured image. Even if the modulation signal has a pulse width ratio of exactly 50%, the bright stripes are slightly wider than the dark stripes. In those areas, the modulated signal cannot be decoded, because if the modulation signal is inverted to transmit a logic 1, the state of the light source will be ON in both captured images. This causes errors in the communication, and hence needs to be optimized. As this effect occurs when a transition of the stripe pattern passes through the area of the modulated taillight, for this evaluation the modulation frequency was slightly adjusted to be marginally slower than the desired 120 Hz, i.e., 119.96 Hz. This way, the communication still works fine; however, the stripe pattern slowly moves down in the image, and hence, more such transitions occur, so more relevant data is available to evaluate the approach. To improve the error rate in those transition cases, the pulse width ratio in the system was slightly decreased to counteract the effects of blooming and exposure and get equal stripe widths in the captured frames. In the experiments, the pulse width ratio of the modulation signal was optimized to get the best possible BER. This experiment was carried out for exposure times of 1/8000 s, 1/4000 s, 1/2000 s, and 1/1000 s. The resulting BER for all tested combinations of exposure time and pulse width ratio is depicted in Figure 15. The experiments showed that for an exposure time of 1/1000 s, the best BER of 1.15% can be achieved using a pulse width ratio of 40.4%, i.e., the length of the ON cycle is decreased by 800 µs with unchanged modulation frequency. For 1/2000 s exposure time, the best BER was 0.69%, which is better than with 1/1000 s exposure time. This was achieved by setting the pulse width ratio to 44%, i.e., shortening the ON cycle by 500 µs.
For exposure times of 1/4000 s and 1/8000 s, similar results were measured. The best BERs of 0.81% and 0.64%, respectively, were achieved by setting the pulse width ratio to 47.6%, i.e., the duration of the ON cycle of the modulation signal was decreased by 200 µs. As expected, the optimal pulse width ratio decreases for longer exposure times, as a longer exposure time increases the width of the bright stripes in the captured image. However, the optimal pulse width ratio for exposure times of 1/4000 s and 1/8000 s is equal. It can be concluded that with such short exposure times, blooming has a more relevant effect on the resulting width of bright stripes in the image, as the blooming effect is independent of the exposure time. The results also show that a balanced pulse width ratio of 50% is not optimal; for this setting, the results of previous research [1] were confirmed with a BER between 3.5% and 4%.

Figure 15. BER depending on pulse width ratio for different exposure settings.
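
The relation between the shortening of the ON cycle and the resulting pulse width ratio can be checked with a few lines of arithmetic. The helper name `pulse_width_ratio` is hypothetical; the sketch only assumes that the ON cycle is shortened by the given time while the period of the 120 Hz modulation signal stays unchanged, as described above.

```python
def pulse_width_ratio(on_shortening_us, modulation_hz=120.0):
    """Pulse width ratio after shortening the ON cycle of a 50% signal."""
    period_us = 1e6 / modulation_hz
    on_us = period_us / 2 - on_shortening_us
    return on_us / period_us


# ON-cycle shortenings reported for the best BER per exposure time.
for shortening_us in (800, 500, 200):
    ratio = pulse_width_ratio(shortening_us)
    print(f"{shortening_us} us shorter ON cycle: {ratio * 100:.1f}%")
```

With a period of about 8333 µs, shortening the ON cycle by 800 µs, 500 µs, and 200 µs reproduces the reported ratios of 40.4%, 44%, and 47.6%.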

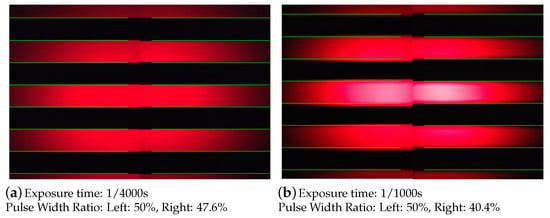

Figure 16 shows close-up images of a modulated light source to compare the resulting stripe patterns. Figure 16a compares two images captured using an exposure time of 1/4000 s. On the left, the resulting stripe pattern with a pulse width ratio of 50% is depicted; on the right, the improved version with an adapted pulse width ratio of 47.6% is shown. The green lines indicate a perfectly balanced distribution between bright and dark stripes, i.e., the edges of the modulation signal with a pulse width ratio of 50%. In the center of the image, those green lines are interrupted for better visibility of the differences between the left and the right part of the image. With a modulation signal with a pulse width ratio of 50%, the resulting bright stripes are wider than the dark stripes due to the blooming effect and exposure. With a pulse width ratio of 47.6%, those effects are neutralized to get an optimal distribution of stripe widths for the best possible BER. The effect of this approach can be seen even better if an exposure time of 1/1000 s is used for capturing such close-up images, as shown in Figure 16b. Here again, a pulse width ratio of 50% was used to capture the left part of the image, and the green lines indicate an equal distribution. It is clearly visible that the bright stripes in the left part of the image are wider than the dark stripes. For the right part of the image, the pulse width ratio with the best results regarding the BER for data transmission was used, i.e., 40.4%. The effects of blooming and longer exposure time are counteracted using this lower pulse width ratio, and the resulting bright and dark stripes have equal widths.

Figure 16. Comparison of resulting stripe pattern using different pulse width ratios.

4.4. Loose Synchronization

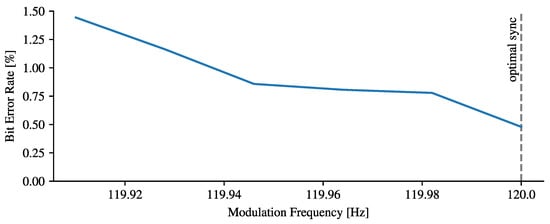

This VLC system is not strictly synchronized. Thus, if the transmitter and receiver systems are not perfectly in sync, errors might occur. The sampling frequency of the receiving system is hard to adjust; hence, the modulation frequency was set to an exact multiple of it to get a loose synchronization. The impact of synchronization offsets or phase slipping was evaluated by analyzing the BER of the communication system with slightly different modulation frequencies over a long period of 15 min of communication. With such a long time span, even the best adjusted loose synchronization runs into errors caused by phase slipping at some point. The results depicted in Figure 17 show the resulting BER over the chosen modulation frequency. As the camera on the receiving side has a frame sampling rate of 30 Hz, the perfect modulation frequency for the transmitter is 120 Hz. A close-up setup was used to see the whole stripe pattern in the captured image, and the modulation frequency was tuned so that no movement of the stripe pattern could be perceived. This was considered the best possible loose synchronization. For the other measurements, the modulation frequency was slightly changed to purposely get a synchronization offset. The results show how such a synchronization offset in this not strictly synchronized system affects the BER.

Figure 17. BER depending on accuracy of loose synchronization.

For the measurements, the exposure time was set to 1/4000 s and the pulse width ratio was chosen according to the best result of the previous experiment, i.e., 47.6%. As expected, the best BER was achieved with the best possible synchronization. In this case, the BER was 0.48%. The bigger the offset, the worse the BER. The worst synchronized modulation frequency that was tested was 119.91 Hz, i.e., the modulation of one bit lasts 25 µs too long. With this synchronization offset, the BER increased to 1.44%. From this experiment it can be concluded that the better the loose synchronization between transmitter and receiver, the better the BER. However, even if there is a synchronization offset of up to 25 µs per modulated bit, the BER is still in an acceptable range. The sign of the synchronization offset, i.e., the direction of the stripe pattern movement, does not matter in this system. In both cases, errors are caused by transitions of the stripe pattern moving through the area of the modulated light source. The only difference caused by the sign or direction of the offset is that, in the long term, a modulation frequency that is too slow leads to one bit from each modulated taillight being duplicated by the receiver, whereas a modulation frequency that is too fast leads to one bit from each modulated taillight missing in the received bit stream. However, both cases affect the BER in the same way.
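
The conversion from a modulation frequency to the synchronization offset per bit can be sketched as follows. The function names are hypothetical, and the estimate of how long it takes until the drift adds up to one full camera frame is an idealized calculation that assumes perfectly constant frequencies on both sides; it is not a measured value.

```python
FRAME_RATE_HZ = 30.0   # frame sampling rate of the receiving camera
PERIODS_PER_BIT = 4    # 120 Hz modulation / 30 frames per second


def bit_offset_us(modulation_hz):
    """Time by which one modulated bit is longer (+) or shorter (-) than a frame."""
    bit_us = PERIODS_PER_BIT / modulation_hz * 1e6
    frame_us = 1e6 / FRAME_RATE_HZ
    return bit_us - frame_us


def seconds_per_frame_slip(offset_us):
    """Idealized time until the accumulated offset equals one frame period."""
    if offset_us == 0:
        return float("inf")
    frame_us = 1e6 / FRAME_RATE_HZ
    frames_needed = frame_us / abs(offset_us)
    return frames_needed * frame_us / 1e6


offset = bit_offset_us(119.91)
print(f"offset per bit at 119.91 Hz: {offset:.1f} us")
print(f"idealized time per slipped frame: {seconds_per_frame_slip(offset):.1f} s")
```

For 119.91 Hz, this yields an offset of approx. 25 µs per bit, which matches the value stated above.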

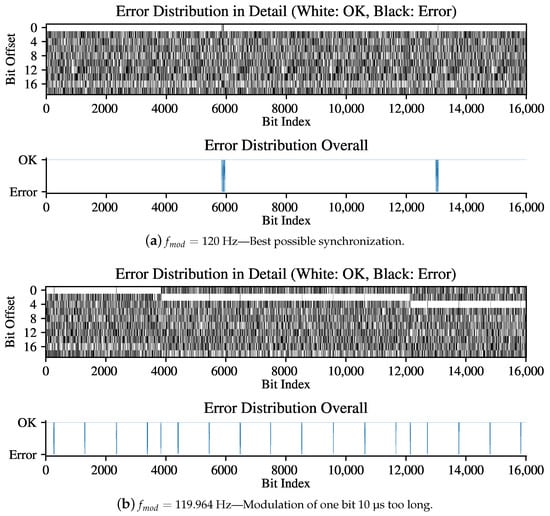

4.5. Error Distribution

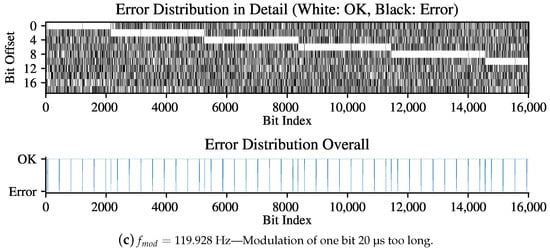

Another interesting aspect is the distribution of bit errors in the raw data transmission. In the experimental setup, environmental errors and errors caused by movements of the transmitting car or the receiving camera were avoided as far as possible. The only errors to analyze are the ones caused by the modulation itself, due to ambiguous states as previously shown in Figure 9. Figure 18 shows the error distribution for three data transmissions over approx. 4.5 min with different synchronization qualities. Each of those sub-figures shows two graphs. The top graph depicts the errors in detail by comparing the received bit stream with the expected message, taking bit offsets into account. At the start of the data transmission, the expected and received bit streams are aligned to get a bit offset of 0. If two bits encoded into one frame are duplicated because the sampling of the receiving camera was faster than the modulation, the bit offset is increased by 2 bits. The bottom graph shows the actual bit errors that occurred during the transmission, with adjustment of the bit offset if bits are duplicated.

Figure 18. Error distribution for different qualities of synchronization.
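
The comparison of the received bit stream with the expected message under a changing bit offset can be sketched as follows. This is not the analysis script used for Figure 18: the function name `count_bit_errors` is hypothetical, the expected message is assumed to repeat cyclically, and the positions at which a frame was duplicated are assumed to be known in advance, whereas a real evaluation would have to detect them.

```python
def count_bit_errors(expected, received, duplicated_at=()):
    """Count bit errors while tracking the bit offset.

    duplicated_at lists the indices in the received stream at which the two
    bits of one frame were received a second time (assumed to be known).
    Returns (number of bit errors, final bit offset).
    """
    duplicated = set(duplicated_at)
    offset = 0
    errors = 0
    for i, bit in enumerate(received):
        if i in duplicated:
            offset += 2  # two bits per frame, one from each taillight
        if bit != expected[(i - offset) % len(expected)]:
            errors += 1
    return errors, offset


expected = [1, 0, 1, 1, 0, 0, 1, 0]
# The two bits at indices 2 and 3 are received twice.
received = expected[:4] + expected[2:4] + expected[4:]

print(count_bit_errors(expected, received))                     # offset ignored
print(count_bit_errors(expected, received, duplicated_at=[4]))  # offset tracked
```

Without tracking the offset, every bit after the duplicated frame is compared against the wrong position of the expected message, which is why the bit offset is adjusted before bit errors are counted.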

Figure 18a shows that even with the best possible synchronization the system runs into error bursts, because even here the stripe pattern is slowly moving. In this transmission one can see two error bursts. The first one lasts from bit index 5831 to bit index 5960, i.e., 2.15 s, the second one from bit index 12,972 to bit index 13,077, i.e., 1.75 s. The time between the two error bursts is approx. 2 min. However, within the time of this transmission the bit offset does not change because no bit is missing or duplicated.
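
Grouping individual bit errors into error bursts and converting bit indices into durations can be sketched as follows. The function names and the gap threshold `max_gap` are assumptions for illustration; the duration is computed from the first and last bit index of a burst at the raw data rate of 60 bits per second.

```python
BIT_RATE = 60  # raw data rate in bits per second (two taillights at 30 FPS)


def group_bursts(error_indices, max_gap=10):
    """Merge error positions that are at most max_gap bits apart into bursts."""
    bursts = []
    for index in sorted(error_indices):
        if bursts and index - bursts[-1][1] <= max_gap:
            bursts[-1][1] = index  # extend the current burst
        else:
            bursts.append([index, index])  # start a new burst
    return [tuple(burst) for burst in bursts]


def burst_duration_s(first_index, last_index, bit_rate=BIT_RATE):
    """Duration of a burst that spans the given bit indices."""
    return (last_index - first_index) / bit_rate


# Burst boundaries reported for Figure 18a.
print(burst_duration_s(5831, 5960))
print(burst_duration_s(12972, 13077))
print(group_bursts([5, 6, 9, 40, 41, 100]))
```

Applied to the bit indices given above, this reproduces the stated durations of 2.15 s and 1.75 s.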

With a slightly imperfect synchronization, where the modulation of one bit takes 10 µs too long, the frequency of error bursts increases; however, the duration of a single error burst is shorter, as seen in Figure 18b. During the transmission, equidistant error bursts occur every 17.3 s on average with an average duration of 392 ms. What can also be seen is that after 8 equidistant error bursts the bit offset is increased by 2, which means the two encoded bits from one frame are duplicated. This causes one additional error burst. Those error bursts always occur when a transition of the stripe pattern, representing the rising and falling edges of the modulation signal, is visible at the vertical position of the taillights. For each rising and falling edge in the modulation signal, an error burst occurs. As seen in the previously shown Figure 7, the modulation signal in the improved mode uses 9 edges to modulate one bit. The original modulation mode signal depicted in Figure 6 only uses 8 edges to modulate one bit, and hence, with the original modulation mode, the additional error burst would not occur. However, this is the only downside of the improved modulation mode, and it is a minor one.

In the last transmission example shown in Figure 18c, the modulation of one bit takes 20 µs too long. Here, the average time between two error bursts is only 5.5 s, and each of them lasts about 250 ms. Within the transmission time of approx. 4.5 min, the bit offset is increased 5 times, which causes additional error bursts. However, as in the previous example, 8 equidistant error bursts occur before the bit offset is increased, which causes an additional error burst.

5. Conclusions

A VLC system for V2V communication by modulating the taillights of a car using an improved version of the UDPSOOK modulation method by Liu et al. [3] is proposed. On the receiving side of the system, a DSLR camera is used for capturing the scene with 30 FPS. As the system is intended to be used in traffic, where there is a distance of 30 m to 80 m between two cars, i.e., the transmitter and receiver, adjustments to the original UDPSOOK modulation method were necessary to optimize it in terms of applicability and bit error rate. By choosing a phase of π/2 for the modulation signal and 3π/2 for the inverted signal, it was possible to modulate a light source without visible flickering. This first improvement was crucial for this application, as flickering would distract other drivers on the road.

In this system, the first step on the receiving side is to detect the car in front and its taillights to recognize the states of the taillights. As it is difficult to decide exactly which pixel in the image needs to be analyzed to see the taillight state, a region of interest containing each taillight is selected. However, the problem is that in such a region the captured taillight might be ON, OFF, or have an ambiguous state. Such an ambiguous state occurs if the taillight in the camera’s view is captured at the exact time when its state is changed. This results in an image where, e.g., the top of the taillight is ON and the bottom of the taillight is OFF. Such a scenario always results in bit errors, and hence, the modulation method was optimized to get as few bit errors as possible caused by such ambiguous states in the communication. The best bit error rate of 0.48% was achieved by setting the exposure time to 1/4000 s, the pulse width ratio to 47.6%, and the modulation frequency to 120 Hz using the best possible loose synchronization. This is a major improvement compared to the results of previous research that was published in [1]. In this first concept of the visible light vehicle-to-vehicle communication system using modulated taillights, an average BER of 3.46% was achieved. This means that by optimizing the modulation method, the proposed system is now not only applicable for usage in traffic, but the BER is also reduced by 86%.
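
The distinction between ON, OFF, and ambiguous taillight states in a region of interest can be sketched as follows. This is not the detection pipeline of the proposed system: the function name `classify_roi`, the brightness threshold of 128, and the 90% row fraction are hypothetical values chosen for illustration. The row-wise evaluation reflects that a state change during capture separates the region into a bright and a dark part along the vertical axis.

```python
import numpy as np


def classify_roi(roi, threshold=128, min_fraction=0.9):
    """Classify a grayscale region of interest as 'ON', 'OFF', or 'AMBIGUOUS'.

    roi is a 2D array of pixel intensities (rows x columns). A row counts as
    bright if its mean intensity exceeds the (hypothetical) threshold.
    """
    bright_rows = roi.mean(axis=1) > threshold
    bright_fraction = bright_rows.mean()
    if bright_fraction >= min_fraction:
        return "ON"
    if bright_fraction <= 1 - min_fraction:
        return "OFF"
    return "AMBIGUOUS"


roi_on = np.full((20, 10), 255, dtype=np.uint8)
roi_off = np.zeros((20, 10), dtype=np.uint8)
# State change during capture: top half ON, bottom half OFF.
roi_mixed = np.vstack([roi_on[:10], roi_off[:10]])

for roi in (roi_on, roi_off, roi_mixed):
    print(classify_roi(roi))
```

A region classified as ambiguous corresponds to the case described above, in which the top of the taillight is ON and the bottom is OFF, and the transmitted bit cannot be decoded reliably.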

The proposed system is not quick enough to transmit time-sensitive data between two cars driving on the road. However, the optical data transmission using a camera as receiver is very hard to manipulate for a third party and therefore could be used to verify the identity of another car. This information can be used to establish a fast and encrypted connection between two cars driving in succession, where, e.g., 5G or 802.11p is used for the main communication channel, and the slow but secure optical channel is only used to exchange data for the identity verification of the cars.

Future Work

In the ongoing research, this communication system will be applied to full-sized cars and the impact of influences such as distance, angle, absolute and relative velocity, weather, daytime, other interfering light sources, etc. will be analyzed to see how the system can be optimized in this regard. With this information a specific channel model for this optical communication channel can be defined to develop an appropriate security protocol that allows fast, reliable, and secure identity verification for establishing a connection in another wireless channel that can then be used in applications such as platooning or similar.

Author Contributions

Conceptualization, M.P.; methodology, M.P.; software, M.P.; validation, M.P. and G.O.; writing—original draft preparation, M.P.; writing—review and editing, M.P. and G.O.; supervision, G.O.; project administration, G.O.; funding acquisition, G.O. All authors have read and agreed to the published version of the manuscript.

Funding

This project has been co-financed by the European Union using financial means of the European Regional Development Fund (EFRE). Further information to IWB/EFRE is available at http://www.efre.gv.at (accessed on 2 March 2021).

Acknowledgments

This paper and all corresponding results, developments and achievements are a result of the Research Group for Networks and Mobility (NEMO).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ADAS | Advanced Driver Assistance Systems |
| BER | Bit Error Rate |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| DSLR | Digital Single-lens Reflex Camera |
| FPS | Frames Per Second |
| GPIO | General-Purpose Input Output |
| OCC | Optical Camera Communication |
| OWC | Optical Wireless Communication |
| ROI | Region of Interest |
| SSD | Single-Shot Multibox Detector |
| UDPSOOK | Undersampled Differential Phase Shift On–Off Keying |
| UDPSOOKED | Undersampled Differential Phase Shift On–Off Keying with Error Detection |
| V2I | Vehicle-to-Infrastructure Communication |
| V2LC | Vehicular Visible Light Communication |
| V2V | Vehicle-to-Vehicle Communication |
| VLC | Visible Light Communication |

References

1. Plattner, M.; Ostermayer, G. A Visible Light Vehicle-to-Vehicle Communication System Using Modulated Taillights. In Proceedings of the Twelfth International Conference on Adaptive and Self-Adaptive Systems and Applications, Nice, France, 25–29 October 2020; IARIA XPS Press: Wilmington, DE, USA, 2020.

2. Swaroop, D. String Stability of Interconnected Systems: An Application to Platooning in Automated Highway Systems. Ph.D. Thesis, University of California, Berkeley, CA, USA, 1997.

3. Liu, N.; Cheng, J.; Holzman, J.F. Undersampled differential phase shift on-off keying for optical camera communications. J. Commun. Inf. Netw. 2017, 2, 47–56.

4. Arnon, S. Visible Light Communication; Cambridge University Press: Cambridge, UK, 2015.

5. Luczak, H. Arbeitswissenschaft. 2, Vollständig Bearbeitete Auflage; Springer: Berlin/Heidelberg, Germany, 1998.

6. Kircheis, G.; Wettstein, M.; Timmermann, L.; Schnitzler, A.; Häussinger, D. Critical flicker frequency for quantification of low-grade hepatic encephalopathy. Hepatology 2002, 35, 357–366.

7. Premachandra, H.C.N.; Yendo, T.; Tehrani, M.P.; Yamazato, T.; Okada, H.; Fujii, T.; Tanimoto, M. High-speed-camera image processing based LED traffic light detection for road-to-vehicle visible light communication. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 793–798.

8. Iwasaki, S.; Premachandra, C.; Endo, T.; Fujii, T.; Tanimoto, M.; Kimura, Y. Visible light road-to-vehicle communication using high-speed camera. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 13–18.

9. Boubezari, R.; Le Minh, H.; Ghassemlooy, Z.; Bouridane, A. Smartphone camera based visible light communication. J. Light. Technol. 2016, 34, 4121–4127.

10. Takai, I.; Ito, S.; Yasutomi, K.; Kagawa, K.; Andoh, M.; Kawahito, S. LED and CMOS image sensor based optical wireless communication system for automotive applications. IEEE Photonics J. 2013, 5, 6801418.

11. Căilean, A.M.; Cagneau, B.; Chassagne, L.; Dimian, M.; Popa, V. Novel receiver sensor for visible light communications in automotive applications. IEEE Sens. J. 2015, 15, 4632–4639.

12. Yamazato, T.; Takai, I.; Okada, H.; Fujii, T.; Yendo, T.; Arai, S.; Andoh, M.; Harada, T.; Yasutomi, K.; Kagawa, K.; et al. Image-sensor-based visible light communication for automotive applications. IEEE Commun. Mag. 2014, 52, 88–97.

13. Goto, Y.; Takai, I.; Yamazato, T.; Okada, H.; Fujii, T.; Kawahito, S.; Arai, S.; Yendo, T.; Kamakura, K. A new automotive VLC system using optical communication image sensor. IEEE Photonics J. 2016, 8, 1–17.

14. Do, T.H.; Yoo, M. Performance analysis of visible light communication using CMOS sensors. Sensors 2016, 16, 309.

15. Liang, K.; Chow, C.W.; Liu, Y. RGB visible light communication using mobile-phone camera and multi-input multi-output. Opt. Express 2016, 24, 9383–9388.

16. Danakis, C.; Afgani, M.; Povey, G.; Underwood, I.; Haas, H. Using a CMOS camera sensor for visible light communication. In Proceedings of the 2012 IEEE Globecom Workshops, Anaheim, CA, USA, 3–7 December 2012; pp. 1244–1248.

17. Chow, C.W.; Chen, C.Y.; Chen, S.H. Visible light communication using mobile-phone camera with data rate higher than frame rate. Opt. Express 2015, 23, 26080–26085.

18. Zabat, M.; Stabile, N.; Farascaroli, S.; Browand, F. The Aerodynamic Performance of Platoons: A Final Report; University of California: Berkeley, CA, USA, 1995.

19. The Slow Mo Guys. Inside a Camera at 10,000 fps. Available online: https://www.youtube.com/watch?v=CmjeCchGRQo (accessed on 16 December 2020).

20. Smarter Every Day. Rolling Shutter Explained (Why Do Cameras Do This?). Available online: https://www.youtube.com/watch?v=dNVtMmLlnoE (accessed on 16 December 2020).

21. Tan, M.A.; Luo, J. Self-Calibrating Anti-Blooming Circuit for CMOS Image Sensor Having a Spillover Protection Performance in Response to a Spillover Condition. U.S. Patent 7,381,936, 3 June 2008.

22. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

23. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

24. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

25. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

26. LeCun, Y.A.; Bottou, L.; Orr, G.B.; Müller, K.R. Efficient backprop. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 9–48.

27. Hudson, T. Magic Lantern. Available online: https://magiclantern.fm/ (accessed on 9 April 2019).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).