Abstract

Think-aloud protocols are among the most widely used methods for usability evaluation. Because they provide direct information on a user’s thinking and cognitive processes, they help evaluators discover usability problems and examine improvements. However, it is often difficult to decide how to analyze the resulting data to identify usability problems, because there is no formulaic analysis procedure for textual data. As a result, the analysis is time-consuming, and the quality of the results depends on the analyst’s skills. In the present study, the author proposes a think-aloud protocol method with a formulaic analysis procedure that specifies how to analyze participants’ verbal responses during usability tests. The method provides an explicit procedure for textual data analysis based on step coding (SCAT) and 70 design items, and it was applied to a case study of usability evaluation to confirm that it could extract the target system’s problems. Using step coding and the 70 design items made the process of extracting usability problems from textual data explicit, and the problems were extracted analytically; in other words, the proposed method was less ambiguous than analysis without a formulaic procedure. Once a formulaic analysis procedure was established, textual data analysis could be performed easily and efficiently: the analysis could begin without hesitation after data acquisition, with fewer omissions. In addition, the procedure is expected to be easy to use, even for novice designers.

1. Introduction

One of the most widely used methods for evaluating the usability of a product, software, or system is the think-aloud protocol [1]. In usability testing, a think-aloud protocol is a method in which participants verbalize what they experience, think, do, and feel while performing a task [2]. This method helps to discover usability problems and to examine improvements because it provides direct information on a user’s thinking and cognitive processes, which are difficult to examine through observation or questionnaire surveys alone. For this reason, the think-aloud protocol is often taught in courses that train usability and user experience (UX) specialists [3,4,5]. In addition, McDonald et al. [6] and Fan et al. [7] surveyed usability and UX practitioners and reported that most respondents frequently used a think-aloud protocol for usability testing and considered it the most common method for finding usability problems. The think-aloud protocol is therefore a standard method for usability evaluation [8].

However, implementing and analyzing a think-aloud protocol poses several challenges [7]. In particular, even when qualitative verbal and observational data are obtained through usability testing, it is often difficult to decide how to analyze the data to identify usability problems. The analysis is time-consuming, and the quality of the results depends on the analyst’s skills. Moreover, usability testing is not always conducted by expert analysts: when ergonomics experts are scarce, usability evaluations are conducted by non-experts, for whom analyzing a think-aloud protocol is even more difficult.

One reason for this difficulty is the lack of a clear method for analyzing the qualitative data (video and text data) obtained from a think-aloud protocol. Once a formulaic analysis procedure is established, analysts can perform textual data analysis easily and efficiently: they can begin the analysis without hesitation after data acquisition, and fewer problems are omitted. Such a procedure is also expected to be easy to use, even for novice designers.

The purpose of this study is to propose a formulaic analysis procedure for the think-aloud protocol that specifies how verbal responses are analyzed after user testing. The aim is to develop a method that can extract usability problems and user requirements with few omissions.

2. Related Works

2.1. Usability Evaluation by Think-Aloud Protocol

Think-aloud protocol methods are based on a theoretical framework originally developed by the cognitive psychologists Ericsson and Simon [9] and were introduced to usability testing by Lewis [2]. There are three types of think-aloud protocol methods [10,11,12]. The first is the concurrent think-aloud protocol, in which participants think aloud while performing the experimental task. The second is the retrospective think-aloud protocol, in which participants verbalize their thoughts after performing the task. The third is the hybrid think-aloud protocol, which combines the first two. In the concurrent method, participants speak in real time while operating the system; it is the most commonly used protocol type in usability testing [6].

In addition to these three types, new usability evaluation methods have been proposed, such as methods that record the user’s eye gaze in parallel with verbal responses and analyze the two together [13,14].

Boren and Ramey [15] pointed out a difference between the original purpose of the think-aloud protocol and its use in usability testing. The original purpose was to study natural human cognitive processes, so the experimenter should avoid intervening during the experiment as much as possible, since interventions could alter the participants’ thought processes [10,16]. Usability testing, however, aims not only to capture the user’s thoughts but also to discover usability problems, so users should speak as continuously as possible, with few silences. In usability testing, this is usually handled by prompting the user to speak after a certain period of silence or by having participants practice thinking aloud before the experiment starts. When intervening during the experiment, the experimenter should not express opinions and should speak in a neutral tone.
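As a rough illustration of the silence rule described above, the following Python sketch flags pauses longer than a threshold in a list of timestamped utterances, for example when reviewing a recording. The 15-second threshold and the function name `silent_gaps` are assumptions for illustration; the text above does not specify a value.

```python
from typing import List, Tuple

# Assumed threshold in seconds; the text only speaks of "a certain period".
SILENCE_THRESHOLD_S = 15.0


def silent_gaps(utterances: List[Tuple[float, float]],
                threshold: float = SILENCE_THRESHOLD_S) -> List[Tuple[float, float]]:
    """Return (gap_start, gap_end) for each pause longer than `threshold`.

    `utterances` holds (start, end) times in seconds; unsorted or
    overlapping intervals are handled by sorting and tracking the latest end.
    """
    ordered = sorted(utterances)
    gaps: List[Tuple[float, float]] = []
    if not ordered:
        return gaps
    last_end = ordered[0][1]
    for start, end in ordered[1:]:
        if start - last_end > threshold:
            gaps.append((last_end, start))
        last_end = max(last_end, end)
    return gaps


# Hypothetical timestamps: a pause of about 20 s between the 2nd and 3rd utterances.
print(silent_gaps([(0.0, 4.2), (5.0, 9.8), (30.1, 33.0)]))  # [(9.8, 30.1)]
```

Each flagged gap marks a point where, under this assumed threshold, the experimenter would have prompted the participant to keep talking.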

Using the think-aloud protocol for usability testing also poses challenges. First, participants are required to speak while operating the system, which is not natural behavior. Some participants are not good at verbalizing their thoughts, and the complexity and duration of the task may affect the content and amount of their verbal responses. Second, the analysis is time-consuming and requires a certain level of skill. In most cases, the think-aloud protocol is analyzed by reviewing the audio and video recordings of the experiment and transcribing the verbal data. According to a survey by Fan et al. [7], many experts found this series of tasks complicated. In addition, there was no straightforward procedure for extracting usability problems through analysis; experts therefore had to devise their own ways of organizing verbal data and observation notes, which depended on their skills and experience.

Several proposals have been made to make this analysis more accessible and more efficient. For example, Cooke [17] analyzed what users verbalized during think-aloud sessions and classified the verbalizations into four categories (reading, procedure, observation, and explanation), which can serve as templates for analysis. Fan et al. [18] studied a method for automatically detecting usability problems from users’ verbal responses and voice features using machine learning. As these studies show, making the analysis more accessible and its implementation more efficient is crucial when using think-aloud protocol methods.

This study aims to make the analysis easier and more efficient by specifying a clear and formal analysis procedure for extracting usability problems with the think-aloud protocol. A clear procedure is expected to depend less on the analyst’s skills and experience and to reduce omissions.

2.2. Qualitative Data Analysis

To analyze and interpret qualitative data such as the utterances obtained with the think-aloud protocol method, the qualitative data analysis methods of social science research can be used. In qualitative research, analysts often deal with textual data, such as narrative (verbal) data obtained from research subjects through interviews and fieldwork, and descriptive data obtained from literature, documents, and questionnaire surveys. Qualitative research typically proceeds through formulating research questions, selecting subjects and collecting data, coding and categorization, and theory building. The first step in analyzing textual data is to abstract and categorize the raw textual data through coding. Then, by moving back and forth between the raw data (the original context) and the abstracted concepts, the analyst organizes the information and constructs theories.

Analytical methods for conducting such qualitative research include the KJ method [19], the grounded theory approach [20], content analysis [21], discourse analysis [22,23], and interpretative phenomenological analysis [24,25]. In usability analysis, the KJ method and the grounded theory approach are often used [26,27,28,29]. However, coding textual data with these methods requires generative coding, in which the analyst devises codes while reading the text; analysts must therefore develop the codes themselves. In addition, it is not easy to build a theory from these codes, so a skilled and experienced analyst is required. These methods also target relatively large data sets, requiring many samples or a long data acquisition period, as well as substantial training time for analysts. For these reasons, they are not necessarily suitable for analyzing think-aloud protocols in usability evaluation.

This study focused on step coding (SCAT) [30,31,32,33] to address these challenges. Instead of generative coding, SCAT uses four fixed coding stages, (2.1) to (2.4) in the procedure below, which make textual data analysis relatively easy even for beginners in qualitative research. Applying SCAT’s coding method to utterance analysis in usability evaluation should therefore make it possible to analyze the data efficiently without hesitating over coding.

- (1)

- Obtain the text data through interviews, questionnaires, or other surveys.

- (2)

- Perform coding.

- (2.1)

- Write down the words or phrases of interest in the text data.

- (2.2)

- Paraphrase the words from (2.1) using words that do not appear in the original text data.

- (2.3)

- Write a concept, phrase, or sentence that explains the paraphrase in (2.2).

- (2.4)

- Based on (2.1) to (2.3), enter the themes that represent them.

- (2.5)

- Note questions, issues, and hypotheses to be considered.

- (3)

- Create the storyline. After coding all the interview data, connect the themes entered in (2.4) and write them out as sentences. The storyline is a text that summarizes the interview.

- (4)

- Write the theoretical description. Based on the storyline, write down predictions or hypotheses that can be derived from the original text data through the analysis. Be specific rather than general.

- (5)

- Write down questions and issues that should be considered further.
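The coding sheet in step (2) can be pictured as one record per text segment. The following Python sketch is a hypothetical representation, not an official SCAT template; the class `ScatRow`, its field names, and the helper `themes_for_storyline` are illustrative names. It only enforces the ordering rule that the storyline in step (3) is written after all data have been coded; writing the storyline itself remains the analyst's task.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScatRow:
    """One segment of text data and its SCAT codes (step (2) of the procedure)."""
    text: str              # segment of the text data from step (1)
    focus_words: str = ""  # (2.1) words or phrases of interest
    paraphrase: str = ""   # (2.2) paraphrase with words not in the text
    explanation: str = ""  # (2.3) concept explaining the paraphrase
    theme: str = ""        # (2.4) theme representing (2.1) to (2.3)
    notes: str = ""        # (2.5) questions, issues, hypotheses (optional)

    def is_coded(self) -> bool:
        """True once all four coding stages (2.1) to (2.4) are filled in."""
        return all([self.focus_words, self.paraphrase, self.explanation, self.theme])


def themes_for_storyline(rows: List[ScatRow]) -> List[str]:
    """Collect themes in order as raw material for step (3).

    Raises ValueError if any segment is not yet fully coded, because the
    storyline is written only after all data have been coded.
    """
    uncoded = [i for i, row in enumerate(rows) if not row.is_coded()]
    if uncoded:
        raise ValueError(f"segments not fully coded yet: {uncoded}")
    return [row.theme for row in rows]
```

Keeping (2.5) optional reflects that notes are recorded when they arise rather than for every segment.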

In this study, a coding method similar to step (2) of SCAT is considered helpful for the exploratory analysis of a small number of interviews, such as protocol analysis in usability evaluation. Applying step coding to protocol analysis makes the whole process explicit, from the acquisition of textual data to the extraction of problems and the construction of hypotheses. Therefore, this study proposes a formulaic method for usability evaluation based mainly on protocol analysis and the coding method of SCAT.

2.3. Perspectives on an Interpretation of Usability Problems

The ultimate purpose of the analysis methods used in qualitative research is to describe a theory. By contrast, the purpose of the think-aloud protocol method in usability evaluation is to extract usability problems. Because theory description and system problem extraction have different goals, it is not necessarily desirable to apply qualitative research methods unchanged. Usability problems can, to some extent, be classified into patterns, as various design principles and guidelines show. In fact, usability expert reviews, typified by heuristic evaluation, can be conducted efficiently and with few omissions by checking a system against typical usability problems.

Although there are many types of design guidelines [34,35,36,37,38,39,40,41,42,43,44], this study focused on the 70 design items proposed by Yamaoka [34] to analyze and interpret the utterances obtained in usability tests. The 70 design items are intended to describe exhaustively what is necessary for designing a product or system. They are organized into the following eight categories, each with its own sub-items. As the list shows, the items cover many aspects of user requirements, not only the visibility and understandability of user interfaces. In addition, novice and inexperienced designers and engineers are encouraged to refer to these items during design and evaluation; Yamaoka [45,46] stated that even novice and inexperienced designers and engineers could design and evaluate products or systems by referring to the 70 design items. These properties are consistent with the aims of the text analysis method proposed in this study.

70 design items:

- User interface design items (29 items).

- Kansei design items (9 items).

- Universal design items (9 items).

- Product liability design items (6 items).

- Robust design items (5 items).

- Maintenance design items (2 items).

- Ecological design items (5 items).

- Others (human-machine interface design items) (5 items).
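The category sizes listed above can be checked with a few lines of Python. This is plain arithmetic on the numbers given here, not part of Yamaoka's method:

```python
# Category sizes of the 70 design items, as listed above (Yamaoka [34]).
DESIGN_ITEM_COUNTS = {
    "User interface design": 29,
    "Kansei design": 9,
    "Universal design": 9,
    "Product liability design": 6,
    "Robust design": 5,
    "Maintenance design": 2,
    "Ecological design": 5,
    "Others (human-machine interface design)": 5,
}

total = sum(DESIGN_ITEM_COUNTS.values())
assert total == 70, total  # the eight categories add up to 70 items

ui_share = DESIGN_ITEM_COUNTS["User interface design"] / total
print(f"{total} items; user interface share: {ui_share:.0%}")
```

It prints `70 items; user interface share: 41%`: user interface items form the largest category, while the other seven categories reflect the broader coverage noted above.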

3. Proposed Method: Usability Textual Data Analysis (UTA)

This study proposes usability textual data analysis (UTA), which extracts usability problems by analyzing the utterances obtained in usability tests according to a formal and unambiguous procedure. Such a procedure can make usability evaluation less confusing for the analyst and reduce the number of usability problems that are missed. The analysis procedure of UTA was inspired by the concept and coding procedure of SCAT and modified to suit the purpose of extracting usability problems. Specifically, the coding procedure in step (2) of SCAT and the 70 design items [34] are used to extract usability problems from textual data obtained with the think-aloud protocol. SCAT suits the objectives of this study because its analysis procedures are formulaic and explicit and because it is applicable to small data sets.

In the proposed method, the analyst proceeds according to the format shown in Table 1. The analysis is performed separately for each task of each participant in the usability test. Alternatively, the verbal responses of each participant can be combined and analyzed together.

Table 1.

Format of the UTA.

First, the analyst transcribes the verbal data obtained from the user test and fills in the “speech” column of the format. Then, the analyst codes the data according to the steps described below and extracts the problems. The overall flow and steps (1) and (2) are based on the SCAT method, whereas steps (3) and (4) incorporate a method for extracting usability problems using the 70 design items.

The coding procedure is as follows:

- (1)

- Extraction of keywords

Keywords and phrases are extracted from the transcribed text data. They should be selected from the original text data with a focus on usability problems and user requirements.

- (2)

- Paraphrase of keywords

The words extracted in step (1) are paraphrased in specific and precise terms. The paraphrases should use words other than those found in the text data.

- (3)

- Interpretation using 70 design items

Concepts, phrases, or character strings that explain the preceding column (the keywords paraphrased with words outside the verbal data) are described. For this purpose, the 70 design items [34] are used.

In the proposed method, the 70 design items serve as a framework for explaining the paraphrases from step (2). Because they form a comprehensive list of what should be considered when designing a product or system, the analyst can interpret the paraphrases without omission as far as usability problems and user requirements are concerned. Rather than devising a new expression for each paraphrase, the analyst selects the appropriate items, which is efficient and reduces omissions. In addition, even novice designers can carry out this step by referring to the list of 70 design items.

- (4)

- Extraction of problems based on the 70 design items

Usability problems and user requirements are extracted based on the entries made so far and the original text data. Specific problem areas and situations are identified from the original text data and the entries made in steps (1) and (2), and problem types are identified from the design items entered in step (3). In this way, the usability problems and user requirements of the target product are extracted on the basis of the 70 design items.

- (5)

- Description of other items

Any questions, issues, or hypotheses that should be considered during the user testing or analysis are described.
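To make the traceability between steps concrete, the following Python sketch models one UTA row. It is a minimal sketch under stated assumptions: apart from the “speech” column, the field names are hypothetical rather than the columns of Table 1, and `DESIGN_ITEMS` is a stand-in containing only the labels used in the Task 1 example of Section 4.3, not the full list of 70 design items. It illustrates two points: step (3) becomes a selection from a closed list, and keywords not yet carried through steps (2) and (3) can be listed as possible omissions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical excerpt: labels borrowed from the Task 1 example in Section 4.3,
# standing in for the full list of 70 design items in Yamaoka [34].
DESIGN_ITEMS = {
    "mismatch with user's mental model",
    "lack of discrimination",
    "lack of operation cue",
    "terms as operation cues",
}


@dataclass
class UtaRow:
    """One row of a UTA sheet; field names other than `speech` are illustrative."""
    speech: str                                                # transcribed utterance
    keywords: List[str] = field(default_factory=list)          # step (1)
    paraphrases: Dict[str, str] = field(default_factory=dict)  # step (2): keyword -> paraphrase
    items: Dict[str, List[str]] = field(default_factory=dict)  # step (3): paraphrase -> design items
    problems: List[str] = field(default_factory=list)          # step (4)
    other: List[str] = field(default_factory=list)             # step (5)

    def interpret(self, paraphrase: str, chosen: List[str]) -> None:
        """Step (3): select design items instead of writing free text."""
        if paraphrase not in self.paraphrases.values():
            raise ValueError(f"no keyword was paraphrased as {paraphrase!r}")
        unknown = [c for c in chosen if c not in DESIGN_ITEMS]
        if unknown:
            raise ValueError(f"not in the design-item list: {unknown}")
        self.items[paraphrase] = list(chosen)

    def untraced_keywords(self) -> List[str]:
        """Keywords not yet carried through steps (2) and (3)."""
        return [k for k in self.keywords
                if k not in self.paraphrases or self.paraphrases[k] not in self.items]


row = UtaRow(speech="(transcribed utterance)", keywords=["what's this?", "SEEK TRACK"])
row.paraphrases["what's this?"] = "blind operation"
row.interpret("blind operation", ["lack of operation cue"])
print(row.untraced_keywords())  # ['SEEK TRACK']
```

Rejecting labels outside the list mirrors the idea that the analyst selects items rather than inventing wording; in a real sheet, the full 70-item list would replace the stand-in set.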

4. Usability Evaluation by the UTA

4.1. Purpose

A usability test was conducted to illustrate the usability problems that the proposed method extracts and to examine the method’s feasibility. The test was conducted on an in-vehicle audio system; the usability problems were extracted, and improvements were examined.

4.2. Method

4.2.1. Participants

The participants were six undergraduate students (mean age 21.0 years, SD = 1.1). All participants had 20/20 vision, either naturally or with correction. All participants provided informed consent after receiving a brief explanation of the aim and content of the experiment.

4.2.2. Apparatus

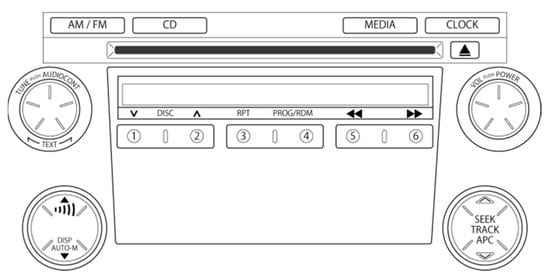

The user interface evaluated in this study was the in-vehicle audio system of a Mazda Demio, shown in Figure 1, on which a usability test was conducted using the proposed method. The participants operated the audio system from the front passenger seat, and their operations were filmed with a video camera installed in the rear seat.

Figure 1.

The user interface of the evaluated in-vehicle audio system.

4.2.3. Tasks

Five tasks, consisting of basic operations of an audio system, were presented to the participants, who were asked to verbalize their thoughts during the operations. Before the experiment, the participants practiced thinking aloud while solving a puzzle ring to become familiar with verbalizing their thoughts during operation. During the experiment, the experimenter’s intervention was kept to a minimum. When the participants were silent for a certain period, the experimenter encouraged them to think aloud. When the participants asked questions, the experimenter did not express an opinion and gave only neutral instructions. The five tasks presented to the participants were the following:

- Task 1: Play the ninth song on the CD.

- Task 2: Set the volume to 4 and play the fourth song repeatedly.

- Task 3: Switch to a different CD and play the tracks in random order.

- Task 4: Change the FM radio station using automatic station selection.

- Task 5: Tune in an AM radio station by manual selection, listen to the radio, and turn off the power.

4.3. Results

First, the participants’ verbal responses obtained in the usability test were transcribed into textual data. Then, the textual data were coded according to the procedure of the proposed method. To illustrate the analysis procedure and its results, the analysis of Task 1 for one participant is shown below:

- (1)

- Extraction of keywords

The following words and phrases were extracted from the utterances as points of interest (the original textual data were in Japanese): “doesn’t go back”, “what’s this?”, and “SEEK TRACK”.

- (2)

- Paraphrasing of keywords

The words extracted in (1) were paraphrased as follows, with reference to the original text data and the context before and after each word:

- “Doesn’t go back” → failure in the selection of CD tracks.

- “What’s this?” → blind operation.

- “SEEK TRACK” → confirmation of button name.

- (3)

- Interpretation using 70 design items

The words paraphrased in (2) were interpreted from the viewpoint of the 70 design items as follows, with reference to the original text data and the context before and after each word:

- Failure in the selection of CD tracks → mismatch with user’s mental model, lack of discrimination.

- Blind operation → lack of operation cue.

- Confirmation of button name → terms as operation cues.

- (4)

- Extraction of problems based on 70 design items

The following usability problems in Task 1 were extracted from these codes and the original text data:

- The method of CD track selection does not match users’ mental model.

- The CD selection button is not distinguishable from similar buttons, making it difficult to identify.

- There are few cues for the CD selection operation, which confuses users.

- The English label “SEEK TRACK” could serve as an operation cue, but Japanese users do not clearly understand it, so it is not an appropriate term for them.

- (5)

- Description of other items

During the step coding, the analyst also questioned whether Japanese users would understand that the label “RPT” means “repeat”.
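The Task 1 coding above can also be written as data. The following Python sketch, an illustrative aid rather than part of UTA as described, inverts the chain so that each design item lists the keywords supporting it, which is one way to check that every problem type in step (4) is grounded in the utterances. The names `TASK1_CODING` and `evidence_by_item` are hypothetical.

```python
from typing import Dict, List, Tuple

# Task 1 coding from Section 4.3 (English renderings of Japanese utterances):
# (keyword from step (1), paraphrase from step (2), design items from step (3)).
TASK1_CODING: List[Tuple[str, str, List[str]]] = [
    ("doesn't go back", "failure in the selection of CD tracks",
     ["mismatch with user's mental model", "lack of discrimination"]),
    ("what's this?", "blind operation", ["lack of operation cue"]),
    ("SEEK TRACK", "confirmation of button name", ["terms as operation cues"]),
]


def evidence_by_item(coding: List[Tuple[str, str, List[str]]]) -> Dict[str, List[str]]:
    """Invert the coding: for each design item, list the keywords behind it."""
    index: Dict[str, List[str]] = {}
    for keyword, _paraphrase, items in coding:
        for item in items:
            index.setdefault(item, []).append(keyword)
    return index


for item, keywords in evidence_by_item(TASK1_CODING).items():
    print(f"{item}: {', '.join(keywords)}")
```

With this data, it prints one line per design item, for example `lack of operation cue: what's this?`.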

This procedure was followed for each of the six participants, and a total of 29 usability problems were extracted. The extracted problems can be roughly classified into four categories. The four categories and the main problems in each are listed below:

- (1)

- Problems related to terminology (nine problems)

- Terms are difficult to understand.

- Explanation and display are difficult to understand.

Example: The term “TRACK” is difficult to understand and is not highlighted prominently.

- (2)

- Problems related to obtaining information (13 problems)

- There are few clues for operation.

- Lack of distinguishing features.

Example: There are not enough cues and identifiers for the power button.

- (3)

- Problems related to the user’s mental model (three problems)

- Mismatch between the user’s mental model and the system.

Example: The user does not have a mental model of “pressing” the volume button.

- (4)

- Problems related to the understanding of button functions (four problems)

- There is a lack of explanation, display, and feedback about the button functions.

Example: The user cannot understand the function of the traffic information button, which is indicated only by an icon.
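As a consistency check on the reported counts, the following Python snippet sums the four categories and prints each share; it uses only the numbers stated above.

```python
# Problem counts per category, as reported above.
PROBLEM_COUNTS = {
    "Terminology": 9,
    "Obtaining information": 13,
    "User's mental model": 3,
    "Understanding of button functions": 4,
}

total = sum(PROBLEM_COUNTS.values())
assert total == 29, total  # matches the 29 problems reported in total

# List categories from largest to smallest with their share of all problems.
for category, n in sorted(PROBLEM_COUNTS.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{category}: {n} ({n / total:.0%})")
```

The categories sum to the 29 problems reported; problems related to obtaining information (13, about 45%) and terminology (9, about 31%) together account for roughly three quarters of them.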

5. Discussion

The proposed method was applied to a case study of usability evaluation, which confirmed that it could extract the target system’s problems and suggest improvements. Using step coding and the 70 design items made the process of extracting usability problems from textual data explicit, and the problems were extracted analytically; in other words, the proposed method was less ambiguous than analysis without a formulaic procedure. Incorporating the 70 design items into the interpretation of problems allowed the usability evaluation to cover various viewpoints. In addition, because the items are exhaustive and intended for use by novice designers, they could easily be linked to specific problems.

However, the selection of keywords from the textual data depended on each analyst’s experience. To address this issue, keyword extraction algorithms should be considered. In addition, with the development of deep learning and related technologies, methods for automatically converting speech into text have been proposed. For example, Fan et al. [18] have worked on automatically identifying the words and phrases in think-aloud data that deserve attention. In the future, the efficiency of the proposed method could be improved by combining it with automated tools for transcription and keyword extraction.

The proposed method should also be evaluated in many practical situations, where its effectiveness needs to be verified and the method improved by comparing it with conventional methods and by gathering the opinions of practitioners. The author applied the method proposed in Section 3 to the case study and found that the analysis could be carried out smoothly by following the procedure.

This study has also cited usability by non-experts as an advantage of the proposed method. Because the procedure is specified step by step, it leaves little room for individual judgment, which should allow non-experts to use it. However, because the list of 70 design items is long, non-experts might find the analysis difficult. The ease of use of the proposed method therefore needs to be examined through actual use by non-experts. In addition, the 70 design items could be narrowed down according to the analysis target.
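The keyword extraction algorithms mentioned above could take many forms. As one deliberately simple possibility, and not the approach of Fan et al. [18] or part of the proposed method, the following Python sketch ranks words by frequency to suggest candidates for step (1). The stop-word list, the function name `keyword_candidates`, and the English sample input are assumptions; the Japanese transcripts in the case study would first require word segmentation.

```python
import re
from collections import Counter
from typing import List, Tuple

# Tiny illustrative stop-word list (an assumption). Japanese transcripts, as in
# the case study, would first need segmentation by a morphological analyzer.
STOP_WORDS = {"a", "an", "and", "i", "is", "it", "of", "s", "so", "t",
              "the", "this", "to", "uh", "um", "what"}


def keyword_candidates(utterances: List[str], top_n: int = 5) -> List[Tuple[str, int]]:
    """Rank words by frequency as suggestions for UTA step (1).

    The analyst still decides which candidates are relevant to usability.
    """
    counts: Counter = Counter()
    for utterance in utterances:
        for token in re.findall(r"[a-z]+", utterance.lower()):
            if token not in STOP_WORDS:
                counts[token] += 1
    return counts.most_common(top_n)


# Hypothetical English transcript fragments.
print(keyword_candidates(["What's this? Track, track...", "Seek track?"], top_n=2))
```

Here it returns `[('track', 3), ('seek', 1)]`. Frequency alone cannot judge usability relevance, so the analyst would still choose the keywords.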

Funding

This research was partly funded by JSPS KAKENHI, grant number 19K15252.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The author declares no conflict of interest.

References

- Nielsen, J. Usability Engineering; Academic Press, Inc.: Cambridge, MA, USA, 1993.

- Lewis, C. Using the “Thinking-Aloud” Method in Cognitive Interface Design; IBM TJ Watson Research Center: Albany, NY, USA, 1982.

- Dumas, J.S.; Redish, J. A Practical Guide to Usability Testing; Intellect Books: Bristol, UK, 1999.

- Preece, J.; Rogers, Y.; Sharp, H. Interaction Design: Beyond Human-Computer Interaction; Wiley: Hoboken, NJ, USA, 2015.

- Rubin, J.; Chisnell, D. Handbook of Usability Testing: How to Plan, Design and Conduct Effective Tests; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- McDonald, S.; Edwards, H.M.; Zhao, T. Exploring think-alouds in usability testing: An international survey. IEEE Trans. Prof. Commun. 2012, 55, 2–19.

- Fan, M.; Shi, S.; Truong, K.N. Practices and challenges of using think-aloud protocols in industry: An international survey. J. Usability Stud. 2020, 15, 85–102.

- Hornbæk, K. Dogmas in the assessment of usability evaluation methods. Behav. Inf. Technol. 2010, 29, 97–111.

- Ericsson, K.A.; Simon, H. Verbal reports as data. Psychol. Rev. 1980, 87, 215.

- Ericsson, K.A.; Simon, H. Protocol Analysis: Verbal Reports as Data; MIT Press: Cambridge, MA, USA, 1984.

- Alhadreti, O.; Mayhew, P. Rethinking thinking aloud: A comparison of three think-aloud protocols. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI2018), Montreal, QC, Canada, 21–26 April 2018; p. 44.

- Ericsson, K.A.; Simon, H. Protocol Analysis: Verbal Reports as Data, Revised ed.; MIT Press: Cambridge, MA, USA, 1993.

- Alekhya, P. Think Aloud: Can Eye Tracking Add Value in Detecting Usability Problems? Master’s Thesis, Rochester Institute of Technology, Rochester, NY, USA, 2012.

- Li, A.C.; Kannry, J.L.; Kushniruk, A.; Chrimes, D.; McGinn, T.G.; Edonyabo, D.; Mann, D.M. Integrating usability testing and think-aloud protocol analysis with “near-live” clinical simulations in evaluating clinical decision support. Int. J. Med. Inform. 2012, 81, 761–772.

- Boren, T.; Ramey, J. Thinking aloud: Reconciling theory and practice. IEEE Trans. Prof. Commun. 2000, 43, 261.

- Alhadreti, O.; Mayhew, P. To intervene or not to intervene: An investigation of three think-aloud protocols in usability testing. J. Usability Stud. 2017, 12, 111–132.

- Cooke, L. Assessing concurrent think-aloud protocol as a usability test method: A technical communication approach. IEEE Trans. Prof. Commun. 2010, 53, 202–215.

- Fan, M.; Li, Y.; Truong, K.N. Automatic detection of usability problem encounters in think-aloud sessions. ACM Trans. Interact. Intell. Syst. 2020, 10, 1–24.

- Kawakita, J. Hasso ho (Methodology for Ideation); Chuko Shinsho: Tokyo, Japan, 1967. (In Japanese)

- Glaser, B.G.; Strauss, A.L. The Discovery of Grounded Theory. Strategies for Qualitative Research; Routledge: London, UK, 1967.

- Krippendorff, K. Content Analysis: An Introduction to Its Methodology; SAGE Publications: Thousand Oaks, CA, USA, 1981.

- Gilbert, N.; Mulkay, M.J. Opening Pandora’s Box: A Sociological Analysis of Scientists’ Discourse; Cambridge University Press: Cambridge, UK, 1984.

- Potter, J. Discourse Analysis and Constructionist Approaches: Theoretical Background. In Handbook of Qualitative Research Methods for Psychology and the Social Sciences; Richardson, J.T.E., Ed.; BPS Books: Leicester, UK, 1996.

- Smith, J.A. Beyond the divide between cognition and discourse: Using interpretative phenomenological analysis in health psychology. Psychol. Health 1996, 11, 261–271.

- Šašinka, Č.; Stachoň, Z.; Sedlák, M.; Chmelík, J.; Herman, L.; Kubíček, P.; Šašinková, A.; Doležal, M.; Tejkl, H.; Urbánek, T.; et al. Collaborative Immersive Virtual Environments for Education in Geography. ISPRS Int. J. Geoinf. 2019, 8, 3.

- Kawakita, J. Hasso ho (KJ ho) to dezain (KJ Method and Design). Bull. JSSD 1985, 2–8. (In Japanese)

- Shigemoto, Y. Beyond IDEO’s Design Thinking: Combining KJ Method and Kansei Engineering for the Creation of Creativity. In Advances in Creativity, Innovation, Entrepreneurship and Communication of Design; AHFE 2021; Lecture Notes in Networks and Systems; Markopoulos, E., Goonetilleke, R.S., Ho, A.G., Luximon, Y., Eds.; Springer: Cham, Switzerland, 2021; Volume 276.

- Minen, M.T.; Jalloh, A.; Ortega, E.; Powers, S.W.; Sevick, M.A.; Lipton, R.B. User Design and Experience Preferences in a Novel Smartphone Application for Migraine Management: A Think Aloud Study of the RELAXaHEAD Application. Pain Med. 2019, 20, 369–377.

- Villanueva, R.A. Think-Aloud Protocol and Heuristic Evaluation of Non-Immersive, Desktop Photo-Realistic Virtual Environments. Master’s Thesis, University of Otago, Dunedin, New Zealand, 2004. Available online: http://hdl.handle.net/10523/1324 (accessed on 30 July 2021).

- Aomatsu, M.; Otani, T.; Tanaka, A.; Ban, N.; Dalen, J. Medical students’ and residents’ conceptual structure of empathy: A qualitative study. Educ. Health 2013, 26, 4–8.

- Maeno, T.; Takayashiki, A.; Name, T.; Tohno, E.; Hara, A. Japanese students’ perception of their learning from an interprofessional education program: A qualitative study. Int. J. Med. Educ. 2013, 4, 9–17.

- Otani, T. “SCAT” A qualitative data analysis method by four-step coding: Easy startable and small data-applicable process of theorization. Bull. Grad. Sch. Educ. Hum. Dev. Educ. Sci. Nagoya Univ. 2007, 54, 27–44. (In Japanese)

- Goto, A.; Rudd, R.E.; Lai, A.Y.; Yoshida, K.; Suzuki, Y.; Halstead, D.D.; Yoshida-Komiya, H.; Reich, M.R. Leveraging public health nurses for disaster risk communication in Fukushima City: A qualitative analysis of nurses’ written records of parenting counseling and peer discussions. BMC Health Serv. Res. 2014, 14, 129.

- Yamaoka, T. Manufacturing attractive products logically by using human design technology: A case of Japanese methodology. In Human Factors and Ergonomics in Consumer Product Design: Methods and Techniques; Karwowski, W., Soares, M.M., Stanton, N.A., Eds.; CRC Press: Boca Raton, FL, USA, 2011; pp. 21–36.

- Google’s Design Guidelines. Available online: http://googlesystem.blogspot.com/2008/03/googles-design-guidelines.html (accessed on 19 July 2021).

- Apple Human Interface Guidelines. Available online: https://developer.apple.com/design/human-interface-guidelines/ (accessed on 19 July 2021).

- IBM Design Language. Available online: https://www.ibm.com/design/language/philosophy/principles/ (accessed on 19 July 2021).

- Okada, K. Human Computer Interaction; Ohmsha: Tokyo, Japan, 2002; pp. 39–40.

- Rubinstein, R.; Hersh, H.M. The Human Factor—Designing Computer System for People; Digital Press: New York, NY, USA, 1984.

- GNOME Human Interface Guidelines. Available online: https://developer.gnome.org/hig/stable/ (accessed on 19 July 2021).

- Windows User Experience Interaction Guidelines. Available online: http://www.glyfx.com/useruploads/files/UXGuide.pdf (accessed on 19 July 2021).

- Saffer, D. Designing for Interaction; Mainichi Communications: Tokyo, Japan, 2008.

- Research Group on Universal Design. Human Engineering and Universal Design; Japan Industrial Publishing: Tokyo, Japan, 2008; p. 4.

- Lidwell, W.; Holden, K.; Butler, J. Universal Principles of Design: 100 Ways to Enhance Usability, Influence Perception, Increase Appeal, Make Better Design Decisions, and Teach Through Design; Rockport Pub.: Beverly, MA, USA, 2003.

- Kato, S.; Horie, K.; Ogawa, K.; Kimura, S. A human interface design checklist and its effectiveness. IPSJ J. 1995, 36, 61–69. (In Japanese)

- Yamaoka, T. How to construct form logically based on human design technology and form construction principles. In Proceedings of the 2nd International Conference on Design Creativity, Glasgow, UK, 18–20 September 2012; pp. 384–392.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).