Abstract

Artificial intelligence (AI) is bringing about enormous changes in everyday life and today’s society. Interest in AI continues to grow as many countries create new AI-related degrees, short-term intensive courses, and secondary school programs. This study aimed to identify the interrelationships among topics across various bodies of knowledge and to provide a foundation for composing topics into an academic body of knowledge of AI. To this end, machine learning-based sentence similarity measurement models used in machine translation, chatbots, and document summarization were applied to the body of knowledge of AI. Consequently, topics similar to those on agent design in AI were identified in several areas, such as algorithms and complexity, discrete structures, software development fundamentals, and parallel and distributed computing. By identifying relationships with other fields, the results of this study provide knowledge needed for talent cultivation in the edutech field.

1. Introduction

Information technology (IT) is driving changes in society and leading to a paradigm shift in national development across the globe. Within IT, artificial intelligence (AI)-related research and talent cultivation are becoming a basis for national development and a source of competitive advantage. Through AI.gov, the United States has promoted AI research and development at the government level since February 2019 [1], and at the Future Strategy Innovation Conference in March 2019, Japan presented AI, quantization, and biotechnology as three strategic technologies that will lead Japan’s development [2]. South Korea’s Ministry of Science and ICT also announced its plans to foster AI talent by 2022 according to the “AI Research & Development Strategy” of May 2018 [3].

This interest in AI across countries is motivating talent cultivation, as seen in the creation of AI degree programs at universities and the dedication of new colleges to AI. The U.S. has already implemented talent development plans at universities [4,5], while Japan has introduced future strategic plans that link AI with primary and secondary education. China, through the “New Generation Artificial Intelligence Development Plan” and the “AI Innovation Action Plan” of 2017 and 2018, has begun AI-focused primary and secondary education in which students study the core technologies of AI [6,7]. In 2019, India’s Central Board of Secondary Education also announced the inclusion of AI in the curriculum for eighth and ninth graders [8].

As a way of implementing various AI professional policies, AI is being taught at the primary school level; however, to date, no core knowledge has been defined regarding AI education. In the absence of these core content standards, the structures of AI education vary among different educational institutions [9,10]. In a similar context, the British Prime Minister emphasized the importance of establishing rules and standards for AI technologies at the 2018 World Economic Forum. Although AI is now in the spotlight, both academically and economically, it is widely regarded as a union of knowledge containing topics of various fields. Therefore, developing a body of knowledge in the field of AI will also help in establishing an academic foundation for AI.

A body of knowledge is a reconstruction of the knowledge area (KA) based on the knowledge that experts in academic fields must obtain. It is important for the topics covered in the knowledge areas to be constructed in a way that they correlate with other areas or topics. This means that the body of knowledge based on semantic relations can be said to correspond to the overall knowledge that one must acquire in the given academic field.

Therefore, this study focused on deriving areas and topics that correlate with the field of AI. To fulfill its goals, this study derived knowledge areas and topics using sentence models. AI’s body of knowledge extracted from sentence models will provide implications for which research topics should be continued from the academic perspective. In particular, by identifying relationships with other areas, it will also contribute to constructing the knowledge that is required to develop professionals at the university level.

2. Related Research

A body of knowledge analysis aims to identify the hierarchies of knowledge areas or the relationships among topics. In some cases, a visual representation is used to clarify the knowledge sequence or to support secondary use of the knowledge. Analysis and reorganization of a body of knowledge can be performed either by experts or by computer systems. Relying on experts can be expensive and time-consuming, and maintaining consistency is challenging; computer systems are therefore often used instead. This section discusses previous studies on body of knowledge analysis and on sentence modeling using computer systems.

2.1. Knowledge Areas Analysis Research

Analyzing the body of knowledge for each academic field takes curriculum composition or evaluation into account. Various types of research related to body of knowledge analysis have been conducted. The main types are discussed below.

The first type of body of knowledge analysis research is category-based research. Based on the knowledge units of the Computing Curricula 2001, which can be regarded as the computer science (CS) body of knowledge, research was performed to set the flow of a teaching syllabus, and a syllabus-maker that can develop or analyze a teaching syllabus was proposed [11]. Using the body of knowledge of Computer Science Curricula 2013 (CS2013), the distribution of knowledge areas in a teaching syllabus has also been predicted [12]. That work combined the predicted distribution with learner information in an attempt to provide learners with personalized learning paths. Further, Ida (2009) proposed a new analysis method, called the library classification system, that is based on the classification information in the curriculum’s body of knowledge rather than on the body of knowledge itself.

In the abovementioned studies, analysis was performed based on categories such as KA and knowledge units (KU), which do not take specific topics or the contents of each body of knowledge into account. Hence, although they can be useful in identifying the entire frame or hierarchy of a body of knowledge, they have a limited ability to identify meaning based on the detailed contents of the subject unit.

The second type of body of knowledge analysis research is word-based research, which uses stochastic topic modeling. Topic modeling estimates topics based on the distribution of words contained in a document. Latent Dirichlet allocation (LDA) is one of the popular methods used in topic modeling [13]. Sekiya (2014) used supervised LDA (sLDA) to analyze the changes in the body of knowledge of CS2008 and CS2013 [14]. More specifically, ten words that could represent each KA in the body of knowledge were extracted and compared based on common words. Another study was based on the CS2013 body of knowledge, which applied an extended method called the simplified, supervised latent Dirichlet allocation (ssLDA) [15]. Syllabus topics from the top 47 universities worldwide in 2014–2015 were quantified. Subsequently, based on the related topics, features of similar topics were analyzed.

In 2018, the Information Processing Society of Japan presented the computer science body of knowledge with 1540 topics. The presented topics ranged from approximately one to five words in length. For example, if the topic called “Finite-state Machine” is processed on a word basis, the result would include various machine-related topics such as Turing machines, assembly level machines, and machine learning. This means that it is difficult to extract the exact meaning of the given topic. As in previous studies, unigram-based research through topic modeling is suitable for classifying the words that appear in the body of knowledge by topic, but it can be semantically limited because it splits each topic into separate words.
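The limitation of word-level processing described above can be illustrated with a small sketch. The helper `unigrams`, its crude plural stripping, and the control topic "Graphs and trees" are assumptions made for illustration only; they are not part of the method used in the cited studies.

```python
def unigrams(topic):
    """Lowercase a topic, split on spaces and hyphens, and strip a plural 's'."""
    words = topic.lower().replace("-", " ").split()
    return {w[:-1] if w.endswith("s") and len(w) > 3 else w for w in words}

query = "Finite-state Machine"
candidates = [
    "Turing machines",
    "Assembly level machines",
    "Machine learning",
    "Graphs and trees",  # control topic that shares no word with the query
]

# A word-level match retrieves every topic that shares at least one unigram,
# regardless of whether the topics mean the same thing.
matches = [c for c in candidates if unigrams(query) & unigrams(c)]
print(matches)
```

All three machine-related topics match on the single word "machine", although none of them means the same as "Finite-state Machine".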

For analysis based on an accurate understanding of the body of knowledge, it is necessary to use the topics in their unprocessed form. This helps in capturing the clear meaning of each topic and in inferring the relationships among its words. Therefore, this study uses sentence modeling to find topic meanings in the body of knowledge and to identify the relationships among these topics.

2.2. Sentence Modeling

Sentence modeling is an important problem in natural language processing [16]. It embeds sentences in vector spaces and uses the resulting vectors for classification and text generation [17]. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are generally used in sentence modeling approaches.

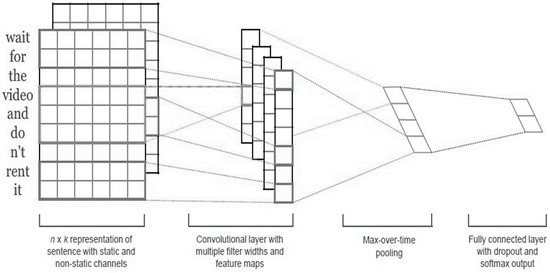

CNNs, which preserve local information about sentences, apply filters to local features at each layer [18]. Figure 1 depicts a simple CNN structure with a convolutional layer on top of word vectors obtained from an unsupervised neural language model. This model achieved excellent results on several benchmarks even without parameter tuning [19].

Figure 1.

Structure of convolutional neural networks (CNN).

CNN models, originally developed for computer vision, are also effective in natural language processing (NLP), and they have been applied in semantic parsing, search query retrieval [20], sentence modeling [21], and other traditional NLP tasks [22].
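As a minimal sketch of how a convolutional filter extracts a local feature from a sentence, the following pure-Python function slides one filter over consecutive word vectors and keeps the strongest response. The ReLU activation, the max-over-time pooling, and all numeric values are assumptions chosen for illustration, not the configuration used in this study.

```python
def conv_max_pool(word_vectors, conv_filter, bias=0.0):
    """Slide one filter over consecutive word windows, apply ReLU, then max-pool."""
    window = len(conv_filter)  # number of words the filter spans
    features = []
    for start in range(len(word_vectors) - window + 1):
        total = bias
        for offset in range(window):
            vec = word_vectors[start + offset]
            weights = conv_filter[offset]
            total += sum(v * w for v, w in zip(vec, weights))
        features.append(max(0.0, total))  # ReLU keeps only positive responses
    return max(features)  # max-over-time pooling: strongest local feature

# Toy 2-dimensional word vectors for a 4-word sentence (hypothetical values).
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# One filter spanning 2 words.
filt = [[1.0, 0.0], [0.0, 1.0]]
print(conv_max_pool(sentence, filt))
```

A full sentence model would use many such filters of several widths and pass the pooled features to a classifier.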

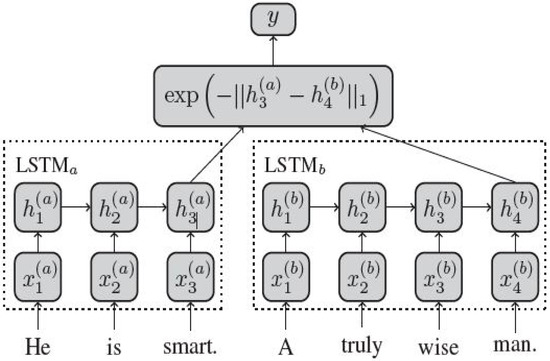

RNNs process word inputs in a particular order and learn from the order in which particular expressions appear. Thus, they can model semantic similarities between sentences and phrases. RNNs can be used in their basic form, but they are more often used in modified or extended forms. Manhattan long short-term memory (LSTM), abbreviated MaLSTM, which is a modified RNN model, uses two LSTMs to read and process the word vectors that represent two input sentences. This model has been shown to outperform more complex neural network models. The structure of the Manhattan LSTM is shown in Figure 2 [23]. Its main feature is that LSTM and the Manhattan metric are used within a Siamese network structure that contains two identical subnetworks. The hidden state h_1 is computed from the first word vector and randomly initialized weights. Each subsequent hidden state h_t is then computed from the input at position t and the previous hidden state h_{t-1}. Finally, the semantic similarity of the sentences is measured using the vectors of the final hidden states. For example, for the sentence “he is smart,” the words “he,” “is,” and “smart” are represented by the vectors x_1, x_2, and x_3; x_1 is the input vector used to calculate h_1; h_2 is calculated from the previous state h_1 and x_2; and the final hidden state, h_3, is calculated from x_3 and the previous state h_2. The words “a,” “truly,” “wise,” and “man” of the second sentence are processed in the same manner, and the similarity is measured between the final vectors obtained for the two sentences.

Figure 2.

Structure of Manhattan LSTM.
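The Siamese recurrence can be sketched as follows. To keep the example short, a plain tanh recurrence stands in for the LSTM cell, so this is not the Manhattan LSTM itself; the function name `encode`, the weight matrices, and the toy word vectors are all hypothetical. The point illustrated is that both sentences pass through the same weights and each ends in a final hidden state of the same size.

```python
import math

def encode(word_vectors, w_x, w_h):
    """Run a simple tanh recurrence over the words; return the final hidden state."""
    hidden = [0.0] * len(w_h)
    for x in word_vectors:
        # h_t depends on the current input x_t and the previous state h_{t-1}.
        hidden = [
            math.tanh(
                sum(w * xi for w, xi in zip(w_x[j], x))
                + sum(w * hi for w, hi in zip(w_h[j], hidden))
            )
            for j in range(len(w_h))
        ]
    return hidden

# Shared (hypothetical) weights: 2 hidden units, 2-dimensional word vectors.
w_x = [[0.5, -0.3], [0.1, 0.8]]
w_h = [[0.2, 0.0], [0.0, 0.2]]

# Toy vectors standing in for "he is smart" and "a truly wise man".
left = [[0.9, 0.1], [0.2, 0.4], [0.7, 0.8]]
right = [[0.1, 0.1], [0.5, 0.6], [0.7, 0.9], [0.8, 0.2]]

h_left = encode(left, w_x, w_h)    # final state h_3 of the first sentence
h_right = encode(right, w_x, w_h)  # final state h_4 of the second sentence
print(h_left, h_right)
```

Because the two encoders share their weights, sentences of different lengths are mapped into the same vector space, which is what makes the final states comparable.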

In addition to the abovementioned models, modified RNN models such as bidirectional LSTM, multidimensional LSTM [24], gated recurrent unit [25], and recursive tree structure [26] have been applied to text modeling through modifications of the existing model architecture. RNN-based models generally compute over the input and output sentences in word order. Sequential processing during model learning can reflect the syntax and semantics of the given sentences, but it is computationally slow and difficult to parallelize, which is a limitation.

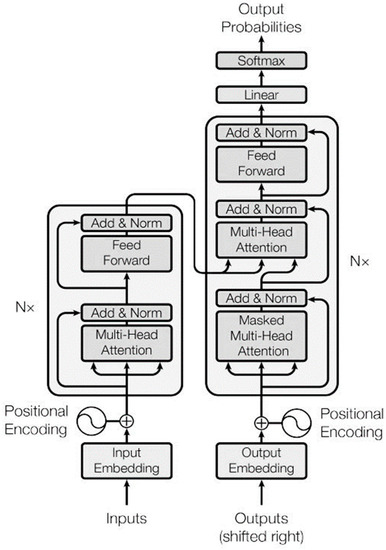

There are also structures that model dependencies regardless of the length of the input or output sentences, which improves computational efficiency. Figure 3 shows the structure of the transformer model, which is based only on attention mechanisms, without using a CNN or an RNN [27]. At every time step at which the decoder predicts an output word, the attention mechanism refers back to the entire input sentence of the encoder. The input words are not all weighted equally at this stage; instead, the focus is on the input words that are most similar to the word being predicted at that time step. The transformer model allows parallelism, and it can emphasize the values that are most closely related to the query through “attention” in the encoder and decoder.

Figure 3.

Structure of the transformer model.
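The way attention emphasizes the values most closely related to a query can be sketched with scaled dot-product attention for a single query. This is a simplified, single-head illustration in pure Python; the function names and the toy vectors are assumptions, and the multi-head networks implemented in this study are not reproduced here.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to one."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query over key/value vectors."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Hypothetical 2-dimensional encodings of three input words.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = keys
query = [1.0, 0.0]  # most similar to the first input word

weights, context = attention(query, keys, values)
print(weights, context)
```

Every input word contributes to the context vector, but the word whose key is most similar to the query receives the largest weight. Since each query is handled independently, the computation can be parallelized across positions.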

The CNN, Manhattan LSTM, and transformer models complement each other’s strengths and weaknesses. Therefore, it is essential to implement all three models to evaluate the semantic similarity of body of knowledge topics and subsequently select the model with the highest accuracy for conducting cross-referencing among the topics.

3. Methods

3.1. Experimental Procedure

AI is an artificial implementation of human intelligence, partial or full, based on the broad concept of “smart” computers. Intelligent systems (IS) operate in the same manner as AI, but they do not feature deep neural networks that support self-learning [28]. In the field of CS, the IS knowledge area contains the AI-related contents. In this study, the IS knowledge area was therefore used to derive the body of knowledge of AI. The procedure used to derive cross-references among the topics presented in the IS knowledge area of the CS field was as follows.

- Step 1. From the CS2013 body of knowledge, the topics of IS and other areas were classified.

- Step 2. To calculate the similarity among topics, three different sentence models (CNN, MaLSTM, and transformer) based on machine learning were implemented. This system was developed using Python 3.6 and executed on Linux 16.04.

- Step 3. The models were trained using data from Stanford Natural Language Inference (SNLI) corpus and Quora Question Pairs (QQP).

- Step 4. The accuracy was calculated after training, and the sentence model with the highest accuracy was selected.

- Step 5. Semantic similarities between classified IS topics and topics from other areas were calculated.

- Step 6. Through various similarity simulations between the two topics, the similarity levels were divided into “0.95 < similarity” and “0.90 < similarity ≤ 0.95.”

- Step 7. A search engine was used to examine the semantic validity of the topics with a similarity of “0.95 < similarity.”
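Steps 5 and 6 of the procedure can be sketched as a loop over all topic pairs followed by bucketing into the two similarity levels. The function `cross_reference`, the dictionary keys, the placeholder topic names, and the scores are hypothetical; `score_fn` stands in for whichever trained sentence model is selected in Step 4.

```python
from itertools import product

def cross_reference(is_topics, other_topics, score_fn):
    """Score every (IS topic, other topic) pair and bucket it by similarity level."""
    levels = {"above_0.95": [], "0.90_to_0.95": []}
    for is_topic, other in product(is_topics, other_topics):
        score = score_fn(is_topic, other)
        if score > 0.95:
            levels["above_0.95"].append((is_topic, other, score))
        elif score > 0.90:  # 0.90 < similarity <= 0.95
            levels["0.90_to_0.95"].append((is_topic, other, score))
    return levels

# Hypothetical scores used only to exercise the bucketing logic.
toy_scores = {
    ("IS topic A", "Other topic X"): 0.97,
    ("IS topic A", "Other topic Y"): 0.92,
    ("IS topic B", "Other topic X"): 0.40,
    ("IS topic B", "Other topic Y"): 0.95,
}

def lookup(topic_a, topic_b):
    return toy_scores[(topic_a, topic_b)]

levels = cross_reference(
    ["IS topic A", "IS topic B"], ["Other topic X", "Other topic Y"], lookup
)
print(levels)
```

A score of exactly 0.95 falls into the lower level, matching the “0.90 < similarity ≤ 0.95” definition in Step 6.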

3.2. Subject of Analysis

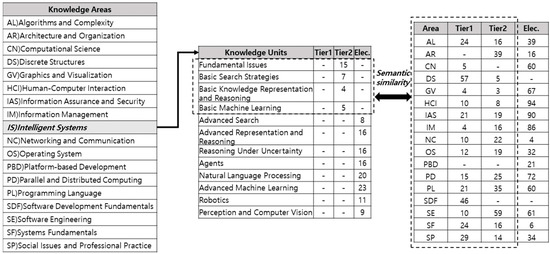

“Computer Science Curricula CS2013,” which is a standard body of knowledge of the CS field presented by ACM and the IEEE Computer Society, was selected as the subject of analysis. The CS2013 body of knowledge comprises eighteen KAs, and each KA contains approximately ten KUs, as shown in Figure 4. The topics of each KU fall into three tiers: tier 1 covers the basic introductory concepts, tier 2 covers the undergraduate-major concepts, and the elective tier covers the contents that are more advanced than the undergraduate-major contents.

Figure 4.

Computer Science Curricula CS2013 body of knowledge.

To derive cross-references among the various topics in the body of knowledge of AI, this study was conducted based on the topics of IS, and each topic was classified as follows. First, the CS2013 body of knowledge was divided into knowledge areas, knowledge units, and topics. Second, CS2013 classifies the topics of each KU into Tier 1, Tier 2, and elective for all eighteen KAs. Third, IS consists of four KUs: fundamental issues, basic search strategies, basic knowledge representation and reasoning, and basic machine learning. All four KUs are at the Tier 2 level and contain 31 topics in total. Fourth, the 17 areas excluding IS consist of 323 Tier 1 and 327 Tier 2 topics. Thus, the similarities between the 31 topics of IS and the 650 topics of the other 17 KAs were analyzed based on levels.

3.3. Sentence Model Performance

CNN, Manhattan LSTM, and multi-head attention networks (a transformer model) were implemented, and their performances were compared. SNLI and QQP were used as corpora for model training. For each corpus, 90% of the data was used as the training set, and 10% was used as the testing set to verify the accuracy of the model.

- Training set: SNLI number: 330,635, QQP number: 363,861

- Testing set: SNLI number: 36,738, QQP number: 40,430
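The reported set sizes are consistent with a 90/10 split in which the training share is rounded down, as the following sketch checks. The function names, the shuffling, and the fixed seed are assumptions; the paper does not state how the pairs were ordered before splitting.

```python
import random

def split_sizes(n_pairs):
    """Return (train, test) sizes for a 90/10 split, flooring the training share."""
    n_train = n_pairs * 9 // 10
    return n_train, n_pairs - n_train

def split_pairs(pairs, seed=0):
    """Shuffle sentence pairs reproducibly and cut them 90/10."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_train, _ = split_sizes(len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]

# Totals obtained by adding the reported training and testing counts.
print(split_sizes(330_635 + 36_738))  # SNLI
print(split_sizes(363_861 + 40_430))  # QQP
```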

The content and accuracy of the models are detailed in Table 1.

Table 1.

Content and accuracy of sentence models.

In QQP, CNN had an accuracy of 83.8%, which was superior to Manhattan LSTM’s 82.8% accuracy. Conversely, Manhattan LSTM had an accuracy of 80.6% in SNLI, which was the highest among the three models. Multi-head attention networks showed better accuracy than CNN in SNLI, but their mean accuracy was the lowest of the three. Manhattan LSTM showed the highest accuracy when the accuracies on both corpora were averaged, and it was therefore used in this study.

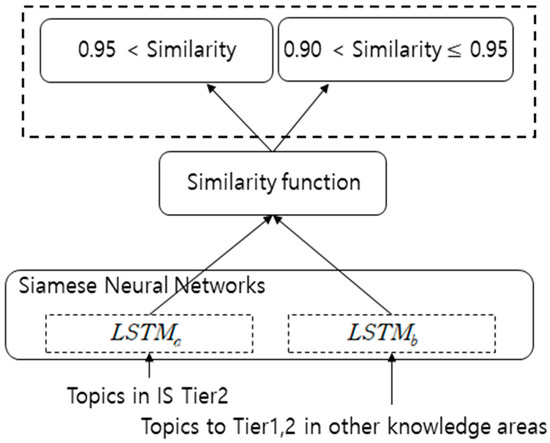

3.4. Setting the Similarity of the Sentence Model

This study was performed based on the Manhattan LSTM, which had the highest average accuracy of the three models used. The model was modified so that the semantic similarity computed from the final hidden-state vectors of the two sentences is output according to the threshold values, as shown in Figure 5.

Figure 5.

Structure of the sentence model used.

The threshold value was set at two levels through various experiments. In other words, the similarity between topics is either greater than 0.95, or greater than 0.90 but less than or equal to 0.95.

Manhattan distance was applied as a similarity function. In general, the Euclidean distance is not used in the problem of determining similarity because it causes learning to be slow and it has difficulty correcting errors owing to vanishing gradients in the early stage of learning [29]. On the other hand, the Manhattan distance can map two sentences to a value close to one if they are semantically similar and close to zero otherwise, without a separate activation function to determine the output of the neural network, in the form of $\exp(-\lVert h^{(\mathrm{left})} - h^{(\mathrm{right})} \rVert_1)$, where $h^{(\mathrm{left})}$ and $h^{(\mathrm{right})}$ are the final hidden states of the two LSTMs. The cost function is the mean squared error (MSE) between this value and the label.
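A minimal sketch of such a similarity function is given below, assuming the exponential of the negative Manhattan (L1) distance between the two final hidden states, which is the form commonly used for the Manhattan LSTM. The function names and the toy vectors are hypothetical.

```python
import math

def manhattan_similarity(h_left, h_right):
    """Map the L1 distance between two final hidden states into (0, 1]."""
    l1 = sum(abs(a - b) for a, b in zip(h_left, h_right))
    return math.exp(-l1)

def mse(predictions, labels):
    """Mean squared error between predicted similarities and 0/1 labels."""
    return sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)

# Toy hidden states (hypothetical values).
same = manhattan_similarity([0.1, 0.2], [0.1, 0.2])    # identical vectors
far = manhattan_similarity([3.0, -3.0], [-3.0, 3.0])   # distant vectors
print(same, far, mse([same, far], [1, 0]))
```

Because the exponential of a non-positive number always lies between zero and one, the output can be compared directly with a 0/1 label without an additional activation function.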

4. Application Results

4.1. Results for Tier 1

Table 2 presents the results of the analysis of the 31 topics of IS and the 323 Tier 1 topics in other areas. More topic pairs were extracted at “0.90 < similarity ≤ 0.95” than at “0.95 < similarity” because the latter is the stricter, more robust criterion.

Table 2.

Tier 1: Topic pairs with high similarity between IS and topics in other areas.

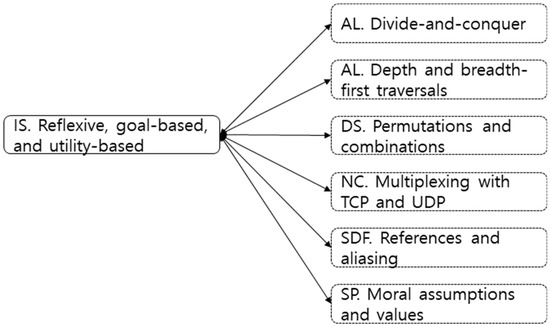

At the “0.90 < similarity ≤ 0.95” range, 74 pairs were extracted from the 57 topics of DS, out of the 1,767 (31 × 57) topic pairs formed by comparing the 31 topics of IS with the 57 topics of DS. SDF and AL had the next-highest numbers of topic pairs with high similarity, in that order. For “0.95 < similarity,” six pairs were extracted from five areas, namely, AL, DS, NC, SDF, and SP. AL had two pairs, while the other four areas had one pair each. The details are shown in Figure 6.

Figure 6.

“0.95 < similarity”: Topics with high relevance in Tier 1.
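The number of comparisons between IS and DS follows directly from pairing every IS topic with every DS topic. The sketch below uses placeholder topic labels, which are hypothetical, to confirm the count and to put the 74 extracted pairs in proportion.

```python
from itertools import product

# Placeholder labels standing in for the 31 IS topics and the 57 DS topics.
is_topics = [f"IS-{i}" for i in range(31)]
ds_topics = [f"DS-{j}" for j in range(57)]

pairs = list(product(is_topics, ds_topics))
share = 74 / len(pairs)  # fraction of pairs in the 0.90 < similarity <= 0.95 range

print(len(pairs))
print(f"{share:.1%}")
```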

The topic pairs with “0.95 < similarity” were examined in detail because this stricter threshold gives a more robust indication of similarity. In other words, it was determined that knowledge could be extracted starting with the topics most similar to IS.

We now examine “Reflexive, goal-based, and utility-based” from the perspective of AI. An agent of AI is an autonomous process that automatically performs tasks for users. It is one of the software systems with independent functions that perform tasks and typically operate in a distributed environment [30]. To perform tasks, an agent interacts with different agents through its own reasoning method using a database of non-procedural processing information called knowledge. Then, the agent continues to act based on the learning and purpose-oriented skills obtained from the experiences. This means that “Reflexive, goal-based, and utility-based” explains how to design an agent program. In this study, as shown in Figure 6, the following were included in the knowledge to be handled before learning the agent.

First, agents interact with external environments using sensors to achieve their goals in the complex and fluid real-world environments. In other words, agents can be said to be faced with a complex problem that requires considering various situations. Divide-and-conquer strategies are effective in solving complex problems. With agent designs, it is easy and simple to approach and solve several smaller sub-problems [31]. This problem-solving strategy can be applied not only to agent design but also to general problem-solving.

Second, to reach a predefined goal or solution, agents apply a systematic search method for problem-solving. “Depth- and breadth-first traversals” are search algorithms used in common search strategies [32].

Third, frames are a means of expressing knowledge in agents. Knowledge consists of small packets called frames, and the contents of a frame are specific slots with values. The topic “permutations and combinations” is a basic concept that helps with finding or identifying new knowledge [33].

Fourth, there is a need to understand the “references and aliasing” topic to recognize and reason knowledge in agents. There are physical difficulties such as memory limits in perceiving all the knowledge in a computing environment. To solve this problem, knowledge can be limited to certain categories and then perceived. Processing can then be done by referencing the address of the memory where the knowledge is stored [34].

Fifth, the main rationale for constructing multi-agent systems is that by forming a community, multiple agents can provide more added values than one agent can provide. Additionally, agents can participate in an agent society through communications, and they can acquire services owned by other agents through interactions. In other words, even if an agent does not have all the information, it can provide various services because of its interactions with other agents [35]. The concept of “multiplexing with TCP and UDP” has been applied to implement the means of information exchange and communication in the agent society.

Finally, the progress and globalization of various technologies including agents are affecting society. Ethical and moral issues that can arise from agents are indispensable factors in terms of education. “Moral assumptions and values” can be said to be a required topic for the development and use of technology [36].

The topics that have semantic similarities with IS at the “0.90 < similarity ≤ 0.95” range are shown in Table 3.

Table 3.

“0.90 < similarity ≤ 0.95”: Topics with high similarity in Tier 1.

The areas with the highest distribution of similar topics were in the order of DS (Discrete Structures), SDF (Software Development Fundamentals), and AL (Algorithms and Complexity). Topics that were extracted from the DS included topics covered by IS, such as mathematical proofs and development of the ability to understand concepts [37]. In other words, these areas include important contents such as set theory, logic, graph theory, and probability theory, which are foundations of computer science.

Students should be able to implement IS to solve problems effectively in the field of AI. This means that they should be able to read and write programs in several programming languages. Topics that were sampled from SDF were more than just programming skill topics. They included basic concepts and techniques in the software development process, such as algorithm design and analysis, proper paradigm selection, and the use of modern development and test tools.

Performance may vary in implementing IS depending on both the accuracy of the model trained with a large amount of data, and the chosen algorithm and its suitability. In other words, algorithm design is very important to improve the efficiency of the IS model design [31]. AL is said to be the basis of computer science and software engineering as well as IS design. As demonstrated by brute-force algorithms, greedy algorithms, and search algorithms, building an understanding and insight into the algorithms is one of the important factors in IS composition. As seen from the results of the study, the topics with high similarity which were sampled from this study are closely related to the IS and AI fields.

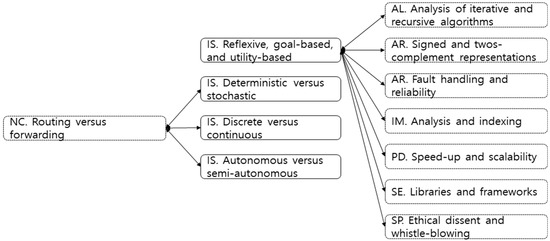

4.2. Results for Tier 2

Table 4 presents the results of analyzing the 31 topics of IS against the 327 Tier 2 topics in other areas. As in Table 2, more similar topic pairs were found at the “0.90 < similarity ≤ 0.95” range than at the “0.95 < similarity” level. At the “0.90 < similarity ≤ 0.95” range, SE had 31 topic pairs from 59 topics. The next highest area was PD (Parallel and Distributed Computing). At the “0.95 < similarity” level, ten pairs were extracted from seven areas, and NC (Networking and Communication) had the most pairs, with three. As shown in Figure 7, three topics of IS were similar to the NC topic “Routing versus forwarding.”

Table 4.

Tier 2: Topic pairs with high similarity between IS and other topic areas.

Figure 7.

“0.95 < Similarity”: Topics with high relevance in Tier 2.

Based on CS2013, Tier 2 features more advanced topics compared to Tier 1. To learn these topics, results from Section 4.1 can be considered as prerequisite knowledge or references.

As shown in Figure 7, seven topic pairs from six areas were extracted from “reflexive, goal-based, and utility-based” of the “0.95 < similarity” level. AR (Architecture and Organization) had two pairs, while the other five areas had a topic pair each.

Looking at the AL around “reflexive, goal-based, and utility-based,” Tier 1 is about problem-solving and graph-based querying (see Figure 6). On the other hand, Tier 2 is about an approach to algorithm recursion or iteration with “analysis of iterative and recursive algorithms.” In other words, the similar topics of Tier 1 are related to theories or concepts for problem-solving, whereas Tier 2 is related to strategies for efficiently implementing agents [37].

Stability is an important factor for processing the knowledge of agents and for agents to interact with one another using processed knowledge. In terms of implementation, “signed and two’s complement representations” relates to the sign and the representable range of the variables stored in memory. Memory overflow occurs when data overrun the designated boundary of the memory space, which can lead to unexpected behavior or security vulnerabilities [38]. In addition, “fault handling and reliability” is also about ensuring stability, by handling faults of the memory system.

In addition to the abovementioned, concepts related to extending or parallelizing the information system of agents and the topics related to tools that can be utilized without having to implement everything from scratch were extracted. Agents, which are typically complex and massive systems, are developed in a collaborative design environment. “Ethical dissent and whistle-blowing,” which is an ethical and altruistic act, is about helping to improve the development of an organization and further contribute to forming a healthy community [39].

“Deterministic versus stochastic,” “discrete versus continuous,” and “autonomous versus semi-autonomous” were very similar to “routing versus forwarding” of NC. The three topics of IS were related to the characteristics of the given problems, which needed to be optimally inferred or solved by the agents. These concepts are applied in various fields in addition to AI. This means that it is an important topic for agents. However, the importance can also be applied to routing algorithms for deterministic routing and probabilistic routing [40], autonomous systems for network topology management and control [41], and the process of finding a network [42].

Table 5 presents topics with similarity to IS in Tier 2 at the “0.90 < similarity ≤ 0.95” range. Topics were extracted from 13 of 17 areas excluding IS. Of these, the SE (Software Engineering) and PD (Parallel and Distributed Computing) areas had the most topics extracted. Schedule, cost, and quality are important factors in the design, implementation, and testing phases of IS. The SE topics are applicable to software development in all areas of computing, including IS [43]. SE, along with IS, can be said to be related to the application of theory, knowledge, and practice to build general-purpose software more efficiently.

Table 5.

“0.90 < similarity ≤ 0.95”: Topics with a high similarity in Tier 2.

In terms of the efficiency of IS, understanding parallel algorithms, strategies for problem decomposition, system architectures, detailed implementation strategy, and performance analysis and tuning is important [31]. For this reason, the topics extracted from PD, such as concurrency and parallel execution, consistency of status and memory operation, and latency, had high similarities.

4.3. KU of IS vs. KU of Other Knowledge Areas

The IS area comprises four KUs whose topics are at the Tier 2 (undergraduate-major) level: “fundamental issues,” “basic search strategies,” “basic knowledge representation and reasoning,” and “basic machine learning.” KUs with high similarity to the KUs of IS are shown in Table 6. At “0.95 < similarity,” the KU “fundamental issues” was extracted. At “0.90 < similarity ≤ 0.95,” the three KUs other than “basic knowledge representation and reasoning” were extracted.

Table 6.

KU with high similarity to the KUs of IS.

Out of 18 KAs, 53 KUs from 14 KAs showed a high similarity. The areas with high similarities to IS and KU were DS, NC, and SE. “Sets, relations, and functions” of DS had the highest similarity with the 14 KUs. If the topics were limited to KUs with more than five pairs of similar areas, “fundamental issues” was related to 15 KUs: nine KUs corresponding to AL, DS, and SDF of Tier 1, and six KUs corresponding to AL, NC, PD, and SE of Tier 2. “Basic machine learning” had a high similarity with “algorithmic strategies” of AL, and with “graphs and trees” and “sets, relations, and functions” of DS from Tier 1. “Fundamental issues,” which covers the general contents of AI relative to the other KUs, had 111 pairs from Tier 1 and 84 pairs from Tier 2 with a high similarity. There were more than 40 pairs of topics on “basic machine learning,” which covers the basics of machine learning, such as supervised learning, unsupervised learning, and reinforcement learning.

4.4. Evaluation

Currently, there is no research available on the curriculum relevance of the topics. The validity of the study was evaluated using two methods because a direct comparison with previous studies was not possible. The first method was a content validation from experts. The degree of similarity among the topics for content validity verification was quantified. At the same time, “rater reliability” among experts was determined. The second method was an index term-based method using a search engine. Specifically, the index term-based method was applied in the study of variables affecting similarities between two documents or two topics. This is because if the two topics appear simultaneously in the same document referring to a specific area, they can be said to be semantically related.

4.4.1. Content Validation by Experts

This study analyzed opinions from five experts based on the topics related to “reflexive, goal-based, and utility-based” for intelligent agent design. The experts met at least three of the following four criteria: Ph.D. in AI, seven years of work experience in the field of AI, seven years of AI research experience, and five years of experience teaching AI at a university.

The content validation proceeded as follows. In the first step, 66 topics were selected as random samples from four different similarity ranges: “0.95 < similarity,” “0.95 ≥ similarity > 0.9,” “0.9 ≥ similarity > 0.8,” and “0.8 ≥ similarity > 0.7.” In the second step, experts were asked to examine the extracted topics based on their semantic relevance to “reflexive, goal-based, and utility-based” and the need for those topics. The expert ratings of the relevance and need of the topics in each range are shown in Table 7.

Table 7.

Expert review results with regard to topics.

Regarding the relevance of “reflexive, goal-based, and utility-based” to topics in the “0.95 < similarity” range, the expert response was 3.74, close to four, suggesting a high “relevance.” The necessity score of the topics for intelligent agent design was also high at 3.74.

For the topics in the “0.95 ≥ similarity > 0.9” range, the relevance score was 3.49, and the “0.9 ≥ similarity > 0.8” range received a relevance score of 3.08. The topics in the “0.8 ≥ similarity > 0.7” range received an “average” relevance score of 3.07. In other words, for the topics sampled in the study, the higher the “similarity” range, the higher the relevance assigned in the expert content validation. The “rater reliability” of the experts was high, ranging from 0.78 to 0.90. Therefore, it can be concluded that the topics sampled in the study for each range have a high semantic relevance.
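The per-range relevance scores and the “rater reliability” can be illustrated with a short sketch. The text does not state which reliability statistic was used; Cronbach's alpha with raters treated as items is assumed here purely for illustration, and the ratings below are hypothetical.

```python
from statistics import mean, variance


def range_mean(ratings):
    """Mean of all expert scores in one similarity range.

    ratings: list of rows, one row per topic, one column per expert.
    """
    return mean(score for row in ratings for score in row)


def cronbach_alpha(ratings):
    """Cronbach's alpha with raters as items (an assumed reliability measure).

    ratings: list of rows, one row per topic, one column per expert.
    Requires at least two topics, two experts, and non-constant row totals.
    """
    n_raters = len(ratings[0])
    rater_variances = [
        variance([row[j] for row in ratings]) for j in range(n_raters)
    ]
    total_variance = variance([sum(row) for row in ratings])
    return n_raters / (n_raters - 1) * (1 - sum(rater_variances) / total_variance)


# Hypothetical scores from five experts for four topics in one range.
example = [
    [4, 4, 3, 4, 4],
    [5, 4, 4, 5, 4],
    [3, 3, 2, 3, 3],
    [4, 5, 4, 4, 4],
]
print("mean relevance:", range_mean(example))
print("alpha:", round(cronbach_alpha(example), 2))
```

Other agreement measures, such as an intraclass correlation, would fit the same data layout.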

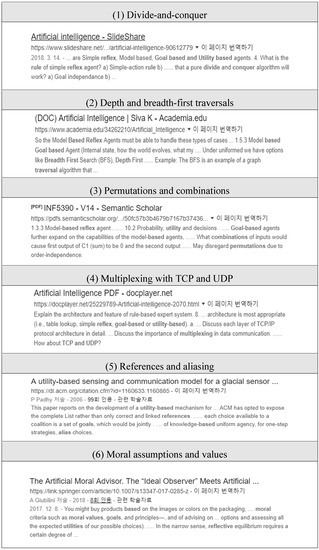

4.4.2. Validation through Index Terms

Each of the six topics having semantic similarities in “reflexive, goal-based, and utility-based” and other areas from Tier 1 of “0.95 < similarity” was entered into a search engine and the results displayed on the first page were checked (see Figure 6). The search engine results are shown in Figure 8.

Figure 8.

Topic search results from search engine.

As shown in Figure 8, for (1), (2), (3), (4), and (6), words included in the two topics appeared together in documents related to AI. This implies that the two topics are either AI-related or semantically close. For case (5), “utility-based” and “alias, references” were found together in a document on utility-based mechanisms for managing communications in collaborative multi-sensor networks. From this, it can be interpreted that the terms appeared simultaneously in one document because communications using sensors are directly linked to the communications among agents.
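The co-occurrence check behind this validation can be sketched locally, without calling a search engine. The example below is a simplified stand-in under stated assumptions: the tokenization rule, the small stop-word list, the `min_hits` parameter, and the sample document text are all hypothetical and are not taken from the study.

```python
import re

# Assumed minimal stop-word list so that words such as "and" do not count as index terms.
STOP_WORDS = {"a", "an", "and", "for", "in", "of", "the", "to"}


def index_terms(text):
    """Extract lowercase index terms, keeping hyphenated words such as 'goal-based' whole."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return {word for word in words if word not in STOP_WORDS}


def cooccur(topic_a, topic_b, document, min_hits=1):
    """Return True if at least min_hits terms of each topic appear in the document."""
    document_terms = index_terms(document)
    hits_a = index_terms(topic_a) & document_terms
    hits_b = index_terms(topic_b) & document_terms
    return len(hits_a) >= min_hits and len(hits_b) >= min_hits


# Hypothetical document snippet standing in for one search result.
snippet = "A utility-based agent can explore the state space with breadth-first search."
print(cooccur(
    "reflexive, goal-based, and utility-based",
    "depth and breadth-first traversals",
    snippet,
))
```

Matching on whole index terms is deliberately strict; a stemmer or phrase matching could be substituted if partial matches should count.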

5. Conclusions

The body of knowledge in a specific field of study reflects the linkages and continuity of knowledge. Identifying the inter-topic relationships among bodies of knowledge will help in constructing a hierarchy or a sequence of knowledge areas.

This study examined the interrelationships among the topics based on the understanding of various bodies of knowledge to provide suggestions for topic compositions to construct an academic body of AI knowledge. In constructing new topics through the analysis of the body of knowledge, sentence modeling, which is a data science method, was used to minimize noise in the semantic interpretation of the topics containing multiple words. Further, unprocessed original topics were applied. Regarding extracted contents, the validity was verified through expert validation tests and semantic similarity tests based on index terms.

Based on the obtained results, there were several topics with high similarity related to the agent design in the areas of “algorithm complexity,” “discrete structures,” “fundamentals of software development,” “parallel and distributed computing,” and “software engineering.” These topics included algorithm design strategy, “divide-and-conquer,” “data retrieval method,” “depth and breadth-first traversals,” and a theoretical foundation in the CS field, “permutations and combinations.” The top three units with high similarity topic distribution based on KU were “sets, relations, and functions” and “graphs and trees” of DS, and “algorithmic strategies” of AL. These are the basic theories and concepts applied throughout the CS field, including the agent design.

One limitation of this study is the sparsity of data on the core topics used in education. A large amount of data is needed to extract stable values through machine learning, but documents on curricula or core educational topics are not sufficient as learning data. Furthermore, little research has been conducted on how to justify results obtained through learning models. Consequently, identifying how the research results can be used in education is time-consuming. To reflect emerging knowledge in education, the composition of knowledge or modeling of topics is essential. To achieve this, it is necessary to establish various methodologies to overcome limitations in the availability of extracted knowledge.

The field of AI deals with expert knowledge built on basic knowledge. The composition of the AI body of knowledge is crucial for preparing an academic foundation and deciding which topics to cover in the future. Therefore, the sentence modeling method presented in this study will contribute to the construction of various levels of knowledge hierarchies in the body of knowledge of AI and to a further understanding of the knowledge of the topics.

Author Contributions

Conceptualization, H.W. and J.K.; methodology, H.W.; software, H.W.; validation, H.W. and J.K.; writing—original draft preparation, H.W. and J.K.; writing—review and editing, H.W. and J.K.; visualization, H.W.; project administration, W.L.; funding acquisition, W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2016R1A2B4014471).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Whitehouse Executive Order on AI. Available online: https://www.whitehouse.gov/ai/executive-order-ai/ (accessed on 2 March 2020).

- Cabinet Office, Government of Japan Summit on Artificial Intelligence in Government. Artificial intelligence technology strategy meeting. Available online: https://www8.cao.go.jp/cstp/tyousakai/jinkochino/index.html (accessed on 2 March 2020).

- Ministry of Science and ICT I-Korea 4.0 Artificial Intelligence (AI) R&D Strategy for Realization; Ministry of Science and ICT I: Korea, 2018.

- Carnegie Mellon University (CMU) B.S. in Artificial Intelligence; Carnegie Mellon University (CMU): Pittsburgh, PA, USA, 2020.

- MIT Artificial Intelligence: Implications for Business Strategy (self-paced online). Available online: https://executive.mit.edu/openenrollment/program/artificial-intelligence-implications-for-business-strategy-self-paced-online/ (accessed on 2 March 2020).

- Ministry of Education, PRC Ministry of Education of People’s Republic of China official Website. Available online: http://www.moe.gov.cn/srcsite/A16/s7062/201804/t20180410_332722.html (accessed on 2 March 2020).

- State Council, PRC Notice of the State Council on Printing and Distributing a New Generation of AI Plan. Available online: http://www.gov.cn/zhengce/content/2017-07/20/content_5211996.html (accessed on 2 March 2020).

- CBSE Central Board of Secondary Education. Available online: http://cbseacademic.nic.in/ai.html (accessed on 2 March 2020).

- Cheng, J.; Huang, R.; Jin, Q.; Ma, J.; Pan, Y. An Undergraduate Curriculum Model for Intelligence Science and Technology. In Proceedings of the 2018 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Guangzhou, China, 8–12 October 2018; pp. 234–239. [Google Scholar] [CrossRef]

- Hearst, M. Improving Instruction of Introductory AI; Tech. Report FS-94-05; AAAI: Menlo Park, CA, USA, 1995. [Google Scholar]

- Tungare, M.; Yu, X.; Cameron, W.; Teng, G.; Perez-Quinones, M.A.; Cassel, L.; Fan, W.; Fox, E.A. Towards a syllabus repository for computer science courses. In Proceedings of the 38th SIGCSE Technical Symposium on Computer Science Education, Covington, KY, USA, 7–11 March 2007; pp. 55–59. [Google Scholar]

- Dai, Y.; Asano, Y.; Yoshikawa, M. Course Content Analysis: An initiative step toward learning object recommendation systems for MOOC learners. In Proceedings of the 9th International Conference on Educational Data Mining, Raleigh, NC, USA, 29 June–2 July 2016; pp. 347–352. [Google Scholar]

- Ida, M. Textual information and correspondence analysis in curriculum analysis. In Proceedings of the 2009 IEEE International Conference on Fuzzy Systems, Jeju Island, Korea, 20–24 August 2009; pp. 20–24. [Google Scholar]

- Sekiya, T. Mapping analysis of CS2013 by supervised LDA and isomap. In Proceedings of the Teaching Assessment and Learning (TALE) 2014 International Conference, Wellington, New Zealand, 8–10 December 2014; pp. 33–40. [Google Scholar]

- Matsuda, Y.; Sekiya, T.; Yamaguchi, K. Curriculum analysis of computer science departments by simplified, supervised LDA. J. Inf. Process. 2018, 26, 497–508. [Google Scholar] [CrossRef]

- Jang, Y.; Kim, H. Reliable classification of FAQs with spelling errors using an encoder-decoder neural network in Korean. Appl. Sci. 2019, 9, 4758. [Google Scholar] [CrossRef]

- Yin, X.; Zhang, W.; Zhu, W.; Liu, S.; Yao, T. Improving sentence representations via component focusing. Appl. Sci. 2020, 10, 958. [Google Scholar] [CrossRef]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Yoon, K. Convolutional neural networks for sentence classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751. [Google Scholar]

- Shen, Y.; He, X.; Gao, J.; Deng, L.; Mesnil, G. Learning semantic representations using convolutional neural networks for web search. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Korea, 7–11 April 2014. [Google Scholar]

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A convolutional neural network for modelling sentences. arXiv 2014, arXiv:1404.2188. [Google Scholar]

- Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuglu, K.; Kuksa, P. Natural language processing (almost) from scratch. J. Mach. Learn. Res. 2011, 12, 2493–2537. [Google Scholar]

- Mueller, J.; Thyagarajan, A. Siamese recurrent architectures for learning sentence similarity. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence (AAAI-16), Phoenix, AZ, USA, 12–17 February 2016; pp. 2789–2792. [Google Scholar]

- Graves, A. Supervised Sequence Labelling. In Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Cho, K.; Merrienboer, B.; Gulcehre, C.; Bahdanau, D.; Schwenk, F.B.H.; Bengio, Y. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078. [Google Scholar]

- Socher, R. Recursive Deep Learning for Natural Language Processing and Computer Vision. Ph.D. Thesis, Stanford University: Stanford, CA, USA, 2014. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1–15. [Google Scholar]

- Langley, P.; Laird, J.E. Artificial Intelligence and Intelligent Systems; American Association for Artificial Intelligence: Menlo Park, CA, USA, 2013. [Google Scholar]

- Aggarwal, C.C.; Hinneburg, A.; Keim, D.A. On the surprising behavior of distance metrics in high dimensional space. In Database Theory—ICDT 2001. ICDT 2001. Lecture Notes in Computer Science; Bussche, J.V., Ed.; Springer: Berlin, Heidelberg, 2001; Volume 1973. [Google Scholar]

- Pendlebury, J.; Humphrys, M.; Walshe, R. An experimental system for real-time interaction between humans and hybrid AI agents. In Proceedings of the 6th IEEE International Conference Intelligent Systems, Sofia, Bulgaria, 6–8 September 2012; pp. 121–129. [Google Scholar]

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson Education: London, UK, 2016. [Google Scholar]

- Zaheer, K. Artificial Intelligence Search Algorithms in Travel Planning; Malardalen University: Västerås, Sweden, 2006. [Google Scholar]

- Srinivasan, S.; Kumar, D.; Jaglan, V. Agents and their knowledge representations. Ubiquitous Comput. Commun. J. 2010, 5, 14–23. [Google Scholar]

- Niederberger, C.; Gross, M.H. Towards a Game Agent; Institute of Visual Computing, ETH Zurich: Zurich, Sweden, 2002. [Google Scholar]

- Ilsoon, S.; Buyeon, J.; JangHyung, J. Changes in E-Commerce and Economic Impact due to the Development of Agent Technology. Inf. Commun. Policy Res. Rep. 2001. Available online: https://www.nkis.re.kr:4445/subject_view1.do?otpId=KISDI00017852&otpSeq=0 (accessed on 2 March 2020).

- Chowdhury, M. Emphasizing morals, values, ethics, and character education in science education and science teaching. Malays. Online J. Educ. Sci. 2016, 4, 1–16. [Google Scholar]

- Poole, D.L.; Mackworth, A.K. Artificial Intelligence Foundations of Computational Agents, 2nd ed.; Cambridge University Press: Cambridge, UK, 2017. [Google Scholar]

- Cowan, C.; Wagle, P.; Pu, C.; Beattie, S.; Walpole, J. Buffer overflows: Attacks and defenses for the vulnerability of the decade. In Proceedings of the DARPA Information Survivability Conference and Exposition, Hilton Head, SC, USA, 25–27 January 2000; pp. 119–129. [Google Scholar]

- Banisar, D. Whistleblowing: International Standards and Developments, Corruption and Transparency: Debating the Frontiers between State, Market. and Society; World Bank-Institute for Social Research: Washington, DC, USA, 2011. [Google Scholar]

- Cai, Z.; Wang, C.; Cheng, S.; Wang, H.; Gao, H. Wireless algorithms, systems, and applications. In Proceedings of the 9th International Conference, WASA 2014, Harbin, China, 23–25 June 2014. [Google Scholar]

- Goralski, W. The Illustrated Network How TCP/IP Works in a Modern Network, 2nd ed.; Morgan Kaufmann: Burlington, MA, USA, 2017. [Google Scholar]

- Bose, P.; Carufel, J.L.; Durocher, S.; Langerman, S.; Munro, I. Searching and routing in discrete and continuous domains. In The Casa Matemática Oaxaca-BIRS Workshop; Banff International Research Station: Banff, AB, Canada, 2015. [Google Scholar]

- Lowry, M.R.; McCartney, R.D. Automating Software Design; MIT Press: Cambridge, MA, USA, 1991. [Google Scholar]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).