Privacy Relevance and Disclosure Intention in Mobile Apps: The Mediating and Moderating Roles of Privacy Calculus and Temporal Distance

Abstract

1. Introduction

2. Literature Review and Research Hypotheses

2.1. Privacy Calculus Theory (PCT)

2.2. Psychological Distance Theory (PDT)

2.3. Elaboration Likelihood Model (ELM)

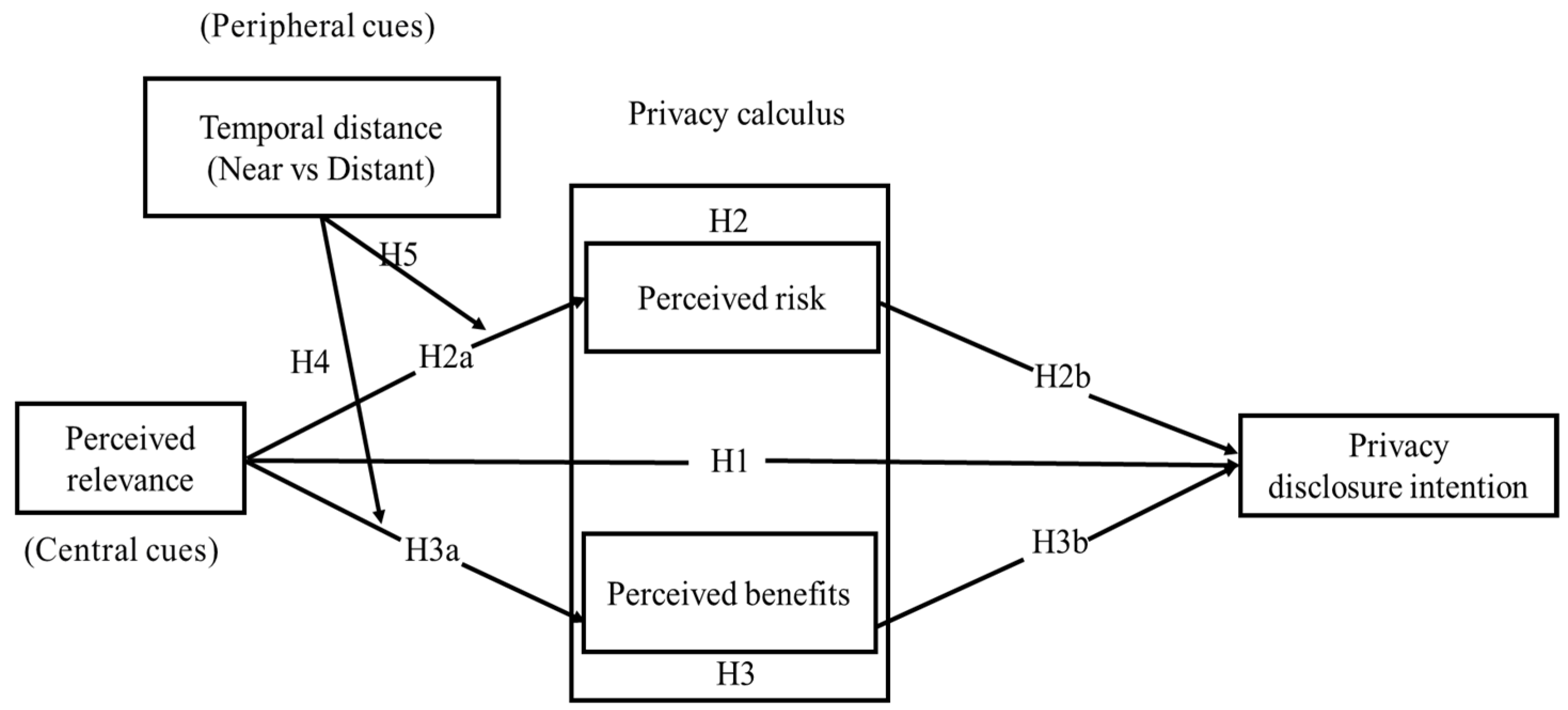

2.4. Research Hypotheses

2.4.1. Perceived Relevance and Privacy Disclosure Intention

2.4.2. The Mediating Role of Perceived Risk and Perceived Benefits

2.4.3. The Moderating Effect of Temporal Distance

3. Method

3.1. Experimental Stimulus

3.2. Experimental Procedures

3.3. Data Screening

3.3.1. Descriptive Analysis

3.3.2. Common Method Bias and Non-Response Bias

3.3.3. Measurement Model

4. Results

4.1. Manipulation Check

4.2. Preliminary Analyses

4.3. Hypothesis Testing

4.3.1. Direct and Indirect Effects

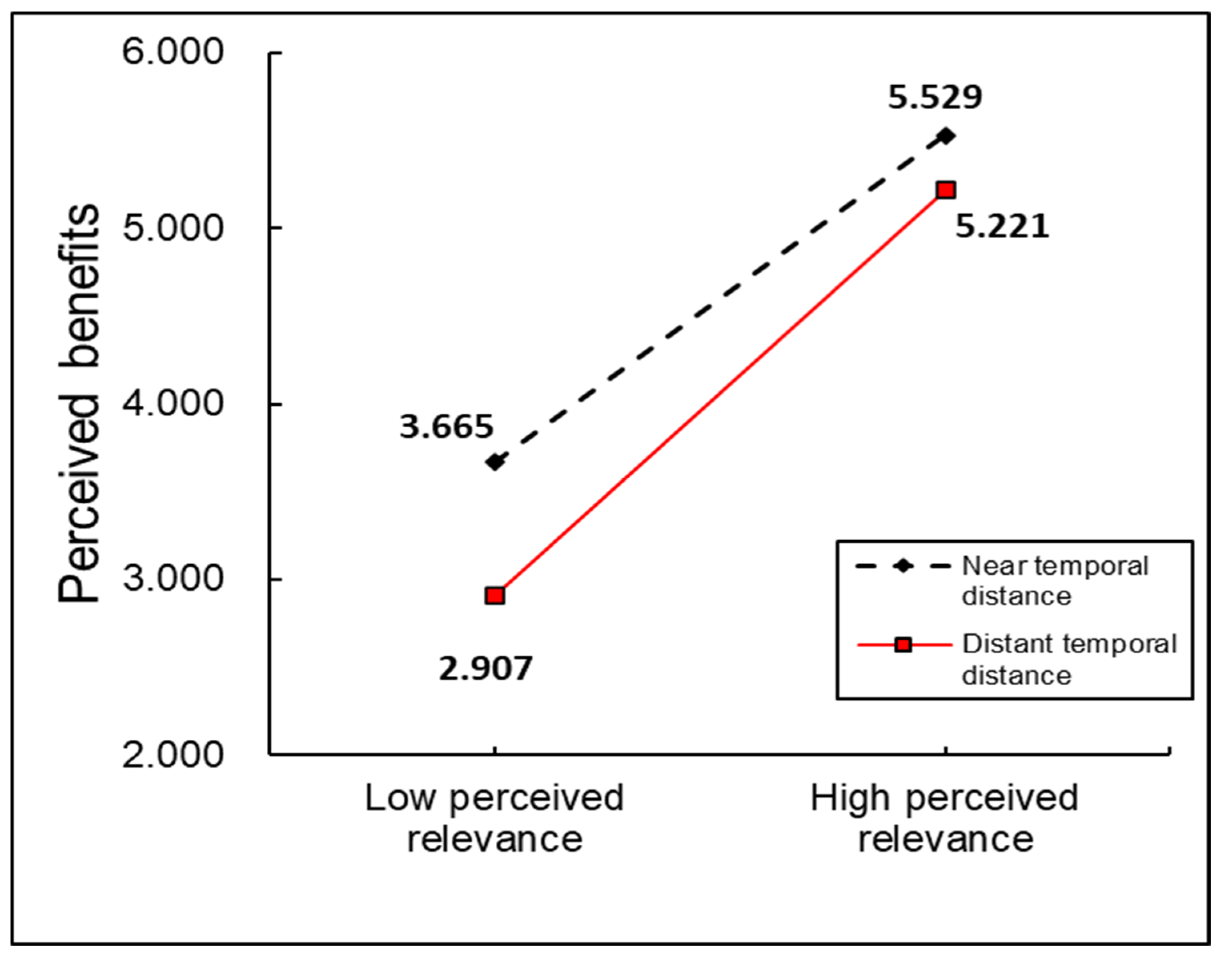

4.3.2. Testing of the Moderated Mediation Model

5. Discussions and Implications

5.1. Summary of Key Findings

5.2. Theoretical Implications

5.3. Practical Implications

6. Research Limitations and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Variable | Questions | References |
|---|---|---|
| Privacy Disclosure Intention (PDI) | Q1: I am willing to authorize or provide access to my geolocation (or list of applications) | (Li et al., 2010; Liu et al., 2022) |
| | Q2: I do not perceive any concerns regarding authorizing this application to access my geolocation (or list of applications) | |
| | Q3: I regard it as reasonable and appropriate to authorize or provide access to my geolocation (or list of applications) | |
| Perceived Risk (PR) | Q4: I perceive a high level of risk in authorizing this application to access my geolocation (or list of applications) | (Zhou, 2011) |
| | Q5: I would remain highly vigilant if this application requests access to my geolocation (or list of applications) | |
| | Q6: I am concerned that authorizing or providing this application with access to my geolocation (or list of applications) could lead to improper use | |
| | Q7: I am worried that authorizing or providing this application with access to my geolocation (or list of applications) may result in potential losses | |
| Perceived Benefits (PB) | Q8: Providing my geolocation (or list of applications) to this application could bring certain benefits | (Sun et al., 2014; T. Wang et al., 2016) |
| | Q9: Sharing geolocation information (or list of applications) with this application could enhance my shopping experience | |
| | Q10: Authorizing this application to access my geolocation (or list of applications) could enable me to enjoy better services | |
| Perceived Relevance (PRE) | Q11: The request for geolocation (or list of applications) by this online shopping app is directly related to the shopping services it provides | (Hajli & Lin, 2016) |
| | Q12: The online shopping app’s request for geolocation (or list of applications) is reasonable, as it facilitates the provision of shopping services | |

References

- Acquisti, A. (2004, May 17–20). Privacy in electronic commerce and the economics of immediate gratification. 5th ACM Conference on Electronic Commerce, New York, NY, USA.

- Acquisti, A., & Grossklags, J. (2003, May 29–30). Losses, gains, and hyperbolic discounting: An experimental approach to information security attitudes and behavior. 2nd Annual Workshop on Economics and Information Security-WEIS (Vol. 3, pp. 1–27), College Park, MD, USA.

- Atzmüller, C., & Steiner, P. M. (2010). Experimental vignette studies in survey research. Methodology, 6, 128–138.

- Awad, N. F., & Krishnan, M. S. (2006). The personalization privacy paradox: An empirical evaluation of information transparency and the willingness to be profiled online for personalization. MIS Quarterly, 30, 13–28.

- Bahreini, A. F., Cenfetelli, R., & Cavusoglu, H. (2022). The role of heuristics in information security decision making. HICSS.

- Bandara, R. J., Fernando, M., & Akter, S. (2018, January 3–6). Is the privacy paradox a matter of psychological distance? An exploratory study of the privacy paradox from a construal level theory perspective. 51st Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA.

- Bandara, R. J., Fernando, M., & Akter, S. (2020). Explicating the privacy paradox: A qualitative inquiry of online shopping consumers. Journal of Retailing and Consumer Services, 52, 101947.

- Bandara, R. J., Fernando, M., & Akter, S. (2021). Construing online consumers’ information privacy decisions: The impact of psychological distance. Information & Management, 58(7), 103497.

- Bansal, G., Zahedi, F. M., & Gefen, D. (2015). The role of privacy assurance mechanisms in building trust and the moderating role of privacy concern. European Journal of Information Systems, 24(6), 624–644.

- Barth, S., & De Jong, M. D. (2017). The privacy paradox–Investigating discrepancies between expressed privacy concerns and actual online behavior–A systematic literature review. Telematics and Informatics, 34(7), 1038–1058.

- Bongard-Blanchy, K., Sterckx, J.-L., Rossi, A., Distler, V., Rivas, S., & Koenig, V. (2022, June 6–10). An (un)necessary evil - users’ (un)certainty about smartphone app permissions and implications for privacy engineering. 2022 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Genoa, Italy.

- Bongard-Blanchy, K., Sterckx, J.-L., Rossi, A., Sergeeva, A., Koenig, V., Rivas, S., & Distler, V. (2023, October 16–17). Analysing the influence of loss-gain framing on data disclosure behaviour: A study on the use case of app permission requests. 2023 European Symposium on Usable Security, Copenhagen, Denmark.

- Burghoorn, F., Scheres, A., Monterosso, J., Guo, M., Luo, S., Roelofs, K., & Figner, B. (2024). Pavlovian impatience: The anticipation of immediate rewards increases approach behaviour. Cognitive, Affective, & Behavioral Neuroscience, 1–19.

- Chen, H., & He, G. (2014). The effect of psychological distance on intertemporal choice and risky choice. Acta Psychologica Sinica, 46(5), 677–690.

- Chen, R. (2013). Living a private life in public social networks: An exploration of member self-disclosure. Decision Support Systems, 55(3), 661–668.

- Cheng, X., Hou, T., & Mou, J. (2021). Investigating perceived risks and benefits of information privacy disclosure in IT-enabled ride-sharing. Information & Management, 58(6), 103450.

- Cheung, C., Lee, Z. W., & Chan, T. K. (2015). Self-disclosure in social networking sites: The role of perceived cost, perceived benefits and social influence. Internet Research, 25(2), 279–299.

- Chin, W. W., Thatcher, J. B., & Wright, R. T. (2012). Assessing common method bias: Problems with the ULMC technique. MIS Quarterly, 36, 1003–1019.

- Culnan, M. J., & Armstrong, P. K. (1999). Information privacy concerns, procedural fairness, and impersonal trust: An empirical investigation. Organization Science, 10(1), 104–115.

- Dienlin, T. (2023). Privacy calculus: Theory, studies, and new perspectives. In The Routledge handbook of privacy and social media (pp. 70–79). Routledge.

- Dinev, T., & Hart, P. (2006). An extended privacy calculus model for e-commerce transactions. Information Systems Research, 17(1), 61–80.

- Donaldson, T., & Dunfee, T. W. (1994). Toward a unified conception of business ethics: Integrative social contracts theory. Academy of Management Review, 19(2), 252–284.

- Eyal, T., Sagristano, M. D., Trope, Y., Liberman, N., & Chaiken, S. (2009). When values matter: Expressing values in behavioral intentions for the near vs. distant future. Journal of Experimental Social Psychology, 45(1), 35–43.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

- Hair, J. F., Jr., Hult, G. T. M., Ringle, C. M., Sarstedt, M., Danks, N. P., & Ray, S. (2021). Partial least squares structural equation modeling (PLS-SEM) using R: A workbook. Springer Nature.

- Hajli, N., & Lin, X. (2016). Exploring the security of information sharing on social networking sites: The role of perceived control of information. Journal of Business Ethics, 133, 111–123.

- Hallam, C., & Zanella, G. (2017). Online self-disclosure: The privacy paradox explained as a temporally discounted balance between concerns and rewards. Computers in Human Behavior, 68, 217–227.

- Harborth, D., & Pape, S. (2020). How privacy concerns, trust and risk beliefs, and privacy literacy influence users’ intentions to use privacy-enhancing technologies: The case of Tor. ACM SIGMIS Database: The DATABASE for Advances in Information Systems, 51(1), 51–69.

- Hassandoust, F., Akhlaghpour, S., & Johnston, A. C. (2021). Individuals’ privacy concerns and adoption of contact tracing mobile applications in a pandemic: A situational privacy calculus perspective. Journal of the American Medical Informatics Association, 28(3), 463–471.

- Hayes, A. F. (2017). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. Guilford Publications.

- Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43, 115–135.

- Hui, K.-L., Teo, H. H., & Lee, S.-Y. T. (2007). The value of privacy assurance: An exploratory field experiment. MIS Quarterly, 31, 19–33.

- Ioannou, A., Tussyadiah, I., & Marshan, A. (2021). Dispositional mindfulness as an antecedent of privacy concerns: A protection motivation theory perspective. Psychology & Marketing, 38(10), 1766–1778.

- Jabbar, A., Geebren, A., Hussain, Z., Dani, S., & Ul-Durar, S. (2023). Investigating individual privacy within CBDC: A privacy calculus perspective. Research in International Business and Finance, 64, 101826.

- Jiang, J., & Dai, J. (2021). Time and risk perceptions mediate the causal impact of objective delay on delay discounting: An experimental examination of the implicit-risk hypothesis. Psychonomic Bulletin & Review, 28(4), 1399–1412.

- Johnson, K. L., Bixter, M. T., & Luhmann, C. C. (2020). Delay discounting and risky choice: Meta-analytic evidence regarding single-process theories. Judgment and Decision Making, 15(3), 381–400.

- Johnson-Laird, P. N. (1983). Mental models: Towards a cognitive science of language, inference, and consciousness. Harvard University Press.

- Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263–292.

- Karataş, M., & Gürhan-Canli, Z. (2020). When consumers prefer bundles with noncomplementary items to bundles with complementary items: The role of mindset abstraction. Journal of Consumer Psychology, 30(1), 24–39.

- Knijnenburg, B. P., & Bulgurcu, B. (2023). Designing alternative form-autocompletion tools to enhance privacy decision-making and prevent unintended disclosure. ACM Transactions on Computer-Human Interaction, 30(6), 1–42.

- Kostelic, K. (2021). Temporal and spatial perception in purchase choice. Eurasian Journal of Business Management, 9(2), 100–122.

- Krafft, M., Arden, C. M., & Verhoef, P. C. (2017). Permission marketing and privacy concerns–Why do customers (not) grant permissions? Journal of Interactive Marketing, 39(1), 39–54.

- Leom, M. D., Deegan, G., Martini, B., & Boland, J. (2021, January 5). Information disclosure in mobile device: Examining the influence of information relevance and recipient. 54th Hawaii International Conference on System Sciences, Kauai, HI, USA.

- Levin, I. P., Schneider, S. L., & Gaeth, G. J. (1998). All frames are not created equal: A typology and critical analysis of framing effects. Organizational Behavior and Human Decision Processes, 76(2), 149–188.

- Li, H., Sarathy, R., & Xu, H. (2010). Understanding situational online information disclosure as a privacy calculus. Journal of Computer Information Systems, 51(1), 62–71.

- Liberman, N., & Trope, Y. (1998). The role of feasibility and desirability considerations in near and distant future decisions: A test of temporal construal theory. Journal of Personality and Social Psychology, 75(1), 5–18.

- Liu, Z., Wang, X., Li, X., & Liu, J. (2022). Protecting privacy on mobile apps: A principal–agent perspective. ACM Transactions on Computer-Human Interaction (TOCHI), 29(1), 1–32.

- Loewenstein, G. (1987). Anticipation and the valuation of delayed consumption. The Economic Journal, 97(387), 666–684.

- Malheiros, M., Preibusch, S., & Sasse, M. A. (2013). “Fairly truthful”: The impact of perceived effort, fairness, relevance, and sensitivity on personal data disclosure. In Trust and trustworthy computing: 6th international conference, TRUST 2013, London, UK, 17–19 June 2013. Proceedings 6. Springer.

- Malhotra, N. K., Kim, S. S., & Agarwal, J. (2004). Internet users’ information privacy concerns (IUIPC): The construct, the scale, and a causal model. Information Systems Research, 15(4), 336–355.

- Marzilli Ericson, K. M., White, J. M., Laibson, D., & Cohen, J. D. (2015). Money earlier or later? Simple heuristics explain intertemporal choices better than delay discounting does. Psychological Science, 26(6), 826–833.

- Maseeh, H. I., Jebarajakirthy, C., Pentecost, R., Arli, D., Weaven, S., & Ashaduzzaman, M. (2021). Privacy concerns in e-commerce: A multilevel meta-analysis. Psychology & Marketing, 38(10), 1779–1798.

- Momen, N., Hatamian, M., & Fritsch, L. (2019). Did app privacy improve after the GDPR? IEEE Security & Privacy, 17(6), 10–20.

- Morosan, C., & DeFranco, A. (2015). Disclosing personal information via hotel apps: A privacy calculus perspective. International Journal of Hospitality Management, 47, 120–130.

- Najjar, M. S., Dahabiyeh, L., & Algharabat, R. S. (2021). Users’ affect and satisfaction in a privacy calculus context. Online Information Review, 45(3), 577–598.

- O’Donoghue, T., & Rabin, M. (2000). The economics of immediate gratification. Journal of Behavioral Decision Making, 13(2), 233–250.

- Posey, C., Lowry, P. B., Roberts, T. L., & Ellis, T. S. (2010). Proposing the online community self-disclosure model: The case of working professionals in France and the UK who use online communities. European Journal of Information Systems, 19(2), 181–195.

- Reio Jr., T. G. (2010). The threat of common method variance bias to theory building. Human Resource Development Review, 9(4), 405–411.

- Richardson, H. A., Simmering, M. J., & Sturman, M. C. (2009). A tale of three perspectives: Examining post hoc statistical techniques for detection and correction of common method variance. Organizational Research Methods, 12(4), 762–800.

- Rosenthal, S., Wasenden, O.-C., Gronnevet, G.-A., & Ling, R. (2020). A tripartite model of trust in Facebook: Acceptance of information personalization, privacy concern, and privacy literacy. Media Psychology, 23(6), 840–864.

- Rust, R. T., Kannan, P., & Peng, N. (2002). The customer economics of internet privacy. Journal of the Academy of Marketing Science, 30(4), 455–464.

- Shaw, N., & Sergueeva, K. (2019). The non-monetary benefits of mobile commerce: Extending UTAUT2 with perceived value. International Journal of Information Management, 45, 44–55.

- Simon, H. A. (1955). A behavioral model of rational choice. The Quarterly Journal of Economics, 69, 99–118.

- Smith, H. J., Dinev, T., & Xu, H. (2011). Information privacy research: An interdisciplinary review. MIS Quarterly, 35, 989–1015.

- Smith, H. J., Milberg, S. J., & Burke, S. J. (1996). Information privacy: Measuring individuals’ concerns about organizational practices. MIS Quarterly, 20, 167–196.

- Solove, D. J. (2021). The myth of the privacy paradox. The George Washington Law Review, 89, 1.

- Sun, Y., Fang, S., & Hwang, Y. (2019). Investigating privacy and information disclosure behavior in social electronic commerce. Sustainability, 11(12), 3311.

- Sun, Y., Wang, N., & Shen, X.-L. (2014, June 24–28). Perceived benefits, privacy risks, and perceived justice in location information disclosure: A moderated mediation analysis. 18th Pacific Asia Conference on Information Systems, Chengdu, China.

- Trope, Y., & Liberman, N. (2000). Temporal construal and time-dependent changes in preference. Journal of Personality and Social Psychology, 79(6), 876.

- Trope, Y., & Liberman, N. (2003). Temporal construal. Psychological Review, 110(3), 403–421.

- Trope, Y., & Liberman, N. (2010). Construal-level theory of psychological distance. Psychological Review, 117(2), 440.

- van der Schyff, K., & Flowerday, S. (2023). The mediating role of perceived risks and benefits when self-disclosing: A study of social media trust and FoMO. Computers & Security, 126, 103071.

- Wakslak, C. J., Trope, Y., Liberman, N., & Alony, R. (2006). Seeing the forest when entry is unlikely: Probability and the mental representation of events. Journal of Experimental Psychology: General, 135(4), 641–653.

- Wang, L., Yan, J., Lin, J., & Cui, W. (2017). Let the users tell the truth: Self-disclosure intention and self-disclosure honesty in mobile social networking. International Journal of Information Management, 37(1), 1428–1440.

- Wang, T., Duong, T. D., & Chen, C. C. (2016). Intention to disclose personal information via mobile applications: A privacy calculus perspective. International Journal of Information Management, 36(4), 531–542.

- Xie, E., Teo, H.-H., & Wan, W. (2006). Volunteering personal information on the internet: Effects of reputation, privacy notices, and rewards on online consumer behavior. Marketing Letters, 17, 61–74.

- Xu, H., Luo, X. R., Carroll, J. M., & Rosson, M. B. (2011). The personalization privacy paradox: An exploratory study of decision making process for location-aware marketing. Decision Support Systems, 51(1), 42–52.

- Youn, S., & Kim, H. (2018). Temporal duration and attribution process of cause-related marketing: Moderating roles of self-construal and product involvement. International Journal of Advertising, 37(2), 217–235.

- Yu, L., Li, H., He, W., Wang, F.-K., & Jiao, S. (2020). A meta-analysis to explore privacy cognition and information disclosure of internet users. International Journal of Information Management, 51, 102015.

- Zhang, J., Wang, W., Khansa, L., & Kim, S. S. (2024). Actual private information disclosure on online social networking sites: A reflective-impulsive model. Journal of the Association for Information Systems, 25(6), 1533–1562.

- Zhang, M., Zhao, P., & Qiao, S. (2020). Smartness-induced transport inequality: Privacy concern, lacking knowledge of smartphone use and unequal access to transport information. Transport Policy, 99, 175–185.

- Zhou, T. (2011). The impact of privacy concern on user adoption of location-based services. Industrial Management & Data Systems, 111(2), 212–226.

- Zhou, T. (2017). Understanding location-based services users’ privacy concern: An elaboration likelihood model perspective. Internet Research, 27(3), 506–519.

- Zhu, Y.-Q., & Chang, J.-H. (2016). The key role of relevance in personalized advertisement: Examining its impact on perceptions of privacy invasion, self-awareness, and continuous use intentions. Computers in Human Behavior, 65, 442–447.

- Zimmer, J. C., Arsal, R. E., Al-Marzouq, M., & Grover, V. (2010). Investigating online information disclosure: Effects of information relevance, trust and risk. Information & Management, 47(2), 115–123.

| Gender | Number | Age | Number | Educational Level | Number |
|---|---|---|---|---|---|
| Male | 138 | <18 | 5 | High school and below | 9 |
| Female | 155 | 19–24 | 74 | Undergraduate | 230 |
| | | 25–30 | 68 | Master’s degree and above | 54 |
| | | 31–35 | 74 | | |
| | | 36–40 | 37 | | |
| | | >40 | 35 | | |

| Model | χ²/df | RMSEA | SRMR | GFI | AGFI | CFI | NFI |
|---|---|---|---|---|---|---|---|
| CFA model1 (Without CLF) | 1.540 | 0.043 | 0.016 | 0.961 | 0.937 | 0.993 | 0.981 |
| CFA model2 (CLF Added) | 1.556 | 0.044 | 0.014 | 0.966 | 0.935 | 0.994 | 0.984 |
| Δ (model1 − model2) | −0.016 | −0.001 | 0.002 | −0.005 | 0.002 | −0.001 | −0.003 |

| Item | Mean | SD | Factor Loading | Cronbach α | CR | AVE |
|---|---|---|---|---|---|---|
| PDI1 | 4.181 | 0.043 | 0.952 | 0.961 | 0.962 | 0.893 |
| PDI2 | 4.092 | 0.046 | 0.938 | | | |
| PDI3 | 4.256 | 0.045 | 0.944 | | | |
| PR1 | 4.317 | 0.046 | 0.911 | 0.943 | 0.943 | 0.807 |
| PR2 | 4.614 | 0.058 | 0.895 | | | |
| PR3 | 4.590 | 0.063 | 0.886 | | | |
| PR4 | 4.464 | 0.057 | 0.900 | | | |
| PB1 | 4.420 | 0.110 | 0.659 | 0.870 | 0.878 | 0.711 |
| PB2 | 4.215 | 0.052 | 0.928 | | | |
| PB3 | 4.334 | 0.056 | 0.915 | | | |
| PRE1 | 4.546 | 0.089 | 0.835 | 0.902 | 0.909 | 0.833 |
| PRE2 | 4.413 | 0.083 | 0.985 | | | |
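The CR and AVE columns in the table above can be checked against the reported factor loadings. The following is a minimal sketch, assuming the loadings are standardized and that the conventional Fornell and Larcker (1981) formulas were used; the function and variable names are illustrative and do not come from the paper.

```python
import math

# Factor loadings copied from the measurement table above
# (assumed to be standardized loadings).
loadings = {
    "PDI": [0.952, 0.938, 0.944],
    "PR": [0.911, 0.895, 0.886, 0.900],
    "PB": [0.659, 0.928, 0.915],
    "PRE": [0.835, 0.985],
}


def ave(lams):
    """Average variance extracted: mean of the squared loadings."""
    return sum(l * l for l in lams) / len(lams)


def composite_reliability(lams):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(lams)
    error = sum(1 - l * l for l in lams)
    return total ** 2 / (total ** 2 + error)


# One summary row per construct; sqrt(AVE) is the Fornell-Larcker diagonal.
summary = {
    name: {
        "AVE": round(ave(lams), 3),
        "CR": round(composite_reliability(lams), 3),
        "sqrt_AVE": round(math.sqrt(ave(lams)), 3),
    }
    for name, lams in loadings.items()
}

for name, stats in summary.items():
    print(name, stats)
```

Under these assumptions the recomputed values agree with the reported CR and AVE to within about 0.001, a gap attributable to the loadings being rounded to three decimals. The square roots of the AVE values are the quantities conventionally placed on the diagonal of a Fornell-Larcker matrix.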

| | PDI | PR | PB | PRE |
|---|---|---|---|---|
| PDI | - | | | |
| PR | 0.843 | - | | |
| PB | 0.846 | 0.796 | - | |
| PRE | 0.800 | 0.709 | 0.844 | - |

| | PDI | PR | PB | PRE |
|---|---|---|---|---|
| PDI | 0.945 | | | |
| PR | −0.801 | 0.898 | | |
| PB | 0.779 | −0.721 | 0.843 | |
| PRE | 0.754 | −0.655 | 0.766 | 0.913 |

| | Reward Timing | Mean | SD | t-Value | p |
|---|---|---|---|---|---|
| Geolocation | Immediate | 5.02 | 1.51 | 1.119 | 0.265 |
| | One month later | 4.74 | 1.66 | | |
| List of apps | Immediate | 4.22 | 1.54 | 1.496 | 0.137 |
| | One month later | 3.81 | 1.59 | | |

| | Mean | SD | PDI | PR | PB | PRE |
|---|---|---|---|---|---|---|
| PDI | 4.191 | 1.791 | 1 | | | |
| PR | 4.488 | 1.531 | −0.801 ** | 1 | | |
| PB | 4.316 | 1.410 | 0.779 ** | −0.721 ** | 1 | |
| PRE | 4.483 | 1.639 | 0.754 ** | −0.655 ** | 0.766 ** | 1 |

| Predictors | Model 1 (PR) b | Model 1 SE | Model 1 t | Model 2 (PB) b | Model 2 SE | Model 2 t | Model 3 (PDI) b | Model 3 SE | Model 3 t |
|---|---|---|---|---|---|---|---|---|---|
| Constant | 7.274 | 0.279 | 26.026 *** | 1.675 | 0.219 | 7.667 *** | 3.808 | 0.484 | 7.864 *** |
| PRE | −0.615 | 0.041 | −14.878 *** | 0.661 | 0.032 | 20.440 *** | 0.298 | 0.051 | 5.786 *** |
| Gender | −0.259 | 0.141 | −1.823 | −0.082 | 0.111 | −0.740 | 0.013 | 0.110 | 0.118 |
| Age | −0.003 | 0.050 | −0.053 | −0.072 | 0.038 | −1.851 | 0.007 | 0.044 | 0.192 |
| Educational level | 0.067 | 0.130 | 0.516 | −0.157 | 0.102 | −1.539 | −0.060 | 0.101 | −0.590 |
| PR | | | | | | | −0.513 | 0.051 | −10.023 *** |
| PB | | | | | | | 0.322 | 0.066 | 4.911 *** |
| R² | 0.661 | | | 0.771 | | | 0.869 | | |
| F-Value | 55.850 *** | | | 105.392 *** | | | 146.677 *** | | |
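The regression coefficients above imply point estimates for the two indirect paths from PRE to PDI. The sketch below assumes the standard product-of-coefficients approach to parallel mediation (Hayes, 2017), with PR and PB as the mediators (Model 1 and Model 2) and PDI as the outcome (Model 3). The variable names are illustrative, and the bootstrap confidence intervals that normally accompany such estimates are not reproduced here.

```python
# Unstandardized coefficients copied from the regression table above.
a_pr = -0.615    # PRE -> PR  (Model 1)
a_pb = 0.661     # PRE -> PB  (Model 2)
b_pr = -0.513    # PR  -> PDI (Model 3)
b_pb = 0.322     # PB  -> PDI (Model 3)
c_prime = 0.298  # PRE -> PDI, direct effect (Model 3)

# Product-of-coefficients point estimates for each mediated path.
indirect_pr = a_pr * b_pr
indirect_pb = a_pb * b_pb
total_indirect = indirect_pr + indirect_pb

# Total effect = direct effect + sum of indirect effects.
total_effect = c_prime + total_indirect

print(f"indirect via PR: {indirect_pr:.3f}")
print(f"indirect via PB: {indirect_pb:.3f}")
print(f"total indirect:  {total_indirect:.3f}")
print(f"total effect:    {total_effect:.3f}")
```

Both products are positive: higher perceived relevance is associated with lower perceived risk, which in turn is associated with higher disclosure intention, and with higher perceived benefits, which are also associated with higher disclosure intention.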

| DV | IV | b | SE | t | LLCI | ULCI | R² | F-Value |
|---|---|---|---|---|---|---|---|---|
| PDI | Constant | 5.105 | 0.435 | 11.746 *** | 4.250 | 5.961 | 0.869 | 295.839 *** |
| | PRE | 0.296 | 0.051 | 5.816 *** | 0.196 | 0.396 | | |
| | PR | −0.514 | 0.051 | −10.160 *** | −0.614 | −0.415 | | |
| | PB | 0.323 | 0.065 | 5.005 *** | 0.196 | 0.449 | | |
| PR | Constant | 4.394 | 0.097 | 45.421 *** | 4.204 | 4.584 | 0.659 | 74.004 *** |
| | PRE | −0.634 | 0.061 | −10.322 *** | −0.755 | −0.513 | | |
| | Temporal | 0.195 | 0.136 | 1.432 | −0.081 | 0.463 | | |
| | PRE × Temporal | 0.051 | 0.083 | 0.614 | −0.092 | 0.216 | | |
| PB | Constant | 4.604 | 0.072 | 63.790 *** | 4.462 | 4.746 | 0.793 | 163.291 *** |
| | PRE | 0.568 | 0.046 | 12.388 *** | 0.477 | 0.658 | | |
| | Temporal | −0.546 | 0.101 | −5.377 *** | −0.746 | −0.346 | | |
| | PRE × Temporal | 0.136 | 0.062 | 2.184 * | 0.013 | 0.258 | | |

| Values of Moderators (Temporal) | Indirect Effect | SE | LLCI | ULCI |
|---|---|---|---|---|
| 0 | 0.183 | 0.039 | 0.110 | 0.265 |
| 1 | 0.227 | 0.048 | 0.138 | 0.324 |
| Index of moderated mediation | 0.044 | 0.023 | 0.001 | 0.091 |
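The conditional indirect effects in the table above can be reconstructed from the moderated mediation coefficients. The sketch below assumes a first-stage moderated mediation in which Temporal (coded 0/1) moderates the path from PRE to PB, so that the indirect effect at moderator value W is (a1 + a3·W)·b. The symbols a1, a3, and b and the function name are illustrative labels, not the paper's notation.

```python
# Coefficients copied from the moderated mediation table.
a1 = 0.568  # PRE -> PB
a3 = 0.136  # interaction of PRE with Temporal -> PB
b = 0.323   # PB -> PDI


def conditional_indirect(w):
    """Indirect effect of PRE on PDI through PB at moderator value w."""
    return (a1 + a3 * w) * b


# The index of moderated mediation is the change in the indirect
# effect per unit change in the moderator.
index_of_moderated_mediation = a3 * b

for w in (0, 1):
    print(f"Temporal = {w}: indirect effect = {conditional_indirect(w):.3f}")
print(f"Index of moderated mediation = {index_of_moderated_mediation:.3f}")
```

Rounded to three decimals, these products give 0.183, 0.227, and 0.044, which match the point estimates in the table. The standard errors and confidence limits come from resampling and cannot be recovered from the coefficients alone.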

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, M.; Chen, M. Privacy Relevance and Disclosure Intention in Mobile Apps: The Mediating and Moderating Roles of Privacy Calculus and Temporal Distance. Behav. Sci. 2025, 15, 324. https://doi.org/10.3390/bs15030324

Chicago/Turabian Style: Chen, Ming, and Meimei Chen. 2025. "Privacy Relevance and Disclosure Intention in Mobile Apps: The Mediating and Moderating Roles of Privacy Calculus and Temporal Distance" Behavioral Sciences 15, no. 3: 324. https://doi.org/10.3390/bs15030324