A Classification Bias and an Exclusion Bias Jointly Overinflated the Estimation of Publication Biases in Bilingualism Research

Abstract

1. Introduction

2. Method

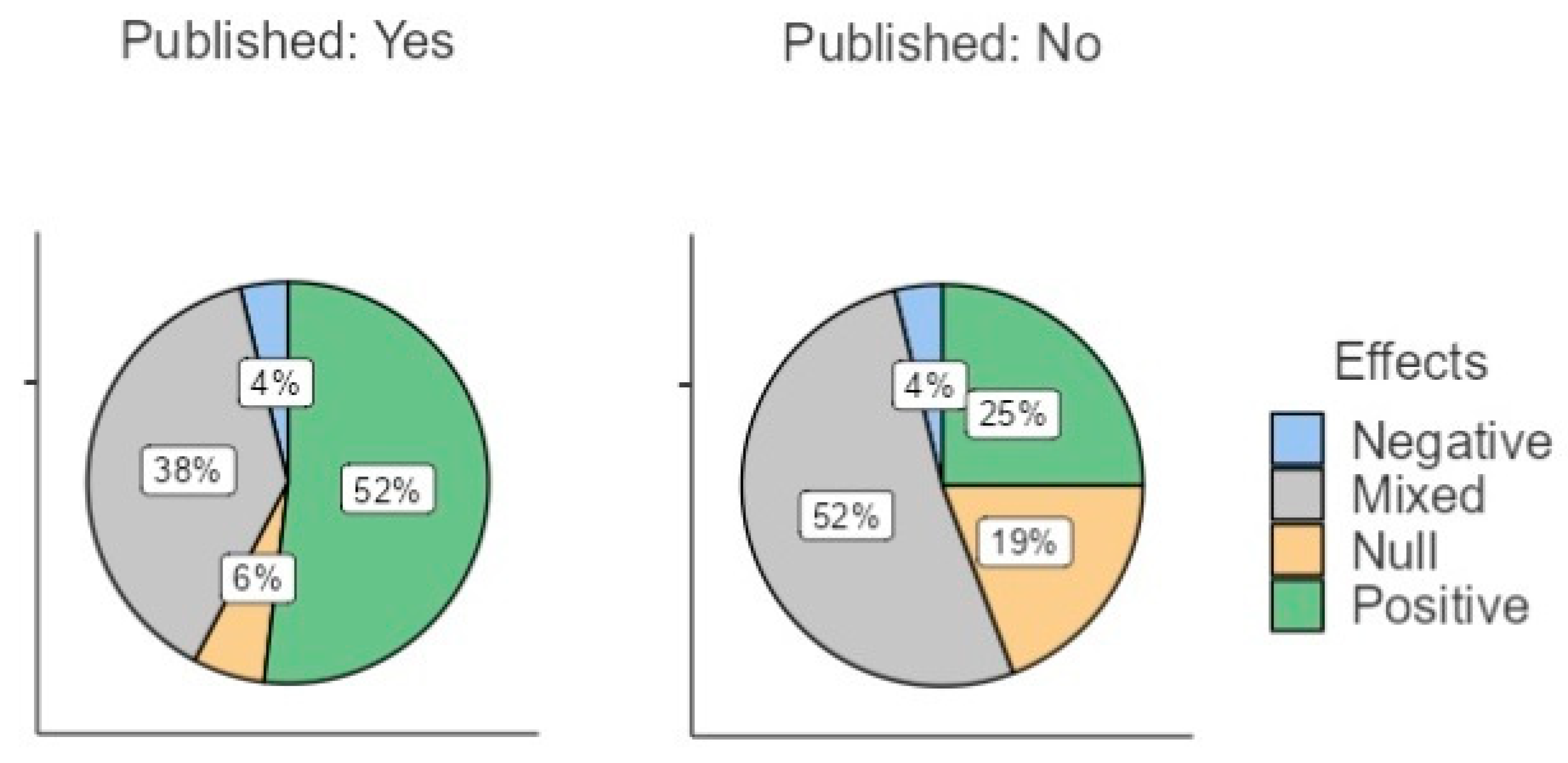

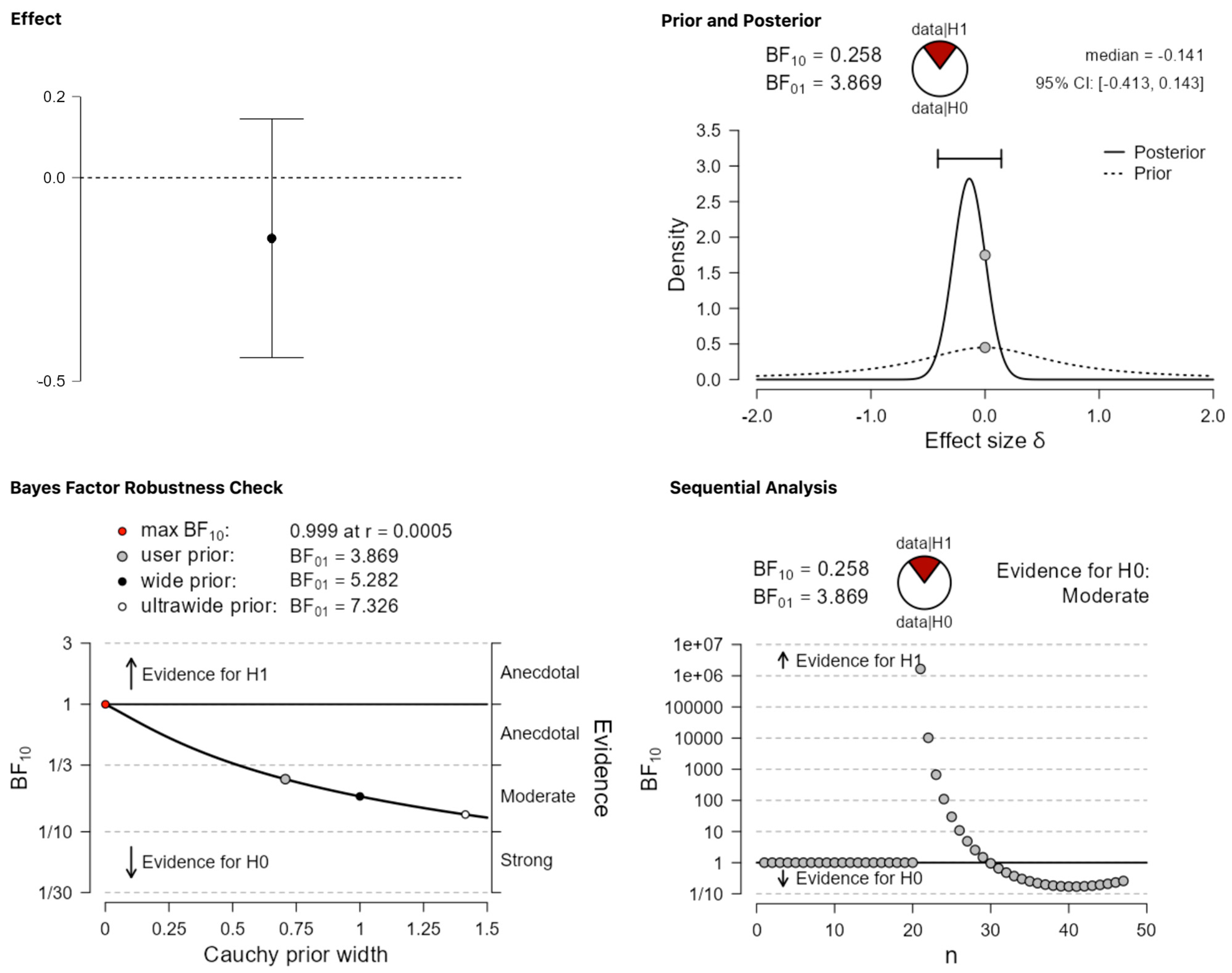

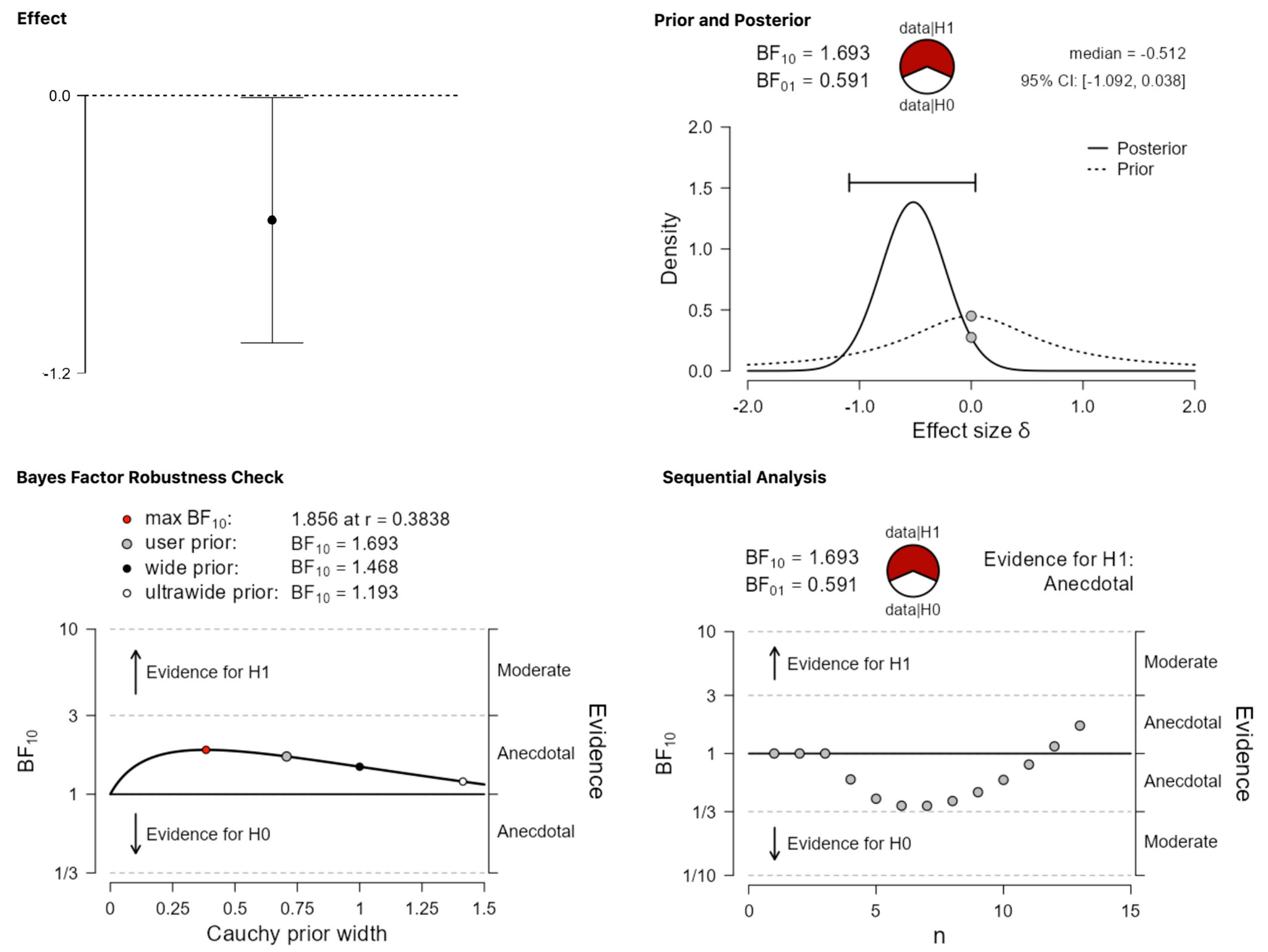

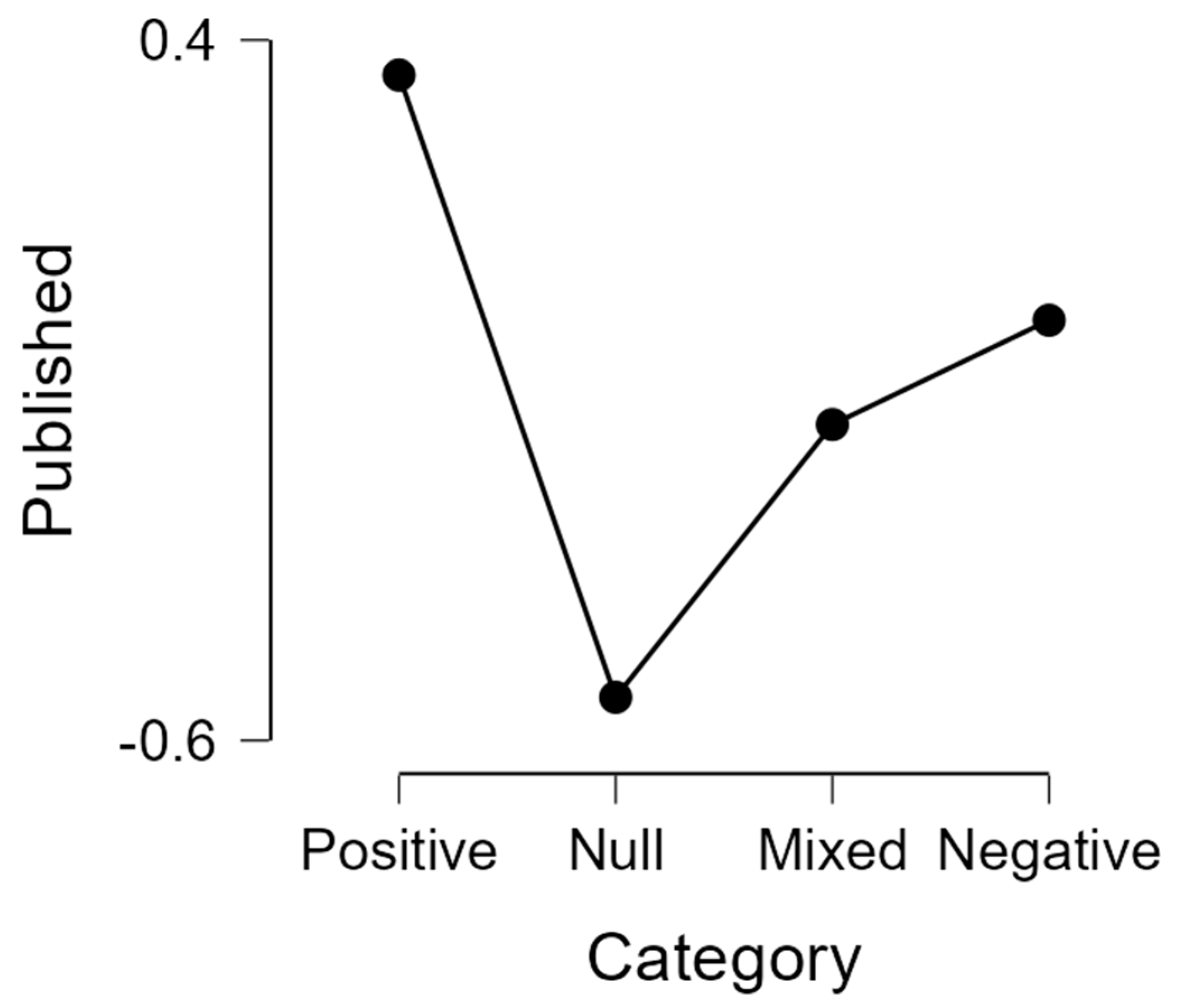

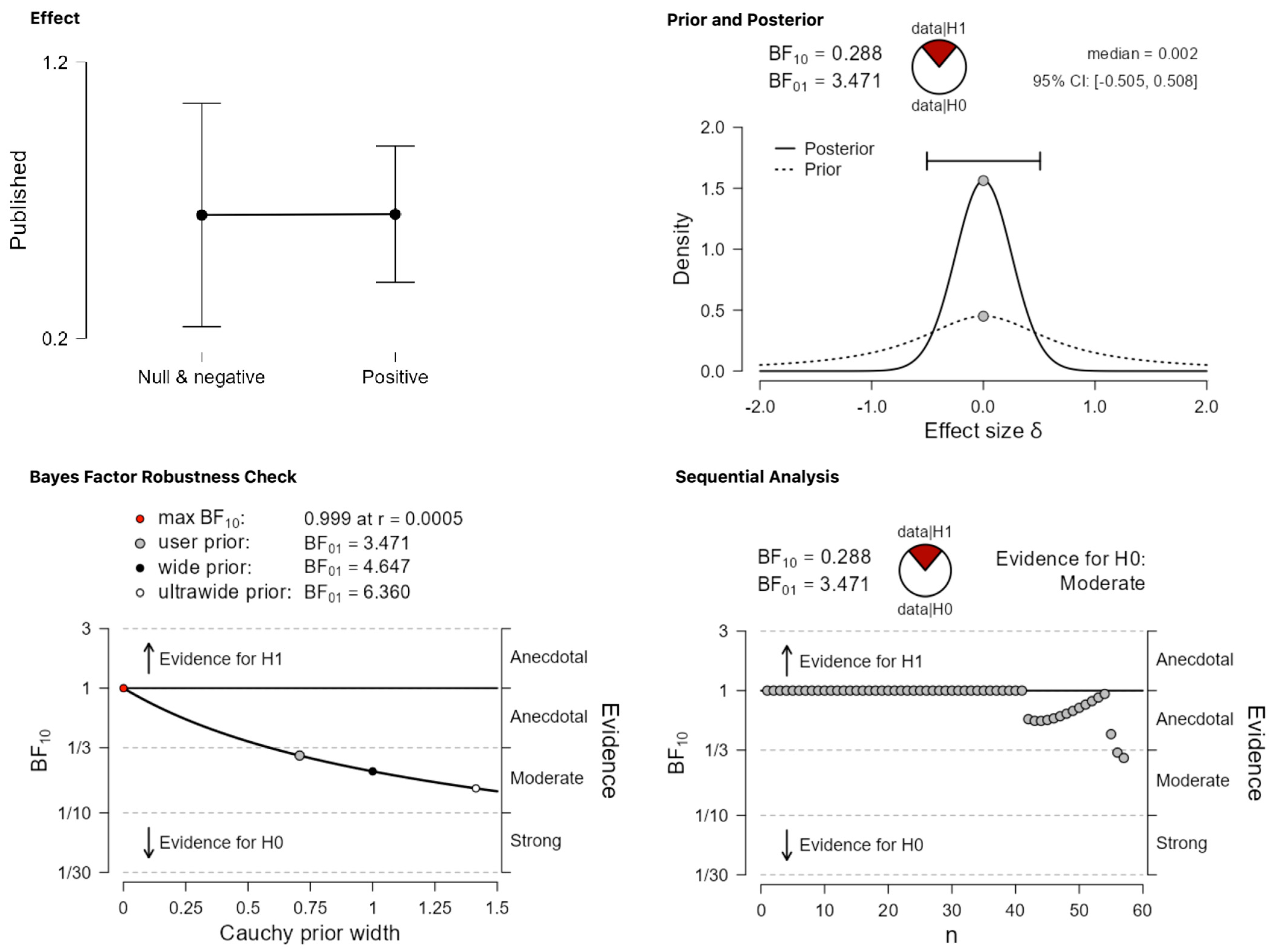

3. Results

4. Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hubbard, R.; Armstrong, J.S. Publication Bias against Null Results. Psychol. Rep. 1997, 80, 337–338.

2. Dwan, K.; Altman, D.G.; Arnaiz, J.A.; Bloom, J.; Chan, A.-W.; Cronin, E.; Decullier, E.; Easterbrook, P.J.; Von Elm, E.; Gamble, C.; et al. Systematic Review of the Empirical Evidence of Study Publication Bias and Outcome Reporting Bias. PLoS ONE 2008, 3, e3081.

3. Nissen, S.B.; Magidson, T.; Gross, K.; Bergstrom, C.T. Research: Publication bias and the canonization of false facts. eLife 2016, 5, e21451.

4. Rockwell, S.; Kimler, B.F.; Moulder, J.E. Publishing Negative Results: The Problem of Publication Bias. Radiat. Res. 2006, 165, 623–625.

5. Bialystok, E. Reshaping the mind: The benefits of bilingualism. Can. J. Exp. Psychol. 2011, 65, 229–235.

6. Bialystok, E.; Craik, F.I. How does bilingualism modify cognitive function? Attention to the mechanism. Psychon. Bull. Rev. 2022, 29, 1246–1269.

7. Lehtonen, M.; Fyndanis, V.; Jylkkä, J. The relationship between bilingual language use and executive functions. Nat. Rev. Psychol. 2023, 2, 360–373.

8. Paap, K.R. The role of componential analysis, categorical hypothesising, replicability and confirmation bias in testing for bilingual advantages in executive functioning. J. Cogn. Psychol. 2014, 26, 242–255.

9. de Bruin, A.; Treccani, B.; Della Sala, S. Cognitive advantage in bilingualism: An example of publication bias? Psychol. Sci. 2015, 26, 99–107.

10. Donnelly, S. Re-Examining the Bilingual Advantage on Interference-Control and Task-Switching Tasks: A Meta-Analysis. Ph.D. Thesis, City University of New York, New York, NY, USA, 2016.

11. Lehtonen, M.; Soveri, A.; Laine, A.; Järvenpää, J.; de Bruin, A.; Antfolk, J. Is bilingualism associated with enhanced executive functioning in adults? A meta-analytic review. Psychol. Bull. 2018, 144, 394–425.

12. Bylund, E.; Antfolk, J.; Abrahamsson, N.; Olstad, A.M.H.; Norrman, G.; Lehtonen, M. Does bilingualism come with linguistic costs? A meta-analytic review of the bilingual lexical deficit. Psychon. Bull. Rev. 2022, 30, 897–913.

13. Schooler, J. Unpublished results hide the decline effect. Nature 2011, 470, 437.

14. Leivada, E.; Westergaard, M.; Duñabeitia, J.A.; Rothman, J. On the phantom-like appearance of bilingualism effects on neurocognition: (How) should we proceed? Biling. Lang. Cogn. 2021, 24, 197–210.

15. Ioannidis, J.P.; Trikalinos, T.A. Early extreme contradictory estimates may appear in published research: The Proteus phenomenon in molecular genetics research and randomized trials. J. Clin. Epidemiol. 2005, 58, 543–549.

16. Costa, A.; Hernández, M.; Costa-Faidella, J.; Sebastián-Gallés, N. On the bilingual advantage in conflict processing: Now you see it, now you don’t. Cognition 2009, 113, 135–149.

17. Paap, K.R.; Johnson, H.A.; Sawi, O. Bilingual advantages in executive functioning either do not exist or are restricted to very specific and undetermined circumstances. Cortex 2015, 69, 265–278.

18. Paap, K.R.; Mason, L.; Zimiga, B.; Ayala-Silva, Y.; Frost, M. The alchemy of confirmation bias transmutes expectations into bilingual advantages: A tale of two new meta-analyses. Q. J. Exp. Psychol. 2020, 73, 1290–1299.

19. Grundy, J.G. The effects of bilingualism on executive functions: An updated quantitative analysis. J. Cult. Cogn. Sci. 2020, 4, 177–199.

20. Ware, A.T.; Kirkovski, M.; Lum, J.A.G. Meta-Analysis Reveals a Bilingual Advantage That Is Dependent on Task and Age. Front. Psychol. 2020, 11, 1458.

21. Yurtsever, A.; Anderson, J.A.; Grundy, J.G. Bilingual children outperform monolingual children on executive function tasks far more often than chance: An updated quantitative analysis. Dev. Rev. 2023, 69, 101084.

22. Donnelly, S.; Brooks, P.J.; Homer, B.D. Is there a bilingual advantage on interference-control tasks? A multiverse meta-analysis of global reaction time and interference cost. Psychon. Bull. Rev. 2019, 26, 1122–1147.

23. Ioannidis, J.P.A. Why Most Published Research Findings Are False. PLoS Med. 2005, 2, e124.

24. Leivada, E.; Dentella, V.; Masullo, C.; Rothman, J. On trade-offs in bilingualism and moving beyond the Stacking the Deck fallacy. Biling. Lang. Cogn. 2022, 26, 550–555.

25. Del Giudice, M.; Crespi, B.J. Basic functional trade-offs in cognition: An integrative framework. Cognition 2018, 179, 56–70.

26. Darwin, C. On the Origin of Species by Means of Natural Selection or the Preservation of Favoured Races in the Struggle for Life; Murray, J., Ed.; EZreads Publications: London, UK, 1859.

27. Leivada, E.; Mitrofanova, N.; Westergaard, M. Bilinguals are better than monolinguals in detecting manipulative discourse. PLoS ONE 2021, 16, e0256173.

28. Bialystok, E.; Kroll, J.F.; Green, D.W.; MacWhinney, B.; Craik, F.I. Publication Bias and the Validity of Evidence: What’s the Connection? Psychol. Sci. 2015, 26, 944–946.

29. Wetzels, R.; Wagenmakers, E.-J. A default Bayesian hypothesis test for correlations and partial correlations. Psychon. Bull. Rev. 2012, 19, 1057–1064.

30. Rouder, J.N.; Speckman, P.L.; Sun, D.; Morey, R.D.; Iverson, G. Bayesian t tests for accepting and rejecting the null hypothesis. Psychon. Bull. Rev. 2009, 16, 225–237.

31. Jeffreys, H. Theory of Probability; Oxford University Press: Oxford, UK, 1961.

32. The Jamovi Project. Jamovi. Version 2.2. 2022. Available online: https://www.jamovi.org (accessed on 1 March 2023).

33. Kruschke, J.K.; Liddell, T.M. Bayesian data analysis for newcomers. Psychon. Bull. Rev. 2017, 25, 155–177.

34. Westfall, P.H.; Johnson, W.O.; Utts, J.M. A Bayesian perspective on the Bonferroni adjustment. Biometrika 1997, 84, 419–427.

35. Guagnano, D. Bilingualism and Cognitive Development: A Study on the Acquisition of Number Skills. Ph.D. Thesis, University of Trento, Trento, Italy, 2010.

36. Valian, V. Bilingualism and cognition. Biling. Lang. Cogn. 2015, 18, 3–24.

37. Inurritegui, S. Bilingualism and Cognitive Control. Ph.D. Thesis, KU Leuven, Leuven, Belgium, 2009.

38. Kennedy, I. Irish Medium Education: Cognitive Skills, Linguistic Skills, and Attitudes towards Irish. Ph.D. Thesis, Bangor University, Bangor, UK, 2012.

39. Perriard, B. L’effet du Bilinguisme sur la Mémoire de Travail: Comparaisons avec des Monolingues et étude du Changement de Langue dans des Tâches d’empan Complexe. Ph.D. Thesis, Université de Fribourg, Fribourg, Switzerland, 2015.

40. Ryskin, R.A.; Brown-Schmidt, S.; Canseco-Gonzalez, E.; Yiu, L.K.; Nguyen, E.T. Visuospatial perspective-taking in conversation and the role of bilingual experience. J. Mem. Lang. 2014, 74, 46–76.

41. Sampath, K.K. Effect of bilingualism on intelligence. In Proceedings of the 4th International Symposium on Bilingualism, Tempe, AZ, USA, 30 April–3 May 2003; Cohen, J., McAlister, K.T., Rolstad, K., MacSwan, J., Eds.; Cascadilla Press: Somerville, MA, USA, 2005; pp. 2048–2056.

42. Vongsackda, M. Working Memory Differences between Monolinguals and Bilinguals. Master’s Thesis, California State University, Long Beach, CA, USA, 2011.

43. Weber, R.C. How Hot or Cool Is It to Speak Two Languages: Executive Function Advantages in Bilingual Children. Ph.D. Thesis, Texas A&M University, College Station, TX, USA, 2011.

44. Weber, R.C.; Johnson, A.; Riccio, C.A.; Liew, J. Balanced bilingualism and executive functioning in children. Biling. Lang. Cogn. 2015, 19, 425–431.

45. Bak, T.H.; Vega-Mendoza, M.; Sorace, A. Never too late? An advantage on tests of auditory attention extends to late bilinguals. Front. Psychol. 2014, 5, 485.

46. Barac, R.; Moreno, S.; Bialystok, E. Behavioral and Electrophysiological Differences in Executive Control Between Monolingual and Bilingual Children. Child Dev. 2016, 87, 1277–1290.

47. Grote, K.S. The cognitive advantages of bilingualism: A focus on visual-spatial memory and executive functioning. Ph.D. Thesis, University of California, Merced, CA, USA, 2014.

48. Luo, L.; Craik, F.I.M.; Moreno, S.; Bialystok, E. Bilingualism interacts with domain in a working memory task: Evidence from aging. Psychol. Aging 2013, 28, 28–34.

49. Sullivan, M.D.; Janus, M.; Moreno, S.; Astheimer, L.; Bialystok, E. Early stage second-language learning improves executive control: Evidence from ERP. Brain Lang. 2014, 139, 84–98.

50. Viswanathan, M. Exploring the Bilingual Advantage in Executive Control: Using Goal Maintenance and Expectancies. Ph.D. Thesis, York University, Toronto, ON, Canada, 2015.

51. de Graaf, T.A.; Sack, A. When and How to Interpret Null Results in NIBS: A Taxonomy Based on Prior Expectations and Experimental Design. Front. Neurosci. 2018, 12, 915.

52. Blanco-Elorrieta, E.; Caramazza, A. On the need for theoretically guided approaches to possible bilingual advantages: An evaluation of the potential loci in the language and executive control systems. Neurobiol. Lang. 2021, 2, 452–463.

53. Zvereva, E.L.; Kozlov, M.V. Biases in ecological research: Attitudes of scientists and ways of control. Sci. Rep. 2021, 11, 226.

54. Sadat, J.; Costa, A.; Alario, F.X. Noun-phrase production in bilinguals. In Proceedings of the XVI Conference of the European Society for Cognitive Psychology (ESCOP), Cracow, Poland, 2–5 September 2009.

55. Sadat, J.; Martin, C.D.; Alario, F.X.; Costa, A. Characterizing the Bilingual Disadvantage in Noun Phrase Production. J. Psycholinguist. Res. 2012, 41, 159–179.

56. Bialystok, E.; Craik, F.I.; Luk, G. Lexical access in bilinguals: Effects of vocabulary size and executive control. J. Neurolinguist. 2008, 21, 522–538.

57. Higby, E.; Cahana-Amitay, D.; Vogel-Eyny, A.; Spiro, A.; Albert, M.L.; Obler, L.K. The Role of Executive Functions in Object- and Action-Naming among Older Adults. Exp. Aging Res. 2019, 45, 306–330.

58. Chabal, S.; Hayakawa, S.; Marian, V. How a picture becomes a word: Individual differences in the development of language-mediated visual search. Cogn. Res. Princ. Implic. 2021, 6, 2.

59. Konno, K.; Pullin, A.S. Assessing the risk of bias in choice of search sources for environmental meta-analyses. Res. Synth. Methods 2020, 11, 698–713.

60. Hopewell, S.; Loudon, K.; Clarke, M.J.; Oxman, A.D.; Dickersin, K. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst. Rev. 2009, 2010, MR000006.

61. Simonsohn, U. The Funnel Plot is Invalid Because of this Crazy Assumption: R(n,d) = 0. Data Colada: Thinking About Evidence, and Vice Versa. 2017. Available online: http://datacolada.org/58 (accessed on 2 August 2023).

| Category | Abstracts (n) | Published |
|---|---|---|
| Positive | 40 | 27/40 |
| Mixed | 47 | 20/47 |
| Negative | 4 | 2/4 |
| Null | 13 | 3/13 |
| Total | 104 | 52/104 |
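The counts in the table above can be checked with a few lines of Python. This is a minimal sketch: the dictionary simply transcribes the table, and the names `counts` and `publication_rates` are ours, not the author's. It confirms that the four categories add up to 104 abstracts, 52 of them published, and gives the published proportion per category (for example, 27/40 = 67.5% for positive abstracts versus 3/13 ≈ 23.1% for null ones).

```python
# Counts transcribed from the table above: category -> (abstracts, published).
counts = {
    "Positive": (40, 27),
    "Mixed": (47, 20),
    "Negative": (4, 2),
    "Null": (13, 3),
}


def publication_rates(table):
    """Return the published proportion for each category."""
    return {cat: pub / n for cat, (n, pub) in table.items()}


# Column sums; these should reproduce the Total row (52/104).
total_n = sum(n for n, _ in counts.values())
total_pub = sum(pub for _, pub in counts.values())

if __name__ == "__main__":
    for cat, rate in publication_rates(counts).items():
        print(f"{cat:<9}{rate:6.1%}")
    print(f"{'Total':<9}{total_pub}/{total_n} = {total_pub / total_n:.1%}")
```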

Post Hoc Comparisons: Category

| Comparison | | Prior Odds | Posterior Odds | BF₁₀,U | Error % |
|---|---|---|---|---|---|
| Positive | Null | 0.414 | 3.851 | 9.297 | 7.08 × 10⁻⁶ |
| Positive | Mixed | 0.414 | 1.057 | 2.551 | 9.37 × 10⁻⁴ |
| Positive | Negative | 0.414 | 0.213 | 0.513 | 5.39 × 10⁻⁴ |
| Null | Mixed | 0.414 | 0.241 | 0.583 | 0.0109 |
| Null | Negative | 0.414 | 0.263 | 0.635 | 3.30 × 10⁻⁴ |
| Mixed | Negative | 0.414 | 0.186 | 0.450 | 2.30 × 10⁻⁵ |
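The columns of the post hoc table are linked by posterior odds = prior odds × BF₁₀,U (for example, 0.414 × 9.297 ≈ 3.85). The sketch below is our reconstruction, not the author's code, and it rests on two assumptions that the text shown here does not state. First, as in jamovi/JASP Bayesian post hoc output, each comparison is taken to be a default Bayesian independent-samples t-test [30] on 0/1 publication status with a Cauchy prior of scale √2/2, where "U" marks the uncorrected Bayes factor. Second, the prior odds are taken to follow a Westfall et al. [34] style correction that fixes at 0.5 the prior probability that the null holds across all comparisons, via the per-comparison rule P(H0) = 0.5^(2/J), which reproduces the tabled 0.414 for J = 4 categories. The helper names `pooled_t_binary`, `jzs_bf10`, and `westfall_prior_odds` are hypothetical.

```python
import math


def pooled_t_binary(k1, n1, k2, n2):
    """Student's two-sample t statistic for 0/1 data (k successes out of n)."""
    m1, m2 = k1 / n1, k2 / n2
    # For a 0/1 sample, the sum of squared deviations equals k * (1 - mean).
    pooled_var = (k1 * (1 - m1) + k2 * (1 - m2)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se


def jzs_bf10(t, n1, n2, r=math.sqrt(2) / 2, lo=-20.0, hi=20.0, steps=4000):
    """JZS Bayes factor (Rouder et al. 2009) for an independent-samples t-test.

    Integrates over g with g ~ InverseGamma(1/2, 1/2), which together with
    delta | g ~ Normal(0, r^2 g) gives a Cauchy(0, r) prior on effect size.
    Uses the trapezoid rule on u = log(g).
    """
    nu = n1 + n2 - 2
    n_eff = n1 * n2 / (n1 + n2)
    log_null = -(nu + 1) / 2 * math.log1p(t * t / nu)
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        u = lo + i * h
        g = math.exp(u)
        c = 1 + n_eff * r * r * g
        log_alt = -0.5 * math.log(c) - (nu + 1) / 2 * math.log1p(t * t / (c * nu))
        # Log prior density of g, plus log|dg/du| = u for the change of variable.
        log_prior = -0.5 * math.log(2 * math.pi) - 1.5 * u - 1 / (2 * g) + u
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * math.exp(log_alt - log_null + log_prior)
    return total * h


def westfall_prior_odds(n_groups, p_all_null=0.5):
    """Per-comparison prior odds (H1:H0) under the multiplicity rule assumed above."""
    p_null = p_all_null ** (2 / n_groups)
    return (1 - p_null) / p_null


if __name__ == "__main__":
    prior = westfall_prior_odds(4)
    # (published, total) per category, transcribed from the counts table.
    cats = {"Positive": (27, 40), "Mixed": (20, 47), "Negative": (2, 4), "Null": (3, 13)}
    pairs = [("Positive", "Null"), ("Positive", "Mixed"), ("Positive", "Negative"),
             ("Null", "Mixed"), ("Null", "Negative"), ("Mixed", "Negative")]
    for a, b in pairs:
        (k1, n1), (k2, n2) = cats[a], cats[b]
        bf = jzs_bf10(pooled_t_binary(k1, n1, k2, n2), n1, n2)
        print(f"{a} vs {b}: prior {prior:.3f}, BF10 {bf:.3f}, posterior {prior * bf:.3f}")
```

Under these assumptions we would expect the integral to land close to the tabled values (about 9.3 for Positive vs. Null and about 0.64 for Null vs. Negative); small residual differences would reflect numerical integration rather than a different model. Integrating on log(g) keeps the step size uniform across the many orders of magnitude the prior spans, and working in log space avoids overflow in the (1 + t²/ν) terms.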

| Unpublished Abstracts That Do Not Find Evidence for Bilingual Advantages (List Taken from [9]) | Status in 2023 | Reference |
|---|---|---|
| Guagnano, D., Rusconi, E., Job, R., & Cubelli, R. (2009). Bilingualism and the acquisition of number skills. | Published | Guagnano, D. (2010). Bilingualism and cognitive development: a study on the acquisition of number skills. PhD thesis, University of Trento. [35] |
| Humphrey, A. D., & Valian, V. V. (2012). Multilingualism and cognitive control: Simon and Flanker task performance in monolingual and multilingual young adults. | Published | Valian, V. (2015). Bilingualism and cognition. Bilingualism: Language and Cognition 18, 3–24. [36] |
| Inurritegui, S., & D’Ydewalle, G. (2008). Bilingual advantage inhibited? Factors affecting the relation between bilingualism and executive control. | Published | Inurritegui, S. (2009). Bilingualism and cognitive control. PhD thesis, KU Leuven. [37] |
| Kennedy, I. (2012). Immersion education in Ireland: Linguistic and cognitive skills. | Published | Kennedy, I. (2012). Irish medium education: cognitive skills, linguistic skills, and attitudes towards Irish. PhD thesis, Bangor University. [38] |
| Mallery, S. T. (2005). Bilingualism and the Simon task: Congruency switching influences response latency differentially. | Not published | |
| Mallery, S. T., Llamas, V. C., & Alvarez, A. R. (2006). Performance Advantage on the Tower of London-DX for Monolingual vs. Bilingual Young Adults. | Not published | |
| Perriard, B., & Camos, V. (2011). Working memory capacity in French-German bilinguals. | Published | Perriard, B. (2015). L’effet du bilinguisme sur la mémoire de travail: comparaisons avec des monolingues et étude du changement de langue dans des tâches d’empan complexe. PhD thesis, Université de Fribourg. [39] |
| Ryskin, R. A., & Brown-Schmidt, S. (2012). A bilingual disadvantage in linguistic perspective adjustment. | Published | Ryskin, R. A., Brown-Schmidt, S., Canseco-Gonzalez, E., Yiu, L. K., & Nguyen, E. T. (2014). Visuospatial perspective-taking in conversation and the role of bilingual experience. Journal of Memory and Language 74, 46–76. [40] |
| Sampath, K. K. (2003). Effects of bilingualism on intelligence. | Published | Sampath, K. K. (2005). Effect of bilingualism on intelligence. In J. Cohen, K. T. McAlister, K. Rolstad & J. MacSwan (eds.), Proceedings of the 4th International Symposium on Bilingualism, 2048–2056. Somerville, MA: Cascadilla Press. [41] |
| Tare, M., & Linck, J. A. (2011). Bilingual cognitive advantages reduced when controlling for background variables. | Not published | |
| Vongsackda, M., & Ivie, J. L. (2010). Working memory differences between monolinguals and bilinguals. | Published | Vongsackda, M. (2011). Working memory differences between monolinguals and bilinguals. MA thesis, California State University. [42] |
| Weber, R. C., Johnson, A., & Wiley, C. (2012). Hot and cool executive functioning advantages in bilingual children. | Published | 1. Weber, R. C. (2011). How hot or cool is it to speak two languages: Executive function advantages in bilingual children. PhD thesis, Texas A&M University. [43] 2. Weber, R. C., Johnson, A., Riccio, C. A., & Liew, J. (2016). Balanced bilingualism and executive functioning in children. Bilingualism: Language and Cognition 19, 425–431. [44] |

| Unpublished Abstracts That Find Evidence for Bilingual Advantages (List Taken from [9]) | Status in 2023 | Reference |
|---|---|---|
| Bak, T. H., Everington, S., Garvin, S. J., & Sorace, A. (2008). Differences in performance on auditory attention tasks between bilinguals and monolinguals. | Published | Bak, T. H., Vega-Mendoza, M., & Sorace, A. (2014). Never too late? An advantage on tests of auditory attention extends to late bilinguals. Frontiers in Psychology 5: 485. [45] |
| Barac, R., Moreno, S., & Bialystok, E. (2010). Inhibition of responses in young monolingual and bilingual children: Evidence from ERP. | Published | Barac, R., Moreno, S., & Bialystok, E. (2016). Behavioral and electrophysiological differences in executive control between monolingual and bilingual children. Child Development 87(4), 1277–1290. [46] |
| Boros, M., Marzecova, A., & Wodniecka, Z. (2011). Investigating the bilingual advantage on executive control with the verbal and numerical Stroop task: Interference or facilitation account? | Not published | |
| Chin, S., & Sims, V. K. (2006). Working memory span in bilinguals and second language learners. | Not published | * |
| Díaz, U., Facal, D., González, M., Buiza, C., Morales, B., Sobrino, C., Urdaneta, E., & Yanguas, J. (2011). The use of bilingualism and occupational complexity measures as proxies for cognitive reserve: results from a community-dwelling elderly population in the north of Spain. | Not published | |
| Duncan, H., McHenry, C., Segalowitz, N., & Phillips, N. A. (2011). Bilingualism, aging, and language-specific attention control. | Not published | |
| Friesen, D. C., Hawrylewicz, K., & Bialystok, E. (2012). Investigating the bilingual advantage in a verbal conflict task. | Not published | |
| Grote, K. S., & Chouinard, M. M. (2010). The potential benefits of speaking more than one language on non-linguistic cognitive development. | Published | Grote, K. S. (2014). The cognitive advantages of bilingualism: A focus on visual-spatial memory and executive functioning. PhD thesis, University of California, Merced. [47] |
| Luo, L., Seton, B., Bialystok, E., & Craik, F. I. M. (2008). The role of bilingualism in retrieval control: Specificity and selectivity. | Published | Luo, L., Craik, F. I. M., Moreno, S., & Bialystok, E. (2013). Bilingualism interacts with domain in a working memory task: Evidence from aging. Psychology and Aging 28, 28–34. [48] |
| Luo, L., Sullivan, M., Latman, V., & Bialystok, E. (2011). Verbal recognition memory in bilinguals: The word frequency effect. | Not published | |
| Sullivan, M., Moreno, S., & Bialystok, E. (2010). Effects of early-stage L2 learning on nonverbal executive control. | Published | Sullivan, M. D., Janus, M., Moreno, S., Astheimer, L., & Bialystok, E. (2014). Early stage second-language learning improves executive control: evidence from ERP. Brain and Language 139, 84–98. [49] |
| Viswanathan, M., & Bialystok, E. (2007). Effects of bilingualism and aging in multitasking. | Not published | |
| Viswanathan, M., & Bialystok, E. (2007). Exploring the bilingual advantage in executive control: The role of expectancies. | Published | Viswanathan, M. (2015). Exploring the bilingual advantage in executive control: Using goal maintenance and expectancies. PhD thesis, York University. [50] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leivada, E. A Classification Bias and an Exclusion Bias Jointly Overinflated the Estimation of Publication Biases in Bilingualism Research. Behav. Sci. 2023, 13, 812. https://doi.org/10.3390/bs13100812
