Simple Summary

Precision livestock farming (PLF) techniques facilitate automated, continuous, and real-time monitoring of animal behaviour and physiological responses. They also have the potential to improve animal welfare by providing a continuous picture of welfare states, thus enabling fast actions that benefit the flock. Using a PLF technique based on images, the present study aimed to test a machine learning tool for measuring the number of hens on the ground and identifying the number of dust-bathing hens in an experimental aviary—a complex environment—by comparing the performance of two machine learning (YOLO, You Only Look Once) models. The results of the study revealed that the two models had a similar performance; however, while PLF was successful in evaluating the distribution of hens on the floor and predicting undesired events, such as smothering due to overcrowding, it failed to identify the occurrence of comfort behaviours, such as dust bathing, which are part of the evaluation of hen welfare.

Abstract

Image analysis using machine learning (ML) algorithms could provide a measure of animal welfare by measuring comfort behaviours and undesired behaviours. Using a PLF technique based on images, the present study aimed to test a machine learning tool for measuring the number of hens on the ground and identifying the number of dust-bathing hens in an experimental aviary. In addition, two YOLO (You Only Look Once) models were compared. YOLOv4-tiny needed about 4.26 h to train for 6000 epochs, compared to about 23.2 h for the full YOLOv4 model. In validation, the performance of the two models in terms of precision, recall, harmonic mean of precision and recall, and mean average precision (mAP) did not differ, while the number of frames per second was lower in YOLOv4 than in the tiny version (31.35 vs. 208.5). The mAP stood at about 94% for the classification of hens on the floor, while the classification of dust-bathing hens was poor (28.2% in YOLOv4-tiny compared to 31.6% in YOLOv4). In conclusion, ML successfully identified laying hens on the floor, whereas other PLF tools must be tested for the classification of dust-bathing hens.

1. Introduction

Precision livestock farming (PLF) techniques facilitate the automated, continuous, and real-time monitoring of animal behaviour and physiological responses both at an individual level and at a group level, depending on the farmed species [1]. Under the conditions of poultry production, PLF tools can greatly assist farmers in taking the correct action [2,3,4,5] to guarantee the health and welfare of thousands of animals when environmental conditions are unfavourable [3], illness spreads [6,7], or abnormal behaviours challenge bird welfare and survival [8,9]. In poultry, these systems have received increased attention on a global scale starting from 2020. Commercial applications include several sensors for the continuous measuring of environmental conditions (temperature, ambient dust, relative humidity, vibration, ammonia concentration, carbon dioxide concentrations), including a camera system (Fancom BV) to monitor the distribution and activity of chickens and a sensor for detecting shell thickness and cracked eggs [10].

PLF tools based on images recorded by cameras have been used to obtain information about farm/animal temperature levels, the quality of activity, and animal behaviours, as well as performance [11]. Some of these studies used images to evaluate changes in bird behaviour following changes in environmental conditions or stocking density, even at the nest level in the case of laying hens [12]. The commercially available eYeNamic™ (Fancom BV, The Netherlands) uses images of animal distribution in broiler chicken farms to alert farmers about malfunctioning environmental control and feeding systems and has been found to be effective in identifying 95% of the problems occurring in a production cycle [9]. As for laying hens, since the housing environment is more complex compared to broiler chickens, the development of PLF tools needs to be specific to housing.

In Europe, more than 44% of hens are kept in enriched cages [13], which are intended to provide additional space and resources for satisfying hen behavioural needs; however, conventional cage-free barn systems, including aviaries and free-range and organic systems with outdoor access, are becoming popular and are expected to replace cages soon. In fact, the European Resolution P9_TA(2021)0295 calls for a phasing out of cages by 2027 and the full implementation of cage-free systems, while the European Green Deal and farm-to-fork strategies require more sustainable animal production systems. However, experience in the field and the literature have identified weaknesses in addition to strengths of cage-free systems for the production and welfare of hens [14,15]. As such, we require further insights regarding the identification of on-farm solutions for the control of challenging situations, as well as for farmers’ actions, to prevent major welfare and health issues, where PLF tools could provide great help.

Hens are often synchronous in their behaviours (e.g., movement, dust bathing, laying), which can lead to overcrowding in specific parts of the aviary and provoke unusual behaviours, such as flock piling, i.e., dense clustering of hens mainly along walls and in corners which can result in smothering and high losses [16,17]. In cage-free systems, the possibility of monitoring hen crowding is also crucial for other behaviours, where the expression of some comfort behaviours, such as dust bathing [18,19], may be affected by the available space on the ground [16,20].

Image analysis is a suitable methodology that uses cameras to estimate the number of objects in a scene (e.g., number of hens). Among the different image analysis techniques, machine learning (ML) algorithms have proven to be the most effective for object detection. The most-used ML methods in agriculture include dimension reduction, regressions, clustering, k-means, Bayesian models, k-nearest neighbours, decision trees, support vector machines, and artificial neural networks [21,22]. The foundation of artificial neural networks is a network of interconnected nodes that are arranged in a certain topology. Deep neural networks have numerous layers in addition to the single layer of the perceptron [22] and are commonly referred to as deep learning. The latter has a higher performance and surpasses other ML strategies in image processing according to a meta-analysis of ML by Kamilaris and Prenafeta-Boldú [21]. Convolutional neural networks (CNN) are one of the most significant deep learning models used for image interpretation and computer vision [21]. They can be used to analyse, combine, and extract colour, geometric, and textural data from images. Object-detection models are based on two primary frameworks. The first is based on region proposals and classifies each proposal into several object categories; the second treats object detection as a regression or classification problem [23]. The output of object detection is typically bounding boxes over the image, but some models produce semantic segmentation as the result. R-CNN, Faster R-CNN, and Mask R-CNN are examples of region-proposal-based object-detection algorithms, whereas You Only Look Once (YOLO) [24,25,26] and single shot detector (SSD) are examples of regression/classification-based models [27].

Thus, using a PLF technique based on images, the present study aimed to test an ML tool to measure the number of hens on the ground and identify the number of dust-bathing hens in an experimental aviary. In addition, the performance of two YOLO models was compared, with the aim of developing an alert tool for abnormal crowding and a monitoring tool for comfort behaviours and welfare indicators.

2. Materials and Methods

2.1. Animals and Housing

A total of 1800 Lohmann Brown-Classic hens (Lohmann Tierzucht GmbH, Cuxhaven, Germany), aged 17 weeks, were housed at the experimental farm “Lucio Toniolo” of the University of Padova (Legnaro, Padova, Italy) and were randomly divided into 8 pens in an experimental aviary system (225 hens per pen; 9 hens/m2 stocking density), where they were monitored until 45 weeks of age within a specific research project [28].

The experimental farm building was equipped with a cooling system, forced ventilation, radiant heating, and controlled lighting systems. The experimental aviary specifically set up in the farm consisted of two tiers equipped with collective nests (1 nest per 60 hens) that were closed by red plastic curtains, and a third level with only perches and feeders. The two tiers also included perches, nipple drinkers, and automatic feeding systems. The whole aviary system was 2.50 m wide × 19.52 m long × 2.24 m high, with one corridor on each side, each 1.70 m wide. Thus, free ground space was 5.90 m wide × 19.52 m long. The litter was based on hen manure. The aviary was divided into 8 pens, each with a length of 2.44 m.

2.2. Video Recordings and Test Sets

The aviary was equipped with a real-time video recording system, which used 48 cameras (infrared mini-dome bullet 4 mp; resolution 1080 p) (HAC-HDW1220MP, Zhejiang Dahua Technology Co., Ltd., Hangzhou, China) and 2 full HD video recorders (NVR2116HS-4KS2, Zhejiang Dahua Technology Co., Ltd., Hangzhou, China). The cameras were located to record hens on the ground, hens on the three levels of the aviary, and hens in the nests. One camera per pen was used to record the animals on the floor. Cameras were hung at a height of 2 m with a dome angle of 180° in the middle of each pen. The following settings were used: video recording frame rate at 30 fps, backlight compensation as digital wide dynamic range, auto white balance, and video compression as H.265.

Once per week (Saturday) during the trial from 38 to 45 weeks of age, the behaviour of hens was video recorded from 5:30 until 19:30 during the light hours when the animals were active. Of all video-recorded data, 112 hours of video recordings of the hens on the ground floor were used. The software “Free Video to JPG Converter (v. 5.0.101)” was used to extract 1 frame per second throughout the 112 hours, totalling 403,200 extracted frames. A limited number of images were selected from the whole dataset to avoid autocorrelation between frames and achieve a significant number of labels for hens on the floor and dust-bathing hens. In addition, a sufficient but not excessive number of images makes this methodology applicable for commercial applications by farmers and consultants. Then, 1150 images were randomly selected for the purposes of the present study. A total of 1100 images were used as the training set; the remaining 50 were used as the validation set.
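The frame count and the training/validation split described above can be reproduced with a short sketch. The recording length, sampling rate, and set sizes come from the text; the seed and the purely uniform random draw are assumptions made for illustration, since the exact selection procedure is not reported.

```python
import random

HOURS_RECORDED = 112          # hours of ground-floor video used in the study
FRAMES_PER_SECOND_KEPT = 1    # one frame extracted per second
N_SELECTED = 1150             # images randomly selected for the study
N_TRAIN = 1100                # images used as the training set
SEED = 0                      # hypothetical seed, not reported in the study


def total_extracted_frames(hours: int, frames_per_second: int) -> int:
    """Number of frames obtained by sampling a recording at a fixed rate."""
    return hours * 3600 * frames_per_second


def split_sample(n_frames: int, n_selected: int, n_train: int, seed: int):
    """Draw frame indices without replacement, then split them into two sets."""
    rng = random.Random(seed)
    selected = rng.sample(range(n_frames), n_selected)
    return selected[:n_train], selected[n_train:]


n_frames = total_extracted_frames(HOURS_RECORDED, FRAMES_PER_SECOND_KEPT)
train_idx, val_idx = split_sample(n_frames, N_SELECTED, N_TRAIN, SEED)
print(n_frames, len(train_idx), len(val_idx))  # 403200 1100 50
```

Drawing without replacement from the full pool keeps the two sets disjoint, and spreading the draw over all 403,200 frames makes it unlikely that two selected frames are adjacent in time.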

2.3. Set up of the Object-Detection Algorithm

YOLO addresses object detection as a single regression problem, avoiding the pipeline of region proposal, classification, and duplicate elimination. In recent years, different versions of YOLO have been proposed (YOLO 9000, YOLOv2-v3-v4-v5, Fast YOLO, tiny versions); here, we used two of them: YOLOv4-tiny and YOLOv4 [24,25,26]. They are both fast convolutional neural networks that can classify images based on bounding box labelling [29,30]. The two versions of YOLOv4 were selected since they provide a good trade-off between accuracy and computational effort [31].

YOLOv4 is more precise than YOLOv4-tiny [32] because it comprises a higher number of convolutional layers (53 vs. 36). YOLOv4-tiny is expected to be faster but less accurate in its predictions given its reduced number of convolutional layers.

Training and data analysis of YOLO were carried out using the Python programming language. The Darknet framework, an open-source neural network framework written in C and CUDA, was installed on a virtual machine on Google Colaboratory. YOLOv4 and YOLOv4-tiny are based on CSPDarknet53, which adds cross-stage partial connections to the Darknet framework [33]. The cross-stage partial connections divide the input features into two groups: one group is processed by the convolutional layers, while the second bypasses the convolutional layers and is included in the input for the following layer [34].

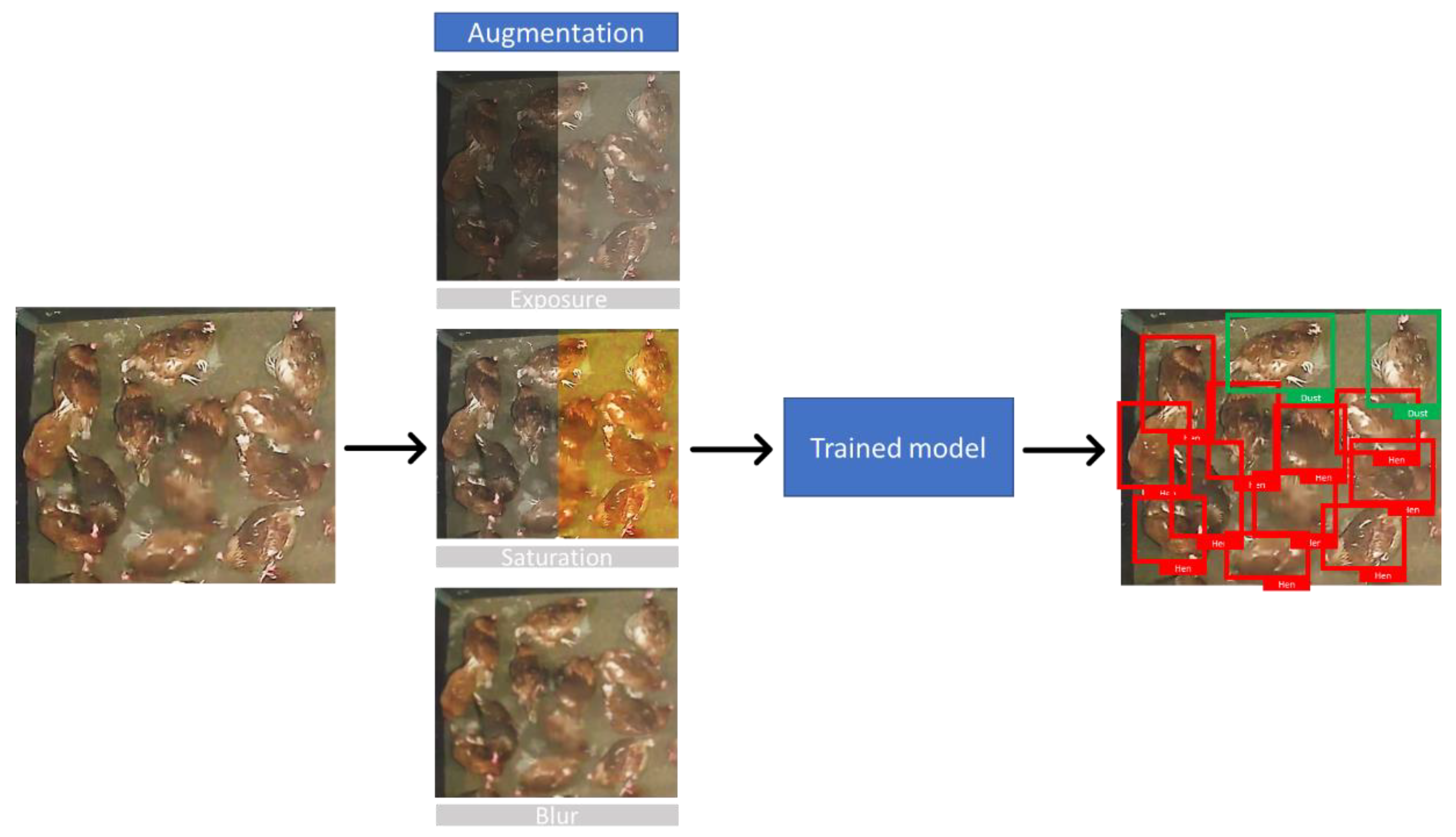

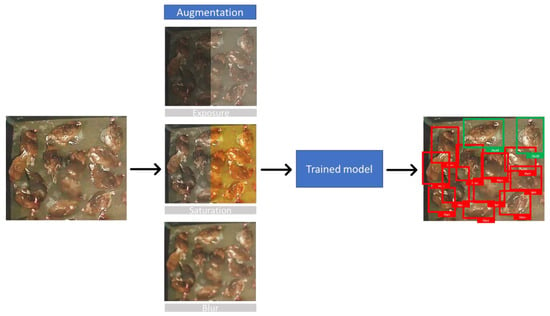

From the whole dataset (403,200 frames), we selected a sample of 1150 images for training and validation. The choice of the sample size was based on the slow movement of the animals, because of which images acquired one second apart differed only slightly from each other. Before training, all 1150 frames were labelled by bounding boxes distinguishing between “dust-bathing” hens (hens rotating their body in the litter on the ground) and hens on the “ground” (all the other hens, excluding those climbing towards or landing from the aviary) (Figure 1). The images were manually labelled by drawing a bounding box around each hen in the image using the YOLO_label V2 project [35]. YOLO_label creates annotations (labels) for the object-detection algorithm in the YOLO format, which consists of five columns for each object (object-class, x, y, width, and height).
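As an illustration of the five-column annotation format, the sketch below converts one label row into pixel coordinates. It assumes the usual YOLO convention in which x and y are the box centre and all four geometric values are fractions of the image size. The class order, the example row, and the 1920 × 1080 frame size are hypothetical and not taken from the study's label files.

```python
from typing import NamedTuple

CLASS_NAMES = ["ground", "dust-bathing"]  # class order is an assumption


class PixelBox(NamedTuple):
    label: str
    left: float
    top: float
    right: float
    bottom: float


def parse_yolo_line(line: str, image_width: int, image_height: int) -> PixelBox:
    """Convert one annotation row (class x y width height) to pixel corners.

    x and y are the box centre; all four values are fractions of the image size.
    """
    class_id, x, y, w, h = line.split()
    cx, cy = float(x) * image_width, float(y) * image_height
    bw, bh = float(w) * image_width, float(h) * image_height
    return PixelBox(CLASS_NAMES[int(class_id)],
                    cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)


# Hypothetical annotation row for a 1920 x 1080 frame.
box = parse_yolo_line("1 0.5 0.5 0.1 0.2", 1920, 1080)
print(box)
```

Storing coordinates as fractions keeps the labels valid when the frames are resampled to the network input size.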

Figure 1.

A dust-bathing hen (in red boxes) in three consecutive frames, as labelled for the training of the model.

2.4. Training and Validation

The YOLOv4 and YOLOv4-tiny models were trained individually on the specified dataset, starting from the pre-trained weights provided by the YOLO developers [33,36]. Each model underwent a 6000-epoch training phase during which the training hyperparameters were calibrated in detail. Here, an epoch is defined as the duration of one training step. For the best training results, hyperparameters can be modified in the YOLO configuration file. The first section of the configuration file lists the batch size (number of images used in each epoch) as well as the dimensions (width and height) of the resampled images used for training. A batch size of 64 images with a size of 608 × 608 pixels was used for training and detection. Online data augmentation was activated in the configuration file for YOLOv4 full models. Data augmentation exposes the training process to previously unobserved data, which are obtained by combining and modifying the images of the input dataset. In YOLO models, data augmentation randomly applies graphical modifications to the input images [37]. A different approach to online data augmentation can be applied for each training period. In the current training, images were augmented in terms of saturation and exposure using a coefficient of 1.5, and the hue value was randomly augmented with a coefficient of 0.05 (Figure 2). Additionally, random blur and mosaic effects were applied to the input images: mosaic combines pieces of several images into a new tiled image, while blur increases the fuzziness of the input images. The starting learning rate and its scheduler were also chosen in the configuration file. The learning rate controls how strongly the model is adapted according to the error estimated in each training epoch. The initial learning rate was 0.002 for both YOLOv4 and YOLOv4-tiny training. A total of 384,000 augmented images were used, based on the batch size (64 images) and the number of epochs (6000).
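The colour augmentation and the image count can be sketched as follows. The coefficients, batch size, and number of epochs are those reported above. The way a coefficient is turned into a random factor (a value between 1 and the coefficient, applied either directly or as its inverse) is an assumption about how such coefficients are commonly interpreted, and the single-pixel example and seed are hypothetical. This is not the Darknet implementation itself.

```python
import colorsys
import random

BATCH_SIZE = 64        # images per training step (from the text)
N_EPOCHS = 6000        # training steps (from the text)
SATURATION = 1.5       # saturation coefficient (from the text)
EXPOSURE = 1.5         # exposure coefficient (from the text)
HUE = 0.05             # hue coefficient (from the text)


def random_scale(rng: random.Random, coefficient: float) -> float:
    """Draw a factor in [1/coefficient, coefficient], up or down with equal odds."""
    scale = rng.uniform(1.0, coefficient)
    return scale if rng.random() < 0.5 else 1.0 / scale


def jitter_pixel(rgb, rng: random.Random):
    """Apply one random hue/saturation/exposure change to an RGB pixel in [0, 1]."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = (h + rng.uniform(-HUE, HUE)) % 1.0           # hue is circular
    s = min(1.0, s * random_scale(rng, SATURATION))  # clip to the valid range
    v = min(1.0, v * random_scale(rng, EXPOSURE))
    return colorsys.hsv_to_rgb(h, s, v)


rng = random.Random(0)  # hypothetical seed
print(jitter_pixel((0.6, 0.4, 0.3), rng))
print(BATCH_SIZE * N_EPOCHS)  # 384000 augmented images seen during training
```

Because the factors are redrawn at every step, the same source image is unlikely to be presented to the network twice in exactly the same form.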

Figure 2.

Augmentation and classification scheme for laying hens on the floor (red boxes) and dust-bathing hens (green boxes).

All models were trained using the stochastic gradient descent with warm restarts (SGDR) scheduler [38]. Following a cosine cycle, the SGDR reduces the learning rate from its initial value all the way down to zero and then restarts it. The user specifies the number of epochs for the first cycle, and the length of each subsequent cycle may be multiplied by a coefficient in order to lengthen the cycles during training. The initial SGDR cycle used in this study lasted for 1000 epochs, with a multiplier coefficient of 2.
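A minimal sketch of such a schedule is given below, using the cosine-annealing formula with a minimum learning rate of zero. The initial learning rate, first cycle length, and multiplier are the values reported in the text. The function itself is an illustration and may differ in detail from the scheduler implemented in Darknet.

```python
import math

INITIAL_LR = 0.002     # initial learning rate (from the text)
FIRST_CYCLE = 1000     # length of the first cycle in epochs (from the text)
CYCLE_MULT = 2         # each cycle is this many times longer than the previous one


def sgdr_learning_rate(epoch: int) -> float:
    """Cosine-annealed learning rate with warm restarts and a minimum of zero."""
    cycle_start, cycle_length = 0, FIRST_CYCLE
    while epoch >= cycle_start + cycle_length:   # find the cycle containing `epoch`
        cycle_start += cycle_length
        cycle_length *= CYCLE_MULT
    progress = (epoch - cycle_start) / cycle_length
    return 0.5 * INITIAL_LR * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 500, 999, 1000, 2000, 3000, 5999):
    print(epoch, f"{sgdr_learning_rate(epoch):.6f}")
```

Under these assumptions the cycles cover epochs 0 to 1000, 1000 to 3000, and 3000 to 7000, so a 6000-epoch run ends partway through the third cycle.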

The results of the training were evaluated in terms of precision, recall, and F1-score, as well as mean average precision (mAP) and frames per second (FPS). The F1-score, precision, and recall were calculated according to Equation (1), which defines the performance metrics used to evaluate the models.
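In their standard form, which Equation (1) is assumed to follow, these three metrics are written as below. The symbols TP, FP, and FN (true positive, false positive, and false negative detections) are introduced here for illustration.

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$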

The F1-score represents the harmonic mean of precision and recall, and it was introduced by Dice [39] and Sørensen [40]. Intersection over Union (IoU) [41] and mAP were also used as performance metrics. The IoU measures the degree of overlap between the predicted and the labelled bounding boxes. The mAP summarizes the average detection precision and represents the area under the precision–recall curve at a defined value of IoU. The mAP@50 represents the area under the precision–recall curve when an overlap of at least 50% between bounding boxes is required. The performance metrics were calculated on the validation dataset by running the object-detection algorithm obtained from the training.
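The overlap criterion and the derived scores can be sketched in a few lines. The box coordinates and the detection counts in the example are hypothetical and serve only to show how an IoU threshold of 0.5 separates matches from misses.

```python
IOU_THRESHOLD = 0.5  # overlap required by mAP@50


def intersection_over_union(box_a, box_b) -> float:
    """IoU of two boxes given as (left, top, right, bottom)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall, and their harmonic mean from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


labelled = (0, 0, 100, 100)       # hypothetical labelled box
close_hit = (10, 0, 110, 100)     # hypothetical prediction, small shift
far_hit = (50, 0, 150, 100)       # hypothetical prediction, large shift

print(intersection_over_union(labelled, close_hit) >= IOU_THRESHOLD)  # True
print(intersection_over_union(labelled, far_hit) >= IOU_THRESHOLD)    # False
print(precision_recall_f1(tp=8, fp=2, fn=2))
```

A prediction counts as a true positive only if it overlaps a labelled box of the same class by at least the threshold; unmatched predictions are false positives and unmatched labels are false negatives.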

Frames per second (FPS) expresses the speed achieved by the neural network, that is, the number of images per second that the network can process. Colab provides a virtual machine with the Ubuntu operating system (Canonical Ltd., London, UK), equipped with an Intel Xeon (Intel Corporation, Santa Clara, CA, USA) processor with two cores at 2.3 GHz. In Colab, 25 GB of random-access memory (RAM) is available. Training and detection tasks were performed using a Tesla P100 GPU (NVIDIA, Santa Clara, CA, USA) with CUDA parallel computing platform version 10.1 and 16 GB of dedicated RAM.

3. Results

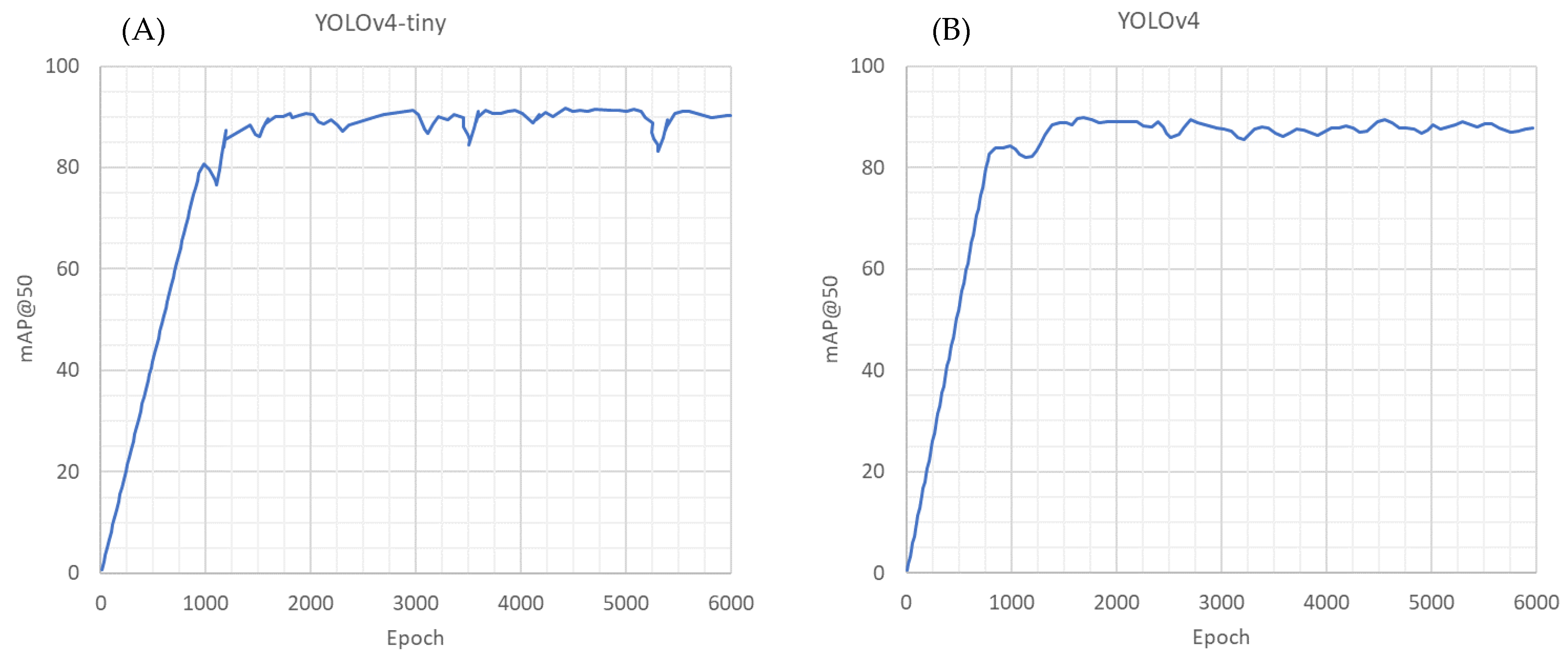

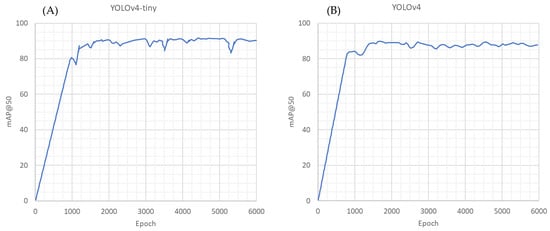

For each YOLO model, the training process (Figure 3) was conducted using the indicated configuration. The model performance on the test dataset was automatically estimated during training. Every 100 epochs, the mAP@50 was calculated, with the last calculation occurring at 6000 epochs. Table 1 reports the last and the best mAP@50 achieved during training, along with the total time spent for 6000 training epochs. YOLOv4-tiny needed about 4.26 hours to train for 6000 epochs (final mAP@50 of 90.3%, best mAP@50 of 91.7%), compared to about 23.2 hours for the full YOLOv4 model (final mAP@50 of 87.8%, best mAP@50 of 90.0%).

Figure 3.

Area under the precision–recall curve with a grade of overlapping bounding boxes of 50% (mAP@50) of YOLOv4-tiny training (A) and YOLOv4 training (B).

Table 1.

Training time, final mAP@50, and best mAP@50 (area under the precision–recall curve with a grade of overlapping bounding boxes of 50%) of the two trained models obtained on the test dataset.

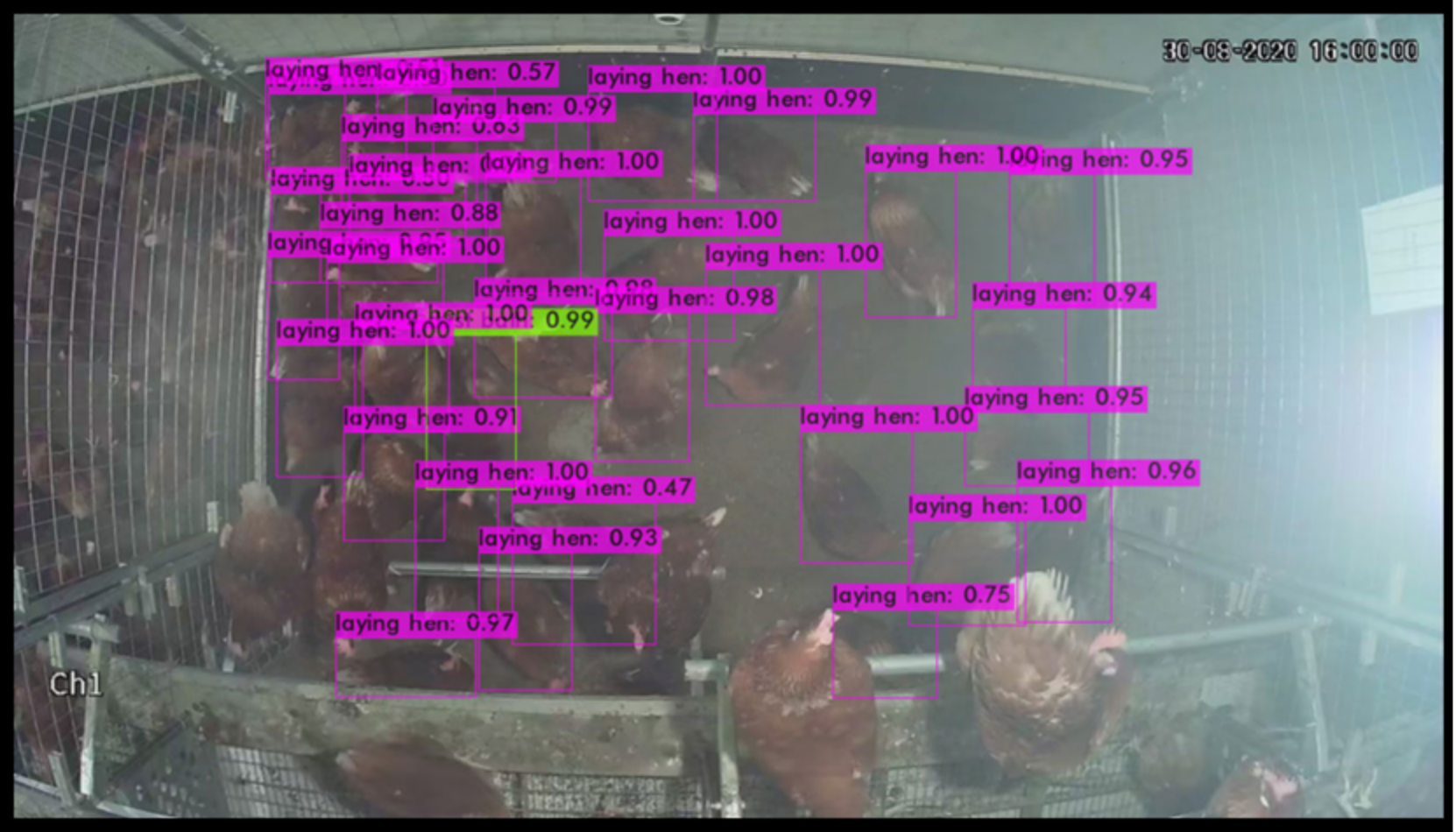

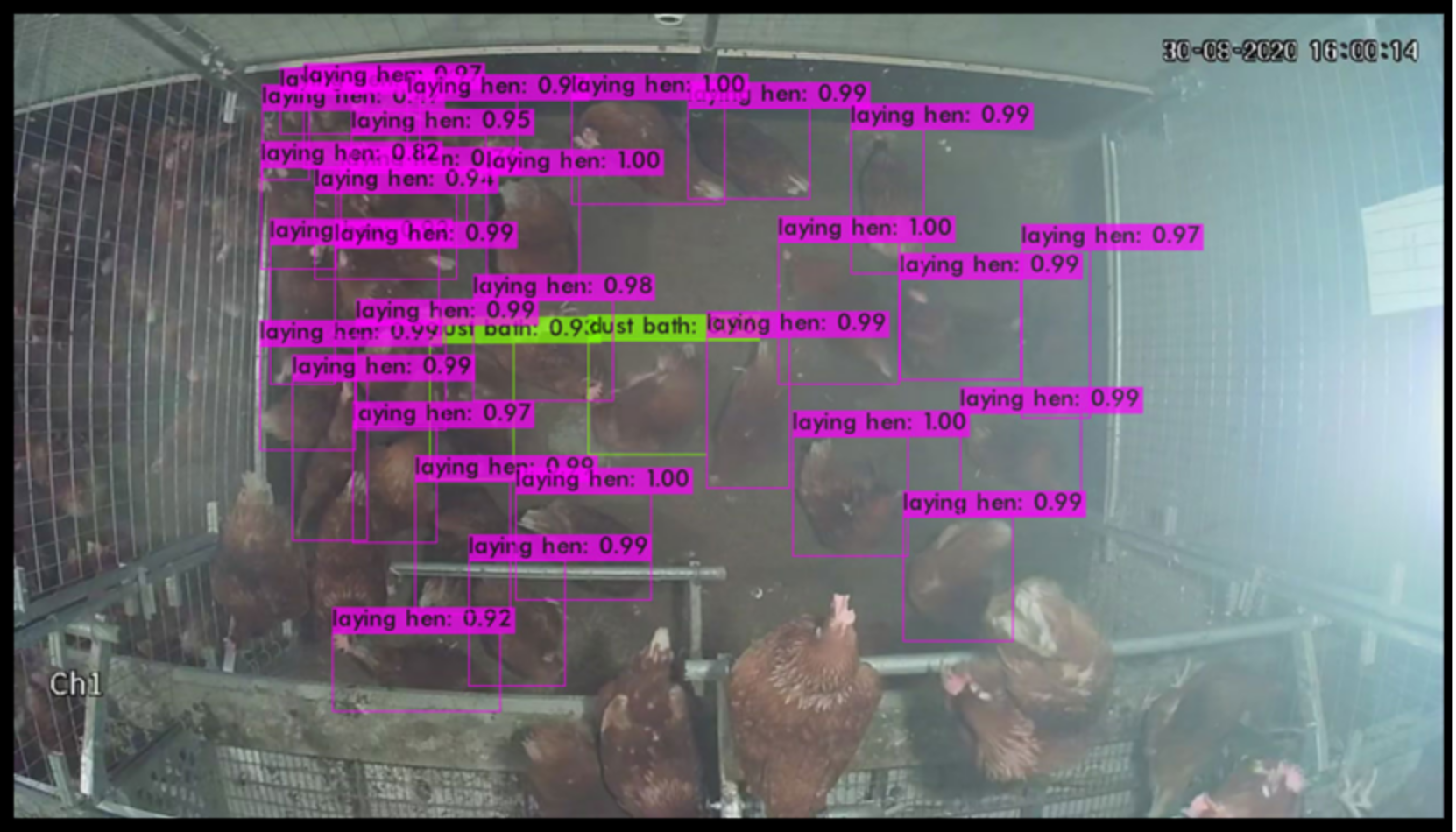

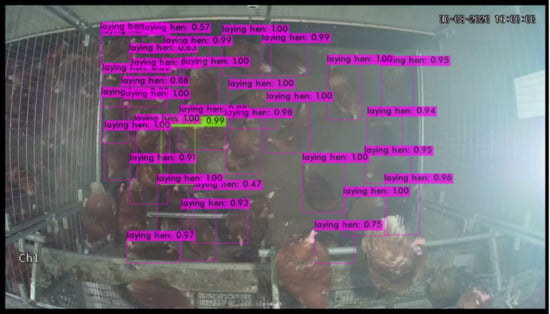

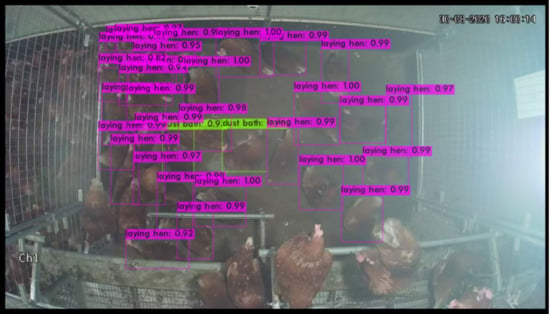

Validation of the External Dataset

Validation was carried out by running the object-detection algorithm on the validation set (50 randomly selected images), which was not used for the training (Figure 4 and Figure 5). The performance of the two models in terms of IoU, precision, recall, F1-score, and mAP did not differ substantially, while FPS was considerably lower for YOLOv4 than for the tiny version (31.35 vs. 208.5) (Table 2). The mean average precision stood at 61.4% and 62.9% for YOLOv4-tiny and YOLOv4, respectively. However, the per-class values differed widely: the classification of hens on the floor reached high values of around 94%, whereas the classification of dust-bathing hens was poor, at 28.2% for YOLOv4-tiny and 31.6% for YOLOv4.

Figure 4.

Object-detection classification performed on an image of the validation set using YOLOv4-tiny. Purple boxes identify detections classified as laying hens, with the corresponding confidence score. Green boxes identify detections classified as dust-bathing hens, with the corresponding confidence score.

Figure 5.

Object-detection classification performed on an image of the validation set using YOLOv4. Purple boxes identify detections classified as laying hens, with the corresponding confidence score. Green boxes identify detections classified as dust-bathing hens, with the corresponding confidence score.

Table 2.

Metrics of the two models on the validation dataset (average precision for each class) for the classification of hens on the floor and dust-bathing hens.

4. Discussion

Previously, PLF tools based on images have been successfully used to detect foot problems in broiler chickens [8], to classify sick and healthy birds based on their body posture [6], and to classify species-specific behaviours with an overall success rate of 97% and 70% in calibration and validation, respectively [42].

Pu et al. [43] proposed an automatic CNN to classify the behaviours of broiler chickens based on images acquired by a depth camera under three stocking-density conditions (high, medium, and low crowding in a poultry farm). The latter CNN architecture reached an accuracy of 99.17% in the classification of flock behaviours.

In poultry breeders, six behaviours were classified under low- and high-stocking density in a combined wire cage system with two pens and using the deep-learning YOLOv3 algorithm [44]. The model always identified several behaviours with different but always high degrees of accuracy, i.e., mean precision rate of 94.72% for mating; 94.57% for standing; 93.10% for feeding; 92.02% for spreading; 88.67% for fighting; and 86.88% for drinking. The accuracy of the model was lower in the high-density cages compared to the low-density cages due to shielding among the birds. Based on these results, the same authors succeeded in evaluating animal welfare on different observation days based on the frequencies of mating events and abnormal behaviours, where the latter were related to changes by ±3% in fighting, feeding, and resting compared to a fixed baseline. Recently, Siriani et al. [45] successfully used YOLO to detect laying hens in an aviary with 99.9% accuracy in low-quality videos.

The latest systems based on image analyses use neural network technology to obtain information about animal health and welfare. Regarding health, Wang et al. [7] used CNN algorithms (Faster R-CNN and YOLOv3) to detect the emergence of gut infections based on images of faeces from broiler chickens kept in multi-tier cages, with average precisions of 93.3% and 84.3%, respectively. Similarly, Mbelwa et al. [46] used CNN technology to predict broiler chicken health statuses based on images of bird droppings.

In our study, according to the validation dataset results, hens on the floor and dust-bathing hens were detected with a mean average precision of 61.4% and 62.9% for YOLOv4-tiny and YOLOv4, respectively. Considering the detection of hens on the floor alone, the average precisions were 94.5% and 94.1% for YOLOv4-tiny and YOLOv4, respectively. These latter performance metrics are comparable to those obtained by previous studies [45,47]. On the other hand, the average precision for the classification of dust-bathing hens was only 28.2% and 31.6% for YOLOv4-tiny and YOLOv4, respectively. Thus, the main difference found between the two versions of YOLO was related to the identification of dust bathing, for which YOLOv4 achieved an average precision 3.4 percentage points higher than YOLOv4-tiny. Conversely, YOLOv4-tiny was able to classify hens on the floor and dust-bathing hens much faster than YOLOv4 (208.5 FPS vs. 31.35 FPS).
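The relationship between the figures quoted above can be checked with a few lines of arithmetic. The sketch assumes that the overall value is the unweighted mean of the two per-class average precisions and that per-frame latency is simply the inverse of FPS. All input values are taken from the text.

```python
# Validation results reported in the text (average precision in %, speed in FPS).
RESULTS = {
    "YOLOv4-tiny": {"ap_ground": 94.5, "ap_dust_bathing": 28.2, "fps": 208.5},
    "YOLOv4": {"ap_ground": 94.1, "ap_dust_bathing": 31.6, "fps": 31.35},
}


def mean_average_precision(result: dict) -> float:
    """Unweighted mean of the per-class average precisions."""
    return (result["ap_ground"] + result["ap_dust_bathing"]) / 2


def latency_ms(result: dict) -> float:
    """Average processing time per frame, in milliseconds."""
    return 1000.0 / result["fps"]


for name, result in RESULTS.items():
    print(f"{name}: mAP {mean_average_precision(result):.2f}%, "
          f"{latency_ms(result):.2f} ms per frame")

speed_ratio = RESULTS["YOLOv4-tiny"]["fps"] / RESULTS["YOLOv4"]["fps"]
print(f"YOLOv4-tiny is {speed_ratio:.2f} times faster than YOLOv4")
```

The means come out at 61.35% and 62.85%, which agree with the reported 61.4% and 62.9% to within rounding of the per-class values. This shows that the modest overall score is driven almost entirely by the dust-bathing class.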

In our study, although the precision in the classification of dust-bathing hens was much lower than that obtained for the classification of hens on the floor, this work represents the first application of YOLO for the identification of dust-bathing behaviour. Dust bathing has a functional purpose in laying hens since it permits them to reset their feathers and remove excess lipids from the skin, and the process contributes to their protection from parasites [19]. However, dust bathing is an active behaviour that includes many actions around a litter area (e.g., bill raking, head rubbing, scratching with one and/or two legs, side lying, ventral lying, vertical wing shaking), as well as a synchronous behaviour (which can imply overcrowding of the litter area) [19]. Thus, both the identification of dust-bathing hens during labelling and the classification of dust-bathing hens by the ML algorithms were likely affected by the different positions that dust-bathing hens assume, which adds a considerable level of uncertainty to our findings, as the identification of the right moment when the dust bath begins is stochastic. To reduce the effects of this uncertainty, online real-time tracking could be implemented in order to consider the temporal variation and movements of hens [47]. Finally, a limited number of images (1150 images, 122 MB) was sufficient to train an object-detection algorithm with good results on hens on the floor by using an affordable and widespread platform (Google Colaboratory), thus minimizing the digital impact [48]. In fact, it is increasingly necessary to train suitable models with a limited number of images [49].

5. Conclusions

Under the conditions of the present study, machine learning based on the YOLO algorithm successfully identified laying hens on the floor, regardless of their activity, which could be useful for the control of the challenging piling behaviour of hens during rearing and for the implementation of on-farm early alert systems. On the other hand, while the on-farm evaluation of hens performing comfort behaviour would be useful for measuring the general welfare condition of hens or the occurrence of any challenging event or factors, the classification of dust-bathing hens was rather poor. Nevertheless, this is the first application related to such a behaviour, which comprises a well-defined sequence of different movements, for which PLF tools other than image analysis might be more successful for the classification of dust-bathing behaviours. The peculiarity of images of dust-bathing hens, in terms of illumination and geometry, can lead to complicated classification. On the other hand, the present work took advantage of a relatively low number of training images (1150), where an even further reduction would be desirable in order to ease actual field applications.
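As a purely illustrative sketch of how per-frame counts of hens on the floor could feed an early alert for crowding, the snippet below flags frames where the rolling mean count exceeds a threshold. The counts, threshold, and window length are hypothetical and were not evaluated in the present study.

```python
from collections import deque


def crowding_alerts(counts, threshold: float, window: int):
    """Return the frame indices at which the rolling mean count exceeds `threshold`."""
    recent = deque(maxlen=window)   # keeps only the last `window` counts
    alerts = []
    for index, count in enumerate(counts):
        recent.append(count)
        if len(recent) == window and sum(recent) / window > threshold:
            alerts.append(index)
    return alerts


# Hypothetical number of hens detected on the floor in consecutive frames.
counts = [40, 42, 45, 80, 95, 110, 120, 60]
alerts = crowding_alerts(counts, threshold=90, window=3)
print(alerts)  # [5, 6, 7]
```

Averaging over a short window rather than reacting to a single frame makes such an alert less sensitive to isolated detection errors.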

Author Contributions

Design of the in vivo trial with laying hens, A.T. and G.X.; design of the methodology for image analyses by deep learning tools, F.M. and M.S.; design of the video recording system in the farm for the image analyses, terms of identification of laying hens for YOLO, management of video recordings, video storage and transfer, F.P.; labelling of images, A.B. and C.C.; data curation of labelled images, M.S. and A.B.; formal analyses of images by deep learning tools, M.S.; resources, G.X. and A.T.; writing—original draft preparation, M.S., G.P., C.C., F.B. and A.T.; writing—review, G.X. and F.M.; writing—editing, all authors; supervision, F.M. and A.T.; project administration, A.T. and G.X.; funding acquisition, A.T. and G.X. All authors have read and agreed to the published version of the manuscript.

Funding

This study was carried out within the Agritech National Research Center and received funding from the European Union Next-GenerationEU (PIANO NAZIONALE DI RIPRESA E RESILIENZA (PNRR)—MISSIONE 4 COMPONENTE 2, INVESTIMENTO 1.4—D.D. 1032 17/06/2022, CN00000022). This manuscript reflects only the authors’ views and opinions, neither the European Union nor the European Commission can be considered responsible for them. Animal facilities (aviary), equipment, animals and feeding were funded by Uni-Impresa (year 2019; CUP: C22F20000020005). The PhD grant of Giulio Pillan was funded by Unismart and Office Facco S.p.A. (call 2019).

Institutional Review Board Statement

The study was approved by the Ethical Committee for Animal Experimentation (Organismo per la Protezione del Benessere Animale, OPBA) of the University of Padova (project 28/2020; Prot. n. 204398 of 06/05/2020). All animals were handled according to the principles stated by the EU Directive 2010/63/EU regarding the protection of animals used for experimental and other scientific purposes. Research staff involved in animal handling were animal specialists (PhD or MS in Animal Science) and veterinary practitioners.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Halachmi, I.; Guarino, M.; Bewley, J.; Pastell, M. Smart Animal Agriculture: Application of Real-Time Sensors to Improve Animal Well-Being and Production. Annu. Rev. Anim. Biosci. 2019, 7, 403–425. [Google Scholar] [CrossRef] [PubMed]

- Li, N.; Ren, Z.; Li, D.; Zeng, L. Review: Automated techniques for monitoring the behaviour and welfare of broilers and laying hens: Towards the goal of precision livestock farming. Animal 2020, 14, 617–625. [Google Scholar] [CrossRef]

- Berckmans, D. General introduction to precision livestock farming. Anim. Front. 2017, 7, 6–11. [Google Scholar] [CrossRef]

- Neethirajan, S. The role of sensors, big data and machine learning in modern animal farming. Sens. Bio-Sens. Res. 2020, 29, 100367. [Google Scholar] [CrossRef]

- Neethirajan, S. Transforming the adaptation physiology of farm animals through sensors. Animals 2020, 10, 1512. [Google Scholar] [CrossRef]

- Zhuang, X.; Bi, M.; Guo, J.; Wu, S.; Zhang, T. Development of an early warning algorithm to detect sick broilers. Comput. Electron. Agric. 2018, 144, 102–113. [Google Scholar] [CrossRef]

- Wang, J.; Shen, M.; Liu, L.; Xu, Y.; Okinda, C. Recognition and Classification of Broiler Droppings Based on Deep Convolutional Neural Network. J. Sens. 2019, 2019, 823515. [Google Scholar] [CrossRef]

- Fernández, A.P.; Norton, T.; Tullo, E.; van Hertem, T.; Youssef, A.; Exadaktylos, V.; Vranken, E.; Guarino, M.; Berckmans, D. Real-time monitoring of broiler flock’s welfare status using camera-based technology. Biosyst. Eng. 2018, 173, 103–114. [Google Scholar] [CrossRef]

- Kashiha, M.; Pluk, A.; Bahr, C.; Vranken, E.; Berckmans, D. Development of an early warning system for a broiler house using computer vision. Biosyst. Eng. 2013, 116, 36–45. [Google Scholar] [CrossRef]

- Rowe, E.; Dawkins, M.S.; Gebhardt-Henrich, S.G. A Systematic Review of Precision Livestock Farming in the Poultry Sector: Is Technology Focussed on Improving Bird Welfare? Animals 2019, 9, 614. [Google Scholar] [CrossRef]

- Wurtz, K.; Camerlink, I.; D’Eath, R.B.; Fernández, A.P.; Norton, T.; Steibel, J.; Siegford, J. Recording behaviour of in-door-housed farm animals automatically using machine vision technology: A systematic review. PLoS ONE 2019, 14, 12. [Google Scholar] [CrossRef] [PubMed]

- Zaninelli, M.; Redaelli, V.; Luzi, F.; Mitchell, M.; Bontempo, V.; Cattaneo, D.; Dell’Orto, V.; Savoini, G. Development of a machine vision method for the monitoring of laying hens and detection of multiple nest occupations. Sensors 2018, 18, 132. [Google Scholar] [CrossRef] [PubMed]

- European Commission 2022. Eggs Market Situation Dashboard. Available online: https://ec.europa.eu/info/sites/default/files/food-farming-fisheries/farming/documents/eggs-dashboard_en.pdf (accessed on 1 August 2022).

- Hartcher, K.M.; Jones, B. The welfare of layer hens in cage and cage-free housing systems. Worlds Poult. Sci. J. 2017, 73, 767–782. [Google Scholar] [CrossRef]

- Gautron, J.; Dombre, C.; Nau, F.; Feidt, C.; Guillier, L. Review: Production factors affecting the quality of chicken table eggs and egg products in Europe. Animal 2022, 16, 100425. [Google Scholar] [CrossRef] [PubMed]

- Campbell, D.L.M.; Makagon, M.M.; Swanson, J.C.; Siegford, J.M. Litter use by laying hens in a commercial aviary: Dust bathing and piling. Poult. Sci. 2016, 95, 164–175. [Google Scholar] [CrossRef] [PubMed]

- Winter, J.; Toscano, M.J.; Stratmann, A. Piling behaviour in Swiss layer flocks: Description and related factors. Appl. Anim. Behav. Sci. 2021, 236, 105272. [Google Scholar] [CrossRef]

- Ferrante, V.; Lolli, S. Specie avicole. In Etologia Applicata e Benessere Animale; Vol. 2—Parte Speciale; Carenzi, C., Panzera, M., Eds.; Point Veterinaire Italie Srl: Milan, Italy, 2009; pp. 89–106. [Google Scholar]

- Grebey, T.G.; Ali, A.B.A.; Swanson, J.C.; Widowski, T.M.; Siegford, J.M. Dust bathing in laying hens: Strain, proximity to, and number of conspecifics matter. Poult. Sci. 2020, 99, 4103–4112. [Google Scholar] [CrossRef]

- Riddle, E.R.; Ali, A.B.A.; Campbell, D.L.M.; Siegford, J.M. Space use by 4 strains of laying hens to perch, wing flap, dust bathe, stand and lie down. PLoS ONE 2018, 13, e0190532. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep Learning in Agriculture: A Survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef]

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674. [Google Scholar] [CrossRef]

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object Detection with Deep Learning: A Review. IEEE Trans. Neural. Netw. Learn. Syst. 2019, 30, 3212–3232. [Google Scholar] [CrossRef] [PubMed]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073. [Google Scholar] [CrossRef]

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic bunch detection in white grape varieties using YOLOv3, YOLOv4, and YOLOv5 deep learning algorithms. Agronomy 2022, 12, 319. [Google Scholar] [CrossRef]

- Pillan, G.; Trocino, A.; Bordignon, F.; Pascual, A.; Birolo, M.; Concollato, A.; Pinedo Gil, J.; Xiccato, G. Early training of hens: Effects on the animal distribution in an aviary system. Acta Fytotech. Zootech. 2020, 23, 269–275. [Google Scholar] [CrossRef]

- Bresilla, K.; Perulli, G.D.; Boini, A.; Morandi, B.; Corelli Grappadelli, L.; Manfrini, L. Single-Shot Convolution Neural Networks for Real-Time Fruit Detection Within the Tree. Front. Plant Sci. 2019, 10, 611. [Google Scholar] [CrossRef] [PubMed]

- Yi, Z.; Yongliang, S.; Jun, Z. An improved tiny-yolov3 pedestrian detection algorithm. Optik 2019, 183, 17–23. [Google Scholar] [CrossRef]

- Yang, G.; Feng, W.; Jin, J.; Lei, Q.; Li, X.; Gui, G.; Wang, W. Face mask recognition system with YOLOV5 based on image recognition. In Proceedings of the IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 1398–1404. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, 2004, 10934. [Google Scholar] [CrossRef]

- Redmon, J. Darknet: Open Source Neural Networks in C. 2013–2016. Available online: https://pjreddie.com/darknet (accessed on 1 September 2022).

- Wang, J.; Wang, N.; Li, L.; Ren, Z. Real-time behavior detection and judgment of egg breeders based on YOLO v3. Neural. Comput. Appl. 2020, 32, 5471–5481. [Google Scholar] [CrossRef]

- Kwon, Y.; Choi, W.; Marrable, D.; Abdulatipov, R.; Loïck, J. Yolo_label 2020. Available online: https://github.com/developer0hye/Yolo_Label (accessed on 1 September 2020).

- Bochkovskiy, A. YOLOv4. Available online: https://github.com/AlexeyAB/darknet/releases (accessed on 1 September 2022).

- Van Dyk, D.A.; Meng, X.L. The art of data augmentation. J. Comput. Graph. Stat. 2001, 10, 1–50. [Google Scholar] [CrossRef]

- Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017. arXiv 2017, 1608, 03983. [Google Scholar] [CrossRef]

- Dice, L.R. Measures of the Amount of Ecologic Association between Species. Ecology 1945, 26, 297–302. [Google Scholar] [CrossRef]

- Sørensen, T.J. A Method of Establishing Groups of Equal Amplitudes in Plant Sociology Based on Similarity of Species Content and Its Application to Analyses of the Vegetation on Danish Commons. K. Dan. Vidensk. Selsk. 1948, 5, 1–34. Available online: https://www.royalacademy.dk/Publications/High/295_S%C3%B8rensen,%20Thorvald.pdf (accessed on 1 September 2022).

- Jaccard, P. The distribution of the flora in the alpine zone. New Phytol. 1912, 11, 37–50. [Google Scholar] [CrossRef]

- Pereira, D.F.; Miyamoto, B.C.; Maia, G.D.; Sales, G.T.; Magalhães, M.M.; Gates, R.S. Machine vision to identify broiler breeder behavior. Comput. Electron. Agric. 2013, 99, 194–199. [Google Scholar] [CrossRef]

- Pu, H.; Lian, J.; Fan, M. Automatic Recognition of Flock Behavior of Chickens with Convolutional Neural Network and Kinect Sensor. Int. J. Pattern. Recognit. Artif. Intell. 2018, 32, 7. [Google Scholar] [CrossRef]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; IEEE Computer Society: Washington, DC, USA, 2020; Volume 2020, pp. 1571–1580. [Google Scholar]

- Siriani, A.L.R.; Kodaira, V.; Mehdizadeh, S.A.; de Alencar Nääs, I.; de Moura, D.J.; Pereira, D.F. Detection and tracking of chickens in low-light images using YOLO network and Kalman filter. Neural. Comput. Appl. 2022, 34, 21987–21997. [Google Scholar] [CrossRef]

- Mbelwa, H.; Machuve, D.; Mbelwa, J. Deep Convolutional Neural Network for Chicken Diseases Detection. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 759–765. [Google Scholar] [CrossRef]

- Chang, K.R.; Shih, F.P.; Hsieh, M.K.; Hsieh, K.W.; Kuo, Y.F. Analyzing chicken activity level under heat stress condition using deep convolutional neural networks. In Proceedings of the ASABE Annual International Meeting, Houston, TX, USA, 17–20 July 2022. [Google Scholar] [CrossRef]

- Kayad, A.; Sozzi, M.; Paraforos, D.S.; Rodrigues, F.A., Jr.; Cohen, Y.; Fountas, S.; Francisco, M.J.; Pezzuolo, A.; Grigolato, S.; Marinello, F. How many gigabytes per hectare are available in the digital agriculture era? A digitization footprint estimation. Comput. Electron. Agric. 2022, 198, 107080. [Google Scholar] [CrossRef]

- Yang, J.; Zhang, Z.; Li, Y. Agricultural Few-Shot Selection by Model Confidences for Multimedia Internet of Things Acquisition Dataset. In Proceedings of the 2022 IEEE International Conferences on Internet of Things (iThings) and IEEE Green Computing & Communications (GreenCom) and IEEE Cyber, Physical & Social Computing (CPSCom) and IEEE Smart Data (SmartData) and IEEE Congress on Cybermatics (Cybermatics), Espoo, Finland, 22–25 August 2022; IEEE Computer Society: Washington, DC, USA, 2022; pp. 488–494. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).