Abstract

Background/Objectives: Precise breast ultrasound (BUS) segmentation supports reliable measurement, quantitative analysis, and downstream classification, yet it remains difficult for small or low-contrast lesions with fuzzy margins and speckle noise. Text prompts can add clinical context, but directly applying weakly localized text–image cues (e.g., CAM/CLIP-derived signals) tends to produce coarse, blob-like responses that smear boundaries unless additional mechanisms recover fine edges. Methods: We propose XBusNet, a novel dual-prompt, dual-branch multimodal model that combines image features with clinically grounded text. A global pathway based on a CLIP Vision Transformer encodes whole-image semantics conditioned on lesion size and location, while a local U-Net pathway emphasizes precise boundaries and is modulated by prompts that describe shape, margin, and Breast Imaging Reporting and Data System (BI-RADS) terms. Prompts are assembled automatically from structured metadata, requiring no manual clicks. We evaluate the model on the Breast Lesions USG (BLU) dataset using five-fold cross-validation. The primary metrics are Dice and Intersection over Union (IoU); we also conduct size-stratified analyses and ablations to assess the roles of the global and local paths and the text-driven modulation. Results: XBusNet achieves state-of-the-art performance on BLU, with a mean Dice of 0.8766 and an IoU of 0.8150, outperforming six strong baselines. Small lesions show the largest gains, with fewer missed regions and fewer spurious activations. Ablation studies show complementary contributions of global context, local boundary modeling, and prompt-based modulation. Conclusions: A dual-prompt, dual-branch multimodal design that merges global semantics with local precision yields accurate BUS segmentation masks and improves robustness for small, low-contrast lesions.

1. Introduction

Breast cancer is the most common cancer among U.S. women (about 30% of new female cancer cases in 2025), and roughly 42,170 women are expected to die from the disease that year [1]. Early detection and timely treatment remain central to lowering mortality [1]. Mammography, magnetic resonance imaging (MRI), and ultrasound are the main imaging modalities used for screening and diagnostic workups [2,3]. Mammography and MRI are accurate, but each has limitations: mammography loses sensitivity in women with dense breast tissue [4], and MRI, though highly sensitive, is costly and impractical for routine use [5]. Ultrasound is safe, painless, nonionizing, affordable, and widely available, qualities that are especially valuable for younger patients and in resource-limited settings [6]. Its interpretation, however, is highly operator-dependent and is often hindered by speckle noise, heterogeneous tissue appearance, and indistinct lesion boundaries, challenges documented in benchmark datasets such as BUSIS [7,8]. These factors motivate automated segmentation methods that are accurate, robust across imaging conditions, and interpretable to radiologists in routine practice.

Accurate tumor segmentation improves diagnostic precision: it enables measurements aligned with BI-RADS (size, shape, and margins), yields more reliable radiomics features, keeps classifiers focused on the lesion, and supports longitudinal tracking [9,10]. Automated segmentation also improves reproducibility and can streamline reporting in routine ultrasound. Over the past decades, breast ultrasound (BUS) segmentation has progressed in three broad stages. Early studies used classical image processing: thresholding, edge detection, region growing, and active contours [11]. Next came machine learning pipelines that paired hand-crafted texture and shape descriptors (e.g., GLCM, local binary patterns, wavelets) with supervised classifiers, such as SVMs and random forests, or with clustering and graph-based methods (fuzzy c-means, k-means, conditional random fields, graph cuts) [7]. With deep learning, U-Net and related encoder–decoder models became the standard [12,13]. Variants that add residual or dense connections and attention gates improved boundary quality [14,15,16]. Attention mechanisms and Transformer designs helped capture long-range context [17,18,19], and hybrid CNN–Transformer models reported strong results on BUS data, including a CNN–Transformer trained jointly for segmentation and classification [20,21]. Despite this progress, BUS segmentation still struggles with small tumors, low contrast, heterogeneous backgrounds, and variability across scanners and sites. These difficulties are compounded by limited labeled data, class imbalance, and annotation variability. Moreover, pixel-only training rarely uses explicit clinical descriptors (e.g., BI-RADS terms or radiomics), which can make outputs harder to interpret in routine reading [7,22]. Small-tumor-aware architectures such as STAN and ESTAN directly target the small-lesion failure mode [23,24].
In current BUS practice, lesion measurements are predominantly manual; automated, BI-RADS-aligned masks can standardize reporting and reduce inter-operator variability.

To address this gap, prior studies have injected domain knowledge into BUS segmentation in several ways. First, clinical descriptors and radiomics are used as auxiliary supervision or fused with visual features [10,25]. Second, anatomy-aware models and losses encode priors on shape, boundaries, and topology to keep masks plausible [26,27,28,29,30]. Third, ultrasound physics has been leveraged through speckle and attenuation models and quantitative ultrasound features [31,32,33,34]. These strategies improve clinical grounding but still face limits in scalability and generalization, which motivates frameworks that flexibly integrate descriptors while preserving robustness across imaging conditions.

Prompt-based learning treats segmentation as a conditional task steered by auxiliary inputs (“prompts”). In practice, prompts take several forms. (i) Spatial prompts are image coordinates or regions such as points, bounding boxes, polygons/masks, and scribbles; the Segment Anything Model (SAM) is a canonical spatially promptable segmenter that supports these types at scale [35]. (ii) Textual prompts are class names or free-form phrases that describe the target. CLIPSeg shows that text or image prompts can condition masks [36], and pairing open-vocabulary detectors or vision–language models with SAM enables text-conditioned localization and segmentation: Grounding DINO combined with SAM (“Grounded-SAM”) uses free text to propose regions, and BLIP provides strong image–text pretraining [37,38,39,40,41]. (iii) Other side information includes categorical tags or numeric attributes (e.g., approximate lesion size or laterality) that can be encoded as tokens or embeddings and used alongside visual features.

Adapting these ideas to medical imaging is challenging because lesions often have subtle contrast, boundaries are weak, and domain shift across scanners and sites is common [42]. Several adaptations target these issues: BUSSAM introduces CNN–ViT adapters for breast ultrasound, MedSAM pretrains on large medical datasets, and SAMed applies low-rank adaptation for efficient tuning [43,44,45]. Vision–language approaches have also been explored directly for ultrasound, such as CLIP-TNseg [46]; causal interventions have been proposed to stabilize text guidance under noisy or ambiguous prompts [46,47]. These studies indicate benefits from both spatial and text prompts, yet most methods adopt a single prompt modality. Few approaches jointly exploit global semantic context and fine-grained clinical attributes in a manner consistent with radiologist reasoning. Furthermore, it is desirable that segmentation outputs are consistent with BI-RADS descriptors [9]. Common tools such as Grad-CAM and attention visualizations offer partial insight but often produce diffuse or inconsistent patterns that are difficult to map to clinical terms [48,49,50].

In this work, we present XBusNet, a dual-branch, dual-prompt vision and language framework for BUS segmentation. A global branch based on Contrastive Language–Image Pretraining (CLIP) and a Vision Transformer (ViT) is conditioned by a Global Feature Context Prompt (GFCP) that encodes lesion size and centroid location to provide scene-level semantics. A local branch based on U-Net with multi-head self-attention preserves boundary detail. The local decoder is modulated by a text-conditioned scaling and shifting mechanism, Semantic Feature Adjustment (SFA), which is driven by a Local Feature Prompt (LFP) that encodes shape, margin, and BI-RADS terms. Prompts are assembled reproducibly from structured metadata, require no manual clicks, and integrate with standard training. We evaluate XBusNet on the BLU dataset using five-fold cross-validation, include ablations to isolate the role of each component, analyze performance across lesion sizes, and provide Grad-CAM visualizations as qualitative views of model focus relative to BI-RADS descriptors. We hypothesize that combining the GFCP (size and location) with the LFP (shape, margin, and BI-RADS) guides the model toward plausible regions while preserving boundaries, thereby improving BUS segmentation, especially for small or low-contrast lesions.

Our contributions are as follows:

- We propose XBusNet, a dual-branch, dual-prompt vision and language segmentation architecture that combines a CLIP ViT global branch conditioned by GFCP with a U-Net local branch with multi-head self-attention that is modulated via SFA driven by LFPs.

- We design a reproducible prompt pipeline that converts structured metadata into natural language prompts, including global prompts for lesion size and centroid and local prompts for shape, margin, and BI-RADS.

- We introduce a lightweight SFA mechanism in the local decoder to inject attribute-aware scaling and shifting, improving boundary focus while preserving fine detail.

- We provide a comprehensive evaluation protocol with five-fold cross-validation, size-stratified analysis, component ablations, and Grad-CAM overlays used as qualitative visualizations of model focus relative to BI-RADS descriptors.

2. Materials and Methods

2.1. Datasets

We used the Breast Lesions USG (BLU) dataset [51], which contains 256 breast ultrasound scans from 256 patients. Each scan includes expert-verified annotations for benign and malignant lesions. Four images containing two annotated tumors each were excluded: they represent a very small fraction of the dataset (4/256, ≈1.6%), and including them would make the per-image evaluation imbalanced and hard to interpret. The remaining 252 images include 154 benign and 98 malignant tumors. This dataset is also referred to as ‘BrEaST’. All images and labels are publicly available and fully de-identified in the BLU (‘BrEaST’) release; no new data were collected, and no identifiable information was accessed.

The dataset provides pixel-wise segmentation masks, patient-level clinical attributes such as age, breast tissue composition, signs, and symptoms, image-level BI-RADS labels, and tumor-level diagnostic information such as shape, margin, and histopathology. We considered the BUSI [52] dataset; however, it does not provide the structured clinical text fields (e.g., BI-RADS shape/margin) needed for our prompt construction. Extending XBusNet to cohorts without such fields (via automatic prompt inference) is planned.

2.2. Pre-Processing and Prompt Construction

All images were resized to 352 × 352 pixels (we therefore take $H = W = 352$ throughout). We chose 352 for compatibility with the pretrained ViT/CLIP encoder (patch size 16; $352/16 = 22$ patches per side) and to align with the backbone stride ($352/32 = 11$). Prompts were derived from structured metadata in the dataset.
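As a quick arithmetic check of the input-size choice, the hypothetical helper below (not from the XBusNet code) verifies that a square side length tiles evenly into 16-pixel ViT patches and into a CNN output grid. The backbone stride of 32 is an assumption based on the usual ResNet50 output stride.

```python
def grid_sizes(side: int, patch: int = 16, backbone_stride: int = 32) -> tuple:
    """Return (ViT patches per side, backbone cells per side) for a square input.

    Raises ValueError if the side length does not divide evenly, which would
    force padding or cropping before the encoders.
    """
    if side % patch != 0 or side % backbone_stride != 0:
        raise ValueError(f"{side} is not divisible by {patch} and {backbone_stride}")
    return side // patch, side // backbone_stride


patches, cells = grid_sizes(352)
print(patches, cells, patches * patches)  # 22 11 484
```

With a 352-pixel side, the ViT sees a 22 × 22 patch grid (484 tokens), and the deepest ResNet50 stage an 11 × 11 map; a side such as 350 would raise an error.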

The Global Feature Context Prompt encodes lesion size and location. Size is taken from the dataset metadata and discretized using training-set quantiles (small/medium/large). Location handling differs by split: for training images, location is the centroid of the reference segmentation mask $G$, computed from image moments,

$$\bar{x} = \frac{M_{10}}{M_{00}}, \qquad \bar{y} = \frac{M_{01}}{M_{00}}, \qquad M_{pq} = \sum_{x,y} x^{p}\, y^{q}\, G(x,y),$$

where $M_{00}$ is the mask area and $M_{10}$ and $M_{01}$ are first-order spatial moments. For validation/test images, location is estimated without masks from a first-pass probability map, as detailed in Section 2.7. The Local Feature Prompt encodes clinically used attributes (shape, margin, and BI-RADS) verbalized directly from metadata. Prompts are tokenized and embedded with the CLIP text encoder, as described in the model subsections.
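The training-split size and location tokens can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: the mask is a nested list of 0/1 rows, the centroid uses the raw moments (area $M_{00}$ and first-order moments $M_{10}$, $M_{01}$), and "quantiles" is taken to mean terciles (three equal-frequency bins), which is our assumption.

```python
from statistics import quantiles


def mask_centroid(mask):
    """Centroid (x_bar, y_bar) of a binary mask given as a list of rows.

    Uses raw image moments: M00 (area), M10 (sum of x), M01 (sum of y).
    """
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1
                m10 += x
                m01 += y
    if m00 == 0:
        raise ValueError("empty mask has no centroid")
    return m10 / m00, m01 / m00


def size_cut_points(train_sizes):
    """Two tercile cut points computed from training-fold lesion sizes only."""
    low, high = quantiles(train_sizes, n=3)
    return low, high


def size_token(size, cut_points):
    """Map a lesion size to a 'small' / 'medium' / 'large' token."""
    low, high = cut_points
    if size <= low:
        return "small"
    if size <= high:
        return "medium"
    return "large"


cuts = size_cut_points([10, 20, 30, 40, 50, 60])  # illustrative sizes only
print(mask_centroid([[1, 1], [1, 1]]), size_token(35, cuts))  # (0.5, 0.5) medium
```

Computing the cut points inside each fold from training sizes only mirrors the leakage control stated in the text.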

2.3. Training Setup

We used five-fold cross-validation. For each fold, the 252 annotated images were split into training and validation sets at an 80:20 ratio with no overlapping images. Unless stated otherwise, training ran for 1000 iterations per fold with a batch size of 4, the AdamW optimizer, an initial learning rate of , and a cosine schedule. Mixed-precision training was enabled via automatic mixed precision (AMP).
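A minimal sketch of the fold construction and a cosine learning-rate schedule follows. It is illustrative only: the shuffling seed, the absence of stratification and warmup, and the floor `min_lr` are our assumptions, and `base_lr` must be set to the value actually used in the experiments (the `1e-4` in the check below is a placeholder, not the paper's setting).

```python
import math
import random


def kfold_splits(n_items, k=5, seed=0):
    """Yield (train_idx, val_idx) for k disjoint folds over n_items images.

    Each image appears in exactly one validation fold; with k = 5 the split is
    roughly 80:20. Plain random assignment (no stratification) is an assumption.
    """
    order = list(range(n_items))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]
    for i in range(k):
        val = sorted(folds[i])
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, val


def cosine_lr(step, total_steps, base_lr, min_lr=0.0):
    """Cosine-annealed learning rate without warmup (warmup is not specified)."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for train, val in kfold_splits(252):
    print(len(train), len(val))
```

Building all folds from one shuffled order guarantees that every image is validated exactly once, which is what allows per-fold means to be pooled across the five folds.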

Hyperparameter Tuning and Overfitting Control

Within each cross-validation fold, we performed a small hyperparameter sweep restricted to the fold’s training partition (no images from the fold’s validation/test partition were used for selection). We varied a compact set of parameters (learning rate, weight decay, batch size, and loss weights) while keeping all other settings fixed. The best configuration was chosen by the mean Dice on an internal split of the training partition and then fixed across all folds and all methods we implemented, for comparability. To mitigate overfitting, we limited the sweep budget, used weight decay (AdamW), and monitored the train–validation gap; we did not use data augmentation or dropout, in order to isolate architectural effects. No fold showed unstable training or divergence. We acknowledge that nested cross-validation would further decouple tuning from evaluation and leave a fully nested protocol to future work.

2.4. Evaluation Metrics

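We report Dice, Intersection over Union (IoU), the false positive rate (FPR), and the false negative rate (FNR). Let $G \in \{0,1\}^{H \times W}$ be the ground-truth mask, where $H$ and $W$ are the resized image height and width, and let $P \in [0,1]^{H \times W}$ be the predicted probability map. We obtain a binary prediction, $\hat{Y}$, by thresholding $P$ at the operating threshold $\tau$:

$$\hat{Y}_i = \begin{cases} 1, & P_i \ge \tau, \\ 0, & \text{otherwise}. \end{cases}$$

The pixel-wise counts (sums over all pixels $i$) are

$$\mathrm{TP} = \sum_i \hat{Y}_i G_i, \quad \mathrm{FP} = \sum_i \hat{Y}_i (1 - G_i), \quad \mathrm{FN} = \sum_i (1 - \hat{Y}_i) G_i, \quad \mathrm{TN} = \sum_i (1 - \hat{Y}_i)(1 - G_i).$$

The per-image metrics are

$$\mathrm{Dice} = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN}}, \quad \mathrm{IoU} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP} + \mathrm{FN}}, \quad \mathrm{FPR} = \frac{\mathrm{FP}}{\mathrm{FP} + \mathrm{TN}}, \quad \mathrm{FNR} = \frac{\mathrm{FN}}{\mathrm{FN} + \mathrm{TP}}.$$

We computed metrics per image and averaged them within each fold; the fold means were then summarized across the five folds (mean ± SD). For numerical stability, a small constant $\epsilon$ was added to the denominators in the implementation.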
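The per-image metrics and the two-level averaging can be sketched in plain Python on flattened masks. This is a sketch under assumptions: the conventional definitions FPR = FP/(FP + TN) and FNR = FN/(FN + TP) are used, `tau=0.5` and `eps=1e-6` are placeholder defaults rather than reported settings, and the SD across folds is the sample SD.

```python
from statistics import mean, stdev


def confusion_counts(prob, gt, tau):
    """Pixel counts (TP, FP, FN, TN) after thresholding probabilities at tau."""
    tp = fp = fn = tn = 0
    for p, g in zip(prob, gt):
        y = 1 if p >= tau else 0
        if y and g:
            tp += 1
        elif y:
            fp += 1
        elif g:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def image_metrics(prob, gt, tau=0.5, eps=1e-6):
    """Per-image Dice, IoU, FPR, FNR; eps guards empty denominators."""
    tp, fp, fn, tn = confusion_counts(prob, gt, tau)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn + eps),
        "iou": tp / (tp + fp + fn + eps),
        "fpr": fp / (fp + tn + eps),
        "fnr": fn / (fn + tp + eps),
    }


def summarize_folds(per_image_scores_by_fold):
    """Average per image within each fold, then mean and sample SD across folds."""
    fold_means = [mean(scores) for scores in per_image_scores_by_fold]
    return mean(fold_means), stdev(fold_means)


m = image_metrics([0.9, 0.8, 0.2, 0.6], [1, 1, 1, 0])
print({k: round(v, 4) for k, v in m.items()})
# {'dice': 0.6667, 'iou': 0.5, 'fpr': 1.0, 'fnr': 0.3333}
```

Averaging within folds first means each fold contributes equally to the reported mean ± SD, regardless of small differences in fold size.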

2.5. Implementation Details and Hardware

All experiments were implemented in PyTorch 2.9.0 and run on a cluster of NVIDIA A100 Tensor Core GPUs (SXM form factor; NSF MRI award #2117941). The CLIP encoders were frozen during training. The ResNet50 encoder was initialized from ImageNet weights and fine-tuned. For deployment, we provide an inference-profiling script and notes on memory and throughput; latency depends on hardware and batch size.

2.6. Overall Architecture

XBusNet is a text-guided medical image segmentation framework that integrates fine-grained local context from medical images with global semantic cues derived from natural language prompts. The model follows a dual-branch, dual-prompt vision and language design with a Global Feature Extractor (GFE) and a Local Feature Extractor (LFE) that run in parallel and exchange information through a Semantic Feature Adjustment (SFA) mechanism (see Figure 1). The GFE uses a CLIP-based Vision Transformer with a patch size of 16 to capture global semantic relationships conditioned on a high-level conditional prompt, $T_g$ (the GFCP). The LFE uses a ResNet50 encoder with Transformer blocks at deep levels and a U-Net-style decoder to represent spatial detail under a phrase-level prompt, $T_l$ (the LFP).

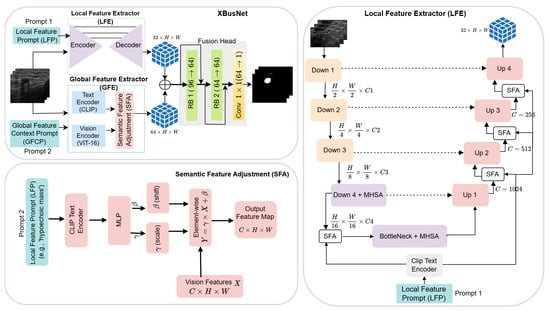

Figure 1.

Overview of the proposed XBusNet architecture, showing the Local Feature Extractor (LFE), Global Feature Extractor (GFE), and Semantic Feature Adjustment (SFA) modules. RB = residual block; MHSA = multi-head self-attention.

Let $I \in \mathbb{R}^{B \times 3 \times H \times W}$ denote the input image batch, and let $T_g$ and $T_l$ be the conditional (global) and local prompts. (Here, $H$ and $W$ denote the resized input-image size, whereas $h$ and $w$ denote the feature-map size at the working resolution.)

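The two branches produce

$$F_g = \mathrm{GFE}(I, T_g), \qquad F_l = \mathrm{LFE}(I, T_l),$$

with $F_g \in \mathbb{R}^{B \times C_g \times h \times w}$ and $F_l \in \mathbb{R}^{B \times C_l \times h \times w}$. (Here, $C_g$ and $C_l$ denote the channel widths; in our implementation, $C_l = 32$, the width of the local decoder output.) SFA modulates features in both branches using scaling and shifting parameters computed from the corresponding prompt embedding:

$$\tilde{F}_g = \gamma_g \odot F_g + \beta_g, \qquad \tilde{F}_l = \gamma_l \odot F_l + \beta_l,$$

where ⊙ denotes element-wise multiplication and $(\gamma_g, \beta_g)$ and $(\gamma_l, \beta_l)$ are predicted from the embeddings of $T_g$ and $T_l$, respectively. For channel-wise FiLM, $\gamma, \beta \in \mathbb{R}^{B \times C \times 1 \times 1}$ are broadcast over $h \times w$ (not pixel-wise). Prompt embeddings $z_g, z_l \in \mathbb{R}^{e}$ (with $e$ the text-embedding dimension) are linearly projected to a fixed width, $d$, for conditioning; we use the same $d$ throughout, including Equation (9). A residual form can be used at implementation time: $\tilde{F} = F + \gamma \odot F + \beta$.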

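The fusion head integrates the modulated features and prepares them for prediction:

$$F_{\mathrm{fuse}} = \mathcal{R}\big([\tilde{F}_g;\, \tilde{F}_l]\big),$$

where $[\cdot\,;\cdot]$ denotes channel-wise concatenation and $\mathcal{R}$ denotes the two residual blocks described in Section 2.10. This is followed by a convolution that yields the segmentation logits:

$$S = \mathrm{Conv}(F_{\mathrm{fuse}}).$$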

This organization lets the network use language to steer scene-level context while the local pathway preserves boundaries and fine structure.

2.7. Global Feature Extractor (GFE)

2.7.1. Global Features and Prompts

Global features comprise size and location. Lesion size is read from the dataset metadata and discretized using training-set quantiles (small/medium/large). Location handling differs by split. (i) Training: the location is the centroid of the reference segmentation mask, computed from the image moments $M_{00}$, $M_{10}$, and $M_{01}$ as $(\bar{x}, \bar{y}) = (M_{10}/M_{00},\, M_{01}/M_{00})$. (ii) Validation/test: the location is estimated from a first-pass probability map, $P$, obtained by running the model without spatial text; $P$ is thresholded at a fixed threshold, $\tau$, the largest connected component is retained as a coarse proposal, $R$, and its centroid, $(\bar{x}_R, \bar{y}_R)$, is computed. Centroids are mapped to breast quadrants (upper/lower × inner/outer) to produce a location token. Size and location tokens are verbalized to form the Global Feature Context Prompt, $T_g$. Quantiles and thresholds are fixed a priori per fold using only the corresponding training split.
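The validation/test location path can be sketched as follows, with loud caveats: the breadth-first flood fill, 4-connectivity, the image-halves quadrant rule (including which side counts as "inner"), the threshold value, and the prompt template are all our assumptions. The text does not specify how image coordinates are mapped to breast quadrants, which in practice depends on laterality and probe orientation.

```python
from collections import deque


def largest_component(binary):
    """Pixels (y, x) of the largest 4-connected foreground component."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for y0 in range(h):
        for x0 in range(w):
            if not binary[y0][x0] or seen[y0][x0]:
                continue
            comp, queue = [], deque([(y0, x0)])
            seen[y0][x0] = True
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                comp.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(comp) > len(best):
                best = comp
    return best


def proposal_centroid(prob, tau):
    """Threshold a first-pass probability map and return the centroid (x, y)
    of its largest component, or None if no pixel reaches tau."""
    comp = largest_component([[p >= tau for p in row] for row in prob])
    if not comp:
        return None
    n = len(comp)
    return sum(x for _, x in comp) / n, sum(y for y, _ in comp) / n


def quadrant_token(x, y, width, height, inner_is_left=True):
    """Hypothetical mapping from image halves to a quadrant phrase."""
    vertical = "upper" if y < height / 2 else "lower"
    horizontal = "inner" if (x < width / 2) == inner_is_left else "outer"
    return f"{vertical} {horizontal}"


prob = [[0.9, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.8, 0.9],
        [0.0, 0.0, 0.7, 0.6]]
cx, cy = proposal_centroid(prob, tau=0.5)
location = quadrant_token(cx, cy, width=4, height=4)
print(f"small lesion in the {location} quadrant")  # small lesion in the lower outer quadrant
```

Keeping only the largest component discards isolated false-positive specks (the single pixel at the top left here) before the centroid is computed.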

2.7.2. GFE Computation

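The GFE computes a scene-level representation conditioned on $T_g$ using a Vision Transformer. The prompt is embedded by the (frozen) CLIP text encoder, $\mathrm{Enc}_{\mathrm{txt}}$, to obtain $z_g = \mathrm{Enc}_{\mathrm{txt}}(T_g) \in \mathbb{R}^{e}$, where $e$ is the text-embedding dimension.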

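Selected Transformer layers provide token sequences $X^{(\ell)} \in \mathbb{R}^{B \times (N+1) \times D}$, $\ell \in \mathcal{L}$, with $|\mathcal{L}| = L$. Here, $L$ is the number of selected layers, $N$ the number of patch tokens (plus one class token), and $D$ the hidden token size. Tokens are linearly reduced to a common width and aggregated:

$$\bar{X} = \sum_{\ell \in \mathcal{L}} w_\ell \, X^{(\ell)}_{1:N}\, W_\ell, \qquad W_\ell \in \mathbb{R}^{D \times r}, \qquad \bar{X} \in \mathbb{R}^{B \times N \times r},$$

where the subscript $1{:}N$ indicates that the class token is removed, $r$ is the reduced width, and $\ell$ indexes the selected layers with weights $w_\ell$. When attention logits, $A$, are used, we write $w = \mathrm{softmax}(A)$. Prompt conditioning is applied as channel-wise scaling and shifting of the reduced tokens at a chosen Transformer depth:

$$\hat{X} = \gamma(z_g) \odot \bar{X} + \beta(z_g), \qquad \gamma(z_g), \beta(z_g) \in \mathbb{R}^{B \times 1 \times c}.$$

Here, $c$ denotes the channel count of the reduced tokens, and $\gamma(\cdot)$ and $\beta(\cdot)$ are learned linear maps that yield the multiplicative and additive FiLM terms. The conditioned tokens are reshaped to a $\sqrt{N} \times \sqrt{N}$ spatial grid and projected with a transposed convolution to obtain the global feature map

$$F_g = \mathrm{ConvT}\big(\mathrm{reshape}(\hat{X})\big) \in \mathbb{R}^{B \times C_g \times h \times w}.$$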

SFA in the global branch follows the affine form in Equation (13) and produces $\tilde{F}_g$ as in Equation (3). The vision and text encoders are frozen; only the reduction, conditioning, and projection layers are trained.
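The token reduction, layer aggregation, FiLM conditioning, and reshaping described in this subsection can be sketched in NumPy as follows. All sizes (768-dimensional ViT tokens, a 512-dimensional text embedding, a reduced width of 64, three selected layers) are illustrative assumptions; the weights are random stand-ins for learned parameters, and the final transposed convolution is omitted.

```python
import numpy as np


def aggregate_tokens(layers, projections, layer_logits):
    """Weighted sum of reduced patch tokens from several ViT layers.

    layers: list of (B, N + 1, D) arrays with the class token at index 0.
    projections: list of (D, r) matrices, one per selected layer.
    layer_logits: (L,) array; softmax turns it into layer weights w_l.
    """
    weights = np.exp(layer_logits - layer_logits.max())
    weights = weights / weights.sum()
    reduced = [x[:, 1:, :] @ p for x, p in zip(layers, projections)]  # drop CLS
    return sum(w * t for w, t in zip(weights, reduced))  # (B, N, r)


def film_tokens(tokens, z, w_gamma, w_beta):
    """Channel-wise FiLM on tokens: gamma, beta from linear maps of z (B, d)."""
    gamma = (z @ w_gamma)[:, None, :]  # (B, 1, r), shared across tokens
    beta = (z @ w_beta)[:, None, :]
    return gamma * tokens + beta


def tokens_to_grid(tokens):
    """Reshape (B, N, r) tokens into a (B, r, sqrt(N), sqrt(N)) feature map."""
    b, n, r = tokens.shape
    side = int(round(n ** 0.5))
    if side * side != n:
        raise ValueError("token count is not a perfect square")
    return tokens.transpose(0, 2, 1).reshape(b, r, side, side)


rng = np.random.default_rng(0)
B, L, N, D, r, d = 2, 3, 22 * 22, 768, 64, 512  # illustrative sizes
layers = [rng.standard_normal((B, N + 1, D)) for _ in range(L)]
projections = [rng.standard_normal((D, r)) / np.sqrt(D) for _ in range(L)]
z_g = rng.standard_normal((B, d))
tokens = aggregate_tokens(layers, projections, np.zeros(L))
grid = tokens_to_grid(film_tokens(tokens, z_g, rng.standard_normal((d, r)) * 0.01,
                                  rng.standard_normal((d, r)) * 0.01))
print(grid.shape)  # (2, 64, 22, 22)
```

Because the FiLM terms have shape (B, 1, r), every token in a sample receives the same per-channel scale and shift, which is what makes the conditioning channel-wise rather than token-wise.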

2.8. Local Feature Extractor (LFE)

2.8.1. Local Features and Prompts

Local features comprise shape, margin, and BI-RADS. These attributes are read from the dataset metadata and verbalized to form the Local Feature Prompt (e.g., “irregular shape, microlobulated margin, BI-RADS 4”). The mapping from metadata to tokens is deterministic and applied identically across folds.
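A deterministic verbalizer of this kind can be sketched as a pure function of the metadata record. The dictionary keys, the lowercasing, and the neutral fallback for missing fields are assumptions for illustration; the template reproduces the example prompt given above.

```python
def local_prompt(meta):
    """Deterministically verbalize shape, margin, and BI-RADS metadata.

    Missing fields are skipped; if all are missing, a neutral phrase is used.
    The key names and the fallback phrase are hypothetical.
    """
    parts = []
    shape = (meta.get("shape") or "").strip().lower()
    margin = (meta.get("margin") or "").strip().lower()
    birads = meta.get("birads")
    birads = "" if birads is None else str(birads).strip()  # keeps category 0
    if shape:
        parts.append(f"{shape} shape")
    if margin:
        parts.append(f"{margin} margin")
    if birads:
        parts.append(f"BI-RADS {birads}")
    return ", ".join(parts) if parts else "breast lesion"


print(local_prompt({"shape": "Irregular", "margin": "microlobulated", "birads": "4"}))
# irregular shape, microlobulated margin, BI-RADS 4
```

Because the function has no randomness and depends only on the record, the same image always receives the same prompt in every fold.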

2.8.2. LFE Computation

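The LFE recovers boundaries and fine structure under guidance from $T_l$. We use a ResNet50 encoder with Transformer blocks at deep levels and a U-Net-style decoder. The prompt is embedded with the text encoder,

$$z_l = \mathrm{Enc}_{\mathrm{txt}}(T_l) \in \mathbb{R}^{e},$$

and is linearly projected to the same fixed width, $d$, for SFA in the specified stages. Here, $\mathrm{Enc}_{\mathrm{txt}}$ denotes the (frozen) text encoder. Given $I$, the encoder yields multi-scale features $E_1, \dots, E_4$ and a bottleneck representation $E_{\mathrm{b}}$. We add a Transformer block (multi-head self-attention) in the fourth encoder stage and at the bottleneck:

$$E_4 \leftarrow \mathcal{T}_4(E_4), \qquad E_{\mathrm{b}} \leftarrow \mathcal{T}_{\mathrm{b}}(E_{\mathrm{b}}).$$

The decoder upsamples and merges skip connections using up blocks to produce decoder features $D_1, D_2, \dots$, followed by a final up-projection to obtain a 32-channel map at the decoder output.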

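Semantic Feature Adjustment (SFA) uses the local prompt embedding in encoder stage four and after the first three decoder up blocks:

$$E_4 \leftarrow \mathrm{SFA}(E_4, z_l), \qquad D_k \leftarrow \mathrm{SFA}(D_k, z_l), \quad k = 1, 2, 3.$$

The final local representation is taken after the last decoder stage:

$$F_l = \mathrm{Up}(D_{\mathrm{last}}) \in \mathbb{R}^{B \times 32 \times h \times w}.$$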

This configuration preserves boundary detail and small structures while injecting attribute cues in the stages listed above. Unless stated otherwise, the text encoder is frozen, and the LFE encoder–decoder parameters are trainable.
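For orientation, the spatial sizes at which skip connections are merged follow from the standard ResNet50 stage strides. The sketch below tabulates them for a 352 × 352 input; the stage names follow torchvision's convention, and how the "fourth encoder stage" and "bottleneck" above map onto these names is not stated, so that correspondence is an assumption.

```python
# Standard torchvision-style ResNet50 stages: (name, output stride, channels).
RESNET50_STAGES = [
    ("layer1", 4, 256),
    ("layer2", 8, 512),
    ("layer3", 16, 1024),
    ("layer4", 32, 2048),
]


def stage_shapes(side=352):
    """Map each ResNet50 stage to its (channels, height, width) for a square input."""
    return {name: (ch, side // stride, side // stride) for name, stride, ch in RESNET50_STAGES}


for name, shape in stage_shapes().items():
    print(name, shape)
# layer1 (256, 88, 88)
# layer2 (512, 44, 44)
# layer3 (1024, 22, 22)
# layer4 (2048, 11, 11)
```

At 352 pixels, the stride-16 stage shares the 22 × 22 grid of the 16-pixel ViT patches, one convenient point at which global and local features line up spatially.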

2.9. Semantic Feature Adjustment (SFA)

SFA injects prompt-driven semantics into feature maps through channel-wise scaling and shifting. The module takes a visual tensor and a prompt embedding, predicts modulation parameters, and applies an affine transformation with an optional residual (see Figure 1).

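Let $F \in \mathbb{R}^{B \times C_k \times h_k \times w_k}$ be a feature map at stage $k$, and let $z \in \mathbb{R}^{B \times d}$ be the (projected) embedding of the associated prompt. The core operation is

$$\mathrm{SFA}(F, z) = \gamma_k \odot F + \beta_k,$$

where ⊙ denotes element-wise multiplication, and $\gamma_k$ and $\beta_k$ are broadcast over the spatial dimensions. The parameters are produced by a small per-stage projection network, $\phi_k$ (a two-layer MLP), that maps the prompt embedding to the stage's channel width, $C_k$:

$$[\gamma_k, \beta_k] = \phi_k(z), \qquad \gamma_k, \beta_k \in \mathbb{R}^{B \times C_k}.$$

Thus, $\gamma_k$ and $\beta_k$ are per-sample, per-channel FiLM parameters broadcast over $h_k \times w_k$.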

In the global branch, we condition on $z_g$ to obtain $\tilde{F}_g$; in the local decoder, we condition on $z_l$ to obtain $\tilde{F}_l$, as summarized in Equation (3).

An additive skip may be used in implementation:

$$\mathrm{SFA}_{\mathrm{res}}(F, z) = F + \gamma_k \odot F + \beta_k.$$
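The per-stage SFA computation can be sketched in NumPy as below. This is not the authors' code: the `SFASketch` name, hidden width, ReLU activation, and initialization are assumptions, and the optional `residual` flag implements the additive-skip variant F + gamma * F + beta.

```python
import numpy as np


class SFASketch:
    """Hypothetical NumPy sketch of SFA: a two-layer MLP maps a prompt embedding
    to per-channel (gamma, beta), which are broadcast over height and width."""

    def __init__(self, d, channels, hidden=128, seed=0):
        rng = np.random.default_rng(seed)
        self.channels = channels
        self.w1 = rng.standard_normal((d, hidden)) / np.sqrt(d)
        self.b1 = np.zeros(hidden)
        self.w2 = rng.standard_normal((hidden, 2 * channels)) / np.sqrt(hidden)
        self.b2 = np.zeros(2 * channels)

    def params(self, z):
        """z: (B, d) -> gamma, beta, each of shape (B, C)."""
        h = np.maximum(z @ self.w1 + self.b1, 0.0)  # ReLU hidden layer (assumed)
        out = h @ self.w2 + self.b2
        return out[:, :self.channels], out[:, self.channels:]

    def __call__(self, feat, z, residual=False):
        """feat: (B, C, h, w). Returns gamma * feat + beta, optionally plus feat."""
        gamma, beta = self.params(z)
        modulated = gamma[:, :, None, None] * feat + beta[:, :, None, None]
        return feat + modulated if residual else modulated


rng = np.random.default_rng(1)
sfa = SFASketch(d=512, channels=32)
feat = rng.standard_normal((2, 32, 44, 44))
z_l = rng.standard_normal((2, 512))
out = sfa(feat, z_l)
print(out.shape)  # (2, 32, 44, 44)
```

Predicting only 2C numbers per sample keeps the module lightweight: its cost does not grow with the spatial size of the feature map.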

2.10. Feature Fusion and Prediction Head

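We concatenate the modulated global and local feature maps along the channel dimension to obtain

$$F_{\mathrm{cat}} = [\tilde{F}_g;\, \tilde{F}_l] \in \mathbb{R}^{B \times (C_g + C_l) \times h \times w},$$

and refine it with two residual blocks, $\mathcal{R}$, followed by a convolution that yields the segmentation logits:

$$S = \mathrm{Conv}\big(\mathcal{R}(F_{\mathrm{cat}})\big).$$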
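A NumPy sketch of the fusion head follows. It simplifies under stated assumptions: the residual blocks here use 1 × 1 convolutions (the actual kernel sizes are not given), the final convolution is taken as 1 × 1 producing a single logit channel, $C_g = 32$ is an illustrative choice, and any upsampling of the logits back to $H \times W$ is omitted.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """1x1 convolution: x (B, C_in, h, w), weight (C_out, C_in), bias (C_out,)."""
    return np.einsum("bchw,oc->bohw", x, weight) + bias[None, :, None, None]


def residual_block(x, w1, b1, w2, b2):
    """Simplified residual block: conv-ReLU-conv plus identity, then ReLU."""
    h = np.maximum(conv1x1(x, w1, b1), 0.0)
    return np.maximum(x + conv1x1(h, w2, b2), 0.0)


def fusion_head(f_g, f_l, blocks, head):
    """Concatenate branch features on channels, refine, and predict logits."""
    x = np.concatenate([f_g, f_l], axis=1)  # (B, C_g + C_l, h, w)
    for block_params in blocks:  # two residual blocks
        x = residual_block(x, *block_params)
    return conv1x1(x, *head)  # (B, 1, h, w) segmentation logits


def init_params(channels, n_blocks=2, seed=0):
    """Random stand-in weights for the residual blocks and the output layer."""
    rng = np.random.default_rng(seed)

    def layer(c_out, c_in):
        return rng.standard_normal((c_out, c_in)) / np.sqrt(c_in), np.zeros(c_out)

    blocks = [(*layer(channels, channels), *layer(channels, channels)) for _ in range(n_blocks)]
    return blocks, layer(1, channels)


rng = np.random.default_rng(2)
f_g = rng.standard_normal((2, 32, 44, 44))  # C_g = 32 is an illustrative choice
f_l = rng.standard_normal((2, 32, 44, 44))
blocks, head = init_params(channels=64)
logits = fusion_head(f_g, f_l, blocks, head)
prob = 1.0 / (1.0 + np.exp(-logits))  # sigmoid, thresholded downstream
print(logits.shape)  # (2, 1, 44, 44)
```

Concatenation keeps both branches' channels intact, so the residual blocks can learn how much to trust global context versus local boundary evidence at each pixel.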

3. Results

We followed the training setup in Section 2.3 and the metrics in Section 2.4. All experiments used the implementation and hardware in Section 2.5.

3.1. Overall Performance

We compared XBusNet with representative non-prompt baselines, including U-Net [12], U-ResNet, U-KAN [53], and AnatoSegNet [54], as well as prompt-guided baselines, namely BUSSAM [43] and CLIP-TNseg [46]. All models were evaluated on BLU under the same five-fold cross-validation protocol (Section 2.3), the same pre-processing (Section 2.2), and a common operating threshold (Section 2.4). We report the mean ± SD across folds for Dice, IoU, the FPR, and the FNR in Table 1 and provide a size-stratified analysis in Table 2.

Table 1.

Overall comparison on BLU (five-fold CV). Dice/IoU cells show the fold mean ± SD at a fixed operating threshold, with 95% image-level bootstrap CIs in brackets. Lower is better for the FPR and FNR.

Table 2.

Dice and IoU scores (means with 95% CIs) across tumor-length intervals. Bold indicates the best per column.

3.1.1. Fold-Wise Performance

With five-fold cross-validation (Table 3), XBusNet attained consistently strong overlap across folds, with a stable FPR and FNR, indicating reliable behavior across splits.

Table 3.

Fold-wise validation performance of XBusNet (latest run).

3.1.2. Comparison with Prior Methods

Table 1 summarizes mean cross-validation performance against U-Net variants, anatomy-aware models, and text-guided segmenters. XBusNet achieved the best Dice (0.8766) and IoU (0.8150) and the lowest FNR, reflecting fewer missed lesion pixels. Its FPR is comparable to that of the text-guided baselines and higher than that of some convolutional baselines that tend to under-segment; this trade-off favors recovering lesion extent while keeping spurious activations controlled.

3.1.3. Qualitative Assessment

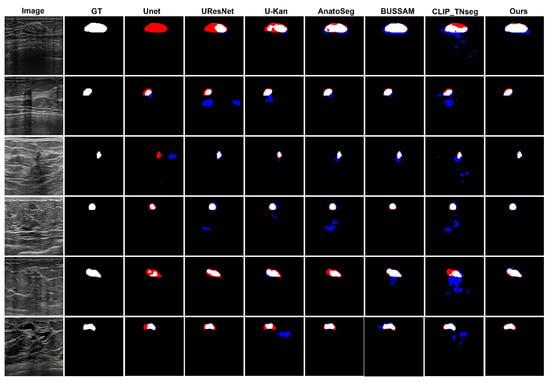

Figure 2 shows typical error modes with false positives (blue) and false negatives (red). First, for high-contrast masses, U-Net variants exhibited small gaps at the posterior margin (missed pixels), whereas XBusNet produced continuous contours with fewer misses, consistent with the lower FNR in Table 1. Second, on small or faint lesions, several baselines fragmented the mask or missed the target entirely; XBusNet recovered a larger fraction of the lesion area, mirroring the gains in the 0–110 px bin in Table 2. Third, in low-contrast heterogeneous backgrounds, some text-guided baselines could add foreground in adjacent tissue, increasing the FPR; XBusNet suppressed these spurious activations while retaining the faint rim. Finally, for irregular echotexture, convolutional baselines scattered foreground outside the mass, while XBusNet better followed the irregular edge. Figure 3 shows an example of the exact prompts used in the proposed work.

Figure 2.

Qualitative comparison of breast ultrasound segmentations. False positives (FPs) are shown in blue and false negatives (FNs) in red.

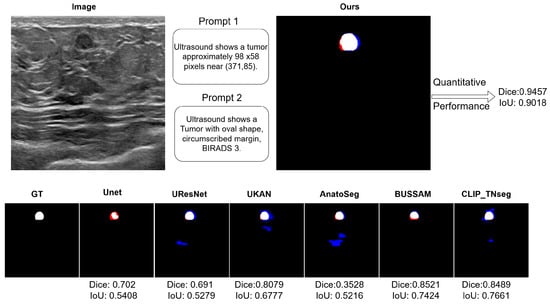

Figure 3.

A qualitative example with the exact prompts used for a case. Top left: An input breast ultrasound (BUS) image showing the Global Feature Context Prompt (GFCP) and the Local Feature Prompt (LFP). Top right: Qualitative and quantitative results for XBusNet in this case. Bottom row: Segmentation overlays; ground truth is in white, false negatives are in red, and false positives are in blue.

3.1.4. Statistical Analysis and Comparison

All between-method comparisons used image-level Dice and IoU with paired Wilcoxon signed-rank tests; when testing XBusNet against multiple baselines, p-values were adjusted with the Benjamini–Hochberg false discovery rate (FDR) procedure. Uncertainty was summarized with 95% bootstrap confidence intervals (10,000 image-level resamples), reported in Table 1 and Table 2. Under this protocol, XBusNet significantly outperformed all baselines (two-sided tests, BH-adjusted). Effect sizes (rank-biserial correlation) indicated large advantages for both Dice and IoU.
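The statistical workflow can be sketched with the standard library; this is our illustration, not the authors' analysis code. It implements Benjamini–Hochberg adjustment, a percentile bootstrap over images, and the matched-pairs rank-biserial correlation; the Wilcoxon p-values themselves could come from `scipy.stats.wilcoxon`, which is not reimplemented here. The percentile method and dropping zero differences are assumptions.

```python
import random


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # from the largest p-value down
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def bootstrap_ci(values, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean, resampling images with replacement."""
    rng = random.Random(seed)
    n = len(values)
    means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_boot))
    return means[int(alpha / 2 * n_boot)], means[int((1 - alpha / 2) * n_boot) - 1]


def rank_biserial(a, b):
    """Matched-pairs rank-biserial correlation for paired scores a vs. b."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences dropped
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):  # assign average ranks to ties in |difference|
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    total = r_plus + r_minus
    return (r_plus - r_minus) / total if total else 0.0


print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
print(rank_biserial([3, 5, 2], [1, 6, 0]))
```

The rank-biserial value runs from -1 to 1 and equals 1 only when one method wins on every image, which makes it a natural companion to the signed-rank test.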

3.2. Ablation Study

We quantified the contributions of the Local Feature Extractor (LFE), the Global Feature Extractor (GFE), and Semantic Feature Adjustment (SFA) by toggling each component. Table 4 shows that removing any single component degraded performance relative to the full model. Without the LFE, Dice and IoU both decreased, underscoring the role of local detail and boundary focus. Without the GFE, Dice and IoU dropped further, highlighting the value of global semantic cues from the conditional prompt. Without SFA, Dice and IoU also decreased, showing the benefit of prompt-conditioned modulation that aligns visual features with clinical attributes. The full model with all three components yielded the best results, indicating complementary effects.

Table 4.

Ablation study on effect of LFE, GFE, and SFA modules. The bold indicates the best per column.

3.3. Qualitative Visualizations

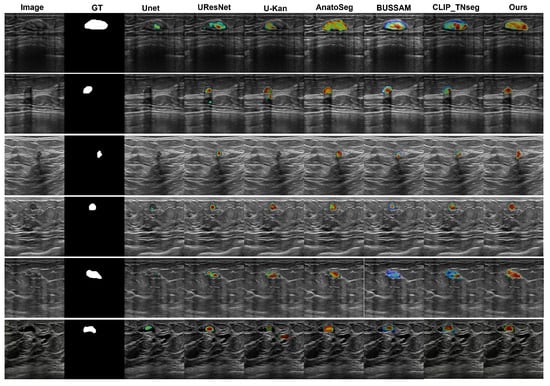

We employed Grad-CAM overlays to visualize image regions that most influenced the predicted masks (Figure 4). Among the compared methods, U-Net [12], U-ResNet, U-KAN [53], and AnatoSegNet [54] are non-prompt methods; BUSSAM [43] and CLIP-TNseg [46] are prompt-guided; and XBusNet uses dual prompts (global and attribute-conditioned). Non-prompt baselines frequently display diffuse responses in low-contrast tissue, particularly posterior to the lesion, corresponding to missed or fragmented masks. Prompt-guided methods improve spatial correspondence; CLIP-TNseg may still exhibit scattered hotspots around speckle, whereas XBusNet shows concentrated activation adjacent to lesion boundaries and within the mass. For very small lesions, the salient regions for XBusNet overlap the recovered mask more consistently, in line with the small-lesion gains reported in Table 2. These visualizations are qualitative and are not treated as formal explanations.

Figure 4.

Grad-CAM comparison. Colors map low→high attribution (blue→red), normalized to [0, 1] with a shared scale across images/models.
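For reference, the standard Grad-CAM computation and the shared-scale normalization described in the caption can be sketched in NumPy. Which layer is targeted and which scalar score is backpropagated for a segmentation output (for example, the summed foreground logits) are not stated, so the activations and gradients are taken as given inputs; obtaining them from a real model would require framework hooks.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one image from a target layer's (C, h, w) activations
    and the gradients of the chosen score with respect to them."""
    alpha = gradients.mean(axis=(1, 2))  # channel importance weights
    cam = np.tensordot(alpha, activations, axes=1)  # weighted sum over channels
    return np.maximum(cam, 0.0)  # keep positive evidence only


def normalize_shared(cams):
    """Min-max normalize several maps to [0, 1] with one shared scale."""
    lo = min(float(c.min()) for c in cams)
    hi = max(float(c.max()) for c in cams)
    scale = hi - lo if hi > lo else 1.0
    return [(c - lo) / scale for c in cams]


acts = np.stack([np.ones((3, 3)), 2 * np.ones((3, 3))])
cam_a = grad_cam(acts, np.stack([np.ones((3, 3)), -np.ones((3, 3))]))  # negative evidence
cam_b = grad_cam(acts, np.ones((2, 3, 3)))
norm_a, norm_b = normalize_shared([cam_a, cam_b])
print(norm_a.max(), norm_b.min())  # 0.0 1.0
```

A shared scale, rather than per-image min-max scaling, keeps a weak map looking weak next to a strong one, which is what makes cross-model comparisons in the figure meaningful.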

4. Discussion

XBusNet showed strong performance on BLU. Across five folds, the model achieved a mean Dice of 0.8766 and an IoU of 0.8150, with narrow fold-to-fold variation, which suggests stable behavior under fold-wise splits. Compared with U-Net variants, anatomy-aware models, and text-guided baselines, XBusNet also produced a lower false negative rate, indicating fewer missed lesion pixels. The false positive rate was comparable to that of text-guided baselines but higher than that of some convolutional models that under-segment; this trade-off favors recovering lesion extent and can be tuned by adjusting the operating threshold when a stricter specificity is required. Findings align with prior BUS segmentation work where prompt signals improve localization under low contrast; remaining limits include single-cohort evaluation and reliance on structured text fields.

Size-stratified analysis supports these observations. The largest relative gains appear for the smallest lesions, where the model maintains competitive Dice and IoU, while performance remains strong for medium and large lesions. This pattern is consistent with the design: global prompt cues (size and approximate location) guide the search toward plausible regions, and local attribute cues (shape, margin, BI-RADS) help the decoder maintain sharp boundaries when pixel evidence is weak.

Ablation results point to complementary roles for both branches and for Semantic Feature Adjustment. Removing the local branch reduces Dice and IoU, highlighting the importance of boundary detail. Disabling the global branch leads to a larger drop, showing that scene-level cues improve localization and reduce misses. Turning off Semantic Feature Adjustment also degrades performance, which indicates that prompt-conditioned scaling and shifting adds value beyond the two-branch backbone. Together, these findings support the view that the full configuration is needed to realize the observed gains.

Qualitative panels mirror these trends. On high-contrast masses, several baselines show small gaps at the posterior margin, whereas XBusNet yields continuous contours, in line with the lower false negative rate. For small or faint lesions, baselines may fragment or miss the target, while XBusNet recovers a larger fraction of the lesion area. In heterogeneous backgrounds, some text-guided baselines spill into adjacent tissue; XBusNet restrains these activations while preserving the rim. With irregular echotexture, convolutional baselines scatter foreground outside the mass, whereas XBusNet follows the irregular edge more closely. Grad-CAM overlays are used as qualitative views of model focus and are not treated as formal explanations.

Clinical Relevance

There is no established minimal clinically important difference (MCID) for segmentation metrics in breast ultrasound. Nevertheless, our error profile, particularly improved coverage of small lesions and smoother boundaries, suggests potential benefits for measurement stability (e.g., size and margins aligned with BI-RADS), reduced inter-reader variability on follow-up, and more reliable pre-read triage. Future reader studies should translate Dice/IoU gains into task-level endpoints such as boundary error (mm), read time, and inter-reader variance to anchor a practical MCID.

This study is limited by the availability of suitable public data. To our knowledge, no widely available breast ultrasound dataset jointly provides pixel-level masks and rich clinical descriptors such as BI-RADS terms, structured lesion metadata (e.g., shape and margin), and histopathological outcomes. This is the main reason we did not combine BLU with a public external cohort for evaluation. As a practical recommendation for future dataset releases, we encourage curators to include not only classification labels and segmentation masks but also standardized BI-RADS descriptors, acquisition metadata, radiology reports, and, when possible, histopathology results. Such completeness would enable rigorous text-guided segmentation research, cross-dataset evaluation, and clinically meaningful analyses.

While structured BI-RADS fields facilitate prompting, entries can be missing or partial. In practice, the local and global cues can be extracted from routine structured reporting (BI-RADS, shape, margin, size, and quadrant). When such fields are absent, XBusNet remains functional with neutral prompts or image-only input, and the ablations indicate graceful degradation: Dice decreases relative to the full model without the GFE (no global prompt) and without SFA (no prompt-driven modulation) (Table 4).
In future work, we will handle partially missing fields through automatic prompt inference from images or reports. We will also investigate radiomics-derived surrogates or pseudo-labels.
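Dice and IoU are the metrics reported in this study. The boundary error in millimetres, proposed in this section as a task-level endpoint, can be computed from the same binary masks once the pixel spacing is known. The sketch below uses NumPy and SciPy's `ndimage.distance_transform_edt`. The default `spacing_mm` is a placeholder rather than the BLU acquisition spacing. Using average symmetric surface distance (ASSD) and the 95th-percentile Hausdorff distance (HD95) as the boundary metrics is our assumption, not the evaluation protocol behind the reported results.

```python
import numpy as np
from scipy import ndimage


def dice_iou(pred: np.ndarray, gt: np.ndarray) -> tuple[float, float]:
    """Dice and IoU of two binary masks; two empty masks count as a perfect match."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    inter = np.logical_and(pred, gt).sum()
    total = pred.sum() + gt.sum()
    union = np.logical_or(pred, gt).sum()
    dice = 1.0 if total == 0 else 2.0 * inter / total
    iou = 1.0 if union == 0 else inter / union
    return float(dice), float(iou)


def _boundary(mask: np.ndarray) -> np.ndarray:
    """One-pixel-wide inner contour: mask pixels removed by a single erosion."""
    mask = mask.astype(bool)
    return mask & ~ndimage.binary_erosion(mask)


def boundary_errors_mm(pred, gt, spacing_mm=(0.1, 0.1)) -> tuple[float, float]:
    """ASSD and HD95 between mask contours, in millimetres.

    spacing_mm is the (row, column) pixel size; the default is a placeholder.
    Returns (inf, inf) if either mask is empty.
    """
    bp, bg = _boundary(pred), _boundary(gt)
    if not bp.any() or not bg.any():
        return float("inf"), float("inf")
    # Distance (mm) from every pixel to the nearest contour pixel of each mask.
    to_gt = ndimage.distance_transform_edt(~bg, sampling=spacing_mm)
    to_pred = ndimage.distance_transform_edt(~bp, sampling=spacing_mm)
    # Symmetric set of contour-to-contour distances.
    d = np.concatenate([to_gt[bp], to_pred[bg]])
    return float(d.mean()), float(np.percentile(d, 95))


if __name__ == "__main__":
    gt = np.zeros((40, 40), dtype=bool)
    gt[10:20, 10:20] = True
    pred = np.zeros_like(gt)
    pred[10:20, 12:22] = True          # same square shifted by two columns
    print(dice_iou(pred, gt))          # Dice 0.8, IoU about 0.667
    print(boundary_errors_mm(pred, gt, spacing_mm=(0.1, 0.1)))
```

Because the distances are expressed in millimetres, such a metric could be compared directly against clinically acceptable measurement tolerances. Dice/IoU cannot be compared in this way.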

5. Conclusions

XBusNet fuses global prompts (size and location) with local prompts (shape, margin, and BI-RADS terms) and applies Semantic Feature Adjustment (SFA) to guide segmentation. On BLU, it achieves state-of-the-art Dice and IoU. The largest gains occur on small lesions, where it reduces missed pixels while keeping spurious activations in check. Ablations show that the global branch, the local branch, and the prompt-conditioned modulation each contribute. Together, they balance boundary quality and region coverage. These results indicate that simple, automatically assembled text cues can strengthen ultrasound segmentation without changing current imaging practice. Possible integration points include (i) pre-read triage to flag small or low-contrast candidates and (ii) post-read standardization of lesion measurements. Because prompts are assembled from routine report fields, either option should have minimal workflow impact.

Future Work

We will test cross-center generalization, add boundary-oriented metrics and calibration analysis, and explore coupling with detection and structured reporting.
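For the planned calibration analysis, one common summary statistic is the expected calibration error (ECE). The sketch below is a binary, pixel-level variant. It bins per-pixel foreground probabilities (assumed here to be sigmoid outputs) into equal-width bins. It then compares each bin's mean predicted probability with its observed foreground rate. The bin count and the pooling of all pixels across images are assumptions. Per-image or boundary-restricted variants would be equally plausible choices.

```python
import numpy as np


def pixel_ece(probs, labels, n_bins: int = 10) -> float:
    """Binary, pixel-level expected calibration error.

    probs:  predicted foreground probabilities in [0, 1] (any shape).
    labels: binary ground-truth masks with the same shape.
    """
    p = np.asarray(probs, dtype=float).ravel()
    y = np.asarray(labels, dtype=bool).ravel()
    if p.shape != y.shape:
        raise ValueError("probs and labels must have the same number of pixels")
    # Equal-width bins; digitize on the interior edges gives indices 0..n_bins-1,
    # with p == 1.0 falling into the last bin.
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    idx = np.digitize(p, edges[1:-1])
    ece = 0.0
    for b in range(n_bins):
        in_bin = idx == b
        if in_bin.any():
            # |observed foreground rate - mean predicted probability|, weighted by bin size.
            gap = abs(y[in_bin].mean() - p[in_bin].mean())
            ece += in_bin.mean() * gap
    return float(ece)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    probs = rng.uniform(size=(4, 64, 64))
    labels = rng.uniform(size=probs.shape) < probs   # calibrated by construction
    print(pixel_ece(probs, labels))                  # small (sampling noise only)
    print(pixel_ece(np.full_like(probs, 0.9), np.zeros_like(labels)))  # ~0.9
```

Pooling all pixels lets the large background dominate the estimate. That is one reason a boundary-restricted variant may be more informative for segmentation.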

Author Contributions

Conceptualization, B.S. and R.M.; methodology, B.S. and R.M.; software, R.M.; validation, B.S. and R.M.; formal analysis, B.S. and R.M.; investigation, R.M.; resources, B.S.; data curation, R.M.; writing (original draft preparation), R.M. and B.S.; writing (review and editing), B.S. and R.M.; visualization, R.M.; supervision, B.S.; project administration, B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it used a publicly available, fully de-identified dataset and involved no interaction with human subjects.

Informed Consent Statement

Not applicable.

Data Availability Statement

Training and inference code, configurations, and evaluation scripts are available at github.com/AAR-UNLV/XBusNet. The dataset was accessed on 9 May 2025 via https://www.cancerimagingarchive.net/collection/breast-lesions-usg/.

Conflicts of Interest

The authors declare no relevant conflicts of interest.

References

- American Cancer Society. Key Statistics for Breast Cancer. 2025. Available online: https://www.cancer.org/cancer/types/breast-cancer/about/how-common-is-breast-cancer.html (accessed on 5 May 2025).

- Nicholson, W.K.; Silverstein, M.; Wong, J.B.; Barry, M.J.; Chelmow, D.; Coker, T.R.; Davis, E.M.; Jaén, C.R.; Krousel-Wood, M.; Lee, S.A.; et al. Screening for Breast Cancer: US Preventive Services Task Force Recommendation Statement. JAMA 2024, 331, 1918–1930.

- Sardanelli, F.; Boetes, C.; Borisch, B.; Decker, T.; Federico, M.; Gilbert, F.J.; Helbich, T.; Heywang-Köbrunner, S.H.; Kaiser, W.A.; Kerin, M.J.; et al. Magnetic resonance imaging of the breast: Recommendations from the EUSOMA working group. Eur. J. Cancer 2010, 46, 1296–1316.

- Carney, P.A.; Miglioretti, D.L.; Yankaskas, B.C.; Kerlikowske, K.; Rosenberg, R.; Rutter, C.M.; Geller, B.M.; Abraham, L.A.; Taplin, S.H.; Dignan, M.; et al. Individual and Combined Effects of Age, Breast Density, and Hormone Replacement Therapy Use on the Accuracy of Screening Mammography. Ann. Intern. Med. 2003, 138, 168–175.

- Marcon, M.; Fuchsjäger, M.H.; Clauser, P.; Mann, R.M. ESR Essentials: Screening for breast cancer–General recommendations by EUSOBI. Eur. Radiol. 2024, 34, 6348–6357.

- Evans, A.; Trimboli, R.M.; Athanasiou, A.; Balleyguier, C.; Baltzer, P.A.; Bick, U.; Camps Herrero, J.; Clauser, P.; Colin, C.; Cornford, E.; et al. Breast ultrasound: Recommendations for information to women and referring physicians by the European Society of Breast Imaging. Insights Imaging 2018, 9, 449–461.

- Zhang, Y.; Xian, M.; Cheng, H.D.; Shareef, B.; Ding, J.; Xu, F.; Huang, K.; Zhang, B.; Ning, C.; Wang, Y. BUSIS: A Benchmark for Breast Ultrasound Image Segmentation. Healthcare 2022, 10, 729.

- Shareef, B.M.; Xian, M.; Sun, S.; Vakanski, A.; Ding, J.; Ning, C.; Cheng, H.D. A Benchmark for Breast Ultrasound Image Classification. SSRN Working Paper. 2023. Available online: https://ssrn.com/abstract=4339660 (accessed on 20 May 2025).

- D’Orsi, C.J.; Sickles, E.A.; Mendelson, E.B.; Morris, E.A. (Eds.) ACR BI-RADS Atlas: Breast Imaging Reporting and Data System, 5th ed.; American College of Radiology: Reston, VA, USA, 2013.

- Gu, J.; Jiang, T. Ultrasound radiomics in personalized breast management: Current status and future prospects. Front. Oncol. 2022, 12, 963612.

- Noble, J.A.; Boukerroui, D. Ultrasound image segmentation: A survey. IEEE Trans. Med. Imaging 2006, 25, 987–1010.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the DLMIA/ML-CDS@MICCAI, Granada, Spain, 20 September 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11045, pp. 3–11.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.

- Xie, Y.; Yang, B.; Guan, Q.; Zhang, J.; Wu, Q.; Xia, Y. Attention Mechanisms in Medical Image Segmentation: A Survey. arXiv 2023, arXiv:2305.17937.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

- Rodriguez, J.; Huang, K.; Xu, M. Multi-Task Breast Ultrasound Image Classification and Segmentation Using Swin Transformer and VMamba Models. In Proceedings of the 2024 7th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Hangzhou, China, 15–17 August 2024; pp. 858–863.

- Shareef, B.; Xian, M.; Vakanski, A.; Wang, H. Breast Ultrasound Tumor Classification Using a Hybrid Multitask CNN-Transformer Network. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2023, Proceedings of the 26th International Conference, Vancouver, BC, Canada, 8–12 October 2023; Springer Nature: Cham, Switzerland, 2023; pp. 344–353.

- Guan, H.; Liu, M. Domain Adaptation for Medical Image Analysis: A Survey. IEEE Trans. Biomed. Eng. 2022, 69, 1173–1185.

- Shareef, B.; Xian, M.; Vakanski, A. STAN: Small Tumor-Aware Network for Breast Ultrasound Image Segmentation. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 1–5.

- Shareef, B.; Vakanski, A.; Freer, P.E.; Xian, M. ESTAN: Enhanced Small Tumor-Aware Network for Breast Ultrasound Image Segmentation. Healthcare 2022, 10, 2262.

- Hsieh, Y.-H.; Hsu, F.-R.; Dai, S.-T.; Huang, H.-Y.; Chen, D.-R.; Shia, W.-C. Incorporating the Breast Imaging Reporting and Data System Lexicon with a Fully Convolutional Network for Malignancy Detection on Breast Ultrasound. Diagnostics 2022, 12, 66.

- Oktay, O.; Ferrante, E.; Kamnitsas, K.; Heinrich, M.; Bai, W.; Caballero, J.; Cook, S.; de Marvao, A.; Dawes, T.; O’Regan, D.; et al. Anatomically Constrained Neural Networks (ACNN): Application to Cardiac Image Enhancement and Segmentation. IEEE Trans. Med. Imaging 2017, 37, 384–395.

- Kervadec, H.; Bouchtiba, J.; Desrosiers, C.; Granger, E.; Dolz, J.; Ben Ayed, I. Boundary loss for highly unbalanced segmentation. Med. Image Anal. 2021, 67, 101851.

- Karimi, D.; Salcudean, S.E. Reducing the Hausdorff Distance in Medical Image Segmentation with Convolutional Neural Networks. arXiv 2019, arXiv:1904.10030.

- Hu, X.; Li, F.; Samaras, D.; Chen, C. Topology-Preserving Deep Image Segmentation. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

- El Jurdi, R.; Petitjean, C.; Honeine, P.; Cheplygina, V.; Abdallah, F. High-level prior-based loss functions for medical image segmentation: A survey. Comput. Vis. Image Underst. 2021, 210, 103248.

- Yu, Y.; Acton, S.T. Speckle reducing anisotropic diffusion. IEEE Trans. Image Process. 2002, 11, 1260–1270.

- Destrempes, F.; Cloutier, G. A Critical Review and Uniformized Representation of Statistical Distributions Modeling the Envelope of Ultrasonic Echoes. Ultrasound Med. Biol. 2010, 36, 1037–1051.

- Muhtadi, S.; Razzaque, R.R.; Chowdhury, A.; Garra, B.S.; Alam, S.K. Texture quantified from ultrasound Nakagami parametric images is diagnostically relevant for breast tumor characterization. J. Med. Imaging 2023, 10 (Suppl. S2), S22410.

- Christensen, A.M.; Rosado-Méndez, I.M.; Hall, T.J. A systematized review of quantitative ultrasound based on first-order speckle statistics. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2024, 71, 872–886.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643.

- Lüddecke, T.; Ecker, A.S. Image Segmentation Using Text and Image Prompts. arXiv 2021, arXiv:2112.10003.

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H.; et al. Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection. arXiv 2023, arXiv:2303.05499.

- Ren, T.; Liu, S.; Zeng, A.; Lin, J.; Li, K.; Cao, H.; Chen, J.; Huang, X.; Chen, Y.; Yan, F.; et al. Grounded SAM: Assembling Open-World Models for Diverse Visual Tasks. arXiv 2024, arXiv:2401.14159.

- Li, J.; Li, D.; Hoi, S.C.H.; Xiong, C. BLIP: Bootstrapping Language–Image Pre-training for Unified Vision–Language Understanding and Generation. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; Volume 162, pp. 12888–12900.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Yu, L.; Poirson, P.; Yang, S.; Berg, A.C.; Berg, T.L. Modeling Context in Referring Expressions. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 69–85.

- Huang, Y.; Yang, X.; Liu, L.; Zhou, H.; Chang, A.; Zhou, X.; Chen, R.; Yu, J.; Chen, J.; Chen, C.; et al. Segment Anything Model for Medical Images? arXiv 2024, arXiv:2304.14660.

- Tu, Z.; Gu, L.; Wang, X.; Jiang, B. Ultrasound SAM Adapter: Adapting SAM for Breast Lesion Segmentation in Ultrasound Images. arXiv 2024, arXiv:2404.14837.

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 1022.

- Zhang, K.; Liu, D. Customized Segment Anything Model for Medical Image Segmentation. arXiv 2023, arXiv:2304.13785.

- Sun, X.; Wei, B.; Jiang, Y.; Mao, L.; Zhao, Q. CLIP-TNseg: A Multi-Modal Hybrid Framework for Thyroid Nodule Segmentation in Ultrasound Images. arXiv 2024, arXiv:2412.05530.

- Chen, Y.; Wei, M.; Zheng, Z.; Hu, J.; Shi, Y.; Xiong, S.; Zhu, X.X.; Mou, L. CausalCLIPSeg: Unlocking CLIP’s Potential in Referring Medical Image Segmentation with Causal Intervention. arXiv 2025, arXiv:2503.15949.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Jain, S.; Wallace, B.C. Attention is not Explanation. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; pp. 3543–3556.

- Adebayo, J.; Gilmer, J.; Muelly, M.; Goodfellow, I.; Hardt, M.; Kim, B. Sanity Checks for Saliency Maps. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 3–8 December 2018.

- Pawłowska, A.; Ćwierz-Pieńkowska, A.; Domalik, A.; Jaguś, D.; Kasprzak, P.; Matkowski, R.; Fura, Ł.; Nowicki, A.; Żołek, N. Curated benchmark dataset for ultrasound based breast lesion analysis. Sci. Data 2024, 11, 148.

- Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

- Li, C.; Liu, X.; Li, W.; Wang, C.; Liu, H.; Liu, Y.; Chen, Z.; Yuan, Y. U-KAN makes strong backbone for medical image segmentation and generation. In Proceedings of the Thirty-Ninth AAAI Conference on Artificial Intelligence and Thirty-Seventh Conference on Innovative Applications of Artificial Intelligence and Fifteenth Symposium on Educational Advances in Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; AAAI Press: Washington, DC, USA, 2025. AAAI’25/IAAI’25/EAAI’25.

- Xu, M.; Wang, Y.; Huang, K. Anatosegnet: Anatomy Based CNN-Transformer Network for Enhanced Breast Ultrasound Image Segmentation. In Proceedings of the 2025 IEEE 22nd International Symposium on Biomedical Imaging (ISBI), Houston, TX, USA, 14–17 April 2025; pp. 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).