Abstract

Our study presents an innovative variational Bayesian parameter estimation method for the Quantile Nonlinear Dynamic Latent Variable Model (QNDLVM), particularly when dealing with missing data and nonparametric priors. This method addresses the computational inefficiencies associated with the traditional Markov chain Monte Carlo (MCMC) approach, which struggles with large datasets and high-dimensional parameters due to its prolonged computation times, slow convergence, and substantial memory consumption. By harnessing the deterministic variational Bayesian framework, we convert the complex parameter estimation into a more manageable deterministic optimization problem. This is achieved by leveraging the hierarchical structure of the QNDLVM and the principle of efficiently optimizing approximate posterior distributions within the variational Bayesian framework. We further optimize the evidence lower bound using the coordinate ascent algorithm. To specify propensity scores for missing data manifestations and covariates, we adopt logistic and probit models, respectively, with conditionally conjugate mean field variational Bayes for logistic models. Additionally, we utilize Bayesian local influence to analyze the Ecological Momentary Assessment (EMA) dataset. Our results highlight the variational Bayesian approach’s notable accuracy and its ability to significantly alleviate computational demands, as demonstrated through simulation studies and practical applications.

Keywords:

Dirichlet process; quantile nonlinear dynamic latent variable model; missing data; variational Bayesian method MSC:

Primary 62F15; 62H25; Secondary 62P15

1. Introduction

Latent variable models are designed to estimate the relationships between observed variables and a hypothesized latent construct that is presumed to predict these variables. Dynamic latent variable models (DLVMs) extend this concept by accounting for unobserved heterogeneity through the explicit modeling of dependencies between observations and latent variables. This approach allows for the capture of variable effects across different spatial and temporal dimensions, providing a nuanced understanding of intra-individual dynamics and interindividual differences within ecological momentary assessments, as elucidated by Diener et al. [1] and Chow et al. [2]. Grasping the dynamic interplay and dependencies among latent and manifest variables over time is pivotal. Incorporating these lagged relationships is crucial for developing models that offer more profound insights and greater accuracy in explaining data patterns and trends. The exploration of lagged relationships among model elements is essential for crafting insightful models, which is why dynamic latent variable models have attracted considerable attention recently, as demonstrated by the works of Zhang et al. [3], Chow et al. [4] and Tang et al. [5]. However, the existing literature primarily assumes normality in directly measured error, which is especially susceptible to the influence of outliers and distributions characterized by heavy tails. To address this issue, a typical strategy is to relax the distributional constraints on variables that are directly measurable, focusing on their conditional quantiles instead of the full distribution, as explored by Wang et al. [6] and Tuerde et al. [7]. In our view, there is a sparse body of research concerning quantile nonlinear dynamic latent variable models (QNDLVMs). These models necessitate Markov chain Monte Carlo (MCMC) methods for inference, which can be computationally demanding and impractical for large datasets.

The cornerstone of empirical research is rooted in data collection and analysis, a process where missing values are an almost ubiquitous challenge. This issue spans across various fields, such as education, social sciences, and economics, due to factors like participants’ hesitancy to answer sensitive questions, accidental data loss, or withdrawal from studies (Little and Rubin [8]). Factor analysis with missing data has garnered significant interest from both theoretical researchers and practitioners. A multitude of methodologies have been developed for estimating strict latent variable models when data are incomplete. Banbura and Modugno [9], along with Jungbacker et al. [10], have explored likelihood estimation methods. For approximate factor models, Stock and Mark [11] suggest using the most recent estimate of the common component to fill in missing values in covariates. Despite differences in execution, a prevalent strategy is to utilize estimates from a balanced panel as starting values, as highlighted by Stock and Watson [12]. Recently, Tuerde et al. [7] developed a quantile nonlinear dynamic latent variable model to tackle missing data, but their approach is limited to continuous data and relies on MCMC techniques for inference. To overcome these constraints, we propose a variational Bayesian methodology capable of effectively managing nonignorable missing data.

Our inspiration comes from examining the data gathered through ecological momentary assessment (EMA) methods, as initially explored by Diener and colleagues in 1995. The research involved a group of 174 university students, consisting of 93 males and 81 females with an average age of 20.24 years and a standard deviation of 1.41 years. Participants were instructed to provide daily Ecological Momentary Assessment (EMA) ratings for various emotions using a seven-point Likert scale, with 1 representing “None” and 7 representing “Always”, over a period of 52 days. The directly measurable variables consist of eight ordinal scales: joy, contentment, love, affection, unphappiness, anger, depression, and anxiety. The top four scales are used to denote a latent positive emotion (PE), and the final four scales indicate a latent negative emotion (NE). The missing of data was not negligible; discrete variables are intertwined with individual beliefs and ethical considerations. The missing data made up about 11.2% of the entire dataset. This study seeks to investigate the evolving and nonlinear interactions between positive emotions (PE) and negative emotions (NE), with a particular focus on how these dynamics are affected by prior levels of emotions that possess opposing valences.

We present our innovative contributions: (i) We unveil a deterministic variational Bayesian approach designed to tackle nonlinear dynamic latent variable models (DLVMs), even when faced with the challenges of a nonparametric prior and possible nonignorable missingness data; (ii) To effectively manage nonignorable missing covariates and responses, we employ a set of univariate dimensional logistic and probit models, which facilitate optimizing evidence of the lower bound for robust statistical inference based on their respective posterior distributions; (iii) Our dynamic model benefits from the assumption of a Dirichlet prior for the random effects, offering reasonable representation of the underlying processes; (iv) We take Bayesian local influence analysis to new heights by conducting a comprehensive sensitivity analysis across multiple model components.

This article is organized in a systematic fashion. In Section 2, we begin by delineating the quantile regression model, proceed to elucidate the mechanisms and distributions associated with missing data, and culminate with an introduction to the Dirichlet process. Section 3 introduces a variational Bayesian methodology aimed at estimating the challenging parameters that have been estimated, random effects, and latent factor variables. Section 4 includes simulation studies that analyze the finite sample performance of these techniques. Section 5 illustrates the application of our methodologies through a real-world example. Finally, we conclude with a succinct discussion in Section 6.

2. Model

2.1. Quantile Nonlinear DLVM

A nonlinear dynamic latent variable model (DLVM) comprises two distinct submodels. The first submodel is a measurement model, which serves to elucidate the connections between latent factors and their corresponding variables that are directly observable. The second submodel is a dynamic model, applied to investigate the time-delayed interactions among latent variables. The measurement model that establishes the connection between latent variables and their associated variables that are directly observable is delineated as follows:

where we encounter a vector of observable variables represented as , which is continuous with a vector of variables that are directly observable. Concurrently, there is , a vector of covariates that may have missing data. We also introduce , a vector that signifies hidden factors affecting our observations. The vector contains the K regression coefficients, and acts as the matrix, capturing the interplay between our manifest and latent variables. Furthermore, is a J-dimensional error term, which is uncorrelated with both and . The sample size is denoted by n, and represents the number of repeated measurements for each individual observation. In some cases, we might encounter a scenario where , which results in a balanced design. To move forward without losing any generality, let us set [4]. In the traditional framework of the dynamic latent variable model (DLVM), it is commonly accepted that the error term adheres to a multivariate normal distribution. If the normality assumption for is violated, it may result in biased parameter estimates. Consequently, it is crucial to develop a more robust approach within the context of dynamic factor analysis. To tackle this issue, we will investigate the conditional quantile regression (QR) of conditional on and for a specific quantile level , where . Thus, we focus on the conditional quantile regression (QR) of variable on and for the quantile level :

where the expression represents the th conditional quantile of given the covariates and the parameters . Here, denotes the conditional cumulative distribution function of based on the same covariates and parameters. The vectors and correspond to the jth row of the matrices and , respectively. Additionally, signifies the jth component of the vector , while is a regression coefficient with K-dimensions, and is a loading matrix of the latent factor associated with the quantile level . It is important to note that both and may exhibit variability across different quantiles, indicating that distinct values of are associated with varying coefficients and loading matrices of the latent factors.

According to Kozumi et al. [13], the quantile regression model, denoted by Equation (2), can be re-expressed in the subsequent hierarchical models:

where the variable follows an exponential distribution characterized by the parameter , denoted as Exp(). The probability density function for given is expressed as . Additionally, the variable . Furthermore, the parameters and are defined as follows: and .

The model, which operates on a dynamic basis, for investigating the ties among the latent variables is considered to be:

In the model being proposed, the vector , with dimensions of encapsulates the random influences that are constant over time and unique to each individual. As a vector of functions that vary and are differentiable over time, maps the interaction between the latent variables at time t and their levels one time unit earlier. The vector consists of parameters that are invariant across both time and individuals, while denotes a covariance matrix associated with . In the standard dynamic models, it is a typical assumption that the are independent and identically follow a multivariate normal distribution. Nevertheless, in certain cases [14], the presupposition of a normal distribution for the vector may not be appropriate. To address this issue, we propose that is drawn from an unknown distribution, specifically, , The notation here is meant to represent a probability distribution that is random and without a fixed form.

In order to conduct Bayesian analysis on the parameters , we employ a Dirichlet process (DP) prior to approximate the distribution . Specifically, we assume that , where serves as a basic distribution that sets up a preliminary scaffold for an uncharted distribution. The parameter is a positive constant that represents the probability assigned by the user to the base distribution . The choice of is contingent upon the characteristics of the dataset under investigation.

In accordance with the methodologies established by Blei et al. [15], we make use of the stick-breaking technique to simulate the DP prior. Specifically, we articulate as

Here, we have the Dirac delta measure, denoted as , which is tightly focused on the vector . This vector is an array that encapsulates the potential values of . The values represent random probability weights that adhere to the constraints of being between 0 and 1, with their total summing to 1 across all s from 1 to infinity. The structure of suggests that it is created from a variety of point masses or elongated sticks with different lengths, each anchored at different points defined by . To facilitate the sampling of observations, we delve into the concept of a truncated Dirichlet Process (DP) for :

where the values are set by the stick-breaking strategy presented below:

with for , and .

Given the presence of some complex posterior distributions, optimizing the posterior distribution of using the previously defined DP prior through a variational Bayesian approach is not feasible. To tackle this challenge, we define in relation to a latent variable , which tracks the cluster affiliation of each and correlates with through . Consequently, Equation (4) can be reformulated as:

where .

2.2. Mechanism of Missing Data

In this study, we acknowledge that both and may have some missing values in their data. We can break down into two parts: the observed data, , and the missing data, . Similarly, for , we have the observed portion, , and the missing portion, . This distinction allows us to clearly identify which data points we have and which ones are still elusive. Let be a vector of missing indicators for which has dimensions , i.e., if is missing and if is observed for . Likewise, we establish to represent the missing data indicator for , with indicating missing data for , and indicating observed data. To streamline notation, we define and as the probability density functions for and , respectively, Here, and represent the parameter vectors linked to the missing data indicators and .

Given that is independent of for , owing to the binary nature of , we consider the following model: :

where the probability is contingent upon the missing entries in , signifying that the mechanism of data missingness under consideration is not random, that is, it is nonignorable. Drawing parallels with the work of Tuerde et al. [7], through the use of a collection of univariate dimensional conditional probability distributions, we can detail the mechanism underlying the patterns of missing data within the model for , That is to say

where represents an unknown parameter vector linked to the conditional distribution of , conditioned on the set , where and . Here, denotes the element of for , and . Considering as a binary indicator, it follows that:

Here, the probability s influenced by the missing entries within , suggesting that the data’s missingness mechanism being examined is not ignorable.

In line with the research conducted by Lee and Tang [16], the likelihood Pr can be delineated as

where is a vector of the regression coefficients that are independent of both time and individual factors. In certain scenarios, it is plausible to account for a time-varying mechanism affecting data missingness. Consequently, an analogous model can be applied to determine ; following Tuerde et al. [7], we define using the probit regression model:

where ; the denotes the standard normal CDF’s inverse transformation. To establish a foundational normal regression framework, we introduce latent variables. Specifically, by defining the latent variables , and , model (8) can be rewritten as

where .

Drawing from Equation (9), the conditional probability density function as given can be characterized by the expression

where .

2.3. The Missing Covariates Distribution

Adhering to the methodology of Tuerde et al. [7], the joint density function can be derived by linking a chain of the forthcoming univariate dimensional conditional distributions :

where and can be estimated and linked to the k-th conditional distribution , , , and . Equation (10) demonstrates flexibility in defining the distribution of covariates with missing data, irrespective of whether they are discrete random variables or continuous random variables. To allow for a broader range of distributions, it is posited that the missing covariate and ) conforms to a distribution within the exponential family:

where the functions are strictly positive, while and are predetermined functions. The probability density function , as delineated in Equation (11), encompasses special instances such as the binomial distribution, Gaussian distribution, and gamma distribution. We give thought to the following model for :

where the link function is identified and is strictly monotonically increasing, with differentiable attributes, and .

3. Variational Bayesian Inference

Variational Bayes

For ease of understanding, we refer to , , and , in which encompasses all the parameters that are unknown and related to , and , , , , , , , , , ; in light of the assumption stated above, the integrated posterior density of considering r and , assumes the subsequent form

where , , , is a parameter set related to the distribution of , denotes the prior distribution of . Obviously, the task of obtaining a closed-form representation of is quite arduous, given the intricate high-dimensional integrals at play, suggesting that Bayesian inference on is predicated on . Addressing this issue, augmenting the sets with the observed data in the Bayesian analysis facilitates the estimation of the posterior density that is conducive to Bayesian inference without the complexity of high-dimensional integrals.

According to the principles of variational inference, the initial step involves creating a variational family of probability density functions for the random variable ℜ, which is designed to have the same support as the posterior distribution , where . It is thought that , which is in , is a variational density for approximating . The variational Bayes strategy aims to identify the most fitting approximation to by minimizing the Kullback–Leibler divergence between and , tackling the optimization problem:

where

where the term in this case refers to the expected value relative to . The Kullback–Leibler divergence is zeroed out if, and only if, is equivalent to . The optimization problem is very difficult due to the complex high-dimensional integral.

Alternatively, it is demonstrated from that

Therefore, ℜ is considered to be the minimum achievable value for , commonly known as the Evidence Lower Bound (ELB) (see Appendix A). Following this, minimizing the Kullback–Leibler divergence is the same as maximizing , because is not associated with ℜ. This means that,

The goal of identifying the closest approximation to becomes an issue of maximizing the problem of within the variational family . Due to the complexity inherent in the variational set , the optimization task becomes particularly challenging. As such, it is more reasonable to pursue optimization over a simpler variational set .

To develop a straightforward variational set based on prevalent techniques, we define as the mean-field variational family, where each element operates independently, and every element has a unique factor in the variational density. Thus, it can be inferred that the variational density takes a specific form:

where s are not explicitly defined, the assumed separation into distinct components is already set. Consistent with existing procedures in the variational literature, the best possible solutions for can be obtained by maximizing through the method of coordinate ascent.

Following the approach of the coordinate ascent method as referenced in [17,18,19], when keeping fixed the other variational factors for , i.e., , the optimal variational density maximizing in terms of is given by the following form:

where the function denotes the conditional distribution of given , and signifies the expected value with respect to . Equation (13) shows that is detached from the sth variational component , and the optimal variational density is inaccessible because the on the right-hand side are not optimal. To resolve this issue, the coordinate ascent procedure is iteratively applied to refine according to Equation (13). Upon reaching convergence, either the mean or mode of the optimal variational density is picked to approximate the parameter vector s using a variational Bayesian framework.

From Equation (13), it is straightforward to deduce that the optimal density assumes the form:

where

The optimal density is the multivariate normal distribution

where

The optimal density , ,

where is the l-th element associated with the mass point s in the set or sequence , and is the l-th element in the set or sequence .

The optimal density is a multinomial distribution

where , , , ,

where is a digamma function.

Let be the d unique values (i.e., unique number of a cluster), , and let be components in other than . Then, the optimal density has the form:

The optimal density of each of the elements of is

where is

due to being a differentiable linear or nonlinear function, which is difficult to optimize. In order to optimize convenience, we sometimes relax the conditions.

Let , and the dynamic model have the form

following model (14); model (15) has , where we consider the components optimal density of .

The optimal density of component has the form

where

where is the component of the mean vector , is the diagonal component of covariance , , , .

The optimal density of component has the form

where

where is the component of the mean vector , is the diagonal component of the covariance , , , .

The optimal density of the component has the form

where

where is the component of the mean vector , is the diagonal component of the covariance , , , .

The optimal density of the latent variable , is a multivariate normal distribution,

where , , , .

Let , , . Due to , . We assumption . , . so , let ,

where

The optimal density has the form

where , .

. To define the matrix for model identifiability, we define identifiable indicators for . If is fixed, . If is random, . Let , matrix is delete row. , where .

The optimal density has the form

where ,

,

The optimal density has the form

where , , is generalized Gaussian distribution.

The optimal density has the form

where , , , , , , .

Let , , be the components of corresponding to . According to the assumption of the missing data mechanism ,

following the idea of the coordinate ascent method

in (17) is difficult to optimize. Following Durante et al. [20]

where , following the Pólya-Gamma distribution.

The optimal density has the form

where , , , , , .

Following Durante et al. [20], we introduce the auxiliary variables . The optimal density has the form

where , , ,

The optimal density has the form

where , .

4. Simulation Studies

This section describes three model-based research studies intended to explore the practicality of the previously discussed variational Bayesian methods.

Simulation 1. We examine a quantile dynamic latent variable model represented by the equation:

where and the components and are defined as follows:

where it = (ζ1it, ζ2it) ∼ (0, ). εjit s an error term whose distribution is restricted to have the quantile equal to zero for j = 1, …, J, i = 1, …, n, and t = 1, …, T. Let i = (μ11i, μ22i, μ12i, μ21i), and denote Λ as a J × q loading matrix of the latent factor; corresponds to the j-th row vector within the matrix Λ. For the realization of this simulation, we derive the elements of from the subsequent probability distributions:

Employing these distributions, we aim to show that the Dirichlet Process (DP) prior is well suited to capturing the essence of a normal distribution. For the purpose of ensuring identifiability, we delineate the frameworks of the matrices and in the subsequent manner:

where J = 8 and q = 2. In this experiment, the values of one and zero are considered known parameters, while the parameters , , , , , , , and remain unknown. The true values of the unknown parameters in = (θ1,…, θJ)⊤, and are specified as follows: θ1 = … = θ8 = 1.0, = = 1.0, = −0.5, and = = = = = = 0.8, J = 8, T = 10, n = 30 and τ = 0.75,0.5, 0.25.

To analyze the impact of the measurement error distribution on the precision of the parameter estimation, we will explore three distinct distributions for the variable :

case 1: ;

case 2: ;

case 3: is produced from a mixture of normal distributions, ; for , , and . As per the aforementioned details, the -th conditional quantile for is , , for . The measurement error distributions (case 1)–(case 3), are taken as the -th quantile of the distributions (case 1)–(case 3), respectively.

We recognize that ’s are affected by non-random missing data, and missing indicators are formulated using the logistic regression model:

where . The actual parameter values in the vector are established as follows: , , and . Additionally, the mean proportion of missing responses is approximately 18%.

In order to derive Bayesian estimates for the unknown parameters, it is necessary to define the hyperparameters as follows: , , , , , , , , , , , , and , for , for and , , and for , , , where is the true value of , , , , , , , and to be 0.5 and 0.03 for the first two and last two elements of , respectively.

In this intriguing simulation study, we conducted 100 replications to uncover the pivotal variables and measure the model’s parameters. The results for quantiles 0.75, 0.5, and 0.25 are laid out in Table 1. Here, ‘Bias’ represents the gap between the true value and the average of its estimates from our 100 simulations, while ‘SD’ denotes the standard deviation of these estimates. Meanwhile, ‘RMS’ stands for the root mean square deviation between the replication estimates and the actual value. A glance at Table 1 reveals that our proposed estimate procedure shines brightly, showcasing minimal bias and RMS, with SD values closely mirroring the RMS, regardless of the quantile or error distribution. To keep things concise, we have opted to omit the SD values for the parameters being estimated. The simulation results affirm that our variational estimation method retains impressive efficiency across various error assumptions.

Table 1.

Performance of Bayesian parameter estimates for and 0.75 in Simulation 1.

Simulation 2. Paralleling the procedures of Simulation 1, we assess the forthcoming dynamic latent variable model:

where , , , , with

for , and . For this analysis, is produced following the approach of Simulation 1. The covariates are distributed according to the Bernoulli distribution , where , not dependent on the subjects or observation time points. On the other hand, are simulated from the normal distribution . The true values of the parameters and are adopted from those specified in Simulation 1. In contrast, the true values of , , and are set to , , and , respectively. With these settings, the -th conditional quantile of is expressed as , where and , with prescribed for Simulation 1 for , and for . In this scenario, we set , , , , and .

The assumption is that is fully accounted for, while and are possible due to nonignorable missing. The missing indicators and are deduced from the listed probit models below:

where , and . We set the true values of parameters and as and , respectively. The average portions of missing and are about 18% and 15%, respectively.

In the previous section, we delved into the variational Bayesian approach, utilizing the same hyperparameters as outlined in Simulation 1, with a few tweaks: , , , and for . This setup was employed to derive Bayesian estimates for the unknown parameters across 100 datasets generated multiple times. The findings for quantiles are showcased in Table 2. Table 2 demonstrates that the findings obtained are consistent with our conclusions.

Table 2.

Performance of Bayesian parameter estimates for , 0.5 and 0.75 in Simulation 2.

Simulation 3. In this experiment, we focus on following a quantile nonlinear dynamic factor analysis model:

where with

where it = (ζ1it, ζ2it)⊤ ∼ (0, ), the τ- value quantile of zjit is Qzjit(τ|θτj, it) = θτj + , θτj = θj + Q*(τ), Q*(τ) prescribed in Simulation 1, = for j = 1,…, J. = (, , , )⊤, and Λ is a J × q matrix of latent factor and corresponds to the j-th row vector within the matrix Λ. The elements of follow a normal distribution or uniform distributions:

, , and . We propose that is produced by a mixture of normal distributions, for which . All other parameters and settings are the same as Simulation 1.

For the process of deriving Bayesian estimates for the unknown parameters, it is a prerequisite to designate the hyperparameters as will be described below: , , , , , , , , , , , , and , for , for and , , and for , , , where is the true value of , , , , , , , and are 1 and 10 for the first two and last two elements of , respectively.

The assumption here is that suffers from nonignorable missing data, with the associated missing indicators being determined by the forthcoming logistic regression model:

where . The real values of the parameters in are fixed at , , and ; the expected proportion of missing responses is about 15.7%.

Throughout the simulation, 100 repetitions are undertaken to extract the active variables and to quantify the model parameters. The outcomes for some quantiles (i.e., ) are depicted in Table 3. A review of Table 3 indicates that the Bayesian estimates produced by the proposed methodology demonstrate satisfactory performance.

Table 3.

Performance of Bayesian parameter estimates for , 0.5 and 0.75 in Simulation 3.

5. A Real Example

To bring the aforementioned techniques to life, let us dive into the Ecological Momentary Assessment (EMA) dataset we touched upon earlier. We will be applying the conditional quantile Dynamic Factor Analysis Model (QDFAM) to this intriguing dataset:

for ; here, , . In our exploration, we define corresponding to the j-th element within the elusive parameter vector , while represents the j-th row in the loading matrix of the latent factor, which mirrors the setup from Simulation 1. following the excellent work of Tang et al. [5], We focus on the beginning and ending thresholds (where j ranges from 1 to 8 and k takes on values 1 and 6) for every single one of the eight traceable ordinal entries as . Here, corresponds to the observed aggregate marginal quantity for the j-th observed variable, derived from a sample of 174 individuals and 52 measurements for class k, where is less than or equal to k. Additionally, is . We operate under the premise that the distribution of remains unknown, while grapples with the challenge of missing values. The nature of this missingness is defined by a specific data mechanism, which is outlined as follows:

where ; when , it is a MAR mechanism.

The previously mentioned variational Bayesian method is utilized to derive estimates and to establish 95% confidence intervals for the parameters encompassed by , , and . Table 4 displays the results for .

Table 4.

Bayesian estimates, lower and upper limits of 95% confidence intervals of parameters for in a real example.

In light of Reference [21], to investigate the effects of negligible data perturbations on the priors and statistical distribution of samples using the aforementioned Bayesian local influence measures, the subsequent perturbation strategies are considered: , , for the MAR mechanism, , where is a vector filled with ones, and . When there is no perturbation, this is signified by . Within the framework of the perturbation strategies we discussed, the log-likelihood function for the model under perturbation is depicted as follows:

where and are the hyperparameters of the prior (i.e., ). It is simple to find that , where with for , , and

in which and represent the hyperparameters associated with the prior of (i.e., ), and denote the hyperparameters linked to the prior of (i.e., ) for . The remaining hyperparameters are assigned their respective Bayesian estimates as outlined in Table 4. Additionally, we initialize at 0.5 and set , and .

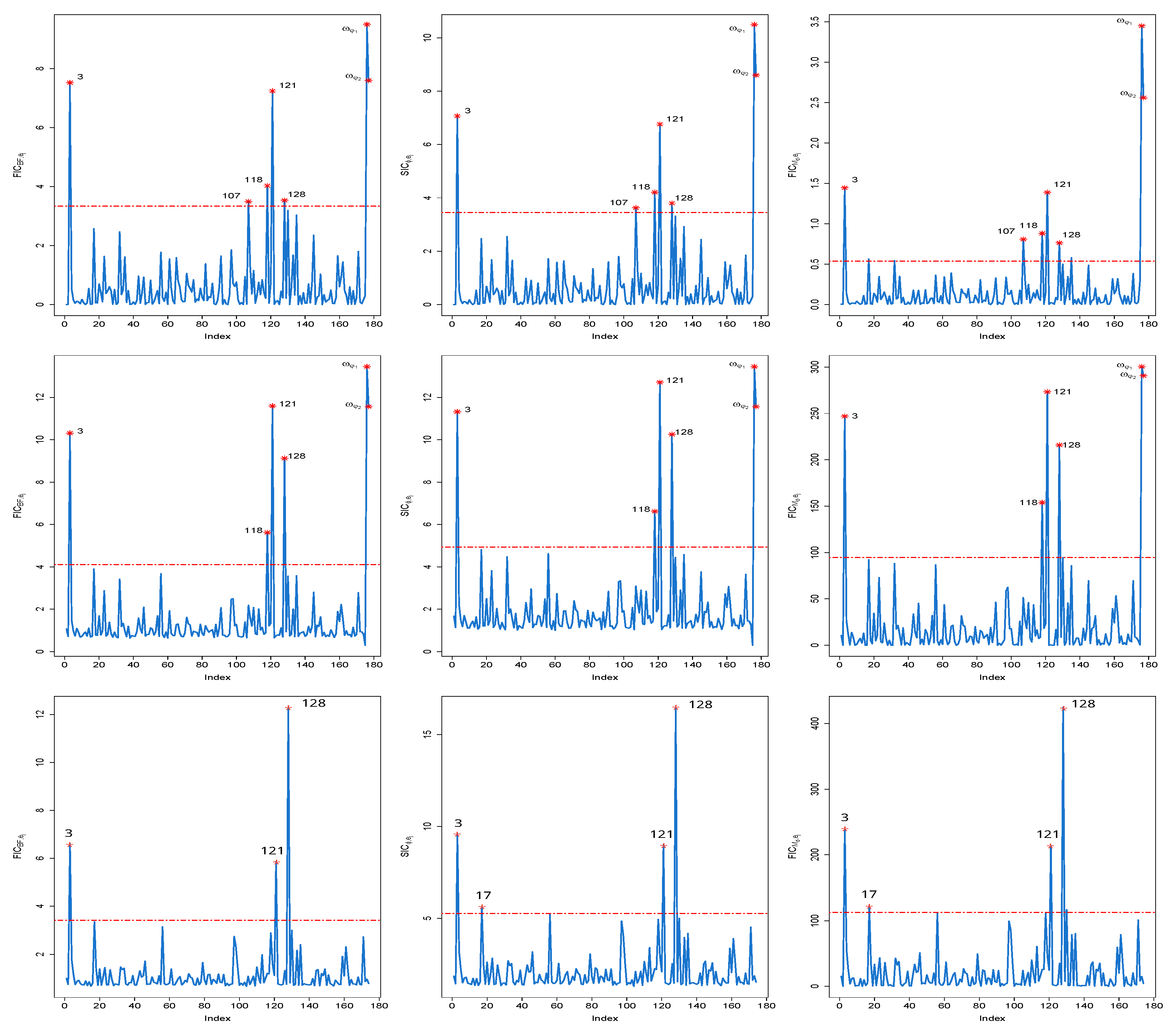

In this real example, the Bayesian local influence diagnostics, which include the Bayes factor (denoted as ), -divergence (represented as ), and posterior mean distance (indicated as ) [21], are assessed based on the parameter vector . This evaluation is conducted using 200 observations, which were obtained through the previously developed variational Bayesian algorithm in conjunction with the specified prior distributions. In the enchanting field of Bayesian local influence diagnostics, we present our findings alongside their benchmarks, beautifully captured in Figure 1. A closer inspection of this visual wonder reveals that cases 3, 121, and 128 emerge as the stars of the show, wielding their influence across all quantile levels, whether it is = 0.25, 0.5, or 0.75. Meanwhile, case 118 stands out at quantiles and . Case 107, alternatively, is continuously influential at quantile 0.25, irrespective of the local influence metrics used. Notably, the identification of as influential by three local influence measures raises concerns about the accuracy of the MAR assumption for missing data.

Figure 1.

Index plots of Bayesian local influence measures: (left panel), (middle panel) and (right panel) for (1st line), 0.5 (2nd line) and 0.75 (3rd line) in a real example.

In order to determine the influence of subjects, case 3, case 118, case 121, and case 128, we recompute the Bayesian estimations for the unknown parameters, omitting these individuals, by applying the variational Bayesian approach introduced earlier along with a mechanism for nonignorable missing data. The Bayesian estimations and their 95% confidence intervals for the parameters in , , , and , with the exclusion of individuals 128, 121, 118, and 3, are displayed in Table 4. Analysis of Table 4 reveals that the 95% confidence intervals for and do not include zero, and the fact that their minimum values are greater than zero substantiates the justification of the nonignorable hypothesis for missing data.

6. Discussion

This investigation considers the complex task of parameter estimation within nonlinear dynamic latent variable models, especially when the challenge of nonignorable missing data is present. These models, marked by their nonlinear latent variables and nonparametric priors, are analyzed using a Bayesian framework. To address the substantial computational demands typically associated with traditional dynamic latent variable models, a novel variational Bayesian technique is introduced to handle missing data and nonlinear dynamics effectively. Utilizing a Dirichlet Process (DP) prior, we can effectively define the unknown distribution of the random effects and integrate it with the variational Bayesian approach. This Bayesian framework has enabled us to develop a practical and robust algorithm that uses an asymmetric Laplace distribution, which combines exponential and normal distributions elegantly. To handle missing data, we employ logistic regression to model the missingness mechanism for observable variables and a truncated normal latent variable with a probit regression model for the propensity scores of missing covariates. In order to handle the mix of continuous and discrete covariates, we conceive a suite of univariate exponential family distributions to portray the joint distribution of the unobserved covariates. We also adapt the Bayesian local influence technique by Zhu et al. [21] to carry out a robustness assessment on quantile nonlinear dynamic latent variable models (DLVMs). Within the variational Bayesian methods framework, we turn the task of precise posterior density estimation into an optimization challenge, focusing on minimizing the evidence lower bound. To ensure computational efficiency, we employ a coordinate ascent algorithm to optimize this lower bound.

Our Bayesian estimates, as evidenced by empirical findings, maintain a high level of accuracy across different quantile levels and missing data mechanisms. We use a real dataset from an EMA study to illustrate our methodologies. However, it is noteworthy that this article refrains from delving into the complexities of high-dimensional random effect models or the more intricate direct relationships between latent variables challenges that are worthy of separate investigation. These engaging issues are perfect for future research pursuits.

Author Contributions

Conceptualization, M.T.; writing—original draft preparation, M.T.; writing—review and editing, A.M.; supervision, M.T.; methodology, M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Open Project of Key Laboratory of Applied Mathematics of Xinjiang Uygur Autonomous Region (grant no. 2023D04045).

Data Availability Statement

All data generated or analyzed during this study are included in this published article.

Conflicts of Interest

The authors declare that there are no conflicts of interest.

Appendix A. Calculation the Evidence Lower Bound (ELB)

References

- Diener, E.; Fujita, F.; Smith, H. The personality structure of affect. J. Personal. Soc. Psychol. 1995, 69, 130–141. [Google Scholar] [CrossRef]

- Chow, S.M.; Nesselroade, J.R.; Shifren, K.; Mcardle, J.J. Dynamic structure of emotions among individuals with parkinson’s disease. Struct. Equ. Model. Multidiscip. J. 2004, 11, 560–582. [Google Scholar] [CrossRef]

- Zhang, Z.Y.; Nesselroade, J.R. Bayesian Estimation of Categorical Dynamic Factor Models. Multivar. Behav. Res. 2007, 42, 729–756. [Google Scholar] [CrossRef]

- Chow, S.M.; Tang, N.S.; Yuan, Y.; Song, X.Y.; Zhu, H.T. Bayesian estimation of semiparametric dynamic latent variable models using the dirichlet process prior. Br. J. Math. Stat. Psychol. 2011, 64, 69–106. [Google Scholar] [CrossRef] [PubMed]

- Tang, N.S.; Chow, S.M.; Ibrahim, J.G.; Zhu, H.T. Bayesian sensitivity analysis of a nonlinear dynamic factor analysis model with nonparametric prior and possible nonignorable missingness. Psychometrika 2017, 82, 875–903. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.Q.; Tang, N.S. Bayesian quantile regression with mixed discrete and nonignorable missing covariates. Bayesian Anal. 2020, 15, 579–604. [Google Scholar] [CrossRef]

- Tuerde, M.; Tang, N.S. Bayesian semiparametric approach to quantile nonlinear dynamic factor analysis models with mixed ordered and nonignorable missing data. Statistics 2022, 56, 1166–1192. [Google Scholar] [CrossRef]

- Little, R.J.A.; Rubin, D.B. Statistical Analysis with Missing Data, 3rd ed.; John Wiley & Sons: New York, NY, USA, 2019. [Google Scholar]

- Bańbura, M.; Modugno, M. Maximum likelihood estimation of factor models on datasets with arbitrary pattern of missing data. J. Appl. Econ. 2014, 29, 133–160. [Google Scholar] [CrossRef]

- Jungbacker, B.; Koopman, S.J.; van der Wel, M. Maximum likelihood estimation for dynamic factor models with missing data. J. Econ. Dyn. Control 2011, 35, 1358–1368. [Google Scholar] [CrossRef]

- Stock, J.H.; Mark, W.W. Macroeconomic Forecasting Using Diffusion Indexes. J. Bus. Econ. Stat. 2002, 20, 147–295. [Google Scholar] [CrossRef]

- Stock, J.H.; Watson, M.W. Dynamic Factor Models, Factor-Augmented Vector Autoregressions, and Structural Vector Autoregressions in Macroeconomics. In Handbook of Macroeconomics; Elsevier B.V.: Amsterdam, The Netherlands, 2016; pp. 415–525. [Google Scholar]

- Kozumi, H.; Kobayashi, G. Gibbs sampling methods for Bayesian quantile regression. J. Stat. Comput. Simul. 2011, 81, 1565–1578. [Google Scholar] [CrossRef]

- Tang, A.M.; Tang, N.S. Semiparametric Bayesian inference on skew-normal joint modeling of multivariate longitudinal and survival data. Stat. Med. 2015, 34, 824–843. [Google Scholar] [CrossRef] [PubMed]

- Blei, D.; Jordan, M.I. Variational inference for Dirichlet process mixtures. Bayesian Analysis 2006, 1, 121–143. [Google Scholar] [CrossRef]

- Lee, S.Y.; Tang, N.S. Analysis of nonlinear structural equation models with nonignorable missing covariates and ordered categorical data. Stat. Sin. 2006, 16, 1117–1141. [Google Scholar]

- Beal, M.J. Variational Algorithms for Approximate Bayesian Inference. Ph.D. Thesis, University of London, London, UK, 2003. [Google Scholar]

- Bishop, C. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006. [Google Scholar]

- Blei, D.M.; Kucukelbir, A.; McAuliffe, J.D. Variational inference: A review for statisticians. J. Am. Stat. Assoc. 2017, 518, 859–877. [Google Scholar] [CrossRef]

- Durante, D.; Rigon, T. Conditionally Conjugate Mean-Field Variational Bayes for Logistic Models. Stat. Sci. 2019, 34, 472–485. [Google Scholar] [CrossRef]

- Zhu, H.T.; Ibrahim, J.G.; Tang, N.S. Bayesian influence analysis: A geometric approach. Biometrika 2011, 98, 307–323. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).