Abstract

This paper focuses on the weighted complementarity problem (WCP), which is widely used in economics, science and engineering. Smoothing Newton methods are widely applied to optimization problems, not least because of their local superlinear convergence rate. We propose a two-step smoothing Newton method with strong convergence properties. Using a smoothing complementarity function, the WCP is reformulated as a smooth system of equations and solved by the proposed method. In each iteration, the new method solves the Newton equation twice with the same Jacobian, which avoids a second Jacobian evaluation and keeps the cost per iteration low. To ensure global convergence, a derivative-free line search rule is used. In addition, we introduce a new term in the smoothing Newton equation, which guarantees strong local convergence. Under appropriate conditions, the algorithm has at least quadratic, and possibly cubic, local convergence. Numerical experiments indicate the stability and effectiveness of the new method. Moreover, compared with the general smoothing Newton method, the two-step smoothing Newton method significantly improves computational efficiency without a notable increase in computational cost.

Keywords:

weighted complementarity problem; derivative-free line search; two-step smoothing Newton method; superquadratic convergence property

MSC:

65K05; 90C33

1. Introduction

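The weighted complementarity problem (WCP for short) is to find $(x, s, y)$ such that

$$x \ge 0, \quad s \ge 0, \quad xs = w, \quad F(x, s, y) = 0, \qquad (1)$$

in which $x, s \in \mathbb{R}^n$, $y \in \mathbb{R}^m$, $w \in \mathbb{R}^n_+$ is a known weight vector, $F: \mathbb{R}^{2n+m} \to \mathbb{R}^{n+m}$ is a nonlinear mapping and $xs$ denotes the componentwise product of $x$ and $s$.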
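To make the conditions in (1) concrete, the following minimal Python sketch measures how far a candidate point is from solving a WCP. The function name `wcp_violation` and the convention of passing the already evaluated value of the nonlinear mapping as `F_value` are illustrative assumptions, not part of the original formulation.

```python
def wcp_violation(x, s, w, F_value):
    """Largest violation of x >= 0, s >= 0, x*s = w and F(x, s, y) = 0.

    x, s, w are equal-length lists of floats; F_value is the list of
    components of the nonlinear mapping evaluated at the candidate point
    (hypothetical calling convention chosen for this sketch).
    """
    # Violation of the sign constraints: zero when all entries are nonnegative.
    sign = max([0.0] + [-t for t in x] + [-t for t in s])
    # Violation of the weighted complementarity condition x_i * s_i = w_i.
    weighted = max(abs(xi * si - wi) for xi, si, wi in zip(x, s, w))
    # Violation of the equation F(x, s, y) = 0.
    equation = max([0.0] + [abs(t) for t in F_value])
    return max(sign, weighted, equation)


# A point that satisfies all conditions for the weight vector w = (2, 0).
print(wcp_violation([2.0, 0.0], [1.0, 3.0], [2.0, 0.0], [0.0]))
```

Components with weight zero recover the ordinary complementarity condition, which is why the WCP extends the CP.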

The WCP, first introduced by Potra [1], is an extension of the complementarity problem (CP) [2,3] and is widely used in engineering, economics and science. As shown in [1], Fisher market equilibrium problems from economics can be transformed into WCPs, and quadratic programming and weighted centering problems can be equivalently converted to monotone WCPs. Moreover, the WCP has potential applications in atmospheric chemistry [4,5] and multibody dynamics [6,7].

When $F$ is a linear mapping, the WCP (1) reduces to the weighted linear complementarity problem (WLCP) as

where , , . The WLCP has been studied extensively, and many effective algorithms have been proposed. Potra [1] proposed two interior-point algorithms and discussed their computational complexity and convergence based on the methods of McShane [8] and Mizuno et al. [9]. Gowda [10] discussed a class of WLCPs over Euclidean Jordan algebras. Chi et al. [11,12] proposed infeasible interior-point methods for WLCPs, which have good computational complexity. Asadi et al. [13] presented a modified interior-point method and obtained an iteration bound for the monotone WLCP.

On the other hand, recent years have witnessed growing development of smoothing Newton methods for WCPs. Their basic idea is to convert the problem into a smooth system of nonlinear equations by employing a smoothing function; this system is then solved by Newton's method [14,15,16,17,18,19]. Zhang [20] proposed a smoothing Newton method for the WLCP. For WCPs over Euclidean Jordan algebras, Tang et al. [21] presented a smoothing method and analyzed its convergence under weaker assumptions.

The two-step Newton method [22,23,24], which typically achieves third-order convergence when solving nonlinear equations , has a higher order of convergence than the classical Newton method. The two-step Newton algorithm computes not only a Newton step defined as

but also an approximate Newton step as

where and represents the Jacobian matrix of . Compared with classical third-order methods such as Halley’s method [25] or the super-Newton method [26], the two-step Newton algorithm does not need to compute second-order derivatives, so its computational cost is lower. Without additional derivative evaluations or matrix inversions, the order of convergence is raised from two to three at the cost of only one extra function evaluation.
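The idea of reusing one Jacobian for both steps can be illustrated with a minimal Python sketch of the classical two-step Newton iteration for a generic system of nonlinear equations. This is not Algorithm 1 of this paper: the test system `G`, its Jacobian `jac`, the starting point and all function names are hypothetical choices made only for illustration.

```python
import math


def solve2(J, r):
    """Solve the 2x2 linear system J u = r by Cramer's rule."""
    (a, b), (c, d) = J
    det = a * d - b * c
    return [(r[0] * d - b * r[1]) / det, (a * r[1] - c * r[0]) / det]


def G(x):
    # Hypothetical test system: x0^2 + x1^2 = 4 and x0 * x1 = 1.
    return [x[0] ** 2 + x[1] ** 2 - 4.0, x[0] * x[1] - 1.0]


def jac(x):
    # Jacobian matrix of G at x.
    return [[2.0 * x[0], 2.0 * x[1]], [x[1], x[0]]]


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def newton(x, tol=1e-12, max_iter=50):
    """Classical Newton method; returns the iterate and the iteration count."""
    for k in range(max_iter):
        g = G(x)
        if norm(g) <= tol:
            return x, k
        step = solve2(jac(x), [-t for t in g])
        x = [x[i] + step[i] for i in range(2)]
    return x, max_iter


def two_step_newton(x, tol=1e-12, max_iter=50):
    """Two-step Newton method: two linear solves per iteration, one Jacobian."""
    for k in range(max_iter):
        g = G(x)
        if norm(g) <= tol:
            return x, k
        J = jac(x)  # evaluated once and reused below
        step1 = solve2(J, [-t for t in g])  # Newton step
        z = [x[i] + step1[i] for i in range(2)]
        step2 = solve2(J, [-t for t in G(z)])  # approximate Newton step, same J
        x = [z[i] + step2[i] for i in range(2)]
    return x, max_iter


x_newton, it_newton = newton([2.0, 0.5])
x_two, it_two = two_step_newton([2.0, 0.5])
print("Newton iterations:", it_newton, "two-step iterations:", it_two)
```

In a practical implementation the Jacobian would be factorized once per iteration and the factorization reused for the second solve, which is where the savings come from for larger systems.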

In light of these considerations, we present a two-step Newton algorithm with a high-order convergence rate for the WCP (1), based on a smoothing complementarity function and an equivalent smooth system of equations. The new algorithm has the following advantageous properties:

- The proposed method computes the Newton direction twice in each iteration. The first calculation yields a Newton direction, and the second yields an approximate Newton direction. Moreover, both calculations employ the same Jacobian matrix (see Section 3), which saves computing costs.

- Unlike existing Newton algorithms for the WCP [20,21], the new algorithm uses a new term where (see Section 3) when computing the Newton direction; this term determines the local convergence rate. In particular, when , the algorithm has local cubic convergence.

- To obtain global convergence properties, we employ a derivative-free line search rule.

This paper is structured as follows. Section 2 presents a smoothing function and discusses its basic properties. Section 3 presents a derivative-free two-step smoothing Newton algorithm for the WCP and shows that it is well defined. Section 4 establishes its convergence properties. Section 5 reports numerical results. Section 6 gives concluding remarks.

2. Preliminaries

We define a smoothing function as

where and is a given constant. It readily follows that if and only if

By simple reasoning and calculations, we can conclude the following.

Lemma 1.

For any , is continuously differentiable, where

In addition, and .
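As a concrete illustration of what a smoothing function for the WCP can look like, the sketch below uses a weighted Fischer-Burmeister-type function. It is a stand-in chosen for illustration and is not claimed to be the function defined above; the names `phi` and `grad_ab`, the argument order and the way the smoothing parameter `mu` enters are all assumptions.

```python
import math


def phi(mu, a, b, c):
    """Hypothetical weighted Fischer-Burmeister-type smoothing function.

    Assumes c >= 0. For mu = 0, phi vanishes exactly when
    a >= 0, b >= 0 and a * b = c.
    """
    return math.sqrt(a * a + b * b + 2.0 * c + 2.0 * mu * mu) - a - b


def grad_ab(mu, a, b, c):
    """Partial derivatives of phi with respect to a and b (needs a positive root)."""
    r = math.sqrt(a * a + b * b + 2.0 * c + 2.0 * mu * mu)
    return a / r - 1.0, b / r - 1.0


# (a, b) = (2, 3) with weight c = 6 satisfies a >= 0, b >= 0, a * b = c.
print(phi(0.0, 2.0, 3.0, 6.0))
# Same product but negative entries: the function is nonzero.
print(phi(0.0, -2.0, -3.0, 6.0))
# Both partial derivatives are negative for mu != 0.
print(grad_ab(0.1, 2.0, 3.0, 6.0))
```

For this stand-in, both partial derivatives are strictly negative whenever `mu` is nonzero and `c` is nonnegative, which is the kind of sign property that nonsingularity arguments for the Jacobian typically rely on.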

Let and ; we define by

where

It follows that the WCP (1) can be transformed into an equivalent equation:

The following lemma states the continuous differentiability of .

Lemma 2.

To discuss the nonsingularity of the Jacobian matrix , we need the following assumption.

Assumption 1.

Suppose that has full column rank, and that any with

yields .

For the WLCP (2), i.e., when is a linear mapping, Assumption 1 reduces to

which shows that is monotone. This case has been discussed in connection with the feasibility of smoothing algorithms for the WLCP; see [1,20,27] and the references therein.

Theorem 1.

If Assumption 1 holds, then for any , is nonsingular.

Proof of Theorem 1.

By Lemmas 1 and 2, the diagonal matrices and are both negative definite. From (12), we get

and then

3. A Two-Step Newton Method

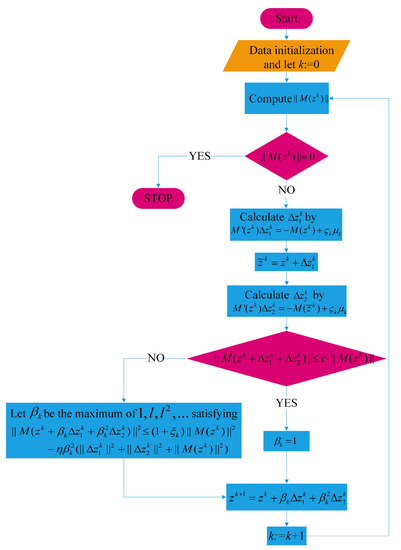

We now state the two-step smoothing Newton method (Algorithm 1). To make Algorithm 1 easier to follow, we also give its flow chart in Figure 1.

Algorithm 1. A Two-Step Newton Method.

Figure 1.

Flow chart of Algorithm 1.

Remark 1.

1. In each iteration, Algorithm 1 computes the Newton directions from two equations using a new term , which is essential for establishing the strong local convergence of Algorithm 1. Moreover, although Algorithm 1 computes a Newton direction twice, both computations share the same Jacobian, so its computational cost is comparable to that of the classical Newton method.

2. Algorithm 1 employs a derivative-free line search rule, a variant of that in [28]. As shown in Theorem 2, the new derivative-free line search is well defined.
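To illustrate how a derivative-free line search of this kind can work, the following Python sketch implements a generic backtracking rule in the spirit of [28]. It is not the exact rule of Algorithm 1: the acceptance test, the relaxation parameter `eta`, the constants `sigma` and `delta`, and the function name are assumptions made for illustration. The rule only compares values of a merit function, so no gradient is needed, and the relaxation term lets it terminate even when the search direction is not a descent direction.

```python
def df_line_search(merit, z, d, eta, sigma=1e-4, delta=0.5, max_backtracks=60):
    """Backtracking line search that uses merit values only.

    Returns the largest alpha in {1, delta, delta^2, ...} such that
        merit(z + alpha * d) <= (1 + eta) * merit(z) - sigma * alpha^2 * ||d||^2.
    With eta > 0 and merit(z) > 0 the test holds for all small enough alpha.
    """
    m0 = merit(z)
    dd = sum(t * t for t in d)
    alpha = 1.0
    for _ in range(max_backtracks):
        trial = [zi + alpha * di for zi, di in zip(z, d)]
        if merit(trial) <= (1.0 + eta) * m0 - sigma * alpha * alpha * dd:
            return alpha
        alpha *= delta
    return alpha  # fallback after max_backtracks reductions


def merit(z):
    # Hypothetical merit function: squared norm of the residual H(z) = z.
    return sum(t * t for t in z)


# A Newton-like direction d = -z: the full step is accepted.
print(df_line_search(merit, [1.0, 2.0], [-1.0, -2.0], eta=0.5))
# An ascent direction d = +z: the rule still terminates with a small step.
print(df_line_search(merit, [1.0, 2.0], [1.0, 2.0], eta=0.5))
```

In convergence analyses of such rules, the relaxation parameters are usually required to be summable over the iterations, so that the merit values still converge even though they need not decrease strictly.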

Theorem 2.

If Assumption 1 holds, then Algorithm 1 is feasible. Moreover, we have

1. for any .

2. is monotonically non-increasing.

Proof of Theorem 2.

By Theorem 1, is invertible, so both Step 1 and Step 2 are well defined. Next, we show the feasibility of Step 4. Suppose not; then for any ,

Hence,

On the other hand, if is not a solution of (1), then it follows from Step 1 that

where the first equality comes from the fact that . This contradicts (21). Hence, Step 4 is feasible, and then Algorithm 1 is well-defined.

Then, it holds by Step 5 that

which means that due to the fact that and . Moreover, it follows that

i.e., is monotonically non-increasing. □

Lemma 3.

If Assumption 1 holds, then is convergent, and the sequence remains in the level set of

Proof of Lemma 3.

According to (18), we have

Since , it follows from Lemma 3.3 in [29] that is convergent. Then, is also convergent.

Moreover, we have

□

4. Convergence Properties

4.1. Global Convergence

Before analyzing the convergence properties of Algorithm 1, we first establish a key result.

Theorem 3.

If Assumption 1 holds, then it holds that

Proof of Theorem 3.

Define and by

and

Let be the number of elements in .

As , , i.e., , and then .

The proof is completed. □

Theorem 4.

If Assumption 1 holds, then converges to a solution of the WCP (1).

Proof of Theorem 4.

According to Lemma 3, we know that is convergent. Suppose, without loss of generality, that converges to and . Next, we show by contradiction. Assume that , then due to Theorem 3.

Let , it follows from Step 4 that

for sufficiently large k.

On the other hand, since

it follows that

It follows by simple calculation that

4.2. Local Convergence

We now discuss the local superquadratic convergence of Algorithm 1.

Theorem 5.

Suppose that Assumption 1 holds and that all are nonsingular. If and are both Lipschitz continuous on some neighborhood of , then

1. for any sufficiently large k.

2. converges to locally superquadratically. In particular, converges to locally cubically if .

Proof of Theorem 5.

By Theorem 4, we have that . Since all are nonsingular, we have for any sufficiently large k that

Since is Lipschitz continuous on some neighborhood of , is strongly semismooth and Lipschitz continuous on some neighborhood of , namely,

and

for any sufficiently large k.

By the definition of and , it follows that

Then, combining (15) and (32)–(35) implies

which means that is sufficiently close to for sufficiently large k. Then, according to (34) and (36), we have that

Hence, since , it follows from (16), (32), (35) and (37) that

combining with (34) and (36) yields

for any sufficiently large k, which also means that is sufficiently close to for a sufficiently large k, which, together with the Lipschitz continuity of on some neighborhood of , implies

Then, it holds that

5. Numerical Experiments

In this section, we implement Algorithm 1 and apply it to some numerical examples to verify its feasibility and effectiveness. All programs are implemented in MATLAB R2018b on a PC with a 2.30 GHz CPU and 16.00 GB of RAM. To illustrate the performance of the new algorithm, we also code the algorithm in [20], denoted as SNM_Z, and compare it with Algorithm 1. The stopping criterion is and the parameters are set as

For SNM_Z, the stopping criterion is the same as that in Algorithm 1, and the parameters are the same as in [20].

Example 1.

Consider an example of the WLCP (2) with

where , whose elements are produced by the normal distribution on , are chosen uniformly from and , respectively. The weighted vector is generated by with , where are generated uniformly from .

We test two kinds of problems using Algorithm 1 with different B, denoted by and . is produced by setting , where Q is generated uniformly from . The diagonal matrix is generated randomly on . The initial points , and are chosen as with relevant dimensions in every experiment.

First, to illustrate the influence of on the local convergence, we test different for each case on . We perform three experiments for each problem and present the numerical results in Table 1. In what follows, IT (AIT) denotes the (average) number of iterations, Time (ATime) is the (average) running time of the algorithm in seconds, and ERO (AERO) denotes the (average) value of in the last iteration. As Table 1 shows, Algorithm 1 has different local convergence rates for different values of . Moreover, Algorithm 1 has at least a local quadratic rate of convergence.

Table 1.

Numerical results for a WLCP with .

Then, we test an example of and for to visually demonstrate the local convergence properties of Algorithm 1 and SNM_Z. In what follows, we set in Algorithm 1. The results are shown in Table 2, which shows that Algorithm 1 converges locally at a cubic rate, faster than SNM_Z, which converges locally at a quadratic rate.

Table 2.

Variation of the value of with the number of iterations for .
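One common way to read a residual history of this kind is to estimate the empirical order of convergence from three consecutive residuals. The Python sketch below does this on synthetic sequences; the numbers are generated inside the script purely for illustration and are not the values reported in Table 2, and the function name is hypothetical.

```python
import math


def empirical_orders(residuals):
    """Estimate the order p_k = log(r_{k+1} / r_k) / log(r_k / r_{k-1}).

    residuals must be a list of positive, strictly decreasing values.
    """
    orders = []
    for k in range(1, len(residuals) - 1):
        num = math.log(residuals[k + 1] / residuals[k])
        den = math.log(residuals[k] / residuals[k - 1])
        orders.append(num / den)
    return orders


# Synthetic residual histories (not data from the experiments):
cubic = [0.5]
quadratic = [0.5]
for _ in range(3):
    cubic.append(cubic[-1] ** 3)          # r_{k+1} = r_k^3
    quadratic.append(quadratic[-1] ** 2)  # r_{k+1} = r_k^2

print(empirical_orders(cubic))
print(empirical_orders(quadratic))
```

Estimates close to 3 indicate cubic convergence and estimates close to 2 indicate quadratic convergence; with real data the last few residuals are often polluted by rounding errors and should be interpreted with care.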

Finally, we performed 10 random trials for each case. The results are shown in Table 3, which demonstrates that Algorithm 1 needs fewer iterations than SNM_Z. In addition, although Algorithm 1 calculates the Newton direction twice in each iteration, it does not take much more time than SNM_Z.

Table 3.

Numerical comparison results for a WLCP.

Example 2.

Consider an example of the WCP (1), where

with where is generated uniformly from , whose entries are produced from the standard normal distribution randomly. and are all generated randomly from .

We also generated 10 trials for each case. The initial points , and are all chosen as with relevant dimensions. The test results are shown in Table 4, which also indicates that Algorithm 1 is more stable and efficient than SNM_Z.

Table 4.

Numerical comparison results for a WCP.

6. Conclusions

The two-step Newton method, known for its efficiency in solving nonlinear equations, is adapted in this paper to solve the WCP. The key feature of the proposed method is that it reuses the same Jacobian matrix within each iteration, which improves the convergence rate without an additional Jacobian evaluation. To guarantee global convergence, a new derivative-free line search rule is introduced. With appropriate conditions and parameter selection, the algorithm achieves cubic local convergence. Numerical results show that, compared with the general smoothing Newton method, the two-step Newton method significantly improves computational efficiency without a notable increase in computational cost.

Author Contributions

Conceptualization, X.L.; methodology, X.L.; software, J.Z.; validation, X.L. and J.Z.; formal analysis, J.Z.; writing—original draft, X.L.; writing—review and editing, J.Z.; supervision, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data sets used in this paper are available from the corresponding authors upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Potra, F. Weighted complementarity problems-a new paradigm for computing equilibria. SIAM J. Optim. 2012, 22, 1634–1654.

2. Facchinei, F.; Pang, J. Finite-Dimensional Variational Inequalities and Complementarity Problems; Springer: New York, NY, USA, 2003.

3. Che, H.; Wang, Y.; Li, M. A smoothing inexact Newton method for P0 nonlinear complementarity problem. Front. Math. China 2012, 7, 1043–1058.

4. Amundson, N.; Caboussat, A.; He, J.; Seinfeld, J. Primal-dual interior-point method for an optimization problem related to the modeling of atmospheric organic aerosols. J. Optim. Theory Appl. 2006, 130, 375–407.

5. Caboussat, A.; Leonard, A. Numerical method for a dynamic optimization problem arising in the modeling of a population of aerosol particles. C. R. Math. 2008, 346, 677–680.

6. Flores, P.; Leine, R.; Glocker, C. Modeling and analysis of planar rigid multibody systems with translational clearance joints based on the non-smooth dynamics approach. Multibody Syst. Dyn. 2010, 23, 165–190.

7. Pfeiffer, F.; Foerg, M.; Ulbrich, H. Numerical aspects of non-smooth multibody dynamics. Comput. Method Appl. Mech. 2006, 195, 6891–6908.

8. McShane, K. Superlinearly convergent O(L)-iteration interior-point algorithms for linear programming and the monotone linear complementarity problem. SIAM J. Optim. 1994, 4, 247–261.

9. Mizuno, S.; Todd, M.; Ye, Y. On adaptive-step primal-dual interior-point algorithms for linear programming. Math. Oper. Res. 1993, 18, 964–981.

10. Gowda, M.S. Weighted LCPs and interior point systems for copositive linear transformations on Euclidean Jordan algebras. J. Glob. Optim. 2019, 74, 285–295.

11. Chi, X.; Wang, G. A full-Newton step infeasible interior-point method for the special weighted linear complementarity problem. J. Optim. Theory Appl. 2021, 190, 108–129.

12. Chi, X.; Wan, Z.; Hao, Z. A full-modified-Newton step infeasible interior-point method for the special weighted linear complementarity problem. J. Ind. Manag. Optim. 2021, 18, 2579–2598.

13. Asadi, S.; Darvay, Z.; Lesaja, G.; Mahdavi-Amiri, N.; Potra, F. A full-Newton step interior-point method for monotone weighted linear complementarity problems. J. Optim. Theory Appl. 2020, 186, 864–878.

14. Liu, L.; Liu, S.; Liu, H. A predictor-corrector smoothing Newton method for symmetric cone complementarity problem. Appl. Math. Comput. 2010, 217, 2989–2999.

15. Narushima, Y.; Sagara, N.; Ogasawara, H. A smoothing Newton method with Fischer-Burmeister function for second-order cone complementarity problems. J. Optim. Theory Appl. 2011, 149, 79–101.

16. Liu, X.; Liu, S. A new nonmonotone smoothing Newton method for the symmetric cone complementarity problem with the Cartesian P0-property. Math. Method Oper. Res. 2020, 92, 229–247.

17. Chen, P.; Lin, G.; Zhu, X.; Bai, F. Smoothing Newton method for nonsmooth second-order cone complementarity problems with application to electric power markets. J. Glob. Optim. 2021, 80, 635–659.

18. Zhou, S.; Pan, L.; Xiu, N.; Qi, H. Quadratic convergence of smoothing Newton’s method for 0/1 Loss optimization. SIAM J. Optim. 2021, 31, 3184–3211.

19. Khouja, R.; Mourrain, B.; Yakoubsohn, J. Newton-type methods for simultaneous matrix diagonalization. Calcolo 2022, 59, 38.

20. Zhang, J. A smoothing Newton algorithm for weighted linear complementarity problem. Optim. Lett. 2016, 10, 499–509.

21. Tang, J.; Zhang, H. A nonmonotone smoothing Newton algorithm for weighted complementarity problem. J. Optim. Theory Appl. 2021, 189, 679–715.

22. Potra, F.A.; Ptak, V. Nondiscrete induction and iterative processes. SIAM Rev. 1987, 29, 505–506.

23. Kou, J.; Li, Y.; Wang, X. A modification of Newton method with third-order convergence. Appl. Math. Comput. 2006, 181, 1106–1111.

24. Magrenan Ruiz, A.A.; Argyros, I.K. Two-step Newton methods. J. Complex. 2014, 30, 533–553.

25. Gander, W. On Halley’s iteration method. Amer. Math. Mon. 1985, 92, 131–134.

26. Ezquerro, J.A.; Hernandez, M.A. On a convex acceleration of Newton’s method. J. Optim. Theory Appl. 1999, 100, 311–326.

27. Tang, J. A variant nonmonotone smoothing algorithm with improved numerical results for large-scale LWCPs. Comput. Appl. Math. 2018, 37, 3927–3936.

28. Li, D.; Fukushima, M. A globally and superlinearly convergent Gauss-Newton-based BFGS method for symmetric nonlinear equations. SIAM J. Numer. Anal. 1999, 37, 152–172.

29. Dennis, J.; More, J. A characterization of superlinear convergence and its applications to quasi-Newton methods. Math. Comput. 1974, 28, 549–560.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).