Abstract

This expository paper advocates an approach to physics in which “typicality” is identified with a suitable form of algorithmic randomness. To this end various theorems from mathematics and physics are reviewed. Their original versions state that some property holds for P-almost all , where P is a probability measure on some space X. Their more refined (and typically more recent) formulations show that holds for all P-random . The computational notion of P-randomness used here generalizes the one introduced by Martin-Löf in 1966 in a way now standard in algorithmic randomness. Examples come from probability theory, analysis, dynamical systems/ergodic theory, statistical mechanics, and quantum mechanics (especially hidden variable theories). An underlying philosophical theme, inherited from von Mises and Kolmogorov, is the interplay between probability and randomness, especially: which comes first?

MSC:

60K35; 68Q30; 82C03

Dedicated to the memory of Marinus Winnink (1936–2023)

1. Introduction

The introduction of probability in statistical mechanics in the 19th century by Maxwell and Boltzmann raised questions about both the meaning of this concept by itself and its relationship to randomness and entropy [1,2,3,4,5]. Roughly speaking, both initially felt that probabilities in statistical mechanics were dynamically generated by particle trajectories, a view which led to ergodic theory. But subsequently Boltzmann [6] introduced his counting arguments as a new start; these led to his famous formula for the entropy on his gravestone in Vienna, i.e.,

probability first, entropy second.

This was turned on its head by Einstein [7], who had rediscovered much of statistical mechanics by himself in his early work, always stressing the role of fluctuations. He expressed the probability of energy fluctuations in terms of entropy seen as a primary concept. This suggests:

entropy first, probability second.

From the modern point of view of large deviation theory [8,9,10,11], what happens is that for finite N some stochastic process fluctuates around its limiting value as (if it has one), and, under favorable circumstances that often obtain in statistical mechanics, the “large” i.e., fluctuations (as opposed to the fluctuations, which are described by the central limit theorem [12]) can be computed via an entropy function whose argument x lies in the (common) codomain of . Since the domain of carries a probability measure to begin with, it seems an illusion that entropy could be defined without some prior notion of probability.

Similar questions may be asked about the connection between probability and randomness (and, closing the triangle, of course also about the relationship between randomness and entropy). First, in his influential (but flawed) work on the foundations of probability, von Mises [13,14] initially defined randomness through a Kollektiv (which, with hindsight, was a precursor to a random sequence). From this, he extracted a notion of probability via asymptotic relative frequencies. See also [5,15,16,17]. Von Plato [5] (p. 190) writes that ‘He [von Mises] was naturally aware of the earlier attempts of Einstein and others at founding statistical physics on classical dynamics’ and justifies this view in his Section 6.3. Thus:

randomness first, probability second.

Kolmogorov [18], on the other hand, (impeccably) defined probability first (via measure theory), in terms of which he hoped to understand randomness. In other words, his (initial) philosophy was:

probability first, randomness second.

Having realized that this was impossible, thirty years later Kolmogorov [19,20] arrived at the concept of randomness named after him, using tools from computer and information science that actually had roots in in the work of von Mises (as well as of Turing, Shannon, and others). See [5,15,21,22,23,24]. So:

Kolmogorov randomness first, measure-theoretic probability second.

But I will argue that even Kolmogorov randomness seems to rely on some prior concept of probability, see Section 3 and in particular the discussion surrounding Theorem 4; and this is obviously the case for Martin-Löf randomness, both in its original form for binary sequences (which is essentially equivalent to Kolmogorov randomness as extended from finite strings to infinite sequences, see Theorem 3) and in its generalizations (see Section 3). So I will defend the view that after all we have

some prior probability measure first, Martin-Löf randomness second.

In any case, there isn’t a single concept of randomness [25], not even within the algorithmic setting [26]; although the above slogan probably applies to most of them.

Motivated by the above discussion and its potential applications to physics, the aim of this paper is to review the interplay between probability, (algorithmic) randomness, and entropy via examples from probability itself, analysis, dynamical systems and (Boltzmann-style) statistical mechanics, and quantum mechanics. Some basic relations are explained in the next Section 2. In Section 3 I review algorithmic randomness beyond binary sequences. Section 4 introduces some key “intuition pumps”: these are results in which ‘for P-almost every x: ’ in some “classical” result can be replaced by ‘for all P-random x: ’ in an “effective” counterpart thereof; this replacement may even be seen as the essence of algorithmic randomness. In Section 5 I apply this idea to statistical mechanics, and close in Section 6 with some brief comments on quantum mechanics. The paper closes with a brief summary.

2. Some Background on Entropy and Probability

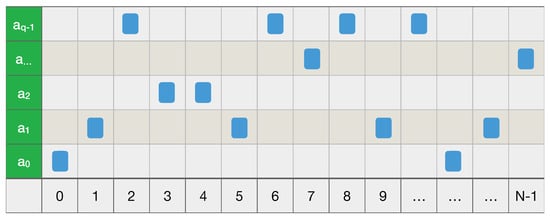

Consider Figure 1, which connects and illustrates the main examples in this paper.

Figure 1.

Sample configuration on N objects each of which can be in q different states.

Here is meant to be some large natural number, whereas , the cardinality of

could be anything (finite), but is small () in the already interesting case of binary strings. In what follows, is the set of all functions , where

as usual in set theory. Such a function is also called a string over A, having length

We write either or for its value at , and may write as . In particular, if , then is a binary string. I write

so that for example is the set of all binary strings. Thus a (binary) string is finite, whereas a (binary) sequence s is infinite. The set of all binary sequences is denoted by , and likewise consists of all functions . For , I write for , to be sharply distinguished from . Using this notation, I now review various ways of looking at Figure 1. Especially in the first two items below it is hard to avoid overlap with e.g., the reviews [27,28], which I recommend for further information.

- In statistical mechanics as developed by Boltzmann in 1877 [6], and more generally in what one might call “Boltzmann-style statistical mechanics”, which is based on typicality arguments [29], N is the number of (distinguishable) particles under consideration, and A could be a finite set of single-particle energy levels. More generally, is some property each particle may separately have, such as its location in cell relative to some partitionof the single-particle phase space or configuration space X accessible to each particle. Here and different are disjoint, which fact is expressed by the symbol ⨆ in (5). One might replace ⨆ by ⋃ as long as one knows that the subsets are mutually disjoint (and measurable as appropriate). The microstate is a functionthat specifies which property (among the possibilities in A) each particle has. Thus also spin chains fall under this formalism, where is some internal degree of freedom at site n. In Boltzmann-style arguments it is often assumed that each microstate is equally likely, which corresponds to the probability on defined byfor each . This is the Bernoulli measure on induced by the flat prior on A,for each . More generally, is the Bernoulli measure on induced by some probability distribution p on A; that is, the product measure of N copies of p; some people write . This extends to the idealized case , as follows. For we definewhere means that for some (in words: is a prefix of ). On these basic measurable (and open) sets we define a probability measure byIn particular, if , then its unbiased probability is simply given byIt is important to keep track of p even if it is flat: making no (apparent) assumption (which is often taken to be) is an important assumption! For example, Boltzmann’s famous counting argument [6] really reads as follows [30,31,32,33]. The formulaon Boltzmann’s grave should more precisely be something likewhere I omit the constant k and take to be the relevant argument of the (extensive) Boltzmann entropy (see below). Furthermore, is the probability (“Wahrscheinlichkeit”) of , which Boltzmann, assuming the flat prior (8) on A, took aswhere is the number of microstates whose corresponding empirical measureequals . Here, for any , is the point measure at b, i.e., , the Kronecker delta. The number is only nonzero if , which consists of all probability distributions on A that arise as for some . This, in turn, means that for some , with . In that case,The term in (14) of course equals for any and hence certainly for any for which . For such , for general Bernoulli measures on we havein terms of the Shannon entropy and the Kullback–Leibler distance (or divergence), given byrespectively. These are simply related: for the flat prior (8) we haveIn general, computing from (13) and, again assuming , we obtainfrom which Stirling’s formula (or the technique in [Section 2.1] in [31]) giveswhere is any sequence of probability distributions on A that (weakly) converges to , i.e., the variable in . For the flat prior (8), Equation (20) yieldsAs an aside, note that the Kullback–Leibler distance or relative entropy (19) is defined more generally for probability measures and p on some measure space . As usual, we write iff is absolutely continuous with respect to p, i.e., implies for . In that case, the Radon–Nikodym derivative exists, and one hasIf is not absolutely continuous with respect to p, one puts . The nature of the empirical measure (15) and the Kullback–Leibler distance (19) comes out well in hypothesis testing. In order to test the hypothesis that by an N-fold trial , one accepts iff , for some . This test is optimal in the sense of Hoeffding [Section 3.5] in [31]. But let us return to the main story.The stochastic process whose large fluctuations are described by (22) isThen almost surely, and large fluctuations around this value are described bywhere is open, or more generally, is such that . Less precisely,which implies that if , whereas is exponentially damped if . Note that the rate function defined in (19) and (26) is convex and positive, whereas the entropy (22) is concave and negative. Thus the former is to be minimized, its infimum (even minimum) over being zero at , whereas the latter is to be maximized, its supremum (even maximum) at the same value being zero. The first term in (23) hides the negativity of the Boltzmann entropy (here for a flat prior), but the second term drives it below zero. Positivity of follows from (or actually is) the Gibbs inequality. Equation (26) is a special case of Sanov’s theorem, which works for arbitrary Polish spaces (instead of our finite set A); see [8,31]. Einstein [7] computes the probability of large fluctuations of the energy, rather than of the empirical measure, as Boltzmann did in 1877 [6], but these are closely related.Interpreting A as a set of energy levels, the relevant stochastic process still has and P as in (25), but this time is the average energy, defined byThis makes the relevant entropy (which is the original entropy from Clausius-style thermodynamics!) a function of , interpreted as energy: instead of (26), one obtainswhich “maximal entropy principle” is a special case of Cramér’s theorem [8,31]. If lies in , then . If not, this probability is exponentially small in N. To obtain the classical thermodynamics of non-interacting particles [32], one may add that the free energyis essentially the Fenchel transform [34] of the entropy , in thatFor , the first equality is a refined version of “”.

- In information theory as developed by Shannon [35] (see also [36,37,38]) the “N” in our diagram is the number of letters drawn from an alphabet A by sampling a given probability distribution , the space of all probability distributions on A. So each microstate is a word with N letters. The entropy of p, i.e.,plays a key role in Shannon’s approach. It is the expectation value of the functioninterpreted as the information contained in , relative to p. This interpretation is evident for the flat distribution on an alphabet with letters, in which case for each , which is the minimal number of bits needed to (losslessly) encode a. The general case is covered by the noiseless coding theorem for prefix (or uniquely decodable) codes. A map is a prefix code if it is injective and is never a prefix of for any , that is, there is no such that . A prefix code is uniquely decodable. Let be a prefix code, let be the length of the codeword , with expectationAn optimal code minimizes this. Then:

- Any prefix code satisfies ;

- There exists an optimal prefix code C, which satisfies .

- One has iff for each (if this is possible).

Of course, the equality can only be satisfied if for some integer . Otherwise, one can find a code for which , the smallest integer . See e.g., [Section 5.4] in [36].Thus the information content is approximately the length of the code-word in some optimal coding C. Passing to our case of interest of N-letter words over A, in case of a memoryless source one simply has the Bernoulli measure on , with entropyExtending the letter-code to a word-code by concatenation, i.e., , and replacing , which diverges as , by the average codeword length per symbol , an optimal code C satisfiesIn what follows, the Asymptotic Equipartition Property or AEP will be important. In its (probabilistically) weak form, which is typically used in information theory, this states thatIts strong form, which is the (original) Shannon–McMillan–Breiman theorem, readsEither way, the idea is that for large N, with respect to “most” strings have “almost” the same probability , whilst the others are negligible [lecture 2] in [30]. For with flat prior this yields a tautology: all strings have . See e.g., [Sections 3.1 and 16.8] in [36]. The strong form follows from ergodic theory, cf. (53). - In dynamical systems along the lines of the ubiquitous Kolmogorov [39], one starts with a triple , where X–more precisely , but I usually suppress the -algebra –is a measure space, P is a probability measure on X (more precisely, on ), and is a measurable (but not necessarily invertible) map, required to preserve P in the sense that for any . A measurable coarse-graining (5) defines a mapin terms of which the given triple is coarse-grained by a new triple . Here is the induced probability on , whilst S is the (unilateral) shiftA fine-grained path is coarse-grained to , and truncating the latter at gives . Hence the configuration in Figure 1 states that our particle starts from at , moves to at , etc., and at time finds itself at . In other words, a coarse-grained path tells us exactly that , for (). Note that the shift satisfiesso if were invertible, then nothing would be lost in coarse-graining; using the bilateral shift on instead of the unilateral one in the main text, this is the case for example with the Baker’s map on with and partition , .The point of Kolmogorov’s approach is to refine the partition (5), which I now denote byto a finer partition of X, which consists of all non-empty subsetsIndeed, if we know x, then we know both the (truncated) fine- and coarse-grained pathsBut if we just know that , we cannot construct even the coarse-grained path . To do so, we must know that , for some (provided ). In other words, the unique element of the partition that contains x, bijectively corresponds to a coarse-grained path , and hence we may take to be the probability of the coarse-grained path . This suggests an information functioncf. (34), and, as in (33), an average (=expected) information or entropy functionAs , this (extensive) entropy has an (intensive) limitin terms of which the Kolmogorov–Sinai entropy of our system is defined bywhere the supremum is taken over all finite measurable partitions of X, as above.We say that is ergodic if for every T-invariant set (i.e., ), either or . For later use, I now state three equivalent conditions for ergodicity of ; see e.g., [Section 4.1] in [40]. Namely, is ergodic if and only if for P-almost every x:The empirical measure (15) is a special case of (50). Equation (51) is a special case of Birkhoff’s ergodic theorem; equation (51) is Birkhoff’s theorem assuming ergodicity. In general, the l.h.s. is in and is not constant P-a.e. Each of these is a corollary of the others, e.g., (50) and (51) are basically the same statement, and one obtains (52) from (51) by taking . Note that the apparent logical form of (51) is: ‘for all f and all x’, which suggests that the universal quantifiers can be interchanged, but this is false: the actual logical for is: ‘for all f there exists a set of P-measure zero’, which in general cannot be interchanged (indeed, in standard proofs the measure-zero set explicitly depends on f). Nonetheless, in some cases a measure zero set independent of f can be found, e.g., for compact metric spaces and continuous f, cf. [Theorem 3.2.6] in [40]. Similar f-independence will be true for the computable case reviewed below, which speaks in their favour. Likewise for (52). Equation (51) implies the general Shannon–McMillan–Breiman theorem, which in turn implies the previous one (39) for information theory by taking . Namely:Theorem 1.If is ergodic, then for P-almost every one hasSee e.g., [Theorem 9.3.1] in [40]. Comparing this with (48) and (47), the average value of the information w.r.t. P can be computed from its value at a single point x, as long as this point is “typical”. As in the explanation of the original theorem in information theory, equation (53) implies that all typical paths (with respect to P) have about the same probability .See [41] for Kolmogorov’s contributions to dynamical systems and ergodic theory. Relevant textbooks include for example [40,42].

3. P-Randomness

The concept of P-randomness (where P is a probability measure on some measure space ) was introduced by Martin-Löf in 1966 [43] for the case and , i.e., the unbiased Bernoulli measure on the space of infinite coin flips. Following [Section 3] in [44], a more general definition of P-randomness which is elegant and appropriate to my goals is as follows:

Definition 1.

- 1.

- A topological space X is effective if it has a countable base with a bijectionAn effective probability space is an effective topological space X with a Borel probability measure P, i.e., defined on the open sets .

- 2.

- An open set as in 1. is computable if for some computable function ,Here f may be assumed to be total without loss of generality. In other words,for some c.e. set (where c.e. means computably enumerable, i.e., is the image of a total computable function ).

- 3.

- A sequence of opens is computable iffor some (total) computable function ; that is,for some c.e. . Without loss of generality we may and will assume that the (double) sequence is computable.

- 4.

- A (randomness) test is a computable sequence as in 3. for which for all one hasOne may (and will) also assume without loss of generality that for all n we have

- 5.

- A point is P-random if for any subset of the formwhere is some test (since , such an N is called an effective null set).

- 6.

- A measure P in an effective probability space is upper semi-computable if the setis c.e. (assuming to some computable isomorphisms and ). Also, P is lower semi-computable if the set , defined like (62) with instead of , is c.e. Finally, P is computable if it is upper and lower semi-computable, in which case is called a computable probability space (and similarly for upper and lower computability).

Note that parts 1 to 5 do not impose any computability requirement on P, but even so it easily follows that , where is the set of all P-random points in X. However, if P is upper semi-computable, one has a generalization of a further central result of Martin-Löf [43] (p. 605).

Definition 2.

A universal test is a test such that for any test there is a constant such that for each we have .

Universal tests exist provided P is upper semi-computable, which in turn implies that is P-random iff . See [Theorem 3.10] in [44]. Compared with computable metric spaces [45], which for all purposes of this paper could have been used, too, Hertling and Weihrauch [44], whom I follow, avoid the choice of a countable dense subset of X. The latter is unnatural already in the case , where has to be injected into via a map like for some fixed (where repeats a infinitely often). On the other hand, the map , , where , is quite natural (here is the topology of X). If P is computable as defined in clause 6, P is a computable point in the effective space of all probability measures on X [46,47,48], where a point x in an effective topological space is deemed computable if for some computable sequence .

A key example is over a finite alphabet A, with topology generated by the cylinder sets , where and . The usual lexicographical order on then gives a bijection , and hence a numbering

The Bernoulli measures on then have the same computability properties as . In particular, the flat prior f makes computable, and in case that , the computability properties of are transferred to . This is all we need for my main theme.

In case of a flat prior f on A, the above notion of randomness of sequences in is equivalent to the definition of Martin-Löf [43], which I will now review, following Calude [49]. Though equivalent to the definition of Martin-Löf random sequences in books like [22,50,51], the construction in [49] is actually closer in spirit to Martin-Löf [43] and has the advantage of being compatible with Earman’s principle (see below) even before redefining randomness in terms of Kolmogorov complexity. The definition in the other three books just cited lacks this feature.

Calude’s definition is based on first defining random strings . Since has the discrete topology, we simply take to consist of all singletons , , with as in (63). Since unlike or the set does not carry a useful probability measure P, we replace (59) by

Definition 3.

A sequential test is a computable sequence of subsets such that:

- 1.

- The inequality (64) holds;

- 2.

- (as in Definition 1 (4));

- 3.

- and imply (i.e., extensions of also belong to ).

Since (64) is the same as

via Equation (65), Definition (3) implies that for all . A simple example of a sequential test for is , i.e., the set of all strings starting with n copies of 1. There exists a universal sequential test such that for any sequential test there is a such that for each we have . See [Theorem 6.16 and Definition 6.17] in [49]. For this (or indeed any) test U we define if , and otherwise

By the comment after (65) we have , since for some N. If for some , then by definition. Since , this implies for all , so that also . But as we have just seen, we may restrict these values to .

Definition 4.

- 1.

- A string is q-random (for some ) if .

- 2.

- A sequence is Calude random (with respect to ) if there is a constant such that each finite segment is q-random, i.e., such that for all N,

Note that the lower q is, the higher the randomness of , as it lies in fewer sets . It is easy to show that Calude randomness is equivalent to any of the following three conditions (the third of these is taken as the definition of randomness in [Theorem 6.16 and Definition 6.25] in [49]:)

Theorem 2.

A string is -random (cf. Definition 1 (5)) iff it is Calude random.

This follows from [Theorem 6.35] in [49] and Theorem 3 below. The point is that randomness of sequences can be expressed in terms of randomness of finite initial segments of s. This is also true via another (much better known) reformulation of Martin-Löf randomness.

Definition 5.

A sequence is Chaitin–Levin–Schnorr random if there is a constant such that each finite segment is prefix Kolmogorov c-random, in the sense that for all N,

Here is the prefix Kolmogorov complexity of with respect to a fixed universal prefix Turing machine; changing this machine only changes the constant c in the same way for all strings (which makes the value of c somewhat arbitrary). Recall that of is defined as the length of the shortest program that outputs and then halts, running on a universal prefix Turing machine T (i.e., the domain of T consists of a prefix subset of , so if then whenever ). Fix some universal prefix Turing machine T, and define

Then is c-prefix Kolmogorov random, for some -independent , if

Note that Calude [49] writes for what, following [22] and others, I call .

A key result in algorithmic randomness [Theorem 6.2.3] in [51], then, is:

Theorem 3.

A sequence is -random iff it is Chaitin–Levin–Schnorr random.

According to [p. 3308, footnote 1] in [52] (with references adapted):

Theorem 3 was announced by Chaitin [53] and attributed to Schnorr (who was the referee of the paper) without proof. The first published proof (in a form generalized to arbitrary computable measures) appeared in the work of Gács [54].

Since Levin [55] also states Theorem 3, the names Chaitin–Levin–Schnorr seem fair.

Hence both Definitions 4 and 5 are compatible on their own terms with Earman’s Principle:

While idealizations are useful and, perhaps, even essential to progress in physics, a sound principle of interpretation would seem to be that no effect can be counted as a genuine physical effect if it disappears when the idealizations are removed. (Earman, [56] (p. 191)).

By Theorem 2, Definition 4 is a special case of Definition 1 (5) and hence it depends on the initial probability on . On the other hand, both (65) and the equivalence between Definitions 3 and 4 suggest that -randomness does not depend on ! To assess this, let us look at a version of Theorem 3 for arbitrary computable measures P on [54,55].

Theorem 4.

Let P be a computable probability measure on . Then is P-random iff there is a constant such that for all N,

If , then , and so (73) reduces to (70). Thus the absence of a P-dependence in Definition 5 is only apparent, since it implicitly depends on the assumption . It seems, then, that Kolmogorov did not achieve his goal of defining randomness in a non-probabilistic way! Indeed, note also that the definition of depends on the hidden assumption that the length function on assigns equal length to 0 and 1 (Chris Porter, email 13 June 2023).

Another interesting example is Brownian motion, which is related to binary sequences via the random walk [12,57]. Brownian motion may be defined as a Gaussian stochastic process in with variance t and covariance . We also assume that . An equivalent axiomatization states that for each n-tuple with the increments , …, are independent, that for each t one has

and that is continuous with probability one. If we add that , these axioms imply

We switch from to . Take , seen as a Banach space in the supremum norm and hence as a metric space (i.e., ) with ensuing Borel structure. For each , define a map

and is defined at all other points of via linear interpolation (i.e., by drawing straight lines between and for each ; I omit the formula). Thus is a random walk with N steps in which each time jump is compressed from unit duration to (so that the time span becomes ), and each spatial step size is compressed from to . Now equip with the fair Bernoulli probability measure . Then induces a probability measure on in the usual way, i.e.,

for measurable . The point, then, is that there is a unique probability measure on , called Wiener measure, such that weakly as . The concept of weak convergence of probability measures on (complete separable) metric spaces X used here is defined as follows: a sequence of probability measures on X converges weakly to P iff

for each . This is equivalent to for each measurable for which . See [58] for both the general theory and its application to Brownian motion, which may now be realized on as , where the evaluation maps are defined by

In fact, the set of all paths of the kind , , is uniformly dense in , the set of all that vanish at (on which is supported). Namely, for and , recursively define

until . In terms of these, define by and then, again recursively until ,

Then as , but is just , cf. [Section 6.4.1] in [12] This enables us to turn (with suppressed Borel structure given by the metric) into an effective probability space. In Definition 1 (1), we take the countable base to consist of all open balls with rational radii around points , where and , numbered via lexicographical ordering of and computable ismorphisms and . The following theorem [59] then characterizes the ensuing notion of -randomness. See also [52,60,61].

Theorem 5.

A path is -random iff (w.r.t. ) effectively for some sequence in for which there is a constant such that for all N,

Here effective convergence means that

where is computable (so plays the role of ).

Compare with Definition 5. It might be preferable if there were a single sequence for which , cf. (70), but unfortunately this is not the case [60,61]. Nonetheless, Theorem 5 is satisfactory from the point of view of Earman’s principle above, in that randomness of a Brownian path is characterized by randomness properties of its finite approximants ; indeed, each is c-Kolmogorov random, even for the same value of c for all N.

4. From ‘for P-Almost Every x’ to ‘for All P-Random x’

Although results of the kind reviewed here pervade the literature on algorithmic randomness (and, as remarked in the Introduction, might be said to be a key goal of this theory), their importance for physics still remains to be explored. The idea is best illustrated by the following example, which was the first of its kind. For binary sequences in equipped with the flat Bernoulli measure , see (8) etc., the strong law of large numbers (cf. [Section 2.1.2] in [12]) states that

for -almost every (or: -almost surely). Recall that this means that there exists a measurable subset with such that (85) holds for each (equivalently: there exists with such that (85) holds for each ). Theorems like this provide no information about A (or B). Martin-Löf randomness (cf. Definition 1) provides this information (usually at the cost of additional computability assumptions), where I recall that, as explained more generally after Definition 1, the set of all P-random elements in X has . In the case at hand, the computability assumption behind this result is satisfied since we use a computable flat prior under which is a computable probability space in the sense of Definition 1.

Theorem 6.

The strong law of large numbers (85) holds for all -random sequences .

See [43] (p. 619), and in detail [Theorem 6.57] in [49]. The law of the iterated logarithm [Section 2.3] in [12] also holds in this sense [62]. More generally, the classical theorem stating that -almost all sequences are Borel normal can be sharpened to the statement that all -random sequences are Borel normal. See [Theorem 6.61 ] in [49] (a sequence is called Borel normal if each string occurs in s with the asymptotic relative frequency given by ). The most spectacular result in this direction is arguably [Theorem 6.50] in [49]:

Theorem 7.

Any occurs infinitely often in every -random sequence .

The original version of this “Monkey typewriter theorem” states that any occurs infinitely often in -almost all sequences . But I wonder if this theorem matches Earman’s principle; I see no interesting and valid version for finite strings (but put this as a challenge to the reader).

The proof of all such theorems, including those to be mentioned below, is by contradiction: x not having the property in question, e.g., (85), would make x fail some randomness test.

Interesting examples also come from analysis. The pertinent computable probability space is , where is Lebesgue measure, and for the basic opens in Definition 1 one takes open intervals with rational endpoints, suitably numbered (here I suppress the usual Borel -algebra B on , which is generated by the standard topology). Alternatively, the map

induces an isomorphism of probability spaces , though not a bijection of sets , since the dyadic numbers (i.e., for and ) have no unique binary expansions (the potential non-uniqueness of binary expansions is irrelevant for the purposes of this section, since dyadic numbers are not random). Although (86) is not a homeomorphism, it nonetheless maps the usual -algebra of measurable subsets of to its counterpart for . By [Corollary 5.2] in [44] we then have:

Theorem 8.

Let . Then is λ-random iff is -random.

This matches Theorem 5 in reducing a seemingly different setting for randomness to the case of binary sequences. See [48] for a general perspective on this phenomenon.

Further to Definition 1 above, taken from [44], in what follows I also adopt [Definition 4.2] in [44] as a definition (or characterizing) of computability (which in this approach presupposes continuity):

Definition 6.

if and are effective topological spaces, then is computable iff for each the inverse image is open and computable.

One of the clearest theorems relating analysis to randomness in the spirit of our theme is the following [Theorem 6.7] in [63] (see also [Section 4] in [64]), which sharpens a classical result to the effect that any function of bounded variation is almost everywhere differentiable. First, recall that f has bounded variation if there is a constant such that for any finite collection of points one has . By the Jordan decomposition theorem, this turns out to be the case iff where g and h are non-decreasing.

Theorem 9.

If is computable and has bounded variation, then f is differentiable at any λ-random . Moreover, is λ-random iff exists for every such f.

Theorems like this give us even more than we asked for (which was the mere ability to replace ‘for P-almost every x’ by ‘for all P-random x’): they characterize random points in terms of a certain property that all members of a specific class of computable functions have. There is a similar result in which bounded variation is replaced by absolute continuity. Theorem 9 also has a counterpart in which f is non-decreasing, but here the conclusion is that f is computably random instead of Martin-Löf random (see [65] for computable randomess, originally defined by Schnorr via martingales, which is weaker than Martin-Löf randomness, i.e., Martin-Löf randomness implies computable randomness). A similar classical theorem returns Schnorr randomness (where is Schnorr random if in Definition 1 (4) we replace (59) by ; this gives fewer tests to pass, and hence, once again, a weaker sense of randomness than Martin-Löf randomness), see [48,63]:

Theorem 10.

If is computable, then exists for all λ-random , and the above limit exists for each computable iff is Schnorr random.

Now for ergodic theory. Recall the equivalent characterizations of ergodicity stated in equations (50) to (52). We then have (cf. [Theorem 8] in [66], [Theorem 3.2.2] in [67], [Theorem 1.3] in [68]):

Theorem 11.

The first author to prove such results was V’yugin [69]. See also the reviews [70,71]. In Theorem 11 one could replace (87) with the property that x satisfy (Poincaré) recurrence, in the sense that for each computable open (not necessarily containing x) there is some such that . If (51) instead of (50) is used, a result like Theorem 11 obtains that characterizes Schnorr randomness. The Shannon–McMillan–Breiman theorem (53) also falls under this scope. We say that a partition of X is computable if each is a computable open set. The defining equation (5) is then replaced by .

Theorem 12.

If P and T are computable and T is ergodic, and also the partition π of X is computable, then for every P-random one has

See [Corollary 6.1.1] in [67]; note the lim sup here.

Things become more interesting if we replace the information function by the (prefix) Kolmogorov complexity . Recall the map (40), which we may truncate to maps

so that (for ) identifies the subspace of the partition our particle occupies after n time steps. Under the same assumptions as Theorem 12, we then have:

for all P-random (and hence for P-almost every ), cf. (48). See [67,72,73,74]. Taking the supremum over all computable partitions , the Kolmogorov–Sinai entropy of equals the limiting Kolmogorov complexity of any increasingly fine coarse-grained P-random path for . Note that the right-hand side of (90) is independent of P, which the left-hand side is not; however, the condition for the validity of (90), namely that x be P-random, depends on P. The equality

for all P-random also illustrates our theme; it shows that (at least asymptotically) each P-random x generates a course-grained path that has “average” Kolmogorov complexity. Applying these results to , with T the unilateral shift and the Bernoulli measure on given by a probability distribution p on some alphabet A, gives a similar expression for the Shannon entropy (18): for all -random (and hence for -almost every ),

Note the lim instead of the , which is apparently justified in this special case. Porter [75] states my equation (92) as his Theorem 3.2 and labels it “folklore”, referencing however [55,76]. See also [28,77] for further connections between entropy and algorithmic complexity.

Finally, here are some nice examples involving Brownian motion. Three classical results are:

Theorem 13.

- 1.

- For -almost every there exists such thatfor all and all , and is the best constant for which this is true.

- 2.

- -almost every is locally Hölder continuous with index .

- 3.

- -almost every is not differentiable at any .

See e.g., [Sections 1.2 and 1.3] in [57]. A path is locally Hölder continuous with index if there is such that if , then for some . This implies the same property for any . The value is optimal: -almost every fails to be locally Hölder continuous with index [Remark 1.21] in [57]. Concerning the critical value , the best one can say is that -almost every B satisfies (ibid. [Theorem 10.30] in [57].

Theorem 14.

Theorem 13 holds verbatim (even without any computability assumption on !) if ‘for -almost every ’ is replaced by ‘for every -random ’.

For continuity see [Section 3] in [78] and [Section 2.3] in [79]. For non-differentiability see [Theorem 7] in [78]. See also [80].

5. Applications to Statistical Mechanics

It is the author’s view that many of the most important questions still remain unanswered in very fundamental and important ways. (Sklar [2] (p. 413)).

What many “chefs” regard as absolutely essential and indispensable, is argued to be insufficient or superfluous by many others. (Uffink [3] (p. 925)).

The theme of the previous section is the mathematical key to a physical understanding of the notorious phenomenon of irreversibility, for the moment in classical statistical mechanics. The literature on this topic is enormous; I recommend [2,3,4,29]. My discussion is based on the pioneering work of Hiura and Sasa [81]. But before getting there, I would like to very briefly review Boltzmann’s take on the general problem of irreversibility (which in my view is correct). In Boltzmann’s approach, irreversibility of macroscopic phenomena in a microscopic world governed by Newton’s (time) reversible equations is a consequence of:

- 1.

- Coarse-graining (only certain macroscopic quantities behave irreversibly);

- 2.

- Probability (irreversible behaviour is just very likely–or, in infinite systems, almost sure).

Boltzmann launched two different scenarios to make this work, both extremely influential. First, in 1872 [82] the coarse-graining of an N-particle system moving in some volume was done by introducing a time-dependent macroscopic distribution function , which for each time t is defined on the single-particle phase space , and which is a probability density in the sense that is the “average” number of particles inside a region at time t (the normalization is ). Boltzmann argued that under various assumptions, notably his Stosszahlansatz (“molecular chaos”), which assumes probabilistic independence of two particles before they collide, as well as the absence of collisions between three or more particles, and finally some form of smoothness, solves the Boltzmann equation

whose right-hand side is a quadratic integral expression in f taking the effect of two-body-collisions (or other two-particle interactions) into account. He then showed that the “entropy”

satisfies whenever solves (94), and saw this as a proof of irreversibility.

Historically, there were two immediate objections to this result (see also the references above). First, there is some tension between this irreversibility and the reversibility of Newton’s equations satisfied by the microscopic variables on which is based (Loschmidt’s Umkehreinwand). Second, in a finite system any N-particle configuration eventually returns to a configuration arbitrarily close to its initial value (Zermelo’s Wiederkehreinwand). A general form of this phenomenon of Poincaré recurrence (e.g., Viana and Oliveira, 2016, Theorem 1.2.1) states that if is a dynamical system, where P is T-invariant and is just assumed to be measurable, and has positive measure, then for P-almost every there exists infinitely many for which . These problems made Boltzmann’s conclusions look dubious, perhaps even circular (irreversibility having been put in by hand via the assumptions leading to the Boltzmann equation). Despite the famous later work by Lanford [83,84] on the derivation of the Boltzmann equation for short times, these issues remain controversial [85]. But I see a promising way forward, as follows [86,87,88,89]. From the point of view of Boltzmann [6], as rephrased in Section 2 (first bullet), the distribution function is just the empirical measure (15) for an N-particle system with (hence A is uncountably infinite, but nonetheless the measure-theoretic situation remains unproblematic). Each N-particle configuration

at time t determines a probability measure on A via

Physicists prefer densities (with respect to Lebesque measure ) and Dirac -functions, writing

where is the space of probability distributions on A. The connection is [Section 1.3] in [87]

The hope, then, is that has a limit as that has some smoothness and satisfies the Boltzmann equation. To accomplish this, the idea is to turn into a stochastic process taking values in or in , based on a probability space , indexed by , and study the limit . More precisely, in a dilute gas (for which the Boltzmann equation was designed in the first place) one has , where a is the atom size (or some other length scale), is the particle density, and ℓ is the mean free path (between collisions). Defining , the limit “” is the Boltzmann–Grad limit and at constant .

The probability measure on should, via “propagation of chaos”, implement the Stosszahlansatz. The simplest way to do this [83] is to take some initial value for the envisaged solution of the Boltzmann equation. This induces a probability measure on A, which in turn yields the corresponding Bernoulli measure on . This construction may be generalized by taking some and averaging Bernoulli measures with respect to , as in de Finetti’s theorem [90]. Alternatively, take permutation-invariant probability measures on for which the empirical measures on A converge (in law) to some as [87]. By [Prop. 2.2] in [91] this is equivalent to the factorization property, for all ,

Either way, one’s hope is that -almost surely the random variables have a smooth limit as , which limit satisfies the Boltzmann equation, so that the macroscopic time-evolution is induced by the microscopic time-evolution at least for the -a.e. for which exists, where x is some configuration of infinitely many particles in , including their velocities, cf. (96)–(100). This would even derive the Boltzmann equation.

Using large deviation theory, Bouchet [88] showed all this assuming the Stosszahlansatz (albeit at a mathematically heuristic level). This is impressive, but the argument would only be complete if one could prove that, in the spirit of the previous section, exists for all -random , preferably by showing first that the Stosszahlansatz and the other assumptions used in the derivation of the Boltzmann equation (such as the absence of multiple-particle collisions) hold for all -random x. In particular, this would make it clear that the Stosszahlansatz is really a randomness assumption. Earman’s prinicple applies: Bouchet [88] showed that for finite N, the Boltzmann equation holds approximately for a set of initial conditions with high probability . The resolution of the Umkehreinwand is then standard, see e.g., [Chapter 8] in [29]. Similarly, the Wiederkehreinwand is countered by noting that in an infinite system the recurrence time is infinite, whilst in a large system it is astronomically large (beyond the age of the universe).

While its realization for the Boltzmann equation may still be remote (for mathematical rather than conceptual reasons or matters of principle), this scenario can be demonstrated in the Kac ring model [92] (see also [81,93,94]), which is a caricature of the Boltzmann equation. Namely:

- The microstates of the Kac ring model for finite N are pairswith . Here is seen as a spin that can be “up” () or “down” (), whereas denotes the presence () or absence () of a scatterer, located between and . These replace the variables for the Boltzmann equation. In the thermodynamic limit we then have

- The microdynamics replacing the time evolution generated by Newton’s equations with some potential, is now discretized, and is given by mapswhere , with periodic boundary conditions, i.e.,The same formulae define the thermodynamic limit . The idea is that in one time step the spin moves one place to the right and flips iff it hits a scatterer ().

- The macrodynamics, which replaces the solution of the Boltzmann equation, is given byIn particular, for one hasand hence every initial state with reaches the “equilibrium” state , as

I now state Theorem 3.5 in [81] due to Hiura and Sasa, which sharpens earlier results by Kac [92] in replacing a ‘for P-almost every x’ result by a ‘for all P-random x’ result that provides much more precise information on randomness. First, recall that if is -random, then

Theorem 15.

It follows that the “Boltzmann equation” (110) holds, and that the macrodynamics is autonomous: the dynamics of the macrostates does not explicitly depend on the underlying microstates.

Theorem 15 uses biased Martin-Löf randomness on and hence defines “typicality” out of equilibrium. As we have seen, equilibrium corresponds to (for arbitrary ), for which the corresponding -random states are arguably the most random ones: for it follows from (73) that if s is -random for some and is -random, then

so that the approach to equilibrium increases (algorithmic) randomness, as expected. Similarly [81,93], the fine-grained (microscopic) entropy of some is defined by

For example, as in (36), the Bernoulli measure has fine-grained entropy

on which (92) gives an algorithmic perspective: for all -random microstates we have

On the other hand, the coarse-grained (macroscopic) entropy (22) for the flat prior on A and the probability on defined by etc. is given by

cf. (23). Despite the similarity of (118) and (120), we should keep them apart. Irreversibility of the macroscopic dynamics does not contradict reversibility of the microscopic dynamics, even though the fine-grained and coarse-grained entropies practically coincide here. In this case, is given by (107) with (where ⇝ stands for ‘is replaced by’) and , so that the spin now moves to the left. Defining time reversal by

so that as appropriate, one even has . But the real point is that if is -random, then “typically”, is not. In fact, the entire (neo) Boltzmannian program can be carried out in this model [29,81,93]. In particular, the coarse-grained entropy (118) is invariant under the microscopic time evolution T, whereas the fine-grained entropy (120) increases along solutions of the “Boltzmann equation” (110).

6. Applications to Quantum Mechanics

There is yet another interpretation of the diagram at the beginning of Section 2: in quantum mechanics a string denotes the outcome of a run of N repeated measurements of the same observable with finite spectrum A in the same quantum state, so that the possible outcomes are distributed according to the Born rule: if H is the Hilbert space pertinent to the experiment, is the observable that is being measured, with spectrum and spectral projections onto the eigenspace for eigenvalue a, and is the density operator describing the quantum state, then . It can be shown that if we consider the run as a single experiment, the probability of outcome is , as in a classical Bernoulli trial. This extends to the idealized case of an infinitely repeated experiment, described by the probability measure on [95,96]. In particular, for a “fair quantum toss” (in which with ), it follows that the outcome sequences sample the probability space , just as in the classical case.

For quantum mechanics itself, this implies that -almost every outcome sequence is -random. The theme of Section 4 then leads to the circular conclusion that all -random outcome sequences are -random. Nonetheless, this circularity comes back with a vengeance if we turn to hidden variable theories, notably Bohmian mechanics [97]. Let me first summarize my original argument [96,98], and then reply to a potential counterargument.

In hidden variable theories there is a space of hidden variables, and if the theory has the right to call itself “deterministic”, then there must be functions and such that

The existence of g expresses the idea that the value of determines the outcome of the experiment. The function g tacitly incorporates all details of the experiment that may affect its outcome, except the hidden variable (which is the argument of g). Such details may include the setting, a possible context, and the quantum state. The existence of g therefore does not contradict the Kochen–Specker theorem (which excludes context-dependence). But g is just one ingredient that makes a hidden variable theory deterministic. The other is the function h that gives the value of in experiment number n in a long run, for each n. Furthermore, in any hidden variable theory the probability of the outcome of some measurement if the hidden variable λ is unknown is given by averaging the determined outcomes given by g with respect to some probability measure on defined by the quantum state supposed to describe the experiment within quantum mechanics.

Theorem 16.

The functions g and h cannot both be provided by any deterministic theory (and hence deterministic hidden variable theories that exactly reproduce the Born rule cannot exist).

Proof.

The Born rule is needed to prove that outcome sequences are -distributed [Theorem 3.4.1] in [96]. If g and h were explicitly given by some deterministic theory T, then the sequence s would be described explicitly via (122). By (what I call) Chaitin’s second incompleteness theorem, the sequence s cannot then be -random. □

The theorem used here states that if is -random, then ZFC (or any sufficiently comprehensive mathematical theory T meant in the proof of Theorem 16) can compute only finite many digits of s. See e.g., [Theorem 8.7] in [49], which is stated for Chaitin’s famous random number but whose proof holds for any -random sequence. Consistent with Earman’s principle, Theorem 16 does not rely on the idealization of infinitely long runs of measurements, since for finite runs Chaitin’s (first) incompleteness theorem leads to a similar contradiction. The latter theorem states that for any sound mathematical theory T containing enough arithmetic there is a constant such that T cannot prove any sentence of the form although infinitely many such sentences are true. In other words, T can only prove randomness of finitely many strings, although infinitely many strings are in fact random. See e.g., [Theorem 8.4] in [49].

The upshot is that a deterministic theory cannot produce random sequences. Against this, fans of deterministic hidden variable theories could argue that the (unilateral) Bernoulli shift S on (equipped with for simplicity) is deterministic and yet is able to produce random sequences. Indeed, following a suggestion by Jos Uffink (who is not even a Bohmian!), this can be done as follows (readers familiar with [99] will notice that the following scenario would actually be optimal for these authors!). With , and the simplest experiment for which is the identity (so that the measurement just reveals the actual value of the pertinent hidden variable), take an initial condition , and define by

Then . In other words, imagine that experiment number takes place at time , at which time the hidden variable takes the value . The measurement run then just reads the tape . Trivially, if the initial condition is -random, then so is the outcome sequence s.

According to the key Bohmian doctrine [99], the randomness of outcomes in the deterministic world envisaged in Bohmian mechanics originates in the random initial condition of universe, which is postulated to be in “quantum equilibrium”. In the above toy example, the configuration space (which in Bohmian mechanics is ) is replaced and idealized by , i.e., the role of the position variable is now played by ; the dynamics (replacing the Schrödinger equation) is the shit map S; and the “quantum equilibrium condition” (which is nothing but the Born rule) then postulates that its initial value is distributed according to the Born rule, which here is the fair Bernoulli measure . The Bohmian explanation of randomness then comes down to the claim that despite the determinism inherent in the dynamics S as well as in the measurement theory g:

Each experimental outcome s(n) is random because the hidden variable λ is randomly distributed.

Since s′ = s, this simply says that s is random because s is random.

Even in less simplistic scenarios, using the language of computation theory (taking computability as a metaphor for determinism) we may say: deterministic hidden variable theories need a random oracle to reproduce the randomness required for quantum mechanics. This defeats their determinism.

7. Summary

This paper was motivated by a number of (closely related) questions, including these:

- 1.

- Is it probability or randomness that “comes first”? How are these concepts related?

- 2.

- Could the notion of “typicality” as it is used in Boltzmann-style statistical mechanics [29] be replaced by some precise mathematical form of randomness?

- 3.

- Are “typical” trajectories in “chaotic” dynamical systems (i.e., those with high Kolmogorov–Sinai entropy) random in the same, or some similar sense?

Here, “typical” means “extremely probable”, which may be idealized to “occurring almost surely”.

My attempts to address these questions are guided by what I call Earman’s principle, stated after Theorem 3 in Section 3, which regulates the connection between actual and idealized physical theories. On this score, P-randomness (see Section 3) does quite well, cf. Theorems 2 and 3, although I have some misgivings about the physical relevance of its mathematical origins in the theory of computation, which for physical applications should be replaced by some abstract logical form of determinism.

Various mathematical examples provide situations where some property that holds for P-almost every (where P is some probability measure on X) in fact holds for all P-random , at least under some further computability assumptions, see Section 4. The main result in Section 5, i.e., Theorem 15 due to Hiura and Sasa [81], as well as the much better known results about the relationship between entropy, dynamical systems, and P-randomness reviewed Section 2 and Section 4, notably Theorem 1 and Equation (90), provide positive answers to questions 2 and 3. This, in turn, paves the way for an explanation of emergent phenomena like irreversibility and chaos, and suggests that the answer to question 1 is that at least the computational concept of P-randomness requires a prior probability P.

Funding

This research received no external funding.

Data Availability Statement

There are no relevant data.

Acknowledgments

This paper originated in a talk at the “Kolmogorov Workshop” in Geneva, 16 February 2023. I thank Alexei Grinbaum for the invitation, as well as various participants for questions. My presentation of Martin-Löf randomness benefited from discussions with Cristian Calude. I also thank Chris Porter and Sylvia Wenmackers for helpful correspondence. The recent volume Algorithmic Randomness: Progress and Prospects, eds. J.Y. Franklin and C.P. Porter (Cambridge University Press, 2020) [100], greatly faciliated this work.

Conflicts of Interest

The author declares no conflict of interest.

References

- Brush, S.G. The Kind of Motion We Call Heat; North-Holland: Amsterdam, The Netherlands, 1976. [Google Scholar]

- Sklar, L. Physics and Chance: Philosophical Issues in the Foundations of Statistical Mechanics; Cambridge University Press: Cambridge, UK, 1993. [Google Scholar]

- Uffink, J. Compendium of the foundations of classical statistical physics. In Handbook of the Philosophy of Science; Butterfield, J., Earman, J., Eds.; North-Holland: Amsterdam, The Netherlands, 2007; Volume 2: Philosophy of Physics; Part B; pp. 923–1074. [Google Scholar]

- Uffink, J. Boltzmann’s Work in Statistical Physics. The Stanford Encyclopedia of Philosophy; Summer 2022 Edition; Zalta, E.N., Ed.; Stanford University: Stanford, CA, USA, 2022; Available online: https://plato.stanford.edu/archives/sum2022/entries/statphys-Boltzmann/ (accessed on 15 June 2023).

- Von Plato, J. Creating Modern Probability; Cambridge University Press: Cambridge, UK, 1994. [Google Scholar]

- Boltzmann, L. Über die Beziehung dem zweiten Haubtsatze der mechanischen Wärmetheorie und der Wahrscheinlichkeitsrechnung respektive den Sätzen über das Wärmegleichgewicht. Wien. Berichte 1877, 76, 373–435, Reprinted in Boltzmann, L. Wissenschaftliche Abhandlungen, Hasenöhrl, F., Ed.; Chelsea: London, UK, 1969; Volume II, p. 39. English translation in Sharp, K.; Matschinsky, F., Entropy 2015, 17, 1971–2009. [Google Scholar] [CrossRef]

- Einstein, A. Zum Gegenwärtigen STAND des Strahlungsproblem. Phys. Z. 1909, 10, 185–193, Reprinted in The Collected Papers of Albert Einstein; Stachel, J., et al., Eds.; Princeton University Press: Princeton, NJ, USA, 1990; Volume 2; Doc.56, pp. 542–550. Available online: https://einsteinpapers.press.princeton.edu/vol2-doc/577 (accessed on 15 June 2023); English Translation Supplement. pp. 357–375. Available online: https://einsteinpapers.press.princeton.edu/vol2-trans/371 (accessed on 15 June 2023).

- Ellis, R.S. Entropy, Large Deviations, and Statistical Mechanics; Springer: Berlin/Heidelberg, Germany, 1985. [Google Scholar]

- Ellis, R.S. An overview of the theory of large deviations and applications to statistical mechanics. Scand. Actuar. J. 1995, 1, 97–142. [Google Scholar] [CrossRef]

- Lanford, O.E. Entropy and Equilibrium States in Classical Statistical Mechanics; Lecture Notes in Physics; Springer: Berlin/Heidelberg, Germany, 1973; Volume 20, pp. 1–113. [Google Scholar]

- Martin-Löf, A. Statistical Mechanics and the Foundations of Thermodynamics; Lecture Notes in Physics; Springer: Berlin/Heidelberg, Germany, 1979; Volume 101, pp. 1–120. [Google Scholar]

- McKean, H. Probability: The Classical Limit Theorems; Cambridge University Press: Cambridge, UK, 2014. [Google Scholar]

- Von Mises, R. Grundlagen der Wahrscheinlichkeitsrechnung. Math. Z. 1919, 5, 52–99. [Google Scholar] [CrossRef]

- Von Mises, R. Wahrscheinlichkeit, Statistik, und Wahrheit, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 1936. [Google Scholar]

- Van Lambalgen, M. Random Sequences. Ph.D. Thesis, University of Amsterdam, Amsterdam, The Netherlands, 1987. Available online: https://www.academia.edu/23899015/RANDOM_SEQUENCES (accessed on 15 June 2023).

- Van Lambalgen, M. Randomness and foundations of probability: Von Mises’ axiomatisation of random sequences. In Statistics, Probability and Game Theory: Papers in Honour of David Blackwell; IMS Lecture Notes–Monograph Series; IMS: Beachwood, OH, USA, 1996; Volume 30, pp. 347–367. [Google Scholar]

- Porter, C.P. Mathematical and Philosophical Perspectives on Algorithmic Randomness. Ph.D. Thesis, University of Notre Dame, Notre Dame, IN, USA, 2012. Available online: https://www.cpporter.com/wp-content/uploads/2013/08/PorterDissertation.pdf (accessed on 15 June 2023).

- Kolmogorov, A.N. Grundbegriffe de Wahrscheinlichkeitsrechnung; Springer: Berlin/Heidelberg, Germany, 1933. [Google Scholar]

- Kolmogorov, A.N. Three Approaches to the Quantitative Definition of Information. Probl. Inf. Transm. 1965, 1, 3–11. Available online: http://alexander.shen.free.fr/library/Kolmogorov65_Three-Approaches-to-Information.pdf (accessed on 15 June 2023). [CrossRef]

- Kolmogorov, A.N. Logical Basis for information theory and probability theory. IEEE Trans. Inf. Theory 1968, 14, 662–664. [Google Scholar] [CrossRef]

- Cover, T.M.; Gács, P.; Gray, R.M. Kolmogorov’s contributions to information theory and algorithmic complexity. Ann. Probab. 1989, 17, 840–865. [Google Scholar] [CrossRef]

- Li, M.; Vitányi, P.M.B. An Introduction to Kolmogorov Complexity and Its Applications, 3rd ed.; Springer: Berlin/Heidelberg, Germany, 2008. [Google Scholar]

- Porter, C.P. Kolmogorov on the role of randomness in probability theory. Math. Struct. Comput. Sci. 2014, 24, e240302. [Google Scholar] [CrossRef]

- Zvonkin, A.K.; Levin, L.A. The complexity of finite objects and the development of the concepts of information and randomness by means of the theory of algorithms. Russ. Math. Surv. 1970, 25, 83–124. [Google Scholar] [CrossRef]

- Landsman, K. Randomness? What randomness? Found. Phys. 2020, 50, 61–104. [Google Scholar] [CrossRef]

- Porter, C.P. The equivalence of definitions of algorithmic randomness. Philos. Math. 2021, 29, 153–194. [Google Scholar] [CrossRef]

- Georgii, H.-O. Probabilistic aspects of entropy. In Entropy; Greven, A., Keller, G., Warnecke, G., Eds.; Princeton University Press: Princeton, NJ, USA, 2003; pp. 37–54. [Google Scholar]

- Grünwald, P.D.; Vitányi, P.M.B. Kolmogorov complexity and Information theory. With an interpretation in terms of questions and answers. J. Logic, Lang. Inf. 2003, 12, 497–529. [Google Scholar] [CrossRef]

- Bricmont, L. Making Sense of Statistical Mechanics; Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar]

- Austin, T. Math 254A: Entropy and Ergodic Theory. 2017. Available online: https://www.math.ucla.edu/~tim/entropycourse.html (accessed on 15 June 2023).

- Dembo, A.; Zeitouni, A. Large Deviations: Techniques and Applications, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 1998. [Google Scholar]

- Dorlas, T.C. Statistical Mechanics: Fundamentals and Model Solutions, 2nd ed.; CRC: Boca Raton, FL, USA, 2022. [Google Scholar]

- Ellis, R.S. The theory of large deviations: From Boltzmann’s 1877 calculation to equilibrium macrostates in 2D turbulence. Physica D 1999, 133, 106–136. [Google Scholar] [CrossRef]

- Borwein, J.M.; Zhu, Q.J. Techniques of Variational Analysis; Springer: Berlin/Heidelberg, Germany, 2005. [Google Scholar]

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; Wiley: Hoboken, NJ, USA, 2006. [Google Scholar]

- Lesne, A. Shannon entropy: A rigorous notion at the crossroads between probability, information theory, dynamical systems and statistical physics. Math. Struct. Comput. Sci. 2014, 24, e240311. [Google Scholar] [CrossRef]

- MacKay, D.J. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Kolmogorov, A.N. New metric invariant of transitive dynamical systems and endomorphisms of Lebesgue spaces. Dokl. Russ. Acad. Sci. 1958, 119, 861–864. [Google Scholar]

- Viana, M.; Oliveira, K. Foundations of Ergodic Theory; Cambridge University Press: Cambridge, UK, 2016. [Google Scholar]

- Charpentier, E.; Lesne, A.; Nikolski, N.K. Kolmogorov’s Heritage in Mathematics; Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Castiglione, P.; Falcioni, M.; Lesne, A.; Vulpiani, A. Chaos and Coarse Graining in Statistical Mechanics; Cambridge University Press: Cambridge, UK, 2008. [Google Scholar]

- Martin-Löf, P. The definition of random sequences. Inf. Control 1966, 9, 602–619. [Google Scholar] [CrossRef]

- Hertling, P.; Weihrauch, K. Random elements in effective topological spaces with measure. Inform. Comput. 2003, 181, 32–56. [Google Scholar] [CrossRef][Green Version]

- Hoyrup, M.; Rojas, C. Computability of probability measures and Martin-Löf randomness over metric spaces. Inf. Comput. 2009, 207, 830–847. [Google Scholar] [CrossRef]

- Gács, P.; Hoyrup, M.; Rojas, C. Randomness on computable probability spaces—A dynamical point of view. Theory Comput. Syst. 2011, 48, 465–485. [Google Scholar] [CrossRef][Green Version]

- Bienvenu, L.; Gács, P.; Hoyrup, M.; Rojas, C. Algorithmic tests and randomness with respect to a class of measures. Proc. Steklov Inst. Math. 2011, 274, 34–89. [Google Scholar] [CrossRef][Green Version]

- Hoyrup, M.; Rute, J. Computable measure theory and algorithmic randomness. In Handbook of Computability and Complexity in Analysis; Springer: Berlin/Heidelberg, Germany, 2021; pp. 227–270. [Google Scholar]

- Calude, C.S. Information and Randomness: An Algorithmic Perspective, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2002. [Google Scholar]

- Nies, A. Computability and Randomness; Oxford University Press: Oxford, UK, 2009. [Google Scholar]

- Downey, R.; Hirschfeldt, D.R. Algorithmic Randomness and Complexity; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Kjos-Hansen, B.; Szabados, T. Kolmogorov complexity and strong approximation of Brownian motion. Proc. Am. Math. Soc. 2011, 139, 3307–3316. [Google Scholar] [CrossRef]

- Chaitin, G.J. A theory of program size formally identical to information theory. J. ACM 1975, 22, 329–340. [Google Scholar] [CrossRef]

- Gács, P. Exact expressions for some randomness tests. In Theoretical Computer Science 4th GI Conference; Weihrauch, K., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1979; Volume 67, pp. 124–131. [Google Scholar]

- Levin, L.A. On the notion of a random sequence. Sov. Math.-Dokl. 1973, 14, 1413–1416. [Google Scholar]

- Earman, J. Curie’s Principle and spontaneous symmetry breaking. Int. Stud. Phil. Sci. 2004, 18, 173–198. [Google Scholar] [CrossRef]

- Mörters, P.; Peres, Y. Brownian Motion; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar]

- Billingsley, P. Convergence of Probability Measures; Wiley: Hoboken, NJ, USA, 1968. [Google Scholar]

- Asarin, E.A.; Prokovskii, A.V. Use of the Kolmogorov complexity in analysing control system dynamics. Autom. Remote Control 1986, 47, 21–28. [Google Scholar]

- Fouché, W.L. Arithmetical representations of Brownian motion I. J. Symb. Log. 2000, 65, 421–442. [Google Scholar] [CrossRef]

- Fouché, W.L. The descriptive complexity of Brownian motion. Adv. Math. 2000, 155, 317–343. [Google Scholar] [CrossRef][Green Version]

- Vovk, V.G. The law of the iterated logarithm for random Kolmogorov, or chaotic, sequences. Theory Probab. Its Appl. 1987, 32, 413–425. [Google Scholar] [CrossRef]

- Brattka, V.; Miller, J.S.; Nies, A. Randomness and differentiability. Trans. Am. Math. Soc. 2016, 368, 581–605. [Google Scholar] [CrossRef]

- Rute, J. Algorithmic Randomness and Constructive/Computable Measure Theory; Franklin & Porter: New York, NY, USA, 2020; pp. 58–114. [Google Scholar]

- Downey, R.; Griffiths, E.; Laforte, G. On Schnorr and computable randomness, martingales, and machines. Math. Log. Q. 2004, 50, 613–627. [Google Scholar] [CrossRef]

- Bienvenu, L.; Day, A.R.; Hoyrup, M.; Mezhirov, I.; Shen, A. A constructive version of Birkhoff’s ergodic theorem for Martin-Löf random points. Inf. Comput. 2012, 210, 21–30. [Google Scholar] [CrossRef][Green Version]

- Galatolo, S.; Hoyrup, M.; Rojas, C. Effective symbolic dynamics, random points, statistical behavior, complexity and entropy. Inf. Comput. 2010, 208, 23–41. [Google Scholar] [CrossRef]

- Pathak, N.; Rojas, C.; Simpson, S. Schnorr randomness and the Lebesgue differentiation theorem. Proc. Am. Math. Soc. 2014, 142, 335–349. [Google Scholar] [CrossRef][Green Version]

- V’yugin, V. Effective convergence in probability and an ergodic theorem for individual random sequences. SIAM Theory Probab. Its Appl. 1997, 42, 39–50. [Google Scholar] [CrossRef]

- Towsner, H. Algorithmic Randomness in Ergodic Theory; Franklin & Porter: New York, NY, USA, 2020; pp. 40–57. [Google Scholar]

- V’yugin, V. Ergodic theorems for algorithmically random points. arXiv 2022, arXiv:2202.13465. [Google Scholar]

- Brudno, A.A. Entropy and the complexity of the trajectories of a dynamic system. Trans. Mosc. Math. Soc. 1983, 44, 127–151. [Google Scholar]

- White, H.S. Algorithmic complexity of points in dynamical systems. Ergod. Theory Dyn. Syst. 1993, 15, 353–366. [Google Scholar] [CrossRef]

- Batterman, R.W.; White, H. Chaos and algorithmic complexity. Found. Phys. 1996, 26, 307–336. [Google Scholar] [CrossRef]

- Porter, C.P. Biased Algorithmic Randomness; Franklin and Porter: New York, NY, USA, 2020; pp. 206–231. [Google Scholar]

- Brudno, A.A. The complexity of the trajectories of a dynamical system. Russ. Math. Surv. 1978, 33, 207–208. [Google Scholar] [CrossRef]

- Schack, R. Algorithmic information and simplicity in statistical physics. Int. J. Theor. Phys. 1997, 36, 209–226. [Google Scholar] [CrossRef]

- Fouché, W.L. Dynamics of a generic Brownian motion: Recursive aspects. Theor. Comput. Sci. 2008, 394, 175–186. [Google Scholar] [CrossRef][Green Version]

- Allen, K.; Bienvenu, L.; Slaman, T. On zeros of Martin-Löf random Brownian motion. J. Log. Anal. 2014, 6, 1–34. [Google Scholar]

- Fouché, W.L.; Mukeru, S. On local times of Martin-Löf random Brownian motion. arXiv 2022, arXiv:2208.01877. [Google Scholar]

- Hiura, K.; Sasa, S. Microscopic reversibility and macroscopic irreversibility: From the viewpoint of algorithmic randomness. J. Stat. Phys. 2019, 177, 727–751. [Google Scholar] [CrossRef]

- Boltzmann, L. Weitere Studien über das Wärmegleichgewicht unter Gasmolekülen. Wien. Berichte 1872, 66, 275–370, Reprinted in Boltzmann, L. Wissenschaftliche Abhandlungen; Hasenöhrl, F., Ed.; Chelsea: London, UK, 1969; Volume I, p. 23. English translation in Brush, S. The Kinetic Theory of Gases: An Anthology of Classic Papers with Historical Commentary; Imperial College Press: London, UK, 2003; pp. 262–349. [Google Scholar]

- Lanford, O.E. Time evolution of large classical systems. In Dynamical Systems, Theory and Applications; Lecture Notes in Theoretical Physics; Moser, J., Ed.; Springer: Berlin/Heidelberg, Germany, 1975; Volume 38, pp. 1–111. [Google Scholar]

- Lanford, O.E. On the derivation of the Boltzmann equation. Astérisque 1976, 40, 117–137. [Google Scholar]

- Ardourel, V. Irreversibility in the derivation of the Boltzmann equation. Found. Phys. 2017, 47, 471–489. [Google Scholar] [CrossRef]

- Villani, C. A review of mathematical topics in collisional kinetic theory. In Handbook of Mathematical Fluid Dynamics; Friedlander, S., Serre, D., Eds.; Elsevier: Amsterdam, The Netherlands, 2002; Volume 1, pp. 71–306. [Google Scholar]

- Villani, C. (Ir)reversibility and Entropy. In Time Progress in Mathematical Physics; Duplantier, B., Ed.; (Birkhäuser): Basel, Switzerland, 2013; Volume 63, pp. 19–79. [Google Scholar]

- Bouchet, F. Is the Boltzmann equation reversible? A Large Deviation perspective on the irreversibility paradox. J. Stat. Phys. 2020, 181, 515–550. [Google Scholar] [CrossRef]

- Bodineau, T.; Gallagher, I.; Saint-Raymond, L.; Simonella, S. Statistical dynamics of a hard sphere gas: Fluctuating Boltzmann equation and large deviations. arXiv 2020, arXiv:2008.10403. [Google Scholar]

- Aldous, D.L. Exchangeability and Related Topics; Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 1985; Volume 1117, pp. 1–198. [Google Scholar]

- Sznitman, A. Topics in Propagation of Chaos; Lecture Notes in Mathematics; Springer: Berlin/Heidelberg, Germany, 1991; Volume 1464, pp. 164–251. [Google Scholar]

- Kac, N. Probability and Related Topics in Physical Sciences; Wiley: Hoboken, NJ, USA, 1959. [Google Scholar]

- Maes, C.; Netocny, K.; Shergelashvili, B. A selection of nonequilibrium issues. arXiv 2007, arXiv:math-ph/0701047. [Google Scholar]

- De Bièvre, S.; Parris, P.E. A rigourous demonstration of the validity of Boltzmann’s scenario for the spatial homogenization of a freely expanding gas and the equilibration of the Kac ring. J. Stat. Phys. 2017, 168, 772–793. [Google Scholar] [CrossRef]

- Landsman, K. Foundations of Quantum Theory: From Classical Concepts to Operator Algebras; Springer Open: Berlin/Heidelberg, Germany, 2017; Available online: https://www.springer.com/gp/book/9783319517766 (accessed on 15 June 2023).

- Landsman, K. Indeterminism and undecidability. In Undecidability, Uncomputability, and Unpredictability; Aguirre, A., Merali, Z., Sloan, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2021; pp. 17–46. [Google Scholar]

- Goldstein, S. Bohmian Mechanics. The Stanford Encyclopedia of Philosophy. 2017. Available online: https://plato.stanford.edu/archives/sum2017/entries/qm-bohm/ (accessed on 15 June 2023).

- Landsman, K. Bohmian mechanics is not deterministic. Found. Phys. 2022, 52, 73. [Google Scholar] [CrossRef]

- Dürr, D.; Goldstein, S.; Zanghi, N. Quantum equilibrium and the origin of absolute uncertainty. J. Stat. Phys. 1992, 67, 843–907. [Google Scholar] [CrossRef]

- Franklin, J.Y.; Porter, C.P. (Eds.) Algorithmic Randomness: Progress and Prospects; Cambridge University Press: Cambridge, UK, 2020. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).