Abstract

With the widespread application of generative adversarial networks (GANs) in image generation and content creation, their model architectures and training outcomes have become valuable intellectual property assets. However, in practical deployment, image generative models are vulnerable to surrogate model attacks, posing significant risks to copyright ownership and commercial interests. To address this issue, this paper proposes a novel copyright protection scheme for image generative models with a symmetric embedding–retrieval watermark architecture in GANs focused on defending against surrogate model attacks. Unlike traditional model encryption or architectural constraint strategies, the proposed approach integrates a watermark embedding module directly into the image generative network, enabling generated images to implicitly carry copyright identifiers. Leveraging a symmetric design between the embedding and retrieval processes, the system ensures that, under surrogate model attacks, the original model’s identity can be reliably verified by extracting the embedded watermark from the generated outputs. The implementation comprises three key modules—feature extraction, watermark embedding, and watermark retrieval—forming an end-to-end, balanced embedding–retrieval pipeline. Experimental results demonstrate that this approach achieves efficient and stable watermark embedding and retrieval without compromising generation quality, exhibiting high robustness, traceability, and practical applicability, thereby offering a viable and symmetric solution for intellectual property protection in image generative networks.

1. Introduction

With the widespread application of image generation models in fields such as image synthesis, data augmentation, and content creation, the issue of intellectual property (IP) protection has become increasingly prominent. Due to the unique commercial value of these models and the high cost of training them, they are particularly vulnerable to black-box attacks, which can easily replicate core functionalities and threaten the intellectual property and competitiveness of model owners. Among various black-box attack methods, surrogate model attacks have emerged as a major real-world threat due to their strong stealthiness and low cost. Attackers can train functionally similar substitute models using only input–output pairs, making such attacks especially feasible in Machine Learning as a Service (MLaaS) scenarios. This may lead to unauthorized usage, IP infringement, and other security risks. Therefore, enhancing the model’s resistance to surrogate attacks is critical for ensuring secure commercialization.

In recent years, the issue of intellectual property protection for neural network models has gained increasing attention; however, existing research has predominantly focused on discriminative tasks such as image classification [,,], with relatively limited protection for image generative models. Due to the complex structure of generative models, their high-dimensional image outputs, and the lack of explicit labels during training, protection methods designed for classification models are difficult to apply directly. Although some studies have attempted to develop copyright protection mechanisms for generative models, most have not adequately addressed practical threats encountered during deployment, such as surrogate model attacks and image tampering. Moreover, image generative models are inherently easy to replicate, as attackers can train approximate models solely based on input–output pairs, circumventing existing protection methods. This makes model ownership difficult to verify, significantly increasing intellectual property risks and highlighting the limitations of current protection mechanisms.

To address the challenges of copyright protection for image generative models under surrogate model attacks, this paper proposes an intellectual property protection method with a symmetric watermark embedding–retrieval mechanism. By integrating watermark processing modules directly into the generative network, the model’s outputs inherently embed imperceptible copyright identifiers. This enables ownership verification under surrogate model attacks, as the embedded watermark remains detectable in the surrogate model’s generated outputs. In contrast to traditional methods such as parameter encryption or access control, the proposed approach achieves persistent and verifiable ownership by tracing through the generated content itself, offering a more practical and deployable solution for real-world copyright protection.

2. Related Work

2.1. Introduction of Surrogate Attack on GANs

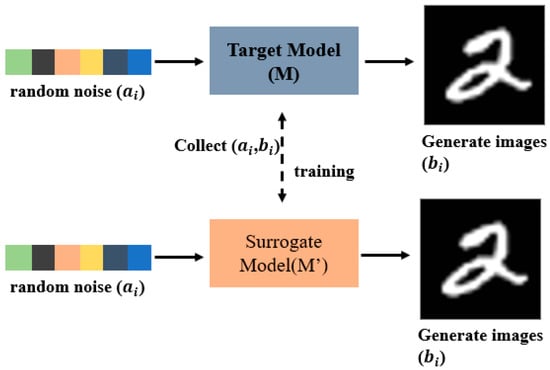

The flow of the surrogate model attack [,] is shown in Figure 1. For the image processing task, it is assumed that domain A consists of a large amount of 100-dimensional random noise, denoted as $\{a_1, a_2, \ldots, a_n\}$, while domain B consists of data output from the original generation network, denoted as $\{b_1, b_2, \ldots, b_n\}$, where each pair of $a_i$ and $b_i$ is mapped one-to-one through a hidden transformation function Y. The goal of the image processing model M is to approximate the transformation Y by minimizing the distance L between $M(a_i)$ and $b_i$:

$$\min_{M} \sum_{i=1}^{n} L\big(M(a_i),\, b_i\big) \qquad (1)$$

Figure 1.

Schematic diagram of the surrogate model attack.

In the attack scenario, the attacker first uses a large amount of random noise as the query input, denoted as $\{a_1, a_2, \ldots, a_n\}$, and generates the corresponding image data using the original generative network M, denoted as $\{b_1, b_2, \ldots, b_n\}$, where $b_i = M(a_i)$. Once the attacker has collected a sufficient number of input–output pairs (A, B), the data can be used to train a surrogate model that approximates the performance of the target generative network M.

Unlike model extraction attacks, which primarily focus on obtaining a model’s parameters and architectural information, surrogate model attacks concentrate on replicating the functional performance of the target model. When conducting surrogate model attacks, attackers need not understand the internal structure of the original model; they only need to train, on the generated data, a surrogate model that closely matches the target model in generative capability or output distribution, thereby posing an indirect threat to the model’s intellectual property.

2.2. Introduction of Traditional Protection Strategies for GANs

In recent years, as deep learning models have seen widespread application, the issue of intellectual property protection for neural networks has attracted increasing attention. “Deep watermarking” has emerged as a promising technique, with its effectiveness initially demonstrated in tasks such as image classification. Existing methods can be broadly categorized into three types: parameter-level watermarking for white-box ownership verification []; trigger-based mechanisms that enable black-box verification through specific inputs [,]; and model response-based approaches that extract unique behavioral features for identification [,]. However, these techniques are difficult to directly apply to generative models, which are characterized by complex architectures and diverse outputs.

For the copyright protection of image generative models, several approaches have been proposed. Yu et al. [] achieved implicit watermark propagation through data fingerprinting, offering strong concealment but limited robustness against perturbations. Fei et al. [] enhanced watermark embedding by introducing a decoder and supervised loss, though their method is vulnerable to fine-tuning attacks. Ong et al. [] proposed a backdoor-based black-box watermarking scheme that can withstand architectural modifications, but performs poorly against surrogate model attacks. Zhang et al. [] directly embedded watermarks into generated images to defend against surrogate models, but their method is sensitive to image perturbations, resulting in significantly decreased extraction accuracy.

In summary, existing copyright protection methods for generative models struggle to address complex scenarios such as black-box deployment, open-ended outputs, and diverse attack types. In particular, when facing both surrogate model attacks and image-level perturbations, there is a lack of general solutions that are both robust and capable of cross-model traceability. To this end, this paper proposes an embedded watermark protection method. By leveraging the structural characteristics of generative models, we design a network framework in which generated images inherently carry watermark information. This enables copyright verification while enhancing robustness and traceability against surrogate model attacks.

3. Materials and Methods

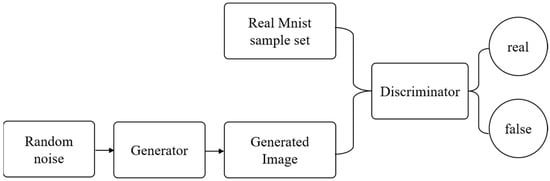

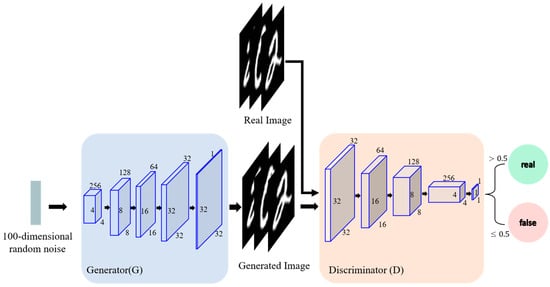

3.1. Materials for DCGAN

In this study, the DCGAN (Deep Convolutional Generative Adversarial Network) architecture is adopted as the generative model to be protected, and its basic structure is illustrated in Figure 2. As a concrete case, a practical application model based on DCGAN is shown in Figure 3, which presents the detailed architecture of a DCGAN model for handwritten digit generation. The network consists of a generator and a discriminator, both constructed with multiple convolutional and transposed convolutional layers. The generator takes a random noise vector as the input and progressively upsamples it to produce realistic images, while the discriminator distinguishes between real samples and generated images, thereby enabling adversarial training.

Figure 2.

Basic composition of the generative adversarial network for handwritten digit generation.

Figure 3.

A detailed architecture of DCGAN model for handwritten digit generation.

For simplicity, we train the DCGAN for a specific generation task, namely handwritten digit generation. Specifically, the DCGAN takes random noise as the input, which is transformed by the generator into synthesized MNIST-like images. The discriminator then receives both the generated and real MNIST samples to determine their authenticity and provides feedback to optimize the generator’s performance. After the training converges, the generator is capable of producing high-quality images that closely resemble those in the real MNIST dataset. Taking this handwritten digit DCGAN as the object to be protected, the following section presents the proposed protection methodology in detail.

3.2. Method of Protection for DCGAN

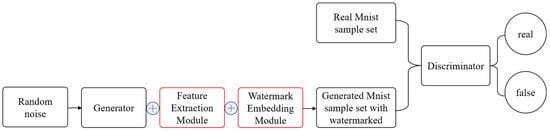

The protection method for DCGAN comprises two major steps: watermark embedding and watermark retrieval. The watermark embedding part is integrated into the DCGAN (the object to be protected), so that the content generated by the DCGAN carries concealed watermark information. In contrast, the watermark retrieval part is constructed as a separate network that extracts the watermark information from the generated content.

3.2.1. Watermark Embedding in DCGAN

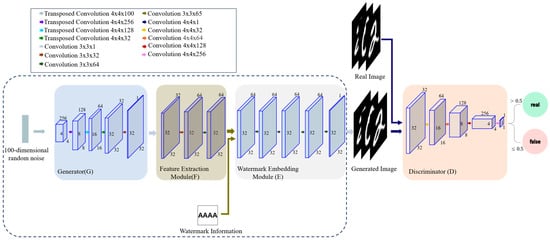

To enable effective copyright identification in the generated images, a feature extraction module and a watermark embedding module (denoted by a red solid border) are integrated into the original DCGAN [] architecture (as illustrated in Figure 4), forming an end-to-end watermark integration mechanism. The feature extraction module captures semantic features of the generated content to enhance the semantic relevance of the embedded watermark. The watermark embedding module imperceptibly integrates copyright information into the generated images, enabling reliable ownership identification and traceability while preserving image quality. A more detailed illustration of the network architecture is presented in Figure 5.

Figure 4.

Handwritten digit generation adversarial network with watermark embedding.

Figure 5.

The detailed architecture of handwritten digit generation network with watermark embedding, which adds feature extraction and watermark embedding modules compared to Figure 3.

- (1) Feature Extraction Module Design

The feature extraction module is designed to perform preliminary semantic representation extraction on images generated by the DCGAN generator. It serves a dual purpose in the watermark embedding process, functioning both as a semantic encoder of the image content and as a carrier for embedding information. By extracting high-level semantic features—such as edges, textures, and local structures—the module enhances the integration and imperceptibility of the embedded watermark, significantly mitigating artifacts and information loss typically associated with pixel-level embedding. Compared with conventional direct embedding methods, this module maintains the original image resolution while providing a more stable embedding pathway, thereby reducing training complexity. Furthermore, its architecture is carefully designed to balance feature expressiveness and computational efficiency, offering essential support features for the downstream embedding module. The detailed architecture and parameter settings are provided in Table 1.

Table 1.

Feature extraction module parameters.

In Table 1, the input is the output image generated by the DCGAN generator. “Conv” refers to a convolutional layer, and “ReLU” indicates the activation function applied after the convolution. “Kernel 3 × 3” specifies that the convolutional kernel size is 3 × 3, while “Stride” represents the step size of the convolution operation.

- (2) Watermark Embedding Module Design

The watermark embedding module adopts a feature fusion strategy to embed the watermark into the deep semantic features of the generated image in an implicit manner, ensuring that the embedding process does not introduce noticeable visual artifacts. Specifically, feature fusion integrates the multi-channel image features with the watermark information within the feature space, enabling the watermark to be distributed across high-level semantic components such as edges, textures, and local structures. This approach not only enhances the robustness and imperceptibility of the watermark but also avoids the artifacts and information loss typically caused by pixel-level overlay methods. Moreover, the feature fusion mechanism supports multi-scale feature interaction and optimization, which improves the stability of the embedding pathway, reduces training complexity, and facilitates reliable watermark extraction. In this module, multi-channel image features are progressively and hierarchically fused with the single-channel grayscale watermark, ultimately producing the watermarked output image. The module consists of five layers, with detailed structural parameters presented in Table 2.

Table 2.

Watermark embedding module parameters.

In this context, the input to the watermark embedding network is the combination of the feature maps produced by the feature extraction module and the watermark information. The structure “Conv + BN + ReLU” refers to a sequence consisting of a convolutional layer (Conv), followed by batch normalization (BN) and a ReLU activation function, enabling nonlinear transformation and accelerating convergence. The final output stage adopts a “Conv + Tanh” configuration to produce the image with the imperceptible watermark, where the Tanh activation function compresses the output values into the range $[-1, 1]$, aligning with the distribution characteristics of image data.

- (3)

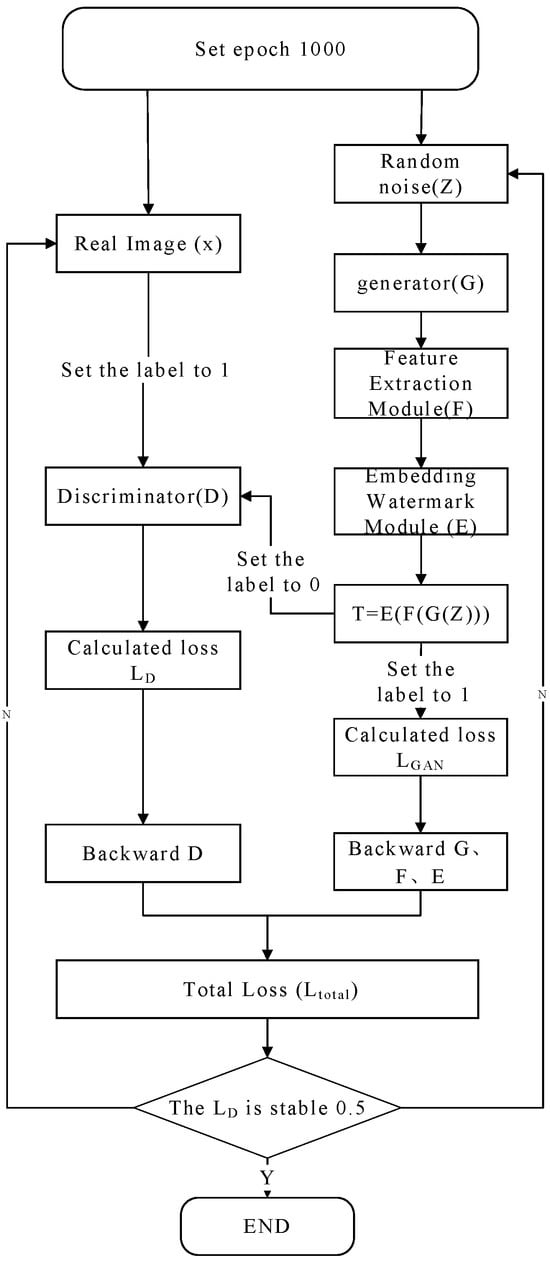

- Training Strategy for DCGAN with Embedded Watermark

The training process adopts an alternating optimization strategy. First, a batch of real images is sampled and fed into the discriminator with a positive label (1). The discriminator is updated via backpropagation by minimizing the prediction error, enhancing its ability to recognize real samples. Next, the generator synthesizes fake images containing watermarks from random noise Z, which are fed into the discriminator with a negative label (0). Backpropagation is performed again to strengthen the discriminator’s capability to detect these watermarked fake images. Finally, another batch of watermarked images is generated and fed into the discriminator with a positive label. Using the generator loss defined in Formula (5), only the generator parameters are updated, enabling the generator to learn to fool the discriminator and thereby improving both the visual realism and adversarial robustness of the watermarked images. The detailed flowchart of the training strategy for DCGAN with the embedded watermark is shown in Figure 6.

Figure 6.

The flowchart of training strategy for DCGAN with the embedded watermark.
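The three-phase schedule described above can be sketched as follows. This is only an illustration of the update order: the function name `alternating_step` and its callable arguments are hypothetical placeholders, not part of the original implementation, and the real networks are replaced by logging stubs.

```python
from typing import Callable, List, Tuple


def alternating_step(
    real_batch: list,
    sample_noise: Callable[[], list],
    generate_watermarked: Callable[[list], list],
    update_discriminator: Callable[[list, int], None],
    update_generator: Callable[[list, int], None],
) -> None:
    """Run one iteration of the three-phase alternating schedule."""
    # Phase 1: real images with positive label 1 -> discriminator update.
    update_discriminator(real_batch, 1)
    # Phase 2: watermarked fakes with negative label 0 -> discriminator update.
    fakes = generate_watermarked(sample_noise())
    update_discriminator(fakes, 0)
    # Phase 3: a fresh batch of watermarked fakes with positive label 1;
    # only the generator-side parameters are updated in this phase.
    fresh_fakes = generate_watermarked(sample_noise())
    update_generator(fresh_fakes, 1)


# Usage with logging stubs standing in for the real networks (hypothetical).
log: List[Tuple[str, int]] = []
alternating_step(
    real_batch=["x"] * 16,
    sample_noise=lambda: ["z"] * 16,
    generate_watermarked=lambda z: ["fake"] * len(z),
    update_discriminator=lambda batch, label: log.append(("D", label)),
    update_generator=lambda batch, label: log.append(("G", label)),
)
print(log)
```

In a real training loop the two update callables would wrap the optimizer steps of the discriminator and of the joint generator, feature extraction, and watermark embedding network, respectively.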

The DCGAN with the embedded watermark is trained on a server equipped with 32 GB of RAM and an RTX 3090 GPU (24 GB), using a batch size of 16. The Adam optimizer is employed, with learning rates of 0.0002 for the generator and 0.0001 for the discriminator. Throughout the training process, the generator, feature extraction module, and watermark embedding module are jointly optimized as a unified network. This iterative training continues until the discriminator’s loss approaches a value of 0.5, indicating that it can no longer reliably distinguish between real and watermarked fake images, thus reaching adversarial equilibrium. The generator loss stabilizes around 0.7. At this point, a favorable balance is achieved between the visual quality of the generated images and the robustness and imperceptibility of the embedded watermarks.
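As a quick arithmetic check on the reported generator loss, assume (the text does not state this explicitly) that the adversarial term is a binary cross-entropy loss. A discriminator that outputs 0.5 for every input then gives a per-sample value of −log 0.5 ≈ 0.693, which is consistent with a generator loss stabilizing around 0.7. The helper `bce` below is a hypothetical name used only for this sketch.

```python
import math


def bce(prediction: float, label: int) -> float:
    """Binary cross-entropy of one prediction in (0, 1) against a 0/1 label."""
    return -(label * math.log(prediction) + (1 - label) * math.log(1.0 - prediction))


# A discriminator that cannot tell real from fake outputs 0.5 for every input.
# The generator is scored against the positive label 1.
generator_term = bce(0.5, 1)
print(round(generator_term, 3))  # 0.693
```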

During training, there are two critical loss functions, $L_D$ and $L_G$, which represent the discriminator loss and the generator loss, respectively. During adversarial training, the discriminator and the generator are optimized alternately with respect to their own objectives, $L_D$ and $L_G$, respectively. The discriminator loss is composed of the losses from real images ($L_{real}$) and generated images ($L_{fake}$), as written in Formula (2).

Specifically, $L_{real}$ and $L_{fake}$ are expressed as Formulas (3) and (4),

where $D(\cdot)$ and $G(\cdot)$ are the outputs of the discriminator and generator, respectively, and the variables $x$ and $z$ represent the real image and random noise, respectively.
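One form of Formulas (2)–(4) that is consistent with the description above is sketched below. It assumes the standard binary cross-entropy GAN objective, which the text does not confirm; $D(\cdot)$ denotes the discriminator output, $G(\cdot)$ the generator output (here understood as the watermarked image fed to the discriminator), $x$ a real image, and $z$ random noise.

```latex
\begin{aligned}
L_D      &= L_{real} + L_{fake}                                           && (2)\\
L_{real} &= -\,\mathbb{E}_{x}\big[\log D(x)\big]                          && (3)\\
L_{fake} &= -\,\mathbb{E}_{z}\big[\log\big(1 - D(G(z))\big)\big]          && (4)
\end{aligned}
```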

The generator loss $L_G$ is the total loss of the sub-network composed of the generator, feature extraction, and watermark embedding modules. It consists of three components, as given in Formula (5).

Specifically, the three terms $L_{adv}$, $L_{per}$, and $L_{pix}$ are expressed as Formulas (6)–(8),

where $L_{adv}$ is the adversarial loss, which measures the discrepancy between watermarked generated images and real images; $L_{per}$ is the perceptual loss, used to evaluate the difference between the generator’s outputs and the watermarked images in a high-level feature space; and $L_{pix}$ represents the pixel-wise L1 loss, quantifying the pixel-level difference between the generator’s outputs and the watermarked generated images. The hyperparameters $\lambda_1$ and $\lambda_2$ are used to balance the perceptual and pixel-level reconstruction terms, ensuring that the generator not only fools the discriminator but also produces structurally coherent and visually natural images.

In Formulas (6)–(8), $I_w$ represents the final generated image, $G$ is the generator module, $F$ is the feature extraction module, and $E$ is the watermark embedding module.
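A possible reading of Formulas (5)–(8), given only the verbal description above, is sketched below. Several symbols are assumptions made for this sketch: $I_w$ is the final watermarked image, $w$ the watermark, $G$, $F$, and $E$ the generator, feature extraction, and watermark embedding modules, $\phi$ a fixed feature extractor defining the high-level feature space, and $\lambda_1$, $\lambda_2$ the balancing weights. The squared L2 distance in the perceptual term is likewise an assumption; only the L1 form of the pixel term is stated in the text.

```latex
\begin{aligned}
L_G     &= L_{adv} + \lambda_1 L_{per} + \lambda_2 L_{pix}                      && (5)\\
L_{adv} &= -\,\mathbb{E}_{z}\big[\log D(I_w)\big]                               && (6)\\
L_{per} &= \big\lVert \phi\big(G(z)\big) - \phi(I_w) \big\rVert_2^2             && (7)\\
L_{pix} &= \big\lVert G(z) - I_w \big\rVert_1                                   && (8)\\
I_w     &= E\big(F(G(z)),\, w\big)
\end{aligned}
```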

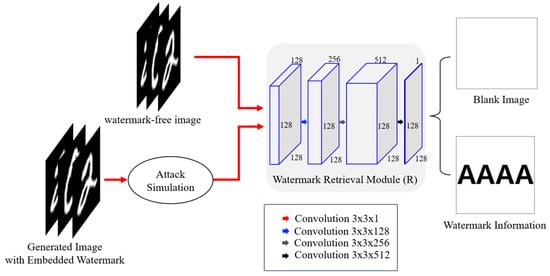

3.2.2. Watermark Retrieval from DCGAN

- (1) Design of the Watermark Retrieval Network

The WRN (watermark retrieval network) is tasked with recovering the embedded watermark from watermarked images. If a surrogate attack occurs (that is, a new GAN is trained on the generated images), the ownership of the new GAN can be confirmed by extracting the watermark from the images it generates, thereby providing an effective and verifiable mechanism for intellectual property protection. The overall architecture of the watermark retrieval network is shown in Figure 7, and the detailed configuration of the watermark retrieval module is given in Table 3.

Figure 7.

Watermark retrieval network architecture.

Table 3.

Watermark retrieval module parameters.

In the WRN architecture, an attack simulation module is placed at the front end of the network to enhance the robustness of watermark retrieval and mitigate the risk of attackers employing data augmentation techniques during surrogate model training. The module applies perturbations to watermarked images to simulate potential tampering scenarios, so that the retrieval network learns to be robust to them. The attack simulation covers seven common attacks, comprising five types of pixel-level distortion and two types of image-level composite attack, as detailed in Table 4.

Table 4.

Attack types and specific parameters.
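The idea of an attack simulation front end can be illustrated with a minimal sketch. It does not reproduce Table 4: the two pixel-level distortions and the single composite attack below, their parameter values, and all function names are placeholders chosen for illustration, and images are represented as nested lists with pixel values in $[-1, 1]$.

```python
import random
from typing import Callable, List

Image = List[List[float]]  # grayscale image with pixel values in [-1, 1]


def clamp(value: float) -> float:
    """Keep a pixel inside the valid range [-1, 1]."""
    return max(-1.0, min(1.0, value))


def gaussian_noise(image: Image, rng: random.Random, sigma: float = 0.05) -> Image:
    """Add zero-mean Gaussian noise to every pixel (sigma is a placeholder)."""
    return [[clamp(p + rng.gauss(0.0, sigma)) for p in row] for row in image]


def adjust_contrast(image: Image, rng: random.Random,
                    low: float = 0.8, high: float = 1.2) -> Image:
    """Scale each pixel's deviation from the image mean by a random factor."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    factor = rng.uniform(low, high)
    return [[clamp(mean + factor * (p - mean)) for p in row] for row in image]


def composite(image: Image, rng: random.Random) -> Image:
    """Chain two distortions to mimic a composite attack."""
    return gaussian_noise(adjust_contrast(image, rng), rng)


ATTACKS: List[Callable[[Image, random.Random], Image]] = [
    gaussian_noise, adjust_contrast, composite,
]


def simulate_attack(image: Image, rng: random.Random) -> Image:
    """Apply one randomly chosen distortion before the retrieval network."""
    return rng.choice(ATTACKS)(image, rng)


rng = random.Random(0)
watermarked = [[0.5, -0.5], [0.25, -0.25]]
attacked = simulate_attack(watermarked, rng)
```

Drawing a different distortion for each training image exposes the retrieval network to the whole attack set over the course of training.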

- (2)

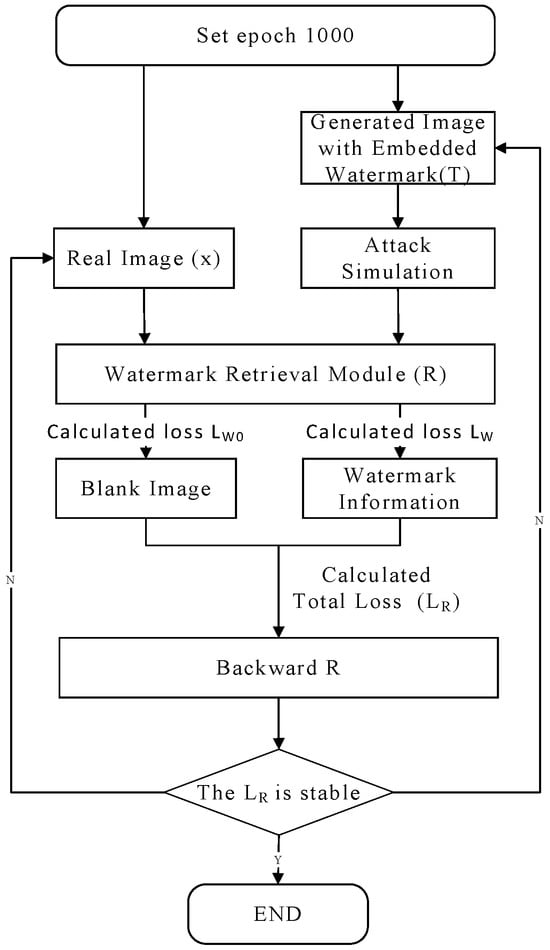

- Training Strategy for Watermark Retrieval Network

The total loss of the WRN is denoted by $L_{WRN}$, which comprises two terms, $L_{wm}$ and $L_{clean}$, representing the retrieval losses for watermarked and watermark-free images, respectively, as given in Formula (9),

where $\alpha$ and $\beta$ are hyperparameters that balance the two retrieval losses $L_{wm}$ and $L_{clean}$. The retrieval losses for watermarked and watermark-free images, $L_{wm}$ and $L_{clean}$, are given in Formulas (10) and (11), respectively.

In Formulas (10) and (11), $R$ and $S$ denote the watermark retrieval and attack simulation modules, respectively, $I_w$ represents a watermarked image, $w$ is the desired watermark, $w_0$ is a blank image, and the norm ($\lVert \cdot \rVert$) is used to compute the pixel-level difference between the extracted and desired watermarks.
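One form of Formulas (9)–(11) consistent with the description above is sketched below. The symbols are assumptions made for this sketch: $R$ is the watermark retrieval module, $S$ the attack simulation module, $I_w$ a watermarked image, $I_c$ a watermark-free image, $w$ the desired watermark, $w_0$ a blank image, and $\alpha$, $\beta$ the balancing weights. The order of the norm is left unspecified because it cannot be recovered from the text, and routing the watermark-free branch through $S$ is also an assumption.

```latex
\begin{aligned}
L_{WRN}   &= \alpha\, L_{wm} + \beta\, L_{clean}                          && (9)\\
L_{wm}    &= \big\lVert R\big(S(I_w)\big) - w \big\rVert                  && (10)\\
L_{clean} &= \big\lVert R\big(S(I_c)\big) - w_0 \big\rVert                && (11)
\end{aligned}
```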

The training procedure of the WRN is illustrated in Figure 8. During training, watermarked and watermark-free images are fed in alternately. Each training iteration uses a batch size of 16 and the Adam optimizer with a learning rate of 0.0001. To ensure high extraction accuracy while effectively suppressing false detections on clean images, the weights of the watermarked and watermark-free retrieval losses are set to 1.0 and 15.0, respectively.

Figure 8.

Flowchart of WRN training.

Backpropagation is conducted to update the WRN parameters according to the total loss. Although the maximum number of training epochs is set to 1000, training is terminated early when the difference in total loss between two adjacent epochs is less than 0.001.
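The stopping rule can be sketched as follows, under the assumption that the quantity compared between adjacent epochs is the total loss. The function `train_with_early_stop` and the callable `run_epoch` are hypothetical names; a synthetic, geometrically decaying loss curve stands in for real training.

```python
from typing import Callable, List


def train_with_early_stop(
    run_epoch: Callable[[int], float],
    max_epochs: int = 1000,
    tolerance: float = 0.001,
) -> List[float]:
    """Run epochs until two consecutive losses differ by less than `tolerance`."""
    history: List[float] = []
    for epoch in range(max_epochs):
        loss = run_epoch(epoch)  # one full pass; returns the epoch's total loss
        history.append(loss)
        if len(history) >= 2 and abs(history[-1] - history[-2]) < tolerance:
            break  # converged before reaching the epoch budget
    return history


# Usage with a synthetic loss curve that halves every epoch.
history = train_with_early_stop(lambda epoch: 0.5 ** epoch)
print(len(history))
```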

4. Results

To verify the effectiveness of the IP protection method for DCGAN proposed above, a series of experiments was conducted, comprising two major classes: verification tests and comparative tests. For convenience in the comparative tests, we refer to our method as GenGuard.

In the following experiments, two open datasets, MNIST [] and CIFAR-10 [], are adopted, which contain 60,000 grayscale images of handwritten digits and 60,000 color images across 10 classes, respectively. Without loss of generality, two watermark images are used in the experiments, as shown in Figure 9: a character string for MNIST and a QR code for CIFAR-10.

Figure 9.

Left: character string used for MNIST; right: QR code used for CIFAR-10.

4.1. Effectiveness and Performance Evaluation of GenGuard

4.1.1. Qualitative and Quantitative Comparisons Between GenGuard and the Baseline Model

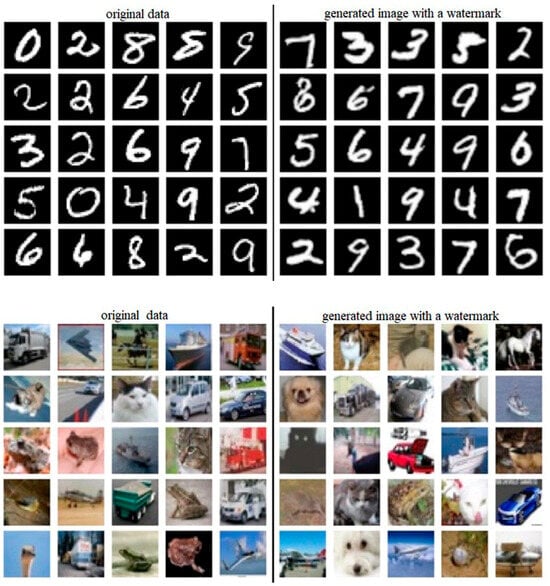

To evaluate the generative quality of GenGuard, we conduct both qualitative and quantitative analyses. Qualitatively, we visually compare generated and original content for MNIST (first row of Figure 10: the leftmost image is the original MNIST image and the rightmost is the image generated by GenGuard) and CIFAR-10 (second row of Figure 10: the leftmost image is the original CIFAR-10 image and the rightmost is the image generated by GenGuard).

Figure 10.

Comparison between real images and watermarked generated images.

Experimental results show that GenGuard performs on a par with the original data in terms of clarity, detail, and class diversity. The generated images remain natural and realistic, with minimal perceptual degradation caused by watermark embedding. These results indicate that the protection mechanism achieves effective watermark integration without compromising generation quality, maintaining strong imperceptibility.

Quantitatively, we use 30,000 watermark-embedded images to evaluate quality with the FID (Fréchet Inception Distance) and IS (Inception Score) metrics (see Table 5). The differences in FID and IS between content generated by GenGuard and by the baseline model are negligible and within the error range, indicating that the watermark embedded by GenGuard has little influence on content generation.

Table 5.

Quality comparison table between baseline model and GenGuard.

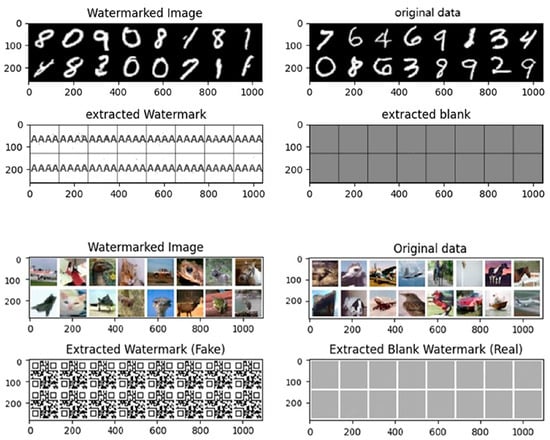

4.1.2. Qualitative and Quantitative Evaluations of the WRN

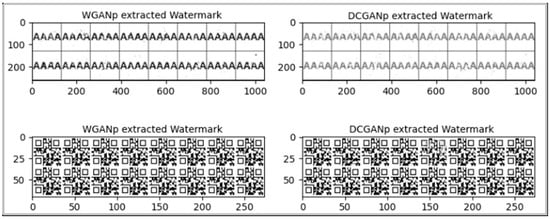

Before conducting the surrogate model experiments, we tested the watermark retrieval module to ensure that it can effectively extract watermark information during surrogate model attacks. The results in Figure 11 demonstrate that the module extracts the watermark clearly from watermarked images and outputs blank images for original images, which visually validates its extraction accuracy and effectiveness.

Figure 11.

Watermark retrieval results of the MNIST (first and second rows) and CIFAR-10 (third and fourth rows) datasets.

To quantitatively evaluate the effectiveness of watermark retrieval, we used two metrics: Mean Squared Error (MSE) and Normalized Correlation (NC). A smaller MSE indicates a smaller difference between the extracted watermark and the original, while a larger NC indicates higher similarity between the two. Ideally, an MSE of 0 represents a perfect match, and an NC of 1 indicates complete similarity. The detailed experimental results are shown in Table 6; both MSE and NC are close to their ideal values, which quantitatively verifies the effectiveness of watermark extraction.

Table 6.

MSE and NC values of the extracted watermarks.
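For reference, the two metrics can be computed as in the sketch below. The NC definition used here (inner product divided by the product of the two Euclidean norms) is one common convention and is an assumption, since the exact definition is not given in the text; the function names and the 2 × 2 example watermarks are illustrative only.

```python
import math
from typing import List

Image = List[List[float]]


def flatten(image: Image) -> List[float]:
    """Turn a 2D image into a flat list of pixel values."""
    return [p for row in image for p in row]


def mse(extracted: Image, original: Image) -> float:
    """Mean squared error between two images of the same size."""
    a, b = flatten(extracted), flatten(original)
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def normalized_correlation(extracted: Image, original: Image) -> float:
    """Inner product of the two images divided by the product of their norms."""
    a, b = flatten(extracted), flatten(original)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


original = [[1.0, 0.0], [0.0, 1.0]]
extracted = [[0.9, 0.1], [0.0, 1.0]]
print(mse(extracted, original), normalized_correlation(extracted, original))
```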

4.1.3. GenGuard Under Surrogate Model Attacks: Experimental Setup and Results

- (1) Collect input–output pairs from the original model

Given that the target generative model is typically provided as a black box, we adopt a query-based approach to collect its input–output pairs as training data for the surrogate model. Specifically, we feed randomly generated latent noise vectors into the original model and record the resulting generated images. To enhance the diversity and coverage of the generated samples, multiple random sampling methods in the latent space are employed, including Gaussian distribution, uniform distribution, and mixture distribution, thereby thoroughly exploring different regions of the latent input space. This process not only helps gather high-quality training samples but also preliminarily characterizes the output boundary features of the target generative model, providing support for subsequent surrogate model training.
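The query procedure can be sketched as follows. The parameters of the three latent distributions (unit Gaussian, uniform on $[-1, 1]$, and a two-component Gaussian mixture) are assumptions for illustration, as the text does not specify them; `toy_model` is a stand-in for the black-box generator, and all function names are hypothetical.

```python
import random
from typing import Callable, List, Tuple

LATENT_DIM = 100  # dimensionality of the noise vector used in this paper
Vector = List[float]


def sample_gaussian(rng: random.Random) -> Vector:
    return [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]


def sample_uniform(rng: random.Random) -> Vector:
    return [rng.uniform(-1.0, 1.0) for _ in range(LATENT_DIM)]


def sample_mixture(rng: random.Random) -> Vector:
    # Two-component Gaussian mixture; centres and spread are placeholders.
    centre = rng.choice([-1.0, 1.0])
    return [rng.gauss(centre, 0.5) for _ in range(LATENT_DIM)]


SAMPLERS = [sample_gaussian, sample_uniform, sample_mixture]


def collect_pairs(
    query_model: Callable[[Vector], Vector],
    n_queries: int,
    seed: int = 0,
) -> List[Tuple[Vector, Vector]]:
    """Query the black-box model and record (noise, output) pairs."""
    rng = random.Random(seed)
    pairs = []
    for i in range(n_queries):
        z = SAMPLERS[i % len(SAMPLERS)](rng)  # cycle through the samplers
        pairs.append((z, query_model(z)))
    return pairs


def toy_model(z: Vector) -> Vector:
    """Stand-in for the black-box generator: returns a one-pixel 'image'."""
    return [sum(z) / len(z)]


pairs = collect_pairs(toy_model, n_queries=6)
```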

- (2) Surrogate Model Architecture Design

The design of surrogate models should adhere to the principle of structural similarity, which means that the network architecture of the surrogate should closely resemble that of the original generative model to more effectively replicate its behavior. In this study, we adopt two representative architectures—DCGAN and WGAN—each embodying distinct generative mechanisms and training strategies, to comprehensively evaluate the transfer robustness of the watermark under surrogate model attacks. In terms of loss function design, the DCGAN-based surrogate model adopts the conventional adversarial loss, whereas the WGAN-based model is optimized using the Wasserstein distance.

Meanwhile, in order to comprehensively evaluate the robustness and resilience of the designed watermarking scheme against image-level attacks (such as rotation transformations, noise perturbations, etc.), this study introduces data augmentation strategies during the experiments. Specifically, we apply common image processing operations—such as random rotation, Gaussian noise addition, and contrast adjustment—to the input samples of the surrogate model, in order to simulate various interference factors that may occur in real-world applications.

- (3) Results of Surrogate Model Attacks

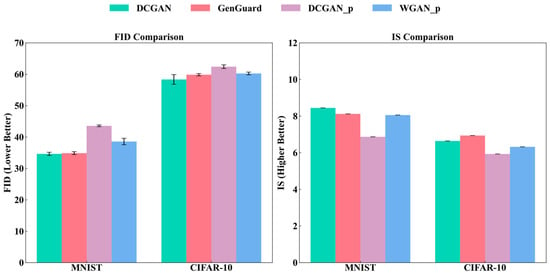

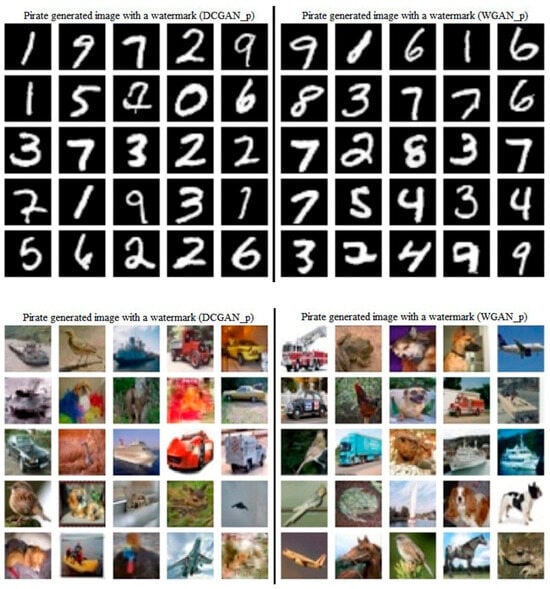

To facilitate comparison, we refer to the two surrogate models as the DCGAN-based surrogate and the WGAN-based surrogate in the following experiments. Figure 12 presents a comparative analysis of the generation performance of the baseline model, GenGuard, and the surrogate models, while Figure 13 shows images generated by the surrogate models. As the results show, the surrogate models reach almost the same generation quality as GenGuard when generating similar images, both quantitatively and qualitatively.

Figure 12.

Performance comparison of FID and IS among DCGAN, GenGuard, and the two surrogate models when generating images similar to MNIST and CIFAR-10.

Figure 13.

Images generated by the surrogate models (the first and second columns display images generated by the two surrogate models, respectively).

To further validate the protective effectiveness of GenGuard, the images generated by the surrogate models are fed into the WRN to test whether the desired watermark can be extracted. The extracted watermarks are evaluated using Normalized Correlation (NC) and Mean Squared Error (MSE). We randomly selected 160 images generated by the surrogate models for watermark extraction.

Table 7 presents the corresponding average MSE and NC metrics for these samples. The experimental results satisfy NC > 0.95 and MSE < 0.1 [,], which indicates that the embedded watermark can still be accurately identified even under surrogate model attack scenarios, thereby effectively safeguarding the intellectual property of the generative model.

Table 7.

Evaluation of watermark retrieval from the surrogate model.
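The verification step can be expressed as a simple decision rule over the averaged metrics, using the thresholds NC > 0.95 and MSE < 0.1 quoted above. The function name `verify_ownership` and the score values below are made up for illustration and are not experimental results.

```python
from statistics import mean
from typing import List, Tuple

NC_THRESHOLD = 0.95   # threshold quoted in the text
MSE_THRESHOLD = 0.1   # threshold quoted in the text


def verify_ownership(scores: List[Tuple[float, float]]) -> bool:
    """Decide ownership from per-image (mse, nc) pairs of a suspect model."""
    avg_mse = mean(m for m, _ in scores)
    avg_nc = mean(n for _, n in scores)
    return avg_nc > NC_THRESHOLD and avg_mse < MSE_THRESHOLD


# Illustrative values only, not measurements from the paper.
suspect_scores = [(0.02, 0.98), (0.04, 0.97), (0.03, 0.96)]
unrelated_scores = [(0.45, 0.10), (0.50, 0.05)]
print(verify_ownership(suspect_scores), verify_ownership(unrelated_scores))
```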

Figure 14 shows the extracted watermark images under different surrogate models. The experimental results demonstrate that, even under the combined disturbances of image perturbations and surrogate attacks, GenGuard can still accurately extract the watermark. The subjective visual quality is good, and the MSE and NC values remain close to their ideal values, validating the robustness and reliability of the approach.

Figure 14.

Illustration of watermark retrieval in the surrogate model.

4.2. Comparative Experiments Between GenGuard and Classic Protection Methods

In this section, we design a series of comparative experiments to evaluate the performance of GenGuard against classic protection methods. Since different protection schemes are often developed for distinct tasks, achieving a fully uniform comparison is inherently challenging []. Nevertheless, we attempt to assess the properties of GenGuard from multiple perspectives.

Specifically, we first compare GenGuard with the methods in [,] to evaluate the impact of watermark embedding on image quality and generative speed. Furthermore, we conduct a comprehensive robustness comparison against surrogate model attacks using the approaches in [,,]. Finally, we compare GenGuard with the techniques reported in [,] to benchmark robustness under pruning attacks.

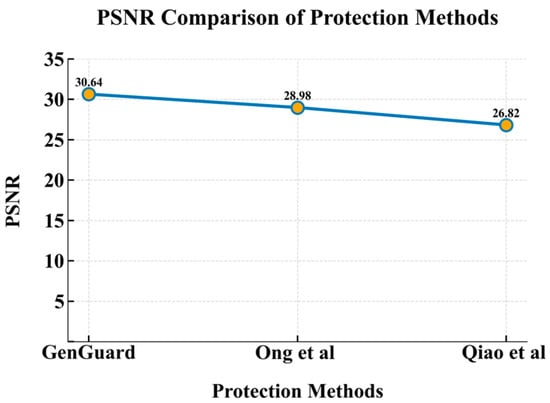

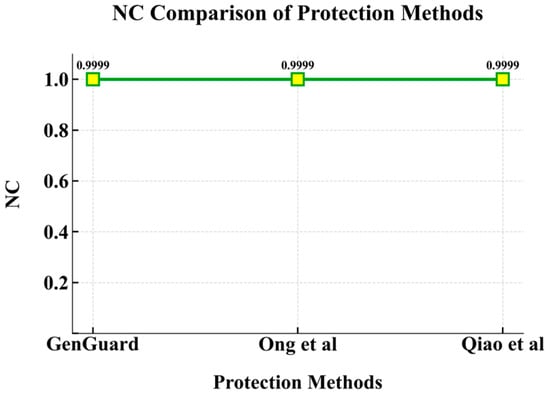

- 1. Comparative Analysis of Quality and Speed between GenGuard and Classic Protection Methods

In this section, we employ the Peak Signal-to-Noise Ratio (PSNR) to evaluate the visual similarity between the generated images and the watermarked images, where a higher PSNR indicates that the embedding process introduces less distortion. Normalized Correlation (NC) measures the similarity between the extracted watermark and the original watermark, with a higher NC indicating better watermark reconstruction. Finally, the generative speed of GenGuard is compared with that of other classic watermark embedding models.
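PSNR follows the standard definition $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. The sketch below assumes 8-bit pixel values (peak 255), which the text does not state; the function name and the 2 × 2 example images are illustrative only.

```python
import math
from typing import List

Image = List[List[float]]


def psnr(reference: Image, distorted: Image, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal size."""
    ref = [p for row in reference for p in row]
    dis = [p for row in distorted for p in row]
    mse = sum((x - y) ** 2 for x, y in zip(ref, dis)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


generated = [[100.0, 150.0], [200.0, 250.0]]
watermarked = [[101.0, 149.0], [201.0, 249.0]]  # every pixel off by one level
print(round(psnr(generated, watermarked), 2))  # 48.13
```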

Figure 15 presents the comparison of the average PSNR over 1000 watermarked images generated by GenGuard and by the methods in [,]. As shown, GenGuard achieves the highest PSNR, indicating that the embedded watermark causes minimal distortion to the generated image and little compromise of its visual quality.

Figure 15.

PSNR comparison between GenGuard and classic protection methods. Results are obtained from reproduced implementation of methods in Ong et al. [] and Qiao et al. [].

Figure 16 shows the average NC values for watermark extraction from 1000 watermarked images generated by GenGuard and the methods in [,]. As illustrated, GenGuard achieves comparable watermark extraction performance to other methods, demonstrating that the embedded watermarks can be successfully extracted with high fidelity in GenGuard.

Figure 16.

NC comparison between GenGuard and classic protection methods. Results are obtained from reproduced implementation of methods in Ong et al. [] and Qiao et al. [].

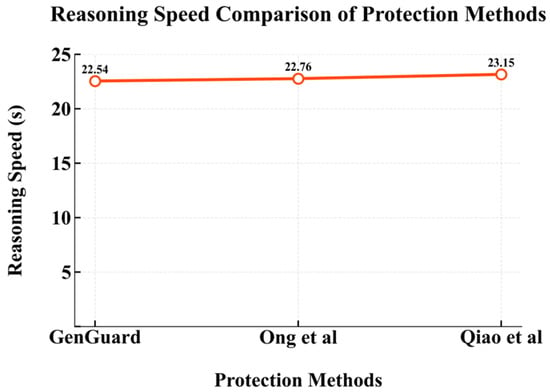

Because GenGuard adds a watermark embedding module to the generator, it inevitably incurs additional computational overhead during generation. We evaluated the generative speed of GenGuard in comparison with the approaches in [,]. To ensure a fair comparison, all generative experiments were conducted on a single RTX 3090 GPU with a batch size of 32. Figure 17 presents the time required to generate 10,000 watermarked images, showing that GenGuard achieves faster generation than the other methods.

Figure 17.

Generative speed comparison between GenGuard and classic protection methods. Results are obtained from reproduced implementation of methods in Ong et al. [] and Qiao et al. [].
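The speed measurement described above can be sketched as a simple wall-clock harness. This is only an illustration under stated assumptions: `generate_batch` is a hypothetical callable standing in for a watermarked generator, and `dummy_generate_batch` is a placeholder, not any of the compared models.

```python
import time


def time_generation(generate_batch, total_images, batch_size=32):
    """Return (elapsed_seconds, images_produced) for generating total_images.

    generate_batch(n) is a hypothetical callable that produces n images.
    """
    if total_images <= 0 or batch_size <= 0:
        raise ValueError("total_images and batch_size must be positive")
    produced = 0
    start = time.perf_counter()
    while produced < total_images:
        n = min(batch_size, total_images - produced)  # last batch may be smaller
        generate_batch(n)
        produced += n
    # Note: on a GPU, kernels run asynchronously, so a real benchmark would
    # synchronize the device (e.g. torch.cuda.synchronize() in PyTorch)
    # before reading the clock.
    elapsed = time.perf_counter() - start
    return elapsed, produced


def dummy_generate_batch(n):
    """Placeholder generator: returns n tiny all-zero 'images'."""
    return [[0.0] * 4 for _ in range(n)]


elapsed, produced = time_generation(dummy_generate_batch, total_images=10_000)
print(f"generated {produced} images in {elapsed:.4f} s")
```

Using the same batch size, device, and image count for every method, as in the setup above, keeps the comparison like for like.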


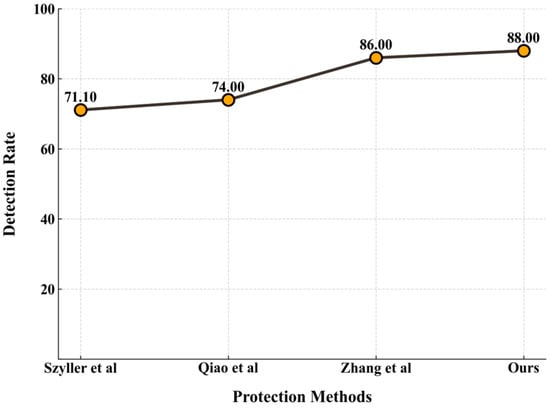

- Comparative Analysis between GenGuard and Classic Protection Methods under Surrogate Model Attacks

This section compares the performance of GenGuard with that of classic methods under surrogate model attacks. The evaluation metric is the watermark detection rate, where a higher detection rate indicates greater robustness against such attacks. As shown in Figure 18, GenGuard achieves a watermark detection rate of 88% under surrogate model attacks, demonstrating superior robustness compared to the other methods and highlighting its advantage in defending against surrogate model attacks.

Figure 18.

Performance comparison under surrogate model attacks between GenGuard and other classic protection methods proposed in references [,,]. The detection rate for the method in [] is cited from its original paper, whereas the data for the methods in [,] are obtained from reproduced implementations.
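One way to read the watermark detection rate is as the fraction of surrogate-generated images whose extracted watermark is judged to match the original. The sketch below assumes a match means the NC score meets a threshold; both this criterion and the threshold value of 0.9 are illustrative assumptions, not the settings used in the experiments.

```python
def detection_rate(nc_scores, threshold=0.9):
    """Fraction of images whose extracted watermark has NC >= threshold.

    nc_scores: one NC value per image produced by the surrogate model.
    threshold: hypothetical decision threshold (an assumption of this sketch).
    """
    if not nc_scores:
        raise ValueError("nc_scores must not be empty")
    detected = sum(1 for score in nc_scores if score >= threshold)
    return detected / len(nc_scores)


# Toy example with four surrogate outputs: three of them pass the threshold.
scores = [0.95, 0.50, 0.99, 0.91]
print(f"detection rate: {detection_rate(scores):.0%}")
```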


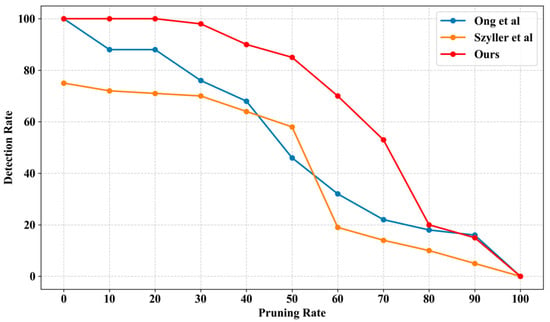

- Comparative Analysis between GenGuard and Classic Protection Methods under Pruning Attacks

Figure 19 compares the robustness of GenGuard under pruning attacks with that of the classic protection methods proposed in [,]. As the pruning rate increases, the watermark detection performance of all methods gradually decreases. GenGuard nevertheless maintains stable and superior detection performance throughout the pruning process, clearly outperforming the methods in [,]. These results demonstrate that GenGuard exhibits higher robustness against pruning attacks on GAN models.

Figure 19.

Robustness comparison under pruning attacks between GenGuard and other classic protection methods. The detection rate for the method in Szyller et al. [] is cited from its original paper, whereas the data for the method in Ong et al. [] are obtained from a reproduced implementation.
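For readers unfamiliar with pruning attacks, the sketch below shows global magnitude pruning, a common variant in which the smallest-magnitude fraction of weights is set to zero. The specific pruning scheme used in the experiments is not restated in this section, so this choice and the function name are assumptions made for illustration.

```python
def magnitude_prune(weights, pruning_rate):
    """Return a copy of weights with the smallest-magnitude fraction zeroed.

    weights: flat list of model parameters.
    pruning_rate: fraction in [0, 1] of parameters to set to zero.
    """
    if not 0.0 <= pruning_rate <= 1.0:
        raise ValueError("pruning_rate must be between 0 and 1")
    k = int(len(weights) * pruning_rate)  # number of weights to remove
    # Indices ordered from smallest to largest absolute value.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


# Toy example: pruning half of four weights removes the two smallest.
print(magnitude_prune([0.1, -0.5, 0.3, -0.05], 0.5))
```

Sweeping `pruning_rate` from low to high and re-measuring the detection rate at each step yields a robustness curve of the kind compared in Figure 19.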

5. Discussion

Experimental results demonstrate that the proposed method can accurately and consistently retrieve the embedded watermark information, even when facing combined image perturbations and surrogate attacks, thereby enabling reliable ownership verification. The robustness of the framework primarily benefits from the introduction of feature extraction and watermark embedding modules at the generator’s output, as well as the incorporation of an attack simulation strategy within the WRN, which enhances the model’s resistance to attacks during training. Further comparative experiments show that the proposed method achieves superior performance in defending against surrogate and pruning attacks while maintaining high image quality. This improvement is attributed to the carefully designed joint loss function and optimization strategy, which effectively strengthen the robustness of the embedded watermark without compromising the fidelity of the generated images.

Compared with traditional approaches such as those in [,,], the proposed framework eliminates the need for specific trigger datasets in IP verification. Instead, ownership can be validated directly by extracting the embedded watermark from the outputs of a normally operating surrogate model, thereby significantly improving the flexibility and practicality of the verification process. However, it is worth noting that the current framework has been validated primarily on typical architectures such as DCGAN and WGAN, and its applicability to more complex or large-scale generative models (e.g., StyleGAN, Diffusion Models) remains to be further explored. Future work will focus on extending the proposed approach to high-resolution and multimodal generation tasks, and investigating watermark embedding strategies in the latent feature space to enhance cross-domain robustness and generalization.

From a broader perspective, this research not only provides a technically reliable solution for verifying the ownership of generative models, but also contributes to reducing legal disputes caused by model misuse or IP infringement, thereby promoting fairness, accountability, and sustainable development in the artificial intelligence industry.

6. Conclusions

- (1) This paper proposes a symmetric watermark embedding and retrieval framework to address the challenge of intellectual property protection for generative adversarial networks (GANs) under surrogate model attacks. The framework integrates a feature extraction module and a watermark embedding module at the output stage of the generator, enabling the generated images to implicitly carry a unique watermark signature. When the model is subjected to surrogate model attacks, the designed watermark retrieval network can accurately extract the original watermark from the images produced by the surrogate model, thereby achieving effective ownership verification and copyright attribution.

- (2) Experimental results demonstrate that the proposed framework remains robust and accurate in recovering embedded watermarks even under surrogate attack scenarios. Further comparative analyses verify that the method outperforms traditional approaches in terms of image quality preservation, attack robustness, and inference efficiency.

- (3) Overall, this study provides a secure and verifiable solution for protecting the intellectual property of generative models, while offering new insights and societal value for building accountability and trust mechanisms in artificial intelligence systems.

Author Contributions

Conceptualization and methodology, S.C. and S.-C.Y.; software, S.C.; validation and formal analysis, S.-C.Y.; investigation, S.C.; resources, S.-C.Y.; data curation, writing—original draft preparation, and visualization, S.C.; supervision, project administration, and writing—review and editing, S.-C.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the research project of Jilin Provincial Department of Education under grant no. JJKH20240153KJ.

Data Availability Statement

This study used the MNIST and CIFAR-10 datasets, which are publicly available datasets. They can be found at http://yann.lecun.com/exdb/mnist/ (accessed on 11 October 2025) and https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 11 October 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Adi, Y.; Baum, C.; Cisse, M.; Pinkas, B.; Keshet, J. Turning your weakness into a strength: Watermarking deep neural networks by backdooring. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 1615–1631.

- Zhang, J.; Gu, Z.; Jang, J.; Wu, H.; Stoecklin, M.P.; Huang, H.; Molloy, I. Protecting intellectual property of deep neural networks with watermarking. In Proceedings of the 2018 on Asia Conference on Computer and Communications Security (ASIACCS), Incheon, Republic of Korea, 4–8 June 2018; pp. 159–172.

- Zhong, Q.; Zhang, L.Y.; Zhang, J.; Gao, L.; Xiang, Y. Protecting IP of deep neural networks with watermarking: A new label helps. In Pacific-Asia Conference on Knowledge Discovery and Data Mining; Springer: Cham, Switzerland, 2020; pp. 462–474.

- He, X.; Lyu, L.; Xu, Q.; Sun, L. Model extraction and adversarial transferability, your BERT is vulnerable! arXiv 2021, arXiv:2103.10013.

- Jia, H.; Choquette-Choo, C.A.; Chandrasekaran, V.; Papernot, N. Entangled watermarks as a defense against model extraction. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Virtual, 11–13 August 2021.

- Uchida, Y.; Nagai, Y.; Sakazawa, S.; Satoh, S. Embedding watermarks into deep neural networks. In Proceedings of the 2017 ACM on International Conference on Multimedia Retrieval, Bucharest, Romania, 6–9 June 2017.

- Guo, J.; Potkonjak, M. Evolutionary trigger set generation for DNN black-box watermarking. arXiv 2019, arXiv:1906.04411.

- Cong, T.; He, N.; Zhang, Y. SSLGuard: A watermarking scheme for self-supervised learning pre-trained encoders. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security (CCS), Los Angeles, CA, USA, 7–11 November 2022.

- Chen, J.; Wang, J.; Peng, T.; Sun, Y.; Cheng, P.; Ji, S.; Ma, X.; Li, B.; Song, D. Copy, right? A testing framework for copyright protection of deep learning models. In Proceedings of the 2022 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 22–26 May 2022.

- Maini, P.; Yaghini, M.; Papernot, N. Dataset inference: Ownership resolution in machine learning. arXiv 2021, arXiv:2104.10706.

- Yu, N.; Skripniuk, V.; Chen, D.; Davis, L.; Fritz, M. Responsible disclosure of generative models using scalable fingerprinting. arXiv 2020, arXiv:2012.08726.

- Fei, J.; Xia, Z.; Tondi, B.; Barni, M. Supervised GAN watermarking for intellectual property protection. In Proceedings of the 2022 IEEE International Workshop on Information Forensics and Security (WIFS), Shanghai, China, 12–16 December 2022.

- Ong, D.S.; Chan, C.S.; Ng, K.W.; Fan, L.; Yang, Q. Protecting intellectual property of generative adversarial networks from ambiguity attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

- Zhang, J.; Chen, D.; Liao, J.; Fang, H.; Zhang, W.; Zhou, W.; Cui, H.; Yu, N. Model watermarking for image processing networks. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12805–12812.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2009; p. 7.

- Zhang, J.; Chen, D.; Liao, J.; Zhang, W.; Feng, H.; Hua, G.; Yu, N. Deep model intellectual property protection via deep watermarking. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4005–4020.

- Wan, W.; Wang, J.; Zhang, Y.; Li, J.; Yu, H.; Sun, J. A comprehensive survey on robust image watermarking. Neurocomputing 2022, 488, 226–247.

- Qiao, T.; Ma, Y.; Zheng, N.; Wu, H.; Chen, Y.; Xu, M.; Luo, X. A novel model watermarking for protecting generative adversarial network. Comput. Secur. 2023, 127, 103102.

- Szyller, S.; Atli, B.G.; Marchal, S.; Asokan, N. DAWN: Dynamic adversarial watermarking of neural networks. In Proceedings of the 29th ACM International Conference on Multimedia (MM), New York, NY, USA, 20–24 October 2021; pp. 4417–4425.

- Wu, H.; Liu, G.; Yao, Y.; Zhang, X. Watermarking neural networks with watermarked images. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2591–2601.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).