Abstract

The aim of the present work is a comparative study of different persistence kernels applied to various classification problems. After some necessary preliminaries on homology and persistence diagrams, we introduce five different kernels and compare their classification performance on various datasets. We also provide the Python code for the reproducibility of the results and, thanks to the symmetry of the kernels, we reduce the computational cost of the Gram matrices.

1. Introduction

In the last two decades, with the increasing need to analyze large amounts of data, which are usually complex and high-dimensional, it has proven meaningful and helpful to develop further methodologies for extracting new information from data. This has given birth to Topological Data Analysis (TDA), whose aim is to extract intrinsic, topological features related to the so-called “shape of data”. Thanks to its main tool, Persistent Homology (PH), it can provide new qualitative information that is hard to extract by other means. These features, which can be collected in the so-called Persistence Diagram (PD), have proven useful in many different applications, mainly in applied science, improving the performance of models or classifiers, as in our context. Thanks to the strong basis of algebraic topology beneath it, TDA is very versatile and can be applied to data with, a priori, any kind of structure, as we will explain in the following. This is why it has a wide range of fields of application, such as chemistry [1], medicine [2], neuroscience [3,4], finance [5] and computer graphics [6], to name only a few.

An interesting and relevant property of this tool is its stability with respect to noise [7], which is a meaningful aspect for applications to real-world data. On the other hand, since the space of PDs is only a metric space, in order to use methods that require data to live in a Hilbert space, such as the SVM and PCA, it is necessary to introduce the notion of a kernel or, more precisely, of a Persistence Kernel (PK), which maps PDs to a space with more structure, where techniques that need a proper inner product can be applied. A relevant aspect to highlight is that PKs are symmetric, as usual, and, taking advantage of this symmetry, we reduce the computational cost of the corresponding Gram matrices in our code, since only the entries on and below the diagonal need to be computed.
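To make the saving concrete, here is a minimal Python sketch (the helper `gram_matrix` and the toy kernel are illustrative, not taken from the authors' repository) that evaluates a symmetric kernel only on and below the diagonal and mirrors the result, so that n(n+1)/2 instead of n² kernel evaluations are needed.

```python
import numpy as np

def gram_matrix(items, kernel):
    """Symmetric Gram matrix G[i, j] = kernel(items[i], items[j]).

    Only the entries on and below the diagonal are evaluated; the upper
    triangle is filled by symmetry.
    """
    n = len(items)
    G = np.empty((n, n))
    for i in range(n):
        for j in range(i + 1):           # j <= i: diagonal and lower triangle
            G[i, j] = kernel(items[i], items[j])
            G[j, i] = G[i, j]            # mirror into the upper triangle
    return G

# Toy kernel on scalars that records how many times it is called.
calls = []

def toy_kernel(a, b):
    calls.append((a, b))
    return np.exp(-(a - b) ** 2)

G = gram_matrix([0.0, 1.0, 2.0, 3.0], toy_kernel)
print(len(calls))  # 10 = 4 * 5 / 2 evaluations instead of 16
```

The same trick does not apply to the rectangular test-versus-training matrix, whose entries must all be computed.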

In the literature, researchers have tested PKs for classification on some datasets but, to our knowledge, a transversal analysis is lacking. For instance, the Persistence Scale-Space Kernel (PSSK) was introduced and tested in [8] on shape classification (SHREC14) and texture classification (OUTEX TC 00000), while in [9] the authors considered image classification (MNIST, HAM10000), classification of sets of points (PROTEIN) and shape classification (SHREC14, MPEG7). Ref. [10] presented the Persistence Weighted Gaussian Kernel (PWGK) and reported the performance of kernels in classifying protein and synthesized data. In [11], the authors introduced their own kernel, the Sliced Wasserstein Kernel (SWK), and compared it with other kernels for the classification of 3D shapes, orbit recognition (linked twisted map) and texture classification (OUTEX00000). In [12], the Persistence Fisher Kernel (PFK) was tested for orbit recognition (linked twisted map) and shape classification (MPEG7). The Persistence Image (PI) in [13] was used in orbit recognition (linked twisted map) and breast tumor classification [2]. Finally, [14] compared PWGK, SWK and PI in the context of graph classification (PROTEIN, PTC, MUTAG, etc.), and [15] tested PSSK, PWGK, SWK and PFK on classification related to Alzheimer's disease, orbit recognition (linked twisted map) and classification of 3D shapes.

The goals of the present paper are as follows: first, we investigate how to choose the values of the parameters of the different kernels; then, we collect tools for computing PDs from different kinds of data; finally, we compare the performance of the main kernels in the classification context. As far as we know, such a study is not yet present in the literature.

The paper is organized as follows: in Section 2, we recall the basic notions of persistent homology, the classification problem and how to solve it with the Support Vector Machine (SVM), and we list the main PKs available in the literature. Section 3 collects all the numerical tests that we have run, and in Section 4 we outline our conclusions.

2. Materials and Methods

2.1. Persistent Homology

This brief introduction does not claim to be exhaustive; therefore, we invite interested readers to refer, for instance, to [16,17,18,19,20,21]. The first ingredient needed is the concept of filtration. The most common choice in applications is to consider a function $f: X \to \mathbb{R}$, where $X$ is a topological space that varies with the context, and then to consider the filtration given by the sub-level sets $X_a = f^{-1}((-\infty, a])$, $a \in \mathbb{R}$. For example, $f$ can be chosen as the distance function in the case of point cloud data, the gray-scale value at each pixel for images, the heat kernel signature for datasets such as SHREC14 [22], the weight function of edges for graphs, and so on. We now recall the main theoretical results for point cloud data, but all of them can be easily applied in other contexts.
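As a small illustration of a sub-level set filtration, the sketch below assumes the simplest setting: a function sampled on a path graph (a 1D signal), where vertex i enters at height f(i) and the edge (i, i+1) at max(f(i), f(i+1)). It tracks only 0-dimensional features with a union–find structure and the elder rule; the function name is hypothetical, and libraries such as gudhi handle general complexes and dimensions.

```python
import math

def sublevel_persistence_0d(values):
    """0-dimensional persistence pairs of the sub-level set filtration of a
    function sampled on a path graph (vertex i is adjacent to vertex i + 1).

    Vertex i enters at height values[i]; edge (i, i + 1) enters at
    max(values[i], values[i + 1]). When an edge merges two components, the
    younger one (larger birth value) dies at that height (elder rule).
    """
    n = len(values)
    parent = list(range(n))
    birth = list(values)  # birth[r] is meaningful when r is a component root

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    pairs = []
    # Process edges in the order in which they enter the filtration.
    for i in sorted(range(n - 1), key=lambda k: max(values[k], values[k + 1])):
        height = max(values[i], values[i + 1])
        old, young = find(i), find(i + 1)
        if old == young:
            continue
        if birth[old] > birth[young]:
            old, young = young, old
        if birth[young] < height:          # skip zero-persistence pairs
            pairs.append((birth[young], height))
        parent[young] = old
    if n:
        pairs.append((birth[find(0)], math.inf))  # the component that never dies
    return sorted(pairs)

print(sublevel_persistence_0d([0.0, 2.0, 1.0, 3.0, 0.5]))
# [(0.0, inf), (0.5, 3.0), (1.0, 2.0)]
```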

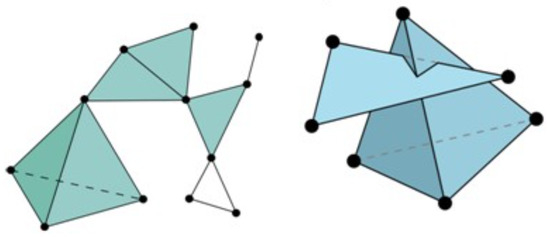

We assume a finite set of points $\mathbb{X} = \{x_1, \dots, x_n\}$ that we suppose to lie in an open set of a manifold $\mathcal{M}$. The aim is to capture the relevant intrinsic properties of the manifold itself, and this is achieved by applying Persistent Homology (PH) to such discrete information. To understand how PH is introduced, we first have to mention simplicial homology, which extends homology theory to structures called simplicial complexes; roughly speaking, these are collections of simplices glued together in a valid manner, as shown in Figure 1.

Figure 1.

An example of a valid simplicial complex (left) and an invalid one (right).

Definition 1.

A simplicial complex K consists of a set of simplices of different dimensions and has to meet the following conditions:

- Every face of a simplex σ in K must belong to K;

- The non-empty intersection of any two simplices $\sigma_1, \sigma_2 \in K$ is a face of both $\sigma_1$ and $\sigma_2$.

The dimension of K is the maximum dimension of simplices that belong to K.

In applications, data analysts usually compute the Vietoris–Rips complex.

Definition 2.

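Let $(\mathcal{M}, d)$ denote a metric space from which the samples $\mathbb{X} \subset \mathcal{M}$ are taken. The Vietoris–Rips complex related to $\mathbb{X}$, associated with the parameter value $\epsilon$ and denoted by $VR(\mathbb{X}, \epsilon)$, is the simplicial complex whose vertex set is $\mathbb{X}$, and where $\{x_{i_0}, \dots, x_{i_k}\}$ spans a k-simplex if and only if $d(x_{i_j}, x_{i_l}) \le \epsilon$ for all $0 \le j, l \le k$.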
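A direct, brute-force transcription of Definition 2 can be sketched as follows, assuming Euclidean points and truncating the complex at dimension 2. It checks every candidate vertex subset, so its cost grows combinatorially; it is meant only to make the definition concrete, while ripser and gudhi use far more efficient constructions.

```python
import math
from itertools import combinations

def vietoris_rips(points, eps, max_dim=2):
    """Simplices of VR(points, eps) up to dimension max_dim.

    A set of k + 1 points spans a k-simplex iff all its pairwise Euclidean
    distances are <= eps. Simplices are tuples of point indices in
    increasing order.
    """
    n = len(points)
    close = {
        (i, j): math.dist(points[i], points[j]) <= eps
        for i, j in combinations(range(n), 2)
    }
    simplices = [(i,) for i in range(n)]          # every point is a 0-simplex
    for k in range(1, max_dim + 1):
        for s in combinations(range(n), k + 1):
            if all(close[pair] for pair in combinations(s, 2)):
                simplices.append(s)
    return simplices

square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(vietoris_rips(square, 1.0))
# [(0,), (1,), (2,), (3,), (0, 1), (0, 3), (1, 2), (2, 3)]
```

At ε = 1 the four sides of the unit square enter but the diagonals do not, so the complex is a hollow square; at ε = 1.5 all edges and all four triangles are present.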

If $K = VR(\mathbb{X}, \epsilon)$, then we can divide all simplices of K into groups based on their dimension k, and we can enumerate them as $\sigma_1^k, \dots, \sigma_{n_k}^k$. If $G = \mathbb{Z}$ is the well-known Abelian group of integers, we may build linear combinations of simplices with coefficients in G, and so we introduce the following:

Definition 3.

An object of the form $\sum_{i=1}^{n_k} c_i \sigma_i^k$ with $c_i \in \mathbb{Z}$ is an integer-valued k-dimensional chain.

Linearity allows us to extend the previous definition to any subset of simplices of K of dimension k.

Definition 4.

The group $C_k(K)$ of all such chains is called the group of k-dimensional simplicial integer-valued chains of the simplicial complex K.

It is then possible to associate with each simplicial complex K the corresponding family of Abelian groups $\{C_k(K)\}_{k \ge 0}$.

Definition 5.

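The boundary of an oriented k-simplex $\sigma = [v_0, \dots, v_k]$ is the sum of all its $(k-1)$-dimensional faces taken with a chosen orientation. More precisely,
$$\partial_k \sigma = \sum_{i=0}^{k} (-1)^i [v_0, \dots, \hat{v}_i, \dots, v_k],$$
where $\hat{v}_i$ means that the vertex $v_i$ is omitted.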

In general, we can extend the boundary operator by linearity to any element of $C_k(K)$, obtaining a map $\partial_k: C_k(K) \to C_{k-1}(K)$.
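Because each boundary operator is linear, it can be stored as a matrix with one column per k-simplex. The following sketch, a hypothetical helper rather than the paper's code, builds these matrices from sorted vertex tuples and derives the Betti numbers $\beta_k = \dim\ker\partial_k - \operatorname{rank}\partial_{k+1}$, i.e., the ranks of the homology groups $\ker\partial_k / \operatorname{im}\partial_{k+1}$. Ranks are computed over the rationals with NumPy, so torsion in integer homology is ignored.

```python
import numpy as np

def boundary_matrix(k_simplices, km1_simplices):
    """Matrix of d_k : C_k -> C_{k-1}; simplices are sorted vertex tuples.

    Column j holds the coefficients of d_k(sigma_j) = sum_i (-1)^i * face_i,
    where face_i is sigma_j with its i-th vertex removed.
    """
    row = {s: r for r, s in enumerate(km1_simplices)}
    M = np.zeros((len(km1_simplices), len(k_simplices)))
    for j, s in enumerate(k_simplices):
        for i in range(len(s)):
            M[row[s[:i] + s[i + 1:]], j] = (-1) ** i
    return M

def betti_numbers(simplices, max_dim):
    """Betti numbers b_0..b_max_dim over the rationals."""
    by_dim = {k: sorted(s for s in simplices if len(s) == k + 1)
              for k in range(max_dim + 2)}
    rank = {}
    for k in range(1, max_dim + 2):
        if by_dim[k] and by_dim[k - 1]:
            rank[k] = np.linalg.matrix_rank(boundary_matrix(by_dim[k], by_dim[k - 1]))
        else:
            rank[k] = 0
    betti = []
    for k in range(max_dim + 1):
        dim_cycles = len(by_dim[k]) - (rank[k] if k > 0 else 0)  # d_0 = 0
        betti.append(int(dim_cycles - rank[k + 1]))             # minus dim of boundaries
    return betti

hollow = [(0,), (1,), (2,), (0, 1), (1, 2), (0, 2)]
print(betti_numbers(hollow, 1))                 # [1, 1]: one component, one cycle
print(betti_numbers(hollow + [(0, 1, 2)], 1))   # [1, 0]: filling the triangle kills the cycle
```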

For any value of k, $\partial_k$ is a linear map. Therefore, we can consider its kernel, namely the group of k-cycles $Z_k = \ker \partial_k$, and the image of $\partial_{k+1}$, namely the group of k-boundaries $B_k = \operatorname{im} \partial_{k+1}$. Then, $H_k = Z_k / B_k$ is the k-th homology group, and it represents the k-dimensional holes that can be recovered from the simplicial structure. We briefly recall that zero-dimensional holes correspond to connected components, one-dimensional holes to cycles, and two-dimensional holes to cavities/voids. Since homology groups are algebraic invariants, they collect qualitative information about the topology of the data. The most crucial aspect is finding the best value of $\epsilon$ to obtain a simplicial complex K that faithfully reproduces the topological structure of the original manifold. The answer is not straightforward and the choice is unstable; therefore, PH analyzes not a single simplicial complex but a nested sequence of them and, following the evolution of this structure, records the features that gradually emerge. From a theoretical point of view, letting $\epsilon_1 < \epsilon_2 < \dots < \epsilon_m$ be an increasing sequence of real numbers, we obtain the filtration
$$K_1 \subseteq K_2 \subseteq \dots \subseteq K_m,$$

with $K_i = VR(\mathbb{X}, \epsilon_i)$ for every i. This leads to the following definition.

Definition 6.

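The p-persistent k-th homology group of $K_i$ is the group defined as
$$H_k^{i,p} = Z_k^i / \left(B_k^{i+p} \cap Z_k^i\right),$$
where $Z_k^i$ denotes the k-cycles of $K_i$ and $B_k^{i+p}$ the k-boundaries of $K_{i+p}$.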

This group contains all stable homology classes in the interval from i to $i+p$: they are born at or before index i and are still alive after p steps. The persistent homology classes that stay alive for large p correspond to stable topological features of $\mathcal{M}$ (see [23]). Along the filtration, topological features appear and disappear; thus, each of them can be represented by a pair of indices. If a feature is born in $K_i$ and dies in $K_j$, it can be described by the pair $(i, j)$ with $i < j$. We underline that j can be equal to $+\infty$, since some features can stay alive up to the end of the filtration. Hence, all such topological invariants live in the extended positive plane, here denoted by $\overline{\mathbb{R}}_+^2$. Another aspect to highlight is that some features can appear more than once; accordingly, such collections of points are called multisets. All of these observations are grouped into the following:

Definition 7.

A Persistence Diagram (PD) $D_r$ related to the filtration $\{K_i\}_i$, with $r \in \mathbb{N}$, is a multiset of points defined as
$$D_r = \mathcal{P}_r \cup \Delta,$$

where $\mathcal{P}_r$ denotes the set of r-dimensional birth–death pairs that emerged along the filtration, each considered with its multiplicity, while the points of the diagonal $\Delta = \{(x, x) : x \in \mathbb{R}\}$ are taken with infinite multiplicity. One may consider all $D_r$ together, for every r, obtaining the total PD, denoted here by $D$, which we will usually consider in the following sections.

Each point $(x, y) \in D$ is known as a generator of the persistent homology, and it corresponds to a topological feature that is born at $x$ and dies at $y$. The difference $y - x$ is called the persistence of the generator; it represents its lifespan and measures the robustness of the topological property.
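Persistence and the selection of the most persistent generators (used later, for instance, to keep only the 10 most persistent features of each orbit diagram) take only a few lines. In this sketch, diagrams are lists of (birth, death) tuples and the helper names are illustrative; points at infinity are dropped by default, which is an assumption of the sketch rather than a rule stated in the paper.

```python
import math

def persistence(pair):
    """Lifespan y - x of a birth-death pair (x, y); infinite if y is +inf."""
    birth, death = pair
    return death - birth

def most_persistent(diagram, k, drop_infinite=True):
    """The k pairs with the largest persistence, in decreasing order."""
    pts = [p for p in diagram if not (drop_infinite and math.isinf(p[1]))]
    return sorted(pts, key=persistence, reverse=True)[:k]

D = [(0.0, 1.0), (0.2, 0.3), (0.1, 2.0), (0.0, math.inf)]
print(most_persistent(D, 2))  # [(0.1, 2.0), (0.0, 1.0)]
```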

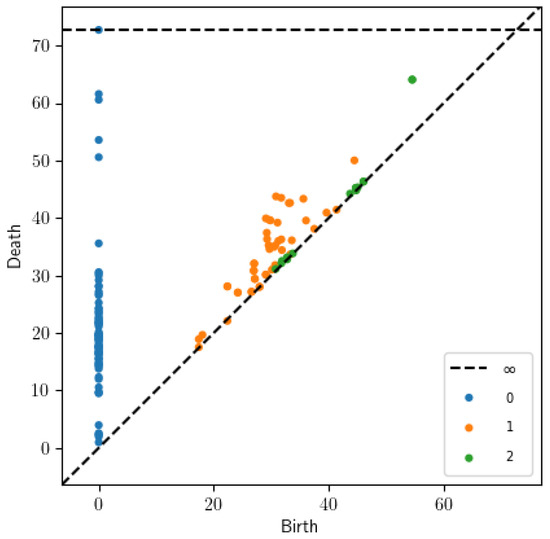

Figure 2 is an example of a total PD collecting features of dimension zero (in blue), one (in orange), and two (in green). Points close to the diagonal represent features with a short lifetime and are therefore usually attributed to noise, while features far from the diagonal are more relevant and meaningful; depending on the application, one can decide to consider both or only the most persistent ones. At the top of the figure, a dashed line indicates infinity and allows us to plot pairs of the form $(x, +\infty)$.

Figure 2.

Example of PD with features of zero, one and two dimensions.

In the previous definition, the diagonal $\Delta$ is added in order to find proper bijections between diagrams that, without it, could have different numbers of points. This makes it possible to compute proper distances between PDs.

Stability

A key property of PDs is their stability under perturbations of the data. First, we recall two well-known distances between sets:

Definition 8.

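Given two non-empty sets $X, Y \subset \mathbb{R}^2$, the Hausdorff distance is
$$d_H(X, Y) = \max\left\{\sup_{x \in X} \inf_{y \in Y} \|x - y\|_\infty,\ \sup_{y \in Y} \inf_{x \in X} \|x - y\|_\infty\right\},$$

and, if X and Y have equal cardinality, the bottleneck distance is defined as
$$d_B(X, Y) = \inf_{\gamma} \sup_{x \in X} \|x - \gamma(x)\|_\infty, \qquad (1)$$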

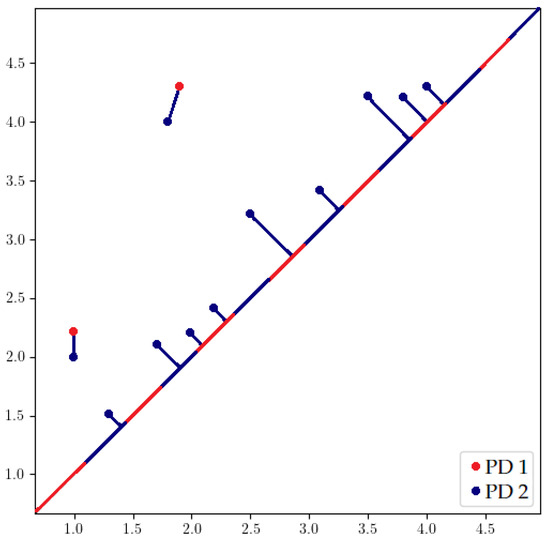

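where we consider all possible bijections of multisets $\gamma: X \to Y$. Here, we use $\|u - v\|_\infty = \max\{|u_1 - v_1|, |u_2 - v_2|\}$ for $u = (u_1, u_2)$, $v = (v_1, v_2) \in \mathbb{R}^2$.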

We now explain in more detail how to compute the bottleneck distance. We have to consider all possible ways to move the points of $D_1$ to those of $D_2$ in a bijective manner; then, we can compute the distance. Figure 3 shows two overlapping PDs, $D_1$ and $D_2$, consisting of $\Delta$ together with 2 red points and 11 blue points, respectively. First, in order to apply definition (1), we need two sets with the same cardinality. For this aim, it is necessary to add points of $\Delta$ to the red diagram: more precisely, the points of the diagonal obtained by orthogonally projecting the 9 blue points closest to it, so as to reach 11. The lines between the points of $D_1$ and $D_2$ represent the bijection that realizes the best matching in definition (1).

Figure 3.

Example of bottleneck distance between two PDs in red and blue.
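For very small diagrams, definition (1) can be evaluated literally. The sketch below augments each finite diagram with one diagonal slot per point of the other diagram, so that both sides have the same cardinality, and enumerates all bijections; matching a point (x, y) to the diagonal costs (y − x)/2, its ∞-norm distance to Δ. The exhaustive search is factorial in the number of points, so for real diagrams one would use, e.g., `persim.bottleneck` or `gudhi.bottleneck_distance` instead; the helper name here is illustrative.

```python
import itertools
import math

def _linf(p, q):
    """Infinity-norm distance between two points of the plane."""
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))

def bottleneck_bruteforce(D1, D2):
    """Exact bottleneck distance between two tiny finite diagrams."""
    n, m = len(D1), len(D2)

    def cost(i, j):
        # Rows: points of D1, then n diagonal slots follow; columns: points
        # of D2, then diagonal slots.
        if i < n and j < m:
            return _linf(D1[i], D2[j])
        if i < n:                              # D1 point matched to the diagonal
            return (D1[i][1] - D1[i][0]) / 2
        if j < m:                              # D2 point matched to the diagonal
            return (D2[j][1] - D2[j][0]) / 2
        return 0.0                             # diagonal matched to diagonal

    size = n + m
    best = math.inf
    for perm in itertools.permutations(range(size)):
        worst = max((cost(i, perm[i]) for i in range(size)), default=0.0)
        best = min(best, worst)
    return best

print(bottleneck_bruteforce([(0, 2)], [(0, 2.5)]))  # 0.5
print(bottleneck_bruteforce([(0, 2)], []))          # 1.0
```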

Proposition 1.

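Let $\mathbb{X}$ and $\mathbb{Y}$ be finite subsets of a metric space $(\mathcal{M}, d)$. Then, the Persistence Diagrams $D_{\mathbb{X}}$ and $D_{\mathbb{Y}}$ of their Vietoris–Rips filtrations satisfy
$$d_B(D_{\mathbb{X}}, D_{\mathbb{Y}}) \le 2\, d_H(\mathbb{X}, \mathbb{Y}).$$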

For further details, see, for example, [17].

2.2. Classification with SVM

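Let $\mathcal{X}$ be the set of input data and $\mathcal{Y} = \{-1, +1\}$ the set of labels. We have a training set composed of the pairs $(x_i, y_i)$, $i = 1, \dots, N$, with $x_i \in \mathcal{X}$ and $y_i \in \mathcal{Y}$. The binary supervised learning task consists of finding a function $f: \mathcal{X} \to \mathcal{Y}$, the model, that can satisfactorily predict the label of an unseen $x \in \mathcal{X}$.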

The goal is to define a hyperplane that separates, in the best possible way, points that belong to different classes, hence the name separating hyperplane. The best possible way means that it separates the two classes with the largest margin, that is, the largest distance between the hyperplane and the closest points of both classes.

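More formally, assume that we are in a space with a dot product; for instance, $\mathcal{X}$ can be a subset of $\mathbb{R}^d$ with $d \ge 1$. Since a generic hyperplane can be defined as
$$\{x \in \mathbb{R}^d : \langle w, x \rangle + b = 0\}, \qquad w \in \mathbb{R}^d \setminus \{0\},\ b \in \mathbb{R},$$
we can introduce the following definition.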

Definition 9.

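We call
$$\gamma_i = \frac{y_i\left(\langle w, x_i \rangle + b\right)}{\|w\|}$$
the geometrical margin of the point $(x_i, y_i)$. The minimum value
$$\gamma = \min_{i = 1, \dots, N} \gamma_i$$
may be called the geometrical margin of $(w, b)$.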

From a geometrical perspective, this margin measures the distance between the samples and the hyperplane itself. The SVM then looks for a hyperplane that maximizes this margin. For further details, see, for example, [24]. The precise formalization leads to an optimization problem that, thanks to Lagrange multipliers and the Karush–Kuhn–Tucker conditions, can be written in the following dual form:
$$\max_{\alpha \in \mathbb{R}^N} \sum_{i=1}^N \alpha_i - \frac{1}{2} \sum_{i,j=1}^N \alpha_i \alpha_j y_i y_j \langle x_i, x_j \rangle \quad \text{s.t.} \quad 0 \le \alpha_i \le C,\ \ \sum_{i=1}^N \alpha_i y_i = 0,$$

where $C > 0$ is the box constraint and the points $x_i$ with $\alpha_i > 0$ are called support vectors, from which the Support Vector Machine takes its name; $\langle \cdot, \cdot \rangle$ denotes the inner product in $\mathbb{R}^d$. This formulation handles the classification task satisfactorily if the data are linearly separable. In applications, this rarely happens, so it is necessary to introduce some nonlinearity and to move to a higher-dimensional space where, hopefully, the data become separable. This can be achieved with kernels. Starting from the original dataset $\mathcal{X}$, one introduces a feature map $\Phi: \mathcal{X} \to \mathcal{H}$ that moves the data to a Hilbert space of functions $\mathcal{H}$, the so-called feature space. The kernel is then defined as $k(x, x') = \langle \Phi(x), \Phi(x') \rangle_{\mathcal{H}}$ (kernel trick). Thus, the optimization problem becomes
$$\max_{\alpha \in \mathbb{R}^N} \sum_{i=1}^N \alpha_i - \frac{1}{2} \sum_{i,j=1}^N \alpha_i \alpha_j y_i y_j\, k(x_i, x_j) \quad \text{s.t.} \quad 0 \le \alpha_i \le C,\ \ \sum_{i=1}^N \alpha_i y_i = 0,$$

where the kernel k generalizes the inner product in $\mathbb{R}^d$. Since we are interested in classifying PDs, we need suitable kernels defined on PDs, the so-called Persistence Kernels (PKs).
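The kernel trick can be checked by hand on a textbook example unrelated to PDs: on $\mathbb{R}^2$, the polynomial kernel $k(x, y) = \langle x, y \rangle^2$ coincides with the inner product of the explicit features $\Phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$. The sketch verifies this numerically. In the dual problem only the Gram matrix values $k(x_i, x_j)$ are needed, which is what makes kernels without a convenient explicit Φ (such as the PKs below) usable; with scikit-learn, such a matrix can be passed to `SVC(kernel="precomputed")`.

```python
import numpy as np

def poly2_kernel(x, y):
    """Homogeneous polynomial kernel of degree 2: k(x, y) = <x, y>^2."""
    return float(np.dot(x, y)) ** 2

def poly2_feature_map(x):
    """Explicit feature map R^2 -> R^3 with <Phi(x), Phi(y)> = <x, y>^2."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])

x = np.array([1.0, 2.0])
y = np.array([3.0, -1.0])
print(poly2_kernel(x, y))                                    # 1.0
print(np.dot(poly2_feature_map(x), poly2_feature_map(y)))    # approximately 1.0
```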

2.3. Persistence Kernels

In what follows, we denote by $\mathcal{D}$ the set of total PDs.

2.3.1. Persistence Scale-Space Kernel (PSSK)

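The first kernel was described in [8]. The main idea is to compute the feature map as the solution of the heat equation. We consider $\Omega = \{x = (x_1, x_2) \in \mathbb{R}^2 : x_2 \ge x_1\}$ and we denote by $\delta_x$ the Dirac delta centered at x. If $D \in \mathcal{D}$, we consider the solution $u: \Omega \times \mathbb{R}_{\ge 0} \to \mathbb{R}$, $(x, t) \mapsto u(x, t)$, of the following PDE:
$$\begin{cases} \Delta_x u = \partial_t u & \text{in } \Omega \times \mathbb{R}_{>0}, \\ u = 0 & \text{on } \partial\Omega \times \mathbb{R}_{\ge 0}, \\ u = \sum_{y \in D} \delta_y & \text{on } \Omega \times \{0\}. \end{cases}$$

The feature map $\Phi_\sigma: \mathcal{D} \to L^2(\Omega)$ at scale $\sigma > 0$ is defined as $\Phi_\sigma(D) = u|_{t = \sigma}$. This map yields the Persistence Scale-Space Kernel (PSSK) on $\mathcal{D}$ as
$$k_\sigma(D, E) = \langle \Phi_\sigma(D), \Phi_\sigma(E) \rangle_{L^2(\Omega)}.$$

Since an explicit formula for the solution u is known, the kernel takes the form
$$k_\sigma(D, E) = \frac{1}{8\pi\sigma} \sum_{p \in D} \sum_{q \in E} \left( e^{-\frac{\|p - q\|^2}{8\sigma}} - e^{-\frac{\|p - \bar{q}\|^2}{8\sigma}} \right),$$

where $\bar{q} = (q_2, q_1)$ for any $q = (q_1, q_2) \in E$.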
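The closed form of the PSSK translates directly into NumPy. The sketch assumes that each diagram is given as an array of finite (birth, death) pairs; points on the diagonal contribute nothing, since for them the two exponentials coincide. The function names are illustrative.

```python
import numpy as np

def _sq_dists(A, B):
    """Matrix of squared Euclidean distances between the rows of A and B."""
    return ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)

def pssk(D, E, sigma):
    """Persistence Scale-Space Kernel k_sigma(D, E) in closed form."""
    D = np.asarray(D, dtype=float).reshape(-1, 2)
    E = np.asarray(E, dtype=float).reshape(-1, 2)
    E_bar = E[:, ::-1]                      # q_bar = (q2, q1): mirror across the diagonal
    terms = (np.exp(-_sq_dists(D, E) / (8 * sigma))
             - np.exp(-_sq_dists(D, E_bar) / (8 * sigma)))
    return terms.sum() / (8 * np.pi * sigma)

D = np.array([[0.0, 1.0], [0.5, 2.0]])
E = np.array([[0.2, 1.5]])
print(pssk(D, E, sigma=0.1))
```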

2.3.2. Persistence Weighted Gaussian Kernel (PWGK)

In [10], the authors introduced a new kernel, whose idea is to replace each PD with a discrete measure. Starting with a strictly positive definite kernel, for example the Gaussian one $k_G(x, y) = e^{-\frac{\|x - y\|^2}{2\rho^2}}$, $\rho > 0$, we denote by $\mathcal{H}_k$ the corresponding Reproducing Kernel Hilbert Space.

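If $\Omega \subset \mathbb{R}^2$, we denote by $M_b(\Omega)$ the space of finite signed Radon measures on $\Omega$ and
$$E_k: M_b(\Omega) \to \mathcal{H}_k, \qquad \mu \mapsto \int_\Omega k(\cdot, x)\, d\mu(x).$$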

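For any $D \in \mathcal{D}$, if $\mu_D^w = \sum_{x \in D} w(x) \delta_x$, where the weight function $w$ satisfies $w(x) > 0$ for all $x \in D$, then
$$E_k(\mu_D^w) = \sum_{x \in D} w(x)\, k(\cdot, x),$$

where a common choice of weight is
$$w_{arc}(x) = \arctan\left(C\, \mathrm{pers}(x)^p\right), \qquad C, p > 0,$$

and $\mathrm{pers}(x) = x_2 - x_1$ for $x = (x_1, x_2)$.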

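The Persistence Weighted Gaussian Kernel (PWGK) is defined as
$$K_{PWG}(D, E) = \exp\left( -\frac{\left\| E_k(\mu_D^w) - E_k(\mu_E^w) \right\|_{\mathcal{H}_k}^2}{2\tau^2} \right), \qquad \tau > 0,$$

for any $D, E \in \mathcal{D}$.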
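Since $\langle k(\cdot, x), k(\cdot, y) \rangle_{\mathcal{H}_k} = k(x, y)$, the squared RKHS norm in the PWGK expands into three double sums over the diagram points and can be computed exactly. The sketch below assumes the Gaussian kernel $k_G$ with width ρ and the arctangent weight $w(x) = \arctan(C\,\mathrm{pers}(x)^p)$ proposed in [10]; it is a direct O(nm) evaluation with illustrative names, not an optimized implementation.

```python
import numpy as np

def _gauss(A, B, rho):
    """Gaussian kernel matrix exp(-||a - b||^2 / (2 rho^2)) between rows of A and B."""
    sq = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq / (2 * rho ** 2))

def pwgk(D, E, rho, tau, C, p):
    """Persistence Weighted Gaussian Kernel via the reproducing property.

    ||E_k(mu_D) - E_k(mu_E)||^2 = wD^T K_DD wD - 2 wD^T K_DE wE + wE^T K_EE wE.
    """
    D = np.asarray(D, dtype=float).reshape(-1, 2)
    E = np.asarray(E, dtype=float).reshape(-1, 2)
    wD = np.arctan(C * (D[:, 1] - D[:, 0]) ** p)   # arctangent weights
    wE = np.arctan(C * (E[:, 1] - E[:, 0]) ** p)
    sq_norm = (wD @ _gauss(D, D, rho) @ wD
               - 2 * wD @ _gauss(D, E, rho) @ wE
               + wE @ _gauss(E, E, rho) @ wE)
    return np.exp(-max(sq_norm, 0.0) / (2 * tau ** 2))  # clip tiny negative round-off

D = np.array([[0.0, 1.0], [0.5, 2.0]])
E = np.array([[0.2, 1.5]])
print(pwgk(D, E, rho=1.0, tau=1.0, C=1.0, p=1.0))
```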

2.3.3. Sliced Wasserstein Kernel (SWK)

Another possible choice of persistence kernel was introduced in [11].

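If $\mu$ and $\nu$ are two non-negative measures on $\mathbb{R}$ such that $\mu(\mathbb{R}) = \nu(\mathbb{R}) = r$ for some $r \ge 0$, then we recall that the 1-Wasserstein distance for non-negative measures is defined as
$$\mathcal{W}(\mu, \nu) = \inf_{P \in \Pi(\mu, \nu)} \int_{\mathbb{R} \times \mathbb{R}} |x - y|\, dP(x, y),$$

where $\Pi(\mu, \nu)$ is the set of measures on $\mathbb{R}^2$ with marginals $\mu$ and $\nu$.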

Definition 10.

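If $\theta \in \mathbb{R}^2$ with $\|\theta\|_2 = 1$, let $L(\theta) = \{\lambda \theta : \lambda \in \mathbb{R}\}$ indicate the line spanned by $\theta$ and let $\pi_\theta: \mathbb{R}^2 \to L(\theta)$ be the orthogonal projection onto $L(\theta)$. Let $D, E \in \mathcal{D}$, let $\mu_D^\theta = \sum_{p \in D} \delta_{\pi_\theta(p)}$ and $\mu_{D\Delta}^\theta = \sum_{p \in D} \delta_{\pi_\theta \circ \pi_\Delta(p)}$, and similarly for $\mu_E^\theta$ and $\mu_{E\Delta}^\theta$, where $\pi_\Delta$ denotes the orthogonal projection onto the diagonal. Then, the Sliced Wasserstein distance is
$$SW(D, E) = \frac{1}{2\pi} \int_{\mathbb{S}^1} \mathcal{W}\left(\mu_D^\theta + \mu_{E\Delta}^\theta,\ \mu_E^\theta + \mu_{D\Delta}^\theta\right) d\theta.$$

Thus, the Sliced Wasserstein Kernel (SWK) is defined as
$$k_{SW}(D, E) = \exp\left( -\frac{SW(D, E)}{2\eta^2} \right), \qquad \eta > 0,$$

for any $D, E \in \mathcal{D}$.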
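In practice the integral over $\mathbb{S}^1$ is approximated with a finite number of directions, as suggested in [11]. The sketch below assumes finite diagrams and equally spaced angles in [−π/2, π/2) (opposite directions give the same 1-Wasserstein distance, so half the circle suffices), and uses the fact that on a line the 1-Wasserstein distance between two empirical measures with the same number of unit masses is the L1 distance between their sorted projections. Names and the default number of directions are illustrative.

```python
import numpy as np

def _diag_proj(A):
    """Orthogonal projection of each (birth, death) point onto the diagonal."""
    mid = (A[:, 0] + A[:, 1]) / 2
    return np.stack([mid, mid], axis=1)

def sliced_wasserstein(D, E, n_directions=50):
    """Approximate SW(D, E) by averaging over n_directions angles."""
    D = np.asarray(D, dtype=float).reshape(-1, 2)
    E = np.asarray(E, dtype=float).reshape(-1, 2)
    A = np.vstack([D, _diag_proj(E)])      # support of mu_D + mu_{E Delta}
    B = np.vstack([E, _diag_proj(D)])      # support of mu_E + mu_{D Delta}
    thetas = np.linspace(-np.pi / 2, np.pi / 2, n_directions, endpoint=False)
    dirs = np.stack([np.cos(thetas), np.sin(thetas)], axis=1)  # unit vectors
    pa = np.sort(A @ dirs.T, axis=0)       # sorted projections, one column per angle
    pb = np.sort(B @ dirs.T, axis=0)
    return np.abs(pa - pb).sum(axis=0).mean()

def swk(D, E, eta, n_directions=50):
    """Sliced Wasserstein Kernel exp(-SW(D, E) / (2 eta^2))."""
    return np.exp(-sliced_wasserstein(D, E, n_directions) / (2 * eta ** 2))

D = np.array([[0.0, 1.0], [0.5, 2.0]])
E = np.array([[0.2, 1.5]])
print(swk(D, E, eta=1.0))
```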

2.3.4. Persistence Fisher Kernel (PFK)

In [12], the authors described a kernel based on Fisher Information geometry.

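Given a persistence diagram $D$, it is possible to build a discrete measure $\mu_D = \sum_{u \in D} \delta_u$, where $\delta_u$ is the Dirac delta centered at u. Given a bandwidth $\sigma > 0$ and a set $\Theta$, one can smooth and normalize $\mu_D$ as follows:
$$\rho_D = \left[ \frac{1}{Z} \sum_{u \in D} N(x; u, \sigma I) \right]_{x \in \Theta}, \qquad Z = \int_\Theta \sum_{u \in D} N(x; u, \sigma I)\, dx,$$

where N is a Gaussian function and I is the identity matrix. Thus, using this measure, any PD can be regarded as a point in $\mathbb{P} = \{\rho : \int \rho(x)\, dx = 1,\ \rho \ge 0\}$.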

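Given two elements $\rho_i, \rho_j \in \mathbb{P}$, the Fisher Information Metric is
$$d_{\mathbb{P}}(\rho_i, \rho_j) = \arccos\left( \int \sqrt{\rho_i(x)\, \rho_j(x)}\, dx \right).$$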

Inspired by the Sliced Wasserstein Kernel construction, we have the following definition.

Definition 11.

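Given two finite and bounded persistence diagrams $D, E \in \mathcal{D}$, the Fisher Information Metric between D and E is defined as
$$d_{FIM}(D, E) = d_{\mathbb{P}}\left( \rho_{D \cup E_\Delta},\ \rho_{E \cup D_\Delta} \right),$$

where $D_\Delta = \{\pi_\Delta(u) : u \in D\}$, and $\pi_\Delta$ is the orthogonal projection onto the diagonal $\Delta$.

The Persistence Fisher Kernel (PFK) is then defined as
$$k_{PF}(D, E) = \exp\left( -t\, d_{FIM}(D, E) \right), \qquad t > 0.$$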
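A discretized version of the PFK can be sketched as follows. The choice of the finite set Θ is an assumption of this sketch: here it is the union of the points of $D \cup E_\Delta$ and $E \cup D_\Delta$, so that the integrals become sums and Z is computed on Θ; [12] should be consulted for the exact construction and for faster approximations. Helper names are illustrative.

```python
import numpy as np

def _diag_proj(A):
    """Orthogonal projection of each (birth, death) point onto the diagonal."""
    mid = (A[:, 0] + A[:, 1]) / 2
    return np.stack([mid, mid], axis=1)

def _smoothed(points, grid, sigma):
    """Sum of Gaussians N(x; u, sigma I) evaluated on the grid, normalized to sum 1."""
    sq = ((grid[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    rho = np.exp(-sq / (2 * sigma)).sum(axis=1)   # constant factor cancels below
    return rho / rho.sum()

def pfk(D, E, sigma, t):
    """Persistence Fisher Kernel exp(-t * d_FIM(D, E)) on a finite grid Theta."""
    D = np.asarray(D, dtype=float).reshape(-1, 2)
    E = np.asarray(E, dtype=float).reshape(-1, 2)
    D_aug = np.vstack([D, _diag_proj(E)])          # D together with E projected on the diagonal
    E_aug = np.vstack([E, _diag_proj(D)])
    grid = np.vstack([D_aug, E_aug])               # assumed choice of Theta
    bc = np.sum(np.sqrt(_smoothed(D_aug, grid, sigma) * _smoothed(E_aug, grid, sigma)))
    d_fim = np.arccos(np.clip(bc, -1.0, 1.0))      # clip guards against round-off above 1
    return np.exp(-t * d_fim)

D = np.array([[0.0, 1.0], [0.5, 2.0]])
E = np.array([[0.2, 1.5]])
print(pfk(D, E, sigma=0.1, t=1.0))
```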

2.3.5. Persistence Image (PI)

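The main reference is [13]. If $D \in \mathcal{D}$, we introduce the change of coordinates $T: \mathbb{R}^2 \to \mathbb{R}^2$, $T(x, y) = (x, y - x)$, and we let $T(D)$ be the multiset of birth–persistence coordinates. Let $\phi_u: \mathbb{R}^2 \to \mathbb{R}$ be a differentiable probability distribution with mean $u = (u_x, u_y) \in \mathbb{R}^2$, usually $\phi_u = g_u$, where $g_u$ is the two-dimensional Gaussian with mean u and variance $\sigma^2$, defined as
$$g_u(x, y) = \frac{1}{2\pi\sigma^2} e^{-\frac{(x - u_x)^2 + (y - u_y)^2}{2\sigma^2}}.$$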

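Fix a weight function $f: \mathbb{R}^2 \to \mathbb{R}_{\ge 0}$ that is equal to zero on the horizontal axis, continuous and piecewise differentiable. A possible choice is a function that depends only on the persistence coordinate y, $f(x, y) = w_b(y)$, where
$$w_b(t) = \begin{cases} 0 & \text{if } t \le 0, \\ t/b & \text{if } 0 < t < b, \\ 1 & \text{if } t \ge b, \end{cases}$$
with $b > 0$.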

Definition 12.

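Given $D \in \mathcal{D}$, the corresponding persistence surface is the function $\rho_D: \mathbb{R}^2 \to \mathbb{R}$,
$$\rho_D(z) = \sum_{u \in T(D)} f(u)\, \phi_u(z).$$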

If we divide the plane into a grid of n pixels P, then we have the following definition.

Definition 13.

Given $D \in \mathcal{D}$, its persistence image is the collection of pixel values
$$I(\rho_D)_P = \iint_P \rho_D(x, y)\, dy\, dx.$$

Thus, through the persistence image, each persistence diagram is turned into a vector in $\mathbb{R}^n$; then, it is possible to introduce the following kernel:
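The vectorization of Definitions 12 and 13 can be sketched as below, assuming the Gaussian $g_u$, the weight $w_b$ with b set to the largest persistence in the diagram, and a midpoint-rule approximation of each pixel integral. The last function pairs two images with a plain Euclidean inner product; this linear kernel is only an illustrative assumption, and persim [29] offers a complete implementation (`PersistenceImager`).

```python
import numpy as np

def persistence_image(D, sigma, pixel_size, x_range, y_range):
    """Flattened persistence image of a finite diagram of (birth, death) pairs."""
    D = np.asarray(D, dtype=float).reshape(-1, 2)
    T = np.column_stack([D[:, 0], D[:, 1] - D[:, 0]])    # (birth, persistence)
    b = T[:, 1].max() if len(T) and T[:, 1].max() > 0 else 1.0
    weights = np.clip(T[:, 1] / b, 0.0, 1.0)             # w_b(persistence)
    # Pixel centres of a regular grid covering x_range x y_range.
    xs = np.arange(x_range[0], x_range[1], pixel_size) + pixel_size / 2
    ys = np.arange(y_range[0], y_range[1], pixel_size) + pixel_size / 2
    X, Y = np.meshgrid(xs, ys, indexing="ij")
    surface = np.zeros_like(X)
    for (ux, uy), w in zip(T, weights):
        g = np.exp(-((X - ux) ** 2 + (Y - uy) ** 2) / (2 * sigma ** 2))
        surface += w * g / (2 * np.pi * sigma ** 2)      # f(u) * g_u
    # Midpoint rule: surface value at the pixel centre times the pixel area.
    return (surface * pixel_size ** 2).ravel()

def pi_linear_kernel(D, E, **params):
    """Illustrative (assumed) linear kernel between two persistence images."""
    return float(np.dot(persistence_image(D, **params), persistence_image(E, **params)))

params = dict(sigma=0.1, pixel_size=0.1, x_range=(-0.5, 1.5), y_range=(-0.5, 1.5))
print(persistence_image([(0.0, 1.0)], **params).sum())   # close to 1
```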

3. Results

3.1. Shape Parameters Analysis

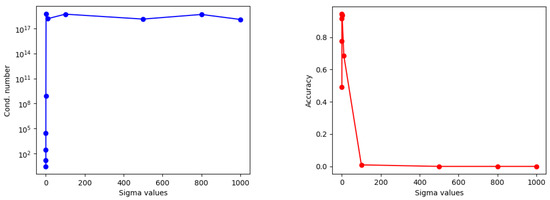

From the definitions of the aforementioned kernels, it is evident that each of them depends on some parameters, and it is not clear a priori which values should be assigned to them. The cross-validation phase (CV phase, for short) tries to answer this question: the user defines a set of values for the parameters and, for every admissible choice, solves the classification task and measures the quality of the results. In the RBF interpolation literature [25], it is well known that the shape parameters have to be chosen through a process similar to the CV phase. At the beginning, the user chooses some values for each parameter and then, varying them, checks how the condition number and the interpolation error change. The trade-off principle suggests considering values for which the condition number is not too large (conditioning) and the interpolation error is small enough (accuracy). In the context of classification, the condition number of the interpolation matrix and the interpolation error have to be replaced by suitable counterparts. We achieve this by considering the condition number of the Gram matrix and the accuracy of the classifier. To obtain a good classifier, it is desirable to have a small condition number of the Gram matrix and a high accuracy, as close to 1 as possible. Our aim here was to run such an analysis for the kernels presented in this paper.
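The conditioning side of this trade-off can be observed on a toy example unrelated to PDs: for a Gaussian kernel on equally spaced 1D nodes, the Gram matrix becomes increasingly ill-conditioned as the kernel gets flatter. The node set and the widths below are arbitrary, illustrative choices.

```python
import numpy as np

def gaussian_gram(x, s):
    """Gram matrix of the Gaussian kernel exp(-(x_i - x_j)^2 / (2 s^2)) on 1D nodes."""
    diff = x[:, None] - x[None, :]
    return np.exp(-diff ** 2 / (2 * s ** 2))

x = np.linspace(0.0, 5.0, 11)            # illustrative nodes, spacing 0.5
conds = {s: np.linalg.cond(gaussian_gram(x, s)) for s in (0.1, 0.5, 1.0)}
for s, c in conds.items():
    print(f"s = {s}: condition number = {c:.2e}")
```

Narrow kernels give an almost diagonal, well-conditioned matrix but may generalize poorly, while wide kernels quickly become numerically unstable, which is why both quantities are monitored.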

The PSSK has only one parameter to tune, σ. Typically, users consider . We ran the CV phase for different shuffles of a dataset and plotted the results in terms of the condition number of the Gram matrix of the training samples and of the accuracy. For our analysis, we considered and ran tests on some of the datasets described below. The results were similar in each case, so we report those for the SHREC14 dataset.

From Figure 4, it is evident that large values of σ result in an ill-conditioned Gram matrix and lower accuracy. Therefore, in what follows, we will consider only .

Figure 4.

Comparison results about PSSK for SHREC14, in terms of condition number (left) and accuracy (right) with different .

The PWGK is the kernel with the largest number of parameters to tune; therefore, it was not evident which values to consider. We chose reasonable starting sets as follows: , , , . Due to the large number of parameters, we first ran some experiments varying with fixed , and then we reversed the roles.

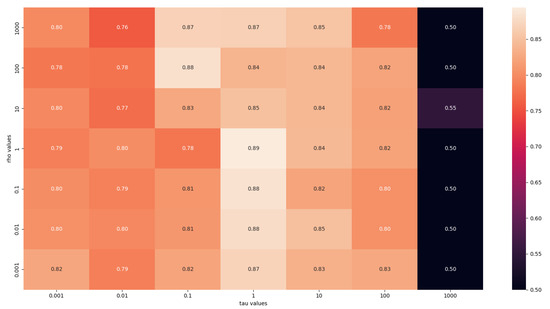

We report in Figure 5 only a plot for fixed and p, because it highlights how high values of (for example ) were excluded. We found this behavior for different values of and various datasets (here, for the case , and the MUTAG dataset). Therefore, we decided to vary the parameters as follows: , , , . Unfortunately, there was no other evidence that could guide the choices, except for , where values always led to poor accuracy, as one can see below in the case of MUTAG with the shortest path distance.

Figure 5.

Comparison results about the PWGK for MUTAG, in terms of accuracy with different and .

In the case of the SWK, there is only one parameter, η. In [11], the authors proposed to consider the first decile, the median and the last decile of the flattened Gram matrix of the training samples, and then to multiply these three values by .
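The heuristic of [11] can be sketched as follows: take the first decile, the median and the last decile of the flattened matrix, and multiply each by a set of factors. Since the factors are not listed here, the multipliers in the usage line are hypothetical placeholders, as is the random matrix standing in for real training data.

```python
import numpy as np

def candidate_bandwidths(M, multipliers):
    """Candidate values: first decile, median and last decile of the flattened
    matrix M, each multiplied by every factor in `multipliers`."""
    flat = np.asarray(M, dtype=float).ravel()
    base = np.quantile(flat, [0.1, 0.5, 0.9])
    return sorted(float(q * f) for q in base for f in multipliers)

# Hypothetical stand-in matrix and multipliers, for illustration only.
M = np.abs(np.random.default_rng(0).normal(size=(20, 20)))
print(candidate_bandwidths(M, multipliers=(0.01, 0.1, 1.0, 10.0, 100.0)))
```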

For our analysis, we decided to study the behavior of this kernel by considering the same set of values regardless of the specific dataset. We considered .

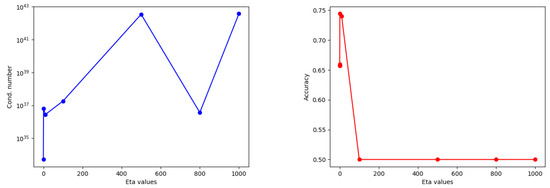

We ran tests on some datasets, and the plot related to the DHFR dataset clearly revealed that large values of η were to be excluded, as shown in Figure 6. So, we decided to take only in .

Figure 6.

Comparison results about the SWK for DHFR, in terms of condition number (left) and accuracy (right) with different .

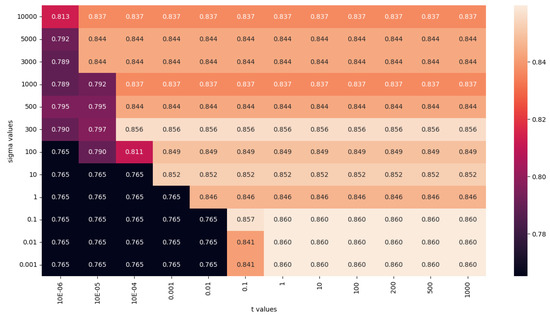

The PFK has two parameters: the variance σ and t. In [12], the authors described the procedure to follow in order to obtain the corresponding sets of values, in which the choice of t depends on σ. Our aim in this paper was to carry out an analysis that is dataset-independent, that is, strictly connected only to the definition of the kernel itself. First, we took different values for σ and t and plotted the corresponding accuracies (here, in the case of MUTAG with the shortest path distance); the same behavior also holds for other datasets.

The condition numbers were very high for every choice of parameters; therefore, we do not report them here, since they would be meaningless. From Figure 7, it is evident that it is convenient to set σ lower than or equal to 10, while t should be larger than or equal to 0.1. Thus, in what follows, we took into account and .

Figure 7.

Comparison results about the PFK for MUTAG, in terms of accuracy with different t and .

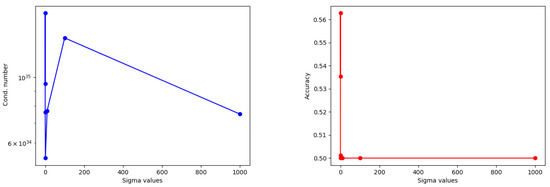

In the case of the PI, we considered a reasonable set of values for the parameter σ. The results related to BZR with the shortest path distance are shown in Figure 8.

Figure 8.

Comparison results about the PI for BZR, in terms of condition number (left) and accuracy (right) with different .

As with the previous kernels, the accuracy seemed better for small values of σ. For this reason, we set .

3.2. Numerical Tests

For the computation of simplicial complexes and persistence diagrams, we used some Python libraries available online: gudhi [26], ripser [27], giotto-tda [28] and persim [29]. On all the datasets, we performed a random split into training and test sets, and we applied tenfold cross-validation on the training set to tune the parameters. Then, we averaged the results over 10 runs. For balanced datasets, we measured the performance of the classifier through the accuracy, for both binary and multiclass problems:
$$\text{Accuracy} = \frac{\text{number of correctly classified samples}}{\text{total number of samples}}.$$

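In the case of imbalanced datasets, we adopted the balanced accuracy, as explained in [30]: for every class i, we define the related recall as
$$\text{Recall}_i = \frac{TP_i}{TP_i + FN_i},$$
where $TP_i$ and $FN_i$ are the numbers of true positives and false negatives for class i,

and then the balanced accuracy in the case of n different classes is
$$\text{BA} = \frac{1}{n} \sum_{i=1}^{n} \text{Recall}_i.$$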

This definition effectively quantifies how accurate the classifier is, even on the smallest classes. For the tests, we used the SVM implementation provided by the scikit-learn [31] Python library. For the PFK, we precomputed the Gram matrices with a MATLAB (R2023b) routine because it is faster than the Python one. The values for C belonged to . For each kernel, we considered the following values for the parameters:

- PSSK: .

- PWGK: , , , , and for the kernel we chose the Gaussian one.

- SWK: .

- PFK: and .

- PI: and pixel size 0.1.
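The accuracy and balanced accuracy defined above are short enough to write out. The sketch (hypothetical helper names) also shows why balanced accuracy matters: a classifier that always predicts the majority class scores well on accuracy but only 1/n on balanced accuracy. scikit-learn provides the same quantities as `accuracy_score` and `balanced_accuracy_score` in `sklearn.metrics`.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of correctly classified samples."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Mean over classes of the recall TP_i / (TP_i + FN_i)."""
    support = Counter(y_true)                          # TP_i + FN_i per class
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # TP_i per class
    return sum(hits[c] / support[c] for c in support) / len(support)

y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0] * 10                          # always predicts the majority class
print(accuracy(y_true, y_pred))            # 0.8
print(balanced_accuracy(y_true, y_pred))   # 0.5
```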

All the code was run using Python 3.11 on a 2.5 GHz Dual-Core Intel Core i5 with 32 GB of RAM. It can be found and downloaded from the GitHub page https://github.com/cinziabandiziol/persistence_kernels (accessed on 1 August 2024).

3.3. Point Cloud Data and Shapes

3.3.1. Protein

This is the Protein Classification Benchmark dataset PCB00019 [32]. It collects information on 1357 proteins related to 55 classification problems. The data are highly imbalanced; therefore, we applied the classifier to one of these problems, in which the imbalance is slightly less evident. Persistence diagrams were computed for each protein by considering its 3D structure, that is, the positions of all atoms in each of the 1357 molecules, as a point cloud in $\mathbb{R}^3$. Finally, using ripser, we computed only the one-dimensional persistence diagrams.

3.3.2. SHREC14—Synthetic Data

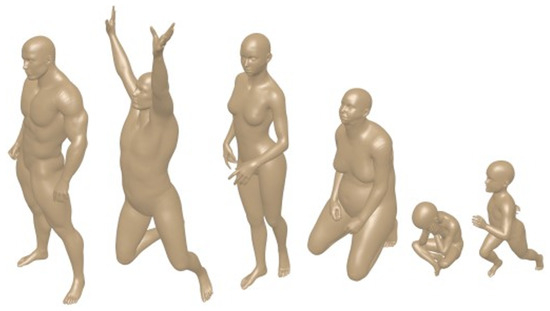

This dataset is related to the problem of non-rigid 3D shape retrieval. It collects exclusively human models with different body shapes in 20 poses; some examples are shown in Figure 9. It consists of 15 different human models, including men, women and children, each with its own body shape. Each of these models appears in 20 different poses, for a total of 300 models.

Figure 9.

Some elements of the SHREC14 dataset.

For each shape, the meshes have about 60,000 vertices and, using the Heat Kernel Signature (HKS) introduced in [33] for different values of its time parameter, as in [8], we computed the persistence diagrams of the induced filtrations in dimension 1.

3.3.3. Orbit Recognition

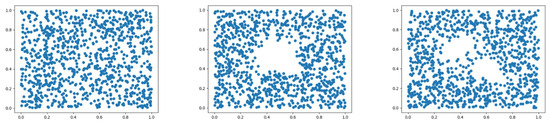

We considered the dataset proposed in [13], based on the linked twisted map, which models fluid flows. The orbits can be computed through the following discrete dynamical system:
$$\begin{cases} x_{n+1} = x_n + r\, y_n (1 - y_n) \mod 1, \\ y_{n+1} = y_n + r\, x_{n+1} (1 - x_{n+1}) \mod 1, \end{cases}$$

with starting point $(x_0, y_0) \in [0, 1]^2$ and $r > 0$ a real number that influences the behavior of the orbits, as shown in Figure 10.

Figure 10.

Orbits composed by the first 1000 iterations of the twisted map with from left to right, starting from the fixed random .

As in [13], $r \in \{2.5, 3.5, 4.0, 4.1, 4.3\}$, and each value determines the label of the corresponding orbit. For each value, we generated the first 1000 points of 50 orbits with randomly chosen starting points, so that the final dataset was composed of 250 elements. We computed the PDs considering only the one-dimensional features. Since each PD had a huge number of topological features, we considered only the 10 most persistent ones, as in [15].
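The orbit dataset can be regenerated along these lines. The sketch assumes the linked twisted map with the five values of r used in [13], counts the starting point as the first of the 1000 points, and fixes a random seed only for reproducibility; it is not the authors' generation script.

```python
import numpy as np

def linked_twist_orbit(r, x0, y0, n_points=1000):
    """First n_points points of the linked twisted map orbit starting at (x0, y0)."""
    orbit = np.empty((n_points, 2))
    x, y = x0, y0
    for n in range(n_points):
        orbit[n] = (x, y)
        x = (x + r * y * (1 - y)) % 1.0
        y = (y + r * x * (1 - x)) % 1.0   # uses the updated x_{n+1}
    return orbit

rng = np.random.default_rng(0)            # seed chosen only for reproducibility
dataset, labels = [], []
for label, r in enumerate([2.5, 3.5, 4.0, 4.1, 4.3]):
    for _ in range(50):
        x0, y0 = rng.random(2)            # random starting point in [0, 1)^2
        dataset.append(linked_twist_orbit(r, x0, y0))
        labels.append(label)
print(len(dataset), dataset[0].shape)     # 250 (1000, 2)
```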

First, the large difference in performance among the datasets is probably due to the high imbalance of the PROTEIN dataset, as opposed to the perfect balance of the other ones. It is well known that if the classifier does not have enough samples for each class, as in the case of imbalanced datasets, it faces significant issues in correctly classifying the elements of the minority classes. Table 1 shows that the SWK achieved the best accuracy on SHREC14 and DYN SYS, while on PROTEIN the PSSK showed slightly better performance.

Table 1.

Accuracy on point cloud and shape datasets (balanced accuracy for the PROTEIN dataset only). The best results are highlighted in bold.

3.4. Images

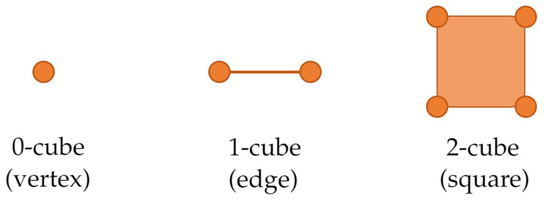

All the definitions introduced in Section 2 can be extended to another kind of complex, the cubical complex, which is useful when dealing with images or mesh-based objects, for example. More details can be found in [34].

Definition 14.

An elementary cube Q can be defined as the product $Q = I_1 \times I_2 \times \dots \times I_d \subset \mathbb{R}^d$, where each $I_j$ is either a set with one element $\{m\}$ or a unit-length interval $[m, m + 1]$ for some $m \in \mathbb{Z}$. A k-cube Q is a cube whose number of unit-length intervals in the product is equal to k, which is then defined as the dimension of Q. If $Q$ and $P$ are cubes and $Q \subseteq P$, then $Q$ is called a face of $P$. A cubical complex X in $\mathbb{R}^d$ is a collection of k-cubes, $0 \le k \le d$, such that:

- every face of a cube in X has to belong to X;

- given two cubes of X, their intersection must be either empty or a face of each of them.

The lower-dimensional elementary cubes are shown in Figure 11.

Figure 11.

Cubical simplices.

MNIST and FMNIST

MNIST [35] is a very common benchmark in the classification framework. It consists of 70,000 grayscale images of handwritten digits, as one can see in Figure 12, to be classified into 10 different classes. Each image can be viewed as a set of pixels with values between 0 (black) and 255 (white).

Figure 12.

Example of an element in the MNIST dataset.

Starting from this kind of dataset, we have to compute the corresponding persistent features. Following the approach proposed in [36], which builds on [9], we first binarize each image, i.e., we replace each grayscale image with a black-and-white one, and then we use as filtration function the so-called height filtration [36]. For a cubical complex and a chosen direction $v \in \mathbb{R}^2$ of unit norm, it is defined on each pixel p as
$$\mathcal{H}(p) = \begin{cases} \langle p, v \rangle & \text{if } \mathcal{B}(p) = 1, \\ \mathcal{H}_\infty & \text{if } \mathcal{B}(p) = 0, \end{cases}$$

where $\mathcal{B}$ is the binarized image and $\mathcal{H}_\infty$ is a large default value chosen by the user. As in [9], we choose four different direction vectors v, and we compute zero- and one-dimensional persistent features using both the giotto-tda and the gudhi libraries. Finally, we concatenate them. For the current experiment, we decided to focus on a subset of the original MNIST composed of only 10,000 samples, which is balanced. Due to memory issues, we had to consider for this dataset a pixel size of and for the PWGK only , , , .
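A minimal NumPy sketch of the height filtration follows. The binarization threshold (half of the maximum intensity), the use of (row, column) pixel coordinates and the default value for $\mathcal{H}_\infty$ are assumptions of this sketch. The resulting array can then be fed to a cubical complex, for example through `gudhi.CubicalComplex(top_dimensional_cells=...)`; giotto-tda also offers `Binarizer` and `HeightFiltration` in `gtda.images`.

```python
import numpy as np

def height_filtration(image, direction, threshold=0.5, h_inf=None):
    """Height filtration of a binarized grayscale image.

    Pixels brighter than threshold * max become foreground (B(p) = 1) and get
    the value <p, v>, with p = (row, col) and v the normalized direction;
    background pixels get h_inf (by default, 1 + the largest height).
    """
    img = np.asarray(image, dtype=float)
    binary = img > threshold * img.max()
    v = np.asarray(direction, dtype=float)
    v = v / np.linalg.norm(v)                  # enforce unit norm
    rows, cols = np.indices(img.shape)
    heights = rows * v[0] + cols * v[1]
    if h_inf is None:
        h_inf = heights.max() + 1.0
    return np.where(binary, heights, h_inf)

img = np.zeros((3, 3))
img[1, 1] = 255
print(height_filtration(img, direction=(1, 0)))
```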

Another example of a grayscale image dataset is the FMNIST [37], which contains 28 × 28 grayscale images related to the fashion world. Figure 13 shows an example.

Figure 13.

Example of an element in the FMNIST dataset.

To deal with this dataset, we followed another approach, proposed in [23], where the authors applied padding, a median filter, shallow thresholding and Canny edge detection, and then computed the usual filtration on the resulting image. Due to memory issues, we had to consider for this dataset a pixel size of 1 and for the PWGK only , , , .

Both datasets are balanced, and the better results on MNIST are probably due to the fact that handwritten digits are easier to classify than images of clothes. The SWK showed slightly better performance, as reported in Table 2.

Table 2.

Accuracy related to MNIST and FMNIST. The best results are highlighted in bold.

3.5. Graphs

In many different contexts, from medicine to chemistry, data can have the structure of graphs. A graph is a pair $G = (V, E)$, where $V$ is the set of vertices and $E$ is the set of edges. Graph classification is the task of assigning a label/class to each whole graph. To compute the persistent features, we need to build a filtration. For graphs, as in other cases, several definitions are possible; see, for example, [38].

We considered the Vietoris–Rips filtration: starting from the set of vertices, at each step we add the edges whose weight is less than or equal to the current value $\varepsilon$. This is the most common choice, and the software available online builds it directly from the corresponding adjacency matrix. In our experiments, we considered only undirected graphs, but, as discussed in [38], a filtration can also be built for directed graphs. Once the type of filtration is fixed, one still needs to choose the edge weights. We considered first the shortest path distance and then the Jaccard index, as done, for example, in [14].
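For the zero-dimensional features, a filtration that adds edges in increasing order of weight reduces to tracking connected components. A minimal pure-Python sketch using a union-find structure (the same idea as Kruskal's minimum spanning tree algorithm) is shown below. It assumes the edge weights are already available as (u, v, weight) triples, the function name is hypothetical, and it is not the giotto-tda routine used in our experiments; one-dimensional features are not computed.

```python
import math


def h0_persistence(n_vertices, weighted_edges):
    """Zero-dimensional persistence pairs (birth, death) of a graph filtration.

    Every vertex enters at value 0 and the edge (u, v, w) enters at value w,
    so a connected component dies when an edge merges it with another one.
    """
    parent = list(range(n_vertices))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    pairs = []
    for u, v, w in sorted(weighted_edges, key=lambda edge: edge[2]):
        ru, rv = find(u), find(v)
        if ru != rv:
            # Two components merge: all vertices are born at 0, so a bar [0, w) ends.
            parent[ru] = rv
            pairs.append((0.0, float(w)))
        # Otherwise the edge creates a cycle, which concerns one-dimensional
        # features and is ignored here.
    # Components that never merge give bars that never die.
    n_components = len({find(x) for x in range(n_vertices)})
    pairs.extend([(0.0, math.inf)] * n_components)
    return pairs


# Triangle 0-1-2 with weights 1, 2, 3 plus an isolated vertex 3.
edges = [(0, 1, 1.0), (1, 2, 2.0), (0, 2, 3.0)]
diagram_h0 = h0_persistence(4, edges)
```

Here `diagram_h0` contains two finite bars, ending at weights 1 and 2, and two infinite bars, one for the triangle and one for the isolated vertex.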

Given two vertices $u, v \in V$, the shortest path distance is the minimum number of edges on a path from $u$ to $v$ (or, equivalently, from $v$ to $u$, since the graphs are undirected). It is a widely used metric in graph theory.
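Because every edge counts as one step, this distance can be computed with a breadth-first search. The following is a minimal sketch, assuming the graph is stored as a Python adjacency list. Both the storage format and the function name are illustrative; in our experiments giotto-tda handled this step. Running the search from every vertex gives the full distance matrix.

```python
from collections import deque


def hop_distances(adjacency, source):
    """Shortest path (hop-count) distances from `source` in an unweighted,
    undirected graph stored as an adjacency list {vertex: [neighbours]}.
    Vertices not reachable from `source` do not appear in the result."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adjacency[u]:
            if w not in dist:  # BFS reaches each vertex first along a shortest path
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


# Path 0-1-2-3 with an extra edge 0-2.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
d0 = hop_distances(adj, 0)
```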

The Jaccard index is a good measure of edge similarity. Given an edge $(u, v) \in E$, the corresponding Jaccard index is computed as

$$
J(u, v) = \frac{|N(u) \cap N(v)|}{|N(u) \cup N(v)|},
$$

where $N(u)$ is the set of neighbors of $u$ in the graph. This metric captures the local information of nodes, in the sense that two nodes are considered similar if their neighbor sets are similar.
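The Jaccard index J(u, v) = |N(u) ∩ N(v)| / |N(u) ∪ N(v)| translates directly into set operations. The sketch below is minimal and rests on several assumptions: the graph is stored as an adjacency list, two isolated vertices get the value 0 by convention, and the function name is hypothetical. Because J is a similarity, a sub-level set filtration that should add the most similar edges first would need a transformed weight such as 1 − J. Which transformation to use is a modelling choice and is not shown here.

```python
def jaccard_index(adjacency, u, v):
    """Jaccard index |N(u) & N(v)| / |N(u) | N(v)| of the neighbour sets of
    u and v, for a graph stored as an adjacency list {vertex: [neighbours]}."""
    nu, nv = set(adjacency[u]), set(adjacency[v])
    union = nu | nv
    if not union:
        return 0.0  # assumed convention when both vertices are isolated
    return len(nu & nv) / len(union)


adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
# One weight per undirected edge (u < v avoids counting each edge twice).
weights = {(u, v): jaccard_index(adj, u, v) for u in adj for v in adj[u] if u < v}
```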

In both cases, we considered the sub-level set filtration and we collected both zero- and one-dimensional persistent features.

We selected six undirected datasets among the standard graph classification benchmarks:

- MUTAG: a collection of nitroaromatic compounds, the goal being to predict their mutagenicity on Salmonella typhimurium;

- PTC: a collection of chemical compounds represented as graphs, labeled according to their carcinogenicity in rats;

- BZR: a collection of chemical compounds that one has to classify as active or inactive;

- ENZYMES: a dataset of protein tertiary structures obtained from the BRENDA enzyme database; the aim is to assign each graph to one of six enzyme classes;

- DHFR: a collection of chemical compounds that one has to classify as active or inactive;

- PROTEINS: in each graph, nodes represent the secondary structure elements; the task is to predict whether or not a protein is an enzyme.

The properties of these datasets are summarized in Table 3, where IR is the Imbalance Ratio, which measures how imbalanced a dataset is; it is defined as the number of samples in the majority class divided by the number of samples in the minority class.
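The Imbalance Ratio can be computed from a list of class labels, as in the minimal sketch below. The function name and the toy labels are only for illustration. IR equals 1 for a perfectly balanced dataset and grows as the classes become more unbalanced.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Imbalance Ratio: size of the majority class over size of the minority class."""
    counts = Counter(labels).values()
    return max(counts) / min(counts)


ir = imbalance_ratio(["a", "a", "a", "b", "b", "c"])  # 3 samples of "a" over 1 of "c"
```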

Table 3.

Graph datasets.

The adjacency matrices and the PDs were computed using the functions implemented in giotto-tda.

Table 4.

Balanced Accuracy on the graph datasets using the shortest path distance (Accuracy only for the ENZYMES dataset). The best results are highlighted in bold.

Table 5.

Balanced Accuracy on the graph datasets using the Jaccard index (Accuracy only for the ENZYMES dataset). The best results are highlighted in bold.

These results lead to two conclusions. First, as expected, the performance of the classifier depends strongly on the particular filtration used to compute the persistent features. Second, the SWK and the PFK seem to work slightly better than the other kernels: with the shortest path distance (Table 4) the SWK is to be preferred, while with the Jaccard index (Table 5) the PFK seems to work better. For PROTEINS, however, the PWGK provides the best Balanced Accuracy in both cases.

3.6. One-Dimensional Time Series

In many applications, one has to deal with one-dimensional time series, i.e., sequences of real values $\{x_t\}_{t=1}^{N}$. In [39], the authors presented different approaches to building a filtration on this kind of data; we adopted the most common one. Thanks to Takens' embedding, these data can be turned into point clouds: for suitable choices of two parameters, the delay $\tau$ and the dimension $d$, one obtains the points $(x_t, x_{t+\tau}, \dots, x_{t+(d-1)\tau}) \in \mathbb{R}^d$ for $t = 1, \dots, N - (d-1)\tau$. The theory described above for point clouds can then be applied to signals, viewed as sets of points in $\mathbb{R}^d$. For how to choose the values of the parameters, see [39]. The datasets used for the tests, listed in Table 6, were taken from the UCR Time Series Classification Archive (2018) [40], which consists of 128 time series datasets from a variety of application domains. The archive provides a split into training and test sets, but we did not use it: we merged the training and test data into a single dataset, which our code then split appropriately.
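Takens' delay embedding maps a series $(x_t)$ to the points $(x_t, x_{t+\tau}, \dots, x_{t+(d-1)\tau})$, where $\tau$ is the delay and $d$ is the embedding dimension. The minimal NumPy sketch below writes this map out explicitly, with `delay` and `dimension` standing for $\tau$ and $d$. The function name and the toy sine signal are illustrative assumptions. In practice, giotto-tda provides its own transformer for this step, `SingleTakensEmbedding` in `gtda.time_series`. Because Python indexes from 0, row 0 of the output corresponds to $t = 1$.

```python
import numpy as np


def takens_embedding(x, delay, dimension):
    """Delay embedding of a 1-D series into R^dimension.

    Row t is (x[t], x[t + delay], ..., x[t + (dimension - 1) * delay]).
    """
    x = np.asarray(x, dtype=float)
    n_points = len(x) - (dimension - 1) * delay
    if n_points <= 0:
        raise ValueError("series too short for the chosen delay and dimension")
    # Column i holds the series shifted by i * delay.
    columns = [x[i * delay: i * delay + n_points] for i in range(dimension)]
    return np.stack(columns, axis=1)


signal = np.sin(np.linspace(0, 4 * np.pi, 200))
cloud = takens_embedding(signal, delay=10, dimension=3)  # 180 points in R^3
```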

Table 6.

Time series datasets.

Using giotto-tda, we computed zero-, one- and two-dimensional persistent features and joined them together. The final results are reported in Table 7.
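giotto-tda stores a persistence diagram as an array of (birth, death, homology dimension) triples. This representation makes it possible to join features of different dimensions into a single diagram. The minimal NumPy sketch below shows that joining step. It assumes the per-dimension diagrams are given as a dictionary, which is only an illustrative input format, and the helper name is hypothetical.

```python
import numpy as np


def join_diagrams(diagrams_by_dim):
    """Stack per-dimension diagrams {q: [(birth, death), ...]} into a single
    (n, 3) array whose rows are (birth, death, q)."""
    blocks = []
    for q, diagram in sorted(diagrams_by_dim.items()):
        points = np.asarray(diagram, dtype=float).reshape(-1, 2)
        dims = np.full((len(points), 1), float(q))  # homology dimension column
        blocks.append(np.hstack([points, dims]))
    return np.vstack(blocks)


joined = join_diagrams({0: [(0.0, 1.0), (0.0, 2.0)], 1: [(1.5, 2.5)], 2: []})
```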

Table 7.

Balanced Accuracy on the time series datasets. The best results are highlighted in bold.

As in the previous examples, the SWK achieved the best results, with slightly better performance in terms of balanced accuracy.

4. Discussion

In this paper, we compared the performance of five Persistence Kernels applied to data of different natures. The results show that the different PKs are indeed comparable in terms of accuracy and that no PK clearly emerged above the others. However, in many cases, the SWK and the PFK performed slightly better. In addition, from a purely computational point of view, the SWK is to be preferred, since by construction its pre-Gram matrix does not depend on the kernel parameter. Therefore, in practice, the user has to compute this matrix on the whole dataset only once at the beginning and can then select suitable subsets of rows and columns to perform the training, cross-validation and test phases. This is a relevant advantage, as it reduces computational costs and time compared with the other kernels. Another aspect to be considered, as in the case of graphs, is how to choose the function $f$ that provides the filtration. The choice of such a function is still an open problem and an interesting field of research: a good choice would allow one to better extract the intrinsic information from data, thus improving the classifier's performance. For the sake of completeness, we recall that there is also an interesting direction of research in the literature whose aim is to build new PKs starting from the five main kernels introduced in Section 2.3. Starting from the PKs mentioned in the previous sections, the authors of [15,41] studied how to modify them, obtaining the so-called Variably Scaled Persistence Kernels, which are Variably Scaled Kernels applied to the classification context. The results reported by the authors are promising, so this could be another interesting direction for further analysis.
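A minimal NumPy sketch illustrates the computational advantage of the SWK. It follows the sliced Wasserstein approximation of Carrière et al. [11]: both diagrams are projected onto a finite set of directions, after adding to each diagram the diagonal projections of the other so that both have the same number of points. The kernel is then $k_{SW} = \exp(-SW/(2\sigma^2))$. The number of directions, the helper names, the toy diagrams and the train/test split are assumptions, and this is not the code released with the paper. The pre-Gram matrix is computed once, and symmetry means only the entries below the diagonal need to be filled. Each candidate $\sigma$ then costs only an element-wise exponential, and the training and test blocks are obtained by selecting rows and columns.

```python
import numpy as np


def sliced_wasserstein(d1, d2, n_directions=50):
    """Approximate sliced Wasserstein distance between two persistence
    diagrams given as arrays of (birth, death) points."""
    d1 = np.asarray(d1, dtype=float).reshape(-1, 2)
    d2 = np.asarray(d2, dtype=float).reshape(-1, 2)
    # Add to each diagram the diagonal projections of the other one,
    # so that both point sets have the same cardinality.
    proj1 = np.repeat(d1.mean(axis=1, keepdims=True), 2, axis=1)
    proj2 = np.repeat(d2.mean(axis=1, keepdims=True), 2, axis=1)
    a = np.vstack([d1, proj2])
    b = np.vstack([d2, proj1])
    # Directions theta uniformly spaced in [-pi/2, pi/2).
    thetas = np.linspace(-np.pi / 2, np.pi / 2, n_directions, endpoint=False)
    lines = np.stack([np.cos(thetas), np.sin(thetas)], axis=1)
    # For equal-size 1-D point sets, Wasserstein-1 is the L1 gap of sorted values.
    pa = np.sort(a @ lines.T, axis=0)
    pb = np.sort(b @ lines.T, axis=0)
    return np.abs(pa - pb).sum(axis=0).mean()


def sw_pregram(diagrams, n_directions=50):
    """Symmetric matrix of pairwise SW distances: only entries below the
    diagonal are computed, then mirrored (the diagonal is zero)."""
    n = len(diagrams)
    dist = np.zeros((n, n))
    for i in range(n):
        for j in range(i):
            dist[i, j] = dist[j, i] = sliced_wasserstein(diagrams[i], diagrams[j], n_directions)
    return dist


def sw_gram(pregram, sigma):
    """SWK Gram matrix exp(-SW / (2 sigma^2)), obtained element-wise."""
    return np.exp(-pregram / (2.0 * sigma ** 2))


diagrams = [np.array([[0.0, 1.0]]),
            np.array([[0.0, 1.2]]),
            np.array([[0.5, 3.0], [1.0, 1.5]])]
P = sw_pregram(diagrams)            # expensive step, done once
train, test = [0, 1], [2]           # hypothetical split
for sigma in (0.1, 1.0, 10.0):      # parameter search reuses P
    K = sw_gram(P, sigma)
    K_train = K[np.ix_(train, train)]
    K_test = K[np.ix_(test, train)]
```

The matrices `K_train` and `K_test` have the form expected by a support vector machine that accepts precomputed kernels.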

Author Contributions

Conceptualization, C.B. and S.D.M.; methodology, C.B. and S.D.M.; software, C.B.; writing—original draft preparation, C.B. and S.D.M.; supervision, S.D.M.; funding acquisition, S.D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was carried out as part of RITA “Research ITalian network on Approximation” and as part of the UMI topic group “Teoria dell’Approssimazione e Applicazioni”. The authors are members of the INdAM-GNCS Research group. The project was also funded by the European Union-Next Generation EU under the National Recovery and Resilience Plan (NRRP), Mission 4 Component 2 Investment 1.1—Call PRIN 2022 No. 104 of 2 February 2022 of the Italian Ministry of University and Research; Project 2022FHCNY3 (subject area: PE—Physical Sciences and Engineering) “Computational mEthods for Medical Imaging (CEMI)”.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| TDA | Topological Data Analysis |
| PD | Persistence Diagram |
| PK | Persistence Kernel |
| PH | Persistent Homology |
| SVM | Support Vector Machine |
| PSSK | Persistence Scale-Space Kernel |
| PWGK | Persistence Weighted Gaussian Kernel |
| SWK | Sliced Wasserstein Kernel |
| PFK | Persistence Fisher Kernel |
| PI | Persistence Image |

References

- Townsend, J.; Micucci, C.P.; Hymel, J.H.; Maroulas, V.; Vogiatzis, K.D. Representation of molecular structures with persistent homology for machine learning applications in chemistry. Nat. Commun. 2020, 11, 3230.

- Asaad, A.; Ali, D.; Majeed, T.; Rashid, R. Persistent Homology for Breast Tumor Classification Using Mammogram Scans. Mathematics 2022, 10, 21.

- Pachauri, D.; Hinrichs, C.; Chung, M.K.; Johnson, S.C.; Singh, V. Topology based Kernels with Application to Inference Problems in Alzheimer’s disease. IEEE Trans. Med. Imaging 2011, 30, 1760–1770.

- Flammer, M. Persistent Homology-Based Classification of Chaotic Multi-variate Time Series: Application to Electroencephalograms. SN Comput. Sci. 2024, 5, 107.

- Majumdar, S.; Laha, A.K. Clustering and classification of time series using topological data analysis with applications to finance. Expert Syst. Appl. 2020, 162, 113868.

- Brüel-Gabrielsson, R.; Ganapathi-Subramanian, V.; Skraba, P.; Guibas, L.J. Topology-Aware Surface Reconstruction for Point Clouds. Comput. Graph. Forum 2020, 39, 197–207.

- Cohen-Steiner, D.; Edelsbrunner, H.; Harer, J. Stability of persistence diagrams. Discret. Comput. Geom. 2007, 37, 103–120.

- Reininghaus, J.; Huber, S.; Bauer, U.; Kwitt, R. A Stable Multi-Scale Kernel for Topological Machine Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4741–4748.

- Barnes, D.; Polanco, L.; Perea, J.A. A Comparative Study of Machine Learning Methods for Persistence Diagrams. Front. Artif. Intell. 2021, 4, 681174.

- Kusano, G.; Fukumizu, K.; Hiraoka, Y. Kernel method for persistence diagrams via kernel embedding and weight factor. J. Mach. Learn. Res. 2017, 18, 6947–6987.

- Carriere, M.; Cuturi, M.; Oudot, S. Sliced Wasserstein Kernel for Persistence Diagrams. Int. Conf. Mach. Learn. 2017, 70, 664–673.

- Le, T.; Yamada, M. Persistence Fisher Kernel: A Riemannian Manifold Kernel for Persistence Diagrams. In Proceedings of the 32nd Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018.

- Adams, H.; Emerson, T.; Kirby, M.; Neville, R.; Peterson, C.; Shipman, P.; Chepushtanova, S.; Hanson, E.; Motta, F.; Ziegelmeier, L. Persistence images: A stable vector representation of persistent homology. J. Mach. Learn. Res. 2017, 18, 1–35.

- Zhao, Q.; Wang, Y. Learning metrics for persistence-based summaries and applications for graph classification. arXiv 2019, arXiv:1904.12189.

- De Marchi, S.; Lot, F.; Marchetti, F.; Poggiali, D. Variably Scaled Persistence Kernels (VSPKs) for persistent homology applications. J. Comput. Math. Data Sci. 2022, 4, 100050.

- Fomenko, A.T. Visual Geometry and Topology; Springer Science and Business Media: New York, NY, USA, 2012.

- Rotman, J.J. An Introduction to Algebraic Topology; Springer: New York, NY, USA, 1988.

- Edelsbrunner, H.; Harer, J. Persistent homology—A survey. Contemp. Math. 2008, 453, 257–282.

- Edelsbrunner, H.; Harer, J. Computational Topology: An Introduction; American Mathematical Society: Providence, RI, USA, 2010.

- Guillemard, M.; Iske, A. Interactions between kernels, frames and persistent homology. In Recent Applications of Harmonic Analysis to Function Spaces, Differential Equations, and Data Science; Springer: Cham, Switzerland, 2017; pp. 861–888.

- Carlsson, G. Topology and data. Bull. Am. Math. Soc. 2009, 46, 255–308.

- Pickup, D.; Sun, X.; Rosin, P.L.; Martin, R.R.; Cheng, Z.; Lian, Z.; Aono, M.; Ben Hamza, A.; Bronstein, A.; Bronstein, M.; et al. SHREC’ 14 Track: Shape Retrieval of Non-Rigid 3D Human Models. In Proceedings of the 7th Eurographics workshop on 3D Object Retrieval, EG 3DOR’14, Strasbourg, France, 6 April 2014.

- Ali, D.; Asaad, A.; Jimenez, M.; Nanda, V.; Paluzo-Hidalgo, E.; Soriano-Trigueros, M. A Survey of Vectorization Methods in Topological Data Analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 14069–14080.

- Scholkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization and Beyond; The MIT Press: Cambridge, MA, USA, 2002; ISBN 978-026-225-693-3.

- Fasshauer, G.E. Meshfree Approximation with MATLAB; World Scientific: Singapore, 2007; ISBN 978-981-270-634-8.

- The GUDHI Project, GUDHI User and Reference Manual, 3.5.0 Edition, GUDHI Editorial Board. 2022. Available online: https://gudhi.inria.fr/doc/3.5.0/ (accessed on 13 January 2022).

- Tralie, C.; Saul, N.; Bar-On, R. Ripser.py: A lean persistent homology library for python. J. Open Source Softw. 2018, 3, 925.

- Giotto-tda 0.5.1 Documentation. 2021. Available online: https://giotto-ai.github.io/gtda-docs/0.5.1/library.html (accessed on 25 January 2019).

- Saul, N.; Tralie, C. Scikit-tda: Topological Data Analysis for Python. 2019. Available online: https://docs.scikit-tda.org/en/latest/ (accessed on 25 January 2019).

- Grandini, M.; Bagli, E.; Visani, G. Metrics for multi-class classification: An overview. arXiv 2020, arXiv:2008.05756.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Sonego, P.; Pacurar, M.; Dhir, S.; Kertész-Farkas, A.; Kocsor, A.; Gáspári, Z.; Leunissen, J.A.M.; Pongor, S. A Protein Classification Benchmark collection for machine learning. Nucleic Acids Res. 2006, 35, D232–D236.

- Sun, J.; Ovsjanikov, M.; Guibas, L. A Concise and Provably Informative Multi-Scale Signature Based on Heat Diffusion. Comput. Graph. Forum 2009, 28, 1383–1392.

- Lee, D.; Lee, S.H.; Jung, J.H. The effects of topological features on convolutional neural networks—An explanatory analysis via Grad-CAM. Mach. Learn. Sci. Technol. 2023, 4, 035019.

- LeCun, Y.; Cortes, C. MNIST Handwritten Digit Database. 2010. Available online: https://yann.lecun.com/exdb/mnist/ (accessed on 10 November 1998).

- Garin, A.; Tauzin, G. A Topological “Reading” Lesson: Classification of MNIST using TDA. In Proceedings of the 18th IEEE International Conference On Machine Learning And Applications, Boca Raton, FL, USA, 16–19 December 2019; pp. 1551–1556.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-mnist: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747.

- Aktas, M.E.; Akbas, E.; El Fatmaoui, A. Persistent Homology of Networks: Methods and Applications. Appl. Netw. Sci. 2019, 4, 61.

- Ravishanker, N.; Chen, R. An introduction to persistent homology for time series. WIREs Comput. Stat. 2021, 13, e1548.

- Dau, H.A.; Keogh, E.; Kamgar, K.; Yeh, C.M.; Zhu, Y.; Gharghabi, S.; Ratanamahatana, C.A.; Chen, Y.; Hu, B.; Begum, N.; et al. University of California Riverside. Available online: https://www.cs.ucr.edu/~eamonn/time_series_data_2018/ (accessed on 1 October 2018).

- De Marchi, S.; Lot, F.; Marchetti, F. Kernel-Based Methods for Persistent Homology and Their Applications to Alzheimer’s Disease. Master’s Thesis, University of Padova, Padova, Italy, 25 June 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).