Abstract

Oracle bone characters (OBCs) are crucial for understanding ancient Chinese history, but existing recognition methods only recognize known categories in labeled data and neglect novel categories in unlabeled data. This work introduces a novel approach to discovering new OBC categories in unlabeled data through generalized category discovery. We address challenges posed by the intrinsic characteristics of OBCs, namely misleading contrastive views from random cropping, sub-optimal learned representations, and insufficient supervision for unlabeled data. Our method features a symmetrical structure enhanced by character component distillation and a self-merged pseudo-label. We use random geometric transforms to create symmetrical contrastive views that avoid misleading views. Then, the proposed character component distillation procedure optimizes the character components shared across symmetrical views for a more transferable representation. Finally, we construct a self-merged pseudo-label from the model and a symmetrical teacher model to provide stable and robust supervision for unlabeled data. Extensive experiments validate the superiority of our method in recognizing ‘All’ and ‘Novel’ OBC categories, providing an effective tool to aid OBC researchers.

1. Introduction

Oracle bone characters (OBCs) record divinations and historical events of the Shang dynasty and hold the key to unlocking the mysteries of ancient Chinese history. Recognizing novel OBC categories is essential for understanding and deciphering the information conveyed by these ancient scripts. Owing to the scarcity and complexity of the original OBC materials, OBC research has long been dominated by authoritative experts. Recently, advances in deep learning [1,2] and digitization have enabled researchers to apply deep learning techniques to advanced OBC research, such as font translation [3,4], rejoining [5], and retrieval [6,7]. Multi-modal large language models have even been successfully applied to generate visual guides that help people better understand OBCs, by training with corresponding textual contextualization [8]. As a fundamental task, OBC recognition is vital for these advanced applications and for practical OBC research. About 4500 OBC categories have been confirmed from unearthed oracle bones, of which only about 1000 categories have been recognized. Hence, recognizing these novel categories is of great significance.

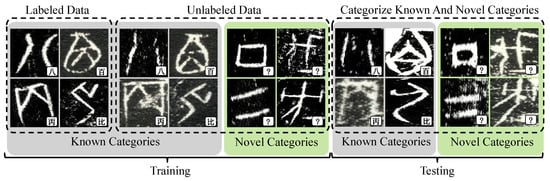

For OBC recognition, supervised classification methods [9,10,11,12] and unsupervised domain adaptation methods [13,14] have been successfully applied to recognize authentic OBC images. However, the key issue of these methods is their limited recognition capability: the category set is fixed to the labeled set used for training, so they can only recognize known OBC categories. Meanwhile, annotating massive numbers of OBC images requires domain knowledge and is time-consuming in practice. Considering the lack of labeled data and the high annotation cost of OBCs, data augmentation methods have also been explored [15,16,17]. However, these methods also only recognize OBC categories occurring in labeled data and assume consistent category sets between labeled and unlabeled data, whereas unlabeled data may contain novel OBC categories that do not occur among the labeled categories. Therefore, a method is urgently needed that can leverage unlabeled data and recognize new categories from it to meet these practical needs in OBC research. We term this objective OBC category discovery, as depicted in Figure 1. The goal of OBC category discovery is to train a model on labeled and unlabeled data simultaneously, where the labeled data contain only known OBC categories while the unlabeled data also contain novel OBC categories. After training, the model is required to recognize both the known and the novel categories occurring in the labeled and unlabeled data.

Figure 1.

An illustration of OBC category discovery. Labeled OBC images contain only known categories, whereas unlabeled images contain both known and novel categories; both known and novel categories need to be recognized. The Chinese characters at the bottom right of each OBC image are the corresponding deciphered Chinese characters.

Recently, the generalized category discovery (GCD) problem, first proposed in [18], has provided a way to recognize novel categories from unlabeled data. In [18], a pre-trained self-supervised model is fine-tuned by contrastive learning, and the objective is realized via non-parametric K-means clustering [19]; this approach has been followed and improved by several studies [20,21,22,23,24]. These methods pave the way for OBC category discovery. However, the characteristics of OBCs lead to several limitations when GCD methods developed for common objects are applied to OBCs: (1) Random cropping used in contrastive learning may generate misleading views, since OBCs are characterized by high inter-class similarity and extensive noise, especially when data are scarce. Consider a complex OBC cropped into two views that coincidentally match two distinct simple OBC categories: neither instance-level nor category-level contrastive learning is reasonable here, because these views represent two categories that differ from the category of the original image. (2) Image-level contrastive learning or distillation is sub-optimal because it neglects the components shared by different OBC categories. The prior knowledge that characters are composed of shared components could be the key to transferable representations, just as rarely used Chinese characters can often be guessed from their radicals. (3) The supervision for labeled and unlabeled data is asymmetrical, leaving unlabeled data insufficiently supervised, especially given the high inter-class similarity between novel and known OBC categories. The supervision for unlabeled data usually consists of instance-level contrastive learning or self-distillation, while the supervision for labeled data includes category-level contrastive learning as well as ground-truth optimization.

To address these limitations, we propose an OBC category discovery method enhanced by a character component distillation procedure and a self-merged pseudo-label. Concretely, we employ random geometric transforms instead of random cropping to avoid misleading views and overfitting on limited data. The character component distillation procedure optimizes the features of character components in symmetrical views, which are extracted using masks generated by pixel clustering. The self-merged pseudo-label is constructed from the model's prediction and a symmetrical teacher that provides stable features for known categories; we use this robust pseudo-label to supervise unlabeled data, compensating for the asymmetrical supervision between labeled and unlabeled data. Extensive experiments on three OBC recognition datasets demonstrate the superiority of our method over state-of-the-art methods. In summary, our main contributions are as follows:

- We propose an OBC category discovery method with a symmetrical structure that effectively recognizes novel OBC categories from unlabeled data.

- To reinforce the learned representation, we propose a character component distillation procedure that distills character components in symmetrical views by extracting spatially disjoint components from foreground masks obtained by K-means clustering.

- To compensate for the asymmetrical supervision, we propose a self-merged pseudo-label based on the predictions of the model and a symmetrical teacher for known and novel categories as stable and robust supervision for unlabeled data.

2. Related Research

2.1. Oracle Bone Character Recognition

Oracle bone character recognition is fundamental for high-level OBC research. Early attempts were primarily based on traditional low-level image features. Guo et al. [25] use shape- and sketch-related image features to build a hierarchical representation for OBCs. Meng et al. [26] use the Hough transform and template matching to recognize OBCs. Noticing the great potential of deep learning, OBC researchers have adopted deep convolutional neural networks [9,10,11] and transformers [12] for OBC recognition. More recently, more practical problems have been explored in OBC recognition. Some studies address the inherent class imbalance of OBCs with data augmentation. Yue et al. [15] propose a WGAN-based data augmentation method to generate OBC images similar to the original images. Wang et al. [16] propose a CycleGAN-based data augmentation method to generate OBC images from glyphs. Li et al. [17] consider the feature similarity between similar OBC images and propose a data augmentation method based on adversarial learning and mixup, which improves the generalization of tail classes by mixing similar OBC images. The main deficiency of mainstream supervised recognition methods is that they are limited to the labeled categories used for training and cannot recognize samples from novel OBC categories. Other studies [13,14] exploit clean, standard handwritten OBC fonts provided by experts to improve recognition performance on noisy authentic OBC images. Unsupervised domain adaptation has been adopted to transfer knowledge from handwritten OBC fonts to authentic OBC images: the model is trained on a labeled source domain (e.g., handwritten OBC fonts) together with unlabeled data from the target domain (e.g., authentic OBC images) and is required to recognize images from the target domain.
However, these studies rely either on supervised learning with access to all category information or on partly unsupervised learning, where a shared category set between the labeled source domain and the unlabeled target domain is a prerequisite. R-GNN [27] uses recurrent graph neural networks to recognize OBC fonts by capturing both the local fine-grained details and the global contextual information of oracle bone inscriptions. However, this method focuses on determining the font style to identify the historical period of oracle bone scripts, not on recognizing novel OBC categories. Conf-UNet [28] recognizes OBCs by predicting the corresponding large seal scripts, achieving a preliminary extrapolation capability. However, this method requires a corresponding large seal script database for training and testing, and some OBCs may not have a strict one-to-one mapping to large seal scripts. In contrast, we do not assume a shared category set between labeled and unlabeled data, and our method can categorize novel categories in unlabeled data.

In summary, compared with previous research, our method can utilize unlabeled data and recognize novel OBC categories from unlabeled data. Our method achieves better performance than other GCD methods on three OBC recognition datasets. Therefore, our method not only fills the gap in OBC recognition research where recognition has been limited to labeled data but also promotes computer-aided OBC category discovery research.

2.2. Generalized Category Discovery

Generalized category discovery (GCD) aims to categorize both known and novel categories occurring in unlabeled data. A representation that transfers from known categories to novel categories is the key to categorizing novel categories. Better clustering utilization, richer supervision, and stronger augmentation are promising directions toward transferable and robust representations, for both parametric and non-parametric methods. Non-parametric methods usually tackle this task similarly to deep clustering [29], relying on K-means [19], so better clustering utilization is essential. GCD [18] first addresses this task with supervised contrastive learning [30] and unsupervised contrastive learning [31]. Although GCD successfully realizes the task, the supervision provided solely by contrastive learning is insufficient, and the relationships among unlabeled data are ignored. Some recent studies adopt community detection algorithms to realize category-level contrastive learning on unlabeled data: DCCL [20] adopts the InfoMap algorithm to find communities among unlabeled images and performs category-level contrastive learning between the assigned communities; Yang et al. [21] adopt the Louvain algorithm for a similar purpose to achieve category-level contrastive learning and constrain the consistency between weak and strong augmentations. These studies exploit the underlying relationships among unlabeled data to enhance their representation, but the community detection algorithms can be complex. CMS [24] further simplifies GCD with KNN and contrastive mean-shift learning, greatly enhancing the representation of unlabeled data through contrastive learning based on simple KNN neighbors. However, misleading views and insufficient supervision remain unresolved when such methods are applied to OBCs.
Meanwhile, parametric methods are faster at inference since they classify by computing similarities between parametric prototypes and image features, and they benefit from richer supervision and augmentation. SimGCD [22] proposes a parametric method built on a self-distillation framework with soft pseudo-labels and entropy regularization. The self-distillation objective and entropy regularization successfully alleviate the bias of parametric classifiers and enhance the learned representation, while the parametric classifier provides fast inference. However, when SimGCD is applied to OBCs, the problems of misleading views and insufficient supervision still need to be tackled. SPT [23] further introduces an alternating learning scheme of spatial augmentation that wraps image patches with learnable parameters, enhancing the model's ability to focus on local features for better transferable representations. However, this wrapping procedure may split the internal components of OBCs into pieces, leading to sub-optimal transferable representations.

In comparison, our method alleviates the misleading effect caused by random cropping views. Moreover, it reinforces the learned transferable representation through a character component distillation procedure and provides stable and robust pseudo-labels as sufficient supervision for unlabeled data.

3. Proposed Method

3.1. Preliminary

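Given a labeled dataset $\mathcal{D}_l=\{(x_i,y_i)\}\subset\mathcal{X}\times\mathcal{Y}_l$, where $\mathcal{Y}_l$ is the known category set, GCD aims to train a model with an additional unlabeled dataset $\mathcal{D}_u=\{(x_i,y_i)\}\subset\mathcal{X}\times\mathcal{Y}_u$, where $\mathcal{Y}_u$ is the unlabeled category set, which contains novel categories $\mathcal{Y}_u\setminus\mathcal{Y}_l$ in addition to known ones. The model is trained with both the labeled data $\mathcal{D}_l$ and the unlabeled data $\mathcal{D}_u$. In the training stage, the ground truth $y_i$ of an unlabeled sample $x_i\in\mathcal{D}_u$ is not accessible. The model is required to recognize samples from both known and novel categories in the test set $\mathcal{D}_t$, whose category set is $\mathcal{Y}_l\cup\mathcal{Y}_u$.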

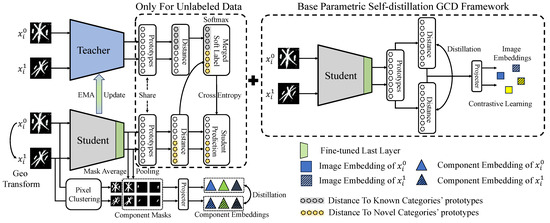

As depicted in Figure 2, we adopt the parametric self-distillation framework proposed by SimGCD [22], where a prototypical classifier R performs classification by calculating the cosine similarity between randomly initialized prototypes $\mathcal{C}=\{c_1,\ldots,c_K\}$ and the image feature $h_i=f(x_i)$ extracted by the backbone f. Following [22], the number of novel classes is assumed to be known a priori, so that $K=|\mathcal{Y}_l\cup\mathcal{Y}_u|$. The base learning objective can be divided into a contrastive learning part (Equations (1) and (2)) and a self-distillation part (Equation (4)). The contrastive learning part consists of an unsupervised part $\mathcal{L}^{u}_{rep}$ and a supervised part $\mathcal{L}^{s}_{rep}$.

Figure 2.

Overview of the proposed method. Random geometric transforms generate symmetrical contrastive views $x_i$ and $x_i'$. The character component distillation procedure distills the symmetrical character components in different views. Meanwhile, the self-merged pseudo-label, merged from the predictions of the model (also denoted as the student) and a symmetrical teacher model, is used as supervision for unlabeled data.

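Specifically, given two randomly augmented views $x_i$ and $x_i'$ of the same image in a batch $B$, the unsupervised part $\mathcal{L}^{u}_{rep}$ takes $x_i'$ as the positive sample for $x_i$, while the other images in the batch are negative samples. In contrast, the supervised part $\mathcal{L}^{s}_{rep}$ takes all samples that share the same class label with $x_i$ as positive samples, and samples from other classes as negative samples:

$$\mathcal{L}^{u}_{rep}=\frac{1}{|B|}\sum_{i\in B}-\log\frac{\exp\left(\langle z_i,z_i'\rangle/\tau_u\right)}{\sum_{i'\neq i}\exp\left(\langle z_i,z_{i'}\rangle/\tau_u\right)},\tag{1}$$

$$\mathcal{L}^{s}_{rep}=\frac{1}{|B^l|}\sum_{i\in B^l}\frac{1}{|\mathcal{N}_i|}\sum_{q\in\mathcal{N}_i}-\log\frac{\exp\left(\langle z_i,z_q'\rangle/\tau_c\right)}{\sum_{i'\neq i}\exp\left(\langle z_i,z_{i'}\rangle/\tau_c\right)},\tag{2}$$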

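where $\langle\cdot,\cdot\rangle$ calculates the cosine similarity between the embeddings $z_i=g(h_i)$ and $z_i'=g(h_i')$ projected by a multi-layer perceptron (MLP) g. $\tau_u$ and $\tau_c$ are temperature hyper-parameters, $\mathcal{N}_i$ is the index set of all samples that share the same label with $x_i$, and $|B^l|$ is the number of labeled samples in the batch. For the self-distillation part, the soft pseudo-label generated from one view is used to supervise the prediction of the other view, where $\tau_s$ and $\tau_t$ are temperature hyper-parameters for calculating the softmax probabilities of the prediction and the soft pseudo-label, respectively:

$$p_i^{(k)}=\frac{\exp\left(\langle h_i,c_k\rangle/\tau_s\right)}{\sum_{k'}\exp\left(\langle h_i,c_{k'}\rangle/\tau_s\right)},\qquad q_i'^{(k)}=\frac{\exp\left(\langle h_i',c_k\rangle/\tau_t\right)}{\sum_{k'}\exp\left(\langle h_i',c_{k'}\rangle/\tau_t\right)}.\tag{3}$$

The self-distillation part can be formulated as follows:

$$\mathcal{L}^{u}_{cls}=\frac{1}{|B|}\sum_{i\in B}H\left(q_i',p_i\right)-\varepsilon\,\mathcal{E}(\bar{p}),\qquad\mathcal{L}^{s}_{cls}=\frac{1}{|B^l|}\sum_{i\in B^l}H\left(y_i,p_i\right),\tag{4}$$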

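where H is the cross-entropy loss and $\mathcal{E}(\bar{p})=-\sum_k\bar{p}^{(k)}\log\bar{p}^{(k)}$ is a mean-entropy maximization regularizer [32] with weight $\varepsilon$, computed on the mean prediction $\bar{p}=\frac{1}{2|B|}\sum_{i\in B}\left(p_i+p_i'\right)$. The total loss of the base parametric self-distillation framework can be formulated as follows:

$$\mathcal{L}_{base}=(1-\lambda)\left(\mathcal{L}^{u}_{rep}+\mathcal{L}^{u}_{cls}\right)+\lambda\left(\mathcal{L}^{s}_{rep}+\mathcal{L}^{s}_{cls}\right),\tag{5}$$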

where $\lambda$ is the weight of supervised learning, set to 0.35 following [22].
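To make the base objective concrete, the following NumPy sketch computes the contrastive terms (Equations (1) and (2)), the self-distillation terms with the mean-entropy regularizer, and their weighted combination with λ = 0.35. It is a minimal, gradient-free illustration under stated assumptions: the function names are hypothetical, and both views act as anchors in the contrastive loss (a common SimCLR/SupCon convention, not a detail confirmed by the text).

```python
import numpy as np


def l2n(x, eps=1e-12):
    """L2-normalize rows so that dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def logsumexp(a, axis=-1):
    m = a.max(axis=axis, keepdims=True)
    return m + np.log(np.exp(a - m).sum(axis=axis, keepdims=True))


def log_softmax(logits):
    return logits - logsumexp(logits, axis=-1)


def contrastive_loss(z, z_prime, labels, tau):
    """InfoNCE/SupCon over both views: positives share a label, the anchor itself is excluded.
    Passing labels = np.arange(B) leaves the other view as the only positive (unsupervised case)."""
    feats = l2n(np.concatenate([z, z_prime]))
    lab = np.concatenate([labels, labels])
    sim = feats @ feats.T / tau
    self_mask = np.eye(len(feats), dtype=bool)
    sim = np.where(self_mask, -np.inf, sim)          # drop i' = i from the denominator
    log_prob = sim - logsumexp(sim, axis=1)
    pos = (lab[:, None] == lab[None, :]) & ~self_mask
    per_anchor = -np.where(pos, log_prob, 0.0).sum(axis=1) / pos.sum(axis=1)
    return per_anchor.mean()


def self_distillation_loss(h, h_prime, prototypes, tau_s, tau_t, eps_weight):
    """Sharpened prediction of view x' supervises view x; the mean entropy is maximized."""
    c = l2n(prototypes)
    log_p = log_softmax(l2n(h) @ c.T / tau_s)                    # student prediction p_i
    q_prime = np.exp(log_softmax(l2n(h_prime) @ c.T / tau_t))    # soft pseudo-label q'_i
    cross_entropy = -(q_prime * log_p).sum(axis=1).mean()
    log_p_prime = log_softmax(l2n(h_prime) @ c.T / tau_s)
    p_mean = np.exp(np.concatenate([log_p, log_p_prime])).mean(axis=0)
    mean_entropy = -(p_mean * np.log(p_mean + 1e-12)).sum()
    return cross_entropy - eps_weight * mean_entropy


def supervised_ce(h, labels, prototypes, tau_s):
    """Cross-entropy of the prototype classifier against ground-truth labels."""
    log_p = log_softmax(l2n(h) @ l2n(prototypes).T / tau_s)
    return -log_p[np.arange(len(labels)), labels].mean()


def base_loss(rep_u, cls_u, rep_s, cls_s, lam=0.35):
    """(1 - lambda) * unsupervised terms + lambda * supervised terms."""
    return (1 - lam) * (rep_u + cls_u) + lam * (rep_s + cls_s)
```

In a real training loop these terms would be computed on framework tensors with automatic differentiation; the NumPy version only shows how the pieces fit together.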

3.2. Geometric Transforms for Generating Contrastive Views

Learning on contrastive views generated by random augmentation of the same image is the fundamental step in contrastive learning [31,33] for learning better image representations, and it is also adopted in GCD methods [18,22,23,24]. The most common practice for generating contrastive views is random cropping. However, random cropping is potentially harmful to OBCs, which are characterized by high inter-class similarity and extensive noise: a cropped OBC image that misses key parts may resemble a different category and thus form a misleading positive pair, and training on such views may eventually lead to overfitting.

To alleviate the misleading effect of random cropping while preserving the variance of contrastive views, we propose to employ geometric transforms as the alternative, as depicted in Figure 3. Specifically, we apply only a random flip to obtain $x_i$, and we apply random scaling, translation, rotation, and shearing to generate $x_i'$ from $x_i$. These geometric transforms suit OBCs based on the insight that handwritten characters can be crooked, yet each character remains highly similar to its crooked counterpart. In this manner, we generate symmetrical contrastive views of the same OBC image that keep its essential structure.
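The view-generation step can be sketched in NumPy as follows, using the ranges given in the implementation details (scaling 0.6 to 1.1, translation within ±2%, rotation within ±10 degrees, shearing within ±45 degrees). The composition order (scale, rotation, then shear), a horizontal-only flip and shear, nearest-neighbour sampling, and a constant background fill are assumptions of this sketch, and the function names are hypothetical. In a PyTorch pipeline, `torchvision.transforms.RandomAffine` provides comparable random affine augmentation.

```python
import numpy as np


def random_affine(rng, h, w, scale=(0.6, 1.1), translate=0.02, degrees=10.0, shear=45.0):
    """Sample a forward affine map (2x2 matrix A, shift t in pixels) about the image centre."""
    s = rng.uniform(*scale)
    theta = np.deg2rad(rng.uniform(-degrees, degrees))
    phi = np.deg2rad(rng.uniform(-shear, shear))
    rotation = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    shearing = np.array([[1.0, np.tan(phi)], [0.0, 1.0]])   # horizontal shear
    t = rng.uniform(-translate, translate, size=2) * np.array([w, h])
    return s * rotation @ shearing, t


def warp(img, A, t, fill=0.0):
    """out(p) = img(A^-1 (p - c - t) + c), sampled with nearest neighbours."""
    h, w = img.shape
    c = np.array([[(w - 1) / 2.0], [(h - 1) / 2.0]])
    ys, xs = np.mgrid[0:h, 0:w]
    out_xy = np.stack([xs.ravel(), ys.ravel()]).astype(float)
    src = np.linalg.inv(A) @ (out_xy - c - t[:, None]) + c
    sx, sy = np.rint(src).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.full(h * w, fill, dtype=img.dtype)              # pixels mapped from outside stay as background
    out[valid] = img[sy[valid], sx[valid]]
    return out.reshape(h, w)


def symmetrical_views(img, rng, fill=0.0):
    """View x: random horizontal flip only. View x': random affine transform of x."""
    x = img[:, ::-1] if rng.random() < 0.5 else img
    A, t = random_affine(rng, *img.shape)
    return x, warp(x, A, t, fill)
```

Because every transform is a global affine map, the whole character stays in view and its strokes keep their relative layout, unlike a crop that can discard key parts.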

Figure 3.

Comparison of random geometric transforms and random cropping.

3.3. Character Component Distillation

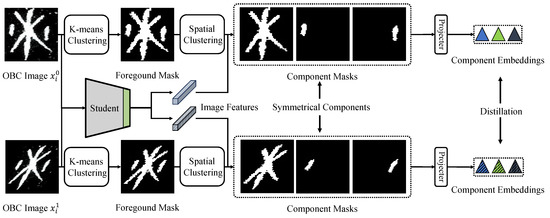

After random cropping is replaced with random geometric transforms, the symmetrical contrastive views $x_i$ and $x_i'$ are used for contrastive learning. Similar to modern Chinese characters, different OBCs share some inner components, which are critical for transferring recognition ability to new characters. Optimizing the consistency between these components across symmetrical views further reinforces the learned representation. Therefore, we propose the character component distillation procedure shown in Figure 4 to enhance the transferable representation from known to novel OBC categories by distilling the extracted features of symmetrical character components.

Figure 4.

The diagram of the proposed character component distillation procedures.

Similar to the concept of radicals in modern Chinese characters, connected components within a single OBC are likely to be shared across various OBC categories. To realize character component distillation, it is essential to split a single OBC into several connected components and extract features from them, which requires masks of these connected components. Inspired by [34], this goal can be realized through pixel clustering. First, the foreground mask of $x_i$ is obtained by K-means clustering [19] on pixel values; it divides the OBC image into character and background regions. We further split the foreground mask into several connected region masks with a density-based spatial clustering method [35]. Concretely, neighboring pixels sharing the same pixel value are assigned to the same connected region. After K-means and spatial clustering, we obtain the connected region masks $\{M_j\}_{j=1}^{m}$ for $x_i$, where m is the number of connected components. With these masks, we perform masked average pooling on the feature map $F_i$ of $x_i$ to extract a feature for each connected component:

$$r_j=\frac{\sum\left(F_i\odot M_j\right)}{|M_j|},\tag{6}$$

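where $r_j$ is the extracted feature of $M_j$, $|M_j|$ is the sum of the connected region mask $M_j$, and ⊙ denotes element-wise multiplication. In this way, we obtain the connected components' features $\{r_j\}_{j=1}^{m}$ for $x_i$ and $\{r_j'\}_{j=1}^{m}$ for $x_i'$. The core insight of character component distillation is the consistency of connected components across symmetrical views. To this end, the feature of each connected component is projected into an embedding space by an MLP $\psi$ and then classified by another prototypical classifier T with n randomly initialized prototypes $\{t_1,\ldots,t_n\}$:

$$p_j^{c,(k)}=\frac{\exp\left(\langle\psi(r_j),t_k\rangle/\tau_s\right)}{\sum_{k'=1}^{n}\exp\left(\langle\psi(r_j),t_{k'}\rangle/\tau_s\right)},\tag{7}$$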

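where n is set to 65536 in all experiments. Finally, the component soft pseudo-label $q_j^{c\prime}$, computed analogously from the other view, is used to supervise the prediction $p_j^{c}$. The character component distillation loss can be formulated as follows:

$$\mathcal{L}_{ccd}=\frac{1}{|B|}\sum_{i\in B}\frac{1}{m}\sum_{j=1}^{m}H\left(q_j^{c\prime},p_j^{c}\right),\tag{8}$$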
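The component pipeline of this subsection (foreground K-means, splitting into connected regions, masked average pooling, and the cross-view distillation loss) can be sketched in NumPy as below. This is a hypothetical illustration, not the authors' code: it assumes 2-means on grey values with the minority cluster taken as strokes, replaces the density-based clustering of [35] with plain 4-connected labelling (which matches the stated rule that neighbouring pixels with the same value share a region), assumes masks at the feature-map resolution and components aligned by index across the two views, and omits the projection MLP ψ.

```python
from collections import deque

import numpy as np


def foreground_mask(img, iters=20):
    """2-means on pixel intensities; the minority cluster is taken as strokes (assumption)."""
    v = img.ravel().astype(float)
    centres = np.array([v.min(), v.max()])
    for _ in range(iters):
        assign = np.abs(v[:, None] - centres[None, :]).argmin(axis=1)
        for k in range(2):
            if np.any(assign == k):
                centres[k] = v[assign == k].mean()
    fg = np.bincount(assign, minlength=2).argmin()
    return (assign == fg).reshape(img.shape)


def connected_components(mask):
    """4-connected labelling by breadth-first search; returns one boolean mask per region."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=int)
    count = 0
    for y0, x0 in zip(*np.nonzero(mask)):
        if labels[y0, x0]:
            continue
        count += 1
        labels[y0, x0] = count
        queue = deque([(y0, x0)])
        while queue:
            y, x = queue.popleft()
            for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return [labels == j for j in range(1, count + 1)]


def masked_average_pool(feature_map, masks):
    """r_j = sum(F * M_j) / |M_j| for a (C, H, W) feature map and (H, W) boolean masks."""
    return np.stack([(feature_map * m).sum(axis=(1, 2)) / m.sum() for m in masks])


def l2n(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def log_softmax(logits):
    m = logits.max(axis=-1, keepdims=True)
    return logits - m - np.log(np.exp(logits - m).sum(axis=-1, keepdims=True))


def ccd_loss(r, r_prime, prototypes, tau_s, tau_t):
    """Component pseudo-labels from view x' supervise component predictions of view x."""
    t = l2n(prototypes)
    log_p = log_softmax(l2n(r) @ t.T / tau_s)
    q = np.exp(log_softmax(l2n(r_prime) @ t.T / tau_t))
    return -(q * log_p).sum(axis=1).mean()
```

For example, an image with two separate stroke blobs yields two masks, and pooling a two-channel feature map over each mask gives one two-dimensional feature per component.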

3.4. Self-Merged Pseudo-Label

By equipping the model with the proposed character component distillation, the learned representation is reinforced. Furthermore, we seek richer supervision to compensate for the asymmetrical supervision between labeled and unlabeled data and obtain a robust representation. Motivated by the teacher–student framework commonly used in semi-supervised learning [36,37], we propose to use pseudo-labels to supervise the model on unlabeled data. Specifically, a symmetrical teacher backbone $\tilde{f}$ with the same structure as the student backbone f is slowly updated by the exponential moving average (EMA) $\tilde{\theta}\leftarrow\mu\tilde{\theta}+(1-\mu)\theta$ of the student backbone's parameters $\theta$. After $T_w$ epochs of warming up, the teacher generates stable features $\tilde{h}_i=\tilde{f}(x_i)$, and the teacher's prediction $\tilde{q}_i$, computed from $\tilde{h}_i$ and the shared prototypes, is used to supervise the student on unlabeled data:

$$\mathcal{L}_{t}=\frac{1}{|B^u|}\sum_{i\in B^u}H\left(\tilde{q}_i,p_i\right),\tag{9}$$
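A minimal sketch of the teacher mechanics described above, assuming parameters are stored as a dictionary of NumPy arrays (a hypothetical layout; in PyTorch one would loop over `parameters()` under `torch.no_grad()`). The momentum of 0.999 comes from the implementation details; the warm-up check and the plain teacher-distillation loss are illustrative helpers, not the authors' code.

```python
import numpy as np


def ema_update(teacher, student, momentum=0.999):
    """teacher <- momentum * teacher + (1 - momentum) * student, parameter by parameter."""
    for name, w in student.items():
        teacher[name] = momentum * teacher[name] + (1.0 - momentum) * w
    return teacher


def teacher_supervision_active(epoch, warmup_epochs):
    """The teacher's prediction supervises the student only once warm-up has finished."""
    return epoch >= warmup_epochs


def teacher_distillation_loss(log_p_student, teacher_logits, tau_t):
    """Cross-entropy between the teacher's softened prediction and the student's log-probabilities."""
    z = teacher_logits / tau_t
    q = np.exp(z - z.max(axis=1, keepdims=True))
    q /= q.sum(axis=1, keepdims=True)
    return -(q * log_p_student).sum(axis=1).mean()
```

With a momentum close to 1, the teacher changes slowly, which is exactly what makes it stable, and also what makes it slow to respond to novel categories early in training.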

However, the stability derived from slow updating biases the teacher toward known categories. Since the representation learning of novel categories relies entirely on self-distillation, the supervision of novel categories is insufficient at the early stage of training. As a result, the teacher is stable toward known categories but unresponsive to novel categories. In other words, the teacher feature $\tilde{h}_i$ is stable for known categories, while the student feature $h_i$ may be more suitable for novel categories. Based on this insight, we propose to merge the predictions of the teacher and the student into robust pseudo-labels, namely self-merged pseudo-labels. Since the prototypes used for classification are shared by the teacher and the student, we can directly merge the similarities of $\tilde{h}_i$ to the prototypes representing known categories $\{c_k\}_{k\in\mathcal{Y}_l}$ with the similarities of $h_i$ to the prototypes representing novel categories $\{c_k\}_{k\in\mathcal{Y}_u\setminus\mathcal{Y}_l}$. The self-merged pseudo-label can be formulated as follows:

$$\hat{q}_i^{(k)}=\frac{\exp\left(s_i^{(k)}/\tau_t\right)}{\sum_{k'}\exp\left(s_i^{(k')}/\tau_t\right)},\qquad s_i^{(k)}=\begin{cases}\langle\tilde{h}_i,c_k\rangle,&k\in\mathcal{Y}_l,\\\langle h_i,c_k\rangle,&k\in\mathcal{Y}_u\setminus\mathcal{Y}_l.\end{cases}\tag{10}$$
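The merging rule can be sketched as follows: similarities to known-category prototypes come from the teacher feature, similarities to novel-category prototypes come from the student feature, and a single softmax turns the merged row into a pseudo-label. The function name, the use of cosine similarity, and the temperature argument are assumptions of this NumPy sketch.

```python
import numpy as np


def l2n(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def self_merged_pseudo_label(h_student, h_teacher, prototypes, known_idx, tau):
    """Known-class similarities from the teacher, novel-class similarities from the student."""
    c = l2n(prototypes)                                        # prototypes shared by teacher and student
    sims = l2n(h_student) @ c.T                                # student cosine similarities
    sims[:, known_idx] = (l2n(h_teacher) @ c.T)[:, known_idx]  # overwrite known-class columns
    z = sims / tau
    q = np.exp(z - z.max(axis=1, keepdims=True))
    return q / q.sum(axis=1, keepdims=True)
```

Because both features are scored against the same prototypes, the merged similarities lie on a common scale, so no re-calibration is needed before the softmax.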

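Then, the cross-entropy loss is calculated between the student's prediction $p_i$ and the self-merged pseudo-label $\hat{q}_i$ with the same temperature. The self-merged pseudo-label loss can be formulated as follows:

$$\mathcal{L}_{sst}=\frac{\alpha_k}{|B^u_k|}\sum_{i\in B^u_k}H\left(\hat{q}_i,p_i\right)+\frac{\alpha_n}{|B^u_n|}\sum_{i\in B^u_n}H\left(\hat{q}_i,p_i\right),\tag{11}$$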

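where $B^u_k$ and $B^u_n$ denote the unlabeled images in the batch predicted as known and novel categories, respectively, with a confidence score above a fixed threshold $\delta$: $B^u_k=\{i\in B^u:\max_k\hat{q}_i^{(k)}>\delta,\ \arg\max_k\hat{q}_i^{(k)}\in\mathcal{Y}_l\}$ and $B^u_n=\{i\in B^u:\max_k\hat{q}_i^{(k)}>\delta,\ \arg\max_k\hat{q}_i^{(k)}\in\mathcal{Y}_u\setminus\mathcal{Y}_l\}$. $\alpha_k$ and $\alpha_n$ are balancing weights for known and novel categories, accounting for the different numbers of samples from known and novel categories in unlabeled data. Finally, the total loss can be formulated as follows:

$$\mathcal{L}=\mathcal{L}_{base}+\lambda_{ccd}\,\mathcal{L}_{ccd}+\lambda_{sst}\,\mathcal{L}_{sst},\tag{12}$$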

where $\lambda_{ccd}$ and $\lambda_{sst}$ are the balancing weights for the proposed character component distillation and self-merged pseudo-label, respectively.
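The confidence-filtered, class-balanced loss and the final weighted sum can be sketched as below. The sketch assumes soft pseudo-label targets, confidence measured as the maximum of the merged pseudo-label, and a per-group mean that skips empty groups; the threshold value is not given in the text and is left as a parameter, and the function names are hypothetical.

```python
import numpy as np


def sst_loss(log_p_student, q_merged, known_idx, threshold, alpha_known, alpha_novel):
    """Cross-entropy on confident samples, averaged separately over samples pseudo-labelled
    as known and as novel, then combined with the two balancing weights."""
    confident = q_merged.max(axis=1) > threshold
    is_known = np.isin(q_merged.argmax(axis=1), known_idx)
    ce = -(q_merged * log_p_student).sum(axis=1)
    loss = 0.0
    for selected, alpha in ((confident & is_known, alpha_known), (confident & ~is_known, alpha_novel)):
        if selected.any():                 # skip empty groups instead of averaging over nothing
            loss += alpha * ce[selected].mean()
    return loss


def total_loss(base, ccd, sst, weight_ccd, weight_sst):
    """Base objective plus the weighted component-distillation and pseudo-label terms."""
    return base + weight_ccd * ccd + weight_sst * sst
```

Averaging each group separately keeps the more numerous known-category samples from dominating the novel ones; the balancing weights (0.5/0.5 or 0.66/0.33 in the experiments) then set their relative influence.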

4. Experiments

4.1. Datasets and Evaluation

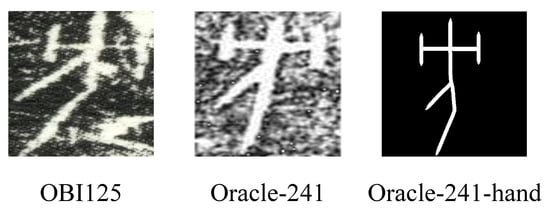

We evaluate our method on three OBC recognition datasets: Oracle-241 [13], Oracle-241-hand [13], and OBI125 [15]. Oracle-241 contains 241 OBC categories, with 50,168 images for training and 13,806 images for testing. Oracle-241-hand shares the same categories as Oracle-241, but its images are high-quality handwritten OBC fonts from experts, with 10,861 images for training and 3730 images for testing. OBI125 contains 125 OBC categories, with 3131 images for training and 730 images for testing. Examples from each dataset are shown in Figure 5. Following GCD [18], half of all categories are sampled as ‘Known’ categories, and the remaining categories are regarded as ‘Novel’ categories. For the ‘Known’ OBC categories, half of the training images are sampled as labeled data, while all remaining data from both ‘Known’ and ‘Novel’ OBC categories are used as unlabeled data. The data splits are shown in Table 1. To compute classification accuracy, we follow GCD [18] and use the Hungarian algorithm to find the optimal assignment between the predicted prototype assignments and the ground-truth labels. Accuracy is reported for ‘All’, ‘Known’, and ‘Novel’ OBC categories.
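The split protocol and the Hungarian-matched accuracy can be sketched as follows. This assumes integer class ids, a single optimal one-to-one mapping computed over all evaluated samples and then reused for the ‘Known’ and ‘Novel’ subsets, and SciPy's `linear_sum_assignment` as the Hungarian solver; the function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def gcd_split(labels, rng, known_frac=0.5, labeled_frac=0.5):
    """Sample known classes, then label a fraction of the known-class images."""
    classes = np.unique(labels)
    known = rng.choice(classes, size=int(len(classes) * known_frac), replace=False)
    known_idx = np.flatnonzero(np.isin(labels, known))
    chosen = rng.choice(known_idx, size=int(len(known_idx) * labeled_frac), replace=False)
    labeled = np.zeros(len(labels), dtype=bool)
    labeled[chosen] = True
    return np.sort(known), labeled           # unlabeled data: everything with ~labeled


def hungarian_accuracy(y_true, y_pred, known_classes):
    """All/Known/Novel accuracy after optimally matching predicted ids to ground-truth ids."""
    size = max(y_pred.max(), y_true.max()) + 1
    counts = np.zeros((size, size), dtype=int)
    np.add.at(counts, (y_pred, y_true), 1)   # counts[p, t]: co-occurrences of prediction p and label t
    rows, cols = linear_sum_assignment(counts, maximize=True)
    mapping = np.zeros(size, dtype=int)
    mapping[rows] = cols
    correct = mapping[y_pred] == y_true
    known = np.isin(y_true, known_classes)
    return correct.mean(), correct[known].mean(), correct[~known].mean()
```

For instance, predictions `[0, 0, 0, 1, 1, 1]` against labels `[0, 0, 1, 1, 2, 2]` are best matched as 0→0 and 1→2, giving 4 of 6 samples correct.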

Figure 5.

Example OBC images from each dataset.

Table 1.

Data splits used in the experiments.

4.2. Implementation Details

Following SimGCD [22], we adopt an ImageNet pre-trained DINO [38] ViT-B/16 [2] as the backbone f. We fine-tune only the last block of the backbone and use the [CLS] token as the image representation. We train our model with the SGD optimizer, an initial learning rate of 0.05, and a batch size of 128. For Oracle-241, is set to 2, the number of warming-up epochs is set to 70, and is set to . For Oracle-241-hand, is set to 4, the number of warming-up epochs is set to 40, and is set to . For OBI125, is set to 2, the number of warming-up epochs is set to 10, and is set to . The balancing weights for known and novel categories are both set to 0.5 for Oracle-241, and to 0.66 and 0.33, respectively, for Oracle-241-hand and OBI125. The teacher is EMA-updated with a momentum of 0.999 after the first three epochs. The geometric transformation includes scaling between 0.6 and 1.1, translation by −2% to 2%, rotation by −10 to 10 degrees, and shearing by −45 to 45 degrees. We keep the checkpoint with the highest average of the accuracy on known classes and the unsupervised Silhouette score. Other hyper-parameters and training schedules follow SimGCD [22]. All experiments are run on an NVIDIA GeForce RTX 4090 GPU.
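The checkpoint-selection rule can be sketched as follows. The equal weighting follows from "average"; computing the silhouette with Euclidean distances on the features, and using the predicted cluster assignments as its labels, are assumptions of this sketch, and the function names are hypothetical. `sklearn.metrics.silhouette_score` computes the same coefficient if scikit-learn is available.

```python
import numpy as np


def silhouette(features, labels):
    """Mean silhouette coefficient with Euclidean distances; singleton clusters score 0."""
    d = np.linalg.norm(features[:, None, :] - features[None, :, :], axis=-1)
    clusters = np.unique(labels)
    scores = np.zeros(len(features))
    for i in range(len(features)):
        same = labels == labels[i]
        if same.sum() < 2:
            continue
        a = d[i, same].sum() / (same.sum() - 1)   # mean distance to the other members (d[i, i] = 0)
        b = min(d[i, labels == c].mean() for c in clusters if c != labels[i])
        scores[i] = (b - a) / max(a, b)
    return scores.mean()


def selection_score(known_acc, features, predicted_labels):
    """Average of known-class accuracy and the unsupervised silhouette score."""
    return 0.5 * (known_acc + silhouette(features, predicted_labels))
```

Usage: evaluate `selection_score` after each epoch and keep the checkpoint with the largest value, e.g. `best = max(checkpoints, key=lambda ck: selection_score(ck.known_acc, ck.feats, ck.preds))`, where the checkpoint fields are hypothetical.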

4.3. Comparison with State-of-the-Art Methods

In this section, we compare our method with K-means [19] and several state-of-the-art methods including GCD [18], SimGCD [22], SPT [23], and CMS [24].

Evaluation on OBC recognition datasets. Table 2 shows the results on three OBC recognition datasets. Our method achieves the best performance on All and Novel categories on all evaluated OBC recognition datasets, especially on OBI125. These results demonstrate the superiority of our method in the OBC category discovery task. For All categories, our method outperforms CMS by 1.04% on Oracle-241 and 2.20% on Oracle-241-hand, and SPT by 14.52% on OBI125. For Novel categories, our method outperforms CMS by 3.85% on Oracle-241, SimGCD by 2.95% on Oracle-241-hand, and SPT by 15.43% on OBI125. These improvements demonstrate the effectiveness of our method in learning transferable representations of OBCs, which are characterized by high inter-class similarity. Our method also achieves the best performance on Known categories on OBI125. In comparison, applying K-means directly to the raw features without training yields poor performance, since the backbone has not been fine-tuned for OBC recognition. GCD lags behind the other state-of-the-art methods because it only considers basic unsupervised instance-level and supervised category-level contrastive objectives. SimGCD performs better owing to its self-distillation framework and effective entropy regularization, as does CMS owing to its effective category-level contrastive learning based on KNN neighbors of unlabeled data. However, misleading views and insufficient supervision remain unaddressed in SimGCD and CMS, whereas our method uses geometric transforms instead of cropping to avoid misleading views, and the proposed character component distillation and self-merged pseudo-label provide sufficient supervision. SPT performs strongly on OBI125 because its spatial prompts wrap image patches, enabling the model to focus on local features and alleviating overfitting on limited data.
However, the wrapping process used in SPT splits the internal components of OBCs into pieces, leading to sub-optimal transferable representations. In contrast, our method extracts spatially disjoint components for better transferable representations. In summary, the enhanced representation from character component distillation and the stable and robust supervision from the self-merged pseudo-label are the keys to the superior performance of our method. Notably, our method outperforms the other methods by a significant margin on OBI125, where the number of categories is large but samples are relatively scarce. This situation is typical in OBC research, where labeled data are scarce and many categories remain unexplored. Therefore, our method can provide a robust and helpful tool to assist researchers in discovering novel OBC categories from unlabeled data.

Table 2.

Results on three OBC recognition datasets.

4.4. Ablation Study

Effectiveness of proposed components. To verify the effectiveness of our proposed components, we conduct extensive ablation experiments, and the results are shown in Table 3. Our baseline is SimGCD with random cropping replaced by random geometric transforms. Adding the proposed character component distillation yields a consistent performance improvement on All and Novel categories across all three datasets, which substantiates that distilling character components is beneficial for learning transferable representations. Meanwhile, training with the proposed self-merged pseudo-label yields a similar boost, validating its effectiveness in providing rich supervision for robust representations. Notably, directly using the teacher's prediction for supervision results in poorer performance, since the teacher's prediction is less accurate than the student's on novel categories. Finally, with all the proposed components, our method reaches its best performance on All categories, demonstrating the compatibility and effectiveness of the proposed components. Moreover, on the noisy datasets (Oracle-241 and OBI125), the self-merged pseudo-label brings more improvement than character component distillation, unlike on the clean dataset (Oracle-241-hand), indicating the importance of rich supervision for unlabeled authentic OBC data.

Table 3.

Ablation study on the components. ‘CCD’ denotes the character component distillation. ‘SST’ denotes the self-merged pseudo-label, where ‘plain’ denotes directly using the teacher’s prediction as pseudo-label.

Influence of the character component distillation weight. We further investigate the influence of the weight of the character component distillation loss in Table 4. On the clean dataset Oracle-241-hand and the small-scale dataset OBI125, a relatively larger weight (0.0001) yields better performance, while on the noisy and large dataset Oracle-241, a relatively smaller weight (0.00005) is better. We posit that character component distillation plays a pivotal role when data are either clean or limited: clean OBC data facilitate more straightforward component extraction, while scarce data benefit more from the additional distillation signal.

Table 4.

Ablation study on the weight of character component distillation.

Influence of the number of warming-up epochs. We also investigate the influence of the number of warming-up epochs in Table 5. We select ‘immediate’, 10, 20, 30, 45, 50, 60, and 70 warming-up epochs for the ablation experiments, where ‘immediate’ denotes no warming up. A long warm-up is beneficial when OBC data are noisy and plentiful (Oracle-241), while a short warm-up is preferable when data are scarce (OBI125). We hypothesize that noisy, large-scale data require a longer warm-up for the teacher model to become stable and accurate, whereas scarce data need only a short warm-up to avoid overfitting.

Table 5.

Ablation study on the number of warm-up epochs.

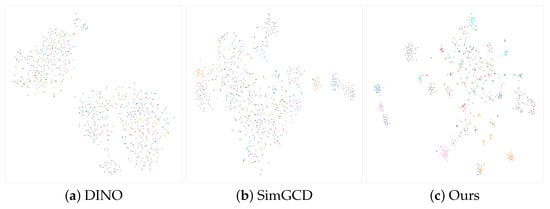

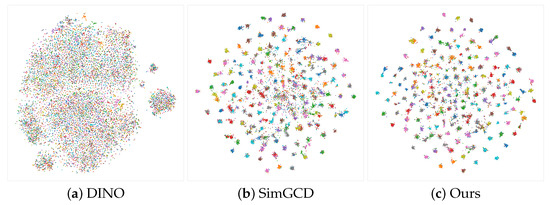

t-SNE Visualization. In addition, we visualize the feature space with t-SNE [39] in Figure 6 and Figure 7 to qualitatively verify the effectiveness of our method in representation learning. Compared with DINO and SimGCD, our method reduces noise and obtains more precise and compact clusters. For the Oracle-241 dataset, which represents abundant but noisy labeled data, our method obtains clearer clusters with fewer noisy sample points in the central region. This validates the effectiveness of our method under the extensive noise introduced by archaeological excavation and rubbing. For the OBI125 dataset, which represents limited labeled data, our method obtains more compact clusters than DINO and SimGCD. This demonstrates the effectiveness of our method when labeled data are scarce, matching the practical situation in which OBC images from novel categories are insufficient for unsupervised contrastive learning.

Figure 6.

t-SNE visualization of the feature space of OBI125.

Figure 7.

t-SNE visualization of the feature space of Oracle-241.

4.5. Time Complexity

To analyze the time complexity of our method, we compare the elapsed time of one training epoch on the Oracle-241 dataset. Since our method is based on the parametric self-distillation framework proposed by SimGCD [22], we only consider the extra time complexity it introduces. The elapsed time of one training epoch for our model and other models is shown in Table 6. Our method needs extra time for the character component distillation and the self-merged pseudo-label. Let the numbers of labeled and unlabeled samples be $N_l$ and $N_u$, the number of all categories $K$, the feature dimension of prototypes $d$, and the number of character component prototypes $n$; we first analyze the time complexity for one sample. For each sample, the extra time complexity of the character component distillation is $\mathcal{O}(nd)$, for calculating the distances between the L2-normalized feature and the $n$ character component prototypes. The extra time complexity of the self-merged pseudo-label is $\mathcal{O}(Kd)$, for searching the nearest prototype by calculating the distances between the L2-normalized feature and the $K$ prototypes. For the whole dataset, the extra time complexity of our method is therefore $\mathcal{O}((N_l + N_u)(n + K)d)$. Although our method needs more training time than other methods, this trade-off is reasonable given the improved performance.
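The per-sample costs described above come from distance computations between one L2-normalized feature and a prototype matrix. The following NumPy sketch makes them concrete: computing distances to an $m \times d$ prototype matrix costs $\mathcal{O}(md)$, which gives $\mathcal{O}(nd)$ for $n$ character component prototypes and $\mathcal{O}(Kd)$ for $K$ category prototypes. Normalizing the prototypes as well as the feature, using squared Euclidean distance, and the random sizes below are assumptions of this sketch.

```python
import numpy as np


def l2_normalize(x: np.ndarray, axis: int = -1, eps: float = 1e-12) -> np.ndarray:
    """Scale vectors to unit L2 norm (eps guards against division by zero)."""
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)


def prototype_distances(feature: np.ndarray, prototypes: np.ndarray) -> np.ndarray:
    """Squared Euclidean distances from one d-dim feature to m prototypes: O(m * d)."""
    f = l2_normalize(feature)             # shape (d,)
    p = l2_normalize(prototypes, axis=1)  # shape (m, d)
    return np.sum((p - f) ** 2, axis=1)   # shape (m,)


def nearest_prototype(feature: np.ndarray, prototypes: np.ndarray) -> int:
    """Index of the closest prototype, e.g. for assigning a pseudo-label."""
    return int(np.argmin(prototype_distances(feature, prototypes)))


rng = np.random.default_rng(0)
d, n, K = 8, 4, 5                            # illustrative sizes only
component_protos = rng.normal(size=(n, d))   # n character component prototypes
category_protos = rng.normal(size=(K, d))    # K category prototypes
feat = rng.normal(size=d)
print(prototype_distances(feat, component_protos).shape)  # n distances -> O(nd)
print(nearest_prototype(feat, category_protos))           # search over K -> O(Kd)
```

For unit vectors, the squared distance equals $2 - 2\cos\theta$, so searching for the nearest prototype is equivalent to searching for the highest cosine similarity.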

Table 6.

Running time (s) of one training epoch for different methods on Oracle-241.

5. Discussion

5.1. Methodology

Based on the experimental results and analysis, we summarize the key factors behind better performance in the OBC category discovery task, which is generally difficult and time-consuming with traditional research methods. Given the high inter-class similarity of OBCs, learning transferable and robust representations is essential for recognizing both known and novel OBC categories. The proposed distillation of symmetrical character components across different contrastive views effectively reinforces the learned representation. Meanwhile, the proposed self-merged pseudo-label compensates for the asymmetrical supervision between labeled and unlabeled data, which is also critical to providing sufficient supervision for robust representation. The experimental results show that the self-merged pseudo-label brings larger gains on noisy authentic OBC images. Overall, our method achieves the best recognition performance on ‘All’ and ‘Novel’ categories on all three OBC recognition datasets. For ‘All’ categories, it achieves recognition accuracies of 71.41% (2.88% improvement), 80.54% (1.99% improvement), and 64.66% (22.88% improvement) on the three datasets, respectively. For ‘Novel’ categories, it achieves 67.05% (4.44% improvement), 75.80% (2.95% improvement), and 45.40% (23.44% improvement), respectively. These significant improvements, especially on the OBI125 dataset, stem from alleviating misleading views, the more transferable representation learned through character component distillation, and the richer supervision from the self-merged pseudo-label. Our method thus provides an effective and robust tool that lets OBC researchers exploit massive unlabeled data, so that novel OBC categories within such data can be effectively recognized.

5.2. Choice of the Teacher–Student Framework

The teacher model in our work is an exponential moving average (EMA)-updated model with the same structure as the student model. We chose the teacher–student framework for several reasons. First, the teacher–student framework is well suited to learning from unlabeled data with limited labeled data, as demonstrated by its adoption in semi-supervised learning. Second, in addition to the self-distillation objective of the base parametric self-distillation framework, the teacher–student framework can provide extra supervision for unlabeled data in the form of high-quality pseudo-labels. Meanwhile, other ways of transferring knowledge from labeled to unlabeled categories, such as self-labeling, are prone to bias from error accumulation in the GCD setting. In comparison, the EMA-updated teacher provides more stable and robust supervision and is therefore more suitable for the OBC category discovery task.
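The EMA update that produces the teacher can be written in a few lines. In this sketch, parameters are stored in a plain dictionary of NumPy arrays, and the momentum value 0.999 is an illustrative default rather than the value used in the paper; in a deep learning framework, the same rule would be applied to every teacher parameter after each student optimization step.

```python
import numpy as np


def ema_update(teacher: dict, student: dict, momentum: float = 0.999) -> dict:
    """In-place EMA update: theta_t <- m * theta_t + (1 - m) * theta_s.

    The teacher has the same structure (same keys and shapes) as the student
    and receives no gradients; it only tracks a running average of the student.
    """
    for name, s_param in student.items():
        teacher[name] *= momentum
        teacher[name] += (1.0 - momentum) * s_param
    return teacher


teacher = {"w": np.zeros(2)}
student = {"w": np.ones(2)}
for step in range(3):
    ema_update(teacher, student, momentum=0.9)
print(teacher["w"])  # moves slowly toward the student's weights
```

A momentum close to 1 makes the teacher change slowly, which is what gives it the stability argued for above.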

5.3. Limitations and Future Research

Some limitations remain in applying our method to practical OBC research. The primary limitation is the imbalanced distribution of novel OBC categories in unlabeled data. This distribution can be severely imbalanced, which biases the model toward majority categories. Therefore, using a single fixed threshold for all OBC categories to generate pseudo-labels is sub-optimal, because the model is less confident on minority categories than on majority ones. Another limitation concerns the nature of character components: in both modern Chinese characters and OBCs, radicals are not always spatially disjoint components and may overlap with each other. To address these limitations, dynamic or adaptive thresholds, class reweighting methods, and character radical extraction modules could provide a more robust solution.
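To illustrate the first remedy, the sketch below contrasts a fixed confidence threshold with class-wise adaptive thresholds, loosely following the curriculum pseudo-labeling idea of FlexMatch, which is not part of this paper. Classes with few confident predictions, typically minority categories, receive lower thresholds so that more of their samples are pseudo-labeled. The function names, the normalization, and the toy probabilities are assumptions for illustration.

```python
import numpy as np


def fixed_threshold_mask(probs: np.ndarray, tau: float = 0.95) -> np.ndarray:
    """Accept a pseudo-label only if its confidence exceeds one global threshold."""
    return probs.max(axis=1) > tau


def adaptive_thresholds(probs: np.ndarray, tau: float = 0.95) -> np.ndarray:
    """Class-wise thresholds scaled by how many confident predictions each class has."""
    num_samples, num_classes = probs.shape
    conf, pred = probs.max(axis=1), probs.argmax(axis=1)
    counts = np.bincount(pred[conf > tau], minlength=num_classes)
    unused = num_samples - counts.sum()            # samples not yet confidently labeled
    beta = counts / max(counts.max(), unused, 1)   # normalized learning status in [0, 1]
    return tau * beta / (2.0 - beta)               # convex mapping: low beta -> low threshold


def adaptive_threshold_mask(probs: np.ndarray, tau: float = 0.95) -> np.ndarray:
    """Accept a pseudo-label if its confidence exceeds its predicted class's threshold."""
    thresholds = adaptive_thresholds(probs, tau)
    return probs.max(axis=1) > thresholds[probs.argmax(axis=1)]


# Three confident samples of a majority class 0, one unsure sample of a minority class 1.
probs = np.array([[0.97, 0.03], [0.96, 0.04], [0.98, 0.02], [0.40, 0.60]])
print(fixed_threshold_mask(probs))     # minority sample rejected
print(adaptive_threshold_mask(probs))  # minority sample accepted
```

The trade-off is that lower thresholds admit more noisy pseudo-labels for minority classes, so such schemes are usually paired with a warm-up or a more conservative mapping.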

Moreover, in more practical circumstances, novel OBC categories may be continually labeled by experts, whereas the amount of labeled data is fixed in the GCD setting. In future studies, we will explore active learning techniques to continually incorporate newly recognized samples from novel OBC categories and further improve performance.

6. Conclusions

In this paper, we proposed a novel oracle bone character (OBC) category discovery method built on a parametric self-distillation GCD framework that generates symmetrical contrastive views through random geometric transforms. Through the proposed character component distillation on symmetrical character components, we learn more transferable representations, which are essential for transferring knowledge from known to novel OBC categories. To further compensate for the asymmetrical supervision between labeled and unlabeled data and obtain robust representations, we construct a self-merged pseudo-label from the predictions of the model itself and a symmetrical teacher model to supervise unlabeled data. Compared with other methods, our method not only alleviates misleading views but also transfers better from known to novel categories, thanks to the representations learned by the character component distillation procedure. The richer supervision from the self-merged pseudo-label further enables our method to learn stronger and more robust representations from unlabeled data. Consequently, the experimental results verify that our method achieves the best performance in recognizing ‘All’ and ‘Novel’ OBC categories on three OBC recognition datasets, with particularly large gains on the OBI125 dataset (64.66% accuracy for ‘All’, 81.17% for ‘Known’, and 45.40% for ‘Novel’), where labeled data are limited and transferable, robust representations are therefore crucial. Our method thus provides a helpful tool to assist OBC researchers in recognizing novel OBC categories.

Author Contributions

X.W.: conceptualization, methodology, and writing—original draft preparation; Z.L.: validation and visualization; S.P.: supervision and investigation; Y.F.: investigation, writing—review and editing, and project administration. All authors have read and agreed to the published version of the manuscript.

Funding

The research was supported by the National Natural Science Foundation of China under Grant Nos. 61976132 and 61991410.

Data Availability Statement

The datasets used in this research are publicly available. Oracle-241 and Oracle-241-hand can be accessed at https://github.com/wm-bupt/STSN (accessed on 1 July 2024). OBI125 can be accessed at http://www.ihpc.se.ritsumei.ac.jp/Dataset/JOCCH2021/OBI125.rar (accessed on 1 July 2024).

Acknowledgments

This research was supported by Shanghai Technical Service Center of Science and Engineering Computing, Shanghai University.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

2. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

3. Gao, F.; Zhang, J.; Liu, Y.; Han, Y. Image Translation for Oracle Bone Character Interpretation. Symmetry 2022, 14, 743.

4. Guan, H.; Yang, H.; Wang, X.; Han, S.; Liu, Y.; Jin, L.; Bai, X.; Liu, Y. Deciphering Oracle Bone Language with Diffusion Models. arXiv 2024, arXiv:2406.00684.

5. Zhang, Z.; Guo, A.; Li, B. Internal Similarity Network for Rejoining Oracle Bone Fragment Images. Symmetry 2022, 14, 1464.

6. Gao, F.; Chen, X.; Li, B.; Liu, Y.; Jiang, R.; Han, Y. Linking unknown characters via oracle bone inscriptions retrieval. Multimed. Syst. 2024, 30, 125.

7. Hu, Z.; Cheung, Y.M.; Zhang, Y.; Zhang, P.; Tang, P.L. Component-Level Oracle Bone Inscription Retrieval. In Proceedings of the International Conference on Multimedia Retrieval, Phuket, Thailand, 10–14 June 2024; pp. 647–656.

8. Qiao, R.; Yang, L.; Pang, K.; Zhang, H. Making Visual Sense of Oracle Bones for You and Me. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 12656–12665.

9. Zhang, Y.K.; Zhang, H.; Liu, Y.G.; Yang, Q.; Liu, C.L. Oracle Character Recognition by Nearest Neighbor Classification with Deep Metric Learning. In Proceedings of the International Conference on Document Analysis and Recognition, Sydney, Australia, 20–25 September 2019; pp. 309–314.

10. Chen, S.; Xu, H.; Weize, G.; Xuxin, L.; Bofeng, M. A classification method of oracle materials based on local convolutional neural network framework. IEEE Comput. Graph. Appl. 2020, 40, 32–44.

11. Liu, M.; Liu, G.; Liu, Y.; Jiao, Q. Oracle Bone Inscriptions Recognition based on Deep Convolutional Neural Network. J. Image Graph. 2020, 8, 114–119.

12. Gan, J.; Chen, Y.; Hu, B.; Leng, J.; Wang, W.; Gao, X. Characters as Graphs: Interpretable Handwritten Chinese Character Recognition via Pyramid Graph Transformer. Pattern Recognit. 2023, 137, 109317.

13. Wang, M.; Deng, W.; Liu, C.L. Unsupervised Structure-Texture Separation Network for Oracle Character Recognition. IEEE Trans. Image Process. 2022, 31, 3137–3150.

14. Wang, M.; Deng, W.; Su, S. Oracle Character Recognition using Unsupervised Discriminative Consistency Network. Pattern Recognit. 2024, 148, 110180.

15. Yue, X.; Li, H.; Fujikawa, Y.; Meng, L. Dynamic Dataset Augmentation for Deep Learning-Based Oracle Bone Inscriptions Recognition. ACM J. Comput. Cult. Herit. 2022, 15, 1–20.

16. Wang, W.; Zhang, T.; Zhao, Y.; Jin, X.; Mouchere, H.; Yu, X. Improving Oracle Bone Characters Recognition via A CycleGAN-Based Data Augmentation Method. In Proceedings of the International Conference on Neural Information Processing, IIT, Indore, India, 22–26 November 2022; pp. 88–100.

17. Li, J.; Wang, Q.F.; Huang, K.; Yang, X.; Zhang, R.; Goulermas, J.Y. Towards Better Long-tailed Oracle Character Recognition with Adversarial Data Augmentation. Pattern Recognit. 2023, 140, 109534.

18. Vaze, S.; Han, K.; Vedaldi, A.; Zisserman, A. Generalized Category Discovery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 8–24 June 2022; pp. 7482–7491.

19. Arthur, D.; Vassilvitskii, S. K-means++: The Advantages of Careful Seeding. In Proceedings of the SODA, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

20. Pu, N.; Zhong, Z.; Sebe, N. Dynamic Conceptional Contrastive Learning for Generalized Category Discovery. In Proceedings of the Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7579–7588.

21. Yang, X.; Pan, X.; King, I.; Xu, Z. Generalized Category Discovery with Clustering Assignment Consistency. In Proceedings of the International Conference on Neural Information Processing, Changsha, China, 20–23 November 2023.

22. Wen, X.; Zhao, B.; Qi, X. Parametric Classification for Generalized Category Discovery: A Baseline Study. In Proceedings of the International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 16590–16600.

23. Wang, H.; Vaze, S.; Han, K. SPTNet: An Efficient Alternative Framework for Generalized Category Discovery with Spatial Prompt Tuning. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

24. Choi, S.; Kang, D.; Cho, M. Contrastive Mean-Shift Learning for Generalized Category Discovery. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 23094–23104.

25. Guo, J.; Wang, C.; Roman-Rangel, E.; Chao, H.; Rui, Y. Building hierarchical representations for oracle character and sketch recognition. IEEE Trans. Image Process. 2015, 25, 104–118.

26. Meng, L.; Fujikawa, Y.; Ochiai, A.; Izumi, T.; Yamazaki, K. Recognition of Oracular Bone Inscriptions using Template Matching. Int. J. Comput. Theory Eng. 2016, 8, 53.

27. Yuan, J.; Chen, S.; Mo, B.; Ma, Y.; Zheng, W.; Zhang, C. R-GNN: Recurrent graph neural networks for font classification of oracle bone inscriptions. Herit. Sci. 2024, 12, 30.

28. Xu, Y.; Feng, Y.; Liu, J.; Song, S.; Xu, Z.; Zhang, L. Conf-UNet: A Model for Speculation on Unknown Oracle Bone Characters. In Proceedings of the Knowledge Science, Engineering and Management; Springer Nature: Cham, Switzerland, 2023; pp. 89–103.

29. Caron, M.; Bojanowski, P.; Joulin, A.; Douze, M. Deep Clustering for Unsupervised Learning of Visual Features. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 139–156.

30. Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. In Proceedings of the Advances in Neural Information Processing Systems, virtual, 6–12 December 2020.

31. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G.E. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning, Addis Ababa, Ethiopia, 26–30 April 2020; Volume 119, pp. 1597–1607.

32. Assran, M.; Caron, M.; Misra, I.; Bojanowski, P.; Bordes, F.; Vincent, P.; Joulin, A.; Rabbat, M.; Ballas, N. Masked Siamese Networks for Label-efficient Learning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 456–473.

33. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R.B. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9726–9735.

34. Guan, T.; Shen, W.; Yang, X.; Feng, Q.; Jiang, Z.; Yang, X. Self-Supervised Character-to-Character Distillation for Text Recognition. In Proceedings of the International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 19473–19484.

35. Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; pp. 226–231.

36. Tarvainen, A.; Valpola, H. Mean Teachers are Better Role Models: Weight-averaged Consistency Targets Improve Semi-supervised Deep Learning Results. In Proceedings of the International Conference on Learning Representations (Workshop), Toulon, France, 24–26 April 2017.

37. Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.; Cubuk, E.D.; Kurakin, A.; Li, C. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. In Proceedings of the Advances in Neural Information Processing Systems, virtual, 6–12 December 2020.

38. Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. In Proceedings of the International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9630–9640.

39. van der Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).