Abstract

Integral–algebraic equations and their systems are a common description of many technical and engineering problems. Such models also often describe dependencies occurring in nature (e.g., the behavior of ecosystems). The integral equations appearing in these problems may be defined on two types of domains: symmetric or asymmetric. Depending on whether such symmetry is present in the system describing a given problem, an appropriate method must be chosen to solve it. In this task, the absence of symmetry is more advantageous, but the presented examples demonstrate how cases with symmetry can be approached. In this paper, we apply two methods to such problems: the analytical Differential Transform Method (DTM) and Physics-Informed Neural Networks (PINNs). We consider a wide class of these systems, including those with Volterra and Fredholm integrals (also combined within a single model). We demonstrate that, despite the complex nature of the problem, both methods are capable of handling such tasks and can therefore be successfully applied to the problems discussed in this article.

1. Introduction

Integral–algebraic equations and their systems (IAEs) are an important class of problems. They often appear in mathematical models in various fields, including biology, heat conduction, viscoelasticity, electrical engineering, and chemical reactor processes [1,2,3,4,5,6]. At the same time, IAEs are challenging, as there are no general analytical methods for solving them. In certain specific cases, when the equation has an appropriate form, an analytical solution is possible; more often than not, however, the form of the problem precludes such approaches. Various approximate techniques for solving these problems also exist. In the literature, we encounter methods based on Legendre polynomials, regularization methods, various multistep methods, and block pulse functions [7,8,9,10,11,12]. In most scientific papers, the IAEs describing a given problem are relatively simple, and in many cases there is only a single equation rather than a system. In some instances, systems of IAEs are considered; for example, systems of two equations are examined in [7,9,13], while systems of three equations are analyzed in [12,14]. In this paper, we consider systems of IAEs in a very general form, limiting neither the number of equations nor their structure. The studied systems may include equations containing both an integral part and an algebraic part. The integrals present in the IAEs can be of either Volterra or Fredholm type, they may appear multiple times in the equations, and the relationships describing the dependencies can be nonlinear. The number of equations that do or do not contain the above-mentioned components can vary, and some of these components may be absent from a given system of IAEs altogether.

In general, we therefore consider systems of the following form:

where

and

and where the functions , , , , , , , , , , , describe the relationships connecting the equations in the considered IAEs or in the integrals that appear in these equations.

To solve system (1), we use DTM (see Section 2) and PINNs (see Section 3). DTM has been applied to many different problems. The authors have successfully used it, among other things, to solve ODE problems and integral–differential equations with delayed arguments [15,16,17]. Other applications of this method, mainly to differential equations, include partial differential equations, integral equations, equations with shifted arguments, and fractional-order differential equations [18,19,20,21,22]. PINNs also have a wide range of applications and can be used to solve various types of problems, and a number of publications on them have recently appeared in the scientific literature. For example, paper [23] combines PINNs with metalearning and transfer learning concepts; its authors evaluate their approach on several canonical forward parameterized PDEs from the PINN literature. In [24], a PINN algorithm for the acoustic wave equation and full waveform inversions is presented. The authors of the present paper have successfully tested this method, for example, on variational calculus problems [25]. PINNs are also applicable to integro-differential equations [26]. Article [27] presents the application of stochastic procedures based on Levenberg–Marquardt backpropagation neural networks (LMBNNs) to modeling kidney dysfunction caused by hard water consumption; the accuracy of the model was validated by comparing the results, and the observed absolute errors were negligible.

2. Differential Transform Method

Zhou can be considered the creator of DTM, as he described it and applied it to electrical circuit problems in 1986 [28].

Definition 1.

The original is a function that can be expanded into a Taylor series around a certain point [28,29].

According to the above definition, and assuming that the function f is an original, we can write (for the specific case of the Taylor series with $x_0 = 0$, i.e., the Maclaurin series):

$$
f(x)=\sum_{k=0}^{\infty}\frac{f^{(k)}(0)}{k!}\,x^{k}.
$$

Thus, based on the uniqueness of this expansion, a function F (the transform) can be assigned to each original f. Let $k \in \mathbb{N}_0$:

$$
F(k)=\frac{1}{k!}\left.\frac{d^{k}f(x)}{dx^{k}}\right|_{x=0}.
$$

Considering the transform F of the original f, we can represent this original in the form

$$
f(x)=\sum_{k=0}^{\infty}F(k)\,x^{k}.
$$

Thanks to the properties of the Taylor transformation and the known transforms of basic functions, we can relatively easily determine the transforms of more complex functions, their derivatives, and their integrals. By applying these properties and using a computational platform, we can efficiently solve IAE problems.
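To make the definition and its inverse concrete, the following Python sketch tabulates the transform F(k) = f^(k)(0)/k! for two basic functions whose derivatives at zero are known in closed form, e^(λx) and sin x, and evaluates the truncated inverse transform f_N(x) = F(0) + F(1)x + ... + F(N)x^N with Horner's scheme. It is only an illustration, not the authors' Mathematica implementation, and the function names are hypothetical. Exact rational arithmetic (`fractions.Fraction`) is used for the coefficients so that they can be compared without rounding.

```python
from fractions import Fraction
from math import exp, factorial, sin


def transform_exp(lam, n_terms):
    """Taylor transform of e^(lam*x) at x0 = 0: F(k) = lam^k / k!."""
    return [Fraction(lam) ** k / factorial(k) for k in range(n_terms)]


def transform_sin(n_terms):
    """Taylor transform of sin(x) at x0 = 0: F(k) = sin^(k)(0) / k!."""
    # Derivatives of sin at 0 cycle through 0, 1, 0, -1.
    cycle = [0, 1, 0, -1]
    return [Fraction(cycle[k % 4], factorial(k)) for k in range(n_terms)]


def inverse_transform(coeffs, x):
    """Truncated inverse transform sum_k F(k) x^k, evaluated by Horner's scheme."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + float(c)
    return result


if __name__ == "__main__":
    E = transform_exp(1, 10)          # F(0), ..., F(9) for e^x
    print(inverse_transform(E, 1.0), exp(1.0))
    S = transform_sin(10)             # F(0), ..., F(9) for sin x
    print(inverse_transform(S, 0.5), sin(0.5))
```

With ten coefficients (N = 9), the truncated inverse transform of e^x at x = 1 differs from e by less than 10^-6, which is the size of the neglected tail of the series.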

Properties of the Taylor Transform

In this subsection, the basic properties of the Taylor transformation are presented. We restrict ourselves to the properties used in the examples below; many other properties and their proofs can be found in the cited literature.

In the following properties, we assume that x belongs to the domain of the considered function f, and .

- If , , then

- If , , then

- If , , then

- If , , then

- If , then

- If , , then

- If , then

- If , then

- If , then

- If , then

- If , then and, for :

- If , then and, for :

- If , then and, for :

The proofs of these properties are not difficult (some of them directly follow from the Taylor series expansions of known functions) and can be found in the cited literature.
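The sketch below implements, in Python, a few operations on truncated transforms that are standard in the DTM literature: linearity, the product rule (a Cauchy convolution of the coefficients), and the transform of a Volterra-type integral g(x) = ∫_0^x f(t) dt, for which G(0) = 0 and G(k) = F(k − 1)/k for k ≥ 1. We do not claim that these coincide one-to-one with properties (3)–(15) above, and the function names are hypothetical. Each list stores the coefficients F(0), F(1), F(2), and so on.

```python
from fractions import Fraction
from math import factorial


def t_add(F, G):
    """Transform of f + g: F(k) + G(k)."""
    return [p + q for p, q in zip(F, G)]


def t_scale(lam, F):
    """Transform of lam * f: lam * F(k)."""
    return [lam * p for p in F]


def t_mul(F, G):
    """Transform of f * g: Cauchy product sum_{i=0}^{k} F(i) G(k - i)."""
    n = min(len(F), len(G))
    return [sum(F[i] * G[k - i] for i in range(k + 1)) for k in range(n)]


def t_integral(F):
    """Transform of g(x) = int_0^x f(t) dt: G(0) = 0, G(k) = F(k - 1) / k."""
    return [Fraction(0)] + [Fraction(F[k - 1]) / k for k in range(1, len(F))]


def exp_transform(lam, n):
    """Transform of e^(lam*x): lam^k / k!, k = 0, ..., n - 1."""
    return [Fraction(lam) ** k / factorial(k) for k in range(n)]


if __name__ == "__main__":
    E1 = exp_transform(1, 8)
    # e^x * e^x = e^(2x), so both transforms must agree coefficient by coefficient.
    print(t_mul(E1, E1) == exp_transform(2, 8))
    # int_0^x e^t dt = e^x - 1: same coefficients as e^x except G(0) = 0.
    print(t_integral(E1))
```

The checks rely on the identities e^x · e^x = e^(2x) and ∫_0^x e^t dt = e^x − 1, whose transforms are known in closed form, so the convolution and integral rules can be verified exactly.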

3. Physics-Informed Neural Networks—Description

Physics-informed neural networks (PINNs) are an innovative deep learning approach to solving ordinary and partial differential equations, as well as integro-differential equations. Early contributions to this approach include the articles by Raissi et al. [30] and Karniadakis et al. [31]. PINNs combine neural network architectures with the governing physical principles by embedding the differential or integro-differential equations and the initial or boundary conditions directly into the network’s loss function. This encourages the model’s predictions to remain consistent with the underlying physical laws.

One of the key advantages of PINNs is their flexibility and efficiency: they offer an alternative to classical numerical methods, which can be hindered by computational cost and scalability issues, particularly in high-dimensional problems. Once trained, a PINN can be evaluated at arbitrary points of the domain, a notable benefit over classical techniques such as finite difference methods and other mesh-based methods. Furthermore, PINNs handle complex boundary and initial conditions well and can seamlessly integrate experimental data into the model. This capability not only enhances solution accuracy but also enables PINNs to adapt to real-world situations where data might be limited or noisy. As a result, PINNs have been applied with considerable success in diverse fields, including fluid dynamics, materials science, and biological systems [32,33,34].

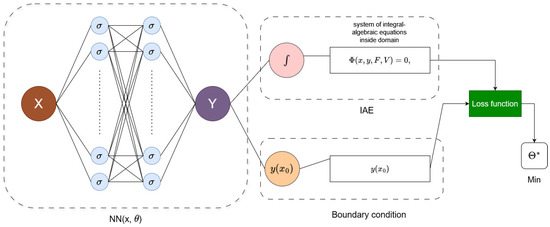

The PINN architecture is built upon standard elements of neural networks, such as feedforward processing, backpropagation, hidden layers (with a given number of nodes in each), and activation functions. The network is typically trained using optimization methods such as the Adam optimizer. The PINN framework used in this article comprises two key components (see Figure 1): a fully connected neural network and a loss function module, which computes the loss as a weighted norm of the residuals of both the governing system of IAEs and the boundary conditions. In Figure 1, X denotes the input to the fully connected neural network and Y denotes its output, i.e., the approximate values of the unknown functions of the considered problem. The loss function is defined as the sum of domain errors (arising from the system of integral–algebraic equations defining the problem) and boundary errors (resulting from the initial and boundary conditions). The network is trained to minimize this loss function, which yields network parameters that approximate the solution of the problem defined by the system of equations. Once the model is trained, a grid of points (arguments) can be provided as input, and the network outputs approximate values of the unknown functions at these points, i.e., the solution of the problem in discrete form.

Figure 1.

Schematic of a physics-informed neural network for solving a system of IAEs with boundary conditions.

For the considered system of integral–algebraic equations

and boundary conditions

a loss function is constructed:

where N is the number of training points inside the domain used in the training process. Building and training the neural network aims to find the best weights and biases (the model parameters) by minimizing the loss function .
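The training procedure described above can be sketched without a deep learning library if, as a simplifying assumption, the neural network is replaced by polynomial trial functions u(x) = Σ a_j x^j and v(x) = Σ b_j x^j, so that the Volterra integral and the gradients of the loss can be written by hand. The sketch uses a hypothetical two-equation system, u(x) − ∫_0^x v(t) dt = 1 and u(x) − v(x) = 0 on [0, 1] with the condition u(0) = 1 (exact solution u = v = e^x), a loss equal to the mean squared residuals at N = 32 equally spaced training points plus the squared boundary residual, and the standard Adam update rule. It is not the authors' TensorFlow code: in an actual PINN, the gradients would come from automatic differentiation (e.g., `tf.GradientTape`), and an integral of the network output would need some quadrature rule, which the text above does not specify. All names are hypothetical.

```python
import math


def make_features(points, degree):
    """For each point x: monomials x^j and exact integrals x^(j+1)/(j+1), j = 0..degree."""
    feats = []
    for x in points:
        mono = [x ** j for j in range(degree + 1)]
        integ = [x ** (j + 1) / (j + 1) for j in range(degree + 1)]
        feats.append((mono, integ))
    return feats


def loss_and_grad(a, b, feats):
    """Loss = mean(r1^2 + r2^2) + (u(0) - 1)^2 for the hypothetical system, where
    r1 = u(x) - int_0^x v(t) dt - 1 and r2 = u(x) - v(x)."""
    n = len(feats)
    loss = 0.0
    ga = [0.0] * len(a)
    gb = [0.0] * len(b)
    for mono, integ in feats:
        u = sum(aj * m for aj, m in zip(a, mono))
        v = sum(bj * m for bj, m in zip(b, mono))
        iv = sum(bj * s for bj, s in zip(b, integ))
        r1 = u - iv - 1.0          # residual of the integral equation
        r2 = u - v                 # residual of the algebraic equation
        loss += (r1 * r1 + r2 * r2) / n
        for j in range(len(a)):
            ga[j] += 2.0 * (r1 + r2) * mono[j] / n
            gb[j] += 2.0 * (-r1 * integ[j] - r2 * mono[j]) / n
    rb = a[0] - 1.0                # boundary residual u(0) - 1
    loss += rb * rb
    ga[0] += 2.0 * rb
    return loss, ga, gb


def train_adam(degree=4, n_points=32, steps=2000, lr=0.01,
               beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize the collocation loss with the Adam update rule."""
    points = [i / (n_points - 1) for i in range(n_points)]
    feats = make_features(points, degree)
    params = [0.0] * (2 * (degree + 1))   # a_0..a_d followed by b_0..b_d
    m = [0.0] * len(params)               # first-moment estimates
    s = [0.0] * len(params)               # second-moment estimates
    history = []
    for t in range(1, steps + 1):
        a, b = params[:degree + 1], params[degree + 1:]
        loss, ga, gb = loss_and_grad(a, b, feats)
        history.append(loss)
        for i, g in enumerate(ga + gb):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            s[i] = beta2 * s[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)     # bias-corrected moments
            s_hat = s[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(s_hat) + eps)
    return params, history


params, history = train_adam()
```

Starting from zero coefficients, the loss equals 2 (unit residuals in the integral equation and in the boundary condition) and decreases as Adam updates the coefficients. Replacing the polynomials by a network changes only how u, v, the integral, and the gradients are computed, not the structure of the loss.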

4. Examples

In this section, we present four examples to demonstrate the universality and effectiveness of the discussed methods. The examples differ in the number and type of equations. They also include typical difficulties that may arise when solving IAE problems, together with a discussion of how to address them.

4.1. Example 1

Consider a system of integro-algebraic equations consisting of one integral equation and one algebraic equation:

where and , are the unknown functions.

4.1.1. DTM Approach

Assume that and are the transforms of the functions and . To simplify the form of the following formulas, let us assume in system (17) (a similar result would be obtained by taking ). From the first equation of this system, we obtain , and from the second one (also using the calculated value of ), we obtain . This means that and . Now, for , system (17), based on properties (3)–(8), (10) and (15), transforms into the following:

By limiting ourselves to nine terms (), we obtain approximate solutions:

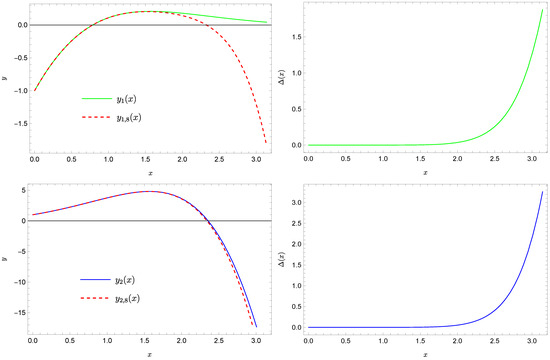

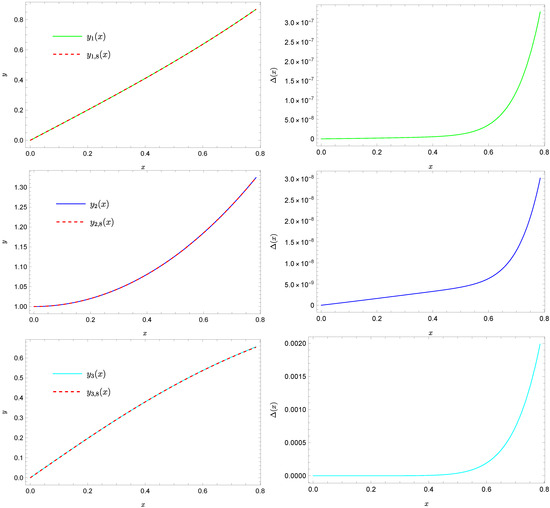

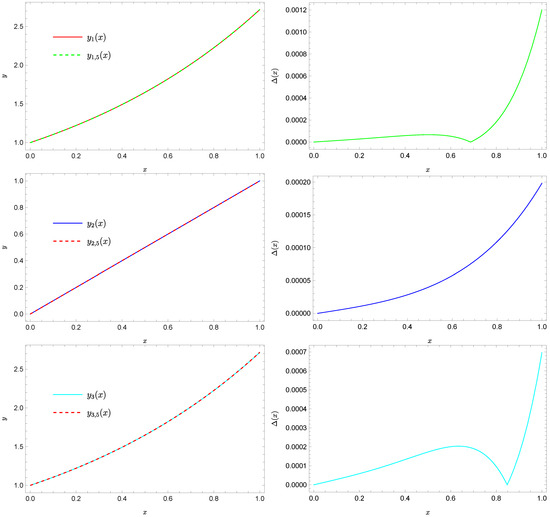

Figure 2 presents a comparison of this solution with the exact solutions, while Figure 3 shows a comparison of the approximate solutions , with the exact solutions, which are functions:

The error is calculated as follows:

where k is the number of unknowns in the system (1).

Figure 2.

The exact solution (solid green line), the approximate solution (dashed red line), and the absolute errors of this approximation, as well as the exact solution (solid blue line), the approximate solution (dashed red line), and the absolute errors of this approximation.

Figure 3.

The exact solution (solid green line), the approximate solution (dashed red line), and the absolute errors of this approximation, as well as the exact solution (solid blue line), the approximate solution (dashed red line), and the absolute errors of this approximation.

Expanding the functions and into their Taylor series shows that the obtained solutions correspond exactly to the successive terms of these series.
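System (17) itself is not reproduced in this text, so the sketch below applies the same DTM mechanism to a hypothetical system with one Volterra integral equation and one algebraic equation, u(x) − ∫_0^x v(t) dt = 1 and u(x) − v(x) = 0, whose exact solution is u(x) = v(x) = e^x. Using linearity, the transform δ(k) of the constant 1, and the integral rule (transform equal to 0 at k = 0 and to V(k − 1)/k for k ≥ 1), the system becomes U(0) = 1, V(0) = U(0) and, for k ≥ 1, U(k) = V(k − 1)/k, V(k) = U(k). The function name is hypothetical. Exact rational arithmetic shows that, mirroring the observation above for Example 1, the recursion reproduces the Taylor coefficients 1/k! exactly.

```python
from fractions import Fraction
from math import factorial


def dtm_volterra_algebraic(n_terms):
    """DTM recursion for the hypothetical system
         u(x) - int_0^x v(t) dt = 1,   u(x) - v(x) = 0."""
    U = [Fraction(1)]   # k = 0: U(0) - 0 - 1 = 0
    V = [U[0]]          # k = 0: U(0) - V(0) = 0
    for k in range(1, n_terms):
        U.append(V[k - 1] / k)   # k >= 1: U(k) - V(k - 1)/k = 0
        V.append(U[k])           # k >= 1: U(k) - V(k) = 0
    return U, V


U, V = dtm_volterra_algebraic(9)   # nine terms, as in Example 1

if __name__ == "__main__":
    # Compare with the Maclaurin coefficients of e^x.
    print(all(U[k] == Fraction(1, factorial(k)) for k in range(len(U))))  # True
```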

4.1.2. PINN Approach

The system of Equations (17) was also solved using neural networks (PINNs). In the considered example, the following architecture and parameters are set:

- The network consists of three hidden layers, each containing 20 neurons;

- The network has one input and two outputs (there are two unknown functions in the system);

- A fully connected neural network (FNN) is defined, which is used to model the solution;

- The activation function in each layer is the hyperbolic tangent, which helps in nonlinear modeling of relationships;

- The weights are initialized using the “Glorot uniform” method, a common technique that ensures proper scaling of the weights to improve training stability;

- 32 training points are considered.
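A minimal pure-Python sketch of the network described in the list above follows: one input, three hidden layers of 20 neurons with the hyperbolic tangent, two outputs, and weights drawn with Glorot uniform initialization, i.e., from U(−L, L) with L = sqrt(6/(fan_in + fan_out)). Two details are assumptions not stated in the text: biases start at zero and the output layer is linear, a common choice for regression outputs. In TensorFlow/Keras, a comparable hidden layer would be `tf.keras.layers.Dense(20, activation="tanh", kernel_initializer="glorot_uniform")`; the helper names below are hypothetical.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Weight matrix (fan_in x fan_out) drawn from U(-L, L), L = sqrt(6/(fan_in+fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]


def build_fnn(layer_sizes, seed=0):
    """List of (weights, biases) per layer; biases start at zero (assumed)."""
    rng = random.Random(seed)
    return [(glorot_uniform(n_in, n_out, rng), [0.0] * n_out)
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]


def forward(net, x):
    """tanh after every hidden layer; the output layer is left linear (assumed)."""
    h = list(x)
    last = len(net) - 1
    for idx, (W, b) in enumerate(net):
        z = [sum(h[i] * W[i][j] for i in range(len(h))) + b[j]
             for j in range(len(b))]
        h = z if idx == last else [math.tanh(v) for v in z]
    return h


def count_parameters(net):
    return sum(len(W) * len(W[0]) + len(b) for W, b in net)


# One input (x), three hidden layers of 20 neurons, two outputs (the two unknown functions).
net = build_fnn([1, 20, 20, 20, 2])

if __name__ == "__main__":
    print(count_parameters(net))  # 922
    print(forward(net, [0.5]))
```

The network has 922 trainable parameters: (1·20 + 20) + 2·(20·20 + 20) + (20·2 + 2).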

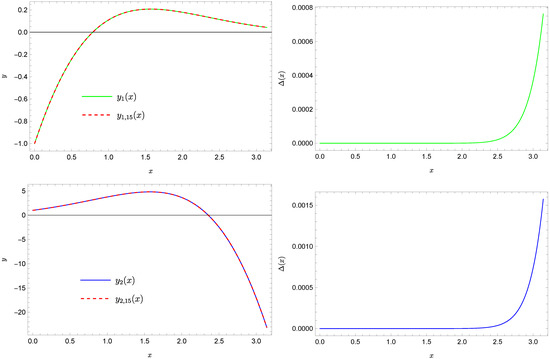

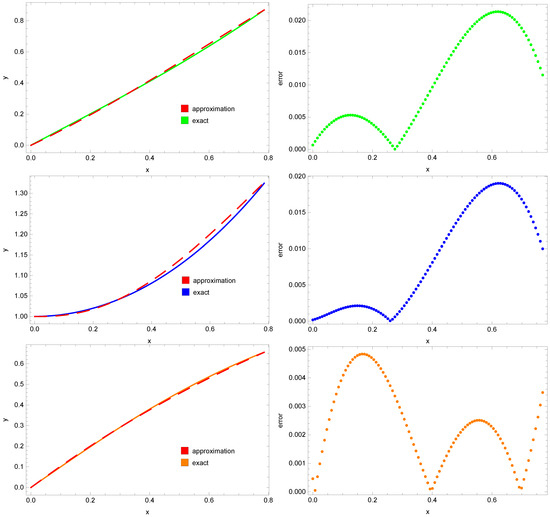

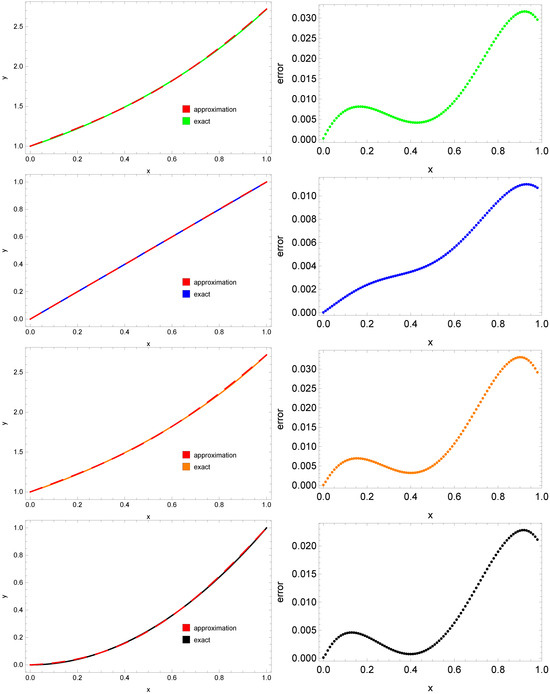

In Figure 4, the obtained results, along with the errors, are presented. On the left side, the plots of the approximate solution (red dashed line) and the exact solution (solid lines) are shown. As can be observed, the approximate solutions for the functions and almost overlap with the exact solutions. The corresponding errors are presented on the right side. Errors for the function (maximum error ) are significantly smaller than those for the function (maximum error ). For the function , the largest errors are observed at the right boundary of the domain.

Figure 4.

The exact solution (solid green line), the approximation (dashed red line), the absolute errors of approximation of (green dotted line—right side), the exact solution (solid blue line), the approximation (dashed red line), and the absolute errors of approximation of (blue dotted line—right side).

4.2. Example 2

We consider a system of integro-algebraic equations, which consists of two integral equations and one algebraic equation:

where and , , are the unknown functions.

4.2.1. DTM Approach

Let , , and be the transforms of the functions , , and . If we substitute (or equivalently ) into system (18), we obtain the following system of equations:

which is equivalent to the system

It turns out that the solution , , leads to three groups of approximate solutions, each of which yields large errors . We therefore assume , , (in this case, the errors are significantly smaller). Applying the DTM transform to system (18), for , we obtain the following:

We obtained these formulas by using, among others, the properties (3)–(8), (10), (12) and (13) and by transforming the second equation of the system (18):

All the coefficients and for depend on an unknown parameter (the k-th coefficient is a polynomial of degree k), which from the first equation of system (18) takes the following form:

Taking nine consecutive coefficients determined in this way (), we obtain the approximate solutions , , and , which still depend on the parameter . We determine this parameter by solving the equation:

The equation has two solutions: and (so we obtain two groups of solutions, similar to the three groups generated earlier in this example). The tests mentioned at the beginning of this example show that the second value, , yields satisfactory results. For this value of the parameter , the approximate solutions take the form:

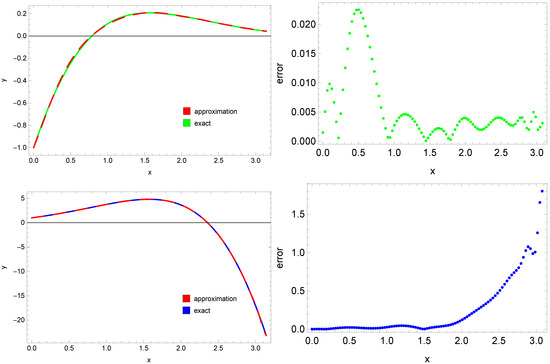

Figure 5 compares the approximate solutions , with the exact solutions (where we know that ), which are the functions:

Figure 5.

Exact solution (solid green line), approximate solution (dashed red line), exact solution (solid blue line), approximate solution (dashed red line), exact solution (solid cyan line), approximate solution (dashed red line), and absolute errors of these approximations.

This time, we cannot find the exact values of the terms in the expansion of the Taylor series, but we can (by applying the appropriate methods) find a good numerical approximation of these solutions.
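One common way in which DTM coefficients come to depend on an unknown parameter, later fixed by an additional equation, can be illustrated on a hypothetical Fredholm-type equation (system (18) itself is not reproduced in this text): u(x) = e^x − x + xβ with β = ∫_0^1 t u(t) dt, whose exact solution is u(x) = e^x, so β = 1. Transforming term by term gives U(0) = 1, U(1) = β, and U(k) = 1/k! for k ≥ 2; substituting the truncated series into the definition of β gives β = Σ_k U(k)/(k + 2). Here this equation is linear in β and has a single root, unlike the two-root equation of Example 2. Each coefficient is stored as a pair (p, q) representing p + qβ; the function name is hypothetical.

```python
from fractions import Fraction
from math import factorial


def dtm_fredholm(n_terms):
    """Hypothetical Fredholm example:
        u(x) = e^x - x + x * beta,   beta = int_0^1 t u(t) dt,
    with exact solution u(x) = e^x (beta = 1).
    Each coefficient U(k) is kept as (p, q), meaning p + q * beta."""
    coeffs = []
    for k in range(n_terms):
        p = Fraction(1, factorial(k))   # transform of e^x
        q = Fraction(0)
        if k == 1:
            p -= 1                      # transform of -x
            q = Fraction(1)             # transform of x * beta
        coeffs.append((p, q))
    # beta = int_0^1 t * sum_k U(k) t^k dt = sum_k U(k) / (k + 2), linear in beta
    lhs = 1 - sum(q / (k + 2) for k, (p, q) in enumerate(coeffs))
    rhs = sum(p / (k + 2) for k, (p, q) in enumerate(coeffs))
    beta = rhs / lhs
    U = [p + q * beta for p, q in coeffs]
    return beta, U


if __name__ == "__main__":
    beta, U = dtm_fredholm(9)           # nine terms
    print(float(beta))
```

With nine terms, β differs from its exact value 1 by less than 10^-6. When the parameter enters nonlinearly, several roots may appear and, as in Example 2, each candidate has to be checked.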

4.2.2. PINN Approach

To solve system (18), a fully connected neural network was used with the following settings: three hidden layers, each containing 20 neurons; the hyperbolic tangent as the activation function; “Glorot uniform” weight initialization; and 32 training points used to train the model.

The obtained results, in the form of solution plots and error plots, are presented in Figure 6. As can be seen, all three approximate solutions agree relatively well with the exact solutions. The smallest errors were obtained for the function (these errors do not exceed ). For the functions and , the errors of the approximate solutions are larger (with a maximum error at the level of ). Comparing the error plots obtained by PINNs and DTM, one can observe differences in both their nature and magnitude. In the case of DTM, the largest errors occur at the end of the interval. Only for the approximate solution of the function is the order of magnitude of the errors comparable for both methods. For the remaining functions, the errors obtained using PINNs are significantly larger than those from DTM.

Figure 6.

Exact solution , (green, blue, and orange solid lines—left side), approximation , (red dashed line—left side), and absolute errors of these approximations (green, blue, and orange dotted lines—right side) for example 2.

4.3. Example 3

We focus on the following system of integral–algebraic equations:

where and , i are the unknown functions.

4.3.1. DTM Approach

If we assume that , , and are the transforms of the functions , , and , then by taking (or equivalently ) in the system (19), we obtain , , and , which means that , , and .

Applying properties such as (3), (5)–(10) and (12)–(14) to the equations of system (19), for , we obtain the following:

where

By determining the successive coefficients from this system, we obtain the following:

By calculating 13 terms in this way, i.e., taking , we obtain approximate solutions , for , which depend on the parameter . The value of this parameter is determined by solving Equation (20). This yields two almost identical solutions: and . The first solution turned out to be better. This is not a coincidence: from the exact solutions, which are the functions:

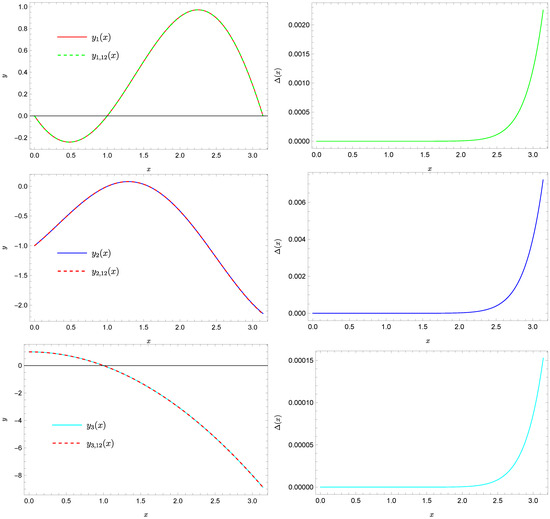

it follows that . For this value of , we obtain the approximate solutions that are compared with the exact ones in Figure 7.

Figure 7.

Exact solution (solid green line), approximate solution (dashed red line), exact solution (solid blue line), approximate solution (dashed red line), exact solution (solid cyan line), approximate solution (dashed red line), and absolute errors of these approximations.

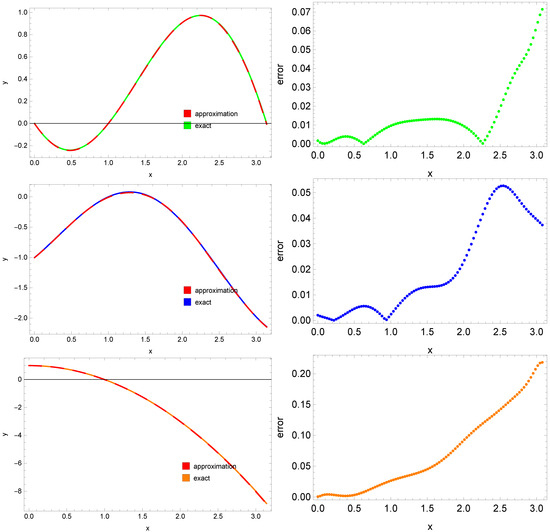

4.3.2. PINN Approach

As in the previous examples, the results obtained from both methods were compared. In this example, we used the same neural network architecture and parameters as in the two previous examples (see Section 4.1.2 and Section 4.2.2). The solutions obtained using PINNs are close to the exact solutions. However, in this case as well, the approximation errors are larger than those of DTM. The largest errors were obtained for the function (maximum error ), while for the functions and , the errors were approximately 0.07 and , respectively (see the right column of Figure 8).

Figure 8.

Exact solution , (green, blue, and orange solid lines—left side), approximation , (red dashed line—left side), and absolute errors of these approximations (green, blue, and orange dotted lines—right side) for example 3.

4.4. Example 4

Consider the following system of equations:

where , the exact solution of which is the following:

4.4.1. DTM Approach

Substituting into the first two equations of the system (21), we obtain and . The remaining two values, for , can be obtained from the other two equations of the system (21), from which it follows that

Solving the above system of equations, we obtain the following:

so consequently, we have the following:

Applying, among others, properties (3), (4), (7)–(10), and (13)–(15) to the equations of system (21), for , we obtain the following:

where

By determining the values of for from Equation (23), we obtain approximate solutions

dependent on the parameters and , which can be found by solving the system (23), where the functions and are replaced by the functions and . The solution to this system is a pair of numbers:

Since the functions , , and are exact solutions, the parameters and take the exact values and .

By determining five terms, we obtain and , along with the corresponding approximate solutions for :

Figure 9 presents a comparison of the exact solutions with the corresponding approximate solutions obtained for the five determined terms.

Figure 9.

Exact solution (solid green line), approximate solution (dashed red line), exact solution (solid blue line), approximate solution (dashed red line), exact solution (solid cyan line), approximate solution (dashed red line), exact solution (solid purple line), approximate solution (dashed red line), and absolute errors of these approximations.

4.4.2. PINN Approach

We solve system (21) using a neural network. The parameters and network architecture were the same as in the previous examples, the only change being an increase in the number of training points to 64. In this example as well, DTM provided more accurate solutions. Figure 10 shows the approximate solutions (left column) and their errors (right column) obtained using PINNs.

Figure 10.

Exact solution , (green, blue, orange, and black solid lines—left side), approximation , (red dashed line—left side), and absolute errors of these approximations (green, blue, orange, and black dotted lines—right side).

5. Conclusions

DTM is a universal technique that allows for solving various types of equations and their systems. This article presents the application of this method to systems of integral–algebraic equations. Such systems are complex enough that classical methods struggle to handle them. This work demonstrates that, despite the complexity of these systems, DTM successfully addresses these tasks, providing very good approximate solutions (and sometimes, as in the first example, an exact solution). The presented examples varied in complexity, the number of equations, and the number and type of integrals in these equations, which further supports the usefulness of the discussed method.

In this article, DTM was compared with Physics-Informed Neural Networks (PINNs) for solving systems of integral–algebraic equations. PINNs also proved to be an effective tool for such problems: in each of the four examples presented in the paper, the neural networks successfully dealt with the considered system of equations. However, in each case, the error of the approximate solution obtained with PINNs was greater than that of DTM. The advantage of PINNs lies in their relatively simple implementation and versatility. In Section 4, four numerical examples with varying degrees of complexity were presented. As can be observed in the figures provided (Figures 2–10), both methods yielded fairly accurate results with small errors, with DTM consistently exhibiting smaller errors than PINNs.

Of course, in the case of more complex problems, the application of the DTM cannot proceed without the assistance of a computational platform. It is often desirable for such a platform to also be capable of performing symbolic computations. In this article, the authors utilized Mathematica (version 13.3) [35,36]. For the implementation of PINNs, the authors employed Python and the TensorFlow library.

Author Contributions

Conceptualization, M.P. and R.B.; methodology, M.P.; software, M.P. and R.B.; validation, M.P. and R.B.; formal analysis, R.B.; writing—original draft preparation, M.P. and R.B.; writing—review and editing, M.P. and R.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ghoreishi, F.; Hadizadeh, M.; Pishbin, S. On the convergence analysis of the spline collocation method for system of integral algebraic equations of index-2. Int. J. Comput. Methods 2012, 9, 1250048.

- Hadizadeh, M.; Ghoreishi, F.; Pishbin, S. Jacobi spectral solution for integral algebraic equations of index-2. Appl. Numer. Math. 2011, 61, 131–148.

- Kopeikin, I.D.; Shishkin, V.P. Integral form of the general solution of equations of steady-state thermoelasticity. J. Appl. Math. Mech. 1984, 48, 117–119.

- Kot, M. Elements of Mathematical Ecology; Cambridge University Press: Cambridge, UK, 2001.

- Pishbin, S.; Ghoreishi, F.; Hadizadeh, M. A posteriori error estimation for the Legendre collocation method applied to integral-algebraic Volterra equations. Electron. Trans. Numer. Anal. 2011, 38, 327–346.

- Pougaza, D.B. The Lotka Integral Equation as a Stable Population Model; African Institute for Mathematical Sciences (AIMS): Cape Town, South Africa, 2007; pp. 1–30.

- Balakumar, V.; Murugesan, K. Numerical solution of Volterra integral-algebraic equations using block pulse functions. Appl. Math. Comput. 2015, 2639, 165–170.

- Brunner, H. Collocation Methods for Volterra Integral and Related Functional Differential Equations; Cambridge University Press: Cambridge, UK, 2004.

- Budnikova, O.S.; Bulatov, M.V. Numerical solution of integral-algebraic equations for multistep methods. Comput. Math. Math. Phys. 2012, 52, 691–701.

- Farahani, M.S.; Hadizadeh, M. Direct regularization for system of integral-algebraic equations of index-1. Inverse Probl. Sci. Eng. 2018, 26, 728–743.

- Liang, H.; Brunner, H. Collocation methods for integro-differential algebraic equations with index 1. IMA J. Numer. Anal. 2020, 40, 850–885.

- Pishbin, S. Operational Tau method for singular system of Volterra integro-differential equations. J. Comput. Appl. Math. 2017, 311, 205–214.

- Pishbin, S. Solving integral-algebraic equations with non-vanishing delays by Legendre polynomials. Appl. Numer. Math. 2022, 179, 221–237.

- Shiri, B.; Shahmorad, S.; Hojjati, G. Convergence analysis of piecewise continuous collocation methods for higher index integral algebraic equations of the Hessenberg type. Int. J. Appl. Math. Comput. Sci. 2013, 23, 341–355.

- Grzymkowski, R.; Pleszczyński, M. Application of the Taylor transformation to the systems of ordinary differential equations. In Proceedings of the Information and Software Technologies, ICIST 2018, Communications in Computer and Information Science, Vilnius, Lithuania, 4–6 October 2018; Damasevicius, R., Vasiljeviene, G., Eds.; Springer: Cham, Switzerland, 2018.

- Hetmaniok, E.; Pleszczyński, M. Comparison of the Selected Methods Used for Solving the Ordinary Differential Equations and Their Systems. Mathematics 2022, 10, 306.

- Hetmaniok, E.; Pleszczyński, M.; Khan, Y. Solving the Integral Differential Equations with Delayed Argument by Using the DTM Method. Sensors 2022, 22, 4124.

- Arikoglu, A.; Ozkol, I. Solution of differential-difference equations by using differential transform method. Appl. Math. Comput. 2006, 174, 153–162.

- Biazar, J.; Eslami, M.; Islam, M.R. Differential transform method for special systems of integral equations. J. King Saud Univ.-Sci. 2012, 24, 211–214.

- Odibat, Z.; Momani, S. A generalized differential transform method for linear partial differential equations of fractional order. Appl. Math. Lett. 2008, 21, 194–199.

- Ravi Kanth, A.S.V.; Aruna, K.; Chaurasial, R.K. Reduced differential transform method to solve two and three dimensional second order hyperbolic telegraph equations. J. King Saud Univ.-Eng. Sci. 2017, 29, 166–171.

- Tari, A.; Shahmorad, S. Differential transform method for the system of two-dimensional nonlinear Volterra integro-differential equations. Comput. Math. Appl. 2011, 61, 2621–2629.

- Penwarden, M.; Zhe, S.; Narayan, A.; Kirby, R.M. A metalearning approach for Physics-Informed Neural Networks (PINNs): Application to parameterized PDEs. J. Comput. Phys. 2023, 477, 111912.

- Rasht-Behesht, M.; Huber, C.; Shukla, K.; Karniadakis, G.E. Physics-Informed Neural Networks (PINNs) for Wave Propagation and Full Waveform Inversions. J. Geophys. Res. Solid Earth 2022, 127, e2021JB023120.

- Brociek, R.; Pleszczyński, M. Differential Transform Method and Neural Network for Solving Variational Calculus Problems. Mathematics 2024, 12, 2182.

- Yuan, L.; Ni, Y.-Q.; Deng, X.-Y.; Hao, S. A-PINN: Auxiliary physics informed neural networks for forward and inverse problems of nonlinear integro-differential equations. J. Comput. Phys. 2022, 462, 111260.

- Sabir, Z.; Umar, M. Levenberg-Marquardt backpropagation neural network procedures for the consumption of hard water-based kidney function. Int. J. Math. Comput. Eng. 2023, 1, 127–138.

- Zhou, J.K. Differential Transformation and Its Applications for Electrical Circuits; Huazhong University Press: Wuhan, China, 1986.

- Grzymkowski, R.; Pleszczyński, M. Taylor Transformation and Its Implementation in Mathematica; Wydawnictwo Politechniki Śląskiej: Gliwice, Poland, 2021. (In Polish)

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Karniadakis, G.E.; Kevrekidis, I.G.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-informed machine learning. Nat. Rev. Phys. 2021, 3, 422–440.

- Eivazi, H.; Wang, Y.; Vinuesa, R. Physics-informed deep-learning applications to experimental fluid mechanics. Meas. Sci. Technol. 2024, 35, 075303.

- Cuomo, S.; Schiano Di Cola, V.; Giampaolo, F.; Rozza, G.; Raissi, M.; Piccialli, F. Scientific Machine Learning Through Physics–Informed Neural Networks: Where we are and What’s Next. J. Sci. Comput. 2022, 92, 88.

- Ahmadi Daryakenari, N.; De Florio, M.; Shukla, K.; Karniadakis, G.E. AI-Aristotle: A physics-informed framework for systems biology gray-box identification. PLoS Comput. Biol. 2024, 20, e1011916.

- Hastings, C.; Mischo, K.; Morrison, M. Hands-On Start to Wolfram Mathematica and Programming with the Wolfram Language, 3rd ed.; Wolfram Media, Inc.: Champaign, IL, USA, 2020.

- Wolfram, S. The Mathematica Book, 5th ed.; Wolfram Media, Inc.: Champaign, IL, USA, 2003.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).