Abstract

Inferences on the location parameter in location-scale families can be carried out using Studentized statistics, i.e., by combining an estimator of the location parameter and an estimator of the nuisance scale parameter in a statistic T whose sampling distribution does not depend on the nuisance scale parameter. If both estimators are independent, then T is an externally Studentized statistic; otherwise, it is an internally Studentized statistic. For the Gaussian and for the exponential location-scale families, there are externally Studentized statistics with sampling distributions that are easy to obtain: in the Gaussian case, Student’s classic t statistic, since the sample mean and the sample standard deviation are independent; in the exponential case, a statistic built from the sample minimum and the sample range, the latter being a dispersion estimator, which are independent due to the independence of spacings. Obtaining the exact distribution of Student’s statistic in non-Gaussian populations is hard; however, the approximations that follow from assuming symmetry of the parent distribution allow us to determine whether Student’s statistic is conservative or liberal. Moreover, examples of external and internal Studentizations in the asymmetric exponential population are given, and an ANalysis Of Spacings (ANOSp), similar to an ANOVA in Gaussian populations, is also presented.

1. Introduction

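Let $(X_1,\ldots,X_n)$ be a random sample from a population $X\sim N(\mu,\sigma)$. If the location parameter $\mu$ and the scale parameter $\sigma$ are unknown, the unbiased estimators $\overline{X}=\frac{1}{n}\sum_{k=1}^{n}X_k$ and $S^2=\frac{1}{n-1}\sum_{k=1}^{n}(X_k-\overline{X})^2$, with $n\geq 2$, can be used to estimate these parameters.

The distribution of the estimator $\overline{X}$ depends on the nuisance parameter $\sigma$, which is a problem for making inferences on the location parameter $\mu$. Student’s [1] pathbreaking paper has shown that the statistic

$$T=\frac{\overline{X}-\mu}{S/\sqrt{n}} \qquad (1)$$

has a distribution that does not depend on the nuisance scale parameter, since its probability density function is

$$f_T(t)=\frac{1}{\sqrt{n-1}\,B\!\left(\frac{n-1}{2},\frac{1}{2}\right)}\left(1+\frac{t^2}{n-1}\right)^{-n/2},\quad t\in\mathbb{R}, \qquad (2)$$

where $B(p,q)=\int_0^1 x^{p-1}(1-x)^{q-1}\,\mathrm{d}x$, $p,q>0$, is Euler’s Beta function. The probability density function defined in (2) is for a random variable with Student’s distribution with $n-1$ degrees of freedom. Basically, the “Studentization” defined in (1), as opposed to the common standardization $\frac{\overline{X}-\mu}{\sigma/\sqrt{n}}$, uses the estimators of the parameter of interest (location) and of the nuisance parameter, so that the sampling distribution of $T$ does not depend on the location parameter $\mu$ or on the nuisance parameter $\sigma$, thus being a pivot statistic that can be used for inferences on $\mu$.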
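As a numerical companion to the density of Student's distribution written with Euler's Beta function, the following minimal sketch evaluates that density and checks two facts: with one degree of freedom it is the standard Cauchy density, and it integrates to one. The function names `student_t_pdf` and `integrate` are illustrative and not part of the original text.

```python
import math

def student_t_pdf(t, df):
    """Density of Student's t with `df` degrees of freedom, via Euler's Beta function."""
    # B(df/2, 1/2) expressed through Gamma functions.
    beta = math.gamma(df / 2) * math.gamma(0.5) / math.gamma((df + 1) / 2)
    return (1 + t * t / df) ** (-(df + 1) / 2) / (math.sqrt(df) * beta)

def integrate(f, a, b, steps=100_000):
    """Composite midpoint rule on [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

# One degree of freedom is the standard Cauchy law, whose density at 0 is 1/pi.
cauchy_at_zero = student_t_pdf(0.0, 1)

# With 4 degrees of freedom nearly all of the probability mass lies in [-100, 100].
mass_df4 = integrate(lambda t: student_t_pdf(t, 4), -100.0, 100.0)
```

A sample of size n corresponds to `df = n - 1` in this sketch.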

However, Student’s exceptional result depends heavily on the fact that in the location-scale Gaussian family, the sample mean $\overline{X}$ and the sample standard deviation $S$ are independent random variables, which is an exclusive property of the Gaussian family. This characterization of the Gaussian distribution appeared in Geary’s work [2], and has been proved, independently, by Darmois [3] and by Skitovich [4]. Additionally, in the context of the Koopman–Darmois–Pitman k-parameter exponential family [5,6,7], the Gaussian family is the only one with support $\mathbb{R}$ and with a pair of sufficient statistics for $(\mu,\sigma)$, namely $\left(\sum_{k=1}^{n}X_k,\sum_{k=1}^{n}X_k^2\right)$, which captures all available information in the sample to estimate these two parameters.
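The dependence between the sample mean and the sample variance outside the Gaussian family can be illustrated by simulation. The sketch below is only an illustration under stated assumptions: it estimates the correlation between the two statistics for samples of size 5 from a standard Gaussian parent and from a standard exponential parent. The function names, sample size, and seed are arbitrary choices. A correlation near zero is a symptom of independence, not a proof of it, whereas a clearly non-zero correlation does rule independence out.

```python
import math
import random

def pearson(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def mean_variance_correlation(draw, n=5, replications=20_000, seed=12345):
    """Monte Carlo correlation between the sample mean and the sample variance."""
    rng = random.Random(seed)
    means, variances = [], []
    for _ in range(replications):
        sample = [draw(rng) for _ in range(n)]
        mean = sum(sample) / n
        means.append(mean)
        variances.append(sum((x - mean) ** 2 for x in sample) / (n - 1))
    return pearson(means, variances)

corr_gaussian = mean_variance_correlation(lambda rng: rng.gauss(0.0, 1.0))
corr_exponential = mean_variance_correlation(lambda rng: rng.expovariate(1.0))
```

For the exponential parent the estimated correlation is clearly positive, as expected for a right-skewed distribution, while for the Gaussian parent it stays close to zero.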

When working with samples from non-Gaussian location-scale families, the dependence structure between the sample mean and the sum of squares is hard to discern, except for the special case (note that an index n is now being used to emphasize the size of the random sample). For , the computation of the probability density function of Student’s statistic

is a more difficult problem to handle. In Section 2, the joint probability density function of is investigated in a general context, i.e., not restricted to the Gaussian family. Its explicit expression for is given in Section 2.1, and examples for the probability density function of , i.e., for in (3), for several symmetric parent distributions are given in Section 2.2. For , a recurrence formula for the joint probability density function of is obtained in Section 2.3, and a detailed study of the recurrence formula for the Gaussian case is seen in Section 2.4.

An interesting alternative for Studentization was proposed in Logan et al.’s work [8], where a self-normalized statistic was considered, especially when X has heavier tails than the Gaussian law, and it is in the domain of attraction of a stable law for sums with index . Peña et al.’s [9] monograph on self-normalized processes is a thorough overview, with Chapter 15 dealing with the classical t-statistic and Studentized statistics.

Another interesting fact is that Efron [10] showed that when a rotational symmetry of the unit vector over the surface of the unit sphere in the Euclidean n-space is assumed. However, Efron [10] pointed out the following:

Unfortunately the usual sampling procedures almost never yield rotational symmetry for the normalized vector except in the case .

Efron also stated that “A very special “lucky” case is given in Section 9, namely Inverted normal error for . It is possible to construct examples where is t-distributed with n degrees of freedom, without having rotational symmetry”. Efron’s [10] pioneering work also investigated the consequences, in what regards Student’s statistic, by assuming weaker orthant symmetry, i.e., when the random vector has the same distribution as for every choice of , .

This led us to observe, in Section 2.4, that the joint probability density function of , in the exceptional rotational symmetry of the Gaussian case, is proportional to the product of n Gaussian probability density functions, computed at some special points that form a symmetric arithmetic progression with a null sum and a sum of squares equal to one.

In Section 3, we shall investigate the simplifications that result from an additional symmetry assumption, namely when the joint probability density function of is proportional to the product of n probability density functions of X, as in the Gaussian case. The approximation obtained from the smoothness hypothesis 1 (Section 3.2) is further investigated in Section 3.3 for the worst case possible, i.e., uniform distribution (see Hendriks et al. [11]), by comparing the approximate expression for with the exact distribution given by Perlo [12] for a parent.

Aside from the Gaussian family, the exponential location-scale family is also a remarkable member of the Koopman–Darmois–Pitman exponential family. It is the only member of this family with support in a half-line and with a pair of sufficient statistics to estimate , namely , where , , denotes the k-th order statistic of the random sample .

Moreover, the spacings , , are independent, with the convention . This property characterizes the exponential distribution, and it is a consequence of Pexider’s [13] functional equation , which is an extension of Cauchy’s functional equation, with the solution .
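The independence of spacings can be explored numerically. The sketch below assumes the classical normalization, in which the k-th spacing of a sample of size n is multiplied by n − k + 1 and the convention for the zeroth order statistic is the location parameter; under that assumption the normalized spacings of an exponential sample are independent exponential variables with the common scale. The helper names, parameter values, and seed are illustrative only.

```python
import math
import random

def normalized_spacings(sample, location):
    """Return (n-k+1) * (X_{k:n} - X_{k-1:n}) for k = 1..n, taking X_{0:n} = location."""
    ordered = sorted(sample)
    n = len(ordered)
    previous, out = location, []
    for k, x in enumerate(ordered, start=1):
        out.append((n - k + 1) * (x - previous))
        previous = x
    return out

def pearson(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))

def simulate(n=4, location=2.0, scale=3.0, replications=20_000, seed=99):
    """Column means of the normalized spacings and correlation of first vs last."""
    rng = random.Random(seed)
    rows = [
        normalized_spacings([location + rng.expovariate(1 / scale) for _ in range(n)], location)
        for _ in range(replications)
    ]
    columns = list(zip(*rows))
    means = [sum(col) / replications for col in columns]
    return means, pearson(columns[0], columns[-1])

spacing_means, first_last_correlation = simulate()
```

Each column mean should be close to the scale parameter (3 in this run), and the correlation between the first and the last normalized spacing should be close to zero.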

Using the independence of the spacings of the exponential model, we shall also obtain in Section 4 the probability density function of some externally and internally Studentized statistics, according to David’s [14] definition, for inferences on the exponential location parameter. The independence of spacings is further used to compare the location parameters of two exponential populations in Section 4.4, and also to establish an ANalysis Of Spacings (ANOSp), similar to a one-way ANOVA in Gaussian populations, which is presented in Section 4.5. A Satterthwaite [15] approximation for the case of unequal dispersions is given in Section 4.6.

Finally, Section 5 summarizes the main findings regarding approximate solutions for non-Gaussian symmetric populations and for a very asymmetric exponential population, either for exact solutions resulting from the independence of spacings or for approximate solutions for inferences on the comparison of location parameters without assuming equal dispersions.

2. Joint Probability Density Function of and Probability Density Function of

Let be a random sample of size n from a population X. If , then from the independence of and , the joint probability density function of is

where , , is Euler’s Gamma function.

2.1. Joint Probability Density Function of

The independence of and does not hold for a non-Gaussian population X. However, for samples of size , it is easy to obtain the probability density function of Student’s statistic .

Since

it follows that if , then

with a similar result also holding true if , namely and . Therefore, if we denote the probability density function of X, as the absolute value for the Jacobian determinant of both transformations is , then the joint probability density function of is

where the support

As for the coefficients of in the arguments of the functions in (5), they satisfy the conditions

2.2. Probability Density Function of Student’s Statistic

To obtain the probability density function of Student’s statistic , let

from which the inverse transformation is and , with . Hence, the joint probability density function of is

and therefore, if X has support , the probability density function of is given by

Some simple examples for symmetric parent distributions are the following:

- If ,i.e., (note that a standard Cauchy random variable is Student t-distributed with one degree of freedom).

- If ,

- If ,

- If ,

- If ,

(Note that a random variable X has a symmetric distribution around a parameter if its cumulative distribution function satisfies , . In particular, if X is an absolutely continuous random variable, its distribution is symmetric around if , .)

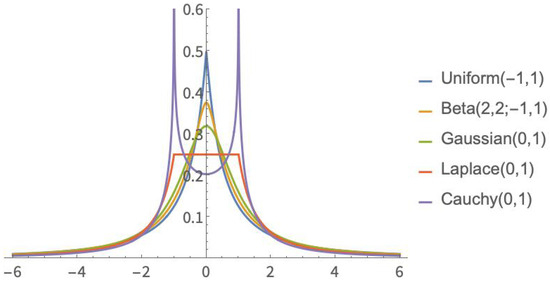

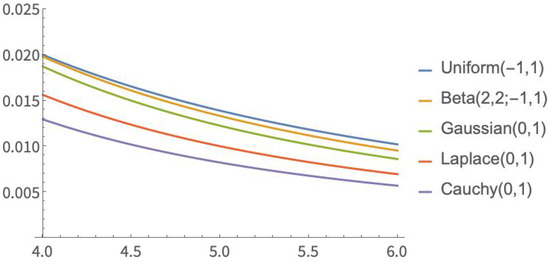

The graphics in Figure 1 show that the probability density function of with a non-Gaussian parent can be quite different from that of , i.e., with a Gaussian parent. Note that with a parent and a parent, the corresponding densities of are unimodal and have heavier tails than those of (see Figure 2). In the case of a Cauchy parent, which is known for having very heavy tails, has an antimodal probability density function. The observation that heavier tails of the underlying parent distribution result in less heavy tails for the distribution of Student’s T-statistic, and vice-versa, was proved by van Zwet [16,17]. This indicates that the tail weight of the distribution is very important to determine the conservative or liberal behavior of Student’s statistic.

Figure 1. Probability density function of Student’s statistic for samples of size two with symmetric parent distributions.

Figure 2. Right tail of the probability density function of Student’s statistic for the symmetric parent distributions in Figure 1.
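The effect of the parent's tail weight on the tails of Student's statistic can be checked with a small simulation. The sketch assumes a sample of size two with location zero, in which case Student's statistic reduces to (X1 + X2)/|X1 − X2|. The closed form for the Gaussian parent is the standard Cauchy tail; the closed form for the Uniform(−1, 1) parent comes from a short geometric computation made for this example and is not quoted from the text. All function names are illustrative.

```python
import math
import random

def t1(x1, x2):
    """Student's statistic for n = 2 and location 0: (X1 + X2) / |X1 - X2|."""
    return (x1 + x2) / abs(x1 - x2)

def tail_gaussian(t):
    """P(|T| > t), t >= 0, under a Gaussian parent (T is standard Cauchy)."""
    return 1 - 2 * math.atan(t) / math.pi

def tail_uniform(t):
    """P(|T| > t), t >= 0, under a Uniform(-1, 1) parent (derived for this sketch)."""
    return 1 / (1 + t)

def simulated_tail(draw, t, replications=100_000, seed=2024):
    """Monte Carlo estimate of P(|T| > t) for a parent sampled by `draw`."""
    rng = random.Random(seed)
    hits = sum(abs(t1(draw(rng), draw(rng))) > t for _ in range(replications))
    return hits / replications

sim_gaussian = simulated_tail(lambda rng: rng.gauss(0.0, 1.0), 3.0)
sim_uniform = simulated_tail(lambda rng: rng.uniform(-1.0, 1.0), 3.0)
```

At t = 3 the uniform parent, which has lighter tails than the Gaussian, gives the larger tail probability for the statistic, in line with the van Zwet result cited above.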

2.3. Joint Probability Density Function of

Let , , be a random sample from the parent distribution X. From and being independent, the joint probability density function of is

On the other hand, as

using the auxiliary random variable , the inverse transformation is

with . Hence, the joint probability density function of is

and therefore, the joint probability density function of is given by

We emphasize that in integral (8), it is assumed that X has support , which we shall continue to assume for what follows, but if this is not the case, then the integration limits should be defined according to its support (see (6)).

In making the replacement in (8), it follows that

Notice that the coefficients of in the arguments of the functions in the last integrand in (10) satisfy the following two conditions:

and

2.4. The Gaussian Case

In applying (10), in particular, to the case , for and ,

where is a set of equidistant points such that their sum is 0 and the sum of their squares is 1.

As for the joint probability density function of , using the recurrence Formula (9), we obtain

and in view of what was established in (11), the joint probability density function (12) can be approximated using the product of computed at the points , , i.e.,

with K as a norming constant, provided that and .

In determining the points , since the set is symmetric, for its elements to fulfill the first condition, they must satisfy and . As the points are also equidistant, i.e., , , they form an arithmetic progression with common distance r, and thus, . In order to satisfy the second condition, we must also have , and hence,

Therefore, from (13), and for and , we obtain

More generally, we have the following:

- If , thenand from , we obtain .

- If , thenand from , we obtain .

It follows that , and since , , we have

In particular, , and hence,

Therefore,

For example, for and we obtained the values

As a recurrence formula,

By expressing the multiplier as , we obtain .
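The set of equidistant points used in the Gaussian case (symmetric, with a null sum and a sum of squares equal to one) is easy to generate. The sketch below uses the fact that the squared deviations of 1, ..., n from their midpoint sum to n(n² − 1)/12, which fixes the common distance r. The function name is illustrative.

```python
import math

def equidistant_points(n):
    """n equidistant points, symmetric about 0, with sum 0 and sum of squares 1."""
    # sum_{k=1}^{n} (k - (n+1)/2)^2 = n (n^2 - 1) / 12, so r^2 is its reciprocal.
    r = math.sqrt(12 / (n * (n * n - 1)))  # common distance of the progression
    return [(k - (n + 1) / 2) * r for k in range(1, n + 1)]

points_n2 = equidistant_points(2)   # the two points -1/sqrt(2) and 1/sqrt(2)
points_n3 = equidistant_points(3)   # the three points -1/sqrt(2), 0, 1/sqrt(2)
```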

3. Symmetry and Studentization

3.1. Symmetric Random Variables

There are useful results that enable the identification or characterization of symmetric random variables, such as the following:

- (i) If X and Y are independent random variables, then has a characteristic function .

- (ii) If , then has a probability mass function , and its characteristic function is .

- (iii) If X and B (B as defined in (ii)) are independent, the characteristic function of is .

- (iv) A random variable is symmetric if and only if its characteristic function is real-valued.

From the above, a random variable X is symmetric if and only if , with X and B being independent. Therefore, if X and Y are independent random variables and X is symmetric, then is also symmetric. In particular, if X is symmetric, then is symmetric, which leads to being symmetric as well.

3.2. An Approximate Joint Probability Density Function of with a Symmetric Parent Distribution

Using the Gaussian case as a guideline, for , we assume that is a continuous and smooth probability density function of a symmetric random variable X, in the sense that there is a value such that, from the mean value theorem, the integral in (10) can be computed as

where , , and , so that and .

More generally, we investigate the consequences of a smoothness hypothesis, which is based on the Gaussian case.

Smoothness Hypothesis 1.

Let be independent replicas of a random variable X, with a smooth probability density function that allows the integral mean value theorem to be used. Therefore, for , there are values and of the form

satisfying the conditions and such that the approximation

where K is a norming constant, is valid.

Then, the integral mean value theorem also holds for , i.e., there are values and such that the approximation

is also valid, with having support defined in (6), and , , and .

If we further assume that X is a symmetric random variable, which implies that the points form an arithmetic progression with common distance and , , then and

Equation (19) is easily obtained. In fact, if , then . Moreover, the abscissas of the positive points are , and since , this implies that . For , the results are similar, with the abscissas of the positive points now being .

3.3. An Approximate Expression for the Probability Density Function of with a Smooth Symmetric Parent

Considering the transformation

from which and , with , we obtain

and therefore, for , and X with support ,

Using approximation (18), and denoting , it follows that

For example, if , then

as expected.

If , where , then

and therefore,

In particular, if ,

Computing the norming constant so that the right-hand side of (21) is transformed into a probability density function, we obtain

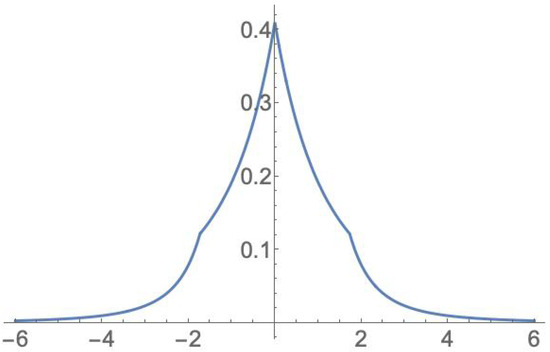

In Figure 3, the approximate probability density function of with a parent is represented.

Figure 3.

Approximate probability density function of with a parent.

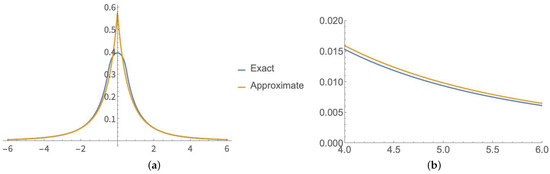

For the non-Gaussian parent case, to assess how well approximation (20) works, we shall compare the exact probability density function of with a parent, given by Perlo [12], namely

with the approximation using (20).

As we are now dealing with a parent distribution with a limited support, the integration limits for (20) must be defined according to (6). In this case, the condition

must be satisfied, or equivalently,

Hence, in denoting , it follows that

Computing the norming constant K so that the function in the right-hand side of (23) becomes a probability density function, we obtain

In Figure 4, the exact and approximate probability density functions of Student’s statistic with a uniform parent, defined in (22) and (24), respectively, are plotted together. The approximation is poor in the central region of the distribution but quite good in the tails, which is where accuracy matters, because inferences are based on tail probabilities.

Figure 4.

Probability density function of with a parent: (a) Exact and approximate densities. (b) Zoom in of right tails.

Observe that Hendriks et al. [11] analyzed why the Uniform parent is the least favorable one, as far as approximations are concerned, for the probability density function of .

4. Externally Studentized Statistics Using Spacings of an Exponential Parent

4.1. External Studentization Using the Maximum Likelihood Scale Estimator

Let be a random sample of size n from , , , i.e., with probability density function

The maximum likelihood estimators and are independent due to the independence of the spacings , , with the usual convention .

Therefore, the externally Studentized statistic

where , with being a random sample from , has probability density function

and hence, .
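The pivotal nature of this externally Studentized statistic can be checked by simulation. The sketch below assumes the statistic is the sample minimum minus the location, divided by the sample mean minus the sample minimum (the ratio of the two maximum likelihood estimators, up to centring). Under that assumption the numerator and denominator are an independent exponential and gamma pair, which gives the survival function (1 + t)^(−(n−1)). The exact scaling used in the article may differ, and all names here are illustrative.

```python
import random

def survival(t, n):
    """P(T > t) for the assumed statistic (min - location) / (mean - min)."""
    return (1 + t) ** (-(n - 1)) if t > 0 else 1.0

def quantile(p, n):
    """Value t with P(T <= t) = p, obtained by inverting `survival`."""
    return (1 - p) ** (-1 / (n - 1)) - 1

def statistic(sample, location):
    """Assumed externally Studentized statistic for an exponential sample."""
    smallest = min(sample)
    mean = sum(sample) / len(sample)
    return (smallest - location) / (mean - smallest)

def simulated_survival(t, n, location, scale, replications=50_000, seed=7):
    """Monte Carlo estimate of P(T > t) for given location and scale."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(replications):
        sample = [location + rng.expovariate(1 / scale) for _ in range(n)]
        hits += statistic(sample, location) > t
    return hits / replications

# Two very different parameter settings should give the same tail probability.
sim_a = simulated_survival(0.5, 5, location=0.0, scale=1.0)
sim_b = simulated_survival(0.5, 5, location=10.0, scale=4.0)
```

With q = `quantile(0.95, n)`, the sample minimum minus q times (sample mean − sample minimum) would serve as a 95% lower confidence bound for the location under the same assumptions.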

4.2. External Studentization Using the Sample Range as a Dispersion Estimator

Another externally Studentized statistic that can be used to make inferences on the location parameter is

In noticing that and considering that , the joint probability density function of is

Using the transformation and , with , the joint probability density function of is defined as

Therefore, for ,

By making the replacement in the integrals, we obtain

Since

where is the digamma function, it follows that

Using the recurrence formula for the digamma function,

(cf. Abramowitz and Stegun [18]), the probability density function of , given in (27), can be further simplified to

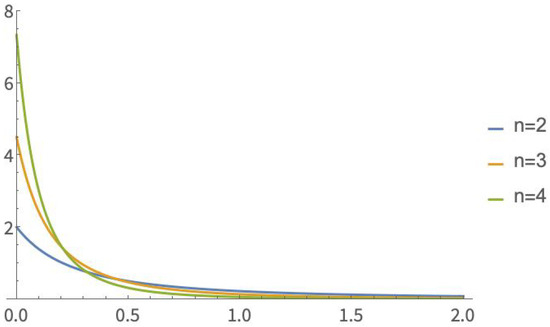

In Table A1, in Appendix A, critical values of are displayed. The critical values were obtained using Mathematica v12. In Figure 5, the probability density function of is plotted for some values of n.

Figure 5.

Probability density function of .
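Critical values of this kind can also be computed without symbolic software. The sketch below assumes the statistic is the sample minimum minus the location, divided by the sample range. Since n times the centred minimum is exponential and independent of the range, and the range is distributed as the maximum of n − 1 exponential variables, the distribution function is 1 − ∏_{j=1}^{n−1} j/(j + nt). This product form was derived for this sketch (the article works with the density instead), and the function names are illustrative.

```python
def cdf(t, n):
    """P(T <= t) for the assumed statistic (min - location) / (max - min)."""
    if t <= 0:
        return 0.0
    product = 1.0
    for j in range(1, n):
        product *= j / (j + n * t)
    return 1.0 - product

def critical_value(p, n, tol=1e-12):
    """Solve cdf(t, n) = p by bisection; the cdf is increasing in t."""
    lo, hi = 0.0, 1.0
    while cdf(hi, n) < p:          # expand the bracket until it contains the root
        hi *= 2.0
    while hi - lo > tol * max(1.0, hi):
        mid = (lo + hi) / 2.0
        if cdf(mid, n) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

value_n2_095 = critical_value(0.95, 2)
value_n3_090 = critical_value(0.90, 3)
value_n10_090 = critical_value(0.90, 10)
```

These three calls agree with the corresponding entries of Table A1 (9.5, 1 and 0.1), which supports the assumed form of the statistic.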

For large values of n, an approximation can be used to obtain critical values of the statistic . In recalling that , the limit distribution of the sequence of maxima is a standard Gumbel distribution, and therefore,

Moreover, as , in using the fact that and , it follows from Slutsky’s theorem [19] that

4.3. Internal Studentization Using Sums of Spacings

As the minimum and/or the maximum can be outliers, according to Hampel’s [20] breakdown point concept, the statistic defined in (26) can be unreliable. For an overview of robustness issues, see Ronchetti [21], and in what concerns the duality of perspectives for extreme values/outliers, see Bhattacharya et al. [22]. For these reasons, it is more conservative to use instead the internally Studentized statistic

with .

The sample mean is a sufficient and complete estimator of , and the distribution of does not depend on the nuisance scale parameter . Hence, from Basu’s [23] theorem, we conclude that and are independent.

Noticing also that , from the equality

we must have . Hence, from and , we have for ,

i.e.,

Thus, denoting the Laplace transform of function f at x, we obtain

and in denoting the inverse Laplace transform of function g at t, it follows that

For example, if , and ,

i.e.,

The most interesting scenario for (29) is when the integers n, i, and k satisfy the condition , which occurs in the following cases:

- , and ;

- , and ;

- , and .

If this happens, then

Since

from the fact that the inverse Laplace transform of a product is the convolution of the inverse Laplace transforms of the factors, we obtain, for example, for , , and ,

and for , , and ,

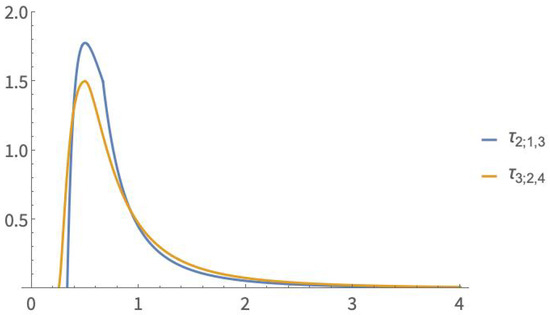

In Table A2, in Appendix A, critical values of are supplied for integers n, i, and k satisfying . The critical values of were also obtained with Mathematica v12. The probability density functions of and are shown in Figure 6.

Figure 6.

Probability density functions of and .

4.4. Comparing the Locations of Two Exponential Populations with Equal Dispersions

For two exponential populations and , it may be of interest to make inferences on . For this purpose, Theorem 1 can be helpful.

Theorem 1.

Let and be two independent random variables such that and . Then, has an asymmetric Laplace distribution with probability density function

Proof.

Let . Since and , for ,

and for ,

Hence, from , it follows that

□

If in Theorem 1 it is considered that , the symmetric Laplace distribution with location parameter , and scale parameter is obtained. For more details on asymmetric Laplace random variables, see Brilhante and Kotz [24].
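Theorem 1 can be checked numerically. The sketch below assumes that the two variables are exponential with locations λ1, λ2 and scales δ1, δ2, so that their difference has location θ = λ1 − λ2, an exponential right tail with scale δ1 and an exponential left tail with scale δ2. The parameter names and function names are illustrative and may not match the article's notation.

```python
import math
import random

def asymmetric_laplace_pdf(w, theta, scale_right, scale_left):
    """Density of X - Y for independent exponentials (assumed parametrization)."""
    total = scale_right + scale_left
    if w >= theta:
        return math.exp(-(w - theta) / scale_right) / total
    return math.exp((w - theta) / scale_left) / total

def asymmetric_laplace_cdf(w, theta, scale_right, scale_left):
    """Distribution function obtained by integrating the density above."""
    total = scale_right + scale_left
    if w < theta:
        return scale_left / total * math.exp((w - theta) / scale_left)
    return 1.0 - scale_right / total * math.exp(-(w - theta) / scale_right)

def simulated_cdf(w, loc1, scale1, loc2, scale2, replications=100_000, seed=11):
    """Monte Carlo estimate of P(X - Y <= w)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(replications):
        x = loc1 + rng.expovariate(1 / scale1)
        y = loc2 + rng.expovariate(1 / scale2)
        hits += (x - y) <= w
    return hits / replications

exact = asymmetric_laplace_cdf(-2.0, 1.0 - 4.0, 2.0, 0.5)
simulated = simulated_cdf(-2.0, loc1=1.0, scale1=2.0, loc2=4.0, scale2=0.5)
```

Setting the two scales equal in `asymmetric_laplace_pdf` gives the symmetric Laplace density mentioned above.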

When dealing with two populations with equal dispersions, inferences on the difference between the locations of the two populations can be carried out using an externally Studentized statistic as described below.

Let and be two independent random samples with parent distributions and , respectively. Considering that

from Theorem 1,

has probability density function

with .

On the other hand, as

and, similarly, , we have

The independence of spacings in exponential populations ensures that U and V are independent, and therefore, the externally Studentized statistic , i.e.,

can be used to make inferences on .

As for the probability density function of , for ,

and for ,

Therefore,

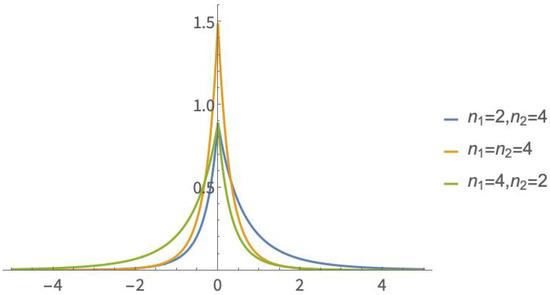

From (30), and . Notice that is the proportion of observations in the combined sample that comes from the population X.

The critical value , , of is

If a balanced design is considered instead, i.e., in (30), denoting, for simplicity, , then

The -th critical value of is , . (The distribution of is symmetric around zero.)

In Figure 7, the probability density function of is plotted for some values of and .

Figure 7.

Probability density function of .
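The two-sample statistic can be explored along the same lines. The sketch below rests on an assumption about its form: the difference of the two sample minima, centred at the difference of locations, divided by the pooled sum of deviations from each sample minimum, with no further scaling. Under that assumption, conditioning on the pooled sum gives the closed-form distribution function coded in `cdf`, whose value at zero is n/(n + m), the proportion of observations coming from the first sample. If the article scales the denominator differently, the critical values change by the same factor. All names are illustrative.

```python
import random

def cdf(t, n, m):
    """P(T <= t) for the assumed two-sample statistic with sample sizes n and m."""
    k = n + m - 2
    if t < 0:
        return n / (n + m) * (1 - m * t) ** (-k)
    return 1 - m / (n + m) * (1 + n * t) ** (-k)

def quantile(p, n, m):
    """Inverse of `cdf`, available in closed form on each side of zero."""
    k = n + m - 2
    if p < n / (n + m):
        return -((n / ((n + m) * p)) ** (1 / k) - 1) / m
    return ((m / ((n + m) * (1 - p))) ** (1 / k) - 1) / n

def statistic(xs, ys, theta):
    """Assumed statistic; theta is the difference of the two location parameters."""
    min_x, min_y = min(xs), min(ys)
    pooled = sum(x - min_x for x in xs) + sum(y - min_y for y in ys)
    return (min_x - min_y - theta) / pooled

def simulated_cdf(t, n, m, loc1, loc2, scale, replications=50_000, seed=5):
    """Monte Carlo estimate of P(T <= t) with a common scale."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(replications):
        xs = [loc1 + rng.expovariate(1 / scale) for _ in range(n)]
        ys = [loc2 + rng.expovariate(1 / scale) for _ in range(m)]
        hits += statistic(xs, ys, loc1 - loc2) <= t
    return hits / replications

sim_at_zero = simulated_cdf(0.0, 4, 6, loc1=2.5, loc2=1.0, scale=2.0)
sim_at_005 = simulated_cdf(0.05, 4, 6, loc1=2.5, loc2=1.0, scale=2.0)
```

In the balanced case n = m the distribution function satisfies `cdf(-t, n, n) == 1 - cdf(t, n, n)`, which matches the symmetry around zero noted above.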

Observe that if the two populations have unequal dispersions, i.e., , the distribution of depends on the nuisance scale parameters and , and so, it cannot be used to make inferences on , unless a Satterthwaite [15] approximation is considered (for more details, see Section 4.6).

4.5. Analysis of Spacings (ANOSp) for Testing Homogeneity of Locations of Exponential Populations with Equal Dispersions

Let be independent random samples, with , , . For what follows, we shall use the notations for the sample mean and for the j-th ascending order statistic, , of the i-th random sample, . We shall also use the notations and , with denoting the size of the combined samples.

The maximum likelihood estimators of the location and scale parameters of the individual populations are

. Our interest lies in testing the homogeneity of locations of the populations, i.e., testing

For the time being, we shall assume that the populations have equal dispersions, i.e., .

In this setting, a parallelism with a one-way ANOVA scheme can be made, since the Total Sum of Spacings (TSSp) can be split into a Between Sum of Spacings (BSSp) and a Within Sum of Spacings (WSSp) as follows:

In noticing that

the statistic is an unbiased estimator of , regardless of the validity of .

On the other hand, under , the random variables , , are independent. Thus, from

and , with , it follows that

Thus, under , the statistic is an unbiased estimator of .

From the above, and under , the F-statistic

can detect gross departures from the null hypothesis. Notice that the independence of spacings in exponential populations guarantees the independence of BSSp and WSSp.

An ANOSp table, similar to a one-way ANOVA table, can be shown in this context (see Table 1).

Table 1.

ANOSp table.
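The ANOSp decomposition can be computed directly from the samples. The sketch below assumes the following definitions, inferred from the maximum likelihood estimators and not copied from the article: TSSp sums the deviations of all observations from the overall minimum, WSSp sums the deviations of each observation from its own sample minimum, and BSSp sums the sample sizes times the deviations of each sample minimum from the overall minimum. The mean sums divide by k − 1 and N − k, respectively. Under these assumptions and the null hypothesis, the ratio would be referred to an F distribution with 2(k − 1) and 2(N − k) degrees of freedom. Function and key names are illustrative.

```python
def anosp(samples):
    """Analysis of spacings for k samples (assumed definitions, see lead-in)."""
    k = len(samples)
    sizes = [len(s) for s in samples]
    total_n = sum(sizes)
    minima = [min(s) for s in samples]
    overall_min = min(minima)

    tssp = sum(x - overall_min for s in samples for x in s)
    bssp = sum(n_i * (m_i - overall_min) for n_i, m_i in zip(sizes, minima))
    wssp = sum(x - m_i for s, m_i in zip(samples, minima) for x in s)

    mbssp = bssp / (k - 1)          # mean between sum of spacings
    mwssp = wssp / (total_n - k)    # mean within sum of spacings
    return {
        "TSSp": tssp, "BSSp": bssp, "WSSp": wssp,
        "MBSSp": mbssp, "MWSSp": mwssp, "F": mbssp / mwssp,
        "df": (2 * (k - 1), 2 * (total_n - k)),
    }

# Hypothetical data, used only to illustrate the arithmetic.
table = anosp([[2.1, 3.4, 2.9], [5.0, 4.2, 6.3, 4.8], [3.3, 3.9]])
```

For these illustrative data the decomposition gives WSSp = 6.2, BSSp = 10.8 and TSSp = 17.0.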

4.6. Analysis of Spacings (ANOSp) for Testing Homogeneity of Locations of Exponential Populations with Unequal Dispersions

If the exponential populations have unequal dispersions, the statistic defined in (32) is useless, because its distribution depends now on the nuisance scale parameters of the individual populations. However, a Satterthwaite approximation can be considered to eliminate its distribution’s dependence on those nuisance parameters.

Satterthwaite [15] showed that if are independent random variables such that , , the linear combination , with , , can be approximated using , where , i.e., , where the number of degrees of freedom is estimated using the estimator

Since under , the distribution of MBSSp does not depend on , we shall assume, without loss of generality, that . We shall also assume that from , in particular, , and therefore,

which can be expressed as

with , , and . In using and , , in Formula (33), the parameter is estimated by

On the other hand, noticing that

with , , and then considering , , we have

with . In using , , in Formula (33), the parameter is estimated by
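For reference, the generic Satterthwaite estimator of the degrees of freedom can be written in a few lines. The sketch below uses the textbook form: for independent scale estimates s_i with ν_i degrees of freedom and positive weights a_i, the estimate is (Σ a_i s_i)² divided by Σ (a_i s_i)²/ν_i. How the weights and component estimates are chosen for MBSSp and for the within sum of spacings is specific to the article and is not reproduced here. The function and argument names are illustrative.

```python
def satterthwaite_df(estimates, weights, dfs):
    """Generic Satterthwaite degrees of freedom for sum(a_i * s_i).

    estimates: component estimates s_i (e.g. variance or scale estimates)
    weights:   positive coefficients a_i of the linear combination
    dfs:       degrees of freedom nu_i attached to each component
    """
    terms = [a * s for a, s in zip(weights, estimates)]
    numerator = sum(terms) ** 2
    denominator = sum(term ** 2 / nu for term, nu in zip(terms, dfs))
    return numerator / denominator

# Two equal components with 10 degrees of freedom each pool to 20.
df_equal = satterthwaite_df([1.0, 1.0], [1.0, 1.0], [10, 10])

# Unequal components give a value between min(dfs) and sum(dfs).
df_unequal = satterthwaite_df([2.0, 0.5], [1.0, 1.0], [5, 7])
```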

5. Conclusions

The exact distributions of externally Studentized statistics in Gaussian samples and in exponential samples are readily obtained. The members of the location-scale exponential family with a pair of sufficient statistics for the parameters are as follows:

- , when the support is the real line;

- , when the support is the half-line (or the reverted exponential when the support is , in which case the pair of sufficient statistics is , and the maximum likelihood estimators of the parameters are and );

- when the support is a segment, in which case the pair of sufficient statistics is and the maximum likelihood estimators of the parameters are and .

While for Gaussian samples and for exponential samples the probability density functions of externally Studentized statistics are easily obtained, this is not possible for uniform samples. However, as uniform random variables are symmetric, the approximation under Smoothness Hypothesis 1 holds, although it is known from Hendriks et al. [11] that this is the worst framework to consider. Alternatively, as uniform samples can be logarithmically transformed into exponential data, inferences on the location parameter(s) can be dealt with using the results of Section 4.

On the other hand, it is, in general, impossible to obtain exact results for internally Studentized statistics. As Hotelling [25], Efron [10], and Lehmann [26] have shown, symmetry for the parent distribution is a useful property for Studentizations. The investigation of the approximation resulting from the smoothness hypothesis (17) for symmetric parents, inspired by the Gaussian case, shows that even in the worst case, identified as such by Hendriks et al. [11], the approximation in the tails is quite good, and therefore, it is usable for inferences on the location parameter.

With regard to asymmetric exponential parent distributions, from the independence of spacings, exact results either for one sample inferences on the location parameter or for the comparison of location parameters assuming equal dispersions are straightforward. An approximate solution for the unequal dispersions case, inspired by Satterthwaite’s [15] treatment for comparing means in heteroscedastic Gaussian parents, has been presented.

Author Contributions

Conceptualization, M.d.F.B., D.P. and M.L.R.; methodology, M.d.F.B. and D.P.; investigation, M.d.F.B., D.P. and M.L.R.; writing—original draft preparation, M.d.F.B. and D.P.; writing—review and editing, M.d.F.B., D.P. and M.L.R.; supervision, M.d.F.B. and D.P.; project administration, M.d.F.B. and D.P.; funding acquisition, M.d.F.B., D.P. and M.L.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially financed by national funds through FCT (Fundação para a Ciência e a Tecnologia), Portugal, under project UIDB/00006/2020 (https://doi.org/10.54499/UIDB/00006/2020), and research grant UIDB/00685/2020 of the CEEAplA (Centre of Applied Economics Studies of the Atlantic), School of Business and Economics of the University of the Azores.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Tables with Critical Values

Table A1.

Critical values of .


| n | 0.001 | 0.005 | 0.01 | 0.025 | 0.05 | 0.1 | 0.9 | 0.95 | 0.975 | 0.99 | 0.995 | 0.999 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | 0.000501 | 0.002513 | 0.005051 | 0.012821 | 0.026316 | 0.055556 | 4.5 | 9.5 | 19.5 | 49.5 | 99.5 | 499.5 |
| 3 | 0.000222 | 0.001115 | 0.002240 | 0.005666 | 0.011562 | 0.024110 | 1 | 1.614763 | 2.486079 | 4.216991 | 6.168750 | 14.408050 |
| 4 | 0.000136 | 0.000684 | 0.001373 | 0.003470 | 0.007068 | 0.014677 | 0.5 | 0.75 | 1.067030 | 1.618460 | 2.164490 | 4.047390 |
| 5 | 0.000096 | 0.000482 | 0.000966 | 0.002441 | 0.004966 | 0.010291 | 0.319126 | 0.462948 | 0.635782 | 0.917745 | 1.179750 | 1.999380 |
| 6 | 0.000073 | 0.000366 | 0.000735 | 0.001855 | 0.003771 | 0.007805 | 0.228990 | 0.325932 | 0.438821 | 0.616237 | 0.775077 | 1.244660 |
| 7 | 0.000058 | 0.000292 | 0.000587 | 0.001481 | 0.003010 | 0.006224 | 0.176015 | 0.247467 | 0.328973 | 0.453985 | 0.563222 | 0.874548 |
| 8 | 0.000048 | 0.000242 | 0.000485 | 0.001224 | 0.002487 | 0.005140 | 0.141563 | 0.197318 | 0.259996 | 0.354495 | 0.435671 | 0.661185 |
| 9 | 0.000041 | 0.000205 | 0.000411 | 0.001038 | 0.002108 | 0.004354 | 0.117566 | 0.162821 | 0.213143 | 0.288052 | 0.351592 | 0.524816 |
| 10 | 0.000035 | 0.000177 | 0.000356 | 0.000897 | 0.001822 | 0.003762 | 0.1 | 0.137805 | 0.179488 | 0.240930 | 0.292540 | 0.431222 |
| 11 | 0.000031 | 0.000156 | 0.000312 | 0.000788 | 0.001599 | 0.003301 | 0.086647 | 0.118930 | 0.154283 | 0.205986 | 0.249079 | 0.363559 |
| 12 | 0.000028 | 0.000138 | 0.000278 | 0.000700 | 0.001422 | 0.002934 | 0.076193 | 0.104239 | 0.134782 | 0.179165 | 0.215923 | 0.312669 |
| 13 | 0.000025 | 0.000124 | 0.000249 | 0.000629 | 0.001277 | 0.002634 | 0.067811 | 0.092518 | 0.119300 | 0.158009 | 0.189898 | 0.273188 |
| 14 | 0.000022 | 0.000112 | 0.000226 | 0.000570 | 0.001157 | 0.002386 | 0.060958 | 0.082974 | 0.106745 | 0.140945 | 0.168995 | 0.241782 |
| 15 | 0.000021 | 0.000103 | 0.000206 | 0.000520 | 0.001056 | 0.002177 | 0.055261 | 0.075069 | 0.096381 | 0.126926 | 0.151881 | 0.216280 |
| 16 | 0.000019 | 0.000094 | 0.000189 | 0.000478 | 0.000970 | 0.001999 | 0.050459 | 0.068426 | 0.087699 | 0.115228 | 0.137644 | 0.195213 |
| 17 | 0.000017 | 0.000087 | 0.000175 | 0.000441 | 0.000896 | 0.001847 | 0.046363 | 0.062774 | 0.080332 | 0.105336 | 0.125636 | 0.177552 |
| 18 | 0.000016 | 0.000081 | 0.000162 | 0.000410 | 0.000831 | 0.001714 | 0.042832 | 0.057913 | 0.074011 | 0.096874 | 0.115388 | 0.162561 |
| 19 | 0.000015 | 0.000076 | 0.000151 | 0.000382 | 0.000775 | 0.001597 | 0.039761 | 0.053694 | 0.068535 | 0.089564 | 0.106553 | 0.149697 |
| 20 | 0.000014 | 0.000071 | 0.000142 | 0.000357 | 0.000725 | 0.001495 | 0.037067 | 0.05 | 0.063751 | 0.083191 | 0.098865 | 0.138550 |
| 21 | 0.000013 | 0.000066 | 0.000133 | 0.000336 | 0.000681 | 0.001404 | 0.034687 | 0.046743 | 0.059538 | 0.077593 | 0.092122 | 0.128812 |
| 22 | 0.000012 | 0.000063 | 0.000125 | 0.000316 | 0.000642 | 0.001322 | 0.032571 | 0.043851 | 0.055804 | 0.072641 | 0.086166 | 0.120238 |
| 23 | 0.000012 | 0.000059 | 0.000118 | 0.000299 | 0.000606 | 0.001249 | 0.030679 | 0.041268 | 0.052474 | 0.068232 | 0.080871 | 0.112640 |
| 24 | 0.000011 | 0.000056 | 0.000112 | 0.000283 | 0.000574 | 0.001183 | 0.028978 | 0.038950 | 0.049488 | 0.064285 | 0.076137 | 0.105864 |
| 25 | 0.000011 | 0.000053 | 0.000107 | 0.000269 | 0.000545 | 0.001123 | 0.027441 | 0.036857 | 0.046796 | 0.060733 | 0.071880 | 0.099790 |
| 30 | 0.000008 | 0.000042 | 0.000085 | 0.000213 | 0.000433 | 0.000891 | 0.021568 | 0.028883 | 0.036567 | 0.047281 | 0.055805 | 0.076986 |
| 50 | 0.000004 | 0.000022 | 0.000045 | 0.000113 | 0.000230 | 0.000472 | 0.011186 | 0.014887 | 0.018732 | 0.024031 | 0.028200 | 0.038399 |

Table A2.

Critical values of .

| n | i | k | 0.001 | 0.005 | 0.01 | 0.025 | 0.05 | 0.1 | 0.9 | 0.95 | 0.975 | 0.99 | 0.995 | 0.999 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3 | 1 | 3 | 0.340957 | 0.350877 | 0.358697 | 0.375292 | 0.395936 | 0.429336 | 1.49071 | 2.10819 | 2.98142 | 4.71405 | 6.66667 | 14.9071 |

| 4 | 2 | 4 | 0.271553 | 0.289258 | 0.301567 | 0.325555 | 0.353308 | 0.395822 | 1.79672 | 2.60383 | 3.74135 | 5.99467 | 8.53244 | 19.2387 |

| 5 | 1 | 4 | 0.451275 | 0.481872 | 0.501286 | 0.536241 | 0.573179 | 0.624337 | 1.79163 | 2.31281 | 2.96466 | 4.08859 | 5.19617 | 9.00251 |

| 6 | 2 | 5 | 0.404304 | 0.439866 | 0.461814 | 0.500623 | 0.541023 | 0.597081 | 1.93635 | 2.52828 | 3.26727 | 4.54005 | 5.79359 | 10.0999 |

| 7 | 3 | 6 | 0.373282 | 0.412145 | 0.435759 | 0.477117 | 0.520069 | 0.580165 | 2.03545 | 2.67576 | 3.47458 | 4.84979 | 6.20392 | 10.855 |

| 8 | 2 | 6 | 0.495362 | 0.540184 | 0.566193 | 0.610203 | 0.654555 | 0.715025 | 1.93923 | 2.40243 | 2.94501 | 3.81446 | 4.6136 | 7.09137 |

| 9 | 3 | 7 | 0.469472 | 0.516524 | 0.543743 | 0.589912 | 0.636674 | 0.700813 | 2.00248 | 2.49299 | 3.0672 | 3.98691 | 4.83202 | 7.4518 |

| 10 | 4 | 8 | 0.449604 | 0.498404 | 0.526618 | 0.574574 | 0.623311 | 0.690388 | 2.05194 | 2.56382 | 3.1628 | 4.12196 | 5.00318 | 7.73456 |

| 11 | 3 | 8 | 0.540956 | 0.591963 | 0.620843 | 0.669181 | 0.717526 | 0.782997 | 1.96377 | 2.36465 | 2.81547 | 3.50589 | 4.11369 | 5.88409 |

| 12 | 4 | 9 | 0.523183 | 0.575748 | 0.605564 | 0.655587 | 0.70575 | 0.773818 | 1.99983 | 2.41515 | 2.88203 | 3.59686 | 4.22604 | 6.05843 |

| 13 | 5 | 10 | 0.508582 | 0.562481 | 0.593100 | 0.644558 | 0.696248 | 0.766474 | 2.02986 | 2.4572 | 2.93749 | 3.67270 | 4.31974 | 6.20397 |

| 14 | 4 | 10 | 0.580715 | 0.63532 | 0.665943 | 0.716882 | 0.767472 | 0.835382 | 1.95716 | 2.30928 | 2.6942 | 3.26553 | 3.75377 | 5.11685 |

| 15 | 5 | 11 | 0.567344 | 0.623225 | 0.654616 | 0.706903 | 0.758895 | 0.828738 | 1.98074 | 2.34184 | 2.73646 | 3.32208 | 3.82248 | 5.21933 |

| 16 | 6 | 12 | 0.555921 | 0.612927 | 0.644989 | 0.698448 | 0.751649 | 0.823149 | 2.00113 | 2.36998 | 2.7730 | 3.37101 | 3.88193 | 5.30806 |

| 17 | 5 | 12 | 0.61511 | 0.672086 | 0.703832 | 0.756351 | 0.808153 | 0.877114 | 1.94147 | 2.25603 | 2.59269 | 3.08098 | 3.48921 | 4.59421 |

| 18 | 6 | 13 | 0.604516 | 0.662577 | 0.694965 | 0.748586 | 0.801505 | 0.871973 | 1.95825 | 2.27896 | 2.62214 | 3.11981 | 3.53583 | 4.66184 |

| 19 | 7 | 14 | 0.595226 | 0.654259 | 0.687218 | 0.741813 | 0.795718 | 0.86751 | 1.97312 | 2.29927 | 2.64823 | 3.15422 | 3.57717 | 4.72183 |

| 20 | 6 | 14 | 0.645159 | 0.703758 | 0.736231 | 0.789683 | 0.842074 | 0.911308 | 1.92354 | 2.2086 | 2.50871 | 2.93625 | 3.28769 | 4.21667 |

| 21 | 7 | 15 | 0.636466 | 0.696005 | 0.729022 | 0.783394 | 0.836703 | 0.907156 | 1.93617 | 2.22574 | 2.53055 | 2.96473 | 3.32158 | 4.26483 |

| 22 | 8 | 16 | 0.628699 | 0.689089 | 0.722597 | 0.777795 | 0.831926 | 0.903471 | 1.94757 | 2.24118 | 2.55023 | 2.9904 | 3.35216 | 4.3083 |

| 23 | 7 | 16 | 0.671706 | 0.731426 | 0.764359 | 0.81832 | 0.870914 | 0.939971 | 1.90572 | 2.16709 | 2.43863 | 2.81995 | 3.12915 | 3.93126 |

| 24 | 8 | 17 | 0.664389 | 0.724931 | 0.758334 | 0.813078 | 0.866443 | 0.936514 | 1.91563 | 2.18046 | 2.45556 | 2.84183 | 3.15503 | 3.96745 |

| 25 | 9 | 18 | 0.657759 | 0.719053 | 0.752883 | 0.80834 | 0.862405 | 0.933397 | 1.92469 | 2.19266 | 2.47102 | 2.86182 | 3.17867 | 4.00052 |

| 30 | 10 | 21 | 0.711262 | 0.772902 | 0.806614 | 0.861427 | 0.914346 | 0.98310 | 1.87984 | 2.10779 | 2.33984 | 2.65864 | 2.91187 | 3.55044 |

| 50 | 16 | 34 | — | 0.885094 | 0.917836 | 0.970073 | 1.01943 | 1.082080 | 1.79297 | 1.95205 | 2.10773 | 2.31277 | 2.46933 | 2.84359 |

References

- Student. The probable error of a mean. Biometrika 1908, 6, 1–25.

- Geary, R.C. Distribution of Student’s ratio for nonnormal samples. Suppl. J. R. Stat. Soc. 1936, 3, 178–184.

- Darmois, G. Analyse générale des liaisons stochastiques: Étude particulière de l’analyse factorielle linéaire. Rev. L’Institut Int. Stat./Rev. Int. Stat. Inst. 1953, 21, 2–8.

- Skitovich, V.P. Linear forms of independent random variables and the normal distribution law. Izv. Akad. Nauk SSSR Ser. Mat. 1954, 18, 185–200 (English translation: Sel. Transl. Math. Stat. Probab. 1962, 2, 211–218).

- Koopman, B.O. On distributions admitting a sufficient statistic. Trans. Am. Math. Soc. 1936, 39, 399–409.

- Darmois, G. Sur les lois de probabilités à estimation exhaustive. Comptes Rendus L’Acad. Sci. Paris 1935, 200, 1265–1266.

- Pitman, E. Sufficient statistics and intrinsic accuracy. Math. Proc. Camb. Philos. Soc. 1936, 32, 567–579.

- Logan, B.F.; Mallows, C.L.; Rice, S.O.; Shepp, L.A. Limit distributions of self-normalized sums. Ann. Probab. 1973, 1, 788–809.

- de la Peña, V.H.; Lai, T.L.; Shao, Q.M. Self-Normalized Processes: Limit Theory and Statistical Applications; Springer: Berlin/Heidelberg, Germany, 2009.

- Efron, B. Student’s t-test under symmetry conditions. J. Am. Stat. Assoc. 1969, 64, 1278–1302.

- Hendriks, H.W.M.; Ijzerman-Boon, P.C.; Klaassen, C.A.J. Student’s t-statistic under unimodal densities. Austrian J. Stat. 2006, 35, 131–141.

- Perlo, V. On the distribution of Student’s ratio for samples of three drawn from the rectangular distribution. Biometrika 1933, 25, 203–204.

- Pexider, J.V. Notiz über Funkcionaltheoreme. Monatsh. Math. Phys. 1903, 14, 293–301.

- David, H.A. Order Statistics; Wiley: New York, NY, USA, 1981.

- Satterthwaite, F.E. An approximate distribution of estimates of variance components. Biom. Bull. 1946, 2, 110–114.

- van Zwet, W. Convex Transformations of Random Variables, 7th ed.; Mathematical Centre: Amsterdam, The Netherlands, 1964.

- van Zwet, W. Convex transformations: A new approach to skewness and kurtosis. Statist. Neerl. 1964, 18, 433–441.

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables, 8th ed.; Dover: New York, NY, USA, 1972.

- Slutsky, E. Über stochastische Asymptoten und Grenzwerte. Metron 1925, 5, 3–89.

- Hampel, F.R. Contributions to the Theory of Robust Estimation. Ph.D. Thesis, University of California, Berkeley, CA, USA, 1968.

- Ronchetti, E. The main contributions of robust statistics to statistical science and a new challenge. Metron 2021, 79, 127–135.

- Bhattacharya, S.; Kamper, F.; Beirlant, J. Outlier detection based on extreme value theory and applications. Scand. J. Stat. 2023, 50, 1466–1502.

- Basu, D. On statistics independent of a complete sufficient statistic. Sankhyā Indian J. Stat. 1955, 15, 377–380.

- Brilhante, M.F.; Kotz, S. Infinite divisibility of the spacings of a Kotz–Kozubowski–Podgórski generalized Laplace model. Stat. Probab. Lett. 2008, 78, 2433–2436.

- Hotelling, H. The behavior of some standard statistical tests under nonstandard conditions. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability (Volume 1: Contributions to the Theory of Statistics); Neyman, J., Ed.; University of California Press: Berkeley, CA, USA, 1961; pp. 319–359.

- Lehmann, E.L. “Student” and small-sample theory. Stat. Sci. 1999, 14, 418–426.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).