An Adaptive Fatigue Detection System Based on 3D CNNs and Ensemble Models

Abstract

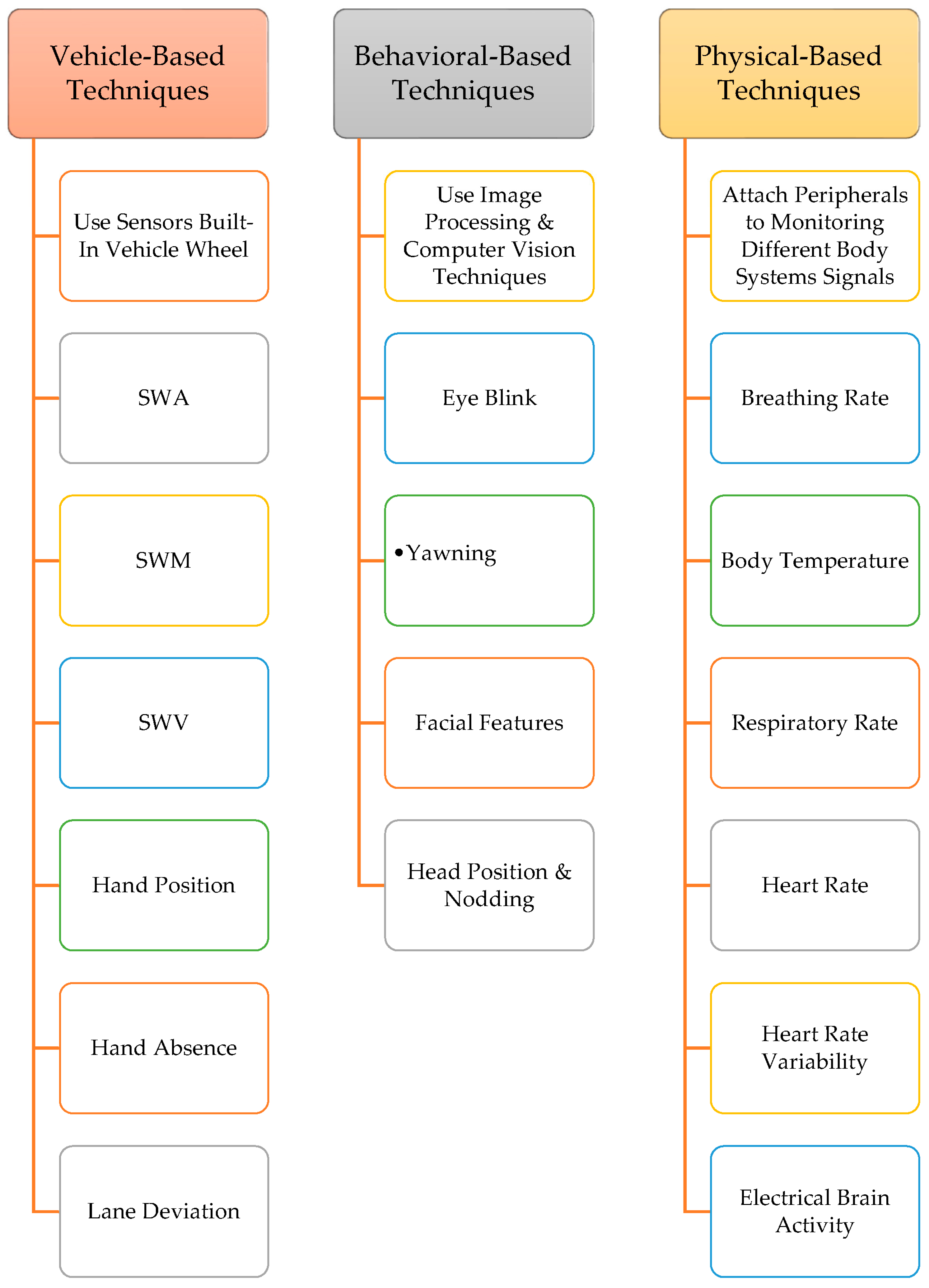

1. Introduction

1.1. Related Work

1.2. Novelty and Contributions

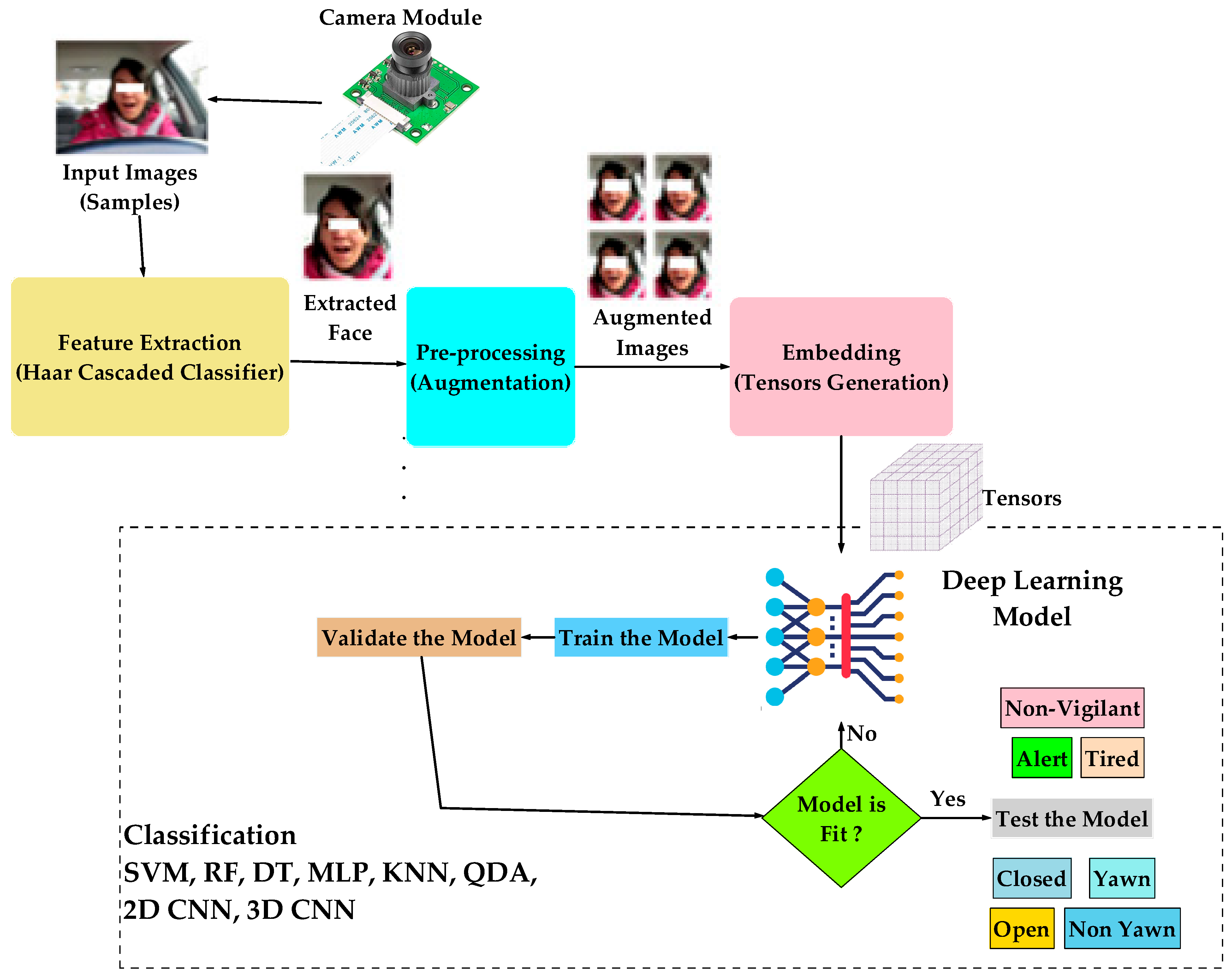

- To extract features from images and videos using the Haar Cascade Classifier (HCC).

- To investigate a cascaded system that detects both tiredness and eye closure. To the best of the authors’ knowledge, this is the first such system proposed for this problem.

- To explore an improved approach to detecting fatigue from images using machine learning methods, namely SVM, RF, DT, KNN, QDA, MLP, and LR.

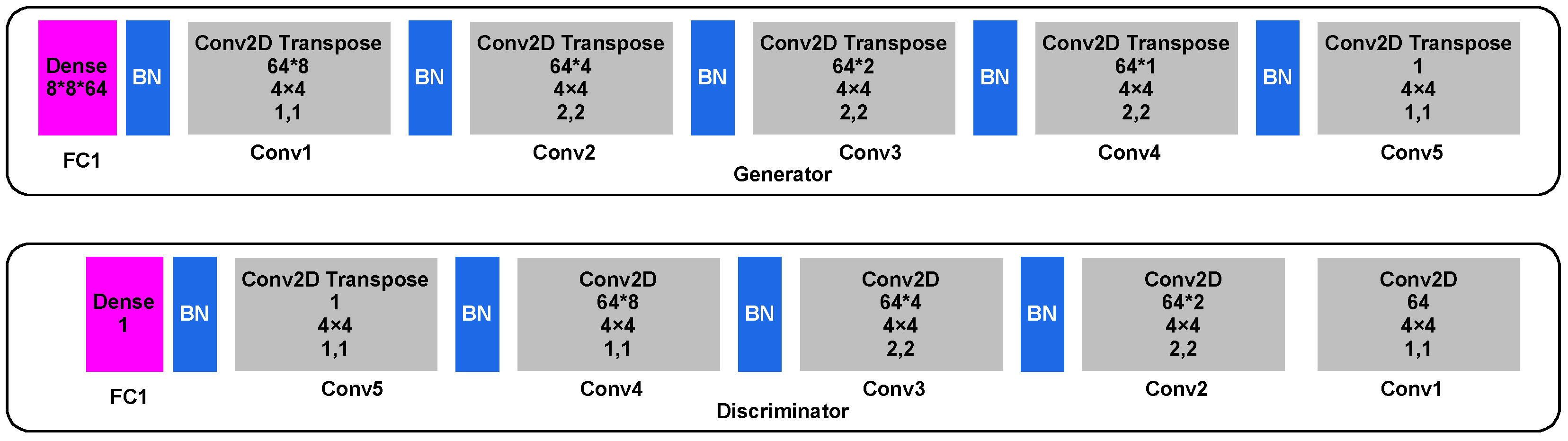

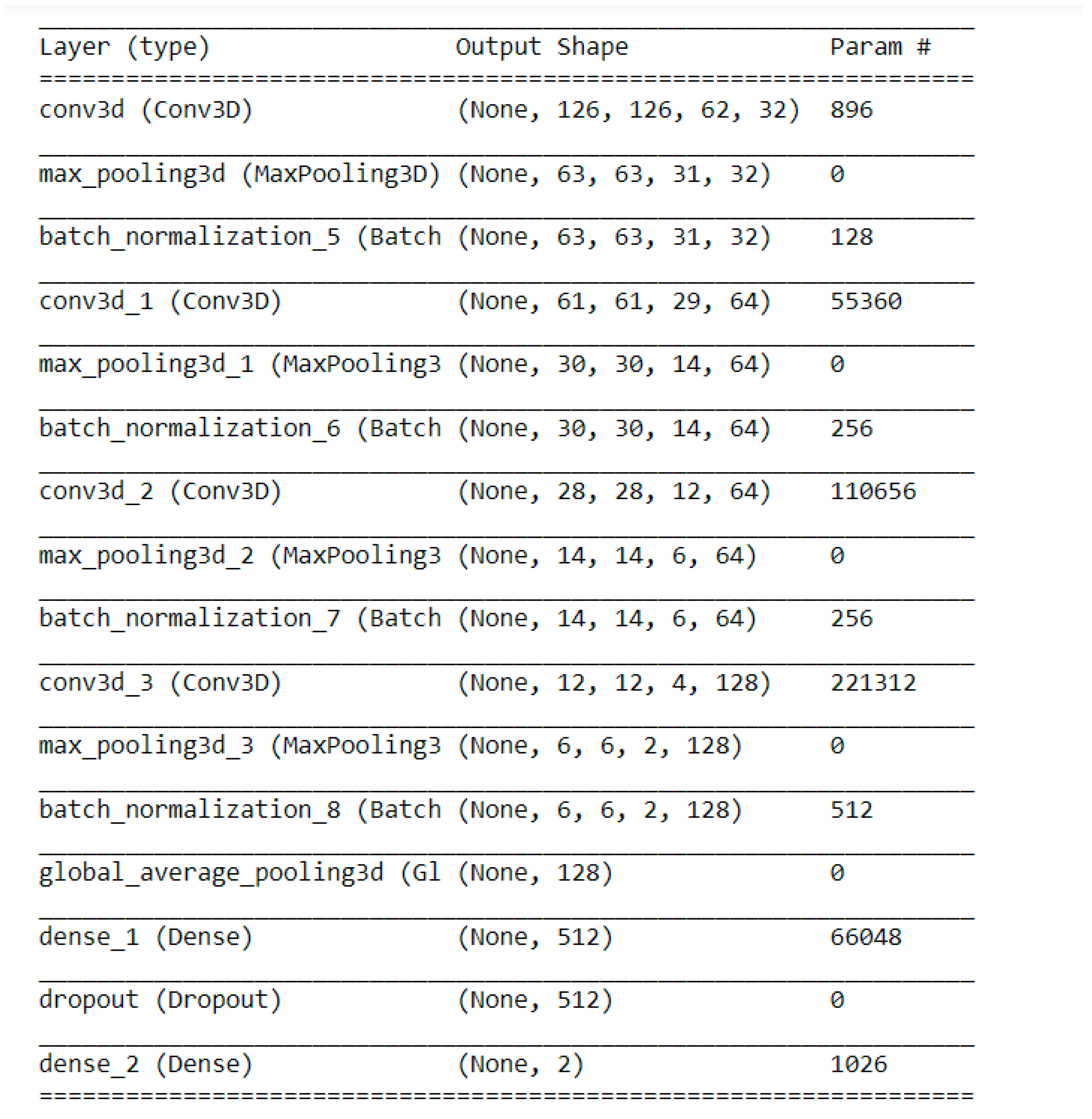

- To design deep learning models based on 2D and 3D CNNs that process RGB input data with specific hyperparameters.

- To compare the proposed models and identify the optimal one in terms of detection accuracy and testing time.
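
As a rough illustration of the Haar-cascade step named in the first contribution, the sketch below uses the frontal-face cascade that ships with OpenCV. The choice of cascade file, the `scaleFactor` and `minNeighbors` values, and the helper names `detect_faces` and `largest_box` are assumptions made for illustration; the text above does not state which cascade or parameters were used.

```python
def detect_faces(gray_image):
    """Return (x, y, w, h) boxes found by OpenCV's bundled frontal-face Haar cascade."""
    import cv2  # imported lazily so largest_box() works without OpenCV installed

    cascade_path = cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    cascade = cv2.CascadeClassifier(cascade_path)
    # scaleFactor and minNeighbors are illustrative values, not taken from the paper.
    boxes = cascade.detectMultiScale(gray_image, scaleFactor=1.1, minNeighbors=5)
    return [tuple(int(v) for v in box) for box in boxes]


def largest_box(boxes):
    """Pick the box with the largest area, or None when nothing was detected."""
    if not boxes:
        return None
    return max(boxes, key=lambda box: box[2] * box[3])
```

Keeping only the largest detection is one simple way to focus on the driver when several face-like regions are found; other selection rules are equally plausible.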

2. Materials and Methods

| Algorithm 1: Steps of Drowsiness Detection in the Proposed System |
|---|
| Input: data |
| Step 1: Face extraction |
| Step 2: Face detection |
| Step 3: Drowsiness detection from the face: if yawn, alert; else if tired, alert; else go to Step 5; end if |
| Step 4: Eye detection |
| Step 5: Drowsiness detection from the eye: if yawn, alert; else go to Step 1; end if |
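
The control flow of Algorithm 1 can be sketched as a single function that raises an alert at the face stage when possible and falls back to the eye stage otherwise. This is a minimal sketch, not the authors' implementation: the four callables, the function name `process_frame`, and the eye-stage label `"drowsy"` are hypothetical placeholders, while the face-stage labels `"yawn"` and `"tired"` come from the algorithm.

```python
def process_frame(frame, detect_face, classify_face, detect_eyes, classify_eyes):
    """Return True when an alert should be raised for this frame.

    All four callables are hypothetical stand-ins for the detectors and
    classifiers described in the paper.
    """
    face = detect_face(frame)
    if face is None:
        return False  # no face found: move on to the next frame

    # Face stage: yawning or tiredness triggers the alert immediately.
    if classify_face(face) in ("yawn", "tired"):
        return True

    # Eye stage: only reached when the face stage raised no alert.
    eyes = detect_eyes(face)
    if eyes is None:
        return False
    # "drowsy" is an assumed label for the eye-stage decision.
    return classify_eyes(eyes) == "drowsy"
```

Returning `False` corresponds to the "go to Step 1" branch: the caller simply continues with the next frame.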

2.1. Image Augmentation

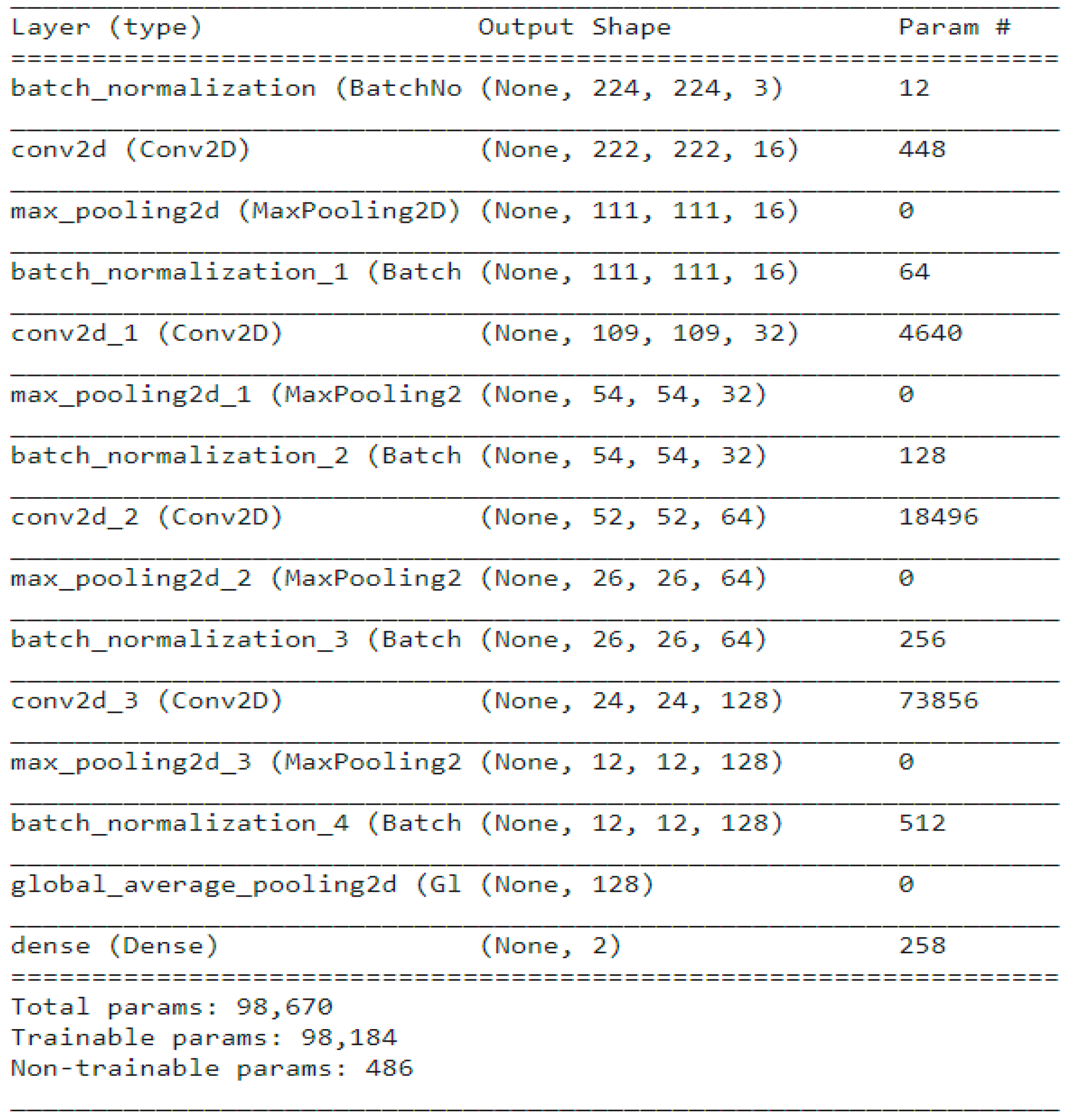

2.2. Classification

3. Results

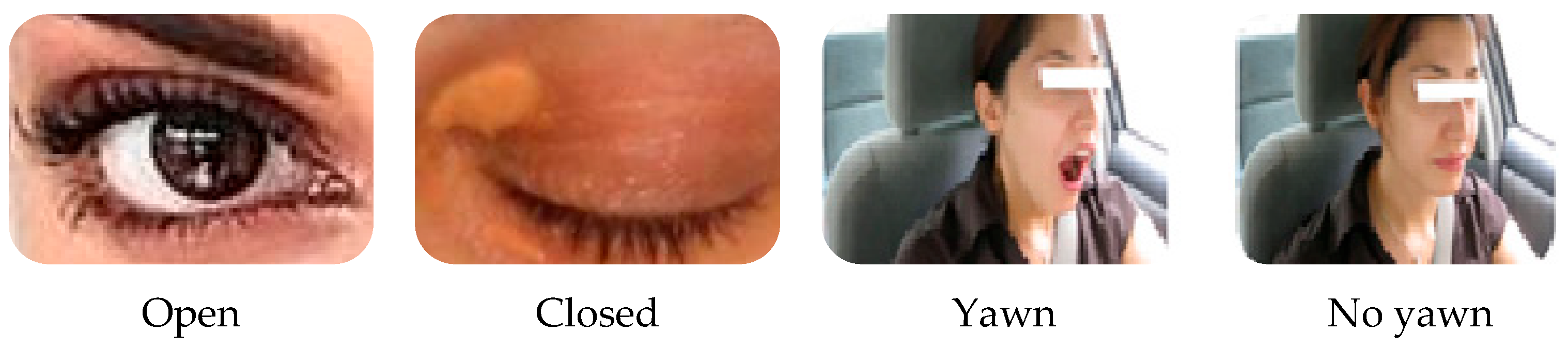

3.1. Datasets

3.2. Evaluation Metrics

3.3. Hyperparameter Setting

3.4. Simulation Results

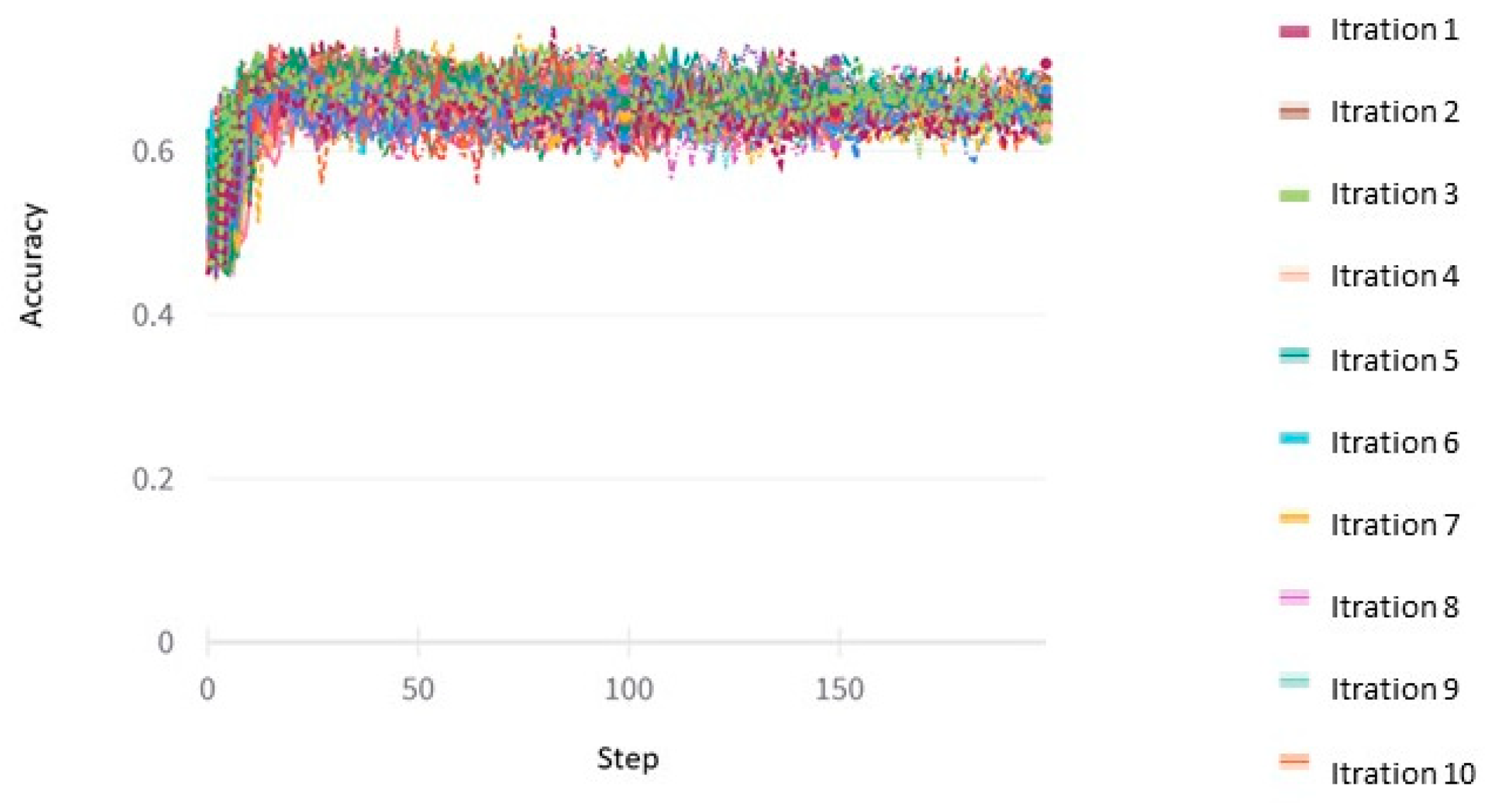

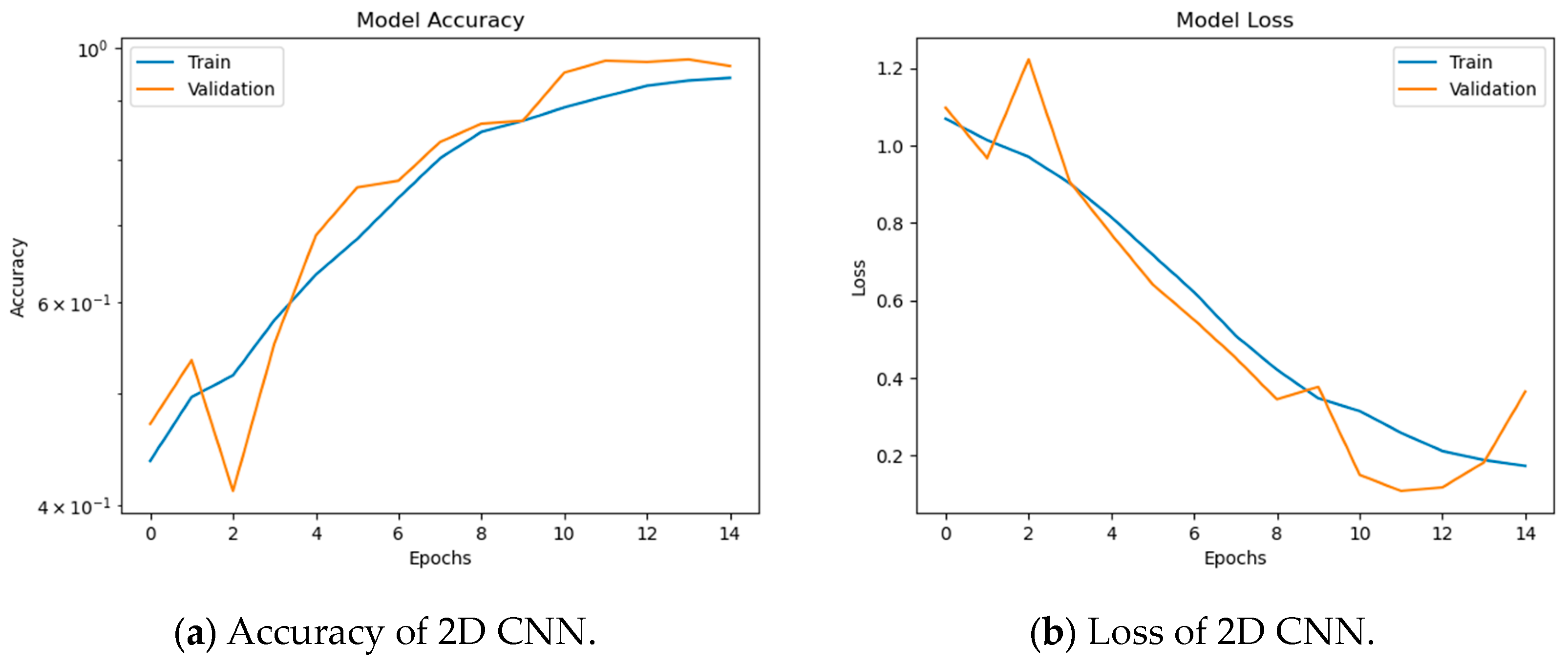

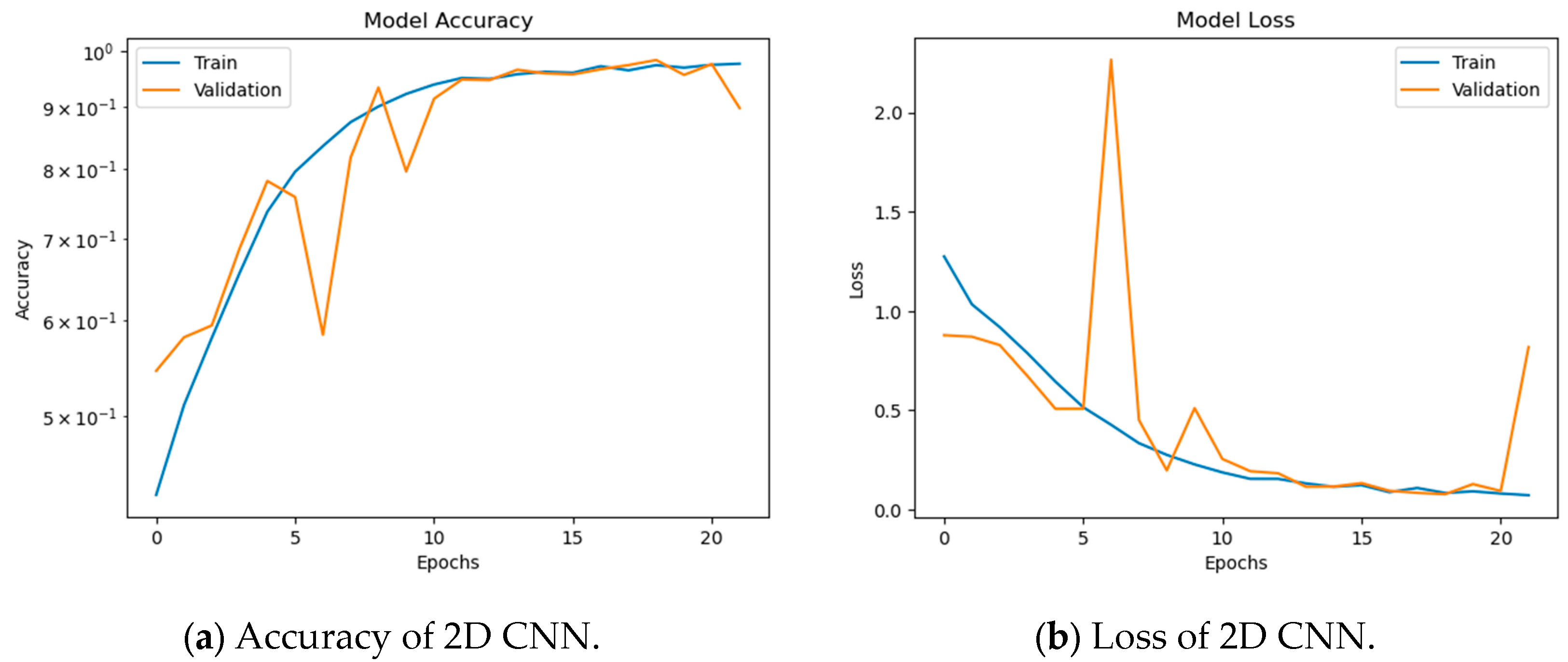

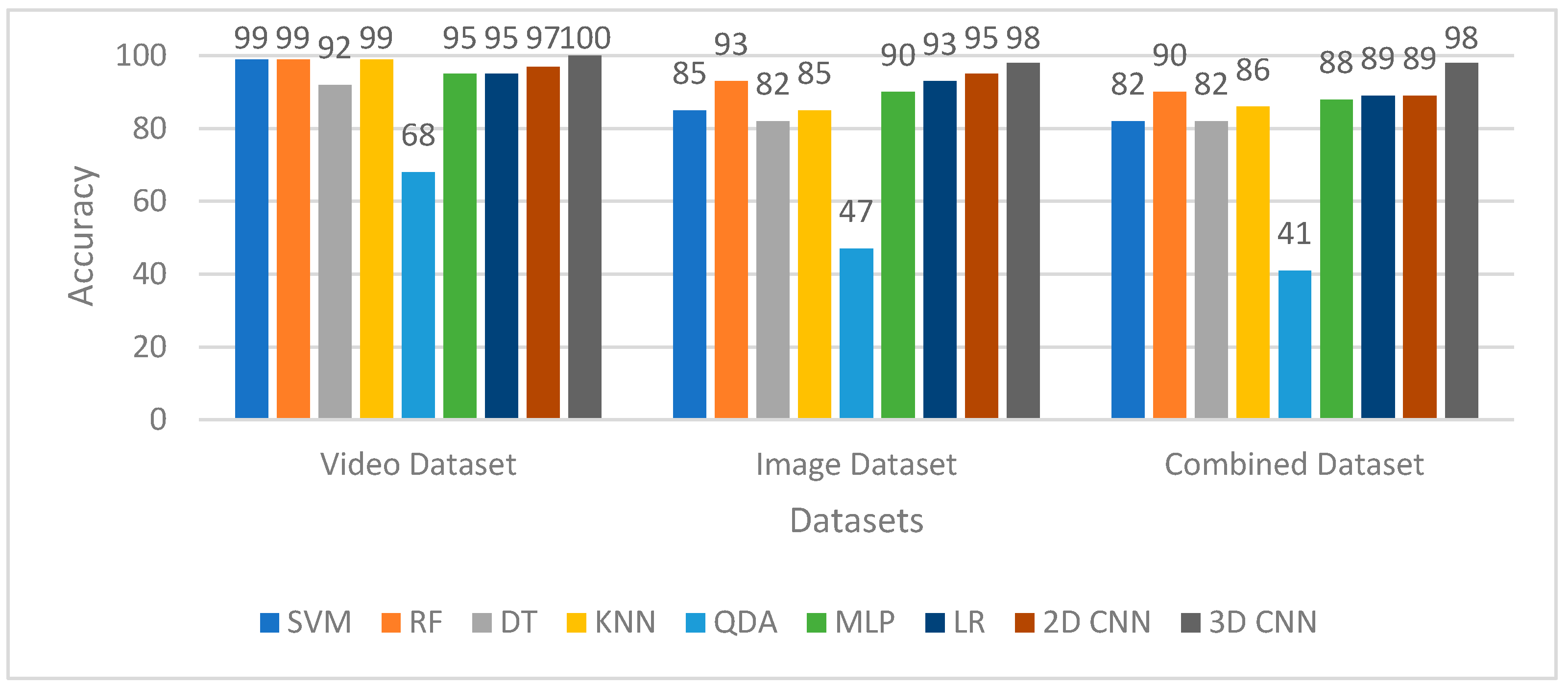

3.4.1. Simulation Results of DROZY Video Dataset

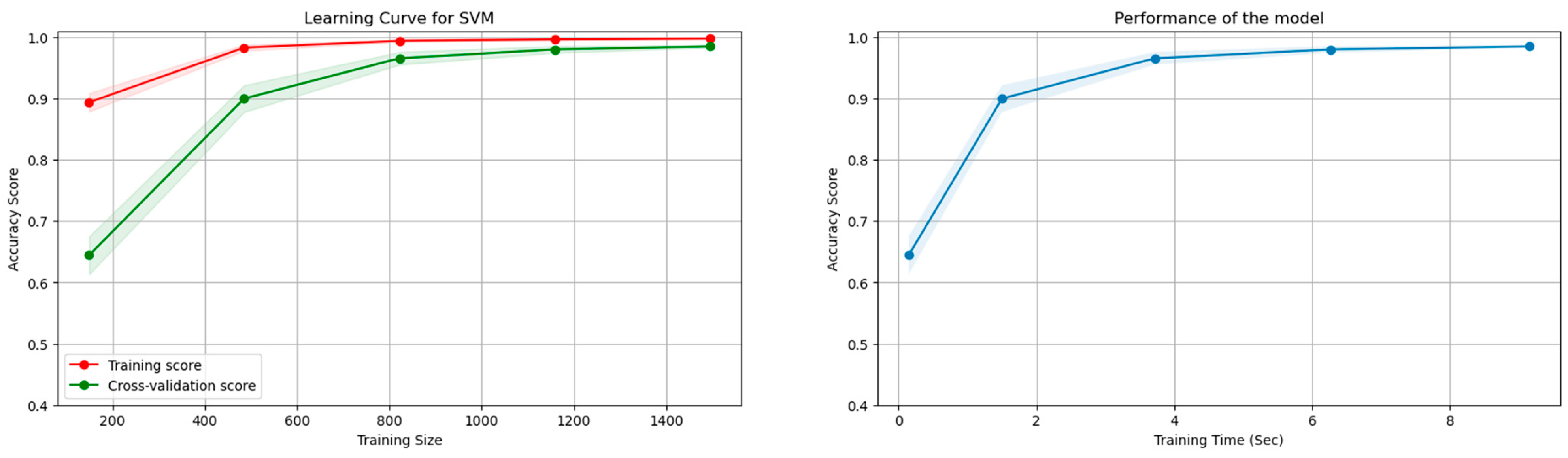

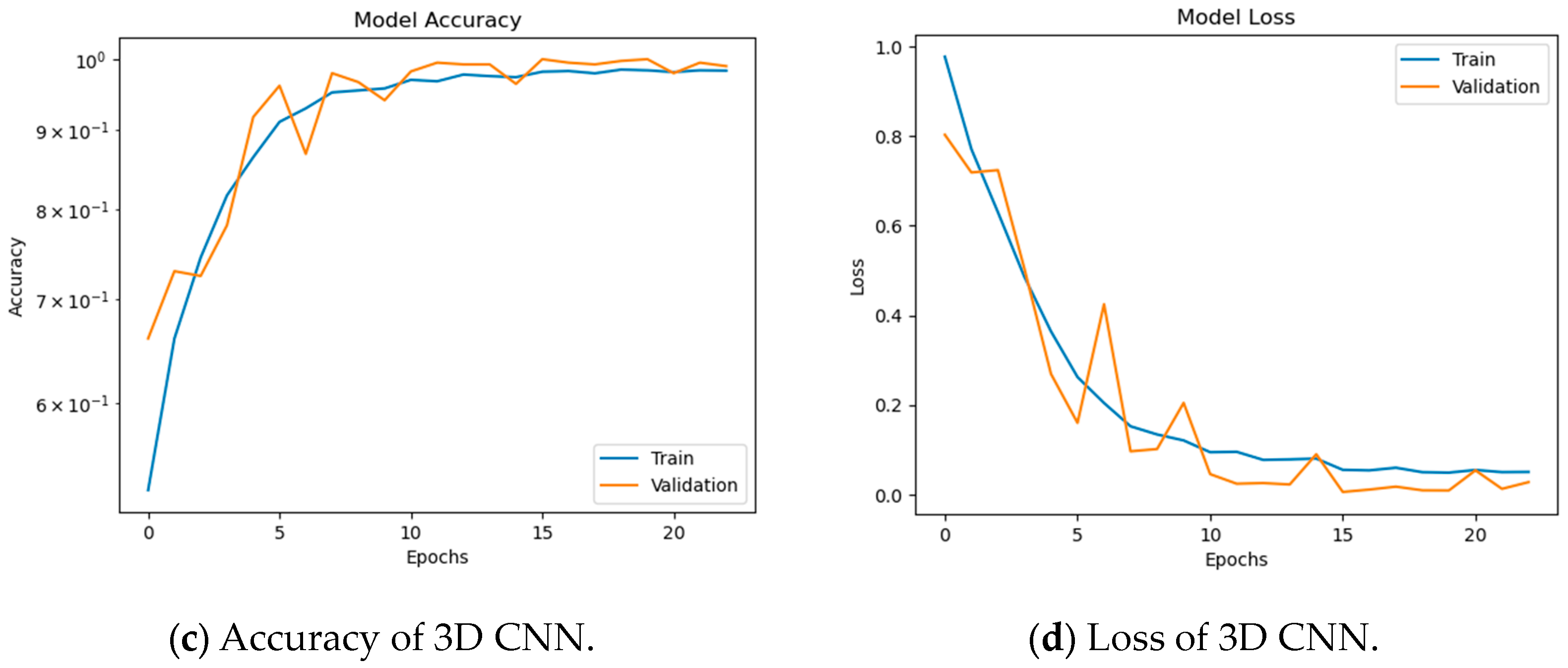

3.4.2. Simulation Results of Drowsiness Image Dataset

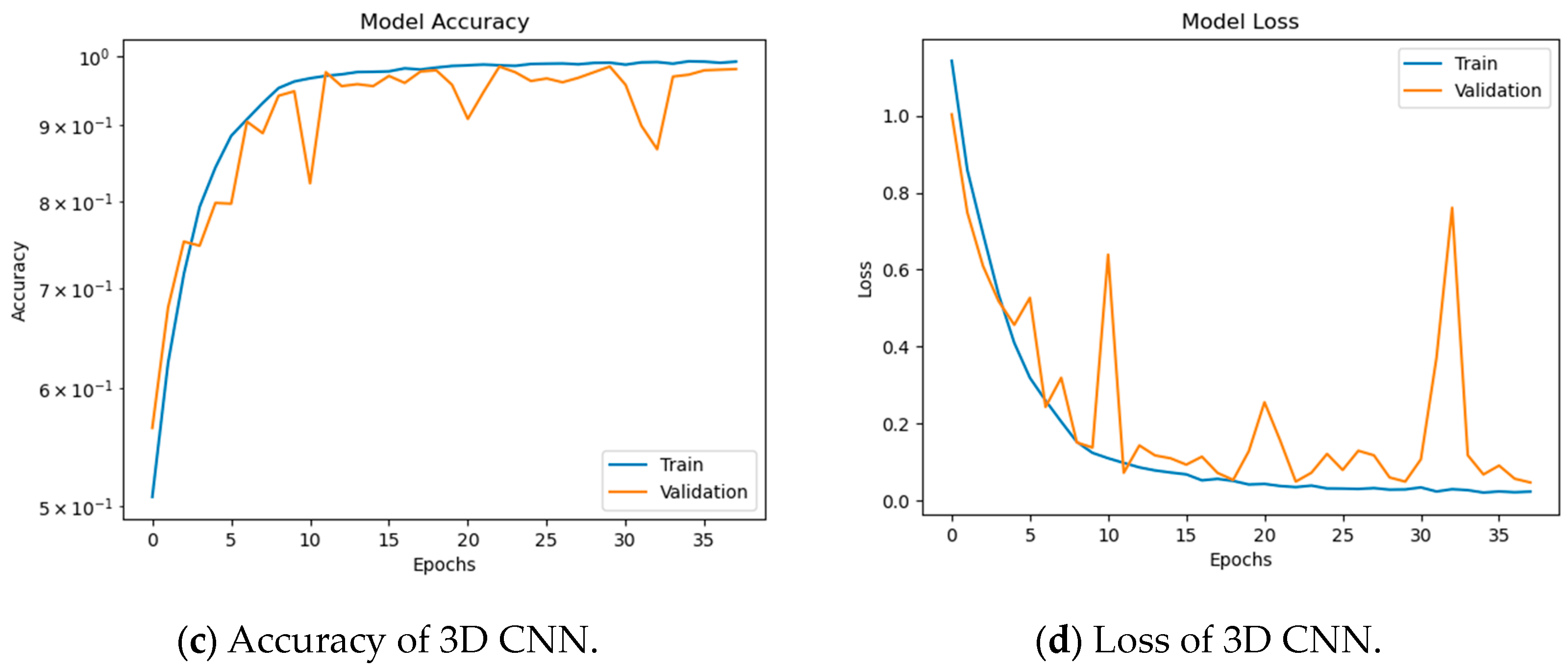

3.4.3. Simulation Results of the Combined Dataset

4. Discussion

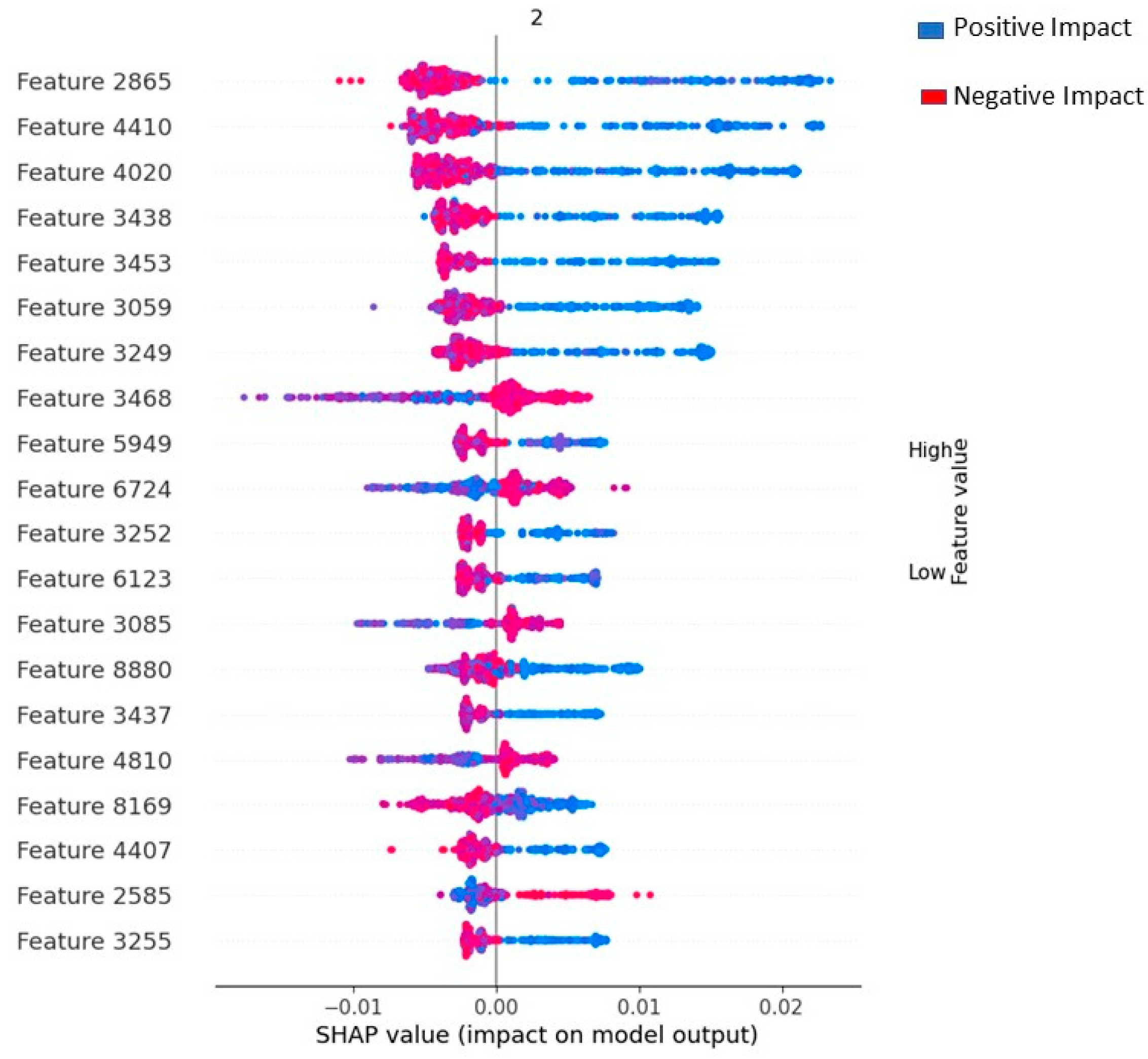

4.1. Explainability and Features Impact

4.2. Results Discussion and Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Abbas, Q.; Alsheddy, A. Driver Fatigue Detection Systems Using Multi-Sensors, Smartphone, and Cloud-Based Computing Platforms: A Comparative Analysis. Sensors 2020, 21, 56.

2. Ramzan, M.; Khan, H.U.; Awan, S.M.; Ismail, A.; Ilyas, M.; Mahmood, A. A Survey on State-of-the-Art Drowsiness Detection Techniques. IEEE Access 2019, 7, 61904–61919.

3. Niloy, A.R.; Chowdhury, A.I.; Sharmin, N. A Brief Review on Different Driver’s Drowsiness Detection Techniques. Int. J. Image Graph. Signal Process. 2020, 10, 41.

4. Choudhary, P.; Sharma, R.; Singh, G.; Das, S. A Survey Paper on Drowsiness Detection & Alarm System for Drivers. Int. Res. J. Eng. Technol. 2016, 3, 1433–1437.

5. Khan, M.Q.; Lee, S. A Comprehensive Survey of Driving Monitoring and Assistance Systems. Sensors 2019, 19, 2574.

6. Chen, L.; Zhi, X.; Wang, H.; Wang, G.; Zhou, Z.; Yazdani, A.; Zheng, X. Driver Fatigue Detection via Differential Evolution Extreme Learning Machine Technique. Electronics 2020, 9, 1850.

7. Fuletra, J.D.; Bosamiya, D. A Survey on Drivers Drowsiness Detection Techniques. Int. J. Recent Innov. Trends Comput. Commun. 2013, 1, 816–819.

8. Bergasa, L.M.; Nuevo, J.; Sotelo, M.A.; Barea, R.; Lopez, M.E. Real-Time System for Monitoring Driver Vigilance. IEEE Trans. Intell. Transp. Syst. 2006, 7, 63–77.

9. Abtahi, S.; Hariri, B.; Shirmohammadi, S. Driver Drowsiness Monitoring Based on Yawning Detection. In Proceedings of the 2011 IEEE International Instrumentation and Measurement Technology Conference, Hangzhou, China, 10–12 May 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–4.

10. Flores, M.J.; Armingol, J.M.; de la Escalera, A. Real-Time Warning System for Driver Drowsiness Detection Using Visual Information. J. Intell. Robot. Syst. 2010, 59, 103–125.

11. Lenskiy, A.A.; Lee, J.-S. Driver’s Eye Blinking Detection Using Novel Color and Texture Segmentation Algorithms. Int. J. Control. Autom. Syst. 2012, 10, 317–327.

12. Jo, J.; Lee, S.J.; Kim, J.; Jung, H.G.; Park, K.R. Vision-Based Method for Detecting Driver Drowsiness and Distraction in Driver Monitoring System. Opt. Eng. 2011, 50, 127202.

13. Malla, A.M.; Davidson, P.R.; Bones, P.J.; Green, R.; Jones, R.D. Automated Video-Based Measurement of Eye Closure for Detecting Behavioral Microsleep. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 6741–6744.

14. Fu, Y.; Fu, H.; Zhang, S. A Novel Safe Life Extension Method for Aircraft Main Landing Gear Based on Statistical Inference of Test Life Data and Outfield Life Data. Symmetry 2023, 15, 880.

15. Yang, G.; Tang, C.; Liu, X. DualAC2NN: Revisiting and Alleviating Alert Fatigue from the Detection Perspective. Symmetry 2022, 14, 2138.

16. Xiao, C.; Han, L.; Chen, S. Automobile Driver Fatigue Detection Method Based on Facial Image Recognition under Single Sample Condition. Symmetry 2021, 13, 1195.

17. Sigari, M.-H.; Fathy, M.; Soryani, M. A Driver Face Monitoring System for Fatigue and Distraction Detection. Int. J. Veh. Technol. 2013, 2013, 263983.

18. Vijayan, V.; Sherly, E. Real Time Detection System of Driver Drowsiness Based on Representation Learning Using Deep Neural Networks. J. Intell. Fuzzy Syst. 2019, 36, 1977–1985.

19. Galarza, E.E.; Egas, F.D.; Silva, F.M.; Velasco, P.M.; Galarza, E.D. Real Time Driver Drowsiness Detection Based on Driver’s Face Image Behavior Using a System of Human Computer Interaction Implemented in a Smartphone. In Proceedings of the International Conference on Information Technology & Systems, San Francisco, CA, USA, 13–16 December 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 563–572.

20. Arceda, V.E.M.; Nina, J.P.C.; Fabian, K.M.F. A Survey on Drowsiness Detection Techniques. In Proceedings of the Iberoamerican Conference of Computer Human Interaction, Arequipa, Perú, 16–18 September 2020; Volume 15, p. 2021.

21. Ouabida, E.; Essadike, A.; Bouzid, A. Optical Correlator Based Algorithm for Driver Drowsiness Detection. Optik 2020, 204, 164102.

22. Maior, C.B.S.; das Chagas Moura, M.J.; Santana, J.M.M.; Lins, I.D. Real-Time Classification for Autonomous Drowsiness Detection Using Eye Aspect Ratio. Expert Syst. Appl. 2020, 158, 113505.

23. Saurav, S.; Mathur, S.; Sang, I.; Prasad, S.S.; Singh, S. Yawn Detection for Driver’s Drowsiness Prediction Using Bi-Directional LSTM with CNN Features. In Proceedings of the International Conference on Intelligent Human Computer Interaction, Copenhagen, Denmark, 19–24 July 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 189–200.

24. Abtahi, S.; Omidyeganeh, M.; Shirmohammadi, S.; Hariri, B. YawDD: A Yawning Detection Dataset. In Proceedings of the 5th ACM Multimedia Systems Conference, Singapore, 19–21 March 2014; pp. 24–28.

25. Weng, C.-H.; Lai, Y.-H.; Lai, S.-H. Driver Drowsiness Detection via a Hierarchical Temporal Deep Belief Network. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2017; pp. 117–133.

26. Biswal, A.K.; Singh, D.; Pattanayak, B.K.; Samanta, D.; Yang, M.-H. IoT-Based Smart Alert System for Drowsy Driver Detection. Wirel. Commun. Mob. Comput. 2021, 2021, 6627217.

27. Jeon, Y.; Kim, B.; Baek, Y. Ensemble CNN to Detect Drowsy Driving with In-Vehicle Sensor Data. Sensors 2021, 21, 2372.

28. Sedik, A.; Marey, M.; Mostafa, H. WFT-Fati-Dec: Enhanced Fatigue Detection AI System Based on Wavelet Denoising and Fourier Transform. Appl. Sci. 2023, 13, 2785.

29. Kamaruzzaman, M.A.; Othman, M.; Hassan, R.; Rahman, A.W.A.; Mahri, N. EEG Features for Driver’s Mental Fatigue Detection: A Preliminary Work. Int. J. Perceptive Cogn. Comput. 2023, 9, 88–94.

30. Feng, W.; Zeng, K.; Zeng, X.; Chen, J.; Peng, H.; Hu, B.; Liu, G. Predicting Physical Fatigue in Athletes in Rope Skipping Training Using ECG Signals. Biomed. Signal Process. Control 2023, 83, 104663.

31. Alharbey, R.; Dessouky, M.M.; Sedik, A.; Siam, A.I.; Elaskily, M.A. Fatigue State Detection for Tired Persons in Presence of Driving Periods. IEEE Access 2022, 10, 79403–79418.

32. Zhu, M.; Chen, J.; Li, H.; Liang, F.; Han, L.; Zhang, Z. Vehicle Driver Drowsiness Detection Method Using Wearable EEG Based on Convolution Neural Network. Neural Comput. Appl. 2021, 33, 13965–13980.

33. Hemantkumar, B.; Shashikant, D. Non-Intrusive Detection and Prediction of Driver’s Fatigue Using Optimized Yawning Technique. Mater. Today Proc. 2017, 4, 7859–7866.

34. Knapik, M.; Cyganek, B. Driver’s Fatigue Recognition Based on Yawn Detection in Thermal Images. Neurocomputing 2019, 338, 274–292.

35. Liu, Z.; Peng, Y.; Hu, W. Driver Fatigue Detection Based on Deeply-Learned Facial Expression Representation. J. Vis. Commun. Image Represent. 2020, 71, 102723.

36. Devos, H.; Alissa, N.; Lynch, S.; Sadeghi, M.; Akinwuntan, A.E.; Siengsukon, C. Real-Time Assessment of Daytime Sleepiness in Drivers with Multiple Sclerosis. Mult. Scler. Relat. Disord. 2021, 47, 102607.

37. Siam, A.I.; Soliman, N.F.; Algarni, A.D.; Abd El-Samie, F.E.; Sedik, A. Deploying Machine Learning Techniques for Human Emotion Detection. Comput. Intell. Neurosci. 2022, 2022, 8032673.

38. El-Moneim, S.A.; Sedik, A.; Nassar, M.A.; El-Fishawy, A.S.; Sharshar, A.M.; Hassan, S.E.A.; Mahmoud, A.Z.; Dessouky, M.I.; El-Banby, G.M.; El-Samie, F.E.A.; et al. Text-Dependent and Text-Independent Speaker Recognition of Reverberant Speech Based on CNN. Int. J. Speech Technol. 2021, 24, 993–1006.

39. Ali, A.M.; Benjdira, B.; Koubaa, A.; El-Shafai, W.; Khan, Z.; Boulila, W. Vision Transformers in Image Restoration: A Survey. Sensors 2023, 23, 2385.

40. Hammad, M.; Abd El-Latif, A.A.; Hussain, A.; Abd El-Samie, F.E.; Gupta, B.B.; Ugail, H.; Sedik, A. Deep Learning Models for Arrhythmia Detection in IoT Healthcare Applications. Comput. Electr. Eng. 2022, 100, 108011.

41. Ibrahim, F.E.; Emara, H.M.; El-Shafai, W.; Elwekeil, M.; Rihan, M.; Eldokany, I.M.; Taha, T.E.; El-Fishawy, A.S.; El-Rabaie, E.M.; Abdellatef, E. Deep Learning-based Seizure Detection and Prediction from EEG Signals. Int. J. Numer. Method. Biomed. Eng. 2022, 38, e3573.

42. Shoaib, M.R.; Emara, H.M.; Elwekeil, M.; El-Shafai, W.; Taha, T.E.; El-Fishawy, A.S.; El-Rabaie, E.-S.M.; El-Samie, F.E.A. Hybrid Classification Structures for Automatic COVID-19 Detection. J. Ambient Intell. Humaniz. Comput. 2022, 13, 4477–4492.

43. Daoui, A.; Yamni, M.; Karmouni, H.; Sayyouri, M.; Qjidaa, H.; Motahhir, S.; Jamil, O.; El-Shafai, W.; Algarni, A.D.; Soliman, N.F. Efficient Biomedical Signal Security Algorithm for Smart Internet of Medical Things (IoMTs) Applications. Electronics 2022, 11, 3867.

44. Li, L.; Jamieson, K.; DeSalvo, G.; Rostamizadeh, A.; Talwalkar, A. Hyperband: A Novel Bandit-Based Approach to Hyperparameter Optimization. J. Mach. Learn. Res. 2017, 18, 6765–6816.

45. Crammer, K.; Singer, Y. On the Algorithmic Implementation of Multiclass Kernel-Based Vector Machines. J. Mach. Learn. Res. 2001, 2, 265–292.

46. Massoz, Q.; Langohr, T.; François, C.; Verly, J.G. The ULg Multimodality Drowsiness Database (Called DROZY) and Examples of Use. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–7.

47. Bolboacă, S.D.; Jäntschi, L. Sensitivity, Specificity, and Accuracy of Predictive Models on Phenols Toxicity. J. Comput. Sci. 2014, 5, 345–350.

48. Gwak, J.; Hirao, A.; Shino, M. An Investigation of Early Detection of Driver Drowsiness Using Ensemble Machine Learning Based on Hybrid Sensing. Appl. Sci. 2020, 10, 2890.

49. Bakheet, S.; Al-Hamadi, A. A Framework for Instantaneous Driver Drowsiness Detection Based on Improved HOG Features and Naïve Bayesian Classification. Brain Sci. 2021, 11, 240.

| Person | Alert (frames) | Non-Vigilant (frames) | Tired (frames) |
|---|---|---|---|
| 1 | 17,865 | 15,195 | 14,185 |
| 2 | 17,899 | 14,156 | 13,033 |
| 3 | 17,882 | 13,540 | 14,198 |
| 6 | 17,789 | 13,079 | 14,272 |
| 7 | 17,898 | 14,163 | 13,167 |
| 8 | 17,913 | 14,198 | 14,331 |
| 10 | 17,863 | 17,886 | 14,204 |
| 11 | 17,866 | 17,900 | 14,339 |
| 12 | 17,914 | 17,861 | 17,972 |
| 13 | 17,889 | 17,908 | 17,889 |
| 14 | 17,902 | 17,198 | 17,875 |

| Model | Hyperparameters |
|---|---|
| SVM | C: 275; Gamma: ‘scale’; Kernel: ‘rbf’ |
| RF | Number of estimators: 79; Criterion: ‘entropy’ |
| DT | Criterion: ‘gini’; Minimum samples leaf: 1; Minimum samples split: 2; CCP alpha: 0 |
| KNN | Number of neighbors: 1; Leaf size: 30; Metric: ‘minkowski’; P: 2; Weights distribution: ‘uniform’ |
| QDA | Tol: 0.0001 |
| MLP | Number of hidden layers: 2; Hidden layer sizes: [44, 45]; Activation: ‘relu’; Maximum number of iterations: 200; Optimizer: ‘adam’ |
| LR | Optimizer: ‘lbfgs’; C: 1.0; Fit intercept: True |
| 2D CNN | Optimizer: ‘adam’; Epochs: automatic (early stopping); Batch size: 20; Activation function: ‘relu’; Learning rate: 0.01 |
| 3D CNN | Optimizer: ‘adam’; Epochs: automatic (early stopping); Batch size: 20; Activation function: ‘relu’; Learning rate: 0.01 |
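
The classical-model settings in the table above map naturally onto scikit-learn constructor arguments. The sketch below is written under the assumption that scikit-learn (or a library with the same parameter names) was used, which the table suggests but does not state; the names `HYPERPARAMS` and `build_models` are hypothetical.

```python
# Hyperparameters from the table, keyed by scikit-learn keyword names (an assumption).
HYPERPARAMS = {
    "SVM": {"C": 275, "gamma": "scale", "kernel": "rbf"},
    "RF": {"n_estimators": 79, "criterion": "entropy"},
    "DT": {"criterion": "gini", "min_samples_leaf": 1,
           "min_samples_split": 2, "ccp_alpha": 0.0},
    "KNN": {"n_neighbors": 1, "leaf_size": 30, "metric": "minkowski",
            "p": 2, "weights": "uniform"},
    "QDA": {"tol": 0.0001},
    "MLP": {"hidden_layer_sizes": (44, 45), "activation": "relu",
            "max_iter": 200, "solver": "adam"},
    "LR": {"solver": "lbfgs", "C": 1.0, "fit_intercept": True},
}


def build_models():
    """Instantiate one unfitted estimator per table row (requires scikit-learn)."""
    from sklearn.discriminant_analysis import QuadraticDiscriminantAnalysis
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC
    from sklearn.tree import DecisionTreeClassifier

    constructors = {
        "SVM": SVC, "RF": RandomForestClassifier, "DT": DecisionTreeClassifier,
        "KNN": KNeighborsClassifier, "QDA": QuadraticDiscriminantAnalysis,
        "MLP": MLPClassifier, "LR": LogisticRegression,
    }
    return {name: constructors[name](**params) for name, params in HYPERPARAMS.items()}
```

Keeping the settings in a plain dictionary separates the reported configuration from the library that consumes it, so the same values can be logged or reused elsewhere.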

| Learning Rate | Optimizer | Accuracy |
|---|---|---|
| 0.01 | adam | 1 |
| 0.001 | rmsprop | 1 |
| 0.01 | adam | 1 |
| 0.001 | rmsprop | 0.9990 |
| 0.01 | rmsprop | 0.9990 |
| 0.01 | rmsprop | 0.9990 |
| 0.01 | adam | 0.9990 |
| 0.001 | adam | 0.99904 |
| 0.001 | rmsprop | 0.9910 |
| 0.001 | adam | 0.9900 |
| 0.01 | adam | 0.9900 |
| 0.01 | adam | 0.9890 |
| 0.001 | adam | 0.9880 |
| 0.001 | adam | 0.9871 |

| Model | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) | Testing Time (ms) |
|---|---|---|---|---|---|
| SVM | 100 | 99 | 99 | 99 | 187 |
| RF | 100 | 99 | 100 | 99 | 31 |
| DT | 92 | 92 | 92 | 92 | 20 |
| KNN | 100 | 99 | 100 | 99 | 16 |
| QDA | 68 | 68 | 68 | 68 | 40 |
| MLP | 95 | 95 | 95 | 95 | 30 |
| LR | 95 | 95 | 95 | 95 | 10 |
| 2D CNN | 97 | 97 | 97 | 97 | 120 |
| 3D CNN | 100 | 100 | 100 | 100 | 124 |
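
The columns of the table above follow the standard definitions of precision, recall, F1-score, and accuracy. The sketch below computes them from confusion counts for a single positive class and times a prediction call in milliseconds; how the paper averages the per-class scores over its classes is not stated here, and the function names `classification_metrics` and `time_prediction_ms` are hypothetical.

```python
import time


def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, F1 and accuracy (as fractions) for one positive class."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


def time_prediction_ms(predict, samples):
    """Wall-clock time, in milliseconds, of one call to predict(samples)."""
    start = time.perf_counter()
    predict(samples)
    return (time.perf_counter() - start) * 1000.0
```

Multiplying the returned fractions by 100 gives values on the same percentage scale as the table.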

| Model | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) | Testing Time (ms) |
|---|---|---|---|---|---|
| SVM | 86 | 85 | 85 | 85 | 194 |
| RF | 93 | 93 | 93 | 93 | 39 |
| DT | 81 | 82 | 82 | 82 | 25 |
| KNN | 86 | 85 | 85 | 85 | 20 |
| QDA | 47 | 47 | 47 | 47 | 54 |
| MLP | 90 | 90 | 90 | 90 | 32 |
| LR | 93 | 93 | 93 | 93 | 15 |
| 2D CNN | 95 | 94 | 95 | 95 | 12.4 |
| 3D CNN | 98 | 98 | 98 | 98 | 16.9 |

| Model | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) | Testing Time (ms) |
|---|---|---|---|---|---|
| SVM | 83 | 82 | 82 | 82 | 214 |
| RF | 90 | 90 | 90 | 90 | 65 |
| DT | 82 | 82 | 82 | 82 | 53 |
| KNN | 87 | 86 | 86 | 86 | 33 |
| QDA | 42 | 41 | 41 | 41 | 27 |
| MLP | 89 | 88 | 88 | 88 | 41 |
| LR | 89 | 89 | 89 | 89 | 20 |
| 2D CNN | 91 | 89 | 90 | 89 | 19 |
| 3D CNN | 98 | 98 | 98 | 98 | 25 |
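
The paper compares models on detection accuracy and testing time. One possible way to turn the table above into a ranking is sketched below: sort by accuracy first and break ties with the shorter testing time. The tie-break rule and the name `rank_models` are assumptions made for illustration; the accuracy and time values are copied from the table.

```python
# (model, accuracy in %, testing time in ms), copied from the table above.
RESULTS = [
    ("SVM", 82, 214), ("RF", 90, 65), ("DT", 82, 53),
    ("KNN", 86, 33), ("QDA", 41, 27), ("MLP", 88, 41),
    ("LR", 89, 20), ("2D CNN", 89, 19), ("3D CNN", 98, 25),
]


def rank_models(results):
    """Order models by accuracy (descending), then by testing time (ascending)."""
    return sorted(results, key=lambda row: (-row[1], row[2]))


best_model = rank_models(RESULTS)[0][0]
```

Under this rule the 3D CNN ranks first on these values, since it has the highest accuracy in the table.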

| Work | Dataset | Method | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |
|---|---|---|---|---|---|---|
| Maior et al. [22] | DROZY | SVM | - | - | - | 94 |
| Biswal et al. [26] | Collected | CNN | 97.07 | 97.13 | 97.65 | 97.1 |
| Jeon et al. [27] | ETS2 | CNN | 93.9 | 94.74 | 94.18 | 94.2 |
| Gwak et al. [48] | Collected | Ensemble ML | 97.1 | 93.5 | 94.9 | 95.4 |
| Bakheet et al. [49] | NTHU-DDD | Naïve Bayes | - | - | 87.84 | 85.62 |
| Knapik et al. [34] | Thermal Images | CNN | - | - | 87 | - |
| Hemantkumar et al. [33] | Mouth images | Optimization | - | - | - | 84.66 |
| Liu et al. [35] | FDDB | CNN | - | - | - | 96.7 |
| Proposed | DROZY | SVM | 100 | 99 | 99 | 99 |
| Proposed | DROZY | RF | 100 | 99 | 100 | 99 |
| Proposed | DROZY | KNN | 100 | 99 | 100 | 99 |
| Proposed | DROZY | 2D CNN | 97 | 97 | 97 | 97 |
| Proposed | DROZY | 3D CNN | 100 | 100 | 100 | 100 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sedik, A.; Marey, M.; Mostafa, H. An Adaptive Fatigue Detection System Based on 3D CNNs and Ensemble Models. Symmetry 2023, 15, 1274. https://doi.org/10.3390/sym15061274
