Abstract

Heteroscedasticity is often encountered in spatial-data analysis. This paper therefore introduces a new class of heterogeneous spatial autoregressive models in which the variance parameters are allowed to depend on explanatory variables. We consider parameter estimation and variable selection for both the mean and variance models, and propose a unified procedure, based on a double-penalized quasi-maximum likelihood, that selects important variables in both models simultaneously. Under certain regularity conditions, the consistency and the oracle property of the resulting estimators are established. Finally, simulation studies and a real data analysis of the Boston housing data are carried out to illustrate the developed methodology.

1. Introduction

The spatial autoregressive (SAR) model has long been an active research topic in economics and statistics. Theories and methods for estimation and other inference based on linear SAR models and their extensions have been studied in depth. Classical references on linear SAR models include Cliff and Ord [1], Anselin [2], and Anselin and Bera [3]; further results can be found in Xu and Lee [4], Liu et al. [5], Xie et al. [6], Xie et al. [7], and so on. In order to capture nonlinear relationships between the response variable and some explanatory variables, a variety of semiparametric SAR models have recently been proposed and studied in depth. For example, based on a partially linear spatial autoregressive model, Su and Jin [8] developed a profile quasi-maximum likelihood estimation method and discussed the asymptotic properties of the obtained estimators. Du et al. [9] developed partially linear additive spatial autoregressive models and proposed an estimation method that combines spline approximations with instrumental-variables estimation. Cheng and Chen [10] discussed a partially linear single-index spatial autoregressive model and obtained the consistency and asymptotic normality of the proposed estimators under some mild conditions. Other results on semiparametric SAR models can be found in Wei et al. [11], Hu et al. [12], and so on. Previous research on SAR models has focused mainly on the homoskedasticity assumption, namely that the variance of the unobservable error, conditional on the explanatory variables, is constant. It is well known that if the innovations are heteroskedastic, most existing inference methods derived under homoskedasticity can lead to incorrect inference; see Lin and Lee [13]. Therefore, many researchers have sought to relax the homoskedasticity assumption for spatial autoregressive models by allowing a different variance for each unobservable error. For example, Dai et al. [14] developed a Bayesian local-influence analysis for heterogeneous spatial autoregressive models. However, the variance terms in the above-mentioned heterogeneous spatial autoregressive models are assumed fixed and do not depend on the regression variables. Furthermore, in many application fields, such as economics and quality management, modeling the variance itself is of interest, because it helps to identify the factors that affect the variability in the observations. Thus, we propose a class of heterogeneous spatial autoregressive models in which the variance parameters are modeled in terms of covariates.

In addition, many authors have done a lot of research on joint mean and variance models. For example, Wu and Li [15] proposed a variable-selection procedure via a penalized maximum likelihood for the joint mean and dispersion models of the inverse Gaussian distribution. Xu and Zhang [16] discussed a Bayesian estimation for semiparametric joint mean and variance models, based on B-spline approximations of nonparametric components. Zhao et al. [17] studied variable selection for beta-regression models with varying dispersion, where both the mean and the dispersion are modeled by explanatory variables. Li et al. [18] proposed an efficient unified variable selection of the joint location, scale, and skewness models, based on a penalized likelihood method. Zhang et al. [19] developed a Bayesian quantile-regression analysis for semiparametric mixed-effects double-regression models, on the basis of the asymmetric Laplace distribution for the errors.

Variable selection is a central problem in regression analysis. In practice, a large number of variables are often included in the initial analysis, but many of them may be unimportant and should be excluded from the final model in order to improve the accuracy of prediction. When the number of predictors is large, traditional variable-selection methods such as stepwise regression and best-subset selection become computationally infeasible. Therefore, various shrinkage methods have been proposed in recent years and have gained much attention, such as the LASSO (Tibshirani [20]), the adaptive LASSO (Zou [21]), and the SCAD (Fan and Li [22]). Based on these shrinkage methods, variable selection for SAR models (see Liu et al. [5]; Xie et al. [6]; Xie et al. [7]; Luo and Wu [23]) and for other models without spatial dependence (see, for example, Li and Liang [24]; Zhao and Xue [25]; Tian et al. [26]) has been studied extensively. To the best of our knowledge, however, most existing variable-selection methods in spatial-data analysis select only the explanatory variables of the mean model, and little work has been done on selecting the explanatory variables of the variance model.

Therefore, in this paper we study variable selection for heterogeneous spatial autoregressive models (heterogeneous SAR models) based on a penalized quasi-maximum likelihood with different penalty functions. The proposed method simultaneously selects the important explanatory variables in the mean model and in the variance model. Furthermore, we prove that this variable-selection procedure is consistent and that the resulting estimators of the regression coefficients have the oracle property under certain regularity conditions. This means that the penalized estimators work as well as if the subset of true zero coefficients were already known. A simulation study and a real data analysis of the Boston housing price data are used to illustrate the proposed variable-selection method.

The remainder of the paper is organized as follows. Section 2 introduces the new heterogeneous spatial autoregressive models and proposes a unified variable-selection procedure for the joint models via the double-penalized quasi-maximum likelihood method. Section 3 gives the theoretical results for the resulting estimators. The computation of the penalized quasi-maximum likelihood estimator and the choice of the tuning parameters are presented in Section 4. The finite-sample performance of the method is investigated through simulation studies in Section 5. Section 6 gives a real data analysis of the Boston housing price data to illustrate the proposed method. Some conclusions and a brief discussion are given in Section 7. The assumptions and the technical proofs of all the asymptotic results are provided in Appendix A.

2. Variable Selection via Penalized Quasi-Maximum Likelihood

2.1. Heterogeneous SAR Models

The classical spatial autoregressive model has the following form:

$$Y = \rho W Y + X\beta + \varepsilon, \tag{1}$$

where $Y = (y_1, \ldots, y_n)^T$ is an n-dimensional observation vector on the dependent variable; $\rho$ is an unknown spatial parameter; and W is a specified $n \times n$ spatial weight matrix of known constants with zero diagonal elements. Let $X = (x_1, \ldots, x_n)^T$ be an $n \times p$ matrix whose ith row $x_i^T$ is the observation of the explanatory variables, and let $\beta$ be a $p \times 1$ vector of unknown regression parameters in the mean model; $\varepsilon = (\varepsilon_1, \ldots, \varepsilon_n)^T$ is an n-dimensional vector of independent identically distributed disturbances with zero mean and finite variance $\sigma^2$.

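Furthermore, similar to Xu and Zhang [16], we consider variance heterogeneity in the model and assume an explicit variance model related to other explanatory variables, that is:

$$\sigma_i^2 = h(z_i^T\gamma), \quad i = 1, \ldots, n, \tag{2}$$

where $z_i$ is the observation of the explanatory variables associated with the variance of $\varepsilon_i$ and $\gamma$ is a $q \times 1$ vector of regression parameters in the variance model. Some components of $z_i$ might coincide with some of the $x_i$'s. In addition, $h(\cdot)$ is a known function; for the identifiability of the model, we always assume that $h(\cdot)$ is a monotone function with $h(\cdot) > 0$, which takes into account the positiveness of the variance. For example, one can take $h(x) = \exp(x)$ in general. This paper therefore considers the following heterogeneous SAR model:

$$\begin{cases} Y = \rho W Y + X\beta + \varepsilon, \\ \varepsilon_i \sim (0, \sigma_i^2) \ \text{independently}, \\ \sigma_i^2 = h(z_i^T\gamma), \quad i = 1, \ldots, n. \end{cases} \tag{3}$$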

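According to the idea of the quasi-maximum likelihood estimators (Lee [27]), the log-likelihood function of the model (3) is

$$\ell(\beta, \gamma, \rho) = -\frac{n}{2}\log(2\pi) - \frac{1}{2}\sum_{i=1}^{n}\log\sigma_i^2 + \log|S(\rho)| - \frac{1}{2}\{S(\rho)Y - X\beta\}^T\Sigma^{-1}\{S(\rho)Y - X\beta\}, \tag{4}$$

where $S(\rho) = I_n - \rho W$, $\Sigma = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_n^2)$, and $I_n$ is an $n \times n$ identity matrix.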
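As an illustration, the quasi-log-likelihood of a heterogeneous SAR model can be evaluated numerically. The sketch below is not the authors' implementation: it assumes the Gaussian quasi-likelihood form with $S(\rho) = I_n - \rho W$ and the log-linear variance function $h(u) = \exp(u)$, and the function name `hetero_sar_loglik` is hypothetical.

```python
import numpy as np

def hetero_sar_loglik(rho, beta, gamma, Y, X, Z, W):
    """Gaussian quasi-log-likelihood of a heterogeneous SAR model.

    Assumption: h(u) = exp(u), so that log(sigma_i^2) = z_i' gamma.
    """
    n = Y.shape[0]
    S = np.eye(n) - rho * W                        # S(rho) = I_n - rho * W
    sign, logabsdet = np.linalg.slogdet(S)         # numerically stable log|det S|
    if sign == 0:
        return -np.inf                             # S(rho) is singular
    log_sigma2 = Z @ gamma                         # log-variances under h = exp
    resid = S @ Y - X @ beta                       # S(rho) Y - X beta
    quad = np.sum(resid ** 2 / np.exp(log_sigma2)) # variance-weighted sum of squares
    return (-0.5 * n * np.log(2.0 * np.pi)
            - 0.5 * np.sum(log_sigma2)
            + logabsdet
            - 0.5 * quad)
```

Using `slogdet` rather than `det` avoids overflow or underflow in the Jacobian term when n is large.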

2.2. Penalized Quasi-Maximum Likelihood

In order to obtain the desired sparsity in the resulting estimators, we propose the penalized quasi-maximum likelihood

For notational simplicity, we rewrite (5) in the following

where with , and is a given-penalty function with the tuning parameters . The data-driven criteria, such as cross validation (CV), generalized cross-validation (GCV), or the BIC-type tuning-parameter selector (see Wang et al. [28]), can be used to choose the tuning parameters, which is described in Section 4. Here we use the same penalty function for all the regression coefficients but with different tuning parameters , for the mean parameters and the variance parameters, respectively. Note that the penalty functions and tuning parameters are not necessarily the same for all the parameters. For example, we wish to keep some important variables in the final model and, therefore, do not want to penalize their coefficients. In this paper, we mainly use the smoothly clipped absolute deviation (SCAD) penalty, with a first derivative that satisfies

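$$p'_\lambda(t) = \lambda\left\{ I(t \le \lambda) + \frac{(a\lambda - t)_+}{(a - 1)\lambda}\, I(t > \lambda) \right\}, \quad t > 0,$$

in which $a = 3.7$ is taken in our work. The details about SCAD can be found in Fan and Li [22].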
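To make the shape of the SCAD penalty concrete, the following minimal sketch evaluates its first derivative, assuming the standard form from Fan and Li [22], $p'_\lambda(t) = \lambda\{I(t \le \lambda) + (a\lambda - t)_+ / ((a - 1)\lambda)\, I(t > \lambda)\}$ with $a = 3.7$. The function name `scad_derivative` is hypothetical.

```python
def scad_derivative(t, lam, a=3.7):
    """First derivative p'_lam(t) of the SCAD penalty, for t >= 0 and lam > 0."""
    if t <= lam:
        return lam                            # constant LASSO-like slope near zero
    # lam * (a*lam - t)_+ / ((a - 1) * lam): tapers linearly to 0 at t = a * lam
    return max(a * lam - t, 0.0) / (a - 1.0)


for t in (0.5, 2.0, 4.0):
    print(t, scad_derivative(t, lam=1.0))
```

Small coefficients are penalized at the constant rate $\lambda$, while coefficients larger than $a\lambda$ receive no penalty at all, which is what keeps large effects nearly unbiased.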

The penalized quasi-maximum likelihood estimator of is denoted by , which maximizes the function in (6) with respect to . The technical details and an algorithm for calculating the penalized quasi-maximum likelihood estimator are provided in Section 4.

3. Asymptotic Properties

We next study the asymptotic properties of the resulting penalized quasi-maximum likelihood estimators. We first introduce some notations. Let denote the true value of . Furthermore, let For ease of presentation and without loss of generality, it is assumed that is an nonzero regression coefficient and that is an vector of the zero-valued regression coefficient. Let

and

Theorem 1.

Suppose that , and as Under the conditions – in Appendix A, with probability tending to 1 there must exist a local maximizer of the penalized quasi-maximum likelihood function in (6), such that is a -consistent estimator of .

The following theorem gives the asymptotic normality of . Let

where is the jth component of .

Furthermore, denote

where ,

,

, and , thus, is called as the average Hessian matrix (the information matrix when are normal). In addition, is a symmetric matrix, is an n-dimensional column vector of ones, , is the ith row of , and is the th entry of . Besides, represents a diagonal matrix with C, where C is an arbitrary vector.

Theorem 2.

Suppose that the penalty function satisfies

and under the same mild conditions as these given in Theorem 1, if and as and , then the -consistent estimator in Theorem 1 must satisfy

- (i)

- with probability tending to 1.

- (ii)

where , , and are the first super-left submatrix of , and . In addition, “” denotes convergence in distribution.

4. Computation

4.1. Algorithm

Since the penalized quasi-maximum likelihood function in (6) is irregular at the origin, the commonly used gradient method is not applicable. Instead, an iterative algorithm is developed based on the local quadratic approximation of the penalty function, as in Fan and Li [22].

Firstly, note that the first two derivatives of the log-likelihood function are continuous. Around a fixed point , we approximate the log-likelihood function by

Moreover, given an initial value , the penalty function can be approximated by a quadratic function

Therefore, we can approximate the penalized quasi-maximum likelihood function (6) by the following formula

where

and Accordingly, the quadratic maximization problem for leads to a solution iterated by

Secondly, based on the log-likelihood function (4) we can obtain the score functions

where , , . Denote

where , , , ,

, , here, . Finally, we give the following Algorithm 1, which can summarize the computation of penalized quasi-maximum likelihood estimators of the parameters in the heterogeneous SAR Models.

Algorithm 1

Step 1. Take the ordinary quasi-maximum likelihood estimators (without penalty) of the parameters as their initial values.

Step 2. Given the current values of the parameters, update them by

Step 3. Repeat Step 2 above until , where is a given small number, such as .
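The three steps of Algorithm 1 follow the usual local quadratic approximation (LQA) pattern. The sketch below is a simplified illustration, not the authors' code: it applies the same pattern to a plain Gaussian linear model (no spatial lag, constant variance) so that the update in Step 2 has a closed form. The function names, the toy data, the tolerance, and the final thresholding at `1e-4` are all assumptions made for the example.

```python
import numpy as np

def scad_derivative(t, lam, a=3.7):
    """Vectorized SCAD derivative p'_lam(t) for t >= 0."""
    t = np.asarray(t, dtype=float)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1.0))

def lqa_scad_linear(X, y, lam, tol=1e-6, max_iter=200, eps=1e-8):
    """LQA iterations for 0.5*||y - X theta||^2 + n * sum_j p_lam(|theta_j|)."""
    n = X.shape[0]
    theta = np.linalg.lstsq(X, y, rcond=None)[0]        # Step 1: unpenalized start
    for _ in range(max_iter):
        abs_theta = np.maximum(np.abs(theta), eps)      # avoid division by zero
        # Diagonal of the LQA weight matrix: p'_lam(|theta_j|) / |theta_j|
        weights = scad_derivative(abs_theta, lam) / abs_theta
        # Step 2: maximizer of the quadratic approximation (closed form here)
        theta_new = np.linalg.solve(X.T @ X + n * np.diag(weights), X.T @ y)
        converged = np.linalg.norm(theta_new - theta) < tol  # Step 3: stopping rule
        theta = theta_new
        if converged:
            break
    theta[np.abs(theta) < 1e-4] = 0.0                   # report tiny values as zeros
    return theta

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 4))
y = X @ np.array([2.0, 0.0, -1.5, 0.0]) + 0.5 * rng.standard_normal(200)
theta_hat = lqa_scad_linear(X, y, lam=0.2)
print(theta_hat)
```

For the heterogeneous SAR likelihood, the closed-form solve in Step 2 would be replaced by a Newton-type step built from the score functions and Hessian-type matrices of this section.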

4.2. Choosing the Tuning Parameters

The penalty function involves the tuning parameters $\lambda_{1j}$ ($j = 1, \ldots, p$) and $\lambda_{2k}$ ($k = 1, \ldots, q$) that control the amount of the penalty. Many selection criteria, such as CV, GCV, and BIC, can be used to select the tuning parameters. Wang et al. [28] suggested using a BIC for the SCAD estimator in linear models and partially linear models and proved its model-selection consistency, i.e., the optimal parameter chosen by the BIC can identify the true model with probability tending to one. Hence, their suggestion is adopted in this paper. Nevertheless, in real applications, simultaneously selecting a total of $p + q$ shrinkage parameters is challenging. To bypass this difficulty, we follow the idea of Li et al. [18] and simplify the tuning parameters as follows:

- (i) $\lambda_{1j} = \lambda / |\hat{\beta}_j^{0}|$, $j = 1, \ldots, p$;

- (ii) $\lambda_{2k} = \lambda / |\hat{\gamma}_k^{0}|$, $k = 1, \ldots, q$,

where $\hat{\beta}_j^{0}$ and $\hat{\gamma}_k^{0}$ are, respectively, the jth element and kth element of the unpenalized estimates $\hat{\beta}^{0}$ and $\hat{\gamma}^{0}$. Consequently, the original $(p + q)$-dimensional problem about $(\lambda_{1j}, \lambda_{2k})$ becomes a one-dimensional problem about $\lambda$, and $\lambda$ can be selected according to the following BIC-type criterion

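$$\mathrm{BIC}_\lambda = -\frac{2}{n}\,\ell(\hat{\theta}_\lambda) + df_\lambda \times \frac{\log n}{n},$$

where $df_\lambda$ is simply the number of nonzero coefficients of $\hat{\theta}_\lambda$, and $\hat{\theta}_\lambda$ is the estimate of $\theta$ for a given $\lambda$.

The tuning parameter can be obtained as

$$\hat{\lambda} = \arg\min_{\lambda} \mathrm{BIC}_\lambda.$$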

From our simulation studies, we found that this method works well.
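The one-dimensional search over the tuning parameter can be sketched as a simple grid search. This is an illustration under assumptions: the BIC-type criterion is taken to be $-2\ell(\hat{\theta}_\lambda)/n + df_\lambda \log(n)/n$, and `fit` is a hypothetical user-supplied callable that returns the penalized estimate and its log-likelihood for a given value of the tuning parameter.

```python
import math

def bic_value(loglik, n_nonzero, n):
    """BIC-type criterion (assumed form): -2*loglik/n + df*log(n)/n."""
    return -2.0 * loglik / n + n_nonzero * math.log(n) / n

def select_lambda(lambdas, fit, n):
    """Grid search for the tuning parameter.

    `fit(lam)` is a hypothetical callable returning (theta_hat, loglik).
    """
    best_lam, best_bic = None, math.inf
    for lam in lambdas:
        theta_hat, loglik = fit(lam)
        df = sum(1 for v in theta_hat if v != 0)   # number of nonzero coefficients
        bic = bic_value(loglik, df, n)
        if bic < best_bic:
            best_lam, best_bic = lam, bic
    return best_lam, best_bic
```

In practice the grid is usually taken on a log scale, and each call to `fit` can be warm-started from the estimate at the previous grid point.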

5. Simulation Study

In this section, we conduct a simulation study to assess the finite-sample performance of the proposed procedure. We set $m = 5$ and $R = 30, 40, 60$, so that the sample size $n = Rm$ is 150, 200, and 300. X and Z are, respectively, generated from multivariate normal distribution with zero mean vector and covariance matrix being where . Besides, let the spatial parameter , which represents different spatial dependencies; and the structure of the variance model is with . In these simulations, the weight matrix is taken to be $W = I_R \otimes B_m$ with $B_m = (m - 1)^{-1}(1_m 1_m^T - I_m)$, where $1_m$ is an m-dimensional vector with all elements being 1 and $\otimes$ denotes the Kronecker product.
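The simulation design above can be sketched as follows. The block-diagonal form $W = I_R \otimes B_m$ with $B_m = (1_m 1_m^T - I_m)/(m - 1)$ is an assumption (it is the usual group-interaction weight matrix), as is the variance function $h(u) = \exp(u)$. The coefficient values, the spatial parameter, and the independent standard normal covariates used in the demonstration are placeholders, not the values used in the paper.

```python
import numpy as np

def block_weight_matrix(R, m):
    """W = I_R (Kronecker) B_m with B_m = (1_m 1_m' - I_m) / (m - 1)."""
    B = (np.ones((m, m)) - np.eye(m)) / (m - 1)
    return np.kron(np.eye(R), B)

def simulate_hetero_sar(W, X, Z, beta, gamma, rho, rng):
    """Draw Y from Y = rho*W*Y + X*beta + eps with Var(eps_i) = exp(z_i' gamma)."""
    n = W.shape[0]
    sd = np.exp(0.5 * (Z @ gamma))                 # standard deviations under h = exp
    eps = sd * rng.standard_normal(n)
    # Reduced form: Y = (I_n - rho*W)^{-1} (X beta + eps)
    return np.linalg.solve(np.eye(n) - rho * W, X @ beta + eps)

rng = np.random.default_rng(1)
W = block_weight_matrix(R=30, m=5)                 # n = R * m = 150
X = rng.standard_normal((150, 3))                  # placeholder covariates
Z = rng.standard_normal((150, 2))
Y = simulate_hetero_sar(W, X, Z, beta=np.array([1.0, 0.0, -1.0]),
                        gamma=np.array([0.5, 0.0]), rho=0.5, rng=rng)
```

Each row of this W sums to one and the diagonal is zero, so every unit is influenced equally by the other $m - 1$ members of its group.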

We generated random samples of size n = 150, 200, and 300, respectively. For each random sample, the proposed variable-selection method, based on the penalized quasi-maximum likelihood with the SCAD and ALASSO penalty functions, is considered. The unknown tuning parameters of the penalty function are chosen by the BIC criterion. The average number of estimated zero coefficients over 500 simulation runs is reported in Tables 1–3. In the tables, “C” denotes the average number of zero regression coefficients that are correctly estimated as zero, and “IC” denotes the average number of nonzero regression coefficients that are erroneously set to zero. The performance of estimators , and is assessed by the mean square error (MSE), defined as

Table 1.

Variable selections for and under different sample sizes when .

Table 2.

Variable selections for and under different sample sizes when .

Table 3.

Variable selections for and under different sample sizes when .

From Tables 1–3, we can make the following observations: (i) As n increases, the performance of the variable-selection procedures improves. For example, the values in the column labeled ‘MSE’ of and become smaller, and the values in the column labeled ‘C’ become closer to the true number of zero regression coefficients in the models. (ii) The results of variable selection are similar across the different spatial parameters. (iii) The two penalty functions (SCAD and ALASSO) used in this paper perform almost equally well. (iv) Under the same circumstances, the ‘MSE’ of the mean parameters is smaller than that of the variance parameters, which is common in parametric estimation because lower-order moments are easier to estimate than higher-order moments.
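The “C” and “IC” columns discussed above can be computed from the simulation output as sketched below. The function names are hypothetical, and the sketch assumes that each replication returns a coefficient vector in which excluded variables are exactly zero.

```python
def selection_counts(theta_hat, theta_true):
    """Return (C, IC) for one replication.

    C  = number of true zero coefficients estimated as zero.
    IC = number of true nonzero coefficients estimated as zero.
    """
    pairs = list(zip(theta_hat, theta_true))
    C = sum(1 for est, true in pairs if true == 0 and est == 0)
    IC = sum(1 for est, true in pairs if true != 0 and est == 0)
    return C, IC

def average_counts(estimates, theta_true):
    """Average C and IC over a list of replications."""
    counts = [selection_counts(est, theta_true) for est in estimates]
    runs = len(counts)
    return (sum(c for c, _ in counts) / runs,
            sum(ic for _, ic in counts) / runs)
```

An ideal procedure has an average “C” equal to the true number of zero coefficients and an average “IC” equal to zero.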

6. Real Data Analysis

In this section, the proposed variable selection method is used to analyze the Boston housing price data that has been analyzed by many authors, for example, Pace and Gilley [29], Su and Yang [30], and so on. The database can be found in the spdep library of R. The dataset contains 14 variables with 506 observations, and a detailed description of these variables is listed in Table 4.

Table 4.

Description of the variables in Boston housing data.

This dataset was used by Pace and Gilley [29] on the basis of spatial econometric models, and longitude–latitude coordinates for the census tracts were added to the dataset. In this paper, we take MEDV as the response variable, and the other 13 variables in Table 4 are treated as explanatory variables. Similarly to Pace and Gilley [29] and Su and Yang [30], we use the Euclidean distances in terms of longitude and latitude to generate the weight matrix $W$, where

is the Euclidean distance and is the threshold distance, which is set to be 0.05 as in Su and Yang [30]. Thus, a spatial weight matrix is used with 19.1% nonzero elements. In addition, Z-variables in the variance model are taken to be the same as X-variables in the mean model. Then, the heterogeneous SAR models are considered here as follows:

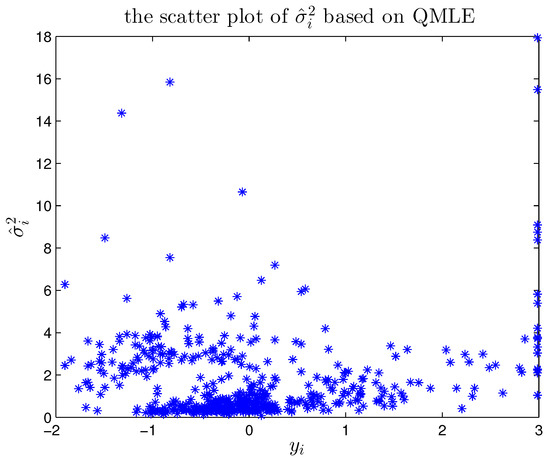

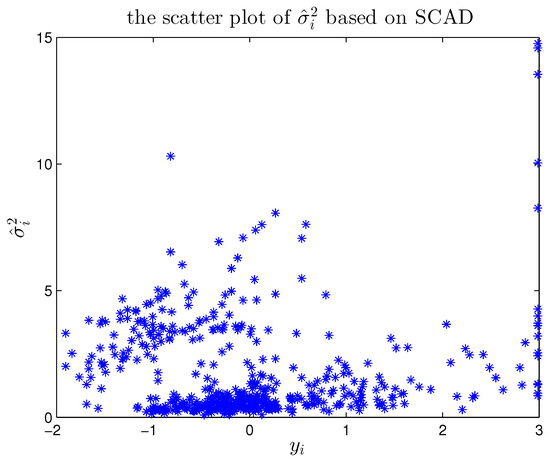

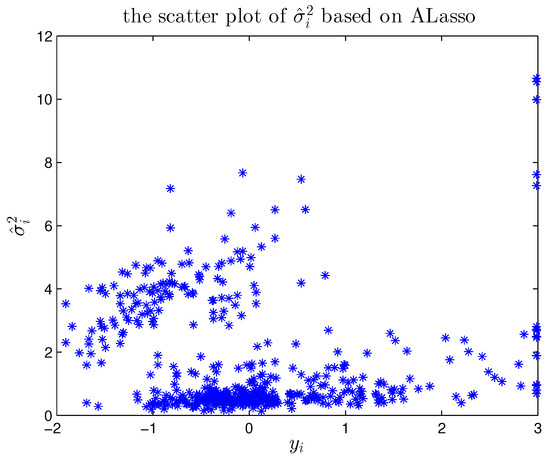
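The distance-threshold construction of the spatial weight matrix described earlier in this section can be sketched as follows. The exact weighting formula is not reproduced here, so the sketch assumes the simplest choice: a binary neighbor indicator for pairs closer than the threshold, followed by row normalization. The function names are hypothetical.

```python
import math

def threshold_weight_matrix(coords, d0):
    """Row-normalized weights: units i != j are neighbors if their distance <= d0.

    Assumption: binary indicator weights before row normalization.
    """
    n = len(coords)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and math.dist(coords[i], coords[j]) <= d0:
                W[i][j] = 1.0
        row_sum = sum(W[i])
        if row_sum > 0:                        # isolated units keep an all-zero row
            W[i] = [w / row_sum for w in W[i]]
    return W

def nonzero_share(W):
    """Fraction of nonzero entries in W."""
    n = len(W)
    return sum(1 for row in W for w in row if w != 0) / (n * n)
```

With longitude and latitude pairs as `coords` and `d0 = 0.05`, `nonzero_share` reports the sparsity of the resulting matrix, which can be compared with the share of nonzero elements quoted in the text.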

The ordinary quasi-maximum likelihood estimators (QMLE) and the penalized quasi-maximum likelihood estimators using the SCAD and ALASSO penalty functions are all considered. The tuning parameter was selected by the BIC. The estimated spatial parameter and the regression coefficients obtained by the different estimation methods are presented in Table 5. From Table 5, we can see the following. (i) As expected, the estimates of the spatial parameter are very close to each other, and the other estimates differ slightly among the methods. (ii) Both the SCAD and ALASSO methods eliminate many unimportant variables in the joint mean and variance models. Concretely, the ALASSO method selects the same variables as the SCAD method in the mean model, and the SCAD method selects two more variables than the ALASSO method in the variance model. (iii) The important explanatory variables selected by the proposed methods are basically consistent with existing research results. For example, the regression coefficient of the pupil–teacher ratio is negative in the mean model, which indicates that the housing price decreases as the pupil–teacher ratio increases. In addition, based on the estimates of the variance-model coefficients, we can obtain the estimated variances under the different methods; their scatter plots are shown in Figures 1–3, which indicate that modeling the heteroscedasticity for this dataset is reasonable.

Table 5.

Penalized quasi-maximum likelihood estimators for and .

Figure 1.

The scatter plot of based on the ordinary quasi-maximum likelihood estimators (QMLE).

Figure 2.

The scatter plot of based on the penalized quasi-maximum likelihood using SCAD.

Figure 3.

The scatter plot of based on the penalized quasi-maximum likelihood using ALASSO.

7. Conclusions and Discussion

Within the framework of heterogeneous spatial autoregressive models, we proposed a variable-selection method based on a penalized quasi-maximum likelihood approach. Like the mean, the variance parameters may depend on various explanatory variables of interest, so simultaneous variable selection for the mean and variance models is important to avoid modeling bias and to reduce model complexity. We have proven that the proposed penalized quasi-maximum likelihood estimators of the parameters in the mean and variance models are consistent and asymptotically normal under some mild conditions. Simulation studies and a real data analysis of the Boston housing data were conducted to illustrate the proposed methodology. The results show that the proposed variable-selection method performs well in finite samples.

Several interesting issues merit further research. For example, (i) the model flexibility could be increased by introducing nonparametric functions into the spatial autoregressive model, and variable selection could then be studied for both the parametric and the nonparametric components; (ii) the heterogeneous spatial autoregressive models could be extended to the case where the response variables are missing under different missingness mechanisms; and (iii) in the penalized estimation, the spatial parameter could be penalized in the same way as the regression coefficients, which would help to judge directly whether the analyzed data have a spatial structure. These topics are all worthy of further study.

Author Contributions

Conceptualization, R.T. and D.X.; methodology, R.T. and D.X.; software, M.X. and D.X.; data curation, R.T. and D.X.; formal analysis, M.X. and D.X.; writing—original draft, R.T. and D.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Statistical Science Research Project (2021LY061), the Startup Foundation for Talents at Hangzhou Normal University (2019QDL039), and the Statistical Science Research Project of Zhejiang Province (in 2022).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorems

To prove the theorems in the paper, we require the following regular conditions:

- C1.

- The are independent with and . The moment exists for some .

- C2.

- The elements and are in W, where as .

- C3.

- The matrix S is a nonsingular matrix.

- C4.

- The sequences of matrices and are uniformly bounded in both row and column sums.

- C5.

- The and exist and are nonsingular. The elements of X and Z are uniformly bounded constants for all n.

- C6.

- are uniformly bounded in row or column sums, uniformly in in a closed subset of . The true is an interior point of .

- C7.

- The exists and is a nonsingular matrix.

- C8.

- The exists.

- C9.

- The third derivatives exist for all in an open set that contains the true parameter point . Furthermore, there exist functions such that for all , where for .

- C10.

- The penalty function satisfies

Remarks: Conditions 1–8 are sufficient for the global identification, consistency, and asymptotic normality of the QMLE of model (3); they are similar to the conditions provided by Lee [27] and Liu et al. [5]. Concretely, Condition 1 is needed to apply the central-limit theorem of Kelejian and Prucha [31]. Condition 2 describes the features of the weight matrix. If is a bounded sequence, Condition 2 holds. In Case’s model (Case [32]), Condition 2 is still satisfied although might diverge to infinity. Condition 3 guarantees the existence of the mean and variance of the dependent variable. Condition 4 implies that the variance of Y is bounded as n tends to infinity; see Kelejian and Prucha [31] as well as Lee [27]. Condition 5 excludes multicollinearity among the regressors in X and Z. For convenience of analysis, we assume that the regressors are uniformly bounded. If not, they can be replaced by stochastic regressors with certain finite-moment conditions (Lee [27]). Condition 6 is needed to deal with the nonlinearity of in the log-likelihood function. Conditions 7–8 are used for the asymptotic normality of the QMLE. Condition 9 plays an important role in the Taylor expansion of related functions and is similar to condition (C) provided by Fan and Li [22]. Condition 10 is an assumption on the penalty function.

In order to prove Theorems 1 and 2, we need for the log-likelihood function to have several properties, which are stated in the following Lemmas.

Lemma A1.

Suppose that Conditions 1–9 hold, then we can obtain

Proof of Lemma A1.

By calculating the first-order partial derivatives of the log-likelihood function at , we obtain

The variance of is

Thus, . When the elements of X and Z are uniformly bounded for all n, it is obvious that . In addition, by some elementary calculations, we have the variance of is

where , therefore, Thus, the proof of the Lemma A1 is completed. □

Lemma A2.

If Conditions 1–8 hold, then

Proof of Lemma A2.

The proof of Lemma A2 is similar to the proof of Theorem 3.2 (Lee [27]), so we omit the details. □

Proof of Theorem 1.

Let . Similar to the proof of Theorem 1 in Fan and Li [22], we just need to prove that for any given , there exists a large constant C, such that

Note that . By using the Taylor expansion of the log-likelihood function, we have

where is the number of dimensions of . It follows from Lemma A1 that

Under Condition 8,

In addition,

Furthermore, by choosing a sufficiently large C, and are dominated by uniformly in . Hence, (A1) holds, which implies the proof of Theorem 1 is completed. □

Proof of Theorem 2.

We first prove part (i). According to Theorem 1, it is sufficient to show that, for any that satisfies and some given small , when , with probability tending to 1, we have

and

By the Taylor expansion, it is easy to prove that

where lies between and . From Lemmas A1–A2 and Condition 9, we obtain

When , we have

Note that and . The sign of the derivative is determined by that of for sufficiently large n. This shows that (A2) and (A3) hold, which completes the proof of part (i).

Next, we prove part (ii). By Theorem 1, there is a consistent local maximizer of , denoted as , which satisfies

For , note that

Hence, we have

where is the lth element of .

Let , , then

Furthermore, by using the central-limit theorem for linear-quadratic forms of Kelejian and Prucha [31], we have

Therefore, according to Slutsky’s theorem, we obtain

The proof of Theorem 2 is, hence, completed. □

References

1. Cliff, A.; Ord, J.K. Spatial Autocorrelation; Pion: London, UK, 1973.

2. Anselin, L. Spatial Econometrics: Methods and Models; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1988.

3. Anselin, L.; Bera, A.K. Spatial Dependence in Linear Regression Models with an Introduction to Spatial Econometrics. In Handbook of Applied Economic Statistics; Ullah, A., Giles, D.E.A., Eds.; Marcel Dekker: New York, NY, USA, 1998.

4. Xu, X.B.; Lee, L.F. A spatial autoregressive model with a nonlinear transformation of the dependent variable. J. Econom. 2015, 186, 1–18.

5. Liu, X.; Chen, J.B.; Cheng, S.L. A penalized quasi-maximum likelihood method for variable selection in the spatial autoregressive model. Spat. Stat. 2018, 25, 86–104.

6. Xie, L.; Wang, X.R.; Cheng, W.H.; Tang, T. Variable selection for spatial autoregressive models. Commun. Stat.-Theory Methods 2021, 50, 1325–1340.

7. Xie, T.F.; Cao, R.Y.; Du, J. Variable selection for spatial autoregressive models with a diverging number of parameters. Stat. Pap. 2020, 61, 1125–1145.

8. Su, L.J.; Jin, S.N. Profile quasi-maximum likelihood estimation of partially linear spatial autoregressive models. J. Econom. 2010, 157, 18–33.

9. Du, J.; Sun, X.Q.; Cao, R.Y.; Zhang, Z.Z. Statistical inference for partially linear additive spatial autoregressive models. Spat. Stat. 2018, 25, 52–67.

10. Cheng, S.L.; Chen, J.B. Estimation of partially linear single-index spatial autoregressive model. Stat. Pap. 2021, 62, 485–531.

11. Wei, C.H.; Guo, S.; Zhai, S.F. Statistical inference of partially linear varying coefficient spatial autoregressive models. Econ. Model. 2017, 64, 553–559.

12. Hu, Y.P.; Wu, S.Y.; Feng, S.Y.; Jin, J.L. Estimation in Partial Functional Linear Spatial Autoregressive Model. Mathematics 2020, 8, 1680.

13. Lin, X.; Lee, L.F. GMM estimation of spatial autoregressive models with unknown heteroskedasticity. J. Econom. 2010, 157, 34–52.

14. Dai, X.W.; Jin, L.B.; Tian, M.Z.; Shi, L. Bayesian Local Influence for Spatial Autoregressive Models with Heteroscedasticity. Stat. Pap. 2019, 60, 1423–1446.

15. Wu, L.C.; Li, H.Q. Variable selection for joint mean and dispersion models of the inverse Gaussian distribution. Metrika 2012, 75, 795–808.

16. Xu, D.K.; Zhang, Z.Z. A semiparametric Bayesian approach to joint mean and variance models. Stat. Probab. Lett. 2013, 83, 1624–1631.

17. Zhao, W.H.; Zhang, R.Q.; Lv, Y.Z.; Liu, J.C. Variable selection for varying dispersion beta regression model. J. Appl. Stat. 2014, 41, 95–108.

18. Li, H.Q.; Wu, L.C.; Ma, T. Variable selection in joint location, scale and skewness models of the skew-normal distribution. J. Syst. Sci. Complex. 2017, 30, 694–709.

19. Zhang, D.; Wu, L.C.; Ye, K.Y.; Wang, M. Bayesian quantile semiparametric mixed-effects double regression models. Stat. Theory Relat. Fields 2021, 5, 303–315.

20. Tibshirani, R. Regression shrinkage and selection via the LASSO. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

21. Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

22. Fan, J.Q.; Li, R.Z. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

23. Luo, G.; Wu, M. Variable selection for semiparametric varying-coefficient spatial autoregressive models with a diverging number of parameters. Commun. Stat.-Theory Methods 2021, 50, 2062–2079.

24. Li, R.; Liang, H. Variable selection in semiparametric regression modeling. Ann. Stat. 2008, 36, 261–286.

25. Zhao, P.X.; Xue, L.G. Variable selection for semiparametric varying coefficient partially linear errors-in-variables models. J. Multivar. Anal. 2010, 101, 1872–1883.

26. Tian, R.Q.; Xue, L.G.; Liu, C.L. Penalized quadratic inference functions for semiparametric varying coefficient partially linear models with longitudinal data. J. Multivar. Anal. 2014, 132, 94–110.

27. Lee, L.F. Asymptotic distributions of quasi-maximum likelihood estimators for spatial autoregressive models. Econometrica 2004, 72, 1899–1925.

28. Wang, H.; Li, R.; Tsai, C. Tuning parameter selectors for the smoothly clipped absolute deviation method. Biometrika 2007, 94, 553–568.

29. Pace, R.K.; Gilley, O.W. Using the spatial configuration of the data to improve estimation. J. Real Estate Financ. Econ. 1997, 14, 333–340.

30. Su, L.; Yang, Z. Instrumental Variable Quantile Estimation of Spatial Autoregressive Models; Working Paper; Singapore Management University: Singapore, 2009.

31. Kelejian, H.H.; Prucha, I.R. On the asymptotic distribution of the Moran I test statistic with applications. J. Econom. 2001, 104, 219–257.

32. Case, A.C. Spatial patterns in household demand. Econometrica 1991, 59, 953–965.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).