Simple Summary

To improve cancer classification performance for high-dimensional microarray datasets, this work proposes combining filter and differential evolutionary (DE) algorithm feature selection techniques. By scoring genes or features of high-dimensional microarray datasets by some common filter methods, we keep only the highest-ranked features and eliminate superfluous and irrelevant ones to decrease the dimensionality of the microarray datasets. Then, the genes or features of the microarray datasets are optimized further by DE, producing noticeably better classification results. This could lead to outstanding improvement in the cancer classification using only less features of the microarray datasets.

Abstract

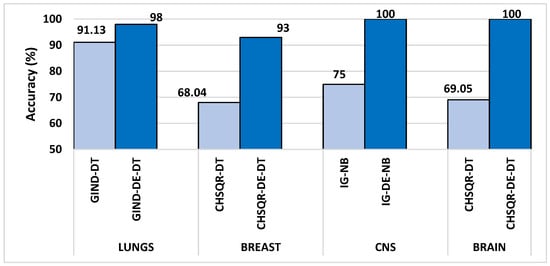

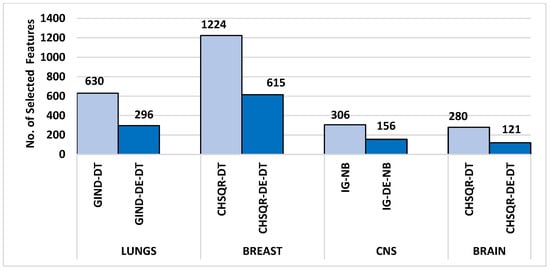

Background: In recent years, microarray datasets have been used to store information about human genes and methods used to express the genes in order to successfully diagnose cancer disease in the early stages. However, most of the microarray datasets typically contain thousands of redundant, irrelevant, and noisy genes, which raises a great challenge for effectively applying the machine learning algorithms to these high-dimensional microarray datasets. Methods: To address this challenge, this paper introduces a proposed hybrid filter and differential evolution-based feature selection to choose only the most influential genes or features of high-dimensional microarray datasets to improve cancer diagnoses and classification. The proposed approach is a two-phase hybrid feature selection model constructed using selecting the top-ranked features by some popular filter feature selection methods and then further identifying the most optimal features conducted by differential evolution (DE) optimization. Accordingly, some popular machine learning algorithms are trained using the final training microarray datasets with only the best features in order to produce outstanding cancer classification results. Four high-dimensional cancerous microarray datasets were used in this study to evaluate the proposed method, which are Breast, Lung, Central Nervous System (CNS), and Brain cancer datasets. Results: The experimental results demonstrate that the classification accuracy results achieved by the proposed hybrid filter-DE over filter methods increased to 100%, 100%, 93%, and 98% on Brain, CNS, Breast and Lung, respectively. Furthermore, applying the suggested DE-based feature selection contributed to removing around 50% of the features selected by using the filter methods for these four cancerous microarray datasets. The average improvement percentages of accuracy achieved by the proposed methods were up to 42.47%, 57.45%, 16.28% and 43.57% compared to the previous works that are 41.43%, 53.66%, 17.53%, 61.70% on Brain, CNS, Lung and Breast datasets, respectively. Conclusions: Compared to the previous works, the proposed methods accomplished better improvement percentages on Brain and CNS datasets, comparable improvement percentages on Lung dataset, and less improvement percentages on Breast dataset.

1. Introduction

Cancer research is widely acknowledged as a highly promising domain for using machine learning. Extensive endeavours have been undertaken to explore prospective approaches for detecting and treating cancer [1].

Cancer is a condition that exhibits uncontrolled cellular proliferation and results as a growth of a tumor in the form of mass or lump. Lung, colon, breast, central nervous system (CNS), liver, kidney, prostate, and brain cancer are among the various types of cancer that can occur. In this research study we have examined four distinct types of cancer dataset: Lung, Breast, Brain, and Central Nervous System. Lung cancer is a prevalent and mortal cancer worldwide [2]. It can arise in the primary airway, specifically within the lung tissue. The outcome is the unregulated proliferation and growth of specific lung cells. Respiratory disorders, including emphysema, are linked to an increased risk of lung cancer development. Breast cancer is one of the most invasive malignancies, predominantly affecting women. It is considered the most severe cancer following lung cancer due to the elevated mortality rate among women [3,4]. The rapid development of abnormal brain cells that is indicative of a brain tumor [5,6,7] is a significant health concern for adults, as it can result in severe impairment of organ function and even mortality. A malignant brain tumour rapidly grows and extends to adjacent brain regions. The Central Nervous System (CNS), consisting of the brain and spinal cord, is responsible for numerous biological functions. Spinal cord compression and spinal instability often involve the vertebral and spinal epidural spaces as common sites for cancer metastases. Metastases represent the most common type of CNS tumour in adults [8].

Cancer is regarded as one of the primary causes of death. In order to preserve the lives of patients, advanced technologies such as artificial intelligence and machine learning are used to detect cancer at an early stage and accurately predict its type. The cancer diagnosis is performed by employing several medical datasets, which encompass microarray gene expression data, also known as the microarray dataset. Microarray technology offers unique experimental capabilities that have been beneficial to cancer research. Microarray data can be used to evaluate a wide variety of cancer types. High-dimensional data from DNA microarray experiments is known as gene expression data. It is widely used to classify and detect malignant disorders [9]. The most recent development of artificial intelligence, specifically machine learning, has simplified data analysis, including microarray data. The authors [10] demonstrated that machine learning algorithms can be employed for microarray dataset analysis for cancer classification. Utilizing expressions of genes in microarray datasets can serve as an effective tool for diagnosing cancer. However, the number of active genes continues to grow, surpassing hundreds of thousands, while the available datasets remain limited in size, containing only a few subsets of samples. Therefore, one of the challenges in analyzing microarray datasets used for cancer classification is the curse of dimensionality. There is an additional concern regarding the characteristics of the current microarray datasets, which consist of numerous redundant and irrelevant features that have a detrimental impact on cancer classification results and computational expense [11]. The presence of duplicated and irrelevant features in very high-dimensional microarray datasets reduces the ability of the machine learning techniques to achieve accurate cancer classification and prediction [12]. These characteristics diminish the efficiency of the prediction model and complicate the search for meaningful insights. Consequently, it is necessary to employ feature selection methods in order to enhance the accuracy of the machine learning classifiers [13].

In order to enhance the effectiveness of widely used machine learning algorithms, many feature selection techniques have been employed to identify the most important features in malignant microarray datasets [14,15,16,17,18]. Even though filter feature selection approaches offer computational efficiency and the ability to reduce the dimensionality of microarray datasets, their accuracy results are limited since they evaluate features independently of classifiers. On the other hand, wrapper feature selection approaches interact with the classifier throughout the feature evaluation process, resulting in superior outcomes compared to the filter method. Nevertheless, the utilization of wrapper approaches on high-dimensional microarray datasets might be difficult and time-consuming.

In recent years, several evolutionary and bio-inspired algorithms [19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35] have been implemented in literature to obtain the highest level of accuracy in the gene selection challenge. Although feature selection methods based on evolutionary algorithms can overcome the limitations of filter and wrapper methods, they may result in greater computational times for certain machine learning algorithms. Due to the high dimensionality and large number of features in malignant microarray datasets, it is not feasible to initially employ evolutionary algorithms as feature selection approaches. It is essential to reduce the features of microarray cancer datasets using filter feature selection. Then, an evolutionary optimization algorithm can be utilized to optimize the features further to maximize cancer classification performance. This motivated us to suggest a novel hybrid filter-differential evolutionary feature selection method that combines the strengths of both filters and evolutionary techniques to generate effective solutions with improved cancer classification performance for high-dimensional microarray datasets.

The Differential Evolutionary (DE) is one of the superior optimization evolutionary algorithms, which is inspired by the biological evolution of the chromosomes in nature. DE performs well in convergence, although it is straightforward to implement and requires a few parameters to control and low space complexity. These attractive advantages of DE over other competitive optimization algorithms make DE gained widespread recognition for its exceptional efficacy in addressing various optimization challenges. Hence, this study aims to combine the superior performance of the DE optimization algorithm with filter selection methods to improve the classification accuracy of four microarray datasets by highlighting the most important and relevant genes. This is the first attempt at applying the hybrid filter and DE-based gene selection and classification of DNA Microarray data to the belief of our knowledge. In this paper, we propose a novel approach that combines feature selection methods based on differential evolutionary optimization algorithms and filter methods for identifying the most effective subset of features. Six common filtering methods were applied in this study to assign a score to each feature in microarray cancer datasets. These methods were then used to reduce the dimensionality of the datasets by retaining only the highest-ranked features and removing superfluous and irrelevant ones. The DE algorithm was then used to optimize the reduced cancer datasets, resulting in significantly improved results in cancer classification. Our proposed approach improved the classification performance of cancer when applied to microarray datasets with high dimensions. The following is a summary of the most important contributions that our paper represents:

- Feature reduction using filter algorithms: Even though microarray datasets are used a lot in scientific literature, a recent study [28] found that popular machine learning methods weren’t very good at correctly classifying the high-dimensional microarray datasets such as the Brain, Breast, Lung, and CNS datasets since they have limited samples and thousands of redundant, irrelevant, and noisy genes or features. In this paper, we employed six well-known, fast and effective filtering methods—Information gain (IG), information gain ratio (IGR), correlation (CR), Gini index (GIND), Relief (RELIEF) and Chi-squared (CHSQR) to reduce the dimensionality of microarray datasets by ranking the features and genes and then selecting only the top 5% of ranked features in order to enhance the cancer classification performance.

- Optimal feature selection using DE: The performance improvement of the machine learning algorithms achieved on high-dimensional microarray datasets considering only the filter methods is inadequate since the filter methods assess the features separately by identifying the correlation between each feature and the class label. Therefore, the DE optimization algorithm is suggested to be effectively used in the second phase to identify the correlation between a set of the best features and the class label. This is done to further optimize the selected features obtained from the filter methods and to make an enhancement in cancer classification performance. DE is able to reduce almost 50% of the irrelevant features in comparison to filter feature selection methods.

- Maximizing the cancer classification: The proposed hybrid filter-DE feature selection methods have achieved the two main objectives stated in this study, which are reducing the dimensionality and increasing the cancer classification performance on the four microarray datasets used. It is evident from experimental results that proposed hybrid filter-DE feature selection methods accomplished excellent performances with fewer important features: 100% classification accuracy with only 121 features for the Brain dataset, 100% classification accuracy with only 156 features for CNS, 98% classification accuracy with only 296 features for Lung dataset, and 93% classification accuracy with only 615 features for Breast, respectively. The average improvement percentages of accuracy achieved by the proposed methods were up to 42.47%, 57.45%, 16.28% and 43.57% compared to the previous works that are 41.43%, 53.66%, 17.53%, 61.70% on Brain, CNS, Lung and Breast datasets, respectively. Compared to the previous works, the proposed methods accomplished better improvement percentages on Brain and CNS datasets, comparable improvement percentages on Lung dataset, and less improvement percentages on Breast dataset.

The remaining part of this paper consists of the following sections. Section 2 discussed recent works related to cancerous gene selection and classification performance suggested for high-dimensional microarrays. Section 3 describes proposed methodology and elaborates on the details of the phases of the proposed hybrid filter-DE feature selection methods. The experimental results and discussion of the proposed hybrid filter-DE are presented in Section 4. Finally, the paper is concluded, and future work is recommended in Section 5.

2. Related Works

This section presents and investigates the existing hybrid feature selection methods approaches that have recently been applied on cancer microarray datasets to improve cancer classification results.

Karthika et al. [20] employed the mixture model (MM) in addition to the Fast Fourier Transform (FFT) on Microarray Gene Expression data for dimensionality reduction. In order to select an effective feature, they employed optimization techniques called Dragonfly. Nonlinear Regression, DT, RF, and SVM were used as classifiers in this study. The classifiers’ performance is evaluated both with and without feature selection methods. Finally, hyper-parameter tuning techniques such as Adaptive Moment Estimation (Adam) and Random Adaptive Moment Estimation (RanAdam) are used to improve classifiers, resulting in an accuracy of approximately 98% with the SVM classifier. This research did not address computational complexity and model validation techniques. In addition, this study has notable limitations, including population-specific findings, reliance on MAGE data, and the influence of outliers.

Elbashir et al. [21]. suggested a graph attention network (GAT) model to utilize diverse mRNA and miRNA for the prediction of the survival rate of non-small cell lung cancer (NSCLC) using multi-omics data. Chi-square analysis was used to select the most significant features to include in the model. They used the synthetic minority oversampling method (SMOTE) to make the dataset and the concordance index (C-index) more equal, and they tested the model on different sets of omics data. When using combined mRNA and miRNA data, they obtained the highest value of the C-index (0.82) along with the accuracy of 0.75. Chi-Square approaches cannot be regarded as the most ideal feature selection method for highly complicated and correlated biological data; this is a significant limitation of the current research.

Zamri et al. [22] presented a hybrid metaheuristics optimization-based two-stage feature selection model. The SKF-MUT simulated Kalman filter was used in this study to pick microarray features that would make the ANN classification more accurate. The experimental results were validated using eight binary and multiclass benchmark datasets. SKF-MUT effectively selected the correct number of features and achieved 95–100% classification accuracy. The significant limitations of this study include model evaluation relying just on accuracy. Instead, other metrics like precision, recall, F1-score, or AUC-ROC might better assess the model’s performance along with accuracy. Further, the computational cost of feature selection has not been discussed.

Ali et al. [23] presented a hybrid filter-genetic feature selection method to reduce microarray dataset dimensionality. The first part of this work used three filter methods: information gain (IG), information gain ratio (IGR), and Chi-squared (CS) to pick the most relevant microarray dataset features. The second phase used a genetic algorithm to optimize the features selected in the first phase of the proposed approach. The proposed method was validated utilizing breast, lung, CNS, and brain cancer microarray datasets. Experimental results indicated the suggested model improved performance of various common machine learning approaches in terms of Accuracy, Recall, Precision, and F-measure and the reported accuracy ranges from 92 to 100%. The limitations of the existing work can be included as computational cost of the feature selection process and also statistical validation not discussed.

Elemam and Elshrkawey [24] introduced a two-stage hybrid feature selection. They began by using feature evaluation methods that included chi-squared, F-statistics, and mutual information (MI) filters. In the second phase, they employed wrapper-based sequential forward selection with ML models like SVM, DT, RF, and KNN classifiers to find the optimal set of features. The model was then rigorously tested and validated using lung cancer, ovarian cancer, leukemia, and SRBCT datasets. The results were impressive, with an accuracy rate of almost 100 percent and a minimal number of selected features. However, the study’s performance was solely measured through accuracy, and the issue of feature redundancy was not adequately addressed. No statistical tests were conducted for model validation, which are the limitations of the existing work

In a recent study, Abasabadi et al. [25] proposed a novel hybrid feature selection method to address the challenge of high dimensionality in microarray datasets. The methodology combines a filter approach (SLI-γ) with a genetic algorithm (GA). In the initial phase, 99% of irrelevant features were eliminated using SLI-γ. The second phase involved the GA optimization of the remaining relevant features to enhance classification accuracy. The results of this method were not only enhanced performance but also a significant reduction in execution time, which is a remarkable achievement. However, the inherent computational complexity associated with GA-based optimization remains a challenge, especially as the dimensionality of datasets increases.

Almutiri et al. [26] proposed a hybrid feature selection method, GI-SVM-RFE, to improve classification accuracy in high-dimensional microarray datasets. The methodology combines the Gini index and SVM-RFE to select informative genes recursively. The results showed enhanced classification accuracy reported as 90.67 compared to other methods without feature selection or using only the Gini index or SVM-RFE. The model not validated statistically.

Similarly, Xie et al. [27] proposed the Multi-Fitness RankAggreg Genetic Algorithm (MFRAG). The methodology employed a genetic algorithm framework to integrate nine feature selection techniques. It uses an ensemble model to assess fitness and guide the evolutionary process. The results indicated that MFRAG demonstrated exceptional performance, achieving an accuracy between 87 and 100 percent, with increased classification accuracy using fewer selected characteristics. The limitations of this study include the potential for overfitting despite the use of the ensemble method and the absence of statistical discussion of model validation.

Dash et al. [28] proposed a hybrid methodology for feature reduction utilizing harmony search and Pareto optimization. The authors employed the Harmony Search algorithm and Gene Selection (AHSGS) to identify the top 100 gene characteristics while also utilizing Bi-objective Pareto optimization to eliminate insignificant gene features. The model was assessed using four publicly available microarray datasets. In all instances, SVM surpassed other classifiers, attaining nearly 100 percent accuracy, with the exception of the Colon dataset, where ANN reached 82 percent accuracy. The existing work exhibits notable limitations, particularly in the statistical analysis, as the results concerning significance levels are absent. Furthermore, the author failed to address the criteria or methodology employed to ascertain the Harmony Memory Consideration Rate and Pitch Adjusting Rate.

Almutiri et al. [29] suggested a fusion-based feature selection framework aimed at mitigating high dimensionality and enhancing classification performance in gene expression microarray data. The framework utilizes a three-layer approach. The first layer has independent feature selection methods for gene ranking and scoring. The second layer consists of a threshold-based filtering step and a final decision layer employing majority or consensus voting. Experiments were conducted on five microarray datasets using an SVM classifier. The results revealed enhanced classification accuracy, achieving up to 97% on the Prostate dataset, alongside dimensionality reduction in comparison to existing methods. The primary limitations of this study are threshold sensitivity and dependence on voting strategy.

Kilicarslan et al. [30] proposed a hybrid model to significantly improve cancer diagnosis. The methodology combined relief and stacked autoencoders for dimension reduction. Then, SVM and CNN were used to improve classification accuracy. The proposed method achieved the highest classification accuracies (98.6%, 99.86%, and 83.95%, respectively) on three microarray datasets (Ovarian, Leukemia, and CNS), outperforming SVM and other tested approaches. The study highlighted the effectiveness of dimension reduction in enhancing classification accuracy. However, this study has most notable limitations as limited comparison with other Feature Selection Methods and process of hyperparameter optimization for the CNN model.

Baliarsingh et al. [31] proposed a microarray-based hybrid cancer classification model. The methodology utilized ANOVA to select relevant genes. Then, the enhanced Jaya (EJaya) algorithm and the forest optimization algorithm (FOA) were utilized to find the best gene subset, and SVM was used for classification. The proposed method reduced features and exceeded benchmark methods in classification accuracy from 96 to 100%. The significant limitation of this study is the use of a single classifier (SVM), which may not generalize across datasets. In addition Parameter tuning may also affect EJaya and FOA algorithm performance.

In this study, Almugren and Alshamlan [32] evaluated and compared contemporary hybrid approaches combining bio-inspired evolutionary algorithms for gene selection and cancer classification. The methodology, which was conducted with utmost thoroughness, involved reviewing various algorithms, with a focus on genetic algorithms (GA) as wrapper methods for gene selection. The results revealed that GA is the most extensively used and achieved the highest accuracy with a minimal number of selected genes ranging from 93 to 100%. In contrast, the Firefly algorithm has not been used as a wrapper approach. The limitation of existing work is the inadequate investigation of alternative hybrid algorithms.

Sayed et al. [33] proposed this study to investigate the efficacy of a Nested Genetic Algorithm (Nested-GA) for feature selection in high-dimensional colon cancer microarray datasets. The methodology used a t-test to preprocess data and a nested approach with two Genetic Algorithms. The outer Genetic Algorithm (OGA-SVM) is used for gene expression data, and the Inner Genetic Algorithm (IGA-NNW) is utilized for DNA methylation data. The validation was performed using five cross-folds, ensuring a thorough examination of the results. Nested-GA outperformed KNN and RF on the colon cancer dataset with 99.9% classification accuracy. This study’s main limitation is comparing Nested-GA to a limited set of feature selection algorithms (KNN and RF). A more extensive comparison with additional contemporary methods could yield more significant insights into its performance which is one of the limitation of this work.

Similarly, Ghosh et al. [34] introduced a novel two-stage hybrid model that integrates multiple filter methods with a genetic algorithm (GA) for cancer detection in microarray datasets. The methodology involved initially creating an ensemble of filter methods such as ReliefF, chi-square, and symmetrical uncertainty by looking at the union and intersection of their top-n-ranked features. Then, in the next step, GA is used to make the results of the first step even better. The result showed that the model did better than the best current methods, with an accuracy of about 100% and a smaller number of chosen features across five cancer datasets: colon, lung, leukemia, SRBCT, and prostate. The limitation of this study is that the performance evaluation is mainly based on accuracy and feature count.

Hameed et al. [35] introduced a three-phase hybrid method to select and classify high-dimensional microarray data. To achieve this purpose, the author employed Pearson’s Correlation Coefficient (PCC) alongside Binary Particle Swarm Optimization (BPSO) or Genetic Algorithm (GA) and numerous classifiers. In the first phase, the methodology utilizes PCC as a filter for feature selection. Subsequently, the second phase involved the application of either BPSO or GA as wrapper methods. The data was classified using five distinct classifiers. The results showed improved classification accuracy, with BPSO outperforming GA in speed and effectiveness across multiple datasets and classifiers. Although the authors compared BPSO with GA, they did not study a broader range of optimization algorithms or hybrid approaches. This highlights the urgent need for a more comprehensive understanding of the best practices in feature selection and classification.

As can be observed from the existing works discussed above, the filter methods have been utilized individually [18,24] or combined with the genetic algorithm [23,24,26,27,33,34,35] or wrapper feature selection [29,32] in order to improve cancer classification on microarray datasets. In contrast, this study proposed integrating Differential Evolution (DE) with some popular filter methods to maximize cancer classification on microarray datasets. DE has several attractive advantages over other competitive optimization algorithms. DE performs well in convergence, although it is straightforward to implement and requires a few parameters to control and low space complexity. Therefore, the proposed hybrid method successfully identified the most effective features using both filter methods and DE. This resulted in improved performance of classifying cancer on highly dimensional microarray datasets.

3. Materials and Methods

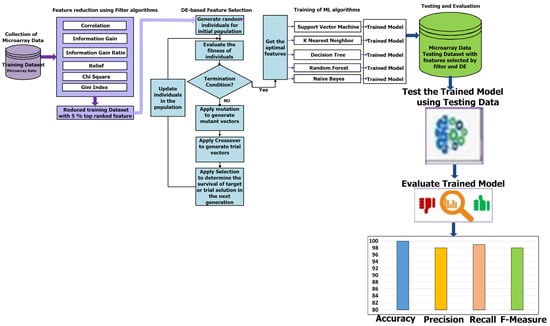

This section introduces a hybrid feature selection strategy that combines filter methods with differential evolutionary algorithms. This approach aims to effectively identify the most relevant features from high-dimensional microarray datasets to get excellent results in cancer classification. The methodology of the proposed hybrid filter and DE-based feature selection consists of five phases, as shown in Figure 1: microarray data collection, feature reduction using filter algorithms, feature selection using DE, training, and testing and evaluation of trained models.

Figure 1.

The methodology of improving cancer classification in Microarray data using the proposed hybrid filter and differential evolution-based feature selection.

3.1. Description of Cancerous Microarray Data Used

There are many microarray datasets used in literature. In order to assess the effectiveness of the suggested hybrid filter-DE feature selection method, we will focus on four malignant microarray datasets. Brain cancer [36,37], Breast cancer [38], Lung cancer [39] and Central Nervous System (CNS) [39] datasets since these datasets are more common microarray datasets and have high-dimensional features. Furthermore, several latest works [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35] reported that the high-dimensional features of these four cancerous microarray datasets caused low classification results. Table 1. shows characteristics of the four cancerous microarray datasets used for assessing the proposed hybrid filter-DE feature selection method.

Table 1.

Characteristics of the four cancerous microarray datasets used for assessing the proposed hybrid filter-DE feature selection method.

3.2. Feature Reduction Using Filter Algorithms

As stated in Section 3.1, the microarray datasets included in this study are characterized by a high number of dimensions and contain numerous duplicated and irrelevant features. It is impractical to train machine learning algorithms with all these features of microarray datasets. So, we use common filter feature selection methods to rank all features of microarray cancer datasets. Subsequently, to reduce the high dimensional datasets, only the top 5% of the best-ranked features are selected, and the remaining redundant and unnecessary features are removed. In this work, the features of microarray cancer datasets are ranked with various common filter feature selection methods. The features of microarray cancer datasets are ranked by utilizing well-known, fast and effective filtering methods such as correlation, information gain, and information gain ratio, Relief, Chi-squared and Gini Index.

3.2.1. Correlation

The correlation-based feature selection (CR-FS) technique is a widely used filter algorithm [40] that relies on the correlation between features and the target class. CFS chooses only the features that have high correlation to the target class and minimal correlation with each other. CFS uses the following Equation (1) to evaluate the features.

where the average correlation between the class and the feature is represented by while the correlation between two features is represented by , and denotes the number of features. In this study, the correlations between features and the target class are computed by feature Pearson’s correlation coefficient (PCC).

3.2.2. Information Gain

The information gain (IG) [41] is a well-known filter technique that has been effectively used to choose highly relevant features by employing the entropy concept to assess the importance of features. In IG, the worth of an attribute is determined by computing the quantity of information gained by the feature with respect to the target class. The IG method uses Equation (2) to compute the score IG (S, A) of a feature A:

where represents the subset of data S and feature A has the specific value v.

By using Equation (3), Entropy(S) is calculated based on the probability of class in S.

3.2.3. Information Gain Ratio

Although IG is usually a good filter feature selection method used for ranking the features, IG biases towards features with a large number of distinct values. The information gain ratio (IGR) [42], which penalizes features with a large number of values, is used to address the drawback of IG. In IGR, the information gain is divided by the split information, which effectively measures the inherent information required to distinguish between the various values of that feature. IGR uses an Equation (4) to calculate split information (S, A).

The original dataset is denoted as S, each sub-dataset after being split is represented as , and m is the number of sub-datasets. The number of samples in and are denoted as and respectively.

The score IGR (S, A) of feature A is calculated by using Equation (5).

3.2.4. Relief

The Relief (RF) feature selection method [43] is another popular filter method based on the nearest neighbor to weight attributes, so it is utilized to deal effectively with dependent features and noisy data. The Relief feature selection method arbitrarily chooses a sample of the data and then finds its k-nearest neighbors from the same class and each of the opposite classes. The Relief method uses Equation (6) to compute the score of ith attribute:

where represents the randomly chosen samples from the dataset, denotes the distance between the ith attribute value of a randomly chosen sample and the nearest sample of the same class, while and represents the distance between the ith attribute value of a randomly chosen sample and the nearest sample of the different class.

3.2.5. Chi-Squared

Chi-squared [44] is a popular and statistical filter method based on calculating the dependence between features and class. Features are ranked based on how strongly they are associated with the target class. The Chi-squared (CHSQR) test computes the aggregate of squared differences between the observed frequencies of each category with the expected frequencies under the assumption of no association. By computing χ2 with regard to the class as shown in Equation (7), each feature’s significance is assessed.

where c represents the class number and r stands for the number of bins utilized to discretize numerical attributes. and stand for the observed frequency and expected frequency, respectively.

3.2.6. Gini Index

Another popular filter method used for feature ranking is the Gini index (GI) [45]. It computes and allocates a weight or scoring to each feature, indicating the feature’s ability to distinguish instances from distinct classes. The following Equation (8) is utilized for calculating the Gini index of :

where c denotes number of classes and refers to the probability of samples belonging to class in S.

3.3. Differential Evolution Based Feature Selection

Machine learning algorithms can be effectively trained to produce enhanced classification results using reduced cancer datasets that only include the top-ranked features determined by the filter methods. However, the performance of the machine learning algorithms considering only the filter methods is still limited when they are applied on high-dimensional microarray datasets [46]. This is because the majority of filter methods ignore the correlation between groups of features and the class label. They assume that the features are independent and find the relationship between the individual feature and the class label. Furthermore, filter methods use certain criteria to assess the features without the use of a machine learning algorithm. Thus, in order to improve the effectiveness of the cancer classification, Differential Evolution (DE) is used in this paper to further optimize the chosen features that are selected by the filter approaches.

DE is a powerful global optimization approach developed by Storn and Price [47], which belongs to category of evolutionary algorithms that draw inspiration from the natural evolution of chromosomes. In recent years, DE has been applied successfully in many real applications and optimization problems since it has several attractive advantages [46,48] over other competitive optimization algorithms. DE performs well in convergence although it is straightforward to implement and requires a few parameters to control and low space complexity.

Firstly, the initial population of the possible solutions in DE is randomly generated over the feasible region. Accordingly, the fitness function is used to evaluate the candidate solutions of the initial population. Then, DE generates a new solution by combining several solutions with the candidate solution. Like GA, the candidate solutions in DE population iteratively evolve to find better solutions through repeated generations of three main DE operators: mutation, crossover, and selection. However, the mutation, crossover, and selection operations in DE are conducted in different ways compared to GA operations.

Let be the source vector of solution i, where i = {1, 2, …, P} and P is population size, d = {1, 2, …, D}, and D is the dimensionality of the search space. The mutation, crossover, and selection in DE are achieved as follows:

- Mutation: For each solution vector , Equation (9) is used to produce a mutant solution

- Crossover: The crossover operation is performed between the parent and its corresponding mutant solution in order to produce a trial solution as shown in Equation (10).

- Selection: The fitness of trial vector is computed and then compared with the fitness of source vector . If i is better than , will be replaced with into the population of the next generation. Otherwise, the population keeps the source vector for the next generation.

From generation to generation, the mutation, crossover and selection are applied to update and evolve the population in order to find the optimal solution until a stopping criterion is met.

DE was originally produced for solving optimization problems in continuous numbers search space. Therefore, the binary version of DE(BDE) was developed in order to solve feature selection and other binary optimization problems. The steps of feature selection based on DE can be described as follows:

- Initialization: In this step, the initial population of possible individuals is generated randomly. Each individual represents a possible solution of feature subset which is binary-coded vector with m bits, where m is the number of available features. If any bit of an individual is 1, the corresponding feature is selected; otherwise, it is not selected. For example, five features (3rd, 5th, 7th, 8th and 9th features) are selected for given a solution X = {0, 0, 1, 0, 1, 0, 1, 1, 1, 0}.

- Fitness evaluations: In this step, the individuals (feature subset solutions) in the population are evaluated using the fitness function. To calculate the fitness value of a feature subset solution, the misclassification rate (1-classification rate) of a machine learning technique trained with that feature subset solution is calculated for each individual in the population. That means the training dataset with the features indicated by 1 value on feature subset solution is used to train the machine learning technique. Then the misclassification rate is computed as the fitness of that feature subset solution. The misclassification rate is the ratio of the number of incorrectly classified instances to the total number of instances. The fitness function in the proposed DE-based feature selection aims at minimizing the misclassification rate of machine learning techniques trained with the possible feature subset solutions in order to identify the optimal feature subset that can produce the best classification results.

- Mutation process: For the current individual , the mutation process in DE starts by randomly choosing three individuals , , from the population where . In DE used in feature selection problem, mutant solution is generated based on difference vector as shown in the following Equations (11) and (12).

- Crossover process: Once the mutant individual is generated in the mutation process, the trial individual is created by performing the crossover process between the source vector and its corresponding mutant vector . The trial solution in DE-based feature selection is computed using the same equation used in the original DE as shown in Equation (13).

- Selection process: In this step, DE compares the fitness values (misclassification rate) produced by the source solution and the trial solution . If the trial solution has better fitness value (lower misclassification rate) than the source solution , DE replaces the source solution with the trial solution in the population for the next generation. Otherwise, the source solution is retained in the DE population for the next generation.

In DE evolutionary process, the mutation, crossover, and selection processes are repeatedly conducted until a stopping criterion is satisfied. Eventually, the DE returns the best solution in the DE population that represents the optimum feature subset that can be effectively utilized later in the training and testing phases. Algorithm 1 presents steps of the proposed hybrid filter and differential evolution-based feature selection suggested to enhance cancer classification in Microarray data.

| Algorithm 1: Hybrid filter and differential evolution-based feature selection | |

| Input: F: Original feature set, and P: Size of population Output: SF: The optimal selected features | |

| Begin | |

| 1 | Compute scores of the features using Filter methods: Information gain (IG), information gain ratio (IGR), correlation (CR), Gini index (GIND), Relief (RELIEF) and Chi-squared (CHSQR) |

| 2 | Do ranking of all features in F based on the scores computed by Filter methods |

| 3 | Reduce dimension of training data by selecting only the top 5% of ranked features |

| 4 | Set Crossover rate, maximum number of generations Max_t, the generation counter t = 0 |

| 5 | Generate and initialize P individuals of population (feature subset solutions) |

| 6 | While t < Max_t and Stopping criterion is not satisfied Do |

| 7 | t = t + 1 |

| 8 | Compute the fitness of individuals using misclassification rate |

| 9 | Best individual = Evaluate the fitness of individuals |

| 10 | For each individual Do |

| 11 | Choose three individuals ,

,

randomly where |

| 12 | Generate a mutant solution using Equations (9) and (10) |

| 13 | Generate a trial vector using Equation (11) |

| 14 | Evaluate fitness values of and |

| 15 | If fitness of is better than fitness of |

| 16 | is replaced with in the population and then used for the next generation |

| 17 | Else |

| 18 | is kept for next generation |

| 19 | End if |

| 20 | End For |

| 21 | End While |

| 22 | Extract the optimal selected features from the individual with the best fitness value |

| 23 | Return the optimal features SF |

| 24 | End Algorithm |

3.4. Training of Machine Learning Techniques

As mentioned, the high-dimensional microarray datasets are reduced by applying the proposed hybrid filter-DE feature selection. In the first stage, we used filter methods to rank all features in microarray datasets and then select only the top 5% of features while the remaining features were removed. Then, we used DE in the second stage of the proposed method to choose only highly relevant features of the reduced microarray datasets. By using the final training microarray datasets with the most optimal features, we trained some well-known machine learning algorithms, such as support vector machine (SVM), naïve Bayes classifier (NB), k-Nearest Neighbour (kNN), decision tree (DT), and random forest (RF), that are widely employed in the literature to classify cancer having large dimension microarray datasets. To optimally design the kNN, NB, DT, RF, and SVM, the best features selected by the proposed hybrid filter-DE feature selection are used to as inputs to train these models. In addition, the best settings and parameters used in all classifiers were selected by a trial-and-error basis in order to produce the best results. Furthermore, we train these models using stratified cross-validation that is especially useful for imbalanced datasets, ensuring equal representation in each fold.

3.5. Testing and Evaluation of Machine Learning Techniques

After completing the training phase, the trained classification models based on the proposed hybrid filter-DE feature selection method will be evaluated with the new testing microarray datasets. Instead of using all the features of testing microarray datasets, we reduce testing microarray datasets by using only the optimal features selected by the proposed hybrid filter-DE feature selection method. Then, the reduced testing microarray datasets will be used as input of SVM, NB, kNN, DT, and RF to assess the cancer classification performance of these classifiers. In this paper, we used 10-fold cross-validation to evaluate the proposed method. We use some popular evaluation metrics used in the literature to evaluate the proposed hybrid filter-DE feature selection method, such as classification accuracy, recall, precision, and F-measure. The evaluation metrics used in this paper are defined based on the confusion matrix as follows:

Classification accuracy expressed in Equation (14) measures the total proportion of properly diagnosed samples.

Equation (15) calculates Recall measure, which is the proportion of positive samples that are properly diagnosed as the positive class.

Precision is calculated in Equation (16) as the number of properly diagnosed positive samples divided by the total number of samples classified as positive. It is represented by the following formula:

F-measure is a measure that combines Recall and Precision into a harmonic mean as expressed in Equation (17) to give a balanced assessment of the model’s performance.

where TP denotes the number of correctly classified positive samples, FP stands for the number of negative samples incorrectly classified as positive samples, TN stands for the number of correctly classified negative samples, and FN indicates the number of positive samples incorrectly classified as negative samples.

In the multi-class classification, these measures are computed using Equations (18)–(23). Recall for each class i is calculated using Equation (18), which represents the ratio of true positive predictions to the total actual samples of that class. The Overall Recall can be calculated using Equation (19).

where , , and are true positive, false positive, true negative and false negative for class i, respectively, and is the number of classes.

As shown in Equation (20), Precision for each class i is the ratio of true positive predictions for that class to the total samples classified as that class. The Overall Precision can be calculated using Equation (21).

F-measure for each class i is calculated using Equation (22), which is the harmonic mean of precision and recall for that class. The Overall F-measure can be calculated using Equation (23).

In addition to classification measures, the classification error can be measured for each class using False Positive Rate and False Negative Rate. False Positive Rate (FPR) for each class i is calculated using Equation (24), which represents the ratio of samples incorrectly classified as class i out of all samples that do not actually belong to class i. Equation (25) is used to calculate the Overall FPR. In contrast, the False Negative Rate (FNR) for each class i is calculated using Equation (26), which denotes the proportion of samples that actually belong to class i but they are incorrectly classified as a different class. Equation (27) is used to calculate the overall FNR.

4. Experimental Environment and Results Discussion

This section presents the experimental environment and settings used to implement the suggested hybrid filter-DE techniques. In addition, it investigates the effectiveness of machine learning approaches after implementing the recommended hybrid filter-DE feature selection strategy, in comparison to their performance standalone and after applying only filter methods. The comparison includes the performance of machine learning by considering all features, features selected by filter methods, and features selected by the suggested hybrid filter-DE techniques. The most optimal classification results of the filter approaches were accomplished by training machine learning algorithms with top 5% of ranking features on these four datasets.

4.1. Experimental Environment

This study utilized the RapidMiner tool (version 10.1) and the Anaconda package. RapidMiner resources offer a comprehensive collection of machine learning algorithms and a variety of strategies for data validation. Furthermore, the Anaconda package is employed to execute differential evolutionary algorithms in the Python programming language, as well as for the purposes of training, testing, and visualization. As shown in Table 2, we have conducted many experiments and scenarios to get the best crossover rate (CR) and population size (P) that can produce the best classification results. The best parameters of DE used with the proposed hybrid filter-DE feature selection are listed in Table 3.

Table 2.

Some scenarios used to get the best crossover rate (CR) and population size (P).

Table 3.

Parameter details of DE used in the hybrid filter-DE feature selection approach across the experiment on all datasets.

In addition, several Python libraries, such as Scikit-learn and Matplotlib, have been utilized. The data was visualized using Matplotlib, and machine learning techniques were implemented using the Scikit-learn module. The computing environment utilized a Lenovo Laptop equipped with the Windows 10 operating system, an Intel Core i7 (RTX) CPU, and 32 GB of RAM.

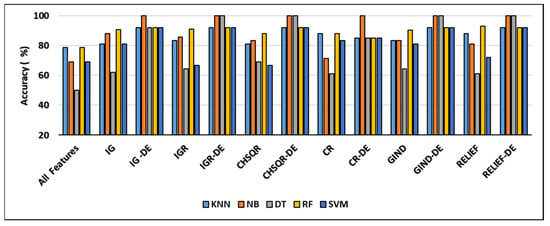

4.2. Results Analysis of Brain Dataset

It is evident from Figure 2 that when the IG filter was applied, the classification accuracies of KNN (78.57%), NB (69.05%), DT (50%), RF (78.57%), and SVM (69%) improved to 81%, 88.1%, 61.9%, 90.5%, and 81%, respectively. When IGR was used, the accuracy results of KNN, NB, DT, RF, and SVM showed enhancement and reached 83.33%, 85.71%, 64.29%, 90.86%, and 66.67%, respectively. Furthermore, when Chi-square (CHSQR) was applied, the accuracy results of KNN, NB, DT, RF, and SVM were enhanced to 80.95%, 83.33%, 69.05%, 88.1%, and 66.67%, respectively. In addition, when applying correlation CR, the accuracy results showed some improvement to 88.1%, 71.43%, 61.1%, 88.1%, and 83.33%, respectively. In the case of the GIND filter, accuracy enhancement was observed as KNN (83.33%), NB (83.33%), DT (64.29%), RF (90.46%), and SVM (80.95%). Additionally, the relief filter method showed enhancement with KNN (88.1%), NB (80.95%), DT (61.09%), RF (92.86%), and SVM (71.90%).

Figure 2.

Comparison of machine learning accuracy on the Brain dataset using all features, filter features, and hybrid filter-DE methods.

The hybrid IG-DE method improved the results of classification accuracy of KNN (78.57%), NB (69.05%), DT (50%), RF (78.57%), and SVM (69%) to 92%, 100%, 92%, and 92%, respectively, whereas the hybrid IGR-DE method improved them to 92%, 100%, 100%, 92%, and 92%. The suggested hybrid CHSQR-DE approach improved classification accuracy of KNN, NB, DT, RF, and SVM to 92%, 100%, 100%, 92%, and 92%, whereas hybrid CR-DE improved them to 85%, 100%, 85%, 85%, and 85%. Furthermore, the proposed hybrid GIND-DE method improved accuracy results to 92%, 100%, 92%, and 92%, while the hybrid RELIEF-DE method improved accuracy results to 92%, 100%, 100%, 92%, and 92% when compared to all features’ accuracy results.

In terms of accuracy, the IG-DE-NB, IGR-DE, with NB and DT, CHSQR-DE with NB and DT, CR-DE with NB, GIND-DE with NB and DT, and RELIEF-DE with NB and DT classifiers achieved optimal performance of 100% accuracy using the top 5% of ranked features on this dataset.

Table 4 shows the comparison of the number of selected genes and other measures of KNN, NB, DT, RF, and SVM by applying all features, filter feature selection, and hybrid-DE filter feature selection methods. It is obvious from Table 4 that the machine learning algorithms, with the implementation of the proposed hybrid filter-DE methods, accomplished much better performance in terms of accuracy, precision, recall, and F-measure compared to their performance with all features and their performances considering only filter methods. Outstandingly, the proposed hybrid methods IG-DE, IGR-DE, CHSQR-DE, CR-DE, GIND-DE, and RELIEF-DE with NB achieved optimal 100% performance. Furthermore, it is obvious from Table 4 that CHSQR-DE with DT has an edge over all other hybrid methods in terms of the features selected. The smallest number of selected features are highlighted in grey color. CHSQR-DE with DT performed excellently by selecting only 121 features out of 5597 features. It is evident from Table 4 that the filter feature selection strategy reduced the number of relevant features in the BRAIN datasets from 5597 to 280. Furthermore, the suggested hybrid IG-DE, IGR-DE, CHSQR-DE, CR-DE, GIND-DE, and RELIEF-DE techniques in the BRAIN dataset on average favored only 142, 126, 134, 132, 144, 138 features out of the 280 features.

Table 4.

Performance comparison of classifiers utilizing all features, filter methods, and the suggested hybrid filter-DE approaches on the Brain dataset. Background color: show the lowest number of features.

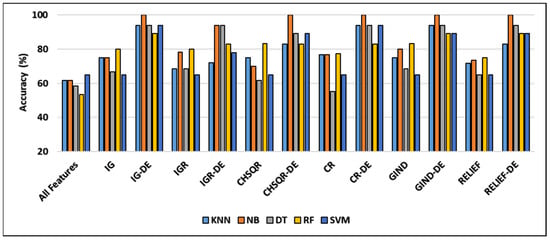

4.3. Results Analysis of CNS Dataset

Figure 3 demonstrates that the classification accuracies of KNN (61.67%), NB (61.67%), DT (58.33%), and RF (53.33%) were enhanced by applying IG to 75%, 66.67%, 80%, and 80% while they were enhanced by applying IGR to 68.33%, 78.33%, 68.33%, and 80%, respectively. Further, in the case of CHSQR, the observed enhancement reached 75%, 70%, 61.67%, and 83.33%. In the case of CR (correlation), the change was noted as 76.67%, 55.5, and 77.33%, respectively. Further, when GIND was applied, the enhanced results reached 75%, 80%, 68.33%, and 83.33%. The relief filter also showed enhancement, reaching 71.67%, 73.33%, 65%, and 75%. It was also observed that there was no enhancement in all cases with SVM.

Figure 3.

Comparison of machine learning accuracy on the CNS dataset using all features, filter features, and hybrid filter-DE methods.

The hybrid IG-DE technique improved the classification accuracies of KNN, NB, DT, RF, and SVM to 94%, 100%, 94%, 89%, and 94% respectively. On the other hand, the hybrid CHSQR-DE method improved the accuracies to 83%, 100%, 89%, 83%, and 89%. The hybrid CR-DE technique improved the classification accuracies of KNN, NB, DT, RF, and SVM to 945, 100%, 94%, 83%, and 94%, respectively. On the other hand, the hybrid GIND-DE method improved the accuracies to 94%, 100%, 94%, 89%, and 89%. In addition, their performances were improved by implementing the suggested hybrid RELIEF-DE technique to achieve success rates of 83%, 100%, 94%, 89%, and 83% respectively.

The least enhancements observed with the proposed IGR-DE were 72%, 94%, 94%, 83%, and 78% in comparison to KNN (61.67), NB (61.67), DT (58.33), RF (53.33), and SVM (65). In terms of accuracy, the IG-DE with NB, CR-DE with DT, GIND-DE with NB, CHSQR-DE with NB, and RELIEF-DE with NB classifiers achieved optimal performance of 100% accuracy using the top 5% of ranked features on this dataset. To further analyze the efficacy of the suggested technique, the results of the proposed approaches were compared against feature selection methods as well as all features as presented in Table 5. Table 5 shows the comparison of and the number of selected genes and other measures of KNN, NB, DT, RF, and SVM by applying all features, filter feature selection, and hybrid-DE filter feature selection methods.

Table 5.

Performance comparison of classifiers utilizing all features, filters, and the suggested hybrid filter-DE approaches on the CNS dataset. Background color: show the lowest number of features.

Table 5 clearly shows that the machine learning algorithms performed significantly better performance in terms of accuracy, precision, recall, and F-measure after applying the proposed hybrid filter-DE methods compared to their performance with all features and their performance with only filter methods. Outstandingly, the proposed IG-DE with NB, CR-DE with DT, GIND-DE with NB, CHSQR-DE with NB, and RELIEF-DE with DT achieved optimal 100% performance. Furthermore, it is obvious from Table 5 that IG-DE with NB has an edge over all other hybrid methods in terms of the features selected. IG-DE with NB performed excellently with selecting only 156 features out of a total of 6129 features. The smallest number of selected features are highlighted in grey color. In addition, Table 5 shows that the filter feature selection strategy reduced the number of relevant features in the CNS datasets from 6129 to 306. Further, the suggested hybrid IG-DE, IGR-DE, CHSQR-DE, CR-DE, GIND-DE, and RELIEF-DE techniques choose only 163, 171, 177, 180, 174 and 178 relevant features on average from the 306 filtered features.

4.4. Results Analysis of Lung Dataset

Figure 4 shows that applying GIND improved the classification accuracies of KNN (92.61%), NB (90.15%), DT (84.43%), and RF (83.74%) by 93.6%, 95.07%, 91.13%, and 93.60, respectively. Also, after applying the IG filter method, accuracy results were enhanced to 92.61%, 95%, 86.7%, and 93.6%, respectively.

Figure 4.

Comparison of machine learning accuracy on the Lung dataset using all features, filter features, and hybrid filter-DE features.

Further, when CHSQR was applied, the accuracy results showed some improvements at 92.12%, 92.12%, 85.22, and 92.61%, respectively. In the case of the IGR filter, enhancement was recorded with NB (93.6%), DT (86.21%), and RF (91.13%), while KNN and Relief showed a little decrement. In addition, with the CR filter method, the enhancement was recorded only with NB (94.09%) and RF (86.7%), while we observed a little decrease with KNN and DT. The relief filter method showed enhancement with NB (91.63%), DT (89.16%), and RF (92.12%) but it did not perform well with KNN, and a small amount of decrement was observed in the accuracy. No method of the six filter methods performed well with SVM, and no enhancement was recorded in accuracy.

The proposed hybrid IG-DE method enhanced further the classification accuracies of KNN, NB, DT, RF, and SVM to 97%, 97%, 97%, 93%, and 98%, respectively, while they were enhanced by applying the hybrid IGR-DE method to 95%, 92%, 98%, 95%, and 97%, respectively. The CHSQR-DE method enhanced further the classification accuracies of DT, RF, and SVM to 97%, 93%, and 97%, respectively, while for KNN and NB, a very small amount of decrement was observed. Additionally, by applying the hybrid CR-DE method, KNN, NB, DT, RF, and SVM results were enhanced to 95%, 93%, 93%, 93%, and 97%, respectively. Furthermore, by applying the hybrid GIND-DE method, the accuracy results of KNN, NB, DT, RF, and SVM were enhanced to 93%, 93%, 98%, 95%, and 95%, respectively, while they were enhanced by applying the hybrid RELIEF-DE method to 93%, 92%, 97%, 93%, and 93%, respectively. In terms of accuracy, the IG-DE with SVM, IGR-DE with DT, and GIND-DE with DT classifiers achieved optimal performance of 98% accuracy using the top 5% of ranked features on this Lung dataset.

Table 6 compares the performance measures, and the number of genes identified by the proposed methodology on the Lung dataset to five classifiers (KNN, NB, DT, RF, and SVM) with all features, filter feature selection, and hybrid-DE filter feature selection approaches. Table 6 clearly shows that the machine learning algorithms performed significantly better in terms of accuracy, precision, recall, and F-measure after applying the proposed hybrid filter-DE methods in comparison to when using all features or only filter methods. From Table 6, it’s clear that the proposed hybrid methods IG-DE with SVM (98%, 100%, and 98%), IGR-DE with DT (98%, 100%, and 98%), and Gini Index GIND-DE with DT (98%, 100%, and 98%) accomplished about the same performance in terms of accuracy, precision, and F-measure. In terms of selected features, as shown in Table 6, the hybrid GIND-DE with DT and IGR-DE with DT showed excellent performance using only 296 features out of a total of 12,600 features. In conclusion, the hybrid Gini Index and IGR with DE optimization and the DT classifier outperformed other hybrid methods.

Table 6.

Performance comparison of classifiers utilizing all features, filters, and the suggested hybrid filter-DE approaches on the Lung dataset. Background color: show the lowest number of features.

In addition, Table 6 reveals that the filter feature selection strategy reduced the number of relevant features in the LUNG datasets from 12,600 features to 630 features. Furthermore, the suggested hybrid IG-DE, IGR-DE, CHSQR-DE, CR-DE, GIND-DE, and RELIEF-DE techniques reduced the 630 features to just 300, 305, 318, 308 and 294 relevant features on average. The smallest number of the selected features are highlighted in grey colour.

As described in Table 1, the Lung dataset has five classes: normal tissue (17 samples), adenocarcinoma (139 samples), pulmonary carcinoid (20 samples), squamous carcinoma (21 samples) and small cell cancer (6 samples). That means Lung consists of 17 samples of normal tissue, while remaining classes represent types of lung cancer. Therefore, in addition to classification measures, since there are different numbers of samples for classes in lung dataset, the classification errors in terms of False Positive Rate (FPR) and False Negative Rate (FNR) were calculated for each class in Table 7.

Table 7.

The comparison of FPR and FNR for the suggested hybrid filter-DE approaches on the Lung dataset.

The FPR and FNR were calculated for SVM, NB, KNN, DT, and RF after applying the proposed hybrid filter-DE feature section methods: IG-DE, IGR-DE, CHSQR-DE, CR-DE, GIND-DE and RELIEF-DE. The lower FPR and FNR indicate less misclassification and better performance. As can be observed from Table 7, in most cases, TPRs and TNRs of SVM, NB, KNN, DT, and RF after applying the proposed hybrid filter-DE feature section methods were low, especially with the four types of lung cancer (adenocarcinoma, pulmonary carcinoid, squamous carcinoma and small cell cancer). Particularly, NB achieves low FPR across most feature selection methods, especially with IG-DE and IGR-DE. However, it struggles with higher FNR in Adenocarcinoma using CR-DE, impacting sensitivity for this class. DT maintains low FPR, with IGR-DE showing excellent results, though FNR for Adenocarcinoma remains moderately high across methods. IGR-DE provides the best overall balance for DT performance. RF achieves low FPR with IG-DE but shows slightly higher FPR for Normal tissue with methods like CHSQR-DE. FNR is highly variable, with challenges in Adenocarcinoma and Pulmonary Carcinoid depending on the feature selection method. KNN has consistently low FPR, particularly with IG-DE, though CHSQR-DE raises FPR for Normal tissue. FNR is more variable, with Pulmonary Carcinoid being challenging in certain feature selection methods. SVM shows high FPR in Normal tissue using IG-DE but maintains low FPR with other methods like IGR-DE. FNR is generally low, though Pulmonary Carcinoid can be challenging for GIND-DE and RELIEF-DE. We can conclude that NB and DT with IG-DE or IGR-DE feature selection offer the best balance of low FPR and FNR across most cancer types, ensuring minimal misclassifications. So, NB and DT with IG-DE or IGR-DE can be considered as effective options for this lung cancer dataset.

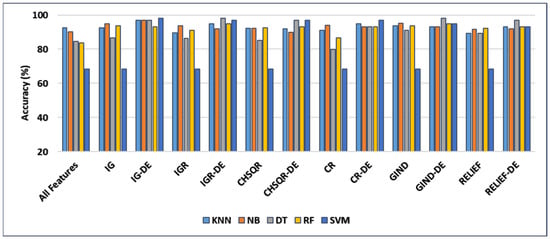

4.5. Results Analysis of Breast Dataset

Figure 5 shows that adding IG improved the classification accuracy of KNN (56.67%), NB (48.45%), DT (53.73%), RF (63.92%), and SVM (52.58%) to 71.33%, 55.67%, 67.01%, 86.6%, and 74.23% respectively. Furthermore, using the IGR filter method increased the accuracy results to 64.95%, 54.67%, 61.86%, 87.63%, and 69.07%, respectively. Additionally, in the case of CHSQR, the observed enhancement in comparison to all feature performances reached 72.16%, 72.33%, 68.04%, 81.44%, and 73.20%, respectively. In the case of CR (correlation), enhancement reached 76.29%, 77.32%, 67.01%, 76.29%, and 74.23%, respectively. In the case of GIND (Gini Index), enhancement in comparison to all feature performance reached 73.2%, 56.64%, 70.1%, 70.10%, 82.47%, and 78.35%, respectively. In the case of GIND (Gini Index), enhancement in comparison to all feature performance reached 73.2%, 56.64%, 70.1%, 70.10%, 82.47%, and 78.35%, respectively.

Figure 5.

Comparison of accuracy results on the Breast dataset using all features, filter approaches, and the suggested hybrid filter-DE method.

The relief filter also showed enhancement in the accuracy results and reached 74.23%, 77.32%, 63.92%, 80.41%, and 76.29%. By applying the filter method, the highest accuracy achieved was 87.63% with IGR-RF, and the lowest accuracy achieved was 54.67% with IGR-NB. The proposed hybrid IG-DE method improved the accuracy of KNN, NB, DT, RF, and SVM even more, to 80%, 53%, 87%, 70%, and 73%, respectively. The hybrid IGR-DE method improved them even more, to 70%, 53%, 87%, 70%, and 67%, respectively. The CHSQR-DE method enhanced further the classification accuracies of KNN, NB, DT, RF, and SVM to 77%, 70%, 93%, 80%, and 73%, respectively. By applying the hybrid CR-DE method, KNN, NB, DT, RF, and SVM results were enhanced to 83%, 70%, 87%, 70%, and 70%, respectively. Using the hybrid GIND-DE method, the accuracy of KNN, NB, DT, RF, and SVM improved to 80%, 60%, 87%, 77%, and 73%, respectively. Using the hybrid RELIEF-DE method, the accuracy also improved to 80%, 77%, 90%, 77%, and 73%, respectively. The highest accuracy (93%) enhancement was observed with CHSQR-DE with the DT classifier, while the lowest enhancement (53%) was observed with IG-DE and IGR-DE using the NB classifier. In terms of accuracy, the CHSQR-DE with the DT classifier achieved an optimal performance of 93% using the top 5% of ranked features on this Breast dataset.

Table 8 shows the performance measures, and the number of genes identified by the proposed methodology on the Breast dataset using all features, filters, and the proposed hybrid filter-DE methods. Table 8 clearly reveals that the machine learning algorithms performed significantly better in terms of accuracy, precision, recall, and F-measure after applying the proposed hybrid filter-DE methods than when using all features or only filter methods. Further, Table 8 shows that the suggested hybrid CHSQR-DE with DT outperformed other hybrid approaches in terms of accuracy, precision, and F-measure 93%, 94%, 93% respectively.

Table 8.

Classifier performance comparison on the Breast dataset using all features, filters, and the proposed hybrid filter-DE methods. Background color: show the lowest number of features.

Furthermore, the relief RELIEF-DE with the DT classifier obtained accuracy, precision, and F-measures of 90%, 90%, and 90%, respectively. In terms of selected features, as shown in Table 8. CHSQR-DE with DT showed the best performance using the least number of features (615) out of a total of 24,481 features. In conclusion, Chi-Square with DE optimization and a DT classifier outperformed all other filter and hybrid methods. In addition, Table 8 indicates that the filter feature selection strategy reduced the number of relevant features in the BREAST datasets from 24,481 features to 1224 features. Further, the application of suggested hybrid CHSQR-DE-DT, CR-DT-KNN, CHSQR-DE-RF, and RELIEF-DE-NB techniques decreased the 1224 features to an average of 615, 583, 619 and 596 significant features, respectively. The smallest number of selected features are highlighted in grey color.

4.6. Analysis of Computational Time Complexity

To study computational complexity, big notation was used in this study to analyze the computational time complexity of the proposed method. The computational times for Information gain (IG), information gain ratio (IGR), correlation (CR), Gini index (GIND), Relief (RELIEF) and Chi-squared (CHSQR) are , , , , , and , respectively. Here, represents the number of features, denotes the total number of samples in the dataset, represents number of samples chosen to evaluate the features in Relief (RELIEF).

The time complexity of one generation in DE involves the time complexity of the mutation process , the crossover process , the fitness evaluation and selection process . The combined complexity per generation is . Thus, the total time complexity of DE for all generations is , where is the total number of generations, is the population size, is the dimensionality of the problem (number of features reduced by filter methods), and is the time complexity of evaluating the fitness function.

The overall time complexity of proposed hybrid approach involves calculating the computational time of filter algorithms used to rank the features and then reduce the dimensionality of microarray datasets, and the computational time of the DE optimization algorithm used in the second phase to identify the best features. Therefore, the total computational time complexity of the proposed hybrid filter-DE feature selection approaches can be listed in the following Table 9.

Table 9.

The computational time complexity of the proposed methods.

4.7. Comparison of Proposed Hybrid Filter-DE with Previous Works

This section presents a comparison between the proposed hybrid filter-DE and previous research works that utilized hybrid feature selection techniques on microarray datasets.

This study aimed to reduce the dimensionality of four microarray datasets: Lung, CNS, Brain, and Breast. Hameed et al. [35] proposed using PCC-GA and PCC-BPSO approaches in microarray datasets to integrate Pearson’s Correlation Coefficient (PCC) with GA or BPSO. Almutiri et al. [29] enhanced cancer classification using fusion-based feature selection on four microarray datasets. In one recent work, the authors [17] used the hybrid feature selection approach to evaluate these four microarray datasets. They combined the Gini index (GI) and support vector machines (SVMs) with recursive feature elimination (RFE).

Recently, Ali et al. [23] employed hybrid GA-based feature selection on the same four microarray datasets used in this study, integrating genetic algorithm with filter methods. Table 10 shows the accuracy comparison of the proposed hybrid filter-DE against previous research works. The proposed hybrid filter-DE feature selection approaches were compared to related studies using the same datasets. Table 10 reveals that the proposed approaches outperformed most of the previous works on most of the datasets used in this study. For Brain dataset, it is evident from Table 10 that the suggested hybrid filter-DE feature selection approaches with NB performed well with 100% accuracy. Furthermore, most of the proposed hybrid filter-DE methods with DT achieved 100% classification accuracy. Similarly, the classification accuracy results accomplished by previous work [23] with RF were competitive with our proposed methods. For CNS dataset, the classification accuracy results of most of the proposed hybrid filter-DE methods were better than accuracy results achieved by other works. Particularly, Table 10 reveals that the proposed IG-DE, CHSQR-DE, CR-DE, GIND-DE, and RELIEF-DE with NB for CNS dataset performed well with 100% accuracy. For Lung dataset, the suggested IGR-DE and GIND-DE with DT performed well with 98% accuracy. The IG-GA [23] and PCC-BPSO [35] with NB also performed comparable performance about 98% classification accuracy. For Breast dataset, it is evident from Table 10 that the proposed CHSQR-DE with the DT and IGR-GA [23] with RF outperformed all other approaches with 93% accuracy.

Table 10.

The accuracy comparison of the proposed hybrid filter-DE methods with the previous studies.

To show the improvement achieved, the improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods is calculated using the following Formula (28):

where is the improvement percentage of accuracy, is the accuracy achieved with features selected by the proposed hybrid filter-DE methods, and the accuracy achieved with all features.

Table 11 shows the comparison of the improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods and the previous studies. For Brain dataset, the average improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods was up to 42.47%, while the previous works achieved the average improvement percentage of accuracy up to 41.43%. For CNS, the average improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods was up to 57.45% while the average improvement percentage of previous works was up to 53.66%. For LUNG, the average improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods was up to 16.28%, while the average improvement percentage for previous works was up to 17.53%. For BREAST, the average improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods was up to 43.57%, while the average improvement percentage for previous works is up to 61.70%. We conclude the proposed hybrid filter-DE methods accomplished better improvement percentage of accuracy than the previous works in Brain and CNS datasets. For Lung dataset, the improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods was competitive to the improvement percentage of accuracy achieved by the previous work. For BREAST dataset, the improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods was less than the improvement percentage of accuracy achieved by the previous works.

Table 11.

The comparison of the improvement percentage of accuracy achieved by the proposed hybrid filter-DE methods and the previous studies.

4.8. Statistical Significance Testing

We have performed the Wilcoxon signed-rank tests, assuming the null hypothesis (H0) and alternative hypothesis (H1). The null hypothesis states that there is no difference between the accuracies of the pairs, while the alternative hypothesis states that there is a significant difference between the accuracies of the pairs. The Wilcoxon signed-rank test yields two values: the test statistic and the p-value. The p-value represents the likelihood of obtaining a result that is at least as extreme as the observed outcome under the assumption that the null hypothesis holds. The statistic uses ranks of differences between paired data. More minor statistics indicate more substantial evidence of paired sample differences. If p ≤ 0.05, there is significant evidence to reject the null hypothesis, and it shows significant differences between samples. If p > 0.05, we do not reject the null hypothesis, indicating no significant difference in performance and the two methods perform competitively.

4.8.1. Statistical Significance Testing of Proposed Methods Compared with Each Other

From Table 12, we observed that the p-values of IG-DE vs. IGR-DE (p = 0.07), IG-DE vs. CR-DE (p = 0.08), CR-DE vs. GIND-DE (p = 0.16) and CR-DE vs. RELIEF-DE (p = 0.12) found very close to the significant level (0.05). This marginal difference validates that IG-DE and CR-DE performed in almost significant ways in competition.

Table 12.

Comparison of the p-value of the proposed methods with each other.

The comparisons among IG-DE vs. CHSQR-DE, IG-DE vs. GIND-DE, IG-DE vs. RELIEF-DE, IGR-DE vs. CR-DE, CHSQR-DE vs. CR-DE, CHSQR-DE vs. GIND-DE, CHSQR-DE vs. RELIEF-DE, GIND-DE vs. RELIEF-DE were having high p-values (mostly > 0.05). For example, the comparison between IG-DE and CHSQR-DE yielded a p-value of 0.90, while the comparison between GIND-DE and RELIEF-DE produced a p-value of 0.73. No statistically significant differences exist in performances for IG-DE vs. GIND-DE, IG-DE vs. RELIEF-DE, IGR-DE vs. CR-DE, CHSQR-DE vs. CR-DE, CHSQR-DE vs. GIND-DE and CHSQR-DE vs. RELIEF-DE pairs. The high p-values validate that the null hypothesis (no difference in the method performance) cannot be rejected. This concludes that the performances of IG-DE vs. CHSQR-DE, IG-DE vs. GIND-DE, IG-DE vs. RELIEF-DE, IGR-DE vs. CR-DE, CHSQR-DE vs. CR-DE, CHSQR-DE vs. GIND-DE, CHSQR-DE vs. RELIEF-DE, GIND-DE vs. RELIEF-DE were not distinguishably different from each other.

The results revealed a promising future for feature selection methodologies, with IGR-DE emerging as a frontrunner, outperforming GIND-DE, RELIEF-DE, and CHSQR-DE. Other methods, such as IG-DE, CHSQR-DE, CR-DE, and GIND-DE, RELIEF-DE, demonstrated similar performance, suggesting their potential interchangeability without significant differences in accuracy.

4.8.2. Statistical Significance Testing of Proposed Methods Against Other Existing Works

As shown in Table 13, the Wilcoxon signed-rank test showed that IGR-DE outperformed PCC-GA [35] (p = 0.01) and GI-SVM-RFE [26] (p = 0.006). IGR-DE outperforms GI-SVM-RFE [26] due to its lower p-values, specifically 0.006. The lower p-value (0.01) indicates that IGR-DE has better performance than PCC-GA [35]. The lower p-values (0.006 and 0.01) support the rejection of the null hypothesis, which states that there is no significant difference between the compared methods. These findings support our proposed IGR-DE approach. The performance of IGR-DE differs significantly from GI-SVM-RFE [26] and PCC-GA [35]. IGR-DE outperformed GI-SVM-RFE [26] and PCC-GA [35] due to this considerable difference.

Table 13.

Comparison of the p-value of the proposed methods against previous works.

Despite the slight difference in significance level, IGR-DE demonstrated a performance that was on par with other methods such as IG-GA [23] (p = 0.13), Fusion [29] (p = 0.10), and PCC-BPSO [35] (p = 0.11). The p-value is larger than 0.05 indicates that the proposed methods are competitive with previous research works. IGR-DE was found to be competitive with IGR-GA [23] (p = 1.0) and CS-GA [23] (p = 0.40), although not significantly different.

The Wilcoxon signed-rank test revealed that IG-DE performed comparably to PCC-GA [35] (0.09), PCC-BPSO [35] (0.11), and GI-SVM-RFE [26] (0.11) due to a minor variation in significant p-value. Even though IG-DE showed no significant difference from IG-GA [23] (p = 0.75), Fusion [29] (p = 0.68), IGR-GA [23] (p = 0.59), and CS-GA [23] (p = 0.98), the p-value is greater than 0.05 that implies the proposed approaches perform competitively to the existing works.