Abstract

The periodic rotation of the Ocean Color and Temperature Scanner (OCTS) introduces jitter errors in the HaiYang-3A (HY-3A) satellite, leading to internal geometric distortion in optical imagery and significant registration errors in multispectral images. These issues severely reduce the application value of the optical data. To achieve near real-time compensation, a novel jitter error estimation and correction method based on multi-source attitude data fusion is proposed in this paper. By fusing the measurement data from star sensors and gyroscopes, satellite attitude parameters containing jitter errors are precisely resolved. The jitter component of the attitude parameters is extracted using a fitting method with the optimal sliding window. Then, the jitter error model is established using the least squares solution and spectral characteristics. Subsequently, using the imaging geometric model and stable resampling, the optical remote sensing image with jitter distortion is corrected. Experimental results reveal a jitter frequency of 0.187 Hz, matching the OCTS rotation period, with yaw, roll, and pitch amplitudes quantified as 0.905″, 0.468″, and 1.668″, respectively. The registration accuracy of the multispectral images from the Coastal Zone Imager improved from 0.568 to 0.350 pixels. The time complexity is low owing to the single-layer linear traversal structure. The proposed method can achieve on-orbit near real-time processing and provide accurate attitude parameters for on-orbit geometric processing of optical satellite image data.

1. Introduction

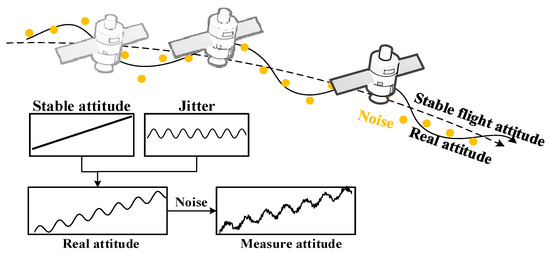

The HaiYang-3A (HY-3A) satellite (orbital altitude of 782.121 km), a new-generation ocean observation remote sensing satellite developed by China, was successfully launched on 16 November 2023. To improve the spatial and spectral resolution of ocean observation, three instruments are installed on HY-3A: the Ocean Color and Temperature Scanner (OCTS) with a ground sample distance (GSD) of 500 m, the Coastal Zone Imager (CZI) with a GSD of 5/20 m, and the Medium Resolution Spectral Imager (MRSI) with a GSD of 100/200 m, as detailed in Table 1 [1,2,3,4]. The primary mission of HY-3A is to conduct large-scale, continuous, and dynamic monitoring of ocean color, sea surface temperature, and sea ice across global marine domains. To meet the requirements of geometric positioning and image application, star sensors and a gyroscope are installed on HY-3A to determine high-precision attitude (Table 1). The rotating scanning imaging technology implemented in OCTS completes a 360° rotation within 5.24 s, thereby enlarging the imaging coverage. However, the rotating mechanism induces small periodic attitude vibrations of the satellite platform, a phenomenon known as satellite jitter (Figure 1). The imaging instruments of HY-3A use multiple non-collinear Time Delay Integration Charge-Coupled Device (TDI CCD) detectors, which expose the same target multiple times to improve image quality and the signal-to-noise ratio. Because the detectors are non-collinear, the jitter causes internal geometric distortion in optical imagery and produces significant multispectral image registration errors. These distortions substantially degrade the application value of optical images [5,6,7,8,9,10,11].

Table 1.

Parameter information for HY-3A.

Figure 1.

Schematic diagram of satellite jitter.

Currently, satellite jitter has been detected in multiple satellites. Teshima and Iwasaki identified a 1.5 Hz jitter error with 0.2-pixel amplitude in the cross-track direction of ASTER satellite data [12]. Ayoub et al. reported a jitter error with a frequency of 1.4 Hz and amplitude of 3 pixels in the HiRise satellite and two jitter errors with a frequency of 1 Hz and amplitude of 5 pixels and a frequency of 4.3 Hz and amplitude of 0.2 pixels in the QuickBird satellite [13,14]. Takaku et al. extracted 6–7 Hz along-track jitter error with 1-pixel amplitude in ALOS satellite data [15]. For the Pleiades-HR satellite, Amberg et al. detected a cross-track jitter at 71.5 Hz (0.18 pixels) and an along-track jitter at 71 Hz (0.25 pixels) [16].

There are two types of methods for correcting remote sensing satellite jitter errors: jitter correction based on image parallax error and jitter correction based on deep learning with geometric information. Based on the characteristics of the ZiYuan-3 satellite, Tong et al. proposed a jitter error detection method for the three-line array stereo images [17]. The dense tie points in stereo images were extracted using the geometrically constrained cross-correlation, normalized cross-correlation, and least squares matching. Based on the back-projection residuals of the tie points, the relative image distortion of stereo pairs was examined, and the jitter error was separated. The internal geometric distortion of the nadir image decreased from 1.41 to 0.41 pixels. Sun et al. detected jitter error using the parallax error of the tie points on the adjacent overlapping areas of the original panchromatic image [18]. The space variant blurring model and the viewing angles correction method were proposed to compensate for radiometric and geometric deteriorations, respectively. The geometric positioning accuracy of Mapping Satellite-1 improved from 117.252 m to 84.236 m. Wang et al. used the parallax error between multispectral images for jitter error detection and resampled the images based on a periodic disparity model to correct jitter error [19]. The internal geometric distortion of multispectral images of the ZiYuan-3 satellite decreased from 0.5 to 0.17 pixels. Pan et al. further derived the quantitative relationship between the jitter displacement and relative registration error obtained from parallax images [20]. Zhu further incorporated the relative internal error into the multispectral image parallax to eliminate geometric distortions in the multispectral sensor caused by lens distortion and improve the accuracy of jitter error separation [21,22]. Compared with the conventional method, the detection accuracy was improved by approximately 30%. Liu et al. proposed a satellite jitter compensation method based on dynamic point spread function estimation and iterative image restoration considering the effect of terrain relief and time delay integration [23]. To address the inner distortion caused by the different integration stages of TDI CCD, Zhu et al. proposed a rigorous parallax observation model considering multistage integration time and presented a jitter distortion correction method for panchromatic and multispectral images [24]. Experimental results showed that the proposed method can effectively correct high-frequency jitter distortion in the GaoFen-9 satellite. However, jitter error detection based on image parallax relies heavily on the accuracy of image matching, which is time-consuming and sensitive to noise interference, so these methods lack real-time implementation capability.

Deep learning can autonomously learn the jitter error model and perform image correction using a single optical image. Zhang et al. proposed a deep learning architecture that automatically learns essential scene features from a single image to estimate the attitude jitter. The distorted images and the estimated attitude jitter vectors were then utilized to correct the images through interpolation and resampling. This approach significantly reduced image distortion in the YaoGan-26 satellite and unmanned aerial vehicle systems [25]. Wang et al. developed a satellite jitter correction method based on generative adversarial networks. Experimental results demonstrated that this network exhibits robust compensation effects on satellite jitter under different frequencies and amplitudes [26]. Nguyen et al. explored convolutional neural networks to identify distribution shifts and retrieve satellite attitude parameters from single input images. They further designed specialized unrolled neural networks to reconstruct optical images and correct jitter errors [27,28,29]. Their method enhanced both the signal-to-noise ratio and structural similarity index metrics. However, these methods require extensive training datasets and cannot ensure the internal geometric accuracy of the image.

To achieve near real-time compensation for the influence of jitter errors on HY-3A optical images, a jitter error estimation and image distortion correction method is proposed in this paper through multi-source attitude data fusion. Satellite attitude parameters containing jitter errors are derived by fusing absolute attitude direction and relative angular displacement measured from multi-source attitude sensors. Subsequently, the jitter error component is extracted using a sliding window fitting method, while measurement noise is further eliminated using a least squares-based error model. Finally, optical remote sensing images without distortion are generated by applying the stable resampling techniques with the imaging geometric model. The proposed method can correct the geometric distortion of CZI imagery with high accuracy and low time complexity.

The primary innovations of this paper are as follows:

- (1) Based on the characteristics of measurement noise and the mechanism of error influence, a multi-directional dynamic filtering strategy is proposed to obtain the satellite attitude parameters including jitter error directly, without image matching.

- (2) A stable attitude extraction and jitter separation method based on Fourier transform and sliding filtering is proposed to reconstruct the stable attitude and fluctuating attitude for jitter correction. Moreover, the optimal sliding fitting window is adaptively calculated to obtain the stable attitude for both short-strip and long-strip optical images.

2. Methodology

The proposed optical satellite jitter error correction method includes three key parts: attitude data fusion, jitter error extraction, and error correction. These are illustrated in Figure 2. The process begins with multi-directional fusion filtering of gyroscope and star sensor measurement data, in which different noise values are assigned to the multi-source attitude measurements to improve the fusion of the jitter error. Subsequently, an optimal sliding fitting window is determined based on the initial jitter frequency characteristics. This enables the accurate separation of the stable satellite attitude and jitter components from the fused attitude using sliding window analysis. Based on the spectral analysis of the extracted jitter errors, robust error modeling is performed with least squares optimization. Finally, the distortion of the optical image is corrected using the geometric model under stable imaging and the reconstructed attitude containing jitter error.

Figure 2.

Flowchart of optical satellite jitter error correction with multi-source attitude data fusion.

2.1. Multi-Source Attitude Data Fusion

The star sensor can measure the absolute attitude parameters of the satellite body coordinate system in the J2000 (Julian year 2000) inertial coordinate system. The amplitude of jitter is usually smaller than the measurement noise of the star sensor. Thus, the characteristics of satellite jitter error cannot be well represented in the measurement data from the star sensor. The gyroscope can measure the relative angular velocity parameters of the three axes in the satellite body coordinate system with higher measurement accuracy. However, relative angular displacement cannot directly indicate the directional changes of satellite body in J2000. Therefore, a dynamic fusion filter is proposed to obtain the satellite attitude parameter including jitter error.

2.1.1. Data Fusion of Star Sensor and Gyroscope

The extended Kalman filter (EKF) is applied for the fusion processing of star sensor and gyroscope data [30,31,32,33]. To reduce the dimensionality of the filtering state variables and avoid normalization issues, the quaternion vector component of the satellite’s absolute attitude and the constant drift of the gyroscope are adopted as the state variables, named as .

The system equation is shown as follows:

where represents the quaternion of the satellite body at time . represents the three-axis angular velocity of the satellite body measured by the gyroscope at time . is the constant drift. and are white noises with zero means.

Through discretization of the differential system equation, the equation becomes the following:

where T represents the filtering period.

Using the quaternion measured by the star sensor, the measurement equation is established:

where represents the quaternion measured by the star sensor. denotes the error quaternion.

For time , the state variables at time are predicted using the system equation and the estimated values of the satellite body quaternion and gyroscope constant drift (time update). Then, the predicted state variables are substituted into the measurement equation (measurement update). Finally, the gain matrix is calculated based on the difference between the predicted measurements and the actual measurements to obtain the optimal estimation of the state variables at time (optimal estimation). This process iterates over time, enabling continuous computation of optimal satellite attitude estimation in J2000.
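The time update described above can be illustrated with a minimal sketch. It assumes a scalar-first Hamilton quaternion that maps the body frame to J2000, a gyroscope rate held constant over one filtering period T, and a known drift estimate; the function names `quat_multiply` and `propagate` are hypothetical and are not taken from the paper. Only the prediction step is shown, not the full EKF.

```python
import numpy as np

def quat_multiply(p, q):
    """Hamilton product of two scalar-first quaternions [w, x, y, z]."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])

def propagate(q, omega_meas, drift, T):
    """Predict the attitude quaternion one filtering period ahead.

    omega_meas: gyroscope rate in the body frame (rad/s)
    drift:      current estimate of the gyroscope constant drift (rad/s)
    T:          filtering period (s)
    """
    omega = np.asarray(omega_meas, dtype=float) - np.asarray(drift, dtype=float)
    rate = np.linalg.norm(omega)
    angle = rate * T
    if angle < 1e-12:
        # First-order form for a vanishing rotation
        dq = np.array([1.0, *(0.5 * omega * T)])
    else:
        axis = omega / rate
        dq = np.array([np.cos(angle / 2.0), *(np.sin(angle / 2.0) * axis)])
    q_next = quat_multiply(q, dq)
    # Renormalize so rounding errors do not accumulate over many steps
    return q_next / np.linalg.norm(q_next)
```

In a full filter, this prediction would be followed by the measurement update with the star sensor quaternion, which corrects both the attitude and the drift estimate.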

2.1.2. Multi-Directional Filtering with Jitter Error

In data fusion, it is necessary to set the measurement noise values for the star sensor and gyroscope. When the star sensor noise is set to a large value and the gyroscope noise is set to a relatively small value, the constant drift estimation becomes inaccurate. Such fusion inaccuracies cause significant discrepancies between the fused attitude and true attitude as well as low geometric positioning accuracy of optical imagery [34]. Conversely, when the star sensor noise is set to a small value relative to the gyroscope noise, the fused attitude fails to capture satellite jitter error characteristics. Therefore, a multi-directional filtering strategy is proposed.

The first filtering employs a forward filtering process with time increments. Based on the testing results, the star sensor noise is set to a small value to improve the estimation accuracy of the gyroscope’s constant drift, as shown in the following equation:

where represents the noise value of the star sensor in the first filtering. represents the noise value of the gyroscope. and represent the measurement random errors of the star sensor and gyroscope, respectively, which can be obtained using sensor specifications.

The second filtering is a time-decreasing backward filtering process with the estimation result in the first filtering. The gyroscope noise is set to a smaller value to improve the fusion effect of satellite jitter error.

Here, represents the noise value of the star sensor in the second filtering. represents the noise value of the gyroscope.

Similar to the first filtering, the third filtering is a forward filtering method. Moreover, the noise values of the star sensor and gyroscope are the same as the second filtering to improve the fusion effect of satellite jitter error. The fused results of the third filtering are used as the optimal results for the final satellite attitude estimation.
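The forward, backward, forward sequence can be sketched on a single axis with a two-state Kalman filter (angle and constant drift). This is a simplified stand-in for the quaternion EKF, not the authors' implementation. The scale factors `star_scale` and `gyro_scale` are hypothetical placeholders for the noise settings of each pass, and restarting the covariance at the beginning of every pass is a simplifying assumption.

```python
import numpy as np

def kalman_pass(star, rate, T, r_star, q_gyro, x0):
    """One pass of a two-state (angle, drift) Kalman filter.

    star: N angle measurements; rate: N-1 mean gyro rates between samples.
    r_star, q_gyro: noise variances assumed by the filter for this pass.
    """
    F = np.array([[1.0, -T], [0.0, 1.0]])
    Q = np.diag([q_gyro * T**2, 1e-12])
    x = np.array(x0, dtype=float)
    P = np.diag([r_star, 1.0])  # covariance restarted each pass (assumption)
    angles = np.empty(len(star))
    for k in range(len(star)):
        if k > 0:
            # Time update: integrate the drift-corrected gyro rate
            x = np.array([x[0] + (rate[k - 1] - x[1]) * T, x[1]])
            P = F @ P @ F.T + Q
        # Measurement update with the star sensor angle (H = [1, 0])
        K = P[:, 0] / (P[0, 0] + r_star)
        x = x + K * (star[k] - x[0])
        P = P - np.outer(K, P[0, :])
        angles[k] = x[0]
    return angles, x

def three_pass_fusion(star, rate, T, sigma_star, sigma_gyro,
                      star_scale=0.1, gyro_scale=0.1):
    """Forward, backward, forward filtering with pass-specific noise values."""
    # Pass 1 (forward): small star sensor noise to estimate the drift
    _, x1 = kalman_pass(star, rate, T, (star_scale * sigma_star) ** 2,
                        sigma_gyro ** 2, [star[0], 0.0])
    # Pass 2 (backward): time reversal flips the sign of rate and drift;
    # smaller gyro noise so the gyro-sensed jitter is preserved
    _, x2 = kalman_pass(star[::-1], -rate[::-1], T, sigma_star ** 2,
                        (gyro_scale * sigma_gyro) ** 2, [x1[0], -x1[1]])
    # Pass 3 (forward): same noise values as pass 2, started from its result
    angles, x3 = kalman_pass(star, rate, T, sigma_star ** 2,
                             (gyro_scale * sigma_gyro) ** 2, [x2[0], -x2[1]])
    return angles, x3[1]
```

Each pass starts from the state estimated by the previous one, so the drift found in the first pass is already in place when the later passes give more weight to the gyroscope.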

2.2. Satellite Jitter Error Extraction

2.2.1. Attitude Transformation

After precise attitude processing, the quaternion from the satellite body coordinate system to the J2000 can be obtained. Although the quaternion parameter has advantages such as being free of singularity and having high computational efficiency, it lacks physical significance and is unable to characterize the jitter. Therefore, the fusion attitude is converted into the Euler angles representing the attitude from the satellite body coordinate system to the orbital coordinate system. The conversion involves the satellite body coordinate system , the orbital coordinate system , and J2000 coordinate system , as shown in Figure 3.

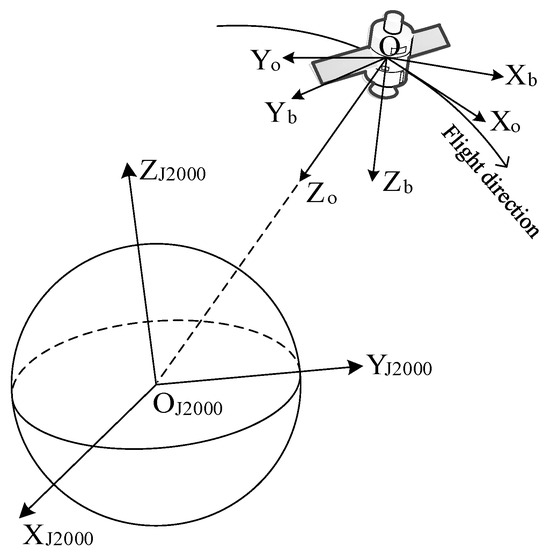

Figure 3.

Optical satellite coordinate system relationship.

Based on the orbit measurement data, the satellite position and velocity at time can be obtained. The attitude rotation matrix from to can be derived using the following equation [35]:

The rotation matrix from to can be calculated as follows:

where represents the rotation matrix from to and is obtained using the fusion attitude.

Then, the fused Euler angles (yaw angle , roll angle , pitch angle , unit: degree) related to can be calculated.
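The coordinate conversion can be sketched as follows. The orbital frame convention (Z toward nadir, Y along the negative orbit normal, X completing the right-handed triad) and the Z-Y-X (yaw, pitch, roll) rotation sequence are assumptions made for this illustration, and the function names are hypothetical. The paper's exact conventions may differ.

```python
import numpy as np

def orbit_to_j2000(pos, vel):
    """Rotation matrix from the orbital frame to J2000 from position and velocity."""
    pos = np.asarray(pos, dtype=float)
    vel = np.asarray(vel, dtype=float)
    z = -pos / np.linalg.norm(pos)   # Z axis points to nadir
    y = np.cross(z, vel)
    y /= np.linalg.norm(y)           # Y axis along the negative orbit normal
    x = np.cross(y, z)               # X axis completes the right-handed triad
    return np.column_stack([x, y, z])

def body_to_orbit(r_body_to_j2000, pos, vel):
    """Rotation matrix from the body frame to the orbital frame."""
    return orbit_to_j2000(pos, vel).T @ r_body_to_j2000

def euler_zyx_deg(r):
    """Yaw, roll, and pitch in degrees, assuming r = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    yaw = np.arctan2(r[1, 0], r[0, 0])
    pitch = -np.arcsin(r[2, 0])
    roll = np.arctan2(r[2, 1], r[2, 2])
    return np.degrees([yaw, roll, pitch])
```

Here `r_body_to_j2000` would be built from the fused quaternion, and `pos` and `vel` come from the orbit measurement data at the same epoch.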

2.2.2. Jitter Error Extraction

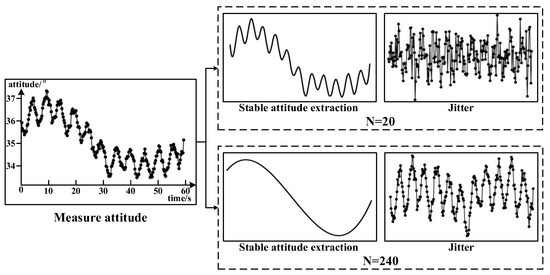

The satellite attitude can be regarded as the composition of the stable attitude and jitter error. To correct the jitter error, the satellite stable flight attitude (small numerical changes without periodic fluctuations) should be separated first. As shown in Figure 4, when the stable attitude extraction window is too small, some jitter data are incorrectly absorbed into the stable attitude, resulting in jitter error data without periodic characteristics. Conversely, the details of the stable flight attitude cannot be expressed well with a large extraction window. To address this problem, a stable attitude extraction and jitter separation method based on Fourier transform and sliding filtering is proposed.

Figure 4.

Satellite jitter error extraction under different windows.

First, the fusion Euler angles are fitted using the cubic polynomial model, as shown in the following equation:

where represents the fusion roll angle at observation time . denotes the data index, and n is the total number. are the coefficient of the polynomial fitting model and solved using the least square method. represents the roll angle of the initial stable attitude.

Meanwhile, the initial satellite jitter error (unit: arcsecond) is obtained. Then, the Fourier transform is applied to obtain the spectral characteristics of the initial jitter error, as shown in the following equation:

where (arcsecond), (Hz), and (degree) represent amplitude, frequency, and phase, respectively.

Then, the amplitude of jitter error is converted into the amplitude of image distortion:

where (mm) is the focal length of the camera. (mm) is the detector size corresponding to one pixel of the image.

With the values of and the threshold of 0.1 pixels, the sliding fitting window for stable attitude is calculated using the following equation:

where represents the jitter frequency that causes image distortion greater than 0.1 pixels. is the sampling frequency of fused attitude.

Finally, according to Equations (13)–(15), the stable attitude at time is calculated using the fused attitude data between time and . Similarly, by applying the above processing to both the yaw and pitch angles of the satellite, the stable attitude of the satellite can be extracted. Then, deducting the stable attitude from the fusion attitude, the jitter errors along roll angle , pitch angle , and yaw angle can be obtained.
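The extraction chain can be sketched in a few NumPy functions: global cubic fit, spectrum of the residual, conversion to pixels, and sliding cubic fit. All function names are hypothetical. The window length is passed in as a parameter, and the pixel conversion uses the pinhole relation focal length times tan(amplitude) divided by pixel size. The paper's own window and conversion equations are not reproduced here, so both forms are assumptions.

```python
import numpy as np

ARCSEC_TO_RAD = np.pi / (180.0 * 3600.0)

def cubic_detrend(t, angle):
    """Remove a global cubic polynomial; the residual is the initial jitter."""
    tc = t - t.mean()
    coeffs = np.polyfit(tc, angle, 3)
    return angle - np.polyval(coeffs, tc)

def dominant_component(residual, fs):
    """Frequency (Hz) and amplitude of the strongest non-DC spectral line."""
    spectrum = np.fft.rfft(residual)
    freqs = np.fft.rfftfreq(len(residual), d=1.0 / fs)
    amps = 2.0 * np.abs(spectrum) / len(residual)
    k = 1 + np.argmax(amps[1:])
    return freqs[k], amps[k]

def amplitude_to_pixels(amp_arcsec, focal_mm, pixel_mm):
    """Image displacement in pixels caused by an angular amplitude."""
    return focal_mm * np.tan(amp_arcsec * ARCSEC_TO_RAD) / pixel_mm

def sliding_stable_attitude(t, angle, window):
    """Stable attitude from a cubic fit over a window around each sample."""
    n = len(t)
    window = min(window, n)
    half = window // 2
    stable = np.empty(n)
    for i in range(n):
        # Keep the window length fixed by shifting it at the record edges
        lo = min(max(i - half, 0), n - window)
        tw = t[lo:lo + window] - t[i]
        # With time centered on t[i], the constant term is the fitted value
        stable[i] = np.polyfit(tw, angle[lo:lo + window], 3)[-1]
    return stable
```

A typical use is to estimate the jitter frequency from `cubic_detrend`, derive a window from it, and then subtract `sliding_stable_attitude` from the fused angle. A window shorter than a few jitter periods lets the cubic absorb part of the jitter, which is the effect illustrated in Figure 4.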

2.3. Satellite Jitter Error Modeling and Correction

2.3.1. Jitter Error Modeling with Spectrum Analysis

According to the characteristics of HY-3A jitter, the sine function is adopted to establish the jitter error model [36]. The jitter model of the roll angle is shown as follows:

where and represent the amplitude, frequency, and phase information of the sine model, respectively. is the index of the sine jitter models. is the number of sine functions and is determined by the number of satellite jitters that causes image distortion greater than 0.1 pixels.

The fitting coefficients are determined using linearization and the least squares method. Through a Taylor series expansion, the nonlinear equation is transformed into a linear equation in the correction values of each parameter, as expressed in the following equation:

where represent the initial values of each parameter. represent the correction values of each parameter.

Based on this linearized equation, the correction values are solved from the extracted jitter data and added to the current parameters. The iteration is then repeated with the updated parameters until the correction values of the model parameters fall below the specified threshold.
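The linearize-and-iterate procedure corresponds to a Gauss-Newton fit, sketched here for a single sine component. The function name `fit_sine`, the stopping tolerance, and the use of spectral estimates as initial values are assumptions for this illustration. A sum of several sine components would extend the Jacobian with three columns per component.

```python
import numpy as np

def fit_sine(t, y, amp0, freq0, phase0, tol=1e-10, max_iter=50):
    """Fit y ~ A * sin(2*pi*f*t + phi) by iterated linearized least squares."""
    p = np.array([amp0, freq0, phase0], dtype=float)
    for _ in range(max_iter):
        arg = 2.0 * np.pi * p[1] * t + p[2]
        resid = y - p[0] * np.sin(arg)
        # Partial derivatives of the model with respect to A, f, and phi
        jac = np.column_stack([
            np.sin(arg),
            2.0 * np.pi * t * p[0] * np.cos(arg),
            p[0] * np.cos(arg),
        ])
        # Least squares solution for the parameter corrections
        dp = np.linalg.lstsq(jac, resid, rcond=None)[0]
        p += dp
        if np.max(np.abs(dp)) < tol:
            break
    return p
```

The iteration only converges when the initial frequency is close to the true one, which is why starting from the spectral peak is a natural choice.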

2.3.2. Jitter Error Correction Based on Stable Resampling

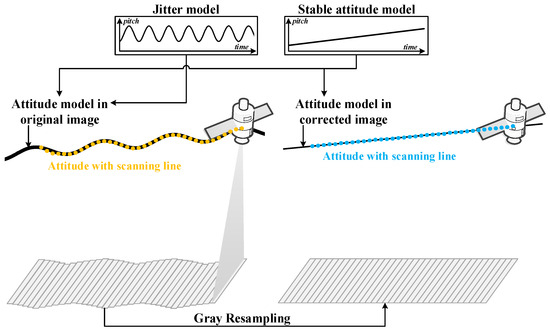

As shown in Figure 5, when affected by satellite jitter, linear scanning imaging will result in image misalignment and geometric distortion. However, when the satellite attitude is stable without jitter errors, optical images with high internal geometric accuracy and no distortion can be obtained. To correct the optical image of HY-3A, resampling using a geometric model and stable attitude is adopted [37,38,39].

Figure 5.

Satellite jitter error correction using resampling with a geometric model and stable attitude.

By applying the jitter error model and stable attitude model established in this paper, the attitude parameters corresponding to each scanning line of the optical image can be reconstructed using temporal interpolation at the imaging time of the scanning line. These reconstructed parameters are related to the original distorted optical image. Thus, the rigorous geometric positioning model for the distorted image can be formulated as follows:

where are the object coordinates. are the satellite position in WGS84 and are calculated using orbit position data. is the transform matrix from the J2000 conventional inertial system to WGS84. is the transform matrix from the satellite body coordinate system to the J2000 and is calculated using the reconstructed attitude data. is the transform matrix from the camera coordinate system to the body coordinate system and is extracted with the camera parameter. are the coordinates in the camera system.

Furthermore, using the stable attitude model, stable attitude parameters corresponding to each scanning line of the corrected optical image can be reconstructed. Based on the reconstructed stable attitude and orbit data, a rational function model of the corrected image is constructed:

where and represent the row and column numbers of the corrected image, respectively. are the geodetic coordinates of the object point.

For a pixel on the original distorted image, the coordinates of the corresponding object point can be calculated using Equation (28) and the digital elevation model. Then, based on Equation (29), the coordinates on the corrected image corresponding to can be obtained. Similarly, the coordinates of all points of the original distorted image on the corrected optical image can be obtained. Finally, the intensity of each pixel in the corrected image can be calculated using intensity interpolation and the coordinate relationship between the distorted image and corrected image. Based on the reconstructed stable attitude and fluctuating attitude, the corrected image without jitter error is obtained using the resampling method.
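The resampling idea can be shown with a deliberately simplified one-dimensional sketch. Instead of the rigorous geometric model, the rational function model, and the digital elevation model, it assumes that jitter only shifts each scan line along the track by a known number of pixels. The function name `resample_along_track` and the linear interpolation are choices made for this illustration, not the paper's procedure.

```python
import numpy as np

def resample_along_track(distorted, shift_px):
    """Resample scan lines onto a regular along-track grid.

    distorted: 2-D image array (lines x columns)
    shift_px:  along-track displacement of each line caused by jitter (pixels)
    """
    rows = np.arange(distorted.shape[0], dtype=float)
    # Position that each original line actually imaged, in stable line units
    ground = rows + shift_px
    if np.any(np.diff(ground) <= 0):
        raise ValueError("line positions must increase monotonically")
    corrected = np.empty(distorted.shape, dtype=float)
    for c in range(distorted.shape[1]):
        # Intensity interpolation from the distorted lines to the regular grid
        corrected[:, c] = np.interp(rows, ground, distorted[:, c])
    return corrected
```

In the full method the mapping between distorted and corrected coordinates is two-dimensional and comes from the two geometric models, but the final intensity interpolation plays the same role as `np.interp` here.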

3. Experimental Results

3.1. Experimental Data

To verify the accuracy of the method proposed in this paper, multispectral images from the CZI acquired at different times and over different regions were selected. Table 2 provides the specific information of the experimental data. To evaluate the effectiveness of the proposed method, the registration error between the second band (B2) and sixth band (B6) is calculated.

Table 2.

Experimental data information.

3.2. Accuracy Analysis of Jitter Error Model

3.2.1. Jitter Error Fusion Analysis

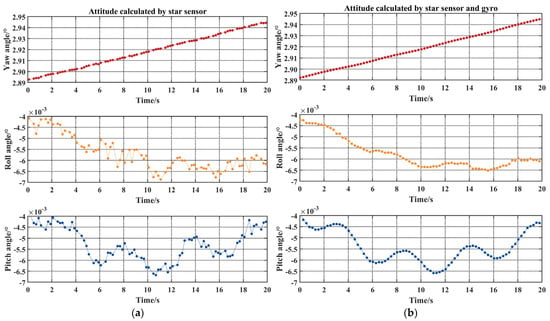

Figure 6a illustrates the attitude parameters from the satellite body coordinate system to the orbital coordinate system determined using the star sensors. Figure 6b shows the attitude parameters determined using the proposed fusion method with the star sensor and gyroscope. Given the control requirements for the yaw angle in linear detector imaging, the yaw angle gradually increases from 2.89° to 2.945°, and this trend masks the jitter error. In contrast, the changes in roll and pitch angles remain minimal (10″ over 20 s), so jitter errors become visible, especially in the pitch angle. However, the random fluctuations caused by the star sensor measurement noise obscure the periodic characteristics of jitter errors. Using the proposed fusion method, the random noise in both pitch and roll angles is reduced, enabling a clear manifestation of the periodic characteristics of jitter errors. This demonstrates that the fusion method effectively reduces the impact of star sensor noise and accurately represents the satellite jitter error.

Figure 6.

Satellite attitude parameters: (a) Euler angles determined by star sensors, (b) Euler angles determined by star sensors and gyroscope fusion.

Furthermore, the frequency and amplitude of jitter errors are analyzed, as shown in Figure 7. A significant jitter error occurs at a frequency of 0.187 Hz, which corresponds to the rotation period of the OCTS. This indicates that the periodic rotation of the OCTS constitutes the primary factor causing HY-3A satellite jitter. The corresponding amplitudes of yaw, roll, and pitch angles are 0.905″, 0.468″, and 1.668″, respectively. The larger amplitude observed in the pitch angle compared to those of yaw and roll angles results in greater along-track distortion than cross-track distortion. As demonstrated in Figure 7, for data within the 0.3–2 Hz frequency range, the amplitudes extracted by the star sensor exceed those obtained through the star sensor and gyroscope fusion. The proposed method effectively reduces the impact of random measurement noise in the star sensor. Additionally, the amplitudes at 0.187 Hz also change after star sensor and gyroscope fusion. With the random noise removed, the jitter characteristics are represented more precisely.

Figure 7.

Frequency and amplitude of jitter errors: (a) extracted by the star sensor, (b) extracted by star sensors and gyroscope fusion.

The fitting residuals were analyzed following initial stable attitude extraction, as presented in Table 3. The residuals of the star sensor attitude range from −3″ to 3″, with corresponding root mean square errors (RMS) of 1.018″ in yaw, 1.064″ in roll, and 1.377″ in pitch, corresponding to a cross-track geometric error of 4.035 m and an along-track geometric error of 5.221 m for an orbital altitude of 782.121 km. These values are consistent with the measurement accuracy of the star sensor optical axis (5″, 3σ). The RMS errors of yaw, roll, and pitch angles obtained from the fused star sensor and gyroscope attitude are 0.706″, 0.440″, and 1.264″, respectively. These results demonstrate that the fusion method significantly reduces the impact of the star sensor’s random errors.
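The conversion from attitude RMS to ground error quoted above can be checked with a short calculation. It assumes a nadir view and flat geometry, so the ground error is the orbital altitude times the tangent of the angular error, with roll mapped to cross-track and pitch to along-track. The function name `ground_error_m` is hypothetical.

```python
import math

ARCSEC_TO_RAD = math.pi / (180.0 * 3600.0)

def ground_error_m(angle_arcsec, altitude_km):
    """Ground displacement in meters caused by an attitude error at nadir."""
    return altitude_km * 1000.0 * math.tan(angle_arcsec * ARCSEC_TO_RAD)

# Star sensor RMS residuals at the HY-3A altitude of 782.121 km
cross_track = ground_error_m(1.064, 782.121)  # roll
along_track = ground_error_m(1.377, 782.121)  # pitch
```

Under these assumptions the two values come out at about 4.03 m and 5.22 m, in line with the figures stated in the text.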

Table 3.

Satellite attitude fitting residuals.

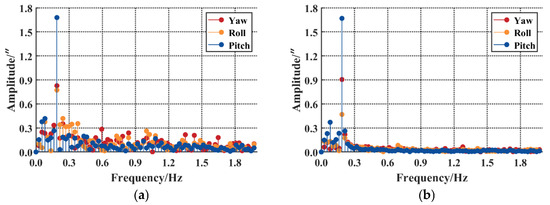

3.2.2. Jitter Error Extraction Analysis

To compare the applicability of the proposed method under different time ranges, attitude data within 35 s and 110 s are selected. For the attitude data within 35 s, the extracted jitter errors with the sliding fitting are similar to the overall fitting, as shown in Figure 8a,c. The satellite flight attitude is relatively stable and can be expressed using the overall cubic polynomial model in a relatively short period of time. For the attitude data within 110 s, extracted jitter errors with the sliding fitting reveal a certain periodicity, as shown in Figure 8b. However, using the overall fitting, the stable component cannot be removed from the fusion attitude, and the jitter error cannot be separated well, as shown in Figure 8d. The details of stable flight attitude cannot be expressed well by the overall fitting for a relatively long period of time. The sliding fitting window for stable attitude is calculated using the frequency of the jitter error, which can accurately separate jitter errors without the interference of stable flight attitude.

Figure 8.

Jitter error extraction: (a) results of the proposed method within 35 s, (b) results of the proposed method within 110 s, (c) overall fitting results within 35 s, (d) overall fitting results within 110 s.

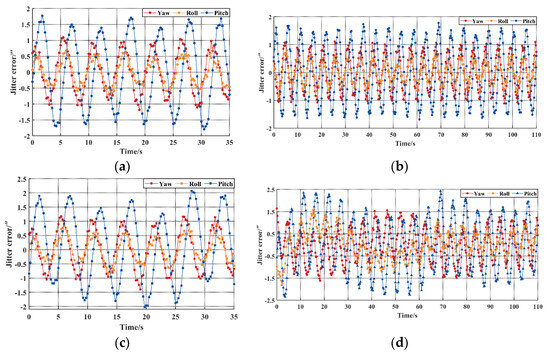

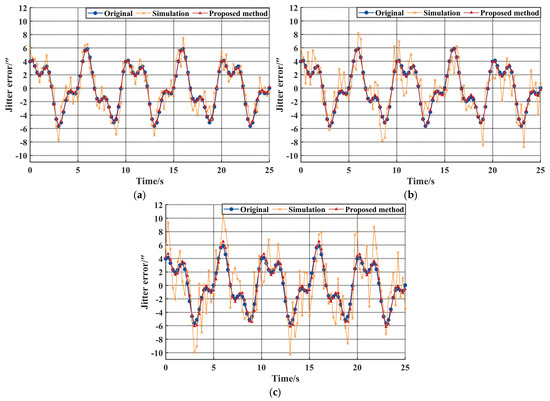

The attitude reconstruction results within 110 s are shown in Figure 9. The blue, yellow, and red dots represent the fused attitude, the reconstruction results based on overall fitting of stable attitude, and the reconstruction results obtained with the proposed method, respectively. During imaging, the stable roll angle ranges from 6″ to 17″, and the stable pitch angle ranges from 19″ to 30″, to ensure the imaging region is captured. When affected by jitter, the attitude shows periodic fluctuations superimposed on the stable attitude. The reconstruction results obtained using the proposed method are consistent with the fused attitude. Furthermore, fluctuations in the fused attitude caused by the gyroscope noise are effectively removed through application of the proposed jitter error model and least squares solution. Although the reconstructed results based on overall fitting of stable attitude exhibit good smoothness, they show significant deviation from the fused attitude. These findings demonstrate that the proposed method successfully eliminates measurement noise and accurately characterizes jitter errors.

Figure 9.

Attitude reconstruction results: (a) yaw angle, (b) roll angle, (c) pitch angle.

The attitude reconstruction residuals are analyzed in Table 4. The RMS errors of the yaw, roll, and pitch angles between the overall fitting attitude and fused attitude are 0.178″, 0.385″, and 0.309″, respectively. Moreover, the maximum (MAX) and minimum (MIN) errors exceed 1″, which generates a geometric error of 3.801 m in the imagery. These findings indicate that the overall fitting method is unsuitable for modeling and compensating jitter errors. In contrast, the proposed method achieves RMS errors of 0.114″, 0.122″, and 0.128″ for yaw, roll, and pitch angles, respectively. These residuals mainly reflect the gyroscope measurement noise, which is effectively removed by the proposed method.

Table 4.

Attitude reconstruction residuals.

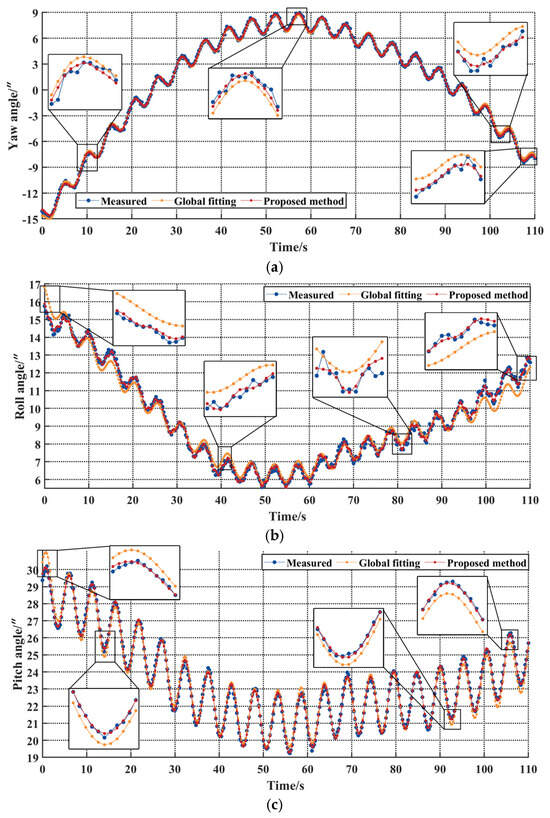

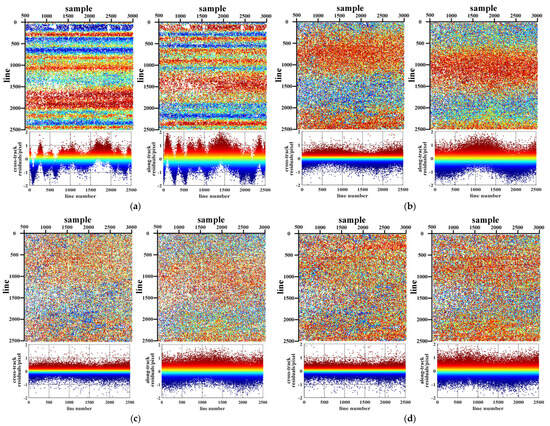

3.3. Registration Accuracy Analysis

The registration results between B2 and B6 using different attitude parameters are shown in Figure 10. When using attitude results calculated by star sensors, the matching error of tie points exhibits random fluctuations without any obvious periodic characteristics, as demonstrated in Figure 10a. This arises because the random measurement noise level of the star sensor exceeds the satellite jitter amplitude. In Figure 10b, with the stable attitude, the registration error is no longer random noise but a periodic variation. While the fitted stable attitude can eliminate random errors, it fails to capture the actual satellite jitter during imaging, leading to image distortion. Furthermore, the along-track registration error is larger than that in the cross-track direction, aligning with the attitude analysis findings presented in Section 3.2.1. These observations confirm that the satellite attitude measurement results correspond well with the optical image distortion caused by jitter, providing conditions for jitter error compensation based on attitude measurement data. For the conventional attitude fusion and sliding interpolation method, part of the jitter error is eliminated, as shown in Figure 10c. The conventional attitude fusion does not change the measurement noise of the star sensors and gyroscope during multiple filtering processes, making it difficult to accurately characterize jitter errors. Meanwhile, the third-order Lagrange interpolation algorithm with a poorly chosen sliding window is susceptible to noise, resulting in poor interpolation accuracy and modelling accuracy. Therefore, compared to the stable attitude, the conventional method eliminates only part of the jitter error, and the residual jitter errors still influence the geometric accuracy of optical images. As illustrated in Figure 10d, the proposed method successfully eliminates periodic fluctuations, resulting in registration errors that conform to the random error characteristics of tie point matching. This demonstrates that the proposed method can effectively extract satellite jitter error and correct the geometric distortion.

Figure 10.

Optical image registration residuals: (a) attitude determined by star sensors, (b) stable attitude, (c) attitude determined using the conventional sliding method, (d) attitude determined by star sensor and gyroscope fusion.

To further verify the effectiveness, the along-track registration results of Scene 4 with 6000 scanning lines are analyzed, as shown in Figure 11. The registration error in Figure 11a correlates with the measurement noise of the star sensor. Under the stable attitude, because the number of scanning lines increases to 6000 and the imaging time to 19 s, four distinct periodic fluctuations appear in the registration error in Figure 11b. The fluctuation period is approximately 1583 scanning lines, and the imaging duration is 0.0033 s per line. Therefore, the fluctuation period is 5.224 s, which is consistent with the jitter detection results. With the conventional method, the amplitude of the registration error fluctuation decreases in Figure 11c. However, because the jitter error is extracted and modeled inaccurately, four distinct periodic fluctuations remain in the registration error. With the proposed method, the jitter error is effectively extracted, and no periodic fluctuations are observed in the registration error, as demonstrated in Figure 11d. Using sliding window fitting with the optimal parameters, jitter errors in both short-strip and long-strip optical images can be effectively corrected.
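The period arithmetic above can be checked with a few lines of Python. This is only an illustrative sketch: the constant and function names are hypothetical, and the 5.24 s OCTS rotation period is taken from the Introduction.

```python
LINE_TIME_S = 0.0033     # imaging duration per scanning line (from the text)
PERIOD_LINES = 1583      # observed fluctuation period, in scanning lines
OCTS_ROTATION_S = 5.24   # OCTS rotation period quoted in the Introduction
STRIP_LINES = 6000       # number of scanning lines analyzed for Scene 4


def period_seconds(period_lines, line_time_s):
    """Convert a fluctuation period from scanning lines to seconds."""
    return period_lines * line_time_s


period = period_seconds(PERIOD_LINES, LINE_TIME_S)
cycles = STRIP_LINES / PERIOD_LINES  # number of fluctuation periods in the strip

print(f"period = {period:.3f} s")                       # period = 5.224 s
print(f"cycles in {STRIP_LINES} lines = {cycles:.2f}")   # cycles in 6000 lines = 3.79
print(f"difference from OCTS rotation = {abs(period - OCTS_ROTATION_S):.3f} s")
```

The roughly 3.8 periods contained in the 6000-line strip agree with the four fluctuations visible in Figure 11b.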

Figure 11.

Residuals of optical image registration along the track direction: (a) attitude determined by star sensors, (b) stable attitude, (c) attitude determined using the conventional sliding method, (d) attitude determined by star sensor and gyroscope fusion.

The registration accuracy of the six optical images is shown in Table 5. Using attitude parameters determined by star sensors, the cross-track registration accuracies of the six images are 0.681, 0.313, 0.515, 0.676, 0.856, and 0.528 pixels, respectively. With stable attitude parameters, these accuracies become 0.382, 0.245, 0.437, 0.324, 0.256, and 0.210 pixels, respectively. Using the conventional attitude fusion and interpolation method, the registration accuracies are 0.504, 0.322, 0.431, 0.416, 0.359, and 0.243 pixels, respectively. Using the proposed method, the registration accuracies improve to 0.400, 0.222, 0.341, 0.326, 0.251, and 0.192 pixels, respectively. As analyzed in Section 3.2.1, the cross-track jitter amplitude remains relatively small, so the cross-track registration error approximates the tie-point matching accuracy (0.3 pixels). However, influenced by star sensor noise, the mean cross-track error increases to 0.595 pixels. The proposed method achieves a registration accuracy (0.289 pixels) comparable to that obtained with stable attitude parameters (0.309 pixels), demonstrating that cross-track jitter does not introduce geometric distortion. Similarly, random errors in the star sensors increase the along-track registration errors, giving a mean error of 0.718 pixels. With the conventional method, the along-track registration accuracies change from 0.433, 0.801, 0.491, 0.431, 0.847, and 0.406 pixels (obtained with the stable attitude) to 0.464, 0.564, 0.446, 0.408, 0.429, and 0.406 pixels, respectively. The conventional method eliminates part of the jitter error, but the reduction in registration error is relatively small. Furthermore, using the proposed method, the along-track registration accuracies improve to 0.356, 0.321, 0.384, 0.297, 0.413, and 0.330 pixels, respectively. The average along-track registration error is 0.350 pixels, which is close to the matching error of tie points. Compared with the attitudes determined using star sensors, the stable attitude, and the conventional method, the improvement rates of the proposed method are 52%, 38%, and 23%, respectively. This demonstrates that the along-track jitter distortion is corrected well by the proposed method.
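The along-track averages and improvement rates can be reproduced from the per-scene values quoted above. The sketch below is illustrative only; the dictionary keys and function name are hypothetical. The first list is assumed to be the stable-attitude baseline, because its mean reproduces the reported 0.568 pixels. The star sensor case is omitted because its per-scene along-track values are not listed in the text.

```python
from statistics import mean

# Per-scene along-track registration errors (pixels), copied from the text.
ALONG_TRACK = {
    "stable": [0.433, 0.801, 0.491, 0.431, 0.847, 0.406],       # assumed baseline
    "conventional": [0.464, 0.564, 0.446, 0.408, 0.429, 0.406],
    "proposed": [0.356, 0.321, 0.384, 0.297, 0.413, 0.330],
}


def improvement_rate(baseline, improved):
    """Relative reduction of the mean registration error."""
    return (baseline - improved) / baseline


means = {name: mean(values) for name, values in ALONG_TRACK.items()}
rate_vs_stable = improvement_rate(means["stable"], means["proposed"])
rate_vs_conventional = improvement_rate(means["conventional"], means["proposed"])

for name, value in means.items():
    print(f"{name:>12}: {value:.3f} px")
print(f"improvement vs stable:       {100 * rate_vs_stable:.0f}%")
print(f"improvement vs conventional: {100 * rate_vs_conventional:.0f}%")
```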

Table 5.

Accuracy of image registration between B2 and B6 of the CZI.

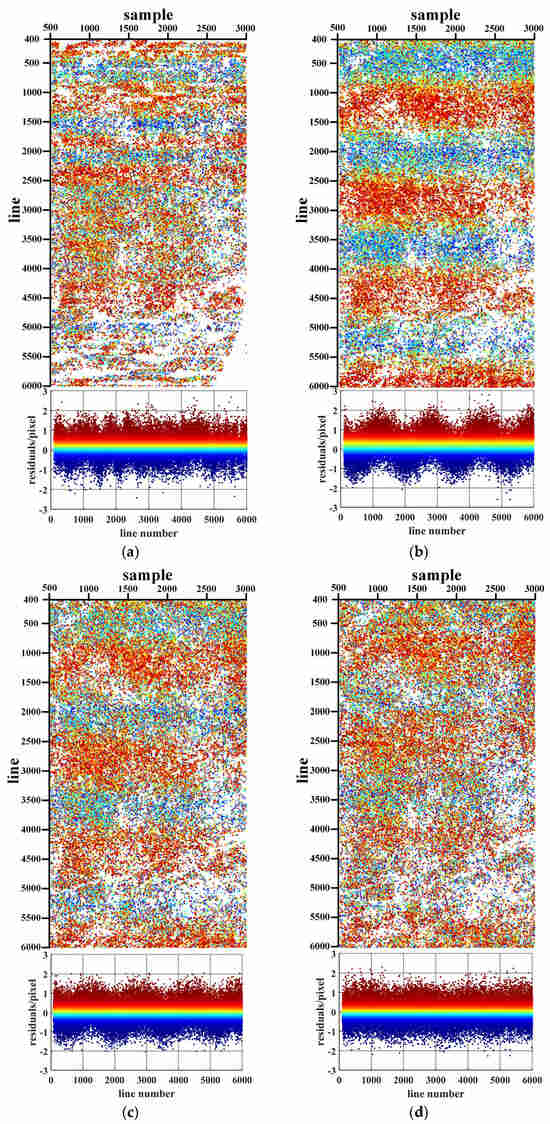

The registration results of typical ground targets are shown in Figure 12. Under jitter error interference, significant target misalignment occurs in ground observations. After the proposed method is applied, the multispectral image registration accuracy improves substantially, and the visual misalignment is effectively eliminated. The method corrects the geometric distortions induced by jitter errors, thereby preserving the application value of HY-3A optical imaging data.

Figure 12.

Registration error between B2 and B6: (a) before jitter correction, (b) after jitter correction.

4. Discussion

4.1. Measurement Noise

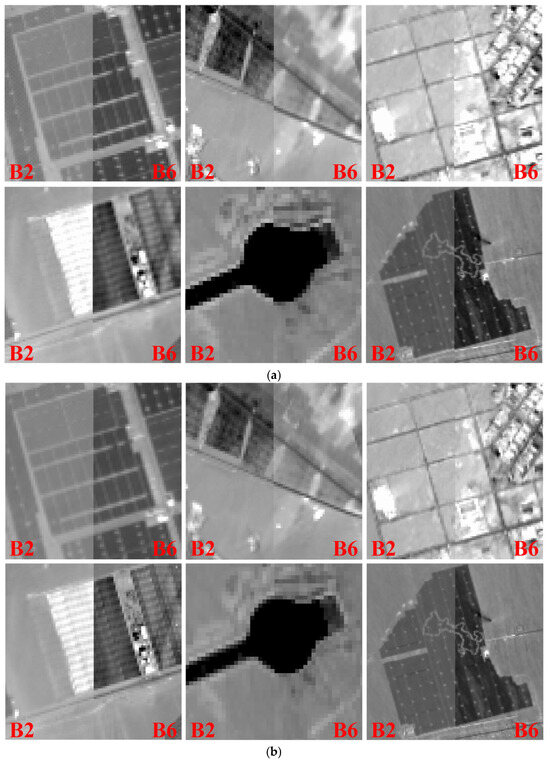

To verify the accuracy of the proposed method under different measurement noise levels, simulation data containing two jitter errors are analyzed. The frequencies, amplitudes, and phases of the two jitters designed in this paper are 0.2 Hz, 4″, 30° and 0.5 Hz, −2″, −80°, respectively. Gaussian noise with standard deviations of 0.000″, 0.288″, 0.578″, 0.864″, 1.154″, 1.444″, 1.737″, 2.019″, 2.295″, 2.602″, 2.890″, and 3.179″ is then added. Thus, the simulated jitter data are represented as follows:

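$$J_i(t) = 4\sin\left(2\pi \cdot 0.2\,t + 30^\circ\right) - 2\sin\left(2\pi \cdot 0.5\,t - 80^\circ\right) + n_i(t)$$

where $J_i(t)$ is the simulated jitter in arcseconds, $n_i(t)$ is the added Gaussian noise, and $i$ denotes the label of the noise level.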
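The simulated data described above can be sketched as follows. This is a minimal illustration that assumes each jitter is a sine term with the stated frequency, amplitude, and phase. The sampling rate, duration, random seed, and all function names are hypothetical choices and are not taken from the paper.

```python
import math
import random

# (frequency in Hz, amplitude in arcsec, phase in degrees) of the two jitters
JITTERS = [(0.2, 4.0, 30.0), (0.5, -2.0, -80.0)]

# Noise standard deviations in arcsec, as listed in the text
NOISE_LEVELS = [0.000, 0.288, 0.578, 0.864, 1.154, 1.444,
                1.737, 2.019, 2.295, 2.602, 2.890, 3.179]


def clean_jitter(t):
    """Noise-free jitter (arcsec) at time t (s); the sine form is an assumption."""
    return sum(a * math.sin(2.0 * math.pi * f * t + math.radians(p))
               for f, a, p in JITTERS)


def simulate(duration_s, rate_hz, sigma, seed=0):
    """Return sample times and jitter samples with additive Gaussian noise."""
    rng = random.Random(seed)
    n = int(duration_s * rate_hz)
    times = [k / rate_hz for k in range(n)]
    noisy = [clean_jitter(t) + rng.gauss(0.0, sigma) for t in times]
    return times, noisy


# One simulated series per noise level (20 s at 10 Hz, both assumed values)
series = {sigma: simulate(20.0, 10.0, sigma) for sigma in NOISE_LEVELS}
```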

The frequency, amplitude, and phase parameters established using the proposed method under various noise levels are presented in Table 6. As the measurement noise level increases, the amplitude and phase errors of the jitter model gradually increase. Notably, under identical noise conditions, the modeling error for the 2″ amplitude jitter exceeds that for the 4″ amplitude jitter. Random measurement noise is therefore a critical factor influencing jitter modeling accuracy. The least-squares solution is adopted to improve robustness, enabling relatively accurate extraction of the jitter characteristics under various noise levels.

Table 6.

Modeling results under different noise levels.

The model residuals between the noise-free simulated jitter and the jitter reconstructed using the proposed method are presented in Table 7. With noise standard deviations of 0.288″, 0.578″, 0.864″, 1.154″, 1.444″, 1.737″, 2.019″, 2.295″, 2.602″, 2.890″, and 3.179″, the corresponding residuals are 0.037″, 0.085″, 0.133″, 0.231″, 0.223″, 0.207″, 0.261″, 0.208″, 0.337″, 0.499″, and 0.447″, respectively. Notably, even when the noise level reaches arcsecond magnitude, the proposed method maintains sub-arcsecond modeling accuracy. These results demonstrate that the proposed method is highly robust to noise, whereas noise sensitivity is a common limitation of traditional approaches such as Lagrange interpolation and spline interpolation.
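To illustrate why a least-squares fit averages out measurement noise, the following sketch fits sine and cosine coefficients at the two jitter frequencies and compares the reconstruction with the noise-free signal. It is not the authors' implementation. The frequencies are assumed to be already known (the paper derives them from spectral characteristics), and the 20 Hz sampling rate, 20 s duration, seed, and function names are hypothetical.

```python
import math
import random


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def basis(t, freqs):
    """Design-matrix row [sin(w1 t), cos(w1 t), sin(w2 t), cos(w2 t), ...]."""
    row = []
    for f in freqs:
        w = 2.0 * math.pi * f
        row += [math.sin(w * t), math.cos(w * t)]
    return row


def fit_sinusoids(times, values, freqs):
    """Least-squares coefficients via the normal equations (A^T A) c = A^T y."""
    p = 2 * len(freqs)
    ata = [[0.0] * p for _ in range(p)]
    aty = [0.0] * p
    for t, y in zip(times, values):
        row = basis(t, freqs)
        for i in range(p):
            aty[i] += row[i] * y
            for j in range(p):
                ata[i][j] += row[i] * row[j]
    return solve_linear(ata, aty)


def truth(t):
    """Noise-free simulated jitter (arcsec), assuming a sine form."""
    return (4.0 * math.sin(2.0 * math.pi * 0.2 * t + math.radians(30.0))
            - 2.0 * math.sin(2.0 * math.pi * 0.5 * t + math.radians(-80.0)))


def rms(xs):
    return math.sqrt(sum(x * x for x in xs) / len(xs))


FREQS = [0.2, 0.5]
rng = random.Random(1)
times = [k * 0.05 for k in range(400)]                  # 20 s at 20 Hz (assumed)
noisy = [truth(t) + rng.gauss(0.0, 1.154) for t in times]

coef = fit_sinusoids(times, noisy, FREQS)
model = [sum(c * b for c, b in zip(coef, basis(t, FREQS))) for t in times]
residual = rms([m - truth(t) for m, t in zip(model, times)])

for k, f in enumerate(FREQS):
    a, b = coef[2 * k], coef[2 * k + 1]
    print(f"{f} Hz: amplitude {math.hypot(a, b):.3f} arcsec, "
          f"phase {math.degrees(math.atan2(b, a)):.1f} deg")
print(f"model residual RMS: {residual:.3f} arcsec")
```

Because the fit returns a non-negative amplitude, the second jitter (−2″, −80°) is reported in the equivalent form 2″ at about 100°.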

Table 7.

Jitter model residuals under different noise levels.

The reconstruction results of jitter error under different noise levels are shown in Figure 13. The blue, yellow, and red points represent the simulated jitter without noise, simulated jitter with Gaussian noise, and reconstructed jitter using the proposed method, respectively. When the noise levels are 1.444″, 2.295″, and 3.179″, the difference between the blue and yellow points gradually increases. When the noise level reaches 3.179″, both the periodicity and amplitude characteristics of jitter are severely affected by measurement noise. The proposed method reconstructs the jitter based on periodicity characteristics and exhibits high robustness to noise. Consequently, the reconstructed jitter results demonstrate high consistency with the original jitter, as evidenced by the close alignment between the red and blue points in Figure 13.

Figure 13.

Modeling results of jitter error under different noise levels: (a) 1.444″, (b) 2.295″, (c) 3.179″.

4.2. Time Complexity

Algorithm 1 gives the pseudo-code of the proposed precision attitude calculation algorithm using the star sensor and gyroscope. It consists of three single-layer linear traversal structures. The number of cycles is strictly linearly related to the number of sampled data points from the star sensor and gyroscope (N), so the time complexity of Algorithm 1 is O(N). As shown in Algorithm 2, the proposed jitter error extraction and modeling algorithm consists of two single-layer linear traversal structures, one related to the number of sampled data points N and the other to the number of image scanning lines M. Thus, the time complexity of Algorithm 2 is O(N + M), which is dominated by O(M) when the number of scanning lines far exceeds the number of attitude samples. The proposed method has no nested loops or exponential recursive calls. It can achieve near real-time processing and meet the onboard processing requirements of the optical remote sensing satellite.

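| Algorithm 1 Precision attitude calculation |
| --- |
| Input: star sensor and gyroscope measurements (N samples) |
| Output: precision attitude angle |
| while samples remain (first traversal) do |
| &nbsp;&nbsp;&nbsp;&nbsp;time update |
| &nbsp;&nbsp;&nbsp;&nbsp;measurement update |
| &nbsp;&nbsp;&nbsp;&nbsp;optimal estimation |
| end while |
| while samples remain (second traversal) do |
| &nbsp;&nbsp;&nbsp;&nbsp;time update |
| &nbsp;&nbsp;&nbsp;&nbsp;measurement update |
| &nbsp;&nbsp;&nbsp;&nbsp;optimal estimation |
| end while |
| while samples remain (third traversal) do |
| &nbsp;&nbsp;&nbsp;&nbsp;time update |
| &nbsp;&nbsp;&nbsp;&nbsp;measurement update |
| &nbsp;&nbsp;&nbsp;&nbsp;optimal estimation |
| &nbsp;&nbsp;&nbsp;&nbsp;attitude transformation |
| end while |
| return precision attitude angle |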
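The time update, measurement update, and optimal estimation steps of one traversal can be illustrated with a single-axis linear Kalman filter. This is a deliberately simplified stand-in and not the filter used in the paper: the gyroscope rate drives the prediction, the star sensor angle corrects it, and all names, noise values, and the sampling interval are hypothetical.

```python
import math
import random


def fuse(gyro_rates, star_angles, dt, q, r):
    """Scalar Kalman filter over N samples in one linear pass, O(N).

    q: process noise variance added per step (gyroscope integration noise)
    r: star sensor measurement noise variance
    """
    x, p = star_angles[0], r              # initialise from the first star sample
    fused = [x]
    for k in range(1, len(star_angles)):
        # Time update: propagate the angle with the previous gyroscope rate.
        x += gyro_rates[k - 1] * dt
        p += q
        # Measurement update: blend in the star sensor angle.
        gain = p / (p + r)
        x += gain * (star_angles[k] - x)
        p *= (1.0 - gain)
        # Optimal estimate at step k.
        fused.append(x)
    return fused


def rms(xs):
    return math.sqrt(sum(v * v for v in xs) / len(xs))


# Synthetic single-axis data (all values assumed): angle drifting at 0.5 arcsec/s.
rng = random.Random(0)
dt, n = 0.1, 500
true_angle = [0.5 * k * dt for k in range(n)]
gyro = [0.5 + rng.gauss(0.0, 0.01) for _ in range(n)]   # arcsec/s
star = [a + rng.gauss(0.0, 1.0) for a in true_angle]    # arcsec

fused = fuse(gyro, star, dt, q=(0.01 * dt) ** 2, r=1.0)
star_rms = rms([s - a for s, a in zip(star, true_angle)])
fused_rms = rms([f - a for f, a in zip(fused, true_angle)])
print(f"star sensor RMS error: {star_rms:.3f} arcsec")
print(f"fused RMS error:       {fused_rms:.3f} arcsec")
```

The loop body does a fixed amount of work per sample, which is the property behind the O(N) complexity claimed for Algorithm 1.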

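| Algorithm 2 Jitter error extraction and modeling |
| --- |
| Input: N attitude samples, M image scanning lines |
| Output: precision attitude angle of scanning line |
| for each attitude sample (N in total) do |
| &nbsp;&nbsp;&nbsp;&nbsp;stable attitude modeling |
| &nbsp;&nbsp;&nbsp;&nbsp;jitter error extraction |
| end for |
| for each scanning line (M in total) do |
| &nbsp;&nbsp;&nbsp;&nbsp;stable attitude |
| &nbsp;&nbsp;&nbsp;&nbsp;jitter error |
| end for |
| return precision attitude angle of scanning line |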
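The two traversals of Algorithm 2 can be sketched as follows. This is an illustration under stated assumptions rather than the paper's method: a centered moving average stands in for the sliding-window fitting of the stable attitude, plain linear interpolation stands in for the per-line attitude evaluation, and all function names and sample values are hypothetical.

```python
import math


def moving_average(values, half_window):
    """Centered moving average using prefix sums; one linear pass, O(N)."""
    prefix = [0.0]
    for v in values:
        prefix.append(prefix[-1] + v)
    n = len(values)
    out = []
    for i in range(n):
        lo = max(0, i - half_window)
        hi = min(n, i + half_window + 1)
        out.append((prefix[hi] - prefix[lo]) / (hi - lo))
    return out


def extract_jitter(attitude, half_window):
    """First traversal: stable attitude modeling, then jitter = attitude - stable."""
    stable = moving_average(attitude, half_window)
    jitter = [a - s for a, s in zip(attitude, stable)]
    return stable, jitter


def resample_to_lines(att_times, att_values, line_times):
    """Second traversal: linear interpolation at each scanning-line time.

    Both time lists must be sorted; the index j only moves forward,
    so the total cost is O(N + M).
    """
    out, j = [], 0
    for t in line_times:
        while j + 2 < len(att_times) and att_times[j + 1] < t:
            j += 1
        t0, t1 = att_times[j], att_times[j + 1]
        w = (t - t0) / (t1 - t0)
        out.append(att_values[j] + w * (att_values[j + 1] - att_values[j]))
    return out


# Synthetic attitude: slow drift plus a jitter with a period of 21 samples.
PERIOD = 21
attitude = [0.01 * i + 1.5 * math.sin(2.0 * math.pi * i / PERIOD) for i in range(200)]
stable, jitter = extract_jitter(attitude, half_window=PERIOD // 2)
```

When the window spans exactly one jitter period, the periodic term averages to zero away from the ends of the series, so the moving average follows the drift and the difference isolates the jitter.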

To verify the efficiency of the proposed method, the jitter data processing experiments were carried out on a computer running Windows 11 with an Intel Core i9-14900HX CPU at 2.20 GHz and 32 GB of RAM. The proposed method for jitter data processing is implemented in MATLAB R2024b.

Table 8 lists the time consumption for jitter data processing. The time consumption generally increases with the number of scanning lines. When the number of scanning lines in the entire imaging strip is 12,887, 35,625, 12,596, 36,338, 36,430, and 20,697, the time consumption is 0.697, 2.415, 0.706, 2.205, 2.212, and 1.201 s, respectively. Processing is efficient, with an average time consumption of 1.573 s, which supports near real-time processing and meets the onboard processing requirements of the optical remote sensing satellite.
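The timing figures above can be put in perspective by comparing processing time with imaging time. The sketch below is illustrative only: it assumes that the per-line imaging duration of 0.0033 s quoted for Scene 4 applies to every strip, and the variable names are hypothetical.

```python
LINES = [12887, 35625, 12596, 36338, 36430, 20697]    # scanning lines per strip
SECONDS = [0.697, 2.415, 0.706, 2.205, 2.212, 1.201]  # processing time per strip (s)
LINE_TIME_S = 0.0033  # imaging duration per line; assumed identical for all strips

mean_time = sum(SECONDS) / len(SECONDS)

# Processing cost per scanning line, in microseconds
per_line_us = [1e6 * s / n for s, n in zip(SECONDS, LINES)]

# Processing time as a fraction of the time needed to acquire the strip
ratio = [s / (n * LINE_TIME_S) for s, n in zip(SECONDS, LINES)]

print(f"mean processing time: {mean_time:.3f} s")
for n, us, frac in zip(LINES, per_line_us, ratio):
    print(f"{n:6d} lines: {us:5.1f} us/line, {100 * frac:.1f}% of imaging time")
```

Under this assumption, each strip is processed in a small fraction of the time it takes to acquire, and the per-line cost stays within a narrow range, as expected for a linear-time method.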

Table 8.

Time consumption for jitter data processing.

5. Conclusions

To compensate for the jitter error introduced by the periodic rotation of the OCTS, this paper proposes a jitter error estimation and correction method based on multi-source attitude data fusion. Based on the measurement data from the star sensor and gyroscope, multi-directional fusion is applied, and the jitter error is then extracted directly and rapidly. According to the geometric relationship between jitter imaging and stable imaging, the distortion in the optical image is corrected using stable resampling without time-consuming image matching. By analyzing the fused attitude, a significant along-track jitter error is identified, with a frequency of 0.187 Hz and an amplitude of 1.668″. Experimental results demonstrate that the along-track registration accuracy between the B2 and B6 optical images of the CZI improved from 0.568 pixels to 0.350 pixels. The proposed method can effectively correct the geometric distortion caused by jitter errors for both short-strip and long-strip optical images. Furthermore, the proposed method is a single-layer loop algorithm whose cost is linear in the number of attitude data points N and image scanning lines M, with a time complexity of O(N + M) and an average time consumption of 1.573 s. It can achieve on-orbit near real-time processing and provide accurate attitude parameters for on-orbit geometric processing of optical satellite image data.

Author Contributions

Conceptualization, Y.W.; methodology, R.Z., Y.X. and X.Z.; software, R.D. and S.J.; validation, R.Z. and Y.X.; formal analysis, Y.X. and R.D.; resources, Y.W. and S.J.; data curation, Y.W. and S.J.; writing—original draft preparation, R.Z. and Y.X.; writing—review and editing, R.Z. and R.D.; supervision, Y.W.; project administration, Y.W. and S.J.; funding acquisition, Y.W. and S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Research and Development Program of China under Grant 2021YFC2803300 and in part by the National Natural Science Foundation of China under Grant 42401549.

Data Availability Statement

The data presented in this study are available from the corresponding author upon reasonable request. The data are not publicly available because further research based on them is still under way.

Acknowledgments

We would like to express our sincere thanks to the anonymous reviewers.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, N.; Guan, L.; Gao, H. Sun Glint Correction Based on BRDF Model for Improving the HY-1C/COCTS Clear-Sky Coverage. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.
2. Mao, Z.; Chen, P.; Tao, B.; Ding, J.; Liu, J.; Chen, J.; Hao, Z.; Zhu, Q.; Huang, H. A Radiometric Calibration Scheme for COCTS/HY-1C Based on Image Simulation from the Standard Remote-Sensing Reflectance. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–9.
3. Cao, J.; Zhou, N.; Shang, H.; Ye, Z.; Zhang, Z. Internal Geometric Quality Improvement of Optical Remote Sensing Satellite Images with Image Reorientation. Remote Sens. 2022, 14, 1.
4. Li, S.; Chen, S.; Ma, C.; Peng, H.; Wang, J.; Hu, L.; Song, Q. Construction of a Radiometric Degradation Model for Ocean Color Sensors of HY1C/D. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.
5. Song, J.; Zhang, Z.; Iwasaki, A.; Wang, J.; Sun, J.; Sun, Y. An Augmented H∞ Filter for Satellite Jitter Estimation Based on ASTER/SWIR and Blurred Star Images. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 2637–2646.
6. Perrier, R.; Arnaud, E.; Sturm, P.; Ortner, M. Satellite Image Registration for Attitude Estimation with a Constrained Polynomial Model. In Proceedings of the 17th IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010.
7. Perrier, R.; Arnaud, E.; Sturm, P.; Ortner, M. Estimation of an Observation Satellite’s Attitude Using Multimodal Pushbroom Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 987–1000.
8. Zhu, Y.; Wang, M.; Pan, J. Detection of ZY-3 Satellite Platform Jitter Using Multi-Spectral Imagery. Acta Geod. Cartogr. Sin. 2015, 44, 399–406.
9. Zhang, Z.; Xu, G.; Song, J. Observation Satellite Attitude Estimation Using Sensor Measurement and Image Registration Fusion. Proc. Inst. Mech. Eng. G J. Aerosp. Eng. 2018, 232, 1390–1402.
10. Wang, P.; An, W.; Deng, X.; Yang, J.; Sheng, W. A Jitter Compensation Method for Spaceborne Line-Array Imagery Using Compressive Sampling. Remote Sens. Lett. 2015, 6, 558–567.
11. Jiang, J.; Huang, J.; Zhang, G. An Accelerated Motion Blurred Star Restoration Based on Single Image. IEEE Sens. J. 2017, 17, 1306–1315.
12. Teshima, Y.; Iwasaki, A. Correction of Attitude Fluctuation of Terra Spacecraft Using ASTER/SWIR Imagery with Parallax Observation. IEEE Trans. Geosci. Remote Sens. 2008, 46, 222–227.
13. Ayoub, F.; Leprince, S.; Binet, R.; Lewis, K.W.; Aharonson, O.; Avouac, J.P. Influence of Camera Distortions on Satellite Image Registration and Change Detection Applications. In Proceedings of the 2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008.
14. Kirk, R.; Howington, K.E.; Rosiek, M. Ultrahigh Resolution Topographic Mapping of Mars With MRO HiRISE Stereo Images: Meter-scale Slopes of Candidate Phoenix Landing Sites. J. Geophys. Res. Planets 2008, 113, E3.
15. Takaku, J.; Tadono, T. High Resolution DSM Generation from ALOS PRISM-processing Status and Influence of Attitude Fluctuation. In Proceedings of the 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010.
16. Amberg, V.; Dechoz, C.; Bernard, L.; Greslou, D.; de Lussy, F.; Lebegue, L. In-Flight Attitude Perturbations Estimation: Application to PLEIADES-HR Satellites. In Proceedings of the SPIE Optical Engineering + Applications, San Diego, CA, USA, 25–29 August 2013.
17. Tong, X.; Li, L.; Liu, S.; Xu, Y.; Ye, Z.; Jin, Y.; Wang, F.; Xie, H. Detection and Estimation of ZY-3 Three-Line Array Image Distortions Caused by Attitude Oscillation. ISPRS J. Photogramm. Remote Sens. 2015, 101, 291–309.
18. Sun, T.; Long, H.; Liu, B.; Li, Y. Application of Attitude Jitter Detection Based on Short-Time Asynchronous Images and Compensation Methods for Chinese Mapping Satellite-1. Opt. Express 2015, 23, 1395.
19. Wang, M.; Zhu, Y.; Pan, J.; Yang, B.; Zhu, Q. Satellite Jitter Detection and Compensation Using Multispectral Imagery. Remote Sens. Lett. 2016, 7, 513–522.
20. Pan, J.; Che, C.; Zhu, Y.; Wang, M. Satellite Jitter Estimation and Validation Using Parallax Images. Sensors 2017, 17, 83.
21. Zhu, Y.; Wang, M.; Cheng, Y.; He, L.; Xue, L. An Improved Jitter Detection Method Based on Parallax Observation of Multispectral Sensors for Gaofen-1 02/03/04 Satellites. Remote Sens. 2018, 11, 16.
22. Zhu, Y.; Wang, M.; Cheng, Y.F.; Xue, L.; Zhu, Q. Jitter Detection for Gaofen-1 02/03/04 Satellites During the Early In-Flight Period. In Proceedings of the International Conference on Communications, Signal Processing, and Systems, Singapore, 16–18 November 2018; pp. 244–253.
23. Liu, S.; Lin, F.; Tong, X.; Zhang, H.; Lin, H.; Xie, H.; Ye, Z.; Zheng, S. Dynamic PSF-Based Jitter Compensation and Quality Improvement for Push-Broom Optical Images Considering Terrain Relief and the TDI Effect. Appl. Opt. 2022, 61, 16.
24. Zhu, Y.; Yang, T.; Wang, M.; Pan, J.; Ye, G.; Hong, H.; Wang, L. Rigorous Parallax Observation Model-Based Remote Sensing Panchromatic and Multispectral Images Jitter Distortion Correction for Time Delay Integration Cameras. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–19.
25. Zhang, Z.; Iwasaki, A.; Xu, G. Attitude Jitter Compensation for Remote Sensing Images Using Convolutional Neural Network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1358–1362.
26. Wang, Z.; Zhang, Z.; Dong, L.; Xu, G. Jitter Detection and Image Restoration Based on Generative Adversarial Networks in Satellite Images. Sensors 2021, 21, 14.
27. Nguyen, M.; de Vieilleville, F.; Weiss, P. DeepVibes: Correcting Microvibrations in Satellite Imaging with Pushbroom Cameras. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–9.
28. Shajkofci, A.; Liebling, M. Spatially-Variant CNN-Based Point Spread Function Estimation for Blind Deconvolution and Depth Estimation in Optical Microscopy. IEEE Trans. Image Process. 2020, 29, 5848–5861.
29. Debarnot, V.; Weiss, P. Deep-Blur: Blind Identification and Deblurring with Convolutional Neural Networks. Biol. Imaging 2024, 4, e13.
30. Caffery, J.; Stuber, G.L. Nonlinear Multiuser Parameter Estimation and Tracking in CDMA Systems. IEEE Trans. Commun. 2000, 48, 2053–2063.
31. Iwata, T.; Kawahara, T.; Muranaka, N. High-Bandwidth Pointing Determination for the Advanced Land Observing Satellite (ALOS). In Proceedings of the 24th International Symposium on Space Technology and Science, Miyazaki, Japan, 30 May–6 June 2004.
32. Julier, S.J.; Uhlmann, J.K. Reduced Sigma Point Filters for the Propagation of Means and Covariances Through Nonlinear Transformations. In Proceedings of the 2002 American Control Conference, New York, NY, USA, 8–10 May 2002; IEEE: New York, NY, USA, 2002.
33. Wan, E.A.; Van Der Merwe, R. The Unscented Kalman Filter. In Kalman Filtering and Neural Networks; Wiley: Hoboken, NJ, USA, 2001; pp. 221–280.
34. Wang, Y.; Wang, M.; Dong, Z.; Zhu, Y. High-Precision Geometric Positioning for Optical Remote Sensing Satellite in Dynamic Imaging. Geospat. Inf. Sci. 2024, 1, 1–17.
35. Wang, M.; Fan, C.; Pan, J.; Jin, S.; Chang, X. Image Jitter Detection and Compensation Using a High-Frequency Angular Displacement Method for Yaogan-26 Remote Sensing Satellite. ISPRS J. Photogramm. Remote Sens. 2017, 130, 32–34.
36. Tong, X.; Ye, Z.; Xu, Y.; Tang, X.; Liu, S.; Li, L.; Xie, H.; Wang, F.; Li, T.; Hong, Z. Framework of Jitter Detection and Compensation for High Resolution Satellites. Remote Sens. 2014, 6, 3944–3964.
37. Cheng, Y.; Jin, S.; Wang, M.; Zhu, Y.; Dong, Z. A New Image Mosaicking Approach for the Multiple Camera System of the Optical Remote Sensing Satellite GaoFen1. Remote Sens. Lett. 2017, 8, 1042–1051.
38. Wang, M.; Cheng, Y.; Guo, B.; Jin, S. Parameters Determination and Sensor Correction Method Based on Virtual CMOS with Distortion for the GaoFen6 WFV Camera. ISPRS J. Photogramm. Remote Sens. 2019, 156, 51–62.
39. Cheng, Y.; Jin, S.; Wang, M.; Zhu, Y.; Dong, Z. Image Mosaicking Approach for a Double-Camera System in the GaoFen2 Optical Remote Sensing Satellite Based on the Big Virtual Camera. Sensors 2017, 17, 1441.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).