SD-CapsNet: A Siamese Dense Capsule Network for SAR Image Registration with Complex Scenes

Abstract

1. Introduction

- (1)

- We propose a texture constraint-based phase congruency (TCPC) keypoint detector that can detect uniformly distributed keypoints in SAR images with complex scenes and remove keypoints that may be located in overlay or shadow regions, thereby improving the high-repeatability of keypoints;

- (2)

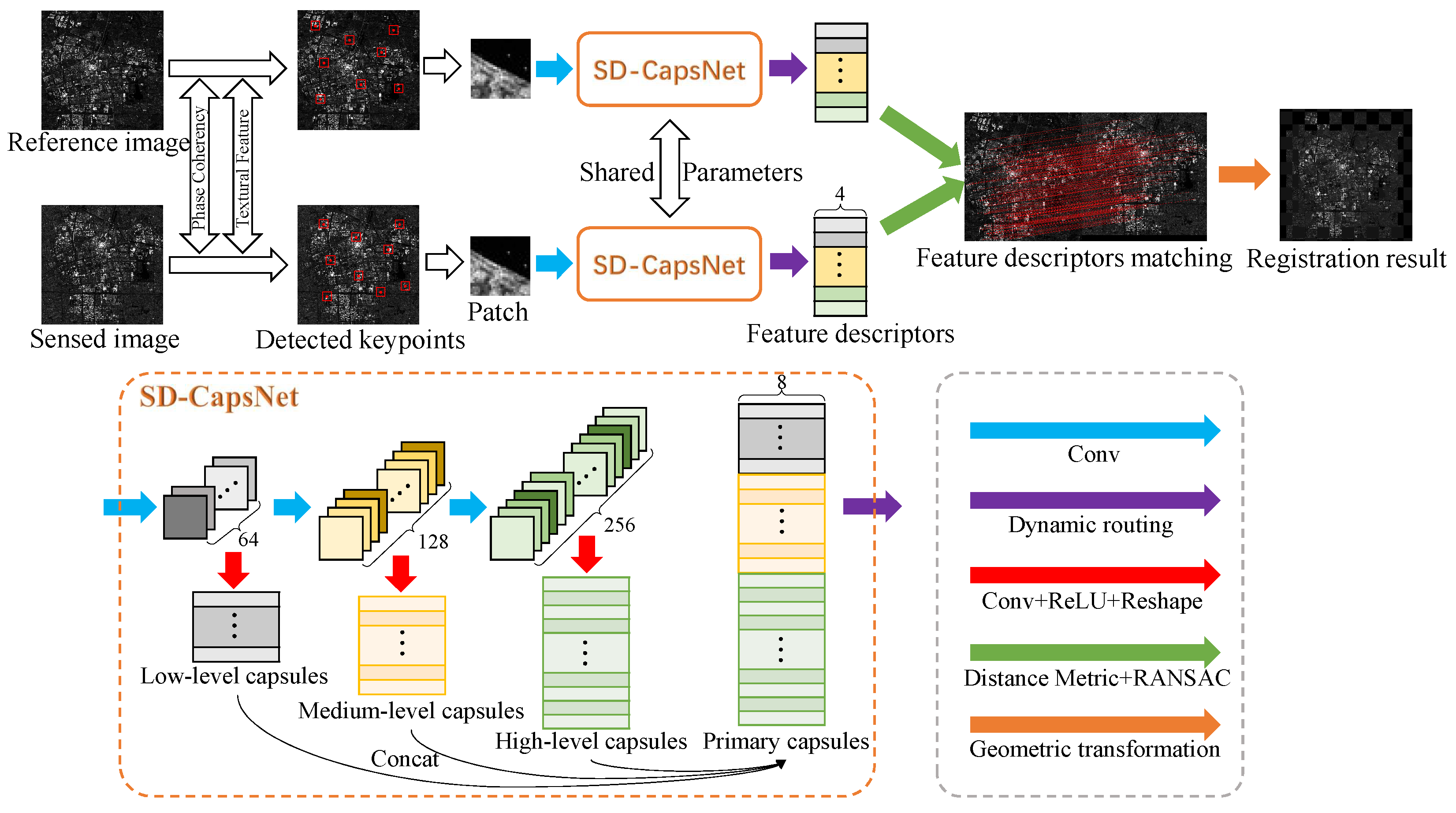

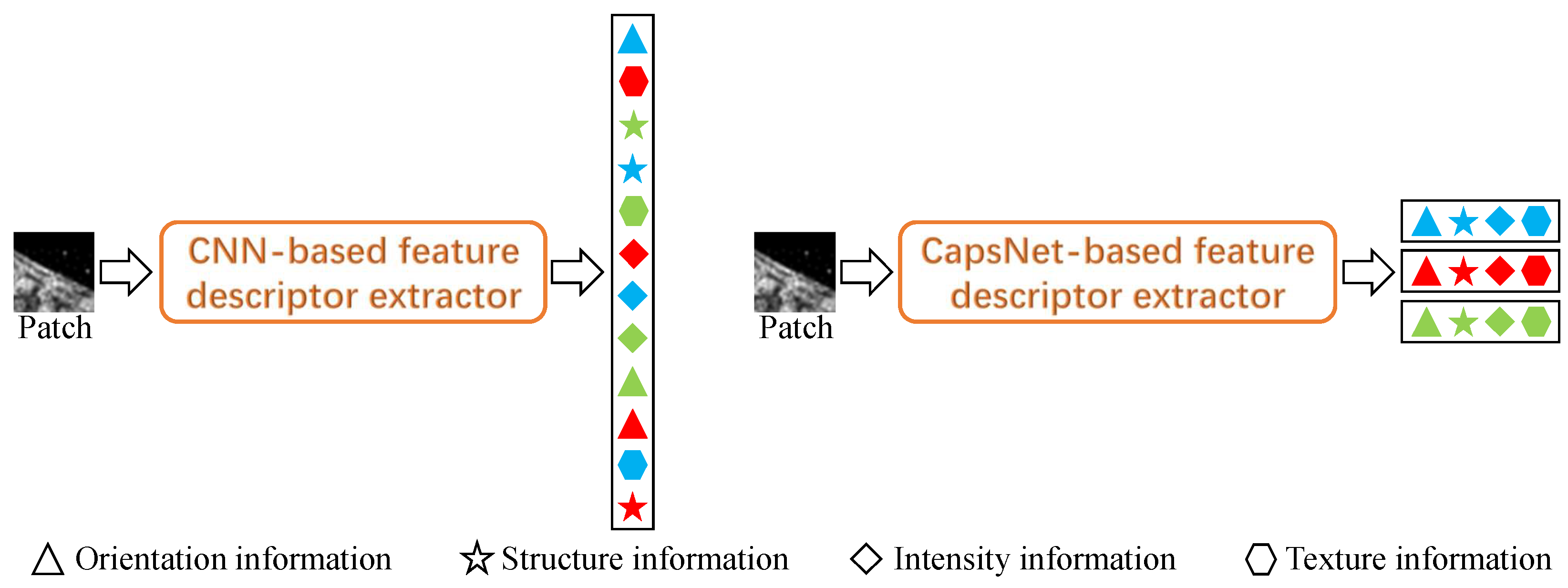

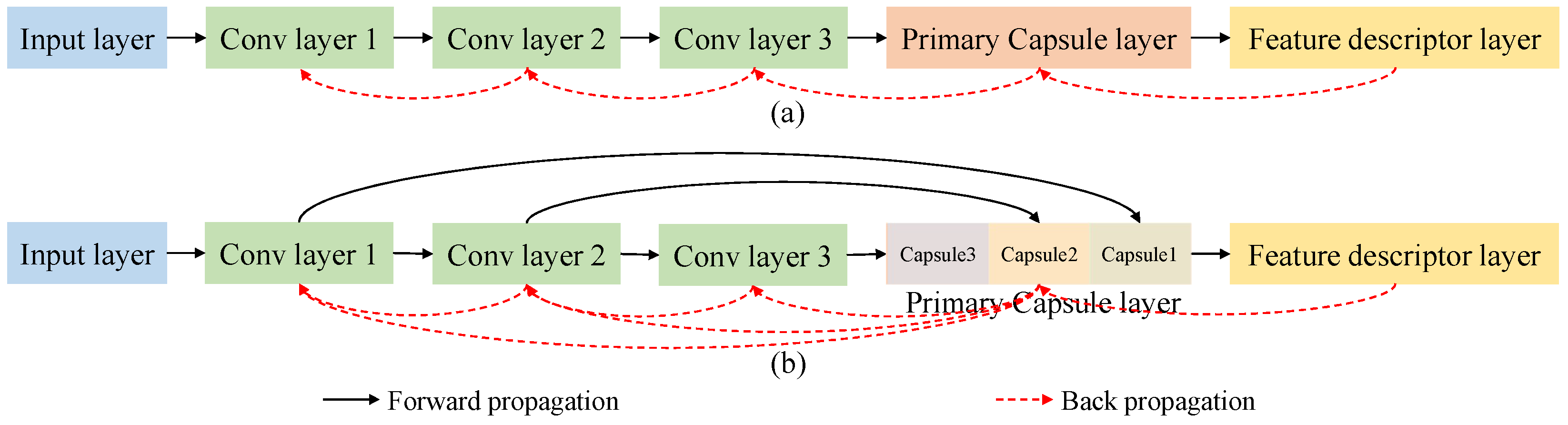

- We propose a Siamese dense capsule network (SD-CapsNet) to implement feature descriptor extraction and matching. SD-CapsNet designs a dense connection to construct the primary capsules, which shortens the backpropagation distance and makes the primary capsules contain both deep semantic information and shallow detail information;

- (3)

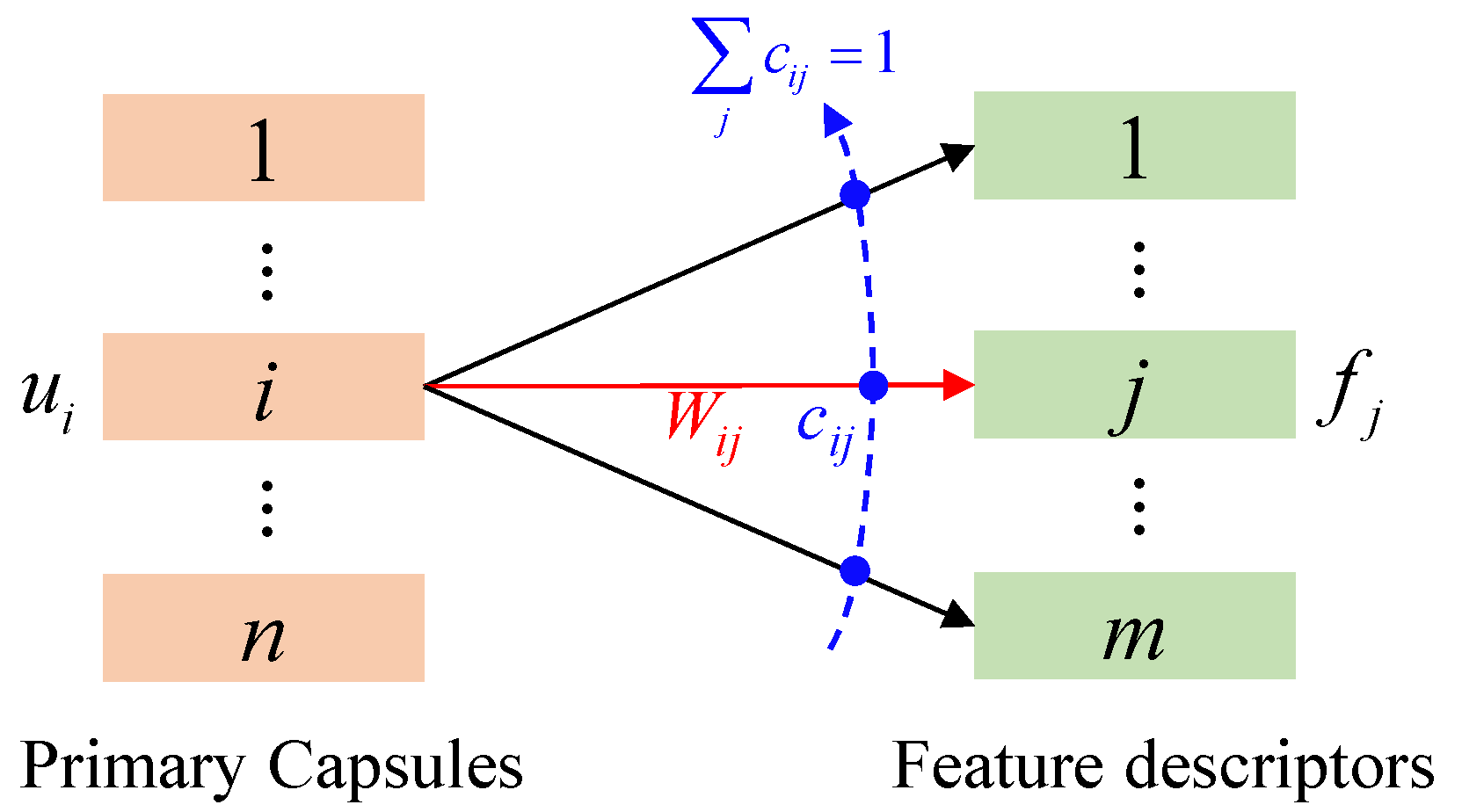

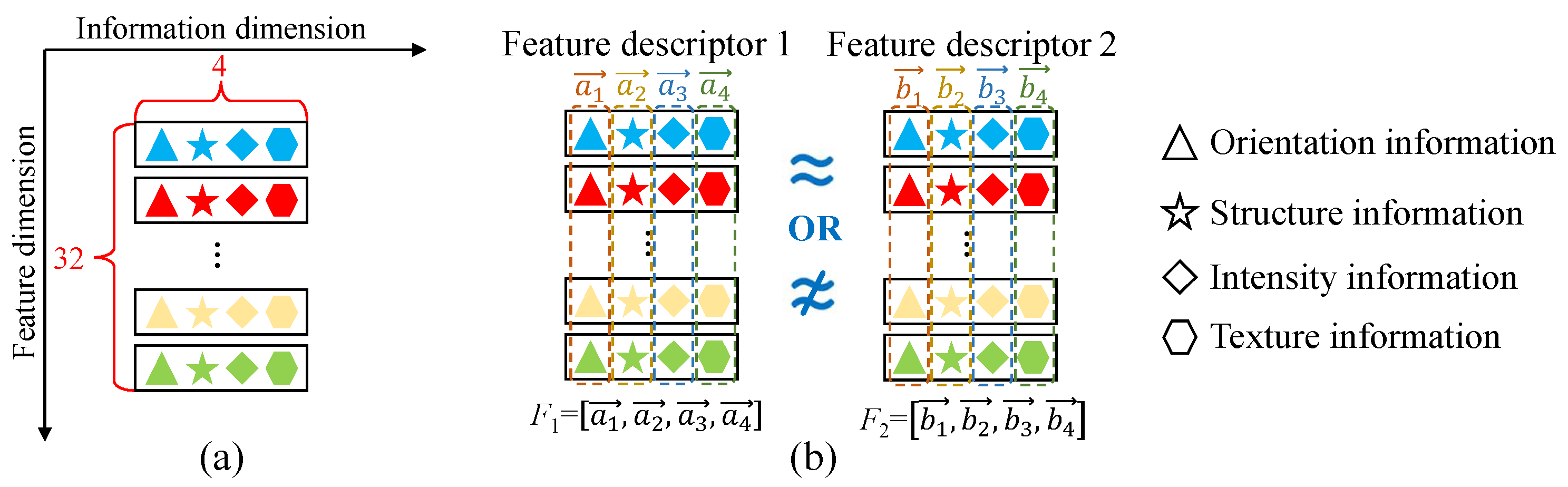

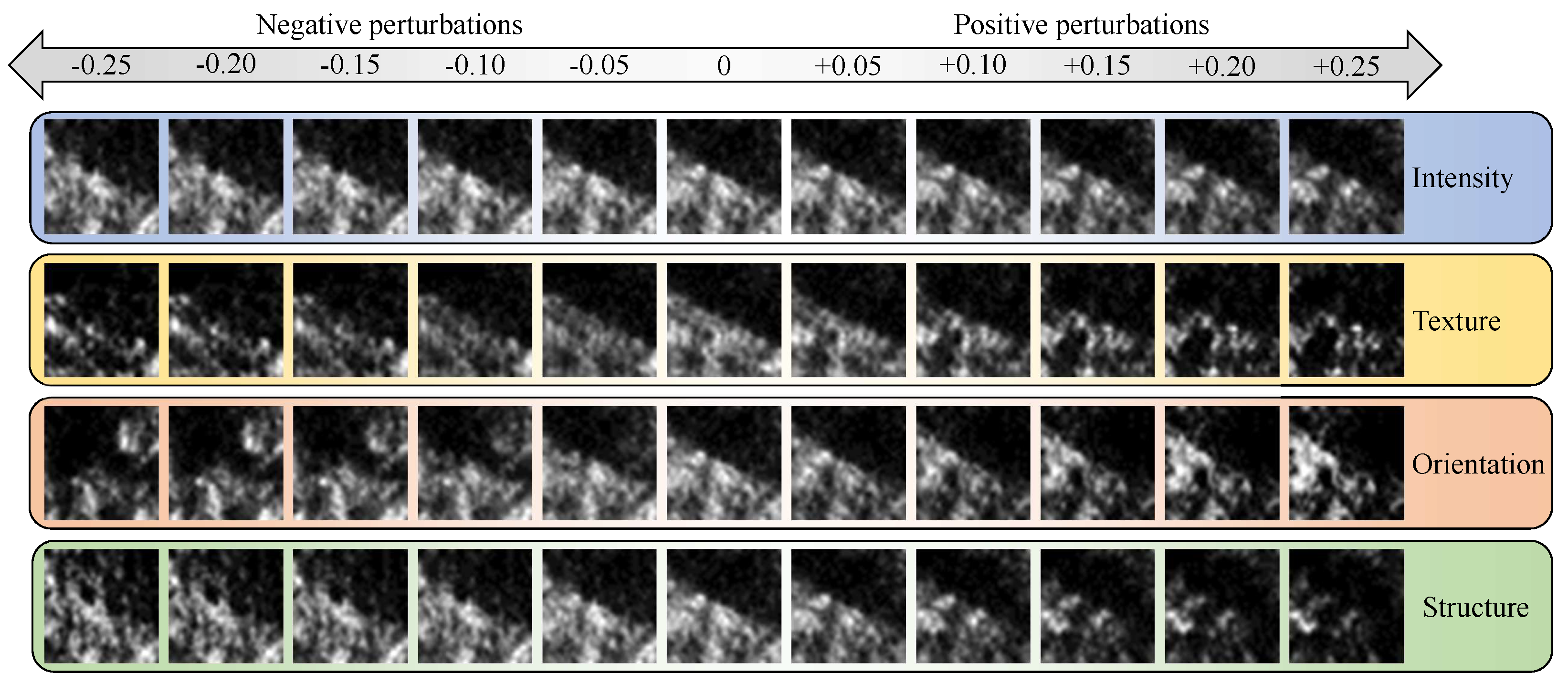

- We innovatively construct feature descriptors in the capsule form and verify that each dimension of the capsule corresponds to intensity, texture, orientation, and structure information. Furthermore, we define the L2 distance between capsules for feature descriptors in the capsule form and combine this distance with the hard L2 loss function to implement the training of SD-CapsNet.
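
The capsule-form distance and the hard L2 loss named in the third contribution can be illustrated with a minimal sketch. The exact definitions are not given in this section, so everything below is an assumption: `capsule_l2_distance` sums the Euclidean distances between corresponding capsules, and `hardest_in_batch_loss` is a HardNet-style margin loss that uses the closest non-matching descriptor in the batch as the negative. The function names and the `margin` parameter are hypothetical.

```python
import math


def capsule_l2_distance(desc_a, desc_b):
    """Assumed capsule-form distance: sum of per-capsule Euclidean distances.

    Each descriptor is a list of capsules; each capsule is a list of floats
    (for example 32 capsules of dimension 4).
    """
    if len(desc_a) != len(desc_b):
        raise ValueError("descriptors must have the same number of capsules")
    return sum(math.dist(ca, cb) for ca, cb in zip(desc_a, desc_b))


def hardest_in_batch_loss(anchors, positives, margin=1.0):
    """Assumed hard L2 loss: margin loss against the hardest in-batch negative.

    anchors[i] and positives[i] are descriptors of the same keypoint in the
    two images; every other pairing in the batch is treated as a negative.
    """
    n = len(anchors)
    if n < 2 or n != len(positives):
        raise ValueError("need at least two matching anchor/positive pairs")
    # dist[i][j] is the distance between anchor i and positive j.
    dist = [[capsule_l2_distance(a, p) for p in positives] for a in anchors]
    total = 0.0
    for i in range(n):
        positive_distance = dist[i][i]
        # Hardest negative: the closest non-matching descriptor in either direction.
        hardest_negative = min(
            min(dist[i][j], dist[j][i]) for j in range(n) if j != i
        )
        total += max(0.0, margin + positive_distance - hardest_negative)
    return total / n
```

With this form, the loss is zero once every matching pair is closer than its hardest negative by at least `margin`, so training effort concentrates on the most confusable descriptor pairs.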

2. Methodology

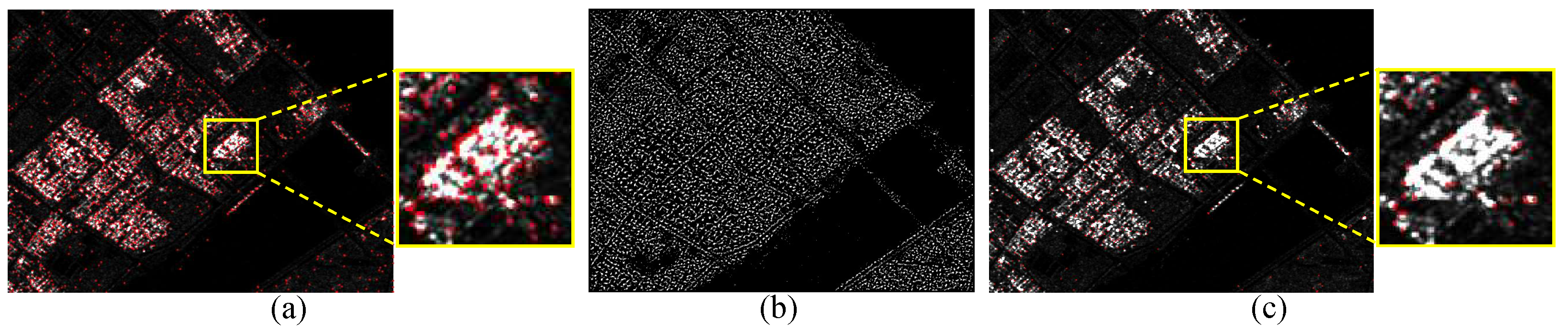

2.1. Texture Constraint-Based Phase Congruency Keypoint Detector

2.2. Siamese Dense Capsule Network-Based Feature Descriptor Extractor

3. Experimental Results and Analysis

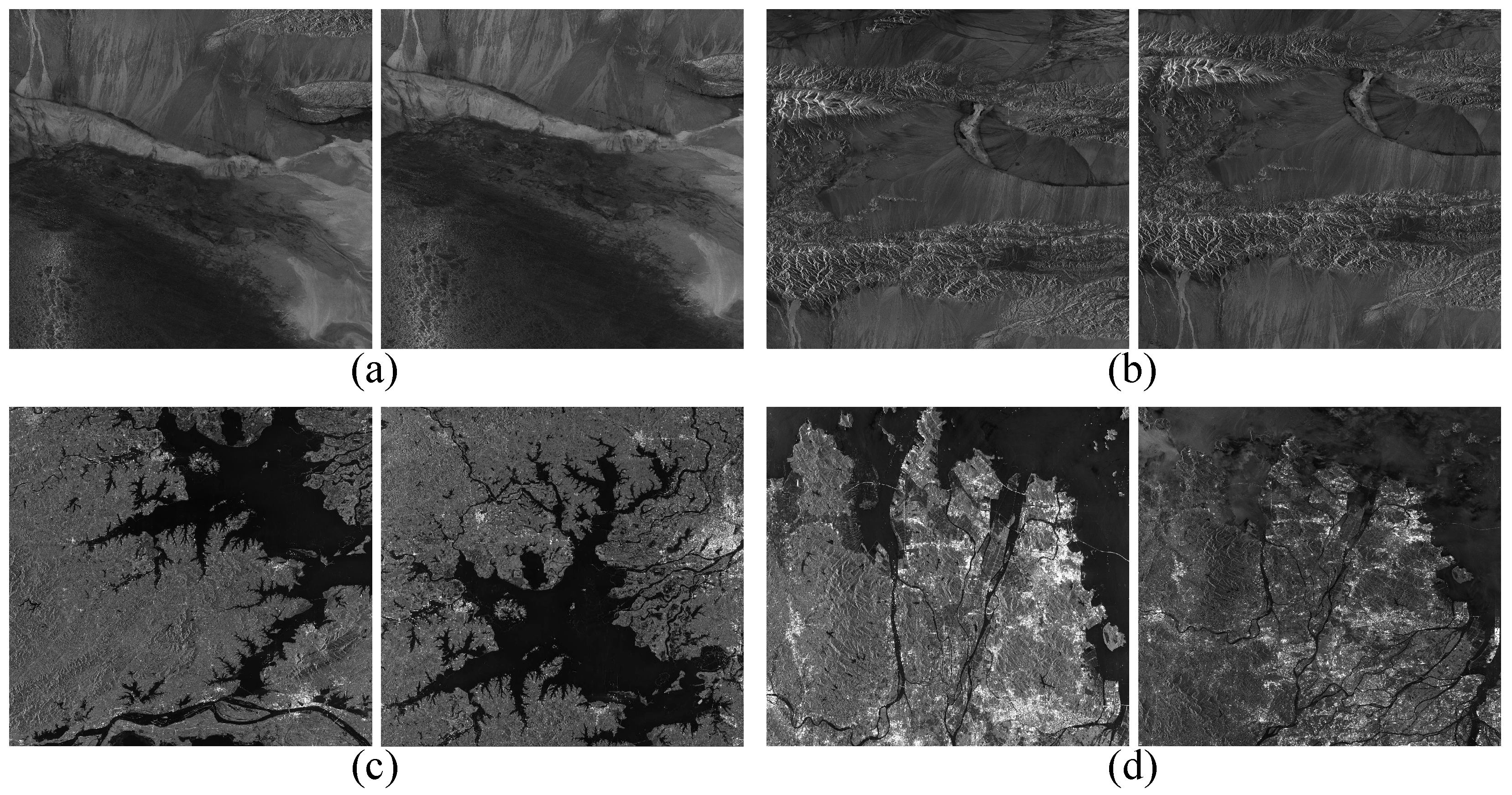

3.1. Data Description and Parameter Settings

- SAR-SIFT [13] replaces DoG with SAR-Harris for keypoint detection and uses a circular descriptor to describe spatial context information;

- SOSNet [42] applies second-order similarity regularization to local descriptor learning and proposes SOSLoss for training registration models with few training samples;

- Sim-CSPNet [20] proposes a feature interaction-based keypoint detector and uses a Siamese cross-stage partial network to generate feature descriptors.

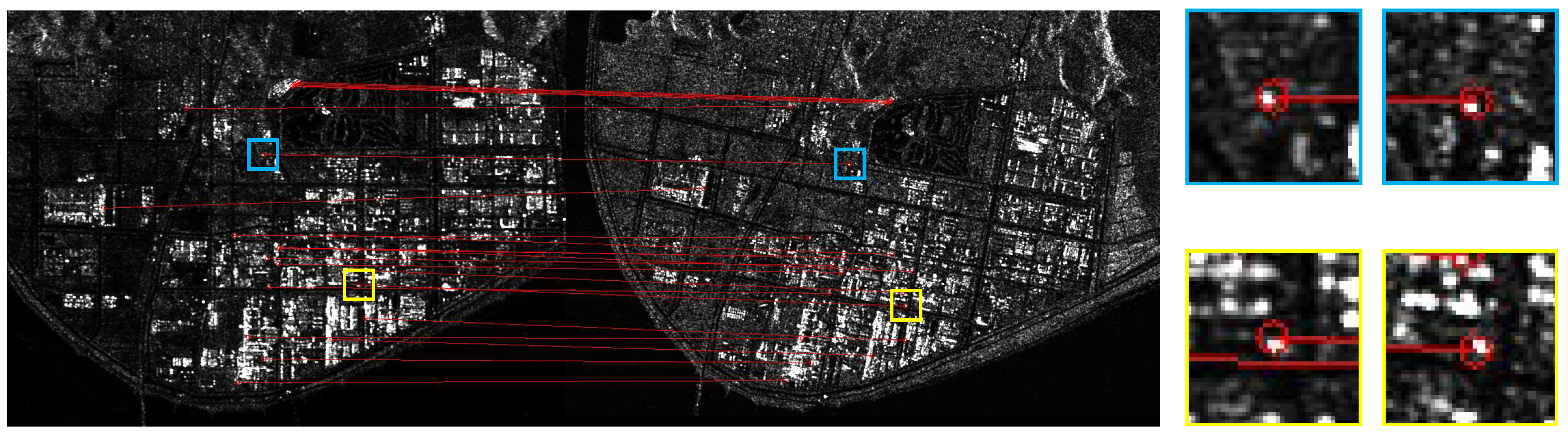

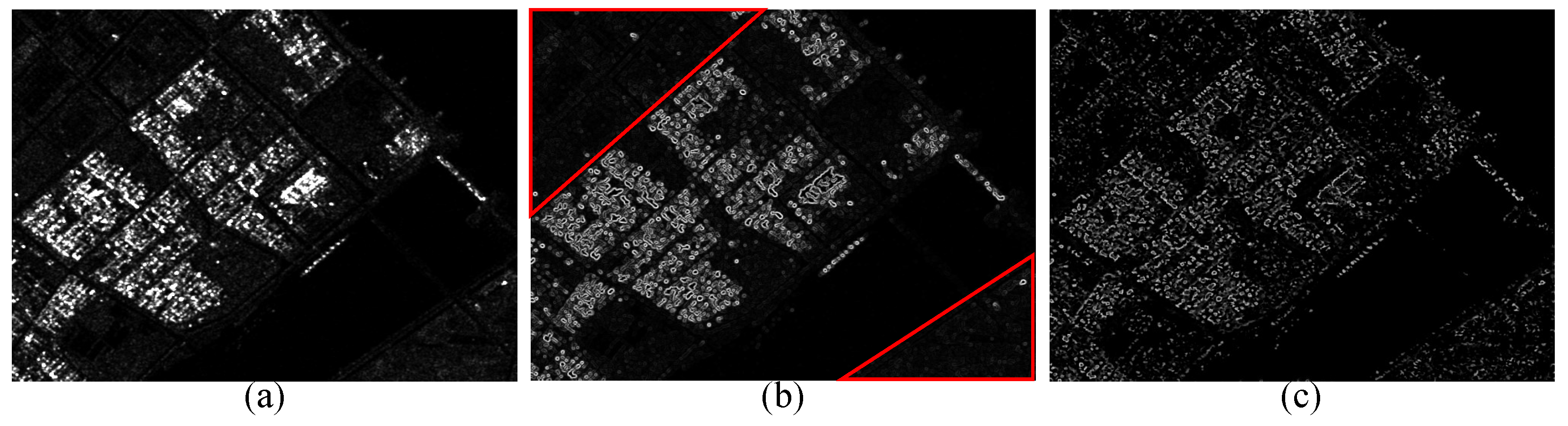

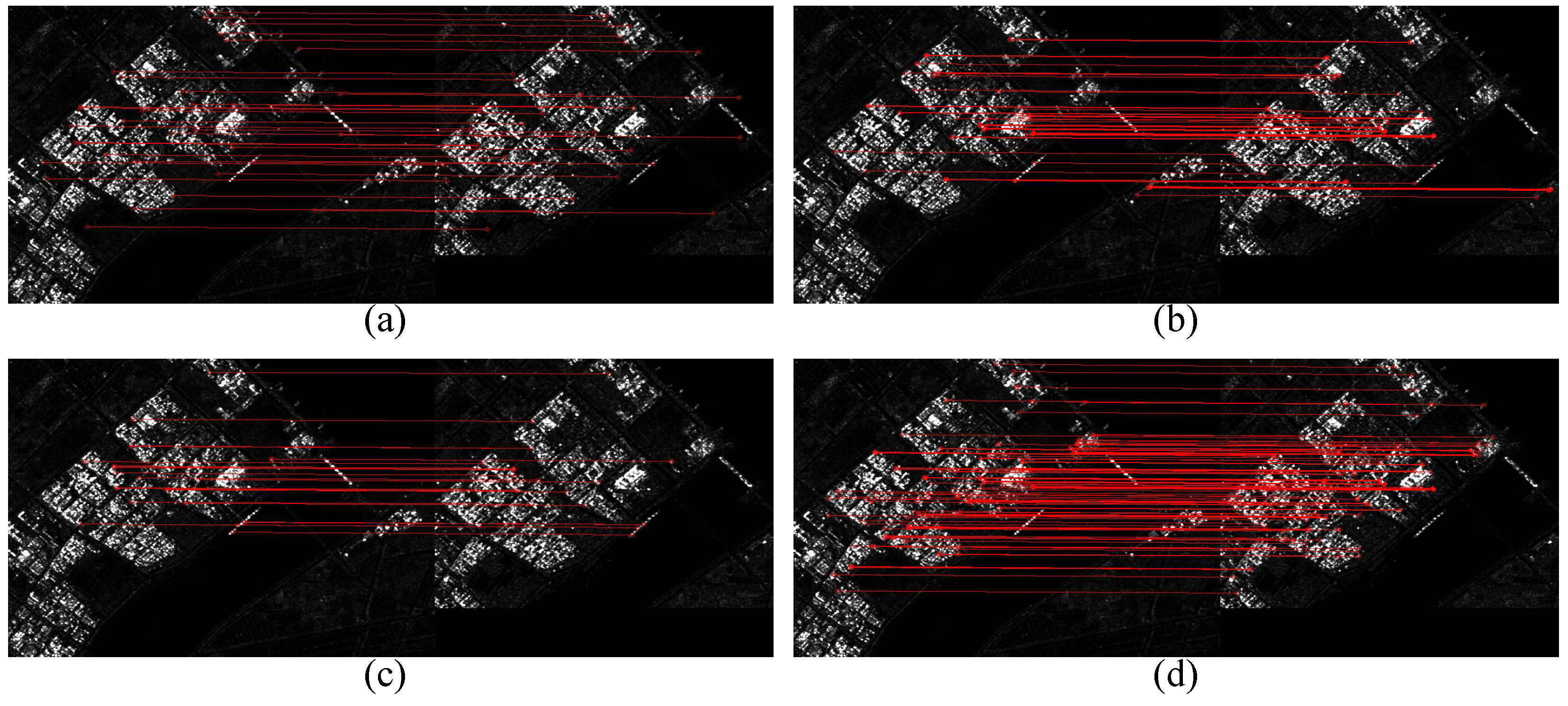

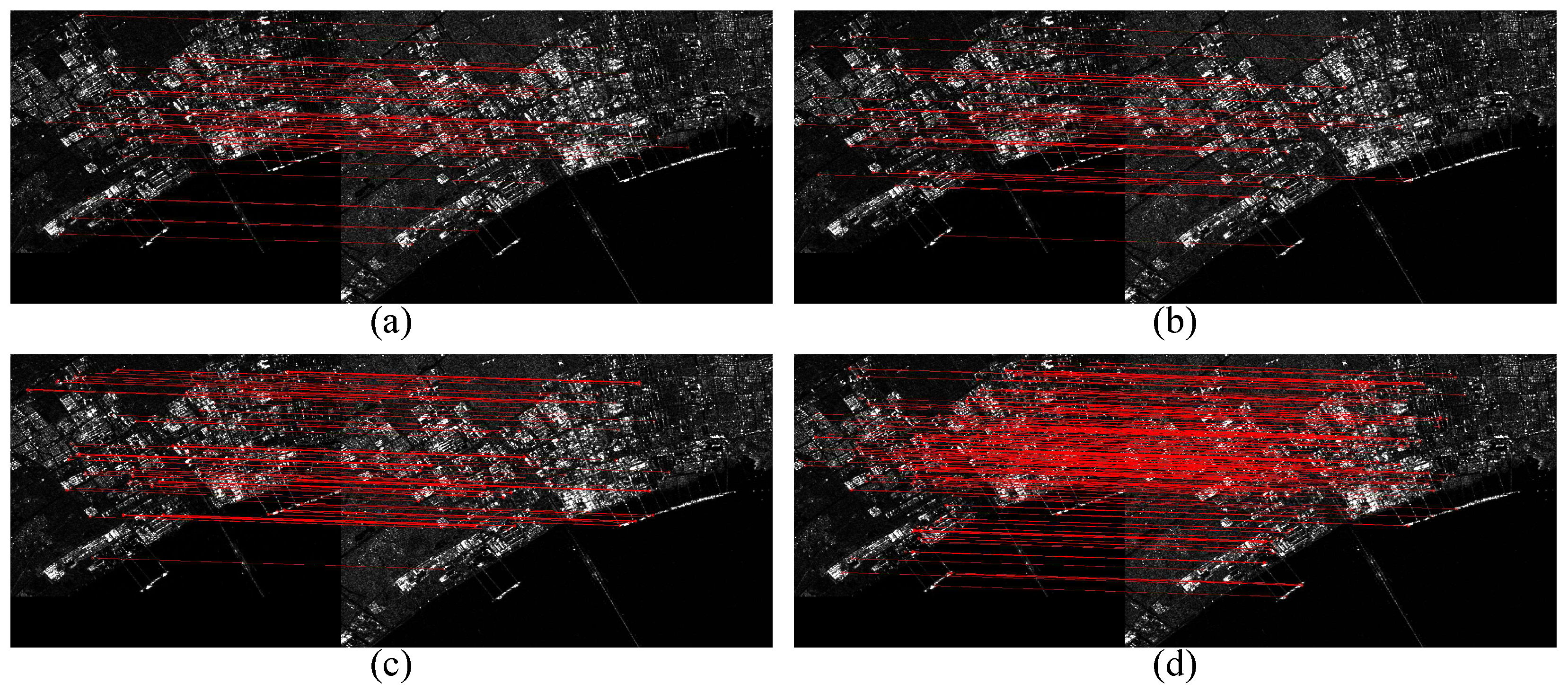

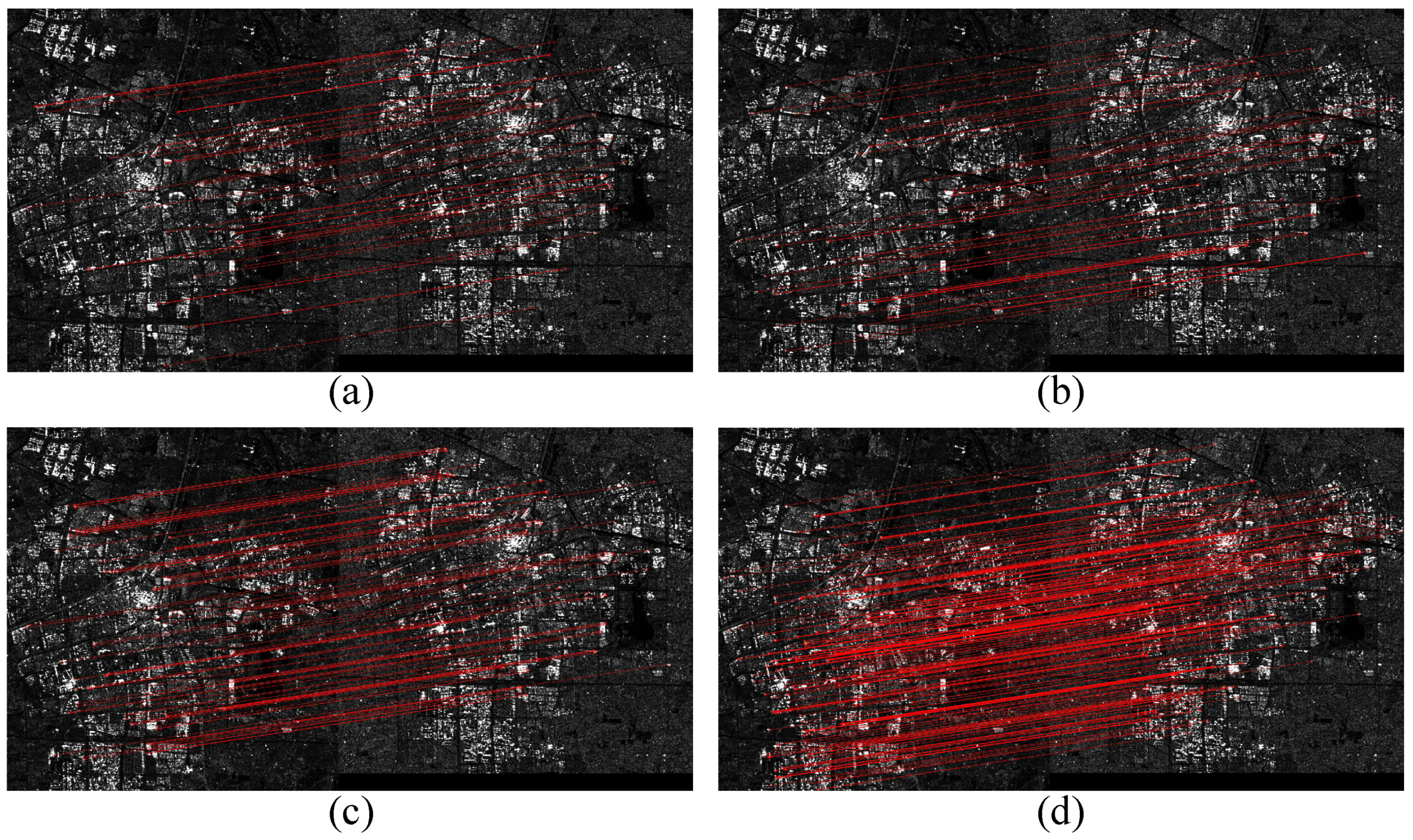

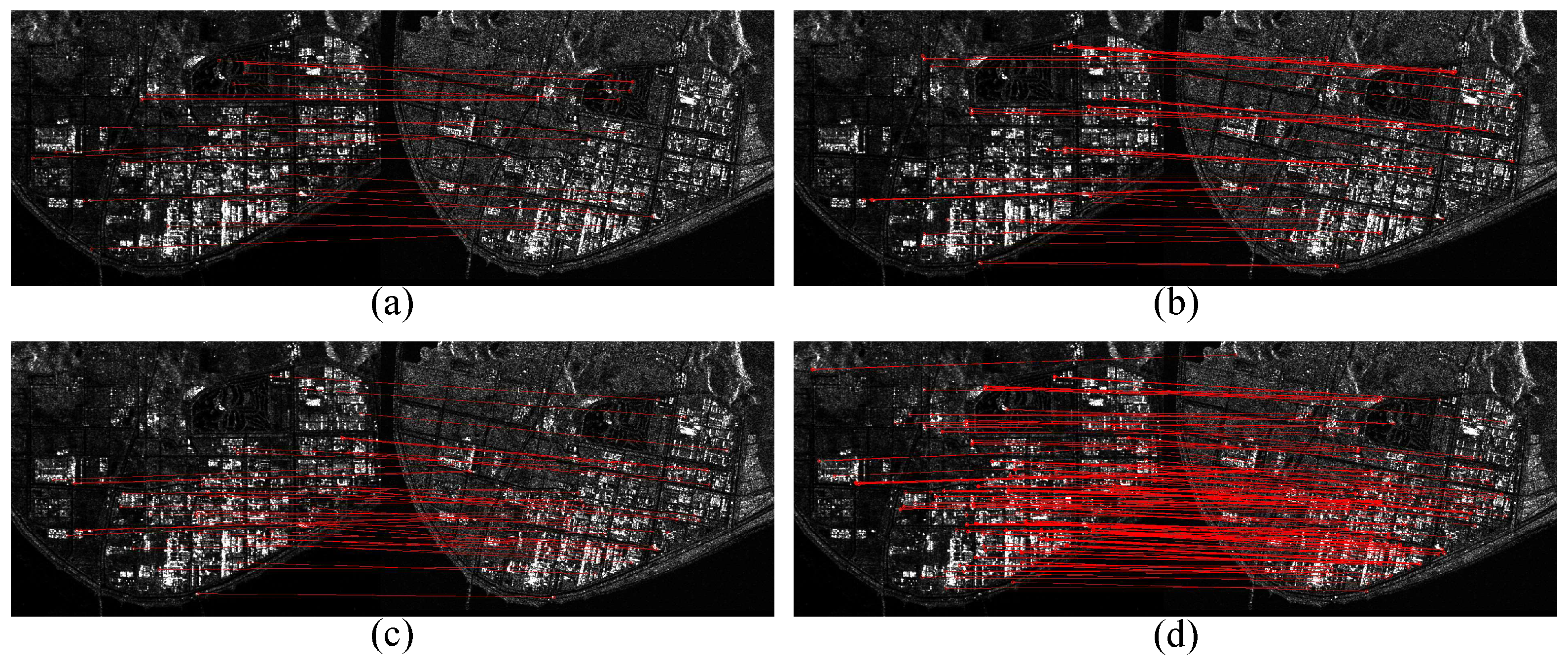

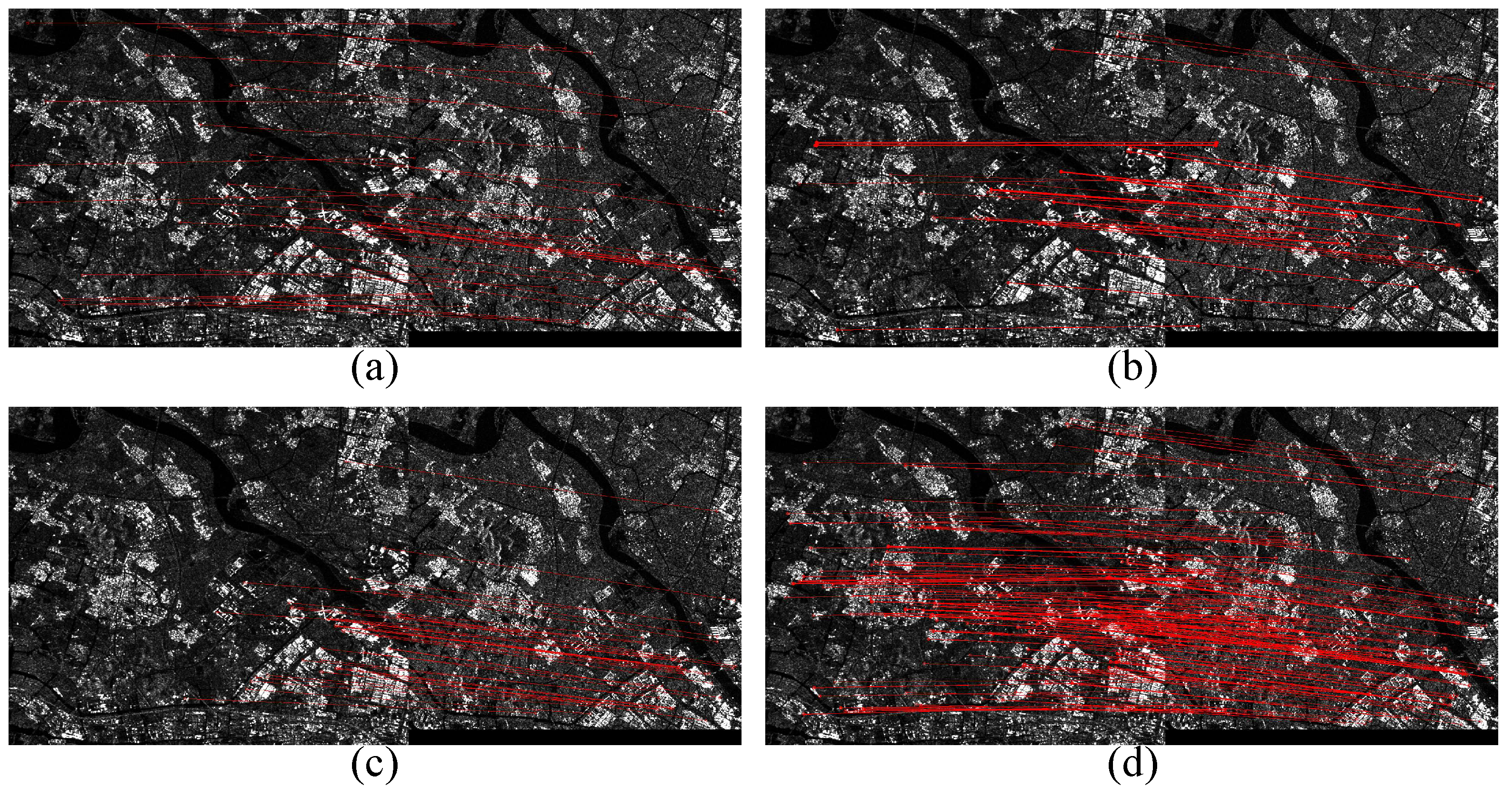

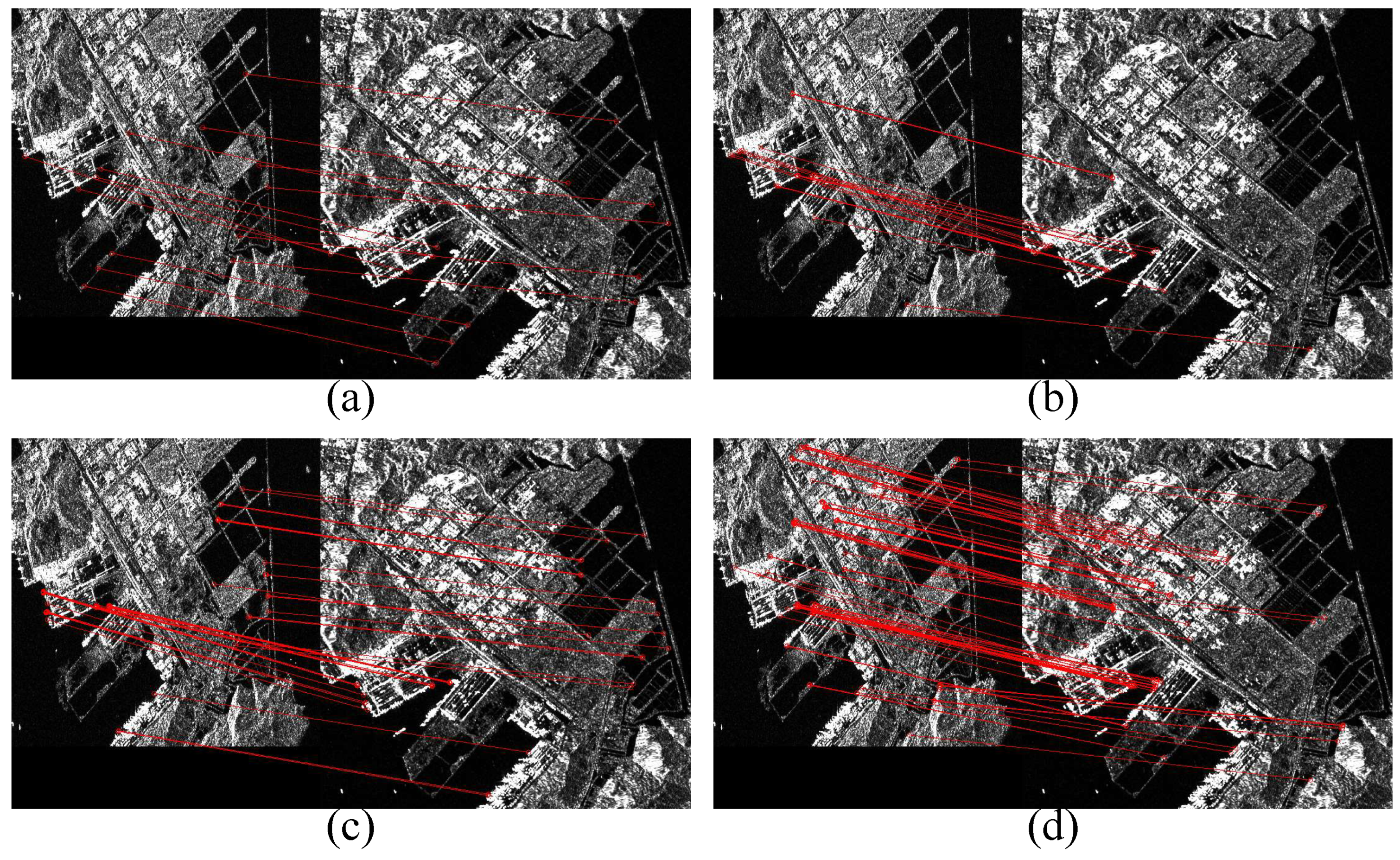

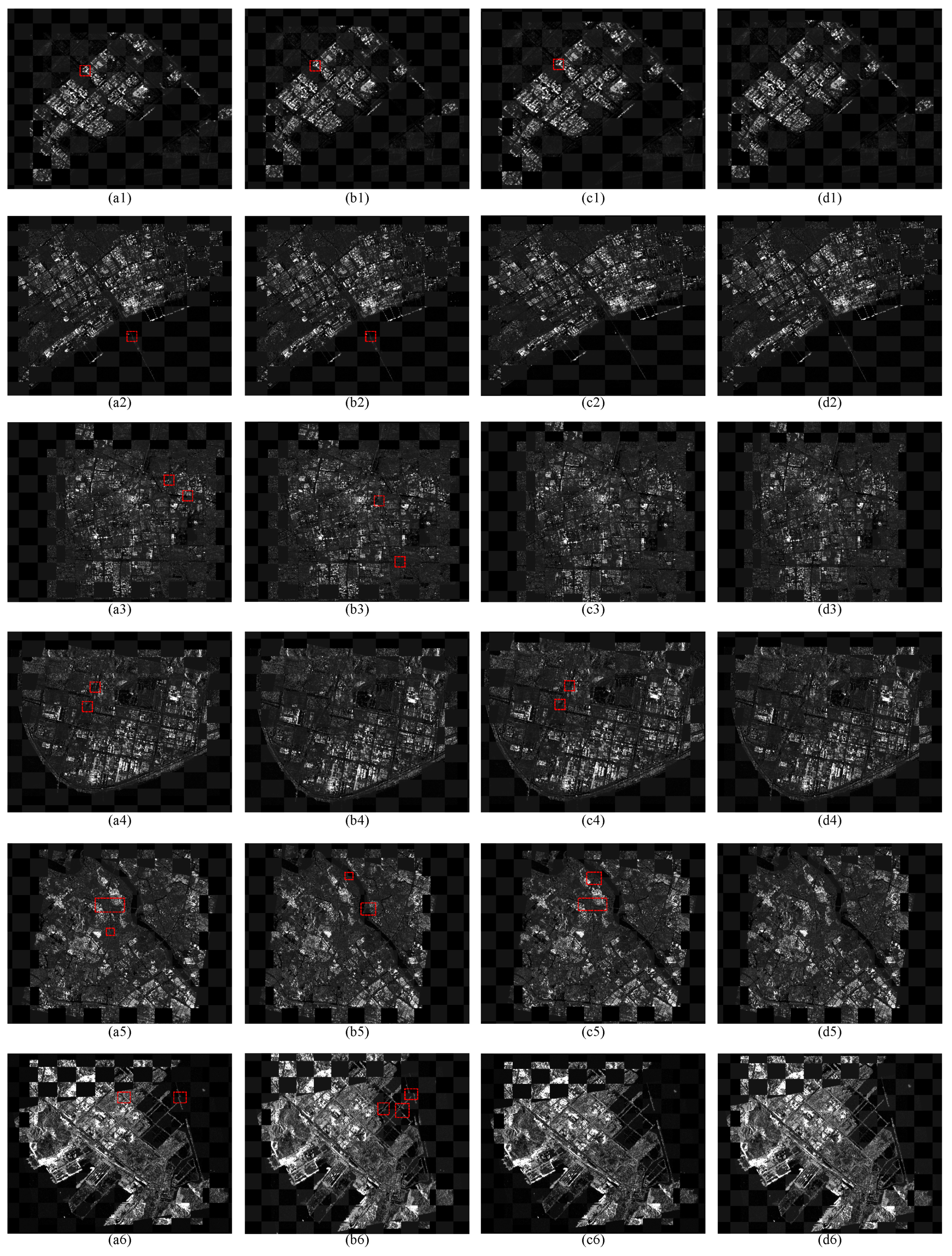

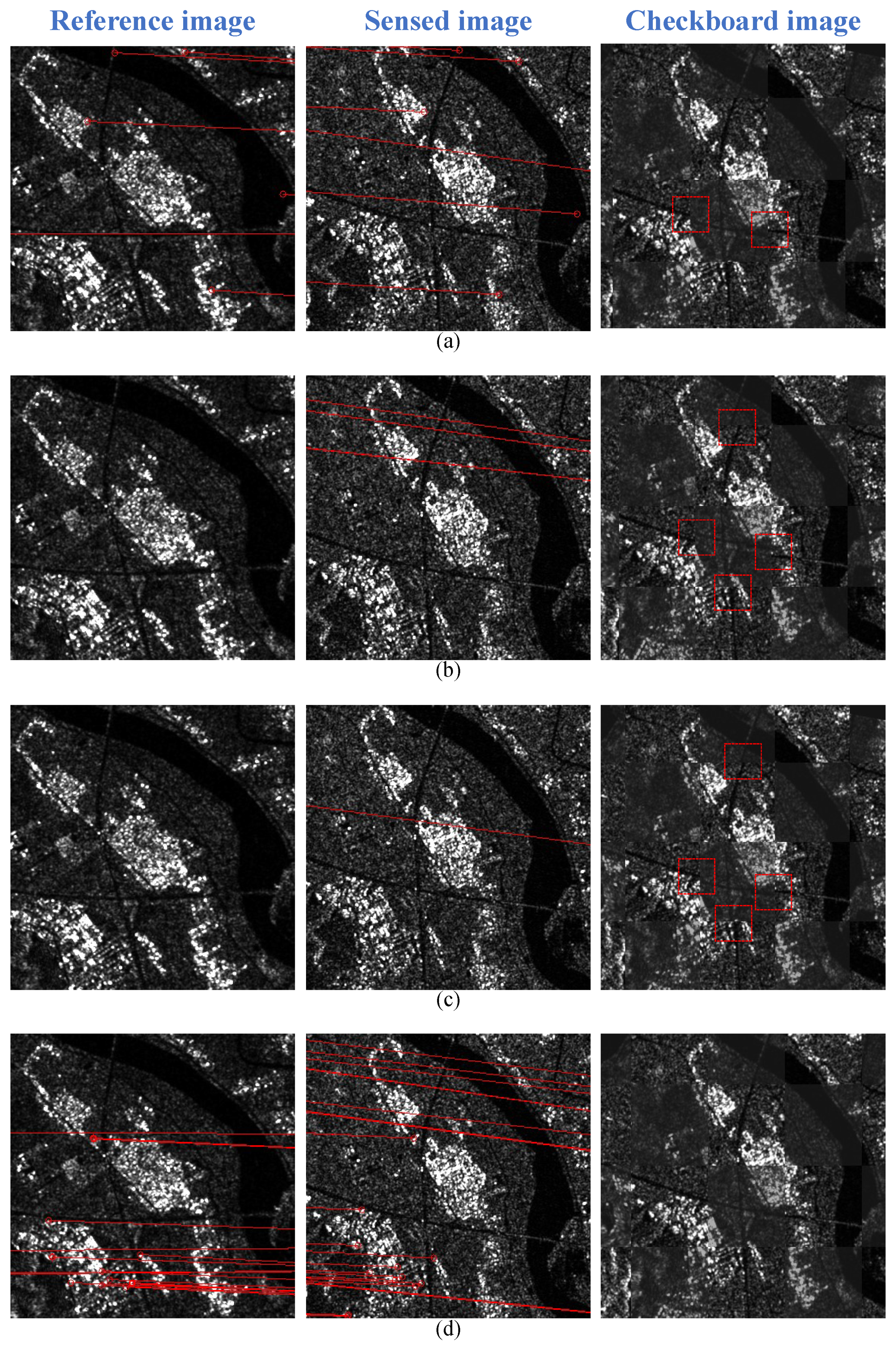

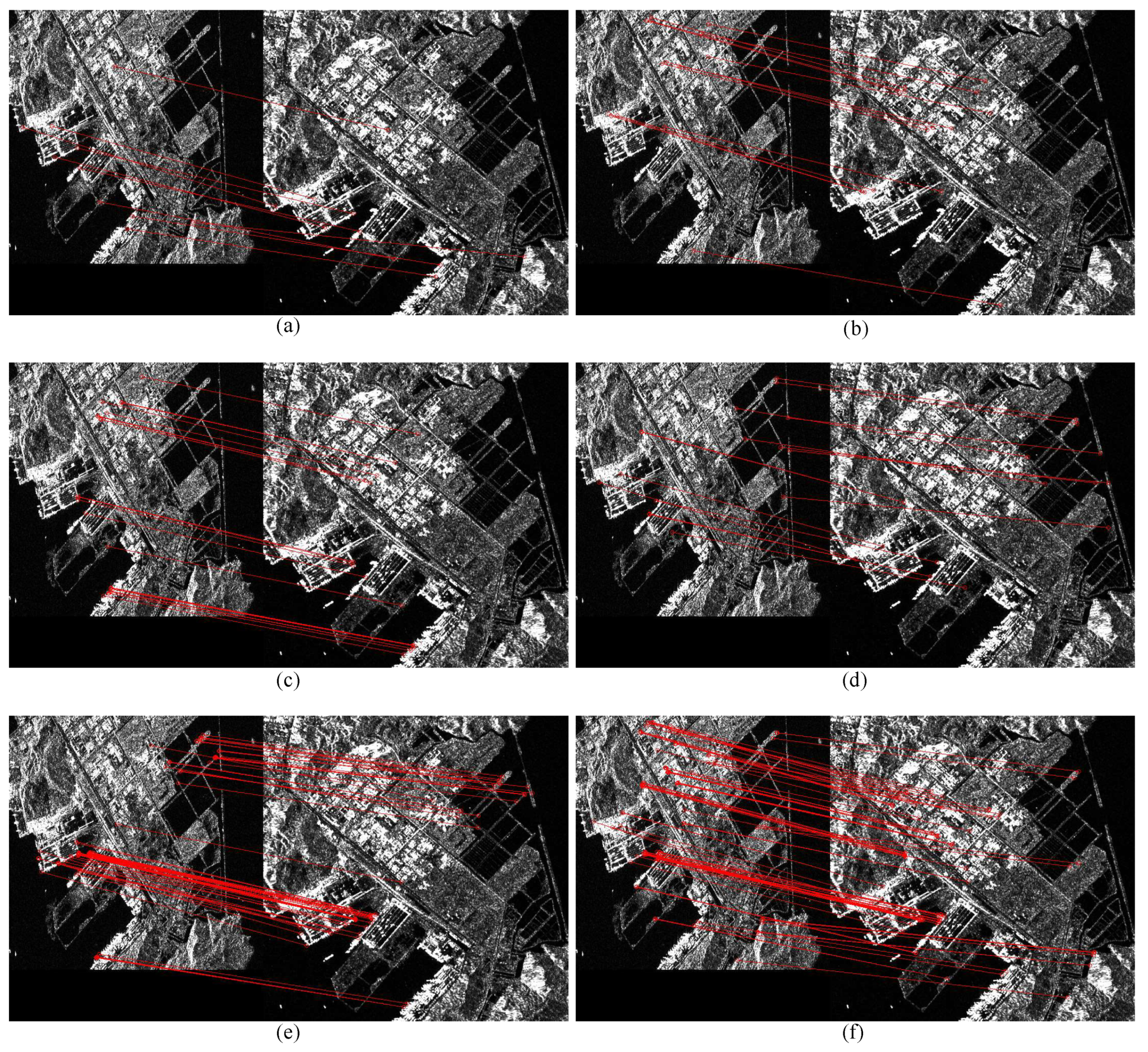

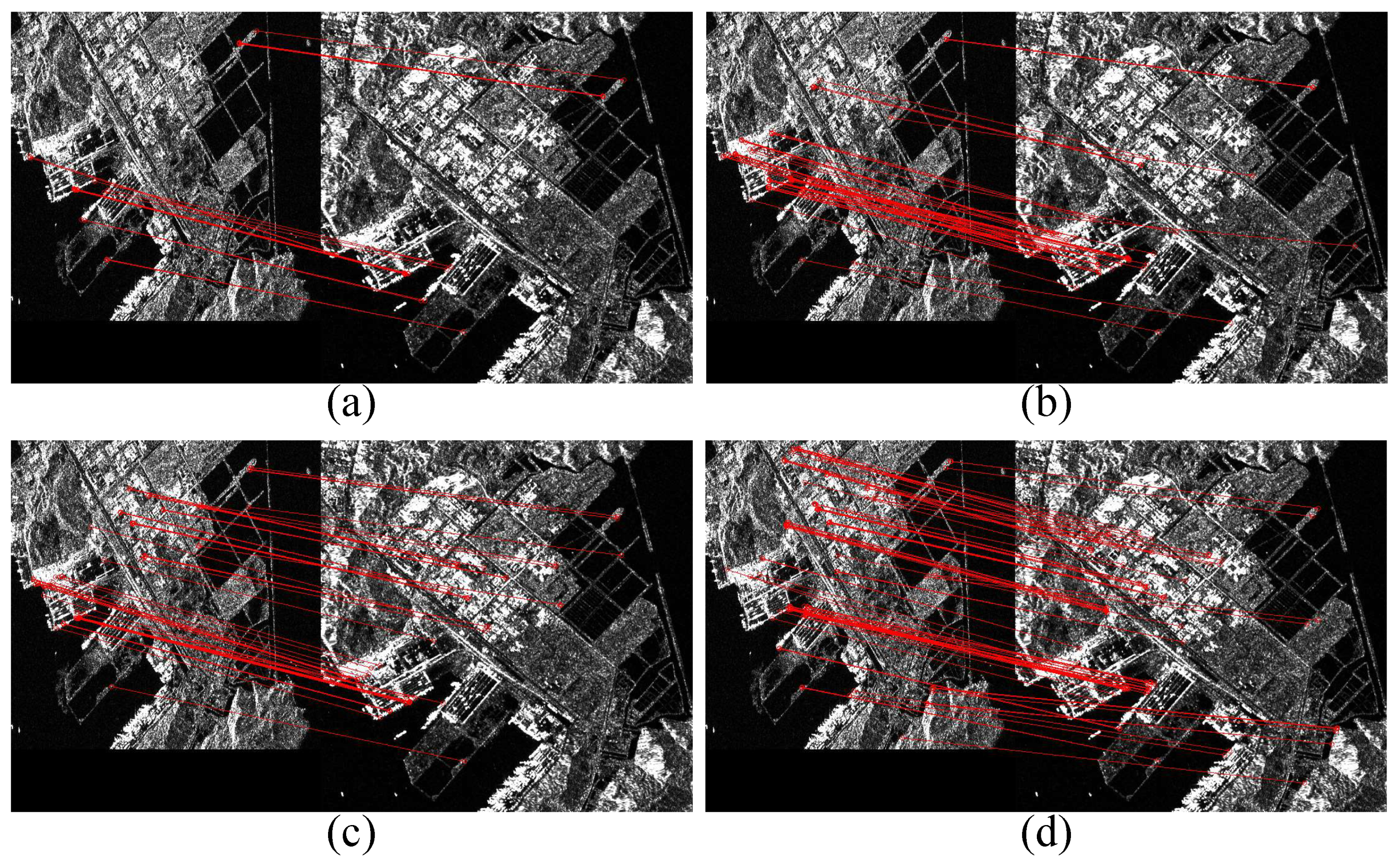

3.2. Registration Results

4. Discussion

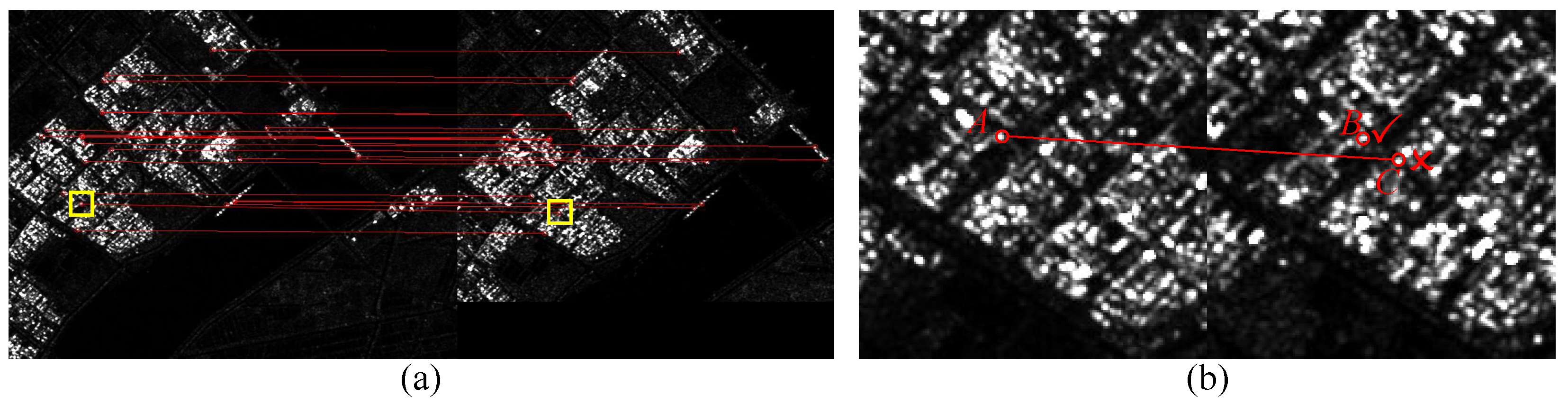

4.1. Effectiveness of the TCPC Keypoint Detector

4.2. Effectiveness of the SD-CapsNet Feature Descriptor Extractor

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Rambour, C.; Audebert, N.; Koeniguer, E.; Le Saux, B.; Crucianu, M.; Datcu, M. Flood detection in time series of optical and SAR images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1343–1346.

- Cheng, J.; Zhang, F.; Xiang, D.; Yin, Q.; Zhou, Y. PolSAR image classification with multiscale superpixel-based graph convolutional network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5209314.

- Zhu, Y.; Yao, X.; Yao, L.; Yao, C. Detection and characterization of active landslides with multisource SAR data and remote sensing in western Guizhou, China. Nat. Hazards 2022, 111, 973–994.

- Sun, Y.; Lei, L.; Li, X.; Tan, X.; Kuang, G. Structure consistency-based graph for unsupervised change detection with homogeneous and heterogeneous remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4700221.

- Gong, M.; Zhou, Z.; Ma, J. Change detection in synthetic aperture radar images based on image fusion and fuzzy clustering. IEEE Trans. Image Process. 2011, 21, 2141–2151.

- Fornaro, G.; Pauciullo, A.; Reale, D.; Verde, S. Multilook SAR tomography for 3-D reconstruction and monitoring of single structures applied to COSMO-SKYMED data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2776–2785.

- Xie, H.; Pierce, L.E.; Ulaby, F.T. Mutual information based registration of SAR images. In Proceedings of the 2003 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2003), Toulouse, France, 21–25 July 2003; Volume 6, pp. 4028–4031.

- Wang, Y.; Yu, Q.; Yu, W. An improved Normalized Cross Correlation algorithm for SAR image registration. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 2086–2089.

- Ye, Y.; Shen, L. HOPC: A novel similarity metric based on geometric structural properties for multi-modal remote sensing image matching. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 9.

- Xiang, Y.; Wang, F.; You, H. An automatic and novel SAR image registration algorithm: A case study of the Chinese GF-3 satellite. Sensors 2018, 18, 672.

- Cordón, O.; Damas, S. Image registration with iterated local search. J. Heuristics 2006, 12, 73–94.

- Wu, Y.; Ma, W.; Miao, Q.; Wang, S. Multimodal continuous ant colony optimization for multisensor remote sensing image registration with local search. Swarm Evol. Comput. 2019, 47, 89–95.

- Dellinger, F.; Delon, J.; Gousseau, Y.; Michel, J.; Tupin, F. SAR-SIFT: A SIFT-like algorithm for SAR images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 453–466.

- Paul, S.; Pati, U.C. SAR image registration using an improved SAR-SIFT algorithm and Delaunay-triangulation-based local matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2958–2966.

- Wang, M.; Zhang, J.; Deng, K.; Hua, F. Combining optimized SAR-SIFT features and RD model for multisource SAR image registration. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5206916.

- Durgam, U.K.; Paul, S.; Pati, U.C. SURF based matching for SAR image registration. In Proceedings of the 2016 IEEE Students’ Conference on Electrical, Electronics and Computer Science (SCEECS), Bhopal, India, 5–6 March 2016; pp. 1–5.

- Liu, R.; Wang, Y. SAR image matching based on speeded up robust feature. In Proceedings of the 2009 WRI Global Congress on Intelligent Systems, Xiamen, China, 19–21 May 2009; Volume 4, pp. 518–522.

- Pourfard, M.; Hosseinian, T.; Saeidi, R.; Motamedi, S.A.; Abdollahifard, M.J.; Mansoori, R.; Safabakhsh, R. KAZE-SAR: SAR image registration using KAZE detector and modified SURF descriptor for tackling speckle noise. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5207612.

- Eltanany, A.S.; Amein, A.S.; Elwan, M.S. A modified corner detector for SAR images registration. Int. J. Eng. Res. Afr. 2021, 53, 123–156.

- Xiang, D.; Xu, Y.; Cheng, J.; Hu, C.; Sun, X. An Algorithm Based on a Feature Interaction-based Keypoint Detector and Sim-CSPNet for SAR Image Registration. J. Radars 2022, 11, 1081–1097.

- Quan, D.; Wang, S.; Ning, M.; Xiong, T.; Jiao, L. Using deep neural networks for synthetic aperture radar image registration. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 2799–2802.

- Zhang, H.; Ni, W.; Yan, W.; Xiang, D.; Wu, J.; Yang, X.; Bian, H. Registration of multimodal remote sensing image based on deep fully convolutional neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3028–3042.

- Fan, Y.; Wang, F.; Wang, H. A transformer-based coarse-to-fine wide-swath SAR image registration method under weak texture conditions. Remote Sens. 2022, 14, 1175.

- Kayhan, O.S.; van Gemert, J.C. On translation invariance in CNNs: Convolutional layers can exploit absolute spatial location. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14274–14285.

- Cheng, J.; Zhang, F.; Xiang, D.; Yin, Q.; Zhou, Y.; Wang, W. PolSAR image land cover classification based on hierarchical capsule network. Remote Sens. 2021, 13, 3132.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Proceedings of the NIPS 2017, Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

- Deng, F.; Pu, S.; Chen, X.; Shi, Y.; Yuan, T.; Pu, S. Hyperspectral image classification with capsule network using limited training samples. Sensors 2018, 18, 3153.

- Xiang, C.; Zhang, L.; Tang, Y.; Zou, W.; Xu, C. MS-CapsNet: A novel multi-scale capsule network. IEEE Signal Process. Lett. 2018, 25, 1850–1854.

- Yang, S.; Lee, F.; Miao, R.; Cai, J.; Chen, L.; Yao, W.; Kotani, K.; Chen, Q. RS-CapsNet: An advanced capsule network. IEEE Access 2020, 8, 85007–85018.

- Phaye, S.S.R.; Sikka, A.; Dhall, A.; Bathula, D. Dense and diverse capsule networks: Making the capsules learn better. arXiv 2018, arXiv:1805.04001.

- Fan, J.; Wu, Y.; Wang, F.; Zhang, Q.; Liao, G.; Li, M. SAR image registration using phase congruency and nonlinear diffusion-based SIFT. IEEE Geosci. Remote Sens. Lett. 2014, 12, 562–566.

- Wang, L.; Sun, M.; Liu, J.; Cao, L.; Ma, G. A robust algorithm based on phase congruency for optical and SAR image registration in suburban areas. Remote Sens. 2020, 12, 3339.

- Goncalves, H.; Corte-Real, L.; Goncalves, J.A. Automatic image registration through image segmentation and SIFT. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2589–2600.

- Li, Y.; Liu, L.; Wang, L.; Li, D.; Zhang, M. Fast SIFT algorithm based on Sobel edge detector. In Proceedings of the 2012 2nd International Conference on Consumer Electronics, Communications and Networks (CECNet), Yichang, China, 21–23 April 2012; pp. 1820–1823.

- Ye, Y.; Wang, M.; Hao, S.; Zhu, Q. A novel keypoint detector combining corners and blobs for remote sensing image registration. IEEE Geosci. Remote Sens. Lett. 2020, 18, 451–455.

- Kovesi, P. Phase congruency detects corners and edges. In Proceedings of the Australian Pattern Recognition Society Conference: DICTA 2003, Sydney, Australia, 10–12 December 2003.

- Guo, Z.; Zhang, L.; Zhang, D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010, 19, 1657–1663.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Wang, Z.; Liu, T. Two-stage method based on triplet margin loss for pig face recognition. Comput. Electron. Agric. 2022, 194, 106737.

- Tian, Y.; Yu, X.; Fan, B.; Wu, F.; Heijnen, H.; Balntas, V. SOSNet: Second order similarity regularization for local descriptor learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11016–11025.

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Part I, pp. 430–443.

| Block | Layer | Input Size | Output Size |
|---|---|---|---|
| Input layer | One column of the feature descriptor | 32 × 1 | |
| Fully connected layer | Linear (32, 256); ReLU | 32 × 1 | 256 × 1 |
| Fully connected layer | Linear (256, 512); ReLU | 256 × 1 | 512 × 1 |
| Fully connected layer | Linear (512, 1024); Sigmoid | 512 × 1 | 1024 × 1 |
| Output layer | Reshape | 1024 × 1 | 32 × 32 |
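
The table above reads as a small fully connected network that maps one 32-element column of the feature descriptor to a 32 × 32 output. The sketch below traces that shape flow in plain Python under stated assumptions: the weights are random placeholders rather than trained values, and the names `LAYER_SIZES`, `init_layers`, and `decode` are hypothetical. It is meant only to show how the listed layer sizes and activations fit together.

```python
import math
import random

# Layer widths taken from the table: Linear(32, 256), Linear(256, 512), Linear(512, 1024).
LAYER_SIZES = [32, 256, 512, 1024]


def init_layers(sizes, seed=0):
    """Create placeholder (weights, biases) pairs; a real model would load trained values."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def relu(x):
    return x if x > 0.0 else 0.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode(column, layers):
    """Map a 32-element descriptor column to a 32 x 32 grid with values in (0, 1)."""
    if len(column) != LAYER_SIZES[0]:
        raise ValueError("expected a 32-element descriptor column")
    activations = [relu, relu, sigmoid]  # activation order as listed in the table
    x = list(column)
    for (weights, biases), act in zip(layers, activations):
        x = [act(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(weights, biases)]
    # Reshape the 1024-element output into 32 rows of 32 values.
    return [x[r * 32:(r + 1) * 32] for r in range(32)]
```

The final Sigmoid keeps every output in (0, 1), which suits a normalized 32 × 32 patch.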

| Site | Size | Resolution (Range × Azimuth) |
|---|---|---|
| Pair 1 | 836 × 583 | 10 m × 10 m |
| | 663 × 488 | |
| Pair 2 | 1027 × 752 | 10 m × 10 m |
| | 1339 × 911 | |
| Pair 3 | 1095 × 1201 | 10 m × 10 m |
| | 1171 × 1143 | |
| Pair 4 | 918 × 683 | 10 m × 10 m |
| | 976 × 666 | |
| Pair 5 | 1135 × 961 | 10 m × 10 m |
| | 945 × 916 | |
| Pair 6 | 500 × 499 | 11 m × 14 m |
| | 600 × 600 | |

| Block | Layer | Input Size | Output Size (Width × Height × Channel) |
|---|---|---|---|
| Input layer | | 32 × 32 × 1 | |
| Conv layer 1 | Conv (3 × 3), stride (1), ReLU | 32 × 32 × 1 | 32 × 32 × 64 |
| Low-level capsule layer | Conv (9 × 9), stride (2), ReLU | 32 × 32 × 64 | 12 × 12 × 64 |
| | Reshape | 12 × 12 × 64 | 1152 × 8 |
| Conv layer 2 | Conv (3 × 3), stride (1), ReLU | 32 × 32 × 64 | 32 × 32 × 128 |
| Medium-level capsule layer | Conv (9 × 9), stride (2), ReLU | 32 × 32 × 128 | 12 × 12 × 128 |
| | Reshape | 12 × 12 × 128 | 2304 × 8 |
| Conv layer 3 | Conv (3 × 3), stride (1), ReLU | 32 × 32 × 128 | 32 × 32 × 256 |
| High-level capsule layer | Conv (9 × 9), stride (2), ReLU | 32 × 32 × 256 | 12 × 12 × 256 |
| | Reshape | 12 × 12 × 256 | 4608 × 8 |
| Primary capsule layer | Concat | 1152 × 8, 2304 × 8, 4608 × 8 | 8064 × 8 |
| Feature descriptor layer | Dynamic routing | 8064 × 8 | 32 × 4 |
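
The sizes in the table above can be checked with the standard convolution output-size formula. The short sketch below is only an arithmetic check, not the network itself. It assumes no padding for the 9 × 9 stride-2 convolutions and a padding of 1 for the 3 × 3 convolutions (the table does not state padding, but these values reproduce the listed sizes). The helper names are hypothetical.

```python
CAPSULE_DIM = 8  # each primary capsule is an 8-dimensional vector (from the table)

# Output channels of the low-, medium-, and high-level capsule convolutions.
BRANCH_CHANNELS = {"low": 64, "medium": 128, "high": 256}


def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def primary_capsule_counts(input_size=32, kernel=9, stride=2):
    """Number of 8-D capsules produced by each branch after the reshape step."""
    side = conv_output_size(input_size, kernel, stride)
    return {
        name: side * side * channels // CAPSULE_DIM
        for name, channels in BRANCH_CHANNELS.items()
    }


if __name__ == "__main__":
    counts = primary_capsule_counts()
    print(conv_output_size(32, 3, stride=1, padding=1))  # 3 x 3 conv keeps 32
    print(conv_output_size(32, 9, stride=2))             # 9 x 9 stride-2 conv gives 12
    print(counts, sum(counts.values()))
```

Under these assumptions the three branches yield 1152, 2304, and 4608 capsules, which concatenate to the 8064 × 8 primary capsule layer that dynamic routing reduces to the 32 × 4 descriptor.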

| Item | Configuration |
|---|---|
| Platform | Linux |
| Torch | V 1.10.1 |
| CPU | Intel(R) Xeon(R) Silver 4210R |
| Memory | 64 GB |
| GPU | Nvidia GeForce RTX 3090 |
| Video memory | 24 GB |

| Method | Pair 1 NCM | Pair 1 RMSE | Pair 1 Time (s) | Pair 2 NCM | Pair 2 RMSE | Pair 2 Time (s) | Pair 3 NCM | Pair 3 RMSE | Pair 3 Time (s) | Pair 4 NCM | Pair 4 RMSE | Pair 4 Time (s) | Pair 5 NCM | Pair 5 RMSE | Pair 5 Time (s) | Pair 6 NCM | Pair 6 RMSE | Pair 6 Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SAR-SIFT | 33 | 0.77 | 718.51 | 70 | 0.68 | 2496.3 | 46 | 0.77 | 2807.37 | 23 | 0.89 | 975.43 | 40 | 0.76 | 1625.38 | 14 | 0.87 | 369.9 |
| SOSNet | 94 | 0.77 | 28.78 | 60 | 0.72 | 40.42 | 61 | 0.73 | 39.89 | 84 | 0.79 | 18.06 | 118 | 0.84 | 19.19 | 17 | 0.82 | 15.39 |
| Sim-CSPNet | 24 | 0.61 | 3.15 | 121 | 0.63 | 5.63 | 105 | 0.65 | 7.01 | 48 | 0.76 | 5.08 | 43 | 0.74 | 5.68 | 51 | 0.71 | 3.60 |
| SD-CapsNet | 156 | 0.59 | 2.05 | 391 | 0.45 | 5.43 | 369 | 0.46 | 6.75 | 310 | 0.74 | 3.60 | 325 | 0.73 | 5.39 | 93 | 0.66 | 3.39 |
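
The NCM (number of correct matches) and RMSE columns above are standard registration metrics. Their exact definitions are not given in this section, so the sketch below shows one common way to compute them and should be read as an assumption: matched keypoints from the sensed image are mapped with a known affine transform, a match counts as correct when its residual is within a pixel threshold, and the RMSE is taken over the correct matches. The function names and the `threshold` default are hypothetical.

```python
import math


def apply_affine(point, affine):
    """Apply a 2 x 3 affine transform ((a, b, tx), (c, d, ty)) to an (x, y) point."""
    x, y = point
    (a, b, tx), (c, d, ty) = affine
    return (a * x + b * y + tx, c * x + d * y + ty)


def evaluate_matches(matches, affine, threshold=1.0):
    """Return (NCM, RMSE) for a list of (sensed_point, reference_point) matches.

    A match is counted as correct when the transformed sensed point lies
    within `threshold` pixels of its reference point (assumed criterion).
    """
    residuals = [math.dist(apply_affine(src, affine), dst) for src, dst in matches]
    correct = [r for r in residuals if r <= threshold]
    ncm = len(correct)
    # RMSE over the correct matches only; undefined when nothing is correct.
    rmse = math.sqrt(sum(r * r for r in correct) / ncm) if ncm else float("nan")
    return ncm, rmse
```

Under this reading, a higher NCM together with a lower RMSE indicates both more usable correspondences and a tighter fit.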

| Method | DoG [33] | FAST [43] | Harris [35] | SAR-Harris [13] | FI [20] | Proposed TCPC |
|---|---|---|---|---|---|---|
| Detection time (s) | 0.10 | 0.01 | 0.57 | 44.27 | 2.40 | 1.17 |
| Matching time (s) | 2.65 | 3.28 | 2.44 | 2.81 | 1.14 | 2.22 |
| Total time (s) | 2.75 | 3.29 | 3.01 | 47.08 | 3.54 | 3.39 |
| NCM | 14 | 14 | 22 | 33 | 74 | 93 |
| RMSE | 0.78 | 0.74 | 0.75 | 0.69 | 0.66 | 0.66 |

| Method | TCPC+SOSNet | TCPC+Sim-CSPNet | TCPC+CapsNet | SD-CapsNet |
|---|---|---|---|---|
| RMSE | 0.74 | 0.71 | 0.71 | 0.66 |
| NCM | 22 | 55 | 57 | 96 |
| Time (s) | 8.85 | 3.47 | 3.23 | 3.39 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, B.; Guan, D.; Zheng, X.; Chen, Z.; Pan, L. SD-CapsNet: A Siamese Dense Capsule Network for SAR Image Registration with Complex Scenes. Remote Sens. 2023, 15, 1871. https://doi.org/10.3390/rs15071871
