Spatial Downscaling of Near-Surface Air Temperature Based on Deep Learning Cross-Attention Mechanism

Abstract

1. Introduction

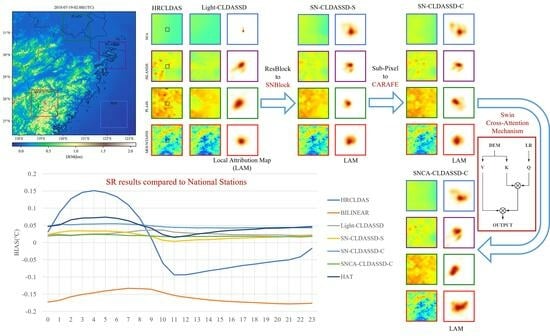

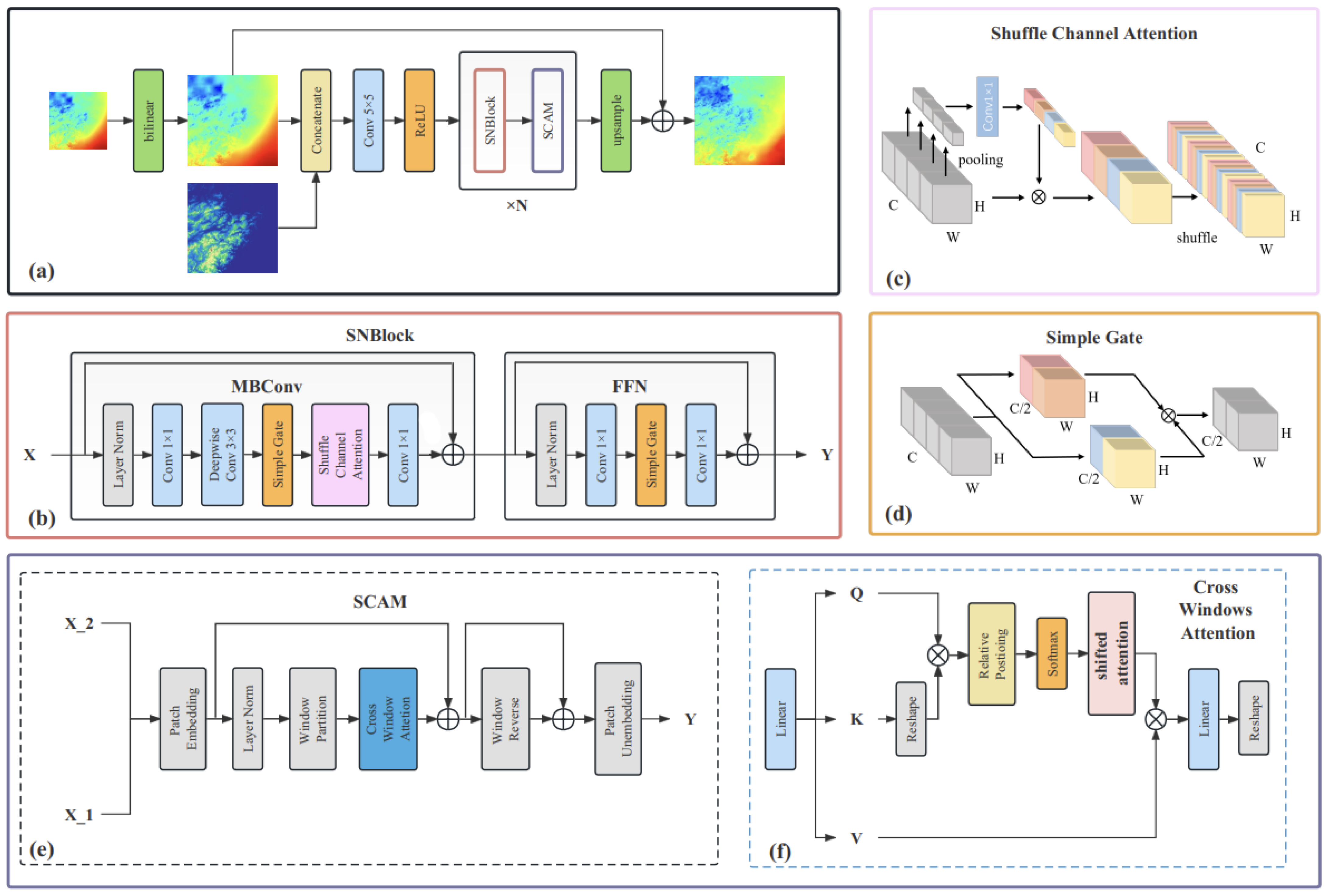

- Without increasing the network's parameter count or computational complexity, we introduce the SNBlock feature extraction module to strengthen feature extraction and the network's ability to learn the mapping between low- and high-resolution temperature fields.

- We replace the sub-pixel upsampling operator with CARAFE, which is lightweight and uses a larger receptive field to reconstruct spatial details, effectively mitigating checkerboard artifacts.
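To make the sub-pixel operator mentioned in the contribution above concrete, the following is a minimal NumPy sketch of the channel-to-space rearrangement that sub-pixel upsampling performs after its convolution. It is illustrative only and is not the authors' implementation. The function name `pixel_shuffle` and the upscaling factor of 5 in the usage note are assumptions; the factor is inferred from the 0.05° and 0.01° grid spacings in the dataset table.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) array into a (C, H*r, W*r) array.

    Each group of r*r channels supplies the r x r block of output pixels
    that replaces one low-resolution pixel.
    """
    c_r2, h, w = x.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    # Split the channel axis into (C, r, r).
    x = x.reshape(c, r, r, h, w)
    # Interleave the two r-axes with the spatial axes: (C, H, r, W, r).
    x = x.transpose(0, 3, 1, 4, 2)
    return x.reshape(c, h * r, w * r)
```

For example, under the assumed factor of 5, `pixel_shuffle(np.zeros((25, 4, 4)), 5)` returns an array of shape `(1, 20, 20)`. Because every output block is filled from a fixed set of channels, periodic differences between those channels can appear as a regular grid pattern, which is one common explanation for checkerboard artifacts.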

2. Materials and Methods

2.1. Study Area and Data

2.1.1. Study Area

2.1.2. Data

2.2. Data Preprocessing

2.2.1. Grid Data

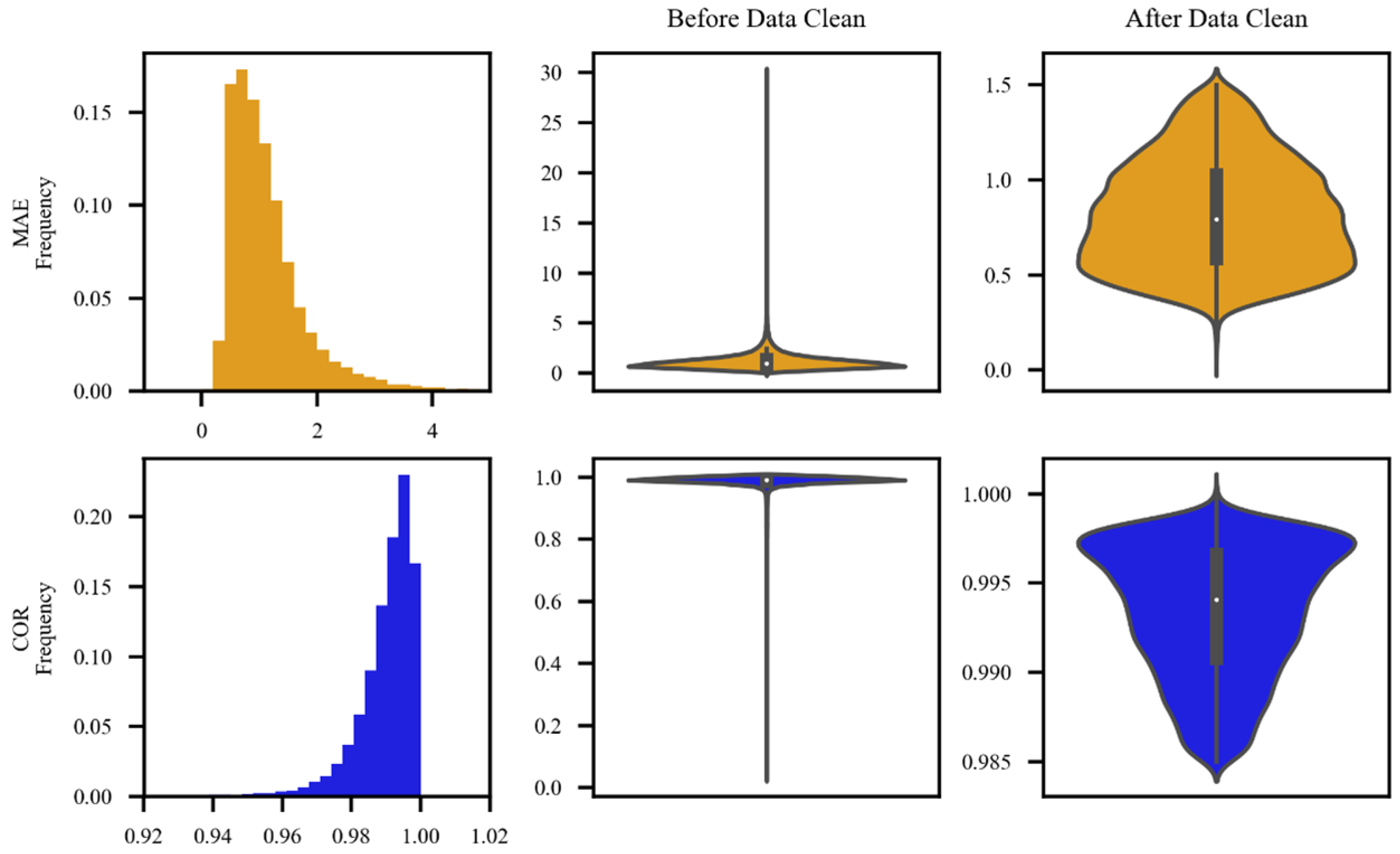

2.2.2. Regional Stations Data

2.3. SNCA-CLDASSD

2.4. Shuffle–Nonlinear-Activation-Free Block

2.5. Swin Cross-Attention Mechanism

2.6. Upsampling Module

2.6.1. Sub-Pixel

2.6.2. CARAFE

2.7. Loss Function

2.8. Evaluation Metrics

2.9. Experimental Designs

2.9.1. Ablation Study

2.9.2. Comparative Experiment

- Bilinear Interpolation

- 2.

- Hybrid Attention Transformer (HAT)
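As a reference point for the bilinear interpolation baseline listed above, here is a small NumPy sketch of separable bilinear upsampling on a regular grid. It is a sketch under stated assumptions, not the configuration used in the experiments: the function name `bilinear_upsample` is hypothetical, and the corner-aligned coordinate convention is an assumption.

```python
import numpy as np


def bilinear_upsample(field: np.ndarray, scale: int) -> np.ndarray:
    """Upsample a 2-D field by an integer factor with bilinear interpolation.

    Corner-aligned convention (an assumption): the first and last source
    points coincide with the first and last target points on each axis.
    """
    h, w = field.shape
    # Target coordinates expressed in source-grid index units.
    rows = np.linspace(0, h - 1, h * scale)
    cols = np.linspace(0, w - 1, w * scale)
    # Linear interpolation along the column axis for every source row.
    tmp = np.stack([np.interp(cols, np.arange(w), row) for row in field])
    # Linear interpolation along the row axis for every target column.
    out = np.stack(
        [np.interp(rows, np.arange(h), col) for col in tmp.T], axis=1
    )
    return out
```

Calling `bilinear_upsample(coarse, 5)` on a 0.05° field would produce a grid with five times as many points per axis, matching the 0.01° spacing in the dataset table. The result contains no information beyond the coarse field, which is why this method serves as the lower-bound baseline.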

3. Experimental Results

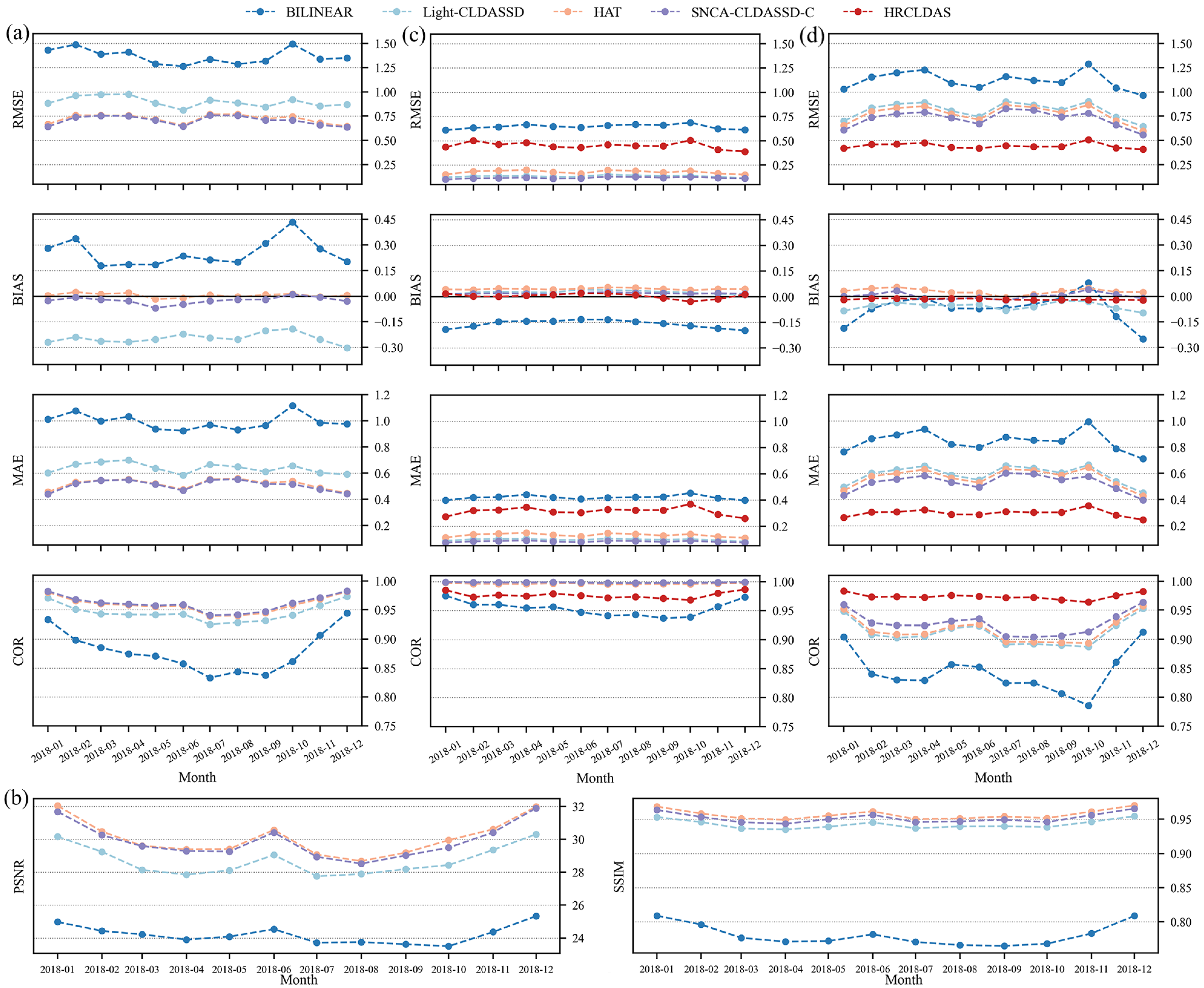

3.1. Ablation Study Result

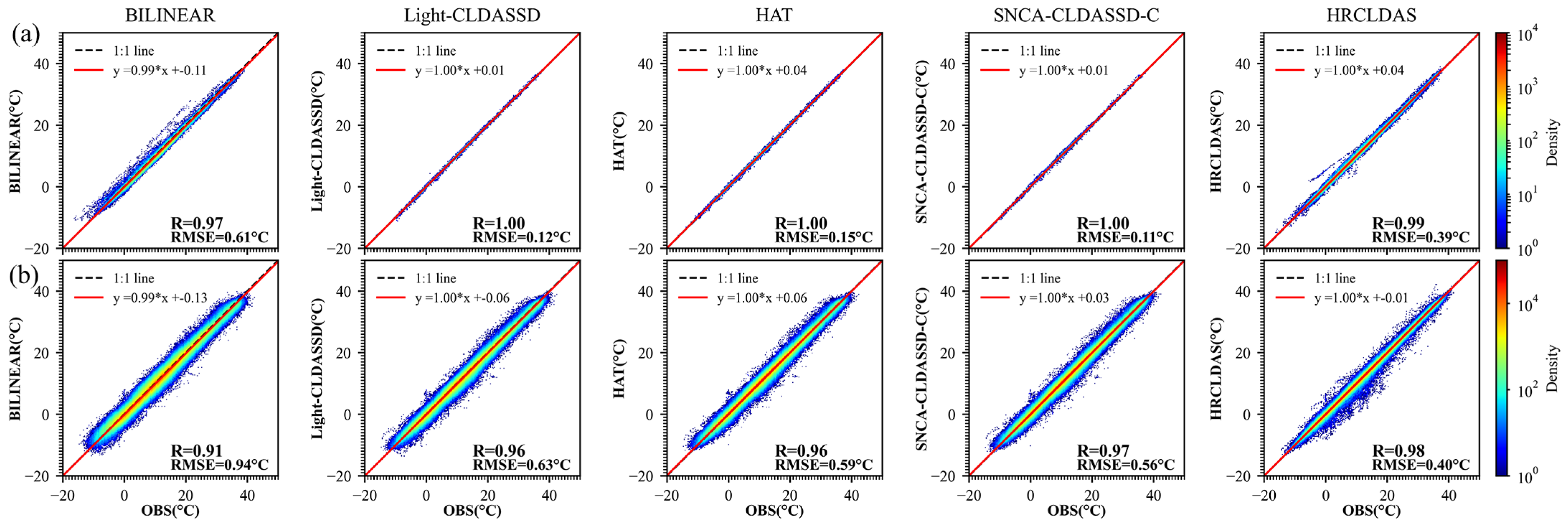

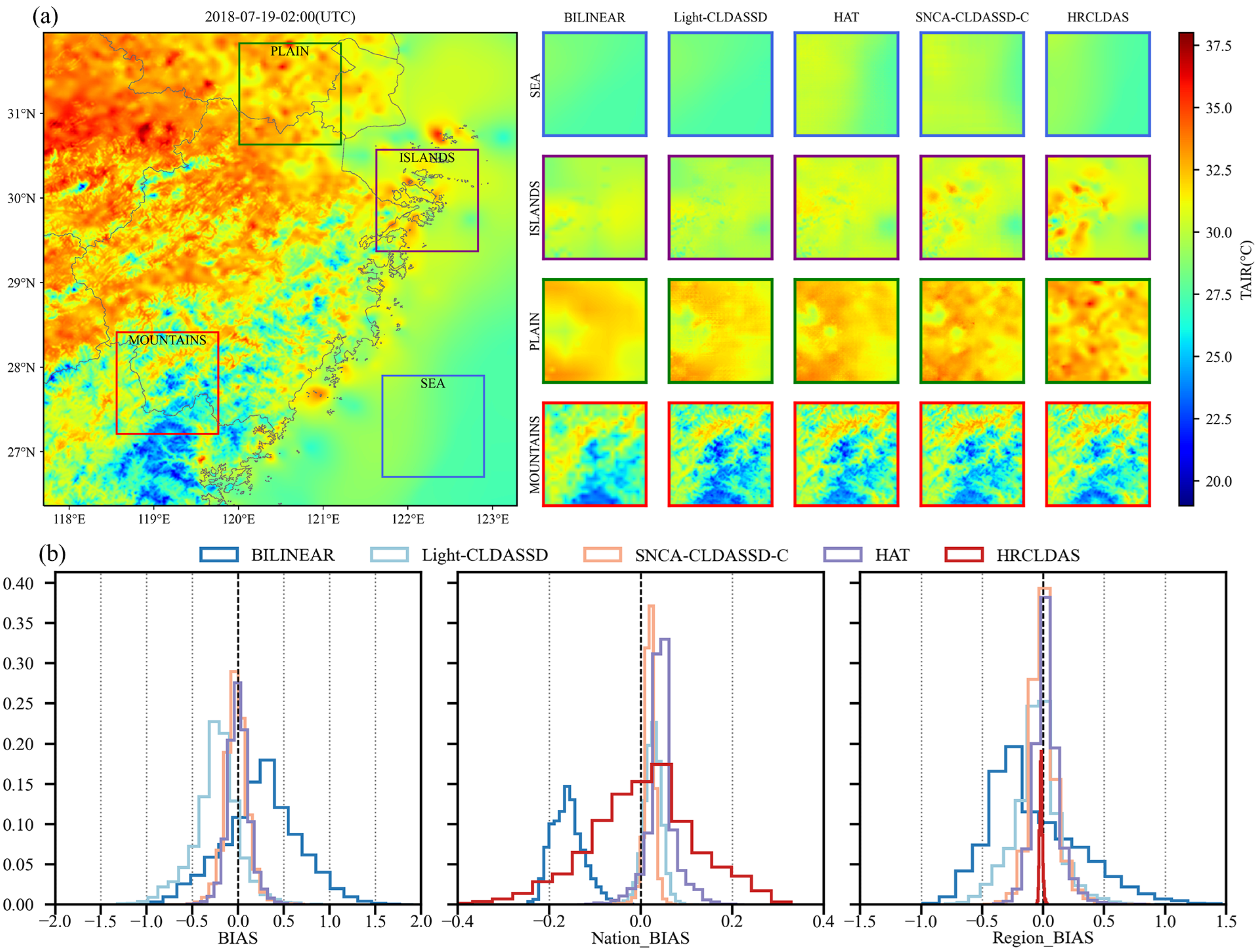

3.2. Spatial Distribution

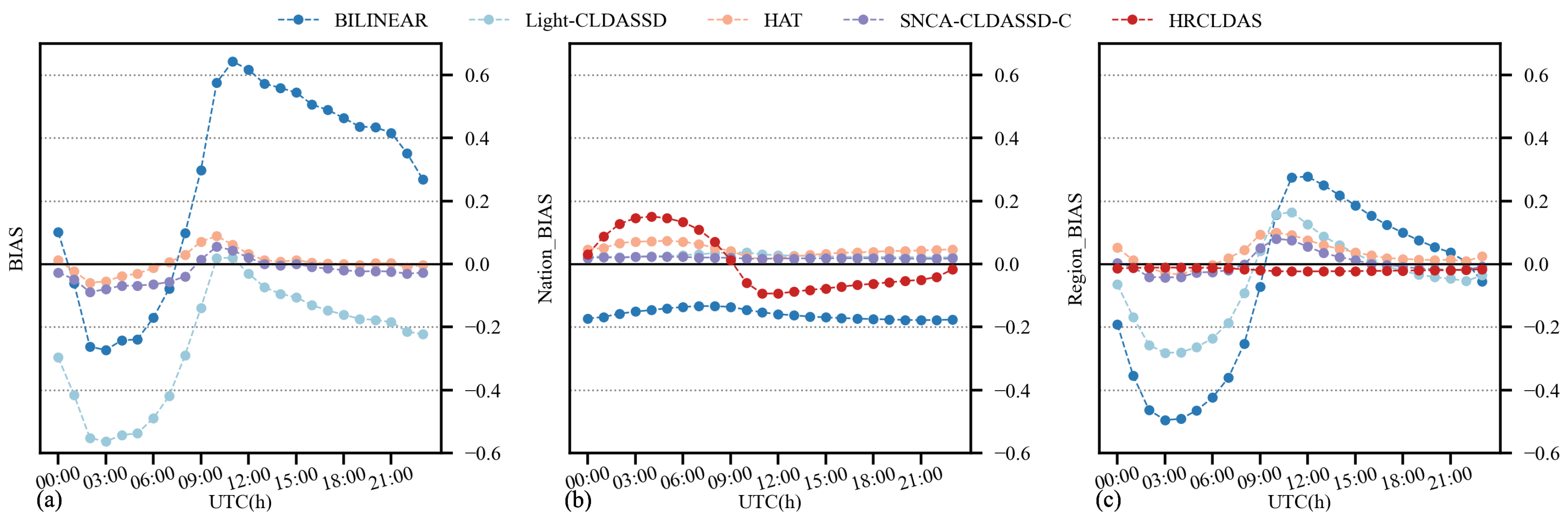

3.3. Temporal Change

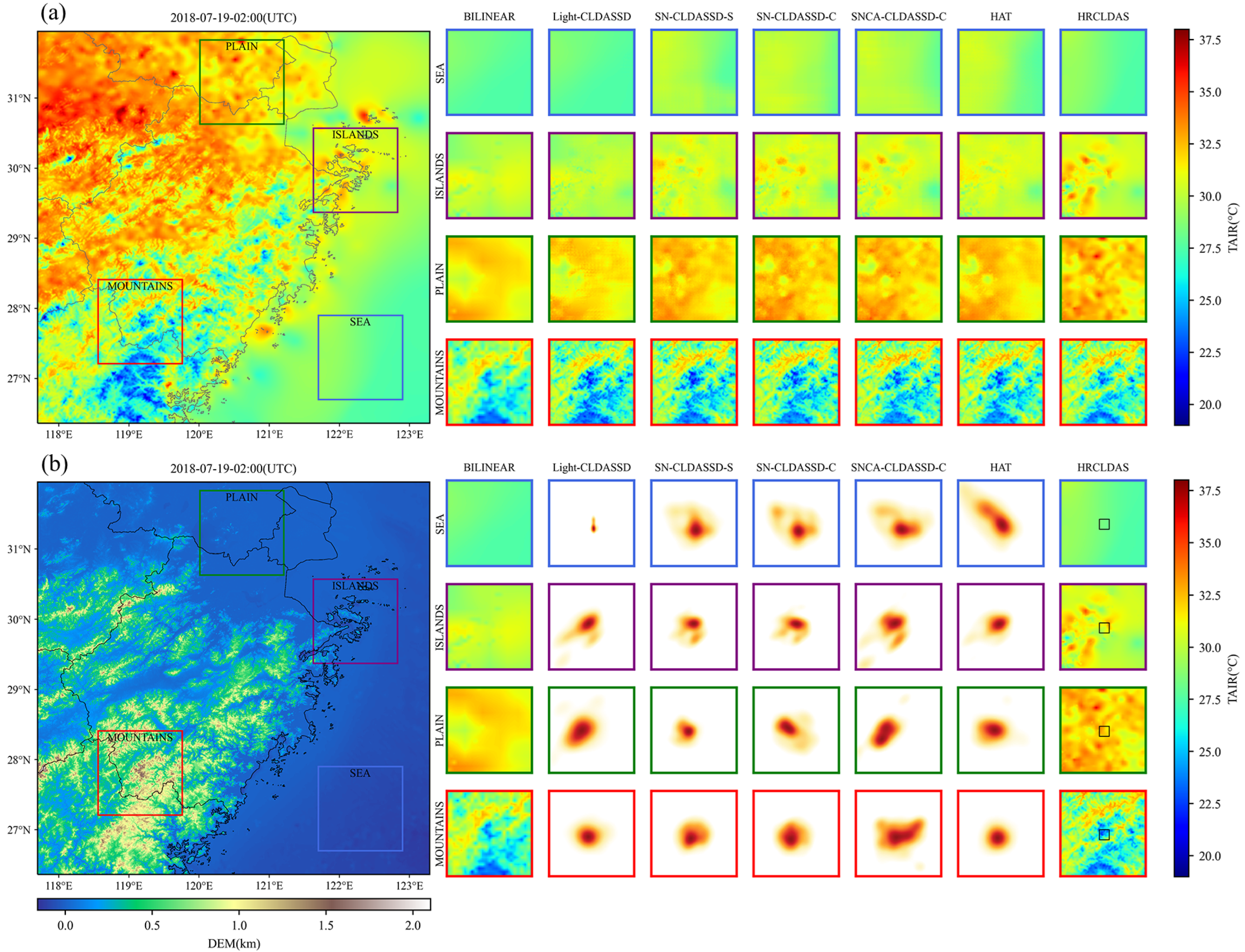

3.4. Local Attribution Analysis

4. Discussion

5. Conclusions

- The SNCA-CLDASSD-C model, which combines the SNBlock, SCAM, and CARAFE, performs best among all variants. Compared to Light-CLDASSD, it improves spatial downscaling accuracy markedly: the RMSE against HRCLDAS falls from 0.898 to 0.706, and the PSNR rises from 28.707 to 30.083.

- Relative to Light-CLDASSD, the SNCA-CLDASSD-C model improves most in mountainous areas (RMSE against HRCLDAS falls from 1.088 to 0.808), followed by plain areas (0.775 to 0.605). In addition, the CARAFE upsampling operator suppresses checkerboard artifacts more effectively than sub-pixel.

- Our model performs best in winter, followed by autumn, and performs relatively worse in spring and summer. It also has the smallest bias, especially for hourly temperature.

- Local attribution map (LAM) analysis of the downscaling methods shows that the SCAM makes effective use of high-resolution auxiliary data such as the DEM. By extracting feature information from these auxiliary sources, the SCAM allows the model to reconstruct more detailed temperature field textures.
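The way cross-attention can draw on auxiliary data such as the DEM can be illustrated with a generic scaled dot-product cross-attention in NumPy. This is a simplified sketch, not the SCAM itself: it omits the Swin-style windowing and shifting, and all names, shapes, and projection matrices (`temp_tokens`, `aux_tokens`, `w_q`, `w_k`, `w_v`) are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(temp_tokens, aux_tokens, w_q, w_k, w_v):
    """Queries come from temperature features; keys and values come from
    auxiliary (e.g. DEM) features, so each temperature token gathers
    information from the auxiliary tokens it attends to.

    temp_tokens: (N_t, C_t), aux_tokens: (N_a, C_a)
    w_q: (C_t, d), w_k: (C_a, d), w_v: (C_a, d)
    Returns the attended features (N_t, d) and the weights (N_t, N_a).
    """
    q = temp_tokens @ w_q
    k = aux_tokens @ w_k
    v = aux_tokens @ w_v
    # Scaled dot-product similarity between each query and every key.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights
```

In this sketch, a window of low-resolution temperature tokens can attend to a larger number of high-resolution auxiliary tokens covering the same area, so the two inputs do not need to share a grid before they are fused.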

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Dataset | Spatial Resolution | Time Range | Source |
|---|---|---|---|
| CLDAS | 0.05° | 2016.01–2020.12 (hourly) | NMIC |
| HRCLDAS | 0.01° | 2016.01–2020.12 (hourly) | NMIC |
| SRTM (DEM) | 0.01° | - | NASA |
| Station Observation | - | 2016.01–2020.12 (hourly) | NMIC |

| Model | Feature Extraction Block | SCAM | Upsampling Module |
|---|---|---|---|
| Light-CLDASSD | ResBlock | - | Sub-Pixel |
| SN-CLDASSD-S | SNBlock | - | Sub-Pixel |
| SN-CLDASSD-C | SNBlock | - | CARAFE |
| SNCA-CLDASSD-C | SNBlock | √ | CARAFE |

| Methods | RMSE (HRCLDAS/National Stations/Regional Stations) | MAE (HRCLDAS/National Stations/Regional Stations) | COR (HRCLDAS/National Stations/Regional Stations) | PSNR | SSIM |
|---|---|---|---|---|---|
| BILINEAR | 1.365/0.646/1.118 | 0.993/0.419/0.845 | 0.879/0.954/0.844 | 24.206 | 0.781 |
| Light-CLDASSD | 0.898/0.134/0.810 | 0.638/0.096/0.589 | 0.946/0.998/0.912 | 28.707 | 0.943 |
| SN-CLDASSD-S | 0.713/0.163/0.771 | 0.514/0.119/0.565 | 0.960/0.997/0.917 | 29.981 | 0.953 |
| SN-CLDASSD-C | 0.711/0.131/0.727 | 0.514/0.093/0.531 | 0.961/0.998/0.927 | 30.027 | 0.954 |
| SNCA-CLDASSD-C | 0.706/0.118/0.724 | 0.507/0.082/0.527 | 0.961/0.998/0.928 | 30.083 | 0.957 |
| HAT | 0.720/0.178/0.774 | 0.515/0.131/0.566 | 0.959/0.996/0.916 | 29.899 | 0.952 |
| HRCLDAS | -/0.450/0.443 | -/0.313/0.296 | -/0.976/0.974 | - | - |
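The pointwise metrics in the table above (RMSE, MAE, COR, PSNR) can be computed along the following lines. This is a minimal sketch using the standard textbook definitions, which may differ in detail from the authors' evaluation code. The `data_range` argument of `psnr` is an assumption, since the peak value used for PSNR is not given here, and SSIM is omitted because it depends on window parameters that are also not given.

```python
import numpy as np


def rmse(pred: np.ndarray, ref: np.ndarray) -> float:
    """Root-mean-square error between prediction and reference."""
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def mae(pred: np.ndarray, ref: np.ndarray) -> float:
    """Mean absolute error between prediction and reference."""
    return float(np.mean(np.abs(pred - ref)))


def cor(pred: np.ndarray, ref: np.ndarray) -> float:
    """Pearson correlation coefficient over all grid points."""
    return float(np.corrcoef(pred.ravel(), ref.ravel())[0, 1])


def psnr(pred: np.ndarray, ref: np.ndarray, data_range: float) -> float:
    """Peak signal-to-noise ratio in dB for a given peak-to-peak range."""
    mse = np.mean((pred - ref) ** 2)
    if mse == 0:
        return float("inf")  # identical fields
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

For station-based scores, `pred` would be the downscaled values sampled at the station locations and `ref` the station observations; how the grid is sampled at those locations is outside this sketch.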

| Methods | Topography | RMSE (HRCLDAS/National Stations/Regional Stations) | MAE (HRCLDAS/National Stations/Regional Stations) | COR (HRCLDAS/National Stations/Regional Stations) |
|---|---|---|---|---|
| BILINEAR | Water | 1.113/0.336/1.009 | 0.849/0.250/0.769 | 0.852/0.981/0.812 |
| BILINEAR | Island | 0.839/0.236/0.967 | 0.630/0.193/0.761 | 0.772/0.974/0.715 |
| BILINEAR | Plain | 0.994/0.521/1.013 | 0.704/0.370/0.760 | 0.838/0.961/0.832 |
| BILINEAR | Mountains | 1.732/1.162/1.325 | 1.346/0.816/1.046 | 0.754/0.974/0.812 |
| Light-CLDASSD | Water | 0.741/0.093/0.800 | 0.539/0.071/0.585 | 0.912/0.999/0.883 |
| Light-CLDASSD | Island | 0.668/0.073/0.744 | 0.503/0.059/0.560 | 0.867/0.998/0.844 |
| Light-CLDASSD | Plain | 0.775/0.125/0.741 | 0.551/0.092/0.540 | 0.906/0.997/0.904 |
| Light-CLDASSD | Mountains | 1.088/0.187/0.924 | 0.806/0.136/0.688 | 0.908/0.998/0.892 |
| SNCA-CLDASSD-C | Water | 0.638/0.092/0.697 | 0.467/0.072/0.511 | 0.929/0.998/0.907 |
| SNCA-CLDASSD-C | Island | 0.527/0.081/0.640 | 0.394/0.066/0.485 | 0.903/0.997/0.878 |
| SNCA-CLDASSD-C | Plain | 0.605/0.104/0.670 | 0.430/0.077/0.488 | 0.934/0.998/0.920 |
| SNCA-CLDASSD-C | Mountains | 0.808/0.175/0.823 | 0.604/0.117/0.613 | 0.938/0.998/0.911 |
| HAT | Water | 0.648/0.154/0.749 | 0.467/0.117/0.554 | 0.923/0.996/0.892 |
| HAT | Island | 0.542/0.147/0.702 | 0.406/0.119/0.537 | 0.896/0.991/0.852 |
| HAT | Plain | 0.623/0.174/0.713 | 0.443/0.130/0.523 | 0.930/0.995/0.908 |
| HAT | Mountains | 0.825/0.200/0.881 | 0.617/0.148/0.658 | 0.934/0.998/0.895 |
| HRCLDAS | Water | -/0.424/0.379 | -/0.324/0.240 | -/0.965/0.974 |
| HRCLDAS | Island | -/0.407/0.333 | -/0.314/0.246 | -/0.918/0.969 |
| HRCLDAS | Plain | -/0.387/0.403 | -/0.269/0.265 | -/0.975/0.972 |
| HRCLDAS | Mountains | -/0.670/0.531 | -/0.519/0.379 | -/0.974/0.963 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shen, Z.; Shi, C.; Shen, R.; Tie, R.; Ge, L. Spatial Downscaling of Near-Surface Air Temperature Based on Deep Learning Cross-Attention Mechanism. Remote Sens. 2023, 15, 5084. https://doi.org/10.3390/rs15215084