Abstract

The Destination Earth (DestinE) European initiative has recently introduced the scientific community to the concept of the Digital Twin (DT) applied to Earth Sciences. By 2030, a very high precision digital model of the Earth, continuously fed and powered by Earth Observation (EO) data, will provide as many digital replicas (DTs) as there are domains in the Earth sciences. Since a DT is driven by use cases, the information it provides has to change with the selected application. It follows that, to achieve a reliable representation of the selected use case, a reasonable and complete a priori definition of the elements the DT must contain is mandatory. In this work, we define a possible theoretical framework for a future DT of the Italian Alpine glaciers, defining and describing all the information (both EO and in situ data) and relationships that necessarily have to enter the process as building blocks of the DT itself. Two main aspects of glaciers were considered and investigated: (i) the “metric quantification” of their spatial dynamics (achieved through measurements) and (ii) the “qualitative (semantic) description” of their health status, as definable through observations by domain experts. After identifying the building blocks, the work focuses on existing EO data sources that provide their essential elements, with specific attention to open access high-resolution (HR) and very-high-resolution (VHR) images. This analysis considered two scales: local (single glacier) and regional (Italian Alps). Further considerations are reported about the glacier-related applications expected to be enabled by the availability of a DT at the regional level. Applications involving both metric and semantic information were considered and grouped in three main clusters: Glaciers Evolution Modelling (GEM), 4D Multi Reality, and Virtual Reality. Limitations were also explored, mainly related to the technical characteristics of available open VHR EO data, and some conclusions are provided.

1. Introduction

Initially coined and developed in the industrial manufacturing sector, the Digital Twin concept has, in recent years, also reached academia [1].

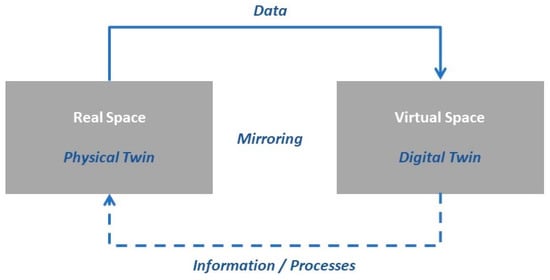

Because the concept has been adopted in diverse sectors and disciplines, several definitions of Digital Twin (DT) exist. With reference to scientific research, and according to El Saddik [2], “A digital twin is a digital replica of a living or non-living physical entity. By bridging the physical and the virtual world, data is transmitted seamlessly allowing the virtual entity to exist simultaneously with the physical entity”. Thus, unlike a three-dimensional model, which is a mathematical representation of a three-dimensional object, a DT is not simply a copy of the physical product but is also closely connected to it through specific relationships. A DT, in fact, consists of three main components [3]: (i) the physical entity, (ii) the digital entity (virtual representation) and (iii) the connections relating physical and digital entities. Connections work through the data that flow from the physical to the digital entity and through the information that the digital entity generates and feeds back into the physical environment.
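The three-component structure can be sketched in a few lines of Python. This is a minimal, hypothetical illustration of the two flows only: the class names (`PhysicalEntity`, `DigitalEntity`, `Connection`), the `area_km2` measurement and the "change since last update" product are our own assumptions, not part of [2,3] or of any DestinE interface.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Observation = Dict[str, float]


@dataclass
class PhysicalEntity:
    """Stand-in for the real object (e.g. a glacier) and its environment."""
    measurements: Observation
    received: List[Dict[str, float]] = field(default_factory=list)

    def observe(self) -> Observation:
        # Data flow physical -> digital (EO images, in situ sensors, ...);
        # a copy is returned so later changes do not alter stored history
        return dict(self.measurements)

    def receive(self, information: Dict[str, float]) -> None:
        # Information flow digital -> physical (e.g. reports for decision makers)
        self.received.append(information)


@dataclass
class DigitalEntity:
    """Virtual representation keeping the history of assimilated observations."""
    history: List[Observation] = field(default_factory=list)

    def assimilate(self, obs: Observation) -> None:
        self.history.append(obs)

    def generate_information(self) -> Dict[str, float]:
        # Hypothetical product: change of each quantity since the previous update
        if len(self.history) < 2:
            return {}
        prev, last = self.history[-2], self.history[-1]
        return {f"{k}_change": last[k] - prev[k] for k in last if k in prev}


@dataclass
class Connection:
    """Bidirectional link between the two entities (the third DT component)."""
    physical: PhysicalEntity
    digital: DigitalEntity

    def sync(self) -> Dict[str, float]:
        self.digital.assimilate(self.physical.observe())
        info = self.digital.generate_information()
        if info:
            self.physical.receive(info)
        return info


# Hypothetical usage: two successive area measurements of one glacier
glacier = PhysicalEntity({"area_km2": 2.0})
link = Connection(glacier, DigitalEntity())
first = link.sync()                     # one observation only: no information yet
glacier.measurements["area_km2"] = 1.9  # the real glacier has shrunk
second = link.sync()
```

Each call to `sync()` moves one observation from the physical to the digital side and, once at least two observations exist, returns derived information to the physical side. In an actual DT, `generate_information` would be replaced by models, simulations and scenario analyses.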

Considering that the information generated by digital twins is driven by use cases [3] related to a wide range of scientific application domains, such connections could enable the (virtual) monitoring of specific processes, entities or ecosystems, depending on the use case considered. Moreover, the DT allows the simulation of different possible scenarios and the analysis of the consequent responses of the physical entity to which it refers (Figure 1). In short, it serves to test and understand how the analysed system will behave as conditions change. Of course, to achieve such ambitious modelling, the latest technological advances in IoT (Internet of Things), AI (Artificial Intelligence), ML (Machine Learning), HPC (High Performance Computing) and Big Data analytics have to be exploited. Synergies among these technologies, and among experts in different disciplines depending on the application type, allow the creation of virtual representations of physical entities and the understanding of their behaviour, anticipating their possible reactions to simulated events.

Figure 1.

Digital Twin concept scheme.

The Digital Twin (DT) is the core of the Destination Earth initiative (DestinE) [4] of the European Commission, aimed at creating, by 2030, a very high precision digital model of the Earth that can be used to interactively explore natural processes and human activities at local and global levels. Since 2021, digital replicas of Earth systems have been under development in an effort to address all the complexities involved. Digital twins are implemented and specified according to the thematic domains of the Earth Sciences, such as natural disasters, adaptation to climate change, oceans, and biodiversity.

Eventually, all these digital replicas are expected to be integrated into a single, complete DT representative of the entire Earth system. Moreover, the Destination Earth Digital Twins will be made available through a dedicated platform enabling both modelling and simulation. The platform will be openly accessible and cloud-based, and it is funded as part of the Digital Europe program [5].

The platform will welcome contributions from experts, scientists, policy makers, companies, and private users. Public and private entities will be able to access the system taking advantage of the archived information, scenario analysis capabilities, forecasting and simulation services, with the aim of accelerating the green transition, predicting environmental degradation, and supporting the political choices of EU member states.

Implementation of the Earth DT is expected to largely profit from Earth Observation (EO) data and services. These will represent the main source of updated information continuously feeding the DT to ensure adaptation, re-calibration and validation of modelled processes.

Present satellite Earth observation capabilities provide, in fact, global and synoptic observations at unprecedented temporal and geometric resolution, which greatly improve the performance and reliability of the system. Additionally, information from satellite data could be supported and integrated by other technologies, such as aerial acquisitions from both airplanes and UAVs (Unmanned Aerial Vehicles), networks of ground sensors possibly managed through smart, IoT-based systems, and distributed information gathered through citizen science approaches. Thanks to this integration, which must necessarily undergo a significant pre-processing step aimed at validating and homogenizing data, the Earth DT will provide an accurate representation of past, present and (simulated) future phenomena occurring in the world. A special focus will certainly be on monitoring the planet’s health, seeking answers about the interconnections between the Earth system and human behaviour.

In this context, the European Space Agency (ESA), in preparation for the Digital Twin Earth implementation activities, is encouraging research and precursor studies. In September 2020, ESA launched several Digital Twin Earth Precursor Activities [6] to explore some of the main scientific and technical challenges in building a Digital Twin of the Earth. The sectors explored were forests, hydrology, Antarctica, food systems, oceans and climate hot spots. Each addresses a different scientific, technical and operational challenge in which artificial intelligence, ICT infrastructure and stakeholder engagement play a crucial role. For some of these activities, the researchers involved have already produced dynamic models and virtual representations of the normal interactions that typically characterize the investigated sectors. This is the case of an advanced dynamic reconstruction of Antarctic hydrology and its interaction with the oceans and atmosphere, obtained by combining past and present observations with simulations of the Earth system in and around Antarctica [7].

In October 2021, ESA issued a specific procurement to generate an Earth Digital Twin prototype for the Alpine Region [8]. The procurement mainly focused on specific themes defined during the “Alpine Regional Initiative” (eo4alps) [9] in 2018 and identified by ESA as important contributions to the EU Green Deal [10]. Within the Disaster Risk Management theme, one of the stated priorities was specifically dedicated to “glacier dynamics and related hazards”.

Glaciers are known to be strongly affected by climate change and are among the environments where the consequences of global warming are most evident. For this reason, there is a need for a dedicated DT for the real-time monitoring of their dynamics and of their responses to climate-induced effects.

In this work, we propose a theoretical framework for a Digital Twin of the Italian Alpine glaciers by reviewing the information we use as its building blocks. The work focuses on (i) EO data, (ii) auxiliary data from ground surveys or sensor networks, (iii) services and products made available by institutional players, and (iv) modelling approaches for glacier studies. Some considerations are also given to the glacier monitoring applications that a DT at the regional level can enable. Three groups of approaches can be taken as possible references for a DT: Glacier Evolution Modelling (GEM), 4D Multi Glacier and Virtual Reality.

It is worth stressing that the main goal of this paper is not to present an already developed DT for Alpine glaciers, but to draft a theoretical framework, based on existing data and sources, that can enable the development of a proper Glacier DT benefiting from the numerous glacier mapping and modelling experiences. In other words, we offer some guidelines that summarize the expertise of the authors, who mostly come from the glaciological and EO sectors. Readers should not expect new algorithms, statistics or numerical results. The authors’ only aim is to give an organized and, we hope, structured representation of existing resources that can be used to develop an effective and operational Alpine glacier DT.

2. Glaciers Monitoring

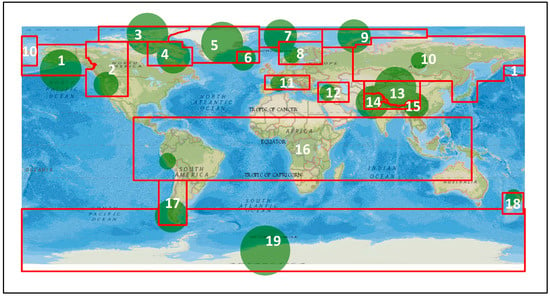

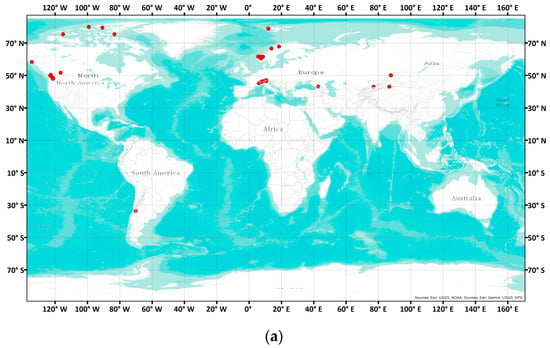

It is well known that glaciers (Figure 2) are sensitive sentinels of climate change. The progressive retreat of ice cover confirms the current trend of increasing global average temperature and the fragility of the ecosystem we live in. For instance, most Alpine glaciers are expected to disappear by 2100, with negative implications for natural ecosystems and water resources management. The annual mass balance of glaciers is an important indicator of climate change, reflecting changes in temperature and precipitation patterns [11]. A negative mass balance (mass loss higher than mass gain) is a clear warning that the glacier is shrinking and may eventually disappear (Figure 3). In addition, monitoring other aspects, including distribution, velocity, temperature and structure, is crucial for understanding the impact of glacier reduction on hydrological processes, for assessing the related hazards and for developing appropriate mitigation strategies.
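As a worked example of the mass balance concept, the sketch below computes a glacier-wide annual balance as the area-weighted mean of elevation-band balances expressed in metres water equivalent (m w.e.), and converts it to a total mass change using 1 m w.e. = 1000 kg m−2 (the unit used in Figure 3). The band areas and balances are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ElevationBand:
    area_km2: float      # planimetric area of the band
    balance_m_we: float  # annual specific balance (metres water equivalent)


def glacier_wide_balance(bands: List[ElevationBand]) -> float:
    """Area-weighted annual mass balance (m w.e.) over all elevation bands."""
    total_area = sum(b.area_km2 for b in bands)
    return sum(b.area_km2 * b.balance_m_we for b in bands) / total_area


def mass_change_tonnes(balance_m_we: float, area_km2: float) -> float:
    """Total mass change: 1 m w.e. = 1000 kg m-2 = 1 t m-2; 1 km2 = 1e6 m2."""
    return balance_m_we * area_km2 * 1e6


# Hypothetical glacier split into four elevation bands (illustrative values)
bands = [
    ElevationBand(0.4, -3.0),  # tongue: strong ablation
    ElevationBand(0.8, -1.2),
    ElevationBand(0.6, 0.3),
    ElevationBand(0.2, 0.8),   # upper accumulation area
]
b = glacier_wide_balance(bands)
status = "shrinking" if b < 0 else "stable or growing"
print(f"B = {b:.2f} m w.e. ({status}); mass change = {mass_change_tonnes(b, 2.0):.3g} t")
```

Here the gains in the accumulation area do not compensate for the losses on the tongue, so the glacier-wide balance is negative.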

Figure 2.

Global distribution of glaciers. The diameter of each circle is proportional to the total glacier area of the region. Numbers refer to the regions of the RGI (Randolph Glacier Inventory 6.0). Region names: 1: Alaska, 2: Western Canada and US, 3: Arctic Canada North, 4: Arctic Canada South, 5: Greenland, 6: Iceland, 7: Svalbard, 8: Scandinavia, 9: Russian Arctic, 10: North Asia, 11: Central Europe, 12: Caucasus and Middle East, 13: Central Asia, 14: South Asia West, 15: South Asia East, 16: Low Latitudes, 17: Southern Andes, 18: New Zealand, 19: Antarctic and Subantarctic. Base map National Geographic World Map from ESRI, Garmin, HERE, UNEP-WCMC, USGS, NASA, ESA, METI, NRCAN, GEBCO, NOAA, Increment P corp.

Figure 3.

(a) Distribution of the global reference glaciers (47) with more than 30 continuous observation years for the period 1949/50–2021/22. Base map source: National Geographic World Map from ESRI, USGS, Garmin, NOAA. (b) Annual mass balance of the reference glaciers. Annual mass change values are given on the y-axis in tonnes per square meter (1000 kg m−2). Green and yellow bars represent negative and positive variations, respectively. Data source: WGMS (2021, updated and earlier reports).

Glacier inventories are important tools for monitoring and analysing changes in glaciers over time [12]. They provide essential information on the location, size, shape and characteristics of glaciers in a particular region, allowing glaciologists to understand how these ice masses are responding to changes in climate.

Glaciologists use a variety of techniques to monitor glaciers and track changes in their size and behaviour over time, including ground-based measurements, airborne measurements and satellite remote sensing.

2.1. The Role of Remote Sensing and Its Contribution to Glacier Inventories

Satellite imagery and other remote sensing technologies can provide useful information about glaciers. Remote sensing plays a crucial role in the regular monitoring of glacier properties thanks to its synoptic view and its repetitive, up-to-date coverage. These characteristics are particularly useful for monitoring glaciers, which are usually located in remote, inaccessible and inhospitable environments with limited field-based glaciological measurements. Until the early 1970s, aerial photography was the main remote sensing technique for glacier monitoring.

The global glacier inventory, proposed in the late 1950s as a form of national glacier list and known as the World Glacier Inventory (WGI), was primarily based, during the 1970s, on aerial photographs and maps [13,14]. As the original inventory method was too time-consuming and inapplicable to some remote areas, a new method was developed and introduced in the early 1980s using satellite imagery [15,16] and Digital Elevation Models (DEMs) within Geographic Information Systems (GIS) [17]. The launch of the space-borne Landsat Multispectral Scanner (MSS) in 1972, with a spatial resolution of 60 m, indeed ensured continuity with the previously adopted aerial data in the historical analysis of glacier extent. The subsequent generations of medium- and high-spatial-resolution satellites eventually provided a rich dataset of images spanning several decades, ensuring a robust basis for change evaluation and trend analysis.

In fact, in the 1990s, several studies applied Landsat Thematic Mapper (TM) imagery to mapping glacier extent, assessing change and characterizing glaciers, thanks to its improved spatial resolution (i.e., 30 m) and the availability of a shortwave infrared band able to discriminate snow from clouds, as well as glacier cover from bare ground [17,18,19].
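The ability of the shortwave infrared (SWIR) band to separate snow and ice from clouds is commonly exploited through the Normalized Difference Snow Index, NDSI = (green − SWIR)/(green + SWIR): snow and ice are bright in the green band and dark in the SWIR, whereas most clouds are bright in both. The sketch below applies an NDSI threshold to hypothetical reflectances. The 0.4 threshold is a frequently used default that should be tuned per scene, and a red/SWIR band ratio (e.g., TM3/TM5) is an equally common alternative for glacier outlines. For Landsat TM the green and SWIR inputs would be bands 2 and 5; for Sentinel-2, bands B3 and B11.

```python
import numpy as np


def ndsi(green, swir, eps=1e-9):
    """Normalized Difference Snow Index from green and SWIR reflectance."""
    green = np.asarray(green, dtype=float)
    swir = np.asarray(swir, dtype=float)
    return (green - swir) / (green + swir + eps)  # eps avoids division by zero


def snow_ice_mask(green, swir, threshold=0.4):
    """Boolean mask of pixels classified as snow/ice (threshold is an assumption)."""
    return ndsi(green, swir) > threshold


# Hypothetical reflectances for a 2x2 window laid out as:
#   [snow, bare rock]
#   [ice,  cloud    ]
green = [[0.80, 0.30], [0.60, 0.50]]
swir = [[0.10, 0.25], [0.05, 0.45]]
mask = snow_ice_mask(green, swir)
```

The cloud pixel is rejected because its high SWIR reflectance pushes the index close to zero, even though it is as bright as snow in the green band.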

During these years, a large-scale inventory of the world’s glaciers started with the Global Land Ice Measurements from Space (GLIMS) initiative promoted by NASA [20], also thanks to the development of automatic glacier mapping algorithms and GIS-based data processing. GLIMS is committed to monitoring glaciers around the world primarily using data from optical satellite instruments such as the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER); it covers about 58% of global glaciated areas, recording a wide range of attributes. GLIMS feeds and maintains a geospatial database accessible via the internet, featuring interactive maps and providing a standards-based web mapping service for interoperability [21].

As far as optical data are concerned, the ASTER sensor has been used for a large number of glaciological applications worldwide [22,23,24,25]. This is mainly due to its high spatial resolution (i.e., a VNIR Ground Sampling Distance, GSD, of 15 m) and to its capability of acquiring stereoscopic images useful to derive global DEMs. Alpine glaciers, specifically, have been, and still are, extensively monitored through ASTER and Landsat TM/ETM+/OLI data, whose spatial, spectral and temporal resolutions proved consistent with the extraction of quantitative indicators of both seasonal and longer-term glacier changes [26,27,28,29].

At the European level, the Copernicus program has significantly improved the capabilities of monitoring glacier extension in the Alpine Region. Sentinel-1 (S1) and Sentinel-2 (S2) constellations now ensure acquisitions with a revisit time of, respectively, 10 and 5 days and a spatial resolution of 10/20 m for the majority of S2/S1 bands. Some recent works provided systematic applications aimed at exploiting the large volume of data provided by Copernicus Sentinel missions for detecting glacier outlines, including debris-covered glaciers [30].

In particular, [31,32] provide an effective overview of the potential of satellite-based snow cover mapping, with special attention to the Sentinel-2 and Landsat missions.

Specifically, [31] validated snow cover mapping from the Copernicus Land Monitoring Service High-Resolution Snow and Ice Monitoring (HRSI) product against test plots with different aspects, canopy cover and solar irradiance conditions, located in the Pyrenees (Spain) and in the Sierra Nevada (USA). HRSI relies on a combination of Sentinel-2 and Landsat-7/8 satellite scenes, LiDAR-based and in situ datasets. An RMSE of 25–30% was found. Moreover, highly accurate LiDAR-derived tree-cover density maps were observed not to improve the subcanopy fractional snow cover (FSC) retrievals. In [32], the Theia Snow collection, an alternative to the Copernicus HRSI, was similarly validated, and the accuracy of snow-covered area detection was related to snow depth. The proposed algorithm was found to detect snow-covered areas with an accuracy higher than 90%, increasing as snow depth decreases.
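To make the reported accuracy figures concrete, the sketch below shows the two metrics involved: the root-mean-square error (RMSE) of fractional snow cover (in percent) against reference values, and the overall accuracy of a binary snow/no-snow map. The plot and pixel values are hypothetical, and the validation protocols of [31,32] are more elaborate than this minimal version.

```python
import math
from typing import Sequence


def rmse(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """Root-mean-square error, here applied to FSC expressed in percent."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - r) ** 2 for p, r in zip(predicted, reference))
    return math.sqrt(squared / len(predicted))


def overall_accuracy(predicted: Sequence[int], reference: Sequence[int]) -> float:
    """Fraction of pixels whose binary snow/no-snow classes agree."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p == r for p, r in zip(predicted, reference)) / len(predicted)


# Hypothetical satellite FSC (%) vs. reference FSC (%) on four plots
fsc_rmse = rmse([100, 80, 40, 0], [90, 60, 70, 0])
# Hypothetical binary snow maps (1 = snow) vs. reference on five pixels
oa = overall_accuracy([1, 1, 0, 1, 0], [1, 0, 0, 1, 0])
```

Note that an RMSE on FSC is expressed in the same unit as FSC (percentage points), whereas overall accuracy is a fraction of correctly classified pixels; the two figures quoted above are therefore not directly comparable.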

The adoption of optical imagery in glacier monitoring implies some constraints, mainly related to cloud cover and the presence of rocky debris. Specifically, cloud cover strongly limits the continuous and proper identification of glacier outlines, which should be delineated as close as possible to the end of the ablation period, i.e., the end of summer. Since clouds are very common in the Alps during summer, this is a significant limitation of optical data [33].

Additionally, the presence of rocky debris, which can partially or completely cover the ice, is a complex issue in glacier mapping, since the spectra of the covering rocks are identical to those of the surrounding rocks.

As a result of these limitations, many studies propose the adoption of synthetic aperture radar (SAR) to map glaciers [34]. Interferometric SAR (InSAR) and polarimetric SAR (PolSAR) approaches can, in fact, be used both to overcome the cloud problem and to discriminate debris cover from ice [35]. It is worth remembering that SAR is an active sensor: it does not exploit solar radiation but emits its own illuminating signal (in the microwave range) and records the corresponding echo. Thanks to its relatively long wavelength, the SAR signal can pass through clouds and can operate during both day and night. Additionally, SAR waves can penetrate the snowpack, providing useful information such as snow/ice grain size and Snow Water Equivalent (SWE, the liquid/frozen water content) [36].

The SAR backscatter coefficient, derived from the signal amplitude, provides information about ice properties, such as roughness and dielectric properties, that differ significantly from those optical data can provide. Additionally, different polarizations (horizontal and vertical) enable the detection of other glacier features, such as shape, ice state and prevailing volume development. Phase information can also be exploited, applying coherence- or interferometry-based approaches to describe surface deformation and stability. This permits, for example, the detection and quantification of glacier velocity [36,37].
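The quantities mentioned above can be illustrated with a minimal NumPy sketch, under simplifying assumptions: conversion of backscatter to decibels, the magnitude of the sample coherence between two co-registered complex (single-look) acquisitions over an estimation window, and the conversion of an unwrapped interferometric phase difference into line-of-sight displacement, where one 2π fringe corresponds to half a wavelength. The synthetic signals and the approximate C-band wavelength of 5.55 cm (Sentinel-1) are illustrative assumptions; on fast-moving glaciers interferometric coherence is often lost, and offset tracking is typically used instead.

```python
import numpy as np


def to_db(sigma0_linear):
    """Backscatter coefficient from linear power units to decibels."""
    return 10.0 * np.log10(sigma0_linear)


def coherence(s1, s2):
    """Magnitude of the sample coherence over an estimation window.

    s1, s2: complex values of the same pixels in two co-registered acquisitions.
    Values near 1 indicate stable scatterers, values near 0 decorrelation.
    """
    s1 = np.asarray(s1, dtype=complex)
    s2 = np.asarray(s2, dtype=complex)
    num = np.abs(np.sum(s1 * np.conj(s2)))
    den = np.sqrt(np.sum(np.abs(s1) ** 2) * np.sum(np.abs(s2) ** 2))
    return num / den


def los_displacement(delta_phase_rad, wavelength_m):
    """Line-of-sight displacement magnitude from an unwrapped phase difference.

    Repeat-pass geometry: one 2*pi fringe equals half a wavelength.
    The sign convention varies between processors and is omitted here.
    """
    return wavelength_m * delta_phase_rad / (4.0 * np.pi)


rng = np.random.default_rng(0)
window = rng.normal(size=64) + 1j * rng.normal(size=64)
stable = coherence(window, window * np.exp(1j * 0.5))  # same scatterers, phase shift only
decorrelated = coherence(window, rng.normal(size=64) + 1j * rng.normal(size=64))
fringe = los_displacement(2 * np.pi, 0.0555)  # approximate C-band wavelength (m)
```

A uniform phase shift (as produced by coherent surface motion) leaves the coherence at 1, while independent signals give values close to 0.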

In addition to glacier outlines, glacier inventories require basic information on glacier topography: elevation, slope, aspect and thickness. DSMs from different sources can support this type of analysis, providing more or less accurate descriptions of glacier geometry. DSMs are, in fact, currently used in a large variety of glaciological applications, supporting modelling aimed at estimating and forecasting glacier changes in thickness and volume [38,39,40,41]. Glacier morphological studies [42] can greatly benefit from DSMs, which also play a pivotal role in mass-balance models [38]. A great variety of DSMs can be freely accessed: (i) EU-DEM v. 1.1 (GSD = 25 m) [43]; (ii) the Copernicus global DEMs, namely, GLO-30 (GSD = 30 m) and GLO-90 (GSD = 90 m) [44]; (iii) SRTM (global, GSD = 90/30 m) [45]; (iv) ASTER GDEM (global, GSD = 30 m) [46]; (v) NASADEM (global, GSD = 30 m) [47]; (vi) TanDEM-X DEM (global, GSD = 90/12 m) [48]; (vii) MERIT DEM (global, GSD = 90 m) [49]; and (viii) ALOS World 3D (global, GSD = 30 m) [50]. Moreover, for Italy, the Tinitaly DEM (GSD = 10 m) was recently released free of charge by INGV (Istituto Nazionale di Geofisica e Vulcanologia) [51].
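Slope and aspect are standard derivatives of any of the DSMs listed above. The following sketch computes them with finite differences (`numpy.gradient`) on a gridded DEM. It assumes a north-up raster with square cells (rows running north to south, columns west to east) and reports aspect as the downslope direction measured clockwise from north; real workflows would also handle projections, nodata values and geographic (degree-based) grids.

```python
import numpy as np


def slope_aspect(dem, cell_size):
    """Slope and aspect (degrees) of a north-up gridded DEM with square cells."""
    dz_drow, dz_dcol = np.gradient(np.asarray(dem, dtype=float), cell_size)
    dz_dx = dz_dcol   # eastward derivative (columns increase eastward)
    dz_dy = -dz_drow  # northward derivative (rows increase southward)
    slope = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Downslope direction is -grad(z); atan2(east, north) gives a bearing from north
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect


# Hypothetical 10 m DEM: a plane dropping 10 m per cell towards the east
_, cols = np.mgrid[0:5, 0:5]
slope, aspect = slope_aspect(100.0 - 10.0 * cols, cell_size=10.0)
```

For this synthetic plane every cell has a 45° slope facing east (aspect 90°). On flat cells the aspect is undefined and the sketch simply returns 0°.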

2.2. Global Services Providing Data Feeding Glacier DT

As previously mentioned, EO data are nowadays widely used in many glacier-related applications. The way they can be directly exploited in glaciological applications was discussed in the previous section. However, EO data are also often at the basis of “services” offered by different providers, which therefore have to be considered as well. In the authors’ opinion, among all the available services, the most desirable are those relying on data (both EO and in situ) that are continuously and regularly acquired (ensuring monitoring), uniformly pre-processed (ensuring comparability), free of charge (ensuring affordability) and, possibly, official.

From this point of view, the EU invites researchers to capitalize on the already existing capabilities for the Digital Twin Earth. Specifically, any DT is expected to benefit from the data and capabilities of national and international institutions (e.g., European in situ data coordination by the EEA) and of European programs (e.g., Copernicus services and data).

To meet these requirements, a global overview of existing potential service providers is mandatory. The six Copernicus Services (Atmosphere, Marine, Land, Climate Change, Security and Emergency) can certainly represent the starting point for this search. They make freely accessible data from Sentinel acquisitions and in situ measurements and, in many cases, advanced ready-to-use products. The majority of Copernicus Services are accessed through dedicated access points called Data and Information Access Services (DIAS, [52]).

Other data and services related to environmental themes (hydrography, elevation, land cover, natural hazards, fires, etc.) and their evolution along time can be obtained through the INSPIRE Geoportal [53].

The geoportals of the European Environment Agency (EEA, [54]) provide free data and services as well, covering a wide range of environmental topics: climate change, land use, water and marine environment, biodiversity and ecosystems, etc.

One of the most important features of these archives is the availability of multi-temporal data covering time ranges that permit the analysis of ongoing trends in natural and anthropic phenomena. In the case of glaciological applications, for example, the EEA makes available the European glacier distribution map, dated 2010 [55], and the map of avalanche events in the Alps, dated 1999 [56]. It is worth remembering that past data enable temporal comparisons and support forecasting, providing elements for the modelling and simulation of phenomena, which represent an essential part of the Earth DT.
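As a simple example of how multi-temporal archives support trend analysis, the sketch below fits an ordinary least-squares linear trend to glacier areas taken from successive inventories. The years and areas are hypothetical. A linear trend is only a descriptive statistic of past change; it is not a substitute for the glacier evolution models discussed in Section 2.3.

```python
from typing import Sequence, Tuple


def linear_trend(years: Sequence[float], values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept of values against years."""
    n = len(years)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired observations")
    x_mean = sum(years) / n
    y_mean = sum(values) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, values))
    sxx = sum((x - x_mean) ** 2 for x in years)
    rate = sxy / sxx
    return rate, y_mean - rate * x_mean


# Hypothetical glacier areas (km2) from successive inventories
years = [2000, 2005, 2010, 2015, 2020]
areas = [10.0, 9.0, 8.0, 7.0, 6.0]
rate_km2_per_yr, intercept = linear_trend(years, areas)
print(f"trend: {rate_km2_per_yr:+.2f} km2/yr")
```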

As a useful complement to glacier-domain data and services, some auxiliary data can be obtained from the CORDA (COpernicus Reference Data Access) dashboard [57]. CORDA provides official reference data (i.e., orthoimages, administrative boundaries/units/toponyms, elevation data, land cover, hydrography, transport network, etc.) from the main European institutional bodies (EuroGeographics, EEA and Eurostat) and from national and subnational authorities of member countries.

As far as the Alpine Region is concerned, the HISTALP database [58] gathers many high-quality climate time series (temperature, air pressure, precipitation, etc.) from in situ observations dating back to 1760. HISTALP provides an interesting overview of the temporal variation of the main climate variables at the climatic time scale. It relies on an initiative of the national weather services, led by the Austrian ZAMG (Zentralanstalt für Meteorologie und Geodynamik).

The APGD (Alpine Precipitation Grid Dataset) is, conversely, a high-resolution gridded dataset of daily precipitation covering the Alpine Region, developed by MeteoSwiss [59], which fits the dynamic modelling requirements of a DT well.

Both HISTALP and APGD datasets feed the LAPrec, the Long-term Alpine Precipitation gridded reconstruction dataset accessible via the Copernicus Climate Change Service [60].

Finally, it is worth remembering that EO data can enter the modelling step directly or be exploited to feed glacier inventories, which can in turn enter the modelling step.

2.3. Overview of Glacier Modelling Approaches

In the last decades, many authors have been using data from glacier inventories and have combined them with models and parameters to predict future ice mass evolution.

Extensive endeavours have been dedicated to developing models to describe ice flow, encompassing its intricate physical phenomena and their relationships with climate.

Changes in glaciers play a crucial role in water availability concerns and their reduction can even heighten the occurrence of geo-hazards [61].

Thanks to the data available today, physical modelling of ice sheets and glaciers at high spatial resolution is possible. Different models have been developed to understand and study the evolution of glaciers, mainly relying on time series of in situ measurements. Models can be classified according to: (i) the spatial scale of analysis (single glacier, glacier system, etc.); (ii) the type of modelled parameters; and (iii) the trade-off between complexity and accuracy.

All these criteria are somehow inter-related. In fact, simple models are, in general, fed with few data and require limited computational effort. These features make them suitable for a first estimate of the behaviour of a large number of glaciers over wide regions. Such models are generally referred to as 4D Multi Glaciers (see Section 3.2).
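Volume–area scaling is a classic example of a simple, data-light approach suited to first regional estimates; it is our choice of illustration, not necessarily the method underlying 4D Multi Glaciers. It estimates ice volume from glacier area alone as V = c·A^γ. The sketch uses the widely cited values c = 0.034 km^(3−2γ) and γ = 1.375 for valley glaciers. Estimates for individual glaciers from this law are highly uncertain, so it is better suited to aggregated regional volumes. The inventory entries are hypothetical.

```python
from typing import Dict

C_KM = 0.034   # scaling constant in km^(3 - 2*gamma), literature value (valley glaciers)
GAMMA = 1.375  # scaling exponent, literature value (valley glaciers)


def volume_km3(area_km2: float, c: float = C_KM, gamma: float = GAMMA) -> float:
    """Ice volume estimate from glacier area via V = c * A**gamma."""
    return c * area_km2 ** gamma


def mean_thickness_m(area_km2: float) -> float:
    """Mean thickness implied by the scaling law (V / A, converted from km to m)."""
    return volume_km3(area_km2) / area_km2 * 1000.0


# Hypothetical regional inventory: glacier id -> area (km2)
inventory: Dict[str, float] = {"G001": 0.5, "G002": 1.0, "G003": 12.3}
regional_volume = sum(volume_km3(a) for a in inventory.values())
```

Because γ > 1, larger glaciers are predicted to be thicker on average, which is the physical intuition the scaling law encodes.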

When aiming, on the other hand, at generating future scenarios based on expected or simulated climatic conditions, possibly looking for an estimated glacier “expiration date”, more advanced models have to be considered (hereinafter called Glaciers Evolution Models—GEM, see Section 3.2).

Both approaches have to be included in a DT as different output environments that users can request, depending on the application they are facing. A third type of output of interest is a simple 3D representation, useful for educational, cultural and dissemination purposes and easy for citizens to access and manage.

Presently, the reference modelling experience of the GEM type is the Open Global Glacier Model (OGGM). Its starting point is the Randolph Glacier Inventory, and its main goal is the simulation of the past and future evolution of all of the 216,502 inventoried glaciers worldwide (as of RGI V6). OGGM works similarly to most global and regional models: its simulations rely on auxiliary data such as glacier boundaries, topographic descriptors and climatic observations. Expected outputs can be summarized as follows: (i) glacier shape, defined through topographic descriptors (e.g., hypsometry); (ii) estimates of the total glacier ice volume; (iii) maps of bedrock topography; (iv) climate-related mass balance and frontal ablation; and (v) the dynamic evolution of glaciers under various climate conditions, with estimates of the associated uncertainties [62,63]. Some operational outputs can, in general, be obtained automatically or semi-automatically: (i) flow lines; (ii) glacier catchment areas; (iii) mass balance; and (iv) ice thickness and its variation over time. Any increase in model complexity significantly raises the computational cost of simulations; to keep this cost manageable, approximations are introduced, mainly by reducing the geometric and temporal resolution of the inputs. The great differences among glaciers around the world make the adoption of a single global model too challenging and, possibly, inadequate. The approach currently proposed therefore relies on regional models. A region is defined as an area with common climatic features, and all glaciers belonging to the same climatic area can be integrated into a single model. What “common climatic conditions” means depends on the scale of the modelling: a “global” similarity can be split into many “local” similarities when focusing on a specific (but unitary) territorial context. The Alps are exactly such a unitary territorial context, in which different climatic similarities can be recognized and used to zone the range appropriately. We therefore advise this zoning as a mandatory step when proposing a DT for the Alps.
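Many global and regional models compute the climatic mass balance with a temperature-index approach: solid precipitation adds mass, and melt is proportional to the temperature above a threshold (OGGM's default mass-balance model also belongs to this family). The sketch below is a minimal monthly version for a single elevation, with temperature extrapolated from a reference station through a constant lapse rate; station series such as those of HISTALP could, in principle, provide the inputs. It is neither OGGM's implementation nor its API, and all parameter values (lapse rate, thresholds, melt factor) are illustrative assumptions that would require calibration.

```python
from typing import Sequence


def annual_mass_balance(
    temps_c: Sequence[float],     # monthly mean temperatures at the station (deg C)
    precips_mm: Sequence[float],  # monthly precipitation totals at the station (mm)
    z_ref: float,                 # station elevation (m)
    z: float,                     # elevation where the balance is evaluated (m)
    lapse_rate: float = -0.0065,  # temperature change per metre (deg C/m), assumed
    t_melt: float = -1.0,         # melt threshold (deg C), assumed
    t_snow: float = 0.0,          # at or below this, precipitation is solid (deg C), assumed
    mu: float = 200.0,            # melt factor (mm w.e. per deg C per month), hypothetical
) -> float:
    """Annual specific mass balance (mm w.e.) from a monthly temperature-index model."""
    balance = 0.0
    for t_station, p in zip(temps_c, precips_mm):
        t = t_station + lapse_rate * (z - z_ref)   # extrapolate temperature to elevation z
        accumulation = p if t <= t_snow else 0.0  # only solid precipitation accumulates
        melt = mu * max(t - t_melt, 0.0)          # melt proportional to positive degree-months
        balance += accumulation - melt
    return balance


# Hypothetical cold site: all precipitation is snow and no melt occurs
cold = annual_mass_balance([-5.0] * 12, [100.0] * 12, z_ref=2000.0, z=2000.0)
# Hypothetical warm site: no accumulation, melt every month
warm = annual_mass_balance([4.0] * 12, [100.0] * 12, z_ref=2000.0, z=2000.0)
```

Evaluating such a function at each elevation band and weighting by band area gives a glacier-wide balance; this is also where a climatic zonation enters, since each zone would carry its own calibrated parameters.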

The Alpine Region is characterized by an abundance of glaciers, but only a few of them are well studied. Time series of frontal variations are the most frequently available data. Other data, such as glacier volume, shape, aspect, steepness and elevation, are mandatory for models but not always available for Alpine glaciers. Moreover, high mountain regions present some peculiarities, hosting mixed ecosystems where glaciers, rocks, debris, streams and lakes continuously interact. These features make the Alps particularly sensitive to climatic variations, which can lead to important environmental hazards, such as landslides and floods, and to significant changes in water availability and quality [64,65].

The estimation of the future evolution of all glaciers in the European Alps was pioneered by Haeberli and Hoelzle [66], who combined glacier inventory data with a parameterisation scheme to predict the future evolution of the Alpine ice mass. More recently, Zekollari et al. [67] proposed a model of glacier evolution in Europe that brings together the experience gained in recent decades and makes intensive use of inventory data.

In this context, a glacier DT should be able to integrate the peculiarities of both glacier inventories and models, continuously updating their features and inputs through the exploitation of the open and official EO data and services that institutional players keep offering through downstream chains. Glaciologists, for their part, should list specific requirements to address to institutional service providers, making those services increasingly consistent with their expectations. At the same time, they should define and provide standards for both descriptors and models to make results and simulations around the world homogeneous. Climatic zonation criteria should also be rigorously defined, making explicit their dependence on the level of detail/scale of the analysis and enabling a more effective translation of the single-glacier approach into the multi-glacier one.
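To make the idea of shared descriptor standards more concrete, the sketch below defines a hypothetical per-glacier, per-date record with a few consistency checks. The field names, units and validation rules are our assumptions, not an existing standard (GLIMS and RGI define their own attribute sets); the `climatic_zone` field reflects the zonation step advised above.

```python
from dataclasses import asdict, dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class GlacierDescriptor:
    """One standardized record per glacier and survey date (hypothetical schema)."""
    glacier_id: str         # inventory identifier
    survey_date: date
    area_km2: float
    z_min_m: float
    z_max_m: float
    z_median_m: float
    mean_slope_deg: float
    mean_aspect_deg: float  # clockwise from north
    climatic_zone: str      # label from an agreed zonation scheme
    source: str             # e.g. "Sentinel-2", "in situ"
    volume_km3: Optional[float] = None

    def __post_init__(self) -> None:
        # Basic consistency checks that a shared standard could enforce
        if self.area_km2 <= 0:
            raise ValueError("area must be positive")
        if not (self.z_min_m <= self.z_median_m <= self.z_max_m):
            raise ValueError("elevations must satisfy z_min <= z_median <= z_max")
        if not (0.0 <= self.mean_slope_deg <= 90.0):
            raise ValueError("slope must lie in [0, 90] degrees")
        if not (0.0 <= self.mean_aspect_deg < 360.0):
            raise ValueError("aspect must lie in [0, 360) degrees")


record = GlacierDescriptor("G001", date(2022, 9, 15), 2.0, 2650.0, 3480.0, 3020.0,
                           21.5, 15.0, "zone-A", "Sentinel-2")
as_dict = asdict(record)  # plain dict, e.g. for serialization to a shared database
```

Freezing the record keeps each survey immutable, so change over time is represented by new records rather than by overwriting old ones.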

2.4. The Alpine Ecosystem: A Perfect Site for DT Prototyping

In the European context, the Alpine mountain range is of fundamental importance from several points of view: geomorphological, natural, historical and cultural. Owing to its central position among several States, i.e., France, Italy, Switzerland, Liechtenstein, Austria, Germany and Slovenia, the Alpine Region is characterized by great cultural diversity and richness. Moreover, the peculiar coexistence of humans and nature, dating back to around 13,500 BC, has generated a unique biodiversity in this mountain environment [68].

Thanks to these characteristics, the Alpine chain is a perfect “test site”, also in the context of the Digital Twin, offering multiple types of applications linked to different scientific fields, especially in view of the future challenges the Alps will face, such as climate change, demographic change, land abandonment, energy saving/production/supply/storage, and conservation of cultural and natural heritage [69].

The Alpine ecosystem is unique and, at the same time, one of the most fragile and endangered natural ecosystems. Indeed, the European Alps are among the regions where glaciers are shrinking fastest, and the melting of glaciers and, especially, the thawing of permafrost are destabilizing the mountain slopes themselves.

For these reasons, in recent decades the number of studies and dedicated projects focused on the Alpine ecosystem has increased, driven by the need to pursue sustainable, shared territorial development and to strengthen joint actions. A dedicated “Macroregional strategy”, the EU Strategy for the Alpine Region (EUSALP), has been developed by the European Council; it provides opportunities to improve cooperation among the Alpine States and to guide relevant policy instruments at EU, national and regional levels [70].

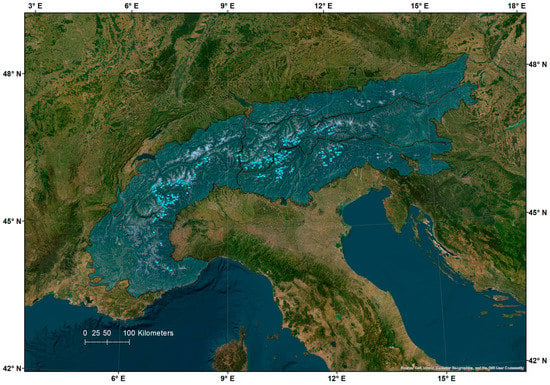

The retreat and melting of Alpine glaciers (Figure 4) are increasingly rapid compared with the observations of past decades (Table 1), requiring frequent monitoring and equally frequent updating of glacier inventories.

Figure 4.

Map showing the distribution of Italian glaciers and the International Standardized Mountain Subdivision of the Alps (SOIUSA). Data sources: [71,78]. Base map: World Imagery (source: Esri, Maxar, Earthstar Geographics, and the GIS User Community).

Table 1.

Area and changes of the Italian glaciers (time span 1957–2015), grouped by the Italian administrative region in which they are located. Data sources: [71,72,73,74,75,76,77,78,79,80].


| Italian Administrative Region | Number of Glaciers (New Inventory) | Number of Glaciers (CGI Inventory) | Cumulative Area, New Inventory (km²) | Cumulative Area, CGI Inventory (km²) | Change in Number of Glaciers | Area Change (km²) | Area Change (%) |
|---|---|---|---|---|---|---|---|
| Piemonte | 107 | 115 | 28.55 | 55.84 | −8 | −27.29 | −49 |
| Valle d’Aosta | 192 | 204 | 132.90 | 180.91 | −12 | −48.01 | −27 |
| Lombardia | 230 | 185 | 87.67 | 114.86 | +45 | −27.19 | −24 |
| Trentino | 115 | 91 | 30.96 | 46.47 | +24 | −15.51 | −33 |
| Alto Adige | 212 | 206 | 84.58 | 122.66 | +6 | −38.08 | −31 |
| Veneto | 38 | 26 | 3.21 | 5.70 | +12 | −2.49 | −44 |
| Friuli Venezia Giulia | 7 | 7 | 0.19 | 0.38 | 0 | −0.19 | −50 |
| Abruzzo | 2 | 1 | 0.04 | 0.06 | +1 | −0.02 | −33 |
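The change columns of Table 1 follow directly from the two inventories: the change in glacier number is (new - CGI), and the relative area change is (new - CGI)/CGI. The short Python sketch below recomputes them from the tabulated values as a consistency check and, for illustration only, also sums the regions into a total that Table 1 does not report. Only the tabulated numbers are used; nothing else is assumed.

```python
# Per-region values transcribed from Table 1:
# (glaciers new inventory, glaciers CGI inventory, area new (km²), area CGI (km²))
TABLE_1 = {
    "Piemonte": (107, 115, 28.55, 55.84),
    "Valle d'Aosta": (192, 204, 132.90, 180.91),
    "Lombardia": (230, 185, 87.67, 114.86),
    "Trentino": (115, 91, 30.96, 46.47),
    "Alto Adige": (212, 206, 84.58, 122.66),
    "Veneto": (38, 26, 3.21, 5.70),
    "Friuli Venezia Giulia": (7, 7, 0.19, 0.38),
    "Abruzzo": (2, 1, 0.04, 0.06),
}


def area_change(new_km2: float, old_km2: float) -> tuple[float, float]:
    """Absolute (km²) and relative (%) change from the older to the newer inventory."""
    delta = new_km2 - old_km2
    return delta, 100.0 * delta / old_km2


def summarize(table: dict) -> dict:
    """Recompute the three change columns of Table 1, plus an all-region total."""
    rows = {}
    for region, (n_new, n_old, a_new, a_old) in table.items():
        d_area, d_pct = area_change(a_new, a_old)
        rows[region] = (n_new - n_old, round(d_area, 2), round(d_pct))
    # Column sums over all regions (derived here, not reported in Table 1)
    n_new, n_old, a_new, a_old = (sum(col) for col in zip(*table.values()))
    d_area, d_pct = area_change(a_new, a_old)
    rows["Total"] = (n_new - n_old, round(d_area, 2), round(d_pct))
    return rows


if __name__ == "__main__":
    for region, (d_n, d_a, d_p) in summarize(TABLE_1).items():
        print(f"{region:22s} {d_n:+4d} {d_a:+8.2f} km² {d_p:+4d} %")
```

Rounding the relative change to whole percentages reproduces the last column of the table, which is a quick way to catch transcription errors when new inventory releases are ingested.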

For this reason, implementing a dedicated DT, continuously fed by EO data, in situ measurements and artificial-intelligence-based modelling, would provide a powerful tool to monitor and simulate the behaviour and characteristics of glaciers, thus also enabling the evaluation of any changes and of the related responses of the ecosystem. In this sense, the DT of the Alpine glaciers can be regarded as a decision-support tool for local administrators, providing an updated and dynamic overview of the territory that is useful for understanding past and present changes and forecasting their possible future evolution.

3. DT Theoretical Framework

Starting from glaciers as physical assets, described with reference to national and international glacier inventories, a Digital Twin theoretical framework for Italian Alpine glaciers can initially be drafted at the single-glacier level, with the aim of moving towards an integrated approach in which the DTs of individual glaciers are linked at the regional level to obtain models that include their mutual interactions.

Whichever direction is followed, it must be kept in mind that a DT differs from a multi-temporal 3D model of glaciers, since it can generate dynamic scenarios by exploiting simpler or more complex models that describe the actual and potential evolution of the system. In general, modelling is achieved by considering the different features of the glacier separately, with levels of complexity that depend on the modelled feature, the expected accuracy of the outputs, and the application.

From this point of view, a first important task for a DT is to remove any possibility of misunderstanding for users through a rigorous definition of a reference glossary. A good example, explicitly aimed at making non-specialist users properly aware of the concepts underlying glacier modelling, is given in [75].

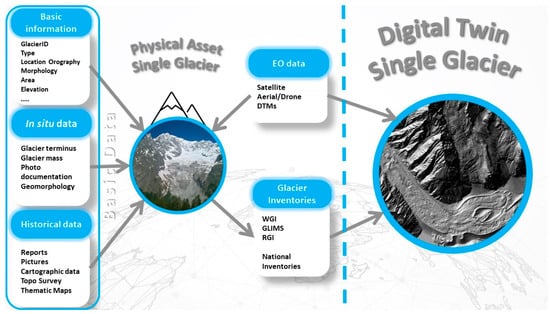

3.1. DT at the Single Glacier Level

Elements that act as building blocks for the definition of the DT for a single glacier are reported in Figure 5 and described in the next sections.

Figure 5.

Digital Twin theoretical framework at the single glacier level.

Such elements have been partially identified thanks to existing research on the structuring of glacier inventories, at both worldwide and national levels. Since 1977 [76], many works have reported useful “Instructions for Compilation and Assemblage of Data for a World Glacier Inventory”. They show that the exhaustive identification of a single glacier’s parameters, needed to build an appropriate glacier inventory at any level (international, national and local single glacier), should be organized around basic and auxiliary parameters. Basic parameters relate to the identification, localization and morphometric description of the glacier; auxiliary parameters, on the other hand, refer to physical information on the multi-temporal characteristics of the glacier itself. Both groups of parameters are needed to feed national and worldwide inventories, with the aim of building general and up-to-date knowledge of this important part of the cryosphere.

In the Italian national context, these parameters have been identified and collected since the beginning of the last century [72,73] and, more recently, by Nigrelli et al. (2013) [21] and Salvatore et al. (2015) [77].

3.1.1. Basic (Glaciological) Data

According to [76] (see also Figure 5), a basic description of a glacier can be obtained with reference to three types of information: (i) basic information; (ii) in situ data, i.e., measurements collected during glaciological campaigns; and (iii) historical documentation.

From a physical-asset point of view, each glacier listed in an inventory is a target whose description relies on data collected through both traditional surveying methods and, nowadays, EO.

As far as basic information is concerned, the following items have to be obtained: (i) glacier identification through a unique code and/or name; (ii) glacier type; (iii) glacier location and local topography/orography; (iv) administrative data, allowing the glacier to be associated with the most appropriate administrative unit (county, province, region, country); (v) glacier position in a suitable coordinate reference system; (vi) technical data about the basin hosting the glacier and its possible relationship with neighbouring basins that may reach the sea; (vii) orographic classification of the area according to both the “Traditional” and the “Suddivisione Orografica Internazionale Unificata del Sistema Alpino” (International Standardized Mountain Subdivision of the Alps, SOIUSA) systems [78]; (viii) morphological characterization, including body shape, frontal characteristics and longitudinal profile of the glacier; and (ix) morphometric features such as glacier area (m²), elevation (maximum, minimum, mean and median; m a.s.l.), thickness (m) and mean/maximum length (m).

It should be pointed out that measurements must always be associated with the survey date (day, month and year). This enables multi-temporal comparison of the glacier’s condition.
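As an illustration of how items (i)-(ix) and the dating rule could be held in a DT, the following Python sketch defines a glacier record in which every morphometric measurement carries its survey date. All class and field names are hypothetical and do not reproduce the CGI, WGI or GLIMS schemas; the sketch only shows the principle that measurements are stored as dated series, so that multi-temporal comparison reduces to a simple query.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Measurement:
    """One dated measurement of a glacier attribute."""
    quantity: str        # e.g. "area_m2", "elevation_min_m", "length_max_m"
    value: float
    survey_date: date    # day, month and year of the survey


@dataclass
class GlacierRecord:
    """Basic information for one glacier, following items (i)-(ix) of the text.

    Field names are hypothetical; they do not reproduce any official schema.
    """
    glacier_id: str      # (i) unique code
    name: str            # (i) name, if any
    glacier_type: str    # (ii)
    location: str        # (iii) local topography/orography
    admin_units: dict    # (iv) e.g. {"region": ..., "province": ...}
    x: float             # (v) position ...
    y: float
    crs: str             # (v) ... and its reference system, e.g. "EPSG:32632"
    basin: str           # (vi) hosting basin
    soiusa_code: str     # (vii) orographic classification
    morphology: dict = field(default_factory=dict)                 # (viii)
    measurements: list[Measurement] = field(default_factory=list)  # (ix), always dated

    def add_measurement(self, quantity: str, value: float, survey_date: date) -> None:
        self.measurements.append(Measurement(quantity, value, survey_date))

    def series(self, quantity: str) -> list[tuple[date, float]]:
        """(date, value) pairs for one quantity, in chronological order."""
        return sorted(
            (m.survey_date, m.value) for m in self.measurements if m.quantity == quantity
        )
```

Because measurements are never stored without a date, `series("area_m2")` directly yields the time series a model needs, regardless of the order in which campaigns were entered.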

As far as in situ data are concerned, the following are desirable: (i) direct measurements obtained from reference signs; (ii) photographic stations installed close to the glacier at fixed and stable points; and (iii) wherever possible, geomorphological observations to assess the “health status” of glaciers. In Italy, these measurements generally come from past and current glaciological campaigns carried out by the CGI (Italian Glaciological Committee) and refer to the glacier terminus and mass. New measurements from successive campaigns are expected to enable the assessment of geomorphological changes over time.

Finally, historical data can be grouped into two main classes: (i) documents reporting the general conditions of the glaciers, the main observed changes and the possible occurrence of relevant phenomena (e.g., rockfalls, lake growth/reduction); these may also include pictures (terrestrial and aerial photos), cartographic data stored in digital files, journal articles, books and unpublished studies; and (ii) topographic surveys and technical/thematic maps.

Auxiliary parameters, supporting and completing the basic ones, mainly concern the presence (or absence) of the glacier at the time of the inventory, together with any associated local codes and alternative glacier classifications based on local criteria.

3.1.2. Glacier Inventories

The organization of the above-mentioned information within a structured database (DB) can follow the indications given in the literature [21], which describe how to enter and order data, how to plan for new data, and which formats to adopt. The DB is expected to: (i) catalogue data at the glacier level; (ii) make data access and sharing possible, ideally enabling both upload and download of the data; and (iii) optimize data storage and queries, possibly profiling user communities.

These DBs are called Glacier Inventories. They mostly aim at homogenizing and synthesizing multi-source data, providing operational information useful for describing glaciers and their dynamics over time. They can be regarded as the skeleton of a DT, from which all the parameters/variables needed by models can be retrieved.
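A minimal sketch of such an inventory database, using Python's built-in `sqlite3` module, is shown below. The two-table layout (one row per glacier, one row per dated survey) and all table and column names are assumptions made for illustration; an operational inventory following [21] would need a richer schema, access control and user profiling.

```python
import sqlite3

# Hypothetical two-table layout: one row per glacier, one row per dated survey.
SCHEMA = """
CREATE TABLE glacier (
    glacier_id TEXT PRIMARY KEY,
    name       TEXT,
    region     TEXT,
    soiusa     TEXT
);
CREATE TABLE survey (
    glacier_id  TEXT NOT NULL REFERENCES glacier(glacier_id),
    survey_date TEXT NOT NULL,   -- ISO 8601 (YYYY-MM-DD), so text order = time order
    source      TEXT NOT NULL,   -- e.g. 'field', 'orthophoto', 'satellite'
    area_km2    REAL,
    PRIMARY KEY (glacier_id, survey_date, source)
);
"""


def latest_area(conn: sqlite3.Connection, glacier_id: str):
    """Return (survey_date, area_km2) of the most recent survey, or None."""
    return conn.execute(
        "SELECT survey_date, area_km2 FROM survey "
        "WHERE glacier_id = ? ORDER BY survey_date DESC LIMIT 1",
        (glacier_id,),
    ).fetchone()


def region_summary(conn: sqlite3.Connection):
    """Number of catalogued glaciers per administrative region."""
    return conn.execute(
        "SELECT region, COUNT(*) FROM glacier GROUP BY region ORDER BY region"
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute(
    "INSERT INTO glacier VALUES (?, ?, ?, ?)",
    ("G001", "Hypothetical Glacier", "Piemonte", "hypothetical-section"),
)
conn.executemany(
    "INSERT INTO survey VALUES (?, ?, ?, ?)",
    [("G001", "1959-09-01", "field", 1.20), ("G001", "2011-08-30", "orthophoto", 0.85)],
)
print(latest_area(conn, "G001"))
```

Keeping surveys in a separate table means each new campaign only appends rows, while queries such as "latest area" or "all areas before a given year" stay simple.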

With a focus on the local level, and specifically on the Italian Alps, the CGI has a unique and extensive history of glaciological documentation that, when combined with spatially distributed and multi-temporal data, can provide an accurate, dynamic and complete reconstruction of recent glacier evolution, including one of the longest observation series of glacier frontal variations in the world [77]. One of the earliest glacier databases was created by Carlo Porro in 1925, listing 774 glaciers within the framework of initiatives promoted and supported by the CGI [79]. A more comprehensive and innovative database, the Italian Glacier Inventory, was developed by the CGI in cooperation with the National Research Council (CNR) in the period 1959–1962; based on map analysis and field surveys, it reports 838 glaciers covering a total area of about 500 km² [71]. In the late 1970s, the CGI joined the international team working on the World Glacier Inventory (WGI), whose synthesis was published in the late 1980s. An update of the 1959 CGI-CNR Inventory subsequently became necessary, and a photogrammetric campaign, supported by the Ministry of the Environment, was carried out to document all glaciers of the Italian Alps larger than 0.05 km². This survey campaign, named ‘Volo Italia 1988–1989’, identified 787 glaciers covering a total area of 474 km² [74]. In the period 2005–2011, a project called “The New Italian Glacier Inventory” was developed to fill the remaining gaps in the glacier inventory. It provided high-resolution colour orthophotos, which were used to map 903 glaciers covering a total area of about 370 km² [80]. This new inventory was a breakthrough in the availability of glaciological data in the Italian Alps, making environmental data on mountain regions openly available.

At the worldwide level, three inventories are recognized by the scientific community: the WGI (World Glacier Inventory) [14], GLIMS (Global Land Ice Measurement from Space) [20] and the RGI (Randolph Glacier Inventory) [13]; together they provide all the elements necessary for a detailed and accurate compilation of glacier inventories.

The World Glacier Inventory (WGI) was established during the International Hydrological Decade (1965–1974) with the aim of obtaining an accurate assessment of the amount, distribution and variation of all snow and ice masses worldwide. The inventory includes information on more than 130,000 glaciers in terms of geographic location, area, length, orientation, elevation and classification. The WGI is primarily based on aerial photographs and represents a snapshot of glacier distribution in the second half of the 20th century. It remains an important resource for understanding the current state of global glaciers. In the 1980s, remote sensing techniques and automated processing began to be adopted to accelerate inventory work, eventually leading to the establishment of the Global Land Ice Measurement from Space (GLIMS) service.

GLIMS has implemented a comprehensive database of glacier outlines and related metadata, providing information on glacier behaviour, including changes in surface area, thickness and volume, which can be effectively used to assess the effects of climate change on these important natural resources. GLIMS can be used in combination with other data layers (e.g., the WGI and the Randolph Glacier Inventory, RGI) to provide a more comprehensive view of the state of glaciers around the world. The RGI is a database of glacier outlines developed using a combination of satellite data and ground-based measurements.

By combining data from all these sources, it is possible to obtain snapshots of the world’s glaciers at different times. The mutual roles of local and global inventories can be summarized as follows: (i) local glacier inventories provide detailed information about individual glaciers within a specific region. They typically rely on a combination of ground observations and measurements as well as satellite imagery and aerial photographs. However, they are limited in scope and cover only small geographic areas, so they may not provide a comprehensive view of glacier changes across a larger region or over a long time period; (ii) worldwide glacier inventories, on the other hand, provide less detailed information, but with broader coverage and more frequent updates. The WGI, GLIMS and RGI are compiled by scientists from different countries and thus provide data and information for glaciers all over the world. Consequently, even in regional-scale applications such as the one proposed in this work (the Italian Alps), these inventories can provide useful data and become fundamental sources for feeding the DT.

Finally, a DT for Italian Alpine glacier monitoring needs to combine data from multiple glacier inventories in order to upscale information about individual glaciers to the regional or even global scale. Combining data from multiple inventories can, in fact, help to identify discrepancies and inconsistencies in individual datasets, improving the completeness and reliability of inferences about glacier dynamics and their response to climate change.
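The cross-checking idea can be sketched in Python as follows: areas for the same glaciers from two inventories are compared, glaciers present in only one inventory are listed, and pairs whose areas differ by more than a chosen tolerance are flagged. The sketch assumes that both inventories already share a glacier identifier; in practice WGI, GLIMS, RGI and national identifiers differ, and matching would first require a spatial join of the outlines. The identifiers, areas and the 10% tolerance are hypothetical.

```python
def compare_inventories(inv_a: dict, inv_b: dict, rel_tol: float = 0.10):
    """Cross-check glacier areas (km²) from two inventories.

    Both inventories are assumed to be keyed by the same glacier identifier.
    Returns (only_in_a, only_in_b, discrepant), where discrepant maps each
    shared glacier whose areas differ by more than rel_tol (relative to the
    larger value) to that relative difference.
    """
    only_a = sorted(inv_a.keys() - inv_b.keys())
    only_b = sorted(inv_b.keys() - inv_a.keys())
    discrepant = {}
    for gid in sorted(inv_a.keys() & inv_b.keys()):
        a, b = inv_a[gid], inv_b[gid]
        larger = max(a, b)
        if larger == 0:
            continue  # both report zero area: nothing to compare
        rel = abs(a - b) / larger
        if rel > rel_tol:
            discrepant[gid] = round(rel, 3)
    return only_a, only_b, discrepant


# Hypothetical areas (km²) for overlapping sets of glaciers in two inventories
local_inv = {"G1": 1.00, "G2": 0.50, "G3": 0.20}
global_inv = {"G1": 0.98, "G2": 0.40, "G4": 0.10}
print(compare_inventories(local_inv, global_inv))
```

Flagged glaciers are candidates for manual review (different survey dates, outline interpretation, debris cover) rather than automatic correction.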

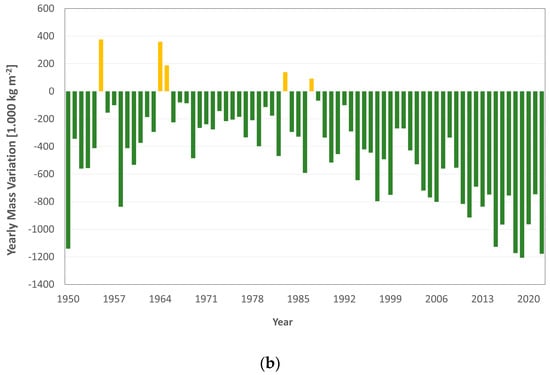

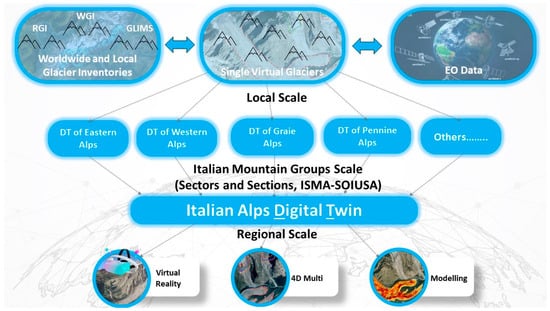

3.2. Enabling Different DT Outputs

Within the theoretical framework of the proposed DT, the approach must be scalable: the DT of a single glacier (local) must represent an element of a more comprehensive compartmental system (e.g., the entire Alpine sector) where local and regional conditions continuously interact, each determining the evolution of the other (Figure 6). For this reason, data collected by inventories should be harmonized, reorganized and processed according to the orographic setting of the Alps (International Standardized Mountain Subdivision of the Alps, ISMSA-SOIUSA [78]) rather than local and regional administrative boundaries (regions and provinces). ISMSA-SOIUSA covers the whole Alps, defining mountain ranges, sections and groups.

Figure 6.

Digital Twin of the Alps theoretical framework: a compartmental system where local models interact to generate a global response at the Alpine level, providing different output approaches depending on the user’s profile.

As illustrated in Figure 6, in the proposed DT theoretical framework, the integration of digital replicas at the single (local) glacier level yields a DT at the regional scale, e.g., a DT of the Eastern Alps, a DT of the Western Alps, and so on. A further integration of these “regional” digital replicas of glacier systems is then expected to move the DT to an upper level, corresponding to the Italian Alps Digital Twin.
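The nesting of single-glacier twins into regional and Italian-level twins can be pictured as a tree. The Python sketch below is a hypothetical, deliberately simplified data structure in which leaves are glacier twins and inner nodes (e.g., Western Alps, Eastern Alps, Italian Alps) derive their state by aggregating their children; here the state is only the glacier area. It does not model the mutual interactions described above, which would require coupling the children's models rather than summing their outputs.

```python
from dataclasses import dataclass, field


@dataclass
class TwinNode:
    """Node of a hypothetical hierarchical glacier DT.

    Leaves stand for single-glacier twins and carry a state value (here only
    the glacier area, km²); inner nodes derive their state from their
    children, so an inner node must have at least one child. Interactions
    between glaciers are NOT modelled in this sketch.
    """
    name: str
    area_km2: float = 0.0
    children: list["TwinNode"] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.children

    def total_area(self) -> float:
        if self.is_leaf():
            return self.area_km2
        return sum(child.total_area() for child in self.children)

    def glacier_count(self) -> int:
        if self.is_leaf():
            return 1
        return sum(child.glacier_count() for child in self.children)


# Hypothetical three-level hierarchy: glaciers -> sectors -> Italian Alps
western = TwinNode("Western Alps", children=[TwinNode("G1", 1.25), TwinNode("G2", 0.5)])
eastern = TwinNode("Eastern Alps", children=[TwinNode("G3", 2.0)])
italian_alps = TwinNode("Italian Alps", children=[western, eastern])
print(italian_alps.total_area(), italian_alps.glacier_count())
```

The same tree could be keyed by SOIUSA sections and groups instead of sectors; only the grouping of leaves changes, not the aggregation logic.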

Such a DT enables a set of applications related to the two main aspects of glacier monitoring at the Italian level: spatial dynamics and health status. Applications can address different user profiles, enabling different DT environments to generate the expected outputs. These “environments” differ in terms of the requirements of users and the type of outputs generated by the DT. Three main DT environment types can be defined, starting from the most advanced and scientifically grounded one: (i) Glacier Evolution Modelling (GEM), (ii) 4D Multi Glacier and (iii) Virtual Reality. All of them can be accessed at both the single- and multi-glacier level.

Glacier Evolution Modelling (GEM) is the most advanced and scientific approach for studying the behaviour of a single glacier or a system of glaciers. As previously mentioned, GEM relies on a computer-based simulation environment able to describe glacier behaviour under past, present and forecasted climatic conditions. It can therefore be used to explore how glaciers could change and evolve over time, enabling predictions of, for example, their response to ongoing variations in thermal and pluviometric regimes. In the current state of the art [81,82], GEM is mainly devoted to simulating glacier mass balance and predicting its future evolution, taking into account a combination of satellite, ground-based and climate data. GEM outputs are expected to describe the effects of glacier retreat on water resources, including changes in streamflow, water availability and quality, with the aim of properly guiding future water-management policies. This requires a systemic approach to modelling, in which all glaciers belonging to the same hydrological system jointly participate in the simulation, mutually interacting.
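To make the idea of forcing a glacier model with present and forecasted climate more concrete, the Python sketch below implements a positive-degree-day (PDD) mass-balance scheme, a classic and very simple temperature-index approach. It is an illustrative stand-in, not the method of [81,82], which is far more complex; the degree-day factor, the snow/rain temperature threshold and the input series are illustrative assumptions. A warming scenario is represented simply by shifting the daily temperatures.

```python
def pdd_mass_balance(temps_c, precip_mm, ddf_mm=6.0, t_snow=1.0):
    """Specific mass balance (mm w.e.) at one point from daily series.

    Positive-degree-day scheme: daily melt = ddf_mm * max(T, 0); precipitation
    on days colder than t_snow counts as snow accumulation. A single degree-day
    factor is used for snow and ice, and both parameter defaults are
    illustrative assumptions, not calibrated values.
    """
    if len(temps_c) != len(precip_mm):
        raise ValueError("temperature and precipitation series must have equal length")
    accumulation = sum(p for t, p in zip(temps_c, precip_mm) if t < t_snow)
    melt = ddf_mm * sum(max(t, 0.0) for t in temps_c)
    return accumulation - melt


def with_warming(temps_c, delta_c):
    """Scenario input: shift every daily temperature by delta_c (°C)."""
    return [t + delta_c for t in temps_c]


# Hypothetical five-day series
temps = [-5.0, -2.0, 0.0, 3.0, 5.0]
precip = [10.0, 10.0, 0.0, 5.0, 0.0]
print(pdd_mass_balance(temps, precip))                     # -28.0 (present conditions)
print(pdd_mass_balance(with_warming(temps, 1.0), precip))  # -46.0 (+1 °C scenario)
```

In a systemic GEM, a function like this would run per glacier (or per elevation band) and its meltwater output would feed a shared hydrological model; that coupling is outside this sketch.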

The 4D Multi Glacier approach, conversely, provides simulations of a single glacier or of a system of glaciers in a four-dimensional space that includes three spatial dimensions (x, y, z) and time. This approach [81] can provide detailed and accurate representations of glacier systems over time by integrating real-time data from various sources, such as satellite imagery, ground sensors and climate models. It relies entirely on recorded data and cannot ingest simulated climatic data to generate future scenarios.
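The distinction between the 4D Multi Glacier environment and GEM can be shown with a small Python/NumPy sketch: recorded elevation grids are stacked along time, values between two surveys are linearly interpolated, and any request outside the recorded period is refused rather than extrapolated. The class name, the grid values and the choice of linear interpolation are assumptions; the grids are assumed to be co-registered and of equal shape.

```python
from datetime import date

import numpy as np


class Glacier4D:
    """Co-registered surface elevation grids z(x, y) at recorded dates t.

    Elevations between two recorded dates are linearly interpolated in time;
    dates outside the recorded period raise an error, since this environment
    only replays recorded data and does not generate future scenarios.
    """

    def __init__(self, epochs: list[date], dems: list) -> None:
        order = sorted(range(len(epochs)), key=lambda i: epochs[i])
        self.t = np.array([epochs[i].toordinal() for i in order], dtype=float)
        self.z = np.stack([np.asarray(dems[i], dtype=float) for i in order])

    def elevation(self, row: int, col: int, when: date) -> float:
        t = float(when.toordinal())
        if not self.t[0] <= t <= self.t[-1]:
            raise ValueError(f"{when} is outside the recorded period")
        return float(np.interp(t, self.t, self.z[:, row, col]))


# Hypothetical 2 x 2 grids (m a.s.l.) surveyed in 2010 and 2000 (given out of order)
twin = Glacier4D(
    [date(2010, 1, 1), date(2000, 1, 1)],
    [[[2990.0, 2991.0], [2992.0, 2993.0]], [[3000.0, 3000.0], [3000.0, 3000.0]]],
)
print(twin.elevation(0, 0, date(2005, 1, 1)))
```

Refusing out-of-range dates is the design choice that separates this replay environment from GEM, which is the only environment expected to produce future scenarios.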

The Virtual Reality (VR) approach is relatively new and responds to dissemination needs. It is mainly intended to improve the geo-touristic experience by offering a more effective interpretation of environmental and geographic features. VR can provide an interactive experience that engages visitors and helps them understand geological and environmental processes by combining virtual elements with a live view of a place [82]. If applied to the entire Alpine Region, it would open new possibilities for a comprehensive perception of the whole system, introducing an important change of scale: from the visual representation of a single glacier to the synoptic scale of the entire Alps, providing a general vision useful for didactic purposes as well as for scientific representation. No simulation is involved, and numerous museums are already experimenting with this technique, offering visitors a virtual journey through time to explore the world of glaciers. Despite its limited scientific rigour, this type of approach could greatly strengthen communication by administrators at all levels, raising public awareness of the importance of glacier evolution and its critical aspects in the present period.

4. Discussions

A tool continuously fed with a vast, multi-temporal, multi-scale and multi-platform dataset on glacier spatial dynamics and health status could be a significant advance in glacier modelling and in understanding the future evolution of glaciers in the Italian Alps. It could provide valuable insights into the impacts of climate change on glaciers at both local and regional levels, as well as support decision-making and guide the actions of policymakers and stakeholders.

The free and open data from the Copernicus programme have greatly enhanced the ability to monitor glacier extent with frequent updates. Nevertheless, especially for Alpine glaciers, which are frequently small or medium in size, the medium spatial resolution of Sentinel data may be unsuitable for properly supporting their modelling and mapping. This situation is expected to worsen, since global warming is expected to increase the fragmentation of glaciers (more glaciers of smaller size).

Therefore, higher-spatial-resolution data (both optical and SAR) have become highly desirable [83,84,85] for accurately monitoring small to medium-sized glaciers, particularly where such glaciers predominate, as on the southern/eastern flank of the Alps [86,87]. Moreover, two-dimensional imagery alone can only produce a flat representation of glaciers, completely losing the third dimension related to their thickness and volume.
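The effect of ground sampling distance (GSD) on small glaciers can be quantified with simple arithmetic. The Python sketch below counts the pixels covering a 0.05 km² glacier (the size threshold of the ‘Volo Italia 1988–1989’ survey) and estimates, for an idealised square outline, the share of pixels lying on the glacier boundary, where mixed pixels make mapping uncertain. The square shape is a strong simplification, and the GSD values (10 m, as for the finest Sentinel-2 bands; 2 m, as expected for IRIDE; 0.5 m, as for sub-metre VHR sensors) are examples.

```python
import math


def pixels_per_glacier(area_km2: float, gsd_m: float) -> float:
    """Number of pixels of side gsd_m needed to cover area_km2."""
    return area_km2 * 1e6 / gsd_m**2


def edge_pixel_fraction(area_km2: float, gsd_m: float) -> float:
    """Rough share of pixels crossed by the outline, for an idealised square glacier.

    A square of side L holds (L/gsd)^2 pixels, of which about 4L/gsd lie on its
    perimeter, giving a fraction of about 4*gsd/L (capped at 1).
    """
    side_m = math.sqrt(area_km2 * 1e6)
    return min(1.0, 4.0 * gsd_m / side_m)


# Example GSDs for a glacier at the 0.05 km² threshold
for gsd in (10.0, 2.0, 0.5):
    print(f"GSD {gsd:4.1f} m: {pixels_per_glacier(0.05, gsd):8.0f} pixels, "
          f"{100 * edge_pixel_fraction(0.05, gsd):4.1f}% on the outline")
```

Under these assumptions, roughly one pixel in six lies on the outline at 10 m GSD, versus a few percent at 2 m, which illustrates why higher resolution matters most for the smallest glaciers.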

Data providing height information are therefore highly desirable, enabling 3D measurements with a vertical accuracy consistent with the magnitude of glacier volume changes. Detailed height data are essential to generate proper 3D models for modelling glacier changes and dynamics. Stereo, LiDAR and SAR acquisitions can play an important role, and space agencies should make efforts to properly support such acquisitions.

The revisit time of satellites over the same area is another limiting factor, also considering the strong climatic variability typical of mountainous areas. As a result, continuous monitoring at adequate geometric resolution, which requires the continued acquisition of new data, is hard to achieve. SAR missions can certainly increase the frequency of acquisitions but, especially in mountain regions, they suffer strongly from geometric distortions that weaken their effectiveness. Foreshortening, layover and shadow are the main factors limiting the use of SAR data for continuous monitoring. Moreover, data processing is demanding, and relating spectral, polarimetric and interferometric information explicitly to glaciological quantities is not straightforward. Nevertheless, SAR technology offers great opportunities for glaciers, especially when operated at high geometric resolution (see the COSMO-SkyMed, TanDEM-X and TerraSAR-X missions).
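Whether a slope suffers from foreshortening, layover or shadow depends on its steepness relative to the radar incidence angle. The Python sketch below encodes the textbook flat-Earth, single-facet rules: a slope facing the sensor is foreshortened and, if steeper than the incidence angle, laid over; a slope facing away falls into shadow once its steepness reaches 90° minus the incidence angle. The function name and the 35° incidence angle in the example are assumptions; real analyses use DEM-derived local incidence angles and the full acquisition geometry.

```python
def sar_distortion(slope_deg: float, facing_sensor: bool, incidence_deg: float) -> str:
    """Classify radar geometric distortion for one terrain facet.

    Flat-Earth, single-facet approximation: slope_deg is the terrain slope in
    the range direction; incidence_deg is the radar incidence angle on flat
    ground. Slopes facing the sensor are compressed (foreshortening) or, when
    steeper than the incidence angle, inverted (layover); slopes facing away
    fall into radar shadow once the local incidence angle reaches 90 degrees.
    """
    if not 0.0 <= slope_deg < 90.0:
        raise ValueError("slope_deg must lie in [0, 90)")
    if slope_deg == 0.0:
        return "none"
    if facing_sensor:
        local_incidence = incidence_deg - slope_deg
        return "layover" if local_incidence < 0.0 else "foreshortening"
    local_incidence = incidence_deg + slope_deg
    # Back-slopes below the shadow limit are only stretched in range
    return "shadow" if local_incidence >= 90.0 else "none"


# Example with an assumed incidence angle of 35 degrees
for slope, towards in [(20.0, True), (40.0, True), (30.0, False), (60.0, False)]:
    print(slope, "towards" if towards else "away", sar_distortion(slope, towards, 35.0))
```

Applied pixel by pixel to a DEM, rules of this kind are what make steep Alpine cirques partly unobservable from a single SAR geometry, and motivate combining ascending and descending passes.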

In this context, a major improvement is expected, especially for the Alps, from the upcoming Italian IRIDE Earth Observation programme. It will be based on a new low-orbit (500 km altitude) satellite constellation and will be developed by the Italian Government. Financial resources from the Next Generation EU programme (PNRR in Italy) will fund IRIDE, together with other complementary national funds. It is expected to become operational in 2026 under the guidance of the Italian Space Agency (ASI) and with management by ESA. IRIDE is conceived as a “constellation of constellations” offering high acquisition capabilities. It is intended as an end-to-end system with the following segments: (i) an upstream segment made of a set of sub-constellations of Low Earth Orbit (LEO) satellites; (ii) a downstream segment made of an operational ground infrastructure; and (iii) services intended to support the Italian Public Administration. The satellites of the IRIDE constellations will host a variety of SAR and optical sensors with different geometric, spectral and temporal resolutions. The combination of the IRIDE constellations will allow a daily revisit time over Italy with a GSD of about 2 m.

While waiting for IRIDE, commercial providers such as PlanetScope [88], SkySat [89] and ICEYE [90] could represent a possible solution. PlanetScope acquires optical data with a daily revisit time (at nadir) and a GSD ranging between 3.0 and 4.1 m; SkySat sensors provide optical images with a GSD of 0.57–1.0 m and a revisit time of 4–5 days; ICEYE acquires SAR data with a wide variety of combinations of spatial and temporal resolution. These data are commercial, i.e., not free. Nevertheless, they are also being included in institutional archives, opening the way to a new hybrid approach that integrates commercial and public services.

Pending more structured actions from institutional players (i.e., national or international space agencies), where high-resolution 3D data are needed, airborne platforms and UAVs (Unmanned Aerial Vehicles) can support the process at the single-glacier level. Acquisitions can be performed through multispectral digital photogrammetry or Airborne Laser Scanning (ALS) in response to specific requests from individual users.

Products obtainable from this type of acquisition (Digital Orthophoto Maps (DOMs), point clouds and Digital Surface Models (DSMs)) enable detailed monitoring of glacier dynamics [91]. DSMs can also be derived from sub-metre satellite stereo images (WorldView, Pléiades, GeoEye and the upcoming Italian IRIDE mission), partially filling the gap between global DSMs from free satellite missions and very-high-resolution DSMs from aerial/UAV surveys [92].
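Detailed monitoring from multi-temporal DSMs usually rests on DSM differencing (the geodetic method). The Python/NumPy sketch below computes volume change, mean elevation change and an average annual mass balance from two co-registered DSMs and a glacier mask. The function name is hypothetical, data gaps are simply ignored rather than filled, and the default volume-to-mass conversion density of 850 kg m⁻³ is an assumption (a value commonly used in the glaciological literature).

```python
import numpy as np


def geodetic_change(dsm_old, dsm_new, glacier_mask, pixel_size_m, years, density=850.0):
    """Volume change (m³), mean elevation change (m), mean annual mass balance (m w.e. a⁻¹).

    Assumes both DSMs are co-registered on the same grid. Pixels outside the
    glacier mask or with missing data (NaN) in either DSM are ignored, so the
    volume change covers valid pixels only (no gap filling). density (kg m⁻³)
    converts the volume change to mass.
    """
    dh = np.asarray(dsm_new, dtype=float) - np.asarray(dsm_old, dtype=float)
    valid = np.asarray(glacier_mask, dtype=bool) & np.isfinite(dh)
    if not valid.any():
        raise ValueError("no valid glacier pixels")
    pixel_area = pixel_size_m**2
    volume_change = float(dh[valid].sum() * pixel_area)
    mean_dh = volume_change / (valid.sum() * pixel_area)
    mass_balance = mean_dh * density / 1000.0 / years  # water density 1000 kg m⁻³
    return volume_change, mean_dh, mass_balance


# Hypothetical 2 x 2 DSMs (m) at 2 m resolution, four years apart, one gap
old = np.full((2, 2), 3000.0)
new = np.array([[2998.0, 2999.0], [2997.0, np.nan]])
mask = np.ones((2, 2), dtype=bool)
print(geodetic_change(old, new, mask, pixel_size_m=2.0, years=4.0))
```

In operational use, co-registration, outlier filtering and gap filling strongly affect the result; the sketch leaves all of them out.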

Especially for regional (systemic) approaches to glacier DTs, this transitional situation requires that individual acquisitions be properly coordinated by accredited players, who should propose standards and guidelines to make all information comparable.

In particular, legislative actions are expected at the national and international level to formally identify the body in charge of supervising and managing the coordination process, starting by defining proper standards for (i) data acquisition and processing, (ii) data representation, (iii) type of information and (iv) the conditions under which different information can be used.

As far as DT modelling features are concerned, we propose a scalable approach based on a facet-based logic. Facets should drive the system, according to the user’s needs, to generate a specific output by selecting/flagging the desired options offered by the system.

Options should concern: (i) output spatial resolution; (ii) output time resolution (if dynamic); (iii) type of modelled glacier parameter(s); (iv) accuracy level of estimates (if applicable); (v) type of model (single- or multi-glacier); and (vi) type of inputs (users should be able to adopt inputs from the system or to upload their own data). The output is expected to be “static” if fixed at a given past, present or future (simulated) situation, or “dynamic” if it presents a sequence of situations in the form of changing 3D models, graphs or tables. Both static and dynamic outputs could rely on actual data or on simulations whose inputs can be set arbitrarily. Both visual (perceptual) and quantitative outputs should be generated.
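The facet list above maps naturally onto a request object. The Python sketch below is a hypothetical representation of facets (i)-(vi), with the static/dynamic output type derived from whether a time step is given. The validation rule that only GEM accepts simulated climate inputs is an assumption drawn from Section 3.2, where the 4D Multi Glacier and VR environments rely on recorded data only; all names are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Environment(Enum):
    GEM = "Glacier Evolution Modelling"
    MULTI_4D = "4D Multi Glacier"
    VR = "Virtual Reality"


@dataclass(frozen=True)
class TwinRequest:
    """User-selected facets for one DT output (field names are hypothetical)."""
    environment: Environment
    spatial_resolution_m: float              # (i) output spatial resolution
    time_step_days: Optional[int]            # (ii) None means a static output
    parameters: tuple[str, ...]              # (iii) e.g. ("area", "volume")
    accuracy_m: Optional[float]              # (iv) target accuracy, if applicable
    multi_glacier: bool                      # (v) single- or multi-glacier model
    user_data_uri: Optional[str] = None      # (vi) own inputs; None = system inputs
    climate_scenario: Optional[str] = None   # simulated input, if any

    @property
    def output_type(self) -> str:
        return "static" if self.time_step_days is None else "dynamic"

    def validate(self) -> None:
        if self.spatial_resolution_m <= 0:
            raise ValueError("spatial resolution must be positive")
        if self.time_step_days is not None and self.time_step_days <= 0:
            raise ValueError("time step must be positive")
        if not self.parameters:
            raise ValueError("select at least one glacier parameter")
        # Assumed rule: only GEM runs simulations; the other environments replay recorded data
        if self.climate_scenario is not None and self.environment is not Environment.GEM:
            raise ValueError(f"{self.environment.value} does not accept simulated inputs")
```

A user interface would then only need to expose these fields as selectable options and call `validate()` before dispatching the request to the chosen environment.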

5. Conclusions

In this work, we have proposed a theoretical framework for a Digital Twin of the Italian Alpine glaciers by reviewing the basic elements it should necessarily consider: (i) EO data, (ii) auxiliary data from ground surveys or sensor networks, (iii) services and products made available by institutional players, and (iv) glacier modelling approaches. The goal of this paper was simply to kick-start the development of a Glacier DT, building on the numerous mapping and modelling experiences that the glacier-related scientific community already has. The authors explicitly intended to draft a theoretical (or philosophical) scheme that could represent the starting point for the development of an operational Alpine Glacier DT. No new algorithms, statistics or numerical results are presented, since the basic idea is that the DT, whatever form it takes, should profit from the many glacier mapping and modelling experiences that are currently running separately, awaiting harmonization and integration; the aim was rather an organized and structured representation of existing resources that can be used to develop an effective and operational Alpine glacier DT. We propose that a systemic approach, such as the one currently adopted in glacier inventories, could be properly set up to respond to the needs of a DT. We have tried to outline some guidelines, with special attention to the aspects of the process closest to the authors’ expertise (i.e., glaciology and EO).

Author Contributions

Conceptualization, V.F., L.B., L.P., E.B.-M. and P.B.; writing—original draft preparation, V.F., L.B., L.P. and E.B.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

In this study publicly available datasets were analysed, and their sources were cited in the text.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Akroyd, J.; Harper, Z.; Soutar, D.; Farazi, F.; Bhave, A.; Mosbach, S.; Kraft, M. Universal Digital Twin: Land Use. Data-Cent. Eng. 2022, 3, e3.

- El Saddik, A. Digital Twins: The Convergence of Multimedia Technologies. IEEE Multimed. 2018, 25, 87–92.

- Nativi, S.; Delipetrev, B.; Craglia, M. Destination Earth: Survey on “Digital Twins” Technologies and Activities, in the Green Deal Area. Available online: https://publications.jrc.ec.europa.eu/repository/handle/JRC122457 (accessed on 12 May 2023).

- Destination Earth—Shaping Europe’s Digital Future. Available online: https://digital-strategy.ec.europa.eu/en/policies/destination-earth (accessed on 3 April 2023).

- The Digital Europe Programme. Available online: https://digital-strategy.ec.europa.eu/en/activities/digital-programme (accessed on 3 April 2023).

- Working towards a Digital Twin of Earth. Available online: https://www.esa.int/Applications/Observing_the_Earth/Working_towards_a_Digital_Twin_of_Earth (accessed on 3 April 2023).

- Digital Twin—Antarctica. Available online: https://dte-antarctica.org/ (accessed on 3 April 2023).

- ESA. EO Science for Society. Available online: https://eo4society.esa.int/regional-initiatives/alps-regional-initiative/ (accessed on 3 April 2023).

- Earth Observation for Alpine Ecosystems ‘Eco4alps’—Alps Regional Initiative. Available online: https://eo4society.esa.int/projects/eo4alps-eo4alps/ (accessed on 3 April 2023).

- European Commission. The European Green Deal. Available online: https://commission.europa.eu/publications/communication-european-green-deal_en (accessed on 3 April 2023).

- Stocker, T.F.; Qin, D.; Plattner, G.-K.; Tignor, M.M.; Allen, S.K.; Boschung, J.; Nauels, A.; Xia, Y.; Bex, V.; Midgley, P.M. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. In Climate Change 2013: The Physical Science Basis; IPCC: Geneva, Switzerland, 2014.

- Shugar, D.H.; Jacquemart, M.; Shean, D.; Bhushan, S.; Upadhyay, K.; Sattar, A.; Schwanghart, W.; McBride, S.; de Vries, M.V.W.; Mergili, M.; et al. A Massive Rock and Ice Avalanche Caused the 2021 Disaster at Chamoli, Indian Himalaya. Science 2021, 373, 300–306.

- Randolph Consortium. The Randolph Glacier Inventory: A Globally Complete Inventory of Glaciers. J. Glaciol. 2014, 60, 537–552.

- World Glacier Inventory, Version 1. Available online: https://nsidc.org/data/g01130/versions/1 (accessed on 3 April 2023).

- Howarth, P.J.; Ommanney, C.S.L. The Use of Landsat Digital Data for Glacier Inventories. Ann. Glaciol. 1986, 8, 90–92.

- Ohmura, A. Completing the World Glacier Inventory. Ann. Glaciol. 2009, 50, 144–148.

- Gratton, D.J.; Howarth, P.J.; Marceau, D.J. Combining DEM Parameters with Landsat MSS and TM Imagery in a GIS for Mountain Glacier Characterization. IEEE Trans. Geosci. Remote Sens. 1990, 28, 766–769.

- Williams, R.S.; Hall, D.K.; Benson, C.S. Analysis of Glacier Facies Using Satellite Techniques. J. Glaciol. 1991, 37, 120–128.

- Bayr, K.J.; Hall, D.K.; Kovalick, W.M. Observations on Glaciers in the Eastern Austrian Alps Using Satellite Data. Int. J. Remote Sens. 1994, 15, 1733–1742.

- GLIMS; NSIDC. Compiled and made available by the international GLIMS community and the National Snow and Ice Data Center. In Global Land Ice Measurements from Space Glacier Database; NSIDC: Boulder, CO, USA, 2018.

- Nigrelli, G.; Chiarle, M.; Nuzzi, A.; Perotti, L.; Torta, G.; Giardino, M. A Web-Based, Relational Database for Studying Glaciers in the Italian Alps. Comput. Geosci. 2013, 51, 101–107.

- Taschner, S.; Ranzi, R. Comparing the Opportunities of Landsat-TM and ASTER Data for Monitoring a Debris Covered Glacier in the Italian Alps within the GLIMS Project. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; pp. 1044–1046.

- Kääb, A.; Huggel, C.; Paul, F.; Wessels, R.; Raup, B.; Kieffer, H.; Kargel, J. Glacier Monitoring from ASTER Imagery: Accuracy and Applications. In Proceedings of the EARSeL-LISSIG Workshop Observing Our Cryosphere from Space, Bern, Switzerland, 11–13 March 2002; pp. 43–53.

- Toutin, T. ASTER DEMs for Geomatic and Geoscientific Applications: A Review. Int. J. Remote Sens. 2008, 29, 1855–1875.

- Bolch, T.; Kamp, U. Glacier Mapping in High Mountains Using DEMs, Landsat and ASTER Data. In Proceedings of the 8th International Symposium on High Mountain Remote Sensing Cartography, La Paz, Bolivia, 20 March 2005.

- Paul, F.; Kääb, A.; Maisch, M.; Kellenberger, T.; Haeberli, W. The New Remote-Sensing-Derived Swiss Glacier Inventory: I. Methods. Ann. Glaciol. 2002, 34, 355–361.

- Kääb, A.; Paul, F.; Maisch, M.; Hoelzle, M.; Haeberli, W. The New Remote-Sensing-Derived Swiss Glacier Inventory: II. First Results. Ann. Glaciol. 2002, 34, 362–366.

- Paul, F.; Frey, H.; Le Bris, R. A New Glacier Inventory for the European Alps from Landsat TM Scenes of 2003: Challenges and Results. Ann. Glaciol. 2011, 52, 144–152.

- Kääb, A.; Bolch, T.; Casey, K.; Heid, T.; Kargel, J.S.; Leonard, G.J.; Paul, F.; Raup, B.H. Glacier Mapping and Monitoring Using Multispectral Data. Glob. Land Ice Meas. Space 2014, 75–112.

- Barella, R.; Callegari, M.; Marin, C.; Klug, C.; Sailer, R.; Galos, S.P.; Dinale, R.; Gianinetto, M.; Notarnicola, C. Combined Use of Sentinel-1 and Sentinel-2 for Glacier Mapping: An Application Over Central East Alps. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4824–4834.

- Muhuri, A.; Gascoin, S.; Menzel, L.; Kostadinov, T.S.; Harpold, A.A.; Sanmiguel-Vallelado, A.; López-Moreno, J.I. Performance Assessment of Optical Satellite-Based Operational Snow Cover Monitoring Algorithms in Forested Landscapes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7159–7178.

- Gascoin, S.; Grizonnet, M.; Bouchet, M.; Salgues, G.; Hagolle, O. Theia Snow Collection: High-Resolution Operational Snow Cover Maps from Sentinel-2 and Landsat-8 Data. Earth Syst. Sci. Data 2019, 11, 493–514.

- Paul, F.; Barry, R.G.; Cogley, J.G.; Frey, H.; Haeberli, W.; Ohmura, A.; Ommanney, C.S.L.; Raup, B.; Rivera, A.; Zemp, M. Recommendations for the Compilation of Glacier Inventory Data from Digital Sources. Ann. Glaciol. 2009, 50, 119–126.

- Robson, B.A.; Nuth, C.; Dahl, S.O.; Hölbling, D.; Strozzi, T.; Nielsen, P.R. Automated Classification of Debris-Covered Glaciers Combining Optical, SAR and Topographic Data in an Object-Based Environment. Remote Sens. Environ. 2015, 170, 372–387.

- Jiang, Z.; Liu, S.; Wang, X.; Lin, J.; Long, S. Applying SAR Interferometric Coherence to Outline Debris-Covered Glacier. In Proceedings of the 19th International Conference on Geoinformatics, Shanghai, China, 24 June 2011; pp. 1–4.

- Tsai, Y.-L.S.; Dietz, A.; Oppelt, N.; Kuenzer, C. Remote Sensing of Snow Cover Using Spaceborne SAR: A Review. Remote Sens. 2019, 11, 1456.

- Tsai, Y.; Lin, S.; Kim, J. Tracking Greenland Russell Glacier Movements Using Pixel-Offset Method. J. Photogramm. Remote Sens. 2018, 23, 173–189.

- Berthier, E.; Arnaud, Y.; Kumar, R.; Ahmad, S.; Wagnon, P.; Chevallier, P. Remote Sensing Estimates of Glacier Mass Balances in the Himachal Pradesh (Western Himalaya, India). Remote Sens. Environ. 2007, 108, 327–338.

- Wang, D.; Kääb, A. Modeling Glacier Elevation Change from DEM Time Series. Remote Sens. 2015, 7, 10117–10142.

- Willis, M.J.; Melkonian, A.K.; Pritchard, M.E.; Ramage, J.M. Ice Loss Rates at the Northern Patagonian Icefield Derived Using a Decade of Satellite Remote Sensing. Remote Sens. Environ. 2012, 117, 184–198.

- Borgogno Mondino, E. Multi-Temporal Image Co-Registration Improvement for a Better Representation and Quantification of Risky Situations: The Belvedere Glacier Case Study. Geomat. Nat. Hazards Risk 2015, 6, 362–378.

- Chandler, B.M.; Lovell, H.; Boston, C.M.; Lukas, S.; Barr, I.D.; Benediktsson, Í.Ö.; Benn, D.I.; Clark, C.D.; Darvill, C.M.; Evans, D.J. Glacial Geomorphological Mapping: A Review of Approaches and Frameworks for Best Practice. Earth Sci. Rev. 2018, 185, 806–846.

- EU-DEM v1.1. Available online: https://land.copernicus.eu/imagery-in-situ/eu-dem/eu-dem-v1.1 (accessed on 3 April 2023).

- Copernicus DEM—Global and European Digital Elevation Model (COP-DEM). Available online: https://spacedata.copernicus.eu/en/web/guest/collections/copernicus-digital-elevation-model (accessed on 3 April 2023).

- 30-Meter SRTM Tile Downloader. Available online: https://dwtkns.com/srtm30m/ (accessed on 3 April 2023).

- ASTER Global Digital Elevation Model V003. Available online: https://search.earthdata.nasa.gov/search/?fi=ASTER (accessed on 3 April 2023).

- NASADEM Merged DEM Global 1 Arc Second. Available online: https://lpdaac.usgs.gov/products/nasadem_hgtv001/ (accessed on 3 April 2023).

- TanDEM-X—Digital Elevation Model (DEM)—Global, 90 m. Available online: https://download.geoservice.dlr.de/TDM90/ (accessed on 3 April 2023).

- MERIT DEM: Multi-Error-Removed Improved-Terrain DEM. Available online: http://hydro.iis.u-tokyo.ac.jp/~yamadai/MERIT_DEM/index.html (accessed on 3 April 2023).

- ALOS Global Digital Surface Model ALOS World 3D—30m (AW3D30). Available online: https://www.eorc.jaxa.jp/ALOS/en/dataset/aw3d30/aw3d30_e.htm (accessed on 3 April 2023).

- Tinitaly DEM. Available online: http://tinitaly.pi.ingv.it/2023 (accessed on 3 April 2023).

- Data and Information Access Services. Available online: https://www.copernicus.eu/en/access-data/dias (accessed on 3 April 2023).

- The INSPIRE Geoportal. Available online: https://inspire-geoportal.ec.europa.eu (accessed on 3 April 2023).

- European Environment Agency—Data and Maps. Available online: https://www.eea.europa.eu/data-and-maps (accessed on 3 April 2023).

- European Environment Agency—Glacier Distribution in Europe. Available online: https://www.eea.europa.eu/data-and-maps/figures/glacier-distribution-in-europe (accessed on 3 April 2023).

- European Environment Agency—Casualties of 1999 Avalanches. Available online: https://www.eea.europa.eu/data-and-maps/figures/casualties-of-1999-avalanchessualtiesof1999avalanches (accessed on 3 April 2023).

- CORDA. Copernicus Reference Data Access. Available online: https://corda.eea.europa.eu/_layouts/15/CORDADashboard/Dashboard.aspx (accessed on 3 April 2023).

- HISTALP (Historical Instrumental Climatological Surface Time Series of the Greater Alpine Region). Available online: https://www.zamg.ac.at/histalp/datasets.php (accessed on 3 April 2023).

- MeteoSwiss—Alpine Precipitation Datasets. Available online: https://www.meteoswiss.admin.ch/climate/the-climate-of-switzerland/spatial-climate-analyses/alpine-precipitation.html (accessed on 3 April 2023).

- Copernicus Climate Change Service—LAPrec Data Access. Available online: https://surfobs.climate.copernicus.eu/dataaccess/access_laprec.php (accessed on 3 April 2023).

- Richardson, S.D.; Reynolds, J.M. An Overview of Glacial Hazards in the Himalayas. Quat. Int. 2000, 65, 31–47.

- Maussion, F.; Butenko, A.; Champollion, N.; Dusch, M.; Eis, J.; Fourteau, K.; Gregor, P.; Jarosch, A.H.; Landmann, J.; Oesterle, F. The Open Global Glacier Model (OGGM) v1.1. Geosci. Model Dev. 2019, 12, 909–931.

- Parkes, D.; Goosse, H. Modelling Regional Glacier Length Changes over the Last Millennium Using the Open Global Glacier Model. Cryosphere 2020, 14, 3135–3153.

- Zemp, M.; Haeberli, W.; Hoelzle, M.; Paul, F. Alpine Glaciers to Disappear within Decades? Geophys. Res. Lett. 2006, 33, L13504.

- Peano, D.; Chiarle, M.; von Hardenberg, J. Glacier Dynamics in the Western Italian Alps: A Minimal Model Approach. Cryosphere Discuss. 2014, 8, 1479–1516.

- Haeberli, W.; Hölzle, M. Application of Inventory Data for Estimating Characteristics of and Regional Climate-Change Effects on Mountain Glaciers: A Pilot Study with the European Alps. Ann. Glaciol. 1995, 21, 206–212.

- Zekollari, H.; Huss, M.; Farinotti, D. Modelling the Future Evolution of Glaciers in the European Alps under the EURO-CORDEX RCM Ensemble. Cryosphere 2019, 13, 1125–1146.

- Bosello, F.; Marazzi, L.; Nunes, P. Le Alpi Italiane e il Cambiamento Climatico: Elementi di Vulnerabilità Ambientale ed Economica, e Possibili Strategie di Adattamento. In Proceedings of the APAT Workshop on “Cambiamenti Climatici e Ambienti Nivo-Glaciali: Scenari e Prospettive di Adattamento”, Saint Vincent, Italy, 2–3 July 2007; pp. 2–3.

- Alps2050—Common Spatial Perspectives for the Alpine Area. Available online: https://www.espon.eu/sites/default/files/attachments/01_alps_2050_FR_main_report.pdf (accessed on 3 April 2023).

- EUSALP. Available online: https://www.alpine-region.eu/ (accessed on 3 April 2023).

- Consiglio Nazionale delle Ricerche. Ghiacciai della Lombardia e dell’Ortles-Cevedale. In Catasto Dei Ghiacciai Italiani, Anno Geofisico Internazionale 1957–1958; Comitato Glaciologico Italiano: Torino, Italy, 1961; Volume 3, p. 389.

- CGI—Comitato Glaciologico Italiano Relazioni Delle Campagne Glaciologiche—Reports of the Glaciological Surveys. Boll. Del Com. Glaciol. Ital. 1928, 1–25.

- CGI—Comitato Glaciologico Italiano Relazioni Delle Campagne Glaciologiche—Reports of the Glaciological Surveys. Geogr. E Din. Quat. 1978, 1–34.

- Brancucci, G.; Carton, A.; Salvatore, M.C. Changes in the Number and Area of Italian Alpine Glaciers between 1958 and 1989. Geogr. Fis. Din. Quat. 1997, 20, 293.

- Antarctic Glaciers. Available online: https://www.antarcticglaciers.org/glaciers-and-climate/numerical-ice-sheet-models/numerical-modelling-glossary/#references (accessed on 3 April 2023).

- Müller, F.; Caflisch, T.; Müller, G. Instructions for Compilation and Assemblage of Data for a World Glacier Inventory; Department of Geography, Swiss Federal Institute of Technology: Zurich, Switzerland, 1977.

- Salvatore, M.C.; Zanoner, T.; Baroni, C.; Carton, A.; Banchieri, F.A.; Viani, C.; Giardino, M.; Perotti, L. The State of Italian Glaciers: A Snapshot of the 2006–2007 Hydrological Period. Geogr. Fis. Din. Quat. 2015, 38, 175–198.

- Marazzi, S. Atlante Orografico Delle Alpi: SOIUSA: Suddivisione Orografica Internazionale Unificata del Sistema Alpino; Priuli & Verlucca: Torino, Italy, 2005.

- Porro, C. Elenco dei Ghiacciai Italiani; Orsatti & Zinelli: Ufficio idrografico del Po, Italy, 1925; Volume 1.