Monitoring Fine-Scale Forest Health Using Unmanned Aerial Systems (UAS) Multispectral Models

Abstract

1. Introduction

- Determine the capability of UAS imagery for classifying forest health at the individual tree level.

- Compare forest health classification results from UAS imagery with those from high-resolution, multispectral airborne imagery.

2. Materials and Methods

2.1. Study Areas

2.2. Assessing Forest Health: Field and Photo

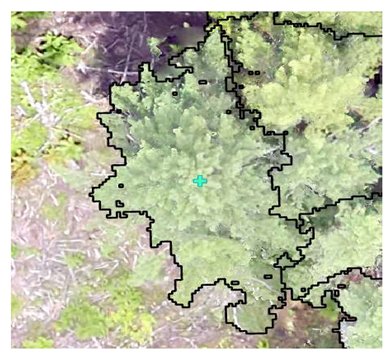

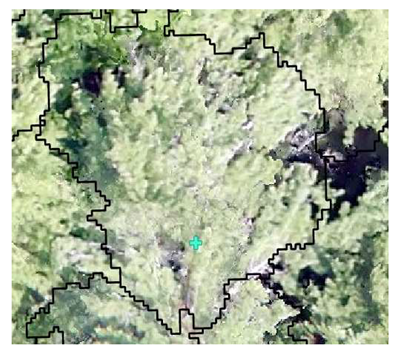

- Coniferous (C)—Healthy coniferous trees (e.g., eastern white pine or eastern hemlock) identified as having minimal or no signs of stress, which are calculated using the stress index as classes 1, 2, or 3.

- Deciduous (D)—Healthy deciduous trees (e.g., American beech, white ash, or Northern red oak) identified as having minimal or no signs of stress, which are calculated using the stress index as classes 1, 2, or 3.

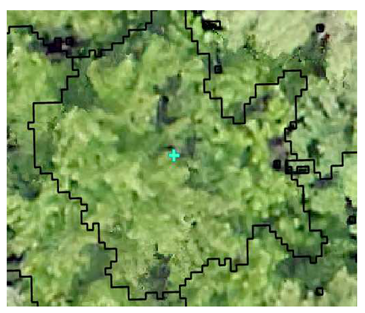

- Coniferous Stressed (CS)—Stressed coniferous trees, displaying moderate or severe reductions in crown vigor, which are calculated using the stress index as classes 4 through 9.

- Deciduous Stressed (DS)—Stressed deciduous trees, displaying moderate or severe reductions in crown vigor, which are calculated using the stress index as classes 4 through 9.

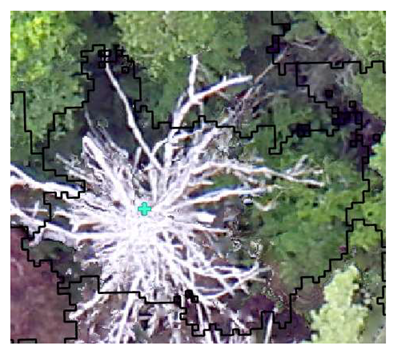

- Degraded/Dead (Snag)—Coniferous or deciduous trees identified as stress class 10 (dead) which represent the most degraded of each health attribute.
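The class definitions above amount to a simple lookup from stress index class and tree type to one of the five health labels. The following is a minimal sketch of that mapping, assuming the stress index is already available as an integer from 1 to 10; the function name `health_class` and its arguments are hypothetical and are not taken from the study's workflow.

```python
def health_class(stress_index: int, is_conifer: bool) -> str:
    """Map a stress index class (1-10) and tree type to a health label.

    Thresholds follow the class definitions in the text:
    1-3 healthy, 4-9 stressed, 10 dead (Snag, regardless of tree type).
    """
    if not 1 <= stress_index <= 10:
        raise ValueError("stress index class must be between 1 and 10")
    if stress_index == 10:
        return "Snag"
    base = "C" if is_conifer else "D"
    # Classes 4 through 9 receive the "Stressed" suffix.
    return base if stress_index <= 3 else base + "S"


# Example calls using stress index values like those in Appendix A.
for si, conifer in [(2, True), (7, True), (6, False), (10, False)]:
    print(si, conifer, health_class(si, conifer))
```

Keeping the thresholds in one function makes it easy to test alternative groupings, such as the combined healthy/stressed/degraded scheme, without changing the field data.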

2.3. Assessing Forest Health: Digital Image Classification

2.3.1. Airborne Imagery

2.3.2. UAS Imagery

2.4. Forest Health Accuracy Assessment

3. Results

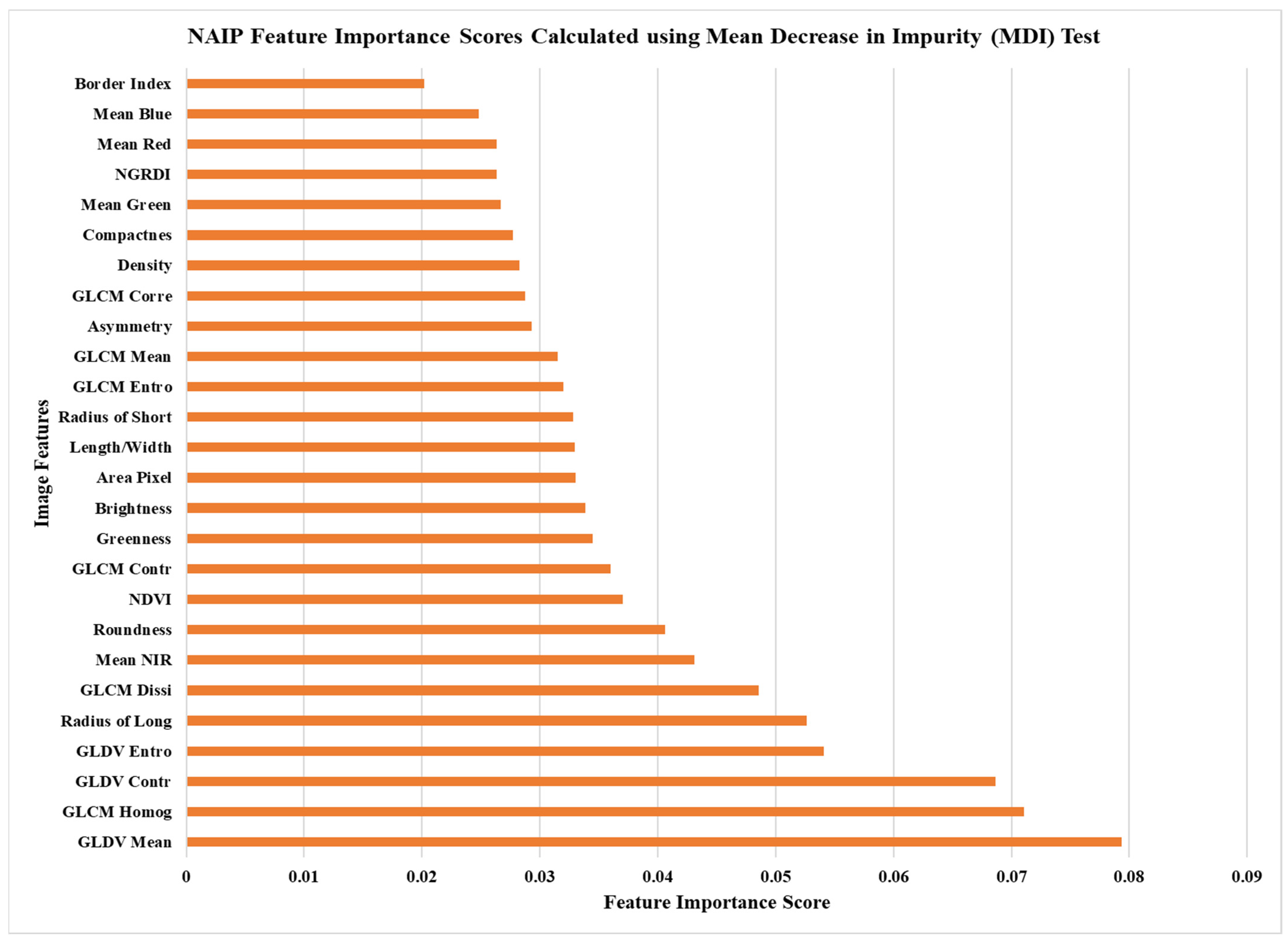

3.1. Airborne Imagery

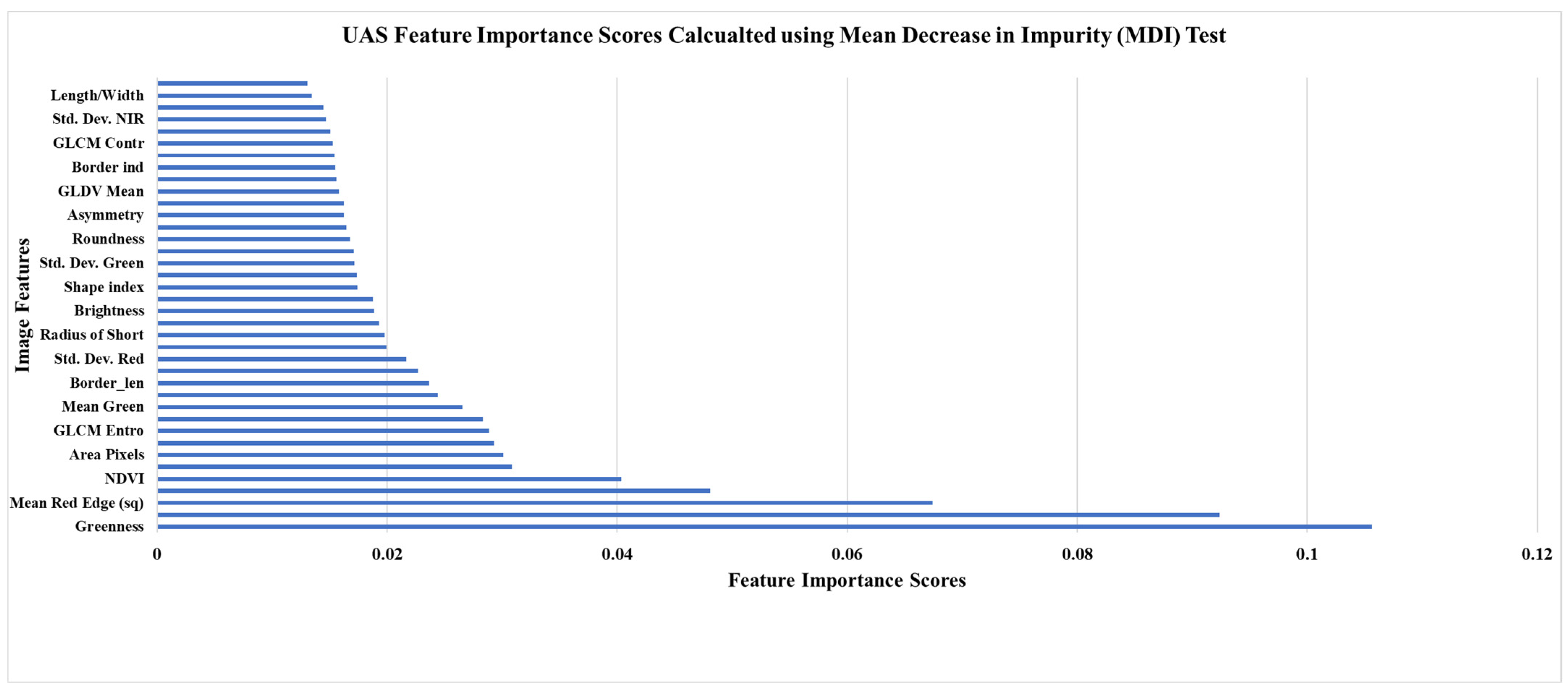

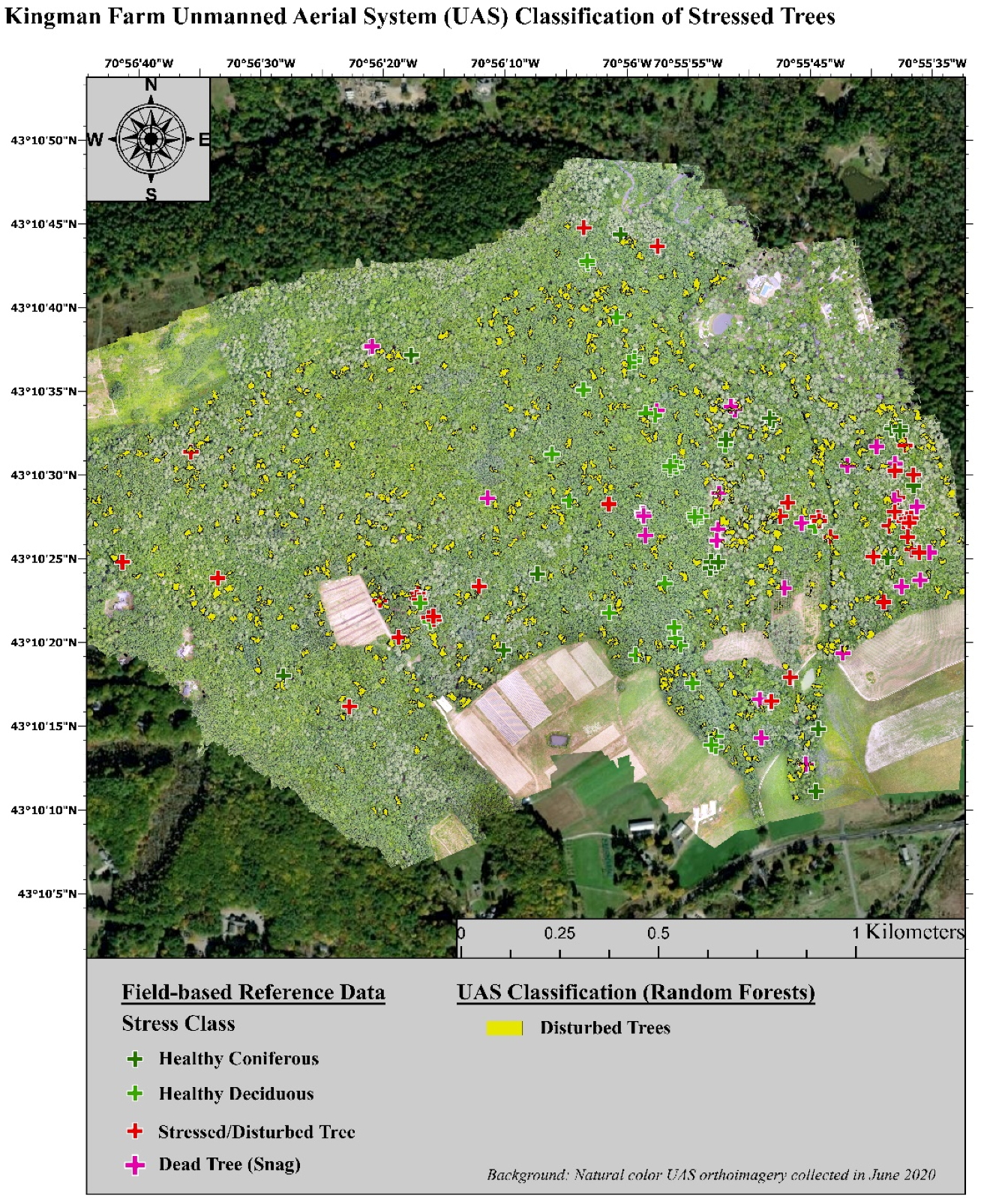

3.2. UAS Imagery

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| | Healthy | Stressed | Dead |
|---|---|---|---|
| Conifer: Field Survey | SI = 2 | SI = 7 | SI = 10 |
| Conifer: Photo Interpretation | | | |
| Deciduous: Field Survey | SI = 2 | SI = 6 | SI = 10 |
| Deciduous: Photo Interpretation | | | |

| Image Classification Features | | |
|---|---|---|
| Geometric | Texture | Spectral |
| Area (Pixels); Asymmetry; Border Index; Border Length; Compactness; Density; Length\Width; Radius of Long Ellipsoid; Radius of Short Ellipsoid; Shape Index; UAS Only | GLCM Contrast; GLCM Correlation; GLCM Dissimilarity; GLCM Entropy; GLCM Mean; GLDV Entropy; GLDV Mean; GLDV Contrast | Brightness; Greenness Index; Mean red (SODA\NAIP); Mean green (SODA\NAIP); Mean blue (SODA\NAIP); Mean green (Sequoia); Mean red (Sequoia); Mean NIR (Sequoia\NAIP); Mean red edge (Sequoia); NDVI; NGRDI; Std. Dev. red (SODA\NAIP); Std. Dev. green (SODA\NAIP); Std. Dev. blue (SODA\NAIP); Std. Dev. green (Sequoia); Std. Dev. red (Sequoia); Std. Dev. NIR (Sequoia\NAIP); Std. Dev. red edge (Sequoia) |
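Two of the spectral features listed above, NDVI and NGRDI, are standard normalized difference indices: NDVI = (NIR − Red) / (NIR + Red) and NGRDI = (Green − Red) / (Green + Red). The sketch below computes both from per-object mean band values. The band values in the example are hypothetical reflectances chosen for illustration, and returning 0.0 for an all-zero denominator is an assumption of this sketch rather than something specified in the source.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b); 0.0 when both inputs sum to zero (assumed convention)."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index from mean NIR and red values."""
    return normalized_difference(nir, red)


def ngrdi(green: float, red: float) -> float:
    """Normalized Green-Red Difference Index from mean green and red values."""
    return normalized_difference(green, red)


# Hypothetical mean band values for a single tree crown object.
crown = {"nir": 0.45, "red": 0.05, "green": 0.08}
print("NDVI:", round(ndvi(crown["nir"], crown["red"]), 3))
print("NGRDI:", round(ngrdi(crown["green"], crown["red"]), 3))
```

Both indices are bounded between -1 and 1 for non-negative inputs, which keeps them comparable across sensors with different radiometric scaling.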

| | Coniferous | Coniferous Stressed | Deciduous | Deciduous Stressed | Dead/Degraded |
|---|---|---|---|---|---|
| NAIP | 87 | 70 | 84 | 71 | 79 |
| UAS | 90 | 70 | 84 | 73 | 91 |

| Trial | 55% Training Split | 50% Training Split | 55% Training and Feature Reduction | 55% Training Out-of-Bag | Coniferous Only | Deciduous Only | Healthy/Stressed/Degraded |
|---|---|---|---|---|---|---|---|
| 1 | 0.5568 | 0.5153 | 0.5227 | 0.4093 | 0.7196 | 0.6698 | 0.7102 |
| 2 | 0.5 | 0.5051 | 0.4943 | 0.3907 | 0.729 | 0.6604 | 0.7102 |
| 3 | 0.5568 | 0.5051 | 0.4545 | 0.4093 | 0.729 | 0.6509 | 0.7443 |
| 4 | 0.517 | 0.5204 | 0.5 | 0.386 | 0.7102 | 0.6509 | 0.6875 |
| 5 | 0.4886 | 0.4847 | 0.4659 | 0.4093 | 0.729 | 0.6604 | 0.7102 |
| 6 | 0.5057 | 0.4796 | 0.4375 | 0.3814 | 0.7383 | 0.6227 | 0.6761 |
| 7 | 0.4602 | 0.5102 | 0.7943 | 0.4093 | 0.7102 | 0.6604 | 0.75 |
| 8 | 0.4886 | 0.5051 | 0.4716 | 0.3907 | 0.6822 | 0.6887 | 0.7045 |
| 9 | 0.5 | 0.4643 | 0.4261 | 0.3953 | 0.757 | 0.6509 | 0.6818 |
| 10 | 0.4487 | 0.5051 | 0.483 | 0.3953 | 0.7477 | 0.717 | 0.6875 |
| Average | 0.50224 | 0.49949 | 0.50499 | 0.39766 | 0.72522 | 0.66321 | 0.70623 |

| NAIP Imagery Using the RF Classifier (rows); Field (Reference) Data (columns) | C | D | CS | DS | Snag | Total | User's Accuracy |
|---|---|---|---|---|---|---|---|
| C | 27 | 8 | 5 | 8 | 2 | 50 | 54.0% |
| D | 6 | 19 | 1 | 3 | 0 | 29 | 65.52% |
| CS | 2 | 1 | 12 | 8 | 4 | 27 | 44.44% |
| DS | 2 | 8 | 6 | 8 | 0 | 24 | 33.33% |
| Snag | 2 | 2 | 7 | 5 | 30 | 46 | 65.21% |
| Total | 39 | 38 | 31 | 32 | 36 | 96/174 | |
| Producer's Accuracy | 69.23% | 50.0% | 38.71% | 25.0% | 83.33% | Overall Accuracy 55.17% | |

| | 55% Training Split | 50% Training Split | 55% Training and Feature Reduction | Without green and red (SODA) | Without SODA Bands | 55% Training Out-of-Bag | Conifer Only | Deciduous Only | SVM | Healthy/Stressed/Degraded |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.6685 | 0.6225 | 0.6522 | 0.6685 | 0.6576 | 0.6637 | 0.7876 | 0.7232 | 0.5761 | 0.7609 |
| 2 | 0.6359 | 0.6373 | 0.6304 | 0.6522 | 0.6196 | 0.6592 | 0.7522 | 0.7321 | 0.6087 | 0.701 |
| 3 | 0.6413 | 0.6814 | 0.6141 | 0.6413 | 0.6359 | 0.6637 | 0.8053 | 0.7679 | 0.587 | 0.7174 |
| 4 | 0.6304 | 0.6618 | 0.6793 | 0.6793 | 0.6685 | 0.6592 | 0.7522 | 0.7946 | 0.5489 | 0.7228 |
| 5 | 0.6359 | 0.652 | 0.663 | 0.6413 | 0.6087 | 0.6771 | 0.7876 | 0.7768 | 0.5543 | 0.701 |
| 6 | 0.625 | 0.6373 | 0.6359 | 0.5978 | 0.5987 | 0.6099 | 0.7788 | 0.6696 | 0.5924 | 0.7065 |
| 7 | 0.6685 | 0.6029 | 0.6413 | 0.6467 | 0.6467 | 0.6457 | 0.7964 | 0.7054 | 0.5543 | 0.6848 |
| 8 | 0.6685 | 0.6667 | 0.6737 | 0.6033 | 0.663 | 0.6323 | 0.7611 | 0.6607 | 0.5707 | 0.7174 |
| 9 | 0.6413 | 0.652 | 0.6739 | 0.6304 | 0.6576 | 0.6637 | 0.7788 | 0.7232 | 0.5435 | 0.6902 |
| 10 | 0.6576 | 0.6324 | 0.6413 | 0.5924 | 0.6902 | 0.6682 | 0.8407 | 0.7232 | 0.5652 | 0.7174 |
| Average | 0.64729 | 0.64463 | 0.65051 | 0.63532 | 0.64465 | 0.65427 | 0.78407 | 0.72767 | 0.57011 | 0.71194 |
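The Average row in the table above is the arithmetic mean of the ten classification runs in each column. As a minimal sketch, the snippet below recomputes it for the first column (55% Training Split) and also reports the sample standard deviation, which the table does not list. The variable names are illustrative and are not taken from the study.

```python
from statistics import mean, stdev

# Overall accuracies of the ten runs, copied from the
# "55% Training Split" column of the table above.
split_55_runs = [0.6685, 0.6359, 0.6413, 0.6304, 0.6359,
                 0.6250, 0.6685, 0.6685, 0.6413, 0.6576]

# Arithmetic mean, which is what the "Average" row reports.
average = mean(split_55_runs)

# Sample standard deviation (n - 1 denominator); an added summary
# statistic, not a value reported in the table.
spread = stdev(split_55_runs)

print(f"mean accuracy: {average:.5f}")
print(f"sample standard deviation: {spread:.4f}")
```

The same two calls can be repeated per column to compare how stable each configuration is across runs.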

| UAS Imagery Using the RF Classifier (rows); Field (Reference) Data (columns) | C | D | CS | DS | Snag | Total | User's Accuracy |
|---|---|---|---|---|---|---|---|
| C | 30 | 1 | 8 | 7 | 4 | 50 | 60.0% |
| D | 7 | 31 | 0 | 13 | 0 | 51 | 60.78% |
| CS | 3 | 0 | 18 | 4 | 0 | 25 | 72.0% |
| DS | 1 | 6 | 3 | 6 | 5 | 21 | 28.57% |
| Snag | 0 | 0 | 2 | 3 | 32 | 37 | 86.49% |
| Total | 41 | 38 | 31 | 33 | 41 | 117/184 | |
| Producer's Accuracy | 73.17% | 81.5% | 58.06% | 18.18% | 78.05% | Overall Accuracy 63.59% | |
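The accuracy figures in an error matrix follow directly from its cell counts: user's accuracy divides each diagonal cell by its row total, producer's accuracy divides it by its column total, and overall accuracy divides the sum of the diagonal by the total number of samples. The sketch below applies those standard definitions to the UAS matrix above. The function and variable names are illustrative, not the authors' code.

```python
# UAS error matrix from the table above.
# Rows are classified (map) labels, columns are field (reference) labels.
CLASSES = ["C", "D", "CS", "DS", "Snag"]
UAS_MATRIX = [
    [30, 1, 8, 7, 4],
    [7, 31, 0, 13, 0],
    [3, 0, 18, 4, 0],
    [1, 6, 3, 6, 5],
    [0, 0, 2, 3, 32],
]


def accuracy_summary(matrix):
    """Return overall, user's, and producer's accuracy for a square error matrix."""
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    correct = sum(matrix[i][i] for i in range(n))  # diagonal = agreements
    total = sum(row_totals)
    return {
        "correct": correct,
        "total": total,
        "overall": correct / total,
        # User's accuracy: diagonal cell over its row (classified) total.
        "users": [matrix[i][i] / row_totals[i] for i in range(n)],
        # Producer's accuracy: diagonal cell over its column (reference) total.
        "producers": [matrix[j][j] / col_totals[j] for j in range(n)],
    }


summary = accuracy_summary(UAS_MATRIX)
print(f"overall: {summary['correct']}/{summary['total']} = {summary['overall']:.2%}")
for name, ua, pa in zip(CLASSES, summary["users"], summary["producers"]):
    print(f"{name}: user's {ua:.2%}, producer's {pa:.2%}")
```

Run on these counts, the overall figure reproduces the 117/184 agreement reported in the table.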

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fraser, B.T.; Congalton, R.G. Monitoring Fine-Scale Forest Health Using Unmanned Aerial Systems (UAS) Multispectral Models. Remote Sens. 2021, 13, 4873. https://doi.org/10.3390/rs13234873
