Automatic Windthrow Detection Using Very-High-Resolution Satellite Imagery and Deep Learning

Abstract

1. Introduction

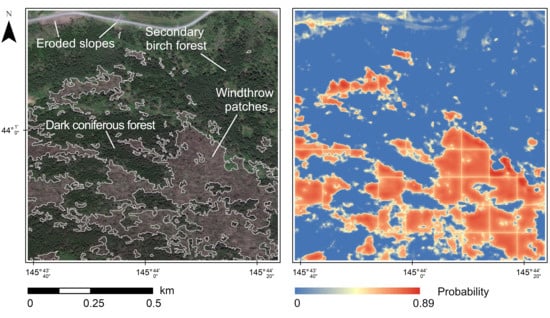

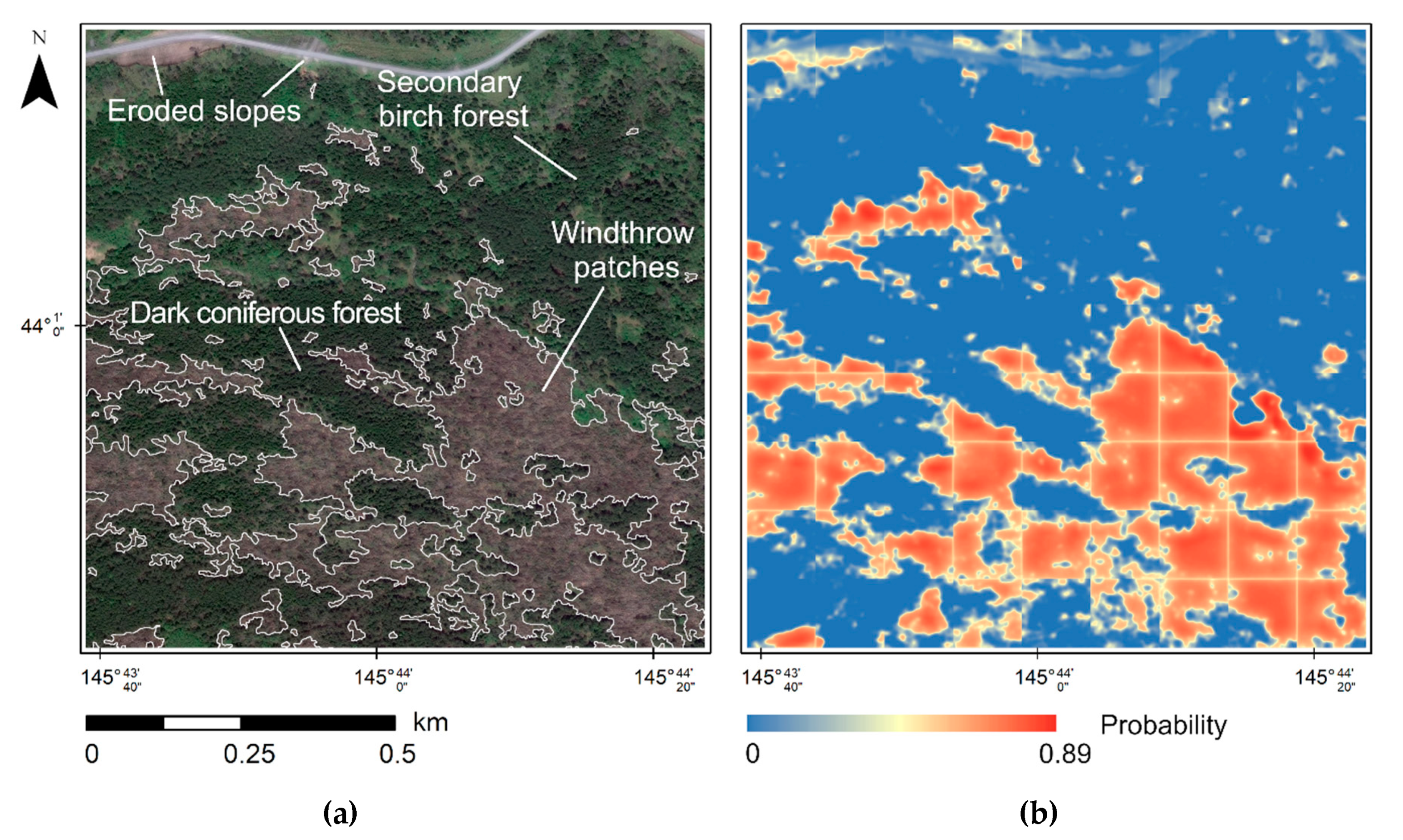

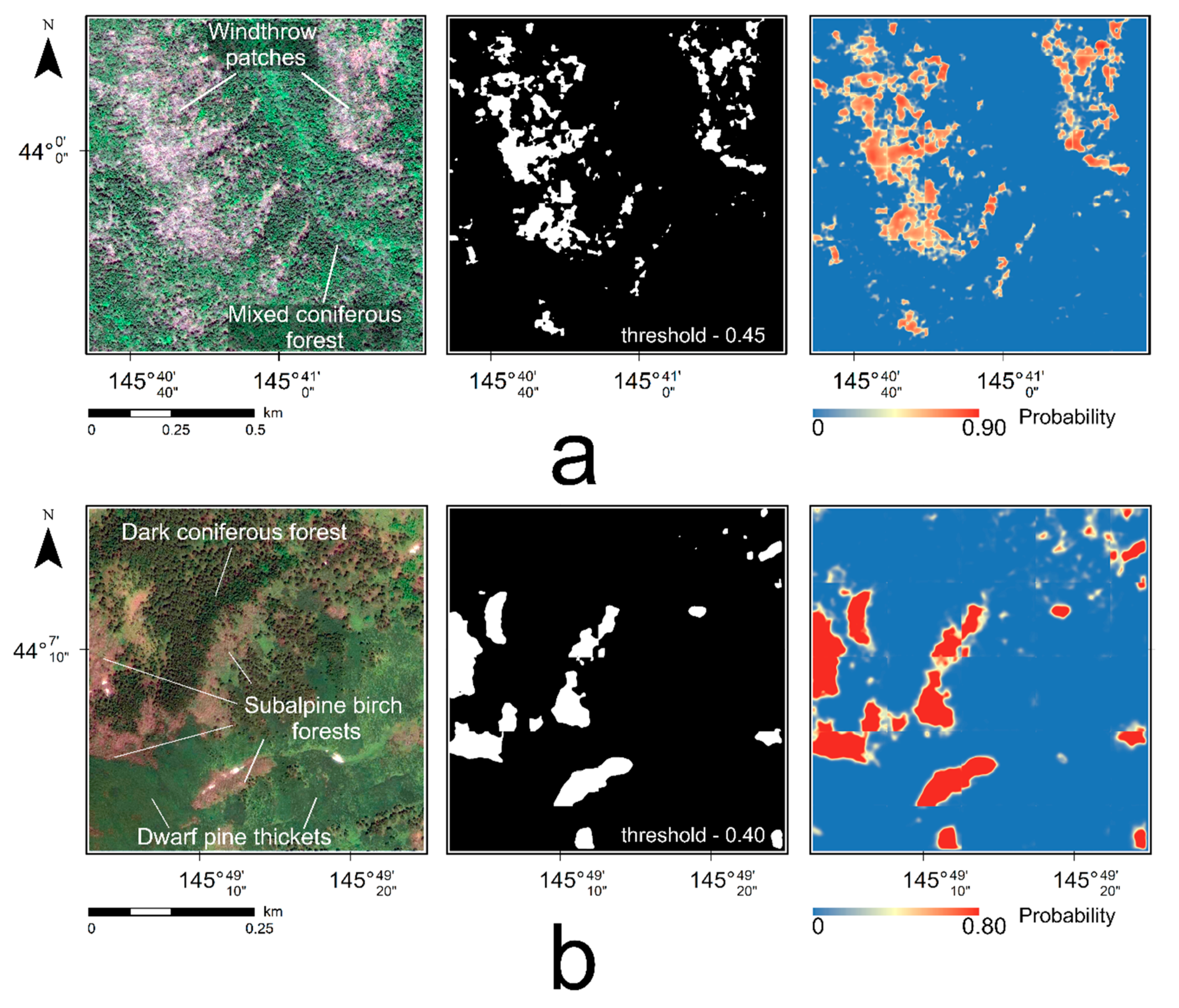

2. Materials and Methods

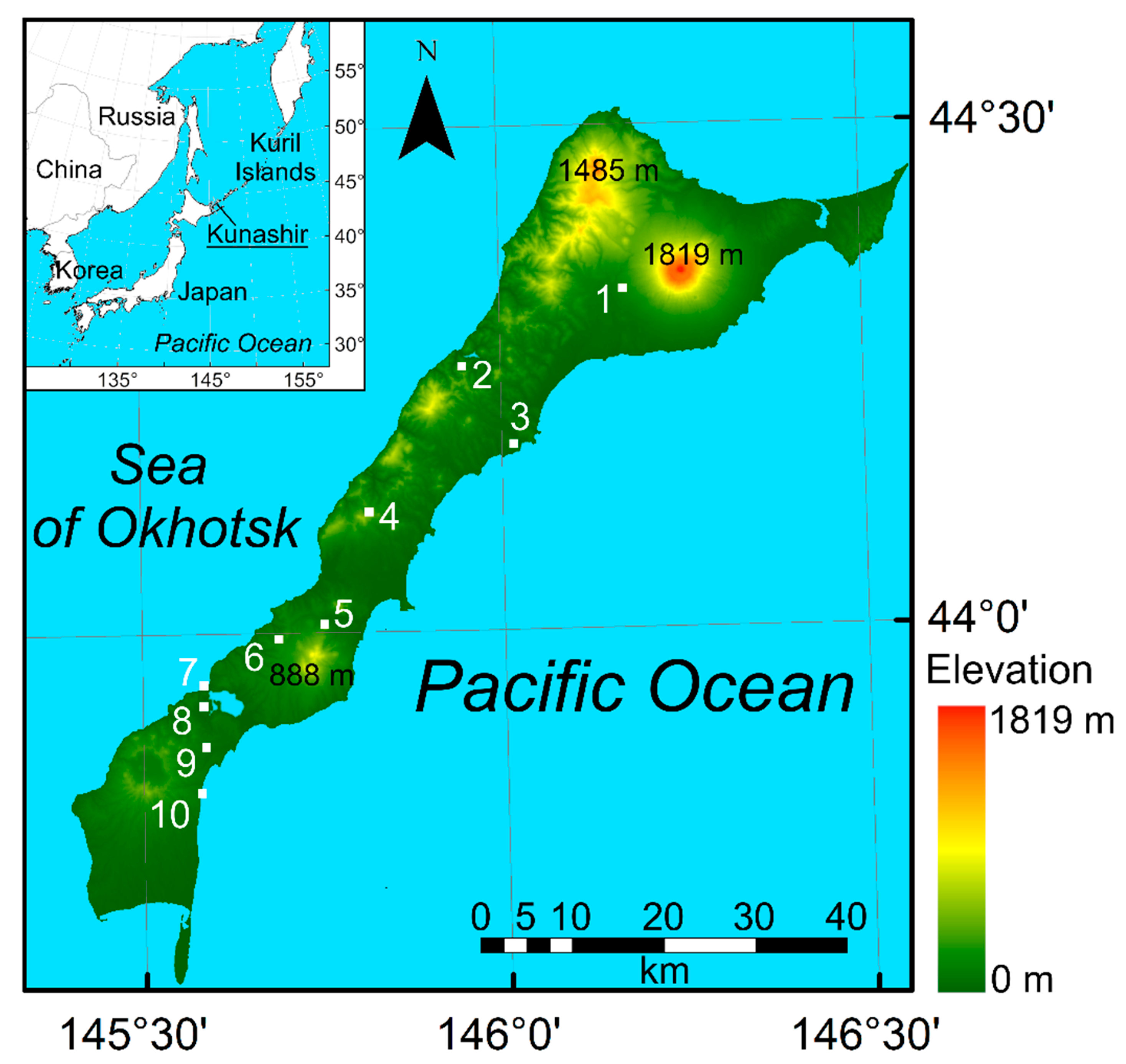

2.1. Study Area

2.2. Satellite Data

2.3. Training and Validation Data

2.4. Artificial Neural Network Architecture

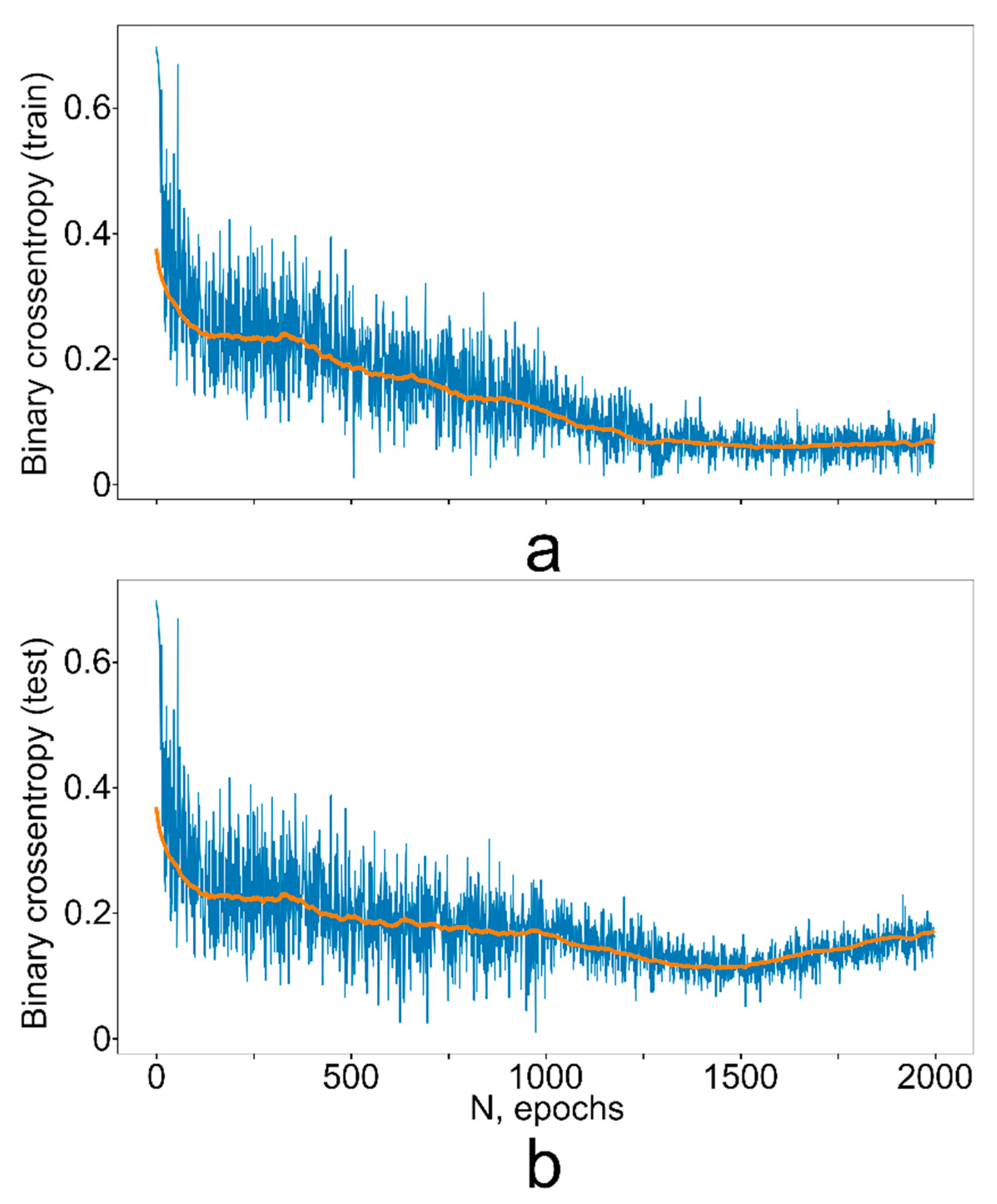

2.5. Neural Network Implementation and Tuning

2.6. Comparison to Traditional Machine Learning Algorithms

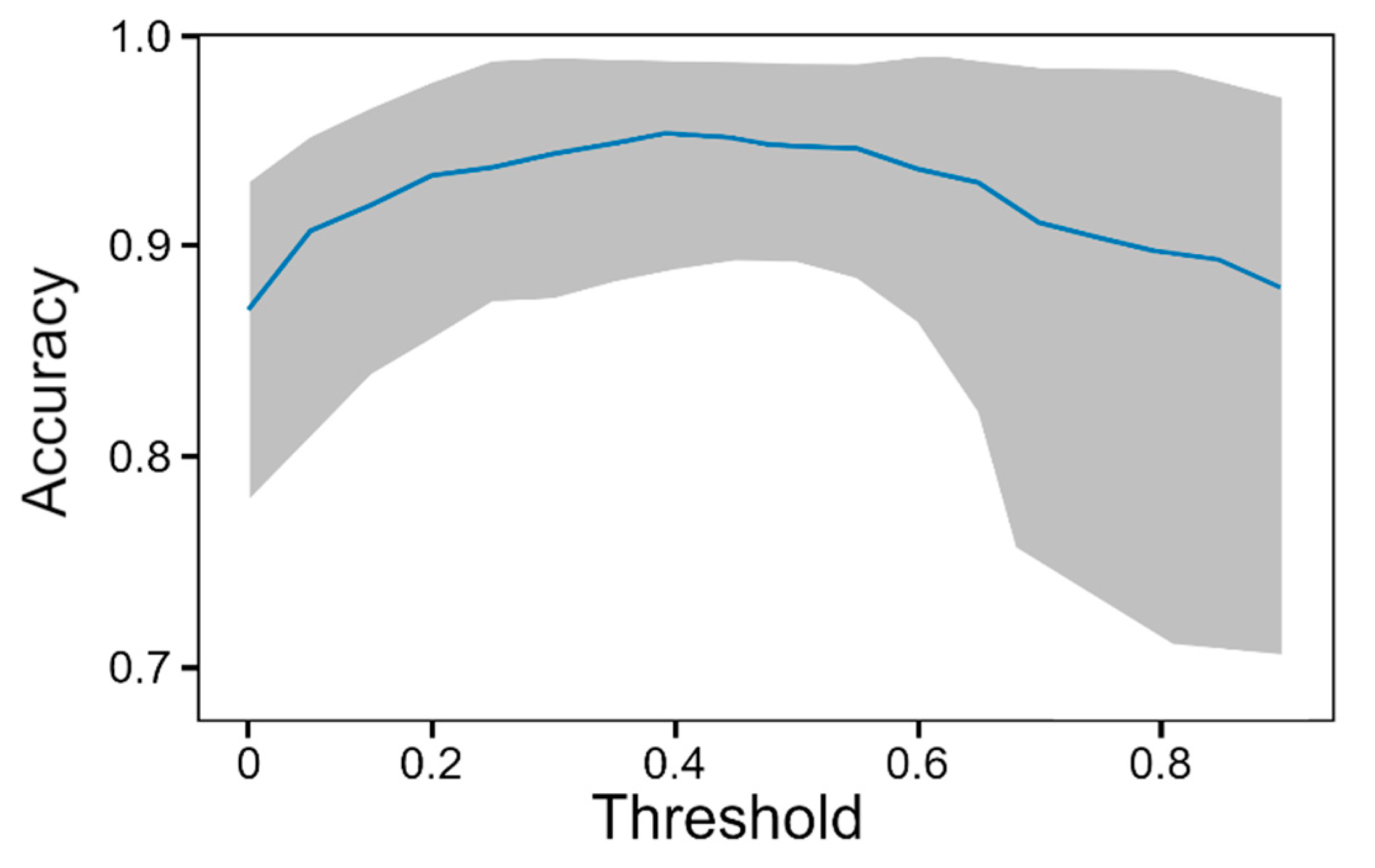

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Algorithm A1: Recursive definition of U-Net-like CNN | |
|---|---|
| 1: | def CONV_BLOCK(input, num_layers, batch_norm=False, residual=False, dropout=0): |
| 2: | x = Conv2D(num_layers, 3, activation='relu', padding='same')(input) |
| 3: | x = BatchNormalization()(x) if batch_norm else x |
| 4: | x = Dropout(dropout)(x) if dropout != 0 else x |
| 5: | x = Conv2D(num_layers, 3, activation='relu', padding='same')(x) |
| 6: | x = BatchNormalization()(x) if batch_norm else x |
| 7: | output = Concatenate()([input, x]) if residual else x |
| 8: | return output |
| 9: | def UNET_BLOCK(input, num_layers, depth, layer_rate=2, batch_norm=False, residual=False, dropout=0.5): |
| 10: | if depth > 0: |
| 11: | x = CONV_BLOCK(input, num_layers, batch_norm, residual, dropout) |
| 12: | x = MaxPooling2D()(x) |
| 13: | x = UNET_BLOCK(x, round(layer_rate * num_layers), depth - 1, |
| 14: | layer_rate, batch_norm, residual, dropout) |
| 15: | x = UpSampling2D()(x) |
| 16: | x = Conv2D(num_layers, 2, activation='relu', padding='same')(x) |
| 17: | x = Concatenate()([input, x]) |
| 18: | output = CONV_BLOCK(x, num_layers, batch_norm, residual) |
| 19: | else: |
| 20: | output = CONV_BLOCK(input, num_layers, batch_norm, residual, dropout) |
| 21: | return output |
| 22: | def GET_UNet(input_shape, out_ch, depth=4, layer_rate=2, batch_norm=False, residual=False, dropout=0.5): |
| 23: | input = Input(input_shape) |
| 24: | x = UNET_BLOCK(input, num_layers=64, depth=depth, layer_rate=layer_rate, batch_norm=batch_norm, residual=residual, dropout=dropout) |
| 25: | output = Conv2D(out_ch, 1, activation='sigmoid')(x) |
| 26: | return Model(inputs=input, outputs=output) |
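
The recursion in Algorithm A1 can be easier to follow when the filter count and spatial size are traced level by level. The following is a minimal pure-Python sketch of that bookkeeping only, not the authors' implementation. It assumes the Keras defaults of 2 x 2 pooling in `MaxPooling2D` and 2 x 2 upsampling in `UpSampling2D`; the function name `trace_unet_levels` and the 256 x 256 tile size are hypothetical and chosen for illustration.

```python
def trace_unet_levels(size, num_layers=64, depth=4, layer_rate=2):
    """Trace (filters, spatial size) per level of the recursive U-Net-like block.

    Mirrors the recursion in UNET_BLOCK: each level convolves with
    `num_layers` filters, halves the spatial size with 2x2 max pooling,
    and recurses with round(layer_rate * num_layers) filters.
    """
    levels = [(num_layers, size)]
    if depth > 0:
        # 2x2 pooling floors odd sizes, so upsampling by 2 would no longer
        # match the tensor concatenated as the skip connection at this level.
        if size % 2 != 0:
            raise ValueError(f"size {size} is odd at remaining depth {depth}")
        levels += trace_unet_levels(
            size // 2, round(layer_rate * num_layers), depth - 1, layer_rate
        )
    return levels


# Hypothetical 256 x 256 input tile (an assumption, not taken from the paper).
for filters, side in trace_unet_levels(256):
    print(f"{filters:5d} filters at {side} x {side}")
```

With the default `depth = 4`, the trace suggests that the input side length should be divisible by 16; otherwise the upsampled tensor and the skip connection would differ in size at some level.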

Appendix B


| Site Number | Satellite | Date | Image ID | Main Vegetation Types | Windthrow Patches | Used for |
|---|---|---|---|---|---|---|
| 1 | Pleiades-1A | 17 July 2015 | DS_PHR1A_201507170119408_FR1_PX_E146N44_0410_03088 | DC, M, SB | + | Train |
| 2 | Pleiades-1A | 10 July 2015 | DS_PHR1A_201507100123116_FR1_PX_E145N44_1106_01880 | DB, DC, SB | + | Validation |
| 3 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122019_FR1_PX_E146N44_0109_03525 | DB, DC, SB | + | Train |
| 4 | WorldView-3 | 01 June 2015 | 104001000CC65500 | DB, DC, SB, PT | − | Validation |
| 5 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122226_FR1_PX_E145N43_0822_01654 | DC | + | Train |
| 6 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122226_FR1_PX_E145N43_0822_01654 | DC, DC-M | + | Validation |
| 7 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122421_FR1_PX_E145N43_0619_02996 | DB, S, W | − | Train |
| 8 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122226_FR1_PX_E145N43_0822_01654 | DB, DC-M, SB, W | + | Train |
| 9 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122421_FR1_PX_E145N43_0619_02996 | DC, DC-M, W | + | Train |
| 10 | Pleiades-1B | 01 June 2015 | DS_PHR1B_201506010122226_FR1_PX_E145N43_0822_01654 | S, W | − | Train |

| Method | Accuracy, % | MeanIoU |
|---|---|---|
| Naive Bayes classifier | 56 | <0.01 |
| Logistic Regression + L2 | 74 | 0.07 |
| Support Vector Machine | 79 | 0.09 |
| Boosted RF (AdaBoost) | 83 | 0.15 |
| U-Net-like CNN | 94 | 0.46 |
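
The gap between the accuracy and MeanIoU columns is typical when the target class covers only a small share of the pixels. The sketch below illustrates this on a made-up 100-pixel mask; the data are invented for illustration, the helper names are hypothetical, and the IoU here is computed for the positive (windthrow) class only, which may differ from the exact averaging used for the table.

```python
def pixel_accuracy(y_true, y_pred):
    """Share of pixels whose predicted label equals the reference label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def class_iou(y_true, y_pred, cls=1):
    """Intersection over union for one class; NaN if the class never occurs."""
    inter = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    union = sum(t == cls or p == cls for t, p in zip(y_true, y_pred))
    return inter / union if union else float("nan")


# Invented toy mask: 5 windthrow pixels (1) among 100, flattened to a list.
y_true = [1] * 5 + [0] * 95
# Prediction: 3 true positives, 2 misses, 7 false alarms, 88 true negatives.
y_pred = [1] * 3 + [0] * 2 + [1] * 7 + [0] * 88

print(f"accuracy = {pixel_accuracy(y_true, y_pred):.2f}")
print(f"IoU      = {class_iou(y_true, y_pred):.2f}")
```

In this toy case the accuracy is 0.91 while the IoU of the windthrow class is 0.25, because the 88 correctly labelled background pixels count towards accuracy but not towards the intersection or union of the windthrow class.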

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kislov, D.E.; Korznikov, K.A. Automatic Windthrow Detection Using Very-High-Resolution Satellite Imagery and Deep Learning. Remote Sens. 2020, 12, 1145. https://doi.org/10.3390/rs12071145
