Abstract

This study assesses the application of artificial intelligence (AI) algorithms for optimizing resource allocation, demand-supply matching, and dynamic pricing within circular economy (CE) digital marketplaces. Five AI models, namely autoregressive integrated moving average (ARIMA), long short-term memory (LSTM), random forest (RF), gradient boosting regressor (GBR), and neural networks (NNs), were evaluated on their effectiveness in predicting waste generation, economic growth, and energy prices. The GBR model outperformed the others, achieving a mean absolute error (MAE) of 23.39 and an R² of 0.7586 in demand forecasting, demonstrating strong potential for resource flow management. In contrast, the NNs encountered limitations in supply prediction, with an MAE of 121.86 and an R² of 0.0151, indicating challenges in adapting to market volatility. Reinforcement learning methods, specifically Q-learning and deep Q-learning (DQL), were applied for price stabilization, resulting in reduced price fluctuations and improved market stability. These findings contribute a conceptual framework for AI-driven CE marketplaces, showcasing the role of AI in enhancing resource efficiency and supporting sustainable urban development. While synthetic data enabled controlled experimentation, this study acknowledges their limitations in capturing the full variability of real-world markets, marking a direction for future research to validate the findings with real-world data. Moreover, ethical considerations, such as algorithmic fairness and transparency, are critical for responsible AI integration in circular economy contexts.

1. Introduction

The circular economy (CE) offers a transformative model which diverges from the conventional linear “take, make, dispose” paradigm by promoting a regenerative system focused on minimizing resource input, waste, and emissions. By slowing, closing, and narrowing resource loops through reuse, recycling, and remanufacturing, the CE directly addresses environmental concerns like resource scarcity and climate change. Extending product lifecycles and reducing waste enhances resource efficiency, supporting the Sustainable Development Goals. Yet, implementing a CE, particularly in complex urban settings, poses significant challenges in efficiently managing dynamic resource flows.

Artificial intelligence (AI) has emerged as a powerful tool to advance CE objectives by enhancing capabilities in data processing, pattern recognition, forecasting, and resource optimization. Applications of AI in a CE include predictive modeling for resource consumption, waste forecasting, and efficient demand-supply matching. By leveraging large datasets, AI-powered systems can dynamically adjust resource flows, optimize pricing, and align with circular business models. This enables urban stakeholders—municipalities, businesses, and communities—to achieve greater resource efficiency and waste reduction, directly supporting CE initiatives.

AI-driven digital marketplaces can dynamically respond to real-time data, offering scalable CE solutions. However, the integration of dynamic variables such as waste generation rates, economic growth indicators, and energy costs introduces further complexities which can impact AI performance in CE settings. This study examines the feasibility of deploying AI to address these complexities, providing insights into the role of AI in sustainable resource management within the CE framework.

The following research questions (RQs) guide this study’s investigation into AI applications in circular economy (CE) digital marketplaces:

1. How effective are AI-driven models at optimizing demand-supply matching and dynamic pricing for CE marketplaces?

2. What are the specific strengths and limitations of these AI models in capturing nonlinear relationships and complex variable interactions within CE systems?

This paper is structured as follows. Section 2 reviews the literature on CE applications in urban and resource-intensive sectors, Section 3 describes the methodology and data generation process, Section 4 presents the results of the model evaluation, Section 5 discusses the implications and limitations, and Section 6 concludes with directions for future work.

2. Literature Review

2.1. Circular Economy and Its Role in Sustainable Development

Recent studies underscore the CE’s role in reducing scope-based emissions, such as Scope 3 emissions, which are notoriously challenging to manage but crucial for sustainable development. For instance, the application of a CE in reducing Scope 3 emissions across various sectors highlights its broader impact on decarbonization and supply chain sustainability [1]. Similarly, AI-enhanced CE practices improve resilience and livability in urban environments by supporting adaptive resource flows within smart city infrastructure, a critical element for sustainable urban planning [2].

The circular economy (CE) is a transformative model aimed at decoupling economic growth from resource consumption and environmental degradation. It emphasizes sustainable resource management through the 6R principles—reduce, reuse, recycle, recover, remanufacture, and redesign—which seek to extend product and material lifecycles, thereby minimizing waste and dependence on new resources [3,4]. By implementing these principles, CE enables closed material loops, creating value chains which are both resource-efficient and sustainable [5,6].

The circular economy has been increasingly recognized as a viable strategy for addressing global sustainability challenges across various sectors, including agriculture, construction, and waste management. For instance, recent studies emphasize the CE’s potential to reduce environmental impacts and enhance sustainable urban development by integrating CE principles into smart city infrastructure [1]. In the context of urban sustainability, a CE contributes significantly to waste management, resource allocation, and emissions reduction, which are essential for building resilient cities.

The application of CE principles in large-scale infrastructure, such as the construction of FIFA World Cup stadiums in Qatar, illustrates the significant role a CE can play in reducing environmental impacts in high-profile projects. Through circular strategies like recycling and reusing materials, these initiatives substantially decreased the carbon footprint associated with construction phases, underscoring the CE’s role in promoting sustainability in mega-events [1].

While substantial advancements have been made, challenges remain in scaling CE practices across traditionally linear industries and incorporating economic and energy variables which impact CE objectives. This gap emphasizes the need for innovative, interdisciplinary frameworks, such as AI-driven models, to navigate the complexities of circular business models and expand the CE’s applicability across diverse sectors.

2.2. Artificial Intelligence in a Circular Economy

Artificial intelligence (AI) has emerged as a powerful enabler for advancing circular economy (CE) initiatives by enhancing efficiency in resource allocation, waste sorting, and supply-demand management. AI applications in CE include predictive modeling to forecast resource consumption, machine learning for waste reduction, and optimization algorithms which streamline recycling processes [7,8]. These AI capabilities allow CE systems to adjust to real-time data, facilitating more proactive resource planning and minimizing waste.

Additionally, AI-driven digital marketplaces support CE models by dynamically matching supply and demand, optimizing pricing, and enhancing resilience to economic fluctuations. Such platforms utilize predictive algorithms to integrate variables such as economic growth and energy prices, allowing for efficient market operations under variable conditions [9,10]. However, the integration of complex variables in AI for CE remains a challenge, as real-time adjustments in volatile markets are necessary to achieve both environmental and economic sustainability goals.

Current research on the impact of AI in a CE primarily focuses on isolated applications, with limited exploration of the performance of AI under practical constraints such as fluctuating resource availability and economic conditions [11,12,13]. To fully leverage the potential of AI, future studies should assess the robustness and scalability of these models, ensuring they can support diverse and complex CE systems across various sectors.

2.3. Digital Marketplaces and AI-Driven Resource Management

Digital marketplaces have become pivotal platforms for advancing circular economy (CE) objectives by enabling efficient resource allocation, demand-supply matching, and dynamic pricing. These marketplaces leverage AI-driven algorithms to facilitate trading and resource management, thereby aligning with the CE’s goals of reducing waste and closing material loops. By analyzing historical data, AI within these marketplaces can forecast resource demand, optimize supply chain dynamics, and make real-time pricing adjustments to support sustainable resource management practices [7].

An important component within digital marketplaces for the CE is blockchain technology, which fosters transparency and builds trust among stakeholders. Blockchain enhances transaction security and reduces fraud, enabling decentralized exchanges which promote equitable access to marketplace resources [14,15]. Blockchain-backed decentralized trading also offers a way to reduce the environmental footprint associated with centralized distribution systems, promoting a more sustainable allocation model.

Despite these advantages, digital marketplaces are still evolving in their capacity to fully address CE objectives, especially in volatile market conditions. AI integration in these platforms requires further research to ensure robust performance under such conditions. Additionally, ethical issues, including algorithmic fairness and the potential for biases in AI decision making, are critical areas which need to be addressed. Ensuring transparency and aligning AI applications with social and environmental objectives are essential for the sustainable growth of these platforms [16] (Table 1).

Table 1.

Operational definitions and theoretical context for key constructs in digital marketplaces and AI-driven resource management.

3. Methodology and Conceptual Framework

3.1. Conceptual Framework for AI-Driven CE Marketplaces

This research investigates the viability of implementing artificial intelligence (AI) models within a digital marketplace framework designed to support circular economy (CE) practices. The framework centers on managing data flows across three primary processes: demand-supply alignment, price responsiveness, and resource distribution.

The research design employs a quantitative approach within this simulated digital marketplace to test the feasibility of AI models for a CE. The design aims to evaluate AI algorithms across demand-supply alignment, price responsiveness, and resource distribution, each of which is critical to effective CE operations. By simulating market interactions, this study assesses the adaptability of AI-driven processes in dynamically allocating resources and adjusting prices based on fluctuating demand and supply patterns, supporting scalable CE systems.

This framework provides a structured environment to test and observe the real-time adaptability of AI, simulating scenarios which reflect the challenges of a CE. This design not only facilitates theoretical exploration but also provides practical insights into the potential of AI for facilitating CE practices at scale, especially in complex, resource-intensive urban markets.

The digital marketplace provides a controlled environment for exploring the role of AI algorithms in enhancing resource flow efficiency and supporting sustainable practices. Each process is designed to dynamically adjust to shifts in supply, demand, and price, offering insights into how AI can facilitate efficient CE practices. This setup contributes valuable insights for informing real-world marketplace designs and policy planning.

This framework is designed to enhance resource efficiency and minimize waste by enabling AI-driven decision-making processes which directly support both economic and environmental sustainability goals within circular economy systems.

3.2. Synthetic Data Generation for AI Model Testing

A single synthetic dataset supporting this study was generated to replicate realistic market dynamics within a digital marketplace for circular economy (CE) practices, encompassing multiple key variables. This dataset was developed independently of any real-world databases to provide a controlled environment, with potential for the future incorporation of real-world data for further validation. A custom Python-based script provided structured variability, producing daily, monthly, and yearly records across essential variables, including demand, supply, waste generation, pricing, economic growth rate, resource availability, and energy prices. By incorporating statistical principles and fine-tuning variability, the generated data aligned with urban CE patterns, ensuring robustness in AI model testing and scenario flexibility.

The data generation process for this study involved rigorous hyperparameter optimization to ensure realistic variability and consistency within synthetic data patterns. Hyperparameters, such as the variance levels in demand and supply variability, growth rates, and noise factors for pricing and energy costs, were iteratively tuned to capture realistic fluctuations. This optimization was critical in producing datasets which respond dynamically to simulated CE marketplace conditions, improving the relevance of model testing outcomes.

The use of synthetic data was essential due to proprietary limitations on real-world data, allowing for a controlled testing environment. However, future validations with real-world data will be necessary to confirm the generalizability of findings.

3.2.1. Generation Process and Statistical Foundation

To create a dataset which aligns with realistic market dynamics in circular economy (CE) settings, we designed each variable with specific statistical characteristics informed by observed patterns in the CE literature. This methodological approach was tailored to reflect common marketplace dynamics, such as supply-demand fluctuations and economic variability, ensuring the generated data closely mimicked real-world CE environments.

The optimization process for the key hyperparameters in data generation involved systematically testing a range of values for each statistical parameter, such as the mean and standard deviation for demand variability, energy pricing trends, and economic growth rates. Cross-validation and iterative refinement ensured that the generated data maintained alignment with established patterns in the CE literature while enhancing adaptability to market volatility:

- Demand was modeled using a normal distribution with a fixed mean and standard deviation, capturing typical urban consumption patterns with inherent daily variability.

- Supply was calculated as the demand plus an additional random variability component with its own mean and standard deviation, simulating real-time adjustments in response to changes in demand.

- Pricing and energy prices were generated through a trend-based Gaussian noise model with a gradual linear increment, representing the cumulative effects of economic inflation and resource scarcity. This design challenges the AI models to adapt to both short-term market fluctuations and longer-term trends.

- Economic growth rate and waste generation were simulated with low variability (e.g., a small standard deviation for the growth rate) to emulate broader economic stability while accounting for more dynamic market forces.
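The generation steps listed above can be sketched with Python's standard `random` module, as below. This is a minimal illustration under stated assumptions rather than the study's script: the function name `generate_daily_data` and every numeric default (means, standard deviations, trend slopes) are hypothetical placeholders, resource availability is omitted, and the `start` and `n_days` arguments mirror the start-date and period-length parameterization described in Section 3.2.2.

```python
import random
from datetime import date, timedelta


def generate_daily_data(start=date(2020, 1, 1), n_days=365, seed=0,
                        demand_mu=100.0, demand_sigma=15.0,
                        supply_mu=5.0, supply_sigma=10.0,
                        price_base=50.0, price_slope=0.02, price_noise=2.0,
                        energy_base=0.12, energy_slope=1e-4, energy_noise=0.005,
                        growth_mu=0.02, growth_sigma=0.002,
                        waste_mu=40.0, waste_sigma=2.0):
    """Return one dict per day; every numeric default is a hypothetical placeholder."""
    rng = random.Random(seed)  # seeded so that each scenario is reproducible
    rows = []
    for i in range(n_days):
        demand = rng.gauss(demand_mu, demand_sigma)
        # Supply follows demand plus an independent variability component.
        supply = demand + rng.gauss(supply_mu, supply_sigma)
        rows.append({
            "date": start + timedelta(days=i),
            "demand": demand,
            "supply": supply,
            # Linear upward trend (inflation, scarcity) plus Gaussian noise.
            "price": price_base + price_slope * i + rng.gauss(0.0, price_noise),
            "energy_price": energy_base + energy_slope * i + rng.gauss(0.0, energy_noise),
            # Low-variability series emulating broader economic stability.
            "growth_rate": rng.gauss(growth_mu, growth_sigma),
            "waste": rng.gauss(waste_mu, waste_sigma),
        })
    return rows


if __name__ == "__main__":
    rows = generate_daily_data(n_days=90, seed=42)
    print(len(rows), rows[-1]["date"])  # 90 2020-03-30
```

Changing `start`, `n_days`, or the variability arguments yields a different scenario from the same code, which is the kind of flexibility the scenario-generation discussion relies on.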

The synthetic data generation process relied on structured statistical techniques to approximate real-world CE marketplace dynamics. To ensure a high degree of variability and robustness, parameter distributions were selected based on typical patterns observed in the CE sector. While synthetic data provide valuable control over the model inputs, we recognize their limitations and underscore the necessity of using real-world data to validate model adaptability across diverse market conditions. Future studies may explore advanced methods, such as CTGAN [17], to further refine the variability and representational fidelity of the data.

Data preprocessing included scaling and normalization of variables to ensure compatibility across models, and the synthetic data distributions were compared with those reported in the CE literature to confirm alignment with typical CE patterns. Exploratory data analysis (EDA) was conducted to assess the consistency of the generated data, including evaluations of distributional alignment, correlations, and the presence of expected trends. While synthetic data provided a controlled environment for experimentation, future studies should incorporate real-world datasets to verify the models' performance under real market conditions, addressing any potential gaps in variability and unpredictability.

This analysis confirmed that the dataset's structure, including its periodic trends, aligned with the expected CE marketplace dynamics. Validity was further assessed by comparing the synthetic data with known benchmarks; future studies should prioritize validation against real-world CE marketplace data to assess model robustness more accurately.
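The scaling and correlation checks can be illustrated with two small helpers. This sketch assumes min-max scaling to [0, 1] as the normalization (the study does not state which scaling was used) and Pearson's coefficient for the correlation analysis; the function names and the toy demand and supply values are illustrative.

```python
import math


def min_max_scale(values):
    """Rescale a list of numbers to the [0, 1] range."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A constant column carries no variation; map it to zeros instead of dividing by zero.
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


demand = [100.0, 120.0, 90.0, 110.0]
supply = [105.0, 126.0, 97.0, 112.0]
print(min_max_scale(demand))        # [0.333..., 1.0, 0.0, 0.666...]
print(round(pearson(demand, supply), 3))
```

Scaling each variable to a common range keeps predictors with large units (for example, demand) from dominating those with small units (for example, growth rates) in the distance- and gradient-based models.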

Additionally, hourly data points were generated by introducing minor random fluctuations around the daily baseline values, enriching the dataset’s temporal resolution. This hourly granularity enhanced the evaluation of AI models on fine-grained data. The synthetic dataset was generated without any conditioning on real-world data, relying instead on structured statistical principles to approximate realistic CE marketplace dynamics.
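One way to realize this hourly expansion is sketched below, assuming that the "minor random fluctuations" are multiplicative Gaussian deviations around each daily baseline. The function name `to_hourly` and the 2% default jitter are hypothetical choices, not values reported in the study.

```python
import random


def to_hourly(daily_values, jitter=0.02, seed=0):
    """Expand each daily baseline into 24 hourly values with small random deviations.

    `jitter` is the relative standard deviation of the hourly noise (hypothetical default).
    """
    rng = random.Random(seed)
    hourly = []
    for baseline in daily_values:
        for _hour in range(24):
            hourly.append(baseline * (1.0 + rng.gauss(0.0, jitter)))
    return hourly


hourly_demand = to_hourly([100.0, 104.0, 98.0])
print(len(hourly_demand))  # 72
```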

3.2.2. Data Generation for Scenario Flexibility

The script’s parameterization (start date, period length, and variability ranges) enabled dynamic adjustments, making it possible to generate varied data scenarios. This flexibility allowed the input data to represent a range of real-world conditions, making the framework adaptable for extended CE marketplace studies.

This adaptability in the synthetic data generation process was achieved through targeted hyperparameter tuning. The key hyperparameters, including variability ranges and seasonal adjustment factors, were optimized to broaden the range of scenarios the generation script could produce. This allowed for data generation which not only reflected typical market conditions but also introduced structured variability for testing robustness under diverse CE scenarios.

The generated synthetic dataset supported scalability across various market scenarios, ensuring that the framework remained adaptable and flexible for extended CE marketplace studies. The dataset used in this study contained daily, monthly, and yearly records of the key variables, including supply, demand, waste generation, pricing, economic growth rate, resource availability, and energy prices. Synthetic data were generated to simulate the AI-driven digital marketplace’s operations over an extended period, allowing for an in-depth analysis of the relationships between these variables. Summaries of the statistics for the dataset are provided in Table 2 and Table 3.

Table 2.

Summary of the statistics of the input data (daily, monthly, and yearly averages for demand, supply, and pricing).

Table 3.

Summary of the statistics of the input data (daily, monthly, and yearly averages for waste generation, economic growth rate, resource availability, and energy prices).

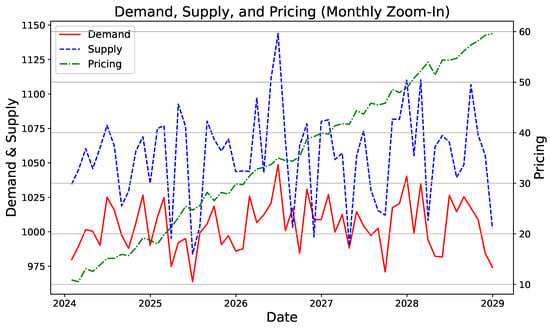

The following figures (Figure 1 and Figure 2) illustrate the trends in supply, demand, and pricing over time, segmented into daily, monthly, and yearly intervals.

Figure 1.

Daily supply, demand, and pricing over time.

Figure 2.

Monthly supply, demand, and pricing over time.

Figure 1 shows considerable variability in the daily supply and demand. The supply fluctuated significantly more than the demand, indicating periods of resource surplus which may lead to wastage. Pricing responded dynamically, with sharp peaks reflecting resource scarcity, demonstrating the market’s sensitivity to supply changes.

Figure 2 illustrates relatively stable demand with more pronounced supply volatility, likely due to seasonal variations. As also seen in Figure 1, supply consistently exceeded demand over time. Because a persistent surplus would, on its own, be expected to push prices down, the steady rise in pricing is better explained by the upward trend built into the pricing series to represent inflation and resource scarcity, together with constraints in resource availability and broader economic factors, than by the supply-demand gap itself.

While synthetic data allowed for structured variability and control within the CE marketplace simulation, they lacked some real-world complexities, such as unanticipated market fluctuations and external policy impacts. Additionally, although the study focused on key variables such as demand, supply, and energy prices, other factors, like material type and quality, are also critical in determining resource flows within CE systems. Synthetic data were chosen because of proprietary restrictions on real-world data, and their generation enabled systematic testing and control over variability for benchmarking AI models. Future studies will incorporate real-world data as they become available to further validate the models' performance in live CE settings, which will better capture the diversity and complexity of marketplace dynamics in circular economies.

3.3. Development of AI Algorithms for Demand-Supply Matching and Pricing Optimization

Effective resource management in a circular economy (CE) digital marketplace requires advanced AI modeling techniques to accurately forecast demand, manage supply, and dynamically adjust pricing. Such CE systems are inherently complex, with notable fluctuations in resource availability and demand requiring models which support real-time decision making. This study explores a selection of AI-based algorithms to address demand-supply matching, price adjustments, and predictive analytics, each aligning with core objectives to advance resource efficiency within CE marketplaces. The choice of algorithms was informed by both theoretical and empirical considerations relevant to the dynamic characteristics of CE systems, ensuring that each model could adapt to nonlinear behaviors and high variability in the marketplace.

Each model underwent systematic hyperparameter optimization. For example, the LSTM model's learning rate was tested between 0.001 and 0.1 in increments of 0.01, and neuron counts varied from 20 to 100. A grid search was conducted with fivefold cross-validation, focusing on minimizing the mean squared error on the validation set. These adjustments yielded an optimal learning rate and neuron count, reducing the validation error relative to non-optimized values. Similar procedures were applied to the other models, as outlined in the following sections.
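The grid search with fivefold cross-validation can be sketched without external libraries as follows. The stand-in model is a hypothetical one-dimensional k-nearest-neighbour regressor tuned over `n_neighbors`; the fold assignment is interleaved (every fifth observation) purely for illustration, since the study does not state how folds were formed. For time-series forecasting, a time-ordered split would avoid training on observations that come after the validation period.

```python
import itertools


def k_fold_indices(n, k=5):
    """Interleaved k-fold split: fold j holds indices j, j + k, j + 2k, ..."""
    return [list(range(j, n, k)) for j in range(k)]


def mse(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def grid_search_cv(fit_predict, grid, X, y, k=5):
    """Try every combination in `grid`; return (best_params, mean validation MSE).

    fit_predict(params, X_train, y_train, X_val) must return predictions for X_val.
    """
    names = sorted(grid)
    folds = k_fold_indices(len(X), k)
    best_params, best_score = None, float("inf")
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        fold_scores = []
        for val_idx in folds:
            held_out = set(val_idx)
            train_idx = [i for i in range(len(X)) if i not in held_out]
            preds = fit_predict(params,
                                [X[i] for i in train_idx], [y[i] for i in train_idx],
                                [X[i] for i in val_idx])
            fold_scores.append(mse([y[i] for i in val_idx], preds))
        score = sum(fold_scores) / len(fold_scores)   # mean validation MSE over folds
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def knn_fit_predict(params, X_train, y_train, X_val):
    """Hypothetical stand-in model: 1-D k-nearest-neighbour regression."""
    k = params["n_neighbors"]
    preds = []
    for x in X_val:
        nearest = sorted(range(len(X_train)), key=lambda i: abs(X_train[i] - x))[:k]
        preds.append(sum(y_train[i] for i in nearest) / len(nearest))
    return preds


X = list(range(50))
y = [2.0 * x for x in X]
print(grid_search_cv(knn_fit_predict, {"n_neighbors": [1, 2, 10]}, X, y))
```

The same `grid_search_cv` loop accepts any model wrapped in a `fit_predict` function, so grids over learning rates, neuron counts, or tree depths follow the same pattern.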

To ensure each model was optimally configured, an initial broad range of values for the key hyperparameters was established based on the prior literature and empirical testing. A grid search with cross-validation was then applied to narrow these ranges to the values which gave the best performance within our data context (lowest MAE and MSE, highest R²). Specific hyperparameter ranges included the learning rate for neural network models (0.001 to 0.1), the number of neurons in the hidden layers (20 to 100), and time steps (3 to 6). For tree-based models such as gradient boosting, the number of estimators (50–150) and maximum tree depth (5–20) were iteratively tuned. This optimization refined each model's configuration to capture the nuances of supply-demand dynamics within the CE marketplace framework.
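For reference, the three evaluation metrics can be written as small Python functions (the names are illustrative): MAE averages absolute errors, MSE averages squared errors, and R² compares the residual sum of squares with the total variance around the mean.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


actual = [1.0, 2.0, 3.0, 4.0]
mean_only = [2.5] * 4  # a forecast that always predicts the mean
print(mae(actual, mean_only), r2(actual, mean_only))  # 1.0 0.0
```

An R² close to zero, such as the 0.0151 reported for the NN supply model, therefore indicates a model that explains little more variance than a constant forecast equal to the mean.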

3.3.1. Demand-Supply Matching: A Modeling Challenge

Effective resource allocation within a circular economy (CE) marketplace is essential to minimize waste and maintain real-time supply-demand equilibrium. Traditional forecasting models, which are commonly applied in resource management, encounter significant challenges in capturing seasonality and variability in dynamic systems [18]. To address these challenges, the autoregressive integrated moving average (ARIMA) model [18,19] was employed to forecast resource needs based on historical data, supporting proactive allocation adjustments.

For this study, the model was specified as ARIMA(p, d, q), where p is the order of the autoregressive component, d is the differencing order, and q is the order of the moving average component. A range of values was tested for each parameter: p ranged from 1 to 7, d from 0 to 2, and q from 0 to 5. This tuning, achieved through grid search and cross-validation, allowed the model to capture periodic seasonal patterns, thereby improving the accuracy of resource allocation adjustments [18]. The formal representation of the ARIMA model is as follows:

$$y'_t = c + \sum_{i=1}^{p} \phi_i \, y'_{t-i} + \sum_{j=1}^{q} \theta_j \, \varepsilon_{t-j} + \varepsilon_t, \qquad y'_t = \Delta^{d} y_t$$

where $y_t$ represents the demand or supply at time t, $y'_t$ is the series after d rounds of differencing, c is a constant term, $\phi_i$ and $\theta_j$ are the autoregressive and moving average coefficients, respectively, and $\varepsilon_t$ denotes the error term [20].
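To make the ARIMA(p, d, q) recursion concrete, the sketch below computes a one-step-ahead forecast for coefficients that are assumed to be already known: it differences the series d times, reconstructs the in-sample errors, applies the constant, autoregressive, and moving average terms, and then undoes the differencing. Estimating the coefficients, which a statistical library would normally do by maximum likelihood, is deliberately left out, and the helper names are hypothetical.

```python
def difference(series, d):
    """Return [y, diff(y), diff(diff(y)), ...] up to d rounds of differencing."""
    levels = [list(series)]
    for _ in range(d):
        prev = levels[-1]
        levels.append([b - a for a, b in zip(prev, prev[1:])])
    return levels


def arima_one_step(series, c, phi, theta, d=0):
    """One-step-ahead ARIMA(p, d, q) forecast for *given* coefficients.

    phi = [phi_1, ..., phi_p], theta = [theta_1, ..., theta_q]. No estimation is
    performed; pre-sample values and errors are treated as zero.
    """
    levels = difference(series, d)
    w = levels[-1]                          # the d-times differenced series y'_t
    p, q = len(phi), len(theta)
    if len(w) < max(p, q, 1):
        raise ValueError("series too short for the requested orders")
    eps = [0.0] * len(w)
    for t in range(len(w)):                 # recover in-sample errors recursively
        ar = sum(phi[i] * w[t - 1 - i] for i in range(p) if t - 1 - i >= 0)
        ma = sum(theta[j] * eps[t - 1 - j] for j in range(q) if t - 1 - j >= 0)
        eps[t] = w[t] - (c + ar + ma)
    T = len(w)
    ar = sum(phi[i] * w[T - 1 - i] for i in range(p))
    ma = sum(theta[j] * eps[T - 1 - j] for j in range(q))
    forecast = c + ar + ma                  # expected y'_{T+1}; the future error has mean zero
    for level in reversed(levels[:-1]):     # undo differencing, one level at a time
        forecast += level[-1]
    return forecast


print(arima_one_step([1.0, 2.0, 3.0, 4.0], c=1.0, phi=[], theta=[], d=1))  # 5.0
```

The usage line is a random walk with drift (ARIMA(0, 1, 0) with c = 1): the forecast is the last observation plus the constant.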

In addition to ARIMA, linear regression (LR) was used as a comparative baseline, assuming a linear relationship between the predictor variables (such as resource availability and energy prices) and the target variable (demand or supply). Linear regression models are useful baselines because of their simplicity and interpretability, which makes them a common initial benchmark in time series analysis [21]. The LR model is represented as follows:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n + \varepsilon$$

where $y$ represents the demand or supply being predicted, $x_1, \ldots, x_n$ denote the predictor variables, $\beta_0$ is the intercept, $\beta_1, \ldots, \beta_n$ are the regression coefficients, and $\varepsilon$ is the residual error. Although linear regression identifies linear dependencies, it has limitations in handling the nonlinear dependencies in CE systems, where complex interactions are often present [22]. Combining ARIMA's strength in capturing periodic patterns with linear regression's ability to model linear relationships with external predictors may form a hybrid approach to address more complex forecasting requirements, particularly for systems exhibiting both seasonal and trend-based fluctuations.
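A dependency-free sketch of fitting this model by ordinary least squares through the normal equations follows. The toy predictors stand in for resource availability and energy prices, and the data are constructed to lie exactly on y = 1 + 2x₁ + 3x₂ so that the recovered coefficients can be checked; in practice a numerical library would be used, and the function names here are illustrative.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system (are two predictors collinear?)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_ols(X, y):
    """Least-squares estimates [beta_0, beta_1, ..., beta_n] via the normal equations."""
    rows = [[1.0] + list(x) for x in X]                   # leading 1 carries the intercept
    k = len(rows[0])
    XtX = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    Xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve(XtX, Xty)


def predict(beta, x):
    return beta[0] + sum(b * v for b, v in zip(beta[1:], x))


# Toy predictors: (resource availability, energy price); target: demand.
X = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 3.0)]
y = [1.0 + 2.0 * a + 3.0 * e for a, e in X]
print([round(b, 6) for b in fit_ols(X, y)])  # [1.0, 2.0, 3.0]
```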

3.3.2. Addressing Long-Term Dependencies

Forecasting within a circular economy (CE) marketplace requires sophisticated models capable of capturing both long- and short-term dependencies to account for fluctuating demand and supply patterns, which may challenge traditional linear models like ARIMA and linear regression [19,21]. Long short-term memory (LSTM) networks, originally proposed by the authors of [23], offer a robust solution for this purpose by incorporating a gated architecture which enables the retention and regulation of temporal dependencies over extended sequences.

To optimize the LSTM network configuration for CE applications, several key hyperparameters were carefully selected:

- Number of neurons: 50, chosen to capture the temporal dependencies within the CE data.

- Time steps: 3, aligned with a 3-month sequence length in the input data to balance historical context and real-time adaptability.

- Batch size: 32, balancing computational efficiency and convergence.

- Epochs: 50, providing a sufficient number of training iterations for pattern recognition.

- Optimizer and learning rate: Weights were updated with the Adam optimizer, whose adaptive per-parameter step sizes accelerated convergence; the learning rate itself was selected through the grid search described above.
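The time-step and batch-size settings translate into data preparation roughly as follows. This is an illustrative sketch rather than the study's pipeline: each training sample pairs the previous three observations with the next value, and samples are grouped into mini-batches of 32; the function names and the toy series are hypothetical.

```python
def make_windows(series, time_steps=3):
    """Turn a series into (input window, next value) pairs for supervised training."""
    X, y = [], []
    for i in range(len(series) - time_steps):
        X.append(series[i:i + time_steps])   # the previous `time_steps` observations
        y.append(series[i + time_steps])     # the value to predict
    return X, y


def iter_batches(X, y, batch_size=32):
    """Yield consecutive mini-batches; the last one may be smaller."""
    for start in range(0, len(X), batch_size):
        yield X[start:start + batch_size], y[start:start + batch_size]


monthly_demand = [100, 104, 98, 110, 115, 108]
X, y = make_windows(monthly_demand, time_steps=3)
print(X[0], y[0])  # [100, 104, 98] 110
```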

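LSTM networks utilize a cell state and gating mechanism to capture nonlinear temporal dependencies effectively. The cell state retains relevant information over time, while the forget, input, and output gates regulate the data flow, ensuring that the model retains pertinent patterns and discards irrelevant information. In compact form, the update at time t can be expressed as follows:

$$h_t = \sigma\left(W \cdot [h_{t-1}, x_t] + b\right)$$

where $h_t$ is the hidden state at time t, $[h_{t-1}, x_t]$ is the concatenation of the previous hidden state and the current input $x_t$, $W$ denotes the weight matrix, $b$ is the bias term, and $\sigma$ is the activation function. In a full LSTM cell, each gate has its own weights and biases of this form, and the hidden state is obtained as $h_t = o_t \odot \tanh(c_t)$ from the output gate $o_t$ and the cell state $c_t$. This architecture enhances model accuracy by capturing the complex temporal patterns which are prevalent in CE systems [23,24].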
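To make the gating mechanism concrete, the sketch below performs one time step of a standard LSTM cell in plain Python, with no deep-learning framework. The function and parameter names are hypothetical, and the all-zero weights in the usage example are chosen only so the result can be checked by hand; a real model would use a framework implementation with trained weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def lstm_step(x_t, h_prev, c_prev, params):
    """One time step of a standard LSTM cell.

    params maps each gate name ("f", "i", "o", "g") to (W, b), where W has one row per
    hidden unit and acts on the concatenation [h_prev, x_t].
    """
    z = list(h_prev) + list(x_t)

    def gate(name, activation):
        W, b = params[name]
        return [activation(a + bias) for a, bias in zip(matvec(W, z), b)]

    f = gate("f", sigmoid)     # forget gate: share of the old cell state to keep
    i = gate("i", sigmoid)     # input gate: share of the candidate to write
    o = gate("o", sigmoid)     # output gate: share of the cell state to expose
    g = gate("g", math.tanh)   # candidate cell content
    c_t = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h_t = [ov * math.tanh(cv) for ov, cv in zip(o, c_t)]
    return h_t, c_t


# Hidden size 1, input size 2, all weights and biases zero (for illustration only).
zero = ([[0.0, 0.0, 0.0]], [0.0])
params = {name: zero for name in ("f", "i", "o", "g")}
h, c = lstm_step([0.3, -0.2], h_prev=[0.0], c_prev=[1.0], params=params)
print(c)  # [0.5]: the forget gate sigmoid(0) = 0.5 halves the previous cell state
```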

The gated structure of LSTM makes it particularly advantageous for applications in CE marketplaces, where seasonal, cyclical, and event-driven fluctuations play a significant role in resource availability and demand. By accommodating these patterns, LSTMs can offer greater adaptability to CE market dynamics than traditional linear methods.

3.3.3. Incorporating Seasonality and Trends with the Prophet Model

Circular economy (CE) marketplaces often exhibit complex seasonal trends and irregular patterns, challenging traditional models like ARIMA [18]. To address these intricacies, the Prophet model [25,26] was employed due to its ability to handle seasonality, accommodate irregular time intervals, and model both linear and logistic growth trends, all of which are essential for accurately forecasting in CE systems.

Prophet decomposes a time series into three main components (trend, seasonality, and holiday effects) plus an error term. This approach can be mathematically represented as follows:

$$y(t) = g(t) + s(t) + h(t) + \varepsilon_t$$

where $g(t)$ captures long-term shifts, $s(t)$ represents periodic fluctuations, $h(t)$ accounts for holiday effects or significant external events, and $\varepsilon_t$ is the error term, capturing unexplained variance. Prophet's trend component can be either linear ($g(t) = mt + c$, where m is the growth rate and c is an offset) or logistic to account for saturation points in growth [25].

The seasonality component was modeled using a Fourier series, providing the flexibility needed to capture complex cyclic patterns in CE systems:

$$s(t) = \sum_{n=1}^{N} \left( a_n \cos\frac{2\pi n t}{P} + b_n \sin\frac{2\pi n t}{P} \right)$$

where P denotes the periodicity, N is the Fourier order, and $a_n$ and $b_n$ are Fourier coefficients, allowing the model to fit various seasonal frequencies [18,25].
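With a Fourier order of N, the seasonal term is a linear combination of 2N cosine and sine regressors. The sketch below builds those regressors and evaluates the seasonal sum for given coefficients. It illustrates the formula rather than reproducing Prophet's internal implementation, and the function names and coefficient values are hypothetical.

```python
import math


def fourier_features(t, period, order):
    """[cos(2*pi*1*t/P), sin(2*pi*1*t/P), ..., cos(2*pi*N*t/P), sin(2*pi*N*t/P)]."""
    features = []
    for n in range(1, order + 1):
        angle = 2.0 * math.pi * n * t / period
        features.extend([math.cos(angle), math.sin(angle)])
    return features


def seasonal_component(t, period, a, b):
    """s(t) = sum_n a_n cos(2*pi*n*t/P) + b_n sin(2*pi*n*t/P), with N = len(a) = len(b)."""
    coefs = [v for pair in zip(a, b) for v in pair]   # a_1, b_1, a_2, b_2, ...
    return sum(w * f for w, f in zip(coefs, fourier_features(t, period, len(a))))


print(len(fourier_features(0.0, 365.25, 10)))  # 20 regressors for Fourier order 10
```

A higher order adds faster-oscillating terms, which lets the seasonal curve bend more sharply at the cost of more coefficients to fit; this is the overfitting trade-off the order setting of 10 is meant to balance.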

For this study, Prophet’s parameters were calibrated to address the dynamic and uncertain nature of CE marketplaces:

- Growth mode: Configured to be logistic, this setting enabled the model to capture demand and supply saturation, a common feature in circular economy contexts.

- Fourier order: This was set to 10 to capture seasonal variations without overfitting.

- Interval width: This was configured to be 0.95 to provide conservative uncertainty intervals, aligning with the high variability observed in CE factors.
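The logistic growth mode corresponds to a saturating trend. The sketch below evaluates the basic logistic curve without changepoints, using hypothetical values for the capacity, growth rate, and midpoint. In the Prophet library itself, logistic growth additionally requires a capacity value supplied as a `cap` column in both the training and the future data frames; the capacity used in this study is not reported.

```python
import math


def logistic_trend(t, capacity, rate, midpoint):
    """Saturating growth curve: capacity / (1 + exp(-rate * (t - midpoint)))."""
    return capacity / (1.0 + math.exp(-rate * (t - midpoint)))


# Hypothetical monthly demand that saturates at 500 units around month 24.
for month in (0, 12, 24, 36, 48):
    print(month, round(logistic_trend(month, capacity=500.0, rate=0.2, midpoint=24), 1))
```

Growth is fastest at the midpoint, where the curve reaches half the capacity, and flattens as demand or supply approaches the capacity.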

Prophet’s robustness in managing missing data and outliers, coupled with its versatile trend and seasonality modeling, positions it as an effective tool for CE applications. By incorporating external influences, such as holidays and policy shifts, Prophet provides a comprehensive framework for examining complex behaviors in CE marketplaces, supporting informed decision making in resource allocation and demand management [25,26].

3.3.4. Dynamic Pricing in a Circular Economy

In a circular economy (CE) marketplace, dynamic pricing plays a critical role in balancing fluctuating supply-demand conditions and optimizing resource allocation. To facilitate real-time adaptive pricing strategies, this study employs reinforcement learning (RL), specifically Q-learning [27]. This model-free RL approach enables continuous adjustments to pricing based on current market conditions, contributing to overall market equilibrium.

Q-learning operates by iteratively updating an action value function to learn optimal pricing strategies derived from observed state-action pairs. The update rule in Q-learning is expressed as follows:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

where $\alpha$ represents the learning rate, $r$ is the immediate reward for taking action $a$ in state $s$, $\gamma$ is the discount factor, which adjusts the model’s preference for future rewards, and $s'$ denotes the state resulting from action $a$ [28]. Through this iterative process, Q-learning refines its pricing strategies to respond optimally to market fluctuations.

The Q-learning implementation involved specific hyperparameter tuning to enhance learning efficiency:

- Learning rate ($\alpha$): This was set to 0.05 to strike a balance between stability and sensitivity to new information.

- Discount factor ($\gamma$): Set to 0.9, this emphasized long-term rewards while preserving adaptability to short-term market dynamics.

- Exploration rate ($\epsilon$): Initialized at 1.0 with a decay factor of 0.995, this encouraged exploration of varied strategies early in training before converging toward optimal policies.
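As a hedged illustration of the update rule and the hyperparameters above, the following NumPy sketch runs tabular ε-greedy Q-learning on a hypothetical three-state market. The state encoding (surplus, balanced, shortage), the three price actions, the reward (which penalizes imbalance and price changes), the shock model, and the once-per-episode decay of ε are all assumptions made for illustration; they are not the study's environment.

```python
import numpy as np

ALPHA, GAMMA = 0.05, 0.9           # learning rate and discount factor listed above
EPS_START, EPS_DECAY = 1.0, 0.995  # epsilon-greedy start value and decay listed above


def q_update(Q, s, a, r, s_next, alpha=ALPHA, gamma=GAMMA):
    """Tabular Q-learning: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    td_target = r + gamma * Q[s_next].max()
    Q[s, a] += alpha * (td_target - Q[s, a])
    return Q


def choose_action(Q, s, eps, rng):
    """Epsilon-greedy: random action with probability eps, else the greedy action."""
    if rng.random() < eps:
        return int(rng.integers(Q.shape[1]))
    return int(Q[s].argmax())


# Hypothetical toy market (not the study's environment).
# States: 0 = surplus, 1 = balanced, 2 = shortage.
# Actions: 0 = lower price, 1 = hold price, 2 = raise price.
PRICE_EFFECT = {0: +1, 1: 0, 2: -1}  # lowering price pushes toward shortage, raising toward surplus


def toy_market_step(s, a, rng):
    shock = int(rng.choice([-1, 0, 1], p=[0.1, 0.8, 0.1]))  # random demand-supply shock
    s_next = min(max(s + PRICE_EFFECT[a] + shock, 0), 2)    # keep the state in {0, 1, 2}
    reward = -abs(s_next - 1) - 0.1 * (a != 1)              # penalize imbalance and price moves
    return s_next, reward


def train(episodes=2000, steps=20, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((3, 3))
    eps = EPS_START
    for _ in range(episodes):
        s = int(rng.integers(3))
        for _ in range(steps):
            a = choose_action(Q, s, eps, rng)
            s_next, r = toy_market_step(s, a, rng)
            q_update(Q, s, a, r, s_next)
            s = s_next
        eps *= EPS_DECAY  # assumed: decay applied once per episode
    return Q, eps


Q, eps = train()
policy = Q.argmax(axis=1)  # greedy price action for each state
```

Under these assumed dynamics, the learned greedy policy lowers the price in surplus, holds it when balanced, and raises it in shortage; the small penalty on price moves is one simple way to encode the price-stabilization goal.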

This dynamic pricing methodology enables the CE marketplace to respond effectively to shifts in demand and supply, supporting core CE goals such as resource optimization and waste reduction [27]. By continuously adjusting prices in response to real-time market data, the Q-learning model aids in maintaining a balanced marketplace and promoting sustainable resource utilization. Further refinements to the Q-learning model could involve adapting to sudden market shocks or rapid demand changes, ensuring that pricing strategies remain resilient under highly variable conditions.

3.3.5. Modeling Nonlinear Relationships with Random Forest and Gradient Boosting

The intricate dynamics of circular economy (CE) marketplaces, where demand, supply, and pricing interact in complex, nonlinear ways, require modeling approaches which can effectively capture these dependencies. Random forest (RF) [29] and gradient boosting regressor (GBR) [30] are particularly suitable for this task due to their ensemble-based structures, which enhance predictive robustness and accuracy in handling nonlinear relationships.

Random Forest (RF) Methodology

Random forest is an ensemble technique which generates predictions by averaging the outputs from multiple decision trees, with each trained on a different data subset. This approach reduces overfitting and increases model resilience, especially in high-variability CE contexts. The random forest prediction is formally defined as follows:

$$\hat{y} = \frac{1}{n} \sum_{i=1}^{n} T_i(x)$$

where $\hat{y}$ represents the final prediction, $n$ is the total number of trees in the forest, and $T_i(x)$ denotes the prediction from the $i$th tree for input $x$. In this study, random forest was configured with 100 estimators, a maximum depth of 15, and a minimum of 2 samples required to split a node, balancing computational efficiency with predictive performance [29].
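The averaging in the random forest prediction formula can be checked directly in scikit-learn. The sketch below is a minimal illustration that assumes synthetic data in place of the study's dataset; it uses the configuration stated above (100 estimators, maximum depth 15, minimum samples split 2) and compares the forest's output with the mean of its trees' outputs.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic stand-in data (hypothetical): three inputs with a nonlinear target.
rng = np.random.default_rng(42)
X = rng.uniform(0, 1, size=(500, 3))
y = 100 * np.sin(3 * X[:, 0]) + 50 * X[:, 1] ** 2 + rng.normal(0, 5, size=500)

# Configuration reported in the text.
rf = RandomForestRegressor(n_estimators=100, max_depth=15, min_samples_split=2, random_state=0)
rf.fit(X, y)

# Average the individual tree predictions, T_i(x), over all n trees.
tree_preds = np.stack([tree.predict(X[:5]) for tree in rf.estimators_])
manual = tree_preds.mean(axis=0)  # matches rf.predict(X[:5])
```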

Gradient Boosting Regressor (GBR) Methodology

In contrast, gradient boosting regressor (GBR) builds trees sequentially, where each tree addresses the residuals (errors) from the previous trees. This iterative correction enhances model accuracy by focusing on the hardest-to-predict instances. The prediction function for GBR is represented as follows:

$$\hat{y}_i = F_0 + \sum_{m=1}^{M} \eta \, h_m(x_i)$$

where $\hat{y}_i$ is the prediction for instance $i$, $F_0$ is the initial constant prediction, $M$ denotes the number of boosting iterations (trees), $\eta$ is the learning rate, and $h_m(x_i)$ is the prediction of the $m$th tree. For the CE marketplace context, GBR was tuned with a learning rate of 0.1 and 100 estimators, balancing model precision against the risk of overfitting.
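The additive form of GBR can likewise be reconstructed from a fitted scikit-learn model. This sketch assumes synthetic data and the library's default squared-error loss, for which the initial constant prediction is the mean of the training targets; it uses the stated learning rate of 0.1 and 100 estimators.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic stand-in data (hypothetical).
rng = np.random.default_rng(7)
X = rng.uniform(0, 1, size=(400, 3))
y = 80 * np.sin(4 * X[:, 0]) + 30 * X[:, 2] + rng.normal(0, 5, size=400)

# Settings reported in the text; the loss is scikit-learn's default squared error.
gbr = GradientBoostingRegressor(learning_rate=0.1, n_estimators=100, random_state=0)
gbr.fit(X, y)

# Initial constant (training mean under squared error) plus the
# learning-rate-scaled sum of the M trees, each fitted to the previous residuals.
trees = gbr.estimators_[:, 0]  # one regression tree per boosting stage
manual = y.mean() + gbr.learning_rate * sum(t.predict(X[:5]) for t in trees)
```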

Both random forest and GBR demonstrated strong predictive capabilities in CE systems, where nonlinear relationships among resource supply, demand, and pricing are prevalent. By effectively capturing complex dependencies, these models enhance the prediction accuracy of resource needs and allocation strategies, supporting sustainable management within CE marketplaces [30].

3.3.6. Predictive Analytics for Long-Term Resource Planning

To forecast long-term trends and support strategic planning within circular economy (CE) marketplaces, neural networks (NNs) were employed for their ability to model the complex nonlinear relationships essential in dynamic CE environments [22,24]. These neural network models facilitate the data-driven decision making critical for effective policy formulation and resource management, as they capture interactions among diverse market variables.

A neural network neuron is formulated as follows:

$$\hat{y} = f\left( \sum_{i=1}^{n} w_i x_i + b \right)$$

where $\hat{y}$ denotes the predicted output, $w_i$ represents the weight applied to each input $x_i$, $b$ is the bias term, and $f$ is the activation function, selected based on the network requirements (e.g., ReLU or sigmoid). This structure allows the network to adaptively learn patterns within data, effectively capturing the complex dependencies across market factors.
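As a small worked example of the neuron equation, the NumPy sketch below computes one neuron's output with a ReLU activation. The input, weight, and bias values are arbitrary illustrative numbers, not values from the study.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def neuron(x, w, b, f=relu):
    """Single neuron: y_hat = f(sum_i w_i * x_i + b)."""
    return f(np.dot(w, x) + b)


x = np.array([0.5, -1.0, 2.0])  # illustrative inputs
w = np.array([0.4, 0.3, -0.1])  # illustrative weights

out_relu_neg = neuron(x, w, 0.2)               # pre-activation -0.1, so ReLU outputs 0.0
out_relu_pos = neuron(x, w, 0.5)               # pre-activation 0.2, so ReLU outputs about 0.2
out_linear = neuron(x, w, 0.2, f=lambda z: z)  # identity (linear) activation: about -0.1
```

The contrast between the first and third cases shows how ReLU zeroes negative pre-activations, while a linear output keeps them, which is why a linear output layer suits continuous targets.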

The neural network model employed a multi-layer perceptron (MLP) architecture with two hidden layers having 64 neurons each, a configuration chosen to balance model complexity with computational efficiency. The key hyperparameters included the following:

- Hidden layers: Two layers with 64 neurons each were employed, enabling the network to capture hierarchical and complex data features.

- Learning rate: This was set to 0.001 to ensure stable gradient descent, allowing for gradual learning improvements over time.

- Activation function: ReLU was employed for the hidden layers, enhancing the model’s ability to capture nonlinear patterns, with a linear activation for the output layer to support continuous output predictions.
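A scikit-learn configuration matching the stated architecture might look as follows. It is a sketch on synthetic data; the Adam optimizer (scikit-learn's default) and `max_iter=500` are assumptions rather than settings reported in the study. `MLPRegressor` always uses an identity (linear) output activation, which matches the continuous-output design described above.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

# Synthetic stand-in data (hypothetical): four inputs with nonlinear interactions.
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(300, 4))
y = np.sin(2 * X[:, 0]) + X[:, 1] * X[:, 2] + rng.normal(0, 0.05, size=300)

# Two hidden layers of 64 ReLU units and a learning rate of 0.001, as in the list above.
mlp = MLPRegressor(
    hidden_layer_sizes=(64, 64),
    activation="relu",
    learning_rate_init=0.001,
    max_iter=500,  # assumption
    random_state=0,
)
mlp.fit(X, y)
```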

Neural networks’ adaptability and robust pattern recognition capabilities make them well suited for CE marketplaces, where complex interdependencies often drive long-term resource needs. By effectively capturing these nuanced trends, neural networks play a pivotal role in strategic planning and enhance resource management efforts, thereby supporting sustainability objectives in the marketplace [24].

3.4. Performance Metrics and Model Evaluation

To rigorously assess AI model performance in addressing circular economy (CE) resource management challenges, multiple metrics were employed. Each metric offers distinct insights into model accuracy, reliability, and predictive power in demand-supply matching and dynamic pricing optimization. Robust evaluation is essential in CE systems, as model precision directly affects resource allocation efficiency and waste reduction goals [21,22].

The mean absolute error (MAE) quantifies the average magnitude of prediction errors, providing a straightforward accuracy measure. Due to its interpretability and simplicity, the MAE is widely adopted, particularly in high-stakes CE contexts where clear error representation is critical [21,22]:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

where $y_i$ is the observed value, $\hat{y}_i$ is the predicted value for instance $i$, and $n$ is the number of observations.

The mean squared error (MSE) is especially valuable in applications where large prediction errors are costly, as it emphasizes these deviations by squaring the errors [21,22]. Low MSE values indicate reliable model performance for CE resource management applications:

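$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2$$

where $y_i$ and $\hat{y}_i$ are the observed and predicted values, respectively, for instance $i$, and $n$ is the total number of observations.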

The root mean squared error (RMSE) further aids interpretability by expressing the error in the same units as the target variable, making it suitable for time series analysis and CE applications, where understanding the magnitude of error is essential [18,21]:

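$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}$$

where $y_i$ and $\hat{y}_i$ represent the actual and predicted values, respectively, and $n$ is the number of observations.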

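The coefficient of determination (R²) measures the proportion of variance in the target variable explained by the model, serving as an indicator of model fit and predictive power [18]. It is calculated as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}{\sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2}$$

where $y_i$ is the observed value, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the observed values, and $n$ is the total number of observations. An R² value close to 1 indicates a strong model fit, while values near 0 suggest a limited predictive capacity [21]; negative values mean that the model predicts less accurately than a constant forecast equal to the observed mean.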
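The four metrics can be computed directly from their definitions. The NumPy sketch below uses a small made-up example of four observations so the arithmetic can be followed by hand, and it also shows how a negative R² arises when predictions are worse than the mean.

```python
import numpy as np


def regression_metrics(y, y_hat):
    """MAE, MSE, RMSE, and R^2 computed from their definitions."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    err = y - y_hat
    mae = np.mean(np.abs(err))
    mse = np.mean(err ** 2)
    rmse = np.sqrt(mse)
    r2 = 1.0 - np.sum(err ** 2) / np.sum((y - y.mean()) ** 2)
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


y_true = [100, 150, 200, 250]
y_pred = [110, 140, 210, 230]
m = regression_metrics(y_true, y_pred)
# errors: -10, 10, -10, 20 -> MAE = 12.5, MSE = 175.0, RMSE ~ 13.23
# SSE = 700, total sum of squares = 12500 -> R2 = 1 - 700/12500 = 0.944

# A constant forecast of the mean (175) gives R2 = 0; reversing the trend gives R2 = -3.
r2_mean = regression_metrics(y_true, [175] * 4)["R2"]
r2_bad = regression_metrics(y_true, [250, 200, 150, 100])["R2"]
```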

To optimize model stability and performance, a grid search was conducted over parameter ranges (e.g., a learning rate from 0.01 to 0.1 and a batch size from 16 to 64). For future work, Bayesian optimization could offer a more efficient approach to hyperparameter tuning, enabling better navigation of the hyperparameter space in computationally intensive CE applications.

The chosen performance metrics (MAE, MSE, RMSE, and R²) also served as guides during the hyperparameter optimization process to ensure that model tuning aligned with the evaluation goals. Each model was iteratively optimized to reduce the error values, with cross-validation applied to ensure generalizability. This method helped ensure that the models were not overfitted to the training data but could reliably handle real-time demand-supply fluctuations.
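A hedged sketch of grid search with cross-validation in scikit-learn, using MAE as the selection score, is shown below. The synthetic data, the GBR estimator, the exact grid values, and the use of `TimeSeriesSplit` (forward-chaining folds, chosen here because CE data are temporal) are assumptions; the study does not specify its cross-validation scheme.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit

# Synthetic stand-in data (hypothetical).
rng = np.random.default_rng(3)
X = rng.uniform(0, 1, size=(300, 3))
y = 60 * X[:, 0] ** 2 + 20 * X[:, 1] + rng.normal(0, 3, size=300)

param_grid = {
    "learning_rate": [0.01, 0.05, 0.1],  # within the 0.01-0.1 range described above
    "n_estimators": [50, 100, 150],
}
search = GridSearchCV(
    GradientBoostingRegressor(random_state=0),
    param_grid,
    scoring="neg_mean_absolute_error",  # higher score means lower MAE
    cv=TimeSeriesSplit(n_splits=5),     # each fold validates on later observations only
)
search.fit(X, y)
best_mae = -search.best_score_  # mean cross-validated MAE of the best setting
```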

3.5. Model Validation and Sensitivity Analysis

To ensure the robustness of the AI models, validation was conducted using the mean squared error (MSE), a reliable measure of predictive accuracy that is well suited to assessing model performance across demand, supply, and pricing outputs in circular economy (CE) marketplaces [21]. Lower MSE values indicate that a model predicts with less error, which is essential for effective resource allocation within circular systems.

In addition, sensitivity analysis was applied to evaluate the impact of key input variables, such as waste generation, energy prices, and economic growth, on model outcomes. This analysis clarifies variable interactions and dependencies, thereby improving model accuracy and informing strategic resource allocation within digital CE marketplaces [22]. By ranking these sensitivities, the analysis gives CE managers a prioritized understanding of how critical resource factors influence overall system performance, supporting strategic planning, policy formulation, and more precise management of resource flows.
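The study does not state which sensitivity method was used. As one possible approach, the sketch below applies scikit-learn's permutation importance: each input is shuffled in turn on held-out data, and the resulting rise in MSE indicates how much the model depends on that input. The synthetic data and the assumed effect sizes are illustrative only and do not reproduce the study's findings.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.inspection import permutation_importance

# Hypothetical synthetic inputs named after the study's variables.
features = ["waste_generation", "energy_prices", "economic_growth"]
rng = np.random.default_rng(5)
X = rng.normal(size=(500, 3))
# Assumed effect sizes: energy prices dominate, growth is modest, waste is negligible.
y = 0.1 * X[:, 0] + 5.0 * X[:, 1] + 1.0 * X[:, 2] + rng.normal(0, 0.5, size=500)

model = GradientBoostingRegressor(random_state=0).fit(X[:400], y[:400])

# Shuffle one input at a time on held-out data and record the increase in MSE.
result = permutation_importance(
    model, X[400:], y[400:],
    scoring="neg_mean_squared_error", n_repeats=10, random_state=0,
)
ranking = [features[i] for i in np.argsort(result.importances_mean)[::-1]]
```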

Hyperparameter Tuning Scope and Constraints

Hyperparameter tuning was integral to optimizing model performance in this study. However, due to computational constraints, extensive tuning was limited in scope, with the primary research objective focused on evaluating model effectiveness for demand-supply forecasting and dynamic pricing. The key hyperparameters were chosen based on their influence on model responsiveness and stability. For example, the learning rate ($\alpha$) for Q-learning was adjusted within a range of 0.01–0.1 to balance model stability and adaptability, while the discount factor ($\gamma$) was varied between 0.8 and 0.95 to prioritize long-term rewards. These parameters were carefully selected to enhance the models’ responsiveness in dynamic CE marketplaces.

Future optimization efforts could benefit from more efficient automated techniques, such as Bayesian optimization, in place of exhaustive grid search, to identify suitable parameter settings and further increase the models’ adaptability to the variable conditions typical of circular economy systems.

4. Results

This results section evaluates AI models for resource allocation, demand-supply matching, and dynamic pricing optimization within circular economy (CE) digital marketplaces, directly addressing the study’s primary objectives. Specifically, each model’s performance was assessed in terms of its effectiveness in capturing complex variable interactions and responding to the nonlinear market dynamics characteristic of CE systems. The progression of model evaluations, beginning with foundational time series models and advancing through machine learning and reinforcement learning approaches, offers insights into each method’s suitability for CE applications. The following subsections systematically compare model efficacy, interpret critical findings, and discuss implications for CE marketplaces.

4.1. Supply, Demand Forecasting, Pricing, and Model Comparison

Prior to evaluating model performance, extensive hyperparameter optimization was performed for each AI model to ensure the configurations were well suited to the circular economy marketplace dynamics. This optimization process involved setting a range of values for each key hyperparameter, followed by grid search and cross-validation to identify the settings which maximized the prediction accuracy. For instance, LSTM models were optimized over a range of time steps (3–6) and neuron counts (20–100), while random forest and gradient boosting models were refined by adjusting the number of estimators (from 50 to 150) and maximum depth (from 5 to 20). This tuning process enhanced the reliability and adaptability of each model in forecasting demand, supply, and pricing fluctuations.

4.1.1. Performance of Initial Models

The AI models evaluated in this study varied in their effectiveness at forecasting supply and demand, as well as optimizing pricing strategies within the circular economy marketplace. Table 4, Table 5 and Table 6 provide a model performance comparison, progressing from basic time series approaches to more advanced machine learning algorithms. This comparison assesses their practicality in the circular economy context.

Table 4.

Performance metrics for basic models (ARIMA and linear regression).

Table 5.

Performance metrics for intermediate models (LSTM, random forest, and Prophet).

Table 6.

Performance metrics for advanced models (GBR and neural networks).

The performance of the basic models (Table 4), ARIMA and linear regression, provides a foundational understanding of the linear temporal dependencies in CE marketplaces. ARIMA, configured with parameters for demand and supply forecasting, encountered significant challenges in capturing the inherent variability of CE dynamics. This is reflected in its high mean absolute error (MAE) values of 96.30 for demand and 141.97 for supply, as well as its negative R² scores (−0.2178 for demand and −0.1378 for supply), which mean its predictions were less accurate than a constant mean forecast and indicate a limited fit to real-time fluctuations.

Linear regression performed moderately better, achieving an MAE of 70.54 and an R² of 0.2485 in demand forecasting, suggesting that it can capture basic trends in stable conditions. However, it exhibited overfitting in its supply predictions, as shown by its near-perfect R² of approximately 1, implying limited generalizability. These findings underscore the limitations of basic models in nonlinear and dynamic CE environments, highlighting the need for advanced machine learning approaches which can better handle market complexities.

Although simpler models such as ARIMA and linear regression required limited hyperparameter tuning, their configurations were optimized to enhance performance within the demand-supply forecasting context. For ARIMA, the parameters were refined through iterative testing, while linear regression benefited from adjustments to regularization parameters which helped improve the fit within its linear constraints.

The intermediate models (Table 5), encompassing long short-term memory (LSTM) networks, random forest, and Prophet, demonstrated enhanced capabilities in capturing nonlinear and sequential dependencies compared with the basic models. However, each model exhibited unique strengths and limitations within the circular economy (CE) marketplace dynamics.

LSTM, a recurrent neural network well suited for time series data, captured the general demand trends but struggled with the high variability in both the demand and supply datasets. Its mean absolute error (MAE) of 104.69 for demand and 453.53 for supply, paired with negative R² scores (−0.0685 for demand and −6.2175 for supply), reflects a tendency to underperform in environments marked by sudden fluctuations. In this study, the LSTM hyperparameters were systematically optimized over a range of values, including the number of neurons (50–100), batch size (32–64), and time steps (3–5). Cross-validation was employed to finalize values which balanced model complexity and adaptability to high-variance periods. These findings suggest that while LSTM effectively captured trends, further tuning or hybridization may be necessary to manage CE marketplace volatility.

In contrast, random forest demonstrated robust performance in handling complex nonlinear relationships, achieving an MAE of 42.11 for demand and 63.44 for supply, with R² values of 0.6853 (demand) and 0.7164 (supply). This model’s ensemble structure enabled it to capture subtle variable interactions, indicating its suitability for volatile market conditions where demand and supply variability are high. Random forest hyperparameters, including tree depth (5–15) and the number of estimators (50–150), were optimized to balance accuracy and prevent overfitting, thereby enhancing the model’s performance in capturing demand-supply variability.

The Prophet model, designed for capturing seasonality and trend components, performed moderately well, with MAE values of 61.57 for demand and 109.87 for supply. Its R² scores (0.3986 for demand and 0.3468 for supply) indicate an ability to model long-term trends but reduced responsiveness to rapid changes. Prophet’s strengths in handling cyclical patterns make it applicable for scenarios where periodicity and seasonality drive resource demands, although its effectiveness diminishes during abrupt market shifts. Prophet’s hyperparameters, such as its seasonality mode (“additive” versus “multiplicative”) and growth type, were iteratively fine-tuned to capture the seasonality in CE data without overfitting.

Through hyperparameter optimization, intermediate models like random forest demonstrated suitability for nonlinear dynamics typical in CE marketplaces, while Prophet offered advantages in applications dominated by predictable seasonal patterns. These optimizations enhanced model robustness in the face of market volatility and contributed to reliable forecasting outcomes.

The advanced models (Table 6), including gradient boosting regressor (GBR) and neural networks (NNs), showed marked improvements over simpler models, particularly in their capacity to capture nonlinear and complex interactions which are characteristic of circular economy (CE) digital marketplaces.

Gradient boosting regressor (GBR) excelled in both demand and supply forecasting, achieving mean absolute errors (MAEs) of 23.39 for demand and 35.66 for supply, along with high R² values of 0.7586 and 0.7491, respectively. The iterative error reduction mechanism inherent in GBR’s ensemble structure allows it to progressively refine predictions by correcting errors from previous iterations. This capability enhances its adaptability to high-variability conditions, capturing nuanced relationships within the data. However, GBR’s computational demands may present scalability challenges for large-scale, real-time CE applications, as processing times grow with model complexity. Future optimizations, such as parallelization techniques or fine-tuning the number of boosting rounds, could address these scalability issues and support broader implementation in real-world scenarios.

Both the GBR and neural network models underwent extensive hyperparameter tuning to enhance predictive performance and adaptability. For GBR, a grid search was employed to systematically optimize the learning rates (0.01–0.1), the number of estimators (50–150), and maximum depth values (5–20), refining its capability of capturing nonlinear dependencies within CE data. The neural network model was tuned for neuron counts (20–100) and learning rates (0.001–0.1), with cross-validation applied to mitigate overfitting and ensure stable performance across various market conditions. This tuning approach enhanced each model’s adaptability to short-term market fluctuations while supporting robust longer-term predictions.

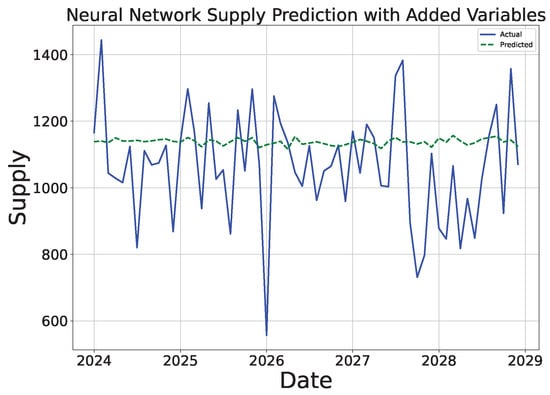

The neural network (NN) model, structured with multiple layers to accommodate nonlinear dependencies, also performed well in demand forecasting, with an MAE of 67.27. However, its performance in supply forecasting was less robust, with an MAE of 121.86 and a much lower R² of 0.0151, meaning it explained almost none of the variance in supply. These residual errors suggest that while the NNs captured complex demand patterns, they struggled to generalize effectively to the high volatility seen in the supply data. Further model refinement, such as integrating dropout regularization or attention mechanisms, could enhance their accuracy and stability, especially in broader market scenarios where fluctuations are more pronounced. Scalability remains a consideration, as the computational intensity of neural networks can limit real-time applicability. Hardware optimizations or cloud-based implementations may be necessary to address this limitation in practical applications.

Dynamic pricing, which is essential for balancing supply-demand variations in CE digital marketplaces, was explored using Q-learning and deep Q-learning (Table 7). Both reinforcement learning models showed considerable effectiveness in stabilizing pricing under dynamic conditions, demonstrating adaptability to market fluctuations and helping to maintain marketplace equilibrium.

Table 7.

Performance metrics for pricing models (Q-learning and deep Q-learning).

Q-learning achieved a significant reduction in pricing fluctuations, as indicated by lower mean absolute error (MAE) and root mean squared error (RMSE) values relative to the baseline pricing strategies. This model’s stability highlights its ability to optimize pricing by learning state-action values which align with real-time market conditions. Through systematic hyperparameter tuning, including adjustments to the learning rate and exploration rate, the model was optimized for better responsiveness to pricing fluctuations. This tuning allowed Q-learning to reduce pricing volatility, which is critical for supporting sustained resource circulation and avoiding artificial scarcity or waste within CE marketplaces.

Similarly, deep Q-learning (DQN) demonstrated a robust capability for pricing stabilization, with residual errors comparable to those of Q-learning and a greater capacity to manage complex state spaces. The neural network within the DQN approximates Q-values over a much larger state space than a lookup table can hold, accommodating multi-faceted pricing decisions. Hyperparameter adjustments in the DQN, particularly in terms of network layer depth, learning rate, and experience replay settings, further optimized its effectiveness in handling frequent, intricate changes in market variables. This adaptability makes the DQN especially suited for CE marketplaces, where rapid shifts in market conditions require a responsive pricing model.
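Experience replay, mentioned above, can be sketched independently of the network itself. The minimal Python sketch below stores transitions in a bounded buffer, samples random mini-batches (which breaks the correlation between consecutive market observations), and computes the DQN regression targets r + γ max over a' of Q_target(s', a'). The class and function names are hypothetical, the Q-network is omitted (`q_next` stands in for the target network's outputs), and γ = 0.9 simply mirrors the Q-learning setting described earlier.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Bounded store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        """Uniformly sample a mini-batch and return one array per field."""
        batch = self.rng.sample(list(self.buffer), batch_size)
        s, a, r, s_next, done = (np.array(col) for col in zip(*batch))
        return s, a, r, s_next, done

    def __len__(self):
        return len(self.buffer)


def dqn_targets(r, q_next, done, gamma=0.9):
    """Targets y = r + gamma * max_a' Q_target(s', a'), without bootstrapping on terminal steps.

    q_next has shape (batch, n_actions) and stands in for the target network's outputs.
    """
    not_done = 1.0 - np.asarray(done, dtype=float)
    return np.asarray(r, dtype=float) + gamma * q_next.max(axis=1) * not_done
```

The network would then be trained to regress its Q(s, a) estimates toward these targets for the sampled actions.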

Both Q-learning and the DQN reinforce marketplace equilibrium by smoothing price adjustments and mitigating overcorrections. Their reinforcement learning approach supports the evolving demands of digital marketplaces, suggesting that further model scaling could improve real-time pricing stability in high-volume trading environments. Future iterations may focus on refining exploration-exploitation strategies and optimizing reward structures to further enhance model performance.

4.1.2. Impact of Additional Variables on Model Performance

The integration of additional variables, specifically economic growth, energy prices, and waste generation, provided a more nuanced understanding of CE marketplace dynamics and enhanced the models’ predictive capabilities. Table 8 presents a summary of the model performance with these variables included, detailing the mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE), and R² values for both demand and supply predictions.

Table 8.

Performance summary of models with additional variables.

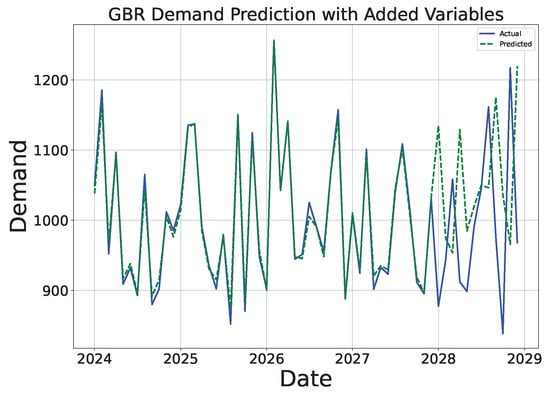

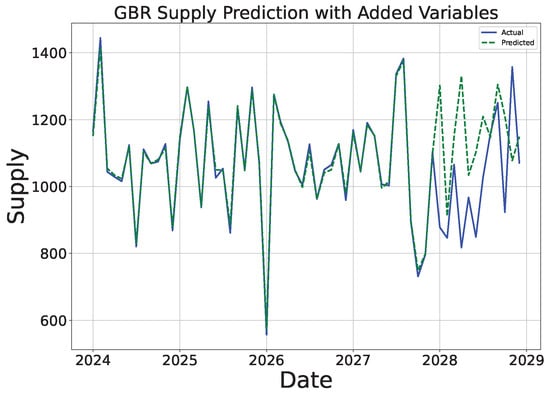

The gradient boosting regressor (GBR) model outperformed both the neural networks and linear regression in terms of predictive accuracy, particularly for supply, achieving an MAE of 45.09 and an R² of 0.5654, meaning it explains roughly 57% of the variance in the supply data. GBR’s robustness in modeling nonlinear interactions makes it especially suitable for CE contexts, where variables like energy prices frequently impact resource flows.

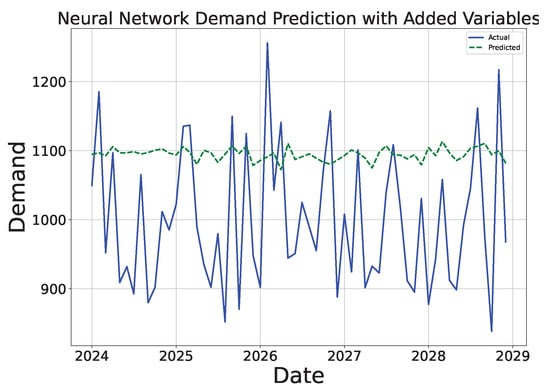

The neural networks, while typically proficient at modeling complex relationships, struggled in this instance, particularly with demand forecasting, where they produced an R² value of −0.9112. This negative R², which means the predictions were less accurate than a constant mean forecast, may reflect overfitting or an inability to generalize given the added complexity of the new variables. Similarly, linear regression exhibited limited predictive accuracy, with low R² values for both demand (0.0024) and supply (0.1260), highlighting its limitations in handling the intricate relationships present in CE systems.

Analyzing the individual contributions of each additional variable revealed specific impacts on the models’ predictive strengths:

- Economic growth exhibited a modest impact on short-term demand and supply predictions, as indicated by small improvements in the R² values across the models. While this factor is crucial for strategic planning, its limited influence on immediate resource balances suggests it is better suited for long-term forecasting models, where economic trajectories impact resource circulation patterns over extended periods.

- Energy prices significantly influenced the pricing accuracy, enhancing model responsiveness, particularly within the GBR and random forest models, which excel at managing multi-variable dependencies. Given energy’s role in determining production and transportation costs, incorporating real-time energy pricing into predictive algorithms could yield better alignment with actual market dynamics, supporting efficient resource allocation and more accurate pricing strategies.

- Waste generation, though minimally affecting short-term forecasts, remains a critical indicator for sustainability planning. Patterns in waste generation inform long-term resource management and align CE marketplaces with conservation goals. Its integration into models offers strategic insights into waste reduction, supporting resource conservation, though immediate forecasting impacts remain limited.

Overall, including additional variables such as energy prices resulted in clear improvements in model accuracy and adaptability, while economic growth and waste generation contributed valuable insights for longer-term CE strategies. These findings highlight that a comprehensive framework incorporating both immediate market conditions and broader economic or environmental variables allows CE marketplaces to optimize real-time resource allocation while also supporting long-term sustainability objectives.

4.2. Visual Analysis of Model Performance

In addition to the performance metrics previously reported in Table 4, visual plots provide further insights into the predictive capabilities and residual patterns of the ARIMA and linear regression models. The following figures illustrate the actual versus predicted values and residuals for both demand and supply forecasting.

4.2.1. Analysis of Basic Models (ARIMA and Linear Regression)

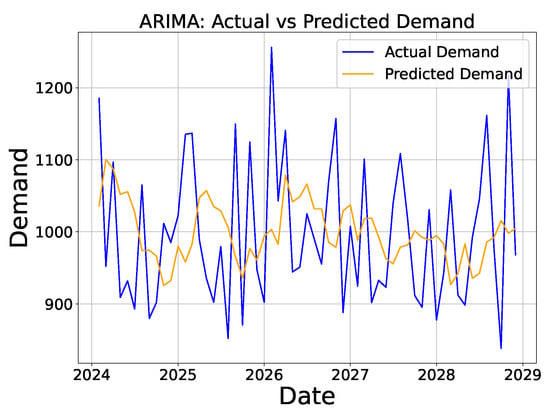

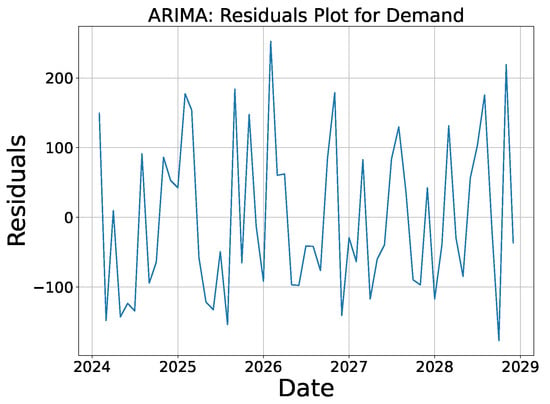

The performance of the basic models—ARIMA and linear regression—was evaluated for both demand and supply forecasting. These models serve as foundational algorithms for time series forecasting and linear trend analysis, providing baseline performances for comparison with more advanced approaches.

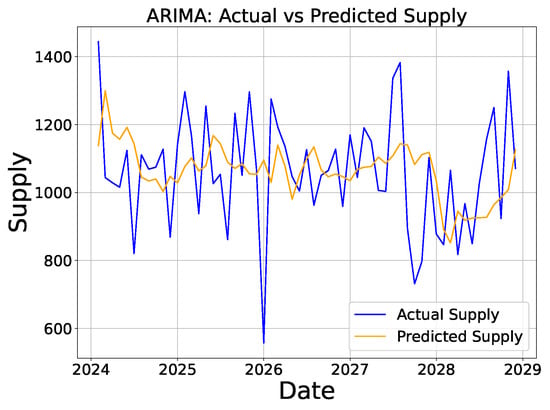

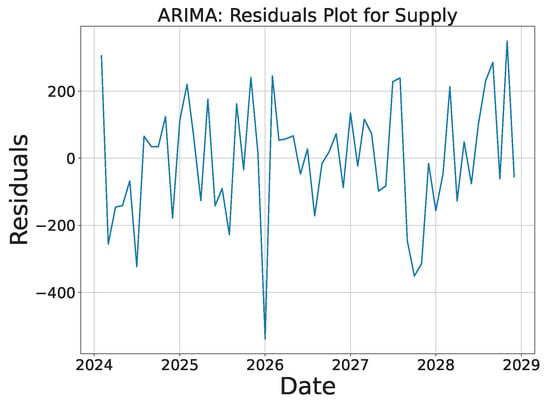

ARIMA model: The ARIMA model was applied to capture temporal dependencies and seasonality within the demand and supply data. For demand forecasting, the model was able to capture general trends in the data, but it struggled with accurately predicting sudden fluctuations, as shown in Figure 3. The residuals from the demand forecast (Figure 4) were evenly distributed around zero, with notable errors during high-demand periods. The supply forecast using ARIMA (Figure 5) exhibited larger deviations between the actual and predicted values with consistently high residuals (Figure 6), reflecting the model’s challenges in accurately capturing the variability and dynamics of supply.

Figure 3.

ARIMA: actual vs. predicted demand.

Figure 4.

ARIMA: demand residuals.

Figure 5.

ARIMA: actual vs. predicted supply.

Figure 6.

ARIMA: supply residuals.

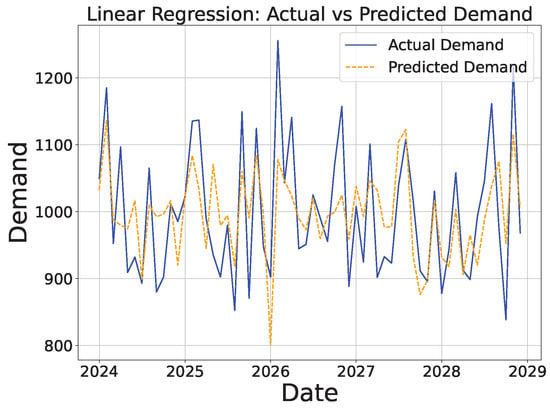

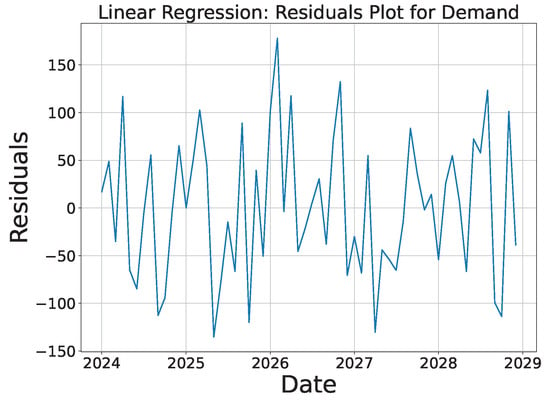

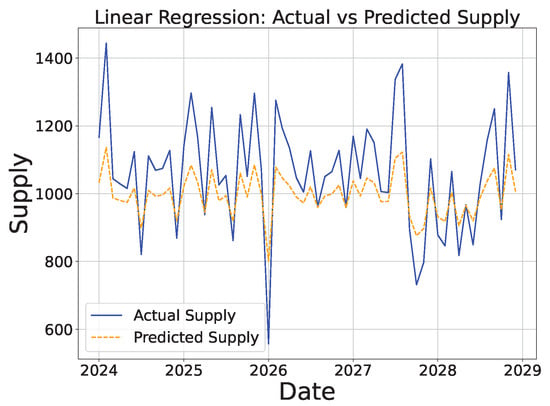

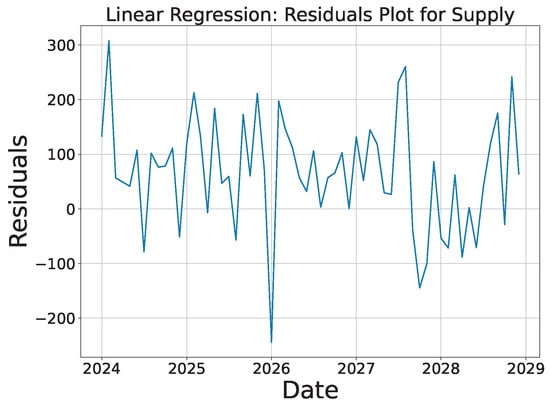

Linear regression model: In contrast, the linear regression model demonstrated strong alignment with the actual values for demand forecasting, as seen in Figure 7. The residuals (Figure 8) indicate that while the model performed well overall, minor deviations occurred during high-variance intervals, which is expected in simple linear models when addressing nonlinear dynamics. The supply forecast (Figure 9) closely followed the actual supply values, with minimal residual errors (Figure 10). This performance suggests that for relatively stable systems, the linear regression model can provide reliable forecasts, though it may still fall short in capturing more complex interactions.

Figure 7.

LR: actual vs. predicted demand.

Figure 8.

LR: demand residuals.

Figure 9.

LR: actual vs. predicted supply.

Figure 10.

LR: supply residuals.

While ARIMA and linear regression provided reasonable baseline performance, further tuning of their parameters can improve the forecasting accuracy for both demand and supply.

For ARIMA, the key parameters which can be adjusted include the autoregressive coefficients ($\phi_i$), moving average coefficients ($\theta_j$), and differencing order ($d$), which together control the model’s responsiveness to past values and errors. Optimizing these parameters can help in better capturing long-term patterns and reducing prediction errors, particularly in systems where seasonality or volatility is dominant.

In the linear regression model, feature selection and coefficient estimation ($\beta$) are crucial aspects for optimization. By selecting more relevant features and applying regularization techniques such as Ridge (L2) or Lasso (L1), the model can be fine-tuned to minimize overfitting while maintaining generalization. Additionally, residual errors ($\varepsilon$) can be reduced through further refinement of model coefficients, leading to more accurate predictions.
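To illustrate the regularization options mentioned above, the scikit-learn sketch below fits Ridge (L2) and Lasso (L1) to synthetic data in which only two of five features matter (an assumption for illustration). Note that scikit-learn's `alpha` is the regularization strength, unrelated to the learning rate α used elsewhere in this paper; the values chosen here are arbitrary.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

# Synthetic data (hypothetical): only the first two of five features affect y.
rng = np.random.default_rng(11)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(0, 0.1, size=200)

ridge = Ridge(alpha=1.0).fit(X, y)  # L2: shrinks all coefficients, none exactly zero
lasso = Lasso(alpha=0.1).fit(X, y)  # L1: drives irrelevant coefficients exactly to zero
```

Lasso's exact zeros make it a form of built-in feature selection, whereas Ridge keeps every feature with a shrunken weight, which is often preferable when inputs are correlated.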

4.2.2. Analysis of Intermediate Models (LSTM, Random Forest, and Prophet)

The intermediate models, including LSTM, random forest, and Prophet, were applied to address the complexities of forecasting demand and supply in a circular economy digital marketplace. These models offer more advanced techniques for managing nonlinear patterns, seasonality, and sequential dependencies, providing substantial improvements over simpler models like ARIMA or linear regression.

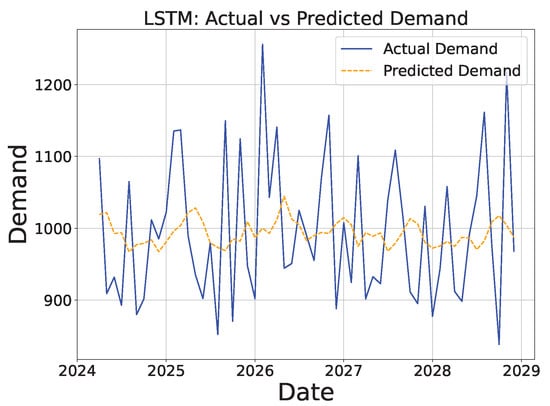

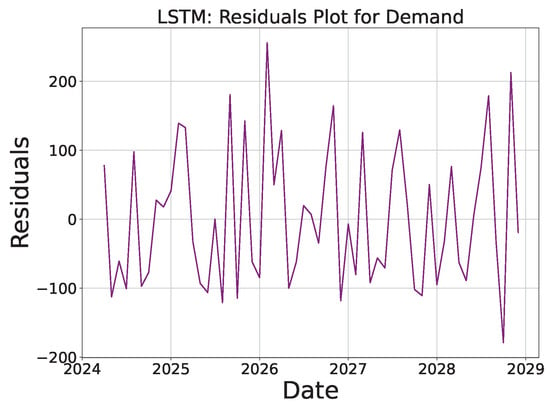

The LSTM model, a type of recurrent neural network, is especially effective at capturing the long-term dependencies and sequential patterns which are inherent in time series data. For demand forecasting, LSTM successfully identified overarching trends, as shown in Figure 11. However, the model exhibited difficulties during periods of rapid demand fluctuations, highlighting its limitations in handling high-variance periods. This shortcoming is reflected in the larger residual errors, as depicted in Figure 12. Despite its general success in trend identification, LSTM’s ability to manage short-term volatility remains limited, requiring more precise calibration.

Figure 11.

LSTM: actual vs. predicted demand.

Figure 12.

LSTM: demand residuals.

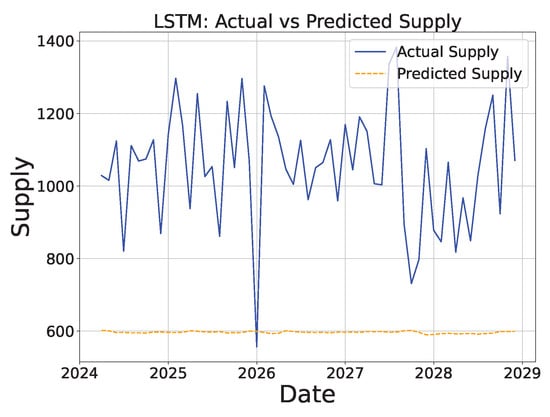

The performance of LSTM in supply forecasting (Figure 13) followed a similar pattern, with noticeable deviations between the actual and predicted values. These discrepancies, further illustrated by the residuals in Figure 14, indicate that the model struggled to maintain accuracy during volatile periods. These findings suggest that while LSTM is proficient at capturing long-term trends, its handling of market volatility could likely be improved through further tuning, in particular of hyperparameters such as the number of hidden units, the learning rate, and the batch size. Incorporating mechanisms such as attention layers, or combining LSTM with other models in a hybrid architecture, could also help address the limitations seen in volatile environments and make the model more robust to dynamic market conditions.

Figure 13.

LSTM: actual vs. predicted supply.

Figure 14.

LSTM: supply residuals.

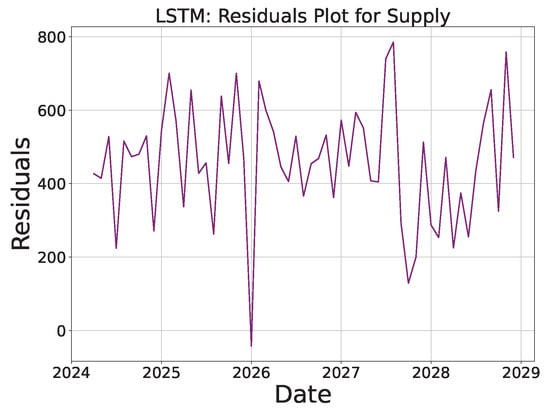

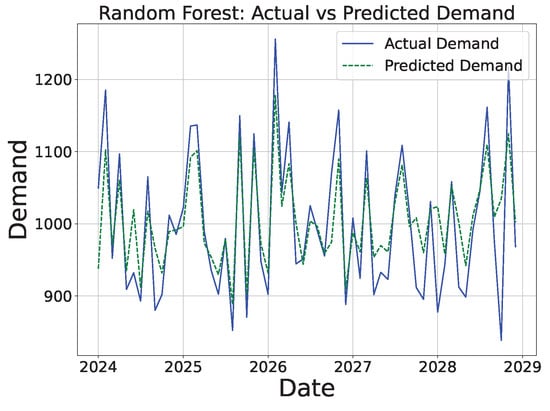

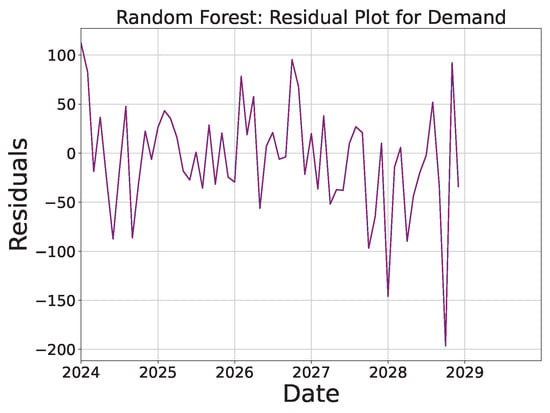

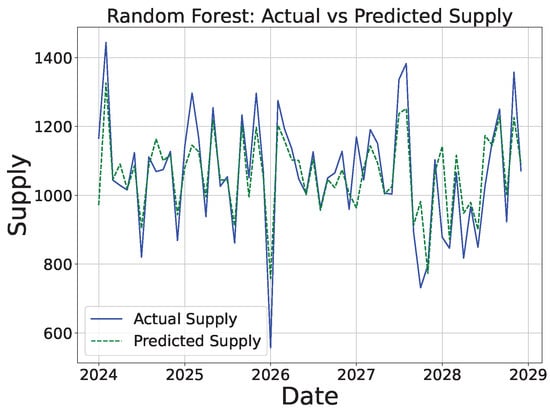

The random forest model, which leverages ensemble learning, outperformed LSTM in handling nonlinear relationships and complex interactions between variables. As shown in Figure 15, random forest achieved higher accuracy in demand prediction, although some deviations still occurred during periods of high variance. The residuals (Figure 16) highlight these deviations but show that random forest managed market fluctuations more effectively than LSTM. This can be attributed to the model’s inherent capability to reduce overfitting by averaging the predictions of many decision trees, which stabilizes predictions under volatile market conditions. Random forest’s relative robustness to multicollinearity, and its tolerance of missing data in some implementations, may also have contributed to its stronger performance on this multivariable dataset.

Figure 15.

RF: actual vs. predicted demand.

Figure 16.

RF: demand residuals.
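The variance-reduction argument can be sketched in a few lines: each tree is trained on a bootstrap sample using a random subset of features, and the forest averages their predictions. To keep the example short, the trees below are depth-1 stumps and the helper names are hypothetical; production random forests grow deeper trees and sample features at every split.

```python
import random


def fit_stump(X, y, features):
    """Depth-1 regression tree: the single split that minimizes squared error."""
    best = None
    for j in features:
        for thr in sorted(set(row[j] for row in X)):
            left = [t for row, t in zip(X, y) if row[j] <= thr]
            right = [t for row, t in zip(X, y) if row[j] > thr]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
            if best is None or sse < best[0]:
                best = (sse, j, thr, lm, rm)
    if best is None:                        # no valid split: predict the mean
        mean = sum(y) / len(y)
        return lambda row: mean
    _, j, thr, lm, rm = best
    return lambda row: lm if row[j] <= thr else rm


def fit_forest(X, y, n_trees=50, max_features=1, seed=0):
    """Bagged stumps with random feature subsets, averaged at prediction time."""
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]      # bootstrap sample
        feats = rng.sample(range(p), max_features)      # random feature subset
        trees.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    # Averaging many decorrelated trees is what reduces variance
    return lambda row: sum(tree(row) for tree in trees) / len(trees)
```

Because each tree sees a different resample, individual trees overfit in different directions, and their average is smoother than any single tree.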

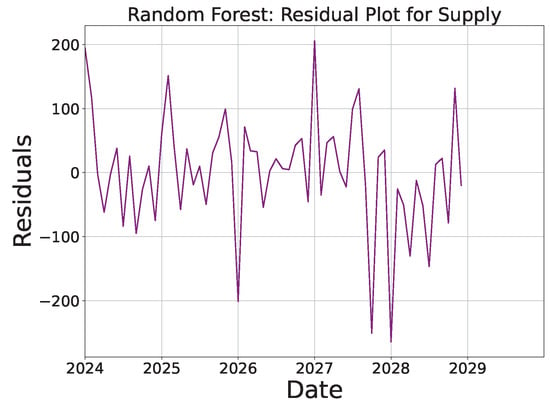

For supply forecasting (Figure 17), random forest performed robustly, closely following the actual supply trends with relatively low residual errors (Figure 18). This suggests that random forest, with its capacity to capture nonlinear dependencies, is more reliable than LSTM in a marketplace where supply-demand relationships are dynamic and multifaceted.

Figure 17.

RF: actual vs. predicted supply.

Figure 18.

RF: supply residuals.

Moreover, the model’s adaptability to complex data structures enables it to capture subtle interactions between predictors which may be overlooked by simpler models. Fine-tuning of hyperparameters such as the number of decision trees and tree depth can further enhance the model’s accuracy, particularly in scenarios where rapid shifts in supply-demand dynamics occur.

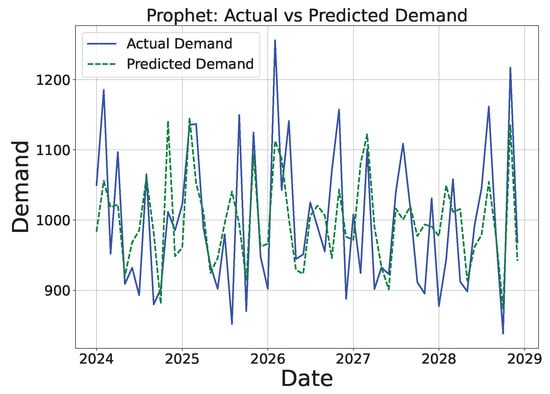

Prophet, which is designed specifically to capture long-term trends and seasonality, performed well when forecasting demand and supply in environments with periodic variations. As shown in Figure 19, the model captured the general demand trends, although short-term fluctuations were predicted less accurately. This limitation was particularly evident during abrupt market shifts, where Prophet’s emphasis on long-term seasonal patterns made it less responsive. The residuals (Figure 20) were smaller than those of LSTM and random forest, reflecting Prophet’s strength in handling seasonal components and its decomposition of time series into trend, seasonal, and holiday effects.

Figure 19.

Prophet: actual vs. predicted demand.

Figure 20.

Prophet: demand residuals.

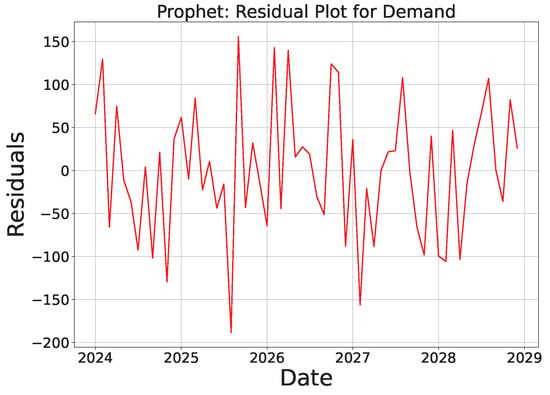

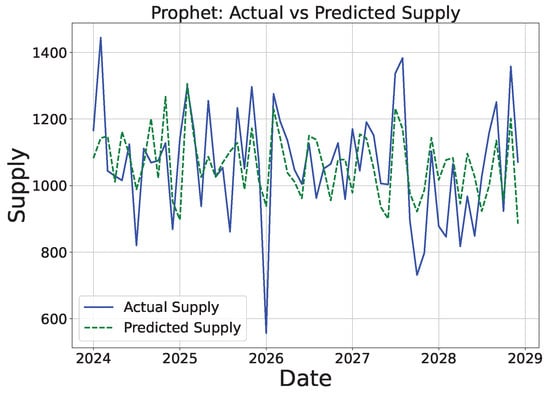

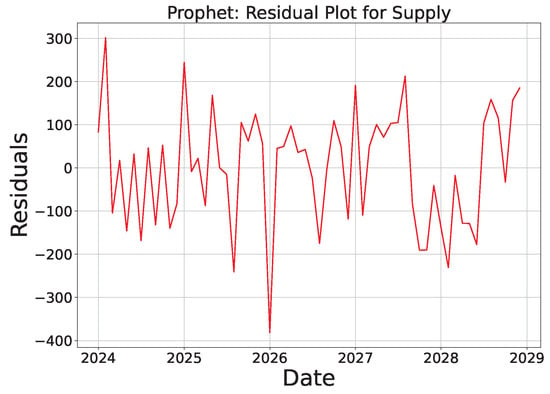

For supply forecasting (Figure 21), Prophet similarly tracked long-term trends effectively, though its performance diminished during rapid supply changes. The residuals (Figure 22) were relatively small, underscoring the model’s ability to manage periodic behavior with minimal error rates.

Figure 21.

Prophet: actual vs. predicted supply.

Figure 22.

Prophet: supply residuals.

However, its reduced accuracy during high-volatility periods highlights a potential weakness in its fixed seasonal structure. To improve Prophet’s performance, future efforts could focus on refining its ability to detect and adjust to changepoints, enhancing the model’s responsiveness to sudden shifts in supply-demand dynamics. Incorporating external regressors or applying more dynamic changepoint detection mechanisms could further improve the model’s flexibility in fast-evolving market environments.
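Prophet’s changepoints enter through a piecewise-linear trend in which the growth rate $k$ is adjusted by $\delta_j$ after each changepoint $s_j$, with offset corrections $\gamma_j = -s_j\delta_j$ that keep the trend continuous. The sketch below only evaluates that trend for given values and fits nothing; the function name is hypothetical. In Prophet itself, the $\delta_j$ receive a sparse Laplace prior whose scale is set by the `changepoint_prior_scale` parameter, so raising it permits larger rate changes and a more responsive trend.

```python
def piecewise_linear_trend(t, k, m, changepoints, deltas):
    """Prophet-style piecewise-linear trend g(t).

    k: base growth rate, m: base offset,
    changepoints: times s_j, deltas: rate adjustments delta_j.
    """
    rate, offset = k, m
    for s_j, d_j in zip(changepoints, deltas):
        if t >= s_j:                 # indicator a_j(t) = 1 after the changepoint
            rate += d_j
            offset += -s_j * d_j     # gamma_j = -s_j * delta_j keeps g continuous
    return rate * t + offset


# Growth of 1 per step that flattens out at t = 10 (illustrative values)
for t in (5, 10, 20):
    print(t, piecewise_linear_trend(t, k=1.0, m=0.0, changepoints=[10], deltas=[-1.0]))
```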

Potential for Model Improvement

The LSTM model’s performance can be further improved by optimizing hyperparameters such as the number of units in the hidden layers, the learning rate, and the sequence length. Additionally, employing attention mechanisms could help the model focus on critical time steps, potentially reducing errors during high-variance periods. Fine-tuning the number of training epochs and incorporating regularization techniques could also enhance the model’s generalizability in volatile market conditions.
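Sequence length determines how many past observations the LSTM sees for each prediction. A minimal sketch of how a series might be cut into fixed-length input windows and targets follows; the function name and the `horizon` argument are illustrative assumptions, not the study’s preprocessing code.

```python
def make_windows(series, seq_len, horizon=1):
    """Split a series into (input window, target) pairs.

    Each input holds seq_len consecutive values; the target is the value
    `horizon` steps after the end of the window.
    """
    X, y = [], []
    for start in range(len(series) - seq_len - horizon + 1):
        X.append(series[start:start + seq_len])
        y.append(series[start + seq_len + horizon - 1])
    return X, y


X, y = make_windows([1, 2, 3, 4, 5, 6], seq_len=3)
print(X, y)
```

Longer windows give the network more context per sample but yield fewer training pairs, which is one reason sequence length is worth tuning alongside the other hyperparameters.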

For the random forest model, adjusting the number of decision trees and the maximum tree depth could help mitigate overfitting and improve the predictive accuracy. Feature importance analysis may also provide deeper insights into which variables most influence demand and supply, guiding future model development. Implementing a hybrid model which combines random forest with other techniques, such as boosting methods, could further enhance performance.
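One model-agnostic way to carry out the feature importance analysis mentioned above is permutation importance: shuffle one feature at a time and measure how much the error rises. The sketch assumes a fitted model exposed as a hypothetical `predict(row)` function and uses MAE as the score; scikit-learn offers the same idea as `sklearn.inspection.permutation_importance`.

```python
import random


def mae(y_true, y_pred):
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MAE when each feature column is shuffled."""
    rng = random.Random(seed)
    base = mae(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            col = [row[j] for row in X]
            rng.shuffle(col)                     # break the link between feature j and y
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, col)]
            increases.append(mae(y, [predict(r) for r in X_perm]) - base)
        importances.append(sum(increases) / n_repeats)
    return importances


# Toy model that only uses feature 0 (illustrative data)
X = [[float(i), float(i % 3)] for i in range(20)]
y = [2.0 * row[0] for row in X]
print(permutation_importance(lambda r: 2.0 * r[0], X, y))
```

A feature the model ignores scores zero, while features the predictions depend on show a clear rise in error.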

Prophet’s performance could benefit from refining its seasonal components and adjusting the changepoint prior scale to increase the trend’s flexibility in handling sudden shifts. Introducing domain-specific knowledge into the model, such as more granular supply and demand factors, may also improve its accuracy in capturing short-term fluctuations. Future work could explore combining Prophet with machine learning models for further enhancement.

4.2.3. Analysis of Advanced Models (GBR and Neural Networks)

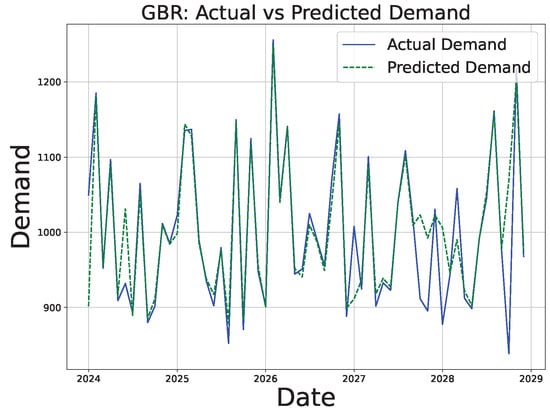

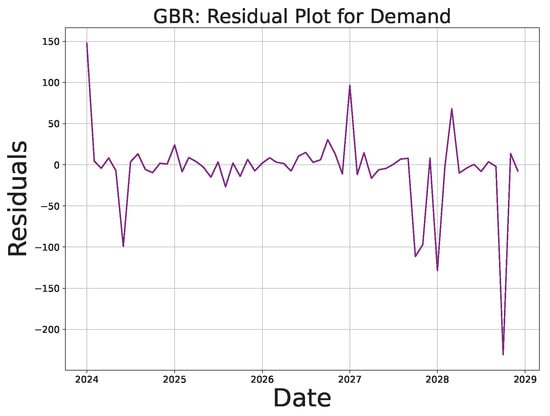

The advanced models, gradient boosting regressor (GBR) and neural networks (NNs), are designed to capture nonlinear dependencies and complex interactions within the data. Both were evaluated on their demand and supply forecasting capabilities, as visualized in the following figures.

For the GBR model, the demand forecast (Figure 23) showed strong alignment between the actual and predicted values, capturing both long-term trends and short-term fluctuations. This can be attributed to the gradient boosting technique, which adds weak learners (typically shallow decision trees) one at a time, each fitted to the remaining errors of the current ensemble, so that prediction errors are reduced iteratively. The residuals (Figure 24) revealed relatively small prediction errors, with minor deviations in high-demand periods where sudden market shifts introduced more variance. Despite these deviations, the model’s capacity to adjust to complex demand patterns underscores its strength in environments characterized by dynamic and nonlinear behavior.

Figure 23.

GBR: actual vs. predicted demand.

Figure 24.

GBR: demand residuals.
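The iterative error correction behind gradient boosting can be sketched for squared loss, where each new weak learner is fitted to the current residuals and its contribution is scaled by the learning rate. The example uses a single feature and depth-1 stumps with hypothetical helper names; scikit-learn’s `GradientBoostingRegressor` exposes the corresponding settings as `n_estimators`, `learning_rate`, and `max_depth`.

```python
def fit_stump(x, r):
    """Depth-1 regression tree on one feature, fitted to residuals r."""
    best = None
    for thr in sorted(set(x))[:-1]:            # every split leaves both sides non-empty
        left = [ri for xi, ri in zip(x, r) if xi <= thr]
        right = [ri for xi, ri in zip(x, r) if xi > thr]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lm, rm)
    if best is None:                             # only one distinct x value
        mean = sum(r) / len(r)
        return lambda xi: mean
    _, thr, lm, rm = best
    return lambda xi: lm if xi <= thr else rm


def fit_gbr(x, y, n_estimators=100, learning_rate=0.1):
    """Gradient boosting for squared loss with stump base learners."""
    f0 = sum(y) / len(y)                         # start from a constant prediction
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_estimators):
        # For squared loss the negative gradient is simply the residual
        resid = [yi - pi for yi, pi in zip(y, pred)]
        h = fit_stump(x, resid)
        stumps.append(h)
        # Each learner's contribution is shrunk by the learning rate
        pred = [pi + learning_rate * h(xi) for pi, xi in zip(pred, x)]
    return lambda xi: f0 + learning_rate * sum(stump(xi) for stump in stumps)


model = fit_gbr(list(range(10)), [0.0] * 5 + [10.0] * 5)
print(model(0), model(9))
```

A smaller `learning_rate` generally needs more estimators to reach the same training fit, which is why these two parameters are usually tuned together.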

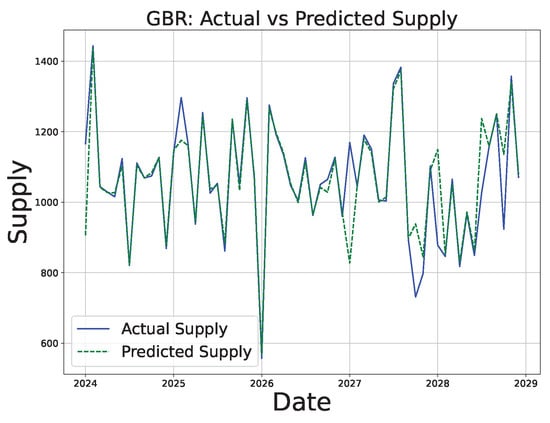

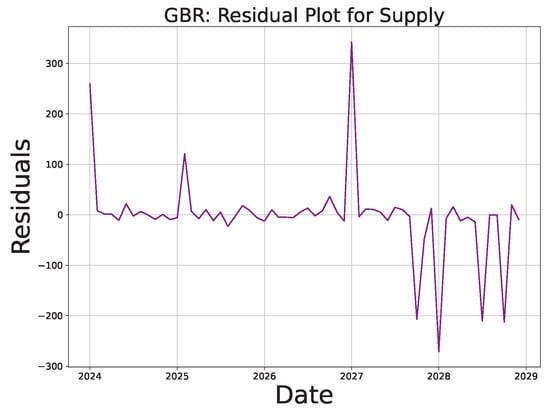

The GBR supply forecast (Figure 25) also closely followed the actual values, demonstrating the model’s proficiency in capturing supply dynamics. However, the residuals (Figure 26) indicate that there was room for improvement in handling supply volatility, particularly during periods of rapid changes in supply levels. This suggests that the model may benefit from further refinement in its loss function or the introduction of regularization techniques to mitigate overfitting in volatile periods. Additionally, fine-tuning hyperparameters such as the learning rate, the number of boosting iterations, and the maximum depth of the trees could improve its performance in high-volatility scenarios. Incorporating external factors, such as market shocks or other exogenous variables, could further enhance the GBR model’s robustness in supply-demand forecasting.

Figure 25.

GBR: actual vs. predicted supply.

Figure 26.

GBR: supply residuals.

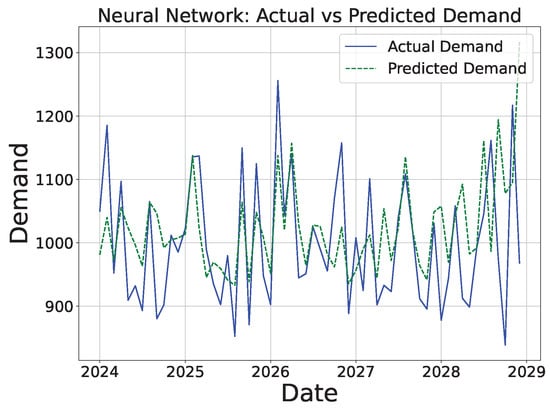

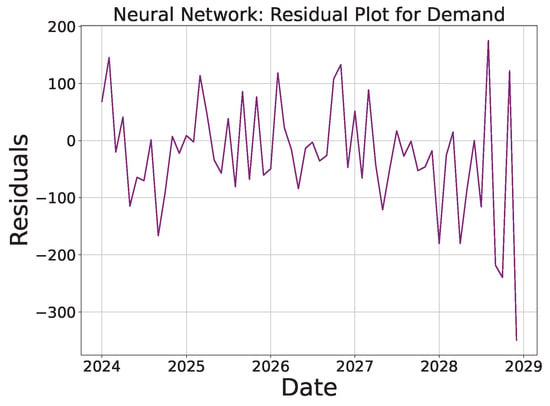

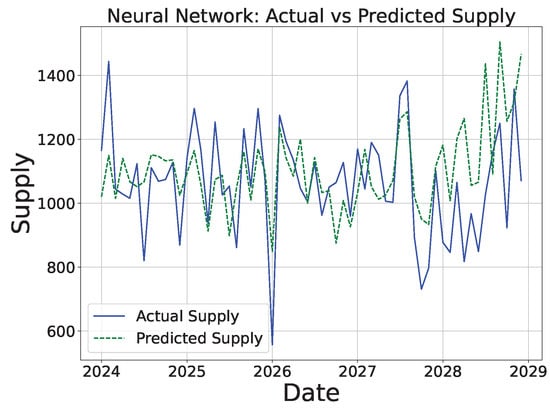

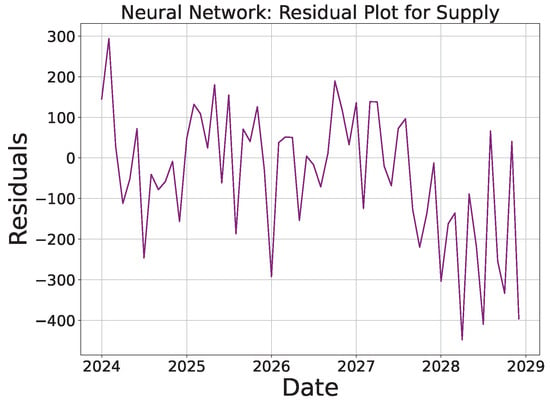

The neural network model performed well in demand forecasting, as shown in Figure 27, where the predicted values aligned well with actual demand over time. The residuals (Figure 28) reflected small prediction errors, although spikes appeared during volatile periods, indicating that the model could be fine-tuned further. For supply (Figure 29), the NN predictions followed the general course of actual supply, but the residuals (Figure 30) showed higher errors than for demand, suggesting that additional optimization is needed to make supply forecasting more robust.

Figure 27.

NN: actual vs. predicted demand.

Figure 28.

NN: demand residuals.

Figure 29.

NN: actual vs. predicted supply.

Figure 30.

NN: supply residuals.

Potential for Model Optimization

Both the GBR and neural network models exhibited strong performance in forecasting demand and supply. However, further improvements can be achieved by optimizing key aspects of each model.

For the GBR model, tuning the number of boosting iterations, the learning rate ($\eta$), and the depth of the decision trees can help the model better capture complex interactions between variables. Regularization can also help prevent overfitting, particularly where supply and demand are highly variable.

In the neural network model, improvements can be made by adjusting the number of hidden layers and the number of neurons in each layer, as well as by fine-tuning the learning rate and activation functions (f). The use of advanced techniques such as dropout or batch normalization can further improve the model’s generalization capabilities, especially in handling volatile supply-demand dynamics.
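As an illustration of one of these techniques, the sketch below implements inverted dropout, which zeroes each activation with probability `rate` during training and rescales the survivors so that expected activations match those at inference time. It is a plain-Python stand-in for the dropout layers provided by deep learning frameworks, with a hypothetical function name.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout over a list of activations.

    During training each unit is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate); at inference the input is unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    rng = rng if rng is not None else random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, rng=random.Random(0)))
```

Because a different random subset of units is silenced at each training step, the network cannot rely on any single unit, which tends to improve generalization.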

4.2.4. Analysis of Pricing Optimization Models (Q-Learning and Deep Q-Learning)

In this study, Q-learning and deep Q-learning (DQN) were employed to optimize pricing within the digital marketplace, which is crucial for balancing supply-demand dynamics and maintaining market equilibrium. Both models aimed to learn pricing strategies that adapt to the observed market conditions.

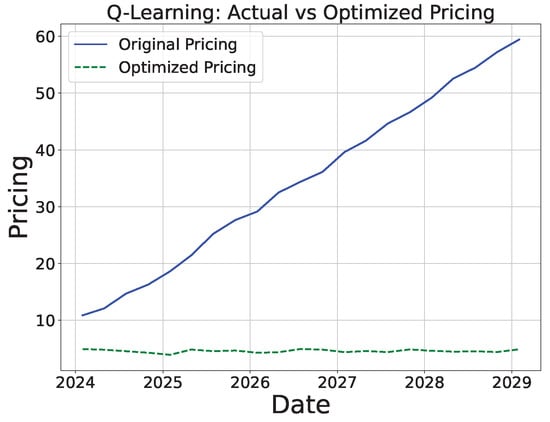

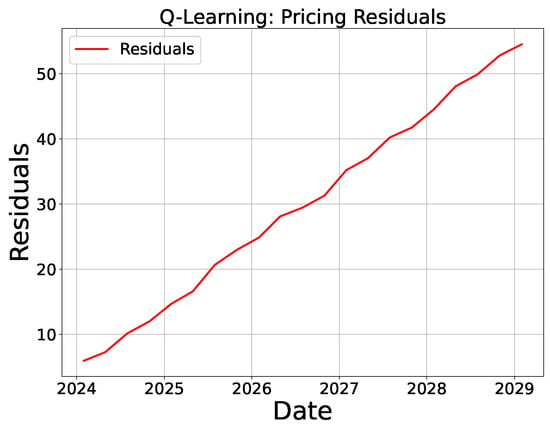

Q-Learning Pricing Model

Q-learning was utilized to dynamically adjust pricing based on the evolving state of the marketplace. As can be seen in Figure 31, the optimized pricing curve appears flat and stable, representing a minimalistic adjustment mechanism. While the original pricing fluctuated, the optimized pricing demonstrated less variation, emphasizing a conservative approach to reducing volatility. The residuals in Figure 32 show smaller and more controlled deviations, indicating the model’s focus on stabilizing pricing.

Figure 31.

QL: actual vs. optimized pricing.

Figure 32.

QL: pricing residuals.
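The tabular Q-learning update, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$, can be sketched on a deliberately simple toy market. Everything about the environment is a hypothetical assumption for illustration and not the study’s state, action, or reward design: eleven discrete price levels, demand $10 - p$, supply $p$, actions that lower, hold, or raise the price by one level, and a reward that penalizes both supply-demand imbalance and price changes. The price-change penalty is included to mirror the stabilizing behavior described for Figure 31.

```python
import random

PRICES = list(range(11))   # hypothetical discrete price levels 0..10
ACTIONS = (-1, 0, 1)       # lower, hold, or raise the price by one level


def step(p, a):
    """Toy market (illustrative only): demand = 10 - price, supply = price."""
    p_next = min(max(p + a, PRICES[0]), PRICES[-1])
    imbalance = abs((10 - p_next) - p_next)
    reward = -imbalance - 0.5 * abs(a)   # penalize imbalance and price changes
    return p_next, reward


def train_q(episodes=2000, steps=20, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in PRICES for a in ACTIONS}
    for _ in range(episodes):
        s = rng.choice(PRICES)
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)                          # explore
            else:
                a = max(ACTIONS, key=lambda act: Q[(s, act)])    # exploit
            s_next, r = step(s, a)
            best_next = max(Q[(s_next, b)] for b in ACTIONS)
            # Move Q(s, a) toward the TD target r + gamma * max_a' Q(s', a')
            Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])
            s = s_next
    return Q


def greedy_policy(Q):
    """Best learned price adjustment for each price level."""
    return {s: max(ACTIONS, key=lambda act: Q[(s, act)]) for s in PRICES}


print(greedy_policy(train_q()))
```

In this toy setting the learned greedy policy raises the price when demand exceeds supply, lowers it in the opposite case, and holds it at the equilibrium level, where holding costs nothing.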

Deep Q-Learning (DQN) Pricing Model

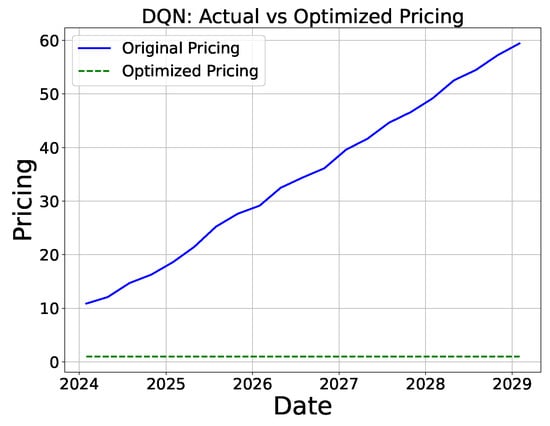

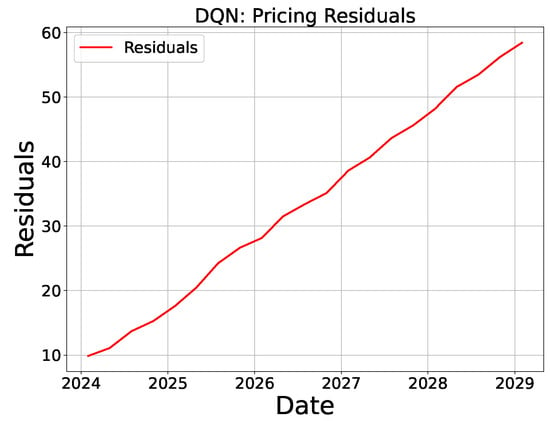

Deep Q-learning was applied to further enhance pricing optimization by using a neural network to approximate the Q-values. Figure 33 shows a pattern similar to that of Q-learning, with a stable optimized pricing curve that minimizes deviations from the original pricing. The residuals in Figure 34 remain small, indicating that the DQN model maintains pricing consistency while avoiding large fluctuations.

Figure 33.

DQN: actual vs. optimized pricing.

Figure 34.

DQN: pricing residuals.
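Beyond replacing the Q-table with a neural network, DQN typically relies on two supporting components: an experience replay buffer and a separate target network used to compute the regression targets $y = r + \gamma \max_{a'} Q_{\text{target}}(s', a')$. The sketch below shows these pieces in plain Python, with the networks abstracted as hypothetical callables that return one Q-value per action. The gradient step that minimizes the loss would be performed by a deep learning framework and is omitted.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)   # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive transitions
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_targets(batch, target_q, gamma):
    """Regression targets computed with the (periodically copied) target network."""
    targets = []
    for s, a, r, s_next, done in batch:
        if done:
            targets.append(r)                  # no bootstrapping past a terminal state
        else:
            targets.append(r + gamma * max(target_q(s_next)))
    return targets


def mse_loss(online_q, batch, targets):
    """Mean squared TD error between online Q(s, a) and the fixed targets."""
    errs = [(online_q(s)[a] - y) ** 2 for (s, a, *_), y in zip(batch, targets)]
    return sum(errs) / len(errs)
```

Keeping the target network fixed between periodic copies prevents the regression targets from shifting at every update, which is a common source of instability when Q-values are approximated by a network.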

Potential for Model Improvement

Both Q-learning and deep Q-learning can be refined further by adjusting key hyperparameters such as the learning rate ($\alpha$) and the discount factor ($\gamma$) to improve convergence and pricing accuracy. For the DQN, the architecture of the neural network (e.g., the number of layers and neurons) could be tuned to better capture complex pricing patterns. These improvements could enhance the models’ responsiveness to market dynamics and further stabilize pricing.

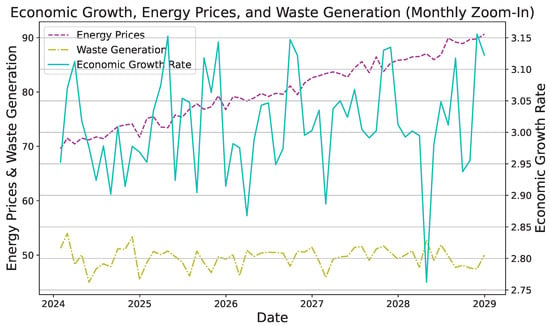

4.2.5. Analysis of Additional Variables (Economic Growth Rate, Energy Prices, and Waste Generation)

In addition to the core variables of demand, supply, and pricing, the introduction of the economic growth rate, energy prices, and waste generation provided further insights into the system dynamics. These variables, particularly energy prices, played a significant role in influencing market pricing. Energy prices exhibited a strong correlation with pricing (0.71), emphasizing the importance of accounting for real-time energy cost fluctuations in resource allocation and price optimization strategies.
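The text does not state which correlation coefficient produced the value of 0.71. Assuming it is Pearson’s $r$, it can be computed as sketched below (hypothetical function name); Python 3.10 and later also provide `statistics.correlation` for the same calculation.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


print(pearson([1.0, 2.0, 3.0, 4.0], [1.5, 1.9, 3.2, 3.9]))
```

Pearson’s $r$ only measures linear association, so a strong value indicates co-movement between energy prices and market prices rather than a causal effect.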

Model Selection Rationale

We chose gradient boosting regressor (GBR), neural networks, and linear regression for this analysis due to their complementary strengths in handling different types of relationships in the data. GBR was selected for its ability to capture complex interactions between variables and its robust performance in nonlinear systems. Neural networks were chosen for their flexibility in learning intricate, nonlinear relationships, particularly when additional variables are introduced. Finally, linear regression was included as a baseline due to its simplicity in modeling linear relationships, allowing for comparison with the more sophisticated models.

Economic Growth and Energy Prices

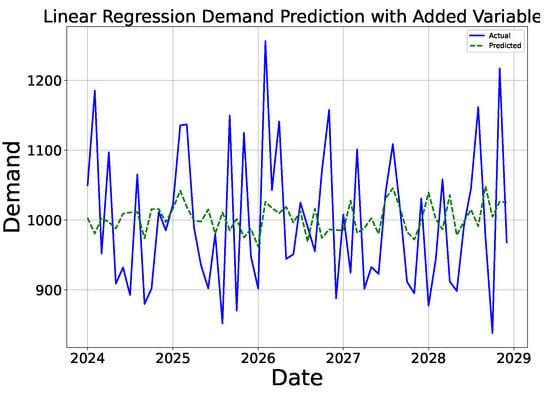

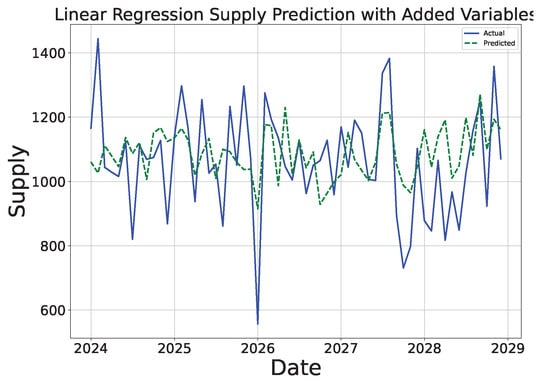

The results from the GBR, linear regression, and neural network models (Figure 35, Figure 36, Figure 37, Figure 38, Figure 39 and Figure 40) indicate that the economic growth rate had minimal direct impact on short-term demand and supply predictions, whereas energy prices played a more significant role in pricing dynamics. As expected, the GBR model performed better in terms of $R^2$ and the error metrics (MAE, MSE, and RMSE) than the neural networks and linear regression.

Figure 35.

GBR: demand prediction with added variables.

Figure 36.

GBR: supply prediction with added variables.

Figure 37.

LR: demand prediction with added variables.

Figure 38.

LR: supply prediction with added variables.

Figure 39.

NNs: demand prediction with added variables.

Figure 40.

NNs: supply prediction with added variables.
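For reference, the metrics used in these comparisons can be computed as follows, with $R^2 = 1 - SS_{res}/SS_{tot}$. This is a minimal sketch with a hypothetical function name, not the study’s evaluation code.

```python
import math


def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE and R^2 for paired observations and predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return {
        "MAE": mae,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "R2": 1.0 - ss_res / ss_tot,   # 1 - SS_res / SS_tot
    }


print(regression_metrics([1, 2, 3, 4], [1, 2, 3, 5]))
```

An $R^2$ close to zero indicates that a model explains little more variance than always predicting the mean, while MAE and RMSE are expressed in the units of the target, with RMSE weighting large errors more heavily.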

Waste Generation

The influence of waste generation on the short-term demand and supply forecasts was negligible, as reflected by the weak correlations and high residual errors. This suggests that waste generation may have a more pronounced impact on long-term planning and sustainability efforts rather than short-term resource allocation.

Integrating the economic growth, energy price, and waste generation variables required further hyperparameter adjustments to account for each model’s sensitivity to these factors. For instance, GBR and the neural networks were reoptimized with the additional variables, adjusting the learning rate and (for GBR) the number of estimators to balance predictive accuracy. This further reinforced the models’ capability to respond dynamically to evolving market conditions and environmental factors within CE systems.

5. Discussion

5.1. Practical Implications