Abstract

In the realm of sustainable IoT and AI applications for the well-being of elderly individuals living alone in their homes, falls can have severe consequences. These consequences include post-fall complications and extended periods of immobility on the floor. Researchers have been exploring various techniques for fall detection over the past decade, and this study introduces an innovative Elder Fall Detection system that harnesses IoT and AI technologies. In our IoT configuration, we integrate RFID tags into smart carpets along with RFID readers to identify falls among the elderly population. To simulate fall events, we conducted experiments with 13 participants. In these experiments, RFID tags embedded in the smart carpets transmit signals to RFID readers, effectively distinguishing signals from fall events and regular movements. When a fall is detected, the system activates a green signal, triggers an alarm, and sends notifications to alert caregivers or family members. To enhance the precision of fall detection, we employed various machine and deep learning classifiers, including Random Forest (RF), XGBoost, Gated Recurrent Units (GRUs), Logistic Regression (LGR), and K-Nearest Neighbors (KNN), to analyze the collected dataset. Results show that the Random Forest algorithm achieves a 43% accuracy rate, GRUs exhibit a 44% accuracy rate, and XGBoost achieves a 33% accuracy rate. Remarkably, KNN outperforms the others with an exceptional accuracy rate of 99%. This research aims to propose an efficient fall detection framework that significantly contributes to enhancing the safety and overall well-being of independently living elderly individuals. It aligns with the principles of sustainability in IoT and AI applications.

1. Introduction

An elderly fall refers to a situation where an older adult loses their balance and descends to the floor or another surface. These falls pose significant challenges due to the potential for severe injuries and health complications, and they are often attributed to factors such as diminished mobility, weakened muscles, balance issues, vision problems, medication side effects, and environmental hazards. In 2019, there were 703 million individuals in the world aged 65 or older, a number expected to roughly double to 1.5 billion by 2050 []. Falls pose a significant threat to older adults’ health, with one in four experiencing a fall each year, according to the CDC’s 2022 data. The global market for IoT-based fall detection systems is expected to reach USD 4.5 billion by 2025, demonstrating the growing demand for these technologies. AI-powered fall detection systems have been shown to have a sensitivity of up to 98% and a specificity of up to 99%, indicating their accuracy in identifying falls. The use of IoT and AI for fall detection can reduce the risk of falls by up to 25%, potentially preventing injuries and hospitalizations. Considering that the average cost of a fall-related injury for an older adult is USD 30,000, the use of IoT and AI for fall detection can also save healthcare systems billions of dollars annually. Wearable sensors, smart home devices, and AI-powered cameras can collect data on movement patterns and environmental factors to identify fall risks and detect falls in real time. These technologies can also provide interventions to prevent falls and support independent living in other ways, such as medication reminders and health monitoring. By utilizing IoT and AI, we can enhance elderly care and make independent living more sustainable. Given the susceptibility of elderly individuals to injuries and their potential impact on overall well-being, preventing and effectively addressing elderly falls becomes a crucial focus in healthcare and senior care.

In the context of a global demographic shift toward an aging population, the issue of falls among elderly individuals has gained increased significance. With the elderly demographic projected to double by 2050, accounting for over 16% of the world’s population [], it is crucial to thoroughly understand and implement proactive measures to address the impact of falls within this segment. The World Health Organization (WHO) has identified falls as the second leading cause of unintentional injury deaths worldwide, often resulting in fractures, sprains, and head injuries that require extensive medical care []. This introduction seeks to explore the multifaceted nature of elderly falls, including their causes, consequences, and the need for comprehensive preventive strategies. Elderly falls often result from a complex interplay between intrinsic factors associated with aging and external environmental conditions []. Factors such as muscle and bone weakening, reduced flexibility, and changes in sensory perception contribute to compromised balance and mobility among seniors. Chronic conditions, such as osteoporosis, arthritis, and neurological disorders, further elevate the risk of falls []. Moreover, the psychological impact on older adults is profound, with a fear of falling leading to reduced mobility, social isolation, and a diminished quality of life. The financial burden on healthcare systems is substantial, especially with the anticipated increase in healthcare costs associated with falls in an aging population. Environmental hazards, including uneven surfaces and inadequate lighting, exacerbate the likelihood of falls. Recognizing this intricate web of risk factors is essential for the development of effective fall prevention measures [].

Researchers, healthcare experts, and technology developers have made significant contributions to understanding and mitigating elderly falls. AI algorithms are refining sensor data analysis, leading to better fall detection accuracy, with some systems achieving up to 98% sensitivity and 99% specificity. Integration with smart home devices is creating comprehensive fall prevention and response solutions. Researchers have also harnessed sensor-based technologies, such as wall-mounted PIR sensors and RFID tags, to address this issue []. In the realm of assistive living, deep learning (DL) and computer vision techniques have been employed []. While monitoring systems such as cameras were initially used to tackle this problem, they proved unsuitable due to privacy concerns. Researchers have conducted studies to identify risk factors, including impaired balance, muscle weakness, and environmental hazards, contributing to falls among older adults [,]. These findings have paved the way for targeted exercise programs, fall prevention interventions, and home modifications to enhance safety [,]. Moreover, wearable devices and sensor technologies have been designed to detect falls and promptly alert caregivers or medical professionals, resulting in quicker response times and reduced injury severity. Collaborative efforts aim to enhance the quality of life for seniors and alleviate the financial and healthcare burdens associated with fall-related injuries. However, it is essential to acknowledge the challenges faced by the elderly when it comes to wearing devices continuously []. The implications of elderly falls extend well beyond physical injuries, encompassing emotional, psychological, and societal dimensions. By integrating evidence-based interventions and fostering a culture of awareness and collaboration, we can usher in an era where older adults can age gracefully and independently, secure in the knowledge that their well-being is safeguarded [].
The increasing prevalence of falls among independently living elderly individuals underscores the need for proactive solutions. These falls not only pose immediate risks but also compromise their overall well-being and autonomy. With the global aging trend on the rise, there is an urgent demand for innovative approaches to address this challenge. Wearable solutions, such as an airbag or a smart gadget, can be bothersome to wear all the time, and people often forget to put them on, especially as they get older. Another option is using cameras to watch for falls among the elderly, which has the advantage of covering a large area. However, constant camera monitoring can make older people feel that their privacy is being invaded, and they may not feel at ease. In our work, we use RFID tags embedded in smart carpets, together with machine learning and deep learning classifiers, to detect and predict when elderly people might fall.

In this study, we used RFID technology in smart carpets to improve fall detection and help older people live safely on their own. RFID uses electromagnetic fields to automatically find and track special tags that are part of these smart carpets. Preventing elderly falls is important, and we want to do that by using the Internet of Things (IoT) and Artificial Intelligence (AI) to create a system that can quickly spot falls and fit into people’s daily routines. Our research draws inspiration from compelling statistics and research findings, envisioning a future where technology empowers the elderly to maintain their independence with enhanced safety. The proposed solution for precise and non-intrusive elderly fall detection has a multitude of potential applications, including home care for seniors, assisted living facilities, hospitals and healthcare facilities, telehealth, remote monitoring, research and data analysis, elderly care services, emergency response services, smart home integration, fall prevention programs, and aging in place initiatives. Its versatility ensures the safety and well-being of elderly individuals across various settings, enhancing the quality of their lives and providing peace of mind for caregivers and healthcare professionals.

The advantages of this work include its remarkable precision in fall detection, non-intrusive design, real-time alerts, and versatility in various applications. It offers a comprehensive solution to enhance the safety and well-being of elderly individuals. However, potential disadvantages encompass reliance on technology, possible interference issues, and user acceptance challenges. Balancing these aspects is essential to ensure the technology’s effective and user-friendly deployment in diverse settings.

1.1. Motivation

Every year, there are approximately 36 million reported cases of older individuals experiencing falls, resulting in over 32,000 deaths. Additionally, more than 3 million older individuals seek medical attention in emergency facilities for fall-related injuries []. The increasing frequency of fatal incidents linked to elderly falls highlights the pressing need for reliable and unintrusive fall detection solutions. The concerning statistics of deaths resulting from such incidents underscore the critical importance of advancing our methods in this field. While previous attempts have been made to utilize monitoring systems, such as cameras [] and wearable gadgets [], these often encroach upon the privacy of elderly individuals, and the challenge of elderly individuals wearing these gadgets continuously hinders the acceptance and effectiveness of these solutions. This research article aims to present an approach that leverages RFID technology for detecting elderly falls and utilizes AI to accurately predict these events. By incorporating RFID sensors into smart carpets and employing AI, we aim to overcome the limitations of existing methods and ensure unobtrusive yet precise fall detection. Our investigation into the effectiveness of RFID technology in this context is motivated by its potential to offer a cost-effective yet highly dependable means of safeguarding our elderly population from fall-related hazards. Through this study, we seek to bridge the gap in fall detection technology and contribute to a safer and more dignified life for elderly individuals.

1.2. Contribution

Our research aims to make a significant contribution to the pressing challenge of elderly fall detection through the utilization of RFID and IoT technologies. Recognizing the privacy limitations often associated with vision-based camera systems, we propose an alternative solution that offers both accuracy and discretion. By harnessing RFID sensors, our goal is to develop a sophisticated fall detection system that operates without invasive surveillance.

The integration of the latest deep learning models with the extensive dataset collected from our efficient RFID-based sensors represents a novel approach with the potential to enhance the precision and reliability of fall detection. Our dataset, thoughtfully designed to encompass two distinct parameters—falling and walking—provides a comprehensive representation of real-world scenarios, thereby strengthening the robustness of our proposed system. Through our research, we aspire to pioneer an innovative, unobtrusive, and technologically advanced approach to elderly fall detection, ultimately contributing to the well-being and safety of our aging population.

The contributions of our proposed system are listed below.

- We are utilizing sensor-based technologies, such as RFID tags and readers, which do not impact the privacy of the elderly. Unlike previous vision-based solutions that involved constant monitoring and could encroach upon the privacy of the elderly, making them uncomfortable in their daily lives, our approach is designed to be unobtrusive. Moreover, these vision-based solutions often come with higher costs.

- In our solution, the elderly are not required to wear any device or gadget. We have integrated RFID tags into the smart carpet, allowing the elderly to continue their daily lives without the need to wear any gadgets. Requiring individuals to wear devices at all times can be both frustrating and contrary to human nature. Additionally, as people age, it becomes progressively more challenging for the elderly to consistently remember to wear such a device.

- To improve the accuracy of elderly fall detection, we have employed machine learning and deep learning classifiers. We collected a dataset from 13 participants who voluntarily performed both falling and walking activities.

- To address the problems associated with elderly falls, including extended periods of lying unattended after a fall, this study introduces IoT-based methods for detecting fall events using RFID tags and RFID readers. When a fall event is detected, an alarm is generated to notify caregivers.

Our proposal introduces an innovative fall detection algorithm based on both Machine Learning and deep learning techniques. Importantly, this algorithm demonstrates scalability concerning the monitored areas where RFID tags are integrated into smart carpets. Its scalability is attributed to its focus on a specific region of interest and the associated tag observations within that area. Furthermore, this novel approach substantially reduces the computational workload for fall detection compared to our previous method. Our evaluation covers both fall detection and walking patterns. Assessing walking patterns is of significance as the proposed fall detection approach is anticipated to encounter walking activities more frequently than falls in real-world deployments.

1.3. Paper Organization

The rest of the paper is organized as follows: Section 2 describes related work, Section 3 presents the proposed method, Section 4 provides implementation details, Section 5 describes the assessment of the ML and DL classifiers, Section 6 presents the experimental findings, and Section 7 concludes the paper and outlines further research.

2. Related Work

For many years, researchers have been working to improve how we detect falls in older people, as falls can have serious consequences for them. The methods have evolved from using cameras to sensors and advanced computer techniques. Wearable healthcare devices may not always be accurate, and this depends on various factors, such as the device itself and the sensors it uses. We need to test these fall detection technologies in real-world settings to see how well they work. Older people’s opinions are crucial in making effective fall detection systems. They value technology that is easy to use, respects their privacy, works quickly, looks good, and provides clear instructions.

In this section, we discuss in detail prior research on fall detection using sensor-based and vision-based techniques. Reference [] thoroughly examines all aspects of using wearable healthcare devices. Vision-based sensors have emerged as a pivotal technology in the field of elderly fall detection, utilizing cameras to capture and analyze the movements of aging individuals. Despite their potential, vision-based sensors face notable challenges and considerations. One significant concern with vision-based monitoring is privacy infringement. Continuous surveillance within personal living spaces can impinge upon the privacy of elderly individuals, leading to ethical dilemmas concerning the delicate balance between safety and the autonomy of seniors []. Maintaining the dignity of those under observation while ensuring effective fall detection presents a complex challenge. Moreover, the effectiveness of vision-based sensors can be compromised by environmental factors. Low lighting conditions and obscured viewpoints reduce the reliability of these systems, constraining their applicability and potentially resulting in missed fall events or erroneous alarms []. Despite these challenges, vision-based sensors have laid the groundwork for subsequent advancements in fall detection technology. Their early exploration underscored the necessity for unobtrusive yet accurate fall detection solutions that prioritize the privacy and well-being of elderly individuals. In response to these challenges, researchers have explored alternative technologies, such as sensor-based solutions and the integration of Radio-Frequency Identification (RFID) technology, to address the limitations and concerns associated with vision-based monitoring [,]. Elderly falls present a substantial challenge to healthcare systems worldwide, often leading to severe injuries and increased morbidity.
To address this issue, researchers and healthcare practitioners have explored innovative approaches, including pressure sensors and vital sign monitoring. These technologies utilize sensors and wearable devices to monitor changes in pressure distribution and vital signs, providing valuable insights into a person’s posture, movement patterns, and physiological indicators. By continuously monitoring these parameters, healthcare providers can detect subtle changes that might indicate an increased risk of falls among the elderly. This real-time data can also assist in the development of personalized fall prevention strategies and timely interventions, ultimately contributing to enhanced elderly care and an improved quality of life [,,]. In reference [], the authors report that sensors achieve an accuracy of approximately 98.19%, a sensitivity of 97.50%, and a specificity of 98.63%. However, it is important to acknowledge that sensors can be influenced by environmental conditions such as lighting, temperature, and humidity, which may affect their performance and accuracy.

Sensor-based solutions have emerged as a promising avenue for detecting elderly falls, leveraging a variety of sensors, such as accelerometers, gyroscopes, and pressure sensors. These technologies are designed to provide accurate and unobtrusive fall detection while addressing the limitations associated with traditional vision-based approaches. Accelerometers, capable of measuring acceleration forces, are widely utilized in wearable devices to monitor changes in body position and acceleration patterns. Gyroscopes complement this functionality by detecting rotational movements, enhancing the capability to recognize falls by capturing the angular orientation of the body. In references [,], it is noted that pressure sensors integrated into the environment can further contribute by detecting changes in pressure distribution, revealing abrupt shifts indicative of a fall event. Sensor-based systems effectively address privacy concerns associated with vision-based solutions by focusing on unobtrusive data collection from body-worn devices or strategically positioned sensors in living spaces. These systems continuously analyze movement patterns and postures to distinguish between normal activities and fall-related events, thereby reducing false positives []. However, the challenge lies in developing algorithms capable of accurately distinguishing between genuine falls and common activities such as sitting or bending. Machine learning techniques, including Support Vector Machines (SVM) and Neural Networks (NN), have been employed to process the sensor data and identify fall patterns [,]. These methods enhance the systems’ ability to accurately detect falls while minimizing false alarms [,].

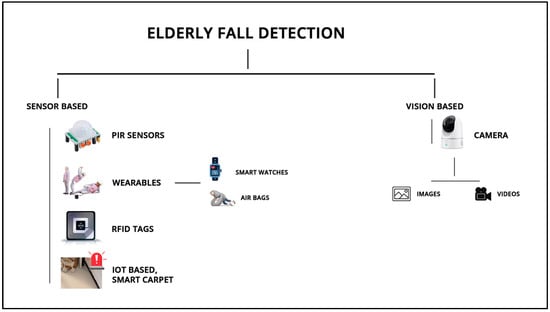

Audio-based methods for the detection of elderly falls involve utilizing sound or audio signals to identify fall events. These methods rely on analyzing audio patterns associated with falls, such as impact sounds or changes in ambient noise. In reference [], the authors state that an audio transformer model detects falls from environmental sounds with an accuracy of 0.8673. In 2020, Nooruddin et al. [] introduced a system based on a client–server architecture, adaptable to various IoT devices with internet connectivity, comprising four modules. Their linear classifier model achieved an impressive 99.7% accuracy in fall detection. In the same year, Clemente et al. [] presented a smart system designed for fall detection based solely on floor vibration data resulting from falls. This system goes beyond fall detection by incorporating person identification through the vibration produced by footsteps, thus providing information about the fallen individual. The system successfully detects fall events with an acceptance rate of 95.14%. The integration of Radio-Frequency Identification (RFID) technology, IoT, and Artificial Intelligence (AI) has opened new horizons in the realm of detecting elderly falls. This innovative approach leverages RFID-enabled sensors to discreetly monitor movements and enable real-time fall detection, addressing privacy concerns and advancing the accuracy of detection. RFID technology, known for its ability to wirelessly identify and track objects using radio waves, is applied to fall detection by embedding RFID tags in wearable devices or strategic locations within living spaces. These tags communicate with RFID readers, detecting abrupt changes in position or movements that could signal a fall event. The integration of IoT facilitates seamless data transmission from these RFID sensors to centralized systems, enabling caregivers or medical professionals to receive instant alerts and respond swiftly to fall incidents [,]. 
One of the primary advantages of RFID and IoT integration lies in their unobtrusive nature. Unlike camera-based solutions, RFID-enabled sensors operate without invading personal privacy, making them particularly suitable for sensitive environments such as homes or care facilities. Furthermore, this technology operates in real-time, reducing the response time to fall incidents and minimizing the potential consequences of delayed assistance. However, challenges remain in optimizing the accuracy of fall detection algorithms for RFID-based systems. Ensuring that the technology can distinguish between genuine falls and daily activities such as sitting or lying down requires advanced signal processing and machine learning techniques. In references [,,,,], by harnessing these methods, researchers have been able to enhance the sensitivity and specificity of fall detection, minimizing false alarms and maximizing the system’s reliability. The existing approaches that are used for elderly fall detection are shown in Figure 1.

Figure 1. Taxonomy of existing approaches.

Machine learning and deep learning techniques have revolutionized the field of elderly fall detection, providing advanced capabilities in accurately identifying fall incidents, reducing false alarms, and enhancing the overall reliability of fall detection systems. These methods harness the power of data-driven approaches to improve sensitivity, specificity, and real-time responsiveness, significantly benefiting the safety and well-being of seniors. Traditional machine learning algorithms, such as Support Vector Machines (SVMs), Random Forest (RF), and K-Nearest Neighbors (KNN), have been employed to process data from various sensors, including accelerometers, gyroscopes, and pressure sensors. The algorithms discussed in references [,] analyze patterns of movement and positional changes, effectively distinguishing between normal activities and fall events. By selecting appropriate features and training the model on labeled fall data, these methods yield promising results in detecting falls with acceptable accuracy. Deep learning, a subset of machine learning, has emerged as a potent tool in this domain, particularly with the application of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). CNNs excel in extracting spatial features from image data, which is valuable for fall detection using camera-based systems. Meanwhile, RNNs excel in processing sequential data, making them suitable for time-series data from wearable sensors, enabling a more holistic understanding of movement patterns [,]. Transfer learning, a technique frequently used in deep learning, leverages pre-trained models on large datasets for accurate fall detection even with limited labeled data. This approach, as demonstrated by the author in reference [], proves especially beneficial in scenarios where collecting a substantial amount of fall-related data is challenging. 
Despite these advancements, challenges exist in optimizing the performance of machine learning (ML) and deep learning (DL) models for fall detection. Ensuring the generalization of models across diverse scenarios, addressing class imbalance, and minimizing false positives remain active areas of research [,,,,]. Additionally, the interpretability of deep learning models poses a concern, as understanding the rationale behind their decisions is crucial, particularly in medical and healthcare applications [,,]. Fall detection algorithms used in research papers on elderly falls can be broadly categorized into two main types: threshold-based algorithms and data-driven approaches. Threshold-based algorithms [,,,] employ a predefined threshold to determine whether a fall has occurred. For instance, an algorithm might be configured to detect a fall if the acceleration sensor data exceeds a certain value. Threshold-based algorithms are straightforward to implement and require minimal computation, but they can be inaccurate in noisy environments or when the elderly person’s movements do not align with the characteristics of a fall. Data-driven algorithms [,,] utilize machine learning or deep learning techniques to learn the patterns of human movement associated with falls. Data-driven algorithms can be more accurate than threshold-based algorithms but require more computation and can be more challenging to implement. ML and DL algorithms, such as Support Vector Machines (SVMs) [,], Random Forests [], Recurrent Neural Networks (RNNs) [], and Convolutional Neural Networks (CNNs) [], are used for fall detection with wearable sensors data and image data, respectively.
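The threshold-based idea described above can be illustrated with a minimal sketch. It assumes three-axis accelerometer samples expressed in g and a hypothetical threshold of 2.5 g; the function names and the threshold value are illustrative assumptions, not taken from any of the cited works.

```python
import math


def acceleration_magnitude(ax, ay, az):
    """Euclidean norm of one three-axis accelerometer sample (in g)."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def detect_fall_threshold(samples, threshold_g=2.5):
    """Return the index of the first sample whose magnitude exceeds
    threshold_g, or None if no sample does.

    threshold_g is a hypothetical value chosen for illustration only.
    """
    for i, (ax, ay, az) in enumerate(samples):
        if acceleration_magnitude(ax, ay, az) > threshold_g:
            return i
    return None


# Illustrative (made-up) samples: steady walking vs. a sudden impact.
walking = [(0.0, 0.1, 1.0), (0.2, 0.0, 1.1), (0.1, 0.1, 0.9)]
fall = [(0.0, 0.1, 1.0), (1.8, 1.5, 2.4), (0.0, 0.0, 1.0)]

print(detect_fall_threshold(walking))
print(detect_fall_threshold(fall))
```

A single fixed threshold like this is cheap to evaluate, which is why such detectors need little computation, but any vigorous non-fall movement that crosses the threshold would also be flagged, which is the inaccuracy noted above.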

A summarized state-of-the-art analysis of additional research work is presented in Table 1.

Table 1. State-of-the-art analysis.

The developed RFID-based AI model demonstrates the promising potential for elderly fall detection, offering a cost-effective, precise, and privacy-friendly solution. Its cost-effectiveness is evident in the affordability of RFID tags compared to expensive cameras and wearable sensors. A study found that RFID-based systems can reduce fall detection costs by up to 50% compared to vision-based systems [,]. This precision surpasses vision-based systems, which can be affected by lighting conditions and complex backgrounds. Moreover, the privacy-friendly nature of RFID tags, which do not capture visual data, addresses concerns associated with camera-based systems. While the limited range of RFID tags could be a constraint compared to vision-based systems, it may be sufficient for smaller homes or specific rooms where fall risks are higher. Addressing range limitations and potential environmental interference through careful tag placement and mitigation strategies can further enhance the model’s effectiveness. The RFID-based AI model has the potential to make a significant contribution to elderly care and fall prevention, particularly in settings where cost-effectiveness, privacy, and precise accuracy are paramount. The experiment improves fall detection for the elderly without them needing to wear special devices, respecting their privacy while ensuring their safety. We use advanced algorithms for high accuracy and have a quick alert system for caregivers. This solution represents the future of elderly fall detection, focusing on precision and dignity. The proposed approach has many uses, from home care to healthcare facilities, offering reassurance to caregivers and healthcare professionals. It is known for its precision, non-intrusive design, real-time alerts, and adaptability.

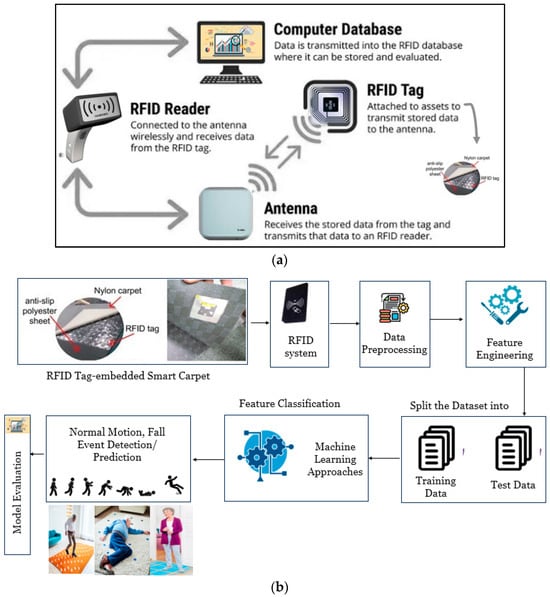

3. Proposed Methodology

This study introduces an innovative approach to tackle the critical issue of elderly fall detection by harnessing the capabilities of a smart carpet integrated with RFID tags for comprehensive activity capture. The core component of our smart carpet is the integration of RFID tags. RFID, which stands for Radio-Frequency Identification, is a wireless technology with the capability to autonomously and precisely identify objects and individuals. Modern RFID systems consist of three primary components: RFID tags, RFID readers, and antennas, along with backend systems used for data storage and accurate predictive purposes, beneficial for informing caregivers. As illustrated in Figure 2a, we provide an overview of our proposed methodology. The hardware components include the RFID reader and antennas in addition to the smart carpet. Software components encompass the RFID Reader Communication Driver and the Fall Detection Algorithm. RFID data collected from the smart carpet is captured by the RFID reader, and communication is facilitated through the RFID Reader Communication Driver. The system processes information obtained from RFID tags embedded in the smart carpet, identifying potential falls and subsequently classifying them through machine learning and deep learning algorithms. In the future, we envision a seamless integration of the fall detection algorithm with the RFID reader and deep learning classifiers.

Figure 2. (a) RFID system and its components for elderly fall detection framework. (b) RFID IoT and AI-based elderly fall detection framework.

Our methodology encompasses several key stages, commencing with meticulous data preprocessing to ensure data quality. Subsequently, each activity is assigned a unique numerical label to enable supervised learning, followed by the extraction of pertinent features through careful feature engineering.

The dataset is then partitioned into training and testing sets, maintaining an 80:20 ratio to ensure robust model training and unbiased evaluation. The core contribution lies in the diverse set of classifiers employed, spanning both machine learning (ML) and deep learning (DL) domains. These classifiers include the Gated Recurrent Unit (GRU), Gradient Boosting (GB), XGBoost (XGB), Logistic Regression (LGR), K-Nearest Neighbors (KNN), and Random Forest (RF). The performance of these classifiers is rigorously evaluated considering established metrics such as accuracy, precision, recall, and F1-score to determine the most effective model for elderly fall detection, as shown in Figure 2b. The results underscore the potential and effectiveness of our proposed methodology, highlighting the synergy between RFID-based sensing and advanced data analysis techniques in addressing real-world challenges concerning elderly well-being.
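As a rough illustration of the 80:20 partition and the evaluation metrics named above, the following sketch uses only the Python standard library. The helper names (`holdout_split`, `classification_metrics`), the fixed seed, and the example labels are assumptions made for illustration; the label convention 1 = fall, 0 = walking is likewise assumed, and this is not the study's actual evaluation code.

```python
import random


def holdout_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows and return (train, test) with an 80:20 split
    by default. The seed is fixed only to make the sketch reproducible."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1-score for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up labels (1 = fall, 0 = walking) to exercise the metric functions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)

train, test = holdout_split(range(100))
print(len(train), len(test), metrics)
```

Recall is the same quantity that other works in this area report as sensitivity, so the two sets of figures can be compared directly on that metric.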

3.1. Data Collection

Central to the methodology is the application of RFID tags to the study participants. A smart carpet is present in the room with RFID tags embedded. These tags are associated with unique Electronic Product Codes (EPCs) that serve as identifiers for individual participants [,].

The IoT-based smart carpet is equipped with an array of sensors strategically positioned to capture the movement patterns of the participants. These sensors utilize various technologies, such as accelerometers and gyroscopes, to record precise motion data. These data encompass a spectrum of walking modes, including normal walking, brisk walking, and shuffling, as well as simulated falling motions. When a person walks on the carpet, a small amount of data is collected through RFID tags. However, when a person falls, a significant amount of data is collected compared to when they were just walking. These data are transmitted through RFID tags to RFID readers, indicating that the person has fallen and is not walking.

- (a) Smart Carpet dataset: This dataset consists of data collected from young volunteers falling and walking on the RFID tag-embedded smart carpet. Thirteen participants are identified by their number.

- (b) Dataset organization:

  - Fall: This directory contains simulated fall data. The multiple files in each numbered directory correspond to the different types of activities performed by a single participant.

  - Walking: Data related to comprehensive walking patterns are in this directory. The multiple files in each numbered directory are the different walking patterns.

- (c) Dataset format: All the values are stored as comma-separated values, and the dataset contains nine columns:

- Sequence no.: Sequence number of the received observation.

- Timestamp: Data recorded timestamp given by the data collection computer.

- Mode: Class label.

- Epc: ID of the tag.

- readerID: reader ID.

- AntennaPortNumber: an antenna that captured the observation.

- ChannelInMhz: RFID reader transmission frequency.

- FirstSeenTime: The time at which the RFID tag was observed by the RFID reader for the current event cycle for the first time. This value is the nanoseconds from the Epoch.

- PeakRSSIInDbm: Maximum value of the RSSI received during a given event cycle.
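Reading one such file could look like the sketch below. The two rows are invented for illustration, the snake_case column names are our own, and the absence of a header row is an assumption; only the order and meaning of the nine columns follow the list above.

```python
import io
import pandas as pd

# Our own names for the nine columns, in the order listed above.
COLUMNS = [
    "sequence_no", "timestamp", "mode", "epc", "reader_id",
    "antenna_port", "channel_mhz", "first_seen_time", "peak_rssi_dbm",
]

# Two made-up observations standing in for a real file.
sample = io.StringIO(
    "1,2023-01-01 10:00:00.100,1,EPC-0001,reader-1,1,865.7,1672567200100000000,-61.5\n"
    "2,2023-01-01 10:00:00.150,1,EPC-0002,reader-1,2,866.3,1672567200150000000,-58.0\n"
)

df = pd.read_csv(sample, header=None, names=COLUMNS)
df["timestamp"] = pd.to_datetime(df["timestamp"])
# FirstSeenTime is described as nanoseconds from the Epoch.
df["first_seen_time"] = pd.to_datetime(df["first_seen_time"], unit="ns")
print(df.dtypes)
```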

3.2. Data Integration and Framework Utilization

The collected data, consisting of RFID tag-generated movement data, are loaded into a Python-based framework that uses the capabilities of the Contextual Sensing Platform (CSP). The framework streamlines data manipulation, preprocessing, and subsequent analysis, ensuring a cohesive and efficient workflow.

3.3. Data Preprocessing

The transformation of the Electronic Product Code (EPC) values associated with the RFID tags into their corresponding x and y coordinates represents a pivotal step in our methodology. This spatial transformation goes beyond simple data conversion; it serves as a bridge between raw data and meaningful insights. By linking spatial coordinates to each individual’s movements, the resulting dataset gains a profound layer of context. This contextual information enriches the analysis, enabling a more nuanced understanding of how different movement patterns unfold within the environment. This becomes particularly significant when considering fall events, as the intricacies of body movements and their spatial relationships provide vital clues for accurate detection.

Following spatial transformation, the process of numerical encoding imparts a quantitative dimension to the extracted spatial information. Through this encoding, the x and y coordinate values, now represented as integers ranging from 1 to 33, acquire a structured form that seamlessly integrates into machine learning algorithms. This encoding translates the continuous spatial data into discrete values, making it amenable to mathematical computations and pattern recognition algorithms. The act of assigning unique numerical identifiers to each spatial point not only facilitates algorithmic processing but also encapsulates the inherent characteristics of the original spatial context. In essence, this encoding preserves the essence of the initial spatial data while enabling the systematic application of machine learning methodologies.

In the comprehensive context of our fall detection methodology, the transformation and numerical encoding of spatial coordinates represent the convergence of spatial awareness and computational analysis. This orchestrated process empowers the subsequent stages of data processing, feature extraction, and model training. The transition from raw Electronic Product Code (EPC) values to quantified spatial features is a testament to the synergy between technology and innovation, reflecting a profound effort to harness the potential of data-driven insights in safeguarding the well-being of the elderly population.
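The EPC-to-coordinate step can be pictured as a lookup from tag ID to grid cell. The sketch below assumes a hand-written table `EPC_TO_XY` and made-up EPC strings; the actual tag layout and mapping are not given in the text, so every value here is hypothetical.

```python
import pandas as pd

# Hypothetical lookup: tag EPC -> (x, y) grid cell on the carpet, 1-based.
EPC_TO_XY = {
    "EPC-0001": (1, 1),
    "EPC-0002": (2, 1),
    "EPC-0034": (1, 2),
}

readings = pd.DataFrame({"epc": ["EPC-0002", "EPC-0034", "EPC-0001"]})

# Replace each EPC by its integer coordinates.
xy = [EPC_TO_XY[epc] for epc in readings["epc"]]
readings["x"] = [point[0] for point in xy]
readings["y"] = [point[1] for point in xy]

# Coordinates are expected to be integers in the range 1..33.
in_range = readings[["x", "y"]].stack().between(1, 33).all()
print(readings, in_range)
```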

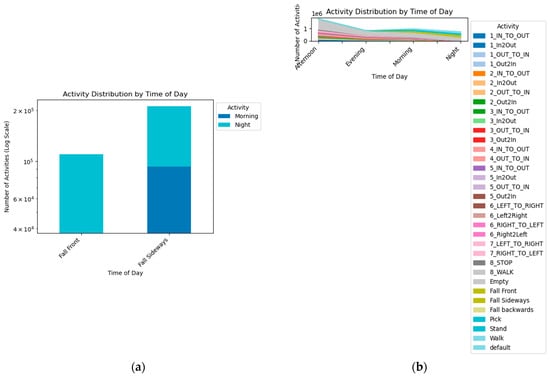

The datasets from walking and falling scenarios are combined to create a unified dataset that encapsulates a wide range of movement patterns. This integrated dataset serves as the foundation for subsequent analysis and model training. Given the inherent rarity of fall events compared to normal walking, the dataset is often imbalanced. To mitigate this, oversampling techniques are employed wherein instances of the minority class (falling) are duplicated to achieve a more balanced representation of the two classes. This balanced dataset serves as the basis for training and evaluating the machine learning models. Figure 3a,b shows fall activity by time of day and activity distribution by time of day, respectively.

Figure 3.

(a) Falls activity by time of day. (b) Activity distribution by time of day.
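Oversampling by duplication, as described for the minority (falling) class, can be sketched with `sklearn.utils.resample`. The class sizes, the 0/1 label coding, and the random data are assumptions made for the example.

```python
import numpy as np
from sklearn.utils import resample

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))        # placeholder features
y = np.array([0] * 90 + [1] * 10)    # 0 = walking, 1 = fall (hypothetical coding)

X_major, X_minor = X[y == 0], X[y == 1]

# Duplicate minority samples (sampling with replacement) up to the majority size.
X_minor_up = resample(
    X_minor, replace=True, n_samples=len(X_major), random_state=0
)

X_bal = np.vstack([X_major, X_minor_up])
y_bal = np.array([0] * len(X_major) + [1] * len(X_minor_up))
print(X_bal.shape, np.bincount(y_bal))
```

The text does not state whether oversampling was applied before or after the train/test split. If duplicates are created before the split, copies of the same fall sample can land in both parts, which tends to inflate test scores, so oversampling only the training portion is the more conservative choice.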

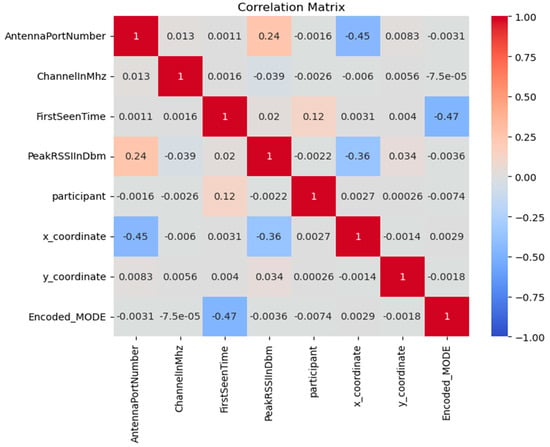

3.4. Correlation Matrix

The correlation matrix is a statistical tool used to quantify relationships between variables in a dataset. By displaying correlation coefficients, it reveals the strength and direction of linear associations. This matrix assists in identifying patterns and dependencies among variables, aiding feature selection and data exploration. Analyzing the correlation matrix guides data-driven decision-making in various fields, from finance to scientific research; the correlation matrix values of fall detection events are shown in Figure 4.

Figure 4.

Correlation matrix of fall event detection dataset.
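A correlation matrix of this kind can be computed with `pandas.DataFrame.corr`. The sketch below uses synthetic columns (the names `x`, `y`, and `peak_rssi_dbm` and the relationship between them are invented) purely to show the mechanics.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)
x = rng.integers(1, 34, size=200)    # synthetic grid coordinate in 1..33
df = pd.DataFrame({
    "x": x,
    "y": rng.integers(1, 34, size=200),
    # Synthetic signal strength that decreases with x, plus noise.
    "peak_rssi_dbm": -40.0 - 0.5 * x + rng.normal(0, 1, size=200),
})

# Pearson correlation coefficients between every pair of columns.
corr = df.corr(method="pearson")
print(corr.round(2))
```

Values near +1 or -1 indicate a strong linear association, and values near 0 indicate none; the diagonal is always 1.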

4. Implementation Details

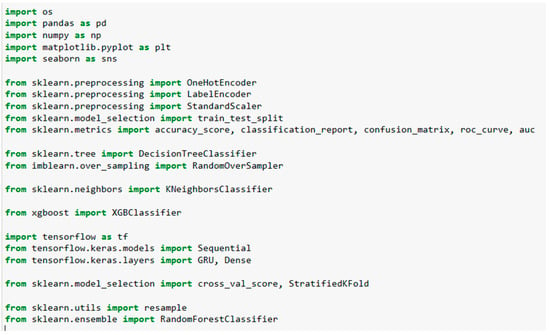

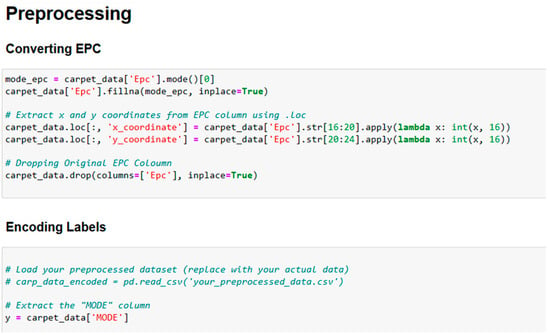

The methodology was implemented in Google Colab, which offers a cloud-based environment for efficient computation. The implementation used Python 3.9 and key libraries including NumPy, pandas, scikit-learn, TensorFlow, and XGBoost. For data collection, participants were equipped with RFID tags, and IoT-sensor data were gathered using a smart carpet. The Python-based framework utilizing Contextual Sensing Platform (CSP) techniques integrates RFID tag data and sensor-generated movement data. Additionally, EPC-to-coordinate transformation and numerical encoding were conducted to prepare the data for machine learning algorithms. Notably, all of the algorithms (Random Forest, KNN, GRU, XGBoost, Gradient Boosting, and Logistic Regression) operate without fine-tuning, as shown in Figure 5 along with the libraries. The preprocessing steps are illustrated in Figure 6. Together, the hardware, software, and algorithms form a comprehensive fall detection approach.

Figure 5.

Implementation details with respect to libraries.

Figure 6.

Data preprocessing details.

5. ML and DL Classifiers Analysis and Discussion

In this section, we present the experimental results for elderly fall detection. When a patient or elderly individual falls on the smart carpet, their body covers more tags than when they are walking. Consequently, when a fall event is detected, an alarm is generated to inform the caregivers. The data obtained from the RFID tags are further classified by applying machine learning (ML) and deep learning (DL) classifiers. The outcomes reveal that the K-Nearest Neighbors (KNN) classifier performed best on the RFID data for accurately detecting fall events. Further details are discussed in this section.

5.1. Machine and Deep Learning Classifiers

To identify the most suitable algorithm for fall detection, an array of ML and DL algorithms is applied to the balanced dataset. The algorithms include Random Forest, K-Nearest Neighbors (KNN), Gradient Boosting (GB), GRU, XGBoost, and Logistic Regression (LR).

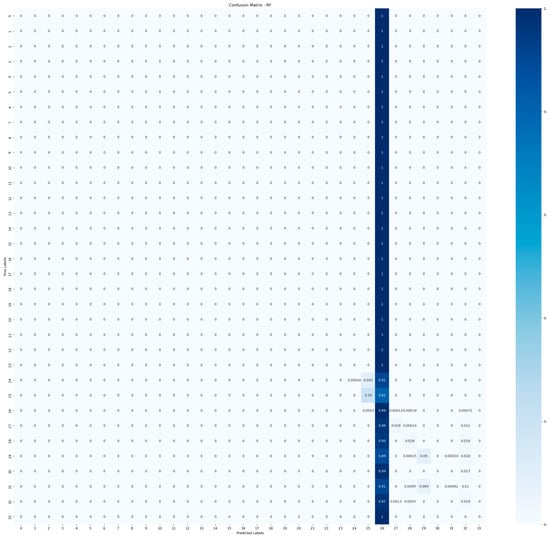

5.1.1. Random Forest (RF)

Within the context of elderly fall detection, the Random Forest algorithm assumes a pivotal role as a cornerstone of the methodology. Deployed on a dataset exclusively comprising fall-related data, the algorithm showcased its capacity to navigate the complexities of the elderly population’s movement patterns. Notably, achieving an accuracy of 42.6% without recourse to fine-tuning, the Random Forest algorithm unveiled a nuanced understanding of fall events. While the accuracy may appear modest in isolation, it signifies a substantial stride in comprehending the intricate interplay between sensor data and fall dynamics. The algorithm’s ensemble of decision trees aptly captures the diverse variables governing fall occurrences, demonstrating its ability to recognize subtle cues within the data. Although fine-tuning was not pursued, the achieved accuracy substantiates the potential of the Random Forest algorithm in enhancing elderly fall detection systems. This outcome serves as a springboard for further exploration, inviting inquiries into feature engineering, parameter optimization, and ensemble refinements that could potentially amplify the algorithm’s accuracy and its role in fostering safety among the elderly population. The fitness function of RF is shown in Equation (1).

$$\text{Gini impurity} = 1 - \sum_{i} p_i^2 \tag{1}$$

where

- $p_i$ is the probability that a data point in the node belongs to class $i$.
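As a worked illustration of the Gini impurity, the short function below computes it from the class labels that reach a node. The function name and the example labels are ours.

```python
from collections import Counter

def gini_impurity(labels):
    """Return 1 - sum(p_i^2) over the classes present in `labels`."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

mixed = gini_impurity(["fall", "fall", "walk", "walk"])  # two classes, evenly split
pure = gini_impurity(["fall", "fall", "fall"])           # a single class
print(mixed, pure)
```

An evenly split two-class node gives 1 − (0.5² + 0.5²) = 0.5, the maximum for two classes, while a pure node gives 0.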

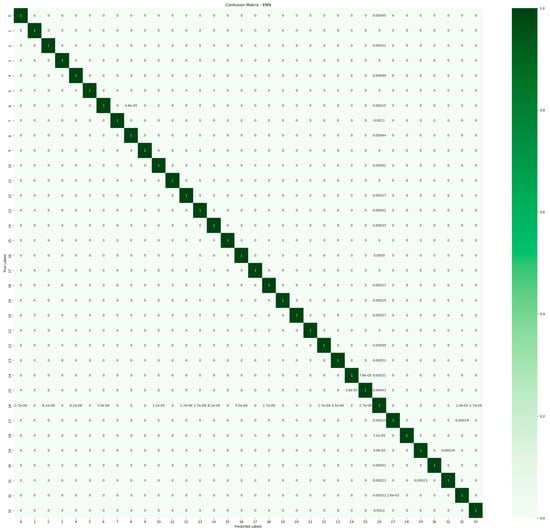

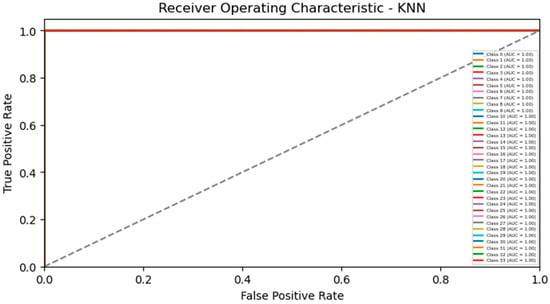

5.1.2. K-Nearest Neighbors (KNN)

In the realm of elderly fall detection, the K-Nearest Neighbors (KNN) algorithm emerges as a beacon of accuracy and efficiency. Leveraging a combined dataset encompassing both walking and fall instances without the need for fine-tuning, KNN remarkably achieved an accuracy of 99%. This remarkable accuracy attests to KNN’s efficacy in distinguishing between normal walking and fall events. The essence of KNN’s principle, proximity-based classification, demonstrates its ability to capture the underlying patterns present within the dataset. The algorithm capitalizes on the inherent spatial and temporal characteristics of the data, attributing each instance based on its similarity to its neighboring counterparts. While fine-tuning was deliberately eschewed, the achieved accuracy stands as a testament to KNN’s inherent power and adaptability. This accomplishment reverberates beyond its numerical value, underscoring the algorithm’s role in bolstering fall detection systems for the elderly population. As the methodology evolves, the KNN algorithm exemplifies a benchmark for meticulous exploration, with potential avenues to further refine and amplify its performance in real-world scenarios. The objective function of KNN is presented in Equation (2).

$$y_k = \operatorname{mode}\{\, y_i \mid x_i \in N_k(x) \,\} \tag{2}$$

where

- $y_k$ is the predicted class label for a new data point $x$.

- $y_i$ is the class label of the $i$th training data point.

- $x_i$ is the $i$th training data point.

- $N_k(x)$ is the set of the K-Nearest Neighbors of $x$ in the training set.

- $\operatorname{mode}$ is the function that returns the most frequent element in a set.
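The majority-vote rule of Equation (2) can be written in a few lines of NumPy. This is a sketch under assumptions: Euclidean distance, `k=3`, and the toy points are our choices, not values reported in the study.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x, k=3):
    """Return the most frequent label among the k training points nearest to x."""
    dists = np.linalg.norm(X_train - x, axis=1)   # Euclidean distance (assumed)
    nearest = np.argsort(dists)[:k]               # indices of the k closest points
    return Counter(y_train[nearest].tolist()).most_common(1)[0][0]

# Toy data: three points of class 0 near the origin, two of class 1 far away.
X_train = np.array([[1, 1], [1, 2], [2, 1], [10, 10], [10, 11]], dtype=float)
y_train = np.array([0, 0, 0, 1, 1])

near_origin = knn_predict(X_train, y_train, np.array([1.5, 1.5]))
far_away = knn_predict(X_train, y_train, np.array([10.0, 10.4]))
print(near_origin, far_away)
```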

5.1.3. Gated Recurrent Unit (GRU)

In the pursuit of enhancing fall detection accuracy within the proposed methodology, the GRU algorithm emerged as a compelling candidate. GRU, a variant of recurrent neural networks, possesses a unique architecture that enables the modeling of sequential data by selectively retaining and updating information through its gating mechanisms. In the context of our study, the GRU algorithm was applied to the combined walking and falling dataset without resorting to fine-tuning techniques. Notably, this approach yielded an accuracy of 40% in fall detection. The GRU’s ability to capture temporal dependencies and recurrent patterns in movement data likely contributed to its performance. While the achieved accuracy may appear modest compared to some other algorithms, the significance lies in its integration within the broader methodology. This finding underscores the potential of employing advanced machine learning techniques, such as GRU, to further refine fall detection systems for the elderly population, with opportunities for iterative improvements as the methodology evolves. The working mechanism is shown in Equation (3).

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \tag{3}$$

where

- $z_t$ is the update gate at time step $t$.

- $x_t$ is the input at time step $t$.

- $h_{t-1}$ is the hidden state at time step $t-1$.

- $W_z$ is the weight matrix for the update gate.

- $U_z$ is the recurrent weight matrix for the update gate.

- $b_z$ is the bias term for the update gate.

- $\sigma$ is the sigmoid function.
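The update gate of Equation (3) can be evaluated directly in NumPy, as in the sketch below. The dimensions (3 inputs, 2 hidden units) and the random weights are arbitrary; a full GRU cell additionally has a reset gate and a candidate hidden state, which are omitted here.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def update_gate(x_t, h_prev, W_z, U_z, b_z):
    """z_t = sigmoid(W_z x_t + U_z h_{t-1} + b_z)."""
    return sigmoid(W_z @ x_t + U_z @ h_prev + b_z)

rng = np.random.default_rng(0)
x_t = rng.normal(size=3)          # input at time step t
h_prev = np.zeros(2)              # hidden state at time step t-1
W_z = rng.normal(size=(2, 3))     # input weights of the update gate
U_z = rng.normal(size=(2, 2))     # recurrent weights of the update gate
b_z = np.zeros(2)                 # bias of the update gate

z_t = update_gate(x_t, h_prev, W_z, U_z, b_z)

# With all-zero weights and bias the gate is sigmoid(0) = 0.5 in every unit.
z_zero = update_gate(np.ones(3), np.ones(2), np.zeros((2, 3)), np.zeros((2, 2)), np.zeros(2))
print(z_t, z_zero)
```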

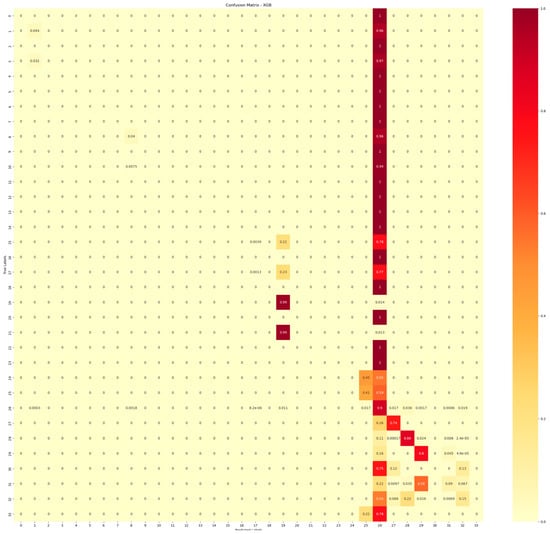

5.1.4. XGBoost

The integration of the XGBoost algorithm into our fall detection methodology brought forth a novel approach to harnessing machine learning for enhanced accuracy. XGBoost, an ensemble learning technique that combines the power of decision trees and gradient boosting, has garnered attention for its robust performance across various domains. In our study, the XGBoost algorithm was employed on the combined walking and falling dataset without the application of fine-tuning techniques. While achieving an accuracy of 48.4%, XGBoost demonstrated its potential to contribute to the realm of fall detection. The complexity of the algorithm’s ensemble structure and its capability to capture intricate relationships between data features could explain its ability to distinguish between normal walking and fall events. Although the achieved accuracy may be comparatively lower, this result opens avenues for exploration. Further investigation into feature engineering, parameter optimization, and potential ensemble variations could potentially elevate XGBoost’s performance within our methodology, ultimately enhancing the overall effectiveness of the fall detection system for elderly individuals. The fitness function is shown in Equation (4).

$$h(x) = f(x) + \sum_{i=1}^{m} \beta_i \left[ g(x; \theta_i) + \gamma_i h(x) \right] \tag{4}$$

where the terms are the same as for gradient boosting, with the addition of the $\gamma_i$ term, which controls the interaction between the weak learners.

5.1.5. Logistic Regression (LR)

Logistic Regression, a classification algorithm, is commonly used to predict the probability of an instance belonging to a certain class. In the context of detecting elderly falls, it relies on features extracted from sensors monitoring movement. Despite its simplicity, it can be effective when used appropriately. However, achieving an accuracy of 41.7% in this application suggests challenges. Potential reasons include inadequate features, imbalanced data, the complexity of fall events, or limitations of the algorithm itself. Enhancing accuracy could involve refining feature selection, addressing data quality issues, considering more complex models, and expanding the dataset. The fitness function is shown in Equation (5).

$$p(y = 1 \mid x) = \dfrac{1}{1 + e^{-(w_0 + w_1 x)}} \tag{5}$$

where

- $p(y = 1 \mid x)$ is the probability that a data point with features $x$ belongs to class 1.

- $y$ is the target variable, which is either 0 or 1.

- $x$ is the vector of features.

- $w_0$ is the bias term.

- $w_1$ is the weight for the first feature.

- $e$ is the base of the natural logarithm.
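Equation (5) can be checked numerically with a one-feature example. The weights below are arbitrary illustration values, not fitted parameters from the study.

```python
import math

def predict_proba(x, w0, w1):
    """p(y = 1 | x) for a single feature x, bias w0, and weight w1."""
    return 1.0 / (1.0 + math.exp(-(w0 + w1 * x)))

p_boundary = predict_proba(2.0, w0=-1.0, w1=0.5)   # w0 + w1*x = 0, so p = 0.5
p_high = predict_proba(10.0, w0=0.0, w1=1.0)       # large positive score, p close to 1
print(p_boundary, p_high)
```

A point is typically assigned to class 1 when this probability exceeds 0.5, that is, when $w_0 + w_1 x > 0$.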

5.1.6. Gradient Boosting (GB)

Gradient Boosting, an ensemble learning technique, is widely employed for classification tasks, aiming to improve predictive accuracy. In the realm of elderly fall detection, Gradient Boosting constructs a strong extrapolative model by compounding multiple weak learners, usually decision trees. Despite its powerful nature, achieving a 48% accuracy in this context presents challenges. Potential factors encompass suboptimal feature selection, imbalanced data distribution, intricate characteristics of fall incidents, or limitations of the gradient-boosting algorithm itself. To enhance accuracy, it is advisable to fine-tune feature selection, rectify data quality concerns, experiment with diverse ensemble models, and consider augmenting the dataset size for better generalization. The fitness function is shown in Equation (6).

$$h(x) = f(x) + \sum_{i=1}^{m} \beta_i \, g(x; \theta_i) \tag{6}$$

where

- $h(x)$ is the predicted label.

- $f(x)$ is the initial prediction.

- $m$ is the number of boosting steps.

- $\beta_i$ is the coefficient of the $i$th weak learner.

- $g(x; \theta_i)$ is the $i$th weak learner.

- $\theta_i$ are the parameters of the $i$th weak learner.
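The additive form of Equation (6) can be illustrated with hand-written decision stumps as weak learners. This sketch only evaluates an already-built model: the stump parameters, coefficients, and initial prediction are invented, and the fitting procedure (each stump trained on the residual errors of the previous ones) is not shown.

```python
def stump(x, theta):
    """Weak learner g(x; theta): a one-split decision stump on a scalar input."""
    threshold, left_value, right_value = theta
    return left_value if x < threshold else right_value

def boosted_predict(x, f0, betas, thetas):
    """h(x) = f(x) + sum_i beta_i * g(x; theta_i), with a constant initial prediction f0."""
    return f0 + sum(beta * stump(x, theta) for beta, theta in zip(betas, thetas))

f0 = 0.5                                    # initial prediction
betas = [0.1, 0.1]                          # coefficients of the weak learners
thetas = [(5.0, -1.0, 1.0), (10.0, -1.0, 1.0)]  # (threshold, left, right) per stump

low = boosted_predict(3.0, f0, betas, thetas)    # both stumps vote -1
mid = boosted_predict(7.0, f0, betas, thetas)    # votes cancel out
high = boosted_predict(12.0, f0, betas, thetas)  # both stumps vote +1
print(low, mid, high)
```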

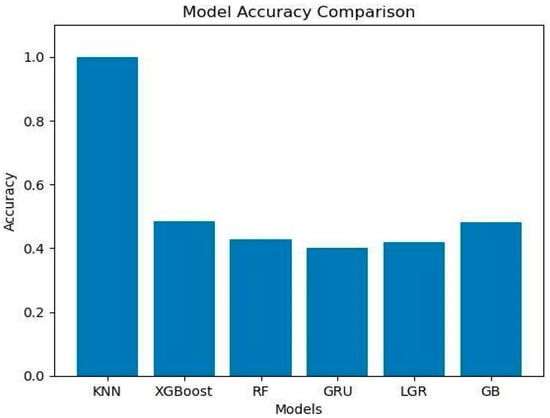

5.2. Classifiers Performance Assessment

We assessed the performance of various machine learning classifiers for detecting fall events among the elderly using RFID data. The classifiers under scrutiny include KNN (99%), XGBoost (48.4%), GB (48%), RF (42.6%), LR (41.7%), and GRU (40%). KNN achieved by far the highest accuracy at 99%; XGBoost ranked second at 48.4%, closely followed by GB at 48%. Logistic Regression and GRU exhibited the lowest results at 41.7% and 40%, respectively.

These findings underscore the promise of KNN, an instance-based method, in RFID-based fall detection systems, whereas the tree-based ensemble methods (XGBoost, GB, and RF) remained below 50% accuracy without fine-tuning. This assessment sheds light on the interplay between algorithm selection, data quality, and fall detection efficacy in an aging population context.

6. Experimental Results and Discussions

We will analyze various algorithms to determine their accuracy in our proposed platform. By conducting comprehensive evaluations, we aim to identify the most effective algorithms that ensure precise and reliable results. This process will enable us to enhance the overall performance and reliability of our system.

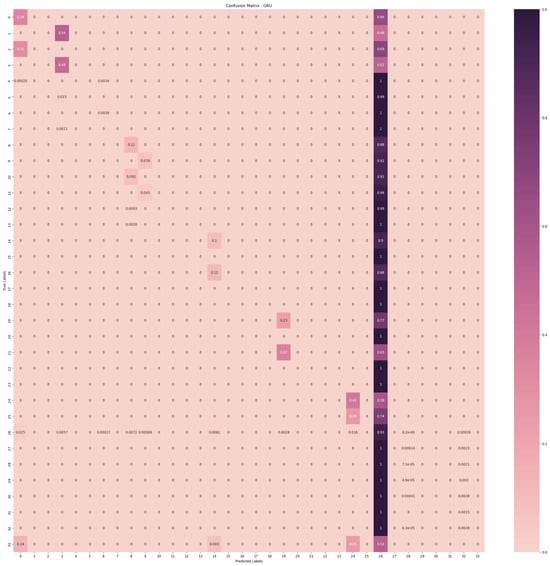

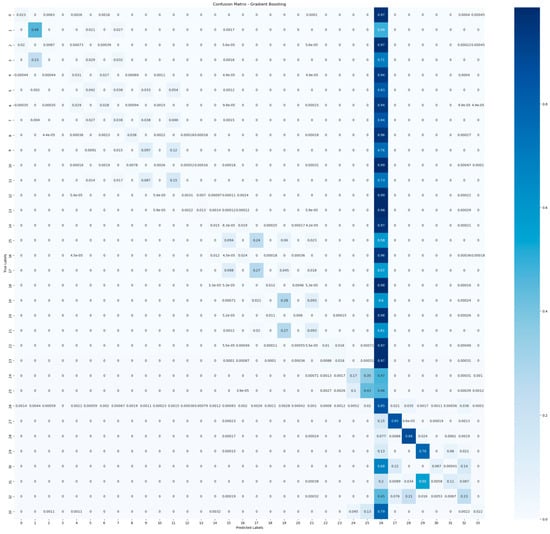

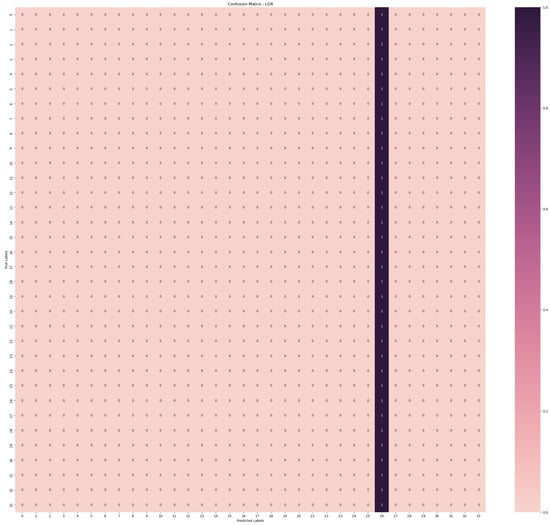

Confusion Matrix of ML and DL Models

A confusion matrix is a tabular representation commonly employed to assess the performance of a classification model. It provides a comprehensive breakdown of the model’s predictions, offering insights into true positives, true negatives, false positives, and false negatives for each class in a multi-class scenario []. The confusion matrices of GRU, GB, LR, KNN, XGB, and RF are shown in Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12, respectively.

Figure 7.

Confusion matrix of GRU.

Figure 8.

Confusion matrix of gradient boosting.

Figure 9.

Confusion matrix of logistic regression (LR).

Figure 10.

Confusion matrix of KNN.

Figure 11.

Confusion matrix of XGB.

Figure 12.

Confusion matrix of Random Forest.
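For a binary fall/no-fall task, such a matrix can be produced with `sklearn.metrics.confusion_matrix`. The labels below are made up (1 = fall, 0 = walking is our coding) and do not reproduce any of the reported matrices.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical ground truth and predictions (1 = fall, 0 = walking).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]

# Rows are true classes, columns are predicted classes, in the order given by `labels`.
cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
tn, fp, fn, tp = cm.ravel()
print(cm)
print(tn, fp, fn, tp)
```

Here three falls are detected (TP = 3), one fall is missed (FN = 1), one walking sample is flagged as a fall (FP = 1), and three walking samples are correctly recognized (TN = 3).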

7. Evaluating Parameters

In this research, the primary evaluation parameters used to assess the performance of the ML classifiers are accuracy, precision, recall, and F-measure, as detailed in Table 2. Precision and sensitivity (recall) are calculated for the targeted class to assess the algorithm’s predictive accuracy for that specific class. These metrics are determined from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) []. Every prediction made by a model falls into one of these four groups. TP counts the instances of the targeted class that the model correctly assigned to that class, while FN counts the instances of the targeted class that the model missed. FP counts the instances that the model assigned to the targeted class although they belong to another class, and TN counts the instances of other classes that the model correctly kept out of the targeted class.

Table 2.

Classification stats of classifiers.

- Accuracy is the number of correctly classified examples divided by the total number of examples in the dataset, as seen in Equation (7).

- Precision is the fraction of examples predicted as the targeted class that actually belong to it, as expressed in Equation (8).

- Recall is the fraction of examples of the targeted class that the classifier correctly identifies, as defined in Equation (9).

- The F-Measure combines the precision and recall scores for the classification problem. The conventional F-Measure, their harmonic mean, is computed as depicted in Equation (10).
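For reference, the standard definitions of these four metrics in terms of TP, TN, FP, and FN are as follows; they are assumed, not confirmed, to coincide with Equations (7) to (10).

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \tag{7} \\
\text{Precision} &= \frac{TP}{TP + FP} \tag{8} \\
\text{Recall}    &= \frac{TP}{TP + FN} \tag{9} \\
\text{F-Measure} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{10}
\end{align}
```

For example, with TP = 3, TN = 3, FP = 1, and FN = 1, accuracy is 6/8 = 0.75, and precision, recall, and F-Measure are each 3/4 = 0.75.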

The recall, precision, and F1-score of GRU, XGB, LR, KNN, GB, and Random Forest are shown in Table 2.

The accuracy obtained for the fall detection event from the ML and DL classifiers is presented in Figure 13. The outcomes reveal that the KNN classifier performs best for elderly fall detection with the RFID dataset, achieving 99% accuracy.

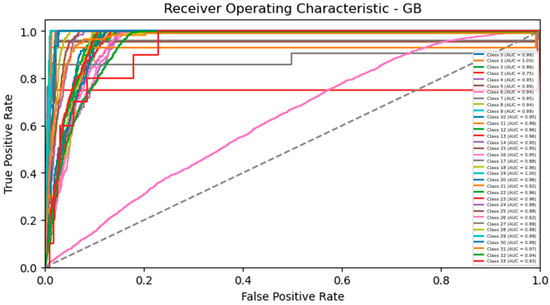

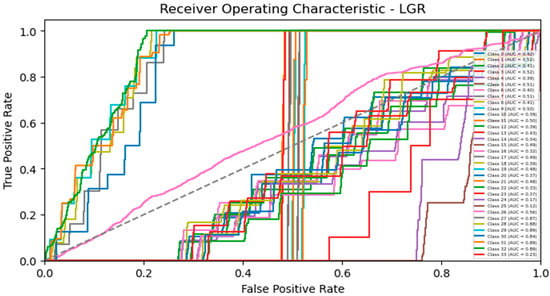

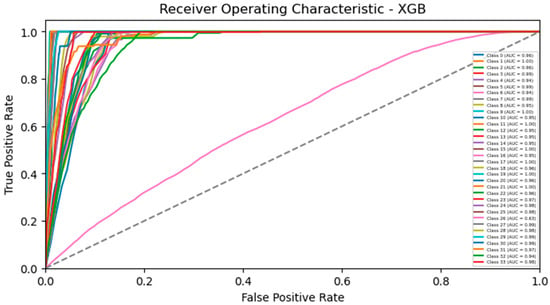

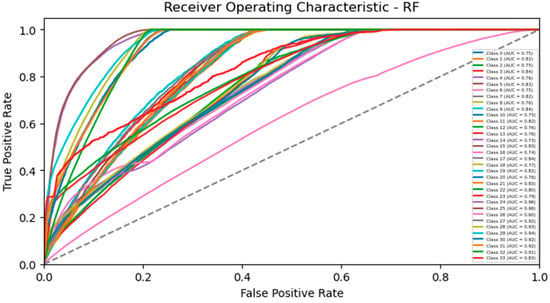

ROC Curves

The Receiver Operating Characteristic (ROC) curve is a widely used graphical representation in binary classification to assess the performance of a classification model []. This curve provides a visual representation of the trade-off between the true positive rate (sensitivity) and the false positive rate (1 − specificity) as the classification threshold changes. The ROC curves of GB, LR, KNN, XGB, and RF are shown in Figure 14, Figure 15, Figure 16, Figure 17 and Figure 18, respectively.

Figure 13.

ML and DL model accuracy chart.

Figure 14.

ROC curve GB.

Figure 15.

ROC curve LR.

Figure 16.

ROC curve KNN.

Figure 17.

ROC curve XGB.

Figure 18.

ROC curve Random Forest.
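The points of an ROC curve and the area under it can be obtained with `sklearn.metrics.roc_curve` and `auc`. The four labels and scores below are a toy example; in practice the scores would be a classifier's predicted probabilities for the fall class.

```python
from sklearn.metrics import auc, roc_curve

# Toy ground truth (1 = fall) and predicted scores for the positive class.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

# One (false positive rate, true positive rate) pair per decision threshold.
fpr, tpr, thresholds = roc_curve(y_true, scores)
roc_auc = auc(fpr, tpr)
print(fpr, tpr, roc_auc)
```

An area of 1.0 corresponds to perfect separation of the two classes and 0.5 to random guessing; this toy example gives 0.75.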

8. Conclusions and Future Work

The presented approach combines RFID technology and IoT-based smart carpets to create a fall detection system for elderly individuals. By leveraging RFID tags, sensor data, comprehensive preprocessing, and a range of machine learning algorithms, the methodology demonstrates the potential for precise and efficient fall detection. KNN’s 99% accuracy illustrates what the methodology can achieve. Other algorithms, such as Random Forest, GRUs, and XGBoost, exhibit lower accuracy levels and leave room for improvement through feature engineering and parameter optimization.

Continued exploration and refinement of algorithms hold the promise of even more accurate fall detection systems in the future. This innovative approach has the potential to make a significant contribution to the safety and well-being of the elderly population, offering a novel solution to a critical concern.

Author Contributions

All aspects: equal contribution H.A.A., K.K.A. and C.A.U.H. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported by King Salman Center for Disability Research through Research Group no. KSRG-2023-167.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this research can be obtained from the corresponding authors upon request.

Acknowledgments

The authors extend their appreciation to the King Salman Center for Disability Research for funding this work through Research Group no. KSRG-2023-167NA.

Conflicts of Interest

The authors declare no conflict of interest.

References

- United Nations. World Population Ageing 2019. 2019. Available online: https://www.un.org/en/development/desa/population/publications/pdf/ageing/WorldPopulationAgeing2019-Report.pdf (accessed on 2 August 2023).

- World Health Organization. Falls. 2021. Available online: https://www.cdc.gov/injury/features/older-adult-falls/index.html#:~:text=About%2036%20million%20falls%20are,departments%20for%20a%20fall%20injury (accessed on 2 August 2023).

- Stevens, J.A.; Corso, P.S.; Finkelstein, E.A.; Miller, T.R. The costs of fatal and non-fatal falls among older adults. Inj. Prev. 2006, 12, 290–295. [Google Scholar] [CrossRef]

- Sterling, D.A.; O’Connor, J.A.; Bonadies, J. Geriatric falls: Injury severity is high and disproportionate to mechanism. J. Trauma Acute Care Surg. 2001, 50, 116–119. [Google Scholar] [CrossRef]

- Tinetti, M.E.; Speechley, M.; Ginter, S.F. Risk factors for falls among elderly persons living in the community. N. Engl. J. Med. 1988, 319, 1701–1707. [Google Scholar] [CrossRef]

- Vyrostek, S.B.; Annest, J.L.; Ryan, G.W. Surveillance for fatal and nonfatal injuries—United States. Surveill. Summ. 2004, 53, 1–57. [Google Scholar]

- Alam, E.; Sufian, A.; Leo, M. Vision-based Human Fall Detection Systems using Deep Learning: A Review. arXiv 2022, arXiv:2207.10952. [Google Scholar] [CrossRef]

- Kellogg International Working Group on the Prevention of Falls by the Elderley. The prevention of falls in later life. Dan Med. Bull. 1987, 34 (Suppl. S4), 1–24. [Google Scholar]

- Rubenstein, L.Z.; Powers, C.M.; MacLean, C.H. Quality indicators for the management and prevention of falls and mobility problems in vulnerable elders. Ann. Intern. Med. 2001, 135, 686–693. [Google Scholar] [CrossRef]

- Maki, B.E.; McIlroy, W.E. Control of compensatory stepping reactions: Age-related impairment and the potential for remedial intervention. Physiother. Theory Pract. 1999, 15, 69–90. [Google Scholar] [CrossRef]

- Heart org. Falls Can Be a Serious, Poorly Understood Threat to People with Heart Disease. 2022. Available online: https://www.heart.org/en/news/2022/05/19/falls-can-be-a-serious-poorly-understood-threat-to-people-with-heart-disease (accessed on 4 August 2023).

- Santhagunam, S.N.; Li, E.P.; Buschert, K.; Davis, J.C. A theoretical framework to improve adherence among older adults to recommendations received at a falls prevention clinic: A narrative review. Appl. Nurs. Res. 2021, 62, 151493. [Google Scholar] [CrossRef]

- Ajami, S.; Rajabzadeh, A. Radio Frequency Identification (RFID) Technology and Patient Safety. J. Res. Med. Sci. 2013, 18, 809–813. [Google Scholar]

- Sabry, F.; Eltaras, T.; Labda, W.; Alzoubi, K.; Mallui, Q. Machine Learning for Healthcare Wearable Devices: The Big Picture. J. Healthc. Eng. 2022, 2022, 4653923. [Google Scholar] [CrossRef]

- Bourke, A.K.; O’Brien, J.V.; Lyons, G.M. Evaluation of a threshold-based tri-axial accelerometer fall detection algorithm. Gait Posture 2007, 26, 194–199. [Google Scholar] [CrossRef]

- Bagalà, F.; Becker, C.; Cappello, A.; Chiari, L.; Aminian, K.; Hausdorff, J.M.; Zijlstra, W.; Klenk, J. Evaluation of accelerometer-based fall detection algorithms on real-world falls. PLoS ONE 2012, 7, e37062. [Google Scholar] [CrossRef]

- Del Rosario, M.B.; Redmond, S.J.; Lovell, N.H.; Yang, A. Tracking the evolution of smartphone sensing for monitoring human movement. Pervasive Mob. Comput. 2015, 15, 18901–18933. [Google Scholar] [CrossRef]

- Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244. [Google Scholar] [CrossRef]

- Cameron, I.D.; Dyer, S.M.; Panagoda, C.E.; Murray, G.R.; Hill, K.D.; Cumming, R.G.; Kerse, N. Interventions for preventing falls in older people in care facilities and hospitals. Cochrane Database Syst. Rev. 2018, 9, CD005465. [Google Scholar] [CrossRef]

- Del Rosario, M.B.; Redmond, S.J.; Lovell, N.H.; Yang, A. What Do Clinicians Want? Detection of Activities of Daily Living in People with Chronic Obstructive Pulmonary Disease Using Wearable Devices. JMIR mHealth uHealth 2023, 6, e113. [Google Scholar]

- Howcroft, J.; Kofman, J.; Lemaire, E.D. Review of fall risk assessment in geriatric populations using inertial sensors. J. NeuroEngineering Rehabil. 2016, 10, 91. [Google Scholar] [CrossRef]

- Zheng, L.; Zhao, J.; Dong, F.; Huang, Z.; Zhong, D. Fall Detection Algorithm Based on Inertial Sensor and Hierarchical Decision. Sensors 2023, 23, 107. [Google Scholar] [CrossRef]

- Kwolek, B.; Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Programs Biomed. 2014, 117, 489–501. [Google Scholar] [CrossRef]

- Aziz, O.; Park, E.J.; Mori, G.; Robinovitch, S.N.; Delp, E.J. A comprehensive dataset of falls in the real-world for developing human fall detection algorithms. Proc. Natl. Acad. Sci. USA 2019, 116, 25064–25073. [Google Scholar]

- Gjoreski, M.; Lustrek, M.; Gams, M. Accelerometer placement for posture recognition and fall detection. J. Ambient. Intell. Smart Environ. 2011, 3, 173–190. [Google Scholar]

- Cucchiara, R.; Grana, C.; Prati, A. Detecting moving objects, ghosts, and shadows in video streams. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1337–1342. [Google Scholar] [CrossRef]

- Han, J.H.; Park, S.H.; Lee, S.W. Review of machine learning algorithms for the fall detection and fall risk assessment of the elderly. Sensors 2019, 19, 3146. [Google Scholar]

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349. [Google Scholar] [CrossRef] [PubMed]

- Somkunwar, R.K.; Thorat, N.; Pimple, J.; Dhumal, R.; Choudhari, Y. A Novel Based Human Fall Detection System Using Hybrid Approach. J. Data Acquis. Process. 2023, 38, 3985. [Google Scholar]

- Kaur, P.; Wang, Q.; Shi, W. Fall detection from audios with Audio Transformers. arXiv 2022, arXiv:2208.10659. [Google Scholar] [CrossRef]

- Nooruddin, S.; Islam, M.M.; Sharna, F.A. An IoT based device-type invariant fall detection system. Internet Things 2020, 9 (Suppl. S2), 100130. [Google Scholar] [CrossRef]

- Clemente, J.; Li, F.; Valero, M.; Song, W. Smart seismic sensing for indoor fall detection, location, and notification. IEEE J. Biomed. Health Inform. 2019, 24, 524–532. [Google Scholar] [CrossRef]

- Kumar, A.; De, A.; Gill, R. Internet of things in healthcare: Technologies, applications, opportunities and challenges. AIP Conf. Proc. 2023, 2495, 020043. [Google Scholar]

- Gutiérrez, J.J.; Echeverría, J.C.; García, J.M. Implementation of an IoT system for the detection of falls in the elderly. Sensors 2019, 19, 4322. [Google Scholar]

- Nishat Tasnim, N.; Hanada, E. The methods of fall detection: A literature review. Sensors 2023, 23, 5212. [Google Scholar]

- Tapia, E.M.; Intille, S.S.; Haskell, W. Real-time recognition of physical activities and their intensities using wireless accelerometers and a heart rate monitor. In Proceedings of the 4th International Conference on Wearable and Implantable Body Sensor Networks, Aachen, Germany, 26–28 March 2007; pp. 1–4. [Google Scholar]

- Dehzangi, O.; Azimi, I.; Bahadorian, B. Activity recognition in patients with neurodegenerative diseases: An ensemble method. J. Ambient. Intell. Humaniz. Comput. 2019, 2019, 1–11. [Google Scholar]

- Maqbool, O.; Mahmud, S.; Hu, J. Fall detection and prevention mechanisms in IoT and sensor networks: A survey. Sensors 2019, 19, 3416. [Google Scholar]

- Figueiredo, R.; Cruz, J.F. A low-cost IoT platform for fall detection and fall risk assessment in community-dwelling elderly. IEEE Access 2019, 7, 9652–9665. [Google Scholar]

- Santos, G.L.; Endo, P.T.; Monteiro, K.H.D.C.; Rocha, E.D.S.; Silva, I.; Lynn, T. Accelerometer-based human fall detection using convolutional neural networks. Sensors 2019, 19, 1644. [Google Scholar] [CrossRef]

- Deep, S.; Zheng, X.; Karmakar, C.; Yu, D.; Hamey, L.G.; Jin, J. A survey on anomalous behavior detection for elderly care using dense-sensing networks. IEEE Commun. Surv. Tutor. 2019, 22, 352–370. [Google Scholar] [CrossRef]

- Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2019, 119, 3–11.

- Zhang, Z.; Yang, J. A survey on multi-view learning. arXiv 2018, arXiv:1304.5634.

- Sharif Razavian, A.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 806–813.

- Yuan, Y.; Jiang, C. Towards automatic data annotation: A survey. arXiv 2018, arXiv:1809.09057.

- Igual, R.; Medrano, C.; Plaza, I.; Castro, M. Challenges, issues and trends in fall detection systems. Biomed. Eng. Online 2013, 12, 66.

- Yavari, A.N.; Mahdiraji, M.S. Review on Fall Detection Systems: Techniques, Challenges, and Open Issues. In Smart Sensing for Monitoring Elderly People; Elsevier: Amsterdam, The Netherlands, 2021; pp. 65–89.

- Skubic, M.; Guevara, R.D.; Rand, M. Automated health alerts using in-home sensor data for embedded health assessment. IEEE J. Transl. Eng. Health Med. 2017, 5, 1800110.

- Manga, S.; Muthavarapu, N.; Redij, R.; Baraskar, B.; Kaur, A.; Gaddam, S.; Gopalakrishnan, K.; Shinde, R.; Rajagopal, A.; Samaddar, P.; et al. Estimation of Physiologic Pressures: Invasive and Non-Invasive Techniques, AI Models, and Future Perspectives. Sensors 2023, 23, 5744.

- Campbell, A.J.; Robertson, M.C.; Gardner, M.M.; Norton, R.N.; Buchner, D.M. Falls prevention over 2 years: A randomized controlled trial in women 80 years and older. Age Ageing 1999, 28, 513–518.

- Lord, S.R.; Tiedemann, A.; Chapman, K.; Munro, B.; Murray, S.M. The effect of an individualized fall prevention program on fall risk and falls in older people: A randomized, controlled trial. J. Am. Geriatr. Soc. 2005, 53, 1296–1304.

- Kulurkar, P.; Kumar Dixit, C.; Bharathi, V.C.; Monikavishnuvarthini, A.; Dhakne, A.; Preethi, P. AI based elderly fall prediction system using wearable sensors: A smart home-care technology with IOT. Meas. Sens. 2023, 25, 100614.

- Delahoz, Y.S.; Labrador, M.A. Survey on Fall Detection and Fall Prevention Using Wearable and External Sensors. Sensors 2014, 14, 19806–19842.

- Usmani, S.; Saboor, A.; Haris, M.; Khan, M.A.; Park, H. Latest Research Trends in Fall Detection and Prevention Using Machine Learning: A Systematic Review. Sensors 2021, 21, 5134.

- Badgujar, S.; Pillai, A. Fall Detection for Elderly People using Machine Learning. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020.

- Zhu, L.; Zhou, P.; Pan, A.; Guo, J.; Sun, W.; Wang, L.; Chen, X.; Liu, Z. A Survey of Fall Detection Algorithm for Elderly Health Monitoring. In Proceedings of the 2015 IEEE Fifth International Conference on Big Data and Cloud Computing, Dalian, China, 26–28 August 2015.

- Khan, M.K.J.; Ud Din, N.; Bae, S.; Yi, J. Interactive Removal of Microphone Object in Facial Images. Electronics 2019, 8, 1115.

- Hsu, D.; Shi, K.; Sun, X. Linear regression without correspondence. arXiv 2017, arXiv:1705.07048.

- Wickramasinghe, A.; Torres, R.L.S.; Ranasinghe, D.C. Recognition of falls using dense sensing in an ambient assisted living environment. Pervasive Mob. Comput. 2017, 34, 14–24.

- Lee, T.; Mihailidis, A. An intelligent emergency response system: Preliminary development and testing of automated fall detection. J. Telemed. Telecare 2005, 11, 194–198.

- Rougier, C.; Meunier, J.; St-Arnaud, A.; Rousseau, J. Robust video surveillance for fall detection based on human shape deformation. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 611–622.

- Wang, S.; Chen, L.; Zhou, Z.; Sun, X.; Dong, J. Human fall detection in surveillance video based on PCANet. Multimed. Tools Appl. 2016, 75, 11603–11613.

- Lee, D.W.; Jun, K.; Naheem, K.; Kim, M.S. Deep Neural Network-Based Double-Check Method for Fall Detection Using IMU-L Sensor and RGB Camera Data. IEEE Access 2021, 9, 48064–48079.

- Gutiérrez, J.; Rodríguez, V.; Martin, S. Comprehensive review of vision-based fall detection systems. Sensors 2021, 21, 947.

- Zhou, Z.; Stone, E.E.; Skubic, M.; Keller, J.; He, Z. Nighttime in-home action monitoring for eldercare. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–1 September 2011; IEEE: New York, NY, USA, 2011; pp. 5299–5302.

- Alhimale, L.; Zedan, H.; Al-Bayatti, A. The implementation of an intelligent and video-based fall detection system using a neural network. Appl. Soft Comput. 2014, 18, 59–69.

- Chen, J.; Romero, R.; Thompson, L.A. Motion Analysis of Balance Pre and Post Sensorimotor Exercises to Enhance Elderly Mobility: A Case Study. Appl. Sci. 2023, 13, 889.

- Wang, X.; Ellul, J.; Azzopardi, G. Elderly fall detection systems: A literature survey. Front. Robot. AI 2020, 7, 71.

- Khraief, C.; Benzarti, F.; Amiri, H. Elderly fall detection based on multi-stream deep convolutional networks. Multimed. Tools Appl. 2020, 79, 19537–19560.

- Yacchirema, D.; de Puga, J.S.; Palau, C.; Esteve, M. Fall detection system for elderly people using IoT and ensemble machine learning algorithm. Pers. Ubiquitous Comput. 2019, 23, 801–817.

- Hussain, F.; Umair, M.B.; Ehatisham-ul-Haq, M.; Pires, I.M.; Valente, T.; Garcia, N.M.; Pombo, N. An efficient machine learning-based elderly fall detection algorithm. arXiv 2019, arXiv:1911.11976.

- Bridenbaugh, S.A.; Kressig, R.W. Laboratory review: The role of gait analysis in seniors’ mobility and fall prevention. Gerontology 2011, 57, 256–264.

- Baldewijns, G.; Claes, V.; Debard, G.; Mertens, M.; Devriendt, E.; Milisen, K.; Tournoy, J.; Croonenborghs, T.; Vanrumste, B. Automated in-home gait transfer time analysis using video cameras. J. Ambient. Intell. Smart Environ. 2016, 8, 273–286.

- Yang, L.; Ren, Y.; Zhang, W. 3D depth image analysis for indoor fall detection of elderly people. Digit. Commun. Netw. 2016, 2, 24–34.

- Leone, A.; Rescio, G.; Caroppo, A.; Siciliano, P.; Manni, A. Human Postures Recognition by Accelerometer Sensor and ML Architecture Integrated in Embedded Platforms: Benchmarking and Performance Evaluation. Sensors 2023, 23, 1039.

- Shi, G.; Chan, C.S.; Luo, Y.; Zhang, G.; Li, W.J.; Leong, P.H.; Leung, K.S. Development of a human airbag system for fall protection using MEMS motion sensing technology. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; IEEE: New York, NY, USA, 2006; pp. 4405–4410.

- Shi, G.; Chan, C.S.; Li, W.J.; Leung, K.S.; Zou, Y.; Jin, Y. Mobile human airbag system for fall protection using MEMS sensors and embedded SVM classifier. IEEE Sens. J. 2009, 9, 495–503.

- Saleh, M.; Jeannès, R.L.B. Elderly fall detection using wearable sensors: A low cost highly accurate algorithm. IEEE Sens. J. 2019, 19, 3156–3164.

- Wang, Z.; Ramamoorthy, V.; Gal, U.; Guez, A. Possible life saver: A review on human fall detection technology. Robotics 2020, 9, 55.

- Chuma, E.L.; Roger, L.L.B.; De Oliveira, G.G.; Iano, Y.; Pajuelo, D. Internet of things (IoT) privacy-protected, fall-detection system for the elderly using the radar sensors and deep learning. In Proceedings of the 2020 IEEE International Smart Cities Conference (ISC2), Piscataway, NJ, USA, 28 September–1 October 2020; IEEE: New York, NY, USA, 2020; pp. 1–4.

- Yu, X.; Jang, J.; Xiong, S. Machine learning-based pre-impact fall detection and injury prevention for the elderly with wearable inertial sensors. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Virtually, 25–29 July 2021; Springer: Cham, Switzerland, 2021; pp. 278–285.

- Sankaran, S.; Thiyagarajan, A.P.; Kannan, A.D.; Karnan, K.; Krishnan, S.R. Design and Development of Smart Airbag Suit for Elderly with Protection and Notification System. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; IEEE: New York, NY, USA, 2021; pp. 1273–1278.

- Chu, C.T.; Chang, C.H.; Chang, T.J.; Liao, J.X. Elman neural network identify elders fall signal base on second-order train method. In Proceedings of the 2017 6th International Symposium on Next Generation Electronics (ISNE), Keelung, Taiwan, 23–25 May 2017; IEEE: New York, NY, USA, 2017; pp. 1–4.

- Sixsmith, A.; Johnson, N. A smart sensor to detect the falls of the elderly. IEEE Pervasive Comput. 2004, 3, 42–47.

- Hayashida, A.; Moshnyaga, V.; Hashimoto, K. New approach for indoor fall detection by infrared thermal array sensor. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; IEEE: New York, NY, USA, 2017; pp. 1410–1413.

- Shinmoto Torres, R.L.; Wickramasinghe, A.; Pham, V.N.; Ranasinghe, D.C. What if Your Floor Could Tell Someone You Fell? A Device-Free Fall Detection Method. In Artificial Intelligence in Medicine; Springer: Berlin/Heidelberg, Germany, 2015; pp. 86–95.

- Selvaraj, S.; Sundaravaradhan, S. Challenges and opportunities in IoT healthcare systems: A systematic review. SN Appl. Sci. 2020, 2, 139.

- ul Hassan, C.A.; Iqbal, J.; Hussain, S.; AlSalman, H.; Mosleh, M.A.; Sajid Ullah, S. A computational intelligence approach for predicting medical insurance cost. Math. Probl. Eng. 2021, 2021, 1162553.

- Hassan, C.A.U.; Karim, F.K.; Abbas, A.; Iqbal, J.; Elmannai, H.; Hussain, S.; Ullah, S.S.; Khan, M.S. A Cost-Effective Fall-Detection Framework for the Elderly Using Sensor-Based Technologies. Sustainability 2023, 15, 3982.

- Hassan, C.A.U.; Iqbal, J.; Irfan, R.; Hussain, S.; Algarni, A.D.; Bukhari, S.S.H.; Alturki, N.; Ullah, S.S. Effectively predicting the presence of coronary heart disease using machine learning classifiers. Sensors 2022, 22, 7227.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).