Enhancing Elderly Fall Detection through IoT-Enabled Smart Flooring and AI for Independent Living Sustainability

Abstract

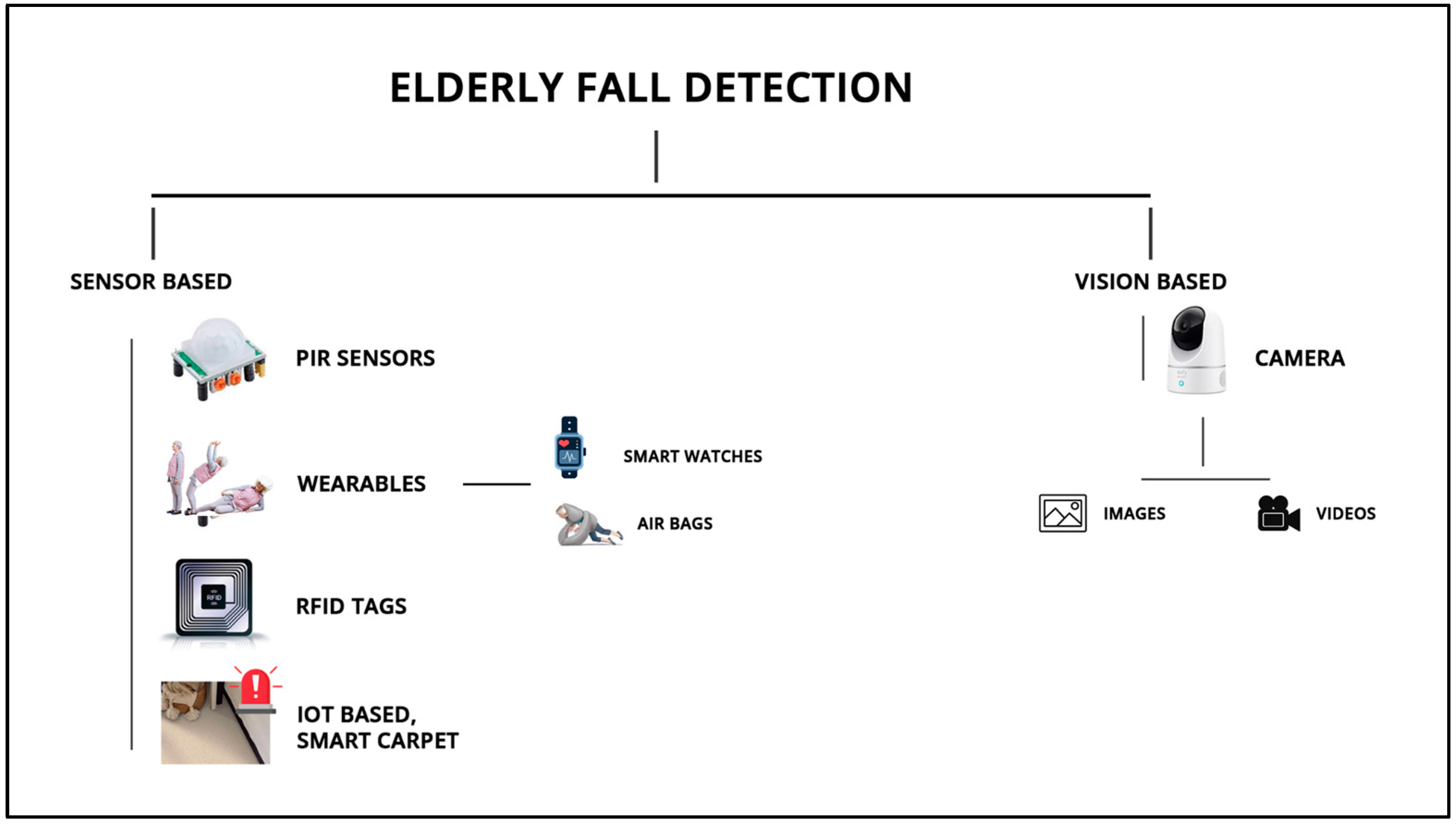

1. Introduction

1.1. Motivation

1.2. Contribution

- We use sensor-based technologies, namely RFID tags and readers, which do not intrude on the privacy of the elderly. Earlier vision-based solutions rely on constant monitoring, which can encroach on privacy and make elderly people uncomfortable in their daily lives, and they often cost more. Our approach is designed to be unobtrusive.

- Our solution does not require the elderly to wear any device or gadget. The RFID tags are integrated into the smart carpet, so residents can carry on with their daily lives unencumbered. Requiring individuals to wear a device at all times can be frustrating, and as people age it becomes progressively harder to remember to wear one consistently.

- To improve the accuracy of elderly fall detection, we employ machine learning and deep learning classifiers. The data come from a dataset of 13 participants who voluntarily performed both falling and walking activities.

- To address problems associated with elderly falls, such as long periods spent unattended after a fall, this study introduces IoT-based methods that detect fall events using RFID tags and RFID readers. When a fall event is detected, an alarm is generated to notify caregivers.

1.3. Paper Organization

2. Related Work

3. Proposed Methodology

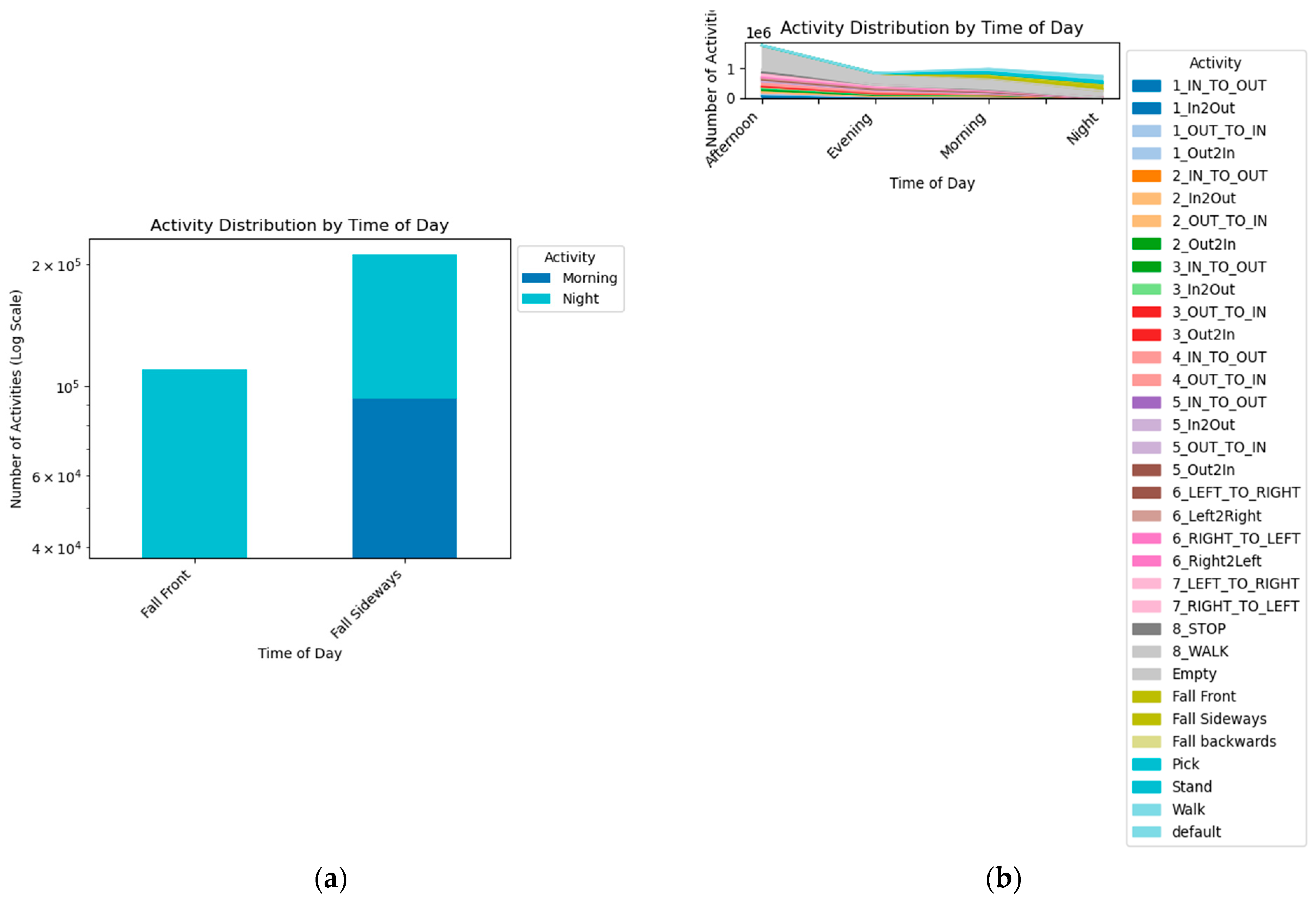

3.1. Data Collection

- (a) Smart Carpet dataset: This dataset consists of data collected from young volunteers falling and walking on the RFID tag-embedded smart carpet. The thirteen participants are identified by number.

- (b) Dataset organization:

  - Fall: This directory contains simulated fall data. The multiple files in each numbered directory correspond to the different types of activities performed by a single participant.

  - Walking: This directory contains data on comprehensive walking patterns. The multiple files in each numbered directory are the different walking patterns.

- (c) Dataset format: All values are stored as comma-separated values, and the dataset contains nine columns:

- Sequence no.: Sequence number of the received observation.

- Timestamp: Time at which the observation was recorded, as given by the data collection computer.

- Mode: Class label.

- Epc: ID of the tag.

- readerID: ID of the RFID reader.

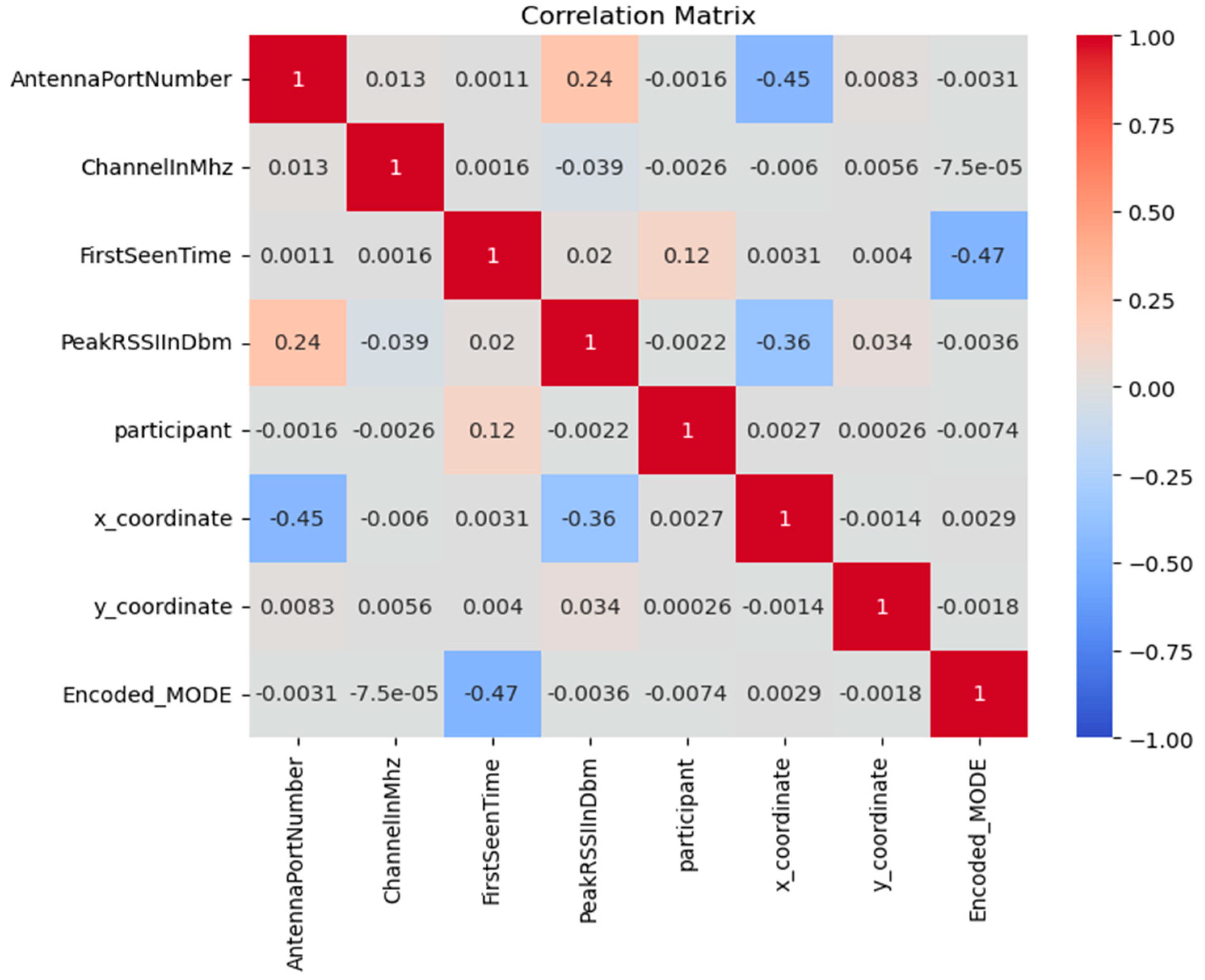

- AntennaPortNumber: Port number of the antenna that captured the observation.

- ChannelInMhz: RFID reader transmission frequency.

- FirstSeenTime: The time at which the RFID reader first observed the RFID tag during the current event cycle, expressed in nanoseconds since the Epoch.

- PeakRSSIInDbm: Maximum value of the RSSI received during a given event cycle.
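The nine-column format described above can be read with a few lines of Python. The following is a minimal sketch, not the authors' loader: it assumes the columns appear in the order listed, that the timestamp can be kept as plain text, and that any header row can simply be skipped. The `Observation` class, the `parse_observations` function, and the sample row are hypothetical names and values introduced only for illustration.

```python
import csv
import io
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    sequence_no: int        # Sequence no.
    timestamp: str          # Timestamp (kept as text; its format is not specified)
    mode: str               # Mode (class label)
    epc: str                # Epc (tag ID)
    reader_id: str          # readerID
    antenna_port: int       # AntennaPortNumber
    channel_mhz: float      # ChannelInMhz
    first_seen_time: int    # FirstSeenTime
    peak_rssi_dbm: float    # PeakRSSIInDbm


def parse_observations(text: str) -> List[Observation]:
    """Parse comma-separated rows, assuming the nine columns appear in the listed order."""
    observations = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) != 9:
            continue  # skip blank or malformed lines
        try:
            observations.append(Observation(
                int(row[0]), row[1], row[2], row[3], row[4],
                int(row[5]), float(row[6]), int(row[7]), float(row[8]),
            ))
        except ValueError:
            continue  # skip a header row or rows with non-numeric fields
    return observations


# Hypothetical row, invented for illustration only (not taken from the dataset).
SAMPLE = "1,2016-01-01T10:00:00,fall,TAG-0001,reader-1,2,920.25,1451642400000000,-58.5\n"
observations = parse_observations(SAMPLE)
print(observations[0].epc, observations[0].peak_rssi_dbm)
```

Keeping each row as a typed record makes later steps, such as grouping readings by tag or by antenna, easier to express.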

3.2. Data Integration and Framework Utilization

3.3. Data Preprocessing

3.4. Correlation Matrix

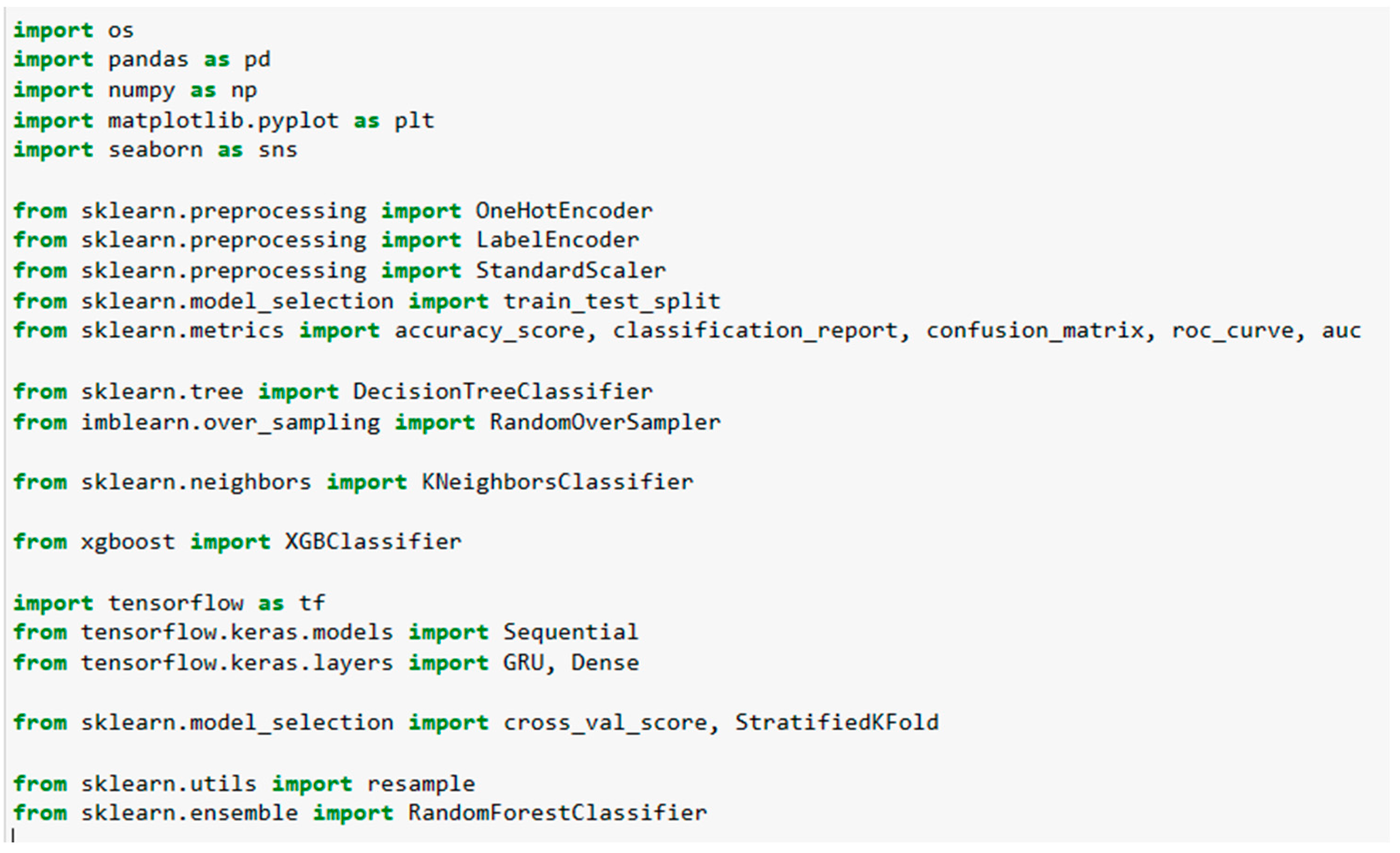

4. Implementation Details

5. ML and DL Classifiers Analysis and Discussion

5.1. Machine and Deep Learning Classifiers

5.1.1. Random Forest (RF)

- $p_i$ is the probability that a data point in the node belongs to class $i$.
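The equation this definition belongs to is not reproduced here. One node-impurity measure consistent with it is the Gini index, shown below as an assumption; entropy is the other common choice for decision-tree splits. The symbol $C$ (the number of classes) is introduced for illustration.

$$G = 1 - \sum_{i=1}^{C} p_i^{2}$$

A pure node, where a single class has $p_i = 1$, gives $G = 0$; for two equally likely classes, $G = 0.5$.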

5.1.2. K-Nearest Neighbors (KNN)

- $y_k$ is the predicted class label for a new data point $x$.

- $y_i$ is the class label of the $i$th training data point.

- $x_i$ is the $i$th training data point.

- $N_k(x)$ is the set of the K-Nearest Neighbors of $x$ in the training set.

- $\operatorname{mode}$ is the function that returns the most frequent element in a set.
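Taken together, these definitions describe a majority vote over the nearest neighbors, $y_k = \operatorname{mode}\{\, y_i : x_i \in N_k(x) \,\}$. The sketch below illustrates that rule in plain Python. It assumes Euclidean distance, which the definitions above do not specify, and the function name `knn_predict` and the toy two-dimensional points are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, x, k=3):
    """Return the most frequent label among the k training points closest to x."""
    # Rank training indices by Euclidean distance to x (distance metric is an assumption).
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))
    neighbor_labels = [train_y[i] for i in order[:k]]
    # Counter.most_common(1) plays the role of the mode function.
    return Counter(neighbor_labels).most_common(1)[0][0]


# Toy feature vectors and labels, invented for illustration only.
train_x = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0), (5.0, 6.0)]
train_y = ["walking", "walking", "fall", "fall", "fall"]

prediction = knn_predict(train_x, train_y, (5.2, 5.1), k=3)
print(prediction)
```

When two labels tie, `Counter.most_common` returns the one encountered first, so an odd `k` is the usual choice for a two-class problem.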

5.1.3. Gated Recurrent Unit (GRU)

- $z_t$ is the update gate at time step $t$.

- $x_t$ is the input at time step $t$.

- $h_{t-1}$ is the hidden state at time step $t-1$.

- $W_z$ is the weight matrix for the update gate.

- $U_z$ is the recurrent weight matrix for the update gate.

- $b_z$ is the bias term for the update gate.

- $\sigma$ is the sigmoid function.
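These symbols match the standard GRU update gate, reconstructed here from the definitions above since the original equation is not reproduced:

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right)$$

Because $\sigma$ maps to $(0, 1)$, each component of $z_t$ acts as a soft switch between keeping the previous hidden state and adopting the new candidate state.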

5.1.4. XGBoost

5.1.5. Logistic Regression (LR)

- $p(y = 1 \mid x)$ is the probability that a data point with features $x$ belongs to class 1.

- $y$ is the target variable, which is either 0 or 1.

- $x$ is the vector of features.

- $w_0$ is the bias term.

- $w_1$ is the weight for the first feature.

- $e$ is the base of the natural logarithm.
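These definitions correspond to the standard logistic (sigmoid) model. A reconstruction under that assumption follows; the number of features $n$ and the per-feature symbols $x_j$ and $w_j$ for $j > 1$ are introduced for illustration.

$$p(y = 1 \mid x) = \frac{1}{1 + e^{-(w_0 + w_1 x_1 + \dots + w_n x_n)}}$$

A data point is typically assigned to class 1 when this probability exceeds 0.5, which happens exactly when the linear term $w_0 + w_1 x_1 + \dots + w_n x_n$ is positive.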

5.1.6. Gradient Boosting (GB)

- $h(x)$ is the predicted label.

- $f(x)$ is the initial prediction.

- $m$ is the number of boosting steps.

- $\beta_i$ is the coefficient of the $i$th weak learner.

- $g(x;\theta_i)$ is the $i$th weak learner.

- $\theta_i$ are the parameters of the $i$th weak learner.
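Read together, these definitions describe the usual additive form of a boosted model, reconstructed here as an assumption since the original equation is not reproduced:

$$h(x) = f(x) + \sum_{i=1}^{m} \beta_i \, g(x;\theta_i)$$

Each boosting step adds one weak learner $g(x;\theta_i)$, scaled by $\beta_i$, to correct the residual errors of the model built so far.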

5.2. Classifiers Performance Assessment

6. Experimental Results and Discussions

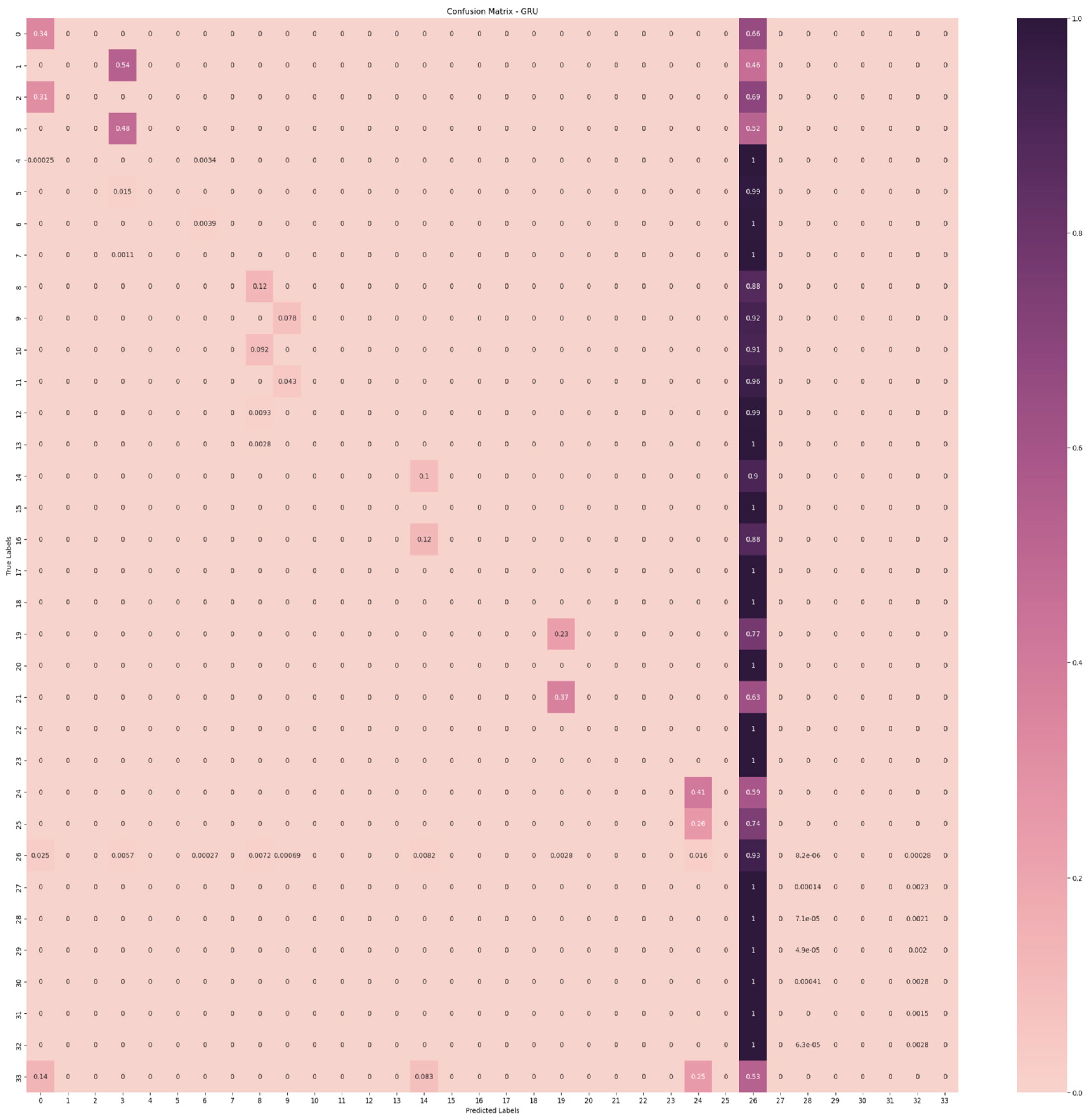

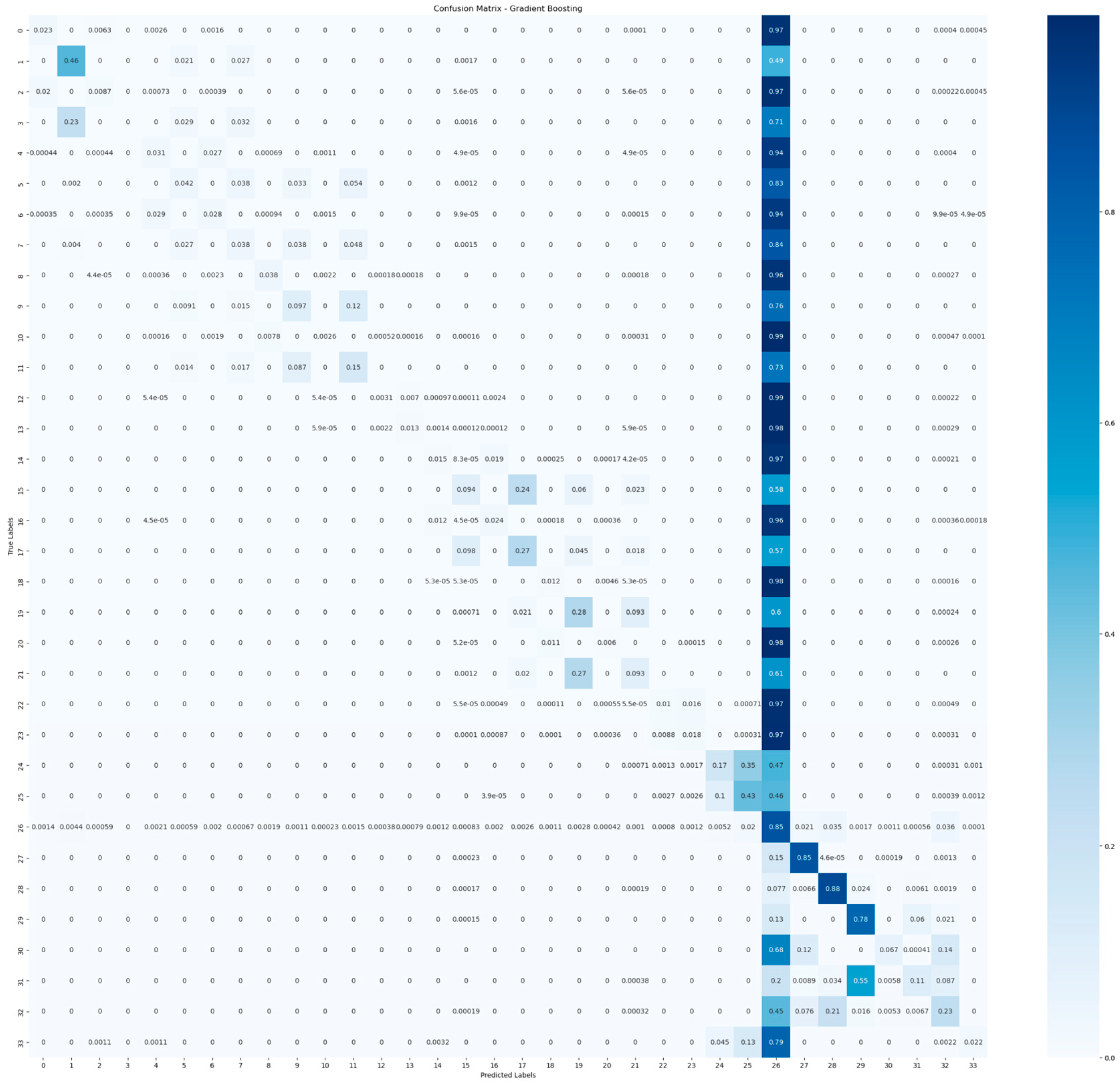

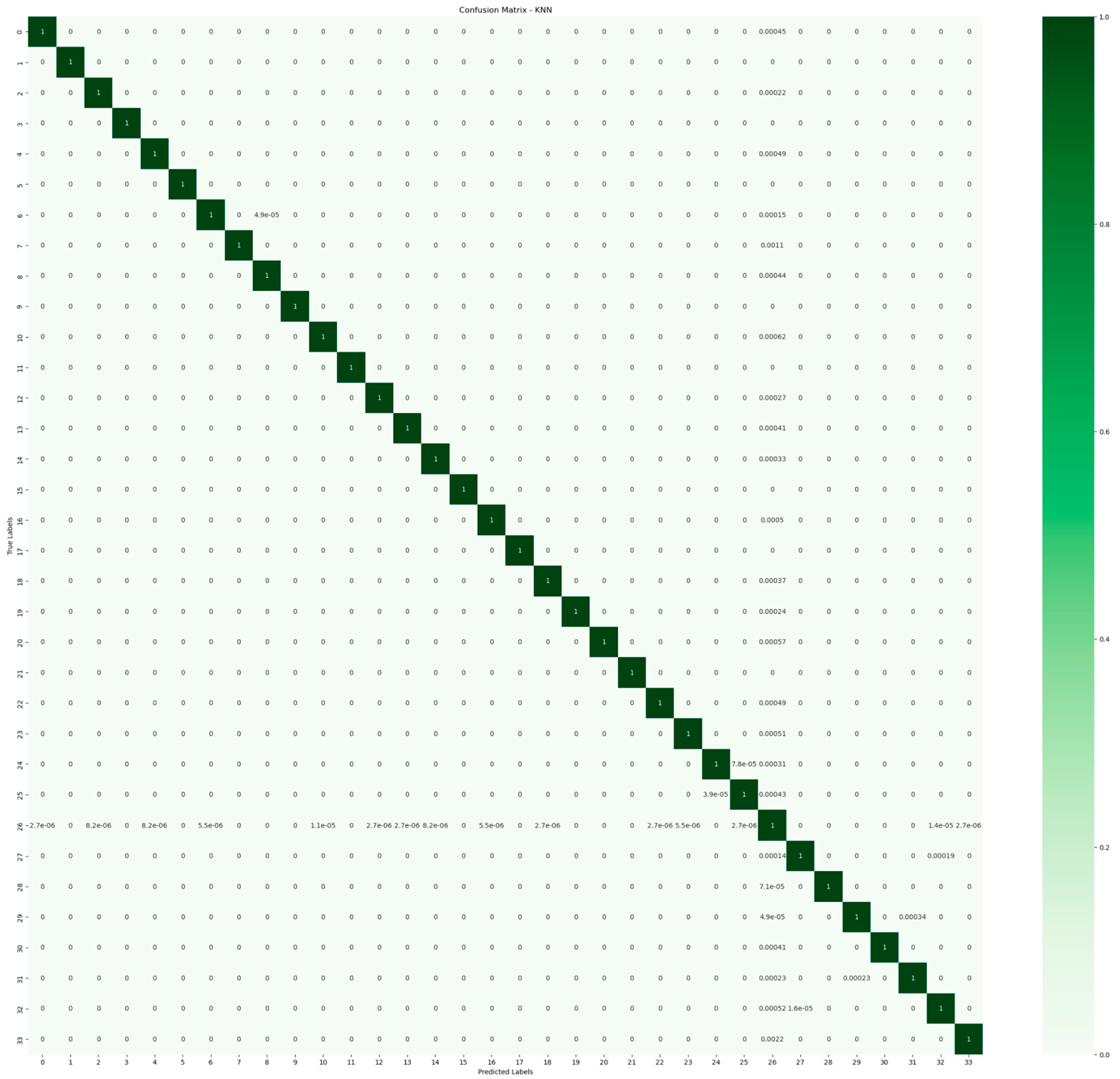

Confusion Matrix of ML and DL Models

7. Evaluating Parameters

- Accuracy is the number of correctly classified examples divided by the total number of examples in the dataset, as given in Equation (7).

- Precision is the fraction of examples predicted as positive that are truly positive; in retrieval terms, it is the average probability that a retrieved item is relevant, as expressed in Equation (8).

- Recall is the fraction of truly positive examples that are correctly identified; in retrieval terms, it is the average probability of complete retrieval, as defined in Equation (9).

- The F-Measure combines the precision and recall scores into a single value. The conventional F-Measure, their harmonic mean, is computed as in Equation (10).
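The four measures above can be computed directly from confusion-matrix counts. The sketch below uses the standard binary definitions, which is an assumption because Equations (7) to (10) are not reproduced here; the function name `classification_metrics` and the example counts are hypothetical.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Compute accuracy, precision, recall, and F1 from binary confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Invented counts for illustration: 8 true falls detected, 2 missed,
# 1 false alarm, and 9 walking samples correctly ignored.
metrics = classification_metrics(tp=8, tn=9, fp=1, fn=2)
print(metrics)
```

For a fall detector, recall is often the figure to watch, since a missed fall (a false negative) is usually costlier than a false alarm.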

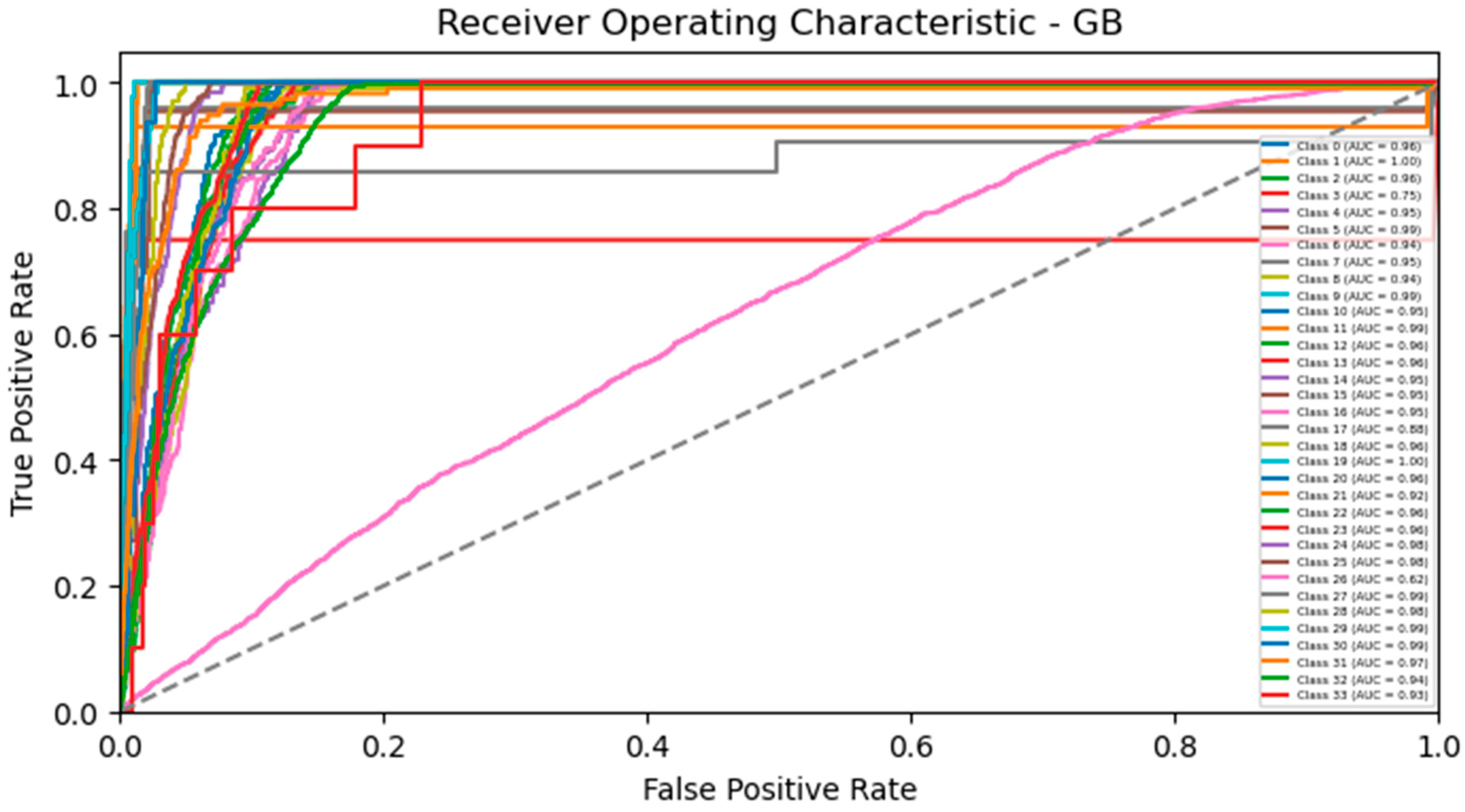

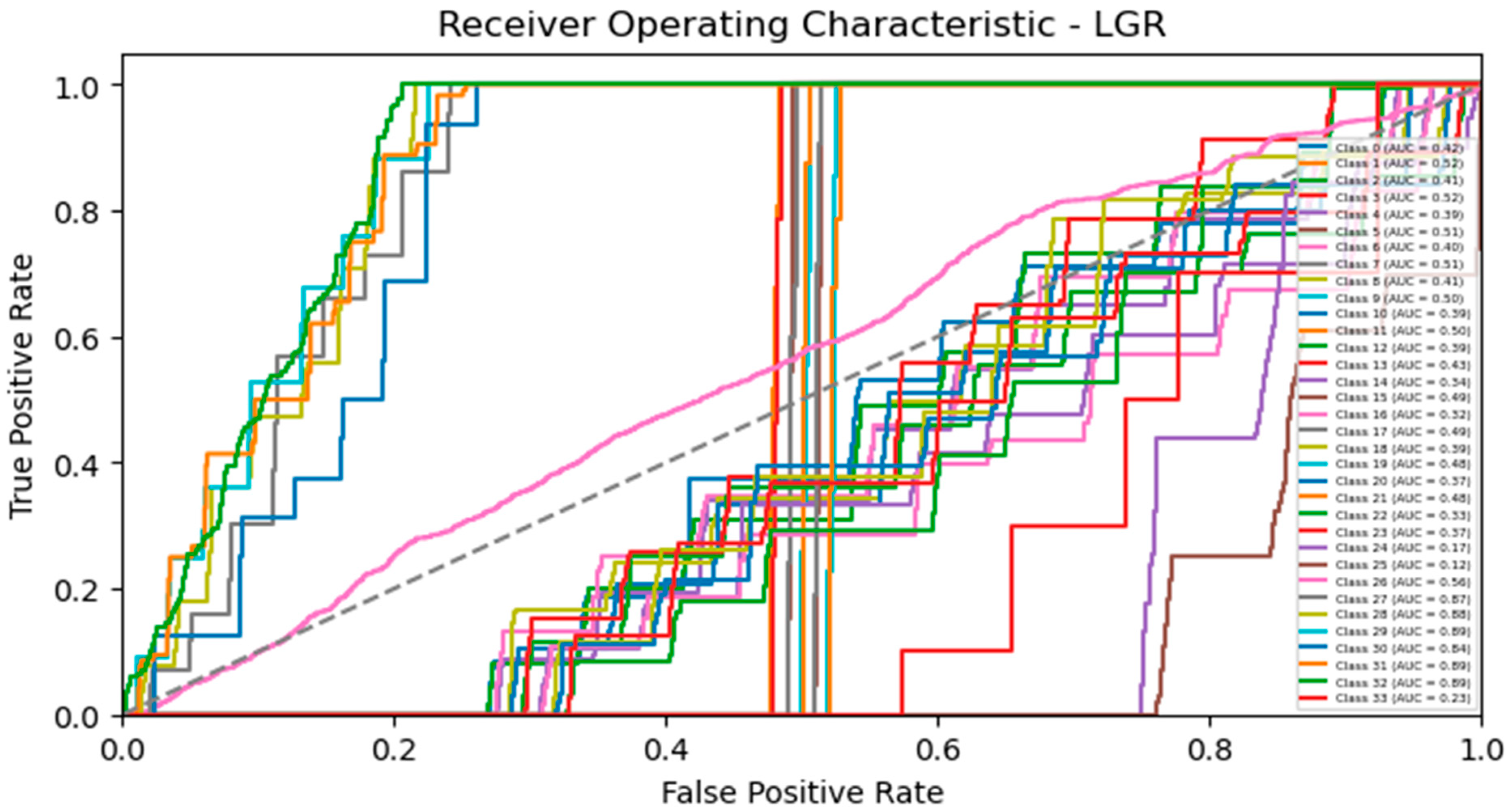

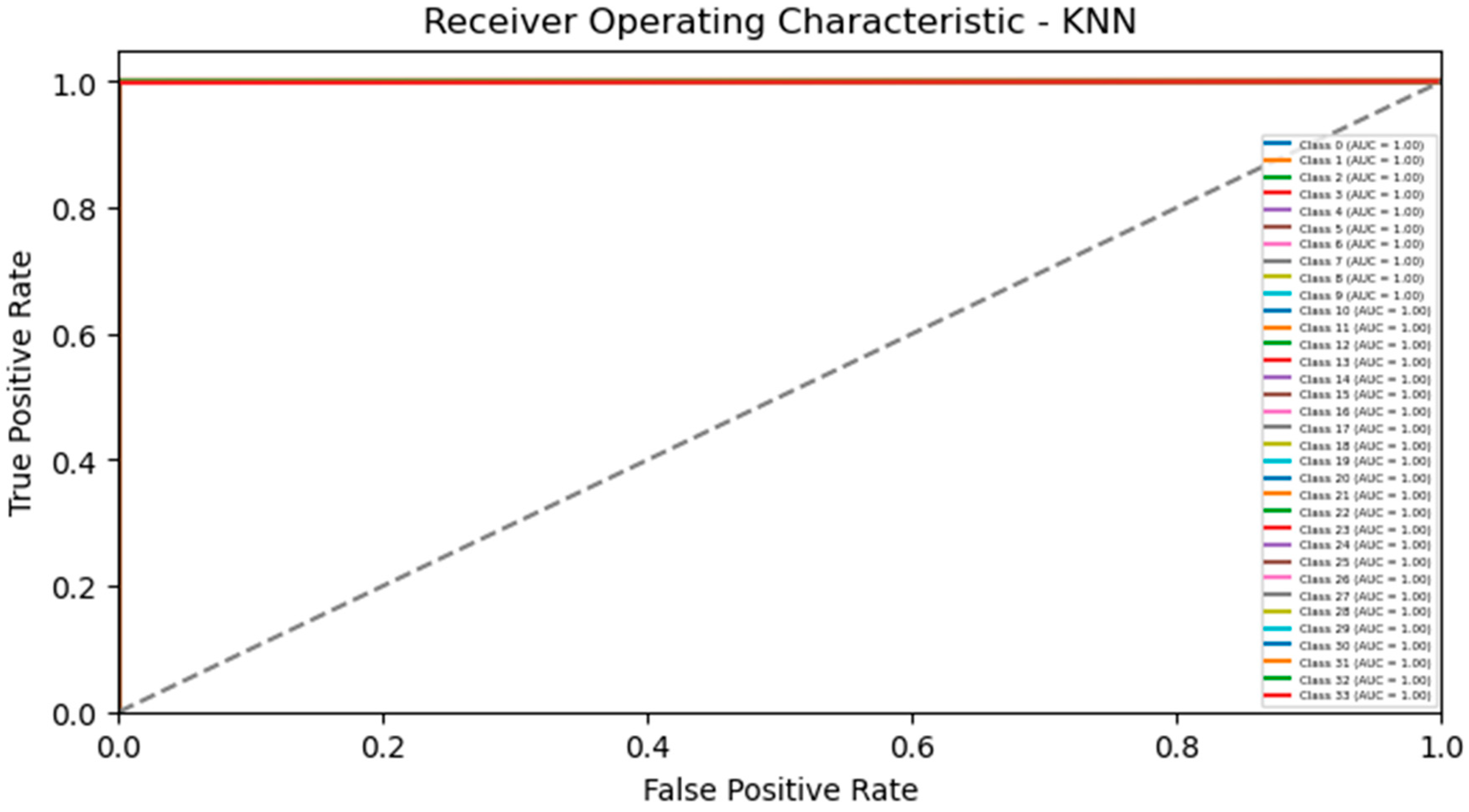

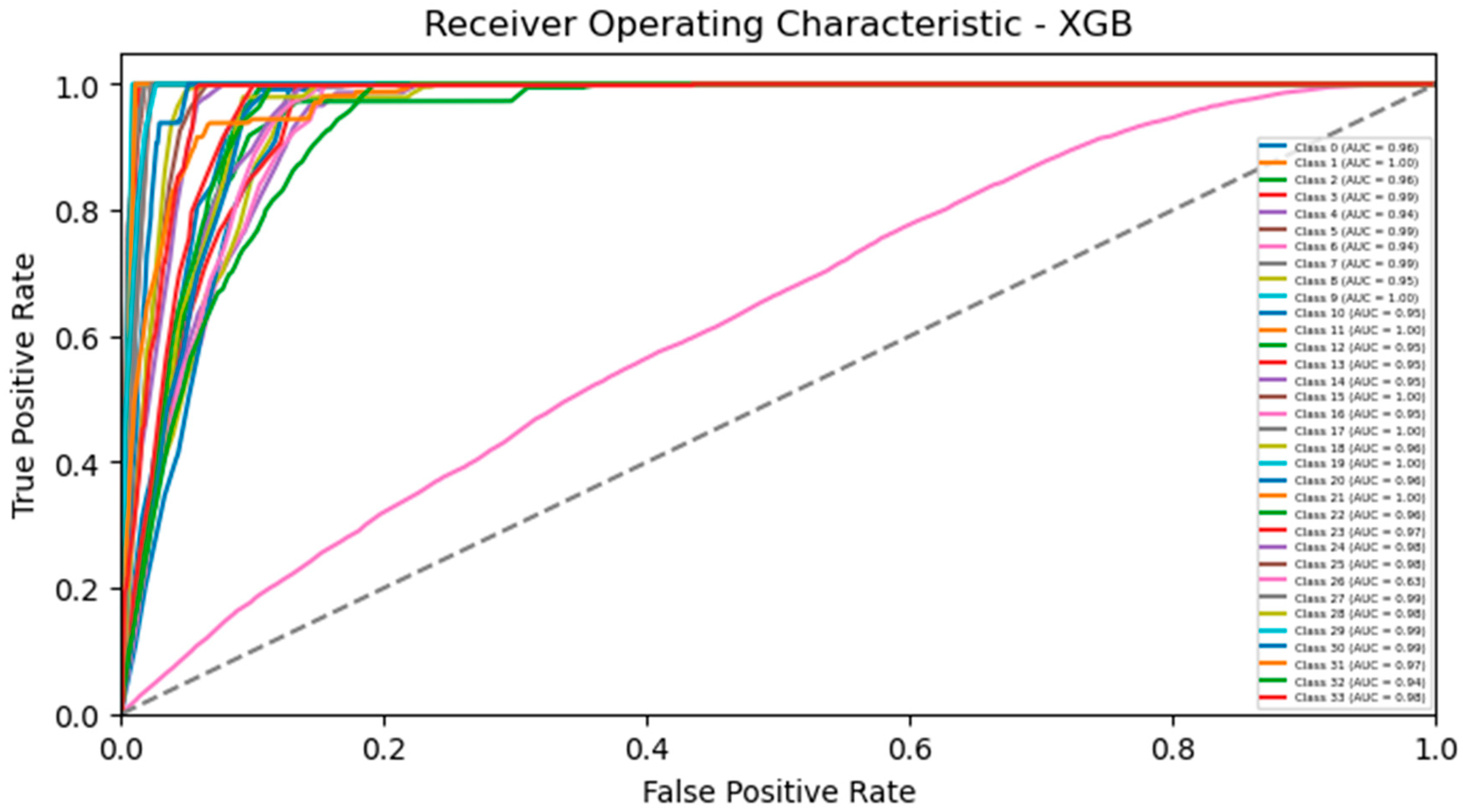

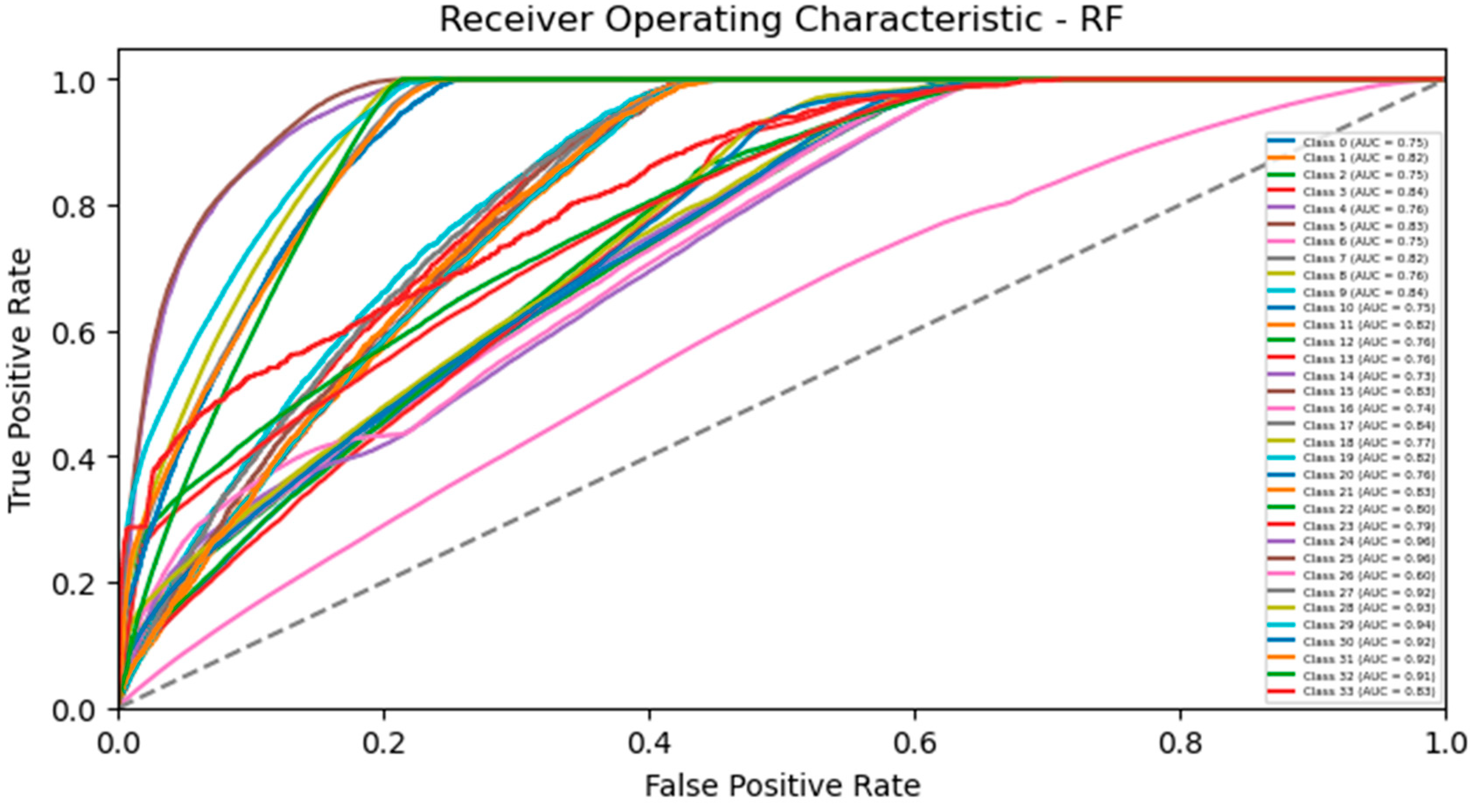

ROC Curves

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. United Nations. World Population Ageing 2019. 2019. Available online: https://www.un.org/en/development/desa/population/publications/pdf/ageing/WorldPopulationAgeing2019-Report.pdf (accessed on 2 August 2023).

2. World Health Organization. Falls. 2021. Available online: https://www.cdc.gov/injury/features/older-adult-falls/index.html#:~:text=About%2036%20million%20falls%20are,departments%20for%20a%20fall%20injury (accessed on 2 August 2023).

3. Stevens, J.A.; Corso, P.S.; Finkelstein, E.A.; Miller, T.R. The costs of fatal and non-fatal falls among older adults. Inj. Prev. 2006, 12, 290–295.

4. Sterling, D.A.; O’Connor, J.A.; Bonadies, J. Geriatric falls: Injury severity is high and disproportionate to mechanism. J. Trauma Acute Care Surg. 2001, 50, 116–119.

5. Tinetti, M.E.; Speechley, M.; Ginter, S.F. Risk factors for falls among elderly persons living in the community. N. Engl. J. Med. 1988, 319, 1701–1707.

6. Vyrostek, S.B.; Annest, J.L.; Ryan, G.W. Surveillance for fatal and nonfatal injuries—United States. Surveill. Summ. 2004, 53, 1–57.

7. Alam, E.; Sufian, A.; Leo, M. Vision-based Human Fall Detection Systems using Deep Learning: A Review. arXiv 2022, arXiv:2207.10952.

8. Kellogg International Working Group on the Prevention of Falls by the Elderly. The prevention of falls in later life. Dan Med. Bull. 1987, 34 (Suppl. S4), 1–24.

9. Rubenstein, L.Z.; Powers, C.M.; MacLean, C.H. Quality indicators for the management and prevention of falls and mobility problems in vulnerable elders. Ann. Intern. Med. 2001, 135, 686–693.

10. Maki, B.E.; McIlroy, W.E. Control of compensatory stepping reactions: Age-related impairment and the potential for remedial intervention. Physiother. Theory Pract. 1999, 15, 69–90.

11. Heart org. Falls Can Be a Serious, Poorly Understood Threat to People with Heart Disease. 2022. Available online: https://www.heart.org/en/news/2022/05/19/falls-can-be-a-serious-poorly-understood-threat-to-people-with-heart-disease (accessed on 4 August 2023).

12. Santhagunam, S.N.; Li, E.P.; Buschert, K.; Davis, J.C. A theoretical framework to improve adherence among older adults to recommendations received at a falls prevention clinic: A narrative review. Appl. Nurs. Res. 2021, 62, 151493.

13. Ajami, S.; Rajabzadeh, A. Radio Frequency Identification (RFID) Technology and Patient Safety. J. Res. Med. Sci. 2013, 18, 809–813.

14. Sabry, F.; Eltaras, T.; Labda, W.; Alzoubi, K.; Mallui, Q. Machine Learning for Healthcare Wearable Devices: The Big Picture. J. Healthc. Eng. 2022, 2022, 4653923.

15. Bourke, A.K.; O’Brien, J.V.; Lyons, G.M. Evaluation of a threshold-based tri-axial accelerometer fall detection algorithm. Gait Posture 2007, 26, 194–199.

16. Bagalà, F.; Becker, C.; Cappello, A.; Chiari, L.; Aminian, K.; Hausdorff, J.M.; Zijlstra, W.; Klenk, J. Evaluation of accelerometer-based fall detection algorithms on real-world falls. PLoS ONE 2012, 7, e37062.

17. Del Rosario, M.B.; Redmond, S.J.; Lovell, N.H.; Yang, A. Tracking the evolution of smartphone sensing for monitoring human movement. Pervasive Mob. Comput. 2015, 15, 18901–18933.

18. Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

19. Cameron, I.D.; Dyer, S.M.; Panagoda, C.E.; Murray, G.R.; Hill, K.D.; Cumming, R.G.; Kerse, N. Interventions for preventing falls in older people in care facilities and hospitals. Cochrane Database Syst. Rev. 2018, 9, CD005465.

20. Del Rosario, M.B.; Redmond, S.J.; Lovell, N.H.; Yang, A. What Do Clinicians Want? Detection of Activities of Daily Living in People with Chronic Obstructive Pulmonary Disease Using Wearable Devices. JMIR mHealth uHealth 2023, 6, e113.

21. Howcroft, J.; Kofman, J.; Lemaire, E.D. Review of fall risk assessment in geriatric populations using inertial sensors. J. NeuroEngineering Rehabil. 2016, 10, 91.

22. Zheng, L.; Zhao, J.; Dong, F.; Huang, Z.; Zhong, D. Fall Detection Algorithm Based on Inertial Sensor and Hierarchical Decision. Sensors 2023, 23, 107.

23. Kwolek, B.; Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Programs Biomed. 2014, 117, 489–501.

24. Aziz, O.; Park, E.J.; Mori, G.; Robinovitch, S.N.; Delp, E.J. A comprehensive dataset of falls in the real-world for developing human fall detection algorithms. Proc. Natl. Acad. Sci. USA 2019, 116, 25064–25073.

25. Gjoreski, M.; Lustrek, M.; Gams, M. Accelerometer placement for posture recognition and fall detection. J. Ambient. Intell. Smart Environ. 2011, 3, 173–190.

26. Cucchiara, R.; Grana, C.; Prati, A. Detecting moving objects, ghosts, and shadows in video streams. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1337–1342.

27. Han, J.H.; Park, S.H.; Lee, S.W. Review of machine learning algorithms for the fall detection and fall risk assessment of the elderly. Sensors 2019, 19, 3146.

28. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

29. Somkunwar, R.K.; Thorat, N.; Pimple, J.; Dhumal, R.; Choudhari, Y. A Novel Based Human Fall Detection System Using Hybrid Approach. J. Data Acquis. Process. 2023, 38, 3985.

30. Kaur, P.; Wang, Q.; Shi, W. Fall detection from audios with Audio Transformers. arXiv 2022, arXiv:2208.10659.

31. Nooruddin, S.; Islam, M.M.; Sharna, F.A. An IoT based device-type invariant fall detection system. Internet Things 2020, 9 (Suppl. S2), 100130.

32. Clemente, J.; Li, F.; Valero, M.; Song, W. Smart seismic sensing for indoor fall detection, location, and notification. IEEE J. Biomed. Health Inform. 2019, 24, 524–532.

33. Kumar, A.; De, A.; Gill, R. Internet of things in healthcare: Technologies, applications, opportunities and challenges. AIP Conf. Proc. 2023, 2495, 020043.

34. Gutiérrez, J.J.; Echeverría, J.C.; García, J.M. Implementation of an IoT system for the detection of falls in the elderly. Sensors 2019, 19, 4322.

35. Nishat Tasnim, N.; Hanada, E. The methods of fall detection: A literature review. Sensors 2023, 23, 5212.

36. Tapia, E.M.; Intille, S.S.; Haskell, W. Real-time recognition of physical activities and their intensities using wireless accelerometers and a heart rate monitor. In Proceedings of the 4th International Conference on Wearable and Implantable Body Sensor Networks, Aachen, Germany, 26–28 March 2007; pp. 1–4.

37. Dehzangi, O.; Azimi, I.; Bahadorian, B. Activity recognition in patients with neurodegenerative diseases: An ensemble method. J. Ambient. Intell. Humaniz. Comput. 2019, 2019, 1–11.

38. Maqbool, O.; Mahmud, S.; Hu, J. Fall detection and prevention mechanisms in IoT and sensor networks: A survey. Sensors 2019, 19, 3416.

39. Figueiredo, R.; Cruz, J.F. A low-cost IoT platform for fall detection and fall risk assessment in community-dwelling elderly. IEEE Access 2019, 7, 9652–9665.

40. Santos, G.L.; Endo, P.T.; Monteiro, K.H.D.C.; Rocha, E.D.S.; Silva, I.; Lynn, T. Accelerometer-based human fall detection using convolutional neural networks. Sensors 2019, 19, 1644.

41. Deep, S.; Zheng, X.; Karmakar, C.; Yu, D.; Hamey, L.G.; Jin, J. A survey on anomalous behavior detection for elderly care using dense-sensing networks. IEEE Commun. Surv. Tutor. 2019, 22, 352–370.

42. Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2019, 119, 3–11.

43. Zhang, Z.; Yang, J. A survey on multi-view learning. arXiv 2018, arXiv:1304.5634.

44. Sharif Razavian, A.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 806–813.

45. Yuan, Y.; Jiang, C. Towards automatic data annotation: A survey. arXiv 2018, arXiv:1809.09057.

46. Igual, R.; Medrano, C.; Plaza, I.; Castro, M. Challenges, issues and trends in fall detection systems. Biomed. Eng. Online 2013, 12, 66.

47. Yavari, A.N.; Mahdiraji, M.S. Review on Fall Detection Systems: Techniques, Challenges, and Open Issues. In Smart Sensing for Monitoring Elderly People; Elsevier: Amsterdam, The Netherlands, 2021; pp. 65–89.

48. Skubic, M.; Guevara, R.D.; Rand, M. Automated health alerts using in-home sensor data for embedded health assessment. IEEE J. Transl. Eng. Health Med. 2017, 5, 1800110.

49. Manga, S.; Muthavarapu, N.; Redij, R.; Baraskar, B.; Kaur, A.; Gaddam, S.; Gopalakrishnan, K.; Shinde, R.; Rajagopal, A.; Samaddar, P.; et al. Estimation of Physiologic Pressures: Invasive and Non-Invasive Techniques, AI Models, and Future Perspectives. Sensors 2023, 23, 5744.

50. Campbell, A.J.; Robertson, M.C.; Gardner, M.M.; Norton, R.N.; Buchner, D.M. Falls prevention over 2 years: A randomized controlled trial in women 80 years and older. Age Ageing 1999, 28, 513–518.

51. Lord, S.R.; Tiedemann, A.; Chapman, K.; Munro, B.; Murray, S.M. The effect of an individualized fall prevention program on fall risk and falls in older people: A randomized, controlled trial. J. Am. Geriatr. Soc. 2005, 53, 1296–1304.

52. Kulurkar, P.; Kumar Dixit, C.; Bharathi, V.C.; Monikavishnuvarthini, A.; Dhakne, A.; Preethi, P. AI based elderly fall prediction system using wearable sensors: A smart home-care technology with IOT. Meas. Sens. 2023, 25, 100614.

53. Delahoz, Y.S.; Labrador, M.A. Survey on Fall Detection and Fall Prevention Using Wearable and External Sensors. Sensors 2014, 14, 19806–19842.

54. Usmani, S.; Saboor, A.; Haris, M.; Khan, M.A.; Park, H. Latest Research Trends in Fall Detection and Prevention Using Machine Learning: A Systematic Review. Sensors 2021, 21, 5134.

55. Badgujar, S.; Pillai, A. Fall Detection for Elderly People using Machine Learning. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020.

56. Zhu, L.; Zhou, P.; Pan, A.; Guo, J.; Sun, W.; Wang, L.; Chen, X.; Liu, Z. A Survey of Fall Detection Algorithm for Elderly Health Monitoring. In Proceedings of the 2015 IEEE Fifth International Conference on Big Data and Cloud Computing, Dalian, China, 26–28 August 2015.

57. Khan, M.K.J.; Ud Din, N.; Bae, S.; Yi, J. Interactive Removal of Microphone Object in Facial Images. Electronics 2019, 8, 1115.

58. Hsu, D.; Shi, K.; Sun, X. Linear regression without correspondence. arXiv 2017, arXiv:1705.07048.

59. Wickramasinghe, A.; Torres, R.L.S.; Ranasinghe, D.C. Recognition of falls using dense sensing in an ambient assisted living environment. Pervasive Mob. Comput. 2017, 34, 14–24.

60. Lee, T.; Mihailidis, A. An intelligent emergency response system: Preliminary development and testing of automated fall detection. J. Telemed. Telecare 2005, 11, 194–198.

61. Rougier, C.; Meunier, J.; St-Arnaud, A.; Rousseau, J. Robust video surveillance for fall detection based on human shape deformation. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 611–622.

62. Wang, S.; Chen, L.; Zhou, Z.; Sun, X.; Dong, J. Human fall detection in surveillance video based on PCANet. Multimed. Tools Appl. 2016, 75, 11603–11613.

63. Lee, D.W.; Jun, K.; Naheem, K.; Kim, M.S. Deep Neural Network–Based Double-Check Method for Fall Detection Using IMU-L Sensor and RGB Camera Data. IEEE Access 2021, 9, 48064–48079.

64. Gutiérrez, J.; Rodríguez, V.; Martin, S. Comprehensive review of vision-based fall detection systems. Sensors 2021, 21, 947.

65. Zhou, Z.; Stone, E.E.; Skubic, M.; Keller, J.; He, Z. Nighttime in-home action monitoring for eldercare. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–1 September 2011; IEEE: New York, NY, USA, 2011; pp. 5299–5302.

66. Alhimale, L.; Zedan, H.; Al-Bayatti, A. The implementation of an intelligent and video-based fall detection system using a neural network. Appl. Soft Comput. 2014, 18, 59–69.

67. Chen, J.; Romero, R.; Thompson, L.A. Motion Analysis of Balance Pre and Post Sensorimotor Exercises to Enhance Elderly Mobility: A Case Study. Appl. Sci. 2023, 13, 889.

68. Wang, X.; Ellul, J.; Azzopardi, G. Elderly fall detection systems: A literature survey. Front. Robot. AI 2020, 7, 71.

69. Khraief, C.; Benzarti, F.; Amiri, H. Elderly fall detection based on multi-stream deep convolutional networks. Multimed. Tools Appl. 2020, 79, 19537–19560.

70. Yacchirema, D.; de Puga, J.S.; Palau, C.; Esteve, M. Fall detection system for elderly people using IoT and ensemble machine learning algorithm. Pers. Ubiquitous Comput. 2019, 23, 801–817.

71. Hussain, F.; Umair, M.B.; Ehatisham-ul-Haq, M.; Pires, I.M.; Valente, T.; Garcia, N.M.; Pombo, N. An efficient machine learning-based elderly fall detection algorithm. arXiv 2019, arXiv:1911.11976.

72. Bridenbaugh, S.A.; Kressig, R.W. Laboratory review: The role of gait analysis in seniors’ mobility and fall prevention. Gerontology 2011, 57, 256–264.

73. Baldewijns, G.; Claes, V.; Debard, G.; Mertens, M.; Devriendt, E.; Milisen, K.; Tournoy, J.; Croonenborghs, T.; Vanrumste, B. Automated in-home gait transfer time analysis using video cameras. J. Ambient. Intell. Smart Environ. 2016, 8, 273–286.

74. Yang, L.; Ren, Y.; Zhang, W. 3D depth image analysis for indoor fall detection of elderly people. Digit. Commun. Netw. 2016, 2, 24–34.

75. Leone, A.; Rescio, G.; Caroppo, A.; Siciliano, P.; Manni, A. Human Postures Recognition by Accelerometer Sensor and ML Architecture Integrated in Embedded Platforms: Benchmarking and Performance Evaluation. Sensors 2023, 23, 1039.

76. Shi, G.; Chan, C.S.; Luo, Y.; Zhang, G.; Li, W.J.; Leong, P.H.; Leung, K.S. Development of a human airbag system for fall protection using mems motion sensing technology. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; IEEE: New York, NY, USA, 2006; pp. 4405–4410.

77. Shi, G.; Chan, C.S.; Li, W.J.; Leung, K.S.; Zou, Y.; Jin, Y. Mobile human airbag system for fall protection using MEMS sensors and embedded SVM classifier. IEEE Sens. J. 2009, 9, 495–503.

78. Saleh, M.; Jeannès, R.L.B. Elderly fall detection using wearable sensors: A low cost highly accurate algorithm. IEEE Sens. J. 2019, 19, 3156–3164.

79. Wang, Z.; Ramamoorthy, V.; Gal, U.; Guez, A. Possible life saver: A review on human fall detection technology. Robotics 2020, 9, 55.

80. Chuma, E.L.; Roger, L.L.B.; De Oliveira, G.G.; Iano, Y.; Pajuelo, D. Internet of things (IoT) privacy–protected, fall-detection system for the elderly using the radar sensors and deep learning. In Proceedings of the 2020 IEEE International Smart Cities Conference (ISC2), Piscataway, NJ, USA, 28 September–1 October 2020; IEEE: New York, NY, USA, 2020; pp. 1–4.

81. Yu, X.; Jang, J.; Xiong, S. Machine learning-based pre-impact fall detection and injury prevention for the elderly with wearable inertial sensors. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Virtually, 25–29 July 2021; Springer: Cham, Switzerland, 2021; pp. 278–285.

82. Sankaran, S.; Thiyagarajan, A.P.; Kannan, A.D.; Karnan, K.; Krishnan, S.R. Design and Development of Smart Airbag Suit for Elderly with Protection and Notification System. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; IEEE: New York, NY, USA, 2021; pp. 1273–1278.

83. Chu, C.T.; Chang, C.H.; Chang, T.J.; Liao, J.X. Elman neural network identify elders fall signal base on second-order train method. In Proceedings of the 2017 6th International Symposium on Next Generation Electronics (ISNE), Keelung, Taiwan, 23–25 May 2017; IEEE: New York, NY, USA, 2017; pp. 1–4.

84. Sixsmith, A.; Johnson, N. A smart sensor to detect the falls of the elderly. IEEE Pervasive Comput. 2004, 3, 42–47.

85. Hayashida, A.; Moshnyaga, V.; Hashimoto, K. New approach for indoor fall detection by infrared thermal array sensor. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; IEEE: New York, NY, USA, 2017; pp. 1410–1413.

86. Shinmoto Torres, R.L.; Wickramasinghe, A.; Pham, V.N.; Ranasinghe, D.C. What if Your Floor Could Tell Someone You Fell? A Device-Free Fall Detection Method. In Artificial Intelligence in Medicine; Springer: Berlin/Heidelberg, Germany, 2015; pp. 86–95.

87. Selvaraj, S.; Sundaravaradhan, S. Challenges and opportunities in IoT healthcare systems: A systematic review. SN Appl. Sci. 2020, 2, 139.

88. ul Hassan, C.A.; Iqbal, J.; Hussain, S.; AlSalman, H.; Mosleh, M.A.; Sajid Ullah, S. A computational intelligence approach for predicting medical insurance cost. Math. Probl. Eng. 2021, 2021, 1162553.

89. Hassan, C.A.U.; Karim, F.K.; Abbas, A.; Iqbal, J.; Elmannai, H.; Hussain, S.; Ullah, S.S.; Khan, M.S. A Cost-Effective Fall-Detection Framework for the Elderly Using Sensor-Based Technologies. Sustainability 2023, 15, 3982.

90. Hassan, C.A.U.; Iqbal, J.; Irfan, R.; Hussain, S.; Algarni, A.D.; Bukhari, S.S.H.; Alturki, N.; Ullah, S.S. Effectively predicting the presence of coronary heart disease using machine learning classifiers. Sensors 2022, 22, 7227.

| Author | Methods/Classifiers | Hardware/Evaluation Parameters | Limitations | Date of Publication |
|---|---|---|---|---|
| [59] | Radio-Frequency Identification tags (RFID). | RFID tags can provide valuable information through parameters such as Doppler Frequency Value (DFV) and Received Signal Strength (RSS). These parameters help in tracking and identifying objects or assets equipped with RFID tags in various applications, including inventory management, access control, and asset tracking. | Inadequate accuracy. The authors employed various equipment and devices in their study; nevertheless, the achieved accuracy levels were not sufficiently high. | 16 June 2016 |
| [60] | Metaheuristic algorithms are employed in the solution. | The floor RFID technique involves arranging RFID tags in a two-way grid on a smart carpet. This setup allows for efficient and location-based tracking and monitoring of objects or individuals as they move across the carpeted area. | However, the accuracy achieved with this floor-based RFID technique is often insufficient or not up to the desired level. | February 2005 |
| [61,62,63,64,65,66,67,68,69,70,71,72,73,74,75] | A digital camera is used to capture images for a vision-based system, which then employs 3D image shape analysis techniques. This analysis involves utilizing algorithms such as PCA, SVM, and NN to extract valuable information and make assessments based on the captured images. | A vision-based system relies on visual input from cameras or other optical sensors to perform its functions, such as fall detection or monitoring activities. | Cameras installed in the ceiling can detect fall cases with accuracies of 77% and 90%, respectively. However, it is important to note that this level of monitoring can potentially impact the privacy of the elderly and may also involve constant surveillance of their daily activities. | 2007, 2019, 2020 |
| [76,77,78,79] | Wearable-based solutions are designed to protect the head and thighs. | Sensor-based devices designed to detect fall events typically utilize both an accelerometer and angular velocity measurements. | Wearing both an airbag and a device at all times may be impractical. | 2007, 2011, 2020 |
| [79,80,81,82,83] | Fall detection system incorporates three-dimensional MEMS (Micro–Electro–Mechanical Systems) technology, Bluetooth connectivity, accelerometers, a Microcontroller Unit (MCU), gyroscopes, and high-speed cameras. | High-speed cameras are employed to record and analyze human motion. | Wearing such a device at all times may indeed be impractical. | 2021, 2009, 2013, 2019 |
| [84] | A neural network algorithm is employed for fall detection. | Implemented within a wearable device, this fall detection system is integrated with Bluetooth low-energy technology. | Wearing an exoskeleton all the time may appear impractical. | April–June 2004 |
| [85] | Smart inactivity detection using array-based detectors. | The Intelligent Fall Indicator System relies on an array of infrared detectors for fall detection and notification. | Impact of infrared radiation on elderly fall. | August 2017 |
| Proposed Methodology | IoT-based solution: RFID tags embedded in the smart carpet and an RFID reader. For analysis: machine learning and deep learning classifiers. | Accuracy, precision, and recall (sensitivity). KNN achieves 99% accuracy in the detection of elderly fall events. | IoT-based system that is highly matured and state-of-the-art, according to the nature of the data and data representative algorithms. |  |

| Classifier | Accuracy | Recall | Precision | F1-Score |
|---|---|---|---|---|
| GRU | 41.37% | 0.410 | 0.235 | 0.265 |
| XGB | 48.42% | 0.485 | 0.332 | 0.361 |
| LR | 41.86% | 0.419 | 0.176 | 0.248 |
| KNN | 99.97% | 0.999 | 0.999 | 0.999 |
| GB | 48.9% | 0.492 | 0.176 | 0.392 |
| RF | 42.75% | 0.428 | 0.483 | 0.268 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alharbi, H.A.; Alharbi, K.K.; Hassan, C.A.U. Enhancing Elderly Fall Detection through IoT-Enabled Smart Flooring and AI for Independent Living Sustainability. Sustainability 2023, 15, 15695. https://doi.org/10.3390/su152215695
