Abstract

To protect our planet, the material recycling of domestic waste is necessary. Since the COVID-19 pandemic began, the volume of domestic waste has surged, and many countries have struggled with waste management. Increased demand for food delivery and online shopping led to a huge surge in plastic and paper waste, both derived from natural resources. To reduce resource consumption and protect the environment from pollution, such as that from landfills, waste should be recycled. One of the most valuable recyclable materials in household waste is liquid cartons, which are made of high-quality paper. To promote sustainable recycling, this paper proposes a vision-based inspection module based on convolutional neural networks via transfer learning (CNN-TL) for collecting liquid packaging cartons in a reverse vending machine (RVM). An RVM is an unmanned automatic waste collector, so it needs the intelligence to inspect whether a deposited item is acceptable. The whole processing algorithm for collecting cartons, including the inspection step, is presented. After scanning the barcode on the waste, the user inserts it into the RVM; the item is then relocated to the inspection module and weighed. To develop the inspector, an experimental set-up with a video camera was built for image data generation and preparation, and the inspection agent was trained on these image data. To select a suitable model, 17 pretrained CNN models were evaluated, and DenseNet121 was selected. To assess camera performance, four different camera types were also evaluated. With the same CNN model, this paper examined the effect of setting the number of training epochs to 10, 100, and 500. As expected, the most accurate agent was the 500-epoch model.
Using the RVM process logic with this model, the detection accuracy was over 99% (the overall probability over three inspections), and the time to inspect one item was less than 2 s. In conclusion, the proposed model was verified as applicable to the RVM, as it could distinguish liquid cartons from other types of paper waste.

1. Introduction

The onset of the COVID-19 pandemic had an adverse effect on waste in terms of both quantity and patterns [1,2,3,4]. The amount of packaging waste generated has also surged due to strong demand for food and parcel delivery services, particularly after the onset of COVID-19 [4]. According to the Korean Ministry of Environment (MoE), the average daily volume of plastic waste generated in 2020 increased by 15.6 percent compared with the same period in 2019 [5]. Given this increase in plastic waste, it is reasonable to expect that the volume of paper waste increased as well. Among paper waste, on average, about 70 percent of liquid cartons (or paper packs) were not recycled but simply thrown away. Regarding liquid carton waste, Korea imports all of the high-grade pulp used to manufacture milk cartons domestically, because wood and many other natural resources are scarce on the peninsula. Of the 1.45 million tons of recycled waste paper used in Korea in 2004, mixed paper waste accounted for 9.8 percent [6]. Packaging paper and other paper products are categorized as mixed paper waste, but 71 percent of milk cartons and other high-quality paper are discarded [6]. Milk cartons in particular are often classified as general waste and are not recycled, being either incinerated or sent to landfills. Packaging paper waste brought to certain public depots is appropriately sorted and collected, but only at irregular intervals. In residential areas, the conditions for a rational separation, collection, and recycling system have not yet been established, making sorting and disposal inconvenient and inefficient. Some of these inefficiencies and inconveniences are described below.

The pulp used in the manufacture of paper packs is of the highest quality, containing at least 75 percent natural pulp. Natural pulp is valuable and used in a wide variety of applications, including toilet paper, food-grade packaging paper, notebooks and legal pads, printer paper, shopping bags, paper stock for the manufacture of new paper, cardboard boxes, wrapping paper, wallpaper, and egg cartons. Moreover, the internal coating agents used to contain liquid can also be profitably recycled into various products, including aluminum ingots and powders, kerosene, roof tiles, and aluminum sheets. The various recyclable components of paper packaging materials have a wide range of applications and can generate ample profits for waste management firms.

Currently, large volumes of liquid carton waste are collected by primary collection firms and mixed with general paper waste. Liquid cartons should be separated from general paper because they require a different regeneration process that strips off the inner coating layer that contains the liquid. Collected paper waste is processed by secondary firms, but in this process, liquid cartons are not separated from general paper waste and are instead contaminated, making much of them unusable. Ultimately, these high-quality recyclable materials easily end up in landfills or incinerators.

A compensation system exists for those who recycle some waste materials, but it is very limited and a source of much frustration. Consumers who dispose of paper waste at appropriate government-designated facilities are financially compensated for doing so, but the participation guidelines and the rules for receiving compensation change frequently and unexpectedly. Many participants have voiced dissatisfaction with this haphazard system. At present, domestic household waste is sorted by hand in an unsystematic way after collection, after which it undergoes large-scale industrial sorting at processing facilities. Some automated technologies classify and separate waste by material properties after secondary collection, but they are suitable only for industrial-scale use and cannot separate recyclables at the level of the individual waste resource unit in large residential complexes. Therefore, a method to prevent secondary contamination and commingling is essential. This in turn requires better separation and sorting at the sites where waste is produced, particularly large residential apartment complexes, and automated separation and collection technology must be developed for this purpose. A machine that automatically sorts and classifies household waste at the level of the individual waste resource unit would make it possible to return a small compensation to users as a token of the value they contributed. Such a machine is called a reverse vending machine (RVM) because it works in the opposite way to a vending machine: it takes in a product and returns money (or some other reward).

High-quality pulp recycling through RVMs can be expected to reduce the number of trees cut down and thus help preserve forests. This paper proposes a new way to inspect discarded liquid carton waste in an automated, full-scale RVM to support sustainable material recycling. Typical compostable paper is easily confused with liquid cartons because it also carries a product barcode. Thus, this paper focuses on developing an inspection module that distinguishes liquid cartons from general paper waste. The inspection system is designed specifically for the liquid carton RVM, and its prediction algorithm is based on an artificial intelligence (AI) vision technique. For the algorithm, image data of liquid cartons and other paper waste were prepared and processed. The rest of this paper is organized as follows. Section 2 discusses related research and commercial products. Section 3 describes the proposed inspection module for a full-sized RVM and its working process. Section 4 explains the data preparation for vision inspection, and Section 5 presents the CNN model selection and training results. Section 6 discusses the experimental results, and Section 7 gives concluding remarks.

2. Related Works

In Korea, RVMs have already been introduced by several domestic companies to create a new marketplace for recycled resources. Oysterable [7] manufactures recycling receptacles equipped with AI technologies. These bins, whose name roughly translates to “Today’s recycling”, incentivize recycling by rewarding users with points that can be spent in an app. Points are credited after users scan the barcode of each recyclable item deposited into a bin and the item is processed. Paper packaging materials with barcodes are worth 10 points, and users can buy a 200-mL carton of milk with 100 points. However, the system has several drawbacks. For one, the bins will not accept waste paper or plastic without a barcode, and any points earned are nontransferable and can only be used within the Oysterable app. Moreover, Oysterable is not a waste management firm and does not directly manage its receptacles or the waste that they collect, so potential users often find Today’s recycling bins unusable or otherwise unable to accept additional waste, with the areas around the bins and the exteriors of the bins themselves contaminated by waste the bins did not accept. In addition, the app’s marketplace is extremely limited, with many listed items out of stock or otherwise unavailable. Finally, the technology itself is error-prone. Many users have complained that the sensor often fails to read the barcodes of some waste that it accepts, depriving them of points they would otherwise earn. Another manufacturer, Superbin [8], also provides RVMs with a compensation policy similar to Oysterable’s, but its machines accept only cans and plastic bottles. The Superbin RVMs identify deposited items by vision-based deep learning and can therefore accept products without barcodes. However, Superbin is not a waste management firm either, and its vision technique is not intended to inspect whether deposited items are acceptable.
Inspecting whether a deposited item is acceptable is important because the machine must operate on its own without a human operator. Otherwise, anyone could try to gain unfair rewards by depositing the wrong products under different barcodes, or contaminated waste that should be thrown away.

Korean firm Greenstar [9] makes an RVM-type receptacle branded the PET Flake RVM. The machine accepts PET bottles and can significantly reduce their volume by pulverizing them inside the device. However, a major drawback is that the technology cannot yet adequately distinguish and separate PET bottles from other plastic materials such as labels. For this technology to expand, it needs to have a greater impact on the overall collection process. Resource Innovation [10] is a domestic household waste management company that has also been developing various types of RVMs. The company claims that RVMs are best developed by waste management companies, because such companies have a direct interest in RVM operations and recycling management, and that such RVMs can help establish proper waste recycling. One of its RVMs is a paper pack waste collector. However, it also needs an intelligent collection module that automatically recognizes deposited waste and decides whether each item is acceptable.

Bobulski and Kubanek [11] designed an automatic waste segregation system that used a deep convolutional neural network (CNN) to classify waste images. Their design was intended for plastic waste segregation in a large facility (not an RVM), and their automation technique targeted large-scale waste handling; however, the design scheme can be extended to the vision inspection of liquid carton waste in an RVM, because the inspection uses the same classification method. An RVM for plastic bottle recycling was proposed by Mariya et al. [12], who built an RVM prototype using a TensorFlow object detection model. Their work addressed vision-based inspection of whether the waste is appropriate, but the prototype handled only plastic bottles, and no full-scale prototype was presented. Another plastic RVM was proposed by Mwangi and Mokoena [13], who used deep learning to detect the status of a plastic bottle. Although this work also covered only plastic waste and still required full-scale development, it provided an inspection system with four quality levels: (1) only the seal exists, (2) both the seal and cap exist, (3) only the cap exists, and (4) neither the seal nor the cap exists. Lopez et al. [14] studied waste collection by an RVM. They used the YOLOv3 framework [15,16] to train on images and built a prototype system with process logic. Their work covered only PET bottles, and other inappropriate products were not considered. Meng and Chu [17] studied garbage classification methods based on vision data, using images from the Kaggle datasets [18] in six categories: cardboard, glass, metal, paper, plastic, and trash. The paper compares different AI techniques (support vector machines and various CNN architectures).
The work dealt only with the classification method and not with RVMs. As noted in the paper, the study needs more data from real and complicated situations, and it also lacks a liquid carton class. Liquid cartons need to be collected separately from other paper waste; otherwise, they are all filtered out because of their inner coating film. The problem is that liquid cartons are also recognized as packaging paper, similar to other paper waste, so consumers easily confuse them. A typical challenge in CNN training is a small dataset, as described in [17]. Transfer learning is a method of storing knowledge gained while solving one problem and applying it to a different but related problem [15,19,20,21,22,23,24]. Through transfer learning, image classification can be made more accurate with only a small amount of image data.

3. Proposed System for a Reverse Vending Machine (RVM)

Over half of Korea’s 51 million inhabitants live in large residential apartment complexes. These sites generate a great variety of waste resources, and so a machine or device of some sort able to sort and automatically classify these waste products could significantly increase the utilization rate of limited domestic resources. Moreover, given that increasing volumes of produced household waste have a deleterious environmental impact on living space, such a device should be able to accept and sort waste at any time day or night. Reforming the waste collection process in such a way would minimize the contamination of waste resources and raise the quality of recycled products, helping lay the groundwork for a sound sectoral profit structure. With such a structure in place, waste resource firms would be incentivized to raise collection rates, ultimately helping to achieve a virtuous cycle of resource circulation and recirculation. It follows that an automated system for collecting and inspecting paper waste is necessary.

Both for the sake of environmental preservation and to create a virtuous cycle, we need a policy that incentivizes the recycling of packaging paper (and milk cartons in particular) and plastic waste generated at large residential complexes through a direct compensation mechanism. Such a policy would enable better utilization of waste resources—making the proper disposal of trash valuable—and increase the recycling rate.

To this end, we propose the following system, which uses RVM-type technology based on a smart IoT platform. This solution targets large apartment complexes comprising 400–500 units that produce an average of 1 kilogram of packaging paper waste every month. The device would be capable of accepting around 2000 liquid containers of 200 mL, 500 mL, and 1 L (milk cartons and so forth), or about 50 kg of waste, in 1 month. The system would collect and compile all collection data in order to compensate users directly and must include a service capable of effective sorting, collection, and transport.

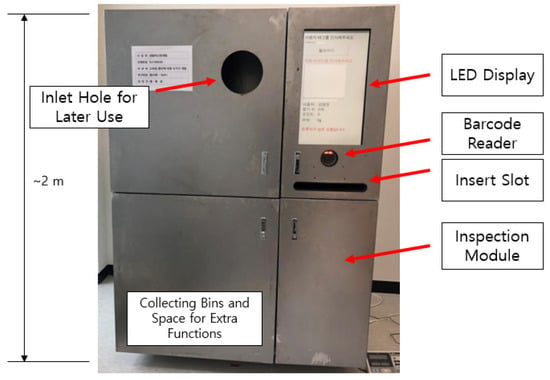

Such a system would leverage its compensation mechanism to encourage passive collection and separation, mitigating logistics costs and providing an innovative opportunity to transition away from manual, labor-intensive collection methods. To demonstrate this system, the RVM prototype was fabricated by Resource Innovation [10] as shown in Figure 1. For the RVM shown in Figure 1, the receiving slot of the paper waste RVM would have a length matching the measurements of the packaging waste (milk cartons and so forth) that it is designed to accept to prevent commingling. The waste materials would be classified by barcode. For the safety of young children, the slot of the RVM would be positioned at least 1.2 m off the ground, and for the convenience of users (and to better promote the use of the machine), a monitor displaying promotional information or guidance would be installed in the space above the machine. This space would also include the barcode sensor, camera, and other equipment.

Figure 1.

Proposed RVM.

The compressor would be a modified design of an existing compression device (its location can be seen in the lower left space in Figure 1). The compressor has a suite of safety features that set it apart and make it especially suitable for this task. For example, the device would compress waste paper deposited haphazardly into the receptacle, but any load above a certain threshold would deactivate the compressor. Furthermore, the press speed can be limited to prevent severe accidents.
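The two safety interlocks described above (a load threshold that deactivates the press and a cap on press speed) can be sketched as a small control function. This is a minimal illustration, not the manufacturer's controller: the `CompressorLimits` and `press_speed` names, the newton and mm/s units, and any threshold values are hypothetical, since the text does not specify them.

```python
from dataclasses import dataclass


@dataclass
class CompressorLimits:
    max_load_n: float      # hypothetical load threshold in newtons (not given in the text)
    max_speed_mm_s: float  # hypothetical press-speed cap in mm/s (not given in the text)


def press_speed(measured_load_n: float, requested_speed_mm_s: float,
                limits: CompressorLimits) -> float:
    """Return the press speed to apply; 0.0 means the compressor is deactivated."""
    if measured_load_n > limits.max_load_n:
        return 0.0  # load above the threshold: stop the press
    # Otherwise run, but never faster than the configured speed limit.
    return min(requested_speed_mm_s, limits.max_speed_mm_s)
```

Evaluating the load check before the speed clamp means an overload always wins, which mirrors the priority implied by the text: deactivation first, speed limiting second.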

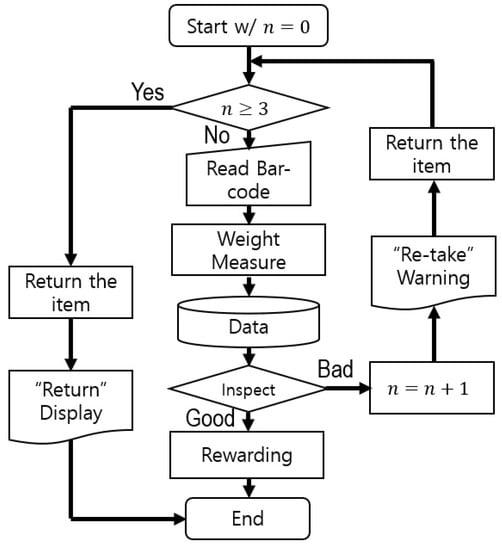

With a number of connected sensors and monitoring devices, as well as access to a wide variety of data, this device represents a new strategic development capable of producing real-time environmental data, providing an effective, low-cost solution to a critical waste management problem. Every stage of the process, from pressing the start button to the final reward processing, is automated by the process logic shown in Figure 2. After the barcode of the paper waste is read by the barcode reader, the user deposits the item into the slot. The item is then moved into the inspection module, where the internal weighing system measures its mass to the nearest 0.05 g before the vision-based inspection begins. The integrated IoT technologies allow the device to interpret sensor data and communicate it to users via a monitor that displays real-time aggregate weight data and inspection results. If the inspection fails, for example, because the item does not match its barcode information, the RVM returns the item so that the process can be restarted, up to three times. The aggregate data are designed to be linked to the incentive or compensation system that apartment complex residents will use to earn rewards for recycling paper waste.

Figure 2.

RVM process logic.
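The process logic of Figure 2 can be sketched as a retry loop around scanning, weighing, and inspection. This is a hypothetical sketch under stated assumptions: `process_deposit`, `DepositOutcome`, and the three callables standing in for the barcode reader, the scale, and the vision inspector are illustrative names, not the RVM's actual firmware, and reward processing is reduced to returning the recorded weight.

```python
from dataclasses import dataclass
from typing import Callable, Optional

MAX_ATTEMPTS = 3  # from the text: an item can be returned and re-inspected up to three times


@dataclass
class DepositOutcome:
    accepted: bool
    attempts: int
    weight_g: Optional[float]  # recorded only for accepted items (used for rewards)


def process_deposit(
    read_barcode: Callable[[], str],
    weigh_item: Callable[[], float],
    inspect_item: Callable[[str], bool],
    max_attempts: int = MAX_ATTEMPTS,
) -> DepositOutcome:
    """Scan, deposit, weigh, and inspect, returning the item on failure.

    The three callables are hypothetical stand-ins for the barcode reader,
    the internal scale, and the vision inspector.
    """
    for attempt in range(1, max_attempts + 1):
        barcode = read_barcode()    # user scans the item before depositing it
        weight_g = weigh_item()     # item is moved into the inspection module and weighed
        if inspect_item(barcode):   # vision check: does the item match its barcode class?
            return DepositOutcome(True, attempt, weight_g)
        # Inspection failed: the item is handed back and the user may try again.
    return DepositOutcome(False, max_attempts, None)
```

In a real machine, `inspect_item` would wrap the trained CNN agent, and the weight of each accepted item would feed the compensation system.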

4. Data Preparation for the Vision-Based Inspection

The inspection module developed in this study is based on a vision system. A camera (cam) is installed at the top of the module to take a picture of the deposited item. The picture is then processed by a convolutional neural network (CNN). The CNN is a model trained on labeled images that assigns the captured picture to one of the categories it has learned; in this study, the target category was the paper pack. To build the CNN model, many liquid carton and paper images were collected for the target dataset. For items outside the target category (e.g., aluminum cans, PET bottles, and glass bottles), the Kaggle open datasets were also used.

Cams within a certain price range were compared, because the RVM should be cost-competitive and is typically installed in an open area. The cam models compared in this study were the ESP32-CAM, OV7670, OpenMV-CAM M7, and APC930, and their specifications are summarized in Table 1. The cameras were evaluated based on the loss function results of the CNN training.

Table 1.

Camera comparison for taking pictures of liquid cartons and paper waste.

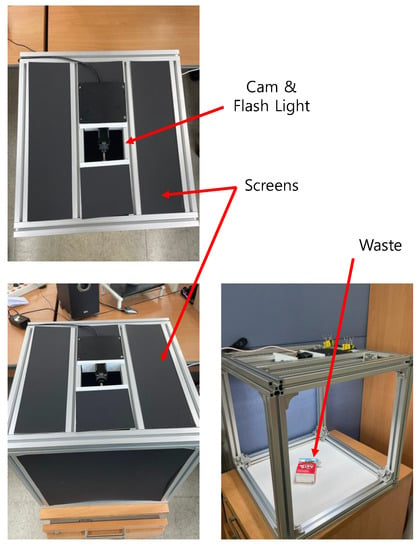

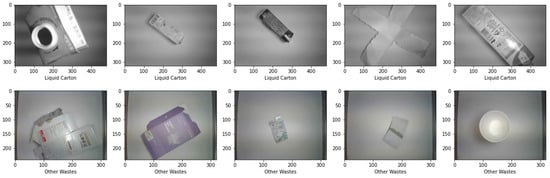

In this study, it was considered important to distinguish between categories of waste, particularly between liquid cartons and other paper waste, because people easily confuse the two types. Appropriately labeled images were not found in the Kaggle database. Thus, typical liquid carton and other paper waste items were collected and photographed. To facilitate image data construction, an experimental set-up providing an inspection environment similar to that of an RVM was built to collect images of liquid cartons and other paper waste, as shown in Figure 3. In Figure 3, the space within the aluminum frame was about the same as that in an RVM, and a camera (interchangeable among the cam models in Table 1) and a flashlight were installed at the top. Screens were attached to the top and sides. The waste was placed in the middle of the bed, as shown in the bottom-right inset. Some of the images collected specifically for this study are shown in Figure 4, and sample images taken by the four cams used in this study are shown in Figure 5.

Figure 3.

The experimental set-up to capture the images of liquid and non-liquid cartons and test the inspection agent.

Figure 4.

Waste sample random images (top row: liquid carton; bottom row: other paper waste).

Figure 5.

Waste sample images of four cameras used in this study.

A total of 250 images, labeled with two classes, “Liquid Cartons” and “Other Paper Waste”, were imported into a data frame and split at a ratio of 8:1:1 into training, validation, and test sets, respectively. Of the 250 images, 200 belonged to the “Liquid Cartons” class, and the remaining 50 belonged to the “Other Paper Waste” class. Using Keras’s “ImageDataGenerator”, the images were augmented by rotating, zooming, flipping, and rescaling to reduce the possibility of overfitting during training. Transfer learning was also used to increase the speed and accuracy of training. A sigmoid activation function and the Adam optimizer were employed in training.
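The 8:1:1 split of the 250 images can be sketched with the standard library. The text does not say whether the split was stratified; this sketch assumes a stratified split so that the 4:1 class imbalance (200 versus 50 images) is preserved in each subset, which yields 200/25/25 images. The function name and file names are hypothetical. The resulting lists could then be loaded into data frames for Keras's `ImageDataGenerator.flow_from_dataframe`, with augmentation options such as `rotation_range`, `zoom_range`, `horizontal_flip`, and `rescale`.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split (item, label) pairs into train/val/test, keeping class proportions.

    Hypothetical helper: stratification is an assumption, not stated in the text.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible split
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append(item)

    train, val, test = [], [], []
    for label, members in by_class.items():
        rng.shuffle(members)
        n = len(members)
        n_train = round(n * ratios[0])
        n_val = round(n * ratios[1])
        # Whatever remains after train and val goes to the test set.
        parts = (members[:n_train],
                 members[n_train:n_train + n_val],
                 members[n_train + n_val:])
        for split, part in zip((train, val, test), parts):
            split.extend((item, label) for item in part)
    return train, val, test
```

With 200 "Liquid Cartons" and 50 "Other Paper Waste" images, each class is split 160/20/20 and 40/5/5, respectively.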

5. CNN Model Selection and Training Results

Various CNN models were also compared to select the RVM inspection agent. Some of the best-known Keras pretrained models for image classification [25] were chosen and evaluated for RVM item inspection. These models had been pretrained on the ImageNet dataset. Because [25] summarizes the models' performance in terms of accuracy, training time, and number of parameters, it could guide the selection of an appropriate model for this study. However, neural networks generally depend on the characteristics of the data on which they are trained. For this reason, some of the CNN models from [25] were evaluated by training them on waste images. To save time, each model was trained for only three epochs in this evaluation, because the first few epochs were observed to reveal important traits, such as the convergence tendency. The models evaluated for paper waste inspection were DenseNet121, DenseNet169, DenseNet201, InceptionResNetV2, InceptionV3, MobileNet, MobileNetV2, NASNetLarge, NASNetMobile, ResNet101, ResNet101V2, ResNet152, ResNet152V2, ResNet50, ResNet50V2, VGG16, and VGG19.
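The three-epoch screening of the 17 candidates can be organized as a small harness that records wall-clock training time and the best validation metrics for each model. This is a sketch that assumes a hypothetical `train(name, epochs)` callable, which in practice might build the named base from `tf.keras.applications`, attach a binary head, and return `model.fit(...).history`; that wrapper is not shown, and `screen_models` is an illustrative name.

```python
import time
from typing import Callable, Dict, List

# A trainer takes a model name and an epoch count and returns a Keras-style
# history dict, e.g. {"val_accuracy": [...], "val_loss": [...]}.
Trainer = Callable[[str, int], Dict[str, List[float]]]


def screen_models(names: List[str], train: Trainer, epochs: int = 3) -> List[dict]:
    """Train each candidate briefly; record training time and best validation metrics."""
    records = []
    for name in names:
        start = time.perf_counter()
        history = train(name, epochs)
        elapsed = time.perf_counter() - start
        records.append({
            "model": name,
            "train_time_s": elapsed,
            "best_val_accuracy": max(history["val_accuracy"]),
            "min_val_loss": min(history["val_loss"]),
        })
    return records
```

Keeping the trainer as a parameter lets the same loop time every candidate under identical settings, which is the point of the three-epoch comparison.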

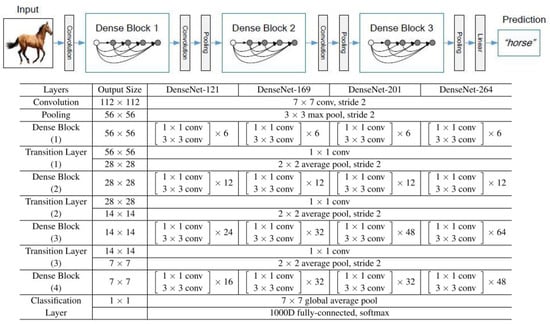

As shown in Table 2, the dense convolutional network (DenseNet) architecture was found to be the most appropriate owing to its compact size (in terms of the number of parameters and computational cost) and good accuracy. The authors of [26] reported state-of-the-art results for DenseNet across several highly competitive datasets under multiple settings. The main structure of the DenseNet model is shown at the top of Figure 6, and the architectures of three typical DenseNet models are shown below it. Based on Table 2, DenseNet121 was selected because it had the shortest training time, while its validation accuracy was only about 5% lower than that of the most accurate model, MobileNet. MobileNet, however, took close to six times longer to train than DenseNet121. DenseNet121 has also shown good performance in various previous works [19,27,28,29].

Table 2.

Performance comparison of pretrained CNN models from the research in [25], which was performed for paper waste images with three-epoch training.

Figure 6.

The structure of DenseNet models, with a block diagram in the top inset and the detailed structure at the bottom [26].
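The selection reasoning for DenseNet121 (shortest training time, provided accuracy stays close to the best model) can be written as a simple rule. This is one possible formalization, not the authors' stated criterion: `select_model` and the absolute tolerance of 0.05 (chosen to mirror the "about 5%" gap quoted for DenseNet121 versus MobileNet) are assumptions, and each record is assumed to be a dictionary with `model`, `best_val_accuracy`, and `train_time_s` keys.

```python
def select_model(records, accuracy_tolerance=0.05):
    """Pick the fastest model whose accuracy is within a tolerance of the best one.

    Hypothetical rule; the tolerance is an absolute difference in accuracy.
    """
    best_acc = max(r["best_val_accuracy"] for r in records)
    eligible = [r for r in records
                if r["best_val_accuracy"] >= best_acc - accuracy_tolerance]
    # Among the sufficiently accurate models, prefer the shortest training time.
    return min(eligible, key=lambda r: r["train_time_s"])["model"]
```

Setting the tolerance to zero reduces the rule to "most accurate model wins", which makes the trade-off explicit.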

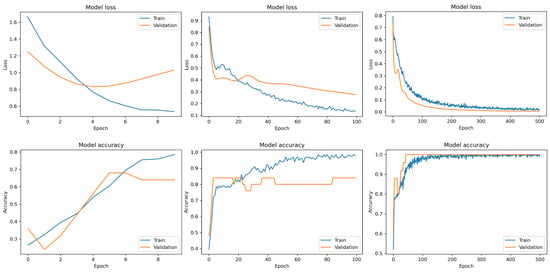

Using DenseNet121 with transfer learning, the inspection agent was trained on the two classes of images. Figure 7 shows the loss and accuracy curves for three training runs of 10, 100, and 500 epochs. The left column of insets in Figure 7 shows the results with only 10 epochs. The training loss decreased over the epochs, but the validation loss did not follow the same pattern (it even increased), meaning that 10 epochs were not enough to obtain a good classifier. The middle column shows the results with 100 epochs of training. A good convergence pattern can be observed, as the training loss approached about 0.1 and the accuracy reached close to 1.0. However, the validation loss still shows some downward gradient, which implies that training for more than 100 epochs could improve the results. The right column shows the results for 500 epochs, in which the training loss and accuracy converge stably. The minimum validation loss was 0.05, and the maximum validation accuracy was almost 0.96. Owing to the intrinsic nature of neural networks, the resulting training values can vary from trial to trial; however, the convergence patterns and approximate values were similar to those reported here.

Figure 7.

The training results for the DenseNet121 model for various sets of epochs.
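The quantities read off Figure 7 (minimum validation loss, maximum validation accuracy, and whether the validation loss is still falling) can be extracted from a Keras-style history dictionary. This sketch assumes the `val_loss` and `val_accuracy` keys that Keras records when training with an accuracy metric and validation data; `summarize_history`, `still_improving`, and the `window` and `min_delta` defaults are hypothetical choices, not values from the study.

```python
def summarize_history(history):
    """Report the minimum validation loss and maximum validation accuracy with their epochs."""
    val_loss = history["val_loss"]
    val_acc = history["val_accuracy"]
    best_loss_idx = min(range(len(val_loss)), key=val_loss.__getitem__)
    best_acc_idx = max(range(len(val_acc)), key=val_acc.__getitem__)
    return {
        "min_val_loss": val_loss[best_loss_idx],
        "min_val_loss_epoch": best_loss_idx + 1,  # 1-based epoch numbering
        "max_val_accuracy": val_acc[best_acc_idx],
        "max_val_accuracy_epoch": best_acc_idx + 1,
    }


def still_improving(val_loss, window=10, min_delta=1e-3):
    """True if the mean validation loss of the last `window` epochs fell by more
    than `min_delta` compared with the preceding window (a crude trend check)."""
    if len(val_loss) < 2 * window:
        return True  # too few epochs to judge; treat training as unfinished
    recent = sum(val_loss[-window:]) / window
    previous = sum(val_loss[-2 * window:-window]) / window
    return previous - recent > min_delta
```

A check like `still_improving` formalizes the observation that the 100-epoch curve still had a downward gradient, which suggested training longer.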

The classification performance on paper waste images was also evaluated for each cam in Table 1. The experiments were performed under the same settings described earlier: the same DenseNet121 network was trained for 100 epochs using 40 different images taken by each cam. As shown in Table 3, the ESP32-CAM gave the best result, with a minimum loss far smaller than those of the other cams. The losses of the other cams were not remarkably high either, meaning that the price of the cam can be one of the major factors to consider in RVM construction.

Table 3.

Cam performance comparison for image classification training.

6. Discussion

As expected, training for more epochs yielded a smaller loss, as shown in Figure 7. For the 100- and 500-epoch models, the loss decreased monotonically toward saturation. The validation accuracy, in contrast, stayed flat over some ranges of epochs or jumped up and down, which could be due to the small validation set. The training loss results also showed the performance of the different cams. Contrary to intuition, the cheapest cam showed the best result, implying that price need not be a critical factor; rather, the number or diversity of images appeared to be more important. Of course, the training results could change with different datasets. In addition, the cams’ capabilities differ greatly from one another, so a selection cannot be based on CNN training alone.

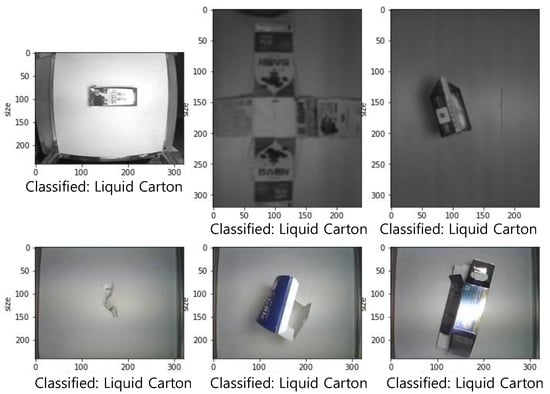

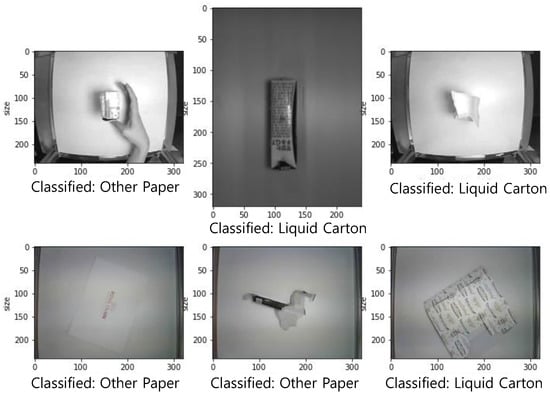

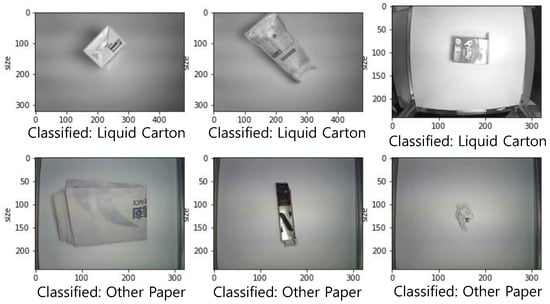

Each agent was tested in a real RVM with images not used during training and validation, and the class prediction was decided with a threshold of 0.5. The agents trained for 10, 100, and 500 epochs (Figure 7) were tested on the test data frame of the image data. Random images from each class were tested, and the results are shown in Figure 8, Figure 9 and Figure 10. Figure 8 shows the test results of the 10-epoch agent, with liquid carton images in the top row and other paper images in the bottom row. The 10-epoch agent correctly classified the liquid carton images, but none of the paper waste images shown were correctly classified, because of the low accuracy or the insufficient number of training epochs. The 100-epoch agent (Figure 9) performed better than the 10-epoch agent, but some images were still misclassified (the first in the top row and the last in the bottom row). The hand in the first image of the top row could have confused the inspection agent. The 500-epoch agent (Figure 10) showed the best results, classifying all images correctly, as expected from its maximum accuracy. This implies that more than 100 epochs are required for good training and that a successful classification agent for waste inspection can be obtained with only 250 images and transfer learning. However, as is generally the case with neural networks, such success cannot be guaranteed. When training was reset and restarted in a new trial, a wrong decision was occasionally observed even with 500-epoch training, although in that trial the validation accuracy was not much lower than in most other trials.

Figure 8.

The classification test results for the DenseNet121 model trained by 10 epochs (top row: liquid carton; bottom row: other paper).

Figure 9.

The classification test results for the DenseNet121 model trained by 100 epochs (top row: liquid carton; bottom row: other paper).

Figure 10.

The classification test results for the DenseNet121 model trained by 500 epochs (top row: liquid carton; bottom row: other paper).
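The 0.5 decision threshold used in the tests can be made explicit. With a single sigmoid output, Keras's `flow_from_dataframe` in binary mode assigns class indices in alphanumeric order by default, so the output would be the probability of "Other Paper Waste"; this mapping, the `>=` tie-break, and the helper names `predict_label` and `accuracy` are assumptions rather than details given in the text.

```python
THRESHOLD = 0.5                      # decision threshold stated in the text
POSITIVE = "Other Paper Waste"       # assumed class index 1 (alphanumeric order)
NEGATIVE = "Liquid Cartons"          # assumed class index 0


def predict_label(prob: float, threshold: float = THRESHOLD) -> str:
    """Map a sigmoid output to a class label (assumed mapping, see lead-in)."""
    return POSITIVE if prob >= threshold else NEGATIVE


def accuracy(probs, true_labels, threshold: float = THRESHOLD) -> float:
    """Fraction of test images whose thresholded prediction matches the label."""
    preds = [predict_label(p, threshold) for p in probs]
    return sum(p == t for p, t in zip(preds, true_labels)) / len(true_labels)
```

If the class order were reversed in practice, only the two label constants would need to be swapped.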

The validation accuracy was 0.96 for this round of training. The outcomes become even more reliable with the process logic in Figure 2, because an item can be inspected up to three times. Assuming that the three inspections err independently, the probability of a false detection three times in a row is $(1 - 0.96)^3 = 0.000064$ (0.0064%), so the overall accuracy rises from 96% to about 99.99%:

$$P_{\text{overall}} = 1 - (1 - P_{\text{single}})^3 = 1 - (1 - 0.96)^3 = 1 - 0.000064 = 0.999936 \approx 99.99\%$$
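The repeated-inspection arithmetic can be parameterized by the single-inspection accuracy and the number of attempts. This sketch assumes, as the calculation does, that repeated inspections fail independently; in practice the same item under the same camera may be misclassified in a correlated way, so the result is an optimistic estimate. The function name `overall_accuracy` is hypothetical.

```python
def overall_accuracy(single_accuracy: float, attempts: int = 3) -> float:
    """Probability that at least one of `attempts` independent inspections is correct."""
    # All attempts fail with probability (1 - p)^n; at least one succeeds otherwise.
    return 1.0 - (1.0 - single_accuracy) ** attempts
```

For a single-inspection accuracy of 0.96 and three attempts, this evaluates to approximately 0.999936.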

7. Conclusions

This paper proposed a new inspection module for liquid carton recycling. The module was developed with vision-based technology using the DenseNet121 CNN model. Waste images taken by the camera at the top of the module were assessed to determine whether the disposed item was acceptable. In the inspection algorithm, the inspection agent decides whether to accept or return the item. To design the CNN agent, 250 images were prepared using a video camera. Using these images, various pretrained CNN models were trained and compared, and a number of cams were also evaluated by their training results. Thanks to the pretrained models and transfer learning, the small image dataset (250 images in this study) was sufficient to show convergent training trends without training being cut short by overfitting. In total, 17 pretrained models were evaluated with the same training settings.

As a result, the DenseNet121 pretrained CNN model was the most suitable for this waste-inspection problem, having the shortest training time and a compact size, while its validation loss and accuracy were comparable to those of the best model. Agents trained for different numbers of epochs with the same DenseNet121 model were also tested. As expected, less training yielded poor inspection outcomes, whereas the 500-epoch agent made all classification decisions correctly. The time taken to inspect one item was less than 2 s (the time for running the inspection code once the trained CNN was obtained), and the accuracy was greater than 99%, indicating that the proposed module can be applied to real-world RVMs and that an RVM with this inspection system can promote sustainable waste material recycling.

Further work is needed before the inspection module can be built into RVMs. The algorithm and inspection agent should be embedded in the microboard. The conveying mechanism has to be constructed so that the item moves smoothly from deposition to inspection and is then either stored or returned. Additionally, maintenance is required to preserve good imaging conditions during unattended operation. As discussed, other objects or items placed in a different arrangement may degrade the inspector's performance. Most importantly, the inspection agent could be upgraded using images collected by RVMs in service: users can report incorrect decisions, and these reports can be used to correct the model further. This upgrading algorithm remains for future work. To enhance accuracy and guarantee performance, additional information, including the physical characteristics of the waste, can be incorporated into the inspector along with the CNN model. This hybrid inspection system also remains for future work.
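As an illustration of the hybrid inspection idea, the CNN output could be combined with the item's weight, which the module already measures after the barcode is scanned. The sketch below is hypothetical and is not part of the proposed system: the `WeightRange` window per product, the 0.5 threshold, and the rule that both checks must pass are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class WeightRange:
    """Hypothetical expected weight window (grams) for the scanned product."""
    min_g: float
    max_g: float


def hybrid_decision(
    carton_score: float,
    measured_weight_g: float,
    expected: WeightRange,
    threshold: float = 0.5,  # assumed CNN decision threshold
) -> bool:
    """Accept only if the CNN considers the item a liquid carton AND its
    measured weight is plausible for the product identified by the barcode."""
    vision_ok = carton_score >= threshold
    weight_ok = expected.min_g <= measured_weight_g <= expected.max_g
    return vision_ok and weight_ok
```

Requiring both checks to pass trades a few extra rejections for fewer false acceptances. An item the camera confuses with a carton, but whose weight is far outside the expected window, would still be returned.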

Author Contributions

Conceptualization and methodology development, C.S.L. and D.-W.L.; validation, C.S.L.; writing—original draft preparation, C.S.L. and D.-W.L.; writing—review and editing, D.-W.L.; project administration, D.-W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was supported by a research grant from the University of Suwon in 2021.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The work was supported by the University of Suwon (2021). The authors thank Yong-Ho Hong for his thoughtful advice. The authors are also thankful for the data preparation by Young-Min Cha.

Conflicts of Interest

The authors declare no conflict of interest. The funder had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Di Maria, F.; Beccaloni, E.; Bonadonna, L.; Cini, C.; Confalonieri, E.; La Rosa, G.; Milana, M.R.; Testai, E.; Scaini, F. Minimization of spreading of SARS-CoV-2 via household waste produced by subjects affected by COVID-19 or in quarantine. Sci. Total Environ. 2020, 743, 140803.

- Nzediegwu, C.; Chang, S.X. Improper solid waste management increases potential for COVID-19 spread in developing countries. Resour. Conserv. Recycl. 2020, 161, 104947.

- Peng, J.; Wu, X.; Wang, R.; Li, C.; Zhang, Q.; Wei, D. Medical waste management practice during the 2019-2020 novel coronavirus pandemic: Experience in a general hospital. Am. J. Infect. Control 2020, 48, 918–921.

- Yousefi, M.; Oskoei, V.; Jonidi Jafari, A.; Farzadkia, M.; Hasham Firooz, M.; Abdollahinejad, B.; Torkashvand, J. Municipal solid waste management during COVID-19 pandemic: Effects and repercussions. Environ. Sci. Pollut. Res. 2021, 28, 32200–32209.

- Yoon, J.; Yoon, Y.; Yun, S.L.; Lee, W. The Current State of Management and Disposal of Wastes Related to COVID-19: A review. J. Korean Soc. Environ. Eng. 2021, 43, 739–746. (In Korean)

- Korea Paper Carton Recycling Association. Paper company paper-pack recycling report. In Survey on Paper-Pack Dispose and Recycling; Korea Paper Carton Recycling Association: Seoul, Korea, 2005; pp. 74–88.

- Oysterable. Available online: https://www.oysterable.com/ (accessed on 30 September 2022).

- Superbin. Available online: https://www.superbin.co.kr/ (accessed on 30 September 2022).

- Greenstar. Available online: https://green-star.kr/ (accessed on 30 September 2022).

- Resource Innovation. Available online: http://reinnovation.godohosting.com/ (accessed on 30 September 2022).

- Bobulski, J.; Kubanek, M. Project of Sorting System for Plastic Garbage in Sorting Plant Based on Artificial Intelligence. In Proceedings of the CS & IT Conference Proceedings, Toronto, Canada, 11–12 July 2020; Volume 10.

- Mariya, D.; Usman, J.; Mathew, E.N.; Aa, P.H.H. Reverse vending machine for plastic bottle recycling. Int. J. Comput. Sci. Technol. 2020, 8, 65–70.

- Mwangi, H.W.; Mokoena, M. Using deep learning to detect polyethylene terephthalate (PET) bottle status for recycling. Glob. J. Comput. Sci. Technol. 2019, 19, 1–6.

- Lopez, M.; Sevilla, B.C.; Simborio, Y.D.; Cañizares, R.P. Reverse Vending Machine with Power Output for Mobile Devices using Vision-Based System. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 28–30 November 2021; pp. 1–6.

- Ahmed, I.; Ahmad, M.; Ahmad, A.; Jeon, G. Top view multiple people tracking by detection using deep SORT and YOLOv3 with transfer learning: Within 5G infrastructure. Int. J. Mach. Learn. Cybern. 2021, 12, 3053–3067.

- Zhao, L.; Li, S. Object detection algorithm based on improved YOLOv3. Electronics 2020, 9, 537.

- Meng, S.; Chu, W.T. A study of garbage classification with convolutional neural networks. In Proceedings of the 2020 Indo-Taiwan 2nd International Conference on Computing, Analytics and Networks (Indo-Taiwan ICAN), Rajpura, Punjab, India, 7–15 February 2020; pp. 152–157.

- Kaggle. Available online: https://www.kaggle.com/ (accessed on 30 September 2022).

- Amin, Z.M.A.; Sami, K.N.; Hassan, R. An approach of classifying waste using transfer learning method. Int. J. Perceptive Cogn. Comput. 2021, 7, 41–52.

- Burkart, N.; Huber, M.F. A survey on the explainability of supervised machine learning. J. Artif. Intell. Res. 2021, 70, 245–317.

- Hasan, M.M.; Srizon, A.Y.; Sayeed, A.; Hasan, M.A.M. High performance classification of Caltech-101 with a transfer learned deep convolutional neural network. In Proceedings of the 2021 International Conference on Information and Communication Technology for Sustainable Development (ICICT4SD), Dhaka, Bangladesh, 27–28 February 2021; pp. 35–39.

- Glock, K.; Napier, C.; Gary, T.; Gupta, V.; Gigante, J.; Schaffner, W.; Wang, Q. Measles Rash Identification Using Transfer Learning and Deep Convolutional Neural Networks. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021; pp. 3905–3910.

- Patil, M.; Shaikh, N.; Zalte, M.S. Waste Classification Using ANN, CNN And Transfer Learning. 10 June 2022. Available online: https://ssrn.com/abstract=4133206 (accessed on 10 June 2022).

- West, J.; Ventura, D.; Warnick, S. Spring Research Presentation: A Theoretical Foundation for Inductive Transfer; Brigham Young University, College of Physical and Mathematical Sciences: Provo, UT, USA, 2007; Volume 1.

- Best Keras Pre-Trained Model for Image Classification. Available online: https://colab.research.google.com/github/stephenleo/keras-model-selection/blob/main/keras_model_selection.ipynb (accessed on 30 September 2022).

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Mao, W.L.; Chen, W.C.; Wang, C.T.; Lin, Y.H. Recycling waste classification using optimized convolutional neural network. Resour. Conserv. Recycl. 2021, 164, 105132.

- Nandhini, S.; Ashokkumar, K. An automatic plant leaf disease identification using DenseNet-121 architecture with a mutation-based henry gas solubility optimization algorithm. Neural Comput. Appl. 2022, 34, 5513–5534.

- Chhabra, M.; Kumar, R. A Smart Healthcare System Based on Classifier DenseNet 121 Model to Detect Multiple Diseases. In Mobile Radio Communications and 5G Networks; Springer: Singapore, 2022; pp. 297–312.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).