Abstract

Live weight monitoring is an important step in Hanwoo (Korean native cattle) livestock farming. The live weight of cattle can be measured by two approaches: direct and indirect methods. Recently, thanks to advances in sensor technology, data processing, and Machine Learning algorithms, indirect weight measurement has become more popular. This study was conducted to explore and evaluate the feasibility of machine learning algorithms for estimating the live body weight of Hanwoo cows using ten body measurements as input features. Various supervised Machine Learning algorithms, including Multilayer Perceptron, k-Nearest Neighbor, Light Gradient Boosting Machine, TabNet, and FT-Transformer, are employed to develop models that estimate live body weight from body measurement data. Correlation analysis is used to examine the relationship between the body size measurements (the features) and the weights (the target values to be estimated). The results show that all ten body measurements are highly correlated with live body weight. All applied Machine Learning models achieved high performance. It can be concluded that estimating the live body weight of Hanwoo cows with Machine Learning algorithms is feasible. Among all tested algorithms, LightGBM regression proved to be the best model in terms of performance, model complexity, and development time.

1. Introduction

In South Korea, consumers prefer native beef over the other types of beef on the market, despite its much higher price compared with imported products. Among the four native cattle breeds raised for beef, Hanwoo is the most popular [1]. With highly marbled fat, thin muscle fibers, and minimal connective tissue, Hanwoo beef is well known for its distinctive flavor [2]. Livestock management plays an important role in maintaining these valuable characteristics of the beef. Within livestock management, live weight monitoring is critical, since live weight is considered one of the most important traits affecting animal condition [3]. Accurately estimating or measuring live weight is therefore of fundamental importance to any livestock research and development.

Currently, there are two main approaches to measuring the live weight of livestock: direct and indirect methods. Direct measurement using scales can achieve very high accuracy, but it has several limitations. First, it requires removing bulls from their pens or paddocks and guiding them one by one to the weighing station or scale. This process is time-consuming and cumbersome, and workers who stay close to the bulls can be injured, as some animals are very stubborn. Second, the process is believed to cause stress and can be harmful to the bulls, even leading to weight loss or death [4]. Because of these disadvantages, various indirect measurement methods have been proposed as alternatives [4,5,6,7,8,9,10,11,12]. The indirect approach yields an estimate of the true live weight, since it computes that value indirectly from sensor data using computational techniques. Weight estimation from body size measurements has been used extensively in the livestock industry, for both carcass weight and live weight. A typical indirect live weight measurement method consists of three steps. First, the body characteristics and sizes of cattle are captured by sensors such as 2D cameras [4], thermal cameras [13], 3D cameras [14,15], and ultrasonic sensors [16,17]. Second, body features are extracted using data processing techniques. Finally, the body features are fed into a regression model to estimate body weight.

Estimating body weight from body measurements can be framed as a regression problem, in which the body measurements are the input features and body weight is the target value that the regression model must predict. Tedde et al. reported live weight estimation for dairy Holstein cattle with a Root Mean Square Error (RMSE) ranging from 52 to 56 kg [12]. Live weight estimation in pigs was studied by Sungirai et al. [11] and Al Ard Khanji et al. [18], and in sheep by Sabbioni et al. [10]. Note that the same estimation model can perform differently when applied to different cattle breeds. For example, regression analysis used to predict body weight from body measurements in Holstein, Brown Swiss, and crossbred cattle yielded R² values of 92%, 95%, and 68%, respectively [8]. For Hanwoo cattle, live weight estimation was studied by Jang et al., who reported an RMSE of 51.4 and a Mean Absolute Percentage Error (MAPE) of 17.1% using four body size measurements: body length, withers height, chest width, and body width [7].

Machine Learning (ML) has a long history dating back to 1959, when the term was coined by Arthur Samuel [19]. ML algorithms can be categorized into three types of learning: reinforcement learning, unsupervised learning, and supervised learning. Of these, supervised learning is the category applicable to estimating the live body weight of cattle. Supervised learning algorithms learn from labeled datasets to approximate the mapping function between inputs (features) and outputs (target values). There are a great many supervised learning algorithms, such as Linear Regression, k-Nearest Neighbor (kNN), Support Vector Machine (SVM), Decision Tree, and Artificial Neural Network (ANN), or simply Neural Network (NN). Among them, ANNs are known to be universal function approximators, able to approximate functions to arbitrary accuracy [20]. An ANN is an ML algorithm whose computational structure is inspired by the nervous systems of higher organisms [21]. A typical NN consists of an input layer, hidden layers, and an output layer. Deep Learning (DL) models, or Deep Neural Networks (DNNs), are neural networks with many layers of processing units. The main advantage of DNNs over shallow NNs is their ability to learn features automatically from raw data, without hand-crafted features. Nowadays, DL and DNNs dominate almost every kind of unstructured data: sequential data, 2D data, and 3D data. Various types of DNN have been proposed for different types of data. For example, Convolutional Neural Network (CNN) models are suited to image data [22], while attention-based neural network models dominate natural language processing applications [23]. For tabular data, tree-based ensemble learning is still believed to outperform other types of learning algorithms [24,25]. However, some recently proposed DNN models can achieve comparable performance on tabular data tasks [24].

In this paper, a dataset of 33,546 samples of Hanwoo cows is used to develop live weight predictive models. Each sample consists of 10 body measurements along with age and body weight. Live weight estimation of Hanwoo was previously studied by Jang et al. [7] using only four body measurements, but the predictive performance was relatively low (RMSE 51.4, MAPE 17.1%). This paper uses a dataset with more features and many more samples, and employs more advanced ML-based predictive models in addition to conventional ML algorithms. The main contributions of this paper are as follows:

- Analyze ten body measurements of Hanwoo and their impact on the prediction of body weight.

- Investigate ML algorithms in estimating live body weight.

- Improve predictive performance over previous studies.

2. Materials and Methods

2.1. Hanwoo Body Measurement Data

The Hanwoo data used in this research were provided by the National Institute of Livestock Science, Korean Rural Development Administration. The data consist of 33,546 records of male individuals aged 6, 12, 18, and 24 months. The numbers of individuals in the four age groups are 4088, 16,574, 7185, and 5699, respectively. The dataset is split into training, validation, and test sets with a ratio of 70%:15%:15%. The training and validation sets are used to develop the predictive models; during training, the validation set is used to detect and prevent overfitting. The test set is used to evaluate the performance of the trained models on unseen data.
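The 70%/15%/15% split described above can be sketched as follows. This is a minimal illustration assuming a simple uniform random split of record indices; the paper does not state whether the split was stratified by age group, and the function name and seed are hypothetical.

```python
import numpy as np

def split_70_15_15(n_samples, seed=0):
    """Randomly split record indices into ~70% train, ~15% validation, ~15% test."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)          # shuffled record indices
    n_train = int(round(0.70 * n_samples))
    n_val = int(round(0.15 * n_samples))
    train_idx = idx[:n_train]
    val_idx = idx[n_train:n_train + n_val]
    test_idx = idx[n_train + n_val:]          # remainder goes to the test set
    return train_idx, val_idx, test_idx

# 33,546 records, as in the Hanwoo dataset described above
train_idx, val_idx, test_idx = split_70_15_15(33_546)
print(len(train_idx), len(val_idx), len(test_idx))  # 23482 5032 5032
```

If each age group should be equally represented in every subset, a stratified split (for example, scikit-learn's `train_test_split` with its `stratify` argument) would be an alternative.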

2.2. Body Size Measurements

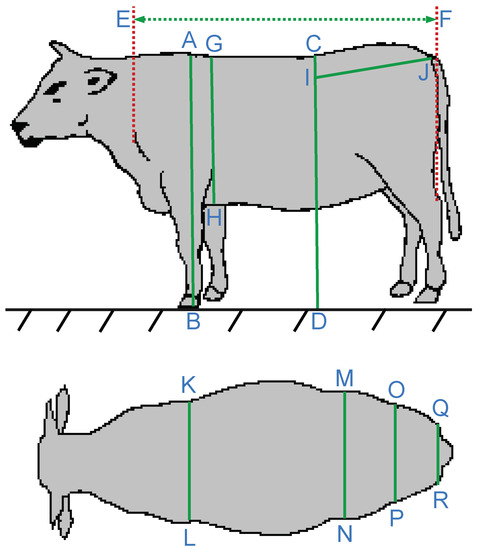

Each data sample is an observation of an individual animal, consisting of ten body size measurements in centimeters (cm), age in months, and weight in kilograms (kg). The body size measurement definitions follow [26]. Details are given in Figure 1 and Table 1. Statistical summaries of the data for each age group are given in Table 2, Table 3, Table 4, and Table 5.

Figure 1.

Hanwoo cow body measurements.

Table 1.

Hanwoo cow body measurements.

Table 2.

Statistical summary of 6-month age cows.

Table 3.

Statistical summary of 12-month age cows.

Table 4.

Statistical summary of 18-month age cows.

Table 5.

Statistical summary of 24-month age cows.

2.3. Machine Learning-Based Predictive Models

One of the major goals of this work is to investigate the performance of supervised ML algorithms in estimating Hanwoo cow live weight. Since there is a very large number of supervised ML algorithms, an exhaustive investigation of all of them is not feasible within the scope of this research. Therefore, three representative algorithms are considered: Light Gradient Boosting Machine (LightGBM) [27], TabNet [28], and FT-Transformer [29]. As noted above, tree-based ensemble learning is still generally considered to outperform other types of learning algorithms on tabular data [24,25], and among the many tree-based algorithms proposed, LightGBM is considered one of the most efficient [27]. Meanwhile, DNN models are used extensively for unstructured data, and researchers have recently attempted to apply them to tabular data tasks; the most prominent examples are TabNet and FT-Transformer.

In addition to these three modern ML models, two traditional ML algorithms, kNN and Multilayer Perceptron (MLP), are included for comparison. Of the five models used in this work, TabNet and FT-Transformer are DNN models, while kNN, MLP, and LightGBM are shallow ML models. Two metrics are used to evaluate weight estimation performance: Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE).
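For reference, the two metrics are conventionally defined as $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i}(y_i - \hat{y}_i)^2}$ and $\mathrm{MAPE} = \frac{100\%}{n}\sum_{i}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$. The NumPy sketch below implements these standard definitions; the function names are illustrative, and the paper does not specify which implementation was used. RMSE is in the units of the target (kg here), while MAPE is a percentage.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root Mean Squared Error, in the units of the target (e.g., kg)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mape(y_true, y_pred):
    """Mean Absolute Percentage Error, in percent; targets must be non-zero."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))

# Toy example: two animals weighing 400 kg and 500 kg
print(rmse([400, 500], [420, 480]))  # 20.0
print(mape([400, 500], [420, 480]))  # about 4.5
```

Note that scikit-learn's `mean_absolute_percentage_error` returns a fraction (0.045 in this example) rather than a percentage, so it would need to be multiplied by 100 to match MAPE values reported in percent.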

2.3.1. Machine Learning Models

LightGBM is a Gradient Boosting Decision Tree (GBDT) algorithm proposed by Ke et al. [27]. LightGBM incorporates two novel techniques: Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB). GOSS excludes a significant proportion of the data samples with small gradients and uses the remaining samples to estimate the information gain. EFB bundles mutually exclusive features to reduce the number of features. In this work, the LightGBM Python package (version 3.3.2) was used to build the model with 100 base learners (decision trees) and a maximum tree depth of 32.
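To make the GOSS idea concrete, the sketch below implements the sampling step as described by Ke et al. [27]: keep the fraction `a` of samples with the largest absolute gradients, randomly draw a fraction `b` of all samples from the rest, and up-weight those small-gradient samples by (1 − a)/b so that the information-gain estimate stays approximately unbiased. This is an illustrative NumPy re-implementation, not LightGBM's internal code; the argument names mirror LightGBM's `top_rate` and `other_rate` parameters, and the paper does not state whether GOSS was enabled. The reported configuration itself would correspond roughly to `lightgbm.LGBMRegressor(n_estimators=100, max_depth=32)`, with the remaining hyperparameters unreported.

```python
import numpy as np

def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return (indices, weights) selected by Gradient-based One-Side Sampling."""
    g = np.abs(np.asarray(gradients, dtype=float))
    n = g.size
    n_top = int(top_rate * n)        # large-gradient samples, always kept
    n_other = int(other_rate * n)    # small-gradient samples, randomly drawn
    order = np.argsort(-g)           # indices sorted by |gradient|, descending
    top_idx = order[:n_top]
    rng = np.random.default_rng(seed)
    other_idx = rng.choice(order[n_top:], size=n_other, replace=False)
    idx = np.concatenate([top_idx, other_idx])
    weights = np.ones(idx.size)
    # Amplify the sampled small-gradient instances to compensate for the
    # small-gradient samples that were dropped.
    weights[n_top:] = (1.0 - top_rate) / other_rate
    return idx, weights

grads = np.array([0.05, -2.0, 0.1, 1.5, -0.02, 0.3, -0.01, 0.08, 0.9, -0.2])
idx, w = goss_sample(grads)
print(idx, w)  # the two largest |gradients| (indices 1 and 3) come first
```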

MLP is a conventional NN whose parameters are updated by back-propagation training [30]. MLPs are universal function approximators, as shown by Cybenko's theorem [31]. kNN is a non-parametric ML algorithm, since it makes no assumption about the functional form of the data [32]. The kNN algorithm uses feature similarity to predict the target values of new samples: the target value of a new sample is computed from the target values of the training samples closest to it in feature space. The MLP model, composed of two hidden layers with 30 and 20 neurons, respectively, and the kNN model with were built with the Scikit-Learn Python package (version 1.0.2).
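The kNN prediction rule described above can be sketched in a few lines of NumPy. This is a minimal illustration assuming Euclidean distance and a uniform average over the k nearest neighbors, which are also the defaults of scikit-learn's `KNeighborsRegressor`; the value of k and the toy data are illustrative, not those used in the study. For comparison, the MLP described above would correspond to scikit-learn's `MLPRegressor(hidden_layer_sizes=(30, 20))`, with its other settings unreported.

```python
import numpy as np

def knn_regress(X_train, y_train, X_query, k=5):
    """Predict each query target as the mean target of its k nearest
    training samples (Euclidean distance, uniform weights)."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    X_query = np.atleast_2d(np.asarray(X_query, dtype=float))
    # Pairwise distances, shape (n_query, n_train)
    dists = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=-1)
    nearest = np.argsort(dists, axis=1)[:, :k]  # indices of the k closest samples
    return y_train[nearest].mean(axis=1)

# Toy data: one feature (e.g., a girth in cm) and a weight target in kg
X = [[150.0], [160.0], [170.0], [200.0]]
y = [300.0, 340.0, 380.0, 520.0]
print(knn_regress(X, y, [[161.0]], k=3))  # [340.]
```

Because kNN relies on raw distances, features on very different scales (for example, measurements in cm alongside age in months) would usually be standardized before fitting.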

2.3.2. Deep Neural Network Models

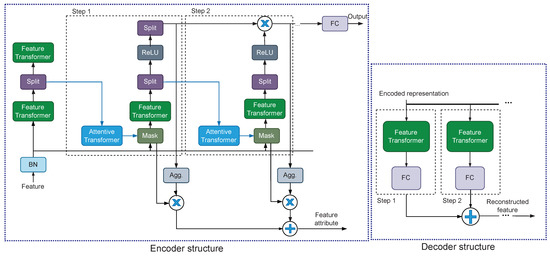

TabNet, proposed by Sercan Ö. Arık and Tomas Pfister in 2021 [28], is a DNN model that employs the attention mechanism for tabular data. A TabNet model consists of an encoder and a decoder, as shown in Figure 2. The encoder consists of a feature transformer, an attentive transformer, feature masking, a split block, and a Rectified Linear Unit (ReLU) layer. The decoder consists of a feature transformer and a Fully-connected (FC) layer in each step. In this work, the TabNet model was built with the Pytorch-Tabnet Python package (version 4.0). To train the model with back-propagation, the Adam optimization algorithm [33] was adopted with parameters , , and .

Figure 2.

Architecture of TabNet [28].
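The feature masking performed by TabNet's attentive transformer can be illustrated with a small NumPy sketch. Following the TabNet paper [28], the mask at each step is computed as sparsemax(P · h), where h stands for the attentive transformer's output (stand-in logits here) and P is a prior scale, which is then updated as P ← P · (γ − M) so that features already used are down-weighted in later steps. Unlike softmax, sparsemax can assign exactly zero weight to a feature. The function names and the value γ = 1.3 are illustrative assumptions; the complete model is provided as `TabNetRegressor` in the pytorch-tabnet package.

```python
import numpy as np

def sparsemax(z):
    """Euclidean projection of z onto the probability simplex.
    Unlike softmax, the output can contain exact zeros."""
    z = np.asarray(z, dtype=float)
    z_sorted = np.sort(z)[::-1]               # descending order
    cumsum = np.cumsum(z_sorted)
    k = np.arange(1, z.size + 1)
    in_support = 1.0 + k * z_sorted > cumsum  # entries that remain positive
    k_max = k[in_support][-1]
    tau = (cumsum[k_max - 1] - 1.0) / k_max   # threshold subtracted from z
    return np.maximum(z - tau, 0.0)

def attentive_mask_step(logits, prior, gamma=1.3):
    """One TabNet-style masking step (sketch):
    mask = sparsemax(prior * logits); the prior is then scaled by (gamma - mask)."""
    mask = sparsemax(prior * np.asarray(logits, dtype=float))
    new_prior = prior * (gamma - mask)
    return mask, new_prior

features = np.array([170.0, 140.0, 45.0])  # toy feature vector
prior = np.ones(3)                         # initial prior: 1 for every feature
mask, prior = attentive_mask_step([3.0, 1.0, 0.2], prior)
print(mask)             # [1. 0. 0.]  (only the first feature is selected)
print(mask * features)  # masked features passed on to the feature transformer
```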

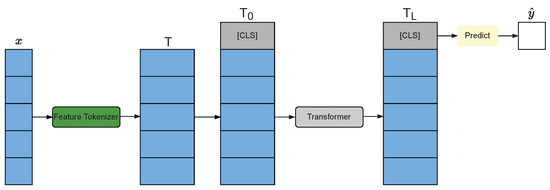

FT-Transformer, proposed by Gorishniy et al. [29] in 2021, is a simple and efficient adaptation of the Transformer architecture to tabular data. The name FT-Transformer stands for Feature Tokenizer Transformer. The FT-Transformer architecture consists of a Feature Tokenizer module and a Transformer module, as shown in Figure 3. The Feature Tokenizer module transforms the input features $x$ into embeddings $T$. After tokenization, the [CLS] token embedding is stacked with $T$ to obtain $T_0 = \mathrm{stack}\left[[\mathrm{CLS}],\, T\right]$. Then $L$ Transformer layers $F_1, \dots, F_L$ are applied to obtain $T_L$, where $T_i = F_i(T_{i-1})$. In this work, the FT-Transformer model was built with the RTDL Python package (version 0.0.13) [29]. To train the model with back-propagation, the AdamW optimization algorithm [33] was adopted with parameters , , , and .

Figure 3.

Architecture of FT-Transformer [29].
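The tokenization step of FT-Transformer can be sketched in NumPy. For numerical features, Gorishniy et al. [29] define each token as $T_j = b_j + x_j W_j$, with a learned vector $W_j$ and bias $b_j$ per feature; the [CLS] embedding is then stacked with these tokens to form $T_0$. The random weights below stand in for learned parameters, the embedding size is illustrative, and the function name is hypothetical; the RTDL package used in this work provides the complete model.

```python
import numpy as np

def feature_tokenize(x, W, b, cls):
    """Numerical Feature Tokenizer followed by [CLS] stacking.

    x:   (batch, k) numerical features
    W,b: (k, d) per-feature weight and bias vectors
    cls: (d,) [CLS] token embedding
    Returns T0 with shape (batch, k + 1, d).
    """
    T = b[None, :, :] + x[:, :, None] * W[None, :, :]   # T_j = b_j + x_j * W_j
    cls_tokens = np.broadcast_to(cls, (x.shape[0], 1, cls.size))
    return np.concatenate([cls_tokens, T], axis=1)      # T0 = stack[[CLS], T]

rng = np.random.default_rng(0)
k, d = 10, 8                       # 10 body measurements, embedding size 8
x = rng.normal(size=(4, k))        # a batch of 4 standardized samples
W, b, cls = rng.normal(size=(k, d)), rng.normal(size=(k, d)), rng.normal(size=d)
T0 = feature_tokenize(x, W, b, cls)
print(T0.shape)  # (4, 11, 8)
```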

3. Results

3.1. Correlation Analysis

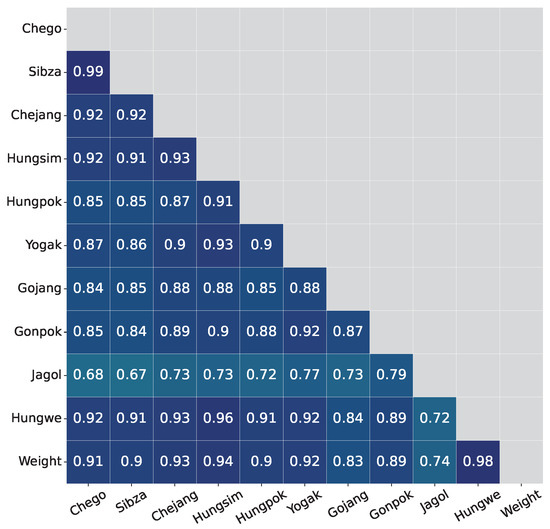

Pearson correlation [34] is used to measure the linear correlation between pairs of variables. The correlations between the body measurements and body weight are shown in Table 6. The corresponding p-values were also computed; all were very small, indicating that every measurement is significantly correlated with the live weight of the animals. Of all body measurements, Hungwe has the highest correlation with body weight in all age groups. The analysis also shows that all body size measurements are highly correlated with each other, as shown in Figure 4.

Table 6.

Pearson correlation between body measurements and body weight.

Figure 4.

Correlation values between body measurements.
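For reference, the Pearson coefficient between a measurement $x$ and body weight $y$ over $n$ animals is $r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2}\sqrt{\sum_i (y_i - \bar{y})^2}}$. The NumPy sketch below computes it directly from this definition and checks it against `np.corrcoef`, which also produces the full pairwise matrix of the kind visualized in Figure 4. The data are synthetic; if p-values are needed, `scipy.stats.pearsonr` returns both the coefficient and a p-value.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson correlation coefficient computed from its definition."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()   # center both variables
    return float(xc @ yc / np.sqrt((xc @ xc) * (yc @ yc)))

# Synthetic example: a girth-like measurement and a noisy weight
rng = np.random.default_rng(1)
girth = rng.normal(180.0, 10.0, size=200)
weight = 4.0 * girth - 300.0 + rng.normal(0.0, 20.0, size=200)
print(round(pearson_r(girth, weight), 3))

# Full correlation matrix for several variables (columns = variables)
data = np.column_stack([girth, weight])
print(np.corrcoef(data, rowvar=False))
```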

It can be observed that the correlations of Hungpok, Yogak, Gonpok, and Jagol with live weight vary considerably across the four age groups. For example, the correlation between Jagol and weight is only 0.295 in the 6-month data, but 0.65 in the 18-month data. Based on this observation, the body size measurements are organized into three groups: group A contains the variables with stable correlations (Hungwe, Chego, Sibza, Chejang, Hungsim, and Gojang); group B contains the variables with unstable correlations (Hungpok, Yogak, Gonpok, and Jagol); and group C contains all variables.
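The grouping logic can be made explicit: for each measurement, take its correlation with weight in each age group and measure how much it varies. The sketch below flags a feature as unstable when the range (max minus min) of its per-age correlations exceeds a threshold. The paper does not state a numeric criterion, so the threshold, the range statistic, the feature names, and the correlation values are all hypothetical.

```python
def split_by_stability(corr_by_age, threshold=0.2):
    """Split features into (stable, unstable) lists.

    corr_by_age maps a feature name to its correlations with weight,
    one per age group. A feature is 'unstable' when the range of those
    correlations exceeds `threshold` (a hypothetical criterion).
    """
    stable, unstable = [], []
    for feature, corrs in corr_by_age.items():
        spread = max(corrs) - min(corrs)
        (unstable if spread > threshold else stable).append(feature)
    return stable, unstable

# Toy correlations for the 6-, 12-, 18-, and 24-month groups (not study data)
toy = {
    "feature_1": [0.90, 0.92, 0.91, 0.93],
    "feature_2": [0.30, 0.50, 0.65, 0.60],
}
stable, unstable = split_by_stability(toy)
print(stable, unstable)  # ['feature_1'] ['feature_2']
group_c = stable + unstable  # group C: all features
```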

3.2. Live Weight Prediction Results

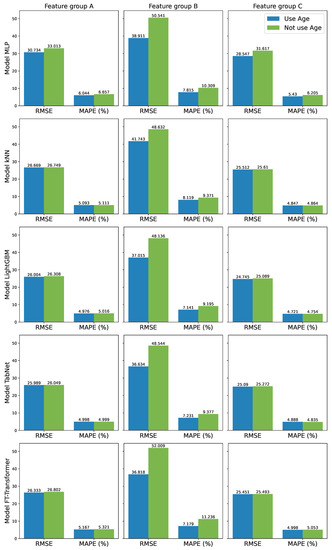

Each ML model is developed with three different groups of variables: A, B, and C. In addition, the age of each individual is considered as an independent variable and used as an input feature to train the predictive models. The training and validation datasets are used during training: the training dataset is used to update the model parameters, while the validation dataset is used to stop training early to prevent overfitting. The test dataset is reserved for evaluating the trained models. All final evaluation results computed on the test dataset are shown in and Figure 5.

Figure 5.

Estimation errors (RMSE and MAPE) of five models using different features.
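The validation-based early stopping described above follows a simple rule: keep the model from the epoch with the best validation loss and stop once the loss has not improved for a given number of epochs (the patience). A minimal, library-independent sketch is shown below; the patience value and the loss sequence are illustrative, and the paper does not report the stopping criteria used for each model. Libraries such as LightGBM and pytorch-tabnet provide their own built-in early-stopping options.

```python
def early_stopping(val_losses, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops at the first epoch where the loss has not improved for
    `patience` consecutive epochs; the model from best_epoch is kept.
    """
    best_loss, best_epoch = float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses) - 1  # ran out of epochs without stopping

losses = [10.0, 8.0, 6.0, 5.0, 5.5, 5.2, 5.1, 5.3, 5.4]  # illustrative values
print(early_stopping(losses))  # (3, 6)
```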

Among the three feature groups A, B, and C, group B, which consists of the unstable features (Hungpok, Yogak, Gonpok, and Jagol), has the poorest performance. Group A, which consists of the stable features (Hungwe, Chego, Sibza, Chejang, Hungsim, and Gojang), performs better. Group C, which includes all features, gives the best performance of all groups.

Comparing the cases with and without age as an input feature, a model that uses age often has lower RMSE and MAPE values. For feature groups A and C, the differences between using and not using age are very small. For feature group B, however, the difference is quite obvious. For example, FT-Transformer with age achieves an RMSE of 36.818 and a MAPE of 7.179%, but without age its performance degrades sharply, to an RMSE of 52.009 and a MAPE of 11.236%.

Among the five compared models, LightGBM has the best performance. Across all combinations, the best performance is obtained by LightGBM using the 10 body size measurements without age (RMSE = 24.754, MAPE = 4.721%). The worst case is FT-Transformer using only the four features Hungpok, Yogak, Gonpok, and Jagol without age, with RMSE = 52.009 and MAPE = 11.236%.

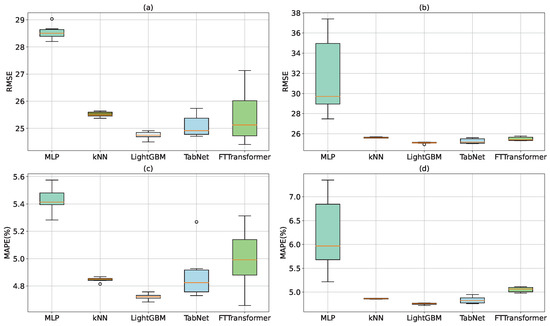

To reduce the effect of randomness when analyzing the prediction results, the experiments with each model were repeated 6 times, with random parameter initialization and a random training/validation split in each run. The dispersion analysis of the RMSE and MAPE errors is shown in Table 7 and Figure 6. In all cases, the kNN model has the smallest dispersion and the MLP model the largest. The small dispersion of kNN can be explained by its being a non-parametric model: it has no parameters to initialize randomly, so only the data split varies between runs. Overall, all models except MLP have small dispersion, which indicates that their prediction results are reliable.

Table 7.

Dispersion analysis of RMSE and MAPE errors in case of using all features.

Figure 6.

Dispersion analysis of RMSE and MAPE errors in case of using all features; (a,c): Using age as a feature; (b,d): Not using age.
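The dispersion statistics used for the repeated runs (mean, STD, and quartiles Q1, Q2, and Q3, as listed in the Abbreviations) can be computed from the per-run errors as sketched below. The sample standard deviation (ddof = 1) and NumPy's default linear-interpolation percentiles are assumptions, since the paper does not specify them; the six input values are placeholders, not results from this study.

```python
import numpy as np

def dispersion_summary(errors):
    """Mean, sample STD, and quartiles of an error metric over repeated runs."""
    e = np.asarray(errors, dtype=float)
    q1, q2, q3 = np.percentile(e, [25, 50, 75])
    return {
        "mean": float(e.mean()),
        "std": float(e.std(ddof=1)),   # sample standard deviation
        "q1": float(q1),
        "q2": float(q2),               # median
        "q3": float(q3),
    }

# Six placeholder error values, one per repeated run (not study data)
print(dispersion_summary([1.0, 2.0, 3.0, 4.0, 5.0, 6.0]))
```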

3.3. Feature Importance

Figure 7 shows the feature importance of the body measurements according to the LightGBM model when all features are used. Hungwe is the most important feature, followed by Chejang, while Jagol is the least important. All other features have lower and similar importance but still contribute substantially to the predictions of the LightGBM model. This result indicates that all features should be included in the predictive models.

Figure 7.

Feature importance according to LightGBM model.

4. Conclusions

In this work, ten body measurements of Hanwoo cows were used as input features for estimating live body weight. Pearson correlation analysis showed that all body measurements are highly correlated with body weight. Among all features, chest girth (Hungwe) has the highest correlation with body weight, while hip bone width (Jagol) has the lowest. The experimental results showed that the choice of feature set affects the performance of weight estimation, and using all features together gave the best performance for every estimation model. Age was also used as an additional feature, which gave slightly better results in most cases. Five different ML models were investigated and evaluated, and the tree-based LightGBM regression model demonstrated the highest performance. The results of this work will be used to develop an indirect live weight estimation system for Hanwoo, in which machine vision technology is used to automatically measure the ten body features of cows.

Author Contributions

Conceptualization, C.D. and D.H.; methodology, D.H. and S.H.; software, D.H.; validation, M.P., S.L. (Seungsoo Lee), S.L. (Soohyun Lee) and M.A.; formal analysis, M.A. and J.L.; investigation, C.D. and M.P.; resources, S.L. (Seungsoo Lee), S.L. (Soohyun Lee) and M.A.; data curation, D.H., S.L. (Seungsoo Lee), S.L. (Soohyun Lee) and M.A.; writing—original draft preparation, D.H. and S.H.; writing—review and editing, D.H. and S.H.; visualization, S.H.; supervision, C.D., T.C., S.L. (Seungsoo Lee) and S.L. (Soohyun Lee); project administration, C.D. and M.A.; funding acquisition, C.D., T.C., M.P., S.L. (Seungsoo Lee), S.L. (Soohyun Lee), M.A. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Korea Institute of Planning and Evaluation for Technology in Food, Agriculture and Forestry (IPET) and Korea Smart Farm R&D Foundation (KosFarm) through Smart Farm Innovation Technology Development Program, funded by Ministry of Agriculture, Food and Rural Affairs (MAFRA) and Ministry of Science and ICT (MSIT), Rural Development Administration (421050-03).

Institutional Review Board Statement

The animal study protocol was approved by the IACUC at National Institute of Animal Science (approval number: NIAS20222380).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| STD | Standard Deviation |
| Q1 | Quartile 1 |
| Q2 | Quartile 2 |
| Q3 | Quartile 3 |
| kNN | k-Nearest Neighbor |
| MLP | Multilayer Perceptron |
| FT-Transformer | Feature Tokenizer Transformer |
| NN | Neural Network |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| ML | Machine Learning |
| DL | Deep Learning |
| LightGBM | Light Gradient Boosting Machine |
| RMSE | Root Mean Squared Error |
| MAPE | Mean Absolute Percentage Error |

References

1. Jo, C.; Cho, S.H.; Chang, J.; Nam, K.C. Keys to production and processing of Hanwoo beef: A perspective of tradition and science. Anim. Front. 2012, 2, 32–38.

2. Kim, C.J.; Suck, J.S.; Ko, W.S.; Lee, E.S. Studies on the cold and frozen storage for the production of high quality meat of Korean native cattle II. Effects of cold and frozen storage on the drip, storage loss and cooking loss in Korean native cattle. J. Food Sci. 1994, 14, 151–154.

3. Selk, G.E.; Wettemann, R.P.; Lusby, K.S.; Oltjen, J.W.; Mobley, S.L.; Rasby, R.J.; Garmendia, J.C. Relationship among weight change, body condition and reproductive performance of range beef cows. J. Anim. Sci. 1988, 66, 3153–3159.

4. Wang, Z.; Shadpour, S.; Chan, E.; Rotondo, V.; Wood, K.M.; Tulpan, D. Applications of machine learning for livestock body weight prediction from digital images. J. Anim. Sci. 2021, 99, skab022.

5. Gomes, R.A.; Monteiro, G.R.; Assis, G.J.; Busato, K.C.; Ladeira, M.M.; Chizzotti, M.L. Technical note: Estimating body weight and body composition of beef cattle trough digital image analysis. J. Anim. Sci. 2016, 94, 5414–5422.

6. Martins, B.M.; Mendes, A.L.; Silva, L.F.; Moreira, T.R.; Costa, J.H.; Rotta, P.P.; Chizzotti, M.L.; Marcondes, M.I. Estimating body weight, body condition score, and type traits in dairy cows using three dimensional cameras and manual body measurements. Livest. Sci. 2020, 236, 104054.

7. Jang, D.H.; Kim, C.; Ko, Y.G.; Kim, Y.H. Estimation of Body Weight for Korean Cattle Using Three-Dimensional Image. J. Biosyst. Eng. 2020, 45, 325–332.

8. Ozkaya, S.; Bozkurt, Y. The relationship of parameters of body measures and body weight by using digital image analysis in pre-slaughter cattle. Arch. Anim. Breed. 2008, 51, 120–128.

9. Lee, D.H.; Lee, S.H.; Cho, B.K.; Wakholi, C.; Seo, Y.W.; Cho, S.H.; Kang, T.H.; Lee, W.H. Estimation of carcass weight of hanwoo (Korean native cattle) as a function of body measurements using statistical models and a neural network. Asian-Australas. J. Anim. Sci. 2020, 33, 1633.

10. Sabbioni, A.; Beretti, V.; Superchi, P.; Ablondi, M. Body weight estimation from body measures in Cornigliese sheep breed. Ital. J. Anim. Sci. 2020, 19, 25–30.

11. Sungirai, M.; Masaka, L.; Benhura, T.M. Validity of weight estimation models in pigs reared under different management conditions. Vet. Med. Int. 2014, 2014, 530469.

12. Tedde, A.; Grelet, C.; Ho, P.N.; Pryce, J.E.; Hailemariam, D.; Wang, Z.; Plastow, G.; Gengler, N.; Brostaux, Y.; Froidmont, E.; et al. Validation of Dairy Cow Bodyweight Prediction Using Traits Easily Recorded by Dairy Herd Improvement Organizations and Its Potential Improvement Using Feature Selection Algorithms. Animals 2021, 11, 1288.

13. Vindis, P.; Brus, M.; Stajnko, D.; Janzekovic, M. Non invasive weighing of live cattle by thermal image analysis. In New Trends in Technologies: Control, Management, Computational Intelligence and Network Systems; IntechOpen: London, UK, 2010.

14. Na, M.H.; Cho, W.H.; Kim, S.K.; Na, I.S. Automatic Weight Prediction System for Korean Cattle Using Bayesian Ridge Algorithm on RGB-D Image. Electronics 2022, 11, 1663.

15. Zin, T.T.; Seint, P.T.; Tin, P.; Horii, Y.; Kobayashi, I. Body condition score estimation based on regression analysis using a 3D camera. Sensors 2020, 20, 3705.

16. Wang, Q. A Body Measurement Method Based on the Ultrasonic Sensor. In Proceedings of the 2018 IEEE International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 18–20 August 2018; pp. 168–171.

17. Lawson, A.; Giri, K.; Thomson, A.; Karunaratne, S.; Smith, K.F.; Jacobs, J.; Morse-McNabb, E. Multi-site calibration and validation of a wide-angle ultrasonic sensor and precise GPS to estimate pasture mass at the paddock scale. Comput. Electron. Agric. 2022, 195, 106786.

18. Al Ard Khanji, M.S.; Llorente, C.; Falceto, M.V.; Bonastre, C.; Mitjana, O.; Tejedor, M.T. Using body measurements to estimate body weight in gilts. Can. J. Anim. Sci. 2018, 98, 362–367.

19. Samuel, A.L. Some studies in machine learning using the game of checkers. IBM J. Res. Dev. 2000, 44, 206–226.

20. Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

21. Zador, A.M. A critique of pure learning and what artificial neural networks can learn from animal brains. Nat. Commun. 2019, 10, 3770.

22. Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, Proceedings of the 31st Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Neural Information Processing Systems Foundation, Inc. (NeurIPS): Long Beach, CA, USA, 2017.

24. Borisov, V.; Leemann, T.; Seßler, K.; Haug, J.; Pawelczyk, M.; Kasneci, G. Deep neural networks and tabular data: A survey. arXiv 2021, arXiv:2110.01889.

25. Grinsztajn, L.; Oyallon, E.; Varoquaux, G. Why do tree-based models still outperform deep learning on tabular data? arXiv 2022, arXiv:2207.08815.

26. Lee, D.H.; Rho, S.H.; Park, M.N.; Lee, S.S.; Lee, S.H.; Mahboob, A.; Lee, Y.C.; Dang, C.G.; Chang, H.K.; Lee, J.G.; et al. Prediction of body weight with the body measurements in 12 months age Hanwoo. J. Anim. Breed. Genom. 2021, 5, 71–93.

27. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. Lightgbm: A highly efficient gradient boosting decision tree. In Advances in Neural Information Processing Systems, Proceedings of the 31st Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Neural Information Processing Systems Foundation, Inc. (NeurIPS): Long Beach, CA, USA, 2017.

28. Arik, S.Ö.; Pfister, T. Tabnet: Attentive interpretable tabular learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 6679–6687.

29. Gorishniy, Y.; Rubachev, I.; Khrulkov, V.; Babenko, A. Revisiting deep learning models for tabular data. Adv. Neural Inf. Process. Syst. 2021, 34, 18932–18943.

30. Haykin, S. Neural Networks: A Comprehensive Foundation; Hoboken: New Jersey, NJ, USA, 1994.

31. Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control. Signals Syst. 1989, 2, 303–314.

32. Fix, E.; Hodges, J.L. Discriminatory Analysis. Nonparametric Discrimination: Consistency Properties. Int. Stat. Rev./Rev. Int. De Stat. 1989, 57, 238–247.

33. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

34. Freedman, D.; Pisani, R.; Purves, R. Statistics (International Student Edition), 4th ed.; WW Norton & Company: New York, NY, USA, 2007.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).