Machine Learning-Based Live Weight Estimation for Hanwoo Cow

Abstract

1. Introduction

- Analyze ten body measurements of Hanwoo and their impact on the prediction of body weight.

- Investigate ML algorithms for estimating live body weight.

- Improve predictive performance over previous studies.

2. Materials and Methods

2.1. Hanwoo Body Measurement Data

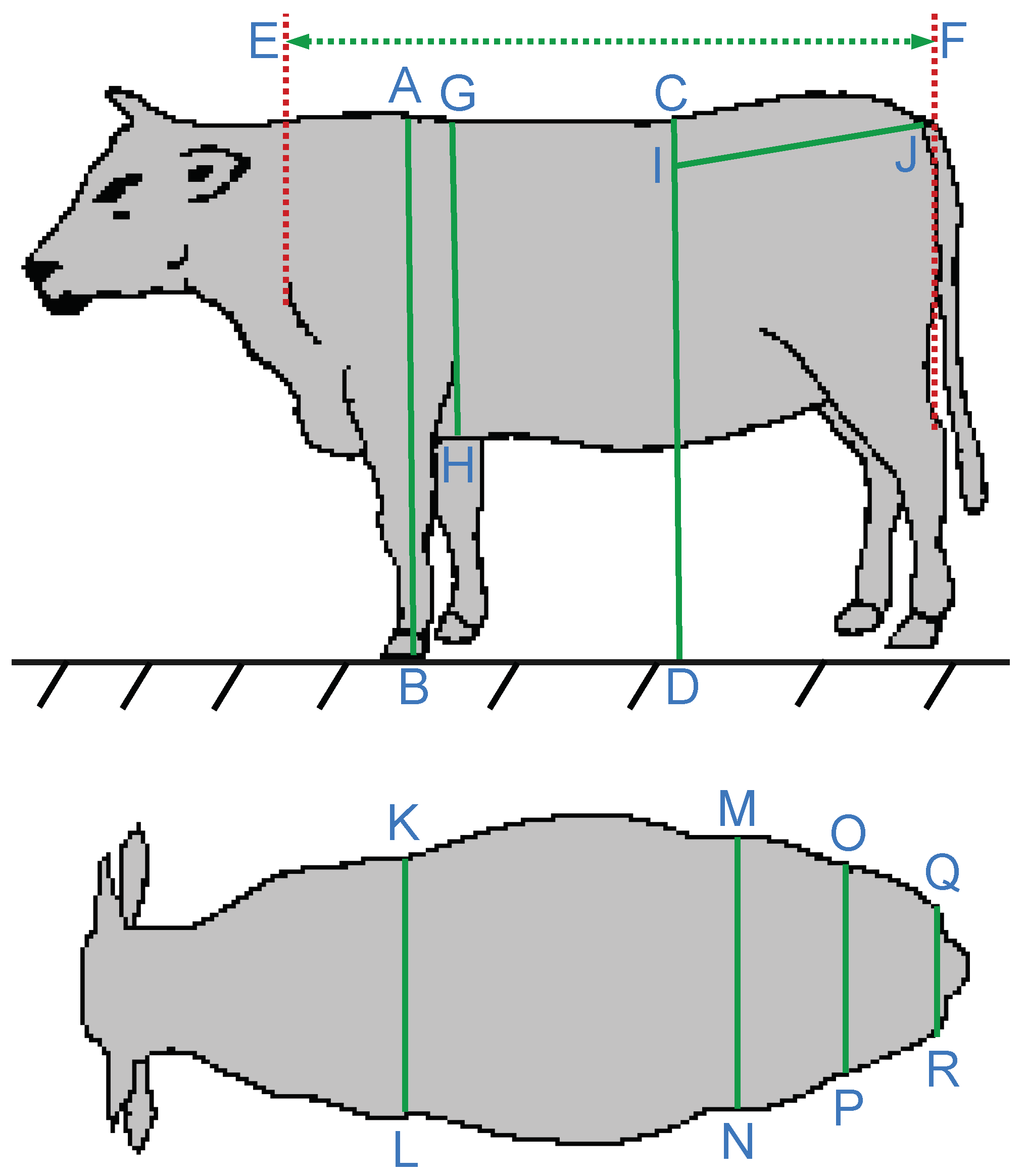

2.2. Body Size Measurements

2.3. Machine Learning-Based Predictive Models

2.3.1. Machine Learning Models

2.3.2. Deep Neural Network Models

3. Results

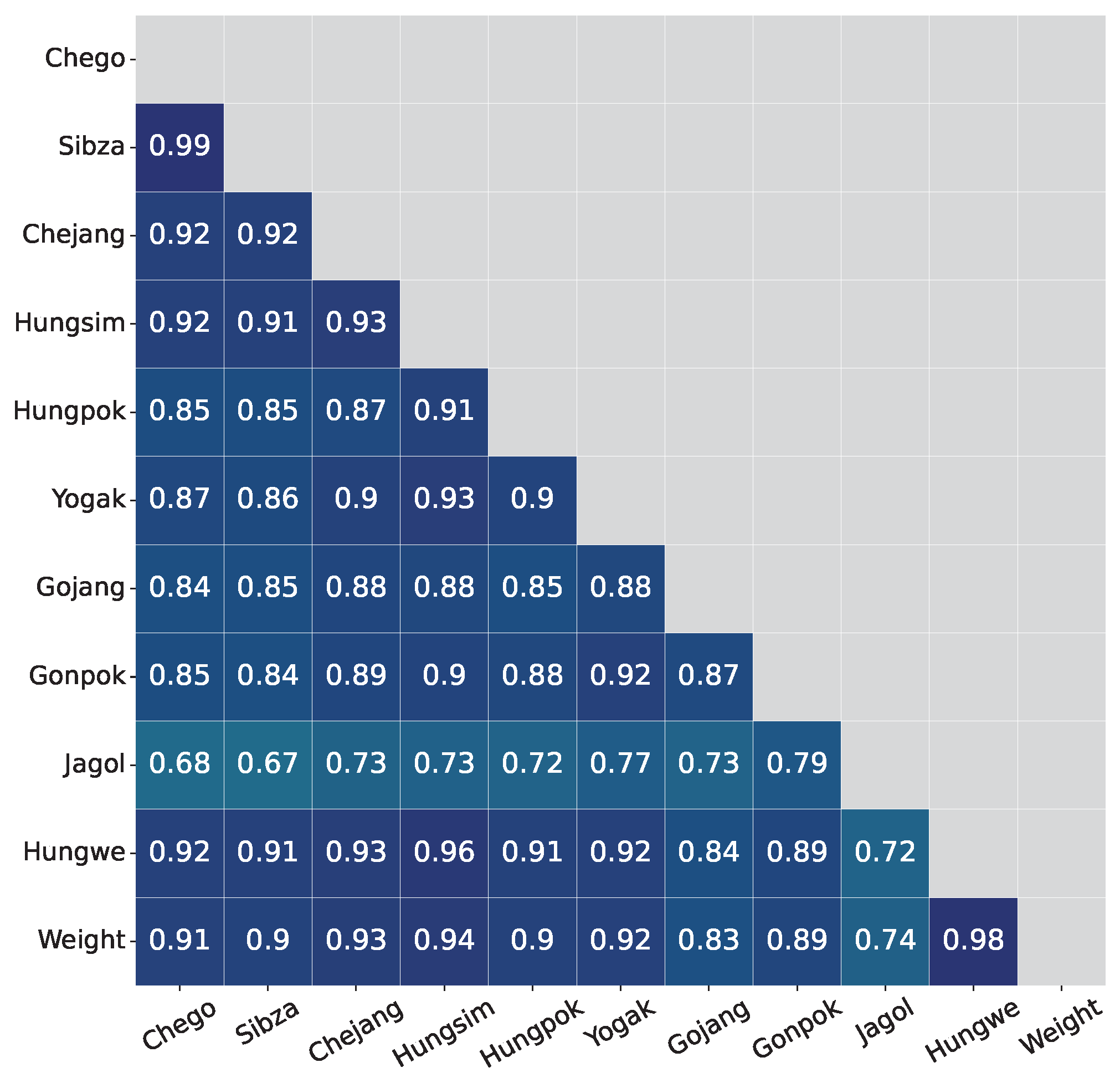

3.1. Correlation Analysis

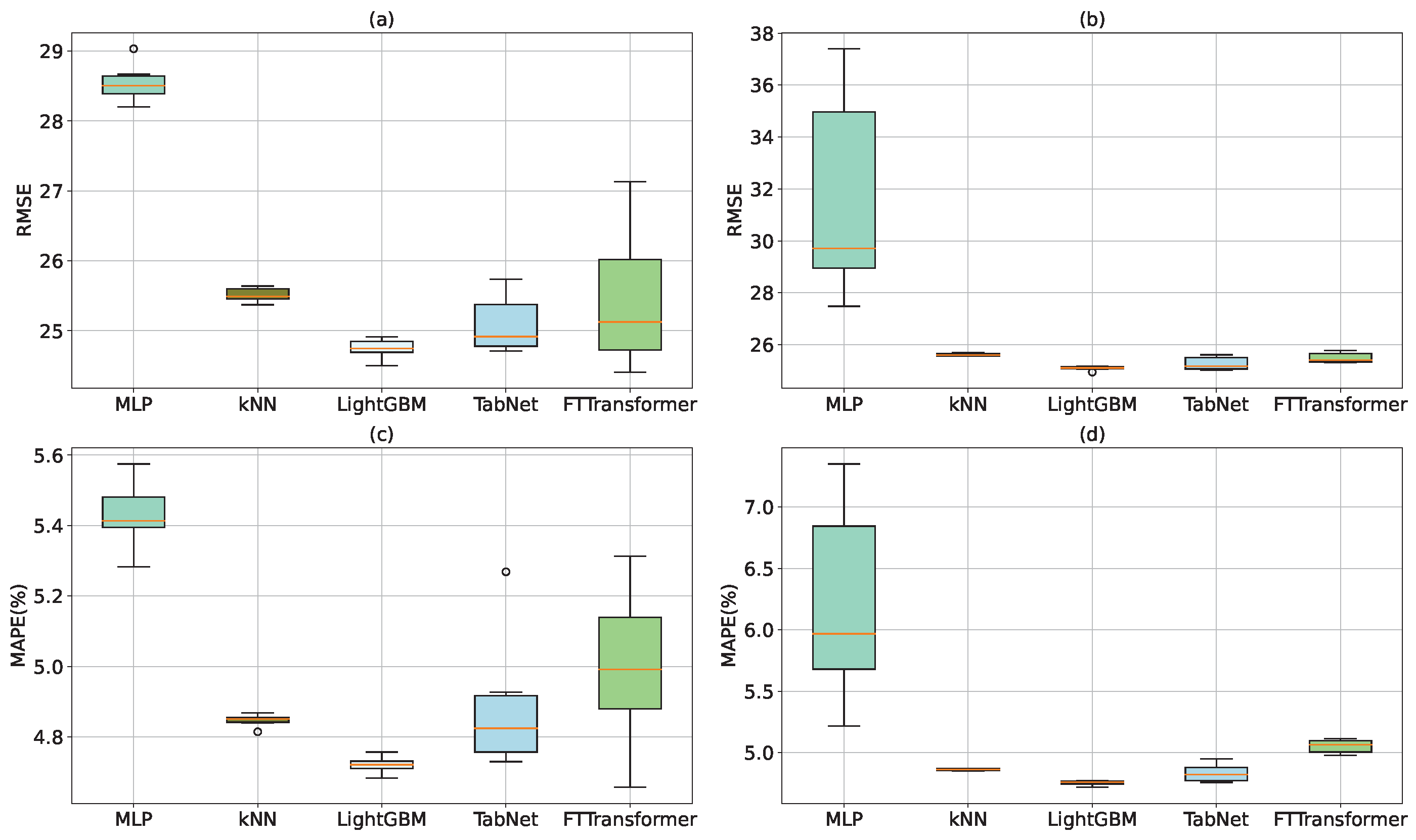

3.2. Live Weight Prediction Results

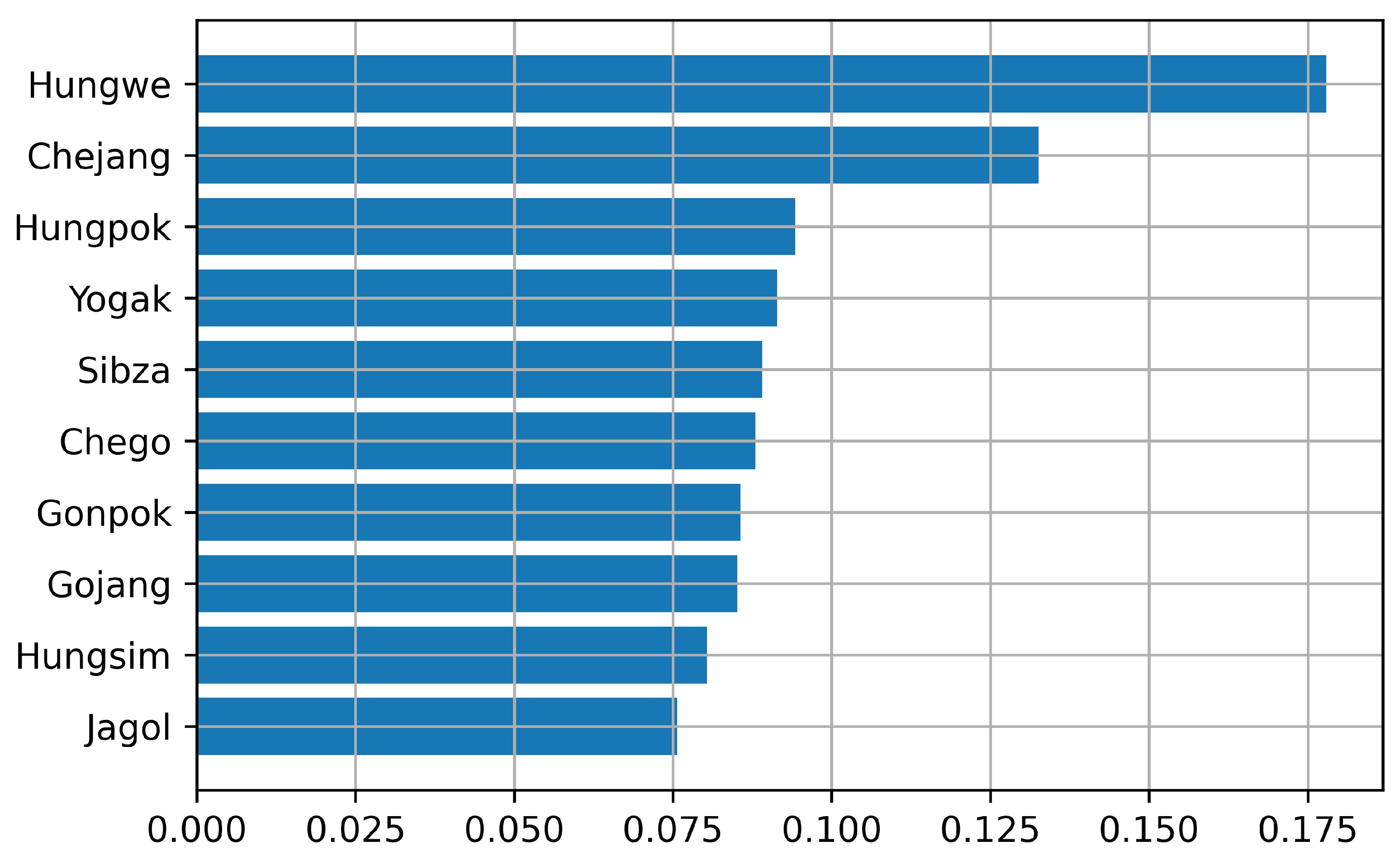

3.3. Feature Importance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| STD | Standard Deviation |
| Q1 | Quartile 1 |
| Q2 | Quartile 2 |
| Q3 | Quartile 3 |
| kNN | k-Nearest Neighbor |
| FT-Transformer | Feature Tokenizer Transformer |
| NN | Neural Network |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| MLP | Multilayer Perceptron |
| ML | Machine Learning |
| DL | Deep Learning |
| LightGBM | Light Gradient Boosting Machine |
| RMSE | Root Mean Squared Error |
| MAPE | Mean Absolute Percentage Error |
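
RMSE and MAPE are the two error metrics reported for the weight-prediction models. The sketch below uses their standard definitions: RMSE is the square root of the mean squared difference between measured and predicted weight (so it is in kg), and MAPE is the mean of |error| / measured weight, expressed in percent. The "mean ± STD" format of the results suggests a spread over repeated runs; the number of runs and the STD convention are not stated, so sample STD is an assumption here. The helper names `rmse`, `mape`, and `mean_pm_std` and all sample values are hypothetical, not the authors' code or data.

```python
import math
import statistics


def rmse(y_true, y_pred):
    """Root mean squared error; same unit as the target (kg for live weight)."""
    return math.sqrt(statistics.mean((t - p) ** 2 for t, p in zip(y_true, y_pred)))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent; assumes no zero targets."""
    return 100 * statistics.mean(abs((t - p) / t) for t, p in zip(y_true, y_pred))


def mean_pm_std(scores):
    """Format repeated-run scores as 'mean ± sample STD' (assumed convention)."""
    return f"{statistics.mean(scores):.3f} ± {statistics.stdev(scores):.3f}"


# Hypothetical measured vs. predicted live weights (kg) for three animals.
measured = [180.0, 360.0, 500.0]
predicted = [170.0, 375.0, 490.0]
print(f"RMSE = {rmse(measured, predicted):.3f} kg")
print(f"MAPE = {mape(measured, predicted):.3f} %")

# Hypothetical RMSE values from three repeated training runs.
print(mean_pm_std([24.6, 24.8, 24.9]))
```

Because RMSE squares the errors, a few badly predicted heavy animals raise it more than they raise MAPE, which is why the two metrics are usually reported together.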

References

- Jo, C.; Cho, S.H.; Chang, J.; Nam, K.C. Keys to production and processing of Hanwoo beef: A perspective of tradition and science. Anim. Front. 2012, 2, 32–38. [Google Scholar] [CrossRef]

- Kim, C.J.; Suck, J.S.; Ko, W.S.; Lee, E.S. Studies on the cold and frozen storage for the production of high quality meat of Korean native cattle II. Effects of cold and frozen storage on the drip, storage loss and cooking loss in Korean native cattle. J. Food Sci. 1994, 14, 151–154. [Google Scholar]

- Selk, G.E.; Wettemann, R.P.; Lusby, K.S.; Oltjen, J.W.; Mobley, S.L.; Rasby, R.J.; Garmendia, J.C. Relationship among weight change, body condition and reproductive performance of range beef cows. J. Anim. Sci. 1988, 66, 3153–3159. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Shadpour, S.; Chan, E.; Rotondo, V.; Wood, K.M.; Tulpan, D. Applications of machine learning for livestock body weight prediction from digital images. J. Anim. Sci. 2021, 99, skab022. [Google Scholar] [CrossRef]

- Gomes, R.A.; Monteiro, G.R.; Assis, G.J.; Busato, K.C.; Ladeira, M.M.; Chizzotti, M.L. Technical note: Estimating body weight and body composition of beef cattle trough digital image analysis. J. Anim. Sci. 2016, 94, 5414–5422. [Google Scholar] [CrossRef]

- Martins, B.M.; Mendes, A.L.; Silva, L.F.; Moreira, T.R.; Costa, J.H.; Rotta, P.P.; Chizzotti, M.L.; Marcondes, M.I. Estimating body weight, body condition score, and type traits in dairy cows using three dimensional cameras and manual body measurements. Livest. Sci. 2020, 236, 104054. [Google Scholar] [CrossRef]

- Jang, D.H.; Kim, C.; Ko, Y.G.; Kim, Y.H. Estimation of Body Weight for Korean Cattle Using Three-Dimensional Image. J. Biosyst. Eng. 2020, 45, 325–332. [Google Scholar] [CrossRef]

- Ozkaya, S.; Bozkurt, Y. The relationship of parameters of body measures and body weight by using digital image analysis in pre-slaughter cattle. Arch. Anim. Breed. 2008, 51, 120–128. [Google Scholar] [CrossRef]

- Lee, D.H.; Lee, S.H.; Cho, B.K.; Wakholi, C.; Seo, Y.W.; Cho, S.H.; Kang, T.H.; Lee, W.H. Estimation of carcass weight of hanwoo (Korean native cattle) as a function of body measurements using statistical models and a neural network. Asian-Australas. J. Anim. Sci. 2020, 33, 1633. [Google Scholar] [CrossRef]

- Sabbioni, A.; Beretti, V.; Superchi, P.; Ablondi, M. Body weight estimation from body measures in Cornigliese sheep breed. Ital. J. Anim. Sci. 2020, 19, 25–30. [Google Scholar] [CrossRef]

- Sungirai, M.; Masaka, L.; Benhura, T.M. Validity of weight estimation models in pigs reared under different management conditions. Vet. Med. Int. 2014, 2014, 530469. [Google Scholar] [CrossRef] [PubMed]

- Tedde, A.; Grelet, C.; Ho, P.N.; Pryce, J.E.; Hailemariam, D.; Wang, Z.; Plastow, G.; Gengler, N.; Brostaux, Y.; Froidmont, E.; et al. Validation of Dairy Cow Bodyweight Prediction Using Traits Easily Recorded by Dairy Herd Improvement Organizations and Its Potential Improvement Using Feature Selection Algorithms. Animals 2021, 11, 1288. [Google Scholar] [CrossRef] [PubMed]

- Vindis, P.; Brus, M.; Stajnko, D.; Janzekovic, M. Non invasive weighing of live cattle by thermal image analysis. In New Trends in Technologies: Control, Management, Computational Intelligence and Network Systems; IntechOpen: London, UK, 2010. [Google Scholar]

- Na, M.H.; Cho, W.H.; Kim, S.K.; Na, I.S. Automatic Weight Prediction System for Korean Cattle Using Bayesian Ridge Algorithm on RGB-D Image. Electronics 2022, 11, 1663. [Google Scholar] [CrossRef]

- Zin, T.T.; Seint, P.T.; Tin, P.; Horii, Y.; Kobayashi, I. Body condition score estimation based on regression analysis using a 3D camera. Sensors 2020, 20, 3705. [Google Scholar] [CrossRef] [PubMed]

- Wang, Q. A Body Measurement Method Based on the Ultrasonic Sensor. In Proceedings of the 2018 IEEE International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 18–20 August 2018; pp. 168–171. [Google Scholar]

- Lawson, A.; Giri, K.; Thomson, A.; Karunaratne, S.; Smith, K.F.; Jacobs, J.; Morse-McNabb, E. Multi-site calibration and validation of a wide-angle ultrasonic sensor and precise GPS to estimate pasture mass at the paddock scale. Comput. Electron. Agric. 2022, 195, 106786. [Google Scholar] [CrossRef]

- Al Ard Khanji, M.S.; Llorente, C.; Falceto, M.V.; Bonastre, C.; Mitjana, O.; Tejedor, M.T. Using body measurements to estimate body weight in gilts. Can. J. Anim. Sci. 2018, 98, 362–367. [Google Scholar] [CrossRef]

- Samuel, A.L. Some studies in machine learning using the game of checkers. IBM J. Res. Dev. 2000, 44, 206–226. [Google Scholar] [CrossRef]

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366. [Google Scholar] [CrossRef]

- Zador, A.M. A critique of pure learning and what artificial neural networks can learn from animal brains. Nat. Commun. 2019, 10, 3770. [Google Scholar] [CrossRef]

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, Proceedings of the 31st Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Neural Information Processing Systems Foundation, Inc. (NeurIPS): Long Beach, CA, USA, 2017. [Google Scholar]

- Borisov, V.; Leemann, T.; Seßler, K.; Haug, J.; Pawelczyk, M.; Kasneci, G. Deep neural networks and tabular data: A survey. arXiv 2021, arXiv:2110.01889. [Google Scholar]

- Grinsztajn, L.; Oyallon, E.; Varoquaux, G. Why do tree-based models still outperform deep learning on tabular data? arXiv 2022, arXiv:2207.08815. [Google Scholar]

- Lee, D.H.; Rho, S.H.; Park, M.N.; Lee, S.S.; Lee, S.H.; Mahboob, A.; Lee, Y.C.; Dang, C.G.; Chang, H.K.; Lee, J.G.; et al. Prediction of body weight with the body measurements in 12 months age Hanwoo. J. Anim. Breed. Genom. 2021, 5, 71–93. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. In Advances in Neural Information Processing Systems, Proceedings of the 31st Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Neural Information Processing Systems Foundation, Inc. (NeurIPS): Long Beach, CA, USA, 2017. [Google Scholar]

- Arik, S.Ö.; Pfister, T. TabNet: Attentive interpretable tabular learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 6679–6687. [Google Scholar]

- Gorishniy, Y.; Rubachev, I.; Khrulkov, V.; Babenko, A. Revisiting deep learning models for tabular data. Adv. Neural Inf. Process. Syst. 2021, 34, 18932–18943. [Google Scholar]

- Haykin, S. Neural Networks: A Comprehensive Foundation; Hoboken: New Jersey, NJ, USA, 1994. [Google Scholar]

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control. Signals Syst. 1989, 2, 303–314. [Google Scholar] [CrossRef]

- Fix, E.; Hodges, J.L. Discriminatory Analysis. Nonparametric Discrimination: Consistency Properties. Int. Stat. Rev./Rev. Int. De Stat. 1989, 57, 238–247. [Google Scholar] [CrossRef]

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101. [Google Scholar]

- Freedman, D.; Pisani, R.; Purves, R. Statistics (International Student Edition), 4th ed.; WW Norton & Company: New York, NY, USA, 2007. [Google Scholar]

| Feature Name | Description | Annotation |
|---|---|---|
| Chego | Wither height | A–B |
| Sibza | Hip height | C–D |
| Chejang | Body length | E–F |
| Hungsim | Chest depth | G–H |
| Gojang | Rump length | I–J |
| Hungpok | Chest width | K–L |
| Yogak | Rump width | M–N |
| Gonpok | Pelvic width | O–P |
| Jagol | Hip bone width | Q–R |
| Hungwe | Chest girth | Circumference of G–H |

| | Chego (cm) | Sibza (cm) | Chejang (cm) | Hungsim (cm) | Hungpok (cm) | Yogak (cm) | Gojang (cm) | Gonpok (cm) | Jagol (cm) | Hungwe (cm) | Weight (kg) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 102.168 | 105.003 | 108.249 | 50.309 | 29.036 | 28.914 | 36.322 | 32.225 | 19.222 | 131.345 | 183.366 |
| STD | 5.422 | 5.205 | 7.393 | 4.070 | 4.473 | 4.219 | 4.401 | 4.128 | 3.583 | 7.400 | 25.367 |
| Min | 85 | 87 | 85 | 32 | 16 | 17 | 24 | 20 | 11 | 106 | 104 |
| Q1 | 99 | 102 | 103 | 48 | 26 | 26 | 34 | 30 | 17 | 127 | 167 |
| Q2 | 102 | 105 | 108 | 50 | 29 | 28 | 36 | 32 | 19 | 132 | 184 |
| Q3 | 106 | 109 | 113 | 52 | 31 | 31 | 39 | 34 | 20 | 136 | 201 |
| Max | 119 | 123 | 132 | 65 | 47 | 42 | 50 | 45 | 32 | 155 | 266 |

| | Chego (cm) | Sibza (cm) | Chejang (cm) | Hungsim (cm) | Hungpok (cm) | Yogak (cm) | Gojang (cm) | Gonpok (cm) | Jagol (cm) | Hungwe (cm) | Weight (kg) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 120.866 | 123.228 | 135.840 | 62.432 | 38.411 | 37.976 | 45.709 | 40.628 | 22.206 | 169.321 | 362.839 |
| STD | 4.424 | 4.527 | 6.708 | 2.782 | 3.762 | 2.945 | 3.217 | 3.102 | 2.641 | 8.201 | 44.940 |
| Min | 105 | 107 | 113 | 42 | 26 | 25 | 32 | 27 | 14 | 141 | 218 |
| Q1 | 118 | 120 | 132 | 61 | 36 | 36 | 44 | 39 | 20 | 164 | 332 |
| Q2 | 121 | 123 | 136 | 62 | 38 | 38 | 46 | 41 | 22 | 169 | 360 |
| Q3 | 124 | 126 | 140 | 64 | 41 | 40 | 48 | 43 | 24 | 175 | 392 |
| Max | 135 | 139 | 157 | 77 | 53 | 51 | 60 | 54 | 30 | 197 | 508 |

| | Chego (cm) | Sibza (cm) | Chejang (cm) | Hungsim (cm) | Hungpok (cm) | Yogak (cm) | Gojang (cm) | Gonpok (cm) | Jagol (cm) | Hungwe (cm) | Weight (kg) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 129.598 | 130.950 | 148.816 | 70.228 | 44.386 | 44.712 | 49.506 | 45.579 | 24.536 | 193.284 | 498.297 |
| STD | 6.090 | 6.178 | 7.951 | 3.522 | 4.388 | 3.593 | 3.871 | 3.930 | 3.348 | 10.501 | 66.607 |
| Min | 110 | 111 | 124 | 59 | 31 | 32 | 38 | 34 | 16 | 159 | 296 |
| Q1 | 126 | 128 | 144 | 68 | 42 | 42 | 47 | 43 | 22 | 186 | 452 |
| Q2 | 130 | 132 | 149 | 70 | 44 | 45 | 50 | 46 | 24 | 194 | 496 |
| Q3 | 134 | 135 | 155 | 73 | 47 | 47 | 52 | 48 | 27 | 201 | 541 |
| Max | 148 | 150 | 172 | 81 | 57 | 59 | 61 | 57 | 34 | 224 | 700 |

| | Chego (cm) | Sibza (cm) | Chejang (cm) | Hungsim (cm) | Hungpok (cm) | Yogak (cm) | Gojang (cm) | Gonpok (cm) | Jagol (cm) | Hungwe (cm) | Weight (kg) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 136.937 | 137.843 | 158.914 | 76.848 | 50.985 | 50.288 | 53.036 | 50.231 | 27.653 | 217.597 | 652.760 |
| STD | 4.256 | 4.278 | 7.166 | 3.107 | 3.936 | 3.193 | 3.618 | 3.608 | 3.088 | 9.225 | 66.245 |
| Min | 124 | 125 | 136 | 66 | 39 | 41 | 42 | 39 | 20 | 189 | 458 |
| Q1 | 134 | 135 | 154 | 75 | 48 | 48 | 50 | 48 | 25 | 211 | 606 |
| Q2 | 137 | 138 | 159 | 77 | 51 | 50 | 53 | 50 | 27 | 217 | 648 |
| Q3 | 140 | 141 | 164 | 79 | 54 | 52 | 56 | 53 | 30 | 224 | 696 |
| Max | 150 | 151 | 181 | 88 | 63 | 60 | 64 | 61 | 37 | 246 | 865 |
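
Each descriptive-statistics table summarizes one group with Mean, STD, Min, Q1, Q2 (median), Q3, and Max per column. A minimal Python sketch of how such a summary can be computed for a single column, assuming STD is the sample standard deviation (n - 1 denominator) and quartiles are linearly interpolated (`statistics.quantiles` with `method="inclusive"`); the paper does not state either convention, and the readings below are hypothetical rather than taken from the dataset.

```python
import statistics


def summarize(values):
    """Return the Mean/STD/Min/Q1/Q2/Q3/Max summary for one column.

    Assumptions: STD uses the n - 1 denominator (statistics.stdev), and
    quartiles interpolate linearly between order statistics.
    """
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "Mean": statistics.mean(values),
        "STD": statistics.stdev(values),
        "Min": min(values),
        "Q1": q1,
        "Q2": q2,
        "Q3": q3,
        "Max": max(values),
    }


# Hypothetical chest-girth (Hungwe) readings in cm for five animals.
hungwe_cm = [127, 130, 132, 134, 136]
for name, value in summarize(hungwe_cm).items():
    print(f"{name}: {value:.3f}")
```

Because the measurements are recorded as whole centimetres, interpolated quartiles often land on integers, consistent with the integer Q1 to Q3 entries in the tables.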

| Feature | 6-Month Cow | 12-Month Cow | 18-Month Cow | 24-Month Cow | STD |
|---|---|---|---|---|---|
| Chego | 0.695 | 0.627 | 0.669 | 0.559 | 0.059 |
| Sibza | 0.720 | 0.680 | 0.666 | 0.551 | 0.073 |
| Chejang | 0.736 | 0.799 | 0.753 | 0.703 | 0.040 |
| Hungsim | 0.558 | 0.699 | 0.707 | 0.670 | 0.069 |
| Hungpok | 0.464 | 0.708 | 0.676 | 0.569 | 0.111 |
| Yogak | 0.474 | 0.628 | 0.700 | 0.616 | 0.095 |
| Gojang | 0.520 | 0.619 | 0.577 | 0.490 | 0.058 |
| Gonpok | 0.466 | 0.710 | 0.682 | 0.662 | 0.111 |
| Jagol | 0.295 | 0.544 | 0.650 | 0.612 | 0.160 |
| Hungwe | 0.896 | 0.913 | 0.923 | 0.904 | 0.012 |
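
The STD column is consistent with the sample standard deviation (n - 1 denominator) of each feature's four age-specific correlations: recomputing it from the tabulated values gives 0.059 for Chego, 0.160 for Jagol, and 0.012 for Hungwe, matching the table. The sketch below assumes the coefficients are Pearson correlations, which the table does not state explicitly; `pearson_r` is a plain illustrative implementation, and `per_age_r` simply copies three rows of the table.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences (assumed measure)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Correlations with live weight at 6, 12, 18, and 24 months (copied from the table).
per_age_r = {
    "Chego": [0.695, 0.627, 0.669, 0.559],
    "Jagol": [0.295, 0.544, 0.650, 0.612],
    "Hungwe": [0.896, 0.913, 0.923, 0.904],
}

# Spread of each feature's correlation across ages (sample STD, n - 1).
for feature, rs in per_age_r.items():
    print(f"{feature}: {statistics.stdev(rs):.3f}")
# prints Chego: 0.059, Jagol: 0.160, Hungwe: 0.012 (one per line)
```

A small STD, as for Hungwe (chest girth), means the feature's relationship with weight is stable across ages, while Jagol's large STD reflects its weak correlation at 6 months.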

| Model | Features | RMSE (kg) | MAPE (%) |
|---|---|---|---|
| MLP | All features, with age as an additional feature | 28.547 ± 0.263 | 5.430 ± 0.091 |
| kNN | All features, with age as an additional feature | 25.512 ± 0.096 | 4.847 ± 0.017 |
| LightGBM | All features, with age as an additional feature | 24.745 ± 0.135 | 4.721 ± 0.023 |
| TabNet | All features, with age as an additional feature | 25.090 ± 0.388 | 4.888 ± 0.185 |
| FT-Transformer | All features, with age as an additional feature | 25.451 ± 0.956 | 4.998 ± 0.212 |
| MLP | All features, without age | 31.617 ± 3.911 | 6.205 ± 0.777 |
| kNN | All features, without age | 25.610 ± 0.049 | 4.864 ± 0.008 |
| LightGBM | All features, without age | 25.089 ± 0.072 | 4.754 ± 0.019 |
| TabNet | All features, without age | 25.272 ± 0.243 | 4.835 ± 0.069 |
| FT-Transformer | All features, without age | 25.493 ± 0.192 | 5.053 ± 0.053 |
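
The results compare models trained on the ten body measurements with and without age as an extra input. To make that setup concrete for one of the compared models, here is a minimal k-nearest-neighbor regression sketch in plain Python. The choice of k, Euclidean distance, and z-score scaling are assumptions (the authors' kNN settings are not reported here), and `with_age`, `fit_predict`, and the sample rows, which use only two of the ten features, are hypothetical.

```python
import math
import statistics


def with_age(rows, ages):
    """Append age (months) to each measurement row as an extra feature."""
    return [list(row) + [age] for row, age in zip(rows, ages)]


def standardize(train_rows, rows):
    """Z-score each column using the training rows' mean and sample STD."""
    cols = list(zip(*train_rows))
    means = [statistics.mean(c) for c in cols]
    # Guard against a constant column (STD of 0) by falling back to 1.0.
    stds = [statistics.stdev(c) or 1.0 for c in cols]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def knn_predict(train_x, train_y, query, k=3):
    """Average the targets of the k training rows nearest to `query` (Euclidean)."""
    nearest = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))
    return statistics.mean(y for _, y in nearest[:k])


def fit_predict(train_rows, train_y, query_rows, k=3):
    """Scale with training statistics, then predict each query row by kNN."""
    z_train = standardize(train_rows, train_rows)
    z_query = standardize(train_rows, query_rows)
    return [knn_predict(z_train, train_y, q, k) for q in z_query]


# Hypothetical training rows: [Chego, Hungwe] in cm (a subset of the ten features).
train_rows = [[100, 130], [120, 170], [130, 195], [137, 218]]
train_ages = [6, 12, 18, 24]
train_weight = [183, 363, 498, 653]

query_rows, query_ages = [[121, 169]], [12]
print(fit_predict(train_rows, train_weight, query_rows, k=2))
print(fit_predict(with_age(train_rows, train_ages), train_weight,
                  with_age(query_rows, query_ages), k=2))
```

Scaling is included because raw kNN distances would otherwise be dominated by the widest-ranging measurements, such as chest girth, rather than by all features equally.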

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dang, C.; Choi, T.; Lee, S.; Lee, S.; Alam, M.; Park, M.; Han, S.; Lee, J.; Hoang, D. Machine Learning-Based Live Weight Estimation for Hanwoo Cow. Sustainability 2022, 14, 12661. https://doi.org/10.3390/su141912661


Chicago/Turabian Style: Dang, Changgwon, Taejeong Choi, Seungsoo Lee, Soohyun Lee, Mahboob Alam, Mina Park, Seungkyu Han, Jaegu Lee, and Duytang Hoang. 2022. "Machine Learning-Based Live Weight Estimation for Hanwoo Cow." Sustainability 14, no. 19: 12661. https://doi.org/10.3390/su141912661
