Developing a Mobile Mapping System for 3D GIS and Smart City Planning

Abstract

1. Introduction

2. Related Work

- (1) To acquire high-quality texture information for 3D building modeling and to create a hybrid approach;

- (2) To produce automated 3D building models that improve the public's spatial perception of the urban area, which requires that a mapping car be made available;

- (3) To develop application software for the MMS that collects high-quality texture information on 3D buildings at a low price point.

3. Methods

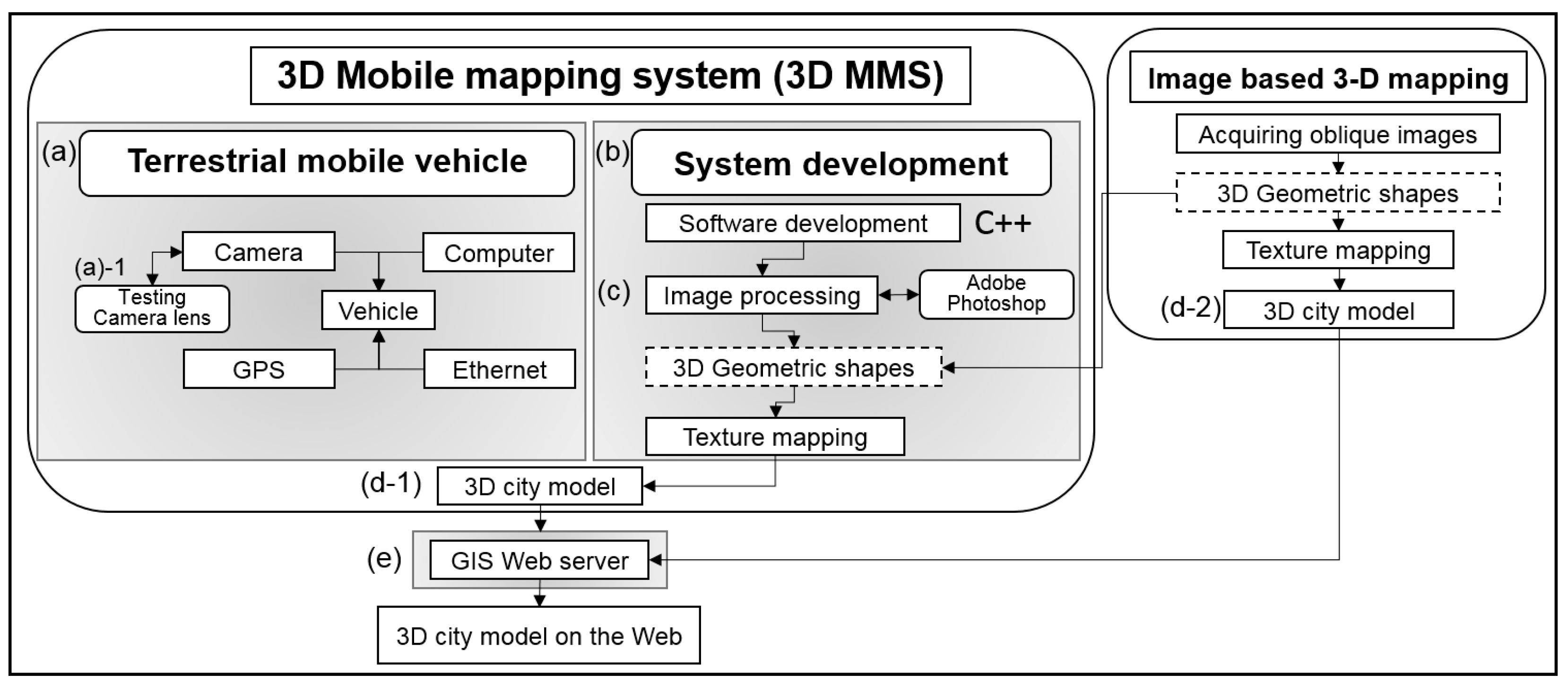

3.1. Workflow and Data Collection

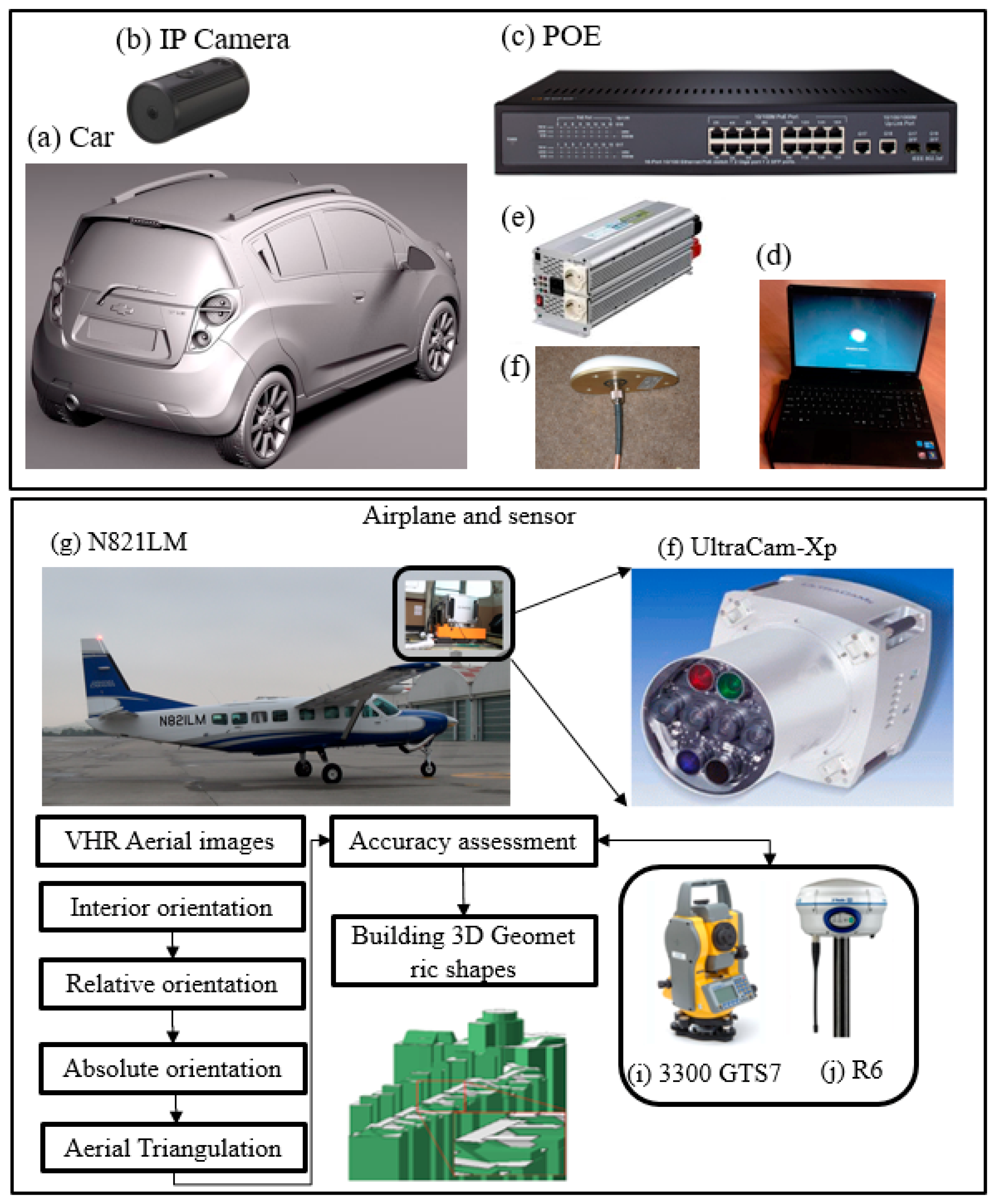

3.2. Materials and MMS Design

4. Results

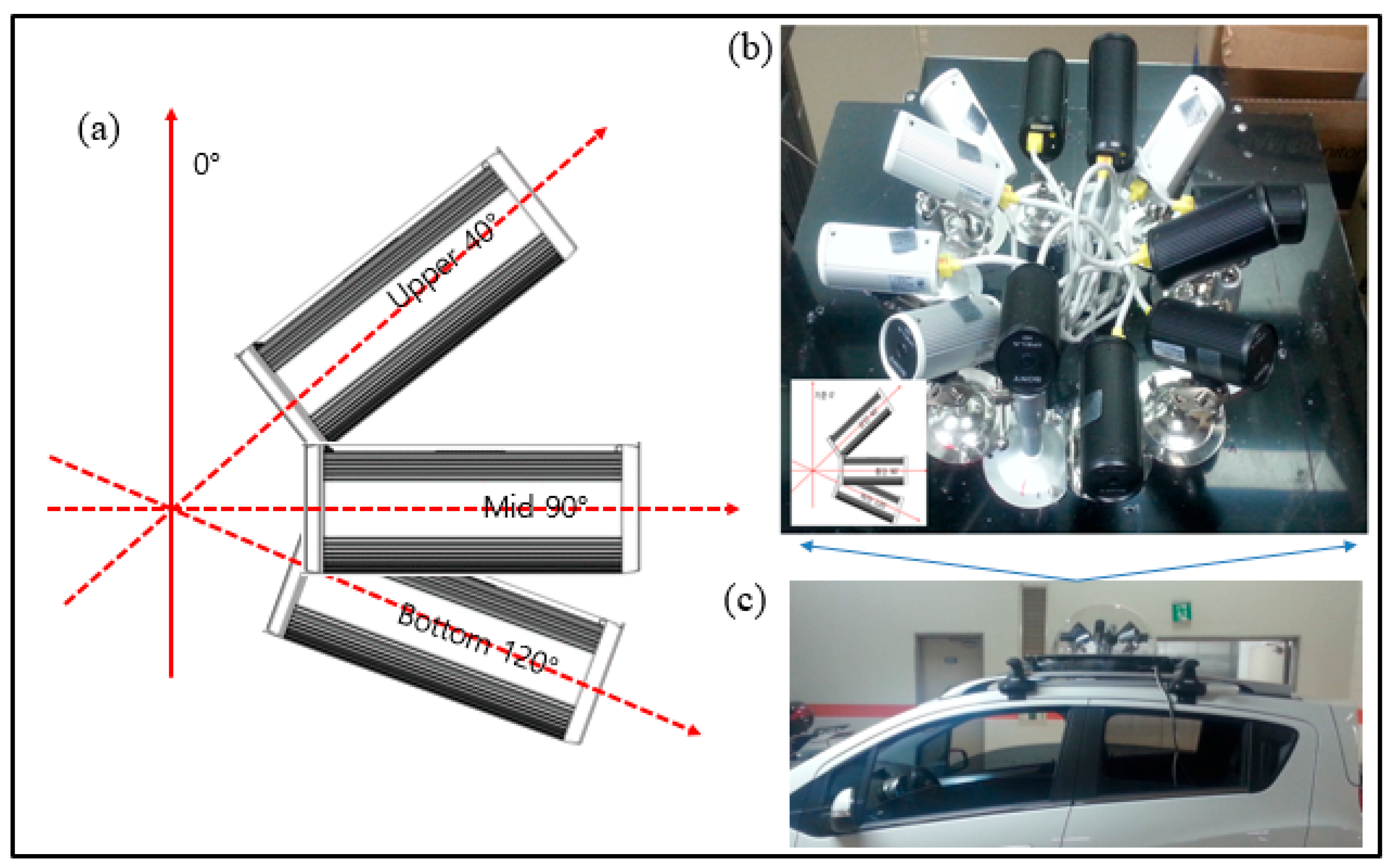

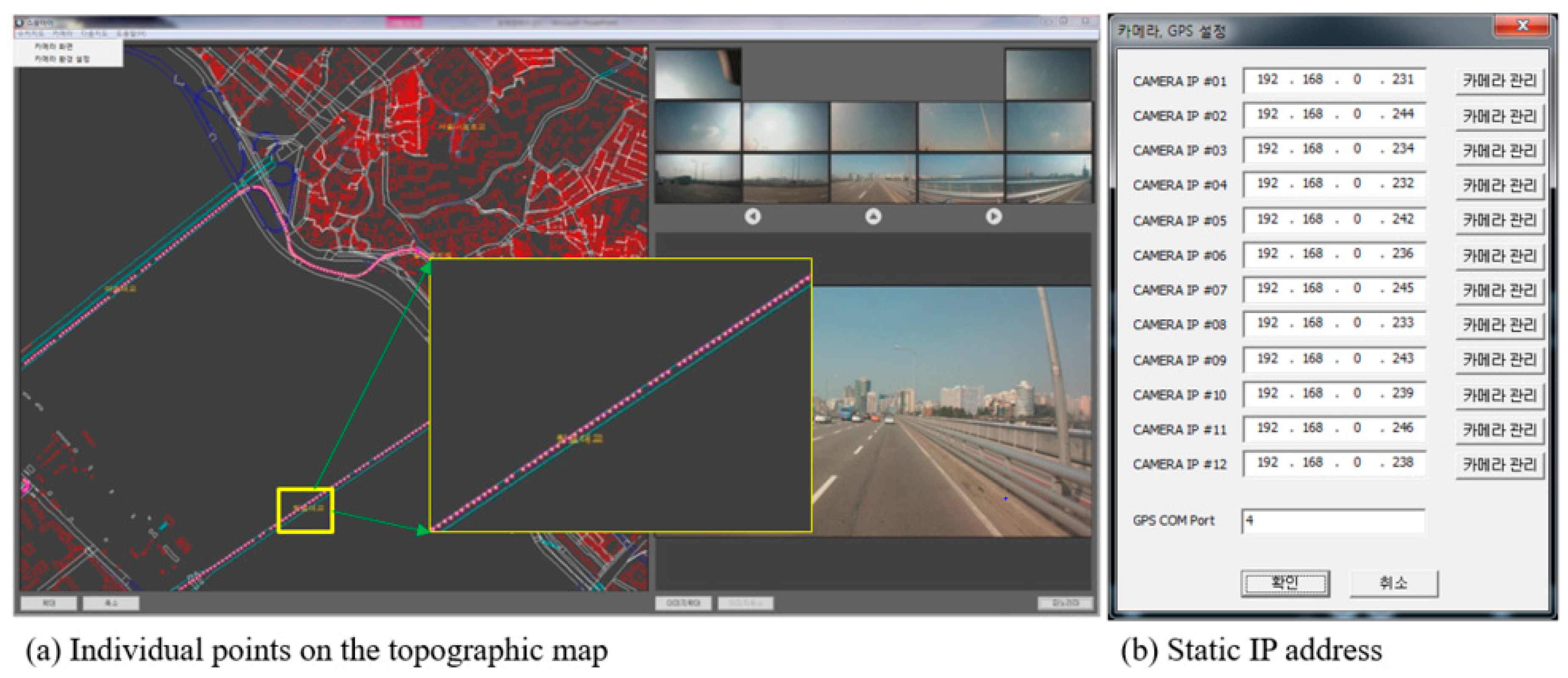

4.1. Developing a Mobile Vehicle for 3D Mapping System

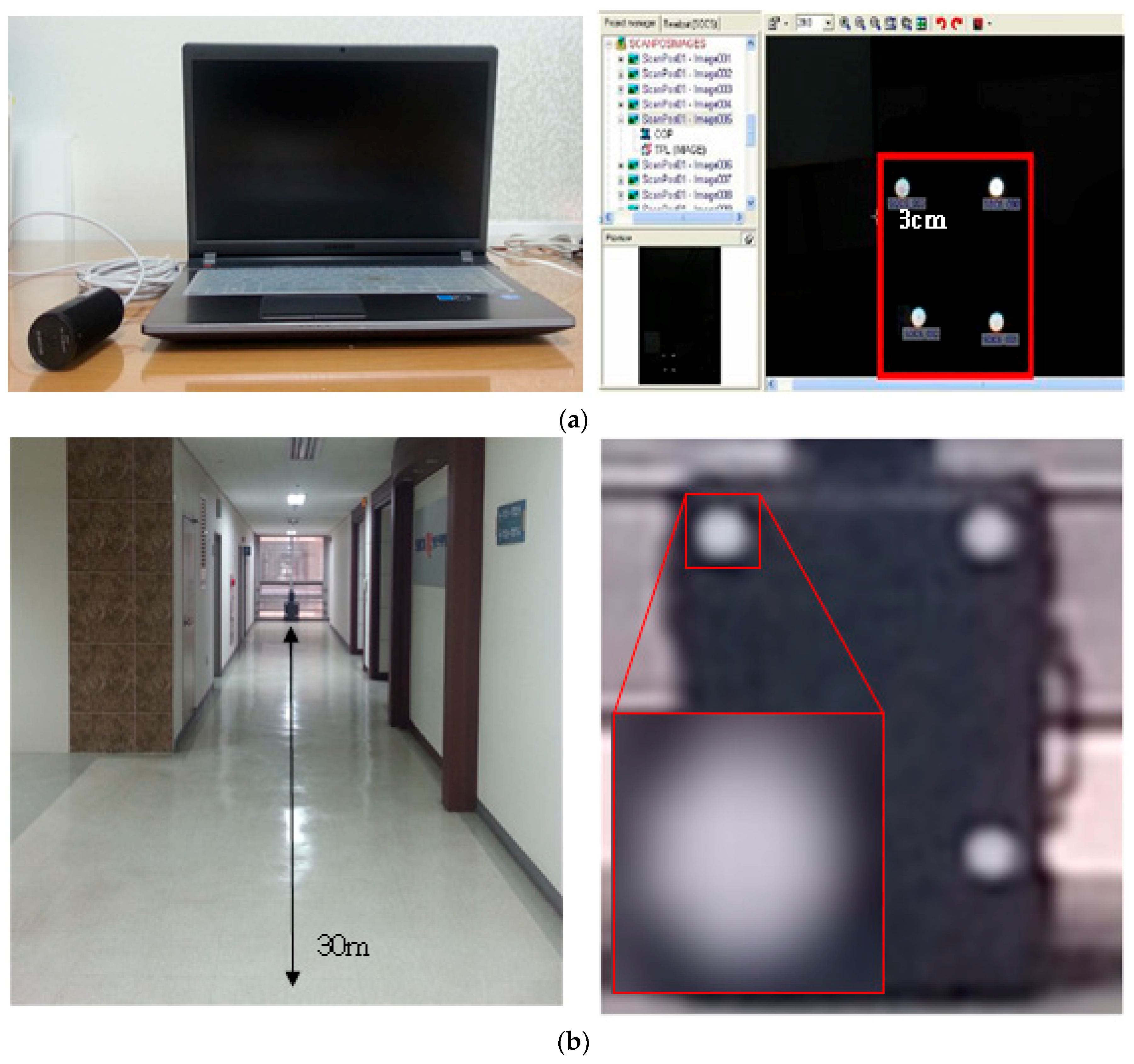

4.2. Assessing the GSD of the IP Camera

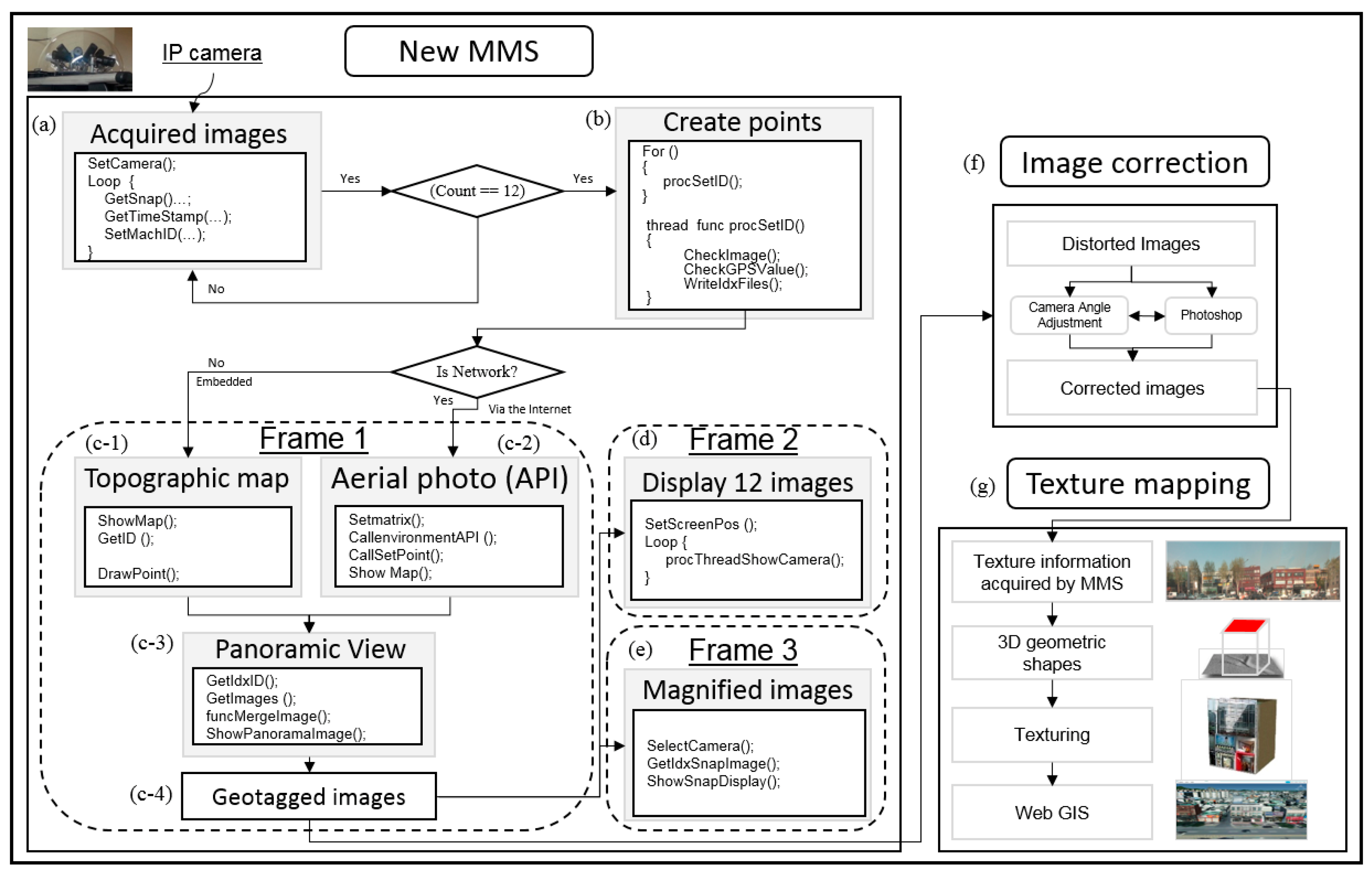

4.3. Programming Application for Automatic 3D Mapping: System Development

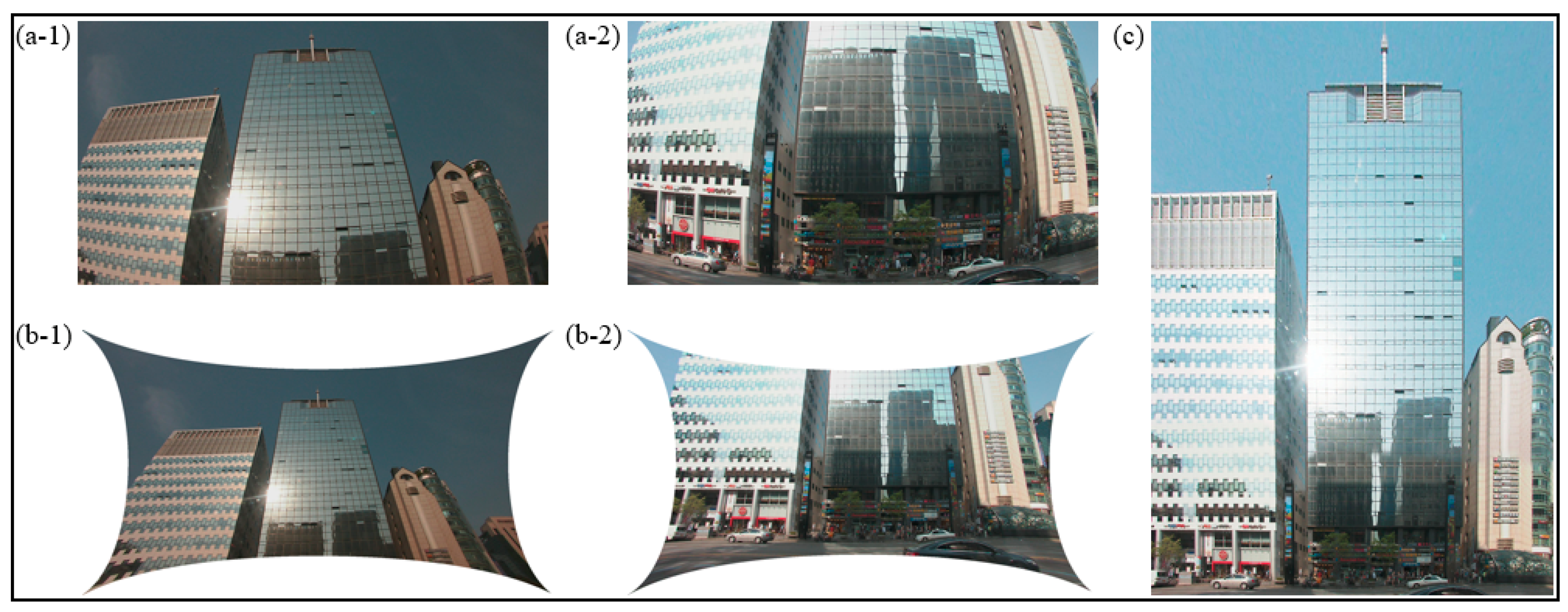

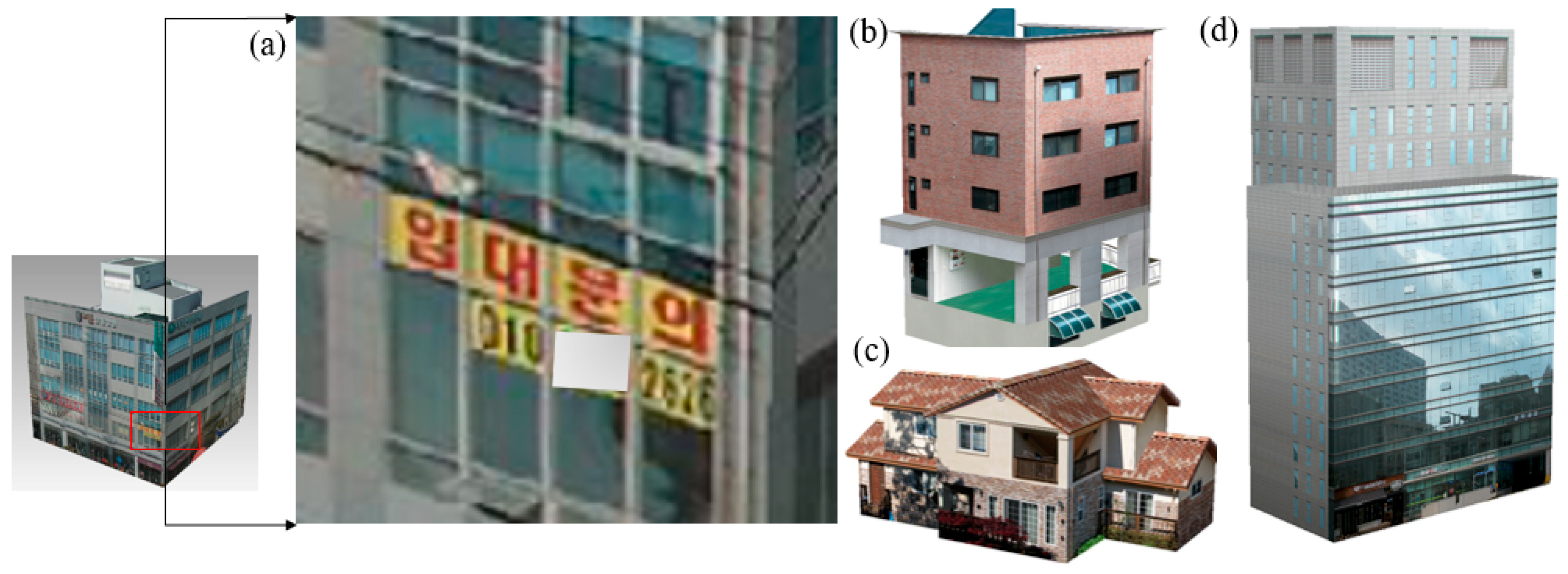

4.4. Processing Distorted Images from MMS and Comparison with 3D Textures from Aerial Photos

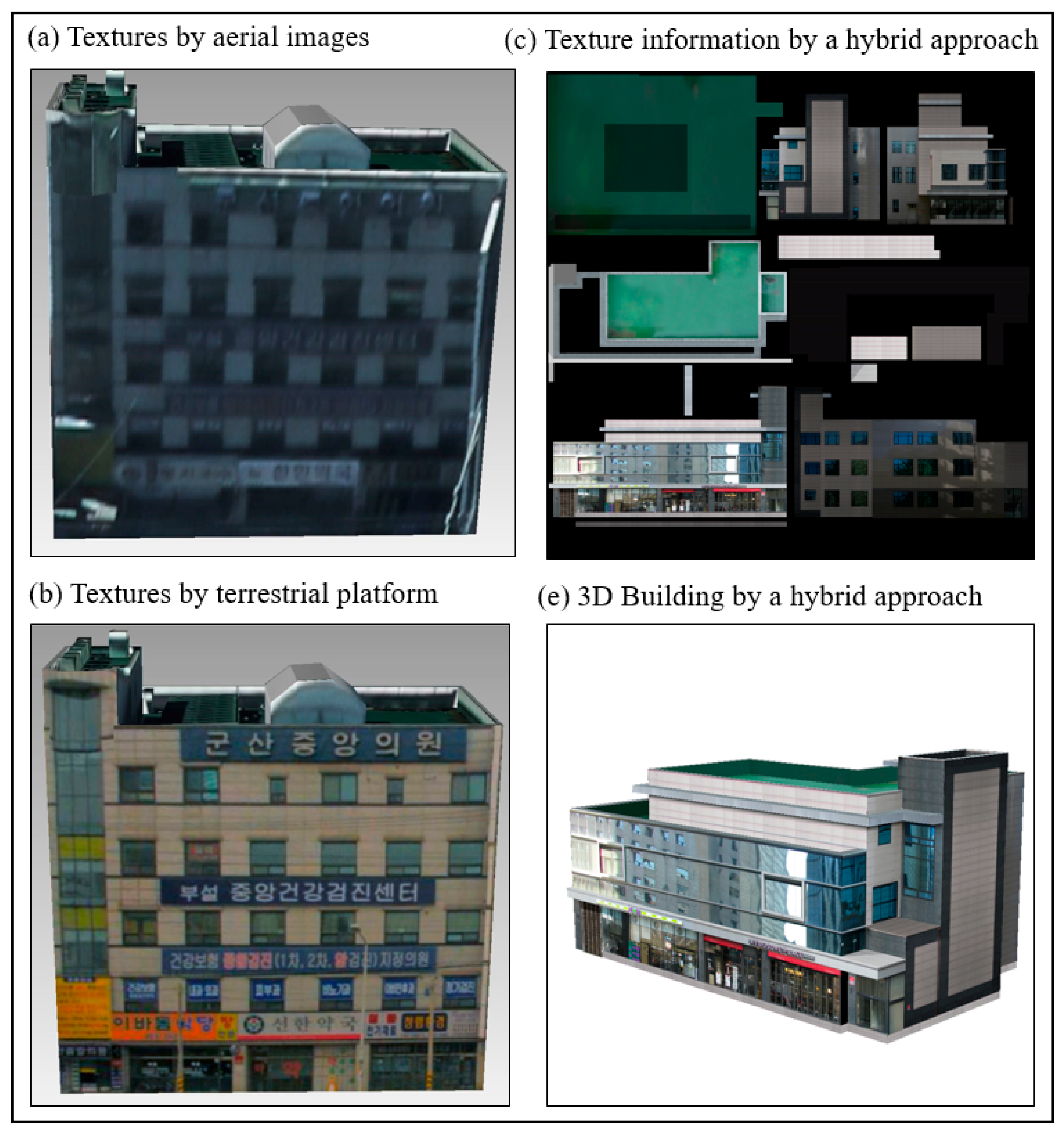

4.5. 3D Urban Models by Hybrid Approaches

5. Discussion

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

| Items | Prices | Items | Prices |
|---|---|---|---|
| Compact Car | $8500 | Carrier | $1100 |
| Cameras (SONY) | $848 × 12 = $10,176 | Brackets | $85 × 4 = $340 |
| GPS receiver | $854 | Roof rail | $340 |
| POE | $135 × 2 = $270 | Other expenses | $1700 |
| Total | $23,280 | | |
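
The itemized costs in the table can be cross-checked with a short script. The following is a minimal sketch, not part of the original system: the dictionary layout and the helper names `line_total` and `system_total` are assumptions made for illustration, and the bracket entry uses the line total from the table rather than a unit price.

```python
# Itemized MMS hardware costs in USD, copied from the cost table.
# Each entry is (unit_price, quantity); items listed without a
# multiplier in the table are entered with quantity 1.
# The layout and helper names are illustrative assumptions.
costs = {
    "Compact car": (8500, 1),
    "Carrier": (1100, 1),
    "Cameras (SONY)": (848, 12),
    "Brackets": (340, 1),  # line total as given in the table
    "GPS receiver": (854, 1),
    "Roof rail": (340, 1),
    "POE": (135, 2),
    "Other expenses": (1700, 1),
}


def line_total(unit_price, quantity):
    """Return the cost of one table row."""
    return unit_price * quantity


def system_total(items):
    """Sum all row costs to get the overall system cost."""
    return sum(line_total(price, qty) for price, qty in items.values())


if __name__ == "__main__":
    for name, (price, qty) in costs.items():
        print(f"{name:<16} ${line_total(price, qty):>7,}")
    print(f"{'Total':<16} ${system_total(costs):>7,}")
```

Summing the rows this way reproduces the reported total of $23,280, with the twelve cameras ($10,176) and the compact car ($8500) as the two largest items.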

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, B. Developing a Mobile Mapping System for 3D GIS and Smart City Planning. Sustainability 2019, 11, 3713. https://doi.org/10.3390/su11133713