Abstract

Wireless Sensor Networks (WSNs) consist of distributed sensor nodes that collect and transmit environmental data, often in resource-constrained and unsecured environments. These characteristics make WSNs highly vulnerable to various security threats. To address this, the objective of this research is to design and evaluate a deep learning-based Intrusion Detection System (IDS) that is both accurate and efficient for real-time threat detection in WSNs. This study proposes a hybrid IDS model combining one-dimensional Convolutional Neural Networks (Conv1Ds), Gated Recurrent Units (GRUs), and Self-Attention mechanisms. A Conv1D extracts spatial features from network traffic, GRU captures temporal dependencies, and Self-Attention emphasizes critical sequence components, collectively enhancing detection of subtle and complex intrusion patterns. The model was evaluated using the WSN-DS dataset and demonstrated superior performance compared to traditional machine learning and simpler deep learning models. It achieved an accuracy of 98.6%, precision of 98.63%, recall of 98.6%, F1-score of 98.6%, and an ROC-AUC of 0.9994, indicating strong predictive capability even with imbalanced data. In addition to centralized training, the model was tested under cooperative, node-based learning conditions, where each node independently detects anomalies and contributes to a collective decision-making framework. This distributed approach improves detection efficiency and robustness. The proposed IDS offers a scalable and resilient solution tailored to the unique challenges of WSN security.

1. Introduction

Despite the widespread deployment of firewalls and automated attack prevention tools, network intrusions remain a significant concern. Malicious packets and sophisticated cyber-attacks can compromise network integrity, leading to substantial data breaches and operational disruptions. While traditional security measures such as encryption, authentication, and policy management offer foundational protection, they are not foolproof and can be circumvented by advanced persistent threats. Consequently, Intrusion Detection Systems (IDSs) have become imperative as a second line of defense that identifies and mitigates unauthorized activity within networks [1,2].

Recent advances in machine learning and deep learning have shown potential for improving IDS capabilities within WSNs [3,4,5,6]. Talukder et al. proposed a machine learning-based intrusion detection method that employs the SMOTE-Tomek Link algorithm to mitigate data imbalance in WSN datasets, resulting in high detection accuracy [1]. Gueriani et al. conducted an extensive survey of deep reinforcement learning for intrusion detection in IoT environments, emphasizing the efficacy of these methods in dynamic and resource-limited networks [3]. Combining CNNs with recurrent architectures such as GRUs, together with attention mechanisms, has been investigated as a way to capture both spatial and temporal characteristics of network traffic data and thereby improve detection performance.

WSNs are wireless communication systems composed of compact, resource-constrained sensor nodes that collaborate to collect, transmit, and analyze environmental or physical data [7]. These networks offer several compelling benefits, such as cost efficiency, scalable deployment, low power consumption, and the ability to function without a pre-existing communication infrastructure. Such advantages make WSNs highly suitable for applications in smart cities, environmental monitoring, precision agriculture, and military surveillance [8].

However, WSNs also face inherent challenges due to their physical and operational limitations. These include limited processing power, restricted battery life, narrow bandwidth, short transmission range, and susceptibility to signal interference and hardware noise. These constraints not only impact the reliability of the data being collected and transmitted but also make WSNs vulnerable to external threats, including cyber-attacks, data redundancy, and environmental disruptions. Research has shown that such vulnerabilities significantly reduce the effectiveness and resilience of WSN deployments in real-world environments [7,8].

Given these challenges, there is a critical need for intelligent and lightweight security mechanisms that can operate effectively within the constraints of WSN architecture. This study addresses this need by proposing a hybrid deep learning-based intrusion detection model that detects a wide range of attacks while maintaining computational efficiency and adaptability across distributed nodes [8]. The model is designed to be lightweight and accurate, and to cope with WSN-specific constraints such as limited energy, limited computational resources, and data imbalance.

The model integrates one-dimensional convolutional (Conv1D) layers, GRU layers, and a Self-Attention mechanism. The Conv1D layers extract local spatial features from the input data, while the GRU layers capture temporal dependencies, allowing the model to learn sequential patterns associated with different types of attacks. The Self-Attention mechanism enables the model to concentrate on the most relevant segments of the input sequence, enhancing its capacity to identify subtle anomalies. The proposed model is evaluated on the WSN-DS dataset and compared with existing methods, achieving a precision of 98.63% and outperforming the compared approaches.

- The major contribution of the model in the field of intrusion detection for WSNs lies in the novel integration of Conv1D, GRU, and Self-Attention mechanisms into a unified deep learning architecture that achieves both high detection accuracy and real-time performance, specifically addressing challenges unique to WSNs such as resource constraints, data imbalance, and temporal dependencies in attack patterns.

- Hybrid Architecture for Temporal and Spatial Feature Extraction: The integration of Conv1D and GRU facilitates the model’s ability to concurrently identify spatial patterns through convolutions and temporal dependencies through recurrent units in network traffic data, essential for detecting intricate attack behaviors in WSNs.

- Incorporation of Self-Attention for Interpretability and Focused Learning: The Self-Attention layer enables the model to dynamically prioritize important features such as anomalous packet frequency, sudden data rate increases, irregular routing intervals, and rare protocol combinations. These temporal signals are passed through the attention mechanism, which learns to focus on the most predictive ones depending on the attack context. This improves interpretability and the model’s sensitivity to subtle attack signals, an essential property in low-data or noisy environments like WSNs.

- Effective Handling of Class Imbalance by Integrating SMOTE in the Preprocessing Pipeline: The model effectively tackles severe class imbalance, a common issue in intrusion detection datasets, thereby ensuring fair detection across all attack categories, not just the majority class.

The remainder of the paper is organized as follows: Section 2 provides a review of related work, while Section 3 introduces and describes the proposed model. Section 4 outlines the experimental setup, and Section 5 presents the ablation study. Section 6 details the results analysis, followed by a broader discussion in Section 7. Finally, Section 8 concludes the paper and highlights potential directions for future work.

2. Related Work

IDSs for WSNs have been extensively explored in recent years due to the increasing security demands of distributed and resource-constrained network environments. Existing research has primarily focused on two major categories: machine learning-based approaches and deep learning-based frameworks. Both strategies aim to improve detection accuracy, reduce false positives, and efficiently handle large volumes of heterogeneous network data.

2.1. Machine Learning Approaches for Intrusion Detection in WSNs

In light of the increasing attention on wireless networks, Kolias et al. introduced the publicly accessible Aegean Wi-Fi Intrusion Dataset (AWID) for IDS research, encompassing both ‘intruder’ and ‘regular’ traffic on 802.11 networks. Kolias et al. also identified various ‘intruder’ types targeting 802.11 networks and their distinct characteristics. Multiclass classification was conducted using one feature set of 156 features and another of 20, with the following machine learning methods: Naïve Bayes, AdaBoost, Hyperpipes, J48, OneR, Random Forest, Random Tree, and ZeroR. J48 performed best on both feature sets [3]. Machine learning models require considerable time during the training phase; thus, a restricted set of features is advantageous for expediting training. Bhandari et al. [4] applied the Shapley Additive Explanations (SHAP) method for feature reduction with tree-based classifiers, including CatBoost, Random Forest, LightGBM, and XGBoost. SHAP identified the 15 AWID features that were most beneficial for classification. The accuracy scores showed minimal variation, while training time was significantly reduced.

IDSs are essential for smart cities; thus, to predict attacks on these systems, Gaber et al. used recursive feature reduction and constant removal techniques to preprocess the AWID dataset. The processed data were used to classify the ‘injection’ category using Support Vector Machine (SVM), Random Forest (RF), and Decision Tree (DT) classifiers with Feature Sets (FS) of 76, 13, and 8 features. Across these trials, the decision tree-based classifier with the 8-feature set achieved the highest accuracy of 99% [5]. Feature engineering is considered an effective way to improve the performance of an intrusion detection system. Thanthrige et al. reported that feature reduction strategies, including information gain and Chi-squared statistics, enhanced detection accuracy and classification speed. Intrusion detection experiments were performed using three feature sets of 111, 41, and 10 features with machine learning models including Random Tree, OneR, Random Forest, AdaBoost, and J48, all of which attained an accuracy surpassing 90% [6].

Rahman et al. demonstrated that a combinatorial approach to feature extraction and selection is more effective than any single technique. Their study combined C4.8, SVM, and NB for wrapper-based feature selection. An ANN was then used to classify the ‘normal’ and ‘impersonation’ categories with 20 features, yielding an accuracy of 99.95%. Intrusion detection is a prevalent concern that arises when a network fails to ensure sufficient security for an application. To avert this, the network must possess the requisite qualities [9]. Gavel et al. provided an effective approach to building a defense mechanism that considers the diverse aspects influencing the correlation between attributes and their assigned rank. The beneficial features are extracted via Optimized Maximum Correlation Feature Reduction (OMCFR) and then passed to a Random Forest classifier for AWID-based intrusion detection, yielding an accuracy of 99.2% [10].

Park et al. presented G-IDCS, a graph-based intrusion detection and classification system aimed at enhancing security within in-vehicle networks. It addresses the shortcomings of current intrusion detection systems, which often require large numbers of CAN messages and fail to categorize attack types. Its threshold-based method decreases detection time by more than 1/30 and enhances combined attack detection accuracy by over 9%. Its machine learning-based classifier demonstrates superior performance compared to current methodologies [11]. Kandhro et al. proposed a deep learning approach for intrusion detection in IoT-driven IIC networks, addressing challenges such as low accuracy, ineffectiveness, elevated false positive rates, and the inability to manage novel intrusion types. The framework, employing a generative adversarial network, enhanced accuracy, reliability, and efficiency by 95% to 97%, surpassing leading deep learning classifiers [12]. Boahen et al. introduced OPT_NSDAE, a deep learning approach for unsupervised feature learning that efficiently identifies compromised accounts on Online Social Networks (OSNs) by minimizing human involvement in feature selection and extraction, surpassing conventional methods [13]. Multiclass intrusion detection systems using classical machine learning techniques are summarized in Table 1.

Table 1.

Machine learning approach.

| Ref | Feature Set | Approach | Classification |
|---|---|---|---|
| [12] | 156, 20 | Naïve Bayes, AdaBoost, Hyperpipes, J48, OneR, Random Forest, Random Tree, ZeroR | Multiclass |
| [13] | 15 | CatBoost, Random Forest, LightGBM, XGBoost (with SHAP feature selection) | Multiclass |
| [4] | 76, 13, 8 | SVM, Random Forest, Decision Tree (recursive feature selection) | Injection class detection |
| [5] | 111, 41, 10 | Random Tree, OneR, Random Forest, AdaBoost, J48 | Multiclass |
| [6] | 20 | C4.8, SVM, Naive Bayes (feature selection), ANN (classification) | Normal vs. Impersonation |
| [7] | 140 | Optimized Maximum Correlation Feature Reduction + Random Forest | Multiclass (AWID-based IDS) |

A variety of machine learning-based methodologies have been suggested for intrusion detection in WSNs, each leveraging different feature sets and classification algorithms. In [14], a comprehensive comparison was conducted using two feature sets (156 and 20) with classifiers such as Naïve Bayes, AdaBoost, Hyperpipes, J48, OneR, Random Forest, Random Tree, and ZeroR for multiclass classification. Reference [15] employed a 15-feature subset derived through SHAP-based feature selection, integrating ensemble models including CatBoost, Random Forest, LightGBM, and XGBoost, also targeting multiclass classification. Focusing on injection attack detection, Ref. [4] used recursive feature selection with feature sets of 76, 13, and 8, combined with SVM, Random Forest, and Decision Tree classifiers. The study in [5] explored the impact of various tree-based classifiers (Random Tree, OneR, Random Forest, AdaBoost, and J48) on feature sets of 111, 41, and 10, achieving effective results in multiclass scenarios. In contrast, Ref. [6] applied a hybrid feature selection approach combining C4.8, SVM, and Naive Bayes, followed by classification using an artificial neural network (ANN), specifically to distinguish between normal and impersonation traffic using a 20-feature input. Lastly, Ref. [9] utilized 140 features selected via an Optimized Maximum Correlation Feature Reduction technique, combined with Random Forest, to build a high-accuracy AWID-based intrusion detection system for multiclass classification.

2.2. Deep Learning Approaches for Intrusion Detection in WSNs

K. Sedhuramalingam and N. Saravanakumar performed a comprehensive study comparing the COA-GS-IDNN model with conventional machine learning classifiers across several publicly available malware benchmark datasets. The findings indicate that DNNs surpass traditional machine learning models in the real-time monitoring of network activity and host-level incidents for intrusion detection and prevention. Experimentally, the COA-GS-IDNN model surpasses existing models across all critical performance parameters, achieving an accuracy of 95%, precision of 94%, recall of 96%, F1-score of 95%, ROC AUC of 98%, detection time of 1.0068754 s, and a latency of 0.8016 ms [16]. The AWID dataset primarily comprises 16 categories of intrusions, and IDSs are employed to identify malicious network traffic. Aminanto et al. proposed a fully unsupervised k-means clustering method that uses 50 features derived from a stacked autoencoder to detect the ‘impersonation’ attack category. This method requires no prior labeling of the dataset during the training phase and achieved a 92% detection rate [17]. In any IDS, feature engineering, feature extraction, and feature selection are critical steps before any classification method is applied. Kim et al. conducted feature engineering using a Stacked Autoencoder (SAE) with two hidden layers containing 100 and 50 neurons. The features selected by the SAE were used in a deep k-means clustering technique to classify the ‘normal’ and ‘impersonation’ classes with k = 2, achieving an accuracy of 94.81% [18]. Wang et al. proposed deep learning-based methods, including Stacked Autoencoders (SAE), a three-layer DNN, and a seven-layer DNN, to improve IDS classification, using a set of 71 features obtained after preprocessing. The best accuracy rates attained by the seven-layer DNN for ‘normal’, ‘impersonation’, ‘injection’, and ‘flooding’ were 98.46%, 99.99%, 98.39%, and 73.12%, respectively [19]. A summary of recent deep learning-based approaches for WSN intrusion detection is presented in Table 2.

Table 2.

Deep learning approach.

| Ref | Feature Set | Approach | Classification |
|---|---|---|---|
| [14] | 23 | COA-GS optimized IDNN | Multi-class classification |
| [15] | 50 | Stacked Autoencoder + Unsupervised K-Means | Impersonation class |
| [16] | 100, 50 | Stacked Autoencoder + Deep K-Means Clustering (k = 2) | Normal vs. impersonation |
| [17] | 71 | Stacked Autoencoder, 3-layer DNN, 7-layer DNN | Multiclass |

Recent deep learning approaches have demonstrated significant potential in enhancing intrusion detection systems, particularly for WSNs. In [16], a DNN optimized using the Coyote Optimization Algorithm with Global Search (COA-GS) was employed using a 23-feature input, achieving effective results in multi-class classification tasks. Reference [17] proposed an unsupervised learning approach targeting the impersonation class by applying K-Means clustering on 50 features extracted from a stacked autoencoder, eliminating the need for labeled data during training. Expanding on this, Ref. [18] utilized a stacked autoencoder with two hidden layers (100 and 50 neurons) to perform deep K-Means clustering with k = 2, achieving robust binary classification between normal and impersonation traffic. In [19], a series of architectures including a stacked autoencoder, a 3-layer DNN, and a more complex 7-layer DNN were evaluated using a 71-feature input, demonstrating strong performance across multiple attack classes in a multiclass intrusion detection setting. Collectively, these studies highlight the effectiveness of combining deep feature extraction and unsupervised or semi-supervised learning techniques to improve detection accuracy and reduce reliance on labeled data.

2.3. Broader Research Overview

The research process follows a structured and methodical approach to address the challenges of intrusion detection in WSNs. It begins with the selection of a relevant dataset (WSN-DS) containing multiple attack types. After preprocessing the data to ensure balance and consistency, the dataset is divided into partitions, each simulating a node in a distributed WSN. A hybrid deep learning model is then constructed using Conv1D for spatial feature extraction, GRU for temporal sequence modeling, and a Self-Attention mechanism to enhance focus on critical data patterns. The model is trained on each node separately to simulate a decentralized learning process. Finally, the results from all nodes are aggregated to evaluate the overall detection accuracy, highlighting the model’s suitability for real-world WSN applications where cooperation between nodes is essential.
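The node-based workflow described above can be sketched in a few lines. The partitioning rule (contiguous, near-equal slices), the helper names `partition_into_nodes` and `aggregate_accuracy`, and the pooling of per-node counts are all assumptions made for illustration; the text does not specify how partitions are formed or how node results are combined.

```python
from typing import List, Sequence, Tuple


def partition_into_nodes(samples: Sequence, num_nodes: int) -> List[list]:
    """Split samples into num_nodes contiguous, near-equal partitions.

    Assumption: each partition stands in for the traffic seen by one node.
    """
    if num_nodes <= 0:
        raise ValueError("num_nodes must be positive")
    base, extra = divmod(len(samples), num_nodes)
    partitions, start = [], 0
    for node in range(num_nodes):
        size = base + (1 if node < extra else 0)  # spread the remainder
        partitions.append(list(samples[start:start + size]))
        start += size
    return partitions


def aggregate_accuracy(node_results: Sequence[Tuple[int, int]]) -> float:
    """Pool per-node (correct, total) counts into one overall accuracy."""
    correct = sum(c for c, _ in node_results)
    total = sum(t for _, t in node_results)
    return correct / total if total else 0.0


parts = partition_into_nodes(list(range(10)), num_nodes=3)
print([len(p) for p in parts])  # [4, 3, 3]
print(aggregate_accuracy([(98, 100), (45, 50)]))
```

Pooling counts weights each node by the number of samples it evaluated, so a node with little traffic does not dominate the overall figure; a plain mean of per-node accuracies would be an equally plausible alternative.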

3. Methodology

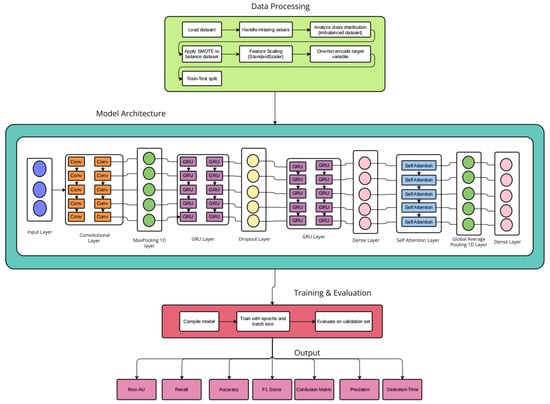

The methodology followed a structured sequence, beginning with the collection and preparation of the WSN-DS dataset. Preparation included balancing the dataset using SMOTE and manual resampling, scaling the features, and encoding the labels. The next step was to design a deep learning architecture that incorporates Conv1D, GRU, and Self-Attention components. The model was built and trained with tuned hyperparameters and then validated on a separate test set. Finally, performance was assessed using accuracy, precision, recall, F1-score, ROC-AUC, detection time, and latency. An ablation study and node-based partitioning were also carried out to determine the contribution of each component and the distributed detection capacity.

The proposed intrusion detection model in Figure 1 is designed to classify multi-class attacks within WSNs using a hybrid deep learning architecture that integrates Conv1D, GRU, and Self-Attention mechanisms. The input data comprise traffic features such as packet arrival rate, number of hops, source and destination node IDs, traffic rate, sequence numbers, and routing path length. A preprocessing pipeline is applied to the WSN-DS dataset, including SMOTE for class balancing, one-hot encoding of categorical labels, standardization of input features, and reshaping of the data for temporal modeling.

The architecture begins with a 1D convolutional layer that captures local spatial patterns in the input sequences, followed by a MaxPooling layer that reduces dimensionality and computational cost. Next, two stacked GRU layers extract long-term dependencies from the time-series data, with a dropout layer inserted between them to mitigate overfitting. To sharpen the model’s focus on relevant temporal features, a Self-Attention layer dynamically weights sequence components according to their importance. The attention outputs are then passed through a GlobalAveragePooling1D layer and a Dense softmax output layer for final classification. The model uses the Adam optimizer, categorical cross-entropy as the loss function, and accuracy as the main metric. The hyperparameters consist of 10 training epochs, a batch size of 62, and a dropout rate of 0.3. This combination of convolutional, recurrent, and attention-based elements enables the model to identify nuanced patterns and anomalies in WSN data, resulting in high detection accuracy and strong generalization performance.

Figure 1.

Proposed model.
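The preprocessing steps named above (standardization, one-hot encoding, reshaping, and SMOTE-style balancing) can be illustrated with a minimal, dependency-free sketch. The function names are hypothetical, labels are assumed to be 0-based integers, and `smote_like_sample` shows only the interpolation step of SMOTE: the nearest-neighbor search among minority samples is omitted and the neighbor is supplied by the caller.

```python
import random
import statistics
from typing import List


def zscore_columns(rows: List[List[float]]) -> List[List[float]]:
    """Standardize each feature column to zero mean and unit variance."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard constant columns
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def one_hot(labels: List[int], num_classes: int) -> List[List[int]]:
    """Encode 0-based integer class labels as one-hot vectors."""
    return [[1 if k == y else 0 for k in range(num_classes)] for y in labels]


def add_channel_axis(rows: List[List[float]]) -> List[List[List[float]]]:
    """Reshape (N, d) into (N, d, 1), the layout a Conv1D layer expects."""
    return [[[v] for v in row] for row in rows]


def smote_like_sample(x: List[float], neighbor: List[float],
                      rng: random.Random) -> List[float]:
    """Create one synthetic sample on the segment between x and a neighbor."""
    lam = rng.random()  # interpolation factor in [0, 1)
    return [a + lam * (b - a) for a, b in zip(x, neighbor)]


X = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
print(zscore_columns(X))
print(one_hot([0, 2, 1], num_classes=3))
print(add_channel_axis(X)[0])
print(smote_like_sample(X[0], X[1], random.Random(0)))
```

In practice the standardization statistics would normally be estimated on the training split only and then reused for the test split, so that no information from the test data enters preprocessing.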

3.1. One-Dimensional CNN (Conv1D)

CNN is a type of deep learning architecture primarily used for processing structured grid data. In this study, a one-dimensional CNN is utilized to process sequential network traffic features, enabling localized pattern extraction along the temporal dimension of the input data. The Conv1D layer scans across feature vectors using a set of learnable filters and generates spatial representations by applying convolutional operations. The transformation can be mathematically expressed as:

$$y = f(W * x + b)$$

where $x$ and $y$ denote the input and output of the convolutional layer, respectively, $W$ and $b$ are the weight matrix and bias, $*$ denotes the convolution operation, and $f$ is the ReLU activation function. This operation enables the extraction of local temporal features, which are crucial for identifying attack patterns in network sequences.
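As a small worked example of the convolution-plus-ReLU operation described above, the sketch below slides a single filter over a short feature sequence. The function name `conv1d_relu`, the filter values, and the choice of "valid" padding with stride 1 are assumptions for illustration; as in most deep learning frameworks, the filter is applied without flipping (cross-correlation).

```python
from typing import List


def conv1d_relu(x: List[float], kernel: List[float], bias: float = 0.0) -> List[float]:
    """Slide one filter over x ('valid' padding, stride 1) and apply ReLU."""
    k = len(kernel)
    out = []
    for i in range(len(x) - k + 1):
        window = x[i:i + k]
        pre_activation = sum(w * v for w, v in zip(kernel, window)) + bias
        out.append(max(0.0, pre_activation))  # ReLU clips negatives to zero
    return out


features = [0.0, 1.0, 3.0, 2.0, 0.0]
rising_edge_filter = [-1.0, 0.0, 1.0]  # responds where values increase
print(conv1d_relu(features, rising_edge_filter))  # [3.0, 1.0, 0.0]
```

The output is shorter than the input by `len(kernel) - 1` positions, and the final window, where the sequence is falling, is suppressed by the ReLU.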

3.2. GRU

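GRU is a variant of recurrent neural networks (RNNs) that captures long-term relationships and mitigates the vanishing gradient issue. Unlike traditional RNNs, GRUs introduce gating mechanisms, namely the reset gate and the update gate, to regulate information flow. In the standard formulation, the GRU computation at time step $t$ is defined as follows:

$$z_t = \sigma(W_z x_t + U_z h_{t-1} + b_z)$$

$$r_t = \sigma(W_r x_t + U_r h_{t-1} + b_r)$$

$$\tilde{h}_t = \tanh(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h)$$

$$h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$$

Here $x_t$ is the input (a set of network communication features that describe the behavior of the sensor nodes) at time $t$, $h_t$ is the hidden state, $\tilde{h}_t$ is the candidate state, $z_t$ and $r_t$ are the update and reset gates, $W$, $U$, and $b$ are learnable weights and biases, $\odot$ indicates element-wise multiplication, and $\sigma$ represents the sigmoid function. The reset gate controls the influence of the previous hidden state, while the update gate determines the extent to which the new candidate state is used. This structure permits the GRU to efficiently encode sequential data without requiring separate memory cells.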
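The gating behavior described above can be made concrete with a single GRU update written in plain Python. This is a sketch under stated assumptions: the parameter names (`W_z`, `U_z`, `b_z`, and so on) are hypothetical, and the update gate is taken to weight the candidate state, matching the description that it determines how much of the new candidate is used. Some implementations swap the roles of the gate and its complement.

```python
import math
from typing import Dict, List

Vector = List[float]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m: List[Vector], v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gru_step(x: Vector, h_prev: Vector, p: Dict[str, list]) -> Vector:
    """One GRU update; p holds hypothetical weights W_*, U_* and biases b_*."""
    def affine(W, U, b, h):
        return [wx + uh + bi for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]

    z = [sigmoid(v) for v in affine(p["W_z"], p["U_z"], p["b_z"], h_prev)]  # update gate
    r = [sigmoid(v) for v in affine(p["W_r"], p["U_r"], p["b_r"], h_prev)]  # reset gate
    gated_h = [ri * hi for ri, hi in zip(r, h_prev)]  # reset gate scales old state
    h_cand = [math.tanh(v) for v in affine(p["W_h"], p["U_h"], p["b_h"], gated_h)]
    # Blend previous state and candidate; z weights the candidate.
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_cand)]


hidden, inputs = 2, 3
params = {name: [[0.0] * (inputs if name.startswith("W") else hidden)
                 for _ in range(hidden)]
          for name in ("W_z", "U_z", "W_r", "U_r", "W_h", "U_h")}
params.update({name: [0.0] * hidden for name in ("b_z", "b_r", "b_h")})

# With all-zero parameters both gates equal 0.5 and the candidate is 0,
# so the new state is half of the previous one.
print(gru_step([0.2, -0.1, 0.4], [1.0, -2.0], params))  # [0.5, -1.0]
```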

3.3. Self-Attention Mechanism

The Self-Attention mechanism improves the model’s capacity to concentrate on relevant input segments by calculating pairwise relationships within the sequence. It draws inspiration from human attention, allowing the model to dynamically assign weights to features across time steps. In this work, a custom Self-Attention layer is introduced following the GRU and dense layers to improve context sensitivity. Given input X, the attention layer computes the output as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where Q, K, and V are the query, key, and value matrices derived from learned linear transformations of the input, and $d_k$ represents the dimensionality of the key vectors. The resulting weighted representation allows the model to emphasize the most informative parts of the input sequence. This improves both classification accuracy and model interpretability by highlighting which time steps contribute most to decision-making. The integration of Self-Attention with GRU and convolutional layers results in a hybrid architecture that combines spatial feature extraction, temporal sequence modeling, and adaptive attention-based weighting, effectively addressing the complexities of multi-class intrusion detection in WSNs.
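To show how the query, key, and value matrices interact, the following sketch implements scaled dot-product self-attention over a short sequence using plain Python lists. The function names and the projection matrices `w_q`, `w_k`, `w_v` are hypothetical, and dividing the scores by the square root of the key dimensionality is assumed here as the usual scaling.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix,
                   w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (output, attention weights) for a sequence x of shape (T, d)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # one distribution per time step
    return matmul(weights, v), weights


sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # T = 3 time steps, d = 2
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(sequence, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and indicates how strongly one time step attends to every other time step, which is what makes the layer useful for inspecting which parts of a sequence drive a prediction.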

The following algorithm outlines the process of training and evaluating a hybrid deep learning-based Intrusion Detection System (IDS) tailored for Wireless Sensor Networks (WSNs). Given a labeled dataset $D = \{(x_i, y_i)\}_{i=1}^{N}$, where each $x_i \in \mathbb{R}^d$ represents a feature vector and $y_i \in \{1, \ldots, C\}$ denotes the corresponding class label, the algorithm performs data preprocessing, addresses class imbalance using SMOTE, and constructs a hybrid model integrating Conv1D layers, GRUs, and a Self-Attention mechanism. The model is trained to classify network traffic into multiple categories and is evaluated using standard classification metrics, including accuracy, precision, recall, F1-score, ROC-AUC, as well as prediction time and delay, to assess its effectiveness in detecting intrusions in real-time WSN environments. The complete training and evaluation process of the proposed Conv1D–GRU–Self-Attention-based model is summarized in Algorithm 1.

| Algorithm 1: Conv1D-GRU-Self-Attention model |
|---|
| Input: Dataset D = {(x_i, y_i)} with feature vectors x_i ∈ R^d, labels y_i ∈ {1,…,C} |
| Output: Trained model M, predicted labels ŷ, performance metrics |
| function TrainHybridIDS(D, E, B) |
| # Step 1: Preprocessing |
| Normalize each feature in X using Z-score: X ← (X − mean(X))/std(X) |
| Encode labels y into one-hot vectors: Y ∈ R^{N×C} |
| (X_train, Y_train), (X_test, Y_test) ← TrainTestSplit(X, Y, ratio = 0.8) |
| # Step 2: Handle class imbalance |
| (X_bal, Y_bal) ← SMOTE(X_train, Y_train) |
| Reshape X_bal to shape (N, d, 1) for Conv1D input |
| # Step 3: Build model M |
| Define input shape: (d, 1) |
| x ← Conv1D(filters = 32, kernel_size = 3, activation = ReLU)(input) |
| x ← MaxPooling1D(pool_size = 2)(x) |
| x ← GRU(units = 64, return_sequences = True)(x) |
| x ← Dropout(0.3)(x) |
| x ← GRU(units = 64, return_sequences = True)(x) |
| x ← Dense(units = 64, activation = ReLU)(x) |
| # Self-Attention Mechanism |
| Q ← x · W_q; K ← x · W_k; V ← x · W_v |
| A ← softmax(Q · Kᵀ) |
| x_attn ← A · V |
| x ← GlobalAveragePooling1D()(x_attn) |
| output ← Dense(C, activation = softmax)(x) |
| M ← Model(inputs = input, outputs = output) |
| # Step 4: Train model |
| Compile M with Adam optimizer, categorical crossentropy loss |
| Train M on (X_bal, Y_bal) for E epochs with batch size B |
| # Step 5: Evaluation |
| Ŷ ← M.predict(X_test) |
| For each i in 1 to \|X_test\|: ŷ_i ← argmax(Ŷ_i) |
| Compute: |
| Accuracy = (1/N) Σ_i 1(ŷ_i == y_i) |
| Precision, Recall, F1 ← weighted classification metrics |
| ROC_AUC ← one-vs-rest AUC(Ŷ, Y_test) |
| DetectionTime ← time to predict(X_test) |
| Delay ← 1 − Recall |
| return M, ŷ, Accuracy, F1, Precision, Recall, ROC_AUC |
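To make the data flow through Algorithm 1 easier to follow, the sketch below traces the per-sample tensor shape after each layer. It assumes "valid" padding and stride 1 for the convolution, non-overlapping pooling windows, and an attention layer that preserves the feature dimension; the function name `trace_shapes` and the example values d = 23 and C = 5 are illustrative assumptions rather than settings confirmed by the text.

```python
from typing import List, Tuple


def trace_shapes(d: int, num_classes: int, filters: int = 32, kernel_size: int = 3,
                 pool_size: int = 2, gru_units: int = 64,
                 dense_units: int = 64) -> List[Tuple[str, tuple]]:
    """Return (layer name, output shape without the batch axis) for each stage."""
    conv_len = d - kernel_size + 1    # 'valid' padding, stride 1 (assumed)
    pool_len = conv_len // pool_size  # non-overlapping pooling windows
    if pool_len < 1:
        raise ValueError("input too short for this kernel/pool configuration")
    return [
        ("Input", (d, 1)),
        ("Conv1D", (conv_len, filters)),
        ("MaxPooling1D", (pool_len, filters)),
        ("GRU 1", (pool_len, gru_units)),   # return_sequences keeps the time axis
        ("Dropout", (pool_len, gru_units)),
        ("GRU 2", (pool_len, gru_units)),
        ("Dense", (pool_len, dense_units)),  # applied independently per time step
        ("Self-Attention", (pool_len, dense_units)),
        ("GlobalAveragePooling1D", (dense_units,)),
        ("Dense softmax", (num_classes,)),
    ]


for name, shape in trace_shapes(d=23, num_classes=5):
    print(f"{name:<24}{shape}")
```

Because both GRU layers return full sequences, the attention layer receives one 64-dimensional vector per remaining time step, and global average pooling then collapses the time axis before classification.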

4. Experimental Setup

4.1. Dataset

WSN-DS is a dataset specifically designed for intrusion detection systems in WSNs. It covers four types of Denial of Service (DoS) attacks: Blackhole, Grayhole, Flooding, and Scheduling. The data were generated in Network Simulator 2 (NS-2) using the Low Energy Adaptive Clustering Hierarchy (LEACH) protocol, and 23 features were identified after preprocessing [8]. In this study, each partitioned subset simulates a unique node in the network, with the model learning from traffic-related attributes such as packet frequency, hop count, and routing paths. This focus ensures that intrusion detection is based on anomalous communication behaviors rather than environmental fluctuations. Table 3 provides dataset statistics.

Table 3.

WSN-DS dataset statistics.

4.2. Evaluation Metrics

To thoroughly evaluate the reliability of the proposed Conv1D–GRU–Self-Attention model, a range of assessment metrics was utilized. These metrics provide insight into diverse facets of model performance, encompassing overall accuracy and its capacity to identify and differentiate between various intrusion types in a WSN environment.

Accuracy: Accuracy denotes the ratio of correctly predicted cases to the total number of predictions. This metric provides an overall assessment of performance across all classes and is particularly effective when the dataset is balanced.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP = true positives, TN = true negatives, FP = false positives, and FN = false negatives.

Precision: Precision quantifies the ratio of correctly predicted positive cases to the total instances predicted as positive. Minimizing false alarms (false positives) is crucial in intrusion detection settings.

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall: Also referred to as sensitivity or true positive rate, recall is the ratio of correctly detected positive cases to all actual positive cases.

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

F1 Score: The F1 score is the harmonic mean of precision and recall, offering a unified measure that balances both factors. It is particularly advantageous when managing unbalanced datasets or when both false positives and false negatives incur significant costs.

$$F1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

ROC-AUC Score: The receiver operating characteristic area under the curve (ROC-AUC) score quantifies the model’s ability to distinguish between classes, independent of classification thresholds. A perfect score is 1.0.

Detection Time: Detection time refers to the duration required for the model to process and classify incoming data. This is crucial in real-time applications like intrusion detection.

Delay: Delay is calculated as the complement of recall (1 − recall), representing the fraction of actual intrusions not detected.
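The count-based metrics defined above can be computed directly from confusion-matrix entries, as in the following sketch. The function name and the example counts are hypothetical, and the example treats a single class against the rest; for multi-class results these per-class values would additionally need to be averaged (for instance, weighted by class frequency).

```python
from typing import Dict


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute accuracy, precision, recall, F1, and delay from raw counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "delay": 1.0 - recall,  # fraction of actual intrusions that were missed
    }


# Illustrative counts only: 100 actual attacks, 900 normal samples.
for name, value in classification_metrics(tp=90, tn=895, fp=5, fn=10).items():
    print(f"{name}: {value:.4f}")
```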

4.3. Baseline

To provide a comprehensive evaluation of the proposed intrusion detection system for WSNs, several baseline models are considered. These models span classical, hybrid, and deep learning approaches commonly used in the field of network security.

GWO-LSTM: The Grey Wolf Optimizer-based Long Short-Term Memory (GWO-LSTM) model combines metaheuristic optimization with recurrent neural networks. GWO is used to optimize the LSTM’s hyperparameters, enhancing its capability to model sequential data and detect temporal patterns associated with intrusions. This integration allows the model to better adapt to dynamic attack behaviors in WSN environments [20].

DNN: A standard deep neural network (DNN) serves as a baseline for deep learning-based intrusion detection. Comprising multiple fully connected layers, the DNN is capable of learning complex feature representations from input data. However, it lacks temporal modeling or specialized optimization, making it a useful benchmark for evaluating the benefits of more advanced hybrid models [10].

COA-GS-IDNN: The Coyote Optimization Algorithm with Global Search-enhanced Deep Neural Network (COA-GS-IDNN) integrates metaheuristic optimization into the training of a deep neural model. COA facilitates effective exploration of the search space, while the global search component enhances convergence to optimal solutions. This model is designed to improve detection accuracy and generalization in complex intrusion scenarios [16].

AdaBoost-RBFSVM: This hybrid model combines AdaBoost, an ensemble learning technique, with a Support Vector Machine using a Radial Basis Function kernel (RBF-SVM). The ensemble approach strengthens classification by combining multiple weak learners, while the RBF kernel enables nonlinear decision boundaries. This method provides a classical machine learning baseline with strong generalization capabilities in non-sequential data settings [21].

4.4. Experimental Setting

The efficacy of the proposed deep learning model for intrusion detection in WSNs was assessed through experiments carried out on an HP Victus laptop with an Intel Core CPU, 16 GB of RAM, and 1.5 TB of SSD storage, running a 64-bit OS. The model was implemented in Python, with TensorFlow and Keras as the principal deep learning frameworks. The experiments used the WSN-DS dataset, a well-established benchmark for network intrusion detection, which was preprocessed using SMOTE, feature standardization, and one-hot encoding to achieve class balance and numerical stability. The key hyperparameters used to train the proposed model are presented in Table 4.
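To make the balancing step concrete, the core idea of SMOTE can be sketched as follows: each synthetic sample is an interpolation between a minority-class sample and one of its nearest minority-class neighbours. This is a simplified illustration under stated assumptions, not the preprocessing code used in the study; the function name `smote_oversample`, the neighbour count `k`, and the toy data are hypothetical.

```python
import math
import random


def smote_oversample(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic samples by SMOTE-style interpolation.

    minority: list of feature vectors (lists of floats) from one class;
              it must contain at least two samples.
    k:        number of nearest neighbours considered per base sample.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by Euclidean distance to the base.
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        # Pick a random point on the segment between base and neighbour.
        gap = rng.random()
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy minority class: the four corners of the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
synthetic = smote_oversample(minority, n_new=6, k=2, seed=0)
for point in synthetic:
    print([round(v, 3) for v in point])
```

Because every synthetic point lies on a segment between two real minority samples, the oversampled class stays within the region already occupied by that class.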

Table 4.

Hyperparameter settings.

The selection of hyperparameters in this study was guided by a combination of experimental tuning, architectural compatibility, and the resource limitations typical of WSNs. Each parameter was chosen to balance detection accuracy, training stability, and computational efficiency.

The number of GRU units was set to 32 so that the model could capture temporal patterns without incurring excessive computational overhead. Larger configurations (e.g., 64 or 128 units) were tested during preliminary trials but showed diminishing returns in accuracy while increasing training time and memory usage, a critical concern for energy-constrained environments like WSNs.

The dropout rate of 0.3 was selected to introduce moderate regularization. This helped prevent overfitting during training, especially given the relatively small amount of WSN traffic data per node. Lower dropout rates (e.g., 0.1) did not generalize well, while higher rates (above 0.5) degraded convergence.

The kernel size for the Conv1D layer was fixed at 3, a standard choice for capturing short-range spatial dependencies in sequential data. Larger kernels (e.g., 5 or 7) increased the number of parameters without a significant performance gain, while a kernel of size 1 limited the model’s ability to learn local patterns effectively.

The batch size was set to 62 based on empirical testing. This size was small enough to support stable mini-batch gradient descent without overloading memory resources during training. It also ensured consistent convergence across the partitions of the dataset that simulate different nodes. The number of training epochs was limited to 10, which was found to be sufficient for the model to converge on the WSN-DS dataset. Increasing the epoch count showed minimal improvement while risking overfitting, particularly when using balanced but limited node-level data.

These settings were selected to optimize learning efficiency while mitigating overfitting and ensuring generalization. The Adam optimizer was chosen for its adaptive learning rate capabilities, and categorical crossentropy was used as the loss function due to the multi-class nature of the classification problem. All training and evaluation tasks were performed locally without GPU acceleration. Despite this, the model exhibited strong performance and reasonable training speed, demonstrating its suitability for deployment even on modest hardware configurations.
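The settings described above can be collected in a single configuration, and the loss can be written out for one sample to show what categorical crossentropy measures. This is a sketch only: the dictionary keys and the helper `categorical_crossentropy` are hypothetical names, the values are those stated in the text, and the five-class example assumes the five WSN-DS traffic classes.

```python
import math

# Hyperparameter values as stated in the text; the key names are illustrative.
HYPERPARAMS = {
    "gru_units": 32,
    "dropout_rate": 0.3,
    "conv_kernel_size": 3,
    "batch_size": 62,
    "epochs": 10,
    "optimizer": "adam",
    "loss": "categorical_crossentropy",
}


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted class probabilities."""
    # Clip probabilities away from 0 and 1 so the logarithm stays finite.
    clipped = [min(max(p, eps), 1.0 - eps) for p in y_pred]
    return -sum(t * math.log(p) for t, p in zip(y_true, clipped))


# One-hot label for the third of five classes.
label = [0.0, 0.0, 1.0, 0.0, 0.0]
uniform_loss = categorical_crossentropy(label, [0.2] * 5)
confident_loss = categorical_crossentropy(label, [0.01, 0.01, 0.96, 0.01, 0.01])
print(f"uniform prediction loss:   {uniform_loss:.4f}")
print(f"confident prediction loss: {confident_loss:.4f}")
```

A uniform prediction over five classes gives a loss of ln 5, which is a useful reference point when reading the loss curves: values well below it indicate that the model has learned to separate the classes.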

5. Ablation Experiment

To assess the contribution of each component within the proposed Conv1D–GRU–Self-Attention architecture, an ablation study was conducted. Specific layers were systematically removed from the model to observe the effect on both accuracy and detection time. The results demonstrate the individual importance of each architectural element.

When the Self-Attention layer was removed, the model’s accuracy dropped to 98.48%, with a detection time of 25.14 s, indicating that accuracy remained high but the absence of contextual feature weighting slightly impaired performance. Removing the dense layer resulted in an accuracy of 98.33% and a detection time of 22.94 s, highlighting the role of the dense layer in refining the feature representations for final classification. Omitting the second GRU layer led to a minor decrease in accuracy to 98.31% but notably reduced the detection time to 20.53 s, showing a trade-off between depth and speed. Excluding the dropout layer caused the accuracy to decline to 98.23%, while the detection time improved to 17.22 s, suggesting that regularization adds slight computational overhead but helps maintain generalization. In the most minimal setup, using only the Conv1D layer, the model achieved a significantly lower accuracy of 90.88%, with a detection time of 20.53 s. This result confirms that relying solely on convolutional feature extraction is insufficient for capturing the temporal dependencies inherent in WSN traffic data.

Overall, the ablation results affirm that each component, particularly the recurrent and attention mechanisms, plays an essential role in achieving the high accuracy and robustness of the final model. The integration of these layers contributes meaningfully to both predictive performance and temporal learning, validating the design of the proposed architecture. The impact of removing each component of the model is shown in Table 5.
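The ablation figures quoted above can be tabulated to show how much accuracy each removal costs relative to the full model. The sketch below uses only numbers reported in the text (the full-model accuracy of 98.60% and the five ablation variants); the variable and function names are illustrative.

```python
# Accuracy of the full model, as reported for the centralized evaluation.
FULL_MODEL_ACCURACY = 98.60

# (variant, accuracy in %, detection time in s), as quoted in the text.
ablation = [
    ("without Self-Attention", 98.48, 25.14),
    ("without dense layer", 98.33, 22.94),
    ("without second GRU layer", 98.31, 20.53),
    ("without dropout", 98.23, 17.22),
    ("Conv1D only", 90.88, 20.53),
]


def accuracy_drops(rows, reference):
    """Return the accuracy loss in percentage points for each variant."""
    return {name: round(reference - acc, 2) for name, acc, _ in rows}


drops = accuracy_drops(ablation, FULL_MODEL_ACCURACY)
for name, acc, seconds in ablation:
    print(f"{name:26s} acc={acc:.2f}%  drop={drops[name]:.2f} pp  time={seconds:.2f} s")
```

Read this way, removing any single layer costs well under half a percentage point, whereas reducing the model to the Conv1D layer alone costs several points, which is the contrast the ablation discussion relies on.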

Table 5.

Ablation experiment.

6. Results

The proposed Conv1D–GRU–Self-Attention model was evaluated on a preprocessed and balanced version of the WSN-DS dataset using several standard performance metrics to assess classification accuracy, detection robustness, and real-time efficiency. The evaluation focused on both predictive performance and operational responsiveness, which are crucial in WSN intrusion detection systems.

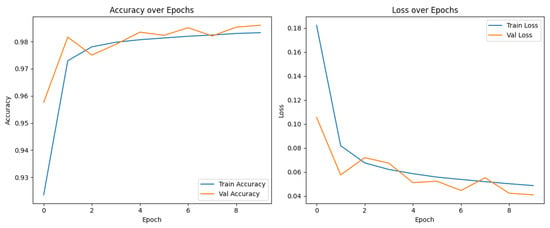

Figure 2 shows the training and validation curves of the proposed Conv1D–GRU–Self-Attention model over 10 epochs. Both training and validation accuracy rise consistently, with training accuracy reaching about 98.4% and validation accuracy slightly exceeding it at around 98.8%, indicating robust generalization to unseen data and little overfitting. The loss curves decrease steadily, with training loss falling from 0.18 to below 0.05 and validation loss following the same pattern before stabilizing around 0.04. The close agreement between the training and validation curves in both accuracy and loss indicates that the model is well regularized and well trained, making it dependable for multi-class intrusion detection in WSNs.

Figure 2.

Accuracy and Loss over epochs.

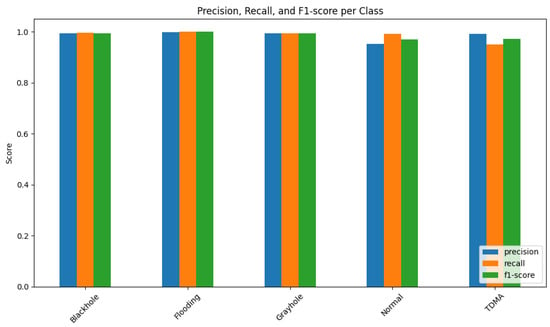

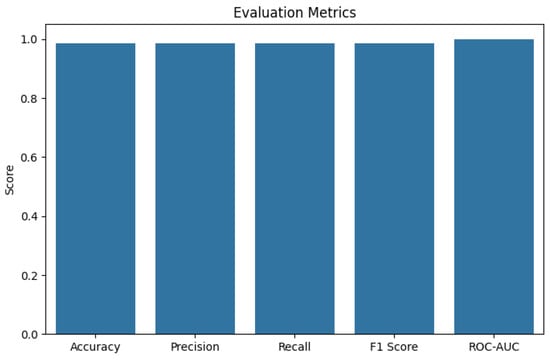

As shown in Figure 3, the precision reached 98.63%, confirming that the large majority of positive predictions were true intrusions. Likewise, the recall of 98.60% demonstrates the model’s strong capacity to detect nearly all attack events.

Figure 3.

Precision, recall, F1-score per class of attacks.

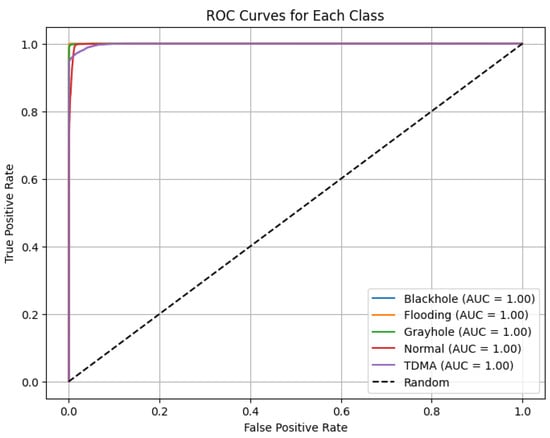

In terms of class discrimination, Figure 4 shows that the ROC-AUC score reached 0.9994, which suggests the model is nearly perfect at distinguishing between attack and normal traffic patterns. This is particularly valuable for multi-class classification in cybersecurity applications, where subtle differences in traffic behavior must be captured accurately.

Figure 4.

ROC curves for each class of attacks.

The ROC curves for each class (Blackhole, Flooding, Grayhole, Normal, and TDMA) show the strong performance of the proposed Conv1D–GRU–Self-Attention model in multi-class intrusion detection. All class-specific ROC curves lie close to the top-left corner, signifying near-perfect true positive rates and few false positives. Every class attained an area under the curve (AUC) value of 1.00, confirming the model’s capacity to differentiate among the distinct intrusion types. This outcome underscores the efficacy of the hybrid deep learning architecture, together with balancing methods like SMOTE and manual upsampling, in optimizing the model’s discriminative capability. The AUC values for all classes support the model’s robustness, dependability, and suitability for deployment in real-world WSN scenarios.
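Per-class AUC values of this kind are usually obtained in a one-vs-rest fashion: each class in turn is treated as the positive class and scored against all others. The sketch below computes AUC through its rank interpretation, the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. It is an illustration under assumptions, not the study's evaluation code; the function names and the toy probabilities are hypothetical.

```python
def binary_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 1 for the positive class, 0 otherwise.
    scores: predicted score for the positive class; ties count as half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(y_true, proba, classes):
    """Compute one AUC per class, treating that class as positive."""
    result = {}
    for idx, cls in enumerate(classes):
        labels = [1 if y == cls else 0 for y in y_true]
        scores = [row[idx] for row in proba]
        result[cls] = binary_auc(labels, scores)
    return result


# Toy example with three of the class names and made-up probabilities.
classes = ["Blackhole", "Flooding", "Normal"]
y_true = ["Normal", "Flooding", "Blackhole", "Normal"]
proba = [
    [0.10, 0.10, 0.80],
    [0.20, 0.70, 0.10],
    [0.60, 0.30, 0.10],
    [0.05, 0.15, 0.80],
]
aucs = one_vs_rest_auc(y_true, proba, classes)
print(aucs)
```

The pairwise loop is quadratic in the number of samples, so a sort-based implementation is preferable for a full test set; the pairwise form is kept here because it mirrors the definition directly.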

Figure 5 demonstrates that the proposed model achieves both high predictive accuracy and a practical response time, establishing it as a viable solution for real-world WSN intrusion detection tasks.

Figure 5.

Evaluation metrics.

To validate the effectiveness of the proposed Conv1D–GRU–Self-Attention model, a comparative analysis was conducted against several established intrusion detection models: GWO-LSTM [20], a Long Short-Term Memory network optimized using the Grey Wolf Optimizer for sequence modeling; DNN [10], a network with multiple dense layers for general classification tasks; COA-GS-IDNN [16], an IDNN enhanced with the Coyote Optimization Algorithm and Global Search for parameter tuning; and AdaBoost-RBFSVM [21], which combines ensemble learning via AdaBoost with a Radial Basis Function kernel-based SVM. The comparison was based on five key evaluation metrics: accuracy, F1-score, precision, recall, and ROC-AUC. The performance comparison of the proposed model with the baseline methods is presented in Table 6.

Table 6.

Result analysis.

Cooperative Node-Based Detection Simulation

To simulate distributed intrusion detection, the balanced dataset was partitioned randomly into five distinct subsets, each representing data from a separate WSN node. The hybrid deep learning model (Conv1D–GRU–Self-Attention) was trained independently on each partition to evaluate its adaptability and generalization across varying data sources. The detection accuracy across five independently trained nodes is listed in Table 7.

Table 7.

Node detection simulation.

The average accuracy across all five nodes was 97.12%, with only minor variations between nodes. These results indicate strong consistency and generalizability of the proposed model across decentralized data sources. They also demonstrate the model’s potential for real-world deployment in a cooperative intrusion detection framework, where individual sensor nodes analyze localized traffic and share their predictions with a central aggregator for final decision-making.
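The simulation setup can be sketched as a random five-way partition of the data followed by an aggregation step. The text does not specify how the central aggregator combines node predictions, so the majority vote below is an assumption made purely for illustration, as are the function names and the fixed seed.

```python
import random
from collections import Counter


def partition_dataset(samples, n_nodes=5, seed=42):
    """Randomly split samples into n_nodes near-equal, disjoint subsets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    # Striding over the shuffled list keeps subset sizes within one of each other.
    return [shuffled[i::n_nodes] for i in range(n_nodes)]


def majority_vote(node_predictions):
    """Return the label predicted by the most nodes.

    Ties are resolved in favour of the label that appears first in
    node_predictions (an arbitrary choice made for this sketch).
    """
    counts = Counter(node_predictions)
    return counts.most_common(1)[0][0]


# Stand-in for dataset rows: integer sample indices.
partitions = partition_dataset(range(103), n_nodes=5)
print([len(p) for p in partitions])

# Hypothetical predictions from five nodes for one traffic record.
votes = ["Normal", "Flooding", "Flooding", "Grayhole", "Flooding"]
decision = majority_vote(votes)
print(decision)
```

In a deployment, the vote could be weighted by each node's validation accuracy or confidence; a plain majority is simply the smallest aggregation rule that fits the description.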

7. Discussion

Compared to existing intrusion detection models, the proposed Conv1D–GRU–Self-Attention architecture demonstrates superior performance across key metrics. For instance, it outperforms models such as GWO-LSTM and COA-GS-IDNN, which have shown moderate accuracy improvements but lack attention mechanisms that enhance feature prioritization. Furthermore, models like AdaBoost-RBFSVM and traditional DNNs achieve lower precision and recall, especially under class imbalance conditions. Unlike these approaches, our model captures temporal dependencies through GRUs and leverages attention to dynamically focus on significant input features, resulting in a balanced trade-off between detection accuracy and computational efficiency. These improvements position our method as a robust and scalable solution for WSN intrusion detection in dynamic and resource-constrained environments.

This study explored a hybrid deep learning approach combining Conv1D, GRU, and Self-Attention mechanisms for intrusion detection in WSNs. The choice of the WSN-DS dataset is motivated by the growing need for lightweight and accurate models in resource-constrained environments where real-time threat detection is critical. Our proposed model is designed to efficiently capture spatial and temporal dependencies in the data while enhancing feature relevance through the attention mechanism. The Conv1D layers in the model serve as the initial stage for spatial feature extraction. Unlike traditional feature engineering techniques, Conv1D can autonomously learn low-level patterns from raw input sequences, which improves adaptability across different network environments. This approach reduces preprocessing overhead and allows the model to remain flexible in varying sensor data scenarios.

Following the convolutional layers, GRU units are applied to capture sequential dependencies in the network traffic data. GRUs are computationally more efficient than LSTMs while still maintaining the ability to learn long-term dependencies, making them ideal for WSN applications where low-latency inference is important. Their bidirectional configuration ensures that both past and future contextual information are taken into account, improving detection sensitivity.

The integration of the Self-Attention mechanism plays a vital role in highlighting the most informative time steps within the input sequences. This allows the model to assign higher importance to certain network behaviors while downplaying irrelevant or redundant features. As a result, the Self-Attention layer improves the interpretability and robustness of the intrusion detection process, which is crucial for real-world deployment in critical infrastructure.

Performance evaluation metrics such as accuracy, F1-score, precision, recall, and ROC-AUC indicate that the proposed model performs exceptionally well on the WSN-DS dataset. It achieved a high ROC-AUC of 0.9994 and F1-score of 0.9860, suggesting both excellent classification accuracy and balanced efficacy across all classes, even in the presence of class imbalance. These results validate the effectiveness of combining convolutional, recurrent, and attention-based methods in a unified architecture.
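The way self-attention weights time steps can be illustrated with a small scaled dot-product example. The exact attention formulation used in the model is not given in this section, so the sketch below is a generic version with identity query, key, and value projections (no learned weights); the function names and the toy sequence are assumptions.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Scaled dot-product self-attention with identity projections.

    sequence: list of time steps, each a feature vector of equal length.
    Returns (outputs, weights); weights[t][s] is how strongly time step t
    attends to time step s.
    """
    dim = len(sequence[0])
    outputs, weights = [], []
    for query in sequence:
        # Similarity of this time step to every time step, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in sequence
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is the attention-weighted average of all time steps.
        outputs.append(
            [sum(w * step[j] for w, step in zip(row, sequence)) for j in range(dim)]
        )
    return outputs, weights


# Toy sequence: three time steps with two features each.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(sequence)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to one, so it can be read as a distribution over time steps, which is the sense in which attention highlights some parts of the sequence over others.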

An important observation from our experiments is the significantly improved detection time. The proposed model achieves a detection latency of just 53 s, a substantial improvement over many existing deep learning models in the literature. This inference time suggests that the model can be used effectively in near real-time scenarios, which is a major requirement for intrusion detection in WSNs.

Despite the model’s strong detection performance, energy efficiency remains a crucial consideration for WSN nodes, which often operate on limited battery power. The Conv1D–GRU–Self-Attention architecture is more computationally demanding than shallow classifiers but offers a trade-off between performance and complexity. The use of Conv1D instead of 2D convolutions, a moderate number of GRU units (32), and a single attention layer helps to keep inference costs relatively low. In theoretical terms, the cost of the Conv1D and GRU stages scales linearly with sequence length and feature count, whereas a standard self-attention layer adds a term that grows quadratically with sequence length, which should be kept in mind for longer input windows. Future work will focus on optimizing the architecture further, potentially through model pruning, quantization, or edge deployment using lightweight frameworks, to ensure energy-efficient operation on embedded WSN hardware.

The proposed model demonstrates strong accuracy across all simulated WSN nodes, with individual accuracies ranging between 96.06% and 97.96%. This consistency illustrates the model’s ability to generalize effectively across distributed environments with diverse traffic patterns. Unlike traditional centralized IDS solutions, the node-based simulation shows how detection can be performed locally without compromising performance, which is particularly advantageous for WSNs where energy consumption and bandwidth are constrained. The hybrid architecture allows the model to learn both short-term and long-term attack signatures, while the Self-Attention mechanism improves interpretability and precision by dynamically weighting features. Compared to earlier studies that relied on static features or centralized architectures, our approach aligns more closely with the operational nature of WSNs, where nodes must detect anomalies autonomously and collaboratively. This cooperative setup reflects a practical intrusion detection paradigm, addressing both real-time responsiveness and architectural scalability.

Despite these encouraging outcomes, the approach still has several limitations. Although the architecture works well on the WSN-DS dataset, further testing on real-time, noisy, and partial data from actual WSN environments is needed. Furthermore, model stability and convergence may be affected by hyperparameter sensitivity, specifically to learning rate and batch size, so these must be re-tuned when applying the model to different datasets or applications.

8. Conclusion and Future Work

In this work, we introduced a deep learning model tailored for intrusion detection in WSNs, combining Conv1D, GRU, and Self-Attention layers. The model achieved an accuracy of 98.60% and a ROC-AUC score of 0.9994, outperforming other benchmark methods such as GWO-LSTM and COA-GS-IDNN. A detailed ablation study found that the Self-Attention layer played a critical role in enhancing the model’s ability to identify complex attack patterns by dynamically focusing on relevant features. Additionally, we simulated a distributed learning scenario by training models across partitioned subsets of node data. The results showed consistent performance across all nodes, supporting the model’s applicability in decentralized WSN environments. These findings demonstrate that the proposed model not only excels in centralized learning tasks but also adapts well to distributed architectures, which are common in real-world IoT-based sensor networks.

Although the performance is encouraging, several areas could be improved in the future. Deployment on edge devices with stringent latency constraints may become possible by further reducing the detection time through model quantization, architectural pruning, or switching to lightweight frameworks (such as TensorFlow Lite). The model may also be able to adjust to changing intrusion patterns with less labelled data if domain adaptation or semi-supervised learning approaches are used. Transparency would be increased by incorporating explainability techniques such as SHAP or LIME, which would help stakeholders understand the model’s predictions and trust its decisions, particularly in mission-critical settings.

Author Contributions

Conceptualization, K.H.R.M. and A.A. (Ainur Akhmediyarova); Methodology, K.A., F.Z. and A.A. (Assem Ayapbergenova); Software, K.H.R.M., O.M., A.A. (Ainur Akhmediyarova) and A.A. (Assem Ayapbergenova); Validation, K.H.R.M.; Formal analysis, K.A. and N.M.; Investigation, G.W.; Resources, O.M.; Data curation, A.A. (Ainur Akhmediyarova); Writing—original draft, O.M. and N.M.; Writing—review & editing, G.W. and F.Z.; Visualization, N.M. and A.A. (Assem Ayapbergenova); Supervision, K.A., G.W. and F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been funded by the Committee of science of the Ministry of Science and Higher Education of the Republic of Kazakhstan (Grant No. BR24993166).

Data Availability Statement

The data presented in this study are openly available at https://www.kaggle.com/datasets/bassamkasasbeh1/wsnds, accessed on 29 May 2025.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Talukder, M.A.; Sharmin, S.; Uddin, M.A.; Islam, M.M.; Aryal, S.; Zomaya, A.Y. MLSTL-WSN: Machine learning-based intrusion detection using SMOTETomek in WSNs. Int. J. Inf. Secur. 2024, 23, 2139–2158.

2. Gueriani, A.; Kheddar, H.; Mazari, A.C. Deep Reinforcement Learning for Intrusion Detection in IoT: A Survey. arXiv 2024, arXiv:2405.20038.

3. Kolias, C.; Kambourakis, G.; Stavrou, A.; Gritzalis, S. Intrusion Detection in 802.11 Networks: Empirical Evaluation of Threats and a Public Dataset. IEEE Commun. Surv. Tutor. 2015, 18, 184–208.

4. Bhandari, S.; Kukreja, A.K.; Lazar, A.; Sim, A.; Wu, K. Feature selection improves tree-based classification for wireless intrusion detection. In Proceedings of the 3rd International Workshop on Systems and Network Telemetry and Analytics, Stockholm, Sweden, 23 June 2020; pp. 19–26.

5. Gaber, T.; El-Ghamry, A.; Hassanien, A.E. Injection attack detection using machine learning for smart IoT applications. Phys. Commun. 2022, 52, 101685.

6. Thanthrige, U.S.K.P.M.; Samarabandu, J.; Wang, X. Machine learning techniques for intrusion detection on public dataset. In Proceedings of the 2016 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Vancouver, BC, Canada, 15–18 May 2016; pp. 1–4.

7. Kang, H.; Kim, J.; Lee, J. Energy-Efficient and Reliable Wireless Sensor Networks: Challenges and Solutions. J. Low Power Electron. Appl. 2024, 15, 705.

8. Patel, S.; Jawarkar, D.N. Comprehensive survey on WSN: Current trends, challenges and future scope. Mater. Today Proc. 2021, 47, 1975–1982.

9. Rahman, M.A.; Asyhari, A.T.; Wen, O.W.; Ajra, H.; Ahmed, Y.; Anwar, F. Effective combining of feature selection techniques for machine learning-enabled IoT intrusion detection. Multimed. Tools Appl. 2021, 80, 31381–31399.

10. Gavel, S.; Raghuvanshi, A.S.; Tiwari, S. An optimized maximum correlation based feature reduction scheme for intrusion detection in data networks. Wirel. Netw. 2022, 28, 2609–2624.

11. Park, S.B.; Jo, H.J.; Lee, D.H. G-IDCS: Graph based intrusion detection and classification system for CAN protocol. IEEE Access 2023, 11, 39213–39227.

12. Kandhro, I.A.; Alanazi, S.M.; Ali, F.; Kehar, A.; Fatima, K.; Uddin, M.; Karuppayah, S. Detection of real-time malicious intrusions and attacks in IoT empowered cybersecurity infrastructures. IEEE Access 2023, 11, 9136–9148.

13. Boahen, E.K.; Bouya-Moko, B.E.; Qamar, F.; Wang, C. A deep learning approach to online social network account compromisation. IEEE Trans. Comput. Soc. Syst. 2022, 10, 3204–3216.

14. Shirazi, H.; Muramudalige, S.R.; Ray, I.; Jayasumana, A.P.; Wang, H. Adversarial autoencoder data synthesis for enhancing machine learning-based phishing detection algorithms. IEEE Trans. Serv. Comput. 2023, 16, 2411–2422.

15. Tao, L.; Xueqiang, M. Hybrid strategy improved sparrow search algorithm in the field of intrusion detection. IEEE Access 2023, 11, 32134–32151.

16. Sedhuramalingam, K.; Saravanakumar, N. A novel optimal deep learning approach for designing intrusion detection system in wireless sensor networks. Egypt. Inform. J. 2024, 27, 100522.

17. Aminanto, M.E.; Kim, K. Improving detection of Wi-Fi impersonation by fully unsupervised deep learning. Inf. Secur. Appl. 2018, 18, 212–223.

18. Kim, K.; Aminanto, M.E.; Tanuwidjaja, H.C. Deep feature learning. In Network Intrusion Detection Using Deep Learning: A Feature Learning Approach; Springer: Berlin/Heidelberg, Germany, 2018; pp. 47–68.

19. Wang, S.; Li, B.; Yang, M.; Yan, Z. Intrusion detection for Wi-Fi network: A deep learning approach. In Proceedings of the International Wireless Internet Conference, Taipei, Taiwan, 15–16 October 2018; pp. 95–104.

20. Karthic, S.; Kumar, M.S.; Prakash, P.N.S. Grey wolf based feature reduction for intrusion detection in WSN using LSTM. Int. J. Inf. Technol. 2022, 14, 3719–3724.

21. Jianjian, D.; Yang, T.; Feiyue, Y. A novel intrusion detection system based on IABRBFSVM for wireless sensor networks. Procedia Comput. Sci. 2018, 131, 1113–1121.

22. Vinayakumar, R.; Alazab, M.; Soman, K.P.; Poornachandran, P.; Al-Nemrat, A.; Venkatraman, S. Deep learning approach for intelligent intrusion detection system. IEEE Access 2019, 7, 41525–41550.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).