Abstract

As the main site of photosynthesis in trees, the canopy absorbs a large amount of carbon dioxide and plays an irreplaceable role in regulating the atmospheric carbon cycle and mitigating climate change. Monitoring canopy growth is therefore crucial. However, traditional field surveys are time-consuming, labor-intensive, and limited in coverage, which may result in incomplete and inaccurate assessments. Existing tree crown segmentation algorithms also face challenges such as adhesion between individual tree crowns and insufficient generalization ability. In response, this study proposes TCSNet, an improved algorithm based on Mask R-CNN (Mask Region-based Convolutional Neural Network) that identifies the irregular edges of tree crowns in RGB images obtained from drones. Firstly, the backbone network is replaced with ResNeXt and embedded with the SENet (Squeeze-and-Excitation Networks) module to enhance the model’s feature extraction capability. Secondly, the BiFPN-CBAM module is introduced to enable the model to learn and utilize features more effectively. Finally, the mask loss function is replaced with the Boundary-Dice loss function to further improve tree crown segmentation. TCSNet also incorporates the concept of panoptic segmentation, achieving coherent and consistent segmentation of tree crowns throughout the entire scene through fine tree crown boundary recognition and integration. TCSNet was tested on three datasets with different geographical environments and forest types, namely artificial forests, natural forests, and urban forests, and performed best on artificial forests. Compared with the original algorithm, on the artificial forest dataset, the precision increased by 6.6%, the recall increased by 1.8%, and the F1-score increased by 4.2%, highlighting its potential and robustness in tree detection and segmentation.

1. Introduction

In terms of plant growth, the shape and size of the tree crown directly reflect the growth status and environmental adaptability [1]. By monitoring the growth of tree crowns, it is possible to understand the health status, growth rate, and response to the environment of plants in a timely manner. This is crucial information for agricultural and forestry managers, which helps them develop reasonable management strategies, improve plant growth efficiency, and protect the ecological environment. Therefore, obtaining accurate crown information is not only a scientific research requirement, but also an urgent need for agricultural and forestry production practice [2,3].

Current tree crown segmentation algorithms face a series of complex problems in practical applications [4]. Firstly, complex and varied backgrounds and overlapping tree crowns make it difficult to accurately identify individual tree crowns, especially in dense forest environments. Secondly, the shape and size of tree crowns vary significantly among tree species and growth stages, which requires algorithms to be highly flexible and adaptable. Thirdly, lighting conditions and shadows further increase the difficulty of tree crown segmentation, as different lighting conditions alter the appearance of tree crowns. The generalization ability of algorithms is also an important issue. Because forest environments, tree species, and growth conditions differ between regions, tree crown segmentation algorithms need robust generalization ability to adapt to different application scenarios. However, this often requires a large amount of annotated data to train the algorithm, and manually annotating tree crowns is a time-consuming and difficult task, limiting the number of samples available for training.

With the continuous advancement of remote sensing technology, the development of high spatial resolution remote sensing images and information extraction methods [5,6] provides a new solution for extracting individual tree crowns. This tree crown detection method based on remote sensing technology not only improves work efficiency and reduces costs compared to traditional manual depiction, but also achieves efficient monitoring and management of large areas. The method used in this study is based on Mask R-CNN and is specifically designed to identify irregular edges of individual tree crowns in unmanned aerial vehicle (UAV) RGB images. The use of traditional Mask R-CNN for tree crown segmentation is prone to complex background interference, poor segmentation performance for small targets, and difficulty in capturing irregular tree crown boundaries. Therefore, further improvement is needed. Through this method, automatic detection and segmentation of tree crowns is achieved, providing a more convenient and accurate solution for the management and monitoring of tree resources.

The innovative points of this study are as follows:

- Enhance the model’s feature extraction capability by introducing the SE-ResNeXt framework.

- Add BiFPN-CBAM to help the model learn and utilize multi-scale features more efficiently.

- Optimize the mask loss function to the Boundary-Dice loss function, focusing on improving the model’s ability to handle boundary details in tree crown segmentation tasks.

2. Related Work

2.1. Machine Learning Algorithms

In machine learning, tree crown detection and segmentation share the goal of accurately identifying and delineating tree crown regions through image features, whether for isolated trees or individual tree crowns in dense forests. To achieve accurate segmentation in complex backgrounds, algorithms typically rely on image features such as color, texture, and shape for classification, and models need strong adaptability, especially when dealing with overlapping tree crowns, changes in environmental lighting, and blurred tree boundaries. For sub-meter resolution satellite images, an algorithm based on the marked point process (MPP) and a geometric optics model iteratively satisfies the density factor, achieving accurate detection and delineation of semi-isolated trees (TOF) [7]. This algorithm considers the variable of tree crown radius and performs well in various environmental tests, providing a new approach for tree crown detection in complex environments. As an emerging technology platform, UAVs also demonstrate significant potential in tree crown segmentation. Torresan et al. [8] explored the application of UAV laser scanners in double-layer dense mixed forests. By adjusting algorithm parameters, accurate division of individual tree crowns and estimation of biomass parameters were achieved, although the accuracy was affected by the complexity of multi-layer and multi-species canopies. In terms of tree species classification, Maschler et al. [9] used visible to near-infrared hyperspectral datasets, combined with object-based random forest classification, to achieve accurate classification of 13 tree species, further expanding the application scope of crown segmentation algorithms.

The advantages of machine learning in tree crown detection and segmentation are mainly reflected in its powerful modeling ability and flexibility. Traditional machine learning algorithms such as support vector machines and decision trees can construct models by learning large amounts of sample data, achieving effective recognition of tree crowns. These algorithms perform well in handling small-scale datasets and have high computational efficiency, making them suitable for scenarios with high real-time requirements. In addition, machine learning algorithms can further improve detection accuracy and robustness through techniques such as ensemble learning and feature fusion. However, machine learning also has certain limitations in crown detection and segmentation. Firstly, the performance of machine learning algorithms is highly dependent on feature selection and extraction, and manually designed features often struggle to fully reflect the complexity and diversity of tree crowns. Secondly, machine learning algorithms may face computational bottlenecks and overfitting issues when processing large-scale datasets, which can affect the model’s generalization ability. Finally, machine learning algorithms have poor adaptability to environmental factors such as lighting and occlusion, which can easily lead to a decrease in detection accuracy.

2.2. Object Detection Methods

Object detection algorithms typically rely on the ability to locate and classify objects in the image when detecting tree crowns. This type of algorithm needs to handle crown recognition in complex backgrounds, be able to detect tree crowns at different scales, and handle diversity in shape, size, and color. The main feature of these algorithms is the ability to quickly locate tree crown regions in larger input images, and then determine whether each region contains tree crowns through feature extraction and classification. The improved Local Maximum algorithm accurately identifies individual trees from high-resolution remote sensing images by searching for local maximum values in the grid direction and combining them with a variable window confirmation strategy [10]. This algorithm is sensitive to parameter settings but achieves high overall accuracy and low false detection and missed detection rates, providing a feasible solution for individual tree detection. In urban subtropical mixed forests, a detection method based on crown morphology information uses UAV LiDAR data to generate a crown height model and identifies crown morphology through local maximum filters and Gi* statistics [11]. This method exhibits high detection rates under different forest structures, providing an effective means for individual tree detection in urban forests. The automatic tree detection method based on vegetation index and drone images achieves precise monitoring of crops such as chestnut trees by calculating vegetation index and crown height models [12]. This method improves the efficiency and sustainability of agricultural management, providing a new perspective for crop monitoring.

Object detection algorithms demonstrate high accuracy and flexibility in tree crown detection. Deep learning-based object detection algorithms, such as Faster R-CNN, YOLO, etc., can automatically recognize the position and boundaries of tree crowns from images without the need for manual intervention for efficient detection. These algorithms extract image features through convolutional neural networks, combined with region proposal networks and classifiers, to achieve accurate recognition of tree crowns. In addition, object detection algorithms perform well in handling complex scenes and can cope with changes in tree crown under different lighting conditions, angles, and occlusion situations. Although object detection algorithms have achieved significant results in tree crown detection, they still face some challenges. Firstly, when the shape of the tree crown undergoes deformation or is affected by environmental factors such as lighting and obstruction, the detection accuracy may be affected. Secondly, object detection algorithms require high computational resources and memory, especially when processing high-resolution images, which may result in a decrease in processing speed and are not suitable for scenarios with high real-time requirements. In addition, for the detection of dense tree crown areas, object detection algorithms may encounter false or missed detections, which affects the accuracy of detection.

2.3. Deep Learning Methods

Deep learning demonstrates powerful capabilities in tree crown detection and segmentation, and its commonality lies in automatically learning tree crown morphological features through neural networks. In response to the complex and diverse tree crown morphology, deep learning captures subtle differences through large-scale data training and has strong generalization ability to adapt to different environments. Its segmentation method combines pixel-level accurate segmentation to address challenges such as occlusion and lighting changes. A semi-supervised deep learning neural network achieved effective detection of tree crowns in RGB images by combining tree data generated from unsupervised LIDAR segmentation with a small amount of manually labeled RGB images [13]. This method not only overcomes the problem of insufficient labeled training data, but also significantly improves the prediction accuracy of the model in natural landscapes, demonstrating the enormous potential of semi-supervised learning in tree crown detection. The real-time tree crown detection method based on FPGA (PF-TCD) addresses the issues of high power consumption and low speed in large-scale satellite image processing. By optimizing algorithm design, it achieves a significant improvement in processing speed [14]. Experiments have shown that PF-TCD processes large-scale remote sensing images at a much faster speed than traditional CPU platforms, while maintaining high detection accuracy, meeting the demand for real-time processing of data obtained from satellites. The universality of deep learning in tree crown detection has also been widely studied. Cross-site learning experiments have shown that deep learning models exhibit strong detection performance in various forest types, especially among structurally similar forests [15]. This provides strong support for building a universal tree crown detection algorithm. 
The deep learning ensemble method further improves the accuracy of tree crown detection and species classification by combining the advantages of multiple models [16]. The experimental results show that the ensemble model outperforms a single model in detection performance and accuracy, which provides a new route to high-precision crown detection. For high-resolution RGB images acquired by UAVs, the convolutional neural network (CNN) method combined with advanced object detection algorithms such as Faster R-CNN [17], YOLOv3 [18], and RetinaNet [19] has achieved efficient detection of protected tree species [20]. The experimental results show that these methods are not only accurate but also fast, providing strong support for the protection of endangered tree species. Sun et al. [21] utilized the YOLOv4 deep learning network and airborne LiDAR data to generate altitude maps, combined with computer graphics algorithms, significantly improving the accuracy and robustness of tree crown segmentation and overcoming the sensitivity of traditional methods to uneven lighting.

Deep learning has demonstrated significant advantages in tree crown detection and segmentation. Deep learning algorithms can achieve accurate detection and segmentation of tree crowns by automatically learning hierarchical feature representations in images. Compared with traditional machine learning algorithms, deep learning algorithms have stronger feature extraction capabilities and higher detection accuracy. In addition, deep learning algorithms can also handle large-scale datasets and improve detection performance and generalization ability by training more complex models. Deep learning algorithms such as Mask R-CNN have achieved significant results in tree crown detection and segmentation tasks, becoming the mainstream method in this field. However, deep learning also has certain limitations in tree crown detection and segmentation. Deep learning algorithms have high demands on computing resources and memory, requiring high-performance hardware support. Additionally, the training process of deep learning models is complex and time-consuming, requiring a large amount of labeled data to support model training. In addition, deep learning models may experience performance degradation when dealing with complex scenes and extreme lighting conditions. Finally, the interpretability of deep learning models is poor, making it difficult to directly understand the decision-making process within the model, which poses certain challenges for model optimization and debugging.

3. The Method

3.1. The Proposed TCSNet Structure

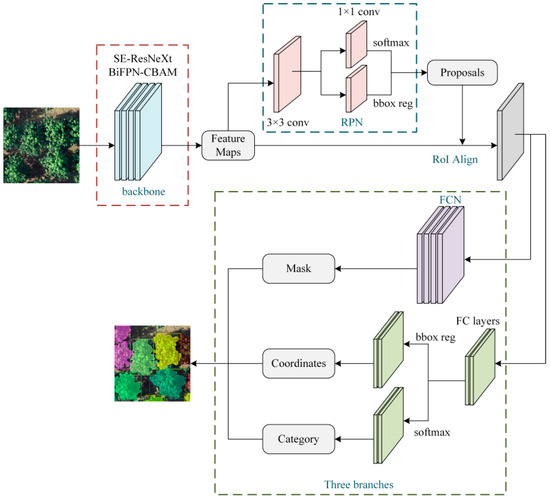

Mask R-CNN [22] is an advanced deep learning model used for simultaneous object detection and instance segmentation. It has been improved on the basis of Faster R-CNN; in addition to being able to recognize the position and category of targets in images, it also adds a branch to generate pixel-level segmentation masks for each target. Figure 1 shows the improved Mask R-CNN structure. The backbone network was optimized by improving it to ResNeXt and embedding the SENet module to enhance the model’s feature extraction capability. Additionally, the BiFPN-CBAM module was introduced to enable the model to learn and utilize features more effectively.

Figure 1.

The proposed TCSNet structure.

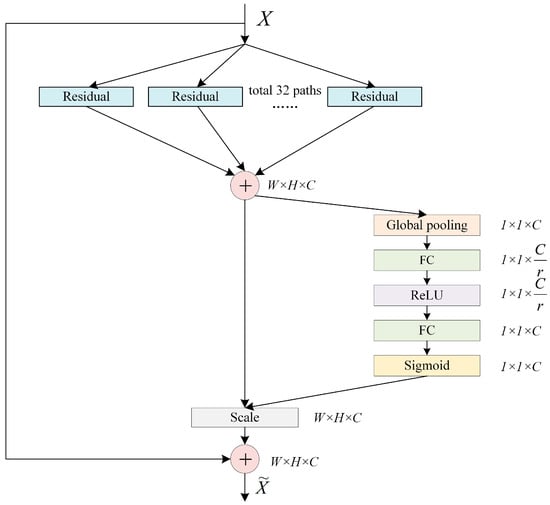

3.2. SE-ResNeXt Model

To further enhance the backbone of the proposed TCSNet, we employ the SE-ResNeXt model, which plays a critical role in boosting feature extraction and improving model performance (Figure 2). The SE-ResNeXt model is an efficient image classification network built upon the ResNeXt [23] model by incorporating the Squeeze-and-Excitation (SE) [24] module. The model enhances the representational ability of the network by explicitly modeling the interdependence between feature channels. The SE module learns the importance of each feature channel and recalibrates these feature channels, enabling the model to automatically enhance useful features and suppress redundant features during training.

Figure 2.

SE-ResNeXt structure.

In SE-ResNeXt, each ResNeXt base block is embedded with an SE module. Specifically, the SE module first compresses the spatial dimension of the input feature map through global average pooling operation, obtaining a feature vector representing global information. Then, the feature vector is fed into two fully connected layers to learn the nonlinear relationship between each channel and output the importance weight of each channel. Finally, these weights are used to recalibrate the various channels of the original feature map, achieving the reassignment of feature channels.
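The squeeze, excitation, and recalibration steps described above can be illustrated with a minimal pure-Python sketch. It is not the TCSNet implementation: the function name `se_recalibrate`, the nested-list tensor layout, the omission of biases, and the ReLU/sigmoid activations (taken from the original SE design) are assumptions made for illustration.

```python
import math


def se_recalibrate(feature_map, w1, w2):
    """Illustrative SE block on a feature map given as C channels of H x W lists.

    w1: (C/r) x C weights of the first fully connected layer (assumed, no bias).
    w2: C x (C/r) weights of the second fully connected layer (assumed, no bias).
    Returns the recalibrated feature map and the per-channel weights.
    """
    # Squeeze: global average pooling reduces each channel to one value.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation, layer 1: fully connected layer followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # Excitation, layer 2: fully connected layer followed by a sigmoid gate.
    weights = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Recalibration: scale every value of a channel by that channel's weight.
    scaled = [
        [[value * wc for value in row] for row in channel]
        for channel, wc in zip(feature_map, weights)
    ]
    return scaled, weights
```

In a real network the two weight matrices are learned jointly with the backbone, so channels that help crown delineation receive weights close to 1 and redundant channels are damped.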

3.3. BiFPN Structure

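Following the improvements brought by SE-ResNeXt to the backbone, we now turn our focus to multi-scale feature fusion using the BiFPN structure, which is crucial for handling features across different resolutions. BiFPN [25], also known as Bidirectional Feature Pyramid Network, is an efficient multi-scale feature fusion network that significantly optimizes the traditional Feature Pyramid Network (FPN). Taking P5 feature fusion as an example, the weighted bidirectional pyramid network structure is represented by the following formulas:

$$P_5^{td} = \mathrm{Conv}\left(\frac{w_1 \cdot P_5^{in} + w_2 \cdot \mathrm{Resize}\left(P_6^{in}\right)}{w_1 + w_2 + \epsilon}\right)$$

$$P_5^{out} = \mathrm{Conv}\left(\frac{w_1' \cdot P_5^{in} + w_2' \cdot P_5^{td} + w_3' \cdot \mathrm{Resize}\left(P_4^{out}\right)}{w_1' + w_2' + w_3' + \epsilon}\right)$$

where $P_5^{td}$ is the intermediate feature on the pathway from right to left, and $P_5^{out}$ is the output of the fifth layer. $w_1$ and $w_2$ are learnable weights. $w_1'$, $w_2'$, and $w_3'$ are the learned weights. $\epsilon$ is a fixed coefficient of 0.0001 to avoid numerical instability.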
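The weighted fusion step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: features are flat lists that have already been resized to a common shape, the trailing convolution is omitted, the function name `fast_normalized_fusion` is hypothetical, and the ReLU applied to the raw weights follows the original BiFPN design rather than anything stated in this paper.

```python
def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """Fuse same-shaped feature vectors with normalized, non-negative weights.

    features: list of equally long lists (already resized to one resolution).
    raw_weights: one learnable scalar per input feature.
    eps: the fixed coefficient (0.0001) that avoids division by zero.
    """
    # ReLU keeps every weight non-negative so the normalization is stable.
    weights = [max(0.0, w) for w in raw_weights]
    denominator = sum(weights) + eps
    length = len(features[0])
    return [
        sum(w * feature[k] for w, feature in zip(weights, features)) / denominator
        for k in range(length)
    ]
```

For example, fusing two inputs with equal weights returns values very close to their element-wise mean, while a weight driven to zero during training removes that input from the fusion.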

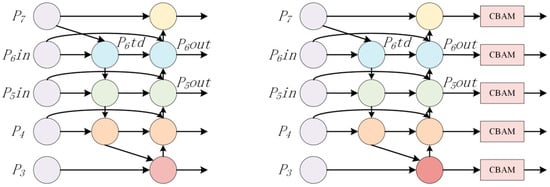

In conventional FPNs, feature maps of different scales are usually fused using simple addition or concatenation. However, because features at different scales contribute unevenly to the final output, simply adding or concatenating them may not fully utilize them. To address this issue, BiFPN introduces learnable weights. These weights are obtained through neural network training and capture the importance of features at different scales. When performing feature fusion, BiFPN computes a weighted sum of the features based on these weights to obtain more accurate and robust feature representations. However, BiFPN tends to treat all channels and spatial positions equally when fusing features, which limits its ability to highlight key features. To address this, the CBAM module is introduced to make the model more sensitive to important features through channel and spatial attention mechanisms. As shown in Figure 3, each layer’s output from BiFPN is enhanced by CBAM. The channel attention mechanism allows the network to focus on the most relevant channels, filtering out irrelevant features. Meanwhile, the spatial attention mechanism helps the network focus on critical areas of the image, such as edges and key regions, while ignoring background noise. This makes CBAM particularly effective for high-precision tasks such as object detection and detailed segmentation.

Figure 3.

BiFPN and BiFPN-CBAM.

3.4. Boundary-Dice Loss

Boundary Loss [26] is mainly used for instance segmentation tasks; it improves the accuracy of segmentation boundaries by minimizing the difference between predicted and real boundaries. In tree crown segmentation, crown edges are often variable and fuzzy, so accurately identifying the crown boundary is crucial. Dice Loss (DLoss) is a loss function for image segmentation tasks that is calculated from the Dice coefficient. DLoss has significant advantages in dealing with class imbalance and effectively handles situations where the numbers of positive and negative samples differ greatly. The formulas for Boundary Loss and DLoss are as follows:

$$L_B(\theta) = \int_{\Omega} \phi_G(q)\, s_\theta(q)\, dq$$

where $\partial G$ is a representation of the boundary of the ground truth region $G$, $s_\theta(q)$ represents the softmax probability output of the segmentation network at point $q$ of the image domain $\Omega$, and $\phi_G$ is the level set representation of the boundary $\partial G$.

$$\mathrm{DLoss} = 1 - \frac{2\,|P \cap G|}{|P| + |G|}$$

where P is the predicted segmentation region, and G is the actual segmentation region.
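To make the two loss terms concrete, the following sketch computes a Dice loss and a discretized boundary loss on small masks stored as nested lists. It is a simplified illustration, not the training code: the function names, the smoothing constant, the brute-force signed distance map (negative inside the crown, positive outside), and the averaging over pixels are all assumptions.

```python
import math


def dice_loss(pred, target, smooth=1e-6):
    """1 - Dice coefficient between a soft prediction and a binary mask."""
    intersection = sum(
        p * g for pred_row, gt_row in zip(pred, target) for p, g in zip(pred_row, gt_row)
    )
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 - (2.0 * intersection + smooth) / (total + smooth)


def signed_distance_map(target):
    """Brute-force level set: distance to the nearest pixel of the other class."""
    height, width = len(target), len(target[0])
    fg = [(i, j) for i in range(height) for j in range(width) if target[i][j] == 1]
    bg = [(i, j) for i in range(height) for j in range(width) if target[i][j] == 0]
    phi = [[0.0] * width for _ in range(height)]
    if not fg or not bg:
        return phi  # no boundary exists, so the map stays at zero
    for i in range(height):
        for j in range(width):
            inside = target[i][j] == 1
            others = bg if inside else fg
            distance = min(math.hypot(i - a, j - b) for a, b in others)
            phi[i][j] = -distance if inside else distance
    return phi


def boundary_loss(pred, target):
    """Mean of (signed distance * predicted probability) over all pixels."""
    phi = signed_distance_map(target)
    height, width = len(target), len(target[0])
    weighted = sum(phi[i][j] * pred[i][j] for i in range(height) for j in range(width))
    return weighted / (height * width)
```

Predictions placed far outside the reference crown are multiplied by large positive distances and are therefore penalized more heavily, which is what pushes the predicted edge toward the annotated boundary.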

Combining Boundary Loss and DLoss leverages the advantages of both. Boundary Loss focuses on the accuracy of boundaries, while DLoss focuses on the balance of categories. Together they ensure both accurate identification of the tree crown boundary and correct recovery of the tree crown region in the overall segmentation result. This combination enables the segmentation model to exhibit higher performance and robustness in tree crown segmentation tasks. The formula for Boundary-Dice Loss is as follows:

$$L_{BD} = \alpha \cdot \mathrm{DLoss} + (1 - \alpha) \cdot L_B$$

where $L_B$ denotes Boundary Loss and $\alpha$ represents the weight of DLoss during the model training process. As the training progresses toward the later stage, $\alpha$ gradually decreases and the weight of Boundary Loss gradually increases.
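The shifting balance between the two terms can be sketched as a simple schedule. The paper states only that the DLoss weight decreases as training proceeds; the linear decay, the start and end values, and the function names below are assumptions chosen for illustration, with the 4000-iteration horizon taken from the training settings.

```python
def alpha_schedule(iteration, max_iter=4000, alpha_start=1.0, alpha_end=0.01):
    """Linearly decay the DLoss weight from alpha_start to alpha_end (assumed)."""
    progress = min(max(iteration / max_iter, 0.0), 1.0)
    return alpha_start + (alpha_end - alpha_start) * progress


def boundary_dice_loss(dice_value, boundary_value, alpha):
    """Weighted combination: alpha on DLoss, (1 - alpha) on Boundary Loss."""
    return alpha * dice_value + (1.0 - alpha) * boundary_value
```

Early in training the region-based Dice term dominates and stabilizes the mask; later the boundary term takes over and refines the crown edge.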

4. Experimental Regions

4.1. Overview of the Research Areas

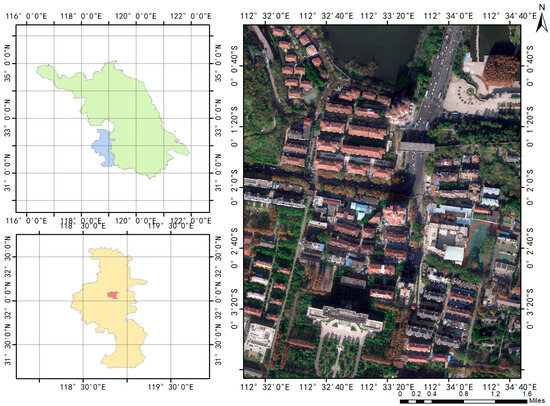

The research area of the urban forest dataset is located near Xuanwu Lake in Nanjing, Jiangsu Province, China (Figure 4). Nanjing has a typical subtropical humid climate, with hot and humid summers and relatively cold winters. Xuanwu Lake is a famous scenic spot in Nanjing, surrounded by abundant vegetation, including common tree species such as camphor, ginkgo, pine, and willow. The dataset consists of 16 high-resolution remote sensing images of 10,000 × 8000 pixels each, covering various types of areas such as parks and green spaces. The arrangement of trees is diverse and the tree species are abundant.

Figure 4.

Urban forest scene. The research area is located near Xuanwu Lake in Nanjing, Jiangsu Province, China. The forest type is mixed forest, and common tree species include camphor (Camphora officinarum Nees ex Wall), ginkgo (Ginkgo biloba L.), pine (Pinus L.), and willow (Salix).

The study area of the natural forest dataset [27] is located in Malaysia. Malaysia’s climate belongs to the tropical rainforest climate, with warm and humid temperatures throughout the year, abundant rainfall, and minimal temperature fluctuations. This climate condition has nurtured Malaysia’s rich tropical rainforests, which have extremely high biodiversity and numerous unique species of flora and fauna, making them one of the most precious natural heritages on Earth.

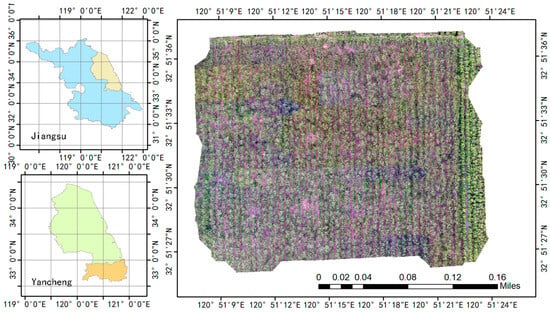

The experimental area of the artificial forest dataset is located in Huanghai Forest Farm, Dongtai, Yancheng, Jiangsu Province, at 32°51′ N and 120°50′ E (Figure 5). The forest farm has abundant tree resources, and this survey covers two blocks of poplar trees, with block 1 covering an area of about 10,000 square meters and block 2 covering an area of about 3600 square meters. The trees vary in diameter at breast height and in height, which makes the sample reasonably representative. Figure 6 shows the equipment used for this data collection. The drone data acquisition parameters were as follows:

- Flight Path: Grid flight;

- Flight Altitude: 60 m;

- Scanning Parameters: Multi-echo repeated scanning;

- Data Acquisition Frequency: 240 kHz.

Figure 5.

Artificial forest scene. The research area of the artificial forest dataset is located in Jiangsu Huanghai Haibin National Forest Park in Yancheng. Here, there are vast artificial ecological forests with extremely high forest coverage. Common tree species in the park include metasequoia (Metasequoia glyptostroboides Hu et Cheng) and poplar (Populus spp.).

Figure 6.

UAV image collection equipment: M350RTK.

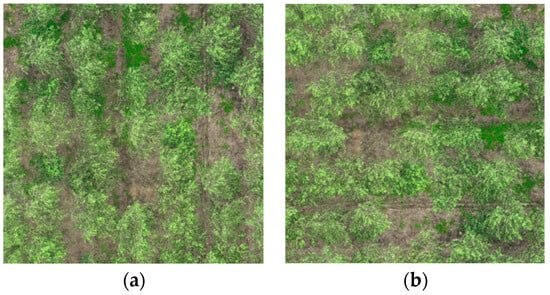

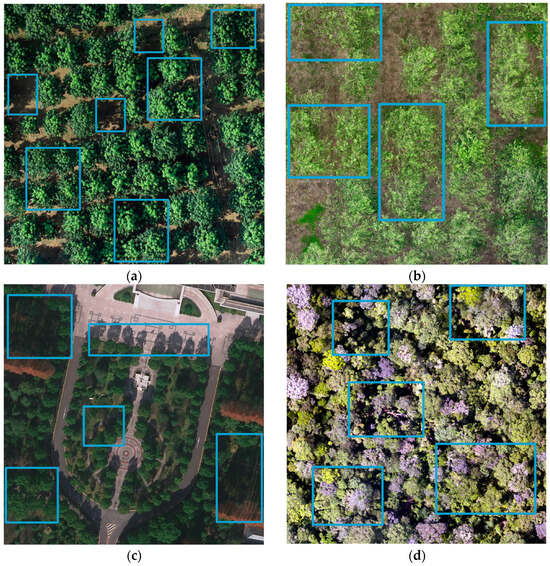

4.2. Data Preprocessing

The canopy of some trees in the study area was processed according to the following steps. Firstly, the contour boundaries of the canopy were manually annotated with QGIS (https://qgis.org, accessed on 12 March 2024), and then the canopy was digitized and linked to the data for each tree, including its number, species name, year, etc. In further experiments, a total of 3538 digitized tree crowns and corresponding data were used. These steps ground tree crown segmentation in real scene data, providing a reliable foundation for subsequent research. When dividing the dataset, the drone image was cut into square tiles and the minimum coverage area of manually labeled canopy was set to 60%. This prevents the tree canopy in the dataset from being too sparse, which would reduce the sensitivity of the algorithm and the amount of usable data. Of the image tiles that met this standard, 15% were randomly selected for the test set, leaving enough data for training. Learning new tasks with deep convolutional neural networks usually requires a large number of training samples, and manually annotating tree crown edges is time-consuming. To alleviate this burden, the training data were expanded through data augmentation techniques, including flipping, rotating, scaling, and adjusting contrast. The images in the dataset were randomly rotated by 90° and horizontally flipped with a probability of 50%. The scaling ratio was 0.8–1.2, and the contrast range was 0.8–1.2. Increasing the frequency of rotation and flipping can enhance the robustness of the model, making it better suited to objects in different orientations. Conversely, if these transformations are reduced, the model may overfit to targets in specific orientations or poses. Expanding the scaling range allows the model to see objects of more sizes, which helps improve its recognition ability, but if the scaling range is too large, some targets may become difficult to recognize, affecting the stability of the model. Increasing the contrast range can enable the model to adapt to more lighting conditions, thereby improving its performance in complex environments. If the contrast range is reduced, the model may become sensitive to changes in lighting and perform poorly. We applied data augmentation only during the preprocessing stage to expand the dataset and improve data quality, without further data augmentation during the model training stage.
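The tile filtering, test split, and augmentation settings above can be summarized in a short sketch. It uses only the values given in the text (60% minimum canopy coverage, 15% test tiles, 50% flip probability, 0.8–1.2 scaling and contrast); the function names, the fixed random seed, and the reading of "rotated by 90°" as a random multiple of 90° are assumptions.

```python
import random


def keep_tile(crown_pixels, tile_pixels, min_coverage=0.60):
    """Keep a tile only if labeled canopy covers at least 60% of its area."""
    return crown_pixels / tile_pixels >= min_coverage


def split_tiles(tile_ids, test_fraction=0.15, seed=0):
    """Randomly hold out 15% of the accepted tiles as the test set."""
    rng = random.Random(seed)
    ids = list(tile_ids)
    rng.shuffle(ids)
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]  # (train, test)


def sample_augmentation(rng):
    """Draw one set of augmentation parameters within the stated ranges."""
    return {
        "rotate_deg": rng.choice([0, 90, 180, 270]),  # assumed: multiples of 90
        "hflip": rng.random() < 0.5,                  # 50% horizontal flip
        "scale": rng.uniform(0.8, 1.2),               # scaling ratio
        "contrast": rng.uniform(0.8, 1.2),            # contrast factor
    }
```

Because augmentation is applied once during preprocessing, each sampled parameter set would be used to write an additional tile to disk rather than being drawn anew at every training step.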

We used cross-validation as a technique to evaluate the performance of the model. Cross-validation is a commonly used and effective model validation method that maximizes the utilization of data resources on a limited dataset, helping to reduce the chance bias caused by data partitioning in model evaluation. Specifically, we divide the dataset into several equal subsets, selecting one subset as the validation set each time and using the rest for training the model. By repeating this process multiple times in a loop, each subset is given the opportunity to serve as a validation set, and the overall performance of the model is obtained by averaging the results of each validation (Figure 7).
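The cross-validation loop described here can be sketched as follows. The number of folds is not stated in the text, so `k=5` is an assumption, as are the function names and the `evaluate` callback, which stands in for training on one split and scoring on the held-out subset.

```python
def k_fold_splits(items, k=5):
    """Yield (train, validation) pairs so that each subset validates once."""
    items = list(items)
    folds = [items[i::k] for i in range(k)]  # k near-equal subsets
    for i in range(k):
        validation = folds[i]
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, validation


def cross_validate(items, evaluate, k=5):
    """Average the score returned by evaluate(train, validation) over k folds."""
    scores = [evaluate(train, validation) for train, validation in k_fold_splits(items, k)]
    return sum(scores) / len(scores)
```

In practice `evaluate` would train the model on the training tiles and return a metric such as the F1-score on the validation tiles.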

Figure 7.

Data augmentation of poplar (Populus spp.) trees in artificial forest datasets. (a) Original image; (b) the image is obtained by rotation; (c) the image is obtained by changing the contrast; (d) the image is obtained by changing the saturation.

4.3. Training Environment and Parameter Settings

We trained our model in the following environment: the Windows 10 operating system with an NVIDIA RTX3060 GPU (Manufacturer: NVIDIA Corporation, Santa Clara, CA, USA). The PyTorch version is 1.12.0, the Python version is 3.9.18, and the CUDA version is 11.6. The maximum number of iterations is 4000. The initial learning rate is 0.001, the batch size is 16, and the optimizer is Adam. Our work builds on Detectree2 [28], a Python package based on Mask R-CNN that is specifically designed for automatic tree crown detection and segmentation.

5. Evaluation Methods

The manually annotated tree crowns are randomly divided into a training set and a testing set. Using the improved Mask R-CNN framework, combined with the RGB images in the training set, the model learns how to automatically depict tree crown contours from the RGB images. After adjustment and training, the model with the best performance is selected for evaluating the image tiles of the test set. For quantitative evaluation, the Intersection over Union (IoU) is used. IoU is determined by calculating the intersection area between the predicted tree crown and the manually drawn tree crown, and then dividing it by the area of the union of these two regions. This indicator intuitively reflects the degree of consistency between the predicted tree crown and the actual tree crown. The calculation formula for crown overlap is as follows:

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$$

where A is the tree crown predicted by the model, and B is the manually drawn tree crown. When IoU is greater than 0.5, it is considered that the prediction matches the manual depiction of the tree crown.
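As a small worked example of the overlap measure, the sketch below computes IoU for two binary masks of equal size stored as nested lists. The function name `mask_iou` and the mask representation are illustrative assumptions; crowns delineated as polygons would first need to be rasterized.

```python
def mask_iou(pred_mask, true_mask):
    """Intersection over Union of two same-sized binary masks."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks share no area; report 0 rather than dividing by zero.
    return intersection / union if union else 0.0
```

Two crowns that each cover four pixels and share two of them give an IoU of 2/6, which falls below the 0.5 matching threshold.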

When the IoU is greater than 0.5, the overlap between prediction and reality is considered high enough, and the precision, recall, and F1-score of the model are further calculated. Precision measures the proportion of tree crowns detected by the model that are real tree crowns. Recall is the proportion of all real tree crowns in the test set that are correctly detected by the model. The F1-score is the harmonic mean of precision and recall. A higher F1-score indicates that the model achieves both high precision and high recall in tree crown detection. The equations for precision (P), recall (R), and F1-score (F) are defined as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F = \frac{2 \cdot P \cdot R}{P + R}$$

The above three indicators are calculated from true positive (TP, number of correctly detected tree crowns), false positive (FP, number of incorrectly detected tree crowns), and false negative (FN, number of ignored tree crowns) values.
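The counting of TP, FP, and FN and the resulting scores can be sketched as below. The text does not say how predictions are paired with reference crowns, so the greedy one-to-one matching by descending IoU is an assumption, as are the function names.

```python
def count_matches(iou_matrix, n_true, threshold=0.5):
    """Greedy one-to-one matching (assumed) of predictions to reference crowns.

    iou_matrix[i][j] is the IoU between prediction i and reference crown j.
    Returns (TP, FP, FN).
    """
    n_pred = len(iou_matrix)
    candidates = sorted(
        (
            (iou, i, j)
            for i, row in enumerate(iou_matrix)
            for j, iou in enumerate(row)
            if iou > threshold
        ),
        reverse=True,
    )
    used_pred, used_true = set(), set()
    for _, i, j in candidates:
        if i not in used_pred and j not in used_true:
            used_pred.add(i)
            used_true.add(j)
    tp = len(used_pred)
    return tp, n_pred - tp, n_true - tp


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1-score from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1
```

With three predictions and two reference crowns, of which only one pair overlaps with IoU above 0.5 after one-to-one matching, the counts are TP = 1, FP = 2, FN = 1, giving P = 1/3, R = 1/2, and F = 0.4.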

6. Experimental Results and Discussion

6.1. Visualization Results

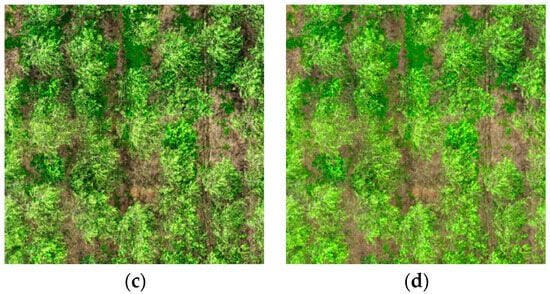

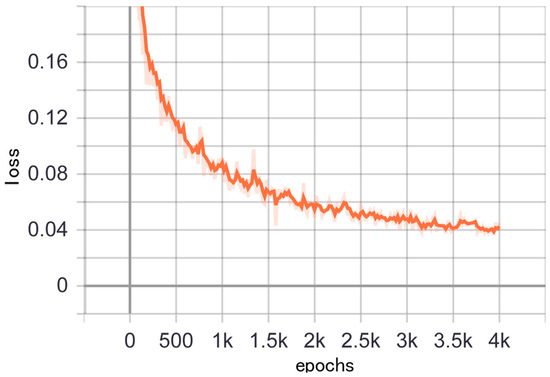

As the loss curves show, the loss value declined sharply during the first 750 iterations and gradually flattened after 1000 iterations. The total loss aggregates the individual loss terms into a single indicator of training progress (Figure 8). The decrease in loss indicates that the model is progressively optimized during learning, improving its fit to the data and its generalization ability and enhancing its depiction of tree crown boundaries. The classification loss measures the error of the model’s predictions in the classification task (Figure 9). The bounding box regression loss measures the prediction error of bounding box regression in tree crown detection (Figure 10).

Figure 8.

Total loss. The total loss combines the individual loss terms into a single indicator of training progress.

Figure 9.

Classification loss. The classification loss evaluates how accurately the model classifies its detections.

Figure 10.

Loss for bounding box regression. This loss measures the error of the predicted bounding boxes in tree crown detection.

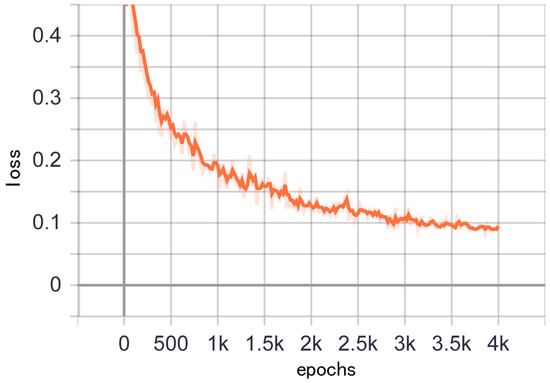

After training, the best-performing model parameters are retained and used to predict the test set. The segmentation performance on the three datasets is shown in Figure 11. The model performs best on the artificial forest dataset, where most tree crowns are segmented and few are missed. This is related to the high image resolution and orderly arrangement of trees in that dataset. Because the image resolution of the urban forest dataset is slightly lower, its segmentation results are slightly worse: some tree crowns are difficult to segment, and some shadows are mistakenly detected as tree crowns. The results on the natural forest dataset are acceptable, but their quality is reduced by the dense tree crowns and the interference of weeds on the ground.

Figure 11.

Segmentation performance on each dataset. (a,b) and (c,d) show the segmentation performance of TCSNet on two tree species, respectively. (e–h) show the segmentation performance of TCSNet in urban parks and green spaces. (i–l) show the segmentation performance of TCSNet in tropical rainforests.

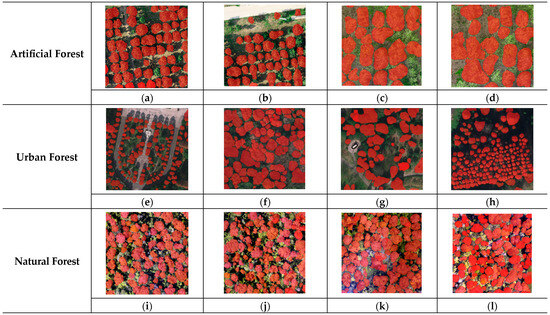

The proposed algorithm segments best in forests where tree species and crown morphology are similar. Trees of similar species usually exhibit consistent morphological characteristics as they grow, so the shape, size, color, and other attributes of their crowns are relatively stable for the algorithm to learn. However, performance degrades for deciduous trees with complex, hard-to-distinguish features and for trees with blurry or indistinct canopy shapes. The color and texture of these trees often blend into the surrounding environment, which makes it difficult for the algorithm to identify the outline of each individual tree. During the growing season and seasonal transitions of deciduous trees in particular, leaf color changes rapidly and texture varies, and this variability makes segmentation harder. In addition, trees with irregular crown shapes grow asymmetrically, so the algorithm cannot rely on regular patterns to identify them, which further reduces segmentation accuracy. In these cases, mutual occlusion between tree crowns becomes a key factor limiting accuracy. Where crowns interlock and overlap, the algorithm is prone to recognizing several crowns as a single unit or to splitting one crown into several, resulting in frequent under-segmentation or over-segmentation. This lowers the overall performance of the algorithm and prevents individual trees from being distinguished clearly (Figure 12).

Figure 12.

Differences between datasets. Blue squares mark tree crowns that are difficult to segment. (a,b) Both are artificial forests; (a) has similar canopy sizes and a more orderly arrangement, so its segmentation is better, whereas (b) is more affected by grass and has irregular crowns, so its segmentation is average. (c) The canopy sizes are similar and the arrangement is partly ordered and partly disordered, but shadows still affect the result, and the segmentation is average. (d) A natural forest with small gaps and inconsistent canopy sizes, which is the most difficult to segment.
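The two failure modes discussed above, merged crowns (under-segmentation) and split crowns (over-segmentation), can be flagged automatically once predictions and reference crowns are available. The sketch below is an assumed diagnostic, not part of TCSNet: masks are pixel sets, the 0.5 coverage threshold is arbitrary, and `overlap_fraction`, `diagnose`, and `rect` are hypothetical names.

```python
from typing import Dict, FrozenSet, List, Tuple

Mask = FrozenSet[Tuple[int, int]]  # a crown as a set of (row, col) pixels


def overlap_fraction(a: Mask, b: Mask) -> float:
    """Fraction of mask `a` that lies inside mask `b`."""
    return len(a & b) / len(a) if a else 0.0


def diagnose(preds: List[Mask], truths: List[Mask],
             min_cover: float = 0.5) -> Dict[str, List[int]]:
    """Flag merged predictions and split reference crowns."""
    # Under-segmentation: one prediction swallows two or more reference crowns.
    merged = [
        i for i, p in enumerate(preds)
        if sum(overlap_fraction(t, p) >= min_cover for t in truths) >= 2
    ]
    # Over-segmentation: two or more predictions lie mostly inside one crown.
    split = [
        j for j, t in enumerate(truths)
        if sum(overlap_fraction(p, t) >= min_cover for p in preds) >= 2
    ]
    return {"under_segmented_predictions": merged,
            "over_segmented_references": split}


def rect(r0: int, c0: int, h: int, w: int) -> Mask:
    """Filled rectangle mask, used only to build the toy example."""
    return frozenset((r, c) for r in range(r0, r0 + h)
                     for c in range(c0, c0 + w))


# Two adjacent crowns predicted as one, and one wide crown predicted as two.
truths = [rect(0, 0, 4, 4), rect(0, 4, 4, 4), rect(10, 0, 4, 8)]
preds = [rect(0, 0, 4, 8), rect(10, 0, 4, 4), rect(10, 4, 4, 4)]
report = diagnose(preds, truths)
print(report)
```

Here prediction 0 is reported as merging two reference crowns and reference crown 2 as being split into two predictions. Counting such cases per dataset would give a rough measure of how much crown adhesion contributes to the errors.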

The performance of tree crown segmentation differs significantly between artificial and natural forests. Compared with other forest types, mature, closed-canopy artificial forests are relatively easy to segment. Trees in artificial forests are usually planted according to a specific plan and spacing, which yields high consistency in tree size, crown shape, and species and makes it easier for the algorithm to capture the boundary of each tree. The trees are arranged in a relatively orderly way and there are fewer environmental interference factors, so occlusion is less of a problem. The algorithm can therefore identify the crown shape of individual trees accurately and largely avoid over- or under-segmentation. In contrast, naturally grown forests contain many tree species of uneven size, and mutual occlusion between trees is more severe, which makes segmentation harder. As shown in Table 1, the precision of the algorithm on the artificial forest dataset is 14.8% higher than on the urban forest dataset and 21.3% higher than on the natural forest dataset. Processing speed on the artificial forest dataset is 5.7 FPS higher than on the natural forest dataset and 7.5 FPS higher than on the urban forest dataset, indicating that images of artificial forests are segmented faster.

Table 1.

Results of ablation experiments.

However, even in artificial forests, the expansion and overlap of tree crowns still challenge segmentation algorithms as the trees grow. When the canopy of a tree expands until it touches or overlaps neighboring trees, canopy boundaries become blurred and segmentation accuracy may decrease. Future research could improve the accuracy and robustness of the model in several ways. First, the number and variety of training samples could be increased to broaden what the model learns from. Second, a multi-scale feature fusion mechanism could be introduced so that the model better captures key information at different scales. Third, combining multi-source remote sensing data is expected to improve detection and recognition in complex environments. Finally, because data acquisition is difficult and annotation is time-consuming, techniques such as semi-supervised learning and few-shot learning could be explored. These methods may alleviate data scarcity while improving the generalization and training efficiency of individual tree crown recognition models.

6.2. Ablation Experiments

To assess the contribution of each improvement, ablation experiments were conducted on the model. As shown in Table 1, the improved Mask R-CNN outperformed the original on all three datasets. When BiFPN-CBAM and SE-ResNeXt were introduced together, the model achieved its best performance on all datasets. This indicates that the two modules are complementary and that combining them significantly enhances the overall performance of the model.
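An ablation study of this kind can be organized by enumerating which modules are switched on in each run. The sketch below lists every on/off combination of the three improvements named in this paper; it is only an illustration of how such a grid might be generated, and it does not reproduce the specific configurations reported in Table 1. The function names and label format are assumptions.

```python
from itertools import product
from typing import Dict, List, Sequence

# Module names taken from the paper; the grid itself is illustrative.
MODULES = ("SE-ResNeXt", "BiFPN-CBAM", "Boundary-Dice")


def ablation_grid(modules: Sequence[str] = MODULES) -> List[Dict[str, bool]]:
    """Every on/off combination of the modules, baseline first."""
    return [dict(zip(modules, flags))
            for flags in product((False, True), repeat=len(modules))]


def label(config: Dict[str, bool]) -> str:
    """Readable name for one configuration."""
    enabled = [name for name, on in config.items() if on]
    if not enabled:
        return "Mask R-CNN (baseline)"
    return "Mask R-CNN + " + " + ".join(enabled)


grid = ablation_grid()
for config in grid:
    # A real study would train and evaluate the model for each configuration.
    print(label(config))
```

With three modules the grid contains eight configurations, from the unmodified baseline to the variant with all improvements enabled. In practice a subset is often evaluated to limit training cost.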

6.3. Comparisons of Different Algorithms

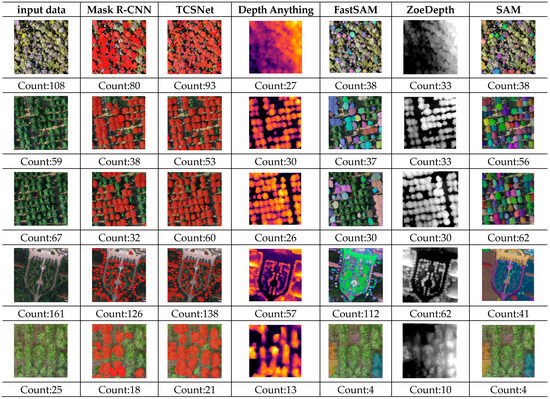

To show the performance of the improved algorithm more intuitively, four algorithms, namely Depth Anything, FastSAM, ZoeDepth, and SAM, were selected for qualitative comparison. As shown in Figure 13, TCSNet performs satisfactorily on all three datasets. Depth Anything and ZoeDepth leave too many crowns adhered to one another, and their results are suboptimal in areas of dense crowns. FastSAM, like the original Mask R-CNN, has some segmentation ability but misses many crowns. SAM has the strongest segmentation ability among the compared algorithms but is less effective under more complex canopy conditions.

Figure 13.

Comparisons with other algorithms [29,30,31]. TCSNet recovered the most tree crown instances from the input data.

7. Conclusions

The TCSNet algorithm presented in this study offers advantages in tree crown segmentation. By enhancing the traditional Mask R-CNN, it significantly improves feature extraction and boundary segmentation accuracy. Specifically, the upgraded ResNeXt backbone and embedded SENet module boost feature representation, while the BiFPN-CBAM module optimizes feature learning and fusion. Additionally, the Boundary-Dice loss function enhances tree crown boundary segmentation, effectively addressing the issue of crown adhesion. TCSNet integrates panoptic segmentation to ensure consistent and accurate segmentation across entire scenes, addressing limitations of traditional methods such as low precision and high workload. Experimental results show that this approach outperforms the original algorithm on artificial forest datasets, with a 6.6% increase in precision, a 1.8% improvement in recall, and a 4.2% boost in F1-score.

The TCSNet algorithm has broad application potential, adaptable to various geographical environments and forest types. With further refinement, it holds promise for automated, high-precision extraction of forest parameters, advancing UAV forestry remote sensing technology.

Author Contributions

Conceptualization, Y.C.; Methodology, Y.C. and S.X.; Writing—original draft preparation, Y.C. and C.W.; Writing—review and editing, Z.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Scientific and Technological Innovation 2030—Major Projects (No. 2023ZD0405605).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, Q.; Liu, Z.; Jin, G. Impacts of stand density on tree crown structure and biomass: A global meta-analysis. Agric. For. Meteorol. 2022, 326, 109181.

2. Shahi, T.B.; Xu, C.-Y.; Neupane, A.; Guo, W. Machine learning methods for precision agriculture with UAV imagery: A review. Electron. Res. Arch. 2022, 30, 4277–4317.

3. Shahi, T.B.; Dahal, S.; Sitaula, C.; Neupane, A.; Guo, W. Deep Learning-Based Weed Detection Using UAV Images: A Comparative Study. Drones 2023, 7, 624.

4. Qiu, L.; Jing, L.; Hu, B.; Li, H.; Tang, Y. A New Individual Tree Crown Delineation Method for High Resolution Multispectral Imagery. Remote Sens. 2020, 12, 585.

5. Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

6. Cao, L.; Shen, Z.; Xu, S. Efficient forest fire detection based on an improved YOLO model. Vis. Intell. 2024, 2, 20.

7. Gomes, M.F.; Maillard, P.; Deng, H. Individual tree crown detection in sub-meter satellite imagery using Marked Point Processes and a geometrical-optical model. Remote Sens. Environ. 2018, 211, 184–195.

8. Torresan, C.; Carotenuto, F.; Chiavetta, U.; Miglietta, F.; Zaldei, A.; Gioli, B. Individual Tree Crown Segmentation in Two-Layered Dense Mixed Forests from UAV LiDAR Data. Drones 2020, 4, 10.

9. Maschler, J.; Atzberger, C.; Immitzer, M. Individual Tree Crown Segmentation and Classification of 13 Tree Species Using Airborne Hyperspectral Data. Remote Sens. 2018, 10, 1218.

10. Xu, X.; Zhou, Z.; Tang, Y.; Qu, Y. Individual tree crown detection from high spatial resolution imagery using a revised local maximum filtering. Remote Sens. Environ. 2021, 258, 112397.

11. Xu, W.; Deng, S.; Liang, D.; Cheng, X. A Crown Morphology-Based Approach to Individual Tree Detection in Subtropical Mixed Broadleaf Urban Forests Using UAV LiDAR Data. Remote Sens. 2021, 13, 1278.

12. Marques, P.; Pádua, L.; Adão, T.; Hruška, J.; Peres, E.; Sousa, A.; Sousa, J.J. UAV-Based Automatic Detection and Monitoring of Chestnut Trees. Remote Sens. 2019, 11, 855.

13. Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual Tree-Crown Detection in RGB Imagery Using Semi-Supervised Deep Learning Neural Networks. Remote Sens. 2019, 11, 1309.

14. Li, W.; He, C.; Fu, H.; Zheng, J.; Dong, R.; Xia, M.; Yu, L.; Luk, W. A Real-Time Tree Crown Detection Approach for Large-Scale Remote Sensing Images on FPGAs. Remote Sens. 2019, 11, 1025.

15. Weinstein, B.G.; Marconi, S.; Bohlman, S.A.; Zare, A.; White, E.P. Cross-site learning in deep learning RGB tree crown detection. Ecol. Informatics 2020, 56, 101061.

16. Pleșoianu, A.-I.; Stupariu, M.-S.; Șandric, I.; Pătru-Stupariu, I.; Drăguț, L. Individual Tree-Crown Detection and Species Classification in Very High-Resolution Remote Sensing Imagery Using a Deep Learning Ensemble Model. Remote Sens. 2020, 12, 2426.

17. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

18. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

19. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

20. Dos Santos, A.A.; Marcato Junior, J.; Araújo, M.S.; Di Martini, D.R.; Tetila, E.C.; Siqueira, H.L.; Aoki, C.; Eltner, A.; Matsubara, E.T.; Pistori, H.; et al. Assessment of CNN-Based Methods for Individual Tree Detection on Images Captured by RGB Cameras Attached to UAVs. Sensors 2019, 19, 3595.

21. Sun, C.; Huang, C.; Zhang, H.; Chen, B.; An, F.; Wang, L.; Yun, T. Individual Tree Crown Segmentation and Crown Width Extraction from a Heightmap Derived From Aerial Laser Scanning Data Using a Deep Learning Framework. Front. Plant Sci. 2022, 13, 914974.

22. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

23. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

24. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

25. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

26. Kervadec, H.; Bouchtiba, J.; Desrosiers, C.; Granger, E.; Dolz, J.; Ben Ayed, I. Boundary loss for highly unbalanced segmentation. In Proceedings of the 2nd International Conference on Medical Imaging with Deep Learning, PMLR, London, UK, 8–10 July 2019; pp. 285–296.

27. Coomes, D.; Jackson, T. Airborne LiDAR and RGB imagery from Sepilok Reserve and Danum Valley in Malaysia in 2020; NERC EDS Centre for Environmental Data Analysis: London, UK, 2022.

28. Ball, J.G.C.; Hickman, S.H.M.; Jackson, T.D.; Koay, X.J.; Hirst, J.; Jay, W.; Archer, M.; Aubry-Kientz, M.; Vincent, G.; Coomes, D.A. Accurate delineation of individual tree crowns in tropical forests from aerial RGB imagery using Mask R-CNN. Remote Sens. Ecol. Conserv. 2023, 9, 641–655.

29. Yang, L.; Kang, B.; Huang, Z.; Xu, X.; Feng, J.; Zhao, H. Depth Anything: Unleashing the power of large-scale unlabeled data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 10371–10381.

30. Bhat, S.F.; Birkl, R.; Wofk, D.; Wonka, P.; Müller, M. ZoeDepth: Zero-shot transfer by combining relative and metric depth. arXiv 2023, arXiv:2302.12288.

31. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4015–4026.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).