Abstract

Adam (Adaptive Moment Estimation) is a well-known algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments. Computational experiments show that, as the degree of conditionality of the problem increases and in the presence of interference, Adam is prone to looping, which is associated with difficulties in adjusting the step. In this paper, a step adaptation algorithm for the Adam method is proposed. The step adaptation scheme is based on reproducing the relationship between the descent direction and the new gradient that holds in one-dimensional descent. In exact one-dimensional descent, the angle between these directions is a right angle. In inexact descent, if the angle between the descent direction and the new gradient is obtuse, the step is too large and should be reduced; if the angle is acute, the step is too small and should be increased. For the experimental analysis of the new algorithm, we used test functions with a given degree of conditionality and interference on the gradient, as well as learning problems in which the gradient is calculated on mini-batches. The computational experiment showed that, in stochastic optimization problems, the proposed Adam modification with step adaptation was significantly more efficient than both the standard Adam algorithm and the other step-adaptive methods studied in this work.

1. Introduction

The ability to solve stochastic optimization problems is of key practical importance in many areas of science and technology such as energy management [,,,,], healthcare [], marketing [] and various others.

Many problems in these areas can be represented as optimization problems of some objective function. If the function is differentiable with respect to its parameters, gradient descent, which does not use the values of the objective function, can become a relatively effective optimization method.

Often, the objective functions are stochastic. For example, many objective functions consist of a sum of subfunctions computed on different subsamples of the data. In this case, optimization can be more efficient by using gradient steps on individual subfunctions, such as stochastic gradient descent (SGD), which has played a key role in machine learning, particularly in deep learning [,,,,]. Problems may also have other causes of noise. Such noisy problems require efficient stochastic optimization methods.

Adam [] is an algorithm used for optimizing stochastic objective functions based on gradients. The method is simple to implement, computationally efficient, memory-efficient and well suited for both data-intensive and parameter-intensive problems. The method is also suitable for solving very noisy problems. Computational results show that Adam works well in practice [,].

Adam converges quickly in most scenarios, but sometimes it may not converge to an optimal solution, especially in cases with noisy or sparse gradients [,]. Tuning its hyperparameters can be critical to the model’s performance and is sometimes more sensitive than simpler optimizers. Additionally, for some problems, implementing annealing of the learning rate with Adam is necessary to achieve convergence, which adds another layer of complexity [].

As we will see further, at a certain level of interference and degree of conditionality of the problem, the Adam algorithm is subject to looping. When solving applied problems, it is difficult to determine the reasons for the impossibility of obtaining a solution. This may be a defect caused by the algorithm or a defect caused by the formulation of the problem. Therefore, it is important to eliminate the defects generated by the lack of convergence of the algorithm.

Adaptive methods that adjust the step size have become widespread in large-scale optimization for their ability to converge robustly []. In [], the authors proposed a method where each dimension has its own dynamic rate: large gradients have smaller learning rates and small gradients have large learning rates. The AdaGrad family of methods [,,,,,,] is based on this principle and shows good results on large-scale learning problems. In [], instead of accumulating the sum of squared gradients, the author fixed the window of past gradients that are accumulated. In [,], AdaGrad-Norm with a single step size adaptation based on the gradient norm was developed. Theoretical results for the advantage of AdaGrad-like step sizes over the plain stochastic gradient descent in the non-convex setting can be found in [].

An adaptive optimization algorithm with gradient bias correction (AdaGC) was proposed in []. This algorithm improves the iterative direction through the gradient deviation and momentum, and the step size is adaptively revised using the second-order moment of gradient deviation.

In [], AdaBelief is presented to adjust the step size according to the exponential moving average of the noisy gradient. If the observed gradient greatly deviates from the prediction, this method takes a small step; if the observed gradient is close to the prediction, the method takes a large step. AdaDerivative [], in contrast to AdaBelief, can adaptively adjust step sizes by applying the exponential moving average of the derivative term using past gradient information without the smoothing parameter.

In [], new variants of the Adam algorithm with a long-term memory of past gradients were proposed. The authors in [] apply the variance reduction technique to construct the adaptive step size in Adam. The Cycle-Norm-Adam (CN-Adam) algorithm based on the cyclic exponential decay learning rate is proposed in []. Some well-known adaptive methods are shown in Table 1.

Table 1. Some well-known adaptive methods.

The Adam algorithm rests on three main ideas: gradient smoothing, variable scaling and step selection. Our goal is to analyze how much each of these components contributes to the effectiveness of the minimization algorithm. In the proposed algorithms, Adam's way of selecting the step multiplier is dropped entirely.

In this paper, we present a method for tuning the step of the Adam method using the fact that in exact one-dimensional descent, the current descent direction is orthogonal to the gradient calculated at the new point. Based on observations of the angle between the current descent direction and the new gradient, the step will be tuned. If the angle is acute, the step is small and should be increased. If the angle is obtuse, the step is large and should be decreased.
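The sign test behind this rule can be checked numerically. The following Python sketch is illustrative only and is not taken from the paper's implementation: the two-dimensional quadratic, the helper names and the trial steps are assumptions, and so is the update convention x_new = x − h·s with s equal to the current gradient. It evaluates the inner product of the new gradient with s for a step that is too short, exact and too long.

```python
def grad(a, x):
    """Gradient of the illustrative quadratic f(x) = 0.5 * sum(a_i * x_i**2)."""
    return [ai * xi for ai, xi in zip(a, x)]


def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def new_gradient_projection(a, x, h):
    """Return (g_new, s) after the trial step x_new = x - h * s, with s = grad f(x)."""
    s = grad(a, x)
    x_new = [xi - h * si for xi, si in zip(x, s)]
    return dot(grad(a, x_new), s)


a = [1.0, 10.0]          # assumed diagonal Hessian
x = [1.0, 1.0]           # assumed current point
s = grad(a, x)
# Exact line-search step for a quadratic: h* = (s, s) / (s, A s).
h_exact = dot(s, s) / dot(s, [ai * si for ai, si in zip(a, s)])

too_small = new_gradient_projection(a, x, 0.5 * h_exact)  # positive: acute angle, increase the step
exact = new_gradient_projection(a, x, h_exact)            # about zero: right angle
too_large = new_gradient_projection(a, x, 1.5 * h_exact)  # negative: obtuse angle, decrease the step
```

For this example the three projections are approximately 50.5, 0 and −50.5, so the sign alone indicates in which direction the step should be corrected, without any function values.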

The main property of a minimization algorithm is its convergence rate. It is known that the convergence rate of the gradient method decreases as the degree of conditionality of the problem increases. We study the effect of gradient noise on the convergence rate of the algorithm at a fixed level of conditionality that does not depend on the problem dimension. At the same level of conditionality, the convergence rate of the gradient method depends only weakly on the dimension. Our experiments show that, when moving, for example, from the dimension n = 100 to n = 1000, the methods under study show more stable results with respect to changes in the noise level. This allows us to conduct an extensive computational experiment with relatively small dimensions.

The proposed step adaptation procedure was applied to the Adam algorithm, momentum algorithm and gradient method. A computational experiment was conducted on functions with gradient noise, as well as on training problems with mini-batches for gradient calculation. As the computational experiment showed, the step adaptation algorithm allows eliminating loops inherent in the Adam method. The Adam algorithm with step adaptation, unlike other methods, managed to solve all test problems.

The rest of the paper is organized as follows. In Section 2, we give the problem statement and description of Adam algorithm. In Section 3, we consider principles of step adaptation and present the modified algorithms of Adam, momentum and the gradient method with step adaptation. In Section 4, we conduct a numerical experiment. Section 5 summarizes the work.

2. Problem Statement and Background

Consider the problem of minimizing a function f(x), x ∈ Rⁿ. In relaxation minimization methods, successive approximations are constructed using the formula

$$x_{k+1} = x_k - h_k s_k, \quad k = 0, 1, 2, \ldots \qquad (1)$$

Here, sk is the descent direction and hk is the step of the one-dimensional (line) search, chosen so that f(xk+1) ≤ f(xk). These and other frequently used designations are given in Table A1.

In exact one-dimensional descent, the step is

$$h_k = \arg\min_{h \ge 0} f(x_k - h s_k). \qquad (2)$$

Denote the current gradient value by gk = ∇f(xk). Under gradient noise, exponentially smoothed gradient values are used:

$$p_k = \beta_1 p_{k-1} + (1 - \beta_1) g_k, \quad \beta_1 \in [0, 1). \qquad (3)$$

Previously, in [,], we proposed several algorithms for adapting the step of the gradient method without one-dimensional search. In this study, we extend the ideas of one of these algorithms to a number of gradient methods used for training neural networks. We consider algorithms in which space transformation is carried out using diagonal metric matrices, the diagonal elements of which we will store as a vector. Denote the Hadamard product for vectors a and b by a·b = a ʘ b. In the algorithms presented below, instead of matrices, scaling factors specified as a vector are used, for example, bk ∈ Rn. The descent direction in this case is calculated according to the following rule:

sk = pk ʘ bk.

In what follows we will assume that we have the ability to calculate the gradient with noise and that the function value is unavailable. Thus, we do not have the ability to use one-dimensional search (2). In gradient algorithms in this case, various types of step multiplier settings are used.

Consider the Adam algorithm in terms of a method with a metric space transformation, where the gradient and a transformed metric matrix are used to form the descent direction. In the Adam algorithm, exponentially smoothed gradients are used as directions, and the scale of each variable is changed using exponentially smoothed squares of the gradient components. The metric matrix is diagonal, and its main diagonal elements are formed from these exponentially smoothed squares. The remaining element is the method for choosing the step along the descent direction.

In the algorithms presented below, multiplication and division of vectors and raising a vector to a power are performed componentwise. Adding a number to a vector means adding that number to each component of the vector. The initial values of the parameters in Algorithm 1 are chosen as in []. Let us consider the Adam algorithm.

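Algorithm 1 (Adam)

1. Set $\beta_1, \beta_2 \in [0, 1)$, $\varepsilon > 0$, step size $\alpha > 0$ and the initial point $x_0 \in \mathbb{R}^n$. The algorithm uses parameters $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\varepsilon = 10^{-8}$, $\alpha = 0.001$.
2. Set $k = 0$, $p_k = 0$, $b_k = 0$, where $p_k, b_k \in \mathbb{R}^n$.
3. Set $k = k + 1$, $g_k = \nabla f(x_k)$.
4. $p_k = \beta_1 p_{k-1} + (1 - \beta_1) g_k$.
5. $b_k = \beta_2 b_{k-1} + (1 - \beta_2)\, g_k \odot g_k$.
6. $\hat{p}_k = p_k / (1 - \beta_1^k)$.
7. $\hat{b}_k = b_k / (1 - \beta_2^k)$.
8. Get the descent direction $s_k = \hat{p}_k / (\sqrt{\hat{b}_k} + \varepsilon)$.
9. Find a new approximation of the minimum $x_{k+1} = x_k - \alpha s_k$.
10. If the stopping criterion is met, then stop, else go to step 3.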

Step 4 gives us the exponential average for the gradient. Step 5 gives us the exponential average for the squares of the gradient components. Steps 6, 7 and 8 form the descent direction. Steps 6, 7, 8 and 9 can be represented as follows:

where first the descent direction is found and then the step multiplier is determined.
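The structure just described, a direction built from smoothed gradients and smoothed squared gradients followed by a fixed step multiplier, can be sketched in a few lines. This is a minimal pure-Python illustration of standard Adam with bias correction, not the authors' Delphi implementation; the function names `adam` and `quad_grad` and the small quadratic test problem are assumptions made for the example.

```python
import math


def adam(grad, x0, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8, iters=1000):
    """Plain Adam with bias correction; all vector operations are componentwise."""
    x = list(x0)
    p = [0.0] * len(x)  # exponential average of gradients
    b = [0.0] * len(x)  # exponential average of squared gradient components
    for k in range(1, iters + 1):
        g = grad(x)
        p = [beta1 * pi + (1 - beta1) * gi for pi, gi in zip(p, g)]
        b = [beta2 * bi + (1 - beta2) * gi * gi for bi, gi in zip(b, g)]
        p_hat = [pi / (1 - beta1 ** k) for pi in p]   # bias-corrected first moment
        b_hat = [bi / (1 - beta2 ** k) for bi in b]   # bias-corrected second moment
        s = [ph / (math.sqrt(bh) + eps) for ph, bh in zip(p_hat, b_hat)]  # direction
        x = [xi - alpha * si for xi, si in zip(x, s)]  # fixed step multiplier alpha
    return x


def quad_grad(x):
    """Gradient of the assumed test function f(x) = 0.5 * (x1**2 + 10 * x2**2)."""
    return [1.0 * x[0], 10.0 * x[1]]


x_first = adam(quad_grad, [1.0, 1.0], alpha=0.01, iters=1)
x_final = adam(quad_grad, [1.0, 1.0], alpha=0.01, iters=2000)
```

After the first iteration each coordinate moves by almost exactly α regardless of the gradient magnitude, which shows that the step length is governed by α alone and is not adapted to the problem.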

To assess the importance of the presence of the parameters pk, bk in the algorithm, we first use only pk and then this together with bk to organize the algorithm with step adaptation.

3. Algorithms with Step Adaptation

Consider gradient methods for minimizing a quadratic function

In the gradient method with a constant optimal step, the step size is determined by the expression

$$h^* = \frac{2}{M + m}, \qquad (7)$$

where M and m are the maximal and minimal eigenvalues of the matrix of second derivatives A. The estimate of the convergence rate of method (1) is the same for both ways of selecting the step, (2) and (7). To determine step (2), the derivative of the function along the descent direction is used: the step is found from the extremum condition for the one-dimensional function, so the derivative at the optimal point must be zero.
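The role of M and m can be illustrated on a diagonal quadratic. The sketch below assumes the classical optimal constant step h = 2/(M + m) for a quadratic whose Hessian eigenvalues lie in [m, M], a standard result that is taken here to be the expression meant above. The test function, the variable names and the starting point are assumptions made for the example.

```python
def gradient_step(a, x, h):
    """One step x - h * grad f(x) for f(x) = 0.5 * sum(a_i * x_i**2)."""
    return [xi - h * ai * xi for ai, xi in zip(a, x)]


m, M = 1.0, 100.0            # assumed smallest and largest Hessian eigenvalues
a = [m, M]
h_opt = 2.0 / (M + m)        # classical optimal constant step
rate = (M - m) / (M + m)     # worst-case contraction factor per iteration

x = [1.0, 1.0]
x_next = gradient_step(a, x, h_opt)
```

With this step both extreme eigen-directions contract by the same factor (M − m)/(M + m) in absolute value, which is why the convergence rate degrades as the ratio M/m grows.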

Denote by y(h) = −(∇f(xk − hsk), sk) the derivative of the function ϕ(h) = f(xk − hsk) with respect to the parameter h. It can be computed in a minimization algorithm from gradient information alone. If the function is quadratic, then y(h) is a linear function of h. In [], the following estimate of the optimal step was obtained

Estimate (8) becomes available only after a new point has been obtained and the gradient at that point has been calculated. To use estimate (8), the following reasoning is required. The gradient method with a constant step converges for a wide range of step sizes, but this range and the optimal step are fixed for a quadratic function, or for strongly convex functions with a Lipschitz gradient []. Therefore, in [], estimate (8) is adopted as an estimate for step (7), towards which the current search step is moved. The simplest way to organize an algorithm of this kind is as a learning procedure.
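As a worked illustration of how a step estimate can be built from gradient information alone, the following derivation uses only the linearity of y(h) for a quadratic function. It is a sketch under that assumption; the symbol $h_k^*$ for the root is introduced here for illustration, and the resulting expression is not claimed to coincide term by term with estimate (8).

$$
y(h) = y(0) + \frac{y(h_k) - y(0)}{h_k}\, h, \qquad y(0) = -(g_k, s_k), \qquad y(h_k) = -(\nabla f(x_k - h_k s_k), s_k),
$$

$$
y(h_k^*) = 0 \;\Longrightarrow\; h_k^* = h_k\, \frac{y(0)}{y(0) - y(h_k)} = h_k\, \frac{(g_k, s_k)}{(g_k, s_k) - (\nabla f(x_k - h_k s_k), s_k)}.
$$

Assuming $(g_k, s_k) > 0$ and $(\nabla f(x_k - h_k s_k), s_k) < (g_k, s_k)$, the ratio is greater than 1 when the new gradient forms an acute angle with $s_k$ (the step was too short) and less than 1 when the angle is obtuse (the step was too long).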

We form a quality functional

The gradient minimization method (9) at a certain step is

In [], several methods of organizing update (10) were used. The most suitable for solving minimization problems under interference conditions was the following type of method:

In (11), possible oscillations in values hk − hk* are excluded.

Process (11) can be organized in another way. For this, we only need information about whether the step hk value is greater or less than step hk*. Initially, the derivative is negative. In the case when the boundary of the zero value of the derivative is not reached and y(hk) < 0, the step should be increased. When the boundary is passed, then y(hk) > 0 and the step should be decreased. In this case, (11) is rewritten as

In [], the following method of step adaptation in Algorithm 1 is used with q = (1 + α) > 1:

where we made the replacement y(hk) = −(∇f(xk − hsk), sk) = −(∇f(xk+1), sk).

In some cases, the following step adjustment may be effective:

The step adaptation algorithm will be used to minimize functions with gradient interference. Therefore, for a random variable sign(y(hk)) with E(sign(y(hk))) = 0, we must have equality hk+m = hk for the same number of positive and negative values of sign(y(hk)).

Update (13) ensures the required equality hk+m = hk, that is, the absence of a trend component, when the numbers of positive and negative values of sign(y(hk)) on a segment of m updates are the same.
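The absence of a trend can be checked with a short experiment. The sketch below assumes the multiplicative rule used in Step 7 of the algorithms that follow (divide by 1 + α on a non-positive inner product, multiply by 1 + α otherwise); the function name `adapt_step`, the sign sequence and the contrasting rule h·(1 ± α) are illustrative assumptions and are not taken from the paper.

```python
def adapt_step(h, gs, alpha=0.01, tol=1e-15):
    """Multiplicative step update driven by the sign of gs = (g_new, s)."""
    if abs(gs) <= tol:
        return h                  # no usable information: keep the step
    if gs <= 0:
        return h / (1 + alpha)    # obtuse angle: the step was too large
    return h * (1 + alpha)        # acute angle: the step was too small


h0 = 1.0
signs = [+1, -1, -1, +1, +1, -1] * 50   # equal numbers of increases and decreases

h = h0
for sgn in signs:
    h = adapt_step(h, float(sgn))

# Hypothetical alternative h * (1 +/- alpha), shown only for contrast.
h_alt = h0
for sgn in signs:
    h_alt *= (1 + 0.01 * sgn)
```

With the multiply/divide rule the step returns to its initial value up to rounding, whereas the contrasting rule shrinks the step by a factor (1 − α²) for every increase/decrease pair, so pure noise would slowly drive it to zero.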

In the case of high interference on the gradient, or of essentially non-quadratic functions, instead of (1), we will use a process with normalization of the descent vector

$$x_{k+1} = x_k - h_k \frac{s_k}{\|s_k\|}. \qquad (14)$$

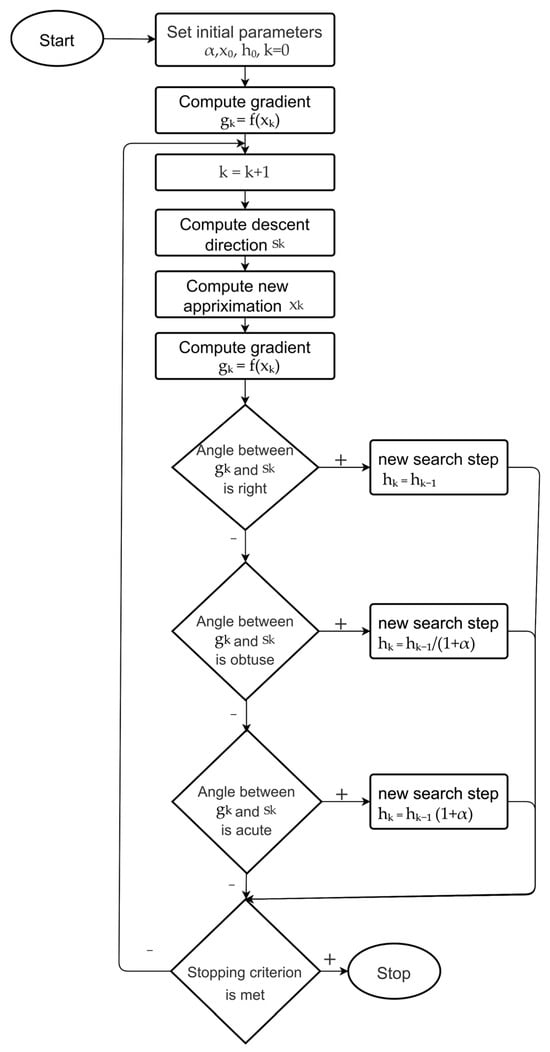

In the following Algorithm 2 (Figure 1), we use the gradient descent along the gradient gk and step adaptation.

Algorithm 2 (H_GR)

1. Set the step adaptation parameter $\alpha > 0$, the initial point $x_0 \in \mathbb{R}^n$ and the initial step $h_0 \in \mathbb{R}^1$. The algorithm uses parameter $\alpha = 0.01$.
2. Set $k = 0$, compute $g_k = \nabla f(x_k)$.
3. Set $k = k + 1$.
4. Compute the descent direction $s_k$.
5. Find a new approximation of the minimum $x_k$.
6. Compute $g_k = \nabla f(x_k)$.
7. Compute a new search step:
   - 7.1. If $|(g_k, s_k)| \le 10^{-15}$, then $h_k = h_{k-1}$, else
   - 7.2. if $(g_k, s_k) \le 0$, then $h_k = h_{k-1} / (1 + \alpha)$, else
   - 7.3. $h_k = h_{k-1} (1 + \alpha)$.
8. If the stopping criterion is met, then stop, else go to step 3.

Figure 1. Flowchart of Algorithm 2.

In Step 7, the step size of the method is adjusted. Condition 7.1 is necessary for cases where the gradient vanishes, due to noise or when gradients are calculated on mini-batches. In Step 7.2, the step is decreased when the angle between sk and gk is obtuse. In Step 7.3, the step is increased when the angle between sk and gk is acute. The small threshold 10⁻¹⁵ in Steps 7.1–7.3 was chosen to exclude step adaptation at zero gradients.
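The loop of Algorithm 2 can be sketched as follows. This is a hedged pure-Python illustration, not the authors' implementation: the direction is assumed to be the previous gradient normalized to unit length, and the names `h_gr` and `quad_grad`, the test function and the parameter values in the usage lines are assumptions.

```python
import math


def h_gr(grad, x0, h0=1.0, alpha=0.01, iters=1000, tol=1e-15):
    """Gradient descent along a normalized gradient with sign-based step adaptation."""
    x = list(x0)
    h = h0
    g = grad(x)
    for _ in range(iters):
        norm = math.sqrt(sum(gi * gi for gi in g))
        if norm == 0.0:
            break                          # exact stationary point
        s = [gi / norm for gi in g]        # assumed: unit-length direction
        x = [xi - h * si for xi, si in zip(x, s)]
        g = grad(x)                        # gradient at the new point
        gs = sum(gi * si for gi, si in zip(g, s))
        if abs(gs) <= tol:
            pass                           # 7.1: keep the step
        elif gs <= 0:
            h /= (1 + alpha)               # 7.2: obtuse angle, shrink
        else:
            h *= (1 + alpha)               # 7.3: acute angle, grow
    return x, h


def quad_grad(x):
    """Gradient of the assumed test function f(x) = 0.5 * (x1**2 + 10 * x2**2)."""
    return [1.0 * x[0], 10.0 * x[1]]


x_one, h_one = h_gr(quad_grad, [1.0, 1.0], h0=0.1, alpha=0.05, iters=1)
x_end, h_end = h_gr(quad_grad, [1.0, 1.0], h0=0.1, alpha=0.05, iters=2000)
```

In the first iteration the step of 0.1 is too short, the new gradient still points along the direction of travel, and the step is enlarged to 0.105. No function values are used anywhere in the loop.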

The algorithm with a normalization of the descent direction is more stable when minimizing functions with interference and enables a wider range of the step adaptation algorithm parameter α in (13). Taking into account the variety of functions other than quadratic, where fluctuations in the gradient values can be significant, we will further use the algorithm (14) with step adaptation (13). Here, α is the adjustable parameter.

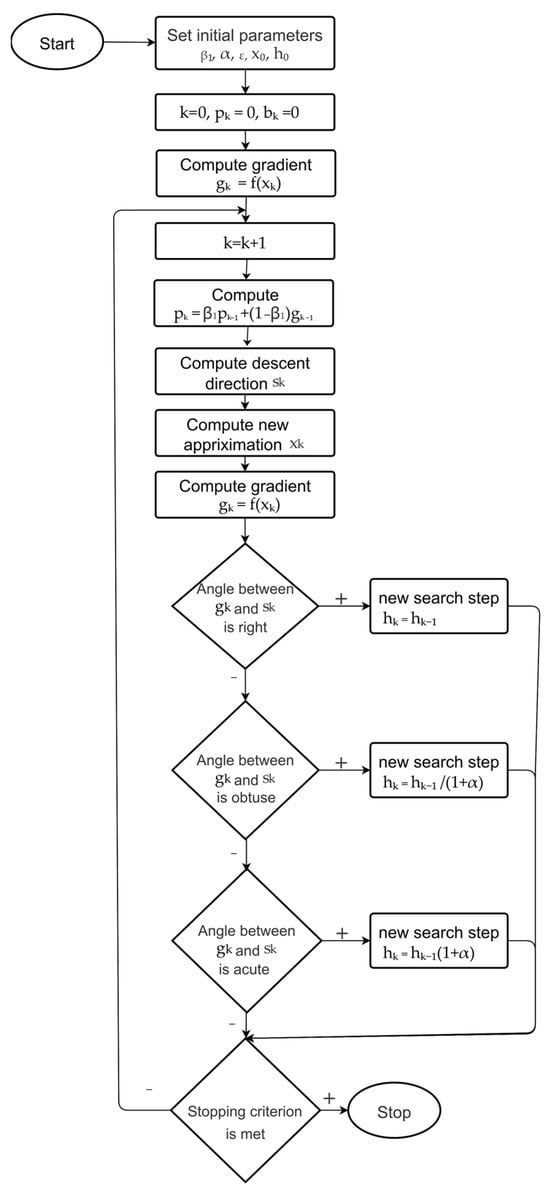

In the following Algorithm 3 (Figure 2), we use descent along the smooth gradient pk and step adaptation to select the step.

Algorithm 3 (H_MOMENTUM)

1. Set the exponential smoothing parameter $\beta_1 \in [0, 1)$, $\varepsilon > 0$, the step adaptation parameter $\alpha > 0$, the initial point $x_0 \in \mathbb{R}^n$ and the initial step $h_0 \in \mathbb{R}^1$. The algorithm uses parameters $\beta_1 = 0.9$, $\varepsilon = 10^{-8}$, $\alpha = 0.01$.
2. Set $k = 0$, $p_k = 0$, $b_k = 0$, where $p_k, b_k \in \mathbb{R}^n$, compute $g_k = \nabla f(x_k)$.
3. Set $k = k + 1$.
4. Compute the smoothed gradient $p_k$.
5. Find a descent direction $s_k$.
6. Find a new approximation of the minimum $x_k$.
7. Compute $g_k = \nabla f(x_k)$.
8. Compute a new search step:
   - 8.1. If $|(g_k, s_k)| \le 10^{-15}$, then $h_k = h_{k-1}$, else
   - 8.2. if $(g_k, s_k) \le 0$, then $h_k = h_{k-1} / (1 + \alpha)$, else
   - 8.3. $h_k = h_{k-1} (1 + \alpha)$.
9. If the stopping criterion is met, then stop, else go to step 3.

Figure 2. Flowchart of Algorithm 3.

In Step 8, the step size of the method is adjusted. Using this algorithm, we will verify the efficiency of space metric transformation for the Adam algorithm.

In the Adam algorithm with step adaptation (Algorithm 4), we use components pk and bk together to form the descent direction. We called this algorithm Adam with step adaptation, although, to be completely precise, it is Adagrad with elements of momentum and step adaptation.

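Algorithm 4 (H_ADAM)

1. Set $\beta_1, \beta_2 \in [0, 1)$, $\varepsilon > 0$, the step adaptation parameter $\alpha > 0$, the initial point $x_0 \in \mathbb{R}^n$ and the initial step $h_0 \in \mathbb{R}^1$. The algorithm uses parameters $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\varepsilon = 10^{-8}$, $\alpha = 0.01$.
2. Set $k = 0$, $p_k = 0$, $b_k = 0$, where $p_k, b_k \in \mathbb{R}^n$. Compute $g_k = \nabla f(x_k)$.
3. Set $k = k + 1$.
4. Update the exponential moving average of the gradient, $p_k$.
5. Update the exponential moving average of the squared gradient components, $b_k$.
6. Get the normalized descent direction $s_k$ from $p_k$ and $b_k$.
7. Find a new approximation of the minimum $x_k$.
8. Compute $g_k = \nabla f(x_k)$.
9. Compute a new search step:
   - 9.1. If $|(g_k, s_k)| \le 10^{-15}$, then $h_k = h_{k-1}$, else
   - 9.2. if $(g_k, s_k) \le 0$, then $h_k = h_{k-1} / (1 + \alpha)$, else
   - 9.3. $h_k = h_{k-1} (1 + \alpha)$.
10. If the stopping criterion is met, then stop, else go to step 3.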

The required moving averages are calculated in Steps 4 and 5. The descent direction is calculated based on the components pk, bk and normalized in Step 6. The meaning of the adaptation step in Step 9 is the same as for Algorithm 2.
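A compact sketch of this scheme is given below. It is an illustration under explicit assumptions rather than the authors' code: the moving averages are taken in the usual Adam form without bias correction, the direction is p/(√b + ε) normalized to unit length, and the names `h_adam` and `quad_grad` and the test problem are hypothetical.

```python
import math


def h_adam(grad, x0, h0=1.0, alpha=0.01, beta1=0.9, beta2=0.999,
           eps=1e-8, iters=1000, tol=1e-15):
    """Adam-style scaled direction combined with sign-based step adaptation."""
    x = list(x0)
    p = [0.0] * len(x)   # smoothed gradient
    b = [0.0] * len(x)   # smoothed squared gradient components
    h = h0
    g = grad(x)
    for _ in range(iters):
        p = [beta1 * pi + (1 - beta1) * gi for pi, gi in zip(p, g)]
        b = [beta2 * bi + (1 - beta2) * gi * gi for bi, gi in zip(b, g)]
        d = [pi / (math.sqrt(bi) + eps) for pi, bi in zip(p, b)]  # assumed scaling
        norm = math.sqrt(sum(di * di for di in d))
        if norm == 0.0:
            break
        s = [di / norm for di in d]                # assumed normalization
        x = [xi - h * si for xi, si in zip(x, s)]
        g = grad(x)
        gs = sum(gi * si for gi, si in zip(g, s))
        if abs(gs) > tol:                          # otherwise keep the step
            h = h / (1 + alpha) if gs <= 0 else h * (1 + alpha)
    return x, h


def quad_grad(x):
    """Gradient of the assumed test function f(x) = 0.5 * (x1**2 + 10 * x2**2)."""
    return [1.0 * x[0], 10.0 * x[1]]


x_one, h_one = h_adam(quad_grad, [1.0, 1.0], h0=0.1, iters=1)
x_end, h_end = h_adam(quad_grad, [1.0, 1.0], h0=0.1, iters=500)
```

In the first iteration the diagonal scaling equalizes the two coordinates even though their gradients differ by a factor of ten, and the step grows from 0.1 to 0.101 because the new gradient forms an acute angle with the direction.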

By varying the exponential smoothing parameters β1, β2 ∈ [0, 1), we can study the effect of smoothing the gradient and the squared gradient on the efficiency of the minimization. The computational experiment showed that the parameter values β1 = 0.9 and β2 = 0.999 were the best for complex situations, both with large noise and with small mini-batches for calculating the gradient.

4. Numerical Results and Discussion

The goal of the numerical experiment is to experimentally study the ability of methods to maintain operability under interference conditions. The use of multi-step gradient methods is justified primarily on ill-conditioned functions that are difficult to minimize using the gradient method. Test functions are selected based on this position. Some functions differ significantly from quadratic ones and contain curvilinear ravines, which reflects the situation of rapidly changing eigenvectors of the matrix of second derivatives.

The local convergence rate of the gradient method in a certain neighborhood of the current minimum is largely determined by how effective it is in minimizing ill-conditioned quadratic functions. Therefore, testing was primarily carried out on quadratic functions, where it is easy to control the degree of conditionality of the problem.

Testing is performed on smooth test functions. The minimum of test functions is uniquely defined. Test functions include functions with curvilinear ravines and functions that differ significantly in properties from quadratic ones. The well-known Adam algorithm and algorithms with step adaptation were chosen as the algorithms under study: H_GR—gradient method with step adaptation, H_MOMENTUM—momentum method with step adaptation and H_ADAM—Adam algorithm with step adaptation. The Delphi 7 software environment was used to implement the algorithms.

For test functions, we use the notation f(x,[parameters]). In calculations, we use the gradient of the function with noise , where V is a random vector uniformly distributed in the unit sphere and the value of r varies. A higher value of parameter r means higher noise.
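A possible way to generate such noise is sketched below. Two points are assumptions and are labeled as such in the code: "in the unit sphere" is read as the solid unit ball, and the noise is taken to enter additively as ∇f(x) + rV. The function names are hypothetical.

```python
import math
import random


def uniform_in_unit_ball(n, rng):
    """Sample a point uniformly from the solid unit ball in R^n (assumed reading)."""
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(zi * zi for zi in z))
    radius = rng.random() ** (1.0 / n)   # radial law giving a uniform volume density
    return [radius * zi / norm for zi in z]


def noisy_gradient(g, r, rng):
    """Assumed additive noise model: g + r * V, with V uniform in the unit ball."""
    v = uniform_in_unit_ball(len(g), rng)
    return [gi + r * vi for gi, vi in zip(g, v)]


rng = random.Random(0)                   # fixed seed for reproducibility
g_clean = [1.0, 10.0]
g_noisy = noisy_gradient(g_clean, r=0.5, rng=rng)
```

Under this model the perturbation never exceeds r in Euclidean norm, so r directly bounds the absolute gradient error.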

All the methods under study use only gradient values. The function values are calculated for the purpose of assessing the method’s progress in function minimizing and as a stopping criterion. The stopping criterion is

The initial point x0 and the ε value are given in the description of the corresponding function. To solve the problem, for each function, the algorithm has a given number of iterations, n_max, if the stopping criterion is not met.

The result tables for each function indicate the number of algorithm iterations required to achieve the specified accuracy. If the algorithm does not achieve the specified accuracy in the allotted number of iterations n_max, then only the achieved function value is indicated underlined.

4.1. Preliminary Analysis of Algorithm Parameters

When solving an optimization problem, it is important to evaluate the ability of the algorithm to maintain its inherent convergence rate on functions with a given degree of problem conditionality. For these purposes, we will conduct a preliminary analysis of the method’s convergence rate under deep optimization conditions in order to analyze its descent trajectory. We carry this out by setting a stopping criterion corresponding to deep optimization and a significant number of iterations for monitoring the optimization process, which is set as one of the stopping criteria.

In [], a quadratic growth of the number of iterations is shown depending on the ratio of the noise value to the gradient norm. In order to be sure that the algorithm actually converges not by chance and to verify the method convergence under noise conditions, we also need to set a deep optimization criterion and perform the required number of iterations, which can be very large. Such statistics will allow us to obtain an idea of the non-random nature of the algorithm convergence. Thus, with stopping criteria that ensure deep optimization, we obtain information on the algorithm behavior over a significant segment of the function decrease.

4.1.1. Convergence Rate Analysis

We conduct an experiment on the following function:

The eigenvalues ai of this function lie between λmin = 1 and λmax = amax. Increasing amax increases the condition number of the problem. The initial point is x0 = (100, 100, …, 100), with n = 100 and n_max = 100,000. The parameters are β1 = 0.9, β2 = 0.999 and α = 0.01. Table 2 shows the results of minimization for different values of the stopping criterion.

Table 2. Number of iterations for the fQ(x, [amax]) function minimization depending on amax.

The H_GR algorithm most accurately reflects the dependence of the method on the degree of the problem conditioning. With a certain selection of the gradient smoothing parameter β1, the other methods will also show an increasing number of iterations as the conditionality worsens. Such a selection should be carried out for the class of applied problems.

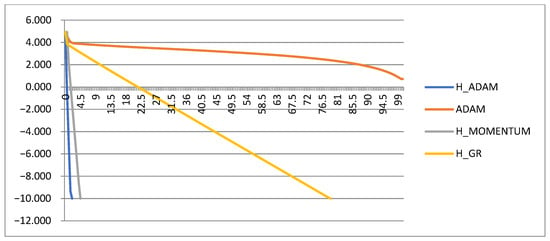

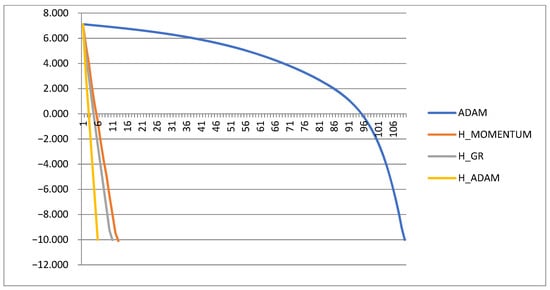

For the function fQ(x, [amax = 10⁴]) from Table 2, the trajectory of the function decrease for all the studied methods on a logarithmic scale is shown in Figure 3.

Figure 3. Trajectories of the decrease of the function fQ(x, [amax = 10⁴]). The number of iterations (X axis) is given in thousands.

All step-adaptive methods converge linearly on each section of the trajectory. Since we did not obtain complete information about the convergence of the ADAM algorithm with the number of iterations of 100,000, we will conduct an experiment with a doubled number of iterations (Figure 4).

Figure 4. Trajectory of the decrease of the function fQ(x, [amax = 10⁴]). The number of iterations (X axis) is given in thousands.

For the ADAM method, the trajectory has only a very short segment with a high convergence rate. From Figure 3 and Figure 4, we can draw the following conclusions. For step-adaptive methods, the convergence rate is constant along the descent trajectory. For the ADAM method, three situations are possible. If the step is small, the convergence rate is low, which we observe in the first segment of the trajectory. If the step is optimal, the rate is high. If the step is large, oscillations occur in the neighborhood of the minimum. These findings show that the step size for the ADAM method must be chosen carefully, because the range in which the method is highly efficient is narrow. The graphs can be used to track when a required level of the optimization criterion, for example 10⁻⁴, is reached.

Our goal is to identify defects in the convergence rate of methods. Comparing the results for the H_ADAM and ADAM methods in Table 2, we can note a sharp deterioration in their convergence rate with increasing optimization accuracy. It is especially important to set overestimated optimization criteria when minimizing functions with noise. In [], a quadratic increase in the number of iterations is shown depending on the ratio of the noise value to the gradient norm. For example, with a tenfold noise increase, the cost of the number of iterations increases by 100 times. In order to verify the convergence of the method under noise conditions, we also need to set a deep optimization criterion and perform a large number of iterations. Such statistics will allow us to obtain an idea of the non-random nature of the algorithm convergence. Therefore, in the tests below, the stopping criteria will be set to a high degree of accuracy with large stopping values for the number of iterations.

4.1.2. Analysis of Step Adaptation Parameter

For the function fQ, we will conduct a sensitivity analysis for the step adaptation parameter α; the results are presented in Table 3.

Table 3. Number of iterations for the fQ(x, [amax]) function minimization depending on α.

The value of the parameter α determines the rate of the step change. For complex problems, it should be decreased (α = 0.001); on the contrary, for simple problems, it should be increased (α = 0.1). This thesis is confirmed by the results of Table 3. For a more complex problem, α = 0.01 is better. For a simpler problem, α = 0.1 is more suitable.

4.1.3. Analysis of Exponential Smoothing Parameters

For the function fQ, we will conduct a sensitivity analysis for the parameter β2, which is responsible for smoothing squares of gradient components. The parameters α = 0.01, n_max = 200,000 were set. The results are presented in Table 4.

Table 4. Number of iterations for the fQ(x, [amax = 10]) function minimization depending on the parameter β2.

The analysis shows that, here, the best value for ADAM is β2 = 0.999, and for H_ADAM, it is β2 = 0.9. In general, it is recommended in the original ADAM to set β2 = 0.999. Therefore, all experiments with the ADAM and H_ADAM algorithms are carried out with this value. In the example of this problem, there are no big differences in the behavior of the algorithms with β2 = 0.999 and β2 = 0.9. Nevertheless, this parameter can be selected for a set of similar problems.

There is also an optimum for the β1 parameter, but the dependence of the parameter β1 on the degree of degeneracy of the function follows from a comparison of the gradient method (β1 = 0) and other methods. It is obvious that this parameter should be reduced as the conditionality of the function decreases.

4.2. Test Functions

4.2.1. Rosenbrock Function

The Rosenbrock function has the following form:

The initial point is x0 = (−1.2, 1) and the minimum point is x* = (1, 1), ε = 10−10, n_max = 1,000,000. Note that the Rosenbrock function is not convex. Table 5 shows the results of minimization.
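For reference, the classical two-dimensional Rosenbrock function can be coded as follows. The sketch assumes the standard form f(x) = 100(x₂ − x₁²)² + (1 − x₁)², which matches the starting point (−1.2, 1) and the minimum point (1, 1) given above; the function names are hypothetical.

```python
def rosenbrock(x):
    """Classical 2-D Rosenbrock function (assumed standard form)."""
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosenbrock_grad(x):
    """Analytic gradient of the classical 2-D Rosenbrock function."""
    d1 = -400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0])
    d2 = 200.0 * (x[1] - x[0] ** 2)
    return [d1, d2]


x0 = [-1.2, 1.0]         # starting point from the text
x_star = [1.0, 1.0]      # minimum point from the text
f0 = rosenbrock(x0)
g0 = rosenbrock_grad(x0)
```

At the starting point the function value is about 24.2 and the gradient is about (−215.6, −88), while both the function and its gradient vanish at (1, 1). Only `rosenbrock_grad`, possibly with added noise, would be passed to the gradient-only methods studied here.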

Table 5. Number of iterations for the Rosenbrock function minimization with gradient interference.

Here, we can see that the efficiency of the algorithms increases in the following order: ADAM, H_GR, H_MOMENTUM and H_ADAM. The most stable results are obtained by the H_ADAM algorithm. The ADAM algorithm is unstable under large interference and stops minimizing at a certain point. Attempts to improve the situation by using an additional multiplier in the step adjustment did not succeed either.

4.2.2. Quadratic Functions

To study the effect of problem conditioning, the following quadratic function was used:

The eigenvalues ai of this function lie between λmin = 1 and λmax = amax. Increasing amax increases the condition number of the problem. The initial point is x0 = (100, 100, …, 100), ε = 10⁻¹⁰, n_max = 3,000,000. Table 6, Table 7 and Table 8 show the results of minimization.

Table 6. Number of iterations for the function fQ(x, [amax = 100]) minimization with gradient interference.

Table 7. Number of iterations for the function fQ(x, [amax = 1000]) minimization with gradient interference.

Table 8. Number of iterations for the function fQ(x, [amax = 10,000]) minimization with gradient interference.

For amax = 100 (Table 6), the efficiency of the algorithms increases in the following order: ADAM, H_MOMENTUM, H_ADAM, H_GR. With low conditionality of the problem, the gradient method with step adaptation H_GR is unrivaled. In this case, gradient smoothing is counterproductive. The ADAM algorithm is practically inoperative.

For amax = 1000 (Table 7), the efficiency of the algorithms increases in the following order: ADAM, H_GR, H_ADAM and H_MOMENTUM. A slight deterioration in conditionality equalizes the convergence rates of the gradient method and the methods using a smoothed gradient. The metric transformation does not play a significant role here.

For amax = 10,000 (Table 8), the efficiency of the algorithms increases in the following order: ADAM, H_GR, H_ADAM and H_MOMENTUM. The metric transformation does not play a significant role here. The H_GR step adaptation method also copes with the task successfully.

The next quadratic function is

The eigenvalues of matrix A have the boundaries λmin = 1 and λmax = amax. Matrix B is formed so that it has an eigenvector z with a unit eigenvalue. The purpose of using this function is to create a ravine in the direction of a given vector z and to move away from the system of eigenvectors directed along the coordinate axes. The initial point is x0 = (100, 100, …, 100), ε = 10−10, n_max = 3,000,000. Table 9 shows the results of minimization.

Table 9. Number of iterations for the function fQZ(x, [amax = 10,000]) minimization with gradient interference.

Here, we can see that the efficiency of the algorithms increases in the following order: ADAM, H_GR, H_MOMENTUM and H_ADAM. The effect of the metric transformation is visible here at low noise levels. The H_GR step adaptation method also copes with the task successfully.

4.2.3. Functions with Ellipsoidal Ravine

To study the effect of problem dimension, the following function was used:

The initial point x0 has components x0,1 = −1 and x0,i = i, i = 2, 3, …, n, with ε = 10⁻⁴ and n_max = 3,100,000. The dimension of the problem grows with n. Table 10 shows the results of minimization.

Table 10. Number of iterations for the function fEEL(x, [amax = 100, bmax = 1000]) minimization with gradient interference.

Here, we can see that the efficiency of the algorithms increases in the following order: H_GR, ADAM, H_MOMENTUM and H_ADAM. In this problem, the minimum point is degenerate. Therefore, the gradient method moves into the extremum region and loops. The ADAM algorithm converges slowly, and with large noise, it tends to start looping. The H_ADAM algorithm copes with all tasks. This example shows the advantages of the H_ADAM algorithm over the H_MOMENTUM algorithm, obtained through the use of diagonal metric matrices.

The following function has a multidimensional ellipsoidal ravine. Minimization occurs when moving along a curvilinear ravine to a minimum point.

The initial point x0 has components x1 = −1 and xi = i, i = 2, 3, …, n; ε = 10−10, n_max = 41,000,000. Due to the additional quadratic term in fEX, the minimum point ceases to be degenerate. This allows the gradient method to find the minimum of the function with higher accuracy compared to the function fEEL. Table 11 shows the results of minimization.

Table 11.

Number of iterations for the function fEx(x, [amax = 100, bmax = 1000]) minimization with gradient interference.

Here, we can see that the efficiency of the algorithms increases in the following order: ADAM, H_GR, H_MOMENTUM and H_ADAM. In this non-convex problem, the minimum point is non-degenerate, but the eigenvalues of the matrix of second derivatives have a large spread in the minimum region. Therefore, the gradient method converges slowly. The ADAM algorithm does not work in the presence of interference. The H_MOMENTUM algorithm works here. The H_ADAM algorithm copes with all problems. This example demonstrates the advantages of the H_ADAM algorithm compared to the H_MOMENTUM algorithm, obtained by using diagonal metric matrices, which are especially noticeable under small interference.

4.2.4. Non-Quadratic Function

The following function was used to analyze the effect of interference on the gradient components:

The initial point is x0 = (1, 1, …, 1), ε = 10−10, n_max = 1,000,000. Table 12 shows the results of minimization.

Table 12.

Number of iterations for the function fQ^2(x, [amax = 10,000]) minimization with gradient interference.

Here, we can see that the efficiency of the algorithms increases in the following order: ADAM, H_GR, H_MOMENTUM and H_ADAM. The best results are obtained by the H_ADAM algorithm, which copes with all tasks. The ADAM, H_GR and H_MOMENTUM algorithms do not cope under significant interference.

In all tests, the H_ADAM and H_MOMENTUM algorithms are almost equivalent, except for the last three cases, where the H_MOMENTUM method is somewhat worse than H_ADAM. Also, in all tests, the ADAM algorithm tends to start looping and shows worse results.

4.3. Application Problem (Regression)

We use the problem of estimating the parameters of a linear model as an application problem. When estimating the parameters of a neural network, there may be a significant number of factors that cause the loss of convergence of the learning method. For example, one of them is that the parameters of a neuron fall into the zone where the function ceases to be sensitive to changes in the parameters of this neuron. Special methods are needed to eliminate such shortcomings. The main goal of the optimization (learning) method is to effectively overcome the issues of ill-conditioning in the minimization problem. In this regard, the problem of estimating the linear model parameters enables us to simulate the conditionality of the problem and evaluate the behavior of the learning algorithm depending on the degree of its conditionality. At the same time, the presence of a single minimum point enables us to evaluate the speed qualities of the method.

Denote by x* ∈ Rn the vector of model parameters that we have to find based on observations of the values of a linear model with these parameters at a number of given points. Set xi* = 1, i = 1, …, n − 1, xn* = 0, matrix m ∈ RN×n, matrix rows mi ∈ Rn and model values at observation points y ∈ RN

where q ∈ [−ai, ai] is a random uniformly distributed number, . Scaling the data using the coefficients in (23) determines the degree of conditionality of the problem. The task is to find unknown parameters x* ∈ Rn given m, y. The solution can be obtained by minimizing the function

We will search for a solution using stochastic gradient methods, based on the gradients of a function formed on a randomly selected segment of data.

where Nc is a random number on a segment Nc ∈ [0, N − 1] and k(i) = i mod N is the remainder of division. The first stopping criterion is f(xk) − f* ≤ ε = 10−10. For these purposes, we will compute the function (24) at each step of the algorithm. The second stopping criterion is the maximum number of method iterations n_max. If the method does not cope with the task within the allotted number of iterations, then we indicate the achieved value of the objective function in the table cell. Table 13, Table 14, Table 15 and Table 16 show the results of minimization.
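The batch selection described above can be sketched as follows. This is a minimal illustration in plain Python, not the authors' implementation. It assumes that a batch consists of Nb consecutive rows starting at the random index Nc, wrapped with k(i) = i mod N, and that the batch function is an unnormalized sum of squared residuals. The function names `batch_indices`, `batch_loss_and_grad` and `sample_batch` are hypothetical.

```python
import random


def batch_indices(n_c, n_b, n_rows):
    """Rows n_c, n_c + 1, ..., n_c + n_b - 1, wrapped with k(i) = i mod N."""
    return [i % n_rows for i in range(n_c, n_c + n_b)]


def batch_loss_and_grad(x, rows, y, idx):
    """Assumed least-squares loss on the selected rows, and its gradient."""
    loss = 0.0
    grad = [0.0] * len(x)
    for k in idx:
        # Residual of the linear model on observation k.
        residual = sum(m * xi for m, xi in zip(rows[k], x)) - y[k]
        loss += residual * residual
        for j, m in enumerate(rows[k]):
            grad[j] += 2.0 * residual * m
    return loss, grad


def sample_batch(n_b, n_rows, rng):
    """Draw N_c uniformly from {0, ..., N - 1} and build the batch indices."""
    n_c = rng.randrange(n_rows)
    return batch_indices(n_c, n_b, n_rows)


if __name__ == "__main__":
    rows = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
    y = [1.0, 0.0, 1.0]  # consistent with x* = (1, 0)
    idx = sample_batch(2, len(rows), random.Random(0))
    print(idx, batch_loss_and_grad([0.0, 0.0], rows, y, idx))
```

With this construction, a batch that starts near the end of the data wraps around to the first rows, so every starting index Nc yields exactly Nb observations.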

Table 13.

Linear regression without interference for the function fr(x, [amax = 10]) minimization with gradient information frb(x, Nc, Nb, [amax = 10]), n = 100, N = 1000, n_max = 250,000.

Table 14.

Linear regression without interference for the function fr(x, [amax = 100]) minimization with gradient information frb(x, Nc, Nb, [amax = 100]), n = 100, N = 1000, n_max = 250,000.

Table 15.

Linear regression without interference for the function fr(x,[amax = 10]) minimization with gradient information frb(x, Nc, Nb, [amax = 10]), n = 100, N = 1000, c = 1, n_max = 100,000.

Table 16.

Linear regression without interference for the function fr(x, [amax = 10]) minimization with gradient information frb(x, Nc, Nb, [amax = 10]), n = 100, N = 1000, c = 10, n_max = 100,000.

At a low degree of degeneracy (amax = 10), the gradient method turns out to be effective, and the H_ADAM and H_MOMENTUM methods turn out to be the best. The ADAM method is unstable, even at a low degree of degeneracy.

At amax = 100, only the H_ADAM method retains the ability to train the model with small portions of data for calculating the gradient (Nb = 1, 10). However, the ADAM method turns out to be preferable to the H_GR and H_MOMENTUM.

Set the values of the optimal model parameters xi* = qi ∈ [−c, c], i = 1, …, n − 1, xn* = 0, where q is a random uniformly distributed number.

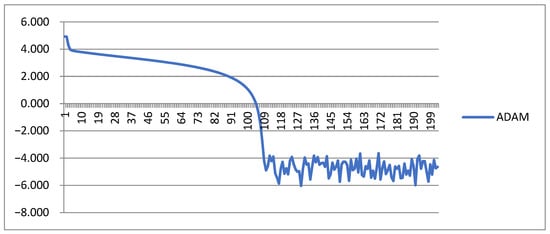

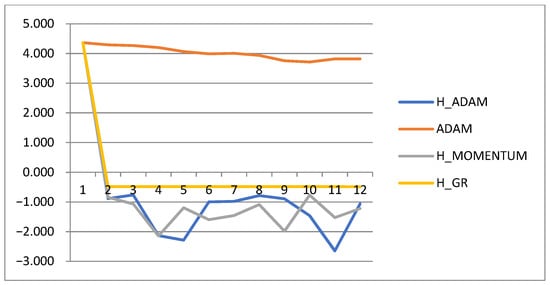

For the function fr(x,[amax = 10]), the trajectory of the function decrease for all the studied methods on a logarithmic scale is shown in Figure 5 at Nb = 100.

Figure 5.

Trajectories of the function fr (x, [amax= 10]) decrease. The results of the function observation are given every 100 iterations.

Figure 5 demonstrates the linear nature of the convergence rate of the methods with step adaptation. The ADAM algorithm in this case has a low convergence rate at the initial stage because of its small step. Because the zone in which a given step is optimal is limited, a single step value cannot be optimal for the entire descent trajectory. This problem is solved in the H_ADAM algorithm.

Next, we consider the problem of regression with interference. We add noise to the data

where ξ is a random uniformly distributed number. We will denote such a function as fξ,r(x, [amax]).

Due to the limited batch used to calculate the gradient, we will have many minimum points for this batch. Due to this, in the batch mode, there will be oscillations in the neighborhood of the function fξ,r minimum. We need to choose the boundary of the minimum approximation, the achievement of which we will consider as the solution of the problem. Based on the calculated minima of the functions fξ,rb(x, Nc, Nb, [amax]) for different values of the Nb parameter, the largest achievable boundary was chosen among them. As before, the stopping criteria for the function and for the number of gradient calculations were used. Table 17 and Table 18 show the results of minimization.

Table 17.

Linear regression with interference for the function fξ,r(x, [amax = 10]) minimization with gradient information fξ,rb(x, Nc, Nb, [amax = 10]), n = 100, N = 1000, n_max = 250,000.

Table 18.

Linear regression with interference for the function fξ,r(x,[amax = 100]) minimization with gradient information fξ,rb(x, Nc, Nb, [amax = 100]), n = 100, N = 1000, n_max = 250,000.

In Table 17, we can see that the efficiency of the algorithms increases in the following order: ADAM, H_MOMENTUM, H_GR and H_ADAM. Preference is given to the H_ADAM algorithm only because it solved all the problems. At a low degree of degeneracy (amax = 10), the gradient method H_GR turns out to be more effective than the ADAM algorithm. When the H_GR method converges, its results are better than those of the other methods. The ADAM method is not operational with small portions of data, even at a low degree of degeneracy. The H_ADAM method works stably.

In Table 18, we can see that the efficiency of the algorithms increases in the following order: H_MOMENTUM, H_GR, ADAM and H_ADAM. At a higher degree of degeneracy (amax = 100), the H_ADAM method has better results than the other methods and retains the ability to train the model with small portions of data for calculating the gradient (Nb = 1, 10). The ADAM method is preferable to the H_GR and H_MOMENTUM methods.

In general, the imposition of interference did not significantly affect the convergence and convergence rate of the methods. At the same time, the noise did not affect the efficiency of the H_ADAM method.

Methods for solving optimization problems with interference under drift conditions are of great importance in robotics and artificial intelligence problems. For these purposes, methods are needed that implement the process of searching and tracking the position of the extremum. In this regard, suitable methods are algorithms with step adaptation, in which the step can take sizes corresponding to the drift speed of the extremum and there is no need to use one-dimensional search. Obviously, methods that allow accelerating gradient descent can also be implemented with step adaptation and are applicable to solving such problems.

Consider a function in which the position of the extremum changes along a given trajectory x*(m):

Define the trajectory of the extremum point x*(m):

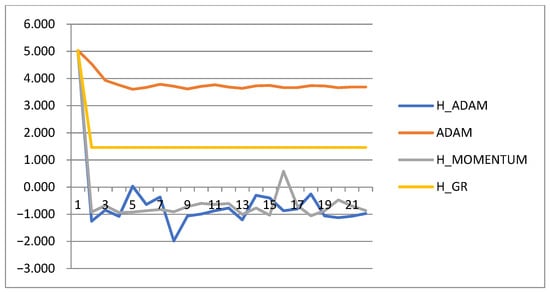

When testing methods, m is equal to the number of calls to the gradient of the function. Relation (28) shows the optimum point moving along a circle in the plane of the first and last components of the vector x while the other components of the optimum vector are zero. The starting point is x0 = (10, 10, …, 10). At amax = 10, the dynamics of changes in the values of the function (27) during minimization by the studied methods in a logarithmic scale are presented in Figure 6. It is obvious that the ADAM method with a small step is not suitable for solving the problem of chasing a minimum point. Step adaptation methods solve this problem.
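The tracking setup can be illustrated with a small sketch. It is not the authors' function (27), trajectory (28) or H_GR update. It assumes a scaled quadratic centered at a point that moves along a circle in the plane of the first and last components, and a simple sign-based step rule in the spirit of the scheme described in the abstract: the step grows when the previous direction and the new gradient form an acute angle and shrinks otherwise. The parameters `radius`, `omega`, `grow` and `shrink`, the linear scaling of the coefficients, and all function names are hypothetical.

```python
import math


def optimum(m, n, radius=1.0, omega=1e-3):
    """Hypothetical circular trajectory x*(m): only the first and last components move."""
    x_star = [0.0] * n
    x_star[0] = radius * math.cos(omega * m)
    x_star[-1] = radius * math.sin(omega * m)
    return x_star


def gradient(x, m, scales):
    """Gradient of the assumed function sum_i a_i * (x_i - x*_i(m))**2."""
    x_star = optimum(m, len(x))
    return [2.0 * a * (xi - si) for a, xi, si in zip(scales, x, x_star)]


def adapt_step(h, s, g_new, grow=1.25, shrink=0.5):
    """Grow the step if (s, g_new) > 0 (acute angle), otherwise shrink it."""
    dot = sum(si * gi for si, gi in zip(s, g_new))
    return h * grow if dot > 0.0 else h * shrink


def track(n=4, a_max=10.0, iterations=2000, h=0.1):
    """Follow the moving optimum; return the final distance to x*(m)."""
    scales = [1.0 + (a_max - 1.0) * i / (n - 1) for i in range(n)]
    x = [10.0] * n
    m = 0  # number of gradient calls made so far; it also drives the drift
    g = gradient(x, m, scales)
    for _ in range(iterations):
        norm = math.sqrt(sum(gi * gi for gi in g))
        if norm == 0.0:
            norm = 1.0  # exactly at the optimum: s is the zero vector, x stays put
        s = [gi / norm for gi in g]
        x = [xi - h * si for xi, si in zip(x, s)]
        m += 1
        g = gradient(x, m, scales)
        h = adapt_step(h, s, g)
    x_star = optimum(m, n)
    return math.sqrt(sum((xi - si) ** 2 for xi, si in zip(x, x_star)))


if __name__ == "__main__":
    print("final distance to the moving optimum:", track())
```

Because the step length is adjusted at every iteration from the sign of a single scalar product, no one-dimensional search is needed, and the step can settle at a size comparable to the drift of the optimum per iteration.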

Figure 6.

Trajectories of the function (27) decrease at amax = 10. The results of the function observation are given every 1000 iterations.

At amax = 100, the dynamics of changes in the values of the function (27) during minimization by the studied methods are presented in Figure 7. Here, the H_ADAM and H_MOMENTUM methods outperform the gradient-based H_GR method.

Figure 7.

Trajectories of the function (27) decrease at amax = 100. The results of the function observation are given every 5000 iterations.

Thus, increasing the degree of the problem conditionality leads to a decrease in the accuracy of tracking the minimum. This follows from a comparison of the achieved values of the function shown in Figure 6 and Figure 7. The solution to the minimization problem under conditions of extremum drift can be found by methods with step adaptation.

Let us consider the overall efficiency of the studied methods in minimizing the test functions. Here, the ADAM method is inferior to all the other algorithms. Adjusting the step in the ADAM method brings it to the top position in efficiency. It should also be noted that the gradient method and MOMENTUM with step adaptation are also relevant when solving problems with noise. On quadratic functions (Table 8 and Table 9), the H_ADAM and H_MOMENTUM algorithms are almost equivalent. On functions with curvilinear ravines (Table 5, Table 10 and Table 11), the efficiency of the H_ADAM algorithm is higher.

On the regression problem, the ADAM method turns out to be more effective than the H_GR and H_MOMENTUM methods when the conditionality of the problem increases. The distribution of algorithms by efficiency in Table 14 and Table 18 corresponds to a higher degree of problem conditionality, where the ADAM algorithm outperforms the H_GR and H_MOMENTUM methods. However, the ADAM algorithm has difficulty with problems in which only a small amount of data is used to compute the gradient.

5. Conclusions

We have developed a number of new gradient-type algorithms with step adaptation, intended for the minimization of functions with gradient interference; these are the gradient method with step adaptation (H_GR), momentum method with step adaptation (H_MOMENTUM) and Adam algorithm with step adaptation (H_ADAM). Such algorithms are used for training neural networks, where a gradient on a limited number of randomly selected data is used to perform individual steps of the minimization algorithm.

One of the challenges for effective minimization is the conditionality of the problem. In order to study the effect of the conditionality on gradient-type methods, we have developed several functions with conditionality characteristics that do not depend on the problem dimension. Since the number of gradient method iterations depends to a greater extent on the degree of problem conditionality, we can conduct research on problems of low dimension, which enables us to reduce the computation time and thereby expand the range of issues under study.

To analyze the stability of the algorithm, we selected optimization criteria to observe the algorithm’s operation over a long period of increasing the accuracy of function minimization. We investigated the well-known Adam algorithm and the proposed algorithms with step adaptation. All the used test problems were intended to study the convergence rate of the algorithms under the conditions of a single local minimum with a given degree of conditionality. It can be concluded that the step adaptation proposed in the work can be useful in combination with the gradient method, momentum and Adam algorithms. At the same time, it turned out that the structure for obtaining the descent direction in the Adam algorithm was the most effective for organizing an algorithm with step adaptation. In future research, we plan to expand the list of algorithms to study the possibility of proposed step adaptation and to extend the algorithms to a wider range of applied problems.

Author Contributions

Conceptualization, V.K.; methodology, V.K. and E.T.; software, V.K.; validation, L.K. and E.T.; formal analysis, L.K.; investigation, V.K.; resources, V.K. and L.K.; data curation, E.T.; writing—original draft preparation, V.K.; writing—review and editing, E.T. and L.K.; visualization, V.K. and E.T.; supervision, L.K.; project administration, L.K.; funding acquisition, L.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Ministry of Science and Higher Education of the Russian Federation, project no. FEFE-2023-0004.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Frequently used designations.

| Designation | Meaning |
|---|---|
| gk, ∇f | Gradient of a function |
| hk | Minimization step |
| hk* | Optimal value of minimization step |
| pk | Exponentially smoothed gradients |
| bk | Exponentially smoothed squares of gradients |
| sk | Descent direction |
| xk | Approximation of minimum |
| E | Mathematical expectation |
| β1, β2 | Exponential decay rates for the moment estimates |
| α | Stepsize parameter |
| ε | Small value |
| (a,b) | Scalar product for vectors a and b |
| a·b = a ʘ b | Hadamard product for vectors a and b |
| ‖ ‖ | Vector norm |
| r | Noise level |
| n_max | Maximum number of iterations |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).