1. Introduction

In the field of computational intelligence, many swarm intelligence and evolutionary optimization algorithms have been proposed, such as genetic algorithms (GA) [1], particle swarm optimization (PSO) [2], the Snow ablation optimizer [3], the Starfish optimization algorithm (SFOA) [4], and others. These methods are increasingly used to solve complex problems that traditional optimization algorithms struggle with. Among them, PSO has attracted widespread interest in fields such as image encryption [5], multi-AGV path planning [6], UWB base station deployment optimization [7], satellite navigation [8,9], and resource allocation [10] because of its simplicity, small number of parameters, fast convergence, and strong optimization ability.

In the original PSO algorithm, each particle is attracted toward its personal historical best position (pbest) and the global best position (gbest) of the population, which can lead to a loss of diversity and premature convergence. As a result, its performance tends to degrade on optimization problems that have many local optima or are non-separable [11]. To address this, various PSO variants have been developed to strike the right balance between global exploration and local exploitation and to accelerate convergence toward the global optimum on diverse problems. These variants can generally be classified into four main types: first, population neighborhood topologies and multi-swarm strategies; second, parameter control techniques; third, novel learning strategies; and fourth, hybrid PSO methods that integrate other algorithms. However, uni-modal and multi-modal problems often place conflicting demands on the search: the former benefit from reduced population diversity, which aids exploitation, whereas the latter require greater population diversity to help the algorithm escape local optima and explore globally. The four categories are briefly summarized as follows.

The first category of PSO variants introduces new neighborhood topologies and multi-swarm strategies that manage exploration and exploitation through different information-sharing mechanisms among particles, thereby mitigating premature convergence. In FIPS [12], each particle updates its position using the pbest positions of all its neighbors rather than only the best one, so that every particle is influenced by its entire neighborhood. Nasir [13] developed DNLPSO, in which a particle selects an exemplar from the best positions of its neighbors, so that its velocity is influenced by both historical and neighborhood information. Qu proposed LIPS for multi-modal problems, which uses the local best information of nearby particles (measured by Euclidean distance) to guide the search toward the global optimum [14]. Liang [15] introduced DMS-PSO in 2006, which divides the population into small swarms that are periodically regrouped, giving a dynamically changing neighborhood structure. To tackle large-scale optimization problems, CCPSO2 [16] splits the decision variables into smaller subcomponents that are optimized cooperatively by multiple swarms.

The second category focuses on adaptive parameter control techniques. In HPSO-TVAC, particle velocities are updated with time-varying acceleration coefficients c1 and c2. In FST-PSO [17], a fuzzy logic (FL) approach independently computes the inertia weight, the cognitive and social coefficients, and the velocity limits of each particle, eliminating the need for predefined parameter settings. APSO uses evolutionary state estimation to classify the population state into exploration, exploitation, convergence, and escape phases, and adjusts the inertia weight and acceleration coefficients according to the current state [18]. In addition, APSO-VI [19] dynamically adjusts the inertia weight based on the average absolute velocity, using feedback control so that this velocity follows a prescribed nonlinear ideal velocity curve.

The third category of PSO variants introduces novel learning approaches to improve search efficiency. This generalized "learning PSO" paradigm integrates learning operators or specific mechanisms into the traditional PSO algorithm, giving particles learning abilities that improve universality and robustness. CLPSO [20] is a typical representative of this category: each particle updates each of its decision variables by learning from the pbest positions of different particles, which increases the effectiveness of the population search. OLPSO employs orthogonal experimental design (OED) to combine the pbest and gbest positions into a learning exemplar for each particle [21]; this orthogonal approach guides the search more effectively and improves overall performance. GOPSO uses Cauchy mutation and generalized opposition-based learning [22]. In addition, SL-PSO [23] incorporates social learning, in which particles learn from demonstrators (better-performing particles within the swarm).

GL-PSO [24] operates in two stages. In the first stage, genetic operators (crossover, mutation, and selection) are used to generate promising learning exemplars; in the second stage, the swarm's positions and velocities are updated with the standard PSO rules. Another approach, Dimensional Learning PSO (DLPSO), introduced by Xu [25], lets each particle's pbest learn from the corresponding dimensions of gbest, producing a learning exemplar that combines the best information from individual and global experience. However, no PSO algorithm with a single learning method has proven effective across all problem types. Heterogeneous Comprehensive Learning PSO (HCLPSO) [26] addresses this by dividing the population into two sub-populations: one focused on exploration, which updates velocities with the Comprehensive Learning Strategy (CLS), and the other focused on exploitation, which uses a global version of CLS. TSLPSO combines the dimensional learning strategy (DLS) with CLS to achieve a relative balance between exploration and exploitation [25]. HCLDMS-PSO [27] likewise divides the population into two subgroups: one uses CLS with gbest for exploitation, and the other uses DMS-PSO to enhance exploration. In Self-Learning PSO (SLPSO) [28], each particle adaptively switches among four learning strategies: learning from its own pbest, from a randomly chosen nearby position, from another particle's pbest, or from gbest. Many other learning mechanisms are being actively researched, further extending the potential of PSO [29,30,31,32].
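The first (genetic) stage of GL-PSO can be sketched as follows, based on the commonly cited GL-PSO operators: per-dimension crossover with a random partner k, uniform mutation with a small probability pm, and greedy selection. The tournament step that GL-PSO applies to stagnating exemplars is omitted; pm = 0.01, the argument `guide` (which plays the role of gbest), and all names are assumptions, and this is not a reproduction of Equation (7) of HGCLPSO.

```python
import numpy as np


def gl_pso_breed(pbest, pbest_fit, guide, exemplar, exemplar_fit, f,
                 lb, ub, pm=0.01, rng=None):
    """One GL-PSO-style exemplar generation step: crossover, mutation, selection.

    guide plays the role of gbest in the crossover. Returns the updated
    exemplars, their fitness values and the number of evaluations used.
    """
    if rng is None:
        rng = np.random.default_rng()
    n, dim = pbest.shape
    dims = np.arange(dim)
    new_ex, new_fit = exemplar.copy(), exemplar_fit.copy()
    for i in range(n):
        k = rng.integers(n, size=dim)           # random partner per dimension
        r = rng.random(dim)
        blend = r * pbest[i] + (1.0 - r) * guide
        # Crossover: blend pbest_i with guide if pbest_i beats partner k,
        # otherwise copy partner k's pbest in that dimension.
        child = np.where(pbest_fit[i] < pbest_fit[k], blend, pbest[k, dims])
        # Mutation: re-sample each dimension uniformly with probability pm.
        mutate = rng.random(dim) < pm
        child[mutate] = rng.uniform(lb, ub, size=int(mutate.sum()))
        # Selection: the offspring replaces the exemplar only if it is fitter.
        child_fit = f(child)
        if child_fit < new_fit[i]:
            new_ex[i], new_fit[i] = child, child_fit
    return new_ex, new_fit, n


def sphere(x):
    return float(np.sum(x ** 2))


rng = np.random.default_rng(2)
pbest = rng.uniform(-10.0, 10.0, size=(8, 5))
pbest_fit = np.array([sphere(p) for p in pbest])
gbest = pbest[np.argmin(pbest_fit)]
ex, ex_fit, used = gl_pso_breed(pbest, pbest_fit, gbest, pbest.copy(),
                                pbest_fit.copy(), sphere, -10.0, 10.0, rng=rng)
```

The second stage would then use these exemplars in place of pbest and gbest in the ordinary PSO velocity update.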

The fourth category comprises hybrid methods that combine PSO with other algorithms to improve performance on complex problems, with each integrated method contributing its own strengths. For example, Xin [33] explored several hybrid combinations of Differential Evolution (DE) and PSO, and Kıran and Gündüz [34] developed a hybrid of PSO and the Artificial Bee Colony (ABC) algorithm. In these hybrid algorithms, information is exchanged between the particle swarm and the individuals of the other algorithm to improve search capability. In addition, various specific search operators have been integrated into PSO, such as mutation operators [25], two differential mutations [35], chaos-based initialization with robust update strategies [36], and aging mechanisms [37]. These auxiliary techniques aim to increase population diversity and accelerate convergence.

Previous studies have highlighted problems of PSO such as "oscillation" and the "two steps forward, one step back" phenomenon. These problems can be mitigated, and search efficiency improved, by PSO variants with dedicated learning techniques [20,21,23,24,25,28]. However, on both uni-modal and multi-modal problems, it is difficult for a single learning strategy to balance exploration and exploitation. In response, we propose a Heterogeneous Genetic Learning and Comprehensive Learning Strategy PSO (HGCLPSO), which combines the genetic learning strategy (GLS) and the comprehensive learning strategy (CLS) to efficiently identify and preserve promising solutions. The approach is inspired by algorithms such as HCLPSO, HCLDMS-PSO, and TSLPSO. The whole population is divided into two sub-populations that guide the particle search. The GLS sub-population uses a global topology to generate learning exemplars, which strengthens exploitation, while the CLS sub-population learns from the pbest positions of multiple particles in different dimensions, which enhances exploration. However, if gbest has not yet reached the optimal region, the GLS sub-population may be misled by its information or may not converge sufficiently, which weakens its exploitation. To address this, we introduce a new Potentially Excellent Gene Activation (PEGA) mechanism, which improves the exploitation ability of the GLS sub-population by updating the archived gbest position (Abest) with high-quality genes from individual particles. In addition, a repulsive mechanism is incorporated into the CLS sub-population: particles are repelled according to their distance from the current best solution (Abest), which prevents premature convergence and maintains diversity. Finally, a local search operator based on the BFGS quasi-Newton method is applied in the late stage of evolution to refine the best solution.

The performance of HGCLPSO is first evaluated on the 30D and 50D problems of the CEC2014 test suite and compared with eight advanced PSO variants. Next, the 30D CEC2017 test suite is used to further assess HGCLPSO against six non-PSO metaheuristics and five advanced PSO variants. The algorithm's effectiveness is then tested on a real-world coverage control problem in wireless sensor networks (WSNs). The experimental results show that HGCLPSO achieves the best average rank in most comparisons, indicating that it is a robust and competitive tool for continuous optimization problems.

3. The Proposed HGCLPSO Method

In GL-PSO, the learning exemplar is constructed from both the particle's pbest and gbest in a global topology, similar to the global version of PSO. While this mechanism enables a high convergence rate, it can also cause a rapid loss of particle diversity, reducing the algorithm's exploration capability. Experimental results indicate that GL-PSO performs worse on complex multi-modal functions than on uni-modal and simple multi-modal functions [24], which highlights the strong exploitation ability of GLS. In contrast, CLPSO updates a particle's velocity using the pbest positions of different particles, so that each dimension of a particle can learn from a different source. CLS therefore enhances population diversity and strengthens global exploration, helping a particle escape a local optimum by learning from others and thus avoiding premature convergence. Combining the strengths of GLS and CLS makes it possible to balance exploration and exploitation effectively.

(1) Hybrid GLS and CLS sub-populations

We propose using GLS and CLS to form heterogeneous sub-populations, which improves the search capability of the canonical PSO algorithm in the search space. The first sub-population, of size N1, uses GLS and is responsible for exploitation; the second sub-population, of the remaining size N2 = N − N1, uses CLS and is responsible for exploration. Note that the learning exemplars of the CLS particles are constructed from different dimensions of the pbest positions of the whole population, not only from particles within the CLS sub-population.
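The split into two sub-populations, and the fact that CLS exemplars may draw on any particle, can be sketched as below. The GLS fraction 0.8 matches the N1 = 0.8*N setting selected in Section 4.1; the CLPSO-style two-particle tournament is an assumption about how the CLS source is chosen, and the function names are hypothetical.

```python
import numpy as np


def split_population(n, gls_fraction=0.8):
    """Indices of the GLS part (size N1) and CLS part (size N2 = N - N1)."""
    n1 = int(round(gls_fraction * n))
    idx = np.arange(n)
    return idx[:n1], idx[n1:]


def cls_tournament_source(i, pbest_fit, rng):
    """Choose whose pbest one dimension of CLS particle i learns from.

    Both tournament candidates are drawn from the WHOLE population (GLS and
    CLS particles alike), excluding particle i itself.
    """
    others = np.delete(np.arange(len(pbest_fit)), i)
    a, b = rng.choice(others, size=2, replace=False)
    return int(a if pbest_fit[a] < pbest_fit[b] else b)


gls_idx, cls_idx = split_population(40)
src = cls_tournament_source(35, np.arange(40, dtype=float), np.random.default_rng(3))
```

With N = 40, particles 0 to 31 form the GLS part and particles 32 to 39 the CLS part, yet a CLS particle such as 35 can copy a dimension from any of the other 39 pbests.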

(2) Potentially excellent gene activation (PEGA) mechanism

In the GLS sub-population, if gbest has not yet converged to the optimal solution, particles in the GLS sub-population may maintain good diversity but lack sufficient exploitation, which upsets the balance of search capabilities in the proposed HGCLPSO algorithm. In canonical PSO, gbest is updated by replacing it with a pbest in all dimensions at once, which can cause valuable information to be lost. Two opposing factors affect the gbest position. On the one hand, improvements in certain dimensions of gbest can enhance the fitness of the overall best individual. On the other hand, although the overall fitness may improve, some dimensions of gbest may worsen, leading to the "two steps forward, one step back" phenomenon.

To address or mitigate this issue, we introduce the Potentially Excellent Gene Activation (PEGA) mechanism, which monitors the pbest positions to accelerate the improvement of the gbest position, specifically of the archived gbest position (Abest). In the first generation, Abest is set equal to gbest. Each time a particle's pbest improves, its counter success_count is incremented; the counter is reset to zero when pbest fails to improve (Algorithm 3, lines 29–33). When success_count exceeds a threshold S, the pbest position may contain useful information in certain dimensions, even if its overall fitness is poor. In such cases, Abest should absorb the potentially valuable information in the corresponding dimensions of that pbest. However, it is difficult to identify which dimensions, or combinations of dimensions, of the improved pbest benefit Abest. We therefore define a learning probability (PL) for each dimension of Abest, based on a sigmoid function, which determines how likely information is to be transferred from the pbest position in that dimension, as follows:

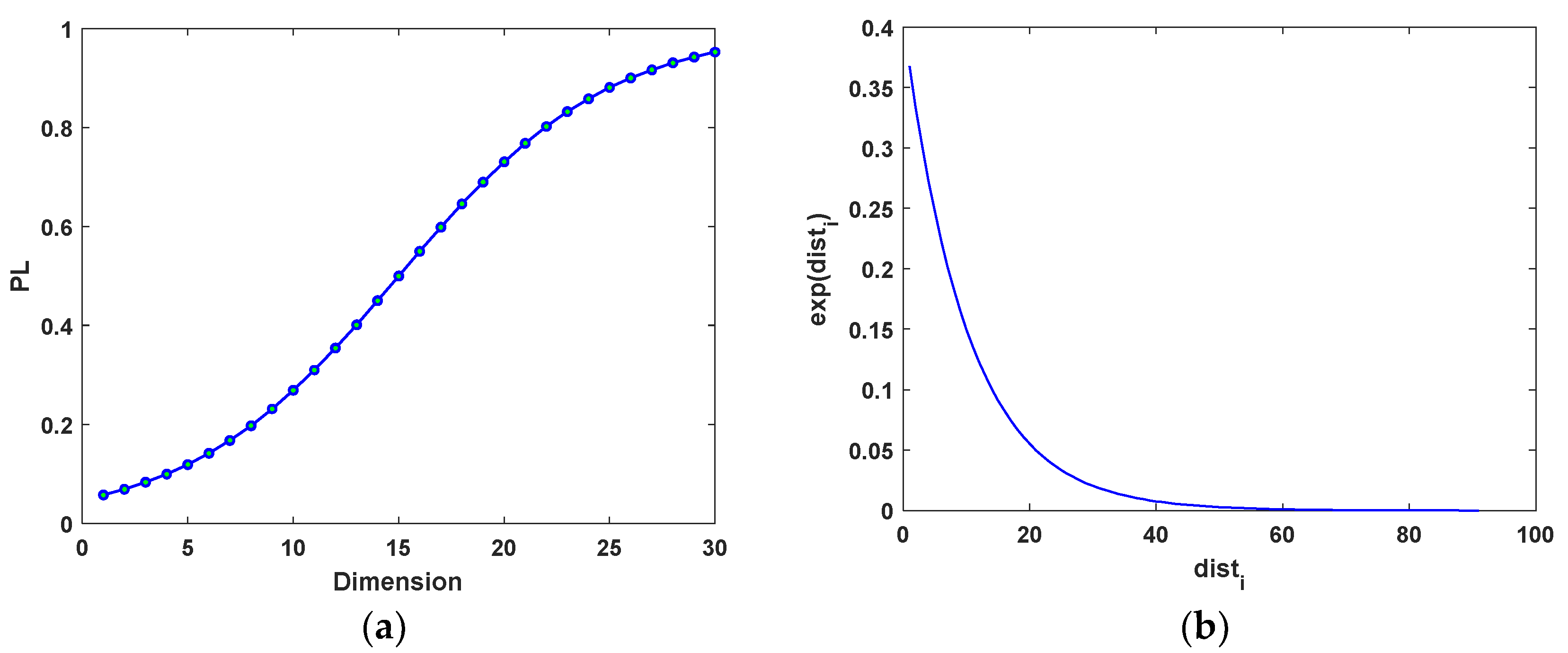

where

distribute between 0.0 and 1.0 for the

dth dimension of all particles. For 30D problems, the PL values for different dimensions are shown in

Figure 1a. For a given dimension d, if a random number is less than PL, the dth component of a temporary copy of Abest (temp) is replaced by the dth component of the particle's pbest, and the fitness of the resulting candidate, fit(temp), is evaluated. If the candidate performs better than the current Abest, the change is kept; otherwise, the update is skipped. Algorithm 2 outlines the process for updating the Abest position. Introducing the learning probability has two key advantages: first, it saves computational resources, because only the selected dimensions trigger a fitness evaluation; second, it reduces the likelihood of incorporating irrelevant information from the pbest position.

Algorithm 2. Pseudo code for updating the Abest position

| Line | Operation |
|---|---|
| 1: | For i = 1 to N do |
| 2: | If success_count(i) > S then |
| 3: | For d = 1 to D do |
| 4: | If rand < PL(d) then |
| 5: | temp = Abest; |
| 6: | If temp(d) == pbest(i, d) then |
| 7: | continue |
| 8: | End if |
| 9: | temp(d) = pbest(i, d) |
| 10: | If fit(temp) < fit(Abest) then |
| 11: | Abest(d) = temp(d) |
| 12: | End if |
| 13: | End if |
| 14: | End for |
| 15: | End if |
| 16: | End for |
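Algorithm 2 can be translated into Python as the following sketch. The per-dimension learning probabilities are passed in precomputed because the sigmoid formula is not reproduced here; the function and argument names are hypothetical, and the evaluation counter is added for bookkeeping.

```python
import numpy as np


def update_abest(abest, abest_fit, pbest, success_count, pl, threshold_s, f, rng):
    """Dimension-wise Abest update following Algorithm 2 (PEGA).

    pl holds the per-dimension learning probabilities PL (assumed precomputed).
    Returns the new Abest, its fitness and the function evaluations used.
    """
    abest = abest.copy()
    fes = 0
    n, dim = pbest.shape
    for i in range(n):
        if success_count[i] <= threshold_s:
            continue                            # line 2: pbest_i not improving often enough
        for d in range(dim):
            if rng.random() >= pl[d]:
                continue                        # line 4: dimension d not selected
            if abest[d] == pbest[i, d]:
                continue                        # lines 6-8: nothing new to learn
            temp = abest.copy()                 # line 5
            temp[d] = pbest[i, d]               # line 9
            temp_fit = f(temp)
            fes += 1
            if temp_fit < abest_fit:            # lines 10-12: keep only improvements
                abest, abest_fit = temp, temp_fit
    return abest, abest_fit, fes


def sphere(x):
    return float(np.sum(x ** 2))


abest0 = np.ones(4)
pbest = np.array([[0.0, 0.0, 0.0, 0.0],
                  [5.0, 5.0, 5.0, 5.0]])
new_abest, new_fit, fes = update_abest(
    abest0, sphere(abest0), pbest, success_count=np.array([5, 0]),
    pl=np.ones(4), threshold_s=4, f=sphere, rng=np.random.default_rng(0))
```

In this toy call, particle 0 has improved five times in a row (above S = 4), so each of its dimensions is tried in turn and accepted because it lowers the fitness; particle 1 is skipped, and exactly four evaluations are spent.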

After the Abest position has been updated, the formula for breeding a GLS exemplar in line 6 of Algorithm 1 is replaced as follows:

(3) Repulsive mechanism

Although the CLS sub-population can maintain high diversity, as evolution proceeds its particles gradually learn from the excellent genes of the GLS sub-population and lose diversity. Moreover, when solving complex problems with many local optima, the population may locate a pseudo-optimal region and spread misleading information, so that the CLS particles are easily attracted to the same region. To prevent premature convergence and clustering in the CLS sub-population, we introduce a repulsive mechanism [38]. If a particle in the CLS sub-population comes too close to the current best position (Abest), its position and velocity are adjusted using Equations (8) and (9). The GLS sub-population is not affected by this mechanism.

where

is a random number that is uniformly distributed within the interval [0, 1], while

and

represent the upper and lower bounds of the search space, respectively. Note that the second term of Equation (8) is an exponential decay function of the distance

. According to the repulsive force curve shown in

Figure 1b, the closer a CLS particle is to Abest, the stronger the repulsive force that pushes it toward more distant regions of the search space, whereas a particle far from Abest is hardly affected. Because this repulsive mechanism strongly disrupts the evolution of the CLS sub-population, it is applied only once every R generations to help the CLS sub-population retain high diversity.
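Equations (8) and (9) are not reproduced in this section, so the following is a hypothetical sketch that only mirrors the verbal description: a uniform random number r in [0, 1], the search-range width (ub − lb), and a displacement that decays exponentially with the distance to Abest. The trigger radius `radius`, the decay rate `lam`, the velocity reset, and all names are illustrative assumptions, not the authors' equations.

```python
import numpy as np


def repel_from_abest(x, v, abest, lb, ub, radius=1.0, lam=1.0, rng=None):
    """Hypothetical repulsion of one CLS particle away from Abest.

    NOT Equations (8)-(9): an illustrative form in which the jump length is
    r * (ub - lb) * exp(-lam * distance), r ~ U[0, 1], so particles close to
    Abest are pushed far and particles beyond `radius` are left unchanged.
    """
    if rng is None:
        rng = np.random.default_rng()
    diff = x - abest
    dist = float(np.linalg.norm(diff))
    if dist >= radius:
        return x, v                             # far from Abest: unaffected
    if dist > 0.0:
        direction = diff / dist                 # unit vector pointing away from Abest
    else:
        direction = rng.normal(size=x.shape)    # x coincides with Abest: random direction
        direction /= np.linalg.norm(direction)
    jump = rng.random() * (ub - lb) * np.exp(-lam * dist)
    x_new = np.clip(x + jump * direction, lb, ub)
    v_new = x_new - x                           # velocity set to the displacement taken
    return x_new, v_new


rng = np.random.default_rng(4)
abest = np.zeros(3)
x_near, v_near = repel_from_abest(np.full(3, 0.1), np.zeros(3), abest, -100.0, 100.0, rng=rng)
x_far, v_far = repel_from_abest(np.full(3, 50.0), np.zeros(3), abest, -100.0, 100.0, rng=rng)
```

In a full loop, this step would be applied to the CLS particles only once every R generations, as in lines 14 to 17 of Algorithm 3.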

(4) BFGS quasi-Newton local search

Since exploitation is key to improving solution accuracy, we incorporate the widely used BFGS quasi-Newton method [39,40] into HGCLPSO as a local search operator. Only Abest undergoes this local search, in the late evolutionary stage of HGCLPSO. In all tests, the quasi-Newton method receives no analytical gradient information, so gradients must be approximated from additional function evaluations; we therefore assign 0.05 * MaxFEs evaluations to refining Abest with the BFGS quasi-Newton method. In this study, the BFGS quasi-Newton local search operator is implemented with the function "fminunc" in MATLAB R2021a.

Algorithm 3 gives the pseudo code of HGCLPSO, which incorporates all of the above features. As noted in the Supplementary Materials, the MATLAB source code of HGCLPSO is available at https://github.com/wangshengliang2018/HGCLPSO (accessed on 2 September 2022).

Algorithm 3. Pseudo code for the proposed HGCLPSO algorithm

| Line | Operation |
|---|---|
| 1: | Set population size parameters N, N1, N2; set learning probability parameter PL; |
| 2: | Set GLS and CLS parameters; |
| 3: | Initialize particle position Xi and velocity Vi (1 ≤ i ≤ N); |
| 4: | Evaluate Xi and record the fitness fit(Xi), fes = N; |
| 5: | Initialize pbesti = Xi and the pbest fitness value fit(pbesti) = fit(Xi); |
| 6: | Initialize gbest as the pbesti with minimum fit(pbesti), 1 ≤ i ≤ N; Abest = gbest; |
| 7: | Initialize the GLS exemplar by Algorithm 1 with Equation (7) for the first sub-population; |
| 8: | Initialize the CLS exemplar for the second sub-population; |
| 9: | While (iter <= max_iteration) and (fes <= 0.95*MaxFEs) |
| 10: | fit(last_pbest) = fit(pbest); |
| 11: | For i = 1:N1 |
| 12: | Update Xi and Vi of the GLS sub-population according to Equations (3) and (2); |
| 13: | End for |
| 14: | If mod(iter, R) == 0 then |
| 15: | For i = N1 + 1:N |
| 16: | Update Xi and Vi of the CLS sub-population according to Equations (8) and (9); |
| 17: | End for |
| 18: | Else |
| 19: | For i = N1 + 1:N |
| 20: | Update Xi and Vi of the CLS sub-population according to Equations (4) and (2); |
| 21: | End for |
| 22: | End if |
| 23: | For i = 1:N |
| 24: | Evaluate the fitness fit(Xi); |
| 25: | Update pbesti and gbest; |
| 26: | If fit(gbest) < fit(Abest) then |
| 27: | Abest = gbest; |
| 28: | End if |
| 29: | If fit(pbesti) < fit(last_pbesti) then |
| 30: | success_count(i) = success_count(i) + 1; |
| 31: | Else |
| 32: | success_count(i) = 0; |
| 33: | End if |
| 34: | Update the Abest position by Algorithm 2; |
| 35: | End for |
| 36: | Update the GLS exemplar by Algorithm 1 with Equation (7); |
| 37: | Update the CLS exemplar; |
| 38: | End While |
| 39: | Assign 0.05*MaxFEs evaluations to Abest for the BFGS quasi-Newton local search operator. |
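Two small pieces of Algorithm 3 are easy to get wrong: the success counter in lines 29 to 33 counts consecutive pbest improvements (it resets on failure), and the evaluation budget is split between the swarm phase (line 9) and the final BFGS phase (line 39). A minimal sketch, with hypothetical function names:

```python
import numpy as np


def update_success_counts(fit_pbest, fit_last_pbest, success_count):
    """Algorithm 3, lines 29-33: +1 when pbest improved this generation, else reset to 0."""
    improved = np.asarray(fit_pbest) < np.asarray(fit_last_pbest)
    return np.where(improved, np.asarray(success_count) + 1, 0)


def split_budget(max_fes, local_fraction=0.05):
    """Split MaxFEs into the swarm phase (line 9) and the final BFGS phase (line 39)."""
    local = int(round(local_fraction * max_fes))
    return max_fes - local, local


counts = update_success_counts([1.0, 2.0, 3.0], [2.0, 2.0, 1.0], [3, 1, 0])
swarm_fes, local_fes = split_budget(300_000)
```

For the 30D setting (MaxFEs = 300,000) this gives 285,000 evaluations for the swarm and 15,000 for the local search; for the 50D setting (MaxFEs = 500,000) it gives 475,000 and 25,000.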

Population diversity is a key indicator for assessing both exploitation and exploration abilities. We analyze the diversity of the GLS sub-population, the CLS sub-population, and the whole population throughout the evolution process. The diversity measure is calculated with Equations (10) and (11):

where

N represents the population size, and

refers to the average position of the

dth dimension across the entire population. Low population diversity means that the particles are concentrated near the population center, i.e., the population is exploiting a confined region. High population diversity means that the particles are spread out from the center, i.e., a larger region is being explored.
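Equations (10) and (11) are not reproduced here. Assuming they take the widely used form Diversity = (1/N) Σ_i sqrt(Σ_d (x_id − x̄_d)^2) with x̄_d = (1/N) Σ_i x_id, which matches the symbols defined above (N and the per-dimension mean position), the measure can be sketched as follows; the function name is hypothetical.

```python
import numpy as np


def population_diversity(x):
    """Mean Euclidean distance of particles from the population centre.

    x : (N, D) array of positions; the centre is the per-dimension mean.
    """
    centre = x.mean(axis=0)
    return float(np.mean(np.linalg.norm(x - centre, axis=1)))


positions = np.array([[0.0, 0.0], [2.0, 0.0], [3.0, 4.0], [-3.0, -4.0]])
whole = population_diversity(positions)
gls_like = population_diversity(positions[:2])  # a tighter subset has lower diversity
```

Passing the rows of only the GLS or only the CLS particles gives the per-sub-population curves, while passing all rows gives the whole-population curve.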

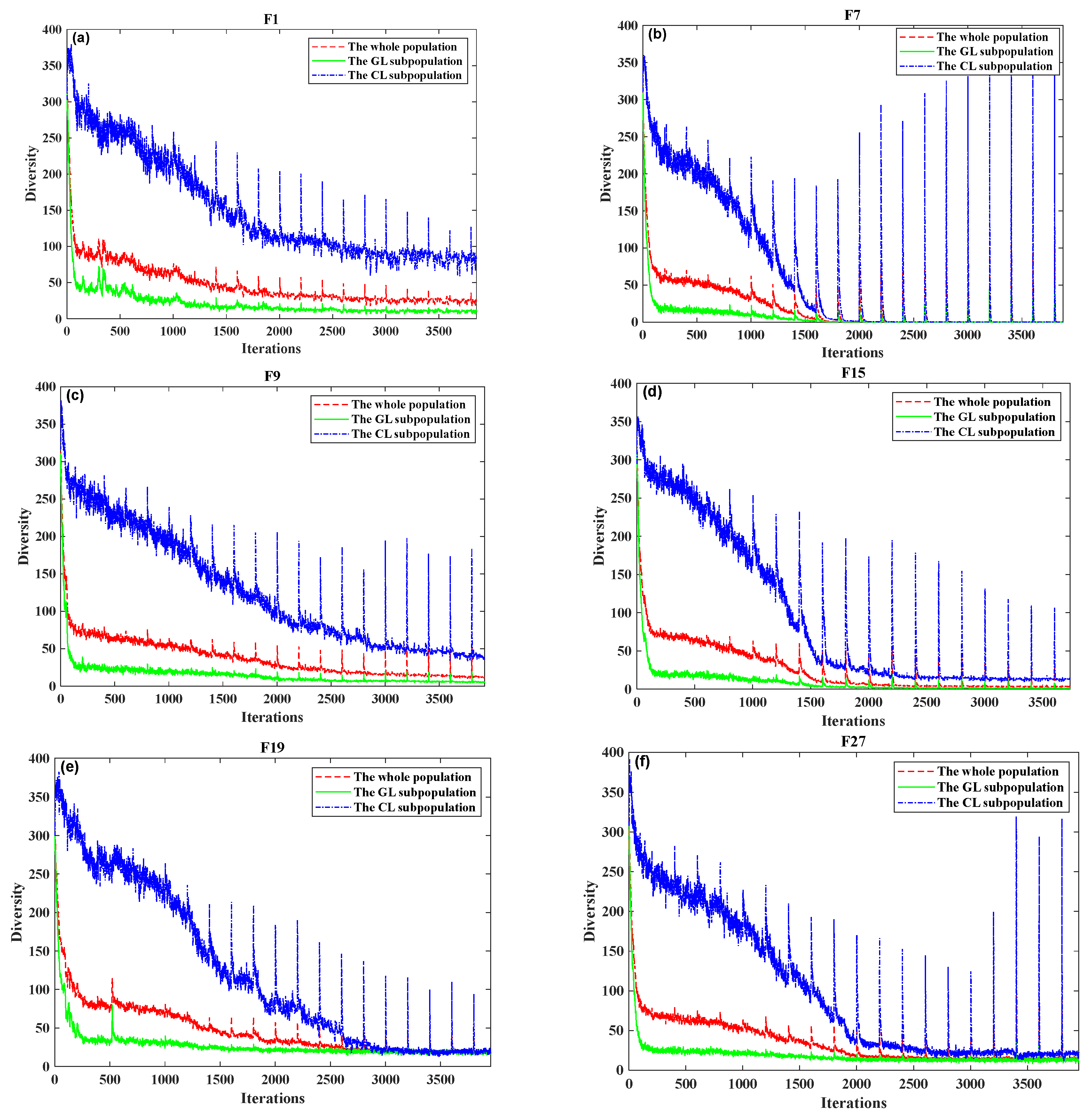

Figure 2 shows the diversity curves on F1, F7, F9, F15, F19, and F27 of the CEC2014 test suite [41]. As Figure 2 shows, the CLS sub-population usually has higher diversity than the GLS sub-population, and the diversity of the whole population lies between the two. Since the CLS sub-population does not use gbest as a central guiding direction, it is not surprising that it maintains the highest diversity. In addition, the repulsive mechanism clearly causes sudden rises in the diversity of the CLS sub-population. In the early stage of evolution, the CLS particles are relatively far from the best individual, so the repulsive force is weak. As evolution progresses, the CLS particles move closer to the best individual, and the resulting stronger repulsion helps prevent premature convergence. The global topology and the PEGA updating mechanism give the GLS sub-population the smallest diversity and the fastest convergence. Because the GLS and CLS sub-populations cooperate to balance exploitation and exploration, the diversity of the whole population remains moderate. These diversity comparisons confirm that the GLS and CLS sub-populations fulfill their intended roles.

4. Experimental Results and Analysis

In Section 4.1, we tune the key parameters of HGCLPSO on the uni-modal and simple multi-modal functions (F1–F16) of the CEC2014 test suite. Section 4.2 compares HGCLPSO with eight advanced PSO variants on the CEC2014 test suite to evaluate its robustness on shifted and rotated functions. In Section 4.3, we compare HGCLPSO with other state-of-the-art evolutionary algorithms (EAs) on the CEC2017 test suite [42]. Section 4.4 applies HGCLPSO and other EAs to the coverage problem in WSNs. The CEC2014 test suite contains 30 test functions, and the problem definitions and code are available from the GitHub repository (https://github.com/P-N-Suganthan/CEC2014) (accessed on 2 September 2022). The CEC2017 test suite (https://github.com/P-N-Suganthan/CEC2017-BoundContrained) (accessed on 2 September 2022) defines 30 test functions; F2 is excluded because of its unstable behavior, especially in higher dimensions, leaving 29 functions.

The evaluation criteria are the mean error and standard deviation of each algorithm, which are used to rank the algorithms on each function. The final ranking of each algorithm is determined by averaging its ranks over all functions. In Section 4.2 and Section 4.3, we apply the widely used non-parametric Wilcoxon rank sum test [43] at a 0.05 significance level to assess whether the differences between the results of the compared algorithms are statistically significant. The symbols "+", "=", and "−" indicate that HGCLPSO performs significantly better than, not significantly differently from, and significantly worse than the compared algorithm, respectively. In addition, we perform the Friedman test to determine whether the differences among all algorithms are statistically significant. All algorithms are implemented in MATLAB R2021a and run on a laptop with an Intel Core i5-11400 CPU (2.60 GHz) and 8 GB RAM under Microsoft Windows 10.

4.1. HGCLPSO Algorithm Parameter Tuning

Two parameters of HGCLPSO need to be tuned: the sub-population sizes (N1 and N2) and the threshold S in the Abest update process. Our preliminary experiments indicate that setting S to 3 consumes excessive computational resources, which hampers the population's evolution. On the other hand, when S is greater than 5, the probability of updating Abest drops significantly, which weakens the exploitation ability of the GLS sub-population. Setting S to 4 balances these two issues well, so we do not discuss this parameter further and use the CEC2014 benchmark functions only to compare the influence of the sub-population sizes N1 and N2. The number of runs, population size, dimension, and MaxFEs are set to 31, 40, 30, and 300,000, respectively. The final ranking is determined by the average rank over the 16 benchmark functions.

When N1 equals zero, HGCLPSO degenerates into the canonical CLPSO algorithm with the repulsive mechanism and BFGS quasi-Newton local search. When N1 equals N, HGCLPSO becomes the GL-PSO algorithm with the Abest update mechanism and BFGS quasi-Newton local search. These two extreme cases are therefore excluded from the parameter study. We vary N1 from 0.1*N to 0.9*N in steps of 0.1*N, giving nine configurations in total for the final ranking analysis.

Table 1 presents the results of tuning the two sub-population sizes (N1 and N2). According to the final rankings, the combination N1 = 0.8*N and N2 = 0.2*N yields the best average rank on F1–F16 among all configurations. Therefore, N1 = 0.8*N and N2 = 0.2*N, together with the threshold S = 4, are used in the subsequent experiments. Although this configuration may not be optimal for every minimization problem, it generally enables HGCLPSO to obtain satisfactory solutions across a variety of functions.

4.2. Experimental Results and Analysis of CEC2014 Test Suite

In this section, we compare HGCLPSO with eight state-of-the-art PSO variants on the CEC2014 test suite to validate its effectiveness. These variants are CLPSO [20], OLPSO [21], SL-PSO [23], HCLPSO [26], GL-PSO [24], EPSO [44], TSLPSO [25], and HCLDMS-PSO [27]. Detailed parameter settings are provided in Table 2. CLPSO and GL-PSO are discussed in Section 2.2 and Section 2.3, respectively. OLPSO uses an orthogonal learning strategy to generate learning exemplars for particle updates. SL-PSO combines a dimension-dependent parameter control strategy with social learning to update positions and velocities. HCLPSO uses the comprehensive learning strategy and divides the population into two subgroups that perform exploration and exploitation, respectively. EPSO combines five PSO strategies, such as inertia weight PSO and CLPSO-gbest, through a self-adaptive mechanism. TSLPSO uses DLS and CLS to manage exploration and exploitation. HCLDMS-PSO divides the population into CL and DMS subgroups, in which the CL subgroup focuses on exploitation using CLS and the DMS subgroup focuses on exploration using DMS-PSO.

4.2.1. Results for 30 Dimensional Problems

The common parameters for all 30 CEC2014 test problems are as follows: population size = 40, problem dimension = 30, number of runs = 31, and MaxFEs = 300,000. The experimental results are listed in Table 3, with the best results highlighted in bold. Table 4 presents the results of the Wilcoxon rank sum test, and Table 5 gives the Friedman test rankings of all algorithms on the 30-dimensional problems.

For F1–F3, HGCLPSO outperforms the other algorithms on F1 and F3, while GL-PSO provides the best solution on F2. HGCLPSO ranks third on F2, which suggests that it benefits from the strong exploitation ability of the GLS component borrowed from GL-PSO and contributes to its good performance on uni-modal problems. For F4–F16, HGCLPSO ranks first on F4, F5, F8, and F12, and second on F10. SL-PSO performs best on the multi-modal functions F6, F9, and F11; HCLDMS-PSO leads on F13, F15, and F16; TSLPSO excels on F7 and F14; and GL-PSO ranks highest on F10. For the hybrid and composition functions F17–F30, HGCLPSO delivers the best results on F17, F20, F21, F23, F25, and F30, i.e., on six of the 14 functions. CLPSO performs best on F18, F24, and F29; HCLDMS-PSO achieves the best solutions on F19 and F26; SL-PSO excels on F27 and F28; and HCLPSO outperforms the others on F22. On these functions, HGCLPSO performs best overall, which we attribute mainly to the exploration ability of its CLS sub-population. The final row of Table 3 summarizes the number of Best/2nd Best/Worst rankings of each algorithm. In total, HGCLPSO delivers the best performance on 12 of the 30 benchmark problems and achieves the highest overall ranking, while TSLPSO ranks second among the compared PSO algorithms.

Table 4 shows that HGCLPSO outperforms the other eight PSO variants on most of the 30D CEC2014 test problems. In particular, on F1, F3, F4, F17, F20, F21, F25, and F30 it obtains significantly better solutions than all other algorithms. In addition, the Friedman test in the last row of Table 5 yields a p-value of 2.30461 × 10^−13, indicating statistically significant differences among the nine algorithms. The average rank of HGCLPSO over all 30D CEC2014 benchmark functions is 3.62, the best among the compared PSO variants.

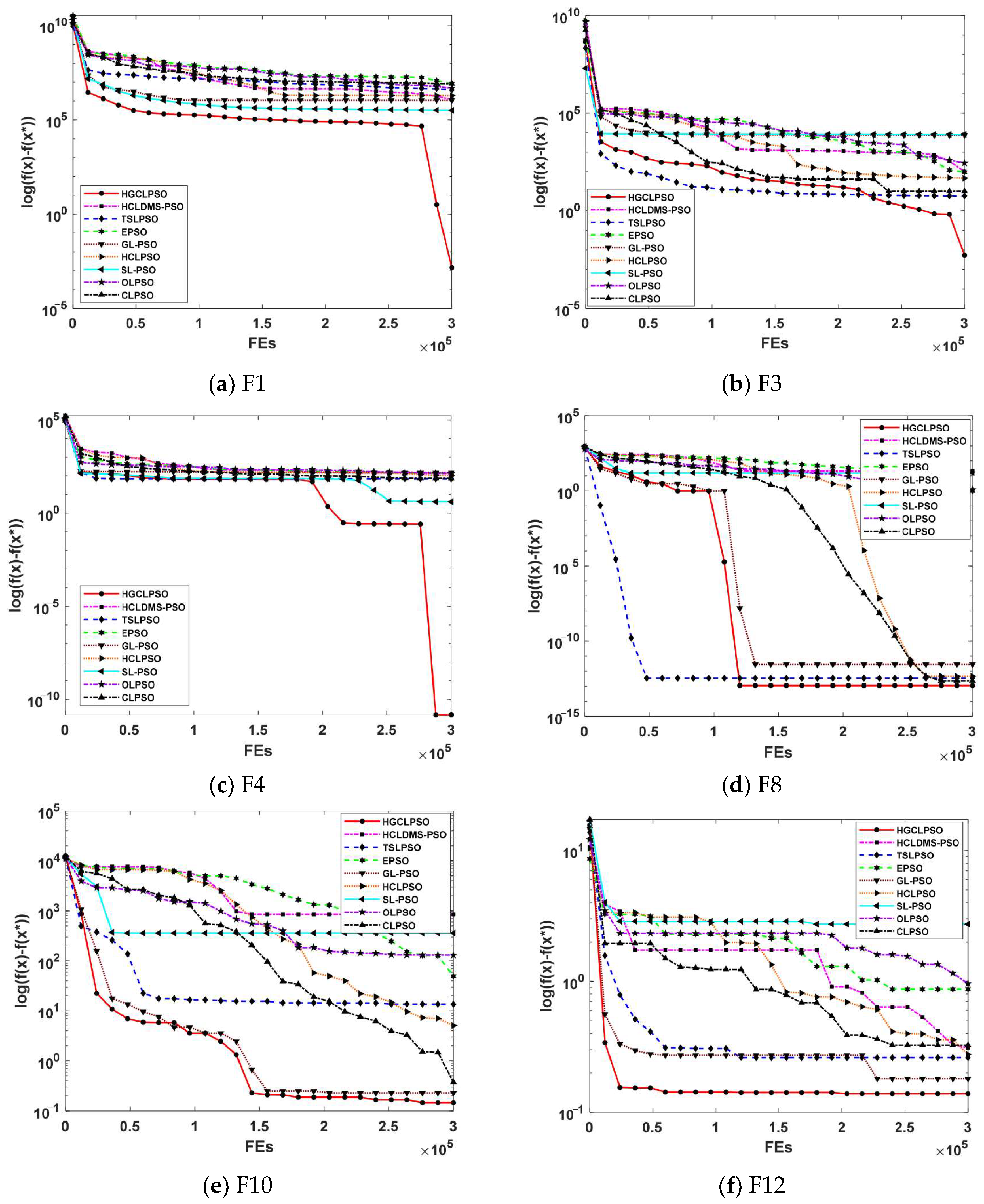

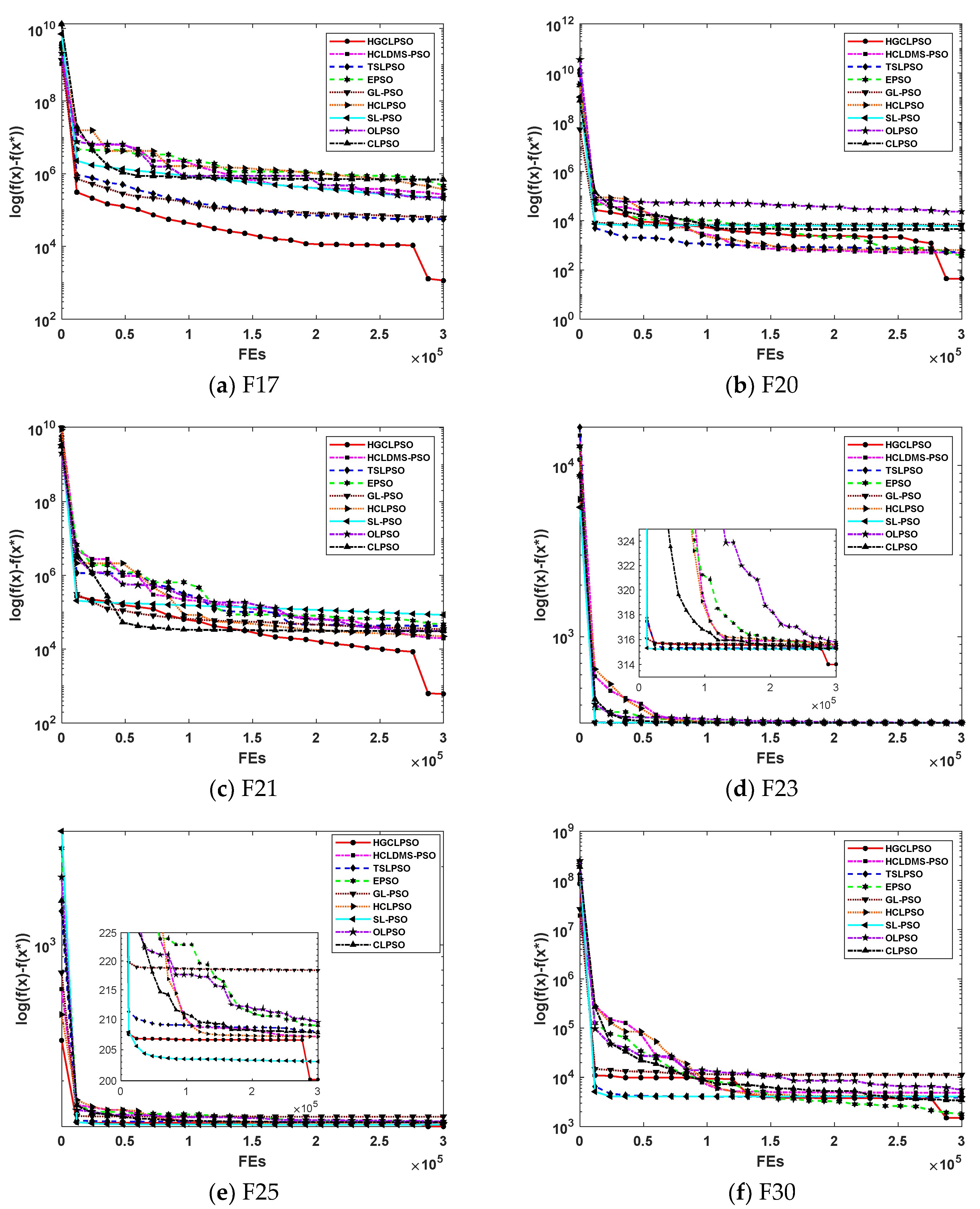

To analyze convergence performance, convergence graphs based on 31 runs on the 30D problems are presented. Figure 3 and Figure 4 show the convergence curves of the nine PSO variants on 12 CEC2014 benchmark functions, namely F1, F3, F4, F8, F10, F12, F17, F20, F21, F23, F25, and F30. The graphs show that HGCLPSO converges faster than the other eight PSO variants in the early stage of evolution, particularly on F1, F10, F17, F21, and F25. Although TSLPSO, SL-PSO, and GL-PSO also converge rapidly on functions such as F3, F4, F10, F12, F20, F23, and F30, HGCLPSO uses its local search in the later stage to obtain better final solutions. In summary, the convergence analysis indicates that HGCLPSO not only converges quickly but also delivers superior results on most of the 30D benchmark problems.

4.2.2. Results for 50 Dimensional Problems

For the 50D CEC2014 test functions, the parameters are set as follows: population size = 40, number of runs = 51, and MaxFEs = 500,000. The experimental results are summarized in Table 6, Table 7 and Table 8. Because the convergence behavior is similar to that observed on the 30D problems, the corresponding convergence graphs are not included in this section.

For F1–F16, HGCLPSO outperforms all other algorithms on F1 and F3 and achieves the best results on F4, F5, F8, F12, and F16, for a total of seven of the 16 test functions. CLPSO performs best on the uni-modal function F2, with HGCLPSO ranking second. TSLPSO excels on F7, F14, and F15. HCLDMS-PSO delivers the best solutions for F9 and F13. SL-PSO performs best on F6 and F11, while GL-PSO excels on F10. For the hybrid and composition functions F17–F30, HGCLPSO performs best on F17, F20, F21, F23, F25, and F30, six of the 14 test problems. HCLDMS-PSO leads on F22, F26, and F28. TSLPSO performs best on F19 and F24, while CLPSO is the top performer on F18 and F29. SL-PSO achieves the best result on F27. As indicated by the average ranks in Table 6, HGCLPSO achieves the best average rank of 3.03, narrowly ahead of TSLPSO with 3.07. As with the 30D results, the small margin between the two algorithms stems from HGCLPSO's weaker results on specific functions such as F6, F9, F11, F19, and F27.
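Average ranks such as those in Table 6 are typically computed by ranking the algorithms on each function by mean error (rank 1 = lowest error) and averaging each algorithm's ranks over all functions. A minimal sketch, assuming tied errors share the mean of their positions (the tie rule is not stated here); the algorithm names and error values are placeholders, not results from the tables.

```python
def rank_with_ties(values):
    """Rank values ascending (1 = best); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of equal values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def average_ranks(mean_errors):
    """mean_errors maps algorithm name -> list of mean errors, one per function."""
    names = list(mean_errors)
    n_funcs = len(next(iter(mean_errors.values())))
    totals = dict.fromkeys(names, 0.0)
    for f in range(n_funcs):
        row = [mean_errors[name][f] for name in names]
        for name, r in zip(names, rank_with_ties(row)):
            totals[name] += r
    return {name: totals[name] / n_funcs for name in names}


# Placeholder mean errors on three functions (not values from Table 6).
errors = {
    "A": [1e-3, 5.0, 20.0],
    "B": [2e-3, 5.0, 10.0],
    "C": [5e-4, 7.0, 30.0],
}
print(average_ranks(errors))
```

Averaging tied positions keeps the rank total on every function equal to k(k+1)/2, so the average ranks stay comparable across functions with and without ties.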

Table 7 shows that HGCLPSO significantly outperforms the eight other PSO variants on most of the 50D CEC2014 test functions. Table 8 presents the Friedman test rankings of all nine algorithms on the 50D problems. The p-value of $3.4904 \times 10^{-17}$, reported in the last row of Table 8, indicates statistically significant differences among the nine algorithms. HGCLPSO obtains the best average rank, 3.37, indicating its superior performance.
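The Friedman test treats each benchmark function as a block and ranks the $k$ algorithms within it. With $N$ functions and average ranks $\bar{R}_j$, the statistic is $\chi^2_F = \frac{12N}{k(k+1)}\left[\sum_j \bar{R}_j^2 - \frac{k(k+1)^2}{4}\right]$, compared against a $\chi^2$ distribution with $k-1$ degrees of freedom. The standard-library sketch below illustrates this; the helper names are hypothetical, and this version omits the tie correction that some library implementations apply.

```python
import math


def rank_with_ties(values):
    """Rank values ascending (1 = best); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Chi-square survival function: regularized upper incomplete gamma Q(df/2, x/2)."""
    a, x = df / 2.0, x / 2.0
    if x <= 0:
        return 1.0
    log_pref = -x + a * math.log(x) - math.lgamma(a)
    if x < a + 1:
        # Series for the lower function P(a, x); Q = 1 - P.
        term = total = 1.0 / a
        n = a
        while abs(term) > abs(total) * 1e-15:
            n += 1
            term *= x / n
            total += term
        return max(0.0, 1.0 - total * math.exp(log_pref))
    # Continued fraction for Q(a, x) (modified Lentz method); accurate for tiny p.
    tiny = 1e-300
    b = x + 1 - a
    c, d = 1 / tiny, 1 / b
    h = d
    for i in range(1, 1000):
        an = -i * (i - a)
        b += 2
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1 / d
        delta = d * c
        h *= delta
        if abs(delta - 1) < 1e-15:
            break
    return math.exp(log_pref) * h


def friedman_test(results):
    """results[i][j]: error of algorithm j on function i (lower is better).

    Returns (statistic, p_value, average_ranks).
    """
    n, k = len(results), len(results[0])
    rank_sums = [0.0] * k
    for row in results:
        for j, r in enumerate(rank_with_ties(row)):
            rank_sums[j] += r
    avg = [s / n for s in rank_sums]
    stat = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4)
    return stat, chi2_sf(stat, k - 1), avg


# Toy example: 3 functions, 3 algorithms with an identical ordering each time.
print(friedman_test([[1.0, 2.0, 3.0]] * 3))
```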

4.2.3. Results for Computational Time

Table 9 presents the mean computation time of the nine PSO algorithms. The computation time (in seconds) of each run was recorded for the 30D and 50D CEC2014 problems. Among the compared algorithms, OLPSO requires the least computation time, followed by SL-PSO, which takes slightly longer. The computation times of HGCLPSO and GL-PSO are nearly identical. The CL strategy enhances the search performance of CLPSO, HCLPSO, and TSLPSO but results in higher computational costs. EPSO and HCLDMS-PSO, which combine several PSO strategies to leverage their complementary strengths, also incur greater computational costs. Overall, HGCLPSO balances competitive search performance against moderate computational cost, delivering strong results without excessive time consumption.
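Per-run computation times like these can be measured with a wall-clock timer around each complete run, while a wrapper counts function evaluations so that every algorithm stops at the same MaxFEs. The sketch assumes an optimizer is a callable taking an objective, the dimension, search bounds, and a random generator; `BudgetedObjective`, `run_timed`, and `random_search` are hypothetical stand-ins, not the paper's code, and random search is used only to keep the example short.

```python
import random
import time
from statistics import mean


class BudgetExhausted(Exception):
    """Raised when an optimizer requests more than MaxFEs evaluations."""


class BudgetedObjective:
    """Wraps an objective, counts evaluations, and tracks the best value seen."""

    def __init__(self, func, max_fes):
        self.func, self.max_fes, self.fes = func, max_fes, 0
        self.best = float("inf")

    def __call__(self, x):
        if self.fes >= self.max_fes:
            raise BudgetExhausted
        self.fes += 1
        value = self.func(x)
        self.best = min(self.best, value)
        return value


def random_search(objective, dim, lower, upper, rng):
    """Placeholder optimizer: samples uniformly until the budget runs out."""
    while True:
        objective([rng.uniform(lower, upper) for _ in range(dim)])


def run_timed(optimizer, func, dim, max_fes, runs, seed=0):
    """Return (mean seconds per run, best value of each run)."""
    times, bests = [], []
    for r in range(runs):
        objective = BudgetedObjective(func, max_fes)
        rng = random.Random(seed + r)
        start = time.perf_counter()
        try:
            optimizer(objective, dim, -100.0, 100.0, rng)  # CEC search range [-100, 100]^D
        except BudgetExhausted:
            pass
        times.append(time.perf_counter() - start)
        bests.append(objective.best)
    return mean(times), bests


def sphere(x):
    return sum(v * v for v in x)


avg_time, bests = run_timed(random_search, sphere, dim=30, max_fes=2000, runs=3)
print(f"mean time per run: {avg_time:.4f} s, best values: {bests}")
```

Timing whole runs under a shared evaluation cap makes the reported times reflect each algorithm's per-evaluation overhead rather than differences in budget.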

4.3. Experimental Results and Analysis of CEC2017 Test Suite

In this section, the performance of HGCLPSO is further evaluated on the 30D CEC2017 test suite problems [42] with the same parameter settings: MaxFEs = 300,000, a population size of 40, and 31 independent runs per algorithm. This comparison aims to show that HGCLPSO remains competitive against several state-of-the-art EAs. In addition to HCLDMS-PSO, TSLPSO, GL-PSO, HCLPSO, and CLPSO, discussed in Section 4.2, the following advanced EAs are included in the comparison:

- moth flame optimization (MFO) [45]
- whale optimization algorithm (WOA) [46]
- artificial bee colony (ABC) algorithm [47]
- butterfly optimization algorithm (BOA) [48]
- grey wolf optimizer (GWO) [50]

The parameter settings for all PSO variants remain consistent with those used in the previous experiments, and the other EAs use the configurations from their original references. The mean errors and standard deviations are presented in Table 10, while the ranks of mean performance and the Wilcoxon rank-sum test results are shown in Table 11.
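Significance markers like those in Table 11 come from a Wilcoxon rank-sum test between HGCLPSO's per-run errors and those of each competitor on each function. A standard-library sketch using the large-sample normal approximation (no tie or continuity correction) follows; the 0.05 significance level and the `+`/`=`/`-` labeling are assumptions, since this section does not state them. SciPy's `scipy.stats.ranksums` is based on the same normal approximation.

```python
import math


def rank_with_ties(values):
    """Rank values ascending (1 = best); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test, normal approximation.

    Returns (z, p). Negative z means x tends to be smaller than y.
    """
    n1, n2 = len(x), len(y)
    ranks = rank_with_ties(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of sample x
    expected = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - expected) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


def compare(errors_a, errors_b, alpha=0.05):
    """'+' if A's errors are significantly lower, '-' if higher, '=' otherwise."""
    z, p = rank_sum_test(errors_a, errors_b)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"


# Placeholder per-run errors for two algorithms on one function.
a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [6.0, 7.0, 8.0, 9.0, 10.0]
print(compare(a, b), rank_sum_test(a, b))
```

Counting the `+`, `=`, and `-` labels over all functions gives the win/tie/loss summary usually reported alongside such tables.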

From the results in Table 10 and Table 11, HGCLPSO performs best on 12 of the 29 test functions (F1, F3, F4, F12–F15, F18, F25, F27, F28, and F30), more than any other algorithm. HCLDMS-PSO excels on F5, F7–F9, F16, F17, F20, F21, F23, F24, and F29. HCLPSO achieves the best ranks on F10 and F26. CLPSO provides the best solutions for F19 and F22. TSLPSO performs best on F11, while ABC ranks first on F6. Based on the average ranks, HGCLPSO takes the top spot, followed by HCLPSO in second and HCLDMS-PSO in third.

Table 11 shows that HGCLPSO is superior to most of the other algorithms and performs nearly on par with HCLDMS-PSO, TSLPSO, and HCLPSO. It outperforms GL-PSO and CLPSO on most test functions, and it also surpasses several well-known EAs, including ABC, MFO, WOA, BOA, GWO, and BA.

Table 12 reports the Friedman test results on the CEC2017 test suite. The p-value of $3.98 \times 10^{-49}$ indicates statistically significant differences among HGCLPSO, the other PSO variants, and the six other EAs, and HGCLPSO obtains an average rank of 3.25.

4.4. HGCLPSO Performance on the WSNs Coverage Problem

HGCLPSO is further evaluated on the WSN coverage control problem, a widely recognized real-world optimization challenge [51]. WSNs are integral to applications such as target tracking, disaster warning, and wearable technology. These networks are typically large and dense, with significant overlap among their coverage areas. Random deployment of sensor nodes often fails to ensure complete coverage, leaving coverage gaps within the network. Coverage control therefore plays a crucial role in optimizing WSN architecture and minimizing energy consumption.

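Suppose $N$ sensor nodes are deployed randomly in a two-dimensional target monitoring area, which can be viewed as a node set $S = \{s_1, s_2, \ldots, s_N\}$, where $s_i$ denotes node $i$. The coordinate of node $s_i$ is $(x_i, y_i)$, and its sensing radius is $R_s$. If a point $p_j = (x_j, y_j)$ lies within the sensing radius of node $s_i$, it is assumed to be covered by node $s_i$. The sensing rate $p(s_i, p_j)$ for pixel $p_j$ covered by $s_i$ is as follows:

$$
p(s_i, p_j) =
\begin{cases}
1, & d(s_i, p_j) \le R_s \\
0, & \text{otherwise}
\end{cases}
$$

The distance between pixel $p_j$ and node $s_i$ is $d(s_i, p_j) = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2}$. Because pixel $p_j$ can be covered by several nodes at the same time, whether pixel $p_j$ is covered by the node set $S$ is determined by

$$
P(S, p_j) = 1 - \prod_{i=1}^{N} \bigl(1 - p(s_i, p_j)\bigr)
$$

Finally, the coverage rate of the target area is formulated as follows:

$$
R_{cov} = \frac{\sum_{j=1}^{L \times W} P(S, p_j)}{L \times W}
$$

where $L$ and $W$ denote the length and width of the target area. Swarm intelligence is an effective approach for optimizing node deployment and enhancing network performance. This experiment uses a 30D WSN coverage control problem with the number of sensor nodes $N$ set to 15, so each candidate solution encodes the $(x, y)$ coordinates of all 15 nodes. The sensing radius $R_s$ is 15 m, and the target region measures $L \times W$ m$^2$. All 15 algorithms from Section 4.2 and Section 4.3 were used in this experiment. The population size, number of runs, and MaxFEs were set to 40, 30, and 300,000, respectively.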
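The coverage model (binary sensing within radius $R_s$, joint coverage $1 - \prod_i (1 - p_i)$, and the covered fraction of $L \times W$ pixels) can be evaluated on a discrete grid. The sketch assumes one pixel per square metre, represented by its centre, and decodes a $2N$-dimensional decision vector as $(x_1, y_1, \ldots, x_N, y_N)$, which gives 30 dimensions for 15 nodes. The function names, the pixel-centre convention, and the 50 × 50 area in the usage line are illustrative assumptions. A minimizing optimizer can use 1 − coverage as its objective.

```python
import math
import random


def coverage_rate(position, length, width, radius):
    """Coverage rate of an L x W area under the binary sensing model.

    `position` is a flat vector (x1, y1, ..., xN, yN) of node coordinates.
    Each 1 m x 1 m pixel is represented by its centre (an assumption).
    """
    nodes = list(zip(position[0::2], position[1::2]))
    covered = 0
    for gx in range(length):
        for gy in range(width):
            px, py = gx + 0.5, gy + 0.5
            # Joint coverage P = 1 - prod(1 - p_i); with p_i in {0, 1}
            # it equals 1 as soon as any node is within the sensing radius.
            miss = 1
            for nx, ny in nodes:
                p = 1 if math.hypot(nx - px, ny - py) <= radius else 0
                miss *= 1 - p
            covered += 1 - miss
    return covered / (length * width)


def coverage_objective(position, length, width, radius=15.0):
    """Minimization form for PSO-style optimizers: 1 - coverage rate."""
    return 1.0 - coverage_rate(position, length, width, radius)


# Random deployment of 15 nodes (30 variables) in an illustrative 50 x 50 area.
rng = random.Random(0)
side = 50
position = [rng.uniform(0, side) for _ in range(30)]
print(coverage_rate(position, side, side, 15.0))
```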

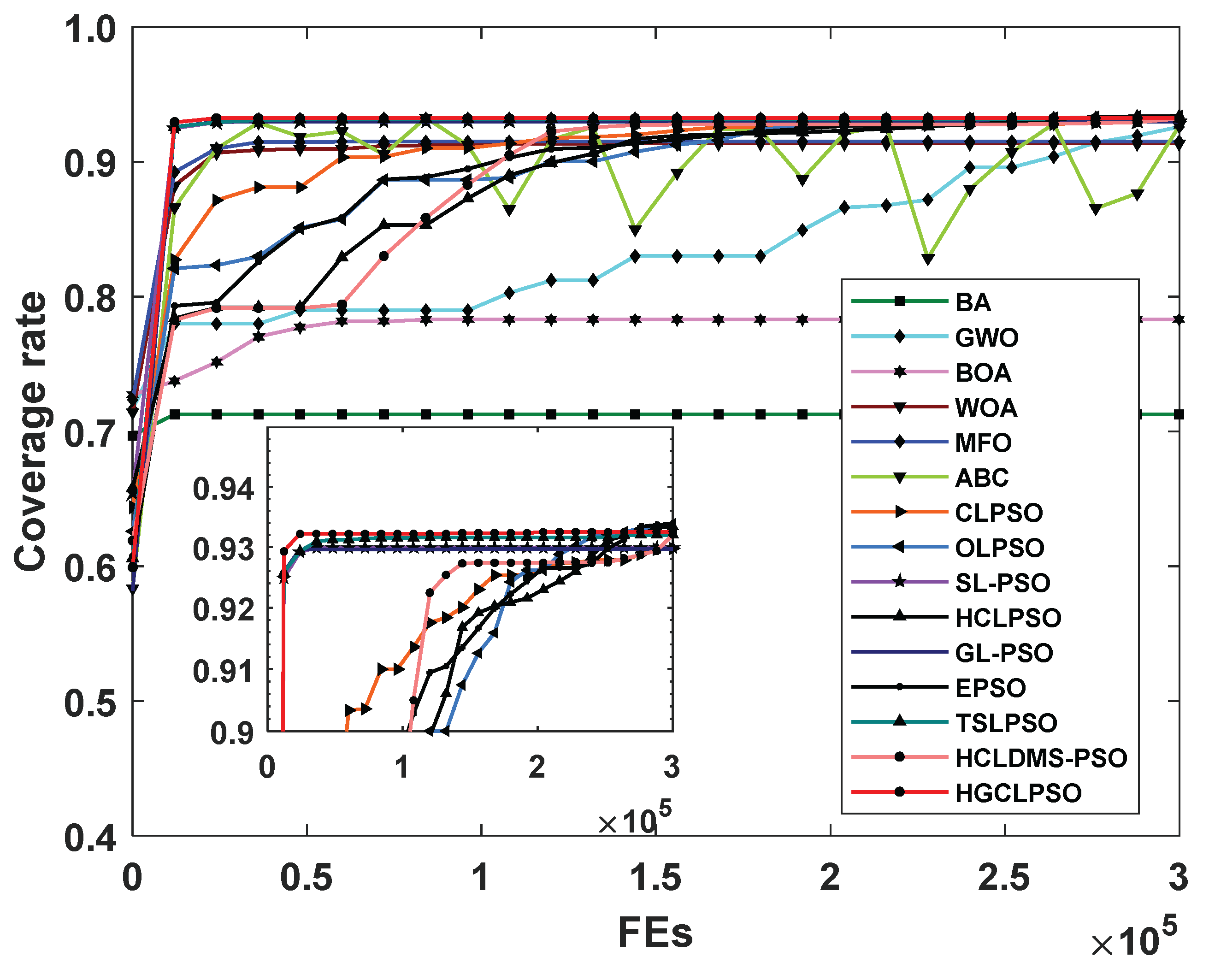

As shown in Table 13, HGCLPSO achieves the highest mean coverage rate, 0.9351, ahead of EPSO and ABC, which rank second and third, respectively. In terms of mean coverage rate, HGCLPSO therefore outperforms all 14 competing algorithms, namely the eight other PSO variants and the six other EAs. The coverage rate convergence curve in Figure 5 shows that HGCLPSO converges notably fast in the early stages. Overall, these results indicate that HGCLPSO is effective not only on a wide range of benchmark functions but also on real-world engineering problems such as WSN coverage control.

4.5. Discussion

The above experimental results demonstrate the effectiveness of HGCLPSO on a range of problems from the CEC2014 and CEC2017 test suites, as well as on a real-world engineering problem, in terms of convergence accuracy, speed, and reliability. Algorithms such as CLPSO, OLPSO, SL-PSO, and GL-PSO rely on a single learning strategy, which is less effective on more complex problems. In contrast, HGCLPSO employs GLS and CLS to construct the exploitation and exploration sub-populations, respectively. Like HCLPSO, TSLPSO, and HCLDMS-PSO, HGCLPSO uses different learning strategies to generate its sub-populations. A novel PEGA mechanism is introduced to enhance exploitation, and a repulsive mechanism is incorporated to prevent premature convergence of the CLS sub-population. Additionally, a local search operator is applied in the later stages of evolution to help HGCLPSO avoid local optima. Owing to these strategies, HGCLPSO demonstrates strong optimization performance on most problems, achieving the best results on 12 and 13 functions of the 30D and 50D CEC2014 test suites, respectively. However, HGCLPSO does not perform as well on certain functions, such as F6, F7, F22, and F27, which lowers its overall ranking. On the CEC2017 test suite, HGCLPSO shows sub-optimal performance on F7, F8, F9, F10, F21, and F24. This behavior is consistent with the “no free lunch” theorem [52], which states that no single algorithm can outperform all others across every type of problem.

The per-function ranks, together with the two statistical tests, indicate that HGCLPSO and the three two-sub-population PSO variants that use the CL strategy (HCLPSO, TSLPSO, and HCLDMS-PSO) generally outperform the other PSO variants. These variants rely on the strong exploration ability of the CL strategy and construct their exploitation sub-populations through different mechanisms. This combination provides an effective paradigm for balancing exploration and exploitation: it helps particles explore new areas, increases their chances of escaping local optima, and avoids premature convergence. Furthermore, in HGCLPSO, GL and CL exemplars guide the particle search in place of the conventional pbest and gbest. Because each particle follows a single exemplar instead of being pulled toward two possibly conflicting positions, this single-guided learning mechanism helps avoid the “oscillation” problem often seen with dual guidance in traditional PSO. In GL-PSO, however, there is no guarantee that the learning exemplars remain effective in all dimensions. If an exemplar degrades in certain dimensions, particles learning from it may reduce the algorithm’s efficiency, leading to the “two steps forward, one step back” issue. The proposed PEGA mechanism addresses this by preserving the valuable information in particles, which keeps the exemplars from degrading and mitigates this phenomenon.
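The CL strategy discussed here originates in CLPSO: each dimension of a particle's exemplar is copied either from its own pbest or, with learning probability Pc, from the better of two randomly chosen other particles' pbests (a tournament), and the velocity is then driven by that single exemplar instead of by both pbest and gbest. The sketch follows this textbook CLPSO rule under simplifying assumptions (fixed Pc, illustrative w and c, at least three particles, no refreshing gap or velocity clamping). It is not the full HGCLPSO update, and the function names are hypothetical.

```python
import random


def tournament_pick(i, pbest_fit, rng):
    """Pick two particles other than i and return the one with the better pbest."""
    a, b = rng.sample([j for j in range(len(pbest_fit)) if j != i], 2)
    return a if pbest_fit[a] <= pbest_fit[b] else b


def cl_exemplar(i, pbest, pbest_fit, pc, rng):
    """Build a comprehensive-learning exemplar for particle i (minimization)."""
    dim = len(pbest[i])
    exemplar, learned_from_other = [], False
    for d in range(dim):
        if rng.random() < pc:
            exemplar.append(pbest[tournament_pick(i, pbest_fit, rng)][d])
            learned_from_other = True
        else:
            exemplar.append(pbest[i][d])
    if not learned_from_other:
        # CLPSO forces at least one dimension to learn from another particle.
        d = rng.randrange(dim)
        exemplar[d] = pbest[tournament_pick(i, pbest_fit, rng)][d]
    return exemplar


def single_guided_step(x, v, exemplar, w, c, rng):
    """v <- w*v + c*r*(exemplar - x); x <- x + v  (one exemplar, no gbest term)."""
    new_v = [w * vd + c * rng.random() * (ed - xd) for xd, vd, ed in zip(x, v, exemplar)]
    new_x = [xd + vd for xd, vd in zip(x, new_v)]
    return new_x, new_v


# Usage with three particles in 3D (placeholder values).
rng = random.Random(42)
pbest = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 2.0, 2.0]]
fit = [0.0, 1.0, 2.0]
ex = cl_exemplar(0, pbest, fit, pc=0.3, rng=rng)
print(ex, single_guided_step([0.5, 0.5, 0.5], [0.0, 0.0, 0.0], ex, 0.7, 1.5, rng))
```

Because the velocity term has a single attractor per dimension, there is no competing pull between pbest and gbest, which is the intuition behind avoiding the oscillation problem.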

5. Conclusions

In this paper, we introduce a novel PSO variant, HGCLPSO, which uses GLS and CLS to create two distinct sub-populations and thereby balances exploration and exploitation, a long-standing core challenge in swarm intelligence optimization. To enhance the exploitation capability of the GLS sub-population, we propose a Potentially Excellent Gene Activation (PEGA) mechanism. This mechanism updates the Abest position by learning from the high-performing genes of individual particles, which helps discover better global solutions and addresses the lack of targeted gene-driven optimization in existing PSO variants. Additionally, to prevent premature convergence in the CLS sub-population and preserve diversity, we incorporate a repulsive mechanism that increases the repulsive force when individuals come too close to the optimal position. Finally, in the later stages of evolution, a BFGS quasi-Newton local search operator is applied to Abest to help avoid local optima, which improves the reliability of the optimization results on complex problems.
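A later-stage BFGS refinement of Abest can be sketched with SciPy's quasi-Newton implementation, `scipy.optimize.minimize(method="BFGS")`. The trigger fraction of the budget, the cap on BFGS iterations, and the rule of accepting the result only if it improves Abest are illustrative assumptions, as is the function name `local_search`; gradients are approximated by finite differences inside SciPy.

```python
import numpy as np
from scipy.optimize import minimize, rosen


def local_search(func, abest, abest_fit, fes, max_fes,
                 start_fraction=0.9, max_iter=50):
    """Refine Abest with BFGS once fes >= start_fraction * max_fes.

    Returns (position, fitness, evaluations_used). The BFGS result replaces
    Abest only if it is better. res.nfev is not capped here, so a strict
    MaxFEs protocol would also need to limit these evaluations.
    """
    if fes < start_fraction * max_fes:
        return abest, abest_fit, 0  # too early: leave the search to the swarm
    res = minimize(func, np.asarray(abest, dtype=float), method="BFGS",
                   options={"maxiter": max_iter})
    if res.fun < abest_fit:
        return res.x, float(res.fun), res.nfev
    return abest, abest_fit, res.nfev


# Usage on the Rosenbrock function from a hypothetical Abest.
x0 = [1.3, 0.7]
f0 = float(rosen(np.array(x0)))
x_new, f_new, used = local_search(rosen, x0, f0, fes=95_000, max_fes=100_000)
print(f0, f_new, used)
```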

We compare HGCLPSO with eight state-of-the-art PSO variants on the CEC2014 test suite in terms of solution accuracy, convergence speed, statistical significance, and computational time. The results show that HGCLPSO achieves competitive accuracy while maintaining fast convergence. Additional experiments on the CEC2017 test suite and on a real-world engineering problem, WSN coverage control, further demonstrate the superiority of HGCLPSO over several other PSO variants and EAs, highlighting its potential as an efficient and robust optimization tool for both academic research and industrial applications. The research significance of HGCLPSO lies in two aspects: first, it enriches the theoretical framework of PSO variants by integrating multi-strategy collaboration and adaptive mechanism design, offering new insights for the development of swarm intelligence algorithms; second, it addresses critical limitations of existing algorithms in balancing exploration and exploitation and in maintaining diversity, which advances optimization technology for complex problems.

Future research will focus on developing new mechanisms or exploring alternative strategies to better balance exploitation and exploration capabilities, particularly for high-dimensional and multi-objective optimization problems. Additionally, in our ongoing studies, we plan to apply HGCLPSO to more complex real-world engineering challenges, such as renewable energy system optimization and intelligent manufacturing process scheduling, to expand its application scope and maximize its practical impact.