Domain-Specific Few-Shot Table Prompt Question Answering via Contrastive Exemplar Selection

Abstract

1. Introduction

2. Related Work

2.1. Table QA on General Domains

2.2. Table QA on Specific Domains

2.3. Few-Shot Table QA

3. Methodology

3.1. Task Formulation

3.2. Data Characteristics in Specific Domains

3.3. Architecture

3.4. Subsection

| Algorithm 1. The Prompt Template Generation Strategy |
|---|
| The input information is as follows: |
| Question: {0} |
| Table name: {1} |
| Table headers: {2} |
| Given function list: ["", "AVG", "MAX", "MIN", "COUNT", "SUM"] |
| Given condition operator list: [">", "<", "=", "!="] |
| Return results in the following format: |
| { |
| "SQL": "", |
| "Aggregate function": "", |
| "Query column": "", |
| "Where condition column": [], |
| "Where condition operator": [], |
| "Where condition value": [], |
| "Number of where conditions": "" |
| } |
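The template in Algorithm 1 has three slots ({0}, {1}, {2}) for the question, the table name, and the table headers. A minimal sketch of filling it is given below; the function name `build_prompt` and the choice to serialize the lists with `json.dumps` are assumptions made for illustration, not details taken from the paper.

```python
import json

# Candidate lists and answer format copied from Algorithm 1.
AGG_FUNCTIONS = ["", "AVG", "MAX", "MIN", "COUNT", "SUM"]
COND_OPERATORS = [">", "<", "=", "!="]
ANSWER_FORMAT = {
    "SQL": "",
    "Aggregate function": "",
    "Query column": "",
    "Where condition column": [],
    "Where condition operator": [],
    "Where condition value": [],
    "Number of where conditions": "",
}


def build_prompt(question: str, table_name: str, headers: list) -> str:
    """Fill the three slots of the template (hypothetical helper)."""
    lines = [
        "The input information is as follows:",
        f"Question: {question}",
        f"Table name: {table_name}",
        f"Table headers: {json.dumps(headers, ensure_ascii=False)}",
        f"Given function list: {json.dumps(AGG_FUNCTIONS)}",
        f"Given condition operator list: {json.dumps(COND_OPERATORS)}",
        "Return results in the following format:",
        json.dumps(ANSWER_FORMAT, indent=2),
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt(
        "How many provincial-level departments are there?",
        "cbms_dept",
        ["DepartmentCode", "DepartmentLevel"],
    ))
```

Asking for a fixed JSON object rather than free-form SQL makes the response straightforward to parse and lets each field be checked separately.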

3.5. Exemplar Retrieval Approach

| Algorithm 2. The Prompt Template Retrieval Strategy |
|---|
| Input: Training dataset, test set. |
| Output: Relevant subset for the test set. |
| (1) Initialize a candidate set of training exemplars. |
| (2) Use the large language model to independently score each candidate exemplar. |
| (3) Based on the scores from (2), define a positive exemplar set and a negative exemplar set. |
| (4) Construct the contrastive learning training data. |
| (5) Compute the inner product. |
| (6) Minimize the contrastive loss. |
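Steps (3), (5), and (6) of Algorithm 2 can be illustrated with a small sketch. The loss formula is not recoverable from the text above, so the code assumes a standard softmax-style contrastive objective over inner products, as commonly used in dense retrieval. The helper names, the top-k/bottom-k split, and the toy vectors are all hypothetical.

```python
import math


def split_by_score(candidates, scores, k):
    """Step (3): top-k scored candidates are positives, bottom-k are negatives."""
    ranked = [c for _, c in sorted(zip(scores, candidates),
                                   key=lambda pair: pair[0], reverse=True)]
    return ranked[:k], ranked[-k:]


def inner_product(u, v):
    """Step (5): similarity between two embedding vectors."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(query_vec, positive_vec, negative_vecs):
    """Step (6), assumed form: negative log-softmax of the positive similarity."""
    logits = [inner_product(query_vec, positive_vec)]
    logits += [inner_product(query_vec, n) for n in negative_vecs]
    m = max(logits)  # subtract the maximum for numerical stability
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - logits[0]


if __name__ == "__main__":
    positives, negatives = split_by_score(["a", "b", "c", "d"],
                                          [0.1, 0.9, 0.5, 0.2], k=1)
    print(positives, negatives)
    print(contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]]))
```

Under this assumed objective, the loss shrinks as the query embedding moves closer to the positive exemplar and further from the negatives, which is the behavior a retriever needs at test time.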

| Algorithm 3. ESQL Application Example | |
|---|---|
| Input | Question: For projects with an estimated net profit year-on-year growth rate (compared to the previous period) below 44.33% and project estimated total profit (excluding price reduction impact) higher than 3471.44, what is the average project estimated total profit? |
| | Table Name: table_6 |
| Output | Example 1: |
| | Question: What is the lowest estimated net profit year-on-year growth rate (compared to the previous period) in projects with funding source types and year-end balances equal to 3975.46 and 1985.13, respectively? |
| | Expected SQL Query Result: |
| | { |
| | "sql": "select MIN(estimated net profit year-on-year growth (compared to the previous period)) from table_6 where funding source type = '3975.46' and year-end balance = '1985.13'", |
| | "Aggregation Function": "MIN", |
| | "Query Column": "estimated net profit year-on-year growth (compared to the previous period)", |
| | "where condition columns": ["funding source type", "year-end balance"], |
| | "where condition operators": ["=", "="], |
| | "where condition values": ["3975.46", "1985.13"], |
| | "where condition count": "2" |
| | } |

| Algorithm 4. SMI-SQL Application Example | |
|---|---|
| Input | Question: How many provincial-level departments are there? |
| | Table Name: cbms_dept |
| Output | Example 1: |
| | Question: How many municipal-level departments are there? |
| | Expected SQL Query Result: |
| | { |
| | "sql": "select COUNT(DepartmentCode) from cbms_dept where DepartmentLevel = 'municipal'", |
| | "Aggregation Function": "COUNT", |
| | "Query Column": "DepartmentCode", |
| | "Where Condition Columns": ["DepartmentLevel"], |
| | "Where Condition Operators": ["="], |
| | "Where Condition Values": ["municipal"], |
| | "Where Condition Count": "1" |
| | } |
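In the application examples, the structured fields repeat the information in the "sql" string. The sketch below shows how the two relate by rebuilding the query from the fields of the Algorithm 4 example. The helper `assemble_sql` is hypothetical and uses plain string formatting for illustration only; it is not suitable for untrusted input.

```python
def assemble_sql(table, agg, query_column, cond_columns, cond_ops, cond_values):
    """Rebuild a single-table query from the structured fields (illustrative)."""
    # An empty aggregate function means the column is selected directly.
    select_expr = f"{agg}({query_column})" if agg else query_column
    sql = f"select {select_expr} from {table}"
    conditions = [
        f"{col} {op} '{val}'"
        for col, op, val in zip(cond_columns, cond_ops, cond_values)
    ]
    if conditions:
        sql += " where " + " and ".join(conditions)
    return sql


if __name__ == "__main__":
    # Fields taken from the exemplar in Algorithm 4.
    print(assemble_sql(
        table="cbms_dept",
        agg="COUNT",
        query_column="DepartmentCode",
        cond_columns=["DepartmentLevel"],
        cond_ops=["="],
        cond_values=["municipal"],
    ))
    # prints: select COUNT(DepartmentCode) from cbms_dept where DepartmentLevel = 'municipal'
```

A round trip of this kind can also serve as a consistency check: if the rebuilt query differs from the returned "sql" field, the response is internally inconsistent.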

4. Experiments

4.1. Experimental Settings

4.2. Experimental Results

4.3. Case Analysis

4.4. Model Performance

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Number of Columns | 0~5 | 5~10 | 10~15 | 15~20 | >20 |
|---|---|---|---|---|---|---|
| ESQL | Quantity | 0 | 0 | 0 | 300 | 1200 |
| | Proportion | 0 | 0 | 0 | 20% | 80% |
| SMI-SQL | Quantity | 14 | 320 | 342 | 547 | 277 |
| | Proportion | 0.93% | 21.33% | 22.80% | 36.47% | 18.47% |

| Dataset | 1 Shot | Test |
|---|---|---|
| ESQL | 1 | 1500 |
| SMI-SQL | 1 | 1500 |

| Hyperparameter | Optimizer | Batch Size | Learning Rate | Epoch |
|---|---|---|---|---|
| Value | Adam | 120 | 1 × 10⁻⁴ | 30 |

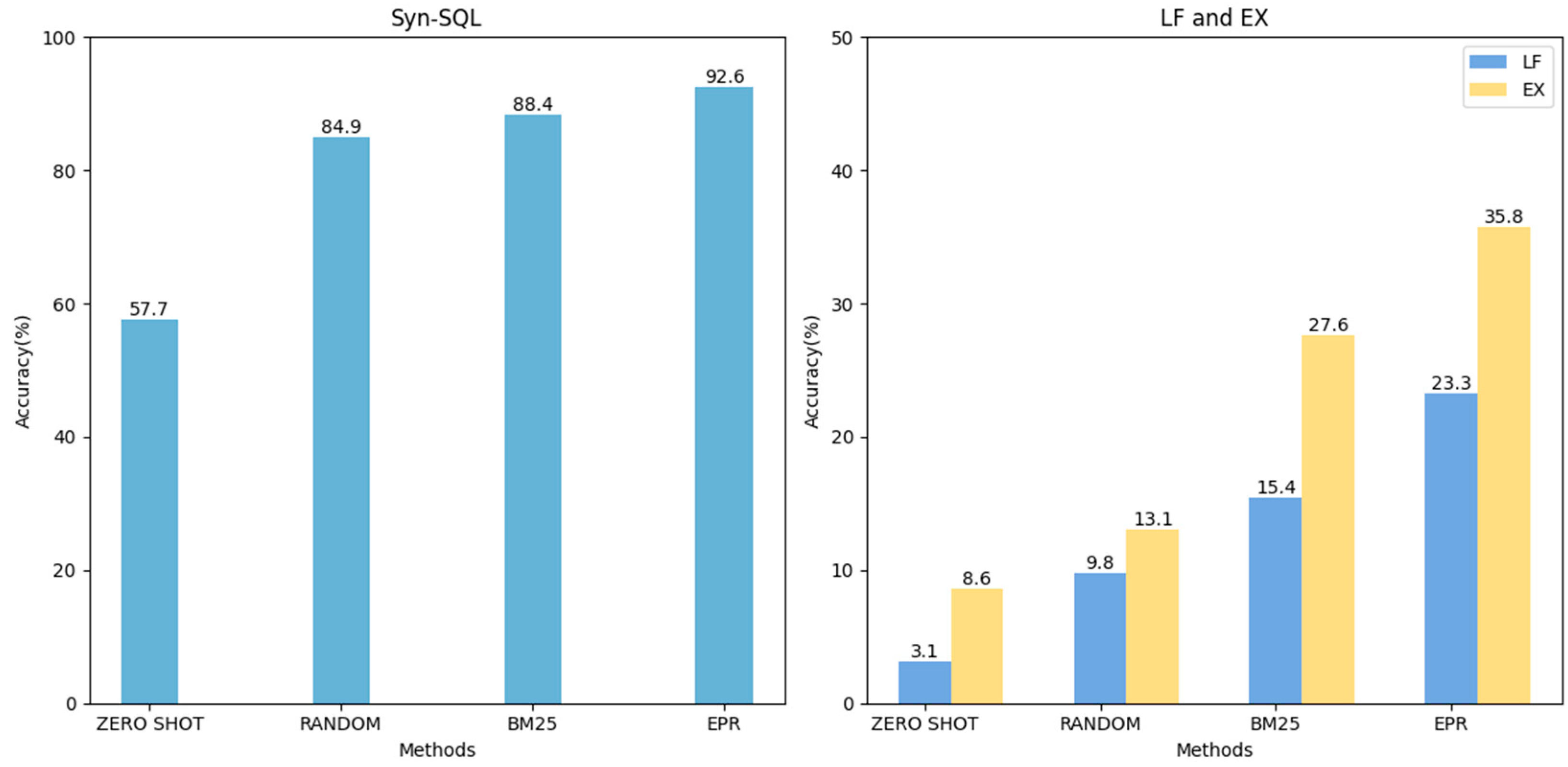

| Models | Syn-SQL | LF | EX |
|---|---|---|---|
| ZERO SHOT | 57.7% | 3.1% | 8.6% |
| RANDOM | 84.9% | 9.8% | 13.1% |
| BM25 | 88.4% | 15.4% | 27.6% |
| EPR (Ours) | 92.6% | 23.3% | 35.8% |

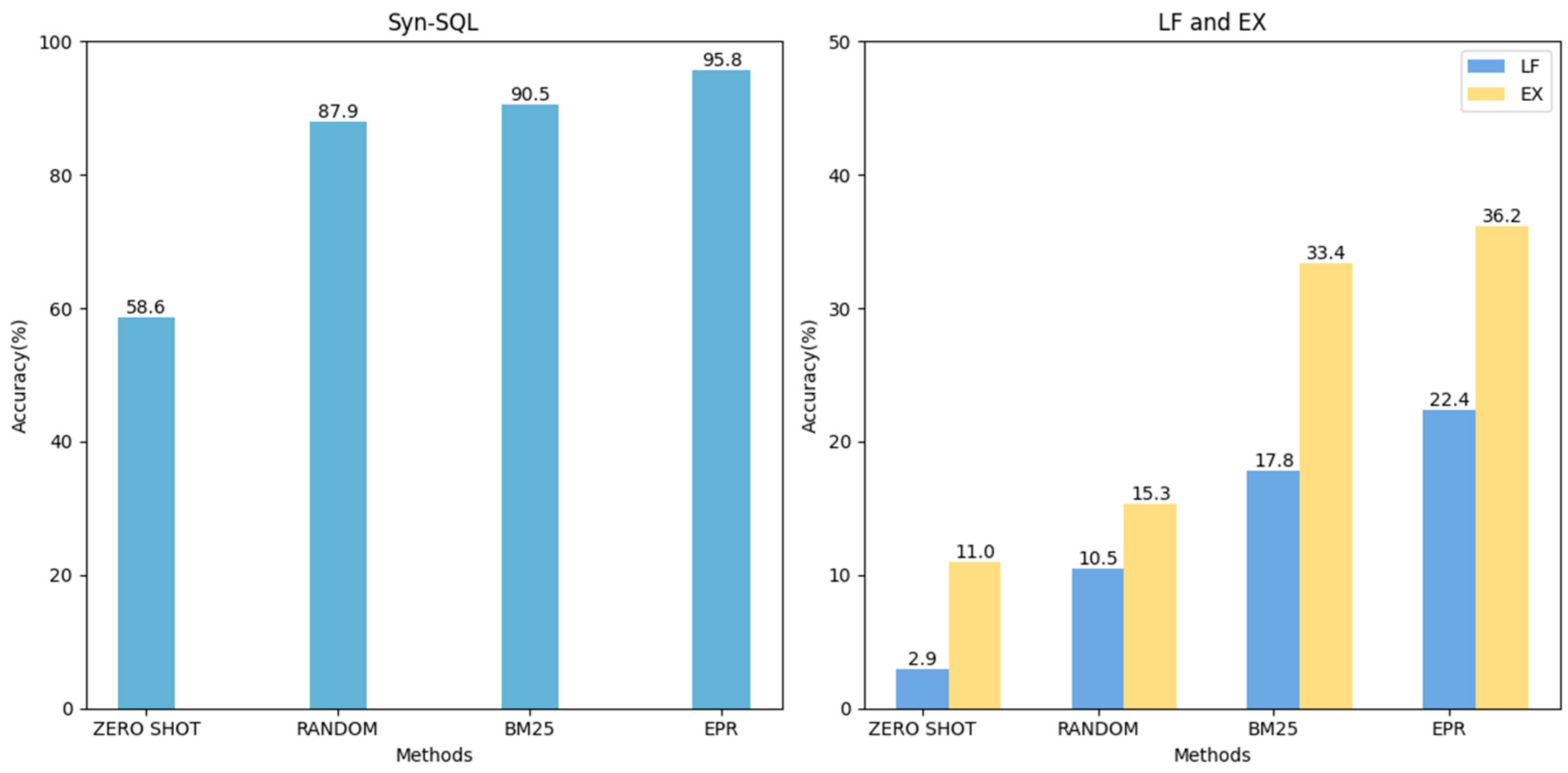

| Models | Syn-SQL | LF | EX |
|---|---|---|---|
| ZERO SHOT | 58.6% | 2.9% | 11.0% |
| RANDOM | 87.9% | 10.5% | 15.3% |
| BM25 | 90.5% | 17.8% | 33.4% |
| EPR (Ours) | 95.8% | 22.4% | 36.2% |
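The LF and EX columns are not defined in this excerpt. Assuming they follow the usual text-to-SQL convention (logical-form accuracy as a string match on the query, execution accuracy as a match on the query results), the difference between the two can be sketched with an in-memory SQLite table. The table, rows, and helper names below are hypothetical.

```python
import sqlite3


def normalize(sql):
    """Lower-case and collapse whitespace before comparing query strings."""
    return " ".join(sql.lower().split())


def logical_form_match(pred, gold):
    """Assumed LF criterion: the normalized query strings are identical."""
    return normalize(pred) == normalize(gold)


def execution_match(conn, pred, gold):
    """Assumed EX criterion: both queries return the same rows."""
    try:
        pred_rows = conn.execute(pred).fetchall()
    except sqlite3.Error:
        return False  # a query that fails to run counts as a miss
    gold_rows = conn.execute(gold).fetchall()
    return sorted(pred_rows, key=repr) == sorted(gold_rows, key=repr)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE dept (code TEXT, level TEXT)")
    conn.executemany(
        "INSERT INTO dept VALUES (?, ?)",
        [("a", "municipal"), ("b", "provincial"), ("c", "municipal")],
    )
    gold = "SELECT COUNT(code) FROM dept WHERE level = 'municipal'"
    pred = "SELECT COUNT(*) FROM dept WHERE level = 'municipal'"
    print(logical_form_match(pred, gold), execution_match(conn, pred, gold))
```

Under these assumed definitions, a prediction can fail the string comparison yet still return the correct rows, which is consistent with EX exceeding LF for every method in the tables above.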

| Models | SC | SA | WN | WC | WO | WV |
|---|---|---|---|---|---|---|
| ZERO SHOT | 22.3% | 28.9% | 41.4% | 17.6% | 24.4% | 22.9% |
| RANDOM | 41.8% | 33.5% | 44.6% | 27.4% | 36.8% | 26.4% |
| BM25 | 59.6% | 37.6% | 49.7% | 34.6% | 37.4% | 27.5% |
| EPR (Ours) | 61.8% | 44.5% | 52.3% | 37.5% | 45.8% | 34.2% |

| Models | SC | SA | WN | WC | WO | WV |
|---|---|---|---|---|---|---|
| ZERO SHOT | 45.3% | 24.5% | 33.7% | 15.6% | 20.3% | 17.1% |
| RANDOM | 53.4% | 32.9% | 45.2% | 22.8% | 32.7% | 22.5% |
| BM25 | 58.9% | 37.4% | 47.9% | 30.5% | 38.9% | 27.8% |
| EPR (Ours) | 63.5% | 43.6% | 50.4% | 39.7% | 47.5% | 33.7% |
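The column abbreviations SC, SA, WN, WC, WO, and WV are not expanded in this excerpt. Assuming they denote the select column, select aggregation, number of where conditions, where columns, where operators, and where values, per-component accuracy can be computed directly from the structured output of Algorithm 1. The mapping and the function below are a hypothetical sketch of that computation.

```python
# Assumed mapping from metric abbreviation to the Algorithm 1 output field.
COMPONENTS = {
    "SC": "Query column",
    "SA": "Aggregate function",
    "WN": "Number of where conditions",
    "WC": "Where condition column",
    "WO": "Where condition operator",
    "WV": "Where condition value",
}


def component_accuracy(predictions, golds):
    """Fraction of examples whose predicted field equals the gold field."""
    if not golds:
        return {name: 0.0 for name in COMPONENTS}
    hits = {name: 0 for name in COMPONENTS}
    for pred, gold in zip(predictions, golds):
        for name, key in COMPONENTS.items():
            if pred.get(key) == gold.get(key):
                hits[name] += 1
    return {name: count / len(golds) for name, count in hits.items()}


if __name__ == "__main__":
    gold = {
        "Query column": "DepartmentCode",
        "Aggregate function": "COUNT",
        "Number of where conditions": "1",
        "Where condition column": ["DepartmentLevel"],
        "Where condition operator": ["="],
        "Where condition value": ["municipal"],
    }
    wrong_operator = dict(gold, **{"Where condition operator": ["!="]})
    print(component_accuracy([gold, wrong_operator], [gold, gold]))
```

Scoring each field separately shows which part of the query a method gets wrong, which a single overall accuracy figure cannot.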

| Models | Result |
|---|---|
| Question-1 | For an organization with a net profit lower than 4845.59 and a factory reporting a total amount higher than 1383.54, what is the highest amount of goods replenishment? |
| Golden | SELECT MAX (‘The amount of goods replenishment’) FROM table_2 WHERE ‘The net profit’ < “4845.59” AND ‘The factory reporting total amount’ > “1383.54” |
| ZERO SHOT | SELECT MAX (‘The amount of goods replenishment’) FROM table_2 WHERE ‘Institution’ < “4845.59” AND ‘The factory reporting total amount’ > “1383.54” GROUP BY ‘Institution’ |
| RANDOM | SELECT |
| BM25 | SELECT MAX (‘The amount of goods replenishment’) FROM table_2 WHERE ‘The net profit’ < “4845.59” OR ‘The factory reporting total amount’ > “1383.54” |
| EPR (Ours) | SELECT MAX (‘The amount of goods replenishment’) FROM table_2 WHERE ‘The net profit’ < “4845.59” AND ‘The factory reporting total amount’ > “1383.54” |
| Question-2 | What is the total amount of non-current assets due within one year outside of Taizhou City? |
| Golden | SELECT SUM(‘The non-current assets due within one year’) FROM table_5 WHERE ‘City of residence’!=“Taizhou” |
| ZERO SHOT | NOSQL |
| RANDOM | SELECT SUM (‘The non-current assets due within one year’) AS ‘Sum’ FROM table_5 WHERE ‘Province of residence.’ = “Taizhou” AND ‘The non-current assets due within one year’ IS NOT NULL GROUP BY ‘Province of residence’ HAVING ‘Province of residence’ != “Taizhou” |
| BM25 | SELECT |
| EPR (Ours) | SELECT SUM (‘The non-current assets due within one year’) FROM table_5 WHERE ‘City of residence’!=“Taizhou” |

| Models | Result |
|---|---|
| Question-1 | The expansion joint device on the Dongdagou Bridge was evaluated at what time? |
| Golden | SELECT ‘Evaluation time’ FROM evaluate_bridge_part_evaluation WHERE ‘Bridge name’ = ‘Dongdagou Bridge’ AND ‘Bridge component name’ = “Expansion joint” |
| ZERO SHOT | NOSQL |
| RANDOM | SELECT ‘Evaluation time’ FROM evaluate_bridge_part_evaluation WHERE ‘Bridge name’ = ‘Dongdagou Bridge’ AND ‘Bridge component code’ = “Expansion joint” |
| BM25 | SELECT SUM (‘Evaluation time’) FROM evaluate_bridge_part_evaluation WHERE ‘Bridge name’ = ‘Dongdagou Bridge’ AND ‘Bridge component name’ = “Expansion joint” |
| EPR (Ours) | SELECT ‘Evaluation time’ FROM evaluate_bridge_part_evaluation WHERE ‘Bridge name’ = ‘Dongdagou Bridge’ AND ‘Bridge component name’ = “Expansion joint” |
| Question-2 | The minimum abnormal value collected for anchor cable gauge 28-9 on Yuehai Bridge is how much? |
| Golden | SELECT MIN (‘The abnormal value collection amount’) FROM analy_monitor_type WHERE ‘Bridge name’ = ‘Yuehai Bridge’ AND ‘Sensor name’ = “Anchor cable gauge 28-9” |
| ZERO SHOT | SELECT MIN (‘The abnormal value collection amount’) FROM analy_monitor_type WHERE ‘Bridge code’ = “Yuehai Bridge” AND ‘Sensor code’ = “Anchor cable gauge 28-9” AND ‘The abnormal value collection amount’ < (SELECT MIN(‘The abnormal value collection amount’) FROM analy_monitor_type WHERE ‘Bridge code’ = “Yuehai Bridge” AND ‘Sensor code’ = “Anchor cable gauge 28-9”) GROUP BY ‘Sensor code’ |
| RANDOM | SELECT MIN (‘The abnormal value collection amount’) FROM analy_monitor_type WHERE ‘Bridge name’ = ‘Yuehai Bridge’ AND ‘Sensor code’ = “Anchor cable gauge 28-9” |
| BM25 | SELECT AVG (‘The abnormal value collection amount’) FROM analy_monitor_type WHERE ‘Bridge name’ = ‘Yuehai Bridge’ AND ‘Sensor name’ = “Anchor cable gauge 28-9” |
| EPR (Ours) | SELECT MIN (‘The abnormal value collection amount’) FROM analy_monitor_type WHERE ‘Bridge name’ = ‘Yuehai Bridge’ AND ‘Sensor name’ = “Anchor cable gauge 28-9” |

| Dataset | Models | Result |
|---|---|---|
| ESQL | Question-1 | The total number of units for which the Engineering Project Management Index is not equal to 3959.82 is how many? |
| | Golden | SELECT COUNT (‘Company name’) FROM table_4 WHERE ‘the Engineering Project Management Index’ != “3959.82” |
| | EPR | SELECT COUNT (‘Company name’) FROM table_4 WHERE ‘the Engineering Project Management Index’ < “3959.82” |
| | Question-2 | In companies located in Jiangxi province with a product inventory turnover rate of less than 45.42%, how many regions have operating revenues higher than 4730.85 units? |
| | Golden | SELECT COUNT (‘Company name’) FROM table_8 WHERE ‘province’ = “Jiangxi” AND ‘The product inventory turnover rate’ < “45.42%” AND ‘The regional operating income’ > “4730.85” |
| | EPR | SELECT COUNT(*) FROM table_8 WHERE ‘The product inventory turnover rate’ < “45.42%” AND ‘The regional operating income’ > “4730.85” AND |
| SMI-SQL | Question-3 | The status of anchor cable gauge 45-14 of the main bridge (steel-concrete composite arch bridge) of the Yuehai Bridge is what? |
| | Golden | SELECT ‘Sensor status’ FROM t_sensor WHERE ‘Bridge name’ = “The main bridge (steel-concrete composite arch bridge) of the Yuehai Bridge” AND ‘Sensor name’ = “The anchor cable gauge 45-14” |
| | EPR | SELECT ‘Grade code’, ‘Sensor status’ FROM t_sensor WHERE ‘Grade code’ = “45-14” AND ‘Sensor name’ = “The anchor cable gauge” |
| | Question-4 | How many very long tunnels are there in Yuanzhou District? |
| | Golden | SELECT COUNT (‘Tunnel name’) FROM tunnel_basic WHERE ‘Locality’ = “Yuanzhou District” AND ‘Tunnel classification’ = “Very long tunnels” |
| | EPR | SELECT COUNT(*) FROM tunnel_basic WHERE ‘Tunnel classification’ = “Very long tunnels” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mo, T.; Xiao, Q.; Zhang, H.; Li, R.; Wu, Y. Domain-Specific Few-Shot Table Prompt Question Answering via Contrastive Exemplar Selection. Algorithms 2024, 17, 278. https://doi.org/10.3390/a17070278
