Abstract

Support Vector Regression (SVR), which converts the original low-dimensional problem into a linear problem in a high-dimensional kernel space by introducing kernel functions, has been successfully applied in system modeling. In the classical SVR algorithm, the kernel space is constructed from the values of the features, while their contributions to the model output are ignored, so the construction of the kernel space may not be reasonable. In this paper, a Feature-Weighted SVR (FW-SVR) is presented. The range of each feature is matched with its contribution by assigning appropriate weights to the input features during data pre-processing. FW-SVR thus optimizes the distribution of the sample points in the kernel space, making structural risk minimization more reasonable. Experiments on four synthetic datasets and seven real datasets show that the proposed method achieves superior generalization ability.

1. Introduction

SVR is a powerful kernel-based method for regression problems [1,2,3]. By introducing kernel functions, it converts the original low-dimensional problem into a linear problem in a high-dimensional kernel space. When a system is modeled with limited training samples, SVR balances the empirical risk and the confidence interval according to the principle of structural risk minimization. This avoids the over-fitting caused by an overly complex model while keeping the model sufficiently close to the training data, which ensures its generalization performance [4,5,6,7,8].

The generalization ability of an SVR model is determined by the kernel space feature [9]. The value of a kernel element can be regarded as a similarity measure between samples in the kernel space. The kernel function simplifies the calculation of the inner product in the kernel space, thereby avoiding the curse of dimensionality. However, classical SVR ignores the contribution of each feature to the output. In some cases, for example when an unimportant feature has a large dynamic range, the similarity of samples in the kernel space may be dominated by that feature, so that the kernel matrix cannot convey sufficient information about the training set to the model. The optimization based on structural risk minimization is then affected.

At present, research on SVR modeling focuses on model construction and parameter optimization [10,11,12,13], while data pre-processing is neglected. Data normalization methods, such as min-max normalization and Z-normalization, are the most widely used pre-processing methods [14,15,16]. Min-max normalization linearly maps raw data to [0, 1] or [−1, 1]. Z-normalization transforms the raw dataset into a dataset with zero mean and unit variance. Normalization overcomes the numerical difficulties caused by large differences in dynamic range among input features. However, there is no evidence that normalization always improves generalization performance, and whether to normalize is still decided by engineering experience. In some studies, feature selection is used to remove unimportant features from the training dataset and thus prevent them from dominating the kernel space feature [17,18,19]. However, this inevitably discards part of the training information.

Weighting methods are also used to improve generalization ability. Zhang et al. developed a forecasting model using weighted SVR in which the weights were determined by the differential evolution (DE) algorithm, and this model yielded high accuracy for building energy consumption forecasting [20]. Liu combined a weighted support vector regression machine with feature selection to predict electricity load, and the algorithm gave good prediction results [21]. Han and Clemmensen added weights to the slack variables in the constraints to predict house prices [22]. These weighted SVR algorithms take the importance of individual sample points into account and can be used to reduce the influence of outliers or noise; however, they ignore the importance of individual features. Recently, several works have studied feature weighting for Support Vector Machine (SVM) classification [23,24,25,26,27]. Unfortunately, these methods cannot be applied directly to SVR, because the outputs of classification and regression problems differ.

This paper proposes a Feature-Weighted SVR (FW-SVR) modeling method based on the kernel space feature. First, by analyzing the similarity of sample points in the kernel space, we show that the classical method is not reasonable, because it takes the values of the features into account while ignoring their contributions to the model output. Then, after analyzing the limitations of the normalization algorithm and of the feature-selection SVR algorithm, we present the FW-SVR algorithm, which makes the value range of each feature match its contribution.

The contribution of this work is two-fold. First, by analyzing the similarity of sample points in the kernel space, we show that the influence of each feature on the kernel space should match its importance. Second, based on this conclusion, we give a feature-weighting data pre-processing method: by assigning appropriate weights to adjust the value ranges of the features, the influence of each feature on the kernel space is matched with its importance, and the generalization ability of the model is improved. The experiments also verify the first conclusion. The proposed method can be used to guide data pre-processing for SVR modeling.

The paper is organized as follows. Section 2 “Basic Review of SVR” briefly describes SVR theory. Section 3 “Feature-Weighted Support Vector Regression” analyzes the necessity of feature weighting theoretically and then introduces the FW-SVR procedure in detail. Section 4 “Simulation Examples” presents simulation examples, and Section 5 “Conclusions” concludes the paper.

2. Basic Review of SVR

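The training set is given as $\{(\mathbf{x}_i, y_i)\}_{i=1}^{l}$, where each $\mathbf{x}_i \in \mathbb{R}^{n}$ is the i-th input sample containing n features and $y_i \in \mathbb{R}$ is the corresponding output sample. The model function determined by the SVR method can be regarded as a hyperplane in the kernel space and is expressed as follows:

$$f(\mathbf{x}) = \mathbf{w}^{T}\varphi(\mathbf{x}) + b \tag{1}$$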

where $\varphi(\cdot)$ maps the raw input features to a high-dimensional kernel space, $\mathbf{w}$ is the weight vector of the hyperplane, and b is the bias term.

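An ε-insensitive loss function is introduced to avoid over-fitting, and nonnegative slack variables $\xi_i$ and $\xi_i^{*}$ are adopted to relax the constraints for some sample points. SVR modeling is then formulated as the following convex quadratic programming problem:

$$\min_{\mathbf{w},\,b,\,\xi,\,\xi^{*}} \ \frac{1}{2}\lVert\mathbf{w}\rVert^{2} + C\sum_{i=1}^{l}\left(\xi_i + \xi_i^{*}\right) \tag{2}$$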

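subject to:

$$\begin{cases} y_i - \mathbf{w}^{T}\varphi(\mathbf{x}_i) - b \le \varepsilon + \xi_i \\ \mathbf{w}^{T}\varphi(\mathbf{x}_i) + b - y_i \le \varepsilon + \xi_i^{*} \\ \xi_i \ge 0,\ \xi_i^{*} \ge 0, \quad i = 1,\dots,l \end{cases} \tag{3}$$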

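where C > 0 is a penalty parameter. By constructing the Lagrange function, the above convex quadratic programming problem can be reformulated as the dual problem:

$$\max_{\alpha,\,\alpha^{*}} \ -\frac{1}{2}\sum_{i=1}^{l}\sum_{j=1}^{l}(\alpha_i-\alpha_i^{*})(\alpha_j-\alpha_j^{*})\,\varphi(\mathbf{x}_i)^{T}\varphi(\mathbf{x}_j) - \varepsilon\sum_{i=1}^{l}(\alpha_i+\alpha_i^{*}) + \sum_{i=1}^{l}y_i(\alpha_i-\alpha_i^{*}) \tag{4}$$

where $\alpha_i$ and $\alpha_i^{*}$ are Lagrange multipliers.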

The kernel function $K(\mathbf{x}_i,\mathbf{x}_j) = \varphi(\mathbf{x}_i)^{T}\varphi(\mathbf{x}_j)$, which satisfies the Mercer condition, is introduced to replace the inner product in the high-dimensional kernel space in Equation (4). Commonly used kernel functions include the Gaussian, linear, sigmoid, and polynomial kernels [28,29,30]; they are listed in Table 1.

Table 1.

Admissible kernel functions.
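The contents of Table 1 did not survive extraction. As a minimal sketch, the four kernel families named above can be written in plain Python using common textbook parametrizations; the parameter names `sigma`, `gamma`, `r`, and `degree` and their defaults are assumptions and may differ from the parametrization in the paper's Table 1.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length feature vectors."""
    return sum(p * q for p, q in zip(a, b))


def linear_kernel(a: Vector, b: Vector) -> float:
    """K(a, b) = a^T b."""
    return dot(a, b)


def polynomial_kernel(a: Vector, b: Vector, gamma: float = 1.0,
                      r: float = 1.0, degree: int = 3) -> float:
    """K(a, b) = (gamma * a^T b + r) ** degree."""
    return (gamma * dot(a, b) + r) ** degree


def sigmoid_kernel(a: Vector, b: Vector, gamma: float = 1.0, r: float = 0.0) -> float:
    """K(a, b) = tanh(gamma * a^T b + r); not Mercer-admissible for every (gamma, r)."""
    return math.tanh(gamma * dot(a, b) + r)


def gaussian_kernel(a: Vector, b: Vector, sigma: float = 1.0) -> float:
    """K(a, b) = exp(-||a - b||^2 / (2 * sigma^2)), with sigma the kernel width."""
    sq_dist = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))
```

Each function evaluates one kernel element; a kernel matrix is obtained by evaluating it over all pairs of training samples.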

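The optimization problem can then be expressed as follows:

$$\max_{\alpha,\,\alpha^{*}} \ -\frac{1}{2}\sum_{i=1}^{l}\sum_{j=1}^{l}(\alpha_i-\alpha_i^{*})(\alpha_j-\alpha_j^{*})\,K(\mathbf{x}_i,\mathbf{x}_j) - \varepsilon\sum_{i=1}^{l}(\alpha_i+\alpha_i^{*}) + \sum_{i=1}^{l}y_i(\alpha_i-\alpha_i^{*}) \tag{5}$$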

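subject to:

$$\sum_{i=1}^{l}(\alpha_i-\alpha_i^{*}) = 0, \qquad 0 \le \alpha_i,\ \alpha_i^{*} \le C, \quad i = 1,\dots,l \tag{6}$$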

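The optimal solution can be obtained as follows:

$$\bar{\boldsymbol{\alpha}} = \left(\bar{\alpha}_1, \bar{\alpha}_1^{*}, \dots, \bar{\alpha}_l, \bar{\alpha}_l^{*}\right)^{T}, \qquad \bar{b} = y_j - \sum_{i=1}^{l}(\bar{\alpha}_i-\bar{\alpha}_i^{*})K(\mathbf{x}_i,\mathbf{x}_j) - \varepsilon \quad \text{for any } j \text{ with } 0 < \bar{\alpha}_j < C \tag{7}$$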

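The model function in Equation (1) can then be written as:

$$f(\mathbf{x}) = \sum_{i=1}^{l}(\bar{\alpha}_i-\bar{\alpha}_i^{*})\,K(\mathbf{x}_i,\mathbf{x}) + \bar{b} \tag{8}$$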
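Once the multipliers and the bias are known, prediction only requires kernel evaluations against the training samples, as in the expanded model function f(x) = Σᵢ (αᵢ − αᵢ*) K(xᵢ, x) + b. The sketch below assumes the multipliers and bias have already been produced by a QP solver such as LIBSVM; the function name `svr_predict` and its arguments are hypothetical and not part of any library.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def gaussian_kernel(a: Vector, b: Vector, sigma: float = 1.0) -> float:
    """Gaussian kernel with width sigma (assumed parametrization)."""
    sq_dist = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def svr_predict(x: Vector,
                train_x: Sequence[Vector],
                alpha: Sequence[float],
                alpha_star: Sequence[float],
                b: float,
                kernel: Kernel = gaussian_kernel) -> float:
    """Evaluate f(x) = sum_i (alpha_i - alpha_i^*) K(x_i, x) + b.

    Only samples with alpha_i != alpha_i^* (the support vectors) contribute.
    """
    return sum((a - a_s) * kernel(xi, x)
               for xi, a, a_s in zip(train_x, alpha, alpha_star)
               if a != a_s) + b
```

With a linear kernel, this reduces to wᵀx + b with w = Σᵢ (αᵢ − αᵢ*) xᵢ, which is a convenient sanity check.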

3. Feature-Weighted Support Vector Regression

3.1. The Necessity of Feature Weighting

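The similarity between sample points $\mathbf{x}_i$ and $\mathbf{x}_j$ in the kernel space can be measured by the distance between $\varphi(\mathbf{x}_i)$ and $\varphi(\mathbf{x}_j)$:

$$d(\mathbf{x}_i,\mathbf{x}_j) = \lVert\varphi(\mathbf{x}_i)-\varphi(\mathbf{x}_j)\rVert = \sqrt{K(\mathbf{x}_i,\mathbf{x}_i) - 2K(\mathbf{x}_i,\mathbf{x}_j) + K(\mathbf{x}_j,\mathbf{x}_j)} \tag{9}$$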

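When the Gaussian kernel is adopted, $d(\mathbf{x}_i,\mathbf{x}_j)$ can be expressed as:

$$d(\mathbf{x}_i,\mathbf{x}_j) = \sqrt{2 - 2\exp\left(-\frac{\lVert\mathbf{x}_i-\mathbf{x}_j\rVert^{2}}{2\sigma^{2}}\right)} \tag{10}$$

where σ is the kernel width.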

From Equation (10), the more similar two sample points are, the smaller the distance between their images in the kernel space. When $\mathbf{x}_i = \mathbf{x}_j$, the similarity is maximal and the distance is zero.
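The kernel-space distance never requires φ explicitly: it follows from three kernel evaluations, ‖φ(a) − φ(b)‖² = K(a,a) − 2K(a,b) + K(b,b). A minimal sketch, assuming the Gaussian kernel with width `sigma` (an assumed parametrization), shows that the distance is zero for identical points, grows with input distance, and is bounded by √2 because K(a,a) = 1.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def gaussian_kernel(a: Vector, b: Vector, sigma: float = 1.0) -> float:
    """Gaussian kernel with width sigma."""
    sq_dist = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def kernel_distance(a: Vector, b: Vector,
                    kernel: Callable[[Vector, Vector], float] = gaussian_kernel) -> float:
    """||phi(a) - phi(b)|| computed only through kernel evaluations."""
    sq = kernel(a, a) - 2.0 * kernel(a, b) + kernel(b, b)
    return math.sqrt(max(sq, 0.0))  # clamp tiny negative values caused by round-off


d_near = kernel_distance([0.0], [0.1])
d_far = kernel_distance([0.0], [1.0])
print(d_near < d_far)
```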

A simple example is given to illustrate that the construction of the kernel space is not reasonable when the value of the feature is the only consideration and its contribution to the model output is neglected. There is a set of sample points . Let , , where , , , , ; the first item of the sample point is Feature 1, and the second is Feature 2. Regarding and , we can measure which one is more similar to by comparing the value of and .

The difference between the similarities of the two sample pairs is therefore determined by and , respectively. In an actual system, however, the two features may contribute very differently to the model output. Assume that Feature 1 contributes greatly to the output, so that a small change in it leads to a large change in the output, whereas Feature 2 contributes very little, so that even a large change in it leads to only a slight change in the output. When , it is obvious that the impact of the sample point on the output is more similar to that of . When , the influence of the sample point on the output may be more similar to that of . Yet if the contributions of the features to the output are not considered, the opposite similarity is deduced. Therefore, the similarity of sample points computed by the classical algorithm may be strongly influenced by unimportant features with a large value range, so that the similarities in the kernel space become inconsistent with those in the actual system.
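The argument above can be reproduced numerically. The sketch below uses hypothetical values (not those of the paper's example): a toy system in which Feature 1 matters a lot and Feature 2 barely matters, one neighbor that differs from the reference point only by a small change in Feature 1, and another that differs only by a large change in Feature 2. The Gaussian kernel, computed from feature values alone, ranks the similarities in the opposite order to the outputs.

```python
import math


def gaussian_kernel(a, b, sigma=1.0):
    """Unweighted Gaussian kernel: sees only feature values, not their importance."""
    return math.exp(-sum((p - q) ** 2 for p, q in zip(a, b)) / (2.0 * sigma ** 2))


def toy_output(x):
    # Hypothetical system: Feature 1 dominates the output, Feature 2 barely matters.
    return 10.0 * x[0] + 0.01 * x[1]


x1 = (0.0, 0.0)
x2 = (0.5, 0.0)  # small change in the important Feature 1
x3 = (0.0, 2.0)  # large change in the unimportant Feature 2

# The kernel rates x2 as more similar to x1 than x3 is ...
kernel_prefers_x2 = gaussian_kernel(x1, x2) > gaussian_kernel(x1, x3)
# ... but x3 produces an output far closer to that of x1 (change of 0.02 vs. 5.0).
output_prefers_x3 = abs(toy_output(x1) - toy_output(x3)) < abs(toy_output(x1) - toy_output(x2))
print(kernel_prefers_x2, output_prefers_x3)  # prints: True True
```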

From Formulas (4)–(5), the kernel element is used to simplify the computation of the inner product in the kernel space when solving the convex quadratic programming problem. If the similarity does not reflect the actual behavior of the dataset and is dominated by unimportant features, the solution obtained by applying the structural risk minimization principle is unreasonable.

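In this paper, feature weighting is used to match the effect of each feature on the kernel space feature with its contribution to the model output. If a weight $\omega_k$ is assigned to the k-th feature, so that each value $x_{ik}$ is replaced by $\omega_k x_{ik}$ during pre-processing, the Gaussian kernel element becomes:

$$K_{\omega}(\mathbf{x}_i,\mathbf{x}_j) = \exp\left(-\frac{\sum_{k=1}^{n}\omega_k^{2}\left(x_{ik}-x_{jk}\right)^{2}}{2\sigma^{2}}\right) \tag{12}$$

where σ is the kernel width.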

Likewise, the elements of the weighted linear kernel can be written as follows:

$$K_{\omega}(\mathbf{x}_i,\mathbf{x}_j) = \sum_{k=1}^{n}\omega_k^{2}\,x_{ik}x_{jk} \tag{13}$$

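The weighted sigmoid kernel can be expressed as follows:

$$K_{\omega}(\mathbf{x}_i,\mathbf{x}_j) = \tanh\left(\gamma\sum_{k=1}^{n}\omega_k^{2}\,x_{ik}x_{jk} + r\right) \tag{14}$$

where γ and r are the parameters of the sigmoid kernel.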

If the contribution of each feature to the output can be determined before model training and an appropriate weight is assigned, the role the feature plays in the kernel space matches its contribution. When all feature weights equal one, FW-SVR degenerates to classical SVR. A weight tending to zero indicates that the feature has little influence on the output, which amounts to dimension reduction. Moreover, the distances between sample points shrink, and the distribution of the samples becomes more compact.
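A minimal sketch of the weighted kernels, assuming (as the pre-processing view suggests) that weighting multiplies the k-th feature by ωₖ, so that ωₖ enters the kernel squared. Under that assumption, computing the weighted kernel is the same as scaling the data first and then applying the ordinary kernel; all weights equal to one recover classical SVR, and a zero weight removes a feature. The helper names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def apply_weights(x: Vector, weights: Vector) -> List[float]:
    """Assumed pre-processing step: scale the k-th feature by weights[k]."""
    return [w * v for w, v in zip(weights, x)]


def weighted_gaussian_kernel(a: Vector, b: Vector, weights: Vector, sigma: float = 1.0) -> float:
    """exp(-sum_k w_k^2 (a_k - b_k)^2 / (2 sigma^2))."""
    sq = sum((w * (p - q)) ** 2 for w, p, q in zip(weights, a, b))
    return math.exp(-sq / (2.0 * sigma ** 2))


def weighted_linear_kernel(a: Vector, b: Vector, weights: Vector) -> float:
    """sum_k w_k^2 a_k b_k."""
    return sum(w * w * p * q for w, p, q in zip(weights, a, b))


def weighted_sigmoid_kernel(a: Vector, b: Vector, weights: Vector,
                            gamma: float = 1.0, r: float = 0.0) -> float:
    """tanh(gamma * sum_k w_k^2 a_k b_k + r)."""
    return math.tanh(gamma * weighted_linear_kernel(a, b, weights) + r)
```

For example, with weights `[10.0, 0.01]` the kernel becomes almost insensitive to the second feature, which is exactly the effect sought when Feature 2 contributes little to the output.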

3.2. The Implementation of the FW-SVR

Obtaining the optimal combination of weights is the prerequisite for realizing FW-SVR. To verify the conclusion of Section 3.1, we use grid search to find the optimal weight combination. Grid search is an exhaustive method: each feature has a set of candidate weight values, all combinations are enumerated to form the “grid”, each combination is evaluated by training an SVR, and the best one is retained.
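The exhaustive search described above can be sketched with `itertools.product`. The scoring function is a caller-supplied stand-in (hypothetical); in practice it would be something like the cross-validated RMSE of an SVR trained on the weighted features. The candidate weight set in the usage example is illustrative and is not the set used in the paper.

```python
import itertools
from typing import Callable, Optional, Sequence, Tuple


def grid_search_weights(
    candidates_per_feature: Sequence[Sequence[float]],
    evaluate: Callable[[Tuple[float, ...]], float],
) -> Tuple[Optional[Tuple[float, ...]], float]:
    """Try every weight combination and return (best_weights, best_score).

    Lower scores are better (e.g. an RMSE). `evaluate` is supplied by the caller.
    """
    best_weights, best_score = None, float("inf")
    for weights in itertools.product(*candidates_per_feature):
        score = evaluate(weights)
        if score < best_score:
            best_weights, best_score = weights, score
    return best_weights, best_score


# Illustrative usage with a toy score whose minimum is at (10, 0.1).
candidates = [[0.01, 0.1, 1, 10, 100]] * 2
toy_score = lambda w: abs(w[0] - 10) + abs(w[1] - 0.1)
print(grid_search_weights(candidates, toy_score))
```

Because the grid has m^n points for m candidates and n features, this exhaustive approach is practical mainly for verification on low-dimensional problems, which matches its use here.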

The SVR training that introduces the weight value is shown as follows.

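According to Equation (12), the convex quadratic programming problem of Formulas (5)–(6) can be rewritten with the weighted kernel $K_{\omega}$ as follows:

$$\max_{\alpha,\,\alpha^{*}} \ -\frac{1}{2}\sum_{i=1}^{l}\sum_{j=1}^{l}(\alpha_i-\alpha_i^{*})(\alpha_j-\alpha_j^{*})\,K_{\omega}(\mathbf{x}_i,\mathbf{x}_j) - \varepsilon\sum_{i=1}^{l}(\alpha_i+\alpha_i^{*}) + \sum_{i=1}^{l}y_i(\alpha_i-\alpha_i^{*}) \tag{15}$$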

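subject to:

$$\sum_{i=1}^{l}(\alpha_i-\alpha_i^{*}) = 0, \qquad 0 \le \alpha_i,\ \alpha_i^{*} \le C, \quad i = 1,\dots,l \tag{16}$$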

The optimal multipliers $\bar{\alpha}_i$, $\bar{\alpha}_i^{*}$ (i = 1, …, l) and the bias $\bar{b}$ are then obtained.

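The model function of FW-SVR can be expressed as follows:

$$f(\mathbf{x}) = \sum_{i=1}^{l}(\bar{\alpha}_i-\bar{\alpha}_i^{*})\,K_{\omega}(\mathbf{x}_i,\mathbf{x}) + \bar{b} \tag{17}$$

where $K_{\omega}$ is the weighted kernel.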

4. Simulation Examples

In this section, four synthetic datasets and seven real datasets are employed to verify the feasibility of FW-SVR. All simulations are implemented in MATLAB R2013a on a Windows 10 PC with an Intel Core i5-3740 CPU (3.2 GHz) and 4.0 GB RAM. SVR training and testing are implemented with LIBSVM 3.22 [31]. The parameters of each approach on each dataset are optimized by grid search with five-fold cross-validation on a sample of the training set [32,33].

The Root Mean Square Error (RMSE) is employed to evaluate the FW-SVR method:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right)^{2}} \tag{18}$$

where N is the number of test samples, $y_i$ is the actual output, and $\hat{y}_i$ is the corresponding predicted value. The smaller the RMSE, the better the generalization ability.
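RMSE as defined above is straightforward to compute; the following minimal sketch assumes equal-length, non-empty lists of actual and predicted outputs.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error between actual and predicted outputs."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


print(rmse([0.0, 0.0], [3.0, 4.0]))  # sqrt((9 + 16) / 2)
```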

Synthetic Datasets

The definitions of these functions are listed in Table 2.

Table 2.

Functions used to generate synthetic datasets.

Here, the noise term is additive Gaussian noise with zero mean and a standard deviation of 0.01.

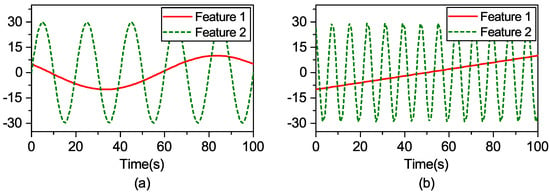

The synthetic dataset “F1” is chosen as an example. In the training data, Feature 1 and Feature 2 are taken from sinusoidal signals of 0.01 Hz and 0.05 Hz, respectively. In the test data, they are taken from a linear function and a 0.125 Hz sinusoidal signal, respectively, as shown in Figure 1.

Figure 1.

The input feature data of the training dataset and the test dataset: (a) training dataset; (b) test dataset.

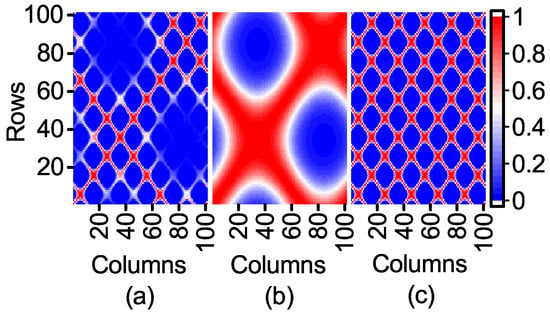

The expression of F1 shows that the range of the first term, which depends on Feature 1, is [−0.2172, 1], whereas the range of the second term, which depends on Feature 2, is restricted to [−0.03, 0.03]. Feature 1, which contributes greatly to the output, is therefore an important feature, whereas Feature 2 is not. However, the small contribution of Feature 2 to the model output is ignored when the kernel matrix is calculated. To observe the influence of Feature 1 and Feature 2 on the kernel matrix, a kernel width of 0.01 is used to compute three kernel matrices, which are compared as 2D heat-maps below.

According to Equation (12), the kernel matrix fully reflects the similarity between sample points in the kernel space. The similarity pattern of Figure 2c (Feature 2 only) is clearly visible in Figure 2a (both features), while the influence of Feature 1, which contributes most to the output, is obviously weakened.
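The comparison in Figure 2 (both features vs. each feature alone) can be sketched on hypothetical data. Below, Feature 1 spans a small range and Feature 2 a large one, so Feature 2 dominates the two-feature Gaussian kernel matrix; the mean absolute element-wise difference is an assumed, crude way to quantify which single-feature matrix the full matrix resembles. These are not the F1 data.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def gram_matrix(samples: Sequence[Sequence[float]], feature_idx: Sequence[int],
                sigma: float = 1.0) -> Matrix:
    """Gaussian kernel matrix computed from the selected feature columns only."""
    def k(a, b):
        sq = sum((a[f] - b[f]) ** 2 for f in feature_idx)
        return math.exp(-sq / (2.0 * sigma ** 2))
    return [[k(a, b) for b in samples] for a in samples]


def mean_abs_diff(m1: Matrix, m2: Matrix) -> float:
    """Average absolute element-wise difference between two square matrices."""
    n = len(m1)
    return sum(abs(m1[i][j] - m2[i][j]) for i in range(n) for j in range(n)) / (n * n)


# Hypothetical samples: Feature 1 in [0, 0.05], Feature 2 in [0, 4].
samples = [(0.01 * i, float((i * 7) % 5)) for i in range(6)]
K_both = gram_matrix(samples, [0, 1])
K_f1 = gram_matrix(samples, [0])
K_f2 = gram_matrix(samples, [1])
print(mean_abs_diff(K_both, K_f2) < mean_abs_diff(K_both, K_f1))  # Feature 2 dominates
```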

Figure 2.

Three 2D heat-maps of kernel matrices: (a) kernel matrix generated by two features; (b) kernel matrix generated by Feature 1; (c) kernel matrix generated by Feature 2.

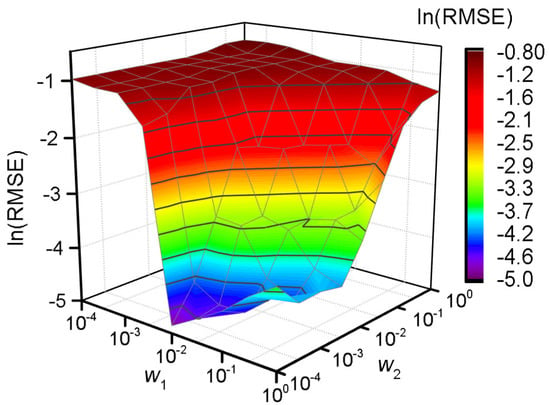

To verify the necessity of feature weighting, grid search is applied to obtain the RMSE for every possible combination of the weights $\omega_1$ and $\omega_2$ [34]. The values of $\omega_1$ and $\omega_2$ are searched from the set . The resulting model performance is shown in Figure 3.

Figure 3.

Model generalization ability for different weight combinations.

According to Figure 3, a better generalization ability occurs when the value of is around 100, and the best is acquired when and . As a whole, a better generalization ability can be obtained when vs. when . When $\omega_1 = \omega_2 = 1$, FW-SVR degenerates to classical SVR, and the generalization ability of the model is poor.

We compare feature weighting with feature selection and normalization. In feature weighting, the optimal weights $\omega_1$ and $\omega_2$ obtained above are used. In feature selection, Feature 2 is deleted. For normalization, both min-max normalization and Z-normalization are employed.

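Min-max normalization linearly maps raw data to [0, 1]. The k-th feature of the i-th sample, $x_{ik}$, is normalized to $x'_{ik}$:

$$x'_{ik} = \frac{x_{ik}-x_k^{\min}}{x_k^{\max}-x_k^{\min}} \tag{19}$$

where $x_k^{\max}$ and $x_k^{\min}$ are the maximum and minimum values of the k-th feature, respectively.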

Z-normalization transforms the raw data into a dataset with zero mean and unit variance:

$$x'_{ik} = \frac{x_{ik}-\mu_k}{s_k} \tag{20}$$

where $\mu_k$ and $s_k$ are the mean and standard deviation of the k-th feature, respectively.
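Both normalizations operate on one feature column at a time. The minimal sketch below assumes the population standard deviation for Z-normalization (the text does not say which estimator is used) and maps constant columns to zeros, which is also an assumed convention. The usage lines illustrate the point made in the discussion of Figure 4: after min-max normalization, an unimportant feature with a huge raw range and an important feature with a tiny raw range end up on exactly the same [0, 1] scale, so normalization alone cannot express their different contributions.

```python
import statistics
from typing import List, Sequence


def min_max_normalize(column: Sequence[float]) -> List[float]:
    """Linearly map a feature column to [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant feature: assumed convention
    return [(v - lo) / (hi - lo) for v in column]


def z_normalize(column: Sequence[float]) -> List[float]:
    """Shift and scale a feature column to zero mean and unit (population) variance."""
    mu = statistics.mean(column)
    sd = statistics.pstdev(column)
    if sd == 0:
        return [0.0 for _ in column]  # constant feature: assumed convention
    return [(v - mu) / sd for v in column]


important = [0.00, 0.01, 0.02]      # small raw range, large contribution (hypothetical)
unimportant = [0.0, 500.0, 1000.0]  # large raw range, small contribution (hypothetical)
print(min_max_normalize(important) == min_max_normalize(unimportant))
```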

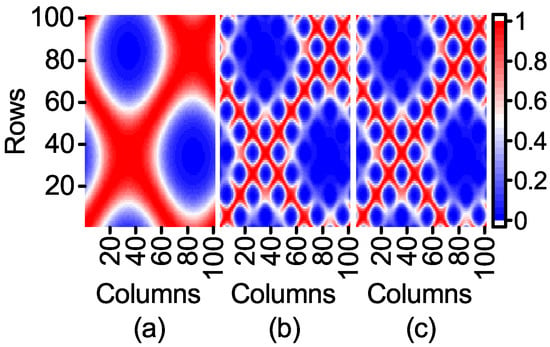

First, the kernel matrices generated by feature weighting and by the other methods are compared. Because weighting and normalization change the feature values, the kernel parameter is chosen, for a fair comparison, so that the kernel elements computed from Feature 1 are consistent across the methods. The resulting kernel matrices are shown in Figure 4.

Figure 4.

Three 2D heat-maps of kernel matrices: (a) feature weighting; (b) min-max normalization; (c) Z-normalization.

As can be seen from Figure 4, feature weighting reduces the influence of Feature 2 on the kernel matrix, and Figure 4a is similar to Figure 2b. Unlike feature selection, however, feature weighting preserves the information contained in Feature 2. Figure 4b,c show that normalization also weakens the influence of Feature 2, but that influence remains large: because normalization gives Feature 1 and Feature 2 essentially the same range, their influences on the kernel matrix are roughly equal. When the range of an unimportant feature is much wider than that of the important features, normalization can greatly reduce its influence; in the opposite case, normalization increases it.

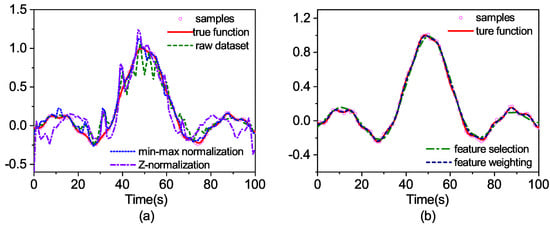

Then, feature weighting is compared with the raw data, feature selection, and normalization in terms of generalization ability. The search range of parameters C and is and , respectively, and is set to 0.0064. The optimal hyper-parameters are obtained by five-fold cross-validation. The prediction outputs for the test set are shown in Figure 5.

Figure 5.

The prediction outputs for the test set: (a) raw data and normalized data; (b) feature-weighted and feature-selected datasets.

As can be seen from Figure 5, the prediction curve obtained with the raw data deviates considerably from the real output, as do the two curves obtained with normalized data. Feature selection achieves better results, but under-fitting occurs because Feature 2 has been deleted. The best prediction is obtained with the feature weighting method.

We model the synthetic datasets in Table 2 to compare the above methods. For feature selection, all possible feature combinations are tested, and the best one is compared with feature weighting. Each experiment is repeated 10 times. The optimal weight combinations are shown in Table 3, and the results are shown in Table 4, where bold values indicate the best-performing method.

Table 3.

The optimal combination of weights for the synthetic datasets.

Table 4.

Performance comparison of SVR modeling for the synthetic datasets.

Finally, we randomly chose seven UCI benchmark datasets [35]. Grid search is used to select the optimal combination of weights from the set . The optimal weight combinations are shown in Table 5, and the results are compared in Table 6, where bold values indicate the best-performing method.

Table 5.

The optimal combination of weights for the UCI datasets.

Table 6.

Performance comparison of SVR modeling for the UCI datasets.

To substantiate the results in Table 4 and Table 6, Wilcoxon signed-rank tests [36] at the 0.05 significance level are performed to test the differences between feature weighting and the other data pre-processing techniques. The test results are presented in Table 7. The prediction results on F1 and F2 with the linear and sigmoid kernels were unacceptable and are excluded from this test.
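The signed-rank statistic itself is simple to compute. In this setting, x and y would presumably be the per-dataset RMSEs of FW-SVR and of one competing method (an assumption about how Table 7 was produced). The minimal sketch below drops zero differences and gives tied absolute differences their average rank; turning W⁺ and W⁻ into a p-value needs exact tables or a normal approximation, which is omitted here. In practice, SciPy's `scipy.stats.wilcoxon` performs the complete test.

```python
from typing import Sequence, Tuple


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (W_plus, W_minus) for paired samples x and y.

    Zero differences are discarded; tied |differences| receive their average rank.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Extend j over the run of equal absolute differences starting at i.
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


print(wilcoxon_signed_rank([1.0, -1.0, 2.0], [0.0, 0.0, 0.0]))  # (4.5, 1.5)
```

A useful check is that W⁺ + W⁻ always equals m(m + 1)/2, where m is the number of non-zero differences.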

Table 7.

Wilcoxon signed rank test for the prediction results.

According to Table 4 and Table 6, FW-SVR achieves competitive generalization performance on both the synthetic and the real datasets. FW-SVR with the Gaussian kernel performs reasonably well on all 11 datasets. Note that the results on F1 and F2 with the linear and sigmoid kernels are unacceptable because of under-fitting, so these two datasets are excluded from the following comparison. With the linear kernel, FW-SVR obtains three optimal and three suboptimal results, and three further results are close to the optimal ones. With the sigmoid kernel, FW-SVR achieves competitive generalization performance on the synthetic datasets; for example, its mean RMSE of 0.0024 on F4 is better than the value of 0.0120 obtained with the Gaussian kernel. However, with the sigmoid kernel, FW-SVR is not the optimal choice for the UCI datasets, as shown in Table 6. Overall, the Wilcoxon test results in Table 7 show that, when the most suitable kernel type is selected, FW-SVR achieves the best generalization performance compared with the other data pre-processing methods. Comparing the five methods, we attribute this to FW-SVR taking the contribution of each feature to the output into account, which reduces the influence of unimportant features on the kernel space feature.

5. Conclusions

In this paper, we propose FW-SVR, which matches the effect of each feature on the kernel space feature with its contribution to the model output. By analyzing the similarity of sample points in the kernel space, we conclude that FW-SVR makes the distribution of the sample points in the kernel space more reasonable, which is important for improving generalization ability. Numerical experiments show the effectiveness of the proposed algorithm. Our future work will focus on automatically identifying the contribution of each feature in order to assign an optimal weight combination.

Author Contributions

D.C. conceived the main idea of the proposed method. M.H. performed the experiments. M.H. and L.L. analyzed the data. M.H. wrote the paper.

Acknowledgments

This work was funded by the National Natural Science Foundation of China (41604022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

- Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

- Wang, D.; Lin, H. A new class of dual support vector machine NPID controller used for predictive control. IEEJ Trans. Electr. Electron. Eng. 2015, 10, 453–457.

- Paliwal, M.; Kumar, U.A. Neural networks and statistical techniques: A review of applications. Expert Syst. Appl. 2009, 36, 2–17.

- Sapankevych, N.I.; Sankar, R. Time series prediction using support vector machines: a survey. IEEE Comput. Intell. Mag. 2009, 4, 24–38.

- Tanveer, M.; Mangal, M.; Ahmad, I.; Shao, Y.H. One norm linear programming support vector regression. Neurocomputing 2016, 173, 1508–1518.

- Nekoei, M.; Mohammadhosseini, M.; Pourbasheer, E. QSAR study of VEGFR-2 inhibitors by using genetic algorithm-multiple linear regressions (GA-MLR) and genetic algorithm-support vector machine (GA-SVM): A comparative approach. Med. Chem. Res. 2015, 24, 3037–3046.

- Fujita, K.; Deng, M.; Wakimoto, S. A Miniature Pneumatic Bending Rubber Actuator Controlled by Using the PSO-SVR-Based Motion Estimation Method with the Generalized Gaussian Kernel. Actuators 2017, 6, 6.

- Xie, M.; Wang, D.; Xie, L. One SVR modeling method based on kernel space feature. IEEJ Trans. Electr. Electron. Eng. 2018, 13, 168–174.

- Zhang, X.; Qiu, D.; Chen, F. Support vector machine with parameter optimization by a novel hybrid method and its application to fault diagnosis. Neurocomputing 2015, 149, 641–651.

- Tian, M.; Wang, W. An efficient Gaussian kernel optimization based on centered kernel polarization criterion. Inf. Sci. 2015, 322, 133–149.

- Fu, Y.; Wang, S. A No Reference Image Quality Assessment Metric Based on Visual Perception. Algorithms 2016, 9, 87.

- Meighani, H.M.; Ghotbi, C.; Behbahani, T.J.; Sharifi, K. Evaluation of PC-SAFT model and Support Vector Regression (SVR) approach in prediction of asphaltene precipitation using the titration data. Fluid Phase Equilib. 2018, 456, 171–183.

- Dunn, J.C. A Fuzzy Relative of the ISODATA Process and Its Use in Detecting Compact Well-Separated Clusters. Cybern. Syst. 1973, 3, 32–57.

- Lu, C.J. Hybridizing nonlinear independent component analysis and support vector regression with particle swarm optimization for stock index forecasting. Neural Comput. Appl. 2013, 23, 2417–2427.

- Yalavarthi, R.; Shashi, M. Atmospheric Temperature Prediction using Support Vector Machines. Int. J. Comput. Theory Eng. 2009, 1, 55–58.

- Miao, F.; Fu, N.; Zhang, Y.T.; Ding, X.R.; Hong, X.; He, Q.; Li, Y. A Novel Continuous Blood Pressure Estimation Approach Based on Data Mining Techniques. IEEE J. Biomed. Health Inform. 2017, 21, 1730–1740.

- Papari, B.; Edrington, C.S.; Kavousi-Fard, F. An Effective Fuzzy Feature Selection and Prediction Method for Modeling Tidal Current: A Case of Persian Gulf. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4956–4961.

- Taghizadeh-Mehrjardi, R.; Neupane, R.; Sood, K.; Kumar, S. Artificial bee colony feature selection algorithm combined with machine learning algorithms to predict vertical and lateral distribution of soil organic matter in South Dakota, USA. Carbon Manag. 2017, 8, 277–291.

- Zhang, F.; Deb, C.; Lee, S.E.; Yang, J.; Shah, K.W. Time series forecasting for building energy consumption using weighted Support Vector Regression with differential evolution optimization technique. Energy Build. 2016, 126, 94–103.

- Liu, L. Short-term load forecasting based on correlation coefficient and weighted support vector regression machine. In Proceedings of the 2015 4th International Conference on Information Technology and Management Innovation (ICITMI 2015), Shenzhen, China, 12–13 September 2015.

- Han, X.; Clemmensen, L. On Weighted Support Vector Regression. Qual. Reliab. Eng. Int. 2014, 30, 891–903.

- Preetha, R.; Bhanumathi, R.; Suresh, G.R. Immune Feature Weighted Least-Squares Support Vector Machine for Brain Tumor Detection Using MR Images. IETE J. Res. 2016, 62, 873–884.

- Qi, B.; Zhao, C.; Yin, G. Feature weighting algorithms for classification of hyperspectral images using a support vector machine. Appl. Opt. 2014, 53, 2839–2846.

- Shi, J.; Zhang, S.; Qiu, L. Credit scoring by feature-weighted support vector machines. J. Zhejiang Univ. Sci. C Comput. Electron. 2013, 14, 197–204.

- Deng, W.; Zhou, J. Approach for feature weighted support vector machine and its application in flood disaster evaluation. Disaster Adv. 2013, 6, 51–58.

- Guo, L.; Zhao, L.; Wu, Y.; Li, Y.; Xu, G.; Yan, Q. Tumor Detection in MR Images Using One-Class Immune Feature Weighted SVMs. IEEE Trans. Magn. 2011, 47, 3849–3852.

- Babaud, J.; Witkin, A.P.; Baudin, M.; Duda, R.O. Uniqueness of the Gaussian Kernel for Scale-Space Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 26–33.

- Keerthi, S.S.; Lin, C.J. Asymptotic Behaviors of Support Vector Machines with Gaussian Kernel. Neural Comput. 2003, 15, 1667–1689.

- Howley, T.; Madden, M.G. The Genetic Kernel Support Vector Machine: Description and Evaluation. Artif. Intell. Rev. 2005, 24, 379–395.

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 1–27.

- Zhang, P. Model Selection Via Multifold Cross Validation. Ann. Stat. 1993, 21, 299–313.

- Refaeilzadeh, P.; Tang, L.; Liu, H. Cross-Validation. In Encyclopedia of Database Systems; Liu, L., Özsu, M.T., Eds.; Springer: Boston, MA, USA, 2009; pp. 532–538.

- Ataei, M.; Osanloo, M. Using a Combination of Genetic Algorithm and the Grid Search Method to Determine Optimum Cutoff Grades of Multiple Metal Deposits. Int. J. Surf. Min. Reclam. Environ. 2004, 18, 60–78.

- Bache, K.; Lichman, M. UCI Machine Learning Repository; School of Information and Computer Science, University of California: Irvine, CA, USA, 2013.

- Wilcoxon, F. Individual comparisons by ranking methods. Biom. Bull. 1945, 1, 80–83.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).