Abstract

It is well known that dynamic thermal line rating has the potential to use power transmission infrastructure more effectively by allowing higher currents when lines are cooler; however, it is not commonly implemented. Some of the barriers to implementation can be mitigated using modern battery energy storage systems. This paper proposes a combination of dynamic thermal line rating and battery use through the application of deep reinforcement learning. In particular, several algorithms based on deep deterministic policy gradient and soft actor critic are examined, in both single- and multi-agent settings. The selected algorithms are used to control battery energy storage systems in a 6-bus test grid. The effects of load and transmissible power forecasting on the convergence of those algorithms are also examined. The soft actor critic algorithm performs best, followed by deep deterministic policy gradient, and their multi-agent versions in the same order. One-step forecasting of the load and ampacity does not provide any significant benefit for predicting battery action.

1. Introduction

Incorporating a battery energy storage system (BESS) into a utility-scale transmission system can increase its reliability, but controlling the BESS can be challenging due to the uncertainty in forecasting operational parameters [1]. Deep reinforcement learning (DRL) is a framework that combines reinforcement learning with neural networks, used as function approximators, to find behavioral policies that select actions in complex environments [2]. DRL methods have been shown to be effective at solving problems in demand response [3], frequency control [4], and transactive energy markets [5], and are being incorporated into smart grids at an accelerating pace.

Deep deterministic policy gradient (DDPG) [6], multi-agent DDPG (MADDPG) [7], soft actor critic (SAC) [8], and multi-agent SAC (MASAC) [9] have been applied successfully to BESS demand response [9] and voltage control [10]. These algorithms are designed for continuous state and action spaces, which makes them good candidates for nonlinear BESS control problems with continuous states and actions.

Transmission lines are designed and rated for maximum conductor temperatures under worst-case conditions, and their ratings are assumed to be static or to vary only seasonally. In reality, line temperatures vary with ambient temperature, solar irradiance, precipitation, and, notably, wind speed and direction [11]. In contrast to static line rating (SLR), dynamic thermal line rating (DTLR) takes the actual weather conditions into account to estimate the ampacity, i.e., the highest current the line can carry under the actual operating conditions. The DTLR can be several times higher than the SLR [12]. Using DTLR instead of SLR in the planning and operation of transmission lines could relieve network congestion and reduce or delay the need for new construction. Recent advances in deep learning enable more accurate DTLR and load forecasts that can be combined with outage schedules to better allocate resources and plan for the future [13].
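The ampacity behind DTLR comes from a conductor heat balance. As a rough illustration only, the steady-state form of that balance in IEEE 738 can be sketched in Python as below: the current at which resistive heating plus solar gain equals convective plus radiative cooling at the maximum conductor temperature. This is a simplified sketch, not the calculation used in this study. The convective cooling q_c is passed in directly instead of being computed from the standard's wind-speed and wind-direction correlations, and the function names and the Drake-like conductor values (diameter, resistance at 75 °C, emissivity, absorptivity) are assumptions chosen for illustration.

```python
import math


def radiated_heat_loss(d0_m, emissivity, t_cond_c, t_amb_c):
    """Radiated heat loss q_r in W/m (IEEE 738 SI form)."""
    return 17.8 * d0_m * emissivity * (
        ((t_cond_c + 273.0) / 100.0) ** 4 - ((t_amb_c + 273.0) / 100.0) ** 4
    )


def solar_heat_gain(absorptivity, q_se_w_m2, theta_rad, d0_m):
    """Solar heat gain q_s in W/m; projected area per metre of conductor is d0."""
    return absorptivity * q_se_w_m2 * math.sin(theta_rad) * d0_m


def steady_state_ampacity(q_c, q_r, q_s, r_ohm_per_m):
    """Current (A) for which I^2 R + q_s = q_c + q_r at the maximum conductor temperature."""
    net_cooling = q_c + q_r - q_s
    if net_cooling <= 0.0:
        return 0.0  # no thermal headroom under these conditions
    return math.sqrt(net_cooling / r_ohm_per_m)


# Illustrative, approximate values for a Drake-like conductor (assumed).
D0 = 0.0281      # outer diameter, m
R75 = 8.688e-5   # AC resistance at a 75 degC limit, ohm/m
q_r = radiated_heat_loss(D0, emissivity=0.8, t_cond_c=75.0, t_amb_c=30.0)
q_s = solar_heat_gain(0.8, q_se_w_m2=1000.0, theta_rad=math.radians(80.0), d0_m=D0)

# q_c is an assumed input here; stronger wind means more convective cooling.
for q_c in (40.0, 80.0):
    amps = steady_state_ampacity(q_c, q_r, q_s, R75)
    print(f"q_c = {q_c:.0f} W/m -> ampacity ~ {amps:.0f} A")
```

Doubling the assumed convective cooling, as a stronger crosswind would, raises the computed ampacity; this weather dependence is what DTLR exploits.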

System demand does not necessarily coincide with the most favorable environmental conditions, which limits the practical effectiveness of DTLR on its own. A BESS can shift loads to periods when the higher DTLR can be exploited, provided it can be adequately controlled.

Mixed-integer linear programming (MILP) is often used to size BESS capacity in a multi-bus utility network given the grid topology and generator capacities. Once the BESS is sized from cost and load data, the charging and discharging schedule, generator output, and line power flows can be calculated using a combination of forecasting and MILP methods [14].

This paper considers how a BESS can be integrated to make better use of DTLR, increasing power capacity and improving system reliability. However, the BESS also introduces new operational challenges that must be addressed to realize the benefits offered by DTLR. This study develops and evaluates a DRL-based controller for a practical case that considers transmission line outages and storage system degradation, using a modified IEEE 6-bus reliability test system (RTS) [15]. The controller is designed using several common DRL methods and then augmented with a one-step load and transmissible power forecaster. Hourly data from the British Atmospheric Data Centre (BADC) Merseyside weather dataset [16] are used. Before the DRL algorithms are applied, nonlinear programming (NLP) is used to size the BESS.

Section 2 covers the background, including BESS sizing (Section 2.1), deep reinforcement learning algorithms (Section 2.2), load and transmissible power forecasting (Section 2.3), and other recent applications of reinforcement learning in power systems (Section 2.4). The optimization problem is formulated in Section 3, and the experimental setup is described in Section 4. The results are presented and discussed in Section 5. Finally, Section 6 provides the main conclusions and possible directions for future work.

2. Background and Related Work

2.1. BESS Capacity Sizing

BESS sizing is formulated as a direct current optimal power flow (DC-OPF) problem. The power of the transmission line, P, is calculated as , where V is the voltage, A is the ampacity, and is the power factor. The power flow of transmission line l, at time step t, , is limited by the transmissible power of the line, , as in for line l in a set of transmission lines T, and time step t in a set of time steps H.

The balance of power at each bus b must be satisfied as in:

where is the load, is the generation, is the charging power, is the discharging power, is the BESS efficiency, for bus b, and lines l in a set of lines feeding into bus b. The stored battery energy at bus b and time step t, , evolves according to [17]:

where is the self-discharge constant, d is the number of time steps in a day, and is the time interval. The state of health, , in the time step t and the bus b is the fraction of storage capacity remaining in the time step t. The state of health depends on the superposition of cyclic aging (aging due to cycles of BESS charging and discharging), and calendric aging (BESS aging due to the increased speed of chemical reactions), which can be expressed as [17]:

Calendric aging depends on the progression of time as in:

where is the calendric aging constant [17]. Cyclic aging has the following recursive relationship:

where is the cyclic aging constant [17]. The objective of the optimization is to find the minimum BESS capacity, , and power rating, . The maximum amount of energy that the BESS can store and supply is bounded by , and , respectively. The charging power of BESS is bounded by the power rating and the maximum level to which the BESS can charge is expressed as [17]:

The discharging power of the BESS is bounded by the power rating, and the minimum power the BESS can discharge:

The BESS energy is bounded by the minimum energy defined by , and the BESS maximum capacity, controlled by , which is decreased by , as in:

The objective of the optimization is to minimize the BESS capacity, expressed as:
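Setting the sizing objective aside, the per-time-step constraints of this section can be sketched in Python as follows. The sketch is a hypothetical reading of the text, not a transcription of the paper's equations: it assumes that self-discharge is a daily fraction spread evenly over the d steps of a day, that the efficiency applies once on charging and once on discharging, that the upper energy bound is the maximum state of charge scaled by the state of health, and that line flows count as positive when flowing into the bus. All names and default values are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Bess:
    e_max: float            # rated energy capacity, MWh
    p_rated: float          # power rating, MW
    eta: float = 0.95       # one-way efficiency (assumed)
    sigma: float = 0.001    # self-discharge fraction per day (assumed)
    soc_min: float = 0.2
    soc_max: float = 0.8


def next_energy(b: Bess, e: float, p_ch: float, p_dis: float, d: int = 24, dt: float = 1.0) -> float:
    """Energy after one step: self-discharge spread over d steps plus lossy charging/discharging."""
    return e * (1.0 - b.sigma / d) + (b.eta * p_ch - p_dis / b.eta) * dt


def charge_limit(b: Bess, e: float, soh: float, dt: float = 1.0) -> float:
    """Largest charging power allowed by the rating and the aged upper energy bound."""
    headroom = b.soc_max * soh * b.e_max - e
    return max(0.0, min(b.p_rated, headroom / (b.eta * dt)))


def discharge_limit(b: Bess, e: float, dt: float = 1.0) -> float:
    """Largest discharging power allowed by the rating and the minimum energy (self-discharge ignored)."""
    available = e - b.soc_min * b.e_max
    return max(0.0, min(b.p_rated, available * b.eta / dt))


def bus_balance(gen, flows_in, flows_out, p_dis, p_ch, load):
    """Power balance at one bus in MW; zero means supply exactly meets demand."""
    return gen + sum(flows_in) - sum(flows_out) + p_dis - p_ch - load
```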

2.2. Deep Reinforcement Learning

2.2.1. DDPG and MADDPG

DDPG is a model-free, off-policy actor–critic method that uses target actor and target critic networks to compute the critic loss. This algorithm is well suited for continuous state and action spaces [6]. DDPG stores past transitions in a replay buffer and uses them to train the actor and critic networks. Given that s is the state, a is the action, and r is the reward, the tuple is then the batch from the replay buffer W. The target network weights are updated via a soft update [6]:

where and are the critic and target critic networks, respectively, and is the polyak constant. is the actor network and is the target actor. The critic loss is computed as [6]:

where , and is the batch size. The actor loss is computed as:

Exploration in DDPG is ensured by adding Gaussian noise to the actions [6]:

where is the exploration policy, and . Once the noise is added, the actions are clipped to the required ranges. When DDPG is used in an inference task, the noise is not added to the actions.
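The DDPG quantities described above can be sketched in NumPy, with arrays standing in for network outputs. This is a minimal sketch assuming the standard DDPG formulation of [6] with the Gaussian exploration noise used here; the function names are hypothetical, the networks themselves are omitted, and the convention that τ weights the online parameters in the soft update is an assumption (some texts define the polyak constant the other way round).

```python
import numpy as np


def soft_update(target_weights, online_weights, tau):
    """Polyak averaging: theta_target <- tau * theta + (1 - tau) * theta_target."""
    return [tau * w + (1.0 - tau) * wt for w, wt in zip(online_weights, target_weights)]


def critic_targets(rewards, next_q_target, gamma, dones):
    """y = r + gamma * Q'(s', mu'(s')); next_q_target holds the target critic's output."""
    return rewards + gamma * (1.0 - dones) * next_q_target


def critic_loss(q_pred, y):
    """Mean squared error between the critic's estimate Q(s, a) and the target y."""
    return float(np.mean((y - q_pred) ** 2))


def actor_loss(q_of_policy_actions):
    """Negative mean of Q(s, mu(s)): minimizing it maximizes the critic's value."""
    return -float(np.mean(q_of_policy_actions))


def explore(action, sigma, low, high, rng):
    """Add Gaussian noise during training, then clip to the allowed range."""
    noisy = action + rng.normal(0.0, sigma, size=np.shape(action))
    return np.clip(noisy, low, high)
```

At inference time, `explore` is skipped and the actor output is used directly, as described above.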

MADDPG extends DDPG to the multi-agent domain via centralized training and decentralized execution: each agent outputs actions from its own actor network, but the actions and observations of all agents are concatenated and passed as inputs to each agent’s critic network [7]. As in DDPG, exploration is ensured by adding noise to the actions, and the target networks are updated with the soft update. The actor loss is then computed as [7]:

for each agent j, where is the global state, , is agent j’s observation, and is the number of agents. Each agent receives its own reward .
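The difference between centralized training and decentralized execution can be sketched as follows, assuming flat NumPy vectors for states and actions; the helper names are hypothetical and the actor networks are represented by plain callables.

```python
import numpy as np


def centralized_critic_input(global_state, all_actions):
    """Training: each critic sees the global state and every agent's action."""
    return np.concatenate([np.ravel(global_state)] + [np.ravel(a) for a in all_actions])


def decentralized_actions(actors, observations):
    """Execution: each agent's actor acts on its own observation only."""
    return [actor(obs) for actor, obs in zip(actors, observations)]
```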

2.2.2. SAC and MASAC

SAC is an off-policy actor–critic algorithm that uses an entropy regularization coefficient to encourage exploration. Similarly to twin delayed DDPG (TD3) [18], SAC trains two critic networks and uses the minimum of their estimates [8]. With entropy regularization, SAC learns a stochastic policy, and exploration comes from sampling that policy rather than from externally added noise. The policy network is updated via [8]:

where . Soft updates are used to update the weights of the target networks, as in DDPG. SAC is generalized to MASAC with centralized training and decentralized execution, as in MADDPG.
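The two SAC ingredients mentioned above, a tanh-squashed stochastic policy and a clipped double-Q target with an entropy term, can be sketched in NumPy as follows. This follows the standard formulation in [8] rather than any code from this study; the names are hypothetical and the network outputs are passed in as arrays. The small constant inside the logarithm is a common numerical safeguard, not part of the derivation.

```python
import numpy as np

LOG_2PI = np.log(2.0 * np.pi)


def sample_squashed_gaussian(mean, log_std, rng):
    """Reparameterized Gaussian sample squashed by tanh, with its log-probability."""
    std = np.exp(log_std)
    u = mean + std * rng.standard_normal(np.shape(mean))
    a = np.tanh(u)  # squash into (-1, 1)
    gauss_logp = -0.5 * (((u - mean) / std) ** 2 + 2.0 * log_std + LOG_2PI)
    # Change-of-variables correction for the tanh squashing.
    logp = np.sum(gauss_logp - np.log(1.0 - a ** 2 + 1e-6), axis=-1)
    return a, logp


def soft_q_target(r, q1_next, q2_next, logp_next, alpha, gamma, done):
    """Clipped double-Q target: minimum of the two target critics minus the entropy term."""
    return r + gamma * (1.0 - done) * (np.minimum(q1_next, q2_next) - alpha * logp_next)


def policy_loss(q1, q2, logp, alpha):
    """Policy objective: alpha * log pi(a|s) - min(Q1, Q2), averaged over the batch."""
    return float(np.mean(alpha * logp - np.minimum(q1, q2)))
```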

2.2.3. PINNs

Physics-informed neural networks (PINNs) [19] are neural networks that incorporate the physical laws governing the problem into the loss function or the network architecture. In some cases, PINNs require less data and simpler network architectures. From Equation (1), the actions from the policy network representing generator power, BESS charging or discharging power, and line flows must add up to 0 at every bus, represented as , where j refers to the individual generator, BESS, and line flow actions. Using SAC, this physical knowledge can be incorporated into the policy loss as:

where is a small regularization constant. Backpropagation then also minimizes the introduced term, which may improve the learning process and the convergence of the algorithm.
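A minimal sketch of such a physics-informed term, assuming it is a squared penalty on the signed sum of the actions attached to each bus, weighted by the small constant and added to the SAC policy loss. The squared form, the explicit per-action signs, and the helper names are assumptions for illustration.

```python
import numpy as np


def power_balance_penalty(actions, bus_groups, signs):
    """Sum over buses of the squared signed sum of the actions attached to each bus."""
    total = 0.0
    for idx, sgn in zip(bus_groups, signs):
        residual = float(np.dot(actions[idx], sgn))  # should be 0 if the bus balances
        total += residual ** 2
    return total


def pinn_policy_loss(base_loss, actions, bus_groups, signs, lam=1e-3):
    """SAC policy loss plus the weighted physics penalty (assumed form)."""
    return base_loss + lam * power_balance_penalty(actions, bus_groups, signs)
```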

2.3. Load and Ampacity Forecasting

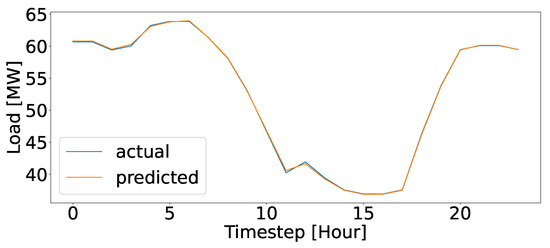

Combining convolutional neural networks with bidirectional long short-term memory networks (CNN-BiLSTM) has been shown to be effective at forecasting load [20]. A CNN-BiLSTM model with a non-trainable attention layer is used to forecast the load and transmissible power one step ahead. Two one-dimensional convolutional layers, each with 64 kernels of size 3 and ReLU activation, are used in sequence, followed by a one-dimensional max-pooling layer with a pooling size of 2. The output is then flattened, repeated once, and passed through a BiLSTM layer. Two separate LSTM layers, with 200 units and ReLU activation, act on this output and are passed through a non-trainable attention layer. Its output goes through another attention layer together with the output of the second LSTM layer. The result is passed through two consecutive TimeDistributed wrappers, the first with 100 units and the second with a single unit. The forecasted and actual loads on bus 4 are shown in Figure 1.

Figure 1.

Predicted and actual load for bus 4.
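Before the CNN-BiLSTM can be trained, each series has to be cut into input windows with one-step-ahead targets; the experimental setup uses 12 time steps of input. A minimal NumPy sketch of that slicing, assuming a (time, features) layout and a hypothetical function name; the forecasting model itself is not reproduced.

```python
import numpy as np


def make_windows(series, n_in=12, horizon=1):
    """Slice a (time, features) array into n_in-step inputs and targets horizon steps ahead."""
    series = np.asarray(series, dtype=float)
    if series.ndim == 1:
        series = series[:, None]  # treat a 1-D series as a single feature
    xs, ys = [], []
    for start in range(len(series) - n_in - horizon + 1):
        xs.append(series[start:start + n_in])
        ys.append(series[start + n_in + horizon - 1])
    return np.stack(xs), np.stack(ys)
```

For 20 hourly samples and 12 input steps, this yields 8 windows of shape (12, 1), each paired with the value that immediately follows it.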

2.4. Related Work

Zhang et al. [3] applied SAC, DDPG, Double Deep Q-Network (DDQN), and Particle Swarm Optimization (PSO) algorithms to control a combined heat and power (CHP) plant, a wind turbine generator (WTG), BESS output, and the wind power conversion ratio in an integrated heating, natural gas, and power network. SAC outperformed the other algorithms. When training on Danish load data, 24-step episodes with 1 h steps were considered. To shape the reward function, constraint violations were penalized, and the SAC algorithm learned to virtually eliminate them. The problem was tested on three scenarios, and the reward was based on minimizing the system operating cost.

Khalid et al. [4] applied TD3, DDPG, Genetic Algorithm (GA), and PSO to frequency control in a two-area interconnected power system. The algorithms output the proportional, integral, and derivative gains of a PID controller. A linear combination of the absolute values of the frequency deviations and the tie-line power was used as the reward. The purpose of the simulation was to reduce the area control error between the two interconnected areas. TD3 outperformed the other algorithms and kept the system frequency within ±0.05 Hz over 60 s of operation. Yan et al. [21] employed DDPG to minimize the sum of generation, battery aging, and unscheduled interchange costs, taking BESS degradation into account. Over 200 s, DDPG kept the frequency deviation within ±0.05 Hz. The critic network was trained separately before applying DDPG, and the actor network was then trained using the pre-trained critic.

Zhang et al. [22] tested TD3, SAC, DDPG, DQN, Asynchronous Advantage Actor-Critic (A3C), Cycle Charging (CC), and Proximal Policy Optimization (PPO) on the task of managing a microgrid using Indonesian data. The reward function was a linear combination of the negative of the annual fuel consumption and the total number of blackouts. The microgrid was assumed to contain a BESS, photovoltaic (PV) panels, a wind turbine, a diesel generator, and customer loads. TD3 outperformed the other algorithms, with DQN a close second. Cao et al. [10] used MADDPG to control voltage in the IEEE 123-bus system. They defined the reward function as the sum of the absolute voltage deviations at each bus and demonstrated effective control.

Cao et al. [9] applied MASAC, multi-agent TD3 (MATD3), and decentralized and centralized training versions of SAC to voltage control on the IEEE 123-bus system. The control region was divided into four sub-regions, and multiple agents were assigned to control the static VAR compensator and PV output. The reward function was a linear combination of voltage deviation, penalty terms, and curtailment. Centrally trained SAC outperformed the other algorithms, with MATD3 performing similarly. Over 90 s, both algorithms regulated the voltage within ±0.05 p.u. Hussain et al. [1] trained SAC to control the BESS at a fast-charging EV station. SAC was compared with TD3 and DDPG and outperformed both. The reward function was composed of a load reward, a battery charging and discharging reward, and a peak load reward. A summary of recent studies using RL for BESS control is shown in Table 1.

Table 1.

Summary of recent studies on BESS control using RL.

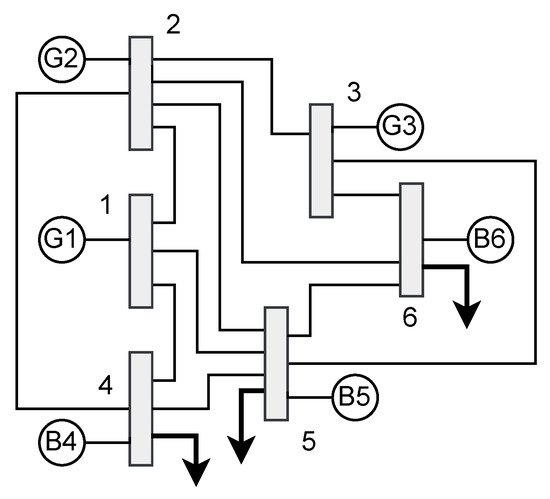

3. Problem Formulation

The experiments were conducted on the modified IEEE 6-bus test grid shown in Figure 2. This system was selected as the test bed to demonstrate the application of RL algorithms on a relatively simple setup that still features variable load, several buses, and multiple BESSs. As shown in the diagram, BESSs and loads are present at buses 4, 5, and 6, and there are 11 transmission lines; together with the three generators at buses 1, 2, and 3, this gives 17 actions (3 generator, 3 BESS, and 11 line flow actions). For systems with more buses, training and convergence are expected to require more episodes, but the behavior of the system is expected to be qualitatively similar.

Figure 2.

Modified IEEE 6-bus test grid.

3.1. Single-Agent Setting

With DDPG in the single-agent setting, a single actor network outputs all 17 actions. The generator action is taken from an actor output node with sigmoid activation and multiplied by the maximum output of the respective generator. This is expressed as:

where is the clipped generator action, is the output of the actor network for the generator action at bus b, is the maximum generation at bus b, and is the Gaussian noise. For the power flow across each transmission line, the value is taken from a node with tanh activation and multiplied by the maximum transmissible power over all time steps, so that power can also flow in the reverse direction, before being clipped between the negative and positive values of the transmissible power at time t. This can be written as:

where is the clipped line action, is the output of the actor network for the line action, and is the maximum absolute line rating for line l over all time steps t in set H. For each BESS, the value is taken from a node with tanh activation and multiplied by the power rating of the BESS, before being clipped between the negative of the amount the BESS can discharge at time step t and the amount it can charge at time step t. The clipping values for the BESS action are calculated using Equations (6) and (7). This can be expressed as:

where is the clipped BESS action. Alternatively, the term could have been clipped between and , with a penalty introduced in the reward function whenever is outside its allowable range; however, constraining the BESS action directly was expected to make learning easier.

In the case of SAC, the actor network also outputs 17 actions but uses no output activation. Instead, the actions are squashed with the tanh function and multiplied by the maximum values they are allowed to take, before being clipped by the maximum generator output, the transmissible power of the lines at time step t, or the BESS charging and discharging limits at time step t, for generators, line flows, and BESSs, respectively. This is also expressed by Equations (17)–(19), but with .
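The scaling and clipping described above can be sketched for a single action of each type. This is an illustrative sketch of the DDPG variant, not the authors' code: it applies a sigmoid for generators and a tanh for lines and BESSs inside the helpers (the tanh choice is an assumption), adds the exploration noise before clipping, and uses hypothetical function names. A positive BESS action means charging and a negative one means discharging.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def generator_action(raw, p_gen_max, noise=0.0):
    """Sigmoid output scaled to [0, p_gen_max], clipped after adding noise."""
    return float(np.clip(sigmoid(raw) * p_gen_max + noise, 0.0, p_gen_max))


def line_action(raw, rating_max_over_horizon, rating_t, noise=0.0):
    """tanh output scaled by the largest rating over the horizon, clipped to +/- rating_t."""
    return float(np.clip(np.tanh(raw) * rating_max_over_horizon + noise, -rating_t, rating_t))


def bess_action(raw, p_rated, charge_lim_t, discharge_lim_t, noise=0.0):
    """tanh output scaled by the power rating, clipped to [-discharge limit, charge limit]."""
    return float(np.clip(np.tanh(raw) * p_rated + noise, -discharge_lim_t, charge_lim_t))
```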

To define the state in the single-agent setting, the normalized energy of every BESS, , is calculated from the maximum and minimum state of charge, and . The state is then represented as an array of current conditions, including BESS energy, load at each bus, transmissible power, and time step:

where

and

where is the number of time steps in a single episode. Normalizing the battery energy and expressing load, transmissible power, and time step as fractions keeps the inputs small, which speeds up learning. Equation (1) can be rewritten as:

where if the BESS is charging, and 0 otherwise. At every time step t, the power balance must be satisfied at every bus b. The algorithm aims to train the agent to produce BESS actions, line flows, and generation that satisfy the power balance. Maximizing the negative of the absolute value of , i.e., minimizing the absolute power imbalance, then yields 0 in the optimal case. The reward at bus b and time step t can then be written as:

where is a non-negative constant offset that allows controlled over-generation, ensuring that enough power is supplied to serve the load. Here, the offset is set to 0. When the forecasted load, , and the forecasted transmissible power, , are included in the state, the state definition becomes:

where the forecasted scaled loads are calculated using Equation (22).
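The state and reward definitions of this section can be sketched as follows. This is a minimal sketch under stated assumptions: BESS energy is min-max scaled between the SOC limits, loads and transmissible powers are divided by their maxima, and the per-bus reward is the negative absolute deviation of the bus power balance from the over-generation offset, so a balance equal to the offset earns the maximum reward of 0. The exact normalizations and reward in Equations (20)–(23) may differ, and all names are hypothetical.

```python
import numpy as np


def normalized_energy(e, e_max, soc_min=0.2, soc_max=0.8):
    """Min-max scale stored energy between the SOC limits (assumed normalization)."""
    lo, hi = soc_min * e_max, soc_max * e_max
    return (e - lo) / (hi - lo)


def build_state(energies, e_max, loads, load_max, ratings, rating_max, t, n_steps):
    """Concatenate BESS energies, load fractions, rating fractions, and the time fraction."""
    parts = [
        [normalized_energy(e, cap) for e, cap in zip(energies, e_max)],
        np.asarray(loads, float) / np.asarray(load_max, float),
        np.asarray(ratings, float) / np.asarray(rating_max, float),
        [t / n_steps],
    ]
    return np.concatenate([np.ravel(p) for p in parts])


def bus_rewards(balances, offset=0.0):
    """Per-bus reward: negative absolute deviation of the balance from the offset."""
    return -np.abs(np.asarray(balances, float) - offset)


def step_reward(balances, offset=0.0):
    """Single-agent reward: sum of the per-bus rewards at one time step."""
    return float(np.sum(bus_rewards(balances, offset)))
```

With 3 BESSs, 3 loads, 11 lines, and one time feature, the state vector has 18 entries; when forecasts are included, the forecasted load and transmissible power fractions would be appended in the same way.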

3.2. Multi-Agent Setting

In the multi-agent setting, each agent outputs a pre-defined combination of generator, line flow, and BESS actions. Agent 1 outputs the bus 4 BESS action, the generator 1 action, the line flows from bus 1, and . Agent 2 outputs the bus 5 BESS action, the generator 2 action, the line flows from bus 2, and . Agent 3 outputs the bus 6 BESS action, the generator 3 action, the line flows from bus 3, and . The reward vector is adjusted accordingly to provide an individual reward for each agent. The system was tested with three agents, rewarded on the power balances of buses 1 and 4, 2 and 5, and 3 and 6, respectively. This can be expressed as:

When forecasts are included, the same procedure as in the single-agent setting is used. In both the multi-agent and single-agent settings, batches are not drawn as random samples of from the replay buffer W; instead, each batch consists of consecutive samples in the order in which they were stored, to help the algorithm learn the transition dynamics.
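Two details of this section, the per-agent rewards and the sequential batch sampling, can be sketched together in plain Python. Assumptions: each agent's reward is the sum of the rewards of its two buses, the start of each contiguous batch is chosen uniformly at random, and all names are hypothetical.

```python
import random
from collections import deque

# Bus pairs from the text: agent k is rewarded on buses k and k + 3.
AGENT_BUSES = {1: (1, 4), 2: (2, 5), 3: (3, 6)}


def agent_rewards(reward_by_bus):
    """Assumed: each agent's reward is the sum of the rewards of its two buses."""
    return {agent: sum(reward_by_bus[b] for b in buses)
            for agent, buses in AGENT_BUSES.items()}


class SequentialReplayBuffer:
    """Stores transitions in order and samples a contiguous run instead of i.i.d. draws."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        if batch_size > len(self.buffer):
            raise ValueError("not enough transitions stored")
        # Assumed: the start of the run is chosen uniformly at random.
        start = rng.randrange(len(self.buffer) - batch_size + 1)
        return [self.buffer[i] for i in range(start, start + batch_size)]
```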

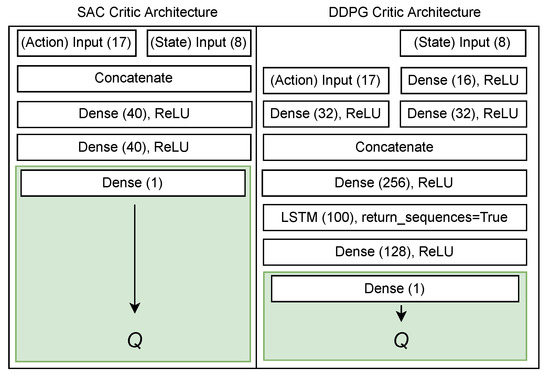

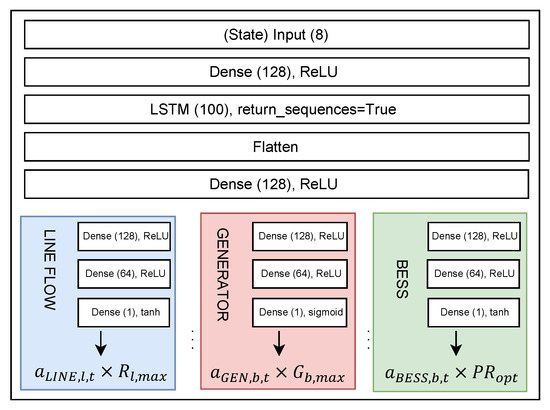

4. Experimental Setup

The neural network architectures used for the DDPG and SAC critic and actor networks are shown in Figure 3, Figure 4 and Figure 5. The minimum and maximum states of charge are set to 20% and 80%, respectively. Hourly ambient temperature, wind speed, wind direction, and solar irradiance data were obtained from the BADC dataset, and the maximum ambient temperature for each of the four seasons was used to calculate the ampacity [24] according to the IEEE 738 standard [25]. A lithium nickel manganese cobalt oxide (NMC) BESS was assumed, with calendric and cyclic aging constants taken from [17]. Drake conductors and 138 kV lines were assumed in the 6-bus test grid. Twenty-three time steps were simulated, and 12 time steps of input were used to forecast load and transmissible power. The modified 6-bus test grid was supplemented with varying bus loads: the loads for buses 4, 5, and 6 were taken from the loads of buses 1, 2, and 3 of the IEEE 24-bus test grid [26]. The maximum outputs of the generators at buses 1, 2, and 3 were set to 50 MW, 60 MW, and 50 MW, respectively. At the start of each episode, the BESS was assumed to hold 80% of its calculated capacity. NLP optimization was performed with the Gurobi solver, and the neural networks were trained with TensorFlow. The hyper-parameters for DDPG and SAC training are shown in Table 2.

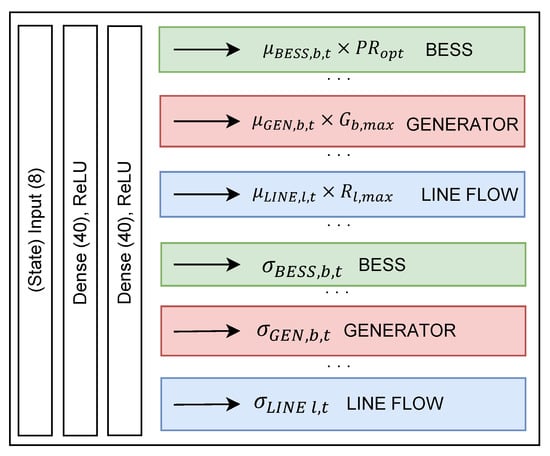

Figure 3.

DDPG and SAC critic network architectures.

Figure 4.

DDPG actor network architecture.

Figure 5.

SAC actor network architecture.

Table 2.

Hyper-parameters used in the algorithms.

5. Results and Discussion

The optimal BESS capacity and power rating were calculated to be 1042 MWh and 73 MW, respectively. The cumulative episodic reward is calculated according to Equation (23) as the sum of the 6 bus power balances over 24 h. To obtain the power balance per bus per hour, , the cumulative episodic reward is divided by . This metric is used to compare the performance of the algorithms. The learning curves without and with load and ampacity forecasting are shown in Figure 6 and Figure 7, respectively. Training each model with DDPG, SAC, MADDPG, or MASAC, with or without load forecasting, took approximately 1 day on a virtual machine with a 10-core 3.30 GHz Intel Core i9-9820X CPU and 125 GB of RAM. Training the load and ampacity forecast models took approximately 1 h per model per hyper-parameter setting on a Tesla V100 GPU in the same virtual machine. Grids with more buses are expected to require more training time on the same hardware. When the trained models are run in real time, the desired quantities are calculated virtually instantaneously.

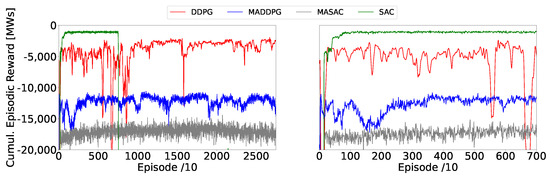

Figure 6.

Algorithm convergence without load and ampacity forecasting, stable region on the right.

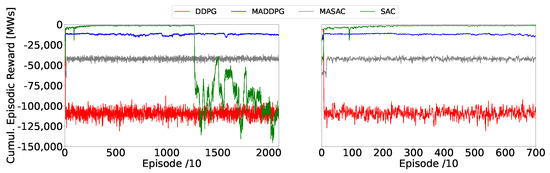

Figure 7.

Algorithm convergence with load and ampacity forecasting, stable region on the right.

Figure 6 shows that, while the SAC algorithm achieves the highest cumulative episodic reward, its learning becomes unstable after about 7500 episodes. The right-hand side of Figure 6 zooms in on the stable regions of learning. As Table 3 shows, when forecasting is not included in the state definition (Equation (20)), SAC performs best with the highest cumulative reward of −5.48 [u], followed by DDPG with −7.66 [u]. SAC converged after around 1200 episodes, whereas DDPG converged after about 2700 episodes. Interestingly, SAC and DDPG converged to similar values, between −8 [u] and −6 [u]. The multi-agent versions of both algorithms under-performed their single-agent counterparts, with MASAC converging to an average value of −132.8 [u] in about 5000 episodes and MADDPG converging to an average value of −83.9 [u] in approximately 4000 episodes.

Table 3.

Algorithm performance on BESS action prediction.

As Figure 7 shows, SAC achieved the highest cumulative episodic reward, but its learning becomes unstable after about 12,500 episodes. The right-hand side of Figure 7 zooms in on the stable region. When forecasting is included, as in Equation (25), SAC again outperformed the other algorithms, with the highest cumulative episodic reward of −6.20 [u], and converged around −9.70 [u] in approximately 2500 episodes. DDPG performed much worse, with a best value of −609 [u], converging in around 2000 episodes, while MASAC converged to −289 [u], with a best value of −241 [u], in approximately 200 episodes. MADDPG had better convergence properties, reaching about −77.2 [u] in about 250 episodes.

Single-agent algorithms appear to outperform their multi-agent versions, most likely because the multiple neural networks add complexity to the problem. Forecasting does not appear to improve either the final result of the algorithms or their speed of convergence.

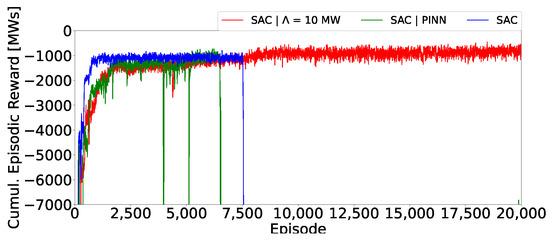

Given the relative performance of SAC compared with the other algorithms, we increased the over-generation constant from 0 to 10 MW. We also modified the actor network loss to match the PINN loss stated in Equation (16). Allowing the algorithm to learn to over-generate improved the best cumulative reward to −2.70 [u] and the average cumulative reward to −4.93 [u], while SAC with PINN produced a best cumulative reward of −5.07 [u] and an average cumulative reward of −6.77 [u]. SAC with 10 MW over-generation also produced the most stable learning of the three. The resulting cumulative episodic reward curves are shown in Figure 8.

Figure 8.

SAC, SAC with PINNs, and SAC with 10 MW over-generation.

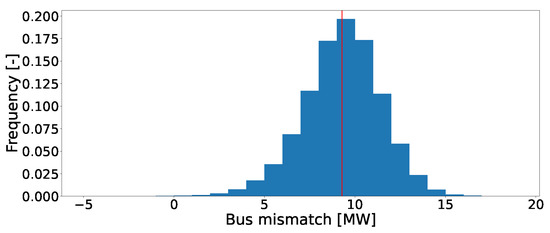

With over-generation, Equation (23) can be used to compute the histogram of the power balance at every bus. For bus 1, the mean of the distribution is 9.29 MW, close to the expected 10 MW. The histogram of the power balance for bus 1 is shown in Figure 9, where the positive mean implies that, on average, the actor over-generates.

Figure 9.

Error distribution for bus 1.

Computing the mean power balances for the other buses shows that buses 1–5 have positive means, meaning that the load is met in most cases, while bus 6 has a negative mean, so its load is sometimes not completely met. Bus 6 has the worst mean power balance, at −5.28 MW. The mean power balances for the other buses are shown in Table 4.

Table 4.

Mean bus power balances.

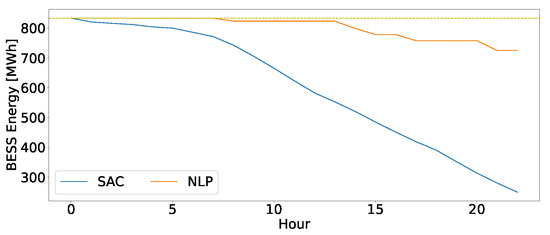

The nonlinear programming (NLP) method is used to calculate the optimal BESS operation. Since the BESS capacity and power rating are now known, we re-ran the NLP method of Section 2.1 with these values fixed. The objective function was chosen to minimize the BESS action, expressed as:

where N is the number of time intervals . The BESS energy calculated with the NLP method can then be compared with that obtained with SAC with PINN at MW. The energy of the BESS at bus 5 decreases monotonically, but at a slower rate than predicted by the NLP method. The energy of the BESS at bus 5 calculated with the trained actor is shown in Figure 10.

Figure 10.

Energy of BESS at bus 5.

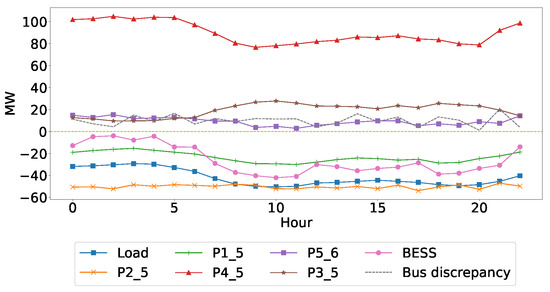

We can also calculate the power flows through the lines, the bus power balance, and the BESS output power at every bus. For bus 5, the power balance is positive, indicating that the actor over-generates and all load is met. The line power flows, the BESS output power, and the power balance for bus 5 are shown in Figure 11.

Figure 11.

Power flow over lines and BESS power at bus 5.

6. Conclusions

This paper presents the application of DRL algorithms, in single- and multi-agent settings, to determine BESS, line flow, and generator actions for a modified IEEE 6-bus test grid. The standard test grid was adapted to consider the combined application of DTLR and utility-scale battery storage as a way to improve the use of transmission systems. This approach can be used, for example, to increase the current-carrying capacity of the system and defer the construction of new lines.

The performance of the DDPG, MADDPG, SAC, and MASAC algorithms was compared, with SAC outperforming the other methods in both solution accuracy and speed of convergence. As the main result, SAC achieved the best bus power balance of −5.48 [u] on an hourly average. DDPG gave the second-best result, with −7.66 [u]. Their multi-agent versions reached imbalances several times larger, indicating that they are not suitable for this application. When an over-generation of 10 MW was introduced, the best bus power balance improved to −2.70 [u]. The physics-informed neural network loss function and one-step forecasting of load and transmissible power were also considered; neither was found to significantly affect the convergence or the best bus power balance during learning.

Finally, the authors suggest several directions for future work: exploring the effect of multi-step forecasting on the performance of the algorithms, modifying the reward function to enable more accurate learning, and replacing the clipping of the BESS action with a penalty. In addition, SAC and other algorithms for continuous state and action spaces can be tested on larger test grids.

Author Contributions

Conceptualization, V.A. and P.M.; methodology, V.A. and M.G.; software, V.A.; validation, V.A. and M.G.; formal analysis, V.A.; investigation, V.A.; resources, P.M.; data curation, V.A.; writing—original draft preparation, V.A. and M.G.; writing—review and editing, P.M. and T.W.; supervision, P.M. and T.W.; project administration, P.M.; funding acquisition, P.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Sciences and Engineering Research Council (NSERC) of Canada Grant No. ALLRP 549804-19 and by the Alberta Electric System Operator (AESO), AltaLink, ATCO Electric, ENMAX, EPCOR Inc., and FortisAlberta.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hussain, A.; Bui, V.H.; Kim, H.M. Deep reinforcement learning-based operation of fast charging stations coupled with energy storage system. Electr. Power Syst. Res. 2022, 210, 108087.

2. Cao, D.; Hu, W.; Zhao, J.; Zhang, G.; Zhang, B.; Liu, Z.; Chen, Z.; Blaabjerg, F. Reinforcement learning and its applications in modern power and energy systems: A review. J. Mod. Power Syst. Clean Energy 2020, 8, 1029–1042.

3. Zhang, B.; Hu, W.; Cao, D.; Li, T.; Zhang, Z.; Chen, Z.; Blaabjerg, F. Soft actor–critic–based multi-objective optimized energy conversion and management strategy for integrated energy systems with renewable energy. Energy Convers. Manag. 2021, 243, 114381.

4. Khalid, J.; Ramli, M.A.; Khan, M.S.; Hidayat, T. Efficient Load Frequency Control of Renewable Integrated Power System: A Twin Delayed DDPG-Based Deep Reinforcement Learning Approach. IEEE Access 2022, 10, 51561–51574.

5. Zhang, S.; May, D.; Gül, M.; Musilek, P. Reinforcement learning-driven local transactive energy market for distributed energy resources. Energy AI 2022, 8, 100150.

6. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

7. Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, P.; Mordatch, I. Multi-agent actor–critic for mixed cooperative-competitive environments. Adv. Neural Inf. Process. Syst. (NIPS) 2017, 30, 1–12.

8. Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor–critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870.

9. Cao, D.; Zhao, J.; Hu, W.; Ding, F.; Huang, Q.; Chen, Z.; Blaabjerg, F. Data-driven multi-agent deep reinforcement learning for distribution system decentralized voltage control with high penetration of PVs. IEEE Trans. Smart Grid 2021, 12, 4137–4150.

10. Cao, D.; Hu, W.; Zhao, J.; Huang, Q.; Chen, Z.; Blaabjerg, F. A multi-agent deep reinforcement learning based voltage regulation using coordinated PV inverters. IEEE Trans. Power Syst. 2020, 35, 4120–4123.

11. Barton, T.; Musilek, M.; Musilek, P. The Effect of Temporal Discretization on Dynamic Thermal Line Rating. In Proceedings of the 2020 21st International Scientific Conference on Electric Power Engineering (EPE), Prague, Czech Republic, 19–21 October 2020; pp. 1–6.

12. Karimi, S.; Musilek, P.; Knight, A.M. Dynamic thermal rating of transmission lines: A review. Renew. Sustain. Energy Rev. 2018, 91, 600–612.

13. Faheem, M.; Shah, S.B.H.; Butt, R.A.; Raza, B.; Anwar, M.; Ashraf, M.W.; Ngadi, M.A.; Gungor, V.C. Smart grid communication and information technologies in the perspective of Industry 4.0: Opportunities and challenges. Comput. Sci. Rev. 2018, 30, 1–30.

14. Hussain, A.; Bui, V.H.; Kim, H.M. Impact analysis of demand response intensity and energy storage size on operation of networked microgrids. Energies 2017, 10, 882.

15. Wood, A.J.; Wollenberg, B.F.; Sheblé, G.B. Power Generation, Operation, and Control; John Wiley & Sons: New York, NY, USA, 2013.

16. British Atmospheric Data Centre (BADC). Available online: https://data.ceda.ac.uk/badc/ukmo-midas-open/data/ (accessed on 31 August 2022).

17. Hesse, H.C.; Martins, R.; Musilek, P.; Naumann, M.; Truong, C.N.; Jossen, A. Economic optimization of component sizing for residential battery storage systems. Energies 2017, 10, 835.

18. Fujimoto, S.; van Hoof, H.; Meger, D. Addressing function approximation error in actor–critic methods. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 1587–1596.

19. Misyris, G.S.; Venzke, A.; Chatzivasileiadis, S. Physics-informed neural networks for power systems. In Proceedings of the 2020 IEEE Power & Energy Society General Meeting (PESGM), Virtual Event, 3–6 August 2020; pp. 1–5.

20. Wang, Z.; Jia, L.; Ren, C. Attention-Bidirectional LSTM Based Short Term Power Load Forecasting. In Proceedings of the 2021 Power System and Green Energy Conference (PSGEC), Shanghai, China, 20–22 August 2021; pp. 171–175.

21. Yan, Z.; Xu, Y.; Wang, Y.; Feng, X. Data-driven economic control of battery energy storage system considering battery degradation. In Proceedings of the 2019 9th International Conference on Power and Energy Systems (ICPES), Perth, Australia, 10–12 December 2019; pp. 1–5.

22. Zhang, S.; Nandakumar, S.; Pan, Q.; Yang, E.; Migne, R.; Subramanian, L. Benchmarking Reinforcement Learning Algorithms on Island Microgrid Energy Management. In Proceedings of the 2021 IEEE PES Innovative Smart Grid Technologies-Asia (ISGT Asia), Brisbane, Australia, 5–8 December 2021; pp. 1–5.

23. Bui, V.H.; Hussain, A.; Kim, H.M. Double deep Q-learning-based distributed operation of battery energy storage system considering uncertainties. IEEE Trans. Smart Grid 2019, 11, 457–469.

24. Metwaly, M.K.; Teh, J. Probabilistic Peak Demand Matching by Battery Energy Storage Alongside Dynamic Thermal Ratings and Demand Response for Enhanced Network Reliability. IEEE Access 2020, 8, 181547–181559.

25. IEEE Std 738-2012; Standard for Calculating the Current-Temperature Relationship of Bare Overhead Conductors. IEEE: Piscataway, NJ, USA, 2013; pp. 1–72.

26. Probability Methods Subcommittee. IEEE reliability test system. IEEE Trans. Power Appar. Syst. 1979, PAS-98, 2047–2054.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).